Multitask Siamese Network for Remote Photoplethysmography and Respiration Estimation

Abstract

1. Introduction

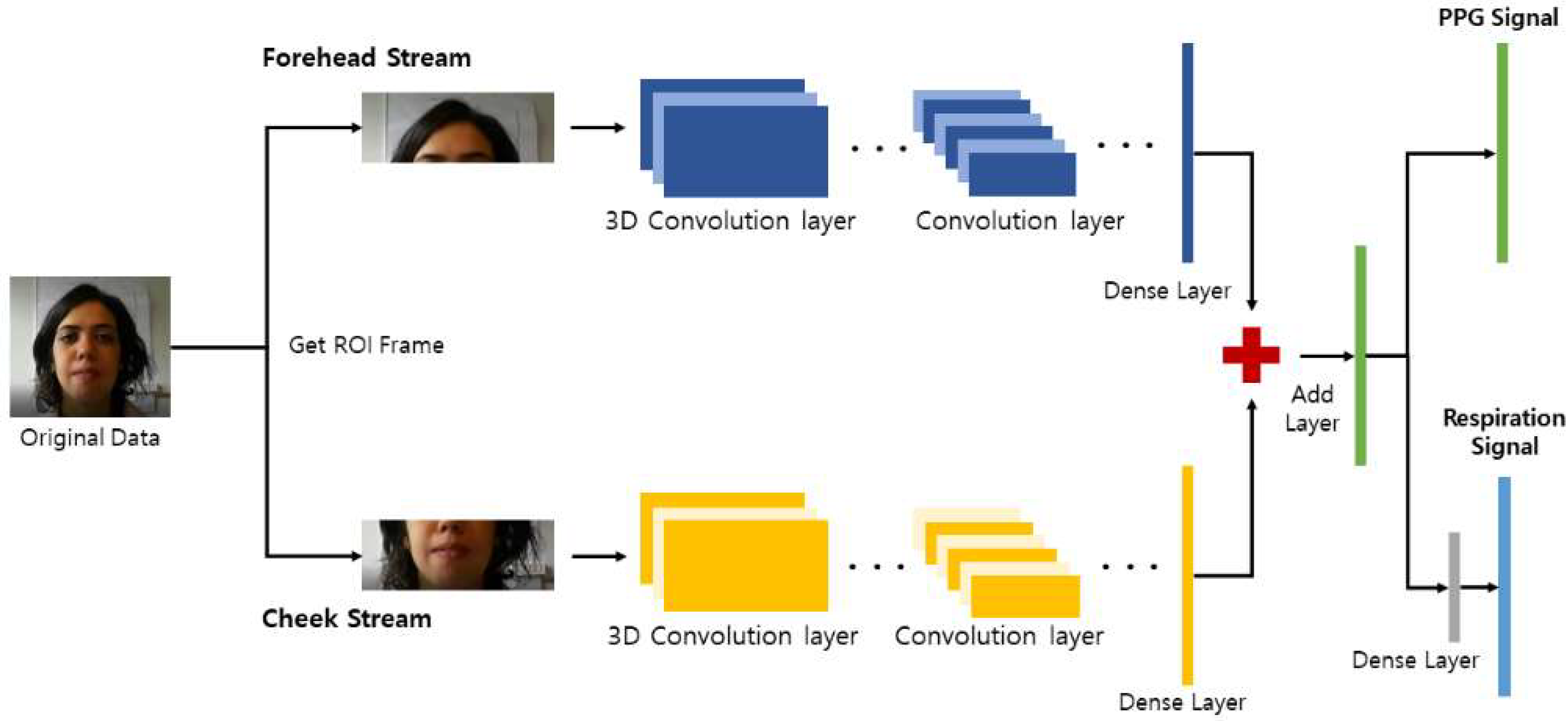

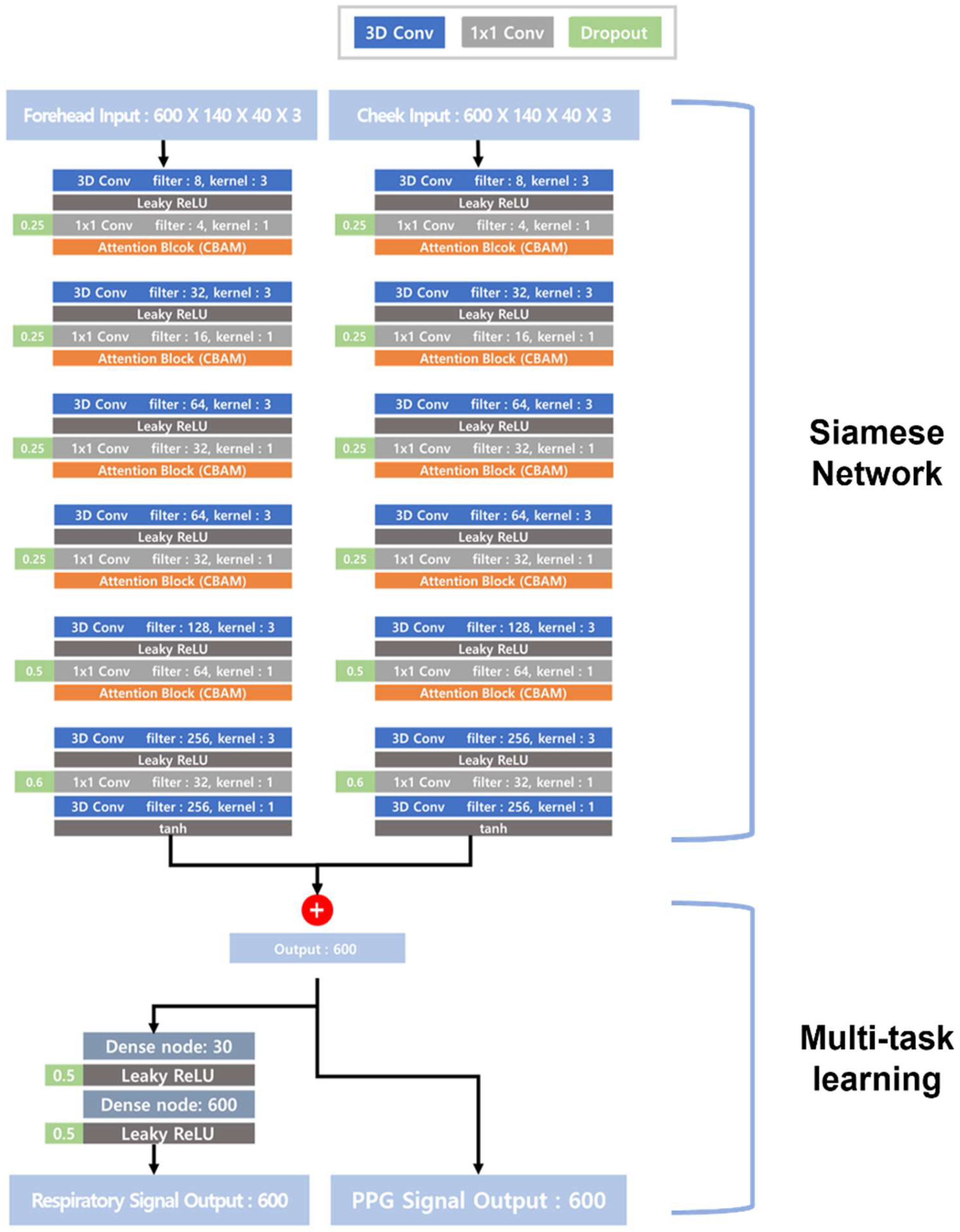

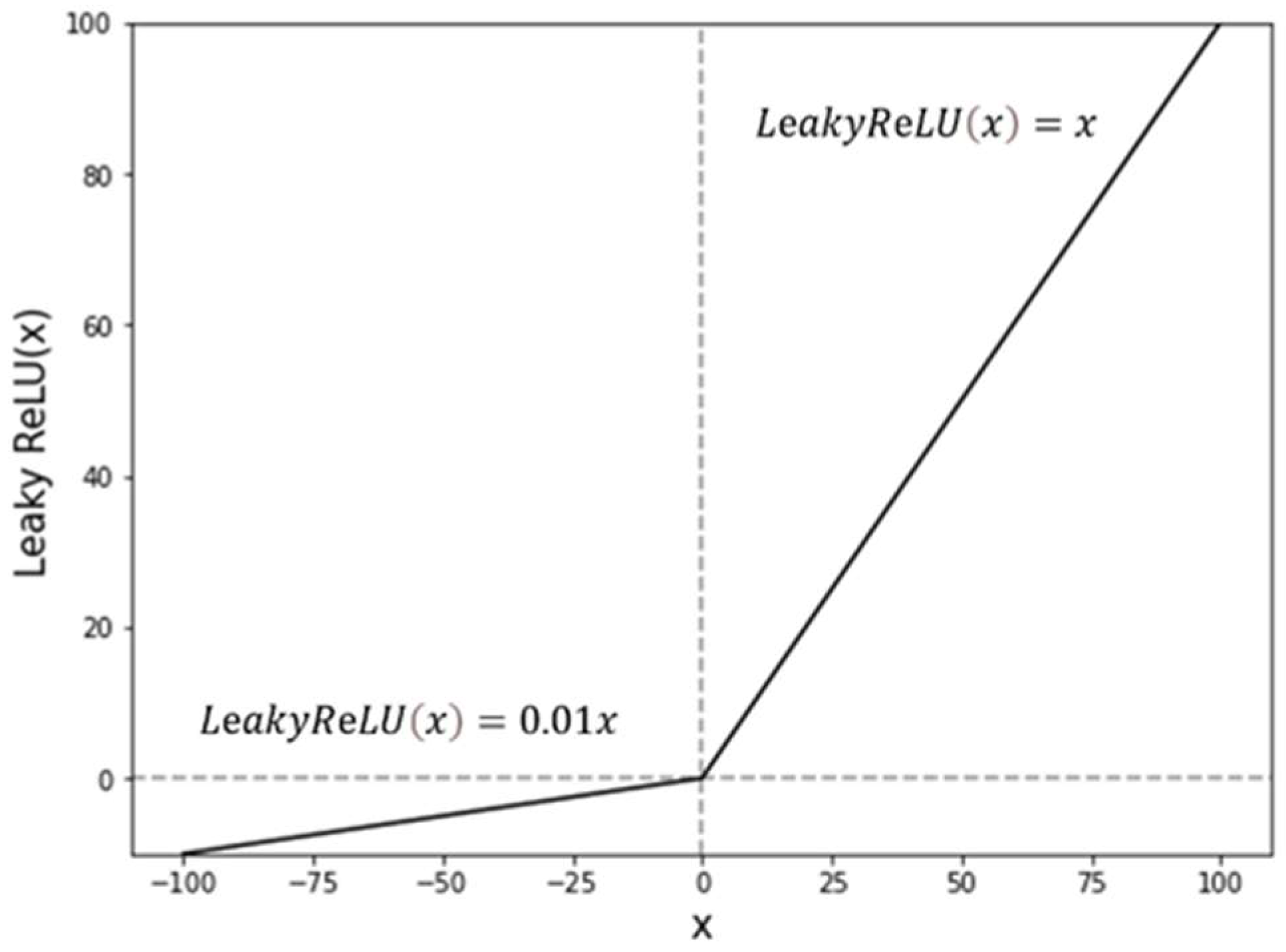

2. Algorithm

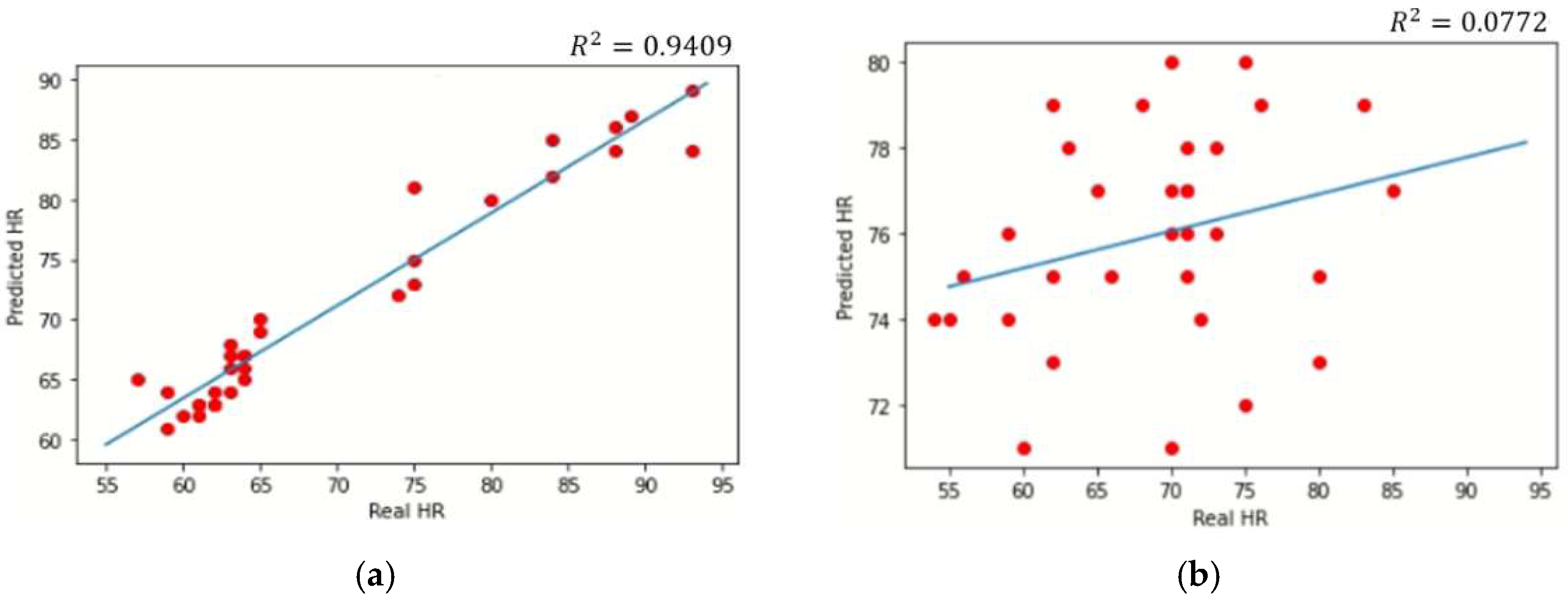

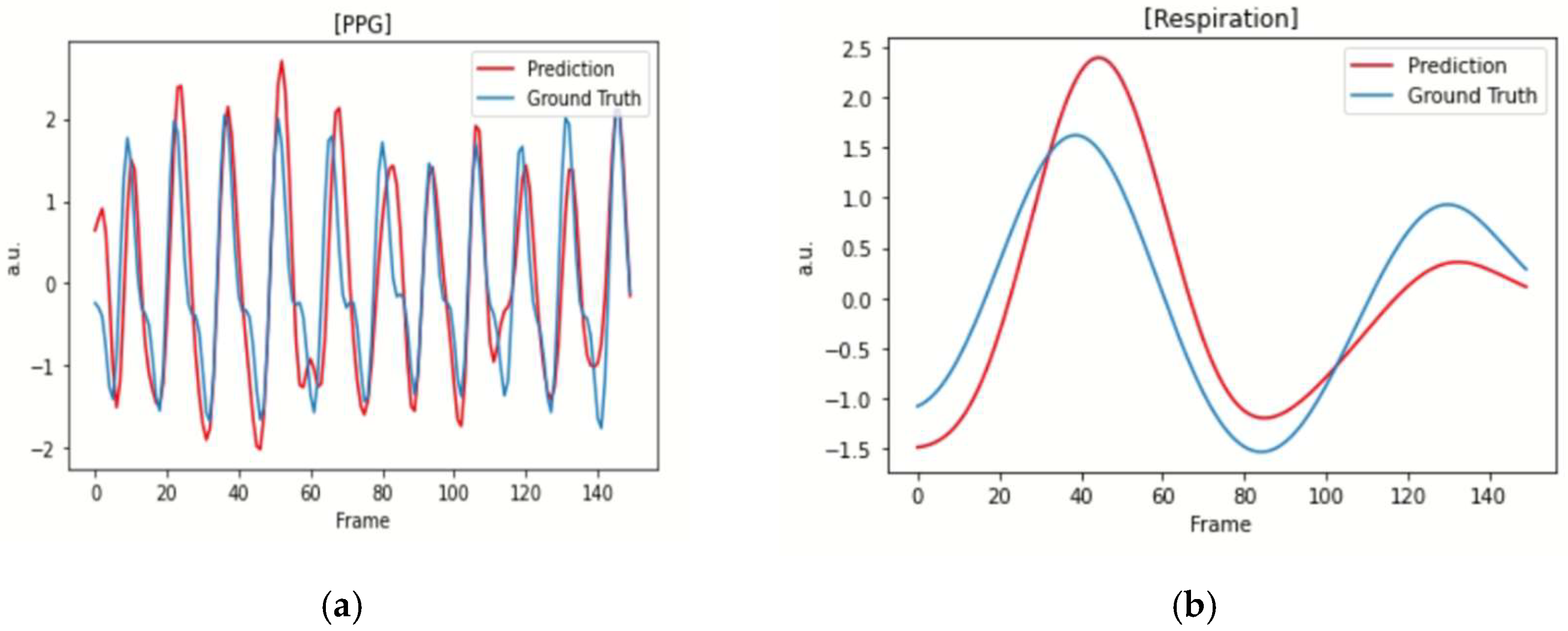

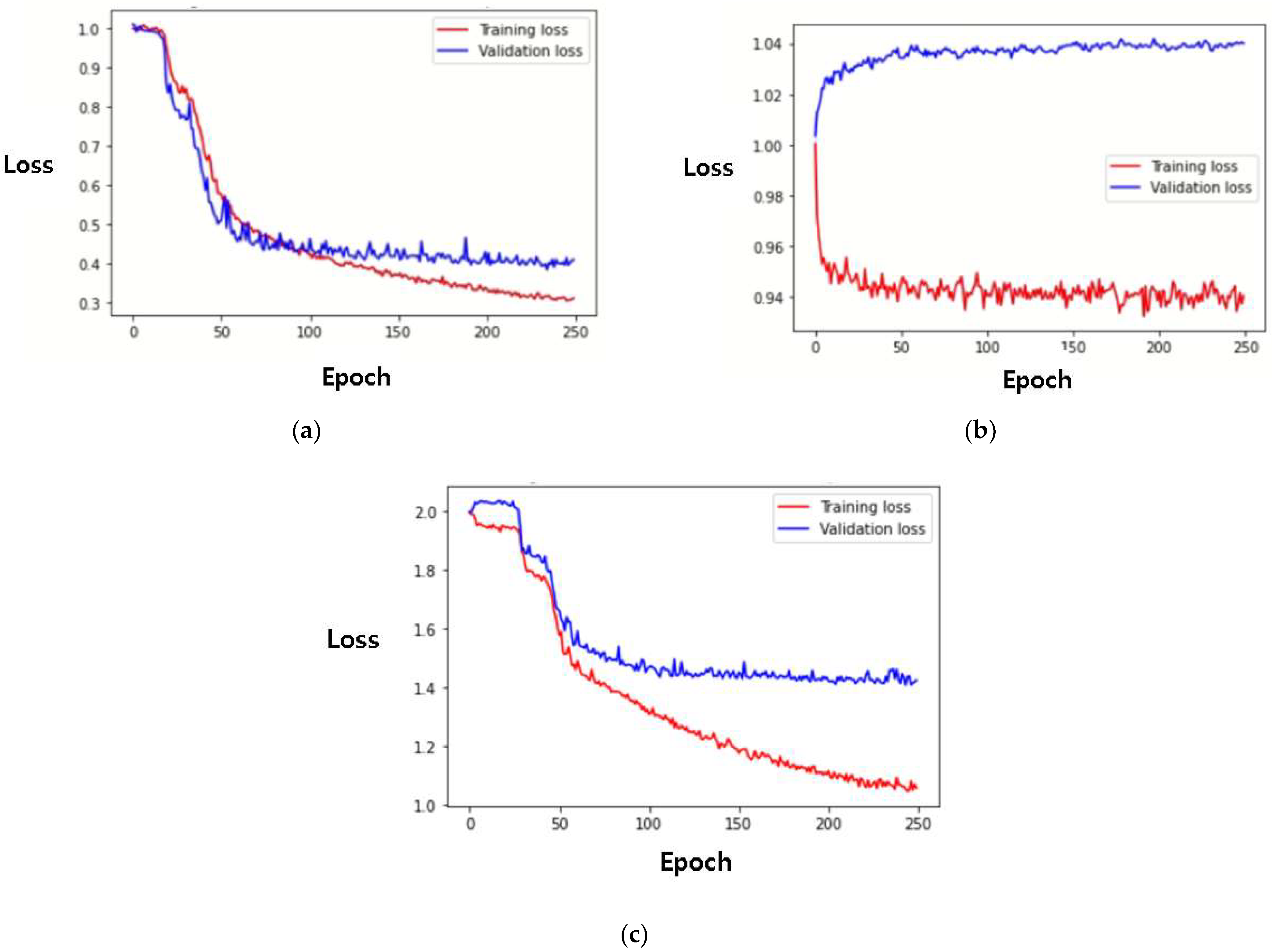

3. Methods

3.1. Dataset

3.2. Pre/Postprocessing

3.3. Environment and Evaluation

4. Results

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Massaroni, C.; Nicolò, A.; Schena, E.; Sacchetti, M. Remote Respiratory Monitoring in the Time of COVID-19. Front. Physiol. 2020, 11, 635.

2. Homayoonnia, S.; Lee, Y.; Andalib, D.; Rahman, M.S.; Shin, J.; Kim, K.; Kim, S. Micro/nanotechnology-inspired rapid diagnosis of respiratory infectious diseases. Biomed. Eng. Lett. 2021, 11, 335–365.

3. Natarajan, A.; Su, H.; Heneghan, C. Assessment of physiological signs associated with COVID-19 measured using wearable devices. NPJ Digit. Med. 2020, 3, 156.

4. Fox, K.; Borer, J.S.; Camm, A.J.; Danchin, N.; Ferrari, R.; Lopez Sendon, J.L.; Steg, P.G.; Tardif, J.; Tavazzi, L.; Tendera, M. Resting Heart Rate in Cardiovascular Disease. J. Am. Coll. Cardiol. 2007, 50, 823–830.

5. World Health Organization (WHO). The Top 10 Causes of Death. Available online: https://www.who.int/news-room/fact-sheets/detail/the-top-10-causes-of-death (accessed on 7 May 2022).

6. Cretikos, M.A.; Bellomo, R.; Hillman, K.; Chen, J.; Finfer, S.; Flabouris, A. Respiratory rate: The neglected vital sign. Med. J. Aust. 2008, 188, 657–659.

7. Penzel, T.; Kantelhardt, J.W.; Lo, C.; Voigt, K.; Vogelmeier, C. Dynamics of Heart Rate and Sleep Stages in Normals and Patients with Sleep Apnea. Neuropsychopharmacology 2003, 28, S48–S53.

8. Vandenbussche, N.L.; Overeem, S.; van Dijk, J.P.; Simons, P.J.; Pevernagie, D.A. Assessment of respiratory effort during sleep: Esophageal pressure versus noninvasive monitoring techniques. Sleep Med. Rev. 2015, 24, 28–36.

9. Seo, W.; Kim, N.; Kim, S.; Lee, C.; Park, S. Deep ECG-Respiration Network (DeepER Net) for Recognizing Mental Stress. Sensors 2019, 19, 3021.

10. Tamura, T.; Maeda, Y.; Sekine, M.; Yoshida, M. Wearable Photoplethysmographic Sensors–Past and Present. Electronics 2014, 3, 282–302.

11. Pirhonen, M.; Peltokangas, M.; Vehkaoja, A. Acquiring Respiration Rate from Photoplethysmographic Signal by Recursive Bayesian Tracking of Intrinsic Modes in Time-Frequency Spectra. Sensors 2018, 18, 1693.

12. Charlton, P.H.; Birrenkott, D.A.; Bonnici, T.; Pimentel, M.A.F.; Johnson, A.E.W.; Alastruey, J.; Tarassenko, L.; Watkinson, P.J.; Beale, R.; Clifton, D.A. Breathing Rate Estimation from the Electrocardiogram and Photoplethysmogram: A Review. IEEE Rev. Biomed. Eng. 2018, 11, 2–20.

13. Bellenger, C.R.; Miller, D.; Halson, S.L.; Roach, G.; Sargent, C. Wrist-Based Photoplethysmography Assessment of Heart Rate and Heart Rate Variability: Validation of WHOOP. Sensors 2021, 21, 3571.

14. Vescio, B.; Salsone, M.; Gambardella, A.; Quattrone, A. Comparison between electrocardiographic and earlobe pulse photo-plethysmographic detection for evaluating heart rate variability in healthy subjects in short- and long-term recordings. Sensors 2018, 18, 844.

15. Elgendi, M. On the Analysis of Fingertip Photoplethysmogram Signals. Curr. Cardiol. Rev. 2012, 8, 14–25.

16. Schrumpf, F.; Sturm, M.; Bausch, G.; Fuchs, M. Derivation of the respiratory rate from directly and indirectly measured respiratory signals using autocorrelation. Curr. Dir. Biomed. Eng. 2016, 2, 241–245.

17. van der Kooij, K.; Naber, M. An open-source remote heart rate imaging method with practical apparatus and algorithms. Behav. Res. Methods 2019, 51, 2106–2119.

18. Takano, C.; Ohta, Y. Heart rate measurement based on a time-lapse image. Med. Eng. Phys. 2007, 29, 853–857.

19. Gailite, L.; Spigulis, J.; Lihachev, A. Multilaser photoplethysmography technique. Lasers Med. Sci. 2008, 23, 189–193.

20. Tarassenko, L.; Villarroel, M.; Guazzi, A.; Jorge, J.; Clifton, D.A.; Pugh, C. Non-contact video-based vital sign monitoring using ambient light and auto-regressive models. Physiol. Meas. 2014, 35, 807–831.

21. Abuella, H.; Ekin, S. Non-Contact Vital Signs Monitoring Through Visible Light Sensing. IEEE Sens. J. 2020, 20, 3859–3870.

22. Gudi, A.A.; Bittner, M.; van Gemert, J.C. Real-Time Webcam Heart-Rate and Variability Estimation with Clean Ground Truth for Evaluation. Appl. Sci. 2020, 10, 8630.

23. van Gastel, M.J.H.; Stuijk, S.; de Haan, G. Robust respiration detection from remote photoplethysmography. Biomed. Opt. Express 2016, 7, 4941–4957.

24. Wallace, B.; Kassab, L.Y.; Law, A.; Goubran, R.; Knoefel, F. Contactless Remote Assessment of Heart Rate and Respiration Rate Using Video Magnification. IEEE Instrum. Meas. Mag. 2022, 25, 20–27.

25. Tsou, Y.; Lee, Y.; Hsu, C.; Chang, S. Siamese-rPPG Network; ACM: Rochester, NY, USA, 2020; pp. 2066–2073.

26. Liu, X.; Fromm, J.; Patel, S.; McDuff, D. Multi-Task Temporal Shift Attention Networks for On-Device Contactless Vitals Measurement. arXiv 2020, arXiv:2006.03790.

27. Eun, J.C.; Kim, J.-T. Die-to-die Inspection of Semiconductor Wafer using Bayesian Twin Network. IEIE Trans. Smart Process. Comput. 2021, 10, 382–389.

28. Kwon, S.; Kim, J.; Lee, D.; Park, K. ROI Analysis for Remote Photoplethysmography on Facial Video. In Proceedings of the 2015 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Milano, Italy, 25–29 August 2015; pp. 4938–4941.

29. Srivastava, N.; Hinton, G.; Krizhevsky, A.; Sutskever, I.; Salakhutdinov, R. Dropout: A simple way to prevent neural networks from overfitting. J. Mach. Learn. Res. 2014, 15, 1929–1958.

30. Maas, A.L.; Hannun, A.Y.; Ng, A.Y. Rectifier nonlinearities improve neural network acoustic models. In Proceedings of the International Machine Learning Society, Atlanta, GA, USA, 16–21 June 2013; Volume 30, p. 1.

31. Woo, S.; Park, J.; Lee, J.; Kweon, I.S. CBAM: Convolutional Block Attention Module. arXiv 2018, arXiv:1807.06521.

32. Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 1–9.

33. Thought Technology. BioGraph Infiniti Software Platform (T7900). Available online: https://thoughttechnology.com/biograph-infiniti-software-platform-t7900/# (accessed on 8 April 2022).

34. Heusch, G.; Anjos, A.; Marcel, S. A Reproducible Study on Remote Heart Rate Measurement. arXiv 2017, arXiv:1709.00962.

35. King, D.E. Dlib-ml: A Machine Learning Toolkit. J. Mach. Learn. Res. 2009, 10, 1755.

36. Abadi, M. TensorFlow: Learning Functions at Scale; ACM: Rochester, NY, USA, 2016; p. 1.

37. Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. arXiv 2014, arXiv:1412.6980.

38. Wang, Z.; Kao, Y.; Hsu, C. Vision-Based Heart Rate Estimation Via a Two-Stream CNN; IEEE: New York, NY, USA, 2019; pp. 3327–3331.

39. Hu, M.; Qian, F.; Guo, D.; Wang, X.; He, L.; Ren, F. ETA-rPPGNet: Effective Time-Domain Attention Network for Remote Heart Rate Measurement. IEEE Trans. Instrum. Meas. 2021, 70, 2506212.

40. Tsou, Y.; Lee, Y.; Hsu, C. Multi-task Learning for Simultaneous Video Generation and Remote Photoplethysmography Estimation. In Computer Vision–ACCV 2020; Springer International Publishing: Cham, Switzerland, 2021; pp. 392–407.

41. Massaroni, C.; Lopes, D.S.; Lo Presti, D.; Schena, E.; Silvestri, S. Contactless Monitoring of Breathing Patterns and Respiratory Rate at the Pit of the Neck: A Single Camera Approach. J. Sens. 2018, 2018, 4567213.

42. Brieva, J.; Ponce, H.; Moya-Albor, E. A Contactless Respiratory Rate Estimation Method Using a Hermite Magnification Technique and Convolutional Neural Networks. Appl. Sci. 2020, 10, 607.

43. Nakajima, K.; Tamura, T.; Miike, H. Monitoring of heart and respiratory rates by photoplethysmography using a digital filtering technique. Med. Eng. Phys. 1996, 18, 365–372.

44. Howard, A.G. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. arXiv 2017, arXiv:1704.04861.

45. Shojaedini, S.V.; Beirami, M.J. Mobile sensor based human activity recognition: Distinguishing of challenging activities by applying long short-term memory deep learning modified by residual network concept. Biomed. Eng. Lett. 2020, 10, 419–430.

46. Yadav, S.P. Vision-based Detection, Tracking, and Classification of Vehicles. IEIE Trans. Smart Process. Comput. 2020, 9, 427–434.

47. Nowara, E.M.; Marks, T.K.; Mansour, H.; Veeraraghavan, A. SparsePPG: Towards Driver Monitoring Using Camera-Based Vital Signs Estimation in Near-Infrared. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, Salt Lake City, UT, USA, 18–22 June 2018; pp. 1272–1281.

48. Wang, Z.-K.; Kao, Y.; Hsu, C.-T. Vision-based Heart Rate Estimation via a Two-stream CNN. In Proceedings of the 2019 IEEE International Conference on Image Processing (ICIP), Taipei, Taiwan, 22–25 September 2019; pp. 3327–3331.

49. Wang, W.; Stuijk, S.; de Haan, G. A Novel Algorithm for Remote Photoplethysmography: Spatial Subspace Rotation. IEEE Trans. Biomed. Eng. 2016, 63, 1974–1984.

50. Li, P.; Benezeth, K.N.Y.; Gomez, R.; Yang, F. Model-based Region of Interest Segmentation for Remote Photoplethysmography. In Proceedings of the 14th International Conference on Computer Vision Theory and Applications, Prague, Czech Republic, 25–27 February 2019; pp. 383–388.

51. Li, X.; Chen, J.; Zhao, G.; Pietikäinen, M. Remote Heart Rate Measurement from Face Videos under Realistic Situations. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014.

52. Špetlík, R.; Franc, V.; Matas, J. Visual Heart Rate Estimation with Convolutional Neural Network. In Proceedings of the British Machine Vision Conference, Newcastle, UK, 3–6 September 2018.

| Model | HR R | HR MAE (BPM) | HR RMSE (BPM) |
|---|---|---|---|
| Siamese rPPG network [25] | 0.73 | 0.70 | 1.29 |
| Model by Z.-K. Wang et al. [38] | 0.40 | 8.09 | 9.96 |
| ETA-rPPGNet [39] | 0.77 | 4.67 | 6.65 |
| Model by Y.-Y. Tsou et al. [40] | 0.72 | 0.68 | 1.65 |
| Siamese network with convolutional block attention module (CBAM) (proposed) | 0.97 | 2.31 | 3.29 |
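The tables in this section compare models by the correlation coefficient R, the mean absolute error (MAE), and the root-mean-square error (RMSE) between estimated and reference rates. The formulas are not spelled out in this text, so the following is a minimal sketch assuming the standard definitions, with R taken to be Pearson's correlation over paired estimates. The function names and sample values are illustrative, and this is not the authors' evaluation code.

```python
import math
from typing import Sequence


def mae(pred: Sequence[float], ref: Sequence[float]) -> float:
    """Mean absolute error between paired estimates and reference values."""
    return sum(abs(p - r) for p, r in zip(pred, ref)) / len(ref)


def rmse(pred: Sequence[float], ref: Sequence[float]) -> float:
    """Root-mean-square error; squaring weights large misses more than MAE does."""
    return math.sqrt(sum((p - r) ** 2 for p, r in zip(pred, ref)) / len(ref))


def pearson_r(pred: Sequence[float], ref: Sequence[float]) -> float:
    """Pearson correlation coefficient (assumed meaning of R in the tables)."""
    n = len(ref)
    mp, mr = sum(pred) / n, sum(ref) / n
    cov = sum((p - mp) * (r - mr) for p, r in zip(pred, ref))
    sp = math.sqrt(sum((p - mp) ** 2 for p in pred))
    sr = math.sqrt(sum((r - mr) ** 2 for r in ref))
    return cov / (sp * sr)


if __name__ == "__main__":
    # Hypothetical per-window HR estimates and reference values, in BPM.
    est = [70.0, 75.0, 80.0]
    ref = [72.0, 74.0, 83.0]
    print(f"R = {pearson_r(est, ref):.3f}, MAE = {mae(est, ref):.2f}, RMSE = {rmse(est, ref):.2f}")
```

Because RMSE squares each error, a model with a few large misses can show an MAE close to that of a model with uniformly small errors while still having a clearly larger RMSE. R, by contrast, measures how well estimates track changes in the reference and is unaffected by a constant offset.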

| Model | HR R | HR MAE (BPM) | HR RMSE (BPM) | RR MAE (RPM) | RR RMSE (RPM) |
|---|---|---|---|---|---|
| Multitask temporal shift convolutional attention network (MTTS-CAN) [26] | 0.20 | 7.97 | 10.38 | 9.0 | 9.50 |
| Multitask Siamese network (MTS, proposed) | 0.97 | 2.84 | 3.52 | 4.21 | 4.83 |
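The table above reports HR in beats per minute (BPM) and RR in respirations per minute (RPM). How a predicted waveform is turned into such a rate is not described in this text. One common approach in rPPG work, assumed here, is to find the strongest frequency within a physiological band and multiply it by 60. The band limits (0.7 to 4 Hz for HR, 0.1 to 0.5 Hz for RR), the 0.01 Hz search step, the 30 Hz frame rate, and the function name are illustrative choices, not values from the paper.

```python
import math
from typing import Sequence


def dominant_rate_per_minute(
    signal: Sequence[float], fs: float, f_lo: float, f_hi: float, step_hz: float = 0.01
) -> float:
    """Return the strongest frequency in [f_lo, f_hi] Hz, converted to cycles per minute.

    Spectral power is evaluated with a direct Fourier sum at each candidate
    frequency: slow (O(len(signal) * candidates)) but dependency-free.
    """
    n = len(signal)
    mean = sum(signal) / n
    x = [s - mean for s in signal]  # remove the DC offset before searching the band
    n_steps = int(round((f_hi - f_lo) / step_hz))
    best_f, best_power = f_lo, -1.0
    for k in range(n_steps + 1):
        f = f_lo + k * step_hz
        w = 2.0 * math.pi * f / fs  # phase advance per sample at frequency f
        re = sum(v * math.cos(w * i) for i, v in enumerate(x))
        im = sum(v * math.sin(w * i) for i, v in enumerate(x))
        power = re * re + im * im
        if power > best_power:
            best_f, best_power = f, power
    return 60.0 * best_f


if __name__ == "__main__":
    fs = 30.0  # assumed camera frame rate, Hz
    t = [i / fs for i in range(int(20 * fs))]  # 20 s analysis window
    # Synthetic waveform: 1.2 Hz pulse plus a weaker 0.25 Hz breathing component.
    sig = [math.sin(2 * math.pi * 1.2 * ti) + 0.5 * math.sin(2 * math.pi * 0.25 * ti) for ti in t]
    print(f"HR ~ {dominant_rate_per_minute(sig, fs, 0.7, 4.0):.1f} BPM")
    print(f"RR ~ {dominant_rate_per_minute(sig, fs, 0.1, 0.5):.1f} RPM")
```

Searching separate bands is what lets a single combined waveform yield both rates in this toy example. In practice an FFT library would replace the direct sum, and a multitask model may instead output separate pulse and respiration waveforms.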

| Model | Number of parameters |
|---|---|
| Siamese rPPG network [25] | 11.80 M |
| Multitask temporal shift convolutional attention network (MTTS-CAN) [26] | 0.93 M |
| Siamese network with convolutional block attention module (CBAM) (proposed) | 0.69 M |
| Multitask Siamese network (MTS, proposed) | 0.72 M |
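To put the parameter counts above in proportion, the short calculation below divides the Siamese rPPG network's count by each model's count and estimates weight storage. The storage figure assumes 4-byte float32 weights, which this text does not state; the dictionary keys and function names are illustrative only.

```python
# Parameter counts in millions, copied from the table above.
PARAMS_M = {
    "Siamese rPPG network [25]": 11.80,
    "MTTS-CAN [26]": 0.93,
    "Siamese network with CBAM (proposed)": 0.69,
    "MTS (proposed)": 0.72,
}


def reduction_factor(baseline_m: float, model_m: float) -> float:
    """How many times fewer parameters the model has than the baseline."""
    return baseline_m / model_m


def fp32_megabytes(params_m: float) -> float:
    """Approximate weight storage in MB (1e6 bytes), ASSUMING float32 (4 bytes per weight)."""
    return params_m * 4.0


if __name__ == "__main__":
    base = PARAMS_M["Siamese rPPG network [25]"]
    for name, count in PARAMS_M.items():
        print(
            f"{name:40s} {count:6.2f} M  "
            f"{reduction_factor(base, count):5.1f}x vs [25]  ~{fp32_megabytes(count):.2f} MB"
        )
```

By this arithmetic, MTS has roughly 16 times fewer parameters than the Siamese rPPG network, and the two proposed models differ by about 0.03 M (about 30,000) parameters.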

| Method | HR MAE (BPM) | HR RMSE (BPM) |
|---|---|---|
| 2SR [44] | 20.98 | 25.84 |
| CHROM [45] | 7.80 | 12.45 |
| LiCVPR [46] | 19.98 | 25.59 |
| HR-CNN [47] | 8.10 | 10.78 |
| Two stream [48] | 8.09 | 9.96 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lee, H.; Lee, J.; Kwon, Y.; Kwon, J.; Park, S.; Sohn, R.; Park, C. Multitask Siamese Network for Remote Photoplethysmography and Respiration Estimation. Sensors 2022, 22, 5101. https://doi.org/10.3390/s22145101
