1. Introduction

The traditional underwater acoustic array takes the cross-correlation output of the signals received by the sonar system as the test statistic of the reconstruction algorithm [1]. However, owing to multi-path propagation and noise in a real waveguide environment, the spatial correlation between the signals received by different array elements attenuates easily [2,3]. How to improve the correlation of the received signals so as to achieve precise reconstruction for the underwater array is a research hotspot. Li proposed a frequency-shift compensation method based on the waveguide invariant to improve the correlation of signals received by the sonar system [4,5]. However, the waveguide invariant changes with the topography in complex terrain. This problem can be mitigated by Compressed Sensing (CS) theory, which effectively reduces signal distortion by setting a reasonable threshold to remove the minor components and retain the essential components of the signal.

CS theory, established by Emmanuel Candes et al., states that a signal that is sparse or compressible in some transform domain can be reconstructed exactly [6]. CS is an emerging signal processing theory of sampling that allows signals to be acquired and reconstructed from sub-Nyquist measurements [7,8]. Recovering the original signal from the compressed observation data can be posed as a convex optimization problem, whereas solving the optimization problem based on the $\ell_0$ norm is NP-hard. The latter can be approached with a greedy algorithm that uses a progressive approximation strategy, of which the orthogonal matching pursuit (OMP) algorithm is the most representative [9]. Subsequently, many improved algorithms based on OMP have been studied [10,11,12]. Although these algorithms can improve the spatial correlation of underwater acoustic signals and realize their detection and estimation, they require the sparsity as prior knowledge. Thong T. Do developed the sparsity adaptive matching pursuit (SAMP) algorithm in 2009 [13], but the fixed step size of SAMP is not flexible enough to avoid overestimation and underestimation. Most improved algorithms reduce the step size once the algorithm starts to converge; however, the improvement is not ideal. A sparsity adaptive subspace pursuit (SASP) algorithm was proposed in [14], but it needs many iterations and has high computational complexity. Therefore, it is not feasible in underwater target detection systems with strict real-time requirements.

Therefore, sparsity-adaptive reconstruction of underwater acoustic signals needs further research and exploration. It is important for signal processing to find an algorithm that balances reconstruction time and reconstruction accuracy.

2. Theoretical Analysis

2.1. Compressed Sensing

Assume that a real-valued discrete signal $x \in \mathbb{R}^{N}$ with sparsity P can be represented by P significant coefficients over an N-dimensional basis, where $P \ll N$ [15]. Ignoring background noise, compressed sensing can be described as recovering a high-dimensional signal $x$ from a low-dimensional signal $y \in \mathbb{R}^{M}$:

$$y = \Phi x \quad (1)$$

where $\Phi \in \mathbb{R}^{M \times N}$ is the sensing matrix with more columns N than rows M. Generally, reconstructing $x$ from $y$ is an underdetermined problem with infinitely many solutions. Considering the sparsity constraint on $x$, the reconstruction problem can be described as the following minimization [16]:

$$\min_{x} \|x\|_0 \quad \text{s.t.} \quad y = \Phi x \quad (2)$$

where $\|x\|_0$ is the $\ell_0$ norm of $x$. Because of the instability of $\|x\|_0$, the solution of Equation (2) is not convergent. The $\ell_0$-norm minimization problem is NP-hard; greedy algorithms address it by sequentially selecting atoms into the support set of the unknown signal. Another approach is to convert Equation (2) into an $\ell_1$-norm minimization problem:

$$\min_{x} \|x\|_1 \quad \text{s.t.} \quad y = \Phi x \quad (3)$$

This kind of reconstruction is called a convex optimization algorithm, and it performs better in terms of stability. However, its run-time grows as the dimension increases. Many improved greedy methods, such as the SAMP and SASP algorithms, perform better in both stability and run-time. Moreover, the RIP criterion has been proposed for $\Phi$ to guarantee that $y = \Phi x$ has a unique solution corresponding to the measurement vector. The sensing matrix $\Phi$ satisfies the RIP criterion of order P if there exists a constant $\delta_P \in (0, 1)$ such that

$$(1 - \delta_P)\|x\|_2^2 \le \|\Phi x\|_2^2 \le (1 + \delta_P)\|x\|_2^2 \quad (4)$$

for any P-sparse vector $x$ ($\|x\|_0 \le P$).
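The RIP criterion, which in its standard form bounds $\|\Phi x\|_2^2$ between $(1-\delta)\|x\|_2^2$ and $(1+\delta)\|x\|_2^2$ for sparse $x$, can be probed numerically. The following is a minimal sketch in plain Python, not part of the original method: the function names are hypothetical, and the $1/\sqrt{M}$ column scaling is an assumption. Sampling random sparse vectors only gives an optimistic estimate of $\delta$; it does not certify that a matrix satisfies the RIP.

```python
import math
import random


def energy_ratio(phi, x):
    """Return ||phi x||^2 / ||x||^2 for a matrix given as a list of rows."""
    y = [sum(a * b for a, b in zip(row, x)) for row in phi]
    return sum(v * v for v in y) / sum(v * v for v in x)


def empirical_isometry_bounds(phi, sparsity, trials=200, seed=0):
    """Sample random sparse vectors and return the (min, max) energy ratio seen."""
    rng = random.Random(seed)
    n = len(phi[0])
    lo, hi = math.inf, 0.0
    for _ in range(trials):
        x = [0.0] * n
        for idx in rng.sample(range(n), sparsity):
            x[idx] = rng.gauss(0.0, 1.0)
        ratio = energy_ratio(phi, x)
        lo, hi = min(lo, ratio), max(hi, ratio)
    return lo, hi


def gaussian_sensing_matrix(m, n, seed=0):
    """M x N matrix with i.i.d. N(0, 1/M) entries (assumed normalization)."""
    rng = random.Random(seed)
    scale = 1.0 / math.sqrt(m)
    return [[rng.gauss(0.0, scale) for _ in range(n)] for _ in range(m)]


if __name__ == "__main__":
    phi = gaussian_sensing_matrix(64, 128)
    lo, hi = empirical_isometry_bounds(phi, sparsity=5)
    # How far the sampled ratios stray from 1 gives a rough lower estimate of delta.
    print(lo, hi, max(1.0 - lo, hi - 1.0))
```

For a matrix with orthonormal columns both returned ratios equal 1, which corresponds to $\delta = 0$.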

As mentioned above, greedy algorithms and convex optimization algorithms are the two main methods of signal reconstruction. Unlike the convex optimization method, the greedy method does not constrain the sparsity of the signal, but directly seeks the sparse solution by means of matching. It has many advantages, such as simple implementation, low computational complexity and fast convergence. It therefore meets the requirements of underwater acoustic signal processing for the sonar system.
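The greedy matching idea can be illustrated with plain OMP, the baseline on which the later algorithms build. The sketch below is not the proposed SAVSMP algorithm. It assumes the sparsity is known, represents the sensing matrix as a list of rows, and solves the least-squares step through the normal equations; the helper names are hypothetical.

```python
def solve_least_squares(cols, y):
    """Solve min ||A c - y||_2 via the normal equations; A is a list of columns."""
    k = len(cols)
    # Augmented system [A^T A | A^T y].
    aug = [[sum(a * b for a, b in zip(cols[i], cols[j])) for j in range(k)]
           + [sum(a * b for a, b in zip(cols[i], y))] for i in range(k)]
    for p in range(k):  # Gaussian elimination with partial pivoting
        pivot = max(range(p, k), key=lambda r: abs(aug[r][p]))
        aug[p], aug[pivot] = aug[pivot], aug[p]
        for r in range(p + 1, k):
            factor = aug[r][p] / aug[p][p]
            for c in range(p, k + 1):
                aug[r][c] -= factor * aug[p][c]
    coef = [0.0] * k
    for p in reversed(range(k)):  # back substitution
        tail = sum(aug[p][c] * coef[c] for c in range(p + 1, k))
        coef[p] = (aug[p][k] - tail) / aug[p][p]
    return coef


def omp(phi, y, sparsity, tol=1e-10):
    """Orthogonal matching pursuit; phi is a list of M rows with N entries each."""
    m, n = len(phi), len(phi[0])
    columns = [[phi[i][j] for i in range(m)] for j in range(n)]
    residual = list(y)
    support, coef = [], []
    for _ in range(sparsity):
        # Pick the atom most correlated with the current residual.
        scores = [abs(sum(a * b for a, b in zip(col, residual))) for col in columns]
        for j in support:
            scores[j] = -1.0  # never reselect an atom
        best = max(range(n), key=lambda j: scores[j])
        support.append(best)
        # Re-fit all selected atoms jointly, then update the residual.
        chosen = [columns[j] for j in support]
        coef = solve_least_squares(chosen, y)
        residual = [y[i] - sum(c * col[i] for c, col in zip(coef, chosen))
                    for i in range(m)]
        if sum(r * r for r in residual) ** 0.5 < tol:
            break
    x_hat = [0.0] * n
    for j, c in zip(support, coef):
        x_hat[j] = c
    return x_hat, support
```

Each iteration costs one correlation pass over all N atoms plus a small least-squares solve, which is why the number of iterations dominates the run-time of this family of algorithms.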

2.2. Ship Radiated Noise

In this paper, the mathematical model of the underwater acoustic signal received by the sonar system is established according to the characteristics of its frequency spectrum. When a ship is sailing, the propeller, the motor and other propulsion devices, as well as the motion of the hull through the water, radiate noise into the water. According to the noise source, ship-radiated noise is divided into three types: propeller noise, hydrodynamic noise and mechanical noise. Its frequency spectrum includes a line spectrum and a continuous spectrum.

The line spectrum is mainly formed by mechanical vibration, propeller resonance and the blades cutting the flow. The frequency $f_m$ of the line spectrum is related to the speed $v$ (the shaft rotation rate, in revolutions per second) and the number of propeller blades $l$, which satisfy the following relationship [17]:

$$f_m = m\,l\,v \quad (5)$$

where m denotes the harmonic number.

The continuous spectrum is mainly caused by the wide-band noise generated by propeller cavitation, that is, by the random collapse of a large number of bubbles near the propeller. The mathematical model of the ship-radiated noise $s(t)$ can be expressed as

$$s(t) = s_c(t) + s_l(t) \quad (6)$$

where $s_c(t)$ and $s_l(t)$ are the time-domain expressions of the continuous spectrum and the line spectrum, respectively.

There are many algorithms for signal reconstruction. The two main methods are the greedy algorithm and convex optimization algorithm, as mentioned above. Unlike the convex optimization algorithm, the greedy algorithm does not restrict the sparse nature of the signal. Rather, it uses the matching method to find it.

3. SAVSMP Algorithm

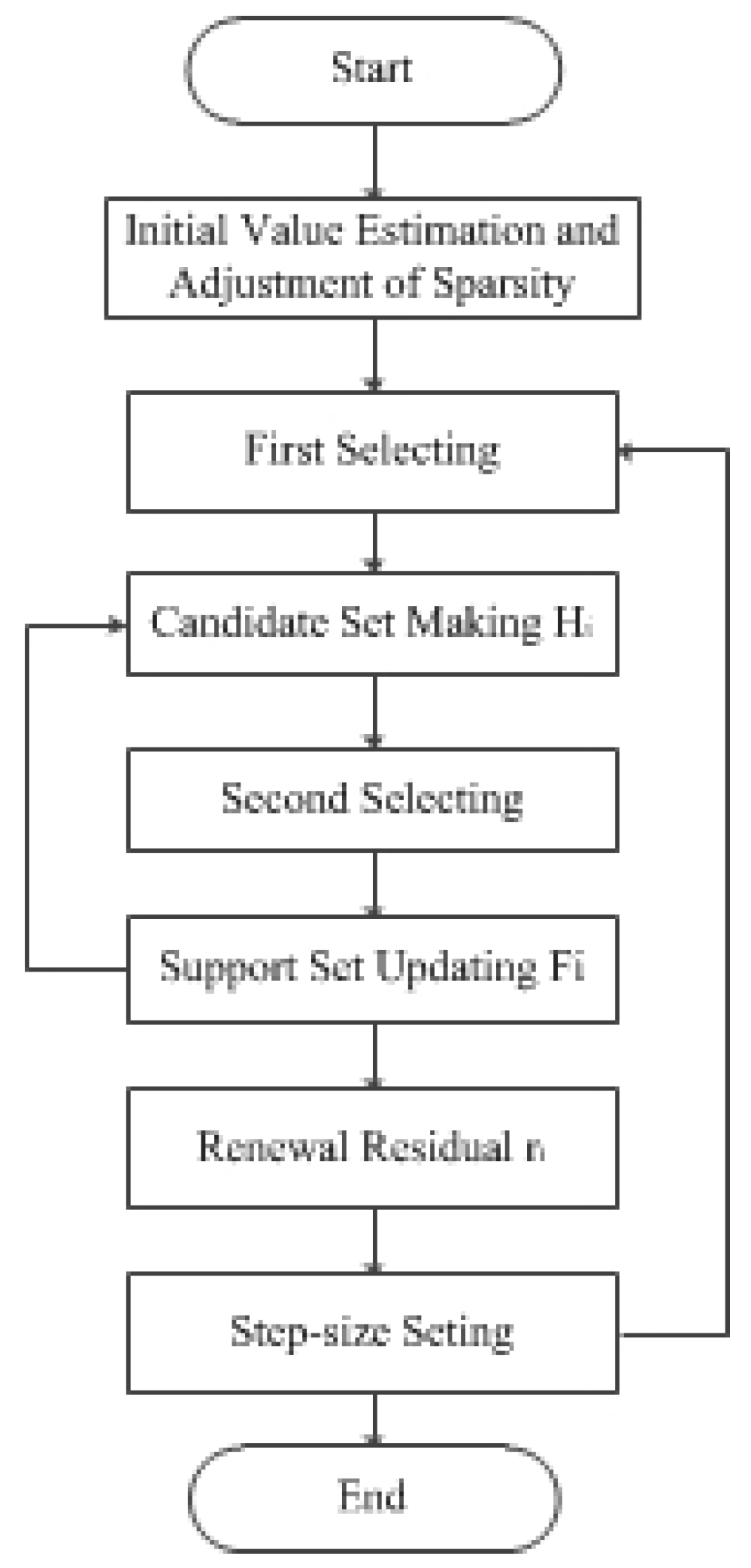

To overcome the shortcomings of the SAMP and SASP algorithms in underwater acoustic signal reconstruction, a sparse adaptive variable step size matching pursuit (SAVSMP) algorithm is proposed for the sonar system. The flow chart of the main procedure is shown in Figure 1. The algorithm comprises initial sparsity estimation, first selection, candidate set construction, second selection, support set updating, residual updating and step-size setting. Its novelty lies in the new methods employed for initial sparsity estimation, first selection and step-size setting.

3.1. Initial Sparsity Estimation

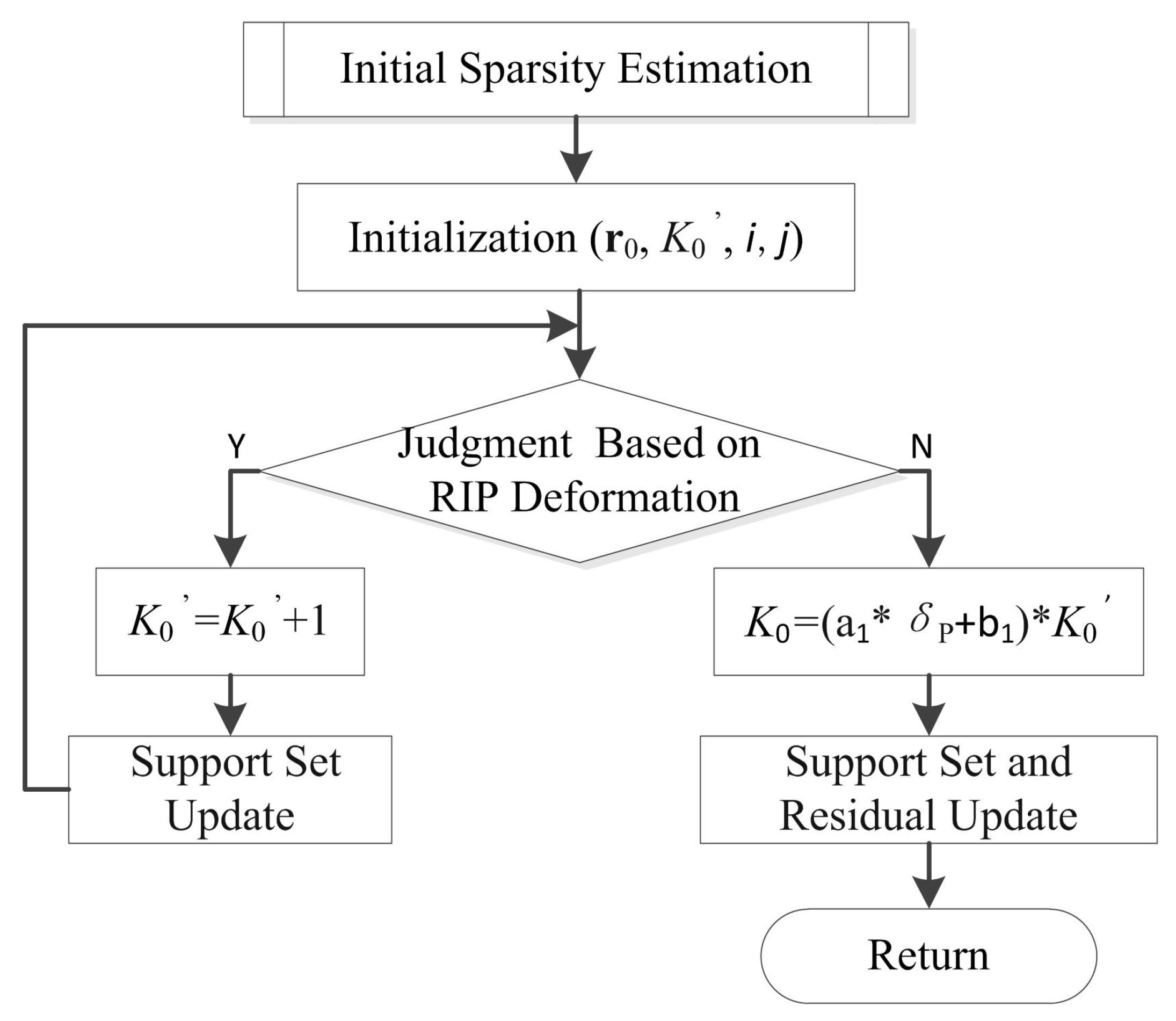

The flow chart of the initial sparsity estimation is shown in Figure 2.

There are several stages in the recovery process, and each stage includes several iterations. Here,

i denotes the iteration index, and

j indicates the stage index. Moreover,

and

are denoted as the initial residual and initial sparsity. The initial sparsity estimation is similar to that of the SASP and AStMP algorithms, which select atoms by the RIP criterion. The criterion is formally stated as Theorem 1, which is proved in [14].

Theorem 1. If the sensing matrix Φ satisfies the RIP criterion with the parameters , when , one has

where is the support set consisting of selected atoms. Furthermore, we have Theorem 2 as follows.

Theorem 2. If the sensing matrix Φ satisfies the deformation of the RIP criterion with the parameters when , one has

If Equation (8) is satisfied, the initial sparsity

=

+1. The support set will be updated as

After updating, the algorithm returns to the judgment step. The initial sparsity estimation is complete when the criterion is no longer met.

It can be seen from the Equation (8) that the larger the is, the smaller the would be; on the contrary, the smaller the is, the larger the that could be obtained. Therefore, should be adjusted to in order to prevent over-estimation and under-estimation of the sparsity estimation. Taking and as the independent variable and dependent variable, respectively, the function can be obtained by the MATLAB curve fitting, + , . The Sum of Squares due to Error (SSE) takes the value of 4.437 × 10, which means the goodness-of-fit of the obtained formula is high.

The over-estimated value is reduced when is smaller, and the under-estimated value is increased when is larger. However, is halved whatever value the RIP constant takes in the AStMP algorithm which will result in under-estimation. After updating the support set and the residual, the subprogramme returns.

3.2. First Selecting

Greedy algorithms usually use the inner product criterion to measure the similarity between vectors. Suppose $x$ and $y$ are two n-dimensional vectors; the inner product criterion between them can be defined as follows [18]:

$$\cos(x, y) = \frac{\sum_{i=1}^{n} x_i y_i}{\sqrt{\sum_{i=1}^{n} x_i^2}\,\sqrt{\sum_{i=1}^{n} y_i^2}} \quad (10)$$

It can be seen that the inner product criterion selects atoms by calculating the cosine of the angle between the atoms of the sensing matrix and the residual. The greater the absolute value, the more relevant the residual is to the selected atom. However, this matching method cannot reflect the effect of the important components of the amplified data when measuring the similarity of signals [19]. Therefore, a better method is needed to select the atom that best matches the residual.

The Dice coefficient criterion is introduced here, and its definition is as follows [20,21,22]:

$$\mathrm{Dice}(x, y) = \frac{2\sum_{i=1}^{n} x_i y_i}{\sum_{i=1}^{n} x_i^2 + \sum_{i=1}^{n} y_i^2} \quad (11)$$

It can be seen from Equations (10) and (11) that cos(x,y) and Dice(x,y) have the same range of absolute values, [0, 1], and that both equal 1 when the two vectors are equal. The denominator of the inner product criterion is $\sqrt{\sum_{i} x_i^2 \sum_{i} y_i^2}$, which is the geometric mean of the square sums of the two vectors, while the denominator of the Dice coefficient criterion is $\frac{1}{2}\left(\sum_{i} x_i^2 + \sum_{i} y_i^2\right)$, which is the arithmetic mean of the square sums of the two vectors. According to the theory of means, the geometric mean generally reflects the average trend of the overall sample, while the arithmetic mean represents the unbiased estimate of the individual expectations. Consequently, the arithmetic mean is better at avoiding partial information loss and preserving the integrity of the signal [23]. The Dice coefficient criterion is therefore more conducive to selecting the appropriate atom than the inner product criterion, because it reflects the role of each element of the signal [24,25]. Accordingly, the proposed SAVSMP algorithm uses the following formula to match the best atoms [26,27]:

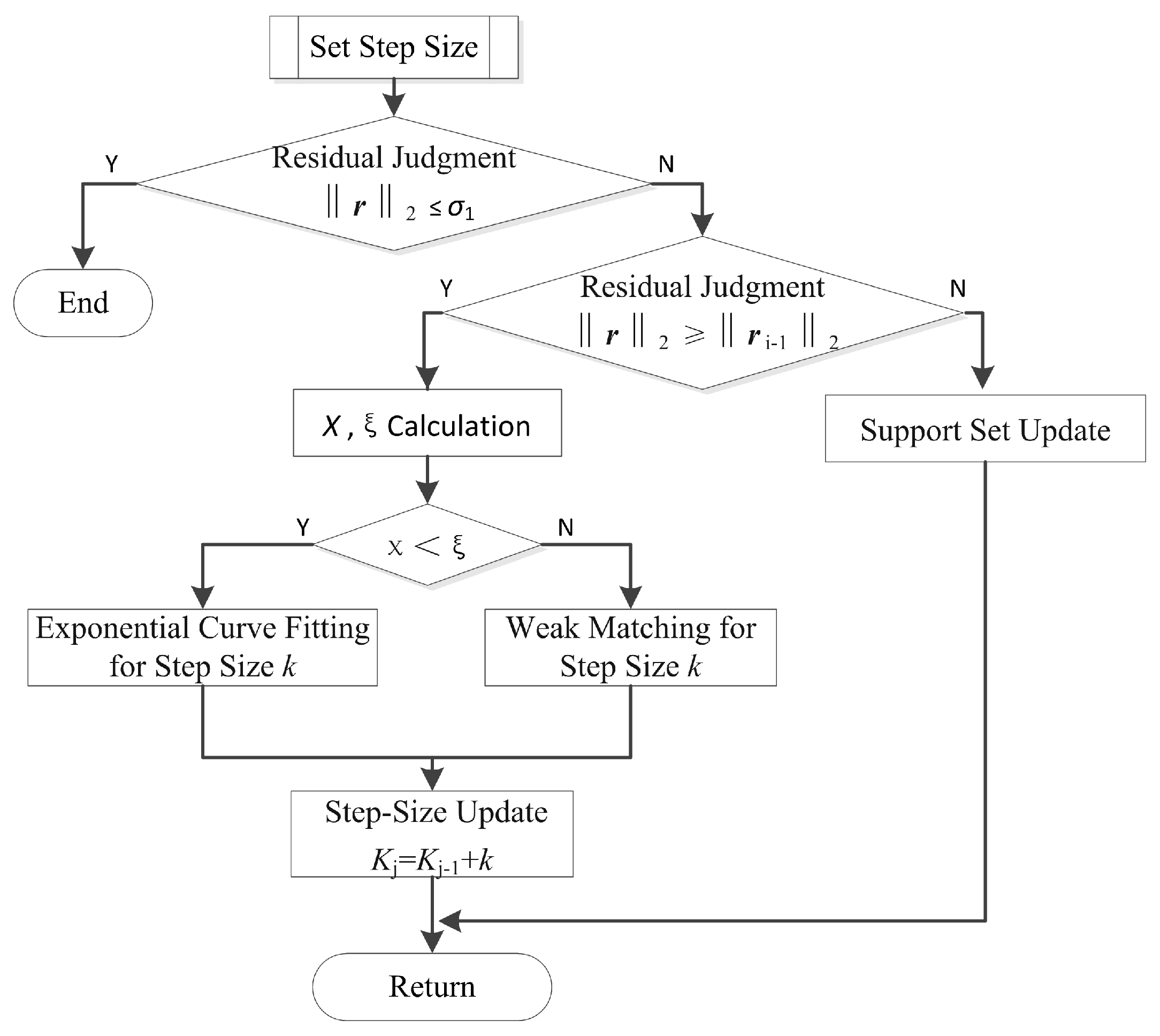
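The two similarity criteria compared in this section can be placed side by side in a few lines. This is an illustrative sketch only: the function names are hypothetical, and `best_atom` shows just the matching step on a list of candidate columns, not the full SAVSMP selection procedure.

```python
import math


def cosine_similarity(x, y):
    """Inner-product criterion: <x, y> / sqrt(sum x_i^2 * sum y_i^2)."""
    dot = sum(a * b for a, b in zip(x, y))
    return dot / math.sqrt(sum(a * a for a in x) * sum(b * b for b in y))


def dice_coefficient(x, y):
    """Dice criterion: 2 <x, y> / (sum x_i^2 + sum y_i^2)."""
    dot = sum(a * b for a, b in zip(x, y))
    return 2.0 * dot / (sum(a * a for a in x) + sum(b * b for b in y))


def best_atom(columns, residual, criterion):
    """Index of the column whose |criterion(column, residual)| is largest."""
    scores = [abs(criterion(col, residual)) for col in columns]
    return max(range(len(columns)), key=lambda j: scores[j])


if __name__ == "__main__":
    x = [1.0, 2.0, 3.0]
    scaled = [2.0 * v for v in x]
    # Identical vectors score 1 under both criteria.
    print(cosine_similarity(x, x), dice_coefficient(x, x))
    # Cosine ignores the scale mismatch; Dice is reduced by it.
    print(cosine_similarity(x, scaled), dice_coefficient(x, scaled))
```

Because the arithmetic mean is never smaller than the geometric mean, the magnitude of the Dice value never exceeds that of the cosine value, and the two coincide only when both vectors have the same energy.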

3.3. Step-Size Setting

An adaptive step size is used in the SAVSMP algorithm, which effectively reduces the running time. The residual

is compared with the threshold

, firstly. If

, the program terminates; otherwise, the residuals of two adjacent iterations are compared. If

, calculate the value of

, which is defined as

. At the initial iteration of the greedy algorithms, the value of

is equal to 1 since the residual

is assigned as

at first. The residual

decreases gradually as the number of iterations increases, and the iteration will end when

reaches the threshold. Therefore, it can be concluded as

. This is because most of the effective atoms are put into the support set when

is about 10 [

28,

29],

, where

is set as the threshold to choose the method for the step-size updating. SAVSMP uses the exponential curve fitting method if

is smaller than

; otherwise, it employs the weak matching method to update the step-size. The flow chart of the step-size setting is shown in Figure 3.

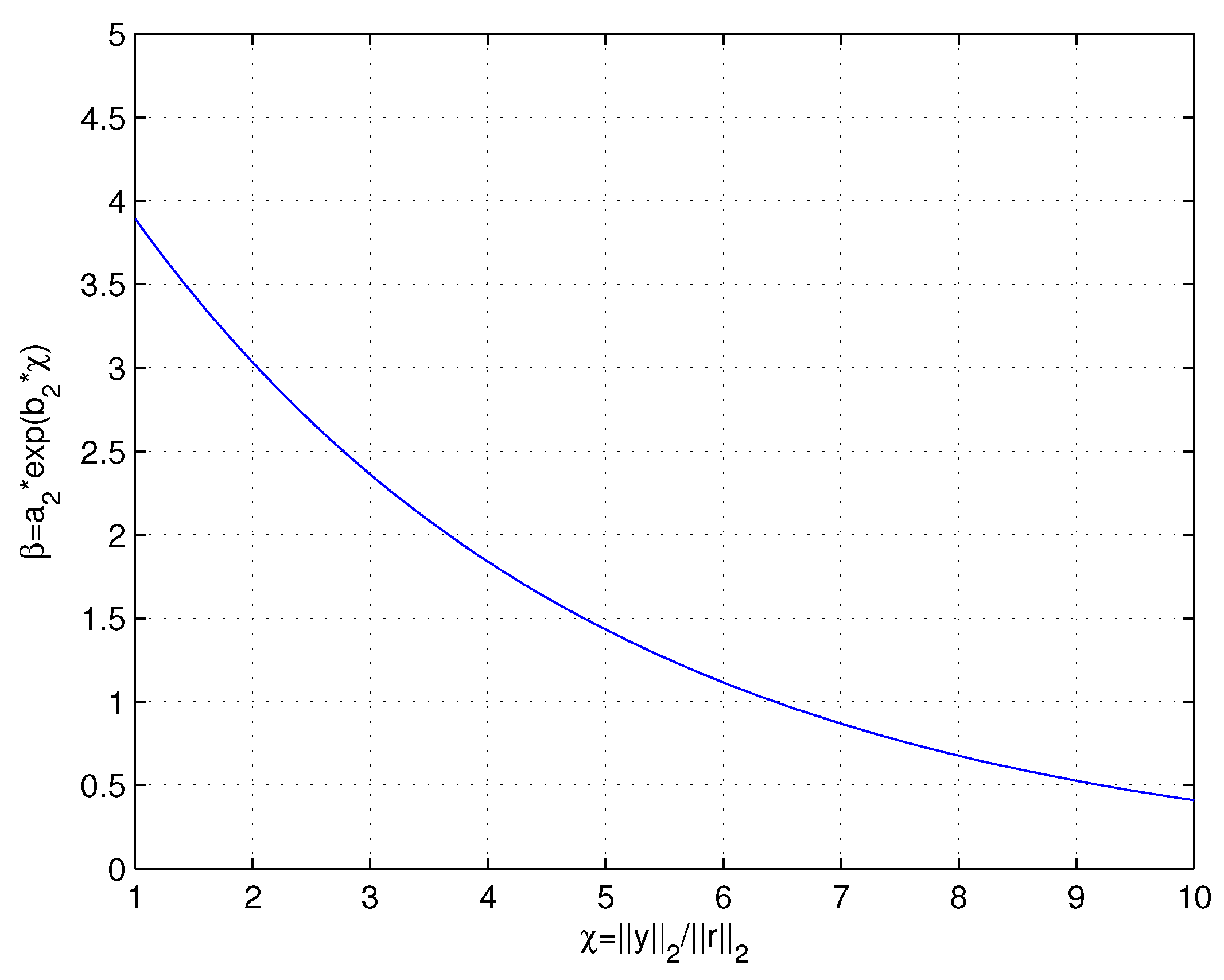

In the exponential curve fitting method, the residual energy ratio

and the step-size scaling ratio

are set as the independent variable and dependent variable. According to the expected change relation that

decreases exponentially with the increase of

, the function

,

, where

is obtained by MATLAB simulation. The SSE of the function is about 0.011, which indicates that the obtained function fits well. The relation between

and

is shown in Figure 4. It can be seen that the step size changes nonlinearly rather than growing linearly.

A weak matching method is employed to approach the true sparsity level closely. The algorithm selects the maximum value

and the intermediate value

of the inner product v between the observation matrix

and the residual

to determine the threshold setting. The definitions of

v,

and

are shown below:

The threshold

is set as

, where

,

,

, and

are set as 2, 3, and 3, respectively, considering a suitable threshold

could help to select atoms more efficiently. Atoms are selected and put into the supporting set when

. The step-size can be set by the parameter

, and

can balance reconstruction accuracy and computation speed, as the experimental results show [28].

4. Performance Analysis of SAVSMP Algorithm

The SAVSMP algorithm consists of two parts: initial sparsity estimation and signal reconstruction. The Dice coefficient criterion facilitates the selection of better-matched atoms. In addition, because the step size changes adaptively, the reconstruction accuracy of SAVSMP is comparable to that of SAMP with a fixed step size. Using the initial sparsity estimation in SAVSMP is equivalent to indirectly reducing the sparsity of the target signal. The complexity of the initial sparsity estimation is mostly concentrated in the calculation of M projections, so it is relatively small. However, reconstructing the target signal through the least squares method accounts for a significant portion of the computation. As a result, the overall complexity of SAVSMP is determined by the number of stages. Each stage can be considered a single SP algorithm with a small sparsity.

The proposed SAVSMP algorithm is summarized in Algorithm 1. It can be seen that the computation complexity of initial sparsity estimation part is mainly focused on the projection operation of Equation (9). The computation complexity of signal reconstruction part is mainly concentrated in the projection operation of Equation (12), while the signal reconstruction using least squares only accounts for a small amount of computation. Therefore, the computational complexity of SAVSMP algorithm depends on the computation of Equations (9) and (12). According to the analysis, Equation (9) needs

iterations and Equation (12) needs

iterations. Because the computation complexity of one projection operation is

, the computation complexity of SAVSMP algorithm is

. Since the step size increases at a larger slope before the threshold and linearly at a smaller slope after the threshold,

is obtained. Comparing the computational complexity of the OMP Algorithm

[

9], the SAMP algorithm

[

13] and the SASP algorithm

[

14], it can be concluded that the proposed SAVSMP algorithm has lower computational complexity.

Each stage of the proposed algorithm can be considered as the SP algorithm which can reconstruct the signal via finite iterations [

10]. Therefore, SAVSMP can also reconstruct

from

via finite stages if

satisfies the RIP criterion with the parameter

. Since

is monotonically decreasing, the algorithm will converge to a local minimum.

| Algorithm 1 Process of the SAVSMP Algorithm. |

| Input: Measurement vector y, Sensing matrix , RIP constant , |

| parameter ,, . |

| Initialization: |

| If |

| , |

| end if |

| , , |

| |

| repeat:,,, |

| , |

| if |

| quit the iteration. |

| else if |

| , |

| if |

| if |

| , |

| else , |

| , , |

| , |

| , |

| , |

| end if |

| else |

| |

| end if |

| |

| else |

| |

| end if |

| output: |

5. Simulation Results

The performance of the SAVSMP algorithm is evaluated by comparison with the SAMP, SASP, OMP, and AStMP algorithms. A Gaussian sparse signal is used with length N = 256, sparsity P = 44 and number of measurements M = 128. The sensing matrix is a Gaussian random matrix with =1, = 0. The parameters of , , take the value of , 10, 0.9, respectively. The background noise is Gaussian white noise, and the array is a uniform linear array of nine elements with an element spacing of half a wavelength. All simulations are implemented in Matlab 2011a on a PC with a 3.50 GHz Intel Core i3 processor and 4.0 GB of memory running Windows 7.

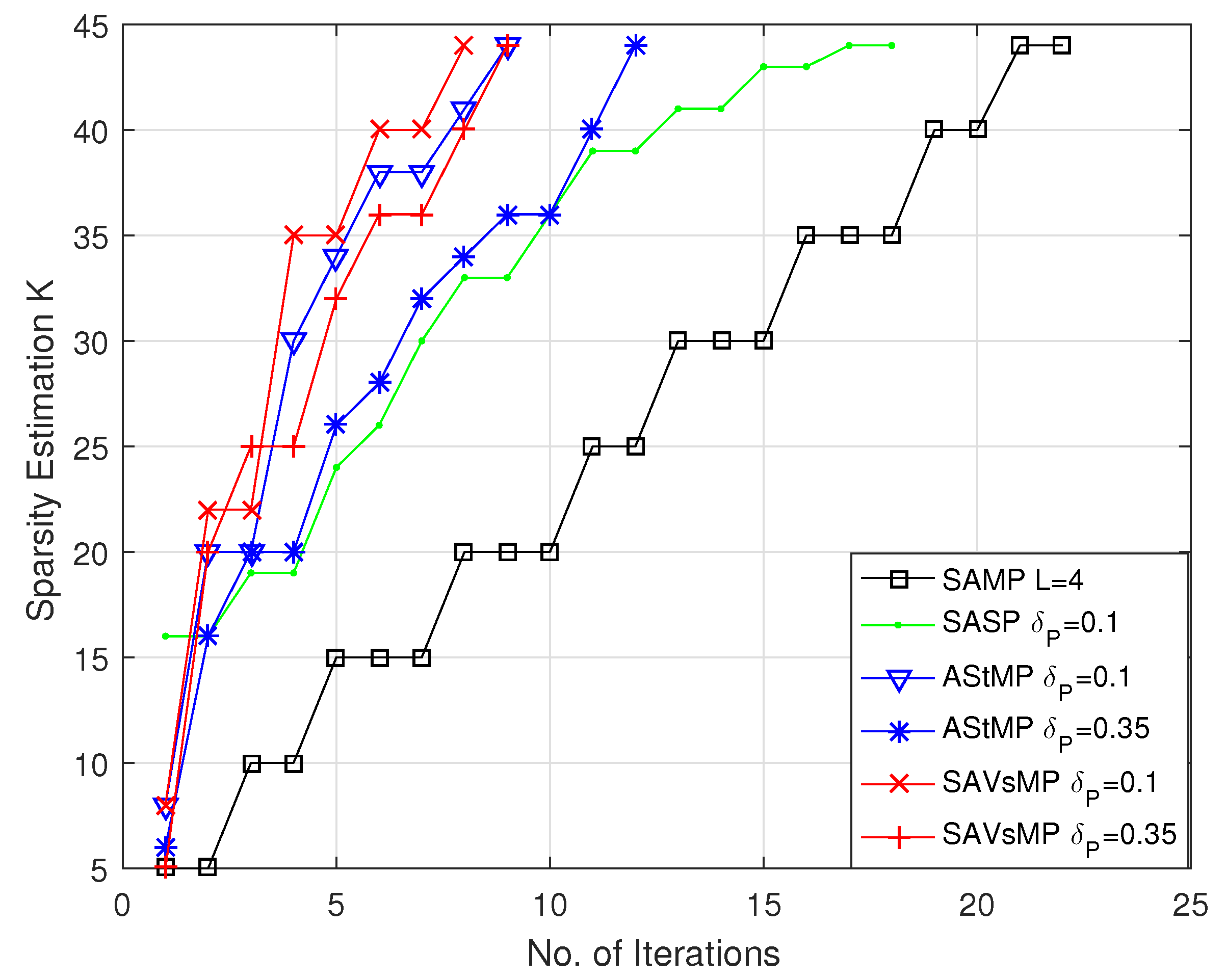

5.1. Sparsity Estimation

The initial sparsity estimation

of the SAVSMP algorithm is shown in

Figure 5. Taking a smaller value for

will lead to overestimation, whereas taking a larger value for

will lead to underestimation. The SAVSMP algorithm adjusts

to

, and

. As a result, the over-estimated value is reduced and the under-estimated value is increased. For example,

is halved when

takes the value of 0.1 and tripled when

takes the value of 0.35. However,

is halved whatever value the RIP constant

takes in AStMP algorithm.

Estimated sparsity

after treatment of the SAVSMP and AStMP algorithms are shown in

Figure 6. Compared with the AStMP algorithm, the SAVSMP algorithm overcomes the under-estimation and over-estimation of initial sparsity estimation which can easily lead to higher iteration times and lower reconstruction accuracy. In addition, the SAVSMP algorithm eliminates the large influence of the RIP parameter

on the estimated value, which mainly focuses on values between 20 and 25 whatever

is.

5.2. Iteration Times

From the analysis of the proposed algorithm, it can be deduced that fewer iterations mean lower computational complexity. The iteration counts of the different algorithms are compared in Figure 7. The SAVSMP algorithm needs 6 and 8 iterations, respectively, when

takes the smaller value of 0.1 and larger value of 0.35. However, taking the value of 0.1 or 0.35 for

affects the AStMP algorithm, which needs 8 or 12 iterations, respectively. The SASP algorithm needs 18 iterations, which is nearly three times as many as the SAVSMP algorithm. The SAMP algorithm requires 23 iterations, the most among these algorithms. Therefore, the SAVSMP algorithm needs the fewest iterations, and

has little influence on the iteration times.

5.3. Reconstruction Accuracy

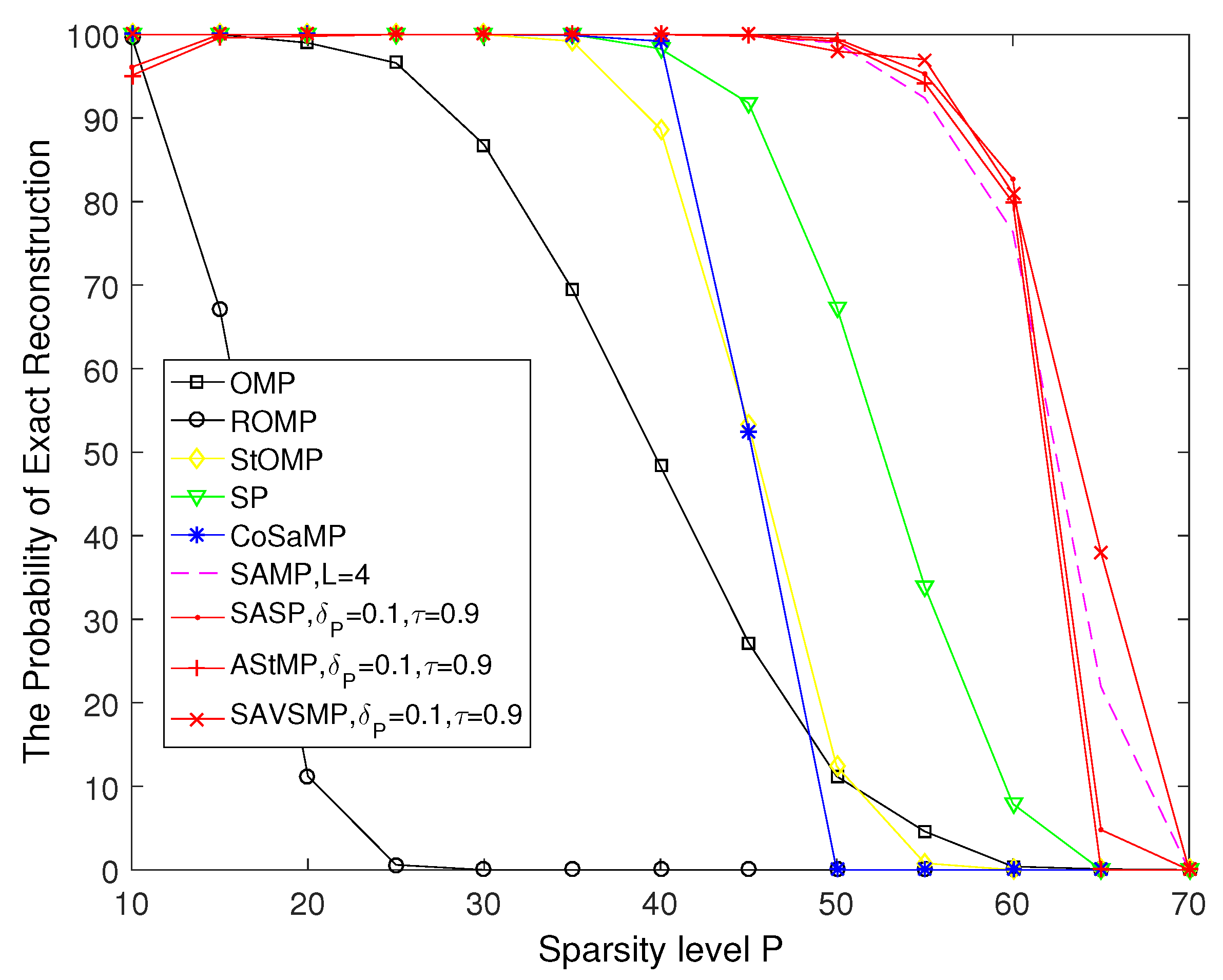

To ensure the validity of the experimental data, 500 independent simulations are employed to calculate the reconstruction probability of the OMP, ROMP, StOMP, SP, CoSaMP, SAMP, SASP, AStMP, and SAVSMP algorithms.

The probability of exact reconstruction versus the sparsity level P of different algorithms is shown in Figure 8. The reconstruction probability decreases as P increases. Compared with the other algorithms, the OMP algorithm performs the worst. Although the ROMP algorithm is more accurate than the OMP algorithm, it is much less accurate than the StOMP, SP, and CoSaMP algorithms, which can achieve

reconstruction accuracy when P is small and drop dramatically when

. The reconstruction probability of the SAMP, SASP, AStMP, and SAVSMP algorithms is close when P is small; however, the SAVSMP algorithm performs better than other algorithms when P is greater than 60.

The reconstruction probability versus the number of measurements M of different algorithms is shown in Figure 9. It can be seen that the reconstruction probability increases as M increases. Compared with the other algorithms, the ROMP algorithm performs the worst. Although the OMP algorithm is more accurate than the ROMP algorithm, it is much less accurate than the StOMP, SP and CoSaMP algorithms, which achieve 100% reconstruction accuracy when M is larger than 95. The reconstruction probabilities of the SAMP, SASP, AStMP, and SAVSMP algorithms are close when M is larger than 80; however, the reconstruction probability of the SAVSMP algorithm is higher than that of the other algorithms when M is smaller than 75. Therefore, the SAVSMP algorithm shows obvious advantages over the other algorithms in terms of reconstruction probability.

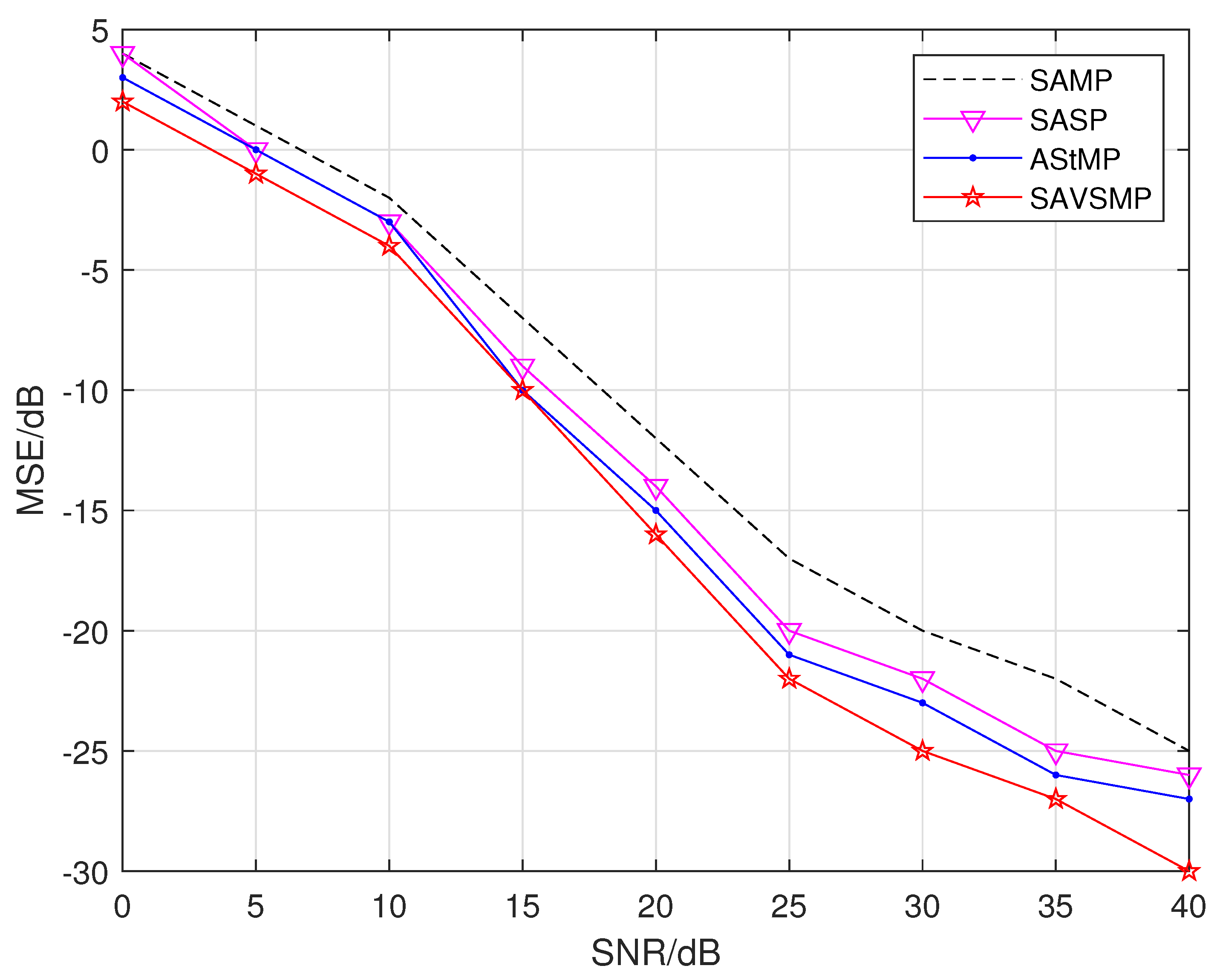

The mean square error (MSE) versus the signal-to-noise ratio (SNR) of the SAMP, SASP, AStMP, and SAVSMP algorithms is shown in Figure 10. The reconstruction error of each algorithm decreases gradually as the SNR increases. The MSE of the SAVSMP algorithm is lower than that of the other algorithms, and its advantage becomes more obvious as the SNR increases. This indicates that the proposed algorithm is robust to noise to a certain degree.
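An MSE-versus-SNR experiment of this kind needs two building blocks: adding white Gaussian noise at a prescribed SNR and measuring the error. The sketch below makes two assumptions that are not stated in the text: the SNR is defined as the ratio of average signal power to noise variance, and the MSE is the mean of the squared sample differences. All function names are hypothetical.

```python
import math
import random


def signal_power(x):
    """Average power of a real-valued sequence."""
    return sum(v * v for v in x) / len(x)


def add_white_gaussian_noise(x, snr_db, seed=0):
    """Return x plus zero-mean Gaussian noise scaled to the requested SNR in dB."""
    rng = random.Random(seed)
    noise_std = math.sqrt(signal_power(x) / (10.0 ** (snr_db / 10.0)))
    return [v + rng.gauss(0.0, noise_std) for v in x]


def mean_square_error(x, x_hat):
    """Mean of the squared sample differences between x and x_hat."""
    return sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x)


if __name__ == "__main__":
    clean = [math.sin(0.01 * k) for k in range(20000)]
    for snr_db in (0.0, 10.0, 20.0):
        noisy = add_white_gaussian_noise(clean, snr_db)
        # With no reconstruction step, the MSE is simply the injected noise power.
        print(snr_db, mean_square_error(clean, noisy))
```

In a full experiment, the noisy measurements would be passed to each reconstruction algorithm and the MSE computed between the original and the reconstructed signals.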

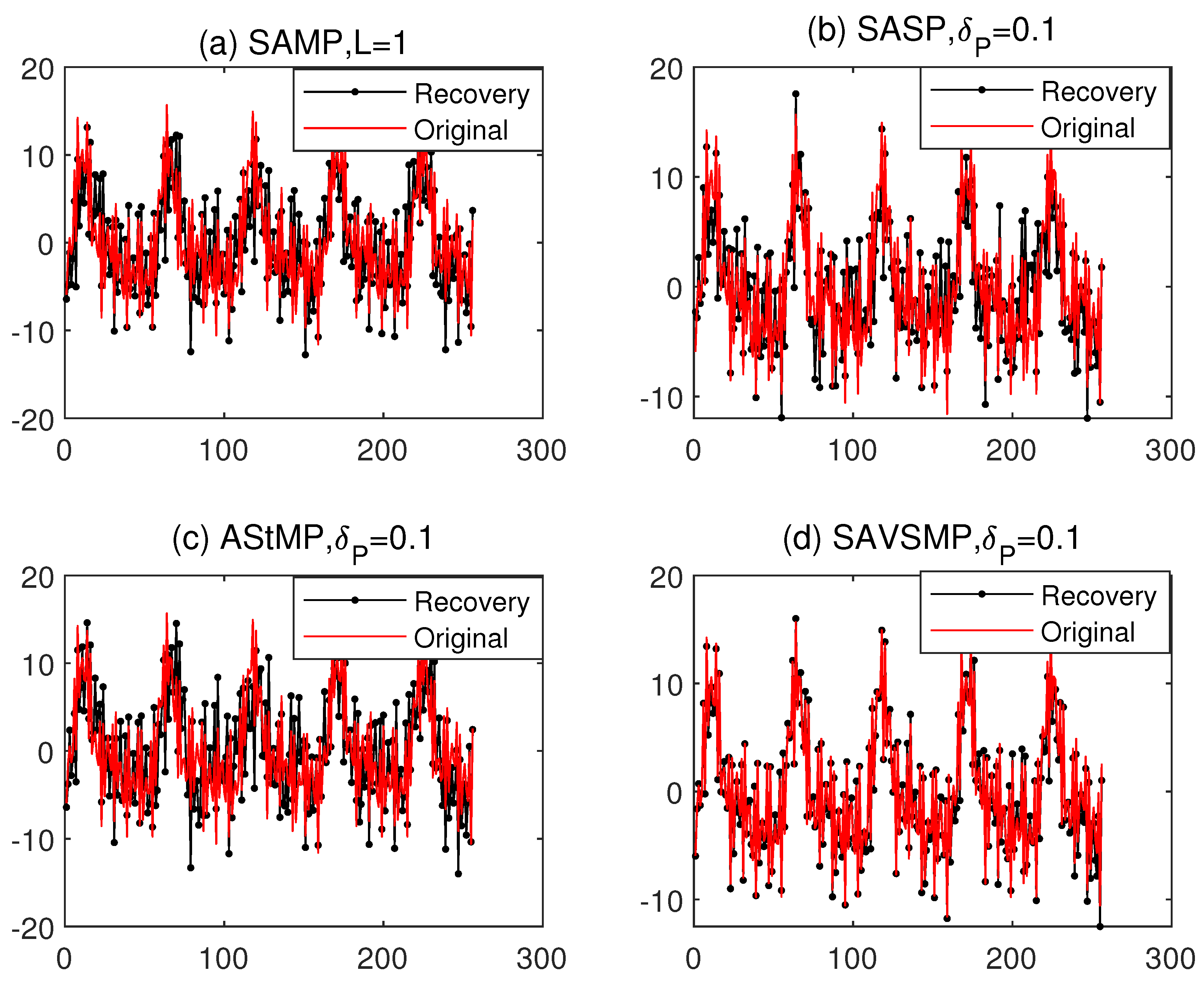

5.4. Underwater Acoustic Signal Reconstruction Experiment

To verify the applicability of the algorithm to non-Gaussian sparse signals, this section uses the underwater acoustic signal radiated by a ship to test the application value of the SAVSMP algorithm. The ship-radiated noise, which obeys a non-Gaussian distribution, consists of a line spectrum and a continuous spectrum.

Suppose that the number of propeller blades is 3 and the rotation speed is 420 r/min, so that the shaft frequency is 420/60 = 7 Hz. Since the fundamental frequency is set as 21 Hz, the second, third, and fourth harmonics are 42 Hz, 63 Hz, and 84 Hz, respectively [17]. In addition, on the premise of satisfying the acoustic characteristics of the line spectrum, 150 Hz, 450 Hz, and 800 Hz are selected from the range of 100–1000 Hz [30]. The amplitudes of the seven selected frequency points are set to 3.5, 3.1, 2.2, 1.7, 1.5, 2.2, and 2, respectively, and the initial phases in radians are set to 0.1, 2, −1, 3, 1, 0, and −1, respectively. The peak frequency of the continuous spectrum is set as 900 Hz, and 11 discrete frequency points from 0–900 Hz and 5 discrete frequency points from 1000–5000 Hz are selected. Given the desired response at these points, the corresponding frequency response of a finite impulse response (FIR) filter can be obtained. The continuous spectrum is obtained by passing white Gaussian noise through the filter. Adding the line spectrum and the continuous spectrum gives the spectrum of the ship-radiated noise.

The reconstructions of the ship-radiated noise by the SAMP, SASP, AStMP, and SAVSMP algorithms are shown in Figure 11. The SAVSMP algorithm achieves underwater acoustic signal reconstruction with higher precision.

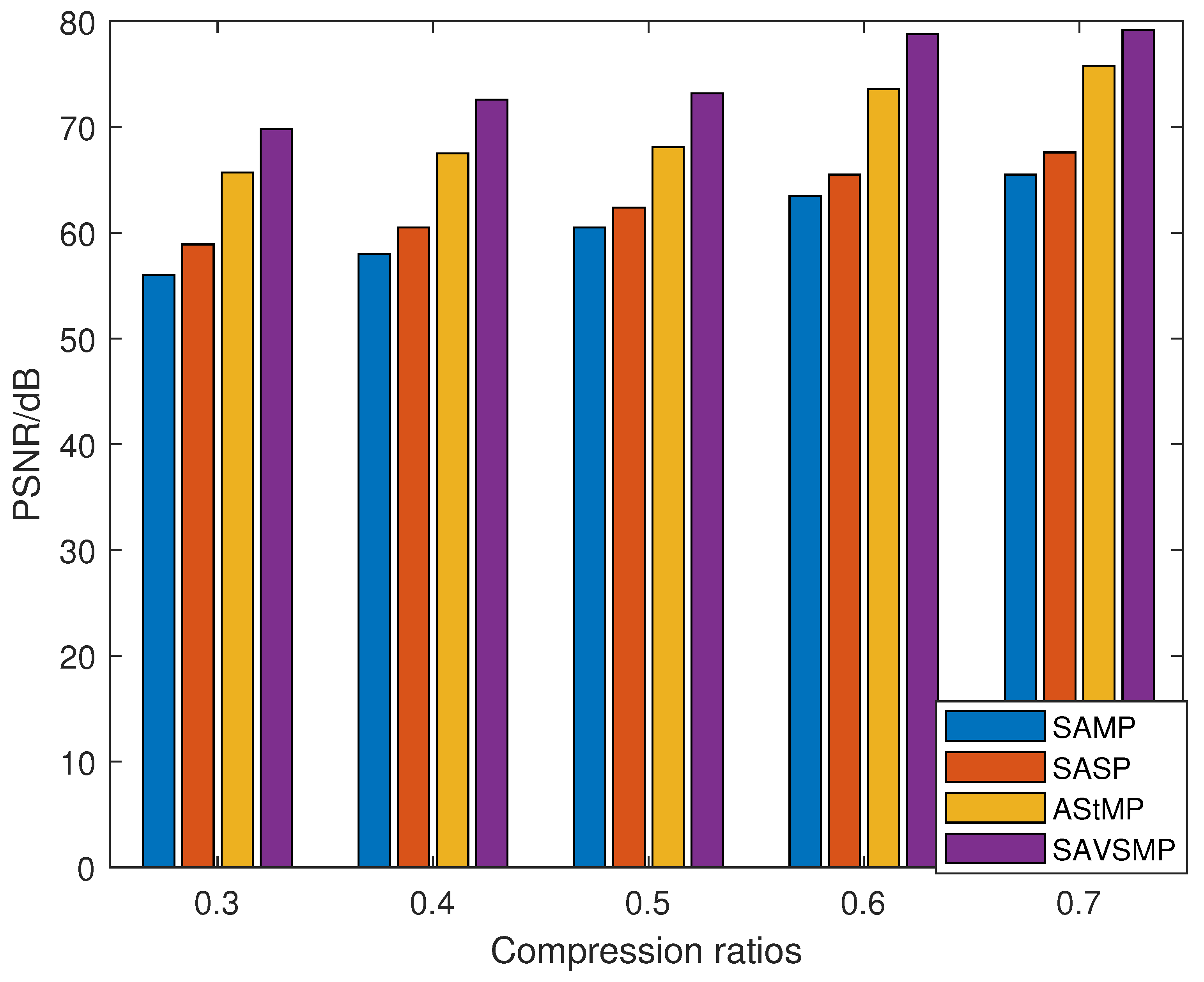

In addition to the reconstructed signal contrast, the peak signal-to-noise ratio (PSNR) will be used to evaluate the quality of the reconstruction signal. The PSNR is expressed as [

31]

where Framesize represents the total number of pixels of the target signal, and

and

represent the nth pixel value of the original signal and the reconstructed signal, respectively. From Equation (16), we can see that a larger PSNR means higher reconstruction quality. The PSNR versus the compression ratio of the four algorithms is shown in Figure 12. The figure reveals that the PSNR increases as the compression ratio increases. Compared with the other algorithms in the figure, the SAVSMP algorithm has the highest PSNR for underwater acoustic signal reconstruction.
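A PSNR computation of this kind can be sketched as follows, using the common definition of ten times the base-10 logarithm of the squared peak value over the mean squared error. The peak value is left as a parameter; defaulting it to the largest absolute sample of the original signal is an assumption of this sketch, as is the function name.

```python
import math


def psnr(original, reconstructed, peak=None):
    """PSNR in dB; `peak` defaults to the largest absolute value of `original`."""
    if len(original) != len(reconstructed):
        raise ValueError("signals must have the same length")
    mse = sum((a - b) ** 2 for a, b in zip(original, reconstructed)) / len(original)
    if mse == 0.0:
        return math.inf  # perfect reconstruction
    if peak is None:
        peak = max(abs(v) for v in original)
    return 10.0 * math.log10(peak * peak / mse)


if __name__ == "__main__":
    x = [0.0, 10.0, -4.0, 2.0]
    print(psnr(x, [0.0, 9.0, -4.0, 2.0]))   # small error, higher PSNR
    print(psnr(x, [1.0, 7.0, -2.0, 2.0]))   # larger error, lower PSNR
```

Since the MSE appears in the denominator, a smaller reconstruction error always yields a larger PSNR for a fixed peak value.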

6. Conclusions

In this work, a novel sparsity adaptive algorithm, SAVSMP, for underwater acoustic signal reconstruction has been presented. Since the SAVSMP algorithm estimates the sparsity by the RIP criterion, it overcomes the disadvantage of previous algorithms, which require prior information on the signal sparsity. SAVSMP selects atoms into the support set with an adaptive step size that converges to the real sparsity quickly. To avoid over-estimation or under-estimation, SAVSMP performs the initial sparsity estimation adaptively by a curve fitting method. Both the theoretical analysis and the systematic simulations demonstrate that the algorithm can accurately reconstruct an ordinary random signal with low complexity. Given its significant advantage of high PSNR, SAVSMP can be used to reconstruct underwater acoustic signals received by the sonar system.

Author Contributions

Conceptualization, N.L.; Data curation, N.L.; Formal analysis, N.L.; Investigation, X.Y.; Methodology, X.Y.; Project administration, H.L.; Resources, H.L.; Software, H.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by National Natural Science Foundation of China Nos. 11173010 and U1531101.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

We would like to acknowledge Wenyun Gao, Xiaofei Niu, Wei Fu for their support during data collection, figures preparing and the English proofreading.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Gurley, K.; Kijewski, T.; Kareem, A. First- and Higher-Order Correlation Detection Using Wavelet Transforms. J. Eng. Mech. 2003, 129, 188–201.

- Duda, T.F.; Collis, J.M.; Lin, Y.T.; DeFerrari, H.A. Horizontal Coherence of Low-Frequency Fixed-Path Sound in a Continental Shelf Region with Internal-Wave Activity. J. Acoust. Soc. Am. 2012, 131, 1782–1797.

- Yang, T.C. Temporal Coherence of Sound Transmissions in Deep Water Revisited. J. Acoust. Soc. Am. 2008, 124, 113–127.

- Su, X.X.; Zhang, R.H.; Li, F.H. Improvement of the longitudinal correlations of acoustical field by using the wave guide invariance. Acta Acust. 2006, 4, 305–309.

- Li, Y.; Lee, K.; Bresler, Y. Identifiability in Blind Deconvolution with Subspace or Sparsity Constraints. IEEE Trans. Inf. Theory 2016, 62, 4266–4275.

- Donoho, D.L. Compressed sensing. IEEE Trans. Inf. Theory 2006, 52, 1289–1306.

- Candes, E.J.; Wakin, M.B. An Introduction To Compressive Sampling. IEEE Signal Process. Mag. 2008, 25, 21–30.

- Candes, E.J.; Romberg, J.K.; Tao, T. Robust Uncertainty Principles: Exact Signal Reconstruction from Highly Incomplete Fourier Information. IEEE Trans. Inf. Theory 2006, 52, 489–509.

- Tropp, J.A.; Gilbert, A.C. Signal recovery from random measurements via orthogonal matching pursuit. IEEE Trans. Inf. Theory 2007, 53, 4655–4666.

- Dai, W.; Milenkovic, O. Subspace Pursuit for Compressive Sensing: Closing the Gap between Performance and Complexity; Illinois University: Champaign, IL, USA, 2008.

- Needell, D.; Vershynin, R. Signal recovery from random measurements via regularized orthogonal matching pursuit. IEEE J. Sel. Top. Signal Process. 2010, 4, 310–316.

- Donoho, D.L.; Tsaig, Y.; Drori, I. Sparse solution of underdetermined systems of linear equations by stagewise orthogonal matching pursuit. IEEE Trans. Inf. Theory 2012, 58, 1094–1121.

- Do, T.T.; Gan, L.; Nguyen, N. Sparsity adaptive matching pursuit algorithm for practical compressed sensing. In Proceedings of the 42nd Asilomar Conference on Signal, System, and Computers, Pacific Grove, CA, USA, 26–29 October 2008; pp. 581–587.

- Yang, C.; Feng, W.; Feng, H. A sparsity adaptive subspace pursuit algorithm for compressive sampling. Acta Electron. Sin. 2010, 38, 1914–1917. (In Chinese)

- Sun, T. Compressive Sensing and Imaging Applications. Ph.D. Thesis, Rice University, Houston, TX, USA, 2011.

- Candes, E.J.; Romberg, J.K.; Tao, T. Stable signal recovery from incomplete and inaccurate measurements. Commun. Pure Appl. Math. 2006, 59, 1207–1223.

- Liu, B. Principles of Underwater Acoustics; Harbin Engineering University: Harbin, China, 2009; pp. 216–231. (In Chinese)

- Salton, G.; McGill, M.J. Introduction to Modern Information Retrieval; McGraw-Hill: New York, NY, USA, 1987.

- Lei, Y. Atom Selection in Sparse Dictionary with Compressive Sensing OMP Reconstruction Algorithm; South China University of Technology: Guangzhou, China, 2011. (In Chinese)

- Dice, L.R. Measures of the amount of ecologic association between species. Ecology 1945, 26, 297–302.

- Mulekar, M.S.; Brown, C.S. Distance and Similarity Measures. In Encyclopedia of Social Network Analysis and Mining; Springer: New York, NY, USA, 2017.

- Yong, R.; Ye, J.; Liang, Q.F. Estimation of the joint roughness coefficient (JRC) of rock joints by vector similarity measures. Bull. Eng. Geol. Environ. 2018, 77, 735.

- Bullen, P.S.; Mitrinovic, D.S.; Vasic, P.M. Means and Their Inequalities; Springer: New York, NY, USA, 1988.

- Wang, D.; Wu, Y.; Cao, W. Improved Reconstruction Algorithm for Compressed Sensing. J. Northwestern Poly. Tech. Univ. 2017, 35, 774–779. (In Chinese)

- Wang, F.; Zhao, Z.; Liu, X. Improved Sparsity Adaptive Matching Pursuit Algorithm. Comput. Sci. 2018, 45, 234–238. (In Chinese)

- Zhang, L.; Liang, D. Study of spectral reflectance reconstruction based on an algorithm for improved orthogonal matching pursuit. J. Opt. Soc. Korea 2016, 20, 515–523.

- Yu, H.; Ma, C.; Wang, H. Transmission Line Fault Location Method Based on Compressed Sensing Estimation of Traveling Wave Natural Frequencies. Trans. China Electrotech. Society 2017, 32, 140–148. (In Chinese)

- Fu, Y.; Liu, S.; Ren, C. Adaptive Step-size Matching Pursuit Algorithm for Practical Sparse Reconstruction. Circuits Syst. Signal Process. 2017, 36, 2276–2284.

- Li, N.; Yin, X.; Guo, H. Variable step-size matching pursuit based on oblique projection for compressed sensing. IET Image Process. 2020, 14, 766–773.

- Zhang, Q. Study On Blind Source Separation of Underwater Acoustic Signals D; Harbin Institute of Technology: Harbin, China, 2013.

- Yao, S.; Sangaiah, A.K.; Zheng, Z. Sparsity estimation matching pursuit algorithm based on restricted isometry property for signal reconstruction. Future Gener. Comput. Syst. 2018, 88, 747–754.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).