Mobile Edge Computing Task Offloading Strategy Based on Parking Cooperation in the Internet of Vehicles

Abstract

1. Introduction

2. Related Works

- A mobile edge computing framework based on roadside parking cooperation is proposed. When no RSU is available or the vehicle's local computing resources are insufficient, roadside parked vehicles are added as an offloading platform;

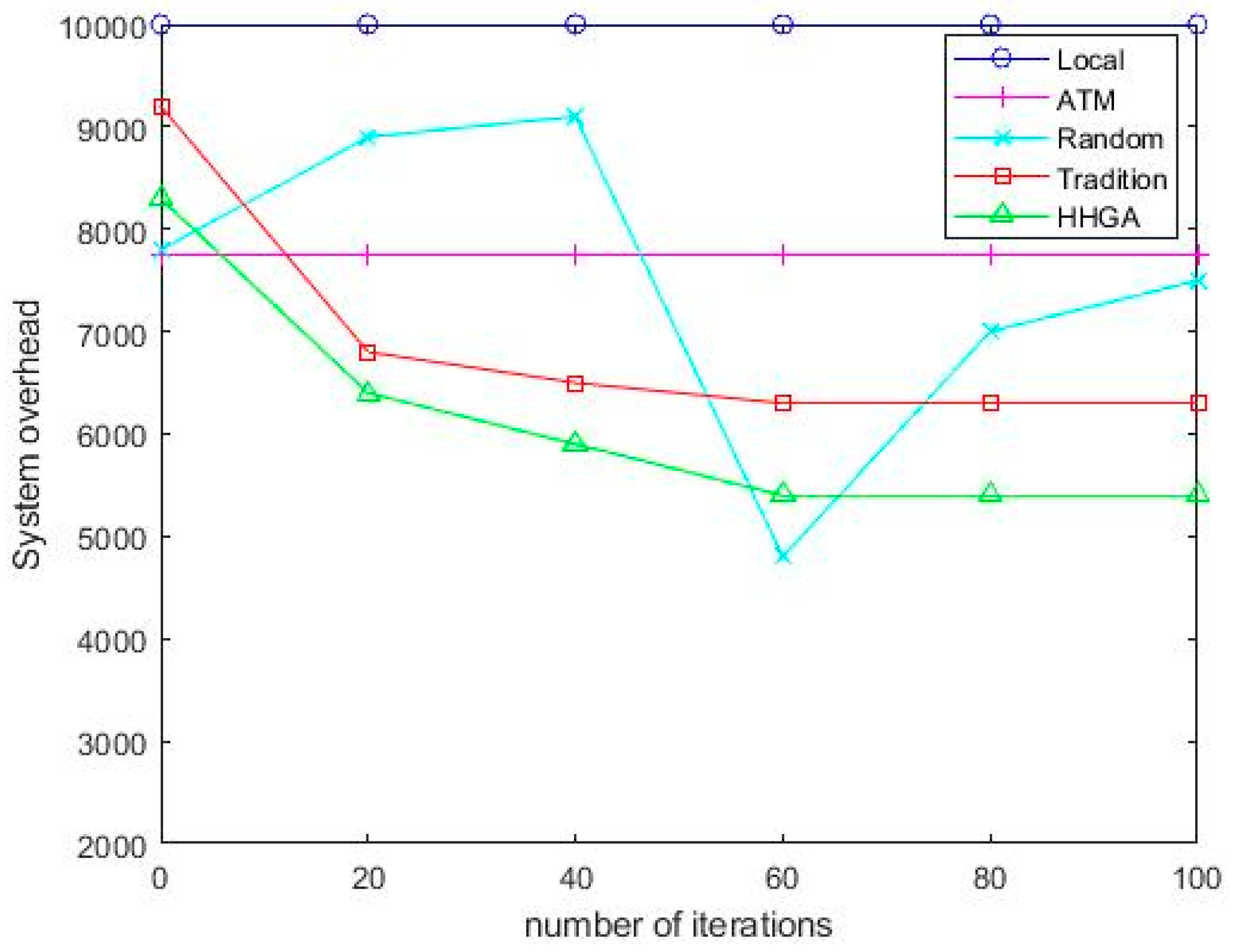

- After crossover and mutation in the traditional genetic algorithm perform the global search, a hill-climbing algorithm is added to search for a local optimum around the resulting solutions, which improves convergence speed and reduces system overhead;

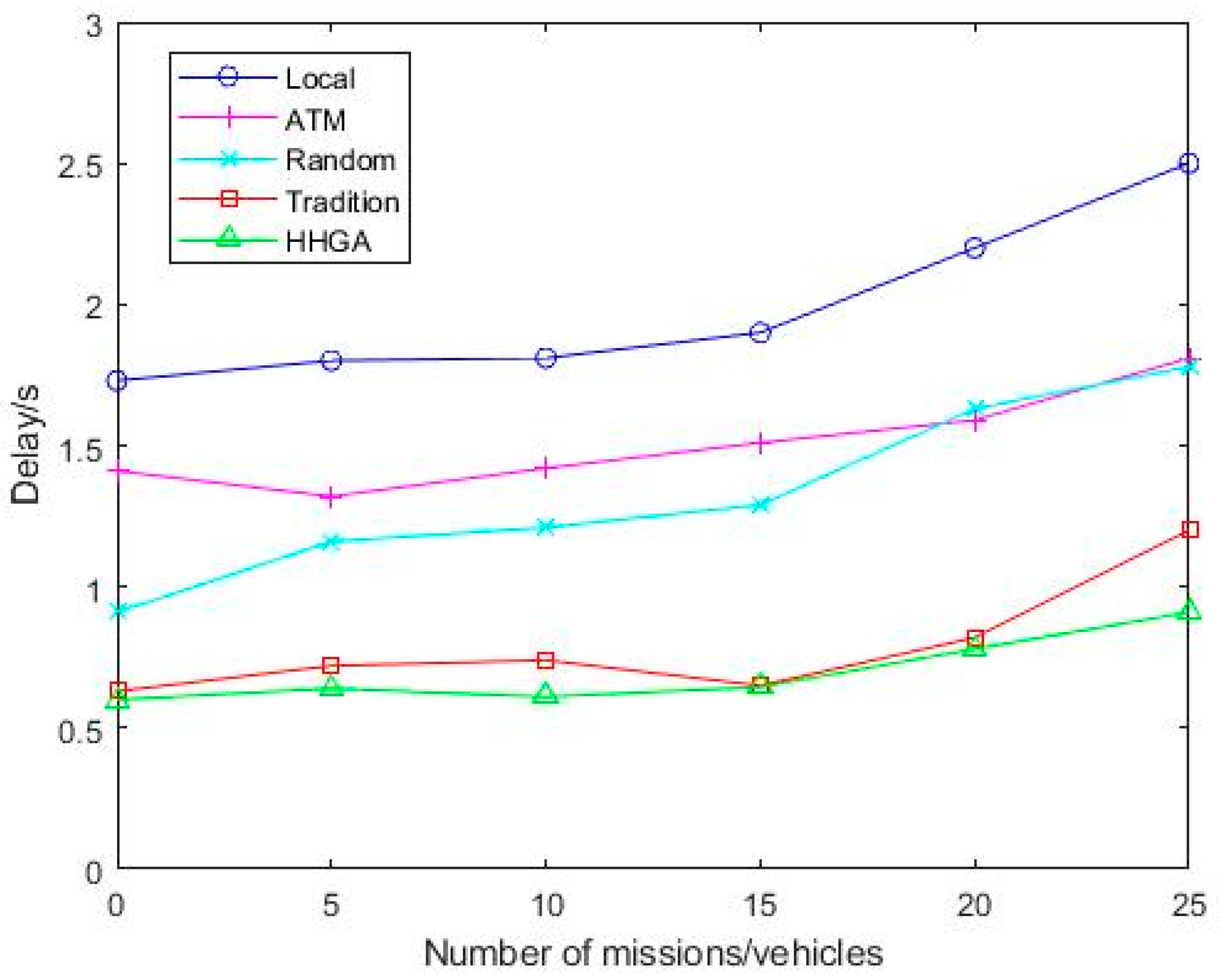

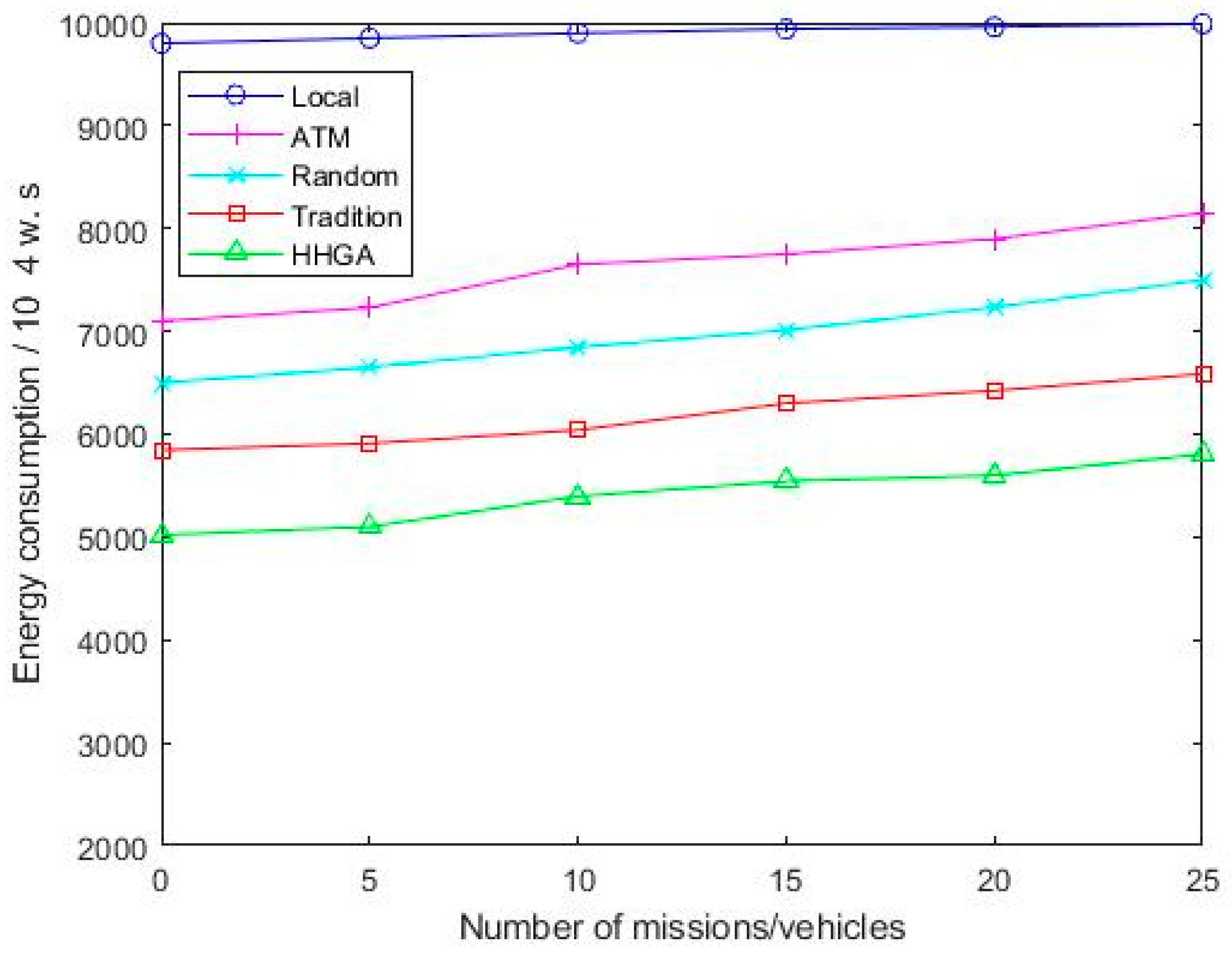

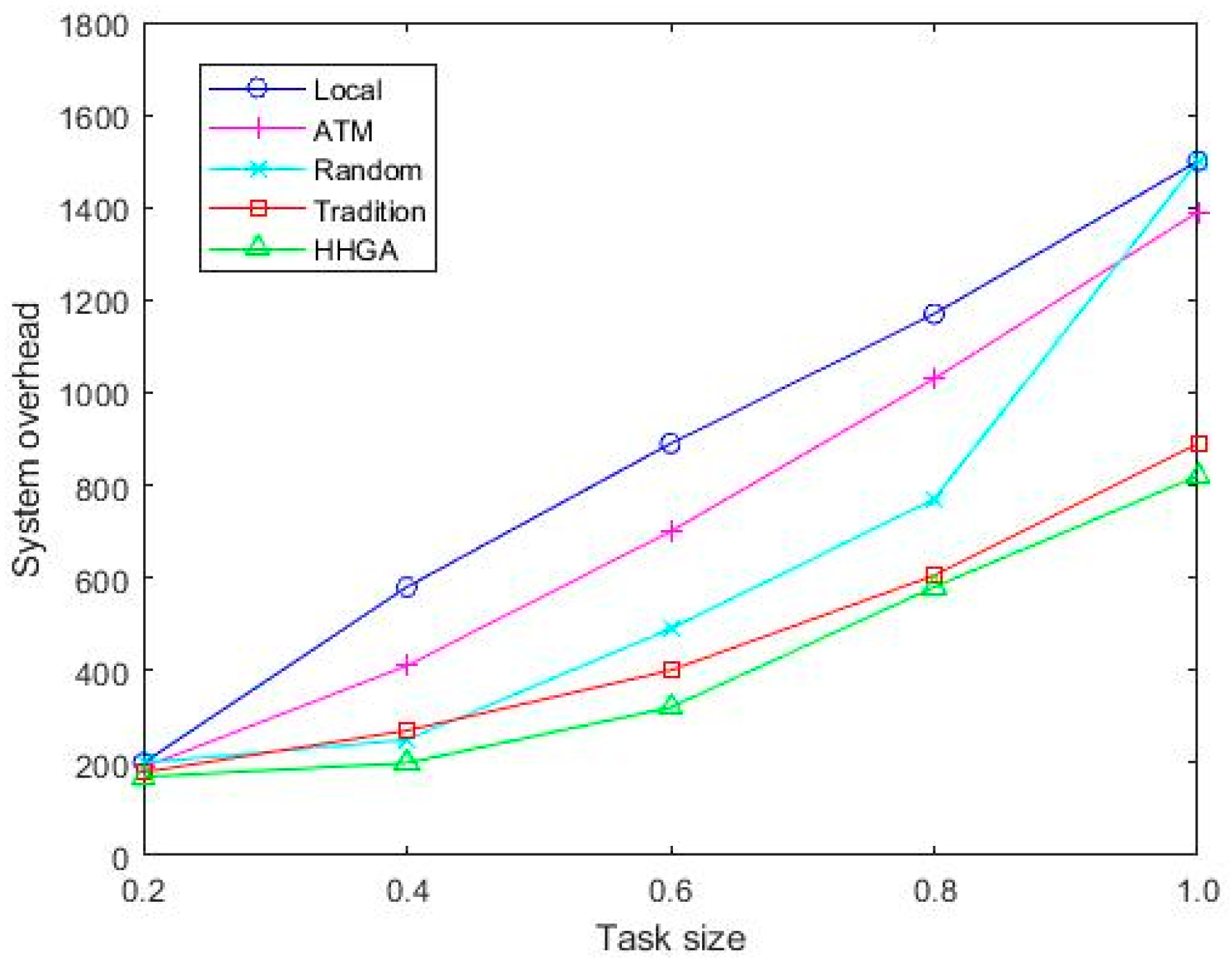

- To evaluate the proposed task offloading scheme based on a hybrid genetic algorithm, we compare it with the Local, ATM, Random, and Tradition offloading methods in terms of system overhead, delay, and energy consumption;

- Finally, we evaluated our method in detail along two dimensions: number of tasks and task size. Our scheme outperforms the other four offloading schemes in system overhead, delay, and energy consumption. In other words, for the same task load our method incurs a lower system cost or, equivalently, provides a higher quality of service at the same system cost.

3. System Model

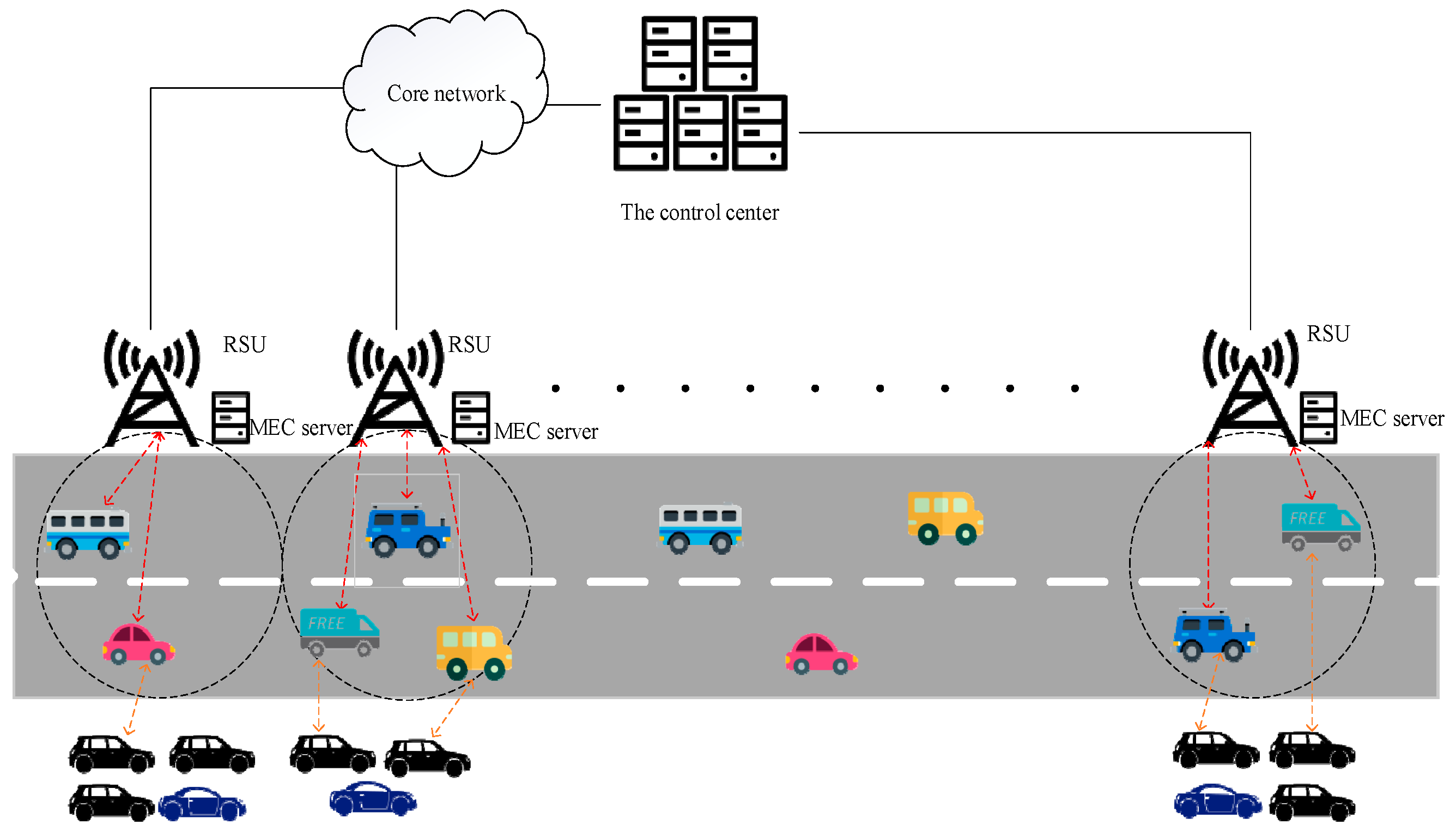

3.1. Network Model

3.2. Communication Model

3.3. Calculation Model

3.3.1. Local Computing Model

3.3.2. MEC Calculation Model

3.3.3. Cloud Server Computing Model

3.3.4. Calculation Model of Roadside Parking

3.4. Problem Expression

4. A Hybrid Algorithm Based on Hill-Climbing Algorithm and Genetic Algorithm (HHGA)

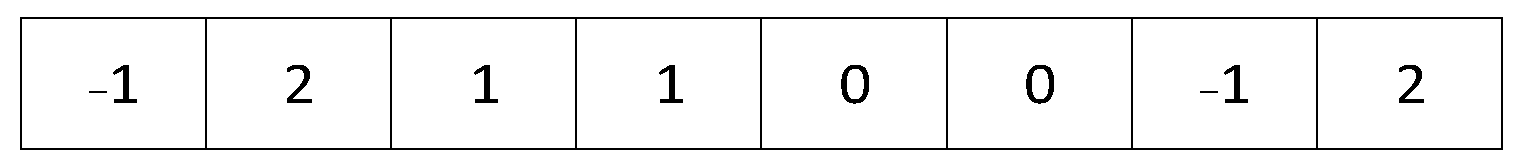

4.1. Integer Coding and Initial Population

4.2. Fitness Function

4.3. Selection Operation

- (1) Sum the fitness values of all individuals in the population to obtain the total fitness;

- (2) Divide each individual's fitness value by the total fitness to obtain that individual's selection probability;

- (3) Accumulate these probabilities to obtain each individual's cumulative probability, which defines the roulette wheel;

- (4) Generate a random number r in the interval [0, 1]. If r is greater than the cumulative probability of individual i-1 and less than or equal to the cumulative probability of individual i, select individual i for the offspring population.
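The four selection steps above can be sketched in Python as follows. This is a minimal illustration, not the authors' code: the function names are hypothetical, and fitness values are assumed to be strictly positive (for a cost-minimization problem such as this one, taking the reciprocal of the system overhead is one common choice, but that is an assumption here).

```python
import bisect
import random
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def cumulative_probabilities(fitness: Sequence[float]) -> List[float]:
    """Steps (1)-(3): total fitness, selection probabilities, cumulative wheel."""
    total = sum(fitness)                # step (1): total fitness
    wheel: List[float] = []
    running = 0.0
    for f in fitness:
        running += f / total            # step (2): p_i = f_i / total
        wheel.append(running)           # step (3): q_i = p_1 + ... + p_i
    wheel[-1] = 1.0                     # absorb floating-point round-off
    return wheel


def spin(wheel: Sequence[float], r: float) -> int:
    """Step (4): return the index i with wheel[i-1] < r <= wheel[i]."""
    return bisect.bisect_left(wheel, r)


def roulette_select(population: Sequence[T], fitness: Sequence[float],
                    rng: random.Random) -> List[T]:
    """Spin the wheel once per slot to fill an offspring population of equal size."""
    wheel = cumulative_probabilities(fitness)
    return [population[spin(wheel, rng.random())] for _ in population]
```

Using `bisect` keeps each spin at O(log M) for a population of M individuals; the standard library's `random.Random.choices(population, weights=...)` performs the same fitness-proportional sampling with replacement in a single call.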

4.4. Crossover Operation

4.5. Mutation Operation

4.6. Hill-Climbing Operation and Termination Rules

| Algorithm 1: HHGA algorithm |
|---|
| Input: population size M, selection probability, crossover probability, mutation probability, number of iterations gen; Output: W |
| 1. t = 0; |
| 2. Initialize the population; |
| 3. Repair the population; |
| 4. Calculate the fitness of each individual; |
| 5. Store the best solution of the population in old; |
| 6. while t < gen do |
| 7. Apply the selection operation to the population; |
| 8. Apply the crossover operation to the population; |
| 9. Apply the mutation operation to the population; |
| 10. Apply the hill-climbing operation to the population; |
| 11. Store the individual with the best fitness in the population in new; |
| 12. if fitness(old) > fitness(new) then new = old |
| 13. end if |
| 14. old = new |
| 15. Find the individual with the worst fitness value in the population and replace it with new; |
| 16. t = t + 1; |
| 17. end while |
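A compact, runnable sketch of the loop in Algorithm 1 follows, with step numbers marked in comments. It illustrates the structure under stated assumptions and is not the authors' implementation: the integer coding (0 = moving vehicle, 1 = MEC server, 2 = roadside parked vehicle, 3 = cloud server), the hypothetical cost function `system_cost` with its congestion penalty, one-point crossover, and first-improvement hill climbing are all illustrative choices, and the repair step is omitted because every gene in 0..3 is treated as valid. The default parameters mirror the simulation settings (M = 60, 100 iterations, crossover rate 0.85, mutation rate 0.02).

```python
import random
from typing import List, Sequence

# Hypothetical integer coding: 0 = moving vehicle, 1 = MEC server,
# 2 = roadside parked vehicle, 3 = cloud server.
PLATFORMS = 4
Individual = List[int]


def system_cost(w: Sequence[int], base: Sequence[Sequence[float]]) -> float:
    """Hypothetical overhead: per-task cost plus a quadratic congestion penalty."""
    cost = sum(base[i][k] for i, k in enumerate(w))
    for k in range(PLATFORMS):
        n_k = sum(1 for g in w if g == k)
        cost += 0.1 * n_k * n_k
    return cost


def fitness(w: Sequence[int], base: Sequence[Sequence[float]]) -> float:
    return 1.0 / system_cost(w, base)  # lower overhead -> higher fitness


def select(pop: List[Individual], base, rng: random.Random) -> List[Individual]:
    """Roulette-wheel selection (fitness-proportional sampling with replacement)."""
    weights = [fitness(w, base) for w in pop]
    return [list(w) for w in rng.choices(pop, weights=weights, k=len(pop))]


def crossover(pop: List[Individual], pc: float, rng: random.Random) -> None:
    """One-point crossover on consecutive pairs, each pair with probability pc."""
    for j in range(0, len(pop) - 1, 2):
        if rng.random() < pc:
            cut = rng.randrange(1, len(pop[j]))
            pop[j][cut:], pop[j + 1][cut:] = pop[j + 1][cut:], pop[j][cut:]


def mutate(pop: List[Individual], pm: float, rng: random.Random) -> None:
    """Reset each gene to a random platform with probability pm."""
    for w in pop:
        for i in range(len(w)):
            if rng.random() < pm:
                w[i] = rng.randrange(PLATFORMS)


def hill_climb(w: Individual, base) -> Individual:
    """First-improvement local search over single-gene moves until no move helps."""
    best = system_cost(w, base)
    improved = True
    while improved:
        improved = False
        for i in range(len(w)):
            for k in range(PLATFORMS):
                if k == w[i]:
                    continue
                old, w[i] = w[i], k
                cost = system_cost(w, base)
                if cost < best:
                    best, improved = cost, True
                    break  # keep the move and go on to the next gene
                w[i] = old  # undo a non-improving move
    return w


def hhga(base, M=60, gen=100, pc=0.85, pm=0.02, seed=0) -> Individual:
    rng = random.Random(seed)
    key = lambda w: fitness(w, base)
    pop = [[rng.randrange(PLATFORMS) for _ in base] for _ in range(M)]  # step 2
    old = list(max(pop, key=key))                                       # steps 4-5
    for _ in range(gen):                                                # step 6
        pop = select(pop, base, rng)                                    # step 7
        crossover(pop, pc, rng)                                         # step 8
        mutate(pop, pm, rng)                                            # step 9
        pop = [hill_climb(w, base) for w in pop]                        # step 10
        new = list(max(pop, key=key))                                   # step 11
        if key(old) > key(new):                                         # steps 12-13
            new = old
        old = new                                                       # step 14
        worst = min(range(M), key=lambda j: key(pop[j]))                # step 15
        pop[worst] = list(new)
    return old
```

In the toy instance `hhga([[5.0, 1.0, 5.0, 5.0]] * 6, M=10, gen=3)` every task is cheapest on the MEC server, and hill climbing alone drives each individual to the all-MEC assignment `[1, 1, 1, 1, 1, 1]`, which illustrates how the local search step accelerates convergence.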

5. Simulation Verification and Analysis

5.1. Simulation Parameter Setting

5.2. Comparison Scheme Settings

- Strategy 1: Moving vehicle local execution policy (Local): all tasks are executed only on the moving vehicle;

- Strategy 2: MEC server policy (ATM): all tasks are offloaded to and executed on the MEC server;

- Strategy 3: Random offloading policy (Random): each task is randomly offloaded to a moving vehicle, an MEC server, a roadside parked vehicle, or a cloud server;

- Strategy 4:

5.3. Impact of Number of Tasks on Algorithm Performance

5.4. Impact of Task Size on Algorithm Performance

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Experimental Parameter | Value |
|---|---|
| Transmit power of a moving vehicle when uploading a task | 5 W |
| Computing resources of a moving vehicle | 1 G cycles/s |
| Computing resources of the MEC server | 4 G cycles/s |
| Computing resources of the cloud server | 10 G cycles/s |
| Computing resources of a roadside parked vehicle | 1 G cycles/s |
| Device power of moving vehicles/roadside parked vehicles | 8 W |
| Device power of the MEC server | 30 W |
| Device power of the cloud server | 70 W |
| Population size M | 60 |
| Maximum number of iterations | 100 |
| Crossover rate | 0.85 |
| Mutation rate | 0.02 |
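As a rough illustration of how these parameters translate into per-task costs, the sketch below assumes a common computation model in which execution delay is the task's required CPU cycles divided by the platform's computing resources, and execution energy is the device power multiplied by that delay. The 1 G-cycle task size and the names `PLATFORMS` and `execution_cost` are hypothetical, and transmission delay and energy from the communication model are ignored.

```python
from typing import Dict, Tuple

# (computing resources in cycles/s, device power in W), from the parameter table
PLATFORMS: Dict[str, Tuple[float, float]] = {
    "moving vehicle": (1e9, 8.0),
    "roadside parked vehicle": (1e9, 8.0),
    "MEC server": (4e9, 30.0),
    "cloud server": (10e9, 70.0),
}


def execution_cost(cycles: float, freq: float, power: float) -> Tuple[float, float]:
    """Assumed model: delay = cycles / freq (s), energy = power * delay (J)."""
    delay = cycles / freq
    return delay, power * delay


if __name__ == "__main__":
    task_cycles = 1e9  # hypothetical task requiring 1 G CPU cycles
    for name, (freq, power) in PLATFORMS.items():
        delay, energy = execution_cost(task_cycles, freq, power)
        print(f"{name}: {delay:.2f} s, {energy:.2f} J")
```

Under these assumptions the script reports 1.00 s and 8.00 J for the moving vehicle and the roadside parked vehicle, 0.25 s and 7.50 J for the MEC server, and 0.10 s and 7.00 J for the cloud server, before any transmission cost is added.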

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Shen, X.; Chang, Z.; Niu, S. Mobile Edge Computing Task Offloading Strategy Based on Parking Cooperation in the Internet of Vehicles. Sensors 2022, 22, 4959. https://doi.org/10.3390/s22134959
