The Effect of Time Window Length on EEG-Based Emotion Recognition

Abstract

1. Introduction

2. Related Work

2.1. Characteristics of EEG Signals

2.2. EEG Features

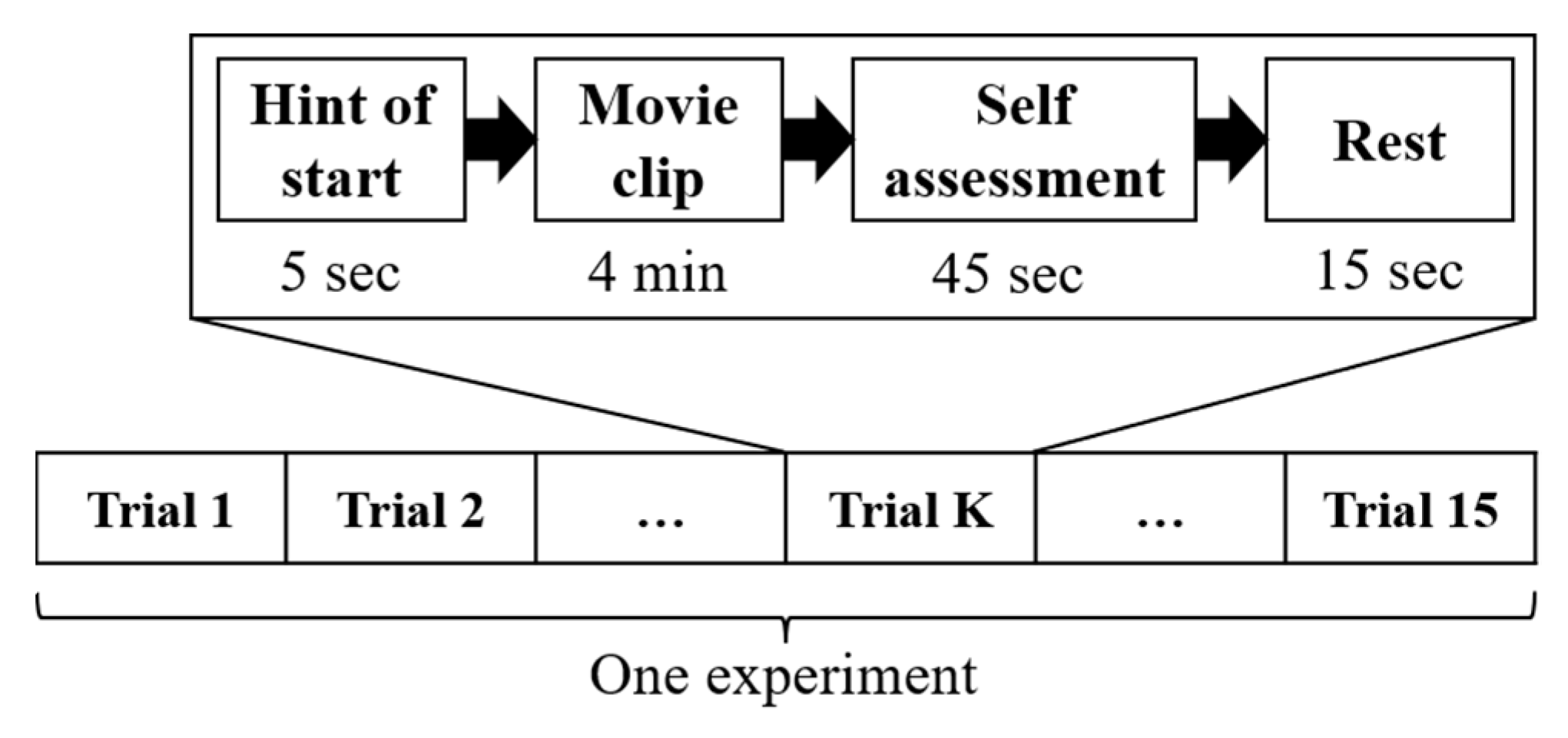

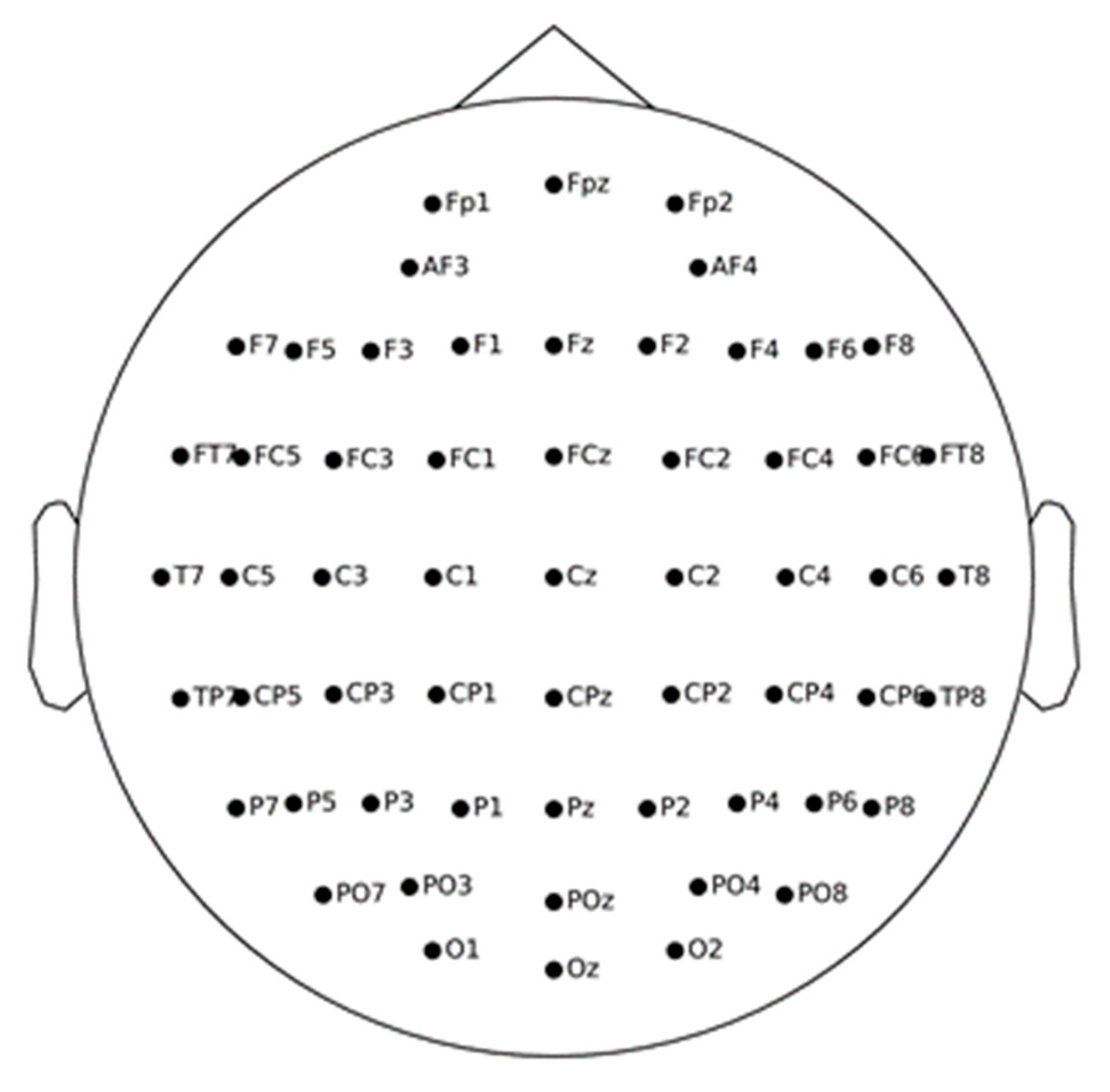

3. Dataset and Experiments

4. Feature Extraction

4.1. Power Spectral Density (PSD) and Differential Entropy (DE)
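
A minimal sketch of how per-band PSD and DE features can be computed for one EEG epoch is given below. It assumes a sampling rate of 200 Hz and the band edges in `BANDS` (delta 1–4, theta 4–8, alpha 8–14, beta 14–31, gamma 31–50 Hz). Neither value is stated in this section, so both are placeholders. PSD is taken as the mean periodogram value within each band. DE uses the closed form for a Gaussian signal, DE = ½ log(2πeσ²), applied to the band-limited signal obtained by zeroing FFT bins outside the band. The paper's own filtering and windowing choices may differ.

```python
import numpy as np

# Assumptions for illustration only: neither the sampling rate nor the band
# edges are given in this section.
FS = 200  # Hz
BANDS = {
    "delta": (1, 4),
    "theta": (4, 8),
    "alpha": (8, 14),
    "beta": (14, 31),
    "gamma": (31, 50),
}


def psd_de(epoch, fs=FS):
    """Return (psd, de), each of shape (channels, bands), for one epoch.

    epoch: array of shape (channels, samples).
    psd: mean periodogram value over the FFT bins inside each band.
    de:  0.5 * log(2*pi*e*var) of the band-limited signal, i.e. the
         differential entropy of a Gaussian with that variance.
    """
    n = epoch.shape[-1]
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    spec = np.fft.rfft(epoch, axis=-1)
    power = np.abs(spec) ** 2 / (fs * n)  # periodogram (two-sided scaling)
    psd = np.empty((epoch.shape[0], len(BANDS)))
    de = np.empty_like(psd)
    for j, (lo, hi) in enumerate(BANDS.values()):
        mask = (freqs >= lo) & (freqs < hi)
        psd[:, j] = power[:, mask].mean(axis=-1)
        # Band-limit by zeroing out-of-band FFT bins, then invert.
        band = np.fft.irfft(np.where(mask, spec, 0), n=n, axis=-1)
        de[:, j] = 0.5 * np.log(2 * np.pi * np.e * band.var(axis=-1))
    return psd, de


if __name__ == "__main__":
    # Synthetic 58-channel, 1 s epoch: a 10 Hz sine plus weak noise.
    t = np.arange(FS) / FS
    rng = np.random.default_rng(0)
    epoch = np.sin(2 * np.pi * 10 * t) + 0.01 * rng.standard_normal((58, FS))
    psd, de = psd_de(epoch)
    print(psd.shape, de.shape)
```

Calling `psd_de` on a 58-channel, one-second epoch returns two 58 × 5 arrays, one per feature. This is the per-epoch shape that the feature formats in the TW table stack along their third dimension.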

4.2. Extracting Features Based on TW

4.3. Experimental-Level Batch Normalization (ELBN)
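
The exact statistics used for experimental-level batch normalization (ELBN) are not given in this section. The sketch below assumes one plausible reading: each feature is z-scored with the mean and standard deviation of the samples from the same experiment (recording session), rather than with statistics pooled over all experiments. The function name `elbn`, the `experiment_ids` argument, and the choice of z-scoring are all assumptions of this sketch, not the paper's definition.

```python
import numpy as np


def elbn(features, experiment_ids, eps=1e-8):
    """Hypothetical ELBN: z-score each feature within each experiment.

    features: (n_samples, n_features) array.
    experiment_ids: (n_samples,) labels saying which experiment each row came from.
    Mean and std are computed only over rows of the same experiment (assumption).
    """
    features = np.asarray(features, dtype=float)
    ids = np.asarray(experiment_ids)
    out = np.empty_like(features)
    for exp in np.unique(ids):
        rows = ids == exp
        mu = features[rows].mean(axis=0)
        sd = features[rows].std(axis=0)
        out[rows] = (features[rows] - mu) / (sd + eps)
    return out


if __name__ == "__main__":
    # Two experiments whose features differ only by a constant offset of 100.
    x = np.array([[1.0, 10.0], [3.0, 30.0], [101.0, 110.0], [103.0, 130.0]])
    ids = np.array([0, 0, 1, 1])
    print(elbn(x, ids))
```

In the example, the two experiments map to identical normalized values because the per-experiment statistics absorb the session-level offset, while differences between samples within a session are kept.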

5. Results and Discussion

5.1. The Effect of TW Length on Emotion Recognition without ELBN

5.2. The Effect of TW Length on Emotion Recognition with ELBN

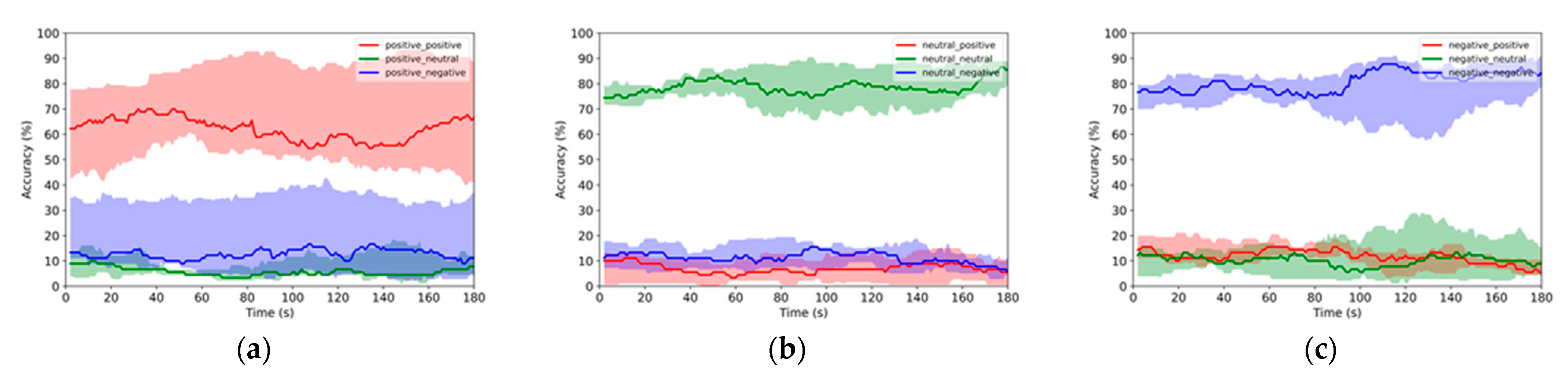

5.3. Online Emotion Recognition

5.4. Influence of TW Length on Emotion Recognition

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Toisoul, A.; Kossaifi, J.; Bulat, A.; Tzimiropoulos, G.; Pantic, M. Estimation of continuous valence and arousal levels from faces in naturalistic conditions. Nat. Mach. Intell. 2021, 3, 42–50. [Google Scholar] [CrossRef]

- Li, G.; Yan, W.; Li, S.; Qu, X.; Chu, W.; Cao, D. A temporal-spatial deep learning approach for driver distraction detection based on EEG signals. In IEEE Transactions on Automation Science and Engineering; IEEE: New York, NY, USA, 2021. [Google Scholar] [CrossRef]

- Amorese, T.; Cuciniello, M.; Vinciarelli, A.; Cordasco, G.; Esposito, A. Synthetic vs Human Emotional Faces: What Changes in Humans’ Decoding Accuracy. IEEE Trans. Hum. Mach. Syst. 2021, 52, 390–399. [Google Scholar] [CrossRef]

- Atkinson, J.; Campos, D. Improving BCI-based emotion recognition by combining EEG feature selection and kernel classifiers. Expert Syst. Appl. 2016, 47, 35–41. [Google Scholar] [CrossRef]

- Tarnowski, P.; Kołodziej, M.; Majkowski, A.; Rak, R.J. Emotion recognition using facial expressions. Procedia Comput. Sci. 2017, 108, 1175–1184. [Google Scholar] [CrossRef]

- Chen, J.; Zhang, P.; Mao, Z.; Huang, Y.; Jiang, D.; Zhang, Y. Accurate EEG-based emotion recognition on combined features using deep convolutional neural networks. IEEE Access 2019, 7, 44317–44328. [Google Scholar] [CrossRef]

- Giannakaki, K.; Giannakakis, G.; Farmaki, C.; Sakkalis, V. Emotional state recognition using advanced machine learning techniques on EEG data. In Proceedings of the IEEE 30th International Symposium on Computer-Based Medical Systems (CBMS), Thessaloniki, Greece, 22–24 June 2017; IEEE: New York, NY, USA, 2017; pp. 337–342. [Google Scholar]

- Jeevan, R.K.; Rao, V.M.S.P.; Kumar, P.S.; Srivikas, M. EEG-based emotion recognition using LSTM-RNN machine learning algorithm. In Proceedings of the 1st International Conference on Innovations in Information and Communication Technology (ICIICT), Chennai, India, 25–26 April 2019; IEEE: New York, NY, USA, 2019; pp. 1–4. [Google Scholar]

- Qing, C.; Qiao, R.; Xu, X.; Cheng, Y. Interpretable emotion recognition using EEG signals. IEEE Access 2019, 7, 94160–94170. [Google Scholar] [CrossRef]

- Quante, L.; Zhang, M.; Preuk, K.; Schießl, C. Human Performance in Critical Scenarios as a Benchmark for Highly Automated Vehicles. Automot. Innov. 2021, 4, 274–283. [Google Scholar] [CrossRef]

- George, F.P.; Shaikat, I.M.; Ferdawoos, P.S.; Parvez, M.Z.; Uddin, J. Recognition of emotional states using EEG signals based on time-frequency analysis and SVM classifier. Int. J. Electr. Comput. Eng. 2019, 9, 2088–8708. [Google Scholar] [CrossRef]

- Asghar, M.A.; Khan, M.J.; Fawad; Amin, Y.; Rizwan, M.; Rahman, M.; Badnava, S.; Mirjavadi, S.S. EEG-based multi-modal emotion recognition using bag of deep features: An optimal feature selection approach. Sensors 2019, 19, 5218. [Google Scholar] [CrossRef] [Green Version]

- Pan, C.; Shi, C.; Mu, H.; Li, J.; Gao, X. EEG-based emotion recognition using logistic regression with Gaussian kernel and Laplacian prior and investigation of critical frequency bands. Appl. Sci. 2020, 10, 1619. [Google Scholar] [CrossRef] [Green Version]

- Wu, D. Online and offline domain adaptation for reducing BCI calibration effort. IEEE Trans. Hum. Mach. Syst. 2016, 47, 550–563. [Google Scholar] [CrossRef] [Green Version]

- Li, G.; Ouyang, D.; Yuan, Y.; Li, W.; Guo, Z.; Qu, X.; Green, P. An EEG data processing approach for emotion recognition. IEEE Sens. J. 2022, 22, 10751–10763. [Google Scholar] [CrossRef]

- Li, J.; Qiu, S.; Du, C.; Wang, Y.; He, H. Domain adaptation for EEG emotion recognition based on latent representation similarity. IEEE Trans. Cogn. Dev. Syst. 2019, 12, 344–353. [Google Scholar] [CrossRef]

- Abtahi, F.; Ro, T.; Li, W.; Zhu, Z. Emotion analysis using audio/video, emg and eeg: A dataset and comparison study. In Proceedings of the IEEE Winter Conference on Applications of Computer Vision (WACV), Lake Tahoe, NV, USA, 15–18 March 2018; IEEE: New York, NY, USA, 2018; pp. 10–19. [Google Scholar]

- Lin, Y.P.; Wang, C.H.; Jung, T.P.; Wu, T.L.; Jeng, S.K.; Duann, J.R.; Chen, J.H. EEG-based emotion recognition in music listening. IEEE Trans. Biomed. Eng. 2010, 57, 1798–1806. [Google Scholar]

- Zheng, W.-L.; Dong, B.-N.; Lu, B.-L. Multimodal emotion recognition using EEG and eye tracking data. In Proceedings of the 36th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Chicago, IL, USA, 26–30 August 2014; IEEE: New York, NY, USA, 2014; pp. 5040–5043. [Google Scholar]

- Zhuang, N.; Zeng, Y.; Tong, L.; Zhang, C.; Zhang, H.; Yan, B. Emotion recognition from EEG signals using multidimensional information in EMD domain. BioMed Res. Int. 2017, 2017, 8317357. [Google Scholar] [CrossRef]

- Gianotti, L.R.; Lobmaier, J.S.; Calluso, C.; Dahinden, F.M.; Knoch, D. Theta resting EEG in TPJ/pSTS is associated with individual differences in the feeling of being looked at. Soc. Cogn. Affect. Neurosci. 2018, 13, 216–223. [Google Scholar] [CrossRef] [Green Version]

- Matthews, G.; Reinerman-Jones, L.; Abich, J., IV; Kustubayeva, A. Metrics for individual differences in EEG response to cognitive workload: Optimizing performance prediction. Personal. Individ. Differ. 2017, 118, 22–28. [Google Scholar] [CrossRef]

- Li, M.; Xu, H.; Liu, X.; Lu, S. Emotion recognition from multichannel EEG signals using K-nearest neighbor classification. Technol. Health Care 2018, 26, 509–519. [Google Scholar] [CrossRef]

- Lu, Y.; Wang, M.; Wu, W.; Han, Y.; Zhang, Q.; Chen, S. Dynamic entropy-based pattern learning to identify emotions from EEG signals across individuals. Measurement 2020, 150, 107003. [Google Scholar] [CrossRef]

- Subha, D.P.; Joseph, P.K.; Acharya, U.R.; Lim, C.M. EEG signal analysis: A survey. J. Med. Syst. 2010, 34, 195–212. [Google Scholar] [CrossRef]

- Lo, P.-C.; Chung, W.-P. An efficient method for quantifying the multichannel EEG spatial-temporal complexity. IEEE Trans. Biomed. Eng. 2001, 48, 394–397. [Google Scholar]

- Willett, F.R.; Avansino, D.T.; Hochberg, L.R.; Henderson, J.M.; Shenoy, K.V. High-performance brain-to-text communication via handwriting. Nature 2021, 593, 249–254. [Google Scholar] [CrossRef]

- Kang, H.; Nam, Y.; Choi, S. Composite common spatial pattern for subject-to-subject transfer. IEEE Signal Processing Lett. 2009, 16, 683–686. [Google Scholar] [CrossRef]

- Zheng, W.-L.; Lu, B.-L. Investigating critical frequency bands and channels for EEG-based emotion recognition with deep neural networks. IEEE Trans. Auton. Ment. Dev. 2015, 7, 162–175. [Google Scholar] [CrossRef]

- Jatupaiboon, N.; Pan-ngum, S.; Israsena, P. Emotion classification using minimal EEG channels and frequency bands. In Proceedings of the 10th International Joint Conference on Computer Science and Software Engineering (JCSSE), Khon Kaen, Thailand, 29–31 May 2013; IEEE: New York, NY, USA, 2013; pp. 21–24. [Google Scholar]

- Unde, S.A.; Shriram, R. PSD based Coherence Analysis of EEG Signals for Stroop Task. Int. J. Comput. Appl. 2014, 95, 1–5. [Google Scholar] [CrossRef]

- Shi, L.-C.; Jiao, Y.-Y.; Lu, B.-L. Differential entropy feature for EEG-based vigilance estimation. In Proceedings of the 35th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Osaka, Japan, 3–7 July 2013; IEEE: New York, NY, USA, 2013; pp. 6627–6630. [Google Scholar]

- Frantzidis, C.; Bratsas, C.; Papadelis, C.; Konstantinidis, E.; Pappas, C.; Bamidis, P. Toward emotion aware computing: An integrated approach using multichannel neurophysiological recordings and affective visual stimuli. IEEE Trans. Inf. Technol. Biomed. 2010, 14, 589–597. [Google Scholar] [CrossRef]

- Kroupi, E.; Yazdani, A.; Ebrahimi, T. EEG correlates of different emotional states elicited during watching music videos. In Proceedings of the International Conference on Affective Computing and Intelligent Interaction, Memphis, TN, USA, 9–12 October 2011; Springer: Berlin/Heidelberg, Germany, 2011; pp. 457–466. [Google Scholar]

- Petrantonakis, P.C.; Hadjileontiadis, L.J. Emotion recognition from EEG using higher order crossings. IEEE Trans. Inf. Technol. Biomed. 2010, 14, 186–197. [Google Scholar] [CrossRef]

- Petrantonakis, P.C.; Hadjileontiadis, L.J. Emotion recognition from brain signals using hybrid adaptive filtering and higher order crossings analysis. IEEE Trans. Affect. Comput. 2010, 1, 81–97. [Google Scholar] [CrossRef]

- Soroush, M.Z.; Maghooli, K.; Setarehdan, S.; Nasrabadi, A.M. Emotion classification through nonlinear EEG analysis using machine learning methods. Int. Clin. Neurosci. J. 2018, 5, 135–149. [Google Scholar] [CrossRef]

- Daly, I.; Malik, A.; Hwang, F.; Roesch, E.; Weaver, J.; Kirke, A.; Williams, D.; Miranda, E.; Nasuto, S.J. Neural correlates of emotional responses to music: An EEG study. Neurosci. Lett. 2017, 573, 52–57. [Google Scholar] [CrossRef]

- Bhatti, M.H.; Khan, J.; Khan MU, G.; Iqbal, R.; Aloqaily, M.; Jararweh, Y.; Gupta, B. Soft computing-based EEG classification by optimal feature selection and neural networks. IEEE Trans. Ind. Inform. 2019, 15, 5747–5754. [Google Scholar] [CrossRef]

- Hassouneh, A.; Mutawa, A.; Murugappan, M. Development of a real-time emotion recognition system using facial expressions and EEG based on machine learning and deep neural network methods. Inform. Med. Unlocked 2020, 20, 100372. [Google Scholar] [CrossRef]

- Duan, R.-N.; Wang, X.-W.; Lu, B.-L. EEG-based emotion recognition in listening music by using support vector machine and linear dynamic system. In Proceedings of the International Conference on Neural Information Processing, Lake Tahoe, NV, USA, 3–6 December 2018; Springer: Berlin/Heidelberg, Germany, 2012; pp. 468–475. [Google Scholar]

- Dabas, H.; Sethi, C.; Dua, C.; Dalawat, M.; Sethia, D. Emotion classification using EEG signals. In Proceedings of the 2nd International Conference on Computer Science and Artificial Intelligence, London, UK, 26–28 July 2018; pp. 380–384. [Google Scholar]

- Song, T.; Zheng, W.; Song, P.; Cui, Z. EEG emotion recognition using dynamical graph convolutional neural networks. IEEE Trans. Affect. Comput. 2018, 11, 532–541. [Google Scholar] [CrossRef] [Green Version]

- Belakhdar, I.; Kaaniche, W.; Djemal, R.; Ouni, B. Single-channel-based automatic drowsiness detection architecture with a reduced number of EEG features. Microprocess. Microsyst. 2018, 58, 13–23. [Google Scholar] [CrossRef]

- Burgess, A.P.; Gruzelier, J.H. Short duration power changes in the EEG during recognition memory for words and faces. Psychophysiology 2000, 37, 596–606. [Google Scholar] [CrossRef]

- Yang, K.; Tong, L.; Shu, J.; Zhuang, N.; Yan, B.; Zeng, Y. High gamma band EEG closely related to emotion: Evidence from functional network. Front. Hum. Neurosci. 2020, 14, 89. [Google Scholar] [CrossRef]

- Pereira, E.T.; Gomes, H.M.; Veloso, L.R.; Mota, M.R.A. Empirical Evidence Relating EEG Signal Duration to Emotion Classification Performance. IEEE Trans. Affect. Comput. 2021, 12, 154–164. [Google Scholar] [CrossRef]

- Jenke, R.; Peer, A.; Buss, M. Feature extraction and selection for emotion recognition from EEG. IEEE Trans. Affect. Comput. 2014, 5, 327–339. [Google Scholar] [CrossRef]

- Tao, W.; Li, C.; Song, R.; Cheng, J.; Liu, Y.; Wan, F.; Chen, X. EEG-based Emotion Recognition via Channel-wise Attention and Self Attention. IEEE Trans. Affect. Comput. 2020, 1. [Google Scholar] [CrossRef]

- Wei, C.; Chen, L.-L.; Song, Z.-Z.; Lou, X.-G.; Li, D.-D. EEG-based emotion recognition using simple recurrent units network and ensemble learning. Biomed. Signal Processing Control. 2020, 58, 101756. [Google Scholar] [CrossRef]

- Demir, F.; Sobahi, N.; Siuly, S.; Sengur, A. Exploring Deep Learning Features for Automatic Classification of Human Emotion Using EEG Rhythms. IEEE Sens. J. 2021, 21, 14923–14930. [Google Scholar] [CrossRef]

| No. | Emotion | Film Clip Sources | Number of Clips |
|---|---|---|---|
| 1 | negative | Tangshan Earthquake | 2 |
| 2 | negative | Back to 1942 | 3 |
| 3 | positive | Lost in Thailand | 2 |
| 4 | positive | Flirting Scholar | 1 |
| 5 | positive | Just Another Pandora’s Box | 2 |
| 6 | neutral | World Heritage in China | 5 |

| TW Length (s) | Number of TWs in Each Trial | Epochs Contained in Each TW | Feature Format (58 × 5 × N) |
|---|---|---|---|
| 180 | 1 | 180 | 58 × 5 × 1 |
| 90 | 2 | 90 | 58 × 5 × 2 |
| 60 | 3 | 60 | 58 × 5 × 3 |
| 30 | 6 | 30 | 58 × 5 × 6 |
| 20 | 9 | 20 | 58 × 5 × 9 |
| 10 | 18 | 10 | 58 × 5 × 18 |
| 5 | 36 | 5 | 58 × 5 × 36 |
| 4 | 45 | 4 | 58 × 5 × 45 |
| 3 | 60 | 3 | 58 × 5 × 60 |
| 2 | 90 | 2 | 58 × 5 × 90 |
| 1 | 180 | 1 | 58 × 5 × 180 |
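
The TW table implies that each 180 s trial is cut into 1 s epochs and that a TW of length L groups L consecutive epochs, giving 180 / L windows. A minimal sketch of that grouping follows. It assumes the per-epoch 58 × 5 features are already computed and that a window's feature is the mean of its epochs' features. The averaging step is an assumption, since the table does not say how epochs within a TW are combined.

```python
import numpy as np


def tw_features(epoch_feats, tw_len):
    """Group per-epoch features into non-overlapping time windows (TWs).

    epoch_feats: (channels, bands, n_epochs) array, one 1 s epoch per slice.
    tw_len: TW length in seconds (= epochs per TW); must divide n_epochs.
    Returns (channels, bands, n_epochs // tw_len); each TW is the mean of its
    consecutive epochs (averaging is an assumption of this sketch).
    """
    ch, bands, n_epochs = epoch_feats.shape
    if n_epochs % tw_len:
        raise ValueError("TW length must divide the number of epochs")
    n_tw = n_epochs // tw_len
    # Splitting the last axis into (n_tw, tw_len) keeps consecutive epochs together.
    return epoch_feats.reshape(ch, bands, n_tw, tw_len).mean(axis=-1)


if __name__ == "__main__":
    feats = np.random.default_rng(0).random((58, 5, 180))
    for tw in (180, 90, 60, 30, 20, 10, 5, 4, 3, 2, 1):
        print(tw, tw_features(feats, tw).shape)
```

Each printed shape is 58 × 5 × N with N = 180 / TW length, matching the feature formats in the table.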

| Classifier | Parameter Setting |
|---|---|
| KNN | n_neighbors = 5, p = 2, metric = 'minkowski' |
| LR | solver = 'liblinear', random_state = 10 |
| SVM | random_state = 10 |
| GNB | none (library defaults) |
| MLP | solver = 'lbfgs', alpha = 1e-5, hidden_layer_sizes = (100, 3), random_state = 1, max_iter = 1e5 |
| Bagging | base_estimator = lr, n_estimators = 500, max_samples = 1.0, max_features = 1.0, bootstrap = True, bootstrap_features = False, n_jobs = 1, random_state = 1 (lr = sklearn.linear_model.LogisticRegression(solver = 'liblinear', random_state = 1)) |

| Set # | The Trials Used for Training | The Trials Used for Testing |
|---|---|---|
| 1 | [0, 1, 2, 4, 5, 6, 9, 10, 11, 12, 13, 14] | [3, 7, 8] |
| 2 | [0, 1, 2, 3, 6, 7, 8, 9, 10, 12, 13, 14] | [4, 5, 11] |
| 3 | [1, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 14] | [0, 2, 12] |
| 4 | [0, 1, 3, 4, 5, 6, 7, 8, 9, 10, 11, 14] | [2, 12, 13] |
| 5 | [0, 1, 2, 3, 4, 5, 7, 9, 10, 11, 13, 14] | [6, 8, 12] |
| 6 | [0, 1, 2, 5, 6, 7, 9, 10, 11, 12, 13, 14] | [3, 4, 8] |
| 7 | [0, 1, 2, 3, 4, 6, 7, 8, 9, 11, 12, 13] | [5, 10, 14] |
| 8 | [0, 2, 3, 4, 5, 7, 9, 10, 11, 12, 13, 14] | [1, 6, 8] |
| 9 | [0, 3, 4, 5, 6, 7, 8, 10, 11, 12, 13, 14] | [1, 2, 9] |
| 10 | [0, 1, 2, 3, 4, 5, 6, 8, 10, 11, 12, 13] | [7, 9, 14] |
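
Each set is meant to divide the 15 trials (one per film clip, 2 + 3 + 2 + 1 + 2 + 5 in the clip table) into 12 training and 3 test trials, so the lists can be checked mechanically for leakage. The plain-Python check below was written for this purpose and is not code from the study. It reports any trial that appears in both lists or in neither. Run on the lists exactly as printed, it flags set 3: trial 12 appears in both the training and test lists, and trial 13 appears in neither. This may be a transcription error in that row.

```python
# Train/test trial indices transcribed from the table above.
SPLITS = {
    1: ([0, 1, 2, 4, 5, 6, 9, 10, 11, 12, 13, 14], [3, 7, 8]),
    2: ([0, 1, 2, 3, 6, 7, 8, 9, 10, 12, 13, 14], [4, 5, 11]),
    3: ([1, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 14], [0, 2, 12]),
    4: ([0, 1, 3, 4, 5, 6, 7, 8, 9, 10, 11, 14], [2, 12, 13]),
    5: ([0, 1, 2, 3, 4, 5, 7, 9, 10, 11, 13, 14], [6, 8, 12]),
    6: ([0, 1, 2, 5, 6, 7, 9, 10, 11, 12, 13, 14], [3, 4, 8]),
    7: ([0, 1, 2, 3, 4, 6, 7, 8, 9, 11, 12, 13], [5, 10, 14]),
    8: ([0, 2, 3, 4, 5, 7, 9, 10, 11, 12, 13, 14], [1, 6, 8]),
    9: ([0, 3, 4, 5, 6, 7, 8, 10, 11, 12, 13, 14], [1, 2, 9]),
    10: ([0, 1, 2, 3, 4, 5, 6, 8, 10, 11, 12, 13], [7, 9, 14]),
}
N_TRIALS = 15  # 15 film clips in total (2 + 3 + 2 + 1 + 2 + 5)


def check_split(train, test, n_trials=N_TRIALS):
    """Return (trials in both lists, trials in neither list), each sorted."""
    train, test = set(train), set(test)
    overlap = sorted(train & test)
    missing = sorted(set(range(n_trials)) - train - test)
    return overlap, missing


if __name__ == "__main__":
    for k, (train, test) in SPLITS.items():
        overlap, missing = check_split(train, test)
        if overlap or missing:
            print(f"set {k}: overlap={overlap}, missing={missing}")
    # prints: set 3: overlap=[12], missing=[13]
```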

| TW Length (s) | KNN | LR | SVM | GNB | MLP | Bagging |
|---|---|---|---|---|---|---|
| 180 | 54.22(3.18) | 66.81(5.81) | 49.48(6.32) | 39.63(5.42) | 62.59(5.52) | 66.30(5.72) |
| 90 | 55.33(2.64) | 67.85(6.05) | 51.78(4.96) | 39.85(6.36) | 67.78(4.77) | 68.07(6.37) |
| 60 | 56.37(2.43) | 67.70(6.13) | 52.89(4.19) | 39.70(6.84) | 66.67(5.17) | 68.67(5.76) |
| 30 | 56.67(3.24) | 67.41(7.53) | 53.04(4.21) | 39.56(6.75) | 66.81(5.77) | 68.15(6.37) |
| 20 | 56.37(2.78) | 67.63(6.50) | 52.89(4.01) | 39.56(6.94) | 66.52(4.63) | 67.78(5.51) |
| 10 | 56.15(2.77) | 66.37(6.34) | 52.74(4.08) | 39.41(6.99) | 63.63(5.32) | 68.30(5.61) |
| 5 | 56.07(2.86) | 65.70(6.12) | 52.81(4.01) | 39.48(6.98) | 65.26(4.99) | 67.63(5.40) |
| 4 | 56.00(2.84) | 65.41(6.10) | 52.81(4.01) | 39.56(6.98) | 66.22(4.99) | 67.26(5.28) |
| 3 | 56.00(2.84) | 65.63(5.93) | 52.81(4.01) | 39.56(6.98) | 64.96(5.10) | 67.19(5.52) |
| 2 | 56.00(2.84) | 65.63(5.15) | 52.81(4.01) | 39.56(6.98) | 61.85(7.59) | 66.96(5.76) |
| 1 | 56.00(2.84) | 65.78(4.70) | 52.81(4.01) | 39.56(6.98) | 65.04(4.34) | 67.19(6.04) |

| TW Length (s) | KNN | LR | SVM | GNB | MLP | Bagging |
|---|---|---|---|---|---|---|
| 180 | 65.78(4.81) | 77.19(7.45) | 70.00(4.09) | 48.30(2.91) | 66.30(8.91) | 76.74(6.35) |
| 90 | 66.59(5.53) | 76.81(7.62) | 71.48(5.90) | 48.96(2.93) | 72.67(5.35) | 77.41(7.11) |
| 60 | 66.37(5.21) | 76.22(7.65) | 71.56(6.23) | 48.96(2.82) | 76.15(6.97) | 77.70(7.11) |
| 30 | 66.22(5.06) | 76.81(7.25) | 71.93(6.21) | 48.96(2.87) | 74.81(6.76) | 78.00(6.40) |
| 20 | 65.85(5.04) | 78.67(6.61) | 72.07(6.25) | 48.96(2.87) | 75.70(6.16) | 78.30(6.52) |
| 10 | 66.15(4.91) | 78.67(5.37) | 72.22(6.44) | 49.19(3.03) | 72.07(8.17) | 78.30(5.73) |
| 5 | 66.30(4.85) | 78.59(5.21) | 72.59(6.56) | 49.26(3.24) | 73.48(7.52) | 78.52(5.59) |
| 4 | 66.30(4.85) | 78.30(5.41) | 72.59(6.56) | 49.26(3.24) | 74.67(5.79) | 78.74(5.72) |
| 3 | 66.30(4.85) | 78.15(5.04) | 72.59(6.56) | 49.26(3.24) | 75.11(8.21) | 78.74(6.09) |
| 2 | 66.30(4.85) | 77.70(5.08) | 72.59(6.56) | 49.26(3.24) | 68.59(5.12) | 78.81(6.19) |
| 1 | 66.30(4.85) | 77.19(5.69) | 72.59(6.56) | 49.26(3.24) | 73.11(7.96) | 78.89(6.15) |

| TW Length (s) | KNN | LR | SVM | GNB | MLP | Bagging |
|---|---|---|---|---|---|---|
| 180 | 64.89(4.06) | 73.33(6.87) | 72.59(3.94) | 56.44(5.94) | 72.37(4.76) | 74.37(5.55) |
| 90 | 65.85(3.80) | 77.93(6.56) | 73.41(3.86) | 59.78(6.01) | 73.04(4.58) | 78.37(6.56) |
| 60 | 67.48(3.55) | 77.04(6.93) | 73.70(4.64) | 60.44(5.95) | 74.30(8.73) | 77.78(6.20) |
| 30 | 67.56(2.94) | 77.48(5.94) | 74.37(4.94) | 60.37(6.01) | 71.26(7.97) | 77.78(5.26) |
| 20 | 67.26(3.06) | 77.85(6.09) | 74.37(4.97) | 60.44(6.30) | 74.30(5.14) | 78.67(5.13) |
| 10 | 67.63(3.16) | 79.04(6.03) | 74.44(5.18) | 60.30(6.02) | 69.93(5.19) | 78.67(5.52) |
| 5 | 67.70(3.11) | 79.04(6.29) | 74.44(5.13) | 60.37(6.13) | 73.41(6.56) | 79.41(5.79) |
| 4 | 67.78(3.07) | 79.04(6.17) | 74.44(5.13) | 60.37(6.13) | 73.26(4.25) | 79.33(5.76) |
| 3 | 67.78(3.07) | 79.48(5.82) | 74.44(5.13) | 60.37(6.13) | 72.96(6.96) | 79.41(5.56) |
| 2 | 67.85(3.03) | 79.48(5.81) | 74.44(5.13) | 60.37(6.13) | 70.59(7.61) | 79.33(5.51) |
| 1 | 67.85(3.01) | 79.11(5.72) | 74.44(5.13) | 60.37(6.13) | 73.85(6.53) | 79.56(5.30) |

| TW Length (s) | KNN | LR | SVM | GNB | MLP | Bagging |
|---|---|---|---|---|---|---|
| 180 | 70.67(5.63) | 75.78(8.55) | 75.70(5.47) | 67.48(6.22) | 73.33(7.50) | 77.70(7.21) |
| 90 | 74.15(4.91) | 79.70(8.02) | 77.41(6.01) | 69.26(6.98) | 75.70(6.82) | 80.89(7.79) |
| 60 | 72.37(5.93) | 79.04(8.29) | 77.78(5.80) | 69.41(6.84) | 75.78(7.16) | 80.22(7.65) |
| 30 | 73.19(5.47) | 81.56(7.10) | 77.26(4.94) | 69.41(6.98) | 73.70(7.28) | 81.48(7.37) |
| 20 | 72.96(5.31) | 81.56(7.74) | 77.48(5.32) | 69.63(6.94) | 76.15(7.84) | 81.26(7.73) |
| 10 | 73.26(5.41) | 82.37(6.50) | 77.56(5.13) | 69.48(6.89) | 76.22(6.72) | 82.15(6.64) |
| 5 | 73.41(5.31) | 82.59(6.34) | 77.56(5.13) | 69.41(6.74) | 78.37(7.47) | 82.30(6.67) |
| 4 | 73.41(5.22) | 82.44(6.60) | 77.63(5.24) | 69.41(6.74) | 77.04(7.40) | 82.37(6.57) |
| 3 | 73.41(5.22) | 82.81(6.82) | 77.63(5.24) | 69.41(6.74) | 76.00(6.50) | 82.30(6.42) |
| 2 | 73.48(5.22) | 82.96(6.94) | 77.63(5.24) | 69.41(6.74) | 77.19(6.76) | 82.22(6.55) |
| 1 | 73.33(5.16) | 82.52(7.13) | 77.63(5.24) | 69.41(6.74) | 76.30(6.44) | 82.15(6.81) |
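
For each classifier, the TW length with the highest mean can be read off a results table mechanically. The sketch below parses rows copied from the table immediately above and returns the arg-max TW per classifier. Values are formatted as mean(SD), and the parenthesized value is assumed here to be the standard deviation over the ten train/test sets. The sketch ranks means only. For LR, for instance, the means for TWs from 30 s down to 1 s all lie between 81.56 and 82.96, while the corresponding parenthesized values range from 6.34 to 7.74, so the arg-max by itself does not show that one TW length is reliably better.

```python
# Rows copied from the results table above: TW length, then mean(SD) per classifier.
ROWS = """
| 180 | 70.67(5.63) | 75.78(8.55) | 75.70(5.47) | 67.48(6.22) | 73.33(7.50) | 77.70(7.21) |
| 90 | 74.15(4.91) | 79.70(8.02) | 77.41(6.01) | 69.26(6.98) | 75.70(6.82) | 80.89(7.79) |
| 60 | 72.37(5.93) | 79.04(8.29) | 77.78(5.80) | 69.41(6.84) | 75.78(7.16) | 80.22(7.65) |
| 30 | 73.19(5.47) | 81.56(7.10) | 77.26(4.94) | 69.41(6.98) | 73.70(7.28) | 81.48(7.37) |
| 20 | 72.96(5.31) | 81.56(7.74) | 77.48(5.32) | 69.63(6.94) | 76.15(7.84) | 81.26(7.73) |
| 10 | 73.26(5.41) | 82.37(6.50) | 77.56(5.13) | 69.48(6.89) | 76.22(6.72) | 82.15(6.64) |
| 5 | 73.41(5.31) | 82.59(6.34) | 77.56(5.13) | 69.41(6.74) | 78.37(7.47) | 82.30(6.67) |
| 4 | 73.41(5.22) | 82.44(6.60) | 77.63(5.24) | 69.41(6.74) | 77.04(7.40) | 82.37(6.57) |
| 3 | 73.41(5.22) | 82.81(6.82) | 77.63(5.24) | 69.41(6.74) | 76.00(6.50) | 82.30(6.42) |
| 2 | 73.48(5.22) | 82.96(6.94) | 77.63(5.24) | 69.41(6.74) | 77.19(6.76) | 82.22(6.55) |
| 1 | 73.33(5.16) | 82.52(7.13) | 77.63(5.24) | 69.41(6.74) | 76.30(6.44) | 82.15(6.81) |
"""
CLASSIFIERS = ["KNN", "LR", "SVM", "GNB", "MLP", "Bagging"]


def parse_rows(text):
    """Return {classifier: {tw_seconds: (mean, sd)}} from markdown table rows."""
    table = {name: {} for name in CLASSIFIERS}
    for line in text.strip().splitlines():
        cells = [c.strip() for c in line.strip().strip("|").split("|")]
        tw = int(cells[0])
        for name, cell in zip(CLASSIFIERS, cells[1:]):
            mean, sd = cell.rstrip(")").split("(")
            table[name][tw] = (float(mean), float(sd))
    return table


def best_tw(table):
    """Return {classifier: TW length with the highest mean} (first one on ties)."""
    return {name: max(rows, key=lambda tw: rows[tw][0]) for name, rows in table.items()}


if __name__ == "__main__":
    print(best_tw(parse_rows(ROWS)))
```

For this table the sketch selects 90 s for KNN, 2 s for LR, 60 s for SVM, 20 s for GNB, 5 s for MLP, and 4 s for Bagging.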

| Feature | Frequency Band | Processing | Fp1 | Fpz | Fp2 | AF3 | AF4 | C3 | C1 | Cz | C2 | C4 | C6 | P1 | Pz | P2 | P4 | P8 | PO7 | PO3 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| PSD | Delta | Without ELBN | .000 | .000 | .000 | .000 | .000 | .003 | .019 | .712 | .066 | .002 | .000 | .301 | .086 | .109 | .085 | .000 | .036 | .156 |
| PSD | Theta | Without ELBN | .017 | .022 | .030 | .029 | .325 | .024 | .235 | .798 | .513 | .129 | .001 | .036 | .008 | .019 | .005 | .000 | .001 | .013 |
| PSD | Alpha | Without ELBN | .582 | .632 | .671 | .367 | .767 | .334 | .482 | .994 | .673 | .623 | .172 | .484 | .439 | .484 | .449 | .046 | .149 | .406 |
| PSD | Beta | Without ELBN | .437 | .252 | .497 | .398 | .539 | .000 | .020 | .814 | .082 | .001 | .000 | .326 | .216 | .368 | .092 | .000 | .000 | .001 |
| PSD | Gamma | Without ELBN | .218 | .032 | .246 | .064 | .457 | .000 | .000 | .454 | .002 | .000 | .000 | .000 | .000 | .000 | .000 | .000 | .000 | .000 |
| PSD | Delta | With ELBN | .000 | .000 | .000 | .000 | .000 | .000 | .000 | .038 | .000 | .000 | .000 | .000 | .000 | .000 | .000 | .000 | .000 | .000 |
| PSD | Theta | With ELBN | .000 | .000 | .000 | .000 | .000 | .000 | .000 | .001 | .000 | .000 | .000 | .000 | .000 | .000 | .000 | .000 | .000 | .000 |
| PSD | Alpha | With ELBN | .001 | .002 | .005 | .000 | .278 | .005 | .009 | .515 | .032 | .001 | .000 | .000 | .000 | .000 | .000 | .000 | .000 | .000 |
| PSD | Beta | With ELBN | .076 | .000 | .041 | .014 | .022 | .000 | .000 | .045 | .000 | .000 | .000 | .000 | .000 | .000 | .000 | .000 | .000 | .000 |
| PSD | Gamma | With ELBN | .009 | .000 | .016 | .014 | .050 | .000 | .000 | .011 | .000 | .000 | .000 | .000 | .000 | .000 | .000 | .000 | .000 | .000 |
| DE | Delta | Without ELBN | .008 | .003 | .349 | .567 | .266 | .577 | .442 | .756 | .965 | .827 | .770 | .004 | .008 | .079 | .628 | .386 | .141 | .109 |
| DE | Theta | Without ELBN | .013 | .000 | .894 | .003 | .218 | .000 | .000 | .098 | .071 | .509 | .061 | .431 | .000 | .000 | .211 | .059 | .000 | .000 |
| DE | Alpha | Without ELBN | .000 | .000 | .000 | .000 | .061 | .352 | .728 | .143 | .000 | .003 | .030 | .000 | .000 | .013 | .000 | .013 | .002 | .068 |
| DE | Beta | Without ELBN | .000 | .001 | .056 | .476 | .494 | .000 | .002 | .000 | .011 | .000 | .000 | .114 | .000 | .013 | .063 | .466 | .000 | .007 |
| DE | Gamma | Without ELBN | .202 | .243 | .000 | .000 | .007 | .126 | .005 | .000 | .001 | .018 | .120 | .000 | .054 | .000 | .000 | .001 | .086 | .000 |
| DE | Delta | With ELBN | .000 | .000 | .000 | .053 | .001 | .014 | .000 | .010 | .212 | .061 | .186 | .000 | .000 | .000 | .000 | .000 | .016 | .000 |
| DE | Theta | With ELBN | .000 | .000 | .095 | .000 | .000 | .000 | .000 | .001 | .000 | .000 | .000 | .000 | .000 | .001 | .000 | .000 | .000 | .000 |
| DE | Alpha | With ELBN | .000 | .000 | .000 | .000 | .000 | .000 | .000 | .000 | .000 | .000 | .000 | .000 | .000 | .000 | .000 | .000 | .000 | .000 |
| DE | Beta | With ELBN | .000 | .000 | .000 | .000 | .000 | .000 | .000 | .000 | .000 | .000 | .000 | .000 | .000 | .000 | .000 | .000 | .000 | .000 |
| DE | Gamma | With ELBN | .000 | .000 | .000 | .000 | .000 | .000 | .000 | .000 | .000 | .000 | .000 | .000 | .000 | .000 | .000 | .000 | .000 | .000 |
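
The p-value table can be summarized by counting, for each feature, band, and processing condition, how many of the 18 listed channels fall below the conventional 0.05 threshold. The sketch below does this for four rows copied from the table. The statistical test that produced the p-values is not stated in this section, and the sketch applies no multiple-comparison correction, so the counts are descriptive only. Entries reported as .000 are kept as 0.0, meaning p < .0005 after rounding.

```python
# Per-channel p-values copied from four rows of the table above
# (channel order Fp1, Fpz, Fp2, AF3, AF4, C3, C1, Cz, C2, C4, C6, P1, Pz, P2, P4, P8, PO7, PO3).
P_VALUES = {
    ("PSD", "Alpha", "Without ELBN"): [.582, .632, .671, .367, .767, .334, .482, .994, .673,
                                       .623, .172, .484, .439, .484, .449, .046, .149, .406],
    ("PSD", "Alpha", "With ELBN"): [.001, .002, .005, .000, .278, .005, .009, .515, .032,
                                    .001, .000, .000, .000, .000, .000, .000, .000, .000],
    ("DE", "Delta", "Without ELBN"): [.008, .003, .349, .567, .266, .577, .442, .756, .965,
                                      .827, .770, .004, .008, .079, .628, .386, .141, .109],
    ("DE", "Delta", "With ELBN"): [.000, .000, .000, .053, .001, .014, .000, .010, .212,
                                   .061, .186, .000, .000, .000, .000, .000, .016, .000],
}
N_CHANNELS = 18


def n_significant(pvals, alpha=0.05):
    """Count channels whose p-value is strictly below alpha (uncorrected)."""
    return sum(p < alpha for p in pvals)


if __name__ == "__main__":
    for (feat, band, proc), pvals in P_VALUES.items():
        print(f"{feat} {band} {proc}: {n_significant(pvals)}/{N_CHANNELS}")
```

For these four rows, the counts rise from 1 to 16 of 18 channels for PSD alpha and from 4 to 14 of 18 channels for DE delta when ELBN is applied.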

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ouyang, D.; Yuan, Y.; Li, G.; Guo, Z. The Effect of Time Window Length on EEG-Based Emotion Recognition. Sensors 2022, 22, 4939. https://doi.org/10.3390/s22134939

Ouyang D, Yuan Y, Li G, Guo Z. The Effect of Time Window Length on EEG-Based Emotion Recognition. Sensors. 2022; 22(13):4939. https://doi.org/10.3390/s22134939

Ouyang, Delin, Yufei Yuan, Guofa Li, and Zizheng Guo. 2022. "The Effect of Time Window Length on EEG-Based Emotion Recognition" Sensors 22, no. 13: 4939. https://doi.org/10.3390/s22134939

APA StyleOuyang, D., Yuan, Y., Li, G., & Guo, Z. (2022). The Effect of Time Window Length on EEG-Based Emotion Recognition. Sensors, 22(13), 4939. https://doi.org/10.3390/s22134939