Abstract

The traditional initial alignment algorithm for the strapdown inertial navigation system (SINS) fails at high latitudes because the meridians converge rapidly near the poles, which makes polar alignment a significant challenge. This paper presents a novel vision-aided initial alignment method for the SINS of autonomous underwater vehicles (AUVs) in polar regions. We redesign the initial alignment model by combining the inertial navigation mechanization equations in a transverse coordinate system (TCS) with visual measurements obtained from a camera fixed on the vehicle. The observability of the proposed method is analyzed under different swing modes, and the extended Kalman filter (EKF) is chosen as the information fusion algorithm. Simulation results show that the proposed method improves the accuracy of SINS initial alignment in polar regions, and that the deviation angle is estimated with similar accuracy under uniaxial, biaxial, and triaxial swing modes, which is consistent with the observability analysis.

1. Introduction

Autonomous underwater vehicles (AUVs) have long been an important tool for marine military tasks and marine resource development, especially polar resource exploration, and have attracted increasing attention from researchers around the world [1,2]. Because of the special geographical environment and geomagnetic characteristics of polar regions, common satellite navigation, radio navigation, and geomagnetic navigation cannot work effectively there for long periods [3,4,5]. Ionospheric scintillation frequently occurs at high latitudes; the strong phase changes and amplitude fluctuations it causes in the signal can interfere with global positioning system (GPS) receivers and greatly degrade the accuracy, reliability, and availability of GPS [6]. The inertial navigation system (INS) is highly autonomous and unaffected by external factors such as weather and location, so it can continuously provide velocity and attitude information. Therefore, as one of the main components of the AUV navigation system, the INS has become key to completing polar resource exploration missions [7].

However, accurate initial alignment of the navigation equipment must be completed before navigation starts; otherwise, navigation accuracy will be degraded [8]. As latitude increases, the angle between the Earth's rotation rate vector and the gravitational acceleration vector decreases until the two vectors coincide at the pole. Consequently, the strapdown inertial navigation system cannot self-align in the polar region [9,10,11], and initial alignment must be completed by other methods. To this end, the wander azimuth navigation system [12], the grid navigation system [13,14,15], and the transversal navigation system [16,17] have been designed and developed, and initial alignment has been carried out on their basis. However, none of these navigation systems has global navigation capability. Transfer alignment based on a main inertial navigation system (MINS) is the principal method of SINS initial alignment in the polar region [18,19]. Ref. [20] proposes a rapid transfer alignment scheme based on velocity and attitude matching, which requires the aircraft to perform simple maneuvers. For the initial alignment of shipborne inertial navigation in the polar region, Ref. [21] establishes an MINS transfer alignment model based on an inverse coordinate system. Based on the grid coordinate system, Ref. [22] estimates and corrects the inertial navigation errors of airborne weapons by matching their velocity and attitude with those of the main inertial navigation system. However, all of these algorithms rely on information from a main inertial navigation system, so they cannot be used to align the INS of an AUV that carries no high-precision MINS, which greatly limits their application.

In recent years, vision-aided inertial navigation has attracted extensive attention. Because cameras are low in cost, weight, and power consumption, they are ideal auxiliary sensors for the inertial navigation system, and they have been applied in indoor navigation [23], UAV navigation [24], and intelligent vehicle navigation [25]. After feature extraction, feature matching, tracking, and motion estimation over an image sequence, the attitude and position information is updated. The visual feature points and the constraints between two images are obtained by image matching, from which the motion of the device is determined [26,27]. On this basis, vision and inertial navigation are gradually being integrated, which has become a new research focus and development direction in the navigation field.

To address the problem that the SINS of an AUV cannot self-align in the polar region, this paper proposes a vision-aided initial alignment method for the SINS that combines vision with inertial navigation and uses the visually measured position and attitude as observations. In practice, the SINS on the AUV can use known feature points for alignment during the day and stars at night. Because the method does not rely on other high-precision navigation equipment, it can be used in complex situations.

The main work of this paper is as follows:

- (1) To overcome the inability of the SINS to use the traditional mechanization at high latitudes, a transverse mechanization based on the transverse coordinate system (TCS) is established, which better meets the requirements of AUV initial alignment in polar areas.

- (2) An initial alignment method of SINS aided by visual measurements is designed: SINS is combined with visual measurement, the state and measurement equations are built using the observed motion of the AUV as measurement information, and an extended Kalman filter (EKF) is designed to obtain the vision-aided SINS initial alignment method.

- (3) The observability of the proposed SINS initial alignment method is analyzed, and initial alignment simulation experiments on a swaying base are designed; similar alignment accuracies are obtained under different swing modes.

The rest of this article is organized as follows: Section 2 describes the establishment of the TCS and the transverse mechanization. In Section 3, SINS is combined with visual measurement, and an EKF-based vision-aided alignment method is proposed. Section 4 constructs the observability matrix and analyzes the observability of the algorithm. In Section 5, numerical simulations of SINS polar alignment are carried out to verify the performance of the method. In Section 6, the pitch uniaxial swing scheme is verified experimentally. Finally, the conclusions and future work are summarized in Section 7.

2. Polar Initial Alignment Algorithm Based on the Transverse Coordinate System

2.1. Transversal Earth Coordinate System (TEF) and Transversal Geographic Coordinate System (TGF) Definition and Parameter Conversion

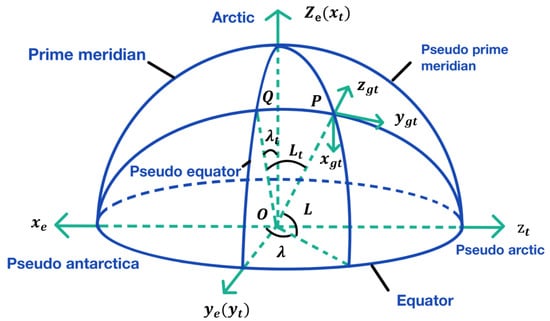

The transverse longitude and latitude of a sphere are defined by analogy with geographic longitude and latitude, as shown in Figure 1: a traditional meridian is turned into the equator, giving the pseudo-longitude and pseudo-latitude. The transverse Earth coordinate system (TEF) uses the E/W meridian circle as the pseudo-equator, the meridian circle perpendicular to it as the pseudo-prime meridian, and the intersection of the equator and the pseudo-prime meridian as the pseudo-pole. In the TEF, the east-north-up geographic coordinate system is called the transverse geographic coordinate system (TGF). Taking point P as an example, the pseudo-north axis is tangent to the pseudo-meridian at P and points toward the pseudo-North Pole, the pseudo-up axis is perpendicular to the local horizontal plane at P, and the pseudo-east axis is tangent to the pseudo-latitude circle at P. These three axes constitute a right-handed Cartesian coordinate system, which is the transverse geographic coordinate system defined by the pseudo-Earth coordinate system.

Figure 1.

Transversal coordinate system.

According to the definition of the TCS, the e-frame can be turned into the TEF by rotating it about one of its coordinate axes. Based on rotation theory, the conversion between the e-frame and the TEF can be expressed as follows:

Using the relations of the spherical right triangle, the conversion of latitude and longitude between the geographic frame and the transverse frame can be defined as:

In addition, the conversion between the TGF and the g-frame can be expressed as follows:

where the transformation matrix of the e-frame to the g-frame is:
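The conversions above can be sketched numerically. The following Python/NumPy sketch is illustrative only and rests on an assumption not stated in the text: the TEF is taken to be the e-frame rotated by 90° about its y-axis, so that x' = -z, y' = y, z' = x, a convention used in several transverse-INS papers. The function names are hypothetical. The sketch maps geographic latitude and longitude to transverse latitude and longitude through the unit position vector, and builds the g-frame to TGF matrix as a product of the east-north-up matrices of both frames, which by construction is a rotation about the shared up axis.

```python
import numpy as np

# ASSUMPTION: TEF axes x' = -z, y' = y, z' = x expressed in the e-frame,
# i.e. the e-frame rotated by 90 deg about its y-axis.
C_E_TO_TEF = np.array([[0.0, 0.0, -1.0],
                       [0.0, 1.0, 0.0],
                       [1.0, 0.0, 0.0]])


def earth_to_enu(lat, lon):
    """DCM from an Earth-fixed frame to the local east-north-up frame (radians)."""
    sl, cl, so, co = np.sin(lat), np.cos(lat), np.sin(lon), np.cos(lon)
    return np.array([[-so, co, 0.0],
                     [-sl * co, -sl * so, cl],
                     [cl * co, cl * so, sl]])


def geographic_to_transverse(lat, lon):
    """Geographic (lat, lon) -> transverse (lat_t, lon_t), all in radians."""
    r_e = np.array([np.cos(lat) * np.cos(lon), np.cos(lat) * np.sin(lon), np.sin(lat)])
    r_t = C_E_TO_TEF @ r_e                  # same unit vector resolved in the TEF
    lat_t = np.arcsin(np.clip(r_t[2], -1.0, 1.0))
    lon_t = np.arctan2(r_t[1], r_t[0])
    return lat_t, lon_t


def g_to_tgf_dcm(lat, lon):
    """DCM from the geographic (ENU) frame to the TGF at the same point."""
    lat_t, lon_t = geographic_to_transverse(lat, lon)
    # g -> e, then e -> TEF, then TEF -> TGF
    return earth_to_enu(lat_t, lon_t) @ C_E_TO_TEF @ earth_to_enu(lat, lon).T


C = g_to_tgf_dcm(np.radians(85.0), np.radians(30.0))
# Angle of the TGF pseudo-east axis measured from geographic east toward north
sigma = np.degrees(np.arctan2(C[0, 1], C[0, 0]))
print(np.degrees(geographic_to_transverse(np.radians(85.0), np.radians(30.0))), sigma)
```

Under this assumed convention the geographic North Pole maps to transverse latitude 0° and transverse longitude 180°, so the secant of the transverse latitude stays bounded near the pole, which is the motivation for mechanizing in the TGF.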

2.2. The Mechanization Equations of Latitude and Longitude in the TGF

The TGF is defined as the navigation frame (n-frame) of the transverse mechanization, and its mechanization equations take the same form as those of a north-pointing INS.

- (1) Attitude angle differential equation:

- (2) Velocity differential equation:

The velocity equation of the vehicle in the transverse navigation frame is:

where , .

- (3) Position differential equation:

The differential equations of the transverse latitude, transverse longitude, and height h can be expressed as follows:
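As an illustration of the attitude, velocity, and position equations above, the sketch below performs one first-order Euler step of a transverse mechanization. It assumes a spherical Earth of radius R_EARTH, constant gravity G0, the e-frame to TEF rotation x' = -z, y' = y, z' = x, and east-north-up axes for the TGF; these constants and function names are hypothetical and not taken from the paper. A practical implementation would use a higher-order attitude update (for example, a rotation-vector algorithm) and an ellipsoidal Earth model.

```python
import numpy as np

OMEGA_IE = 7.292115e-5  # Earth rotation rate, rad/s
R_EARTH = 6378137.0     # ASSUMPTION: spherical Earth radius, m
G0 = 9.78               # ASSUMPTION: constant gravity magnitude, m/s^2

# ASSUMPTION: e-frame -> TEF rotation with x' = -z, y' = y, z' = x.
C_E_TO_TEF = np.array([[0.0, 0.0, -1.0], [0.0, 1.0, 0.0], [1.0, 0.0, 0.0]])


def skew(v):
    """Cross-product matrix [v x]."""
    return np.array([[0.0, -v[2], v[1]], [v[2], 0.0, -v[0]], [-v[1], v[0], 0.0]])


def earth_to_enu(lat, lon):
    """DCM from an Earth-fixed frame to the local east-north-up frame."""
    sl, cl, so, co = np.sin(lat), np.cos(lat), np.sin(lon), np.cos(lon)
    return np.array([[-so, co, 0.0], [-sl * co, -sl * so, cl], [cl * co, cl * so, sl]])


def transverse_rates(lat_t, lon_t, h, v):
    """Earth rate and transport rate resolved in the TGF (east, north, up)."""
    w_ie_tef = C_E_TO_TEF @ np.array([0.0, 0.0, OMEGA_IE])
    w_ie_n = earth_to_enu(lat_t, lon_t) @ w_ie_tef
    r = R_EARTH + h
    w_en_n = np.array([-v[1] / r, v[0] / r, v[0] * np.tan(lat_t) / r])
    return w_ie_n, w_en_n


def mechanize_step(C_bn, v, pos, w_ib_b, f_b, dt):
    """One first-order Euler step; pos = [transverse lat, transverse lon, h]."""
    lat_t, lon_t, h = pos
    w_ie_n, w_en_n = transverse_rates(lat_t, lon_t, h, v)
    # Attitude: dC/dt = C [w_nb^b x], with w_nb^b = w_ib^b - C^T (w_ie^n + w_en^n)
    w_nb_b = w_ib_b - C_bn.T @ (w_ie_n + w_en_n)
    C_new = C_bn @ (np.eye(3) + skew(w_nb_b) * dt)
    # Velocity: dv/dt = C f^b - (2 w_ie^n + w_en^n) x v + g^n
    g_n = np.array([0.0, 0.0, -G0])
    v_dot = C_bn @ f_b - np.cross(2.0 * w_ie_n + w_en_n, v) + g_n
    # Position: transverse latitude, transverse longitude and height rates
    r = R_EARTH + h
    pos_dot = np.array([v[1] / r, v[0] / (r * np.cos(lat_t)), v[2]])
    return C_new, v + v_dot * dt, np.asarray(pos) + pos_dot * dt
```

For a stationary, level vehicle whose body axes coincide with the TGF, feeding the gyros the Earth rate and the accelerometers the reaction to gravity leaves attitude, velocity, and position unchanged, which is a quick check of the signs.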

- (4) Attitude error equation:

Assuming that the scale factor error and installation error of the SINS gyroscopes have been compensated, the attitude error equation in vector form can be expressed as follows:

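where the partial derivatives of the navigation-frame angular rate with respect to the pseudo-velocity components and the transverse position (transverse latitude, transverse longitude, and height h) are: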

The gyro error consists of a random constant and Gaussian white noise:

- (5) Velocity error equation:

Assuming that the scale factor error and installation error of the accelerometers have been compensated, the velocity error equation in vector form can be expressed as follows:

The accelerometer error is assumed to consist of a random constant and Gaussian white noise.

- (6) Position error equation:

The components of the position error equation are:
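The error equations referred to in items (4) to (6) are not reproduced in this text. For reference, a commonly used form of the attitude, velocity, and position error equations (psi-angle convention, spherical Earth of radius R, gravity error neglected), written in the TGF with transverse latitude L_t and transverse longitude λ_t, is sketched below. The symbols are our own, and it is an assumption that the paper uses the same sign convention.

```latex
\begin{aligned}
\dot{\boldsymbol{\phi}} &= \boldsymbol{\phi}\times\boldsymbol{\omega}_{in}^{n} + \delta\boldsymbol{\omega}_{in}^{n} - \mathbf{C}_{b}^{n}\boldsymbol{\varepsilon}^{b},
\qquad \boldsymbol{\varepsilon}^{b} = \boldsymbol{\varepsilon}_{c} + \mathbf{w}_{g}\\
\delta\dot{\mathbf{v}}^{n} &= \mathbf{f}^{n}\times\boldsymbol{\phi}
- \left(2\boldsymbol{\omega}_{ie}^{n}+\boldsymbol{\omega}_{en}^{n}\right)\times\delta\mathbf{v}^{n}
- \left(2\delta\boldsymbol{\omega}_{ie}^{n}+\delta\boldsymbol{\omega}_{en}^{n}\right)\times\mathbf{v}^{n}
+ \mathbf{C}_{b}^{n}\boldsymbol{\nabla}^{b},
\qquad \boldsymbol{\nabla}^{b} = \boldsymbol{\nabla}_{c} + \mathbf{w}_{a}\\
\delta\dot{L}_{t} &= \frac{\delta v_{N}}{R+h} - \frac{v_{N}\,\delta h}{(R+h)^{2}}\\
\delta\dot{\lambda}_{t} &= \frac{\delta v_{E}}{(R+h)\cos L_{t}}
+ \frac{v_{E}\tan L_{t}\,\delta L_{t}}{(R+h)\cos L_{t}}
- \frac{v_{E}\,\delta h}{(R+h)^{2}\cos L_{t}}\\
\delta\dot{h} &= \delta v_{U}
\end{aligned}
```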

3. Visually Assisted SINS Initial Alignment Algorithm in the Polar Region

3.1. The Theoretical Basis of Visual Positioning

The state vector of the filter can be expressed as follows:

where the states are, in order, the misalignment angle of the SINS, the velocity error, the position error, the constant drift of the gyroscopes, the constant bias of the accelerometers, the setting-angle (mounting) error between the INS and the camera, and the error caused by the lever-arm effect between the camera and the INS.

The state equation of the system can be expressed as follows:

Here, the transverse geographic coordinate system (TGF) is used as the navigation coordinate system (n-frame).
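The state vector and state equation described above can be written compactly as follows. This is a sketch in our own notation: μ for the camera-INS setting-angle error and δl for the lever-arm error are hypothetical labels, and modeling the sensor biases, setting-angle error, and lever-arm error as random constants is an assumption based on the text describing them as constant errors. Each block is three-dimensional, giving 21 states.

```latex
\begin{aligned}
\mathbf{X} &= \begin{bmatrix}
\boldsymbol{\phi}^{T} & (\delta\mathbf{v}^{n})^{T} & (\delta\mathbf{p})^{T} &
\boldsymbol{\varepsilon}_{c}^{T} & \boldsymbol{\nabla}_{c}^{T} &
\boldsymbol{\mu}^{T} & (\delta\mathbf{l})^{T}
\end{bmatrix}^{T} \in \mathbb{R}^{21},\\
\dot{\mathbf{X}} &= \mathbf{F}\mathbf{X} + \mathbf{G}\mathbf{W},
\qquad
\dot{\boldsymbol{\varepsilon}}_{c} = \dot{\boldsymbol{\nabla}}_{c} = \dot{\boldsymbol{\mu}} = \delta\dot{\mathbf{l}} = \mathbf{0},
\end{aligned}
```

Here the first nine rows of F come from the attitude, velocity, and position error equations of Section 2, and W collects the gyro and accelerometer white noises.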

3.2. The Establishment of the Measurement Equation of Filter

The transformation between the AUV body coordinate system and the camera coordinate system is represented by a three-dimensional rotation matrix C and a translation vector T:

In homogeneous coordinates, the above formula becomes:

where M is the relative pose transformation matrix:

whose entries are the components of the rotation matrix C and the translation vector T between the AUV body coordinate system and the camera coordinate system.

According to the pinhole imaging model, the conversion relationship between the camera coordinate system and image plane coordinate system is as follows:

where f is the focal length of the camera. Substituting the homogeneous transformation into the pinhole model gives:

In this formula, the intrinsic parameter matrix contains the parameters that reflect the internal optical and geometric characteristics of the camera, and the extrinsic parameter matrix contains the attitude transformation matrix C and the translation vector T, which describe the spatial relationship between the camera coordinate system and the three-dimensional reference coordinate system.
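The chain of the homogeneous transformation and the pinhole model can be sketched as follows. The sketch assumes an ideal pinhole camera without lens distortion; the intrinsic matrix uses equivalent focal lengths fx, fy and principal point (u0, v0), and all numeric values and function names are hypothetical.

```python
import numpy as np


def relative_pose_matrix(C, T):
    """4x4 homogeneous matrix M = [[C, T], [0, 1]] mapping reference-frame points to the camera frame."""
    M = np.eye(4)
    M[:3, :3] = C
    M[:3, 3] = T
    return M


def intrinsic_matrix(fx, fy, u0, v0):
    """Ideal pinhole intrinsic matrix (no skew, no distortion)."""
    return np.array([[fx, 0.0, u0], [0.0, fy, v0], [0.0, 0.0, 1.0]])


def project(K, M, X_ref):
    """Project a 3-D reference-frame point to pixel coordinates (u, v)."""
    X_c = (M @ np.append(X_ref, 1.0))[:3]   # point expressed in the camera frame
    if X_c[2] <= 0:
        raise ValueError("point is behind the camera")
    uvw = K @ X_c                            # homogeneous pixel coordinates scaled by depth z_c
    return uvw[:2] / uvw[2]
```

For example, with fx = fy = 800, (u0, v0) = (320, 240), C = I, and T = (0, 0, 2), the point (0.1, -0.05, 0) projects to pixel (360, 220).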

- (1) Visual measurement equation

The position coordinates of the feature points in the image plane coordinate system (p-frame) can be obtained from the image, where the subscript i denotes the ith feature point.

The feature points satisfy the collinearity equation:

In this equation, the quantities are: the position of feature point i in the n-frame; the position of the camera optical center in the n-frame; the attitude transformation matrix from the n-frame to the c-frame; the image coordinates of feature point i in the p-frame; the projection onto the optical axis of the distance from feature point i to the camera optical center; the camera's equivalent focal lengths along the two image axes; and the p-frame coordinates of the intersection of the optical axis with the image plane. For a calibrated camera, the equivalent focal lengths and the optical-axis intersection are known quantities.
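Written out with the quantities just listed, the collinearity equation takes the standard pinhole form sketched below. The symbol names are our own and assumed rather than taken from the paper: P_i^n and P_c^n are the n-frame positions of feature point i and of the optical center, C_n^c is the n-frame to c-frame attitude matrix, (u_i, v_i) are the image coordinates, z_ci is the depth along the optical axis, f_x and f_y are the equivalent focal lengths, and (u_0, v_0) is the optical-axis intersection.

```latex
z_{ci}\begin{bmatrix} u_{i} \\ v_{i} \\ 1 \end{bmatrix}
=
\begin{bmatrix} f_{x} & 0 & u_{0} \\ 0 & f_{y} & v_{0} \\ 0 & 0 & 1 \end{bmatrix}
\mathbf{C}_{n}^{c}\left(\mathbf{P}_{i}^{n} - \mathbf{P}_{c}^{n}\right)
```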

At time t, the p-frame image coordinates and the n-frame coordinates of n (n > 6) feature points are available. Using a linear approximation of the relationship between the p-frame and n-frame coordinates, the transformation matrix from the n-frame to the c-frame and the position of the camera in the navigation frame at that moment can be obtained by the least-squares method.
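One standard way to obtain such a linear least-squares solution is the direct linear transform (DLT), which needs at least six non-coplanar points. The sketch below uses DLT as a stand-in; it is not necessarily the authors' exact solver, and the function name is hypothetical. It estimates the 3×4 projection matrix from the SVD null vector, removes the known intrinsic matrix K, fixes the scale with det(C) = +1, projects the rotation onto SO(3), and recovers the optical-center position from the translation.

```python
import numpy as np


def dlt_camera_pose(K, pts_n, pts_px):
    """Linear (DLT) least-squares estimate of C_n^c and the camera position P_c^n.

    pts_n: (N, 3) feature-point coordinates in the n-frame, N >= 6, non-coplanar.
    pts_px: (N, 2) measured pixel coordinates of the same points.
    """
    A = []
    for (X, Y, Z), (u, v) in zip(pts_n, pts_px):
        Xh = [X, Y, Z, 1.0]
        A.append([*Xh, 0.0, 0.0, 0.0, 0.0, *[-u * x for x in Xh]])
        A.append([0.0, 0.0, 0.0, 0.0, *Xh, *[-v * x for x in Xh]])
    # Least-squares solution of A p = 0 with |p| = 1: last right singular vector
    _, _, Vt = np.linalg.svd(np.asarray(A))
    P = Vt[-1].reshape(3, 4)
    # Remove the known intrinsics: K^-1 P = s [C | t], with t = -C P_c
    Rt = np.linalg.solve(K, P)
    s = np.cbrt(np.linalg.det(Rt[:, :3]))  # scale and sign fixed by det(C) = +1
    Rt = Rt / s
    # Project the rotation block onto SO(3) to absorb noise
    U, _, Vt2 = np.linalg.svd(Rt[:, :3])
    C_nc = U @ Vt2
    t = Rt[:, 3]
    P_c = -C_nc.T @ t                      # optical-center position in the n-frame
    return C_nc, P_c
```

With noisy pixel measurements, normalizing the image and world coordinates before building the linear system (Hartley normalization) and refining the result with a nonlinear least-squares step usually improves accuracy.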

The relationship between the camera attitude matrix and the vehicle attitude matrix can be expressed as follows:

The relationship between the camera position and the vehicle position can be expressed as follows:

where the first quantity is the transformation matrix from the b-frame to the c-frame and the second is the position of the vehicle in the n-frame.

Once the camera is calibrated and fixed to the vehicle, the transformation matrix and the translation vector from the b-frame to the c-frame are known. The attitude transformation matrix and the position of the vehicle in the n-frame can then be calculated from these two relations.
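The two relations can be sketched as follows, assuming the calibrated extrinsics map body-frame points to the camera frame as X_c = C X_b + T, the convention of the body-to-camera transformation given at the start of Section 3.2. The function and variable names are hypothetical. The camera optical center expressed in the body frame, -C^T T, is the lever arm used to move from the camera position to the vehicle position.

```python
import numpy as np


def vehicle_pose_from_camera(C_nc, P_c_n, C_bc, T_bc):
    """Recover the vehicle attitude C_b^n and position P_b^n from the camera pose.

    C_nc, P_c_n: camera attitude (n-frame to c-frame) and optical-center position in the n-frame.
    C_bc, T_bc: calibrated body-to-camera extrinsics, X_c = C_bc @ X_b + T_bc.
    """
    C_bn = C_nc.T @ C_bc           # C_b^n = C_c^n C_b^c
    lever_b = -C_bc.T @ T_bc       # camera optical center expressed in the body frame
    P_b_n = P_c_n - C_bn @ lever_b # remove the lever arm resolved in the n-frame
    return C_bn, P_b_n
```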

- (2) Establishment of the measurement equation

The measurement equation of the system can be expressed as follows:

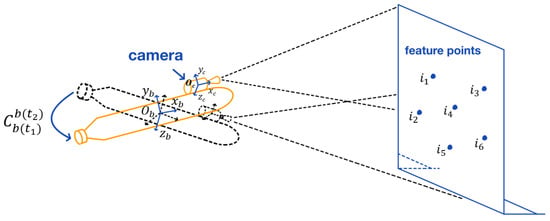

where the attitude measurement is the attitude angle corresponding to the attitude transformation matrix, and the remaining quantities are the attitude matrix measured by the camera, the transformation matrix from the camera to the INS, the attitude matrix computed by the INS, the position computed by the INS, the position measured by the camera, the visual measurement noise, and the transformation matrix from the b-frame to the c-frame. The schematic diagram of the vision-aided INS alignment platform is shown in Figure 2.

Figure 2.

Schematic diagram of the alignment platform of the visual aided INS.
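A minimal sketch of forming the measurement vector follows. It assumes that the camera attitude has already been converted to a vehicle attitude matrix C_b^n through the calibrated camera-to-INS transformation, that the INS attitude obeys C_ins ≈ (I - [φ×]) C_cam for a small misalignment φ, and that both positions are expressed in the same Cartesian n-frame. The function names are hypothetical, and the mounting-error and lever-arm terms of the paper's measurement model are not modeled here.

```python
import numpy as np


def skew(v):
    """Cross-product matrix [v x] (also handy for simulating a misaligned INS attitude)."""
    return np.array([[0.0, -v[2], v[1]], [v[2], 0.0, -v[0]], [-v[1], v[0], 0.0]])


def vee(S):
    """Inverse of skew for an antisymmetric matrix."""
    return np.array([S[2, 1], S[0, 2], S[1, 0]])


def attitude_position_measurement(C_bn_ins, P_ins, C_bn_cam, P_cam):
    """Return Z = [phi; P_ins - P_cam] assuming C_ins = (I - [phi x]) C_cam for small phi."""
    D = C_bn_ins @ C_bn_cam.T       # approx. I - [phi x]
    phi = vee(0.5 * (D.T - D))      # antisymmetric part recovers [phi x]
    return np.concatenate([phi, np.asarray(P_ins) - np.asarray(P_cam)])
```

If the position error states are kept in transverse latitude, longitude, and height, the north and east components of the Cartesian difference must be divided by (R + h) and (R + h)cos L_t, respectively, before they are compared with those states.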

4. Observability Analysis of SINS Polar Initial Alignment Algorithm with Visual Assistance

The observability of the system differs under different swaying modes. Observability analysis is therefore used to analyze the system under different maneuvers and to find the maneuver most suitable for AUV initial alignment in the polar region.

In this paper, we analyze the observability of the system by the analytical method.

When the vehicle sways about the inertial measurement unit (IMU), the position of the IMU does not change and the true velocity is zero, so the error equations can be simplified, which makes the analysis intuitive and simple. We then obtain:

The state space model is:

where:

Here, the observability matrix relates the state to the vector formed by the observations and their derivatives. Rows 1 to 18 are used for the analysis:

where:

which can be written as:

In this formula, the three attitude angles are yaw, roll, and pitch. In terms of the derivative order of the observations, the states corresponding to the zero-order derivative of the observations have the highest degree of observability; those corresponding to the first-order derivatives have the second highest; and the state corresponding to the second-order derivative has the lowest observability.

It can be seen from the first three rows that is the coefficient of . is a constant in the static state, so cannot be accurately estimated by these three equations. Due to the coupling between and , the inaccuracy of will affect the estimation accuracy of . When in the three-axis swing, keeps changing and the coefficient of also changes. We can obtain many sets of nonlinear equations through multiple measurements at different times, and then accurately estimate the six values of . In the same way, the estimated value of will be more accurate in the triaxial swing state than in the stationary state. In addition, can be derived from the 16th and 17th rows. can be estimated through the second derivative of the position error and the estimation accuracy is . Therefore, the and the will converge faster and more accurately than the in theory. In addition, taking into account the coupling relationship between , it will have an advantage on the estimation of .

However, triaxial sway is difficult to perform in engineering practice, so the observability under uniaxial and biaxial sway is analyzed below.

For uniaxial sway, the change of pitch will affect nine values of . The change of roll and yaw will affect six values of . Therefore, the estimation accuracy of uniaxial sway is pitch > roll = yaw. However, the estimation accuracy will be affected by the initial attitude with uniaxial sway. One degree of freedom will be ignored at a certain initial attitude. For example, when the initial pitch and yaw are both zero, the change in yaw will only cause the change of four values of . When taking biaxial sway, the initial attitude will not affect the estimation accuracy and at least eight values in will change. Thus, the effects of biaxial sway and triaxial sway are basically the same in theory.
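The link between derivative order, coupling, and swing can be illustrated with a small toy model rather than the paper's 21-state model. For an unforced, noise-free system, the k-th derivative of the observation is H F^k x, so the k-th block of the observability matrix is H F^k. The sketch below uses hypothetical states (position error, velocity error, and two biases that both drive the velocity error): a bias enters only through the second-order block, and two biases that enter identically cannot be separated while the attitude is fixed. Changing the sign with which one bias enters, a crude stand-in for a change of attitude during a swing, and stacking the two segments restores full rank. The stacking uses the stripped observability matrix of piecewise-constant systems, which is valid here because the null space of each segment's observability matrix lies in the null space of its F.

```python
import numpy as np


def observability_matrix(F, H):
    """Stack H, HF, HF^2, ..., HF^(n-1) for a time-invariant pair (F, H)."""
    n = F.shape[0]
    blocks, HFk = [], H.copy()
    for _ in range(n):
        blocks.append(HFk)
        HFk = HFk @ F
    return np.vstack(blocks)


def toy_model(c):
    """Hypothetical states [dp, dv, b1, b2] with dp' = dv and dv' = c*b1 + b2."""
    F = np.zeros((4, 4))
    F[0, 1] = 1.0
    F[1, 2] = c
    F[1, 3] = 1.0
    H = np.array([[1.0, 0.0, 0.0, 0.0]])  # only the position error is measured
    return F, H


F1, H = toy_model(1.0)    # segment 1: fixed attitude
F2, _ = toy_model(-1.0)   # segment 2: attitude changed by the swing
O1 = observability_matrix(F1, H)
O_stacked = np.vstack([O1, observability_matrix(F2, H)])
print(np.linalg.matrix_rank(O1), np.linalg.matrix_rank(O_stacked))  # 3 4
```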

5. Simulation

Assume that, in the simulation experiment, the initial position of the AUV in the g-frame is , and in the TGF is . The initial yaw is , the initial pitch and roll are , and the speed is . The AUV sways about the yaw, pitch, and roll axes with a swing amplitude of .

The filter is initialized as follows: the initial value of the system state is . The initial misalignment angles are and . The velocity error is . The position error is . The gyro constant drift is , and its random noise is . The accelerometer constant bias is , and its random noise is . The attitude difference between the camera and the IMU is , and the residual misalignment angle after calibration is . The lever arm between the camera and the IMU is , and the error caused by the lever arm is . The accuracy of visual measurement depends on the distance between the camera and the marker and on the spacing of the feature points on the marker: the larger the spacing between feature points and the closer the camera is to the marker, the higher the measurement accuracy. When the feature points are 30 mm apart and the camera is 2000 mm from the marker, the distance error is below 10 mm and the attitude error is below , and both errors behave as white noise. Therefore, in this simulation, the attitude measurement noise is set to , the position measurement noise to , the update period of the inertial navigation data to , the update period of the camera data to , and the filtering period to 1 s. The initial covariance matrix, the process noise matrix Q, and the measurement noise matrix R are as follows:

Under the above simulation conditions, the Kalman filter is used to carry out a moving-base alignment simulation lasting 200 s.
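For reference, the discrete predict and update steps of a Kalman filter applied to the linearized error model can be sketched as follows. Assumptions not stated in the paper: the transition matrix is approximated to first order as Φ ≈ I + FΔt, Q is treated as a continuous-time noise density already mapped into the state space (so the discrete process noise is approximately QΔt), and the Joseph form is used for the covariance update. The function names are hypothetical.

```python
import numpy as np


def kf_predict(x, P, F, Q, dt):
    """Prediction with the first-order transition Phi = I + F dt (ASSUMED Q_d = Q dt)."""
    Phi = np.eye(len(x)) + F * dt
    return Phi @ x, Phi @ P @ Phi.T + Q * dt


def kf_update(x, P, z, H, R):
    """Measurement update; Joseph form keeps P symmetric positive semi-definite."""
    S = H @ P @ H.T + R
    K = np.linalg.solve(S, H @ P).T        # K = P H^T S^-1 (P and S symmetric)
    x_new = x + K @ (z - H @ x)
    I_KH = np.eye(len(x)) - K @ H
    P_new = I_KH @ P @ I_KH.T + K @ R @ K.T
    return x_new, P_new
```

In the setting described above, the mechanization would run at the IMU rate, while prediction and, whenever a camera measurement is available, the update would run at the 1 s filtering period.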

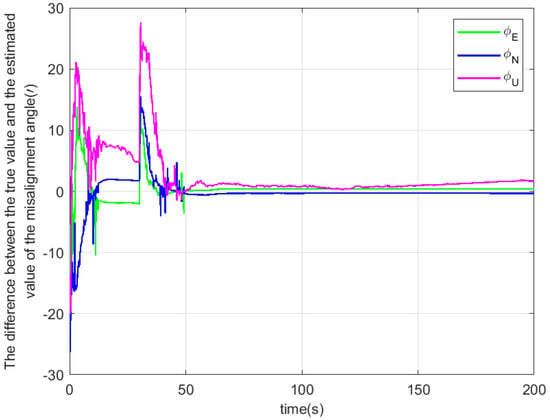

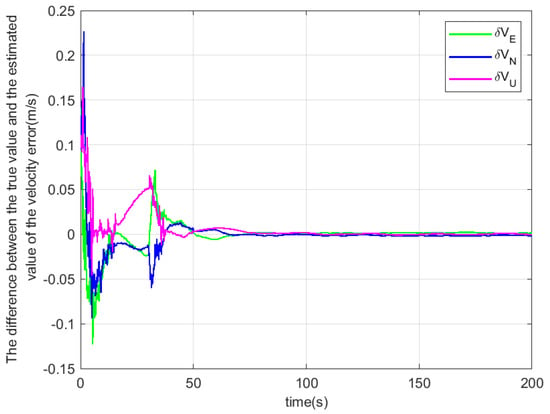

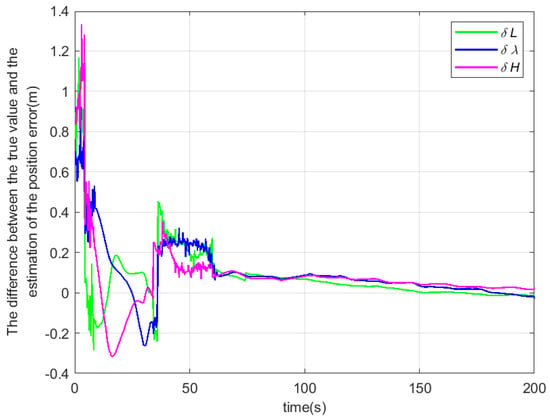

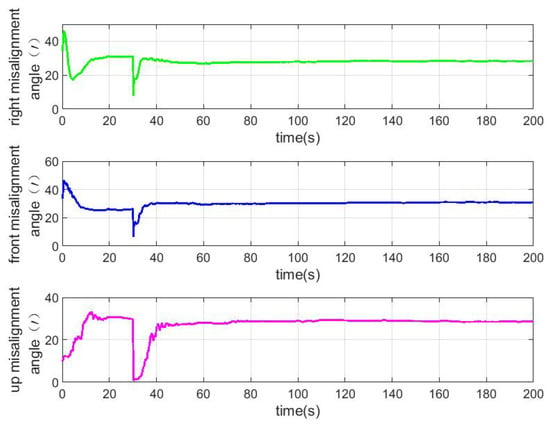

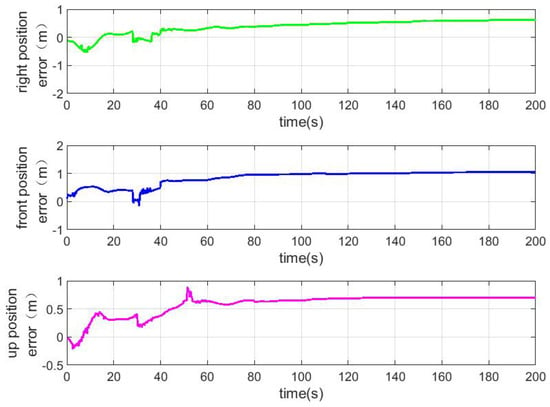

Under these simulation conditions, the differences between the true and estimated values are shown in Figure 3, Figure 4, and Figure 5. The residual mounting misalignment angle between the camera and the IMU after calibration is shown in Figure 6, and the residual lever arm error between the camera and the IMU after calibration is shown in Figure 7.

Figure 3.

The difference between the true value and the estimated value of the misalignment angle.

Figure 4.

The difference between the true value and the estimated value of the velocity error.

Figure 5.

The difference between the true value and the estimated value of the position error.

Figure 6.

Residual mounting misalignment angle between camera and IMU after calibration.

Figure 7.

Residual lever arm error between camera and IMU after calibration.

The figures show the state estimates under triaxial sway. Because the initial attitude error is large, the navigation solution is corrected once with the current estimates at the 30th second to reduce the nonlinearity of the model and obtain a better estimation result.
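The one-time correction at the 30th second can be sketched as a closed-loop feedback step: the estimated misalignment, velocity error, and position error are removed from the navigation solution and the corresponding states are reset to zero, so that the remaining error is small and the small-angle linearization is accurate again. The sketch assumes that the first nine states are the attitude, velocity, and position errors and the sign convention C_ins = exp(-[φ×]) C_true, v_ins = v_true + δv, p_ins = p_true + δp; both are assumptions, and the function names are hypothetical.

```python
import numpy as np


def skew(v):
    """Cross-product matrix [v x]."""
    return np.array([[0.0, -v[2], v[1]], [v[2], 0.0, -v[0]], [-v[1], v[0], 0.0]])


def rotvec_to_dcm(phi):
    """Rodrigues formula: exp([phi x])."""
    angle = np.linalg.norm(phi)
    if angle < 1e-12:
        return np.eye(3) + skew(phi)
    K = skew(np.asarray(phi) / angle)
    return np.eye(3) + np.sin(angle) * K + (1.0 - np.cos(angle)) * (K @ K)


def feedback_correct(C_bn, v, pos, x):
    """Apply the current error estimates once and reset the corrected states.

    ASSUMED layout: x[0:3] misalignment, x[3:6] velocity error, x[6:9] position error.
    The covariance is left unchanged in this simple reset.
    """
    C_new = rotvec_to_dcm(x[0:3]) @ C_bn   # undo C_ins = exp(-[phi x]) C_true
    v_new = np.asarray(v) - x[3:6]
    pos_new = np.asarray(pos) - x[6:9]
    x_new = np.array(x, dtype=float)
    x_new[0:9] = 0.0                        # sensor-bias and mounting states are kept
    return C_new, v_new, pos_new, x_new
```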

The estimates for the triaxial swing are shown in Table 1.

Table 1.

Estimates under triaxial sway.

As can be seen from the table, the estimation accuracy of the east misalignment angle, north misalignment angle, velocity error, and position error differs little between the swinging and stationary cases. However, the estimation accuracy of the up misalignment angle differs significantly among swing modes: it is markedly higher under three-axis, two-axis, and single-axis pitch swing than under single-axis roll swing, single-axis yaw swing, or the stationary case. Meanwhile, the residual installation error angle between the camera and the inertial navigation device can be accurately estimated, which is consistent with the theoretical analysis. With a gyro bias of and an accelerometer bias of , the triaxial alignment accuracy of the SINS is and . After 200 s of alignment, the deviation angle is estimated equally well under uniaxial, biaxial, and triaxial swing, which is consistent with the observability analysis. In addition, the residual lever arm error between the camera and the inertial navigation device can be accurately estimated only under triaxial and biaxial swing, not under uniaxial swing or in the stationary case. Finally, the injected gyro bias is not observable in any case, and its estimate shows a large deviation.

In engineering practice, the lever arm between the camera and the inertial navigation device can be obtained through calibration with millimeter-level accuracy. Therefore, the AUV only needs to perform a single-axis pitch swing for initial alignment in the polar region, which greatly reduces the engineering difficulty compared with three-axis swing.

6. Experimental Verification

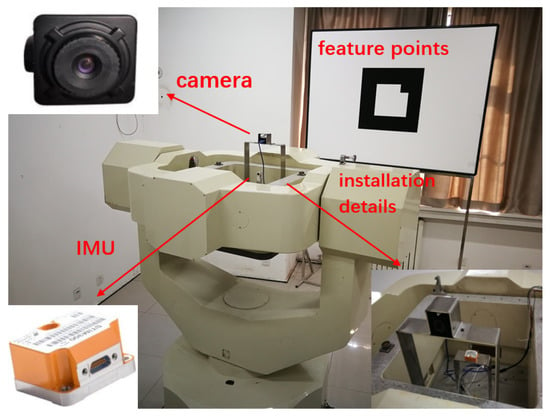

In Section 5, the SINS initial alignment schemes under different maneuvers were analyzed and simulated. The results show that the single-axis pitch swing scheme performs essentially the same as the three-axis swing scheme. In practical engineering, however, three-axis swing is difficult to realize, whereas single-axis pitch swing is simple and easy to operate. Therefore, the single-axis pitch swing scheme was adopted in this experiment to verify the vision-aided polar initial alignment algorithm for SINS. For feasibility and ease of operation, a hand-pushed turntable is used to simulate the rocking motion of the vehicle.

A STIM300 is selected as the inertial measurement unit, and a DYSMT205A industrial camera is used. The camera and the STIM300 are fixed on the turntable bracket so that their relative position and attitude remain unchanged, as shown in Figure 8. The camera uses an AR Marker to obtain pose information. The AR Marker is placed directly in front of the camera and does not move or change during the whole experiment. Before the turntable was pushed by hand to simulate the vehicle sway, the position and attitude of the AR Marker were measured and recorded with high-precision equipment. The lever arm error of the system is very small and can be ignored.

Figure 8.

Overall connection diagram of the experiment.

A total of three groups of data were recorded in the experiment. Because the pitch and roll angles are estimated with high accuracy, they are not reported here. Using the vision-aided initial alignment algorithm, the yaw angle converges to – after the turntable becomes stationary. The errors relative to the yaw angles given by the turntable are recorded in Table 2.

Table 2.

Difference between the yaw angle obtained by the vision-aided initial alignment algorithm and that of the corresponding turntable.

As Table 2 shows, the yaw angle error can be limited to about , which includes the small axis-misalignment angle between the camera and the turntable as well as that between the STIM300 and the turntable. The yaw angle error is therefore within an acceptable range and meets practical needs, which verifies the vision-aided SINS initial alignment algorithm.

7. Conclusions

This paper addresses the problem that the SINS of an AUV cannot self-align in the polar region. Combining inertial navigation with vision, a vision-aided SINS initial alignment method based on the transverse coordinate system is proposed. While the AUV swings, its own position and attitude are calculated from the motion of known feature points relative to the camera. SINS is combined with visual measurement, and the observed motion of the AUV is used as measurement information in the state and measurement equations to complete high-precision vision-aided SINS initial alignment. Simulation results show that the algorithm estimates the error angles effectively: the error between the estimated and true values is close to zero, does not diverge with time, and converges well. The experimental results show that the yaw angle error meets practical needs, demonstrating strong practical applicability. Moreover, the AUV only needs a single-axis pitch swing to meet the needs of polar alignment, which greatly reduces the engineering difficulty of AUV initial alignment in the polar region. Therefore, the algorithm better meets the requirements of AUV initial alignment in the polar region and, compared with traditional polar alignment methods, is simpler and more widely applicable. So far, the method has been verified by simulation and a laboratory turntable experiment; field tests will be carried out in future work.

Author Contributions

Conceptualization, F.Z.; methodology, F.Z.; software, X.G. and W.S.; validation, X.G. and W.S.; investigation, W.S.; resources, F.Z.; data curation, X.G.; writing—original draft preparation, F.Z.; writing—review and editing, W.S.; visualization, X.G.; supervision, F.Z.; project administration, F.Z.; funding acquisition, F.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| SINS | Strapdown inertial navigation system |
| AUV | Autonomous underwater vehicles |
| TCS | Transverse coordinate system |
| EKF | Extended Kalman filter |
| INS | Inertial navigation system |
| GPS | Global positioning system |
| MINS | Main inertial navigation system |
| TEF | Transversal Earth coordinate system |
| TGF | Transversal geographic coordinate system |
| IMU | Inertial measurement unit |

References

- González-García, J.; Gómez-Espinosa, A.; García-Valdovinos, L.G.; Salgado-Jiménez, T.; Cuan-Urquizo, E.; Escobedo Cabello, J.A. Experimental Validation of a Model-Free High-Order Sliding Mode Controller with Finite-Time Convergence for Trajectory Tracking of Autonomous Underwater Vehicles. Sensors 2022, 22, 488.

- Yan, Z.; Wang, L.; Wang, T.; Zhang, H.; Zhang, X.; Liu, X. A Polar Initial Alignment Algorithm for Unmanned Underwater Vehicles. Sensors 2017, 17, 2709.

- Babich, O.A. Extension of the Basic Strapdown INS Algorithms to Solve Polar Navigation Problems. Gyroscopy Navig. 2019, 10, 330–338.

- Ngwira, C.M.; Mckinnell, L.A.; Cilliers, P.J. GPS phase scintillation observed over a high-latitude Antarctic station during solar minimum. J. Atmos. Solar-Terr. Phys. 2010, 72, 718–725.

- Andalsvik, Y.L.; Jacobsen, K.S. Observed high-latitude GNSS disturbances during a less-than-minor geomagnetic storm. Radio Sci. 2014, 49, 1277–1288.

- Meziane, K.; Kashcheyev, A.; Jayachandran, P.T.; Hamza, A.M. On the latitude-dependence of the GPS phase variation index in the polar region. In Proceedings of the 2020 IEEE International Conference on Wireless for Space and Extreme Environments (WiSEE), Vicenza, Italy, 12–14 October 2020; pp. 72–77.

- Wu, R.; Wu, Q.; Han, F.; Zhang, R.; Hu, P.; Li, H. Hybrid Transverse Polar Navigation for High-Precision and Long-Term INSs. Sensors 2018, 18, 1538.

- Liu, M.; Gao, Y.; Li, G.; Guang, X.; Li, S. An Improved Alignment Method for the Strapdown Inertial Navigation System (SINS). Sensors 2016, 16, 621.

- Liu, W.C.; Tan, Z.Y.; Bian, H.W. Application of wander azimuth INS/GPS integrated navigation in polar region. Fire Control Command Control 2013, 38, 69–71. (In Chinese)

- Cui, W.; Ben, Y.; Zhang, H. A Review of Polar Marine Navigation Schemes. In Proceedings of the 2020 IEEE/ION Position, Location and Navigation Symposium (PLANS), Portland, OR, USA, 20–23 April 2020; pp. 851–855.

- Li, Q.; Ben, Y.; Yu, F. System reset of transversal strapdown INS for ship in polar region. Measurement 2015, 60, 247–257.

- Salychev, O.S. Applied Inertial Navigation Problems and Solutions; The BMSTU Press: Moscow, Russia, 2004.

- Ge, H.R.; Xu, X.; Huang, L.; Zhao, H. SINS/GNSS Polar Region Integrated Navigation Method based on Virtual Spherical Model. Navig. Position. Timing 2021, 8, 81–87.

- Yan, Z.; Wang, L.; Zhang, W.; Zhou, J.; Wang, M. Polar Grid Navigation Algorithm for Unmanned Underwater Vehicles. Sensors 2017, 17, 1599.

- Di, Y.R.; Huang, H.; Ruan, W. Underwater Vehicle Pole-aligned Network navigation Technology. Ship Sci. Technol. 2021, 43, 77–82.

- Yan, Z.; Wang, L.; Wang, T.; Yang, Z.; Li, J. Transversal navigation algorithm for Unmanned Underwater Vehicles in the polar region. In Proceedings of the 2018 37th Chinese Control Conference (CCC), Wuhan, China, 25–27 July 2018; pp. 4546–4551.

- Yan, Z.; Wang, L.; Wang, T.; Zhang, H.; Yang, Z. Polar Transversal Initial Alignment Algorithm for UUV with a Large Misalignment Angle. Sensors 2018, 18, 3231.

- Lin, X.; Bian, H.; Wang, R. Analysis of Navigation Performance of Initial Error Based on INS Transverse Coordinate Method in Polar Region. In Proceedings of the 2018 IEEE CSAA Guidance, Navigation and Control Conference (CGNCC), Xiamen, China, 10–12 August 2018; pp. 1–6.

- Cheng, J.H.; Liu, J.X.; Zhao, L. Review on the Development of Polar Navigation and Positioning Support Technology. Chin. Ship Res. 2021, 16, 16–29.

- Kain, J.E.; Cloutier, J.R. Rapid Transfer Alignment for Tactical Weapon Applications. In Proceedings of the AIAA Guidance, Navigation, and Control Conference, Boston, MA, USA, 14–19 August 1989; pp. 1290–1300.

- Sun, F.; Yang, X.L.; Ben, Y.Y. Research on Polar Transfer Alignment Technology Based on Inverse coordinate System. J. Proj. Arrows Guid. 2014, 34, 179–182.

- Wu, F.; Qin, Y.Y.; Zhou, Q. Polar Transfer Alignment Algorithm for Airborne Weapon. J. Chin. Inert. Technol. 2013, 21, 141–146.

- Rantanen, J.; Makela, M.; Ruotsalainen, L.; Kirkko-Jaakkola, M. Motion Context Adaptive Fusion of Inertial and Visual Pedestrian Navigation. In Proceedings of the 2018 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Nantes, France, 24–27 September 2018; pp. 206–212.

- Shao, W.; Dou, L.; Zhao, H.; Wang, B.; Xi, H.; Yao, W. A Visual/Inertial Relative Navigation Method for UAV Formation. In Proceedings of the 2020 Chinese Control and Decision Conference (CCDC), Hefei, China, 22–24 August 2020; pp. 1831–1836.

- Wang, H.; Zhang, Y.; Yuan, Q.; Wu, H. Intelligent Vehicle Visual Navigation System Design. In Proceedings of the 2010 International Conference on Machine Vision and Human-machine Interface, Kaifeng, China, 24–25 April 2010; pp. 522–525.

- Jiayun, L.; Fubin, Z. A vision assisted navigation method suitable for polar regions used by autonomous underwater vehicle. In Proceedings of the OCEANS 2018 MTS/IEEE Charleston, Charleston, SC, USA, 22–25 October 2018; pp. 1–4.

- Chen, M.; Xu, J.H.; Yu, P. Research on the Integrated Navigation Technology of Inertial-Aided Visual Odometry. In Proceedings of the 2018 IEEE CSAA Guidance, Navigation and Control Conference (CGNCC), Xiamen, China, 10–12 August 2018; pp. 1–5.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).