Early-Stage Alzheimer’s Disease Categorization Using PET Neuroimaging Modality and Convolutional Neural Networks in the 2D and 3D Domains

Abstract

1. Introduction

2. Dataset Description

3. Methods

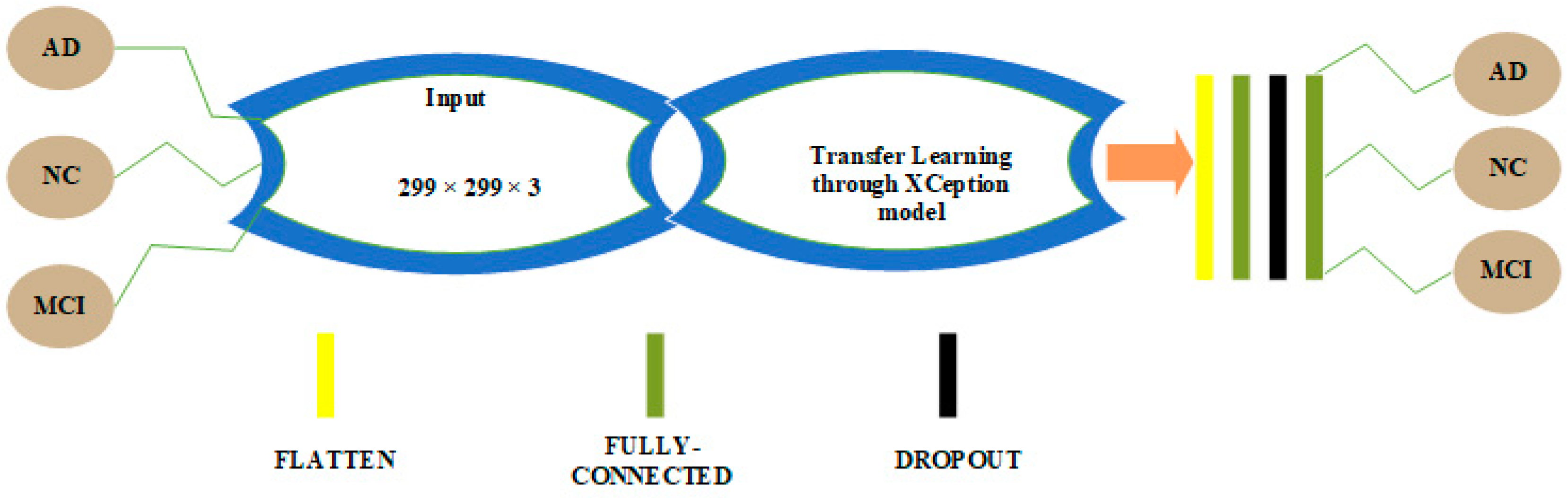

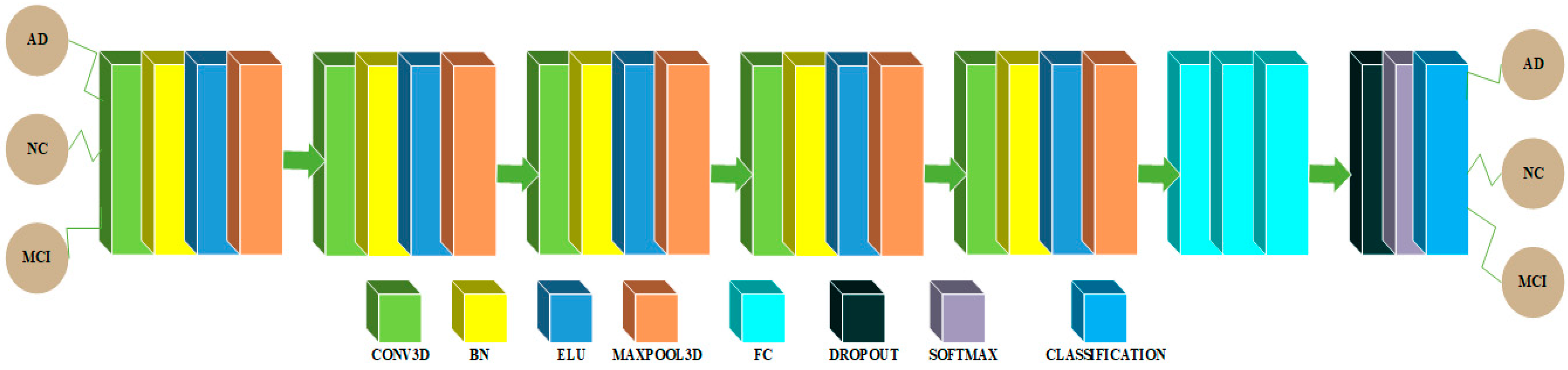

3.1. CNN Architectures in 2D and 3D Domains for Multiclass Categorization among AD, MCI and NC Classes

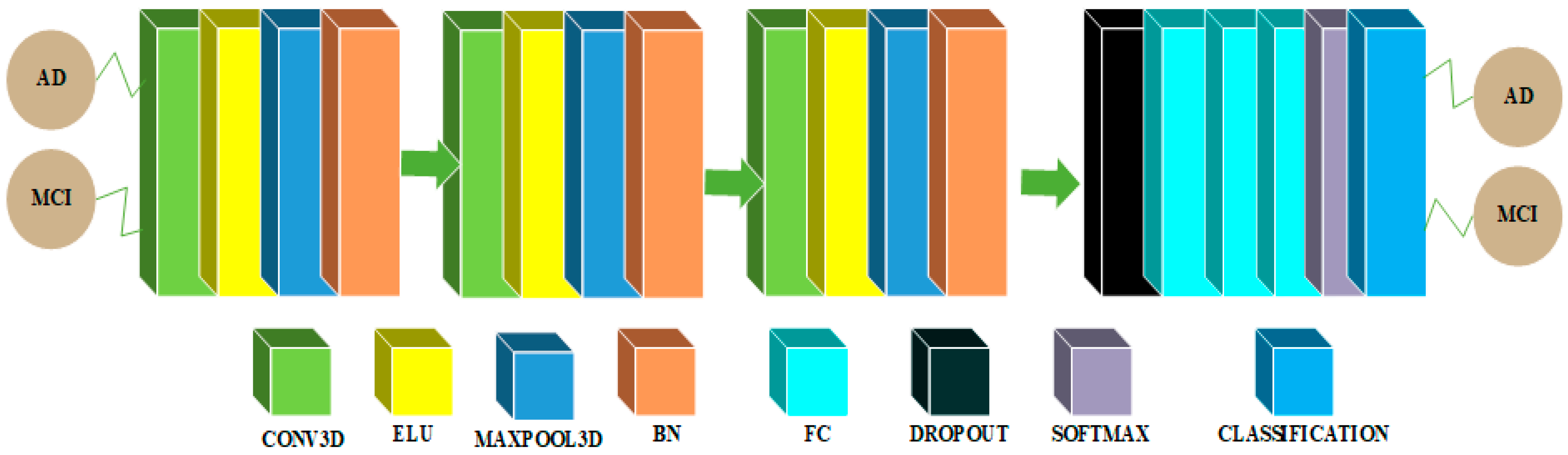

3.2. CNN Architectures in 2D and 3D Domains for Binary Classification among AD and MCI Classes

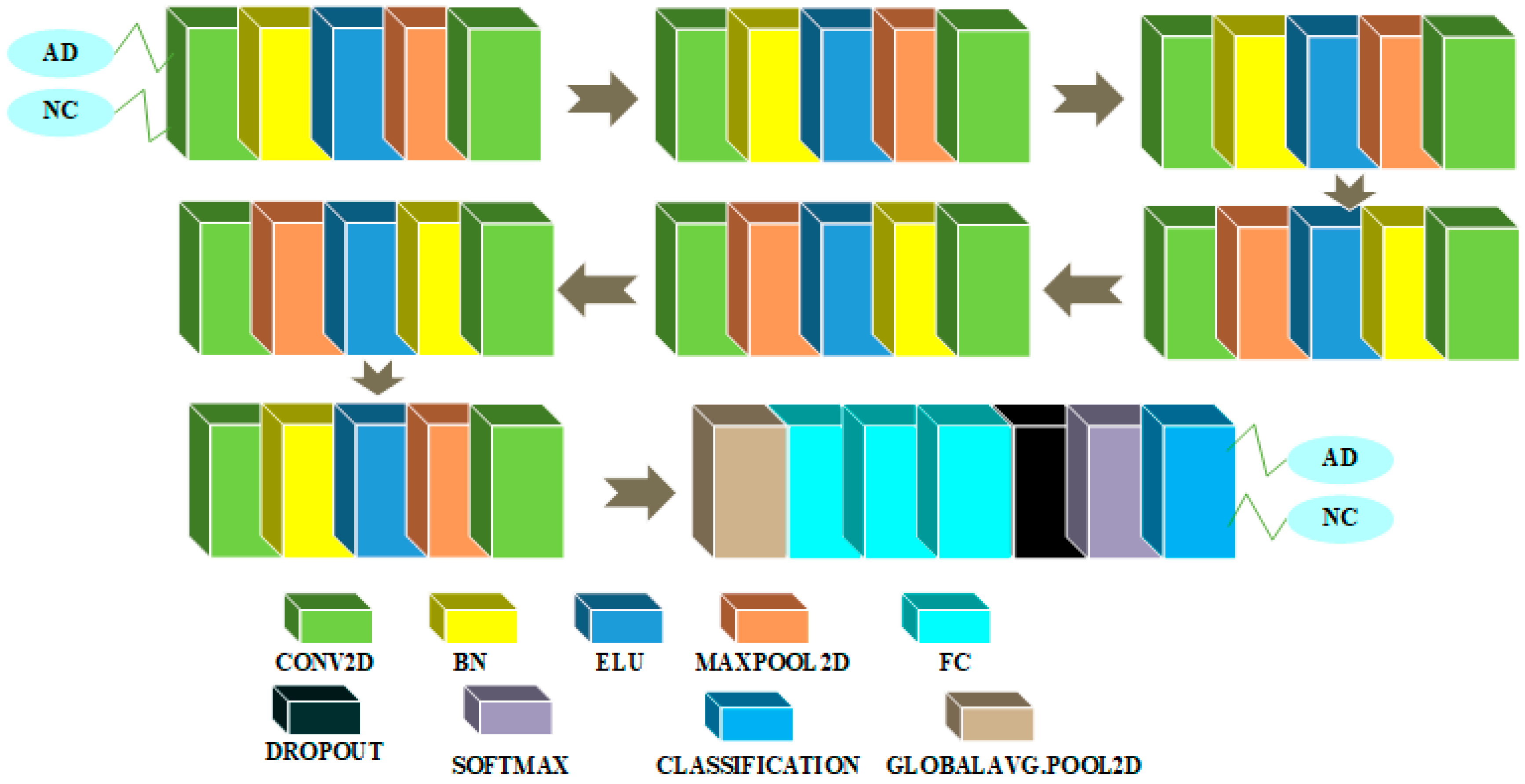

3.3. CNN Architectures in 2D and 3D Domains for AD-NC Binary Classification

3.4. CNN Architectures in 2D and 3D Domains for Binary Classification of MCI and NC

4. Experiments

5. Results and Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Lu, D.; Popuri, K.; Ding, G.W.; Balachandar, R.; Beg, M.F. Multimodal and Multiscale Deep Neural Networks for the Early Diagnosis of Alzheimer’s Disease using structural MR and FDG-PET images. Sci. Rep. 2018, 8, 5697.
2. Liu, S.; Liu, S.; Cai, W.; Che, H.; Pujol, S.; Kikinis, R.; Feng, D.; Fulham, M.J. Multimodal neuroimaging feature learning for multiclass diagnosis of Alzheimer’s disease. IEEE Trans. Biomed. Eng. 2015, 62, 1132–1140.
3. Liu, M.; Cheng, D.; Yan, W. Classification of Alzheimer’s Disease by Combination of Convolutional and Recurrent Neural Networks Using FDG-PET Images. Front. Neuroinform. 2018, 12, 35.
4. Martí-Juan, G.; Sanroma-Guell, G.; Piella, G. A survey on machine and statistical learning for longitudinal analysis of neuroimaging data in Alzheimer’s disease. Comput. Methods Programs Biomed. 2020, 189, 105348.
5. Shahwar, T.; Zafar, J.; Almogren, A.; Zafar, H.; Rehman, A.U.; Shafiq, M.; Hamam, H. Automated Detection of Alzheimer’s via Hybrid Classical Quantum Neural Networks. Electronics 2022, 11, 721.
6. Chételat, G.; Desgranges, B.; de la Sayette, V.; Viader, F.; Eustache, F.; Baron, J.C. Mild cognitive impairment: Can FDG-PET predict who is to rapidly convert to Alzheimer’s disease? Neurology 2003, 60, 1374–1377.
7. Bouwman, F.H.; Schoonenboom, S.N.; van der Flier, W.M.; van Elk, E.J.; Kok, A.; Barkhof, F.; Blankenstein, M.A.; Scheltens, P. CSF biomarkers and medial temporal lobe atrophy predict dementia in mild cognitive impairment. Neurobiol. Aging 2007, 28, 1070–1074.
8. Akbari, H.; Sadiq, M.T.; Rehman, A.U.; Ghazvini, M.; Naqvi, R.A.; Payan, M.; Bagheri, H.; Bagheri, H. Depression recognition based on the reconstruction of phase space of EEG signals and geometrical features. Appl. Acoust. 2021, 179, 108078.
9. Rehman, A.U.; Jiang, A.; Rehman, A.; Paul, A.; Din, S.; Sadiq, M.T. Identification and role of opinion leaders in information diffusion for online discussion network. J. Ambient. Intell. Humaniz. Comput. 2020, 1–13.
10. Ahmad, I.; Ullah, I.; Khan, W.U.; Rehman, A.U.; Adrees, M.S.; Saleem, M.Q.; Cheikhrouhou, O.; Hamam, H.; Shafiq, M. Efficient Algorithms for E-Healthcare to Solve Multiobject Fuse Detection Problem. J. Healthc. Eng. 2021, 2021, 9500304.
11. Tufail, A.B.; Ma, Y.; Zhang, Q. Binary classification of Alzheimer’s disease using sMRI imaging modality and deep learning. J. Digit. Imaging 2020, 33, 1073–1090.
12. Tufail, A.B.; Ma, Y.; Kaabar, M.K.A.; Rehman, A.U.; Khan, R.; Cheikhrouhou, O. Classification of Initial Stages of Alzheimer’s Disease through Pet Neuroimaging Modality and Deep Learning: Quantifying the Impact of Image Filtering Approaches. Mathematics 2021, 9, 3101.
13. Tufail, A.B.; Ullah, K.; Khan, R.A.; Shakir, M.; Khan, M.A.; Ullah, I.; Ma, Y.; Ali, S. On Improved 3D-CNN-Based Binary and Multiclass Classification of Alzheimer’s Disease Using Neuroimaging Modalities and Data Augmentation Methods. J. Healthc. Eng. 2022, 2022, 1302170.
14. Tufail, A.B.; Ma, Y.; Kaabar, M.K.A.; Martínez, F.; Junejo, A.R.; Ullah, I.; Khan, R. Deep learning in cancer diagnosis and prognosis prediction: A minireview on challenges, recent trends, and future directions. Comput. Math. Methods Med. 2021, 2021, 9025470.
15. Tufail, A.B.; Ma, Y.; Zhang, Q.; Khan, A.; Zhao, L.; Yang, Q.; Adeel, M.; Khan, R.; Ullah, I. 3D convolutional neural networks-based multiclass classification of Alzheimer’s and Parkinson’s diseases using PET and SPECT neuroimaging modalities. Brain Inform. 2021, 8, 1–9.
16. Tufail, A.B.; Ma, Y.; Zhang, Q.; Zhao, L. Joint Multiclass Classification of the subjects of Alzheimer’s and Parkinson’s Diseases through Neuroimaging Modalities and Convolutional Neural Networks. In Proceedings of the 2020 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), Seoul, Korea, 16–19 December 2020; pp. 2840–2846.
17. Khan, R.; Yang, Q.; Ullah, I.; Rehman, A.U.; Tufail, A.B.; Noor, A.; Rehman, A.; Cengiz, K. 3D convolutional neural networks based automatic modulation classification in the presence of channel noise. IET Commun. 2022, 16, 497–509.
18. Khan, R.; Yang, Q.; Tufail, A.B.; Ma, Y.; Noor, A. Binary Classification of Modulation Formats in the Presence of Noise through Convolutional Neural Networks. In Proceedings of the 2020 15th IEEE International Conference on Signal Processing (ICSP), Beijing, China, 6–9 December 2020; pp. 386–390.
19. Tufail, A.B.; Ullah, I.; Khan, W.U.; Asif, M.; Ahmad, I.; Ma, Y.; Khan, R.; Ali, S. Diagnosis of Diabetic Retinopathy through Retinal Fundus Images and 3D Convolutional Neural Networks with Limited Number of Samples. Wirel. Commun. Mob. Comput. 2021, 2021, 6013448.
20. Tufail, A.B.; Rizvi, S.A.R.; Siddiqui, A.M.; Younis, M.S.; Abidi, A. Independent component analysis based assessment of linked gray and white matter in the initial stages of Alzheimer’s disease using structural MRI phase images. In Proceedings of the 2013 International Computer Science and Engineering Conference (ICSEC), Bangkok, Thailand, 4–6 September 2013; pp. 334–338.
21. Tufail, A.B.; Ullah, I.; Khan, R.; Ali, L.; Yousaf, A.; Rehman, A.U.; Alhakami, W.; Hamam, H.; Cheikhrouhou, O.; Ma, Y. Recognition of Ziziphus lotus through Aerial Imaging and Deep Transfer Learning Approach. Mob. Inf. Syst. 2021, 2021, 4310321.
22. Vieira, S.; Pinaya, W.H.; Mechelli, A. Using deep learning to investigate the neuroimaging correlates of psychiatric and neurological disorders: Methods and applications. Neurosci. Biobehav. Rev. 2017, 74, 58–75.
23. Zeiler, M.D.; Fergus, R. Visualizing and Understanding Convolutional Networks. arXiv 2013, arXiv:1311.2901.
24. Ceylan, G.; Öztürk, G. Revised polyhedral conic functions algorithm for supervised classification. Turk. J. Electr. Eng. Comput. Sci. 2020, 28, 2735–2749.
25. Rehman, A.U.; Aimin, J.; Rehman, A.; Paul, A. Weighted Based Trustworthiness Ranking in Social Internet of Things by using Soft Set Theory. In Proceedings of the 2019 IEEE 5th International Conference on Computer and Communications (ICCC), Chengdu, China, 6–9 December 2019; pp. 1644–1648.
26. Chen, Y.; Xia, Y. Iterative sparse and deep learning for accurate diagnosis of Alzheimer’s disease. Pattern Recognit. 2021, 116, 107944.
27. Venugopalan, J.; Tong, L.; Hassanzadeh, H.R.; Wang, M.D. Multimodal deep learning models for early detection of Alzheimer’s disease stage. Sci. Rep. 2021, 11, 3254.
28. Mahendran, N.; Vincent, D.R. A deep learning framework with an embedded-based feature selection approach for the early detection of the Alzheimer’s disease. Comput. Biol. Med. 2022, 141, 105056.
29. Gharaibeh, M.; Almahmoud, M.; Ali, M.Z.; Al-Badarneh, A.; El-Heis, M.; Abualigah, L.; Altalhi, M.; Alaiad, A.; Gandomi, A.H. Early Diagnosis of Alzheimer’s Disease Using Cerebral Catheter Angiogram Neuroimaging: A Novel Model Based on Deep Learning Approaches. Big Data Cogn. Comput. 2022, 6, 2.
30. Xu, A.; Kouznetsova, V.L.; Tsigelny, I.F. Alzheimer’s Disease Diagnostics Using miRNA Biomarkers and Machine Learning. J. Alzheimer’s Dis. 2022, 86, 841–859.
31. Hu, J.; Qing, Z.; Liu, R.; Zhang, X.; Lv, P.; Wang, M.; Wang, Y.; He, K.; Gao, Y.; Zhang, B. Deep Learning-Based Classification and Voxel-Based Visualization of Frontotemporal Dementia and Alzheimer’s Disease. Front. Neurosci. 2021, 14, 626154.
32. Kwak, K.; Niethammer, M.; Giovanello, K.S.; Styner, M.; Dayan, E. Differential Role for Hippocampal Subfields in Alzheimer’s Disease Progression Revealed with Deep Learning. Cereb. Cortex 2022, 32, 467–478.
33. Tian, J.; Smith, G.; Guo, H.; Liu, B.; Pan, Z.; Wang, Z.; Xiong, S.; Fang, R. Modular machine learning for Alzheimer’s disease classification from retinal vasculature. Sci. Rep. 2021, 11, 238.
34. Zhang, X.; Han, L.; Zhu, W.; Sun, L.; Zhang, D. An Explainable 3D Residual Self-Attention Deep Neural Network For Joint Atrophy Localization and Alzheimer’s Disease Diagnosis using Structural MRI. arXiv 2022, arXiv:2008.04024.
35. Pang, Z.; Wang, X.; Wang, X.; Qi, J.; Zhao, Z.; Gao, Y.; Yang, Y.; Yang, P. A Multi-modal Data Platform for Diagnosis and Prediction of Alzheimer’s Disease Using Machine Learning Methods. Mobile Netw. Appl. 2021, 26, 2341–2352.
36. Hazarika, R.A.; Kandar, D.; Maji, A.K. An experimental analysis of different Deep Learning based Models for Alzheimer’s Disease classification using Brain Magnetic Resonance Images. J. King Saud Univ.-Comput. Inf. Sci. 2021; in press.
37. Liu, J.; Li, M.; Luo, Y.; Yang, S.; Li, W.; Bi, Y. Alzheimer’s disease detection using depthwise separable convolutional neural networks. Comput. Methods Programs Biomed. 2021, 203, 106032.
38. Basher, H.A.; Kim, B.C.; Lee, K.H.; Jung, H.Y. Volumetric Feature-Based Alzheimer’s Disease Diagnosis From sMRI Data Using a Convolutional Neural Network and a Deep Neural Network. IEEE Access 2021, 9, 29870–29882.
39. Ghoraani, B.; Boettcher, L.N.; Hssayeni, M.D.; Rosenfeld, A.; Tolea, M.I.; Galvin, J.E. Detection of mild cognitive impairment and Alzheimer’s disease using dual-task gait assessments and machine learning. Biomed. Signal Process. Control 2021, 64, 102249.
40. Murugan, S.; Venkatesan, C.; Sumithra, M.G.; Gao, X.Z.; Elakkiya, B.; Akila, M.; Manoharan, S. DEMNET: A Deep Learning Model for Early Diagnosis of Alzheimer Diseases and Dementia From MR Images. IEEE Access 2021, 9, 90319–90329.
41. AbdulAzeem, Y.; Bahgat, W.M.; Badawy, M. A CNN based framework for classification of Alzheimer’s disease. Neural Comput. Appl. 2021, 33, 10415–10428.
42. Zhou, J.; Hu, L.; Jiang, Y.; Liu, L. A Correlation Analysis between SNPs and ROIs of Alzheimer’s Disease Based on Deep Learning. Biomed. Res. Int. 2021, 2021, 8890513.
43. Savaş, S. Detecting the Stages of Alzheimer’s Disease with Pre-trained Deep Learning Architectures. Arab. J. Sci. Eng. 2022, 47, 2201–2218.
44. Koga, S.; Ikeda, A.; Dickson, D.W. Deep learning-based model for diagnosing Alzheimer’s disease and tauopathies. Neuropathol. Appl. Neurobiol. 2021, 48, e12759.
45. Odusami, M.; Maskeliūnas, R.; Damaševičius, R.; Krilavičius, T. Analysis of Features of Alzheimer’s Disease: Detection of Early Stage from Functional Brain Changes in Magnetic Resonance Images Using a Finetuned ResNet18 Network. Diagnostics 2021, 11, 1071.
46. Salunkhe, S.; Bachute, M.; Gite, S.; Vyas, N.; Khanna, S.; Modi, K.; Katpatal, C.; Kotecha, K. Classification of Alzheimer’s Disease Patients Using Texture Analysis and Machine Learning. Appl. Syst. Innov. 2021, 4, 49.
47. Toğaçar, M.; Cömert, Z.; Ergen, B. Enhancing of dataset using DeepDream, fuzzy color image enhancement and hypercolumn techniques to detection of the Alzheimer’s disease stages by deep learning model. Neural Comput. Appl. 2021, 33, 9877–9889.
48. Lei, B.; Liang, E.; Yang, M.; Yang, P.; Zhou, F.; Tan, E.; Lei, Y.; Liu, C.; Wang, T.; Xiao, X.; et al. Predicting clinical scores for Alzheimer’s disease based on joint and deep learning. Expert Syst. Appl. 2022, 187, 115966.
49. Zhang, J.; Zheng, B.; Gao, A.; Feng, X.; Liang, D.; Long, X. A 3D densely connected convolution neural network with connection-wise attention mechanism for Alzheimer’s disease classification. Magn. Reson. Imaging 2021, 78, 119–126.
50. Janghel, R.R.; Rathore, Y.K. Deep Convolution Neural Network Based System for Early Diagnosis of Alzheimer’s Disease. IRBM 2021, 42, 258–267.
51. Azulay, A.; Weiss, Y. Why do deep convolutional networks generalize so poorly to small image transformations? J. Mach. Learn. Res. 2019, 20, 1–25.
52. Weiner, M.W.; Aisen, P.S.; Jack, C.R., Jr.; Jagust, W.J.; Trojanowski, J.Q.; Shaw, L.; Saykin, A.J.; Morris, J.C.; Cairns, N.; Beckett, L.A.; et al. The Alzheimer’s disease neuroimaging initiative: Progress report and future plans. Alzheimers Dement. 2010, 6, 202–211.e7.
53. Chollet, F. Xception: Deep Learning with Depthwise Separable Convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 1800–1807.
54. Haghighi, S.; Jasemi, M.; Hessabi, S.; Zolanvari, A. PyCM: Multiclass confusion matrix library in Python. J. Open Source Softw. 2018, 3, 729.
55. Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. arXiv 2014, arXiv:1412.6980.
56. Wen, J.; Thibeau-Sutre, E.; Diaz-Melo, M.; Samper-González, J.; Routier, A.; Bottani, S.; Dormont, D.; Durrleman, S.; Burgos, N.; Colliot, O. Convolutional neural networks for classification of Alzheimer’s disease: Overview and reproducible evaluation. Med. Image Anal. 2020, 63, 101694.
57. Samper-González, J.; Burgos, N.; Bottani, S.; Fontanella, S.; Lu, P.; Marcoux, A.; Routier, A.; Guillon, J.; Bacci, M.; Wen, J.; et al. Reproducible evaluation of classification methods in Alzheimer’s disease: Framework and application to MRI and PET data. NeuroImage 2018, 183, 504–521.
58. Bäckström, K.; Nazari, M.; Gu, I.Y.; Jakola, A.S. An efficient 3D deep convolutional network for Alzheimer’s disease diagnosis using MR images. In Proceedings of the IEEE International Symposium on Biomedical Imaging (ISBI), Washington, DC, USA, 4–7 April 2018; pp. 149–153.
59. Aderghal, K.; Afdel, K.; Benois-Pineau, J.; Catheline, G. Improving Alzheimer’s stage categorization with Convolutional Neural Network using transfer learning and different magnetic resonance imaging modalities. Heliyon 2020, 6, e05652.
60. Oh, K.; Chung, Y.C.; Kim, K.W.; Kim, W.S.; Oh, I.S. Author correction: Classification and visualization of Alzheimer’s disease using volumetric convolutional neural network and transfer learning. Sci. Rep. 2020, 10, 5663.
61. Yagis, E.; Citi, L.; Diciotti, S.; Marzi, C.; Atnafu, S.W.; De Herrera, A.G.S. 3D Convolutional Neural Networks for Diagnosis of Alzheimer’s Disease via Structural MRI. In Proceedings of the 2020 IEEE 33rd International Symposium on Computer-Based Medical Systems, CBMS, Rochester, MN, USA, 28–30 July 2020.
62. Ieracitano, C.; Mammone, N.; Hussain, A.; Morabito, F.C. A Convolutional Neural Network based self-learning approach for classifying neurodegenerative states from EEG signals in dementia. In Proceedings of the 2020 International Joint Conference on Neural Networks, IJCNN, Glasgow, UK, 19–24 July 2020.
63. Aderghal, K.; Boissenin, M.; Benois-Pineau, J.; Catheline, G.; Afdel, K. Classification of sMRI for AD Diagnosis with Convolutional Neuronal Networks: A Pilot 2-D+ε Study on ADNI. In Proceedings of the International Conference on Multimedia Modeling, MMM, Reykjavik, Iceland, 4–6 January 2017.
64. Aderghal, K.; Benois-Pineau, J.; Afdel, K. FuseMe: Classification of sMRI images by fusion of Deep CNNs in 2D+ε projections. In Proceedings of the International Workshop on Content-Based Multimedia Indexing, CBMI, Florence, Italy, 19–21 June 2017.
65. Razavi, F.; Tarokh, M.J.; Alborzi, M. An intelligent Alzheimer’s disease diagnosis method using unsupervised feature learning. J. Big Data 2019, 6, 32.
66. Ahmed, O.B.; Mizotin, M.; Benois-Pineau, J.; Allard, M.; Catheline, G.; Amar, C.B.; Alzheimer’s Disease Neuroimaging Initiative. Alzheimer’s disease diagnosis on structural MR images using circular harmonic functions descriptors on hippocampus and posterior cingulate cortex. Comput. Med. Imaging Graph. 2015, 44, 13–25.
67. Khagi, B.; Kwon, G.R. 3D CNN Design for the Classification of Alzheimer’s Disease Using Brain MRI and PET. IEEE Access 2020, 8, 217830–217847.

| Research Group | Number of Subjects | Age | Weight | FAQ Total Score | NPI-Q Total Score |
|---|---|---|---|---|---|
| NC | 102 | 76.01 (62.2–86.6) | 75.7 (49–130.3) | 0.186 (0–6) | 0.402 (0–5) |
| MCI | 97 | 74.54 (55.3–87.2) | 77.13 (45.1–120.2) | 3.16 (0–15) | 1.97 (0–17) |
| AD | 94 | 75.82 (55.3–88) | 74.12 (42.6–127.5) | 13.67 (0–27) | 4.07 (0–15) |

| Domain | RCI (overall) | Class | CEN | IBA | GM | MCC |
|---|---|---|---|---|---|---|
| 3D | 0.2054 | AD | 0.5088 | 0.5660 | 0.7928 | 0.5784 |
| 3D | 0.2054 | MCI | 0.8038 | 0.1091 | 0.4914 | 0.1462 |
| 3D | 0.2054 | NC | 0.5346 | 0.5745 | 0.7406 | 0.4614 |
| 2D | 0.03 | AD | 0.74 | 0.203 | 0.574 | 0.22 |
| 2D | 0.03 | MCI | 0.77 | 0.28 | 0.51 | 0.029 |
| 2D | 0.03 | NC | 0.76 | 0.1 | 0.48 | 0.125 |
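The per-class GM, IBA and MCC values above are one-vs-rest quantities derived from a multiclass confusion matrix. The following is a minimal sketch of that derivation, not the authors' evaluation code. The 3×3 matrix is a hypothetical toy example (it is not the study's confusion matrix), the function name `one_vs_rest_metrics` is illustrative, and the IBA weighting `alpha = 1` is an assumption. RCI and CEN are not covered here.

```python
import math

def one_vs_rest_metrics(matrix, labels, alpha=1.0):
    """Per-class GM, IBA and MCC from a square confusion matrix.

    matrix[i][j] = number of samples of true class i predicted as class j.
    alpha is the IBA weighting factor (assumed to be 1 here).
    """
    total = sum(sum(row) for row in matrix)
    results = {}
    for i, label in enumerate(labels):
        tp = matrix[i][i]
        fn = sum(matrix[i]) - tp                 # true class i, predicted otherwise
        fp = sum(row[i] for row in matrix) - tp  # other classes predicted as i
        tn = total - tp - fn - fp
        tpr = tp / (tp + fn)                     # sensitivity
        tnr = tn / (tn + fp)                     # specificity
        denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
        results[label] = {
            "GM": math.sqrt(tpr * tnr),
            "IBA": (1 + alpha * (tpr - tnr)) * tpr * tnr,
            "MCC": (tp * tn - fp * fn) / denom if denom else 0.0,
        }
    return results

# Hypothetical toy confusion matrix (rows: true AD, MCI, NC; columns: predicted).
toy_matrix = [
    [8, 1, 1],
    [2, 5, 3],
    [1, 2, 7],
]
toy_metrics = one_vs_rest_metrics(toy_matrix, ["AD", "MCI", "NC"])
for label, values in toy_metrics.items():
    print(label, {name: round(v, 4) for name, v in values.items()})
```

Treating each class in turn as "positive" and the other two as "negative" is what lets binary measures such as MCC be reported per class in a three-class problem.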

| Domain | SEN | SPEC | F1-score | Precision | Balanced Accuracy |
|---|---|---|---|---|---|
| 3D | 0.7021 | 0.7320 | 0.7097 | 0.7174 | 0.7170 |
| 2D | 0.5395 | 0.5976 | 0.5520 | 0.5651 | 0.5686 |

| Domain | SEN | SPEC | F1-score | Precision | Balanced Accuracy |
|---|---|---|---|---|---|
| 3D | 0.8723 | 0.9118 | 0.8865 | 0.9011 | 0.8921 |
| 2D | 0.4288 | 0.6782 | 0.4823 | 0.5511 | 0.5535 |
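As a worked illustration of how the five binary metrics relate, the sketch below computes them from confusion-matrix counts. The counts are an assumption: they are not reported here, but with the 94 AD and 102 NC subjects from the dataset table and AD taken as the positive class, they happen to reproduce the 3D row above to four decimals. The function name `binary_metrics` is illustrative.

```python
def binary_metrics(tp, fn, fp, tn):
    """Sensitivity, specificity, precision, F1 and balanced accuracy from counts."""
    sen = tp / (tp + fn)            # true positive rate
    spec = tn / (tn + fp)           # true negative rate
    precision = tp / (tp + fp)
    f1 = 2 * precision * sen / (precision + sen)
    balanced_accuracy = (sen + spec) / 2
    return {
        "SEN": sen,
        "SPEC": spec,
        "F1-score": f1,
        "Precision": precision,
        "Balanced Accuracy": balanced_accuracy,
    }

# Assumed counts (not from the source): 94 AD subjects as positives, 102 NC as negatives.
ad_nc_3d = binary_metrics(tp=82, fn=12, fp=9, tn=93)
for name, value in ad_nc_3d.items():
    print(f"{name} = {value:.4f}")
```

Balanced accuracy averages sensitivity and specificity, so it is less affected by the small difference in group sizes than plain accuracy would be.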

| Domain | SEN | SPEC | F1-score | Precision | Balanced Accuracy |
|---|---|---|---|---|---|
| 3D | 0.5979 | 0.6471 | 0.6073 | 0.6170 | 0.6225 |
| 2D | 0.4729 | 0.5358 | 0.4823 | 0.4921 | 0.5043 |
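The values in the three binary tables can be cross-checked against two identities: the F1-score is the harmonic mean of precision and sensitivity, and balanced accuracy is the mean of sensitivity and specificity. The sketch below runs that check on the reported numbers. The task labels are an inference: the tables carry no captions here, so each one is matched to a task through its 3D balanced accuracy, which agrees with the proposed-approach rows of the comparison table (71.70%, 89.21%, 62.25%). The tolerance of 1e-3 is an assumed allowance for rounding.

```python
# Reported values copied from the three binary tables:
# (SEN, SPEC, F1-score, Precision, Balanced Accuracy).
# Task labels are inferred, not stated next to the tables.
REPORTED = {
    ("AD/MCI", "3D"): (0.7021, 0.7320, 0.7097, 0.7174, 0.7170),
    ("AD/MCI", "2D"): (0.5395, 0.5976, 0.5520, 0.5651, 0.5686),
    ("AD/NC", "3D"): (0.8723, 0.9118, 0.8865, 0.9011, 0.8921),
    ("AD/NC", "2D"): (0.4288, 0.6782, 0.4823, 0.5511, 0.5535),
    ("NC/MCI", "3D"): (0.5979, 0.6471, 0.6073, 0.6170, 0.6225),
    ("NC/MCI", "2D"): (0.4729, 0.5358, 0.4823, 0.4921, 0.5043),
}

TOLERANCE = 1e-3  # assumed allowance for rounding in the reported figures

def deviations(sen, spec, f1, precision, bal_acc):
    """Absolute gaps between reported F1 / balanced accuracy and their recomputed values."""
    f1_expected = 2 * precision * sen / (precision + sen)
    bal_expected = (sen + spec) / 2
    return abs(f1 - f1_expected), abs(bal_acc - bal_expected)

report = {key: deviations(*values) for key, values in REPORTED.items()}
for (task, domain), (f1_dev, bal_dev) in report.items():
    status = "consistent" if max(f1_dev, bal_dev) < TOLERANCE else "check"
    print(f"{task} {domain}: F1 gap={f1_dev:.5f}, BA gap={bal_dev:.5f} -> {status}")
```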

| Authors | Data | Method(s) | Accuracy | Classification Task |
|---|---|---|---|---|
| Oh et al. [60] | MRI | Inception auto-encoder based CNN architecture | 84.5% | AD/NC |
| Ekin Yagis et al. [61] | MRI | 3D-CNN architectures | 73.4% | AD/NC |
| Cosimo Ieracitano et al. [62] | MRI | Electroencephalographic signals | 85.78% | AD/NC |
| Proposed approach | PET | 3D-CNN whole brain | 89.21% | AD/NC |
| Karim Aderghal et al. [63] | MRI | 2D CNNs hippocampal region | 66.5% | AD/MCI |
| Karim Aderghal et al. [64] | MRI | 2D CNNs coronal, sagittal and axial projections | 63.28% | AD/MCI |
| Firouzeh Razavi et al. [65] | MRI + PET + CSF | Scattered filtering and softmax regression | 71.2% | AD/MCI |
| Proposed approach | PET | 3D-CNN whole brain | 71.70% | AD/MCI |
| Olfa Ben Ahmed et al. [66] | MRI | Circular Harmonic Functions | 69.45% | NC/MCI |
| Proposed approach | PET | 3D-CNN whole brain | 62.25% | NC/MCI |
| Bijen Khagi et al. [67] | PET, MRI | DL architecture employing 3D-CNN layers | 50.21% | AD/NC/MCI Multiclass |
| Proposed approach | PET | 3D-CNN whole brain | 59.73% | AD/NC/MCI Multiclass |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Tufail, A.B.; Anwar, N.; Othman, M.T.B.; Ullah, I.; Khan, R.A.; Ma, Y.-K.; Adhikari, D.; Rehman, A.U.; Shafiq, M.; Hamam, H. Early-Stage Alzheimer’s Disease Categorization Using PET Neuroimaging Modality and Convolutional Neural Networks in the 2D and 3D Domains. Sensors 2022, 22, 4609. https://doi.org/10.3390/s22124609
