Abstract

This paper proposes a learnable line encoding technique for bounding boxes commonly used in the object detection task. A bounding box is simply encoded using two main points: the top-left corner and the bottom-right corner of the bounding box; then, a lightweight convolutional neural network (CNN) is employed to learn the lines and propose high-resolution line masks for each category of classes using a pixel-shuffle operation. Post-processing is applied to the predicted line masks to filtrate them and estimate clear lines based on a progressive probabilistic Hough transform. The proposed method was trained and evaluated on two common object detection benchmarks: Pascal VOC2007 and MS-COCO2017. The proposed model attains high mean average precision (mAP) values (78.8% for VOC2007 and 48.1% for COCO2017) while processing each frame in a few milliseconds (37 ms for PASCAL VOC and 47 ms for COCO). The strength of the proposed method lies in its simplicity and ease of implementation unlike the recent state-of-the-art methods in object detection, which include complex processing pipelines.

1. Introduction

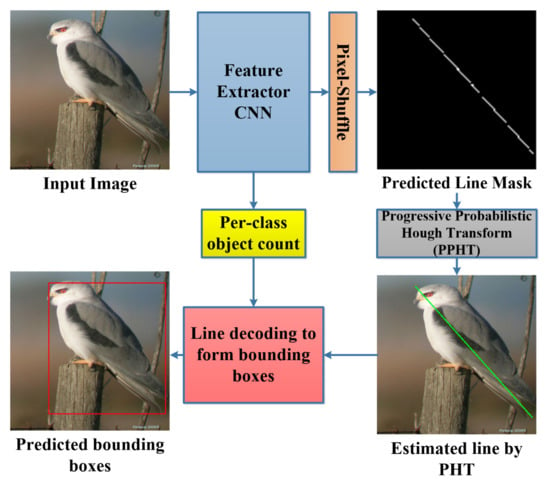

Object detection tasks are one of the most important tasks in computer vision as it is mainly included in understanding and analyzing a scene in images, and this task becomes more efficient when it is performed in real time in order to be useful in live-video processing. The object detection is usually performed using bounding box regression by predicting the x and y values of the top-left corner in addition to the width and the height of the box. The recent object detection methods are mainly classified into two main categories: two-stage methods and single-stage methods. The two-stage method is usually complex as it consists of a stage for object proposals and another stage for object classification and bounding box regression; this concept is applied in many recent methods such as RCNN [], Fast RCNN [], Faster RCNN [], and Mask RCNN []. The two-stage methods attain high mean average precision (mAP); however, they are extremely slow (0.2–10 frames per second (FPS)) as such pipelines are computationally expensive and include complex processing techniques. On the other hand, the single-stage object detection methods employ fully convolutional neural network architectures and perform the object detection task at a high speed (20–140 FPS) such as Yolo V1 [], V2 [], V3 [], V4 [], Single Shot Detection (SSD) [], and RetinaNet []; however, the mAP values for such methods are lower than those for the two-stage methods as they mainly depend on small-scale grids that introduce accuracy loss in learning the bounding box coordinates. A good object detection method should have a trade-off between high accuracy and high processing speed, which is the goal of this paper—achieving a relatively high speed and accuracy. An overview of the proposed method is shown in Figure 1.

Figure 1.

Overview of the proposed object detection method using line-encoded bounding boxes.

We propose a fast object detection method by training a CNN model to predict line-encoded bounding box masks for each class object. The CNN predicts high-resolution line masks using a lightweight pixel-shuffle operation [] inspired by a technique employed in the image super-resolution task. An important post-processing stage is employed to filter out the predicted lines and to estimate fine bounding boxes out of the lines by exploiting the progressive probabilistic Hough transform (PPHT) [] to find clear lines based on a proposed iterative technique under constraints. The contribution of the work presented is as follows:

- We propose new bounding box encoding and learning techniques. The bounding box encoding technique is based on encoding the top-left and bottom-right corners of the bounding box in a single line learnable by segmentation map prediction.

- We propose a robust post-processing technique to solve the problem of multiple detections of the same object and the problem of many detections of a deformed line of a single object.

- The proposed method can successfully achieve a good trade-off between speed and accuracy. It realizes real-time processing (27fps) while keeping a high mAP in object detection.

The rest of the paper is organized as follows: Related work, which details the recent methods in object detection; the Proposed Method, which contains the details of our implementation; Benchmarks For Training and Validation, which contains the dataset employed to train and test our proposed method; Evaluation Metrics of Object Detection and Ablation Study, which contains two main studies on the scale of the line mask and the up-sampling techniques employed in our method; Complexity Analysis of the Proposed Model, Experimental Results, Limitation, and Future Work, and finally the conclusion of the paper.

2. Related Work

The recent deep-learning-based object detection methods have shown a superior ability of the CNN models to learn and perform object detection accurately and rapidly. As we previously mentioned in Section 1, there are two main CNN-based methods for object detection: two-stage methods and single-stage methods. Sermanet et al. [] proposed Overfeat, which is one of the early deep-learning-based two-stage object detection methods in which they trained a CNN image classifier (AlexNet []) and then applied the trained classifier on every batch of the image using a sliding window with different window scales; however, this method was very slow due to the high number of computations required for classification of each image patch. The authors of [] proposed RCNN, which is a two-stage CNN model for object detection and employed a selective search method [] to propose a limited number of regions (typically 2000 regions) for classification by a CNN image classifier (VGG16 []) instead of the classification of the whole image with different scale windows; this method still provides an extremely low frame rate (0.2 FPS). Later in 2015, Girshick et al. [] proposed Fast R-CNN in which the author reduced the complexity of RCNN by feeding the image to a CNN (VGG16) then applying the selective search method on the feature maps obtained from the CNN instead of applying it on the whole image; the author also proposed ROI pooling to reshape all the proposed features into squares and then feeding them to a class classification + bounding box-regression CNN. Fast R-CNN attained a relatively low speed of 2 FPS, although much better (10× faster) than RCNN. Ren et al. [] proposed Faster R-CNN in which they solved the drawbacks in both R-CNN and Fast R-CNN by eliminating the need for the computationally expensive selective search method; they feed the input image to a CNN (VGG16) to propose a few regions (typically 300 regions) for classification and then another CNN (VGG16 or ResNet []) is used to classify the regions and regress the bounding boxes. Faster R-CNN attained a high mAP at a speed of 10 FPS, which is still relatively low.

The recent single-stage object detection methods showed an average accuracy but attained a high speed in frame processing. The first single-stage object detection method was proposed by [] under the name “You Only Look Once” or YOLO; they proposed a grid-based detection method using a convolutional architecture (specifically Darknet) in which each cell in the grid predicts the class category in that cell in addition to x, y, w, and h coordinates of the bounding box where x and y are the coordinates of the top-left corner of the cell and w and h are the widths and the height of the bounding box of the object exist in that cell; however, although YOLO is fast enough for real-time processing (it can work with a speed of 45 FPS), it has a major problem, which is the failure to detect small objects, as the grid was too small (). YOLOV2 or YOLO9000 [] was proposed by the first and the last authors of YOLO to improve the speed and the accuracy of YOLO; they added patch normalization layers after the convolutional layers in the YOLO architecture, which improved the mAP by 2%; they also used bigger image size, typically , instead of the small image size () used in the initial YOLO version; this modification also increased the mAP by 4%. They also reduced the original Darknet architecture from 26 layers to 19 layers (Darknet-19) to speed up the process (they achieved a frame rate of 67 FPS at image size). They also proposed anchor boxes to limit the shapes of the predicted bounding boxes to specific object-based shapes instead of the arbitrary boxes predicted by YOLO. YOLOV3 is proposed by [] of YOLOV2 to improve the detection of small objects; the authors employed Darknet-54, which is a deeper CNN than YOLOV1 and V2 and also employed multiple-scale detection using an architecture similar to the feature pyramid network (FPN) []. The detection in YOLOV3 is achieved at three different scales (small, medium, and large) and a non-maximum suppression is applied to obtain the detections with the highest scores. YOLOV3 attained a higher mAP than YOLOV1 and V2 but the frame rate was reduced to 35 FPS at image size. YOLOV4 was proposed by [], where they improved the mAP by 10% over YOLOV3 by presenting a new backbone (CSPDarknet53) employing cross-spatial partial connections. They proposed three main parts: backbone, neck (path aggregation networks [] with spatial pyramid pooling []), and head (dense prediction block); YOLOV4 attains a speed of 62 FPS at the best mAP value with an image size of . Duan et al. [] proposed Center-Net, a keypoint-based method to detect the objects using three points (top-left, center, and bottom-right points) and achieved high accuracy in detection. Tan et al. [] proposed EfficientDet, which is a fast and accurate object detection method based on the successful architectures of EfficientNet [] originally proposed for classification. The author also proposed the bi-directional feature pyramid network (BiFPN), which allows the feature fusion of multiscale features.

In the proposed method we employ a CNN backbone (specifically Xception []) to extract the image features, then the obtained low scale features are upscaled using the pixel-shuffle algorithm inspired by the efficient sub-pixel CNN [] originally presented for the real-time image super-resolution task. This algorithm can up-scale many low-resolution images of shape (where is the scaling factor) into a high-resolution image of shape () through pixel shuffling from the depth channel. This algorithm is fast and efficient in the construction of higher resolution images and especially segmentation masks as explored in detail in our previous research [,]. The progressive probabilistic Hough transform (PPHT) [] is a popular method for straight line detection from a small set of edge points instead of all edge points used in the standard Hough transform (SHT) [], thus PPHT is much faster than HT. As PPHT is an iterative method, a random edge point is selected for each iteration for voting, then the condition of the line is tested. If a specific line has a large number of votes from the randomly selected points, the stopping rule is satisfied and the line is approved as a detection. PPHT can be tuned using the algorithm parameters to control the estimated line/lines, such as controlling whether to combine multiple sparse points or not based on their alignment. The line estimation using PHT is efficient and rapid enough to be performed as a post-processing step to the detected lines in our proposed method, which targets the real-time object detection task.

3. Proposed Method

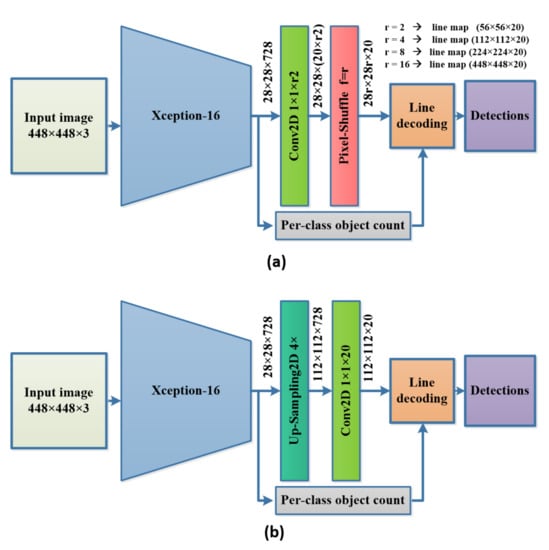

The proposed method consists of three main parts. Firstly, the backbone used for feature extraction (Xception-16) is a modified version of Xception with two output branches. Secondly, we use the pixel-shuffle operation, which is used to upscale the final features based on the depth channel. Finally, the post-processing stage combines the probabilistic Hough transform and the per-class object count to decode the line and obtain the bounding boxes.

3.1. Xception-16 Architecture

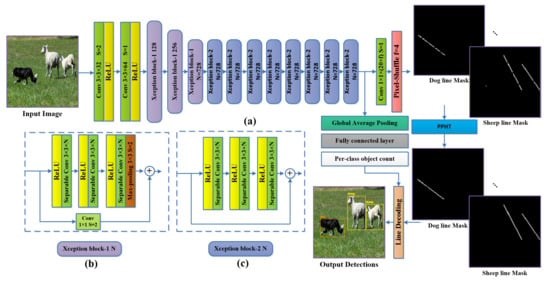

Xception [] is an efficient feature extractor network presented initially for ImageNet ILSVRC [] image classification and attained a top-5 accuracy of 0.945, which is relatively high compared to the current state-of-the-art (SOTA) methods. Chollet et al. [] proposed the depth-wise separable convolution (DW-Conv) as the building block of Xception architecture. DW-Conv consists of two convolution operations. Firstly, the depth-wise convolution performs convolution on each channel separately. Secondly, the point-wise convolution applies a convolution on the input. DW-Conv is much faster than the standard convolution as it learns fewer parameters so it is key to the fast processing in our proposed method. Xception also proved to be a good feature extractor in recent research for multiple computer vision tasks, as it proved to be light enough for real-time applications because of the relatively low FLOPs count and a number of other parameters [,]; it also proved to be compatible with the pixel-shuffle [] operation (also employed in our proposed method and is introduced in Section 3.2) as Xception with the pixel-shuffle showed high accuracy in performing the semantic segmentation task in DTS-Net []. As our method performs the semantic segmentation as a secondary task to predict the encoded line, we adopted a modified version of Xception for its robustness and high accuracy. We propose Xception-16, which cuts the original Xception architecture at the layer `block13_sepconv2_act’, which is equivalent to the input image scale divided by 16 (i.e., input image of size produces features of scale using the proposed Xception-16), then we add two branches, the first with a convolution2D layer followed by the pixel-shuffle operation to construct the line mask in the required scale and the second branch with global average pooling (GAP) followed by a fully connected layer (FC) to predict the per-class object count. The name Xception-16 comes from the final feature scale or the down-scaling obtained from the network, which is of the input image size. The Xception-16 architecture is shown in Figure 2a.

Figure 2.

The proposed method. (a) The Xception-16 architecture with two branches: one for predicting the line mask for each object and the other branch for per-class object count by regression, which is employed in the post-processing stage of the line decoding. (b) Xception block-1 is the Xception block that consists of three sequential RELU+ separable convolution2D and a 3 × 3 max-pooling with stride (s) of 2, a skip connection with convolution2D and stride of 2. (c) Xception block-2 is the Xception block that consists of three sequential RELU+ separable convolution2D.

3.2. Pixel-Shuffle as a Feature Map Upscaling Algorithm

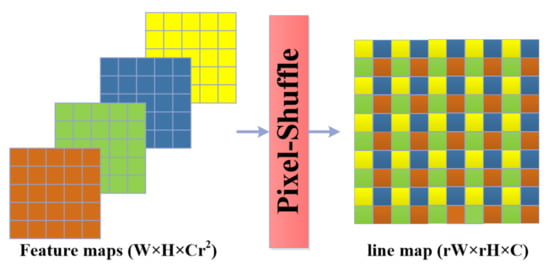

The pixel-shuffle algorithm is proposed by [] for real-time image super resolution, as the algorithm is fast and efficient in constructing large-scale images from many small-scale images through pixel-reordering from each small-scale image to form super pixels in the large-scale images, as shown in Figure 3.

Figure 3.

Pixel-shuffle: the pixels are mapped from the small-scale feature maps of size () to form super pixels in the large-scale line map of the size ().

The pixel-shuffle algorithm can upscale small-scale images of shape into a large image of scale through a rearranging operation to map the pixels depending on the location of each pixel according to (1):

where L and S are the large-scale and small-scale images, x and y are the horizontal and the vertical location of a pixel, C is the number of channels, r is the square root of the upscaling factor , and mod() is the modulus operation. In our proposed method, we add a convolutional2D layer after the Xception-16’s last layer, which produces a 728 feature map of 1/16 of the input image size to adjust the depth channels so that after the pixel-shuffle, it produces line maps equivalent to the number of the categories at the required scale. We try different upscaling factors to obtain 1/1, 1/2, 1/4, and 1/8 of the input image size in the experiment section to reveal the effect of the line-map scale on the mean average precision. The objective function used for line segmentation is a pixel-wise multilabel classification to allow the existence of multiple lines in the same location but in different masks; the employed function is binary cross-entropy as shown in Equation (2):

where C is the number of classes, P is the number of pixels in the line mask, y is the ground truth image label, and is the predicted image label.

3.3. Per-Class Object Count Regression

The second output branch from the proposed CNN is used to predict the object count of each class. The object count is used to ensure that the number of the detected objects equals the predicted number of objects per class. In the case of non-matching, a correction technique is followed using pre-defined PPHT parameter cases. The prediction of the per-class object count has to be performed by applying the GAP layer to the output from the Xception-16 backbone to obtain a 1D feature vector, then an FC layer is added to obtain dense predictions of the objects per class. This task is performed through regression with a mean squared error loss as shown in Equation (3):

where y and are the ground truth and the predicted object count, respectively, and N is the number of classes. The overall loss is the sum of the two losses in (2) and (3) with equal weights as shown in (4).

3.4. Bounding Box Encoding and Line Decoding Algorithms

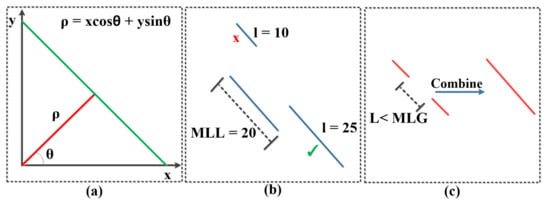

The PPHT [] algorithm contains five main parameters that should be carefully tuned in order to achieve the best line detection results. There are three parameters related to the edge points accumulator or line detector (, , and t where is the resolution of the distance of the accumulator in pixels, is the resolution of the angles of the accumulator in radians, and t is the votes threshold of the accumulator to verify any line detection). Another two parameters, which are the minimum line length (MLL) and the maximum line gap (MLG), are used to define the shortest length of the line to be considered as detection and the maximum gap between any two points to consider them as one line, respectively. A visual illustration of PPHT parameters is shown in Figure 4.

Figure 4.

PPHT algorithm parameters illustration. (a) and of a line in polar coordinates. (b) Minimum line length condition to accept or reject lines. (c) Maximum line gap (MLG) condition to combine two points or line segments.

In the proposed method, the Xception-16 network is trained to predict line masks using a binary segmentation approach. The ground truth lines are generated from the bounding box annotations provided in each dataset used. Each line is produced depending on each object, whereas the line beginning is the top-left corner and the end of the line is the bottom-right corner of the bounding box. Formally, all the bounding boxes are encoded in a negative-slope line format. Since the objects are encoded in a one-pixel-thick line, that means that each line is unique and easy to be separated from other lines of the same class as there is a line mask for each class category and the lines have different slopes according to the alignment of the objects, which are different for the instances of the same class. We apply PPHT to the predicted lines for each class category obtained from the Xception16+Pixel-shuffle, but the PPHT algorithm can produce a different number of lines based on the selection of the parameters. As such, we use the per-class object count prediction to provide a reference of the number of the lines that should be produced from each line mask of the classes. If the algorithm fails to match the exact per-class object count, it performs distance measurements between the produced lines from PPHT in each case and the count vector to predict, as much as possible, lines close to the true number of lines in the count vector. This proposed algorithm for line detection depends mainly on the scale of the line masks; as such, we apply PPHT three times (three parameter sets are empirically selected to detect small, medium, and large objects) on the masks that have line segments; each time we try three pre-selected different resolution, resolution, and threshold (t) but we keep the MLL and MLG at fixed values dependent on the line mask scale. The exact values selected for each scale are described in greater detail in the Experimental Results section. When the number of the detected lines per class (using PPHT with the designed conditions) matches or closely match the number of the objects per class (obtained from the per-class object count predictions of Xception-16), the bounding boxes are formed based on the detected lines by decoding the beginning and the end of the line to obtain the top-left and the bottom-right corners of the bounding boxes. The sequence of the algorithm is stated in Algorithm 1. In Algorithm 1, the function refers to the decoding of the begging and the end of detected lines to the top-left and the bottom-right of the bounding boxes; and are just drawing functions for visualization of the detected lines and bounding boxes.

| Algorithm 1 Line Decoding |

Input: predicted line-masks , number of classes N, Predicted Count vector C Initialize , , , , , , = 0 for to N do for to do if then = PPHT(, = 1, = , , , ) .append() = 100 for to do if abs(—len(lines)) < diff then diff = abs(—len(lines)) < diff lines = lines[0:] end if end for .append(decode_line()) end if end for show_boxes(), show_lines() end for |

4. Benchmarks for Training and Validation

For the proposed method training and validation, we employ three common object detection datasets: PASCAL VOC2007 [], VOC2012 [], and MS-COCO2017 []. PASCAL VOC2007 is a popular dataset for the common objects in the scenes: it consists of 20 classes of objects. The dataset contains 5,011 images for training and 4,952 images for validation. PASCAL VOC2012 has the same classes as PASCAL VOC2007 but with different training and validation images: it consists of 5716 training images and 5823 images for validation. For better model training, we trained the proposed model on both PASCAL VOC2007 and VOC2012 training sets and we tested the model on the PASCAL VOC2007 dataset test set. For training and testing on PASCAL VOC datasets, we used an image size of . The third dataset used for validation was MS-COCO which is a larger dataset for common objects in the scenes and contains 80 class categories. The MS-COCO dataset consists of 118,287 training images and 5000 validation images. We used an image size of for training and testing on the MS-COCO dataset. The bounding box annotations are provided for the three mentioned datasets.

5. Evaluation Metrics of Object Detection

For the proposed method of performance measurement, we evaluated the proposed method on PASCAL VOC2007, VOC2012, and COCO minival (validation set of MS-COCO2017) to measure the mean average precision (mAP) at an intersection over union greater than a threshold; 0.5 is used as a threshold in the evaluation of most object detection methods. The average precision (AP) metric is the measure of the average value of the precision for recall values over 0 to 1. The precision and the recall can be defined as in Equation (5):

where TP, FP, and FN are the true positive, false positive, and false negative of the predictions, respectively. The precision measures how accurate the predictions are and the recall measures whether the model can predict the positives. The AP is the area under the precision-recall curve. The mAP is the mean value of the AP over all the classes; it is usually measured at an IOU value of 0.5, but in MS-COCO evaluation several IOU values are used (from 0.5 to 0.95 with step of 0.05) and the average of those IOU values is calculated to obtain AP. Further, the AP for the small, medium, and large objects is calculated according to the annotation of the objects in the image. IOU can be defined as in Equation (6):

where and are the predicted and the ground truth bounding boxes, respectively.

6. Training and Test Setup

The proposed method was trained using a desktop computer with Nvidia RTX3090 GPU, Intel Corei7-8700 CPU @3.20 GHz clock speed, and 64 gigabytes of RAM. The training was performed using the Tensorflow Keras environment where the trained models have been trained using Adam’s optimization method with an initial learning rate of 0.001 for approximately 250 epochs. A translation and horizontal flipping operations are adopted during training as an augmentation to prevent overfitting and provide more generalization of data. The original Xception model is initialized with ImageNet classification weights then the network is cut to be the modified version “Xception-16” to speed up the training process. The inference was performed using an Nvidia Titan XP GPU with the other configuration mentioned before.

7. Ablation Study

We performed two main studies: one on the scale of the line mask used for box decoding and the second on the up-sampling techniques used for forming the line mask.

7.1. Study on the Scale of the Line Mask

We performed four separate training experiments for the proposed model using four different up-sampling scales of the pixel-shuffle module. We experimented with 1/8, 1/4, 1/2, and full scales to determine which scale has the best performance in terms of mAP on PASCAL VOC2007. The number of channels at the final Conv2D layer before the pixel-shuffle is changed so that the obtained line masks are formed at the desired scale. The value of r of the pixel shuffle is also changed according to that too (r = 2 for 1/8 scale, r = 4 for 1/4 scale, r = 8 for 1/2 scale, and r = 16 for full-scale) as shown in Figure 5a.

Figure 5.

Ablation study architectures. (a) The architecure for the different scales of the line mask (1/8, 1/4, 1/2, 1/1 of the input image). (b) The architecure for the different upsampling techniques (bilinear, nearest neigbour, pixel-shuffle).

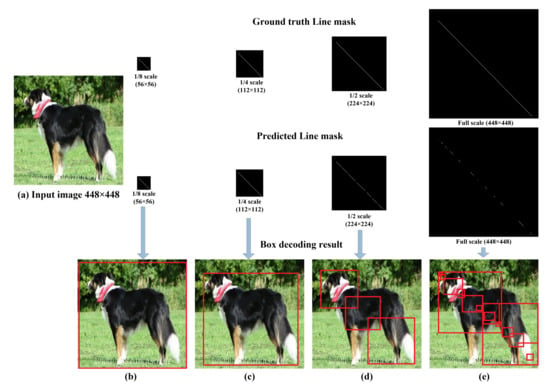

Figure 6 shows the obtained line mask in a sample test image with the corresponding decoded bounding box in each case. The smallest scale (1/8 scale) gives a solid-continuous line in the line mask but due to the small scale, the decoded box using PPHT is too wide and does not exactly fit the object. In the case of the 1/4 scale, the detected line has a few small gaps but still, the PPHT is able to detect the line and merge the line segments. In the case of the 1/2 scale, the PPHT detects multiple segments and cannot easily merge the line segments and fails in many cases, resulting in a big loss in the mAP; the full-scale case also generates many tiny line segments and sparse points, which totally confuses the PPHT algorithms and make it impossible to detect the objects properly. The bad line mask results in larger scales and comes from the fact that the density of the line pixels is very small compared to the scale of the mask as shown in Table 1. As a result of the previous experiments, we selected the 1/4 scale, which has a good trade between the tightness of the bounding box and the relatively high density of line pixels, which is enough for PPHT to detect it without much effort in tuning the PPHT algorithm parameters. The best parameters of PPHT (shown in Table 1) were tuned manually by trial and error to obtain the best possible mAP. We also compared the speed of the model on different scales and, as expected, the lower the scale, the higher the frame rate; we selected the 1/4 scale as the best one based on the mAP sacrificing the better frame rates in the case of the 1/8 scale.

Figure 6.

Comparison between the decoded boxes using different scales of the line masks during training and test. (a–e) show the input image and the decoded boxes using the predicted line masks of scales 1/8, 1/4, 1/2, and 1, respectively.

Table 1.

Comparison between the different scales of the line mask in terms of mAP and speed on PASCAL VOC2007 with the best selection of , and . The best value is shown in bold.

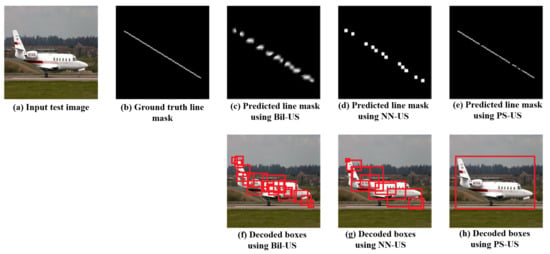

7.2. Study on the Up-Sampling Technique for the Line Mask

We experimented with three different up-sampling techniques to form the line masks. The up-sampling techniques up-sample the final features extracted using Xception-16 four times, and then a Conv2D layer is used to reshape the number of the filters to be equal to the number of classes, as shown in Figure 5b. We trained each model using each one of the up-sampling techniques and then compared the pixel-shuffle with the bilinear and nearest neighbor up-sampling techniques at a line mask scale of 1/4 of the input image.

The bilinear up-sampling and the nearest neighbor up-sampling showed a poor performance in obtaining solid lines; they produced many gaps and thick line segments, which result in low mAP values (lower than 10) so we could not produce notable results to compare with the pixel-shuffle-based results. In spite of our effort to tune the PPHT parameters, the performances of the bilinear and the nearest-neighbor-based up-sampling are much lower than that for the pixel-shuffle, which produces a thin solid line with few gaps, as indicated in the sample results shown in Figure 7.

Figure 7.

Comparison between the predicted line mask and the decoded boxes in case of using the bilinear up-sampling (Bil-US), nearest neighbor up-sampling (NN-US), and pixel-shuffle up-sampling (PS-US).

8. Complexity Analysis of the Proposed Model

We analyze the proposed model including the Xception-16 feature architecture plus the two branches (The pixel-shuffle for line segmentation with 1/4 scale of the input image and the fully connected layer for the per-class regression). The analysis was performed using the TensorFlow profiler [], specifically the tf.profiler.ProfileOptionBuilder.float_operation() function, to calculate the number of floating point operations (FLOPs) for the different layers, i.e., Convolution2D, Depthwise separable convolution2D, and max pooling2D, and the other operations in the model, i.e., multiplication and addition operations. Table 2 shows a detailed analysis of the proposed CNN models with the two image sizes used for PASCAL VOC and MS-COCO datasets. It is obvious in Table 2 that the convolution2D operations take most of the computations and the depth-wise separable convolution takes fewer computations since the depthwise separable convolution is much less complex than the conventional convolution2D operation.

Table 2.

The complexity analysis for the proposed architecture calculating the number of floating point operations in billions (B) for the convolution2D (Conv2D) layer, depth-wise separable convolution2D (DWConv2D), maxpooling2D (MP2D), multiplications, and additions. The total number of floating point operations (FLOPs) in billions and the total number of parameters (Params.) in full precision are also shown. The image size of and are the image sizes for the models trained on PASCAL VOC and MS-COCO, respectively.

9. Experimental Results

The proposed method was trained to predict line masks with a scale of 1/4 of the input image, i.e., for PASCAL VOC2007 and VOC2012, the input RGB images are resized to and the ground truth line masks (which are binary masks) are made at and, for MS-COCO, we used a bigger image size of and the line masks have the size of .

9.1. Evaluation of the Per-Class Count Regression

We evaluated the branch of the per-class object counts separately to ensure the ability of the model to predict the number of objects of each class in the image. The obtained values in the predicted count vector are floating numbers; we round the vector values to the nearest integer values first and then we measure the accuracy of predicting the integer value of each object. We could attain a counting accuracy of 97% on the PASCAL VOC2007 test set and of 92% on the MS-COCO minival (MS-COCO val2017). Those accuracies are the basis of the success of our method, as in our algorithm, we force the PPHT to predict lines equivalent to the per-class object count.

9.2. Evaluation Results on Pascal Voc2007

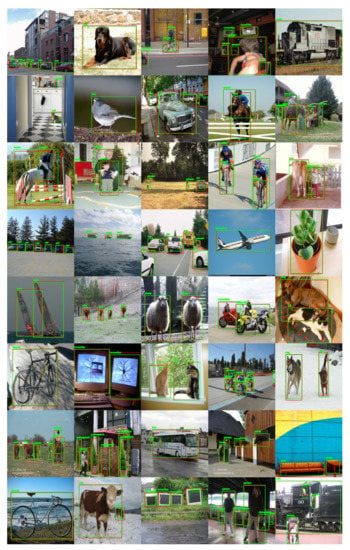

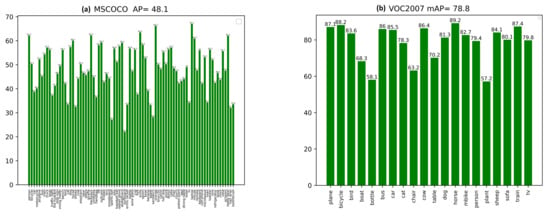

While training the PASCAL VOC dataset, we combined the training and validation datasets of PASCAL VOC2007 and PASCAL VOC2012 to increase the training data as much as possible. During the testing and performance evaluation, we evaluated the VOC2007 test set. The proposed method could attain an mAP @ IOU of 0.5 values of 78.8 on PASCAL VOC2007. This high mAP is obtained by tuning the best parameters of PPHT so the model can detect both small and large objects. The tuning of PPHT parameters is very sensitive and needs to choose the best combinations of , , and t as each parameter has a great impact on the final detection results; the parameters selected for each one of the datasets, according to Algorithm 1, are shown in Table 1. While tuning the PPHT parameters, we notice that increasing t provides more points for line detection but also has a negative effect when many small boxes are detected. is the angular resolution of the accumulator, and when it increases, PPHT can combine very close lines, while , which is the distance resolution of the accumulator, controls the length of the detected line segments. All the parameters should be tuned together to obtain the best detection results. Figure 8 shows the sample results obtained by the proposed method on the PASCAL VOC2007 test set where the results show the ability of the proposed model to detect both small and large objects accurately. The mAP of each class of the two datasets is also reported in Figure 9a. The model could process the frames at a rate of 27 FPS, which is good enough for real-time applications.

Figure 8.

Sample results obtained by the proposed method on random images from PASCAL VOC2007 test set. The green and red bounding boxes refer to the detection and ground truth boxes, respectively.

Figure 9.

(a) The obtained evaluation mAP value of each class of PASCAL VOC2007 test set and the overall mean AP value at IOU of 0.5; (b) the AP value for each class MS-COCO minival and the overall mean value of AP.

9.3. Evaluation Results on Ms-COCO Minival

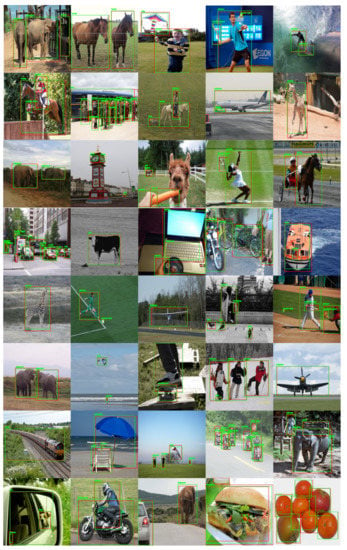

During the evaluation on MS-COCO minival, the proposed method could attain a box average precision (AP) of 48.1, which is relatively high for a hard dataset such as MS-COCO. The model could produce accurate detections as shown in Figure 10; however, the model struggles with the very crowded scenes of sports matches with a large number of people; such scenes are common in MS-COCO images. We also used the manual tuning of the PPHT parameters to achieve the best detection results; the PPHT parameters are reported in Table 3. Figure 10 shows sample detection results obtained by the proposed model that was trained on the MS-COCO dataset. The AP of each class is also shown in Figure 9b. The model could attain a frame rate of 21.3 FPS, which still is an acceptable speed for real-time processing.

Figure 10.

Sample results were obtained by the proposed method on random images from MS-COCO minival dataset. The green and red boxes refer to the detected and ground truth boxes, respectively.

Table 3.

The best PPHT parameters obtained during tuning for each dataset, mAP, and FPS for the proposed model tested on the VOC2007 test set in addition to AP, AP, on MS-COCO minival using the same terms.

9.4. Performance Comparison with SOTA Methods

We compared the proposed method (LEOD-Net) with the state-of-the-art (SOTA) methods of object detection. As there are hundreds of object detection methods, we selected the most relevant methods at least in terms of complexity and input image size; we also included a few popular two-stage methods in our comparison to highlight the high accuracy of our method. Regarding our model that was trained on PASCAL VOC2007, it can outperform the other SOTA methods, including the two-stage methods, in terms of AP, except for YOLOV4, which is a recent method applying multiple techniques to increase the performance. The speed is considered average as it is not as high as YOLOV2 and it is not slow similar to the two-stage methods as reported in Table 4. Regarding our model that was trained on MS-COCO 2017, it ranked as the second-best method (AP) after YOLOV4 (AP) as reported in Table 5. The evaluation on the COCO minival dataset was performed according to a recent study in []. Regarding the speed comparison on the MS-COCO dataset, we did not include the comparison of the speed as each method was tested on a different environment and hardware. In general, the proposed method (LEOD-Net) could attain a notable mAP that can work in real-time, which is the best trade-off for any object detection method.

Table 4.

Comparison with SOTA methods on the VOC2007 test set while training the model on VOC2007+VOC2012 trainval datasets together. The best value of AP is shown in bold. The second best value is shown with underline.

Table 5.

Comparison between the proposed method (LEOD-NET) and SOTA methods on COCO val2017 (COCO minival) while training the model on COCO train2017 dataset. The best value of AP is shown in bold. The second best value is shown with underline.

10. Limitations and Future Work

Although we have attained good performance for our model, the model has a weakness in the optimization of PPHT parameters. To address this weakness, we aim to employ an automatic parameter selection using a search method in a future work instead of the manual tuning of the PPHT parameters. We believe that more tuning for the PPHT may produce better object detection results, so automatic tuning can be very helpful since the parameters have cross relations and also depend on other factors such as image size and quality of the estimated features before the pixel-shuffle operation. In addition, we plan to employ vision transformers (ViT) [] in a future work to exploit the general context learning, which can be achieved using ViT and can be used to generate richer line features. Since the obtained object detection results are so promising, we aim also to extend the method in the future to perform instance segmentation in collaboration with our previous semantic segmentation method proposed in [], which is one of the most-difficult high-level computer vision tasks. This method uses the original Xception architecture for semantic segmentation, so the same architecture can be trained to perform both object detection and semantic segmentation simultaneously, which is instance segmentation. In addition, the future method could attain real-time processing since our proposed method and the suggested segmentation method can work at a high processing speed.

11. Conclusions

We propose an object detection method using line-encoded bounding boxes (LEOD-Net), which proved to be efficient enough for object detection at a high speed (27 fps on PASCAL VOC and 21.3 on MS-COCO) via our experiments. The proposed method exploits the progressive probabilistic Hough transform to refine the initial pseudo-line masks predicted by the proposed CNN model and form the bounding boxes. The parameters of PPHT are so sensitive in the output detection and should be tuned carefully to obtain the best line decoding results. The proposed method outperforms many SOTA methods in terms of accuracy and some methods in terms of frame processing speed. The obtained qualitative results show the high performance of the proposed method in detecting accurate bounding boxes which match the object boundaries. Finally, the mAP values are good enough for accurate object detection tasks.

Author Contributions

Conceptualization, H.I. and A.S.; methodology, H.I. and A.S.; software, H.I.; formal analysis, H.I.; investigation, H.-S.K.; resources, H.-S.K.; data curation, H.I.; writing—original draft preparation, H.I.; writing—review and editing, H.I. and H.-S.K.; validation, H.I. and H.-S.K.; visualization, H.-S.K.; supervision, H.-S.K.; project administration, H.-S.K.; funding acquisition, H.-S.K. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the MSIT (Ministry of Science and ICT), Korea, under the Grand Information Technology Research Center support program (IITP-2022-2020-0-01462) supervised by the IITP (Institute for Information & communications Technology Planning & Evaluation) and in part by the Research Projects of “Development of automatic screening and hybrid detection system for hazardous material detecting in port container” funded by the Ministry of Oceans and Fisheries.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The datasets used in this paper are public datasets.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587. [Google Scholar]

- Girshick, R. Fast R-CNN. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1440–1448. [Google Scholar]

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2980–2988. [Google Scholar]

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788. [Google Scholar]

- Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6517–6525. [Google Scholar]

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767. [Google Scholar]

- Bochkovskiy, A.; Wang, C.; Liao, H.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934. [Google Scholar]

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.E.; Fu, C.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Proceedings of the European Conference of computer vision (ECCV), Amsterdam, The Netherlands, 8–16 October 2016. [Google Scholar]

- Lin, T.-Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2999–3007. [Google Scholar]

- Shi, W.; Caballero, J.; Huszár, F.; Totz, J.; Aitken, A.P.; Bishop, R.; Rueckert, D.; Wang, Z. Real-Time Single Image and Video Super-Resolution Using an Efficient Sub-Pixel Convolutional Neural Network. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 1874–1883. [Google Scholar]

- Galamhos, C.; Matas, J.; Kittler, J. Progressive probabilistic Hough transform for line detection. In Proceedings of the 1999 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (Cat. No PR00149), Fort Collins, CO, USA, 23–25 June 1999; Volume 1, pp. 554–560. [Google Scholar]

- Sermanet, P.; Eigen, D.; Zhang, X.; Mathieu, M.; Fergus, R.; LeCun, Y. Overfeat: Integrated recognition, localization and detection using convolutional networks. In Proceedings of the 2nd International Conference on Learning Representations (ICLR), Toulon, France, 24–26 April 2017. [Google Scholar]

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. In Proceedings of the Advances in Neural Information Processing Systems (NeurIPS), Lake Tahoe, NV, USA, 3–6 December 2012; Volume 25. [Google Scholar]

- Uijlings, J.R.R.; van de Sande, K.E.A.; Gevers, T.; Smeulders, A.W. Selective Search for Object Recognition. Int. J. Comput. Vis. (IJCV) 2013, 104, 154–171. [Google Scholar] [CrossRef] [Green Version]

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. In Proceedings of the International Conference on Learning Representations (ICLR), San Diego, CA, USA, 7–9 May 2015. [Google Scholar]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778. [Google Scholar]

- Lin, T.-Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature Pyramid Networks for Object Detection. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 936–944. [Google Scholar]

- Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path Aggregation Network for Instance Segmentation. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8759–8768. [Google Scholar]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Spatial Pyramid Pooling in Deep Convolutional Networks for Visual Recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1904–1916. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Duan, K.; Bai, S.; Xie, L.; Qi, H.; Huang, Q.; Tian, Q. CenterNet: Keypoint Triplets for Object Detection. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Korea, 27 October–2 November 2019; pp. 6568–6577. [Google Scholar]

- Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and Efficient Object Detection. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 10778–10787. [Google Scholar]

- Tan, M.; Le, Q. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. In Proceedings of the 36th International Conference on Machine Learning (ICML), Beach, CA, USA, 9–15 June 2019. [Google Scholar]

- Chollet, F. Xception: Deep Learning with Depthwise Separable Convolutions. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 1800–1807. [Google Scholar]

- Ibrahem, H.; Salem, A.; Kang, H.-S. DTS-Net: Depth-to-Space Networks for Fast and Accurate Semantic Object Segmentation. Sensors 2022, 22, 337. [Google Scholar] [CrossRef] [PubMed]

- Aich, S.; van der Kamp, W.; Stavness, I. Semantic Binary Segmentation Using Convolutional Networks without Decoders. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Salt Lake City, UT, USA, 18–22 June 2018; pp. 182–1824. [Google Scholar]

- Duda, R.O.; Hart, P.E. Use of the Hough transformation to detect lines and curves in pictures. Commun. ACM 1972, 15, 11–15. [Google Scholar] [CrossRef]

- Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. ImageNet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255. [Google Scholar]

- Bianco, S.; Cadene, R.; Celona, L.; Napoletano, P. Benchmark Analysis of Representative Deep Neural Network Architectures. IEEE Access 2018, 6, 64270–64277. [Google Scholar] [CrossRef]

- Ibrahem, H.; Salem, A.D.A.; Kang, H.-S. Real-Time Weakly Supervised Object Detection Using Center-of-Features Localization. IEEE Access 2021, 9, 38742–38756. [Google Scholar] [CrossRef]

- Everingham, M.; Van Gool, L.; Williams, C.K.I.; Winn, J.; Zisserman, A. The PASCAL Visual Object Classes Challenge 2007 (VOC2007) Results. Available online: http://www.pascal-network.org/challenges/VOC/voc2007/workshop/index.html (accessed on 28 January 2022).

- Everingham, M.; Van Gool, L.; Williams, C.K.I.; Winn, J.; Zisserman, A. The PASCAL Visual Object Classes Challenge 2012 (VOC2012) Results. Available online: http://www.pascal-network.org/challenges/VOC/voc2012/workshop/index.html (accessed on 28 January 2022).

- Lin, T.-Y.; Maire, M.; Serge, J. Belongie and James Hays and Pietro Perona and Deva Ramanan and Piotr Dollár and C. Lawrence Zitnick. Microsoft COCO: Common Objects in Context. In Proceedings of the European Conference on Computer Vision (ECCV), Zurich, Switzerland, 6–12 September 2014. [Google Scholar]

- Available online: https://www.tensorflow.org/api_docs/python/tf/compat/v1/profiler/ProfileOptionBuilder (accessed on 6 May 2022).

- Wenkel, S.; Alhazmi, K.; Liiv, T.; Alrshoud, S.; Simon, M. Confidence Score: The Forgotten Dimension of Object Detection Performance Evaluation. Sensors 2021, 21, 4350. [Google Scholar] [CrossRef] [PubMed]

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929. [Google Scholar]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).