Deep Neural Networks Based on Span Association Prediction for Emotion-Cause Pair Extraction

Abstract

1. Introduction

- We propose a span representation method for the ECPE task that exploits span association from the perspective of grammatical idioms;

- We design a span association pairing method to obtain candidate emotion-cause pairs and establish a multi-dimensional information interaction mechanism to screen these candidates. We also simplify the model architecture, which reduces the number of trainable parameters;

- We evaluate our end-to-end model on a benchmark corpus, and the results show that our method outperforms the state-of-the-art baselines.

2. Related Work

2.1. Emotion Cause Extraction

2.2. Emotion-Cause Pair Extraction

3. Model

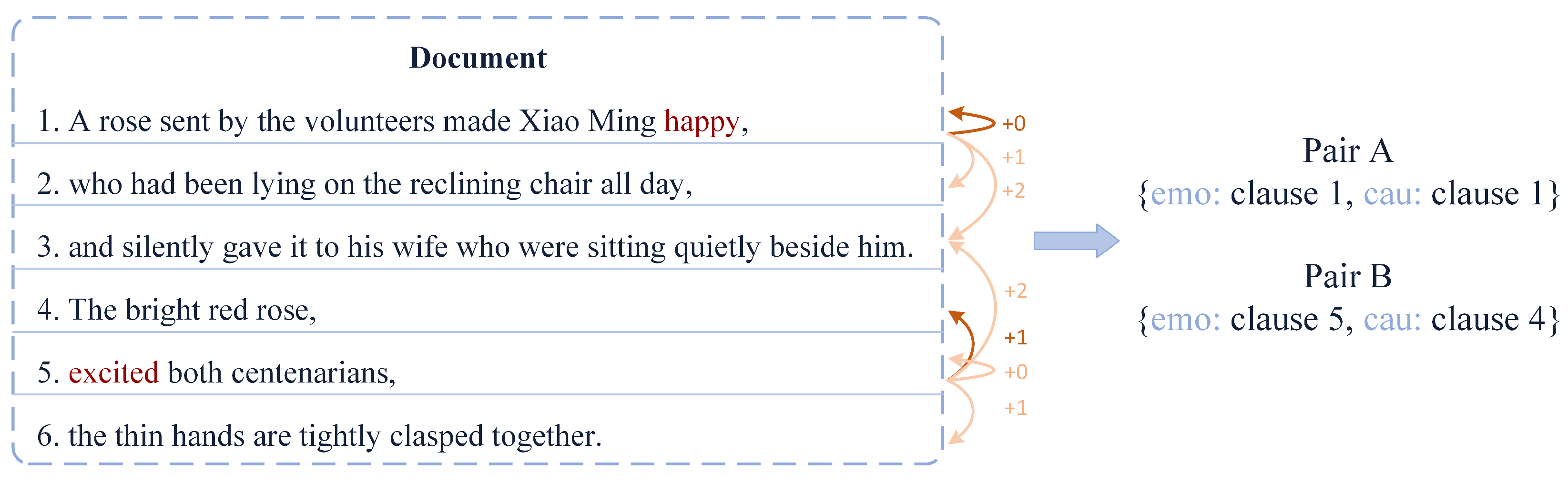

3.1. Problem Definition

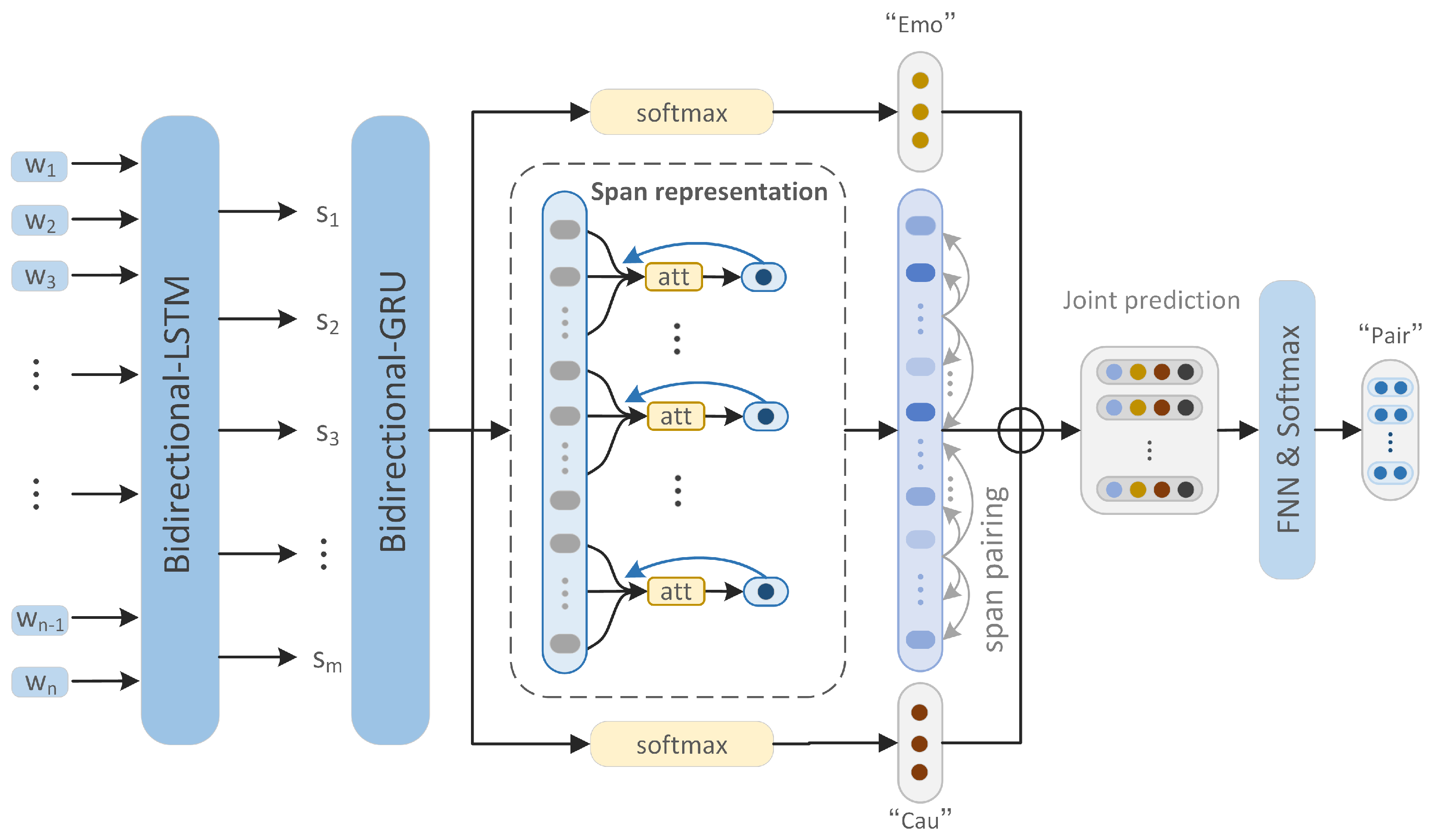

3.2. Overall Framework

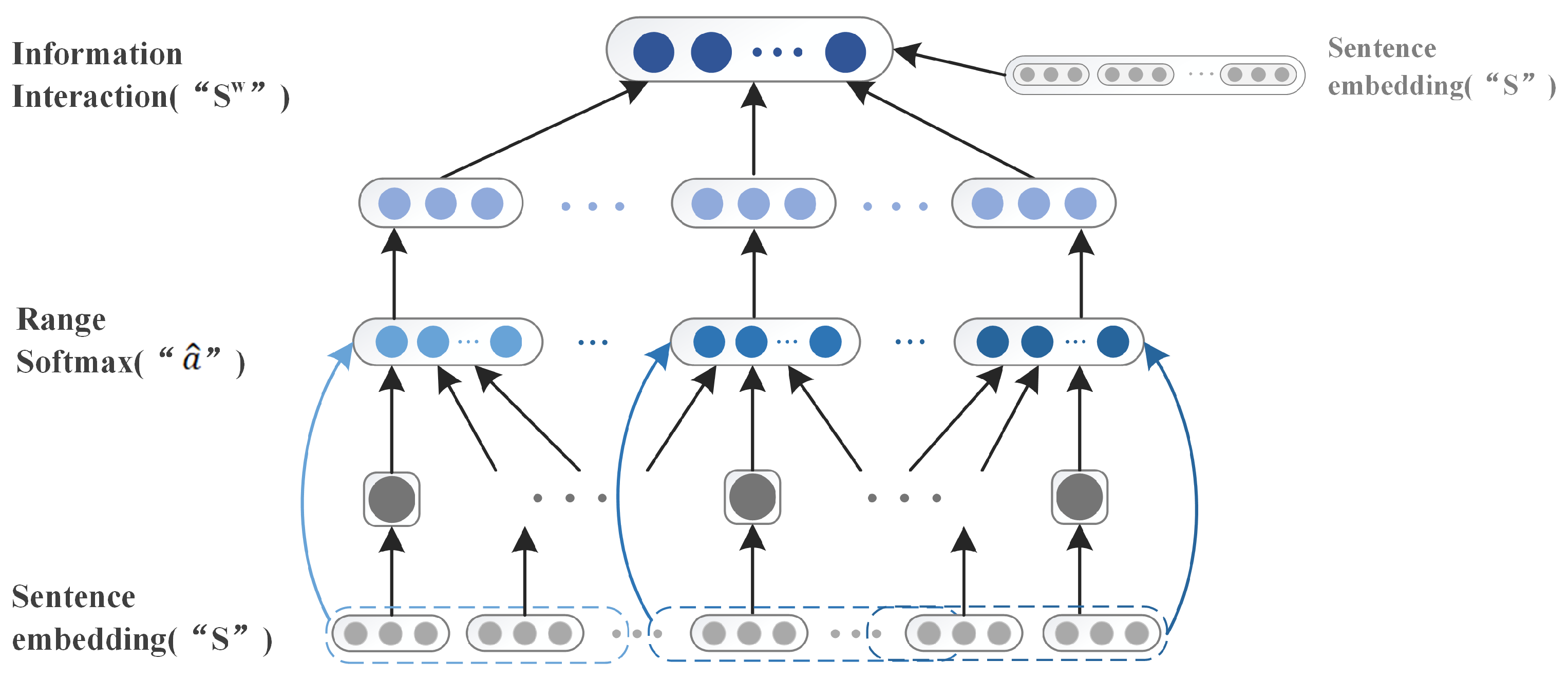

3.3. Span Representation

3.4. Span Association Pairing

| Algorithm 1 Span association pairing algorithm. |
|---|
| Input: An input sentence |
| Output: The candidate pair P |
| 1: for i in do |
| 2: for j in do |
| 3: if j in then |
| 4: |
| 5: |
| 6: |
| 7: |
| 8: Return P |
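Algorithm 1 iterates over clause indices i and j and keeps a pair when j falls inside a set associated with i. The Python sketch below reproduces that loop structure under explicit assumptions: the input is a document already split into n clauses indexed 0..n−1, and the span associated with clause i is taken to be every clause within a fixed distance of i. The function name `span_association_pairing` and the window parameter `max_distance` are hypothetical, and the paper's exact span definition may differ.

```python
from typing import List, Set, Tuple


def span_association_pairing(num_clauses: int, max_distance: int = 2) -> List[Tuple[int, int]]:
    """Collect candidate (emotion, cause) clause-index pairs.

    Hypothetical sketch: the span of clause i is ASSUMED to be all clauses
    whose index differs from i by at most max_distance (i itself included).
    """
    candidates: List[Tuple[int, int]] = []
    for i in range(num_clauses):  # candidate emotion clause
        # Assumed span of clause i, clipped to the document boundaries.
        span: Set[int] = set(range(max(0, i - max_distance),
                                   min(num_clauses, i + max_distance + 1)))
        for j in range(num_clauses):  # candidate cause clause
            if j in span:  # keep only pairs whose cause lies in the span
                candidates.append((i, j))
    return candidates


if __name__ == "__main__":
    pairs = span_association_pairing(num_clauses=5, max_distance=1)
    print(len(pairs), "candidates instead of", 5 * 5)
```

With n clauses and a window of d, this loop keeps at most n(2d+1) pairs instead of all n² clause combinations, which illustrates how a span-based pairing step can shrink the candidate set before pair prediction.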

3.5. Emotion-Cause Pair Prediction

4. Experiment

4.1. Implementation Details and Evaluation Metrics

4.2. Baseline Models

- Indep: The first model proposed by Xia and Ding [2] is a two-step model. In the first step, emotion extraction and cause extraction are treated as two independent tasks, and emotions and causes are extracted with Bi-LSTMs; in the second step, the extracted emotions and causes are paired, and a classifier performs binary classification on each candidate pair.

- Inter-CE [2]: Its overall pipeline is the same as that of Indep, but it is an interactive multi-task learning method that uses the predictions of cause extraction to strengthen emotion extraction.

- Inter-EC [2]: Another interactive multi-task learning method, which uses the predictions of emotion extraction to reinforce cause extraction; the rest of the model is the same as Indep.

- E2EECPE: An end-to-end model proposed by Song et al. [7]. It is a multi-task learning-to-link framework that uses biaffine attention to model the relationship between any two clauses.

- ECPE-2D: Proposed by Ding et al. [8], this model represents all emotion-cause pairs in 2D, models the interactions among them, and uses a self-attention mechanism to compute the attention matrix of emotion-cause pairs. Here, we use its Inter-EC variant, which performs better.
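The second step of the Indep baseline pairs every extracted emotion with every extracted cause and then lets a binary classifier keep or discard each pair. The sketch below shows only that pairing-and-filtering control flow. The names `pair_and_filter` and `toy_classifier` are hypothetical, and the distance rule inside `toy_classifier` is an assumed stand-in for the trained classifier, used only so the example runs.

```python
from itertools import product
from typing import Callable, List, Sequence, Tuple

Pair = Tuple[int, int]  # (emotion clause index, cause clause index)


def pair_and_filter(emotion_clauses: Sequence[int],
                    cause_clauses: Sequence[int],
                    keep: Callable[[Pair], bool]) -> List[Pair]:
    """Step 2 of a two-step pipeline: Cartesian product, then binary filtering."""
    candidates = list(product(emotion_clauses, cause_clauses))  # all emotion x cause combinations
    return [pair for pair in candidates if keep(pair)]  # the classifier decides keep/drop


def toy_classifier(pair: Pair) -> bool:
    # Hypothetical stand-in for a trained pair classifier:
    # accept pairs whose clauses are at most one position apart.
    emotion, cause = pair
    return abs(emotion - cause) <= 1


if __name__ == "__main__":
    # Suppose step 1 extracted clause 3 as an emotion and clauses 2 and 6 as causes.
    print(pair_and_filter([3], [2, 6], toy_classifier))  # [(3, 2)]
```

Because every emotion is paired with every cause, errors from the first step propagate directly into the candidate set, which is the limitation that the end-to-end baselines and a span-based pairing step aim to address.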

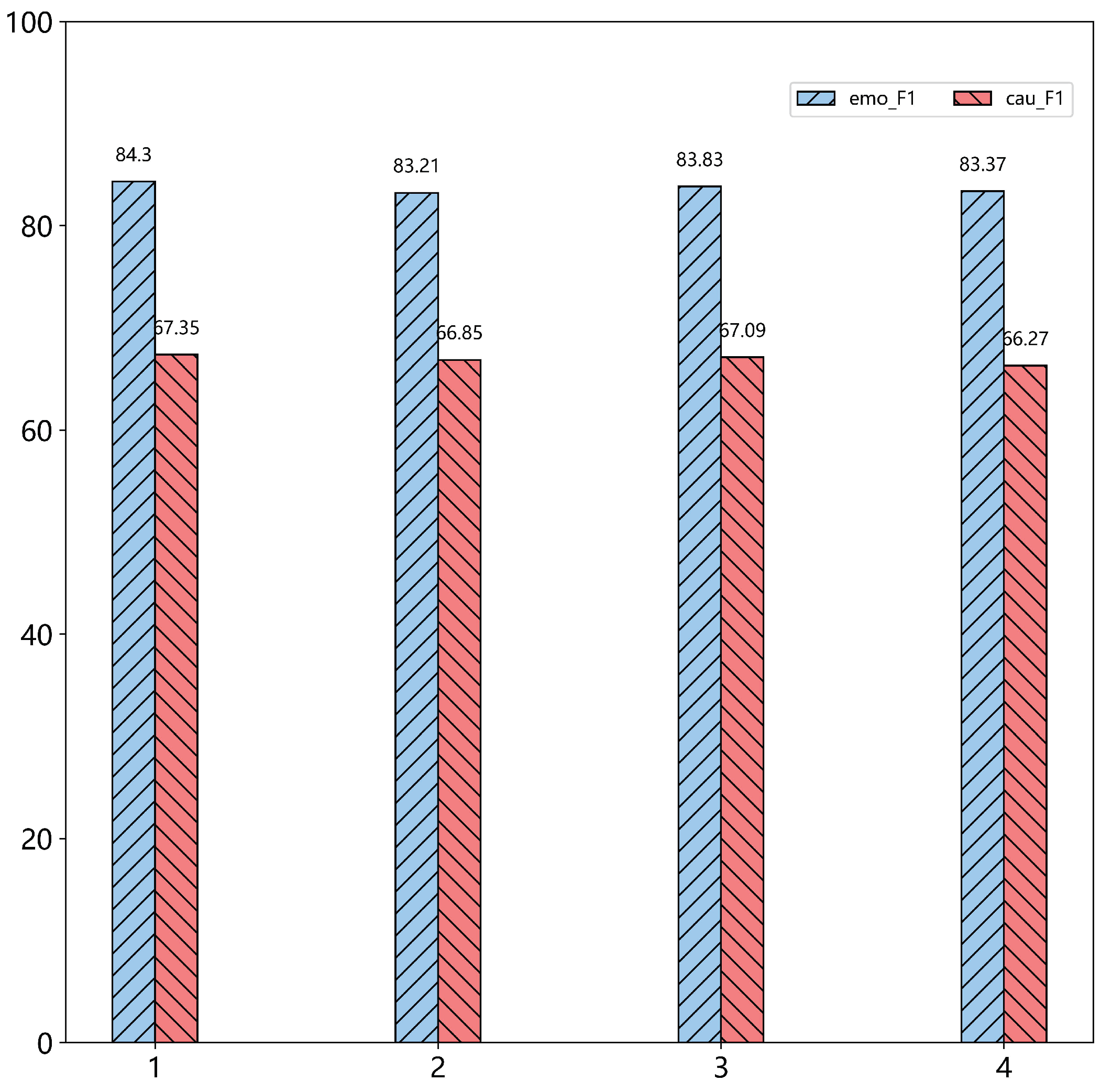

4.3. Overall Performance

4.4. Further Discussions

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- [1] Lee, S.Y.M.; Chen, Y.; Huang, C.R. A text-driven rule-based system for emotion cause detection. In Proceedings of the NAACL HLT 2010 Workshop on Computational Approaches to Analysis and Generation of Emotion in Text, Los Angeles, CA, USA, 5 June 2010; pp. 45–53.

- [2] Xia, R.; Ding, Z. Emotion-Cause Pair Extraction: A New Task to Emotion Analysis in Texts. arXiv 2019, arXiv:1906.01267.

- [3] Wei, P.; Zhao, J.; Mao, W. Effective Inter-Clause Modeling for End-to-End Emotion-Cause Pair Extraction. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Online, 5–10 July 2020; pp. 3171–3181.

- [4] Chen, X.; Li, Q.; Wang, J. A Unified Sequence Labeling Model for Emotion Cause Pair Extraction. In Proceedings of the 28th International Conference on Computational Linguistics, Barcelona, Spain, 8–13 December 2020; pp. 208–218.

- [5] Singh, A.; Hingane, S.; Wani, S.; Modi, A. An End-to-End Network for Emotion-Cause Pair Extraction. arXiv 2021, arXiv:2103.01544.

- [6] Xia, R.; Zhang, M.; Ding, Z. RTHN: A RNN-Transformer Hierarchical Network for Emotion Cause Extraction. arXiv 2019, arXiv:1906.01236.

- [7] Song, H.; Zhang, C.; Li, Q.; Song, D. End-to-end Emotion-Cause Pair Extraction via Learning to Link. arXiv 2020, arXiv:2002.10710.

- [8] Ding, Z.; Xia, R.; Yu, J. ECPE-2D: Emotion-cause pair extraction based on joint two-dimensional representation, interaction and prediction. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Online, 5–10 July 2020; pp. 3161–3170.

- [9] Xu, R.; Hu, J.; Lu, Q.; Wu, D.; Gui, L. An ensemble approach for emotion cause detection with event extraction and multi-kernel SVMs. Tsinghua Sci. Technol. 2017, 22, 646–659.

- [10] Chen, Y.; Hou, W.; Cheng, X. Hierarchical Convolution Neural Network for Emotion Cause Detection on Microblogs. In Proceedings of the 27th International Conference on Artificial Neural Networks, Rhodes, Greece, 4–7 October 2018.

- [11] Wan, J.; Ren, H. Emotion Cause Detection with a Hierarchical Network. In Proceedings of the Sixth International Congress on Information and Communication Technology, London, UK, 25–26 February 2021.

- [12] Yan, H.; Gui, L.; Pergola, G.; He, Y. Position Bias Mitigation: A Knowledge-Aware Graph Model for Emotion Cause Extraction. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing, ACL/IJCNLP, Bangkok, Thailand, 1–6 August 2021; pp. 3364–3375.

- [13] Khooshabeh, P.; de Melo, C.; Volkman, B.; Gratch, J.; Blascovich, J.; Carnevale, P.J. Negotiation Strategies with Incongruent Facial Expressions of Emotion Cause Cardiovascular Threat. In Proceedings of the Annual Meeting of the Cognitive Science Society, Berlin, Germany, 31 July–3 August 2013.

- [14] Russo, I.; Caselli, T.; Rubino, F.; Boldrini, E.; Martínez-Barco, P. EMOCause: An Easy-adaptable Approach to Extract Emotion Cause Contexts. WASSA@ACL 2011, 2011, 153–160.

- [15] Yada, S.; Ikeda, K.; Hoashi, K.; Kageura, K. A Bootstrap Method for Automatic Rule Acquisition on Emotion Cause Extraction. In Proceedings of the 2017 IEEE International Conference on Data Mining Workshops (ICDMW), New Orleans, LA, USA, 18–21 November 2017; pp. 414–421.

- [16] Hu, J.; Shi, S.; Huang, H. Combining External Sentiment Knowledge for Emotion Cause Detection. In Proceedings of the 8th CCF International Conference, NLPCC 2019, Dunhuang, China, 9–14 October 2019.

- [17] Li, X.; Song, K.; Feng, S.; Wang, D.; Zhang, Y. A Co-Attention Neural Network Model for Emotion Cause Analysis with Emotional Context Awareness. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, Brussels, Belgium, 31 October–4 November 2018; pp. 4752–4757.

- [18] Li, X.; Gao, W.; Feng, S.; Zhang, Y.; Wang, D. Boundary Detection with BERT for Span-level Emotion Cause Analysis. Find. Assoc. Comput. Linguist. 2021, 2021, 676–682.

- [19] Li, X.; Gao, W.; Feng, S.; Wang, D.; Joty, S.R. Span-level Emotion Cause Analysis with Neural Sequence Tagging. In Proceedings of the 30th ACM International Conference on Information & Knowledge Management, Queensland, Australia, 1–5 November 2021; pp. 3227–3231.

- [20] Hu, G.; Lu, G.; Zhao, Y. FSS-GCN: A graph convolutional networks with fusion of semantic and structure for emotion cause analysis. Knowl. Based Syst. 2021, 212, 106584.

- [21] Li, X.; Gao, W.; Feng, S.; Wang, D.; Joty, S.R. Span-Level Emotion Cause Analysis by BERT-based Graph Attention Network. In Proceedings of the 30th ACM International Conference on Information & Knowledge Management, Queensland, Australia, 1–5 November 2021; pp. 3221–3226.

- [22] Turcan, E.; Wang, S.; Anubhai, R.; Bhattacharjee, K.; Al-Onaizan, Y.; Muresan, S. Multi-Task Learning and Adapted Knowledge Models for Emotion-Cause Extraction. arXiv 2021, arXiv:2106.09790.

- [23] Ding, Z.; He, H.; Zhang, M.; Xia, R. From Independent Prediction to Re-ordered Prediction: Integrating Relative Position and Global Label Information to Emotion Cause Identification. Proc. AAAI Conf. Artif. Intell. 2019, 33, 6343–6350.

- [24] Tang, H.; Ji, D.; Zhou, Q. Joint multi-level attentional model for emotion detection and emotion-cause pair extraction. Neurocomputing 2020, 409, 329–340.

- [25] Fan, C.; Yuan, C.; Du, J.; Gui, L.; Yang, M.; Xu, R. Transition-based Directed Graph Construction for Emotion-Cause Pair Extraction. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Online, 5–10 July 2020; pp. 3707–3717.

- [26] Fan, W.; Zhu, Y.; Wei, Z.; Yang, T.; Ip, A.W.H.; Zhang, Y. Order-guided deep neural network for emotion-cause pair prediction. Appl. Soft Comput. 2021, 112, 107818.

- [27] Chen, F.; Shi, Z.; Yang, Z.; Huang, Y. Recurrent synchronization network for emotion-cause pair extraction. Knowl. Based Syst. 2022, 238, 107965.

- [28] Zhang, Y.; Yang, Q. A Survey on Multi-Task Learning. IEEE Trans. Knowl. Data Eng. 2021.

- [29] Thung, K.H.; Wee, C.Y. A brief review on multi-task learning. Multimed. Tools Appl. 2018, 77, 29705–29725.

- [30] Kuang, Z.; Zhang, X.; Yu, J.; Li, Z.; Fan, J. Deep Embedding of Concept Ontology for Hierarchical Fashion Recognition. Neurocomputing 2020, 425, 191–206.

- [31] Kuang, Z.; Yu, J.; Li, Z.; Zhang, B.; Fan, J. Integrating Multi-Level Deep Learning and Concept Ontology for Large-Scale Visual Recognition. Pattern Recognit. 2018, 78, 198–214.

- [32] Mittal, A.; Vaishnav, J.T.; Kaliki, A.; Johns, N.; Pease, W. Emotion-Cause Pair Extraction in Customer Reviews. arXiv 2021, arXiv:2112.03984.

- [33] Guyon, I.; Statnikov, A.R. Results of the Cause-Effect Pair Challenge; Springer: Berlin/Heidelberg, Germany, 2019.

- [34] Li, F.; Lin, Z.; Zhang, M.; Ji, D. A Span-Based Model for Joint Overlapped and Discontinuous Named Entity Recognition. arXiv 2021, arXiv:2106.14373.

- [35] Lee, K.; He, L.; Lewis, M.; Zettlemoyer, L. End-to-end Neural Coreference Resolution. arXiv 2017, arXiv:1707.07045.

- [36] Goldberg, Y.; Levy, O. word2vec Explained: Deriving Mikolov et al.’s negative-sampling word-embedding method. arXiv 2014, arXiv:1402.3722.

| Emotion-cause pairs per document | Documents | Percentage |
|---|---|---|
| All | 1945 | 100% |
| 1 pair | 1746 | 89.77% |
| 2 pairs | 177 | 9.10% |
| ≥3 pairs | 22 | 1.13% |
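The percentages in the table above are each category's document count divided by the total of 1,945 documents. A short Python check, with the counts copied from the table, confirms that the three categories partition the corpus and reproduces the reported percentages:

```python
# Document counts by number of emotion-cause pairs, copied from the table above.
# The key ">=3 pairs" corresponds to the "≥3 pairs" row.
counts = {"1 pair": 1746, "2 pairs": 177, ">=3 pairs": 22}
total = 1945

# The three categories should cover every document exactly once.
assert sum(counts.values()) == total

percentages = {name: round(100 * n / total, 2) for name, n in counts.items()}
print(percentages)  # {'1 pair': 89.77, '2 pairs': 9.1, '>=3 pairs': 1.13}
```

Roughly nine in ten documents contain exactly one emotion-cause pair.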

| Models | Emotion P(%) | Emotion R(%) | Emotion F1(%) | Cause P(%) | Cause R(%) | Cause F1(%) | Pair P(%) | Pair R(%) | Pair F1(%) | Pair F1 change vs. ECPE-2D |
|---|---|---|---|---|---|---|---|---|---|---|
| Indep | 83.75 | 80.71 | 82.10 | 69.02 | 56.73 | 62.05 | 68.32 | 50.82 | 59.18 | −7.02% |
| Inter-CE | 84.94 | 81.22 | 83.00 | 68.09 | 56.34 | 61.51 | 69.02 | 51.35 | 59.01 | −7.29% |
| Inter-EC | 83.64 | 81.07 | 82.30 | 70.41 | 60.83 | 65.07 | 67.21 | 57.05 | 61.28 | −3.72% |
| E2EECPE | 85.95 | 79.15 | 82.38 | 70.62 | 60.30 | 65.03 | 64.78 | 61.05 | 62.80 | −1.34% |
| ECPE-2D | 84.63 | 81.95 | 83.19 | 72.17 | 62.66 | 67.01 | 71.31 | 57.86 | 63.65 | 0 |
| SAP-ECPE | 86.31 | 81.58 | 83.83 | 70.11 | 64.42 | 67.09 | 72.18 | 58.92 | 64.75 | +1.73% |
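The last column of the results table is consistent with the relative change in emotion-cause pair F1 with respect to ECPE-2D, that is, (F1_model − F1_ECPE-2D) / F1_ECPE-2D. The snippet below, using pair F1 values copied from the table and a hypothetical helper `relative_change`, reproduces every entry of that column:

```python
# Emotion-cause pair F1 (%) per model, copied from the results table.
pair_f1 = {
    "Indep": 59.18,
    "Inter-CE": 59.01,
    "Inter-EC": 61.28,
    "E2EECPE": 62.80,
    "ECPE-2D": 63.65,
    "SAP-ECPE": 64.75,
}


def relative_change(value: float, reference: float) -> float:
    """Relative change of value with respect to reference, in percent, rounded to 2 decimals."""
    return round(100 * (value - reference) / reference, 2)


reference = pair_f1["ECPE-2D"]
for model, f1 in pair_f1.items():
    print(f"{model:9s} {relative_change(f1, reference):+.2f}%")
```

Note that the +1.73% for SAP-ECPE is a relative gain; in absolute terms it corresponds to 1.10 F1 points over ECPE-2D.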

| Method | Trainable parameters | Parameter reduction vs. ECPE-2D(Inter-EC) |
|---|---|---|
| SAP-ECPE | 933,755 | 11.92% |
| ECPE-2D(Inter-EC) | 1,060,116 | 0 |
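The 11.92% figure matches the number of parameters saved divided by the ECPE-2D(Inter-EC) parameter count. A quick check with the counts from the table (the helper name `parameter_reduction` is hypothetical):

```python
# Trainable-parameter counts copied from the table above.
SAP_ECPE_PARAMS = 933_755
ECPE_2D_PARAMS = 1_060_116  # ECPE-2D (Inter-EC)


def parameter_reduction(ours: int, baseline: int) -> float:
    """Fraction of the baseline's trainable parameters that the smaller model saves."""
    return (baseline - ours) / baseline


saved = ECPE_2D_PARAMS - SAP_ECPE_PARAMS
print(f"{saved:,} fewer parameters ({parameter_reduction(SAP_ECPE_PARAMS, ECPE_2D_PARAMS):.2%})")
# 126,361 fewer parameters (11.92%)
```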

| Method | P(%) | R(%) | F1(%) | F1 change vs. full model |
|---|---|---|---|---|
| Ours w/o span representation | 72.43 | 57.25 | 63.87 | −1.36% |
| Ours w/o span association pairing | 67.37 | 60.25 | 63.54 | −1.87% |
| Ours | 72.18 | 58.92 | 64.75 | 0 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Huang, W.; Yang, Y.; Peng, Z.; Xiong, L.; Huang, X. Deep Neural Networks Based on Span Association Prediction for Emotion-Cause Pair Extraction. Sensors 2022, 22, 3637. https://doi.org/10.3390/s22103637
