Improving the Accuracy of Estimates of Indoor Distance Moved Using Deep Learning-Based Movement Status Recognition

Abstract

1. Introduction

2. Related Work

2.1. Distance Estimation

2.2. Movement Status Recognition

3. Methodology

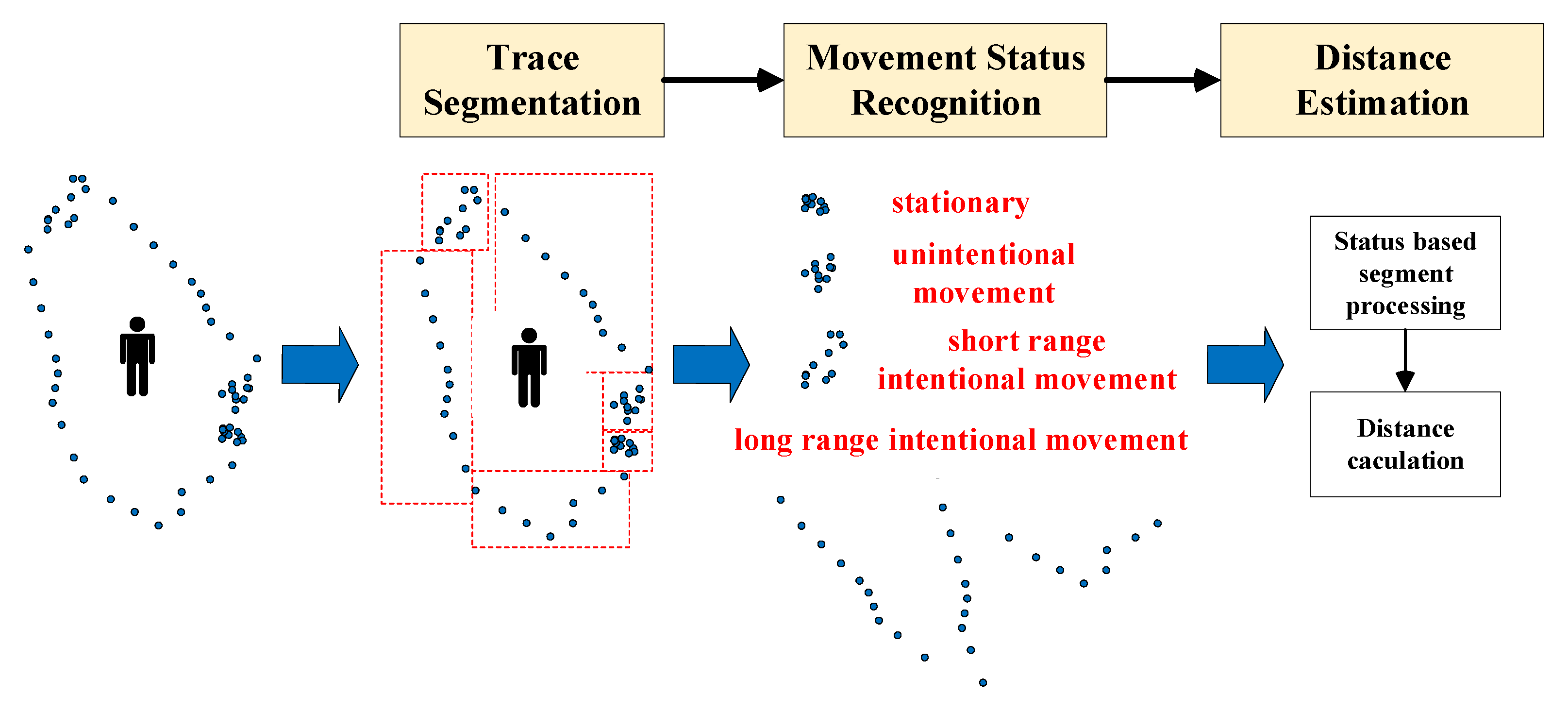

3.1. Overview

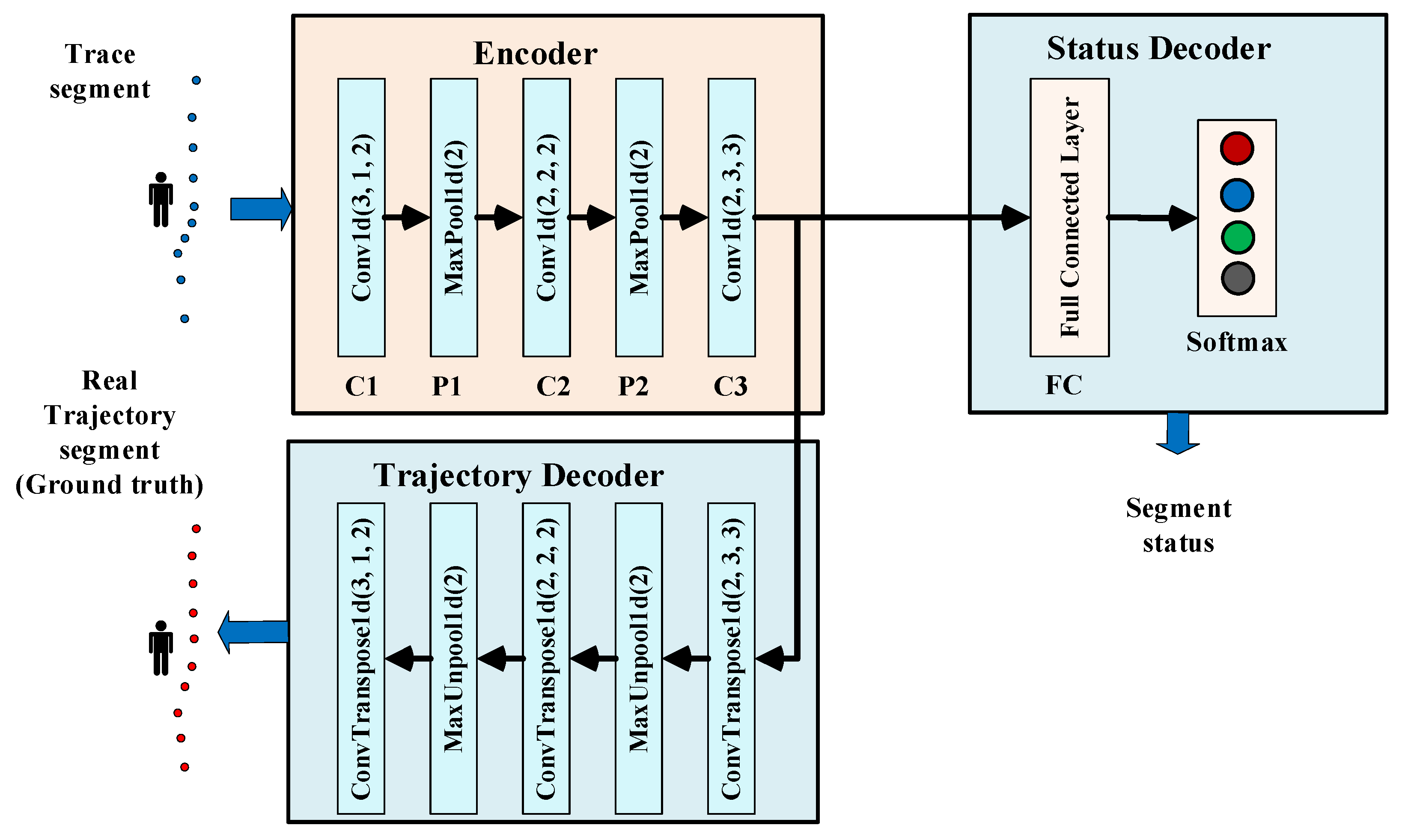

3.2. Deep Learning-Based Movement Status Recognition

3.3. Distance Estimation

4. Performance Evaluation

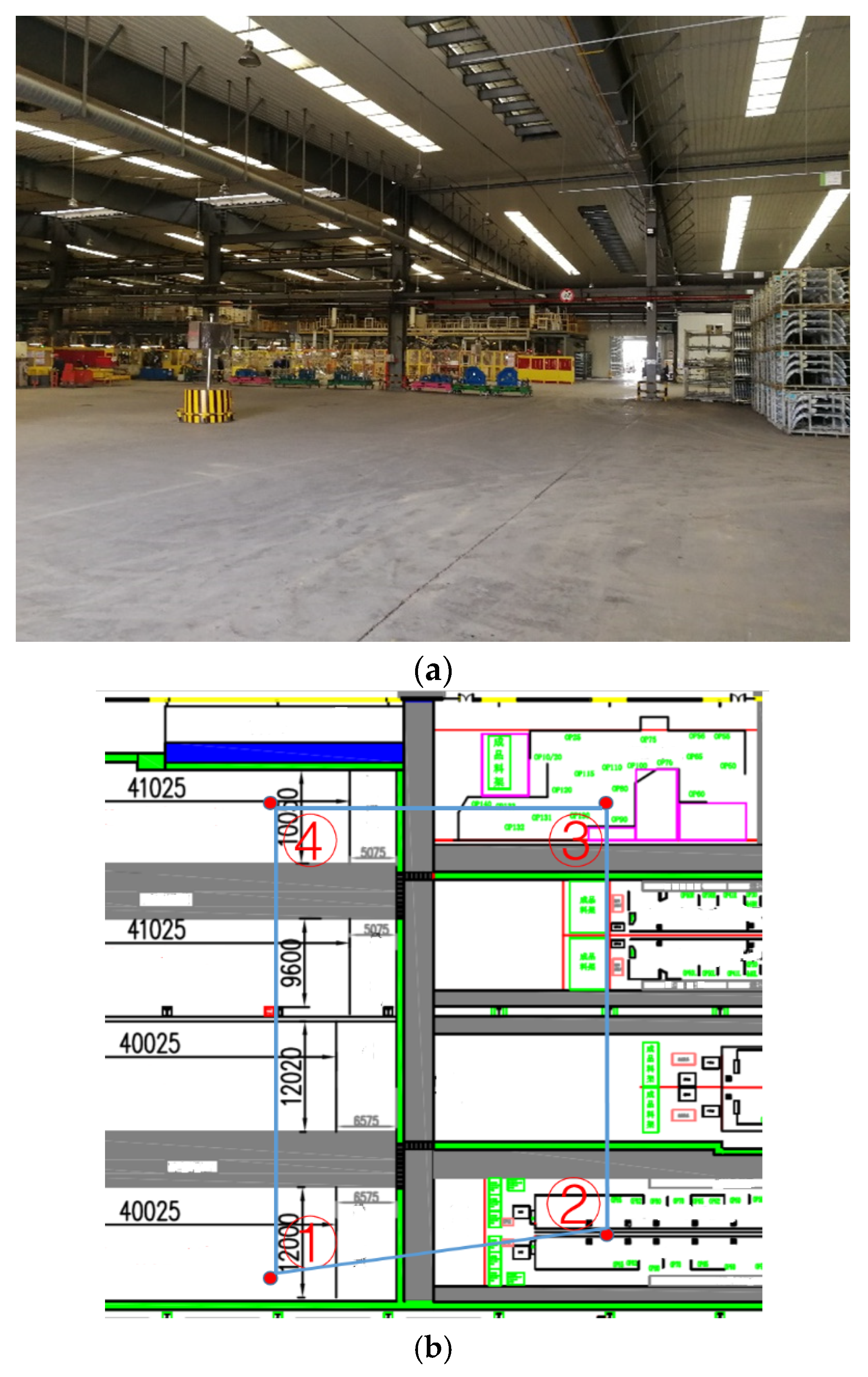

4.1. Setup

4.2. Movement Status Classification Performance

4.2.1. Metrics

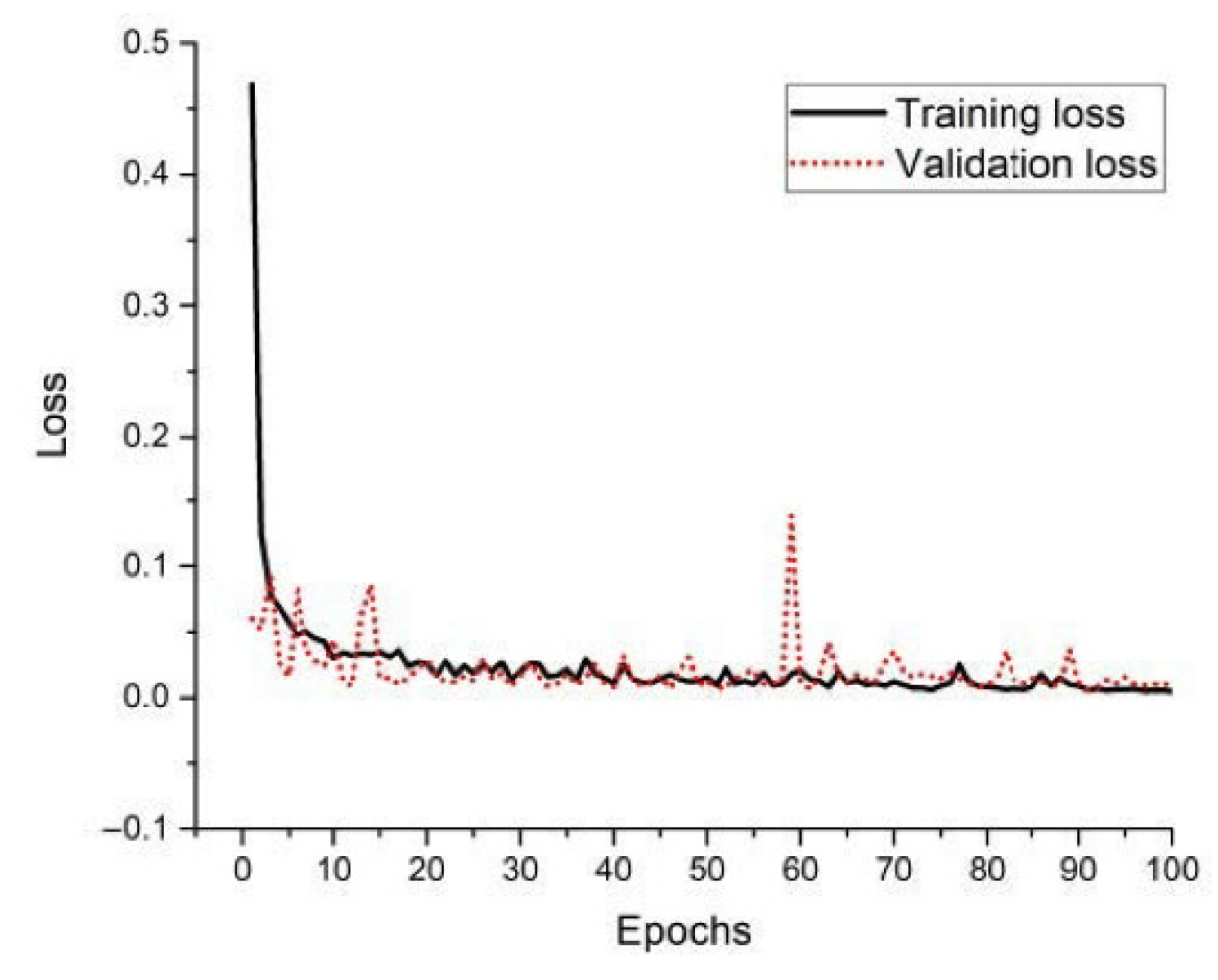

4.2.2. Classifier Implementation and Training

4.2.3. Comparison with Other Classifying Methods

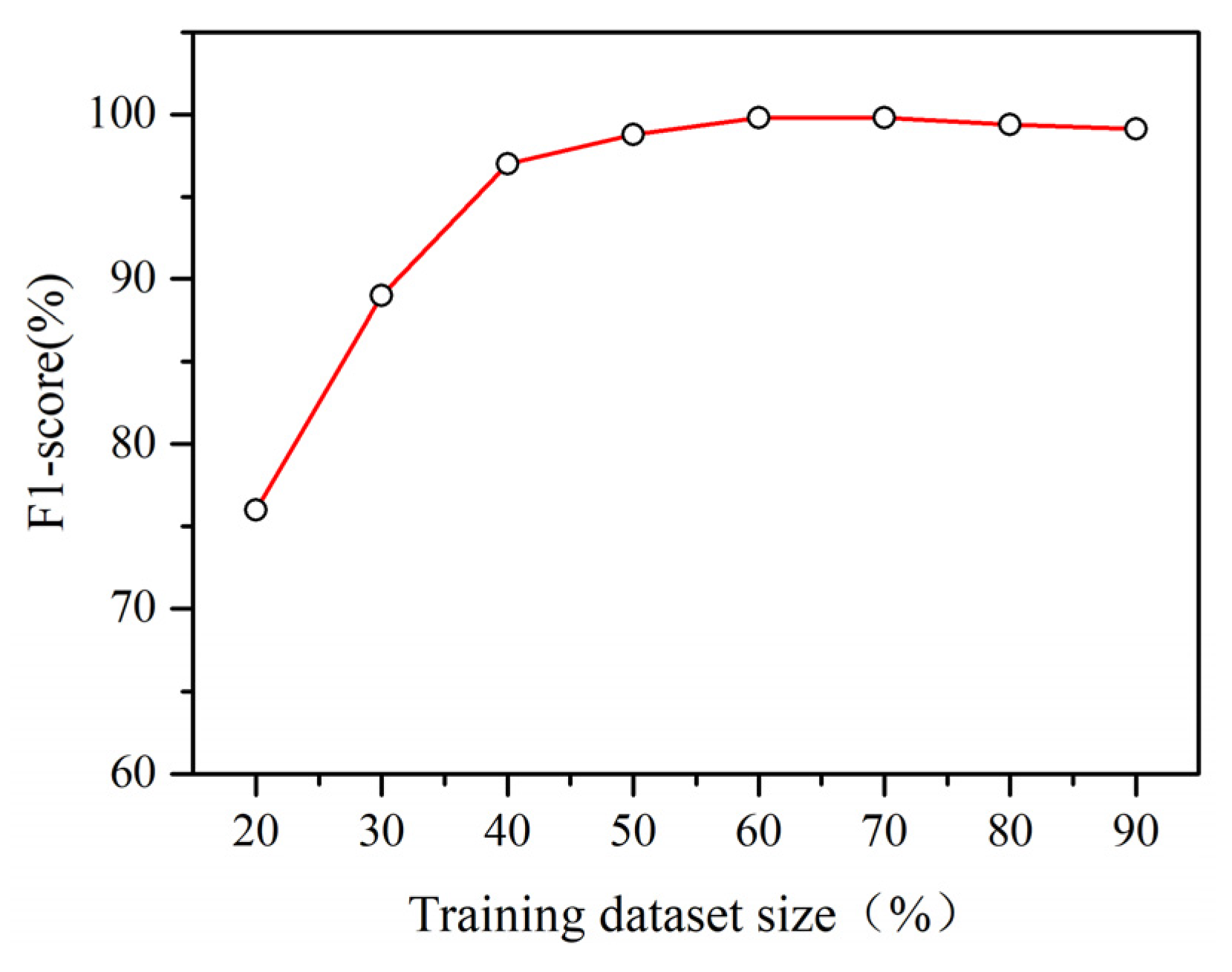

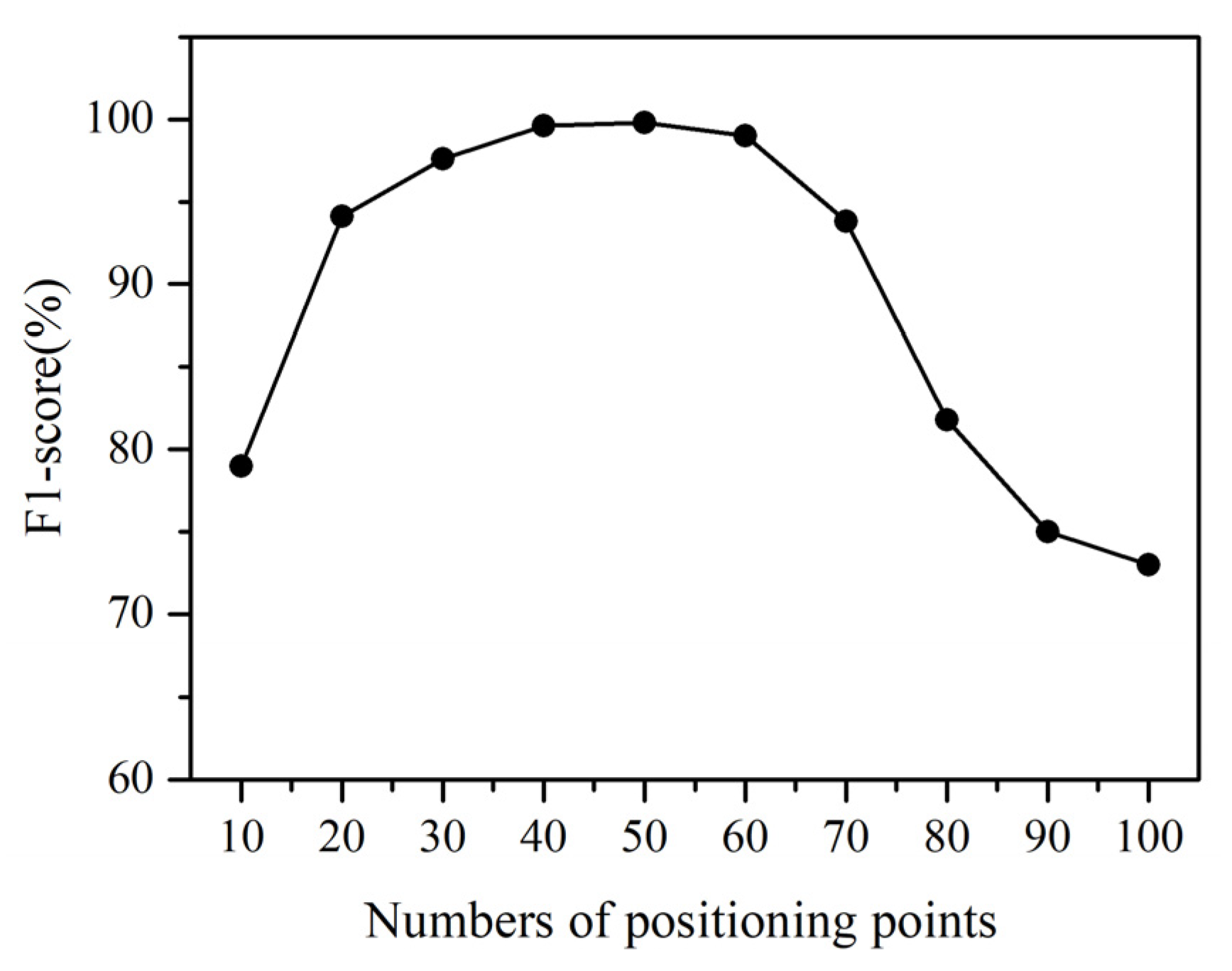

4.3. Impact of Trace Segment Size

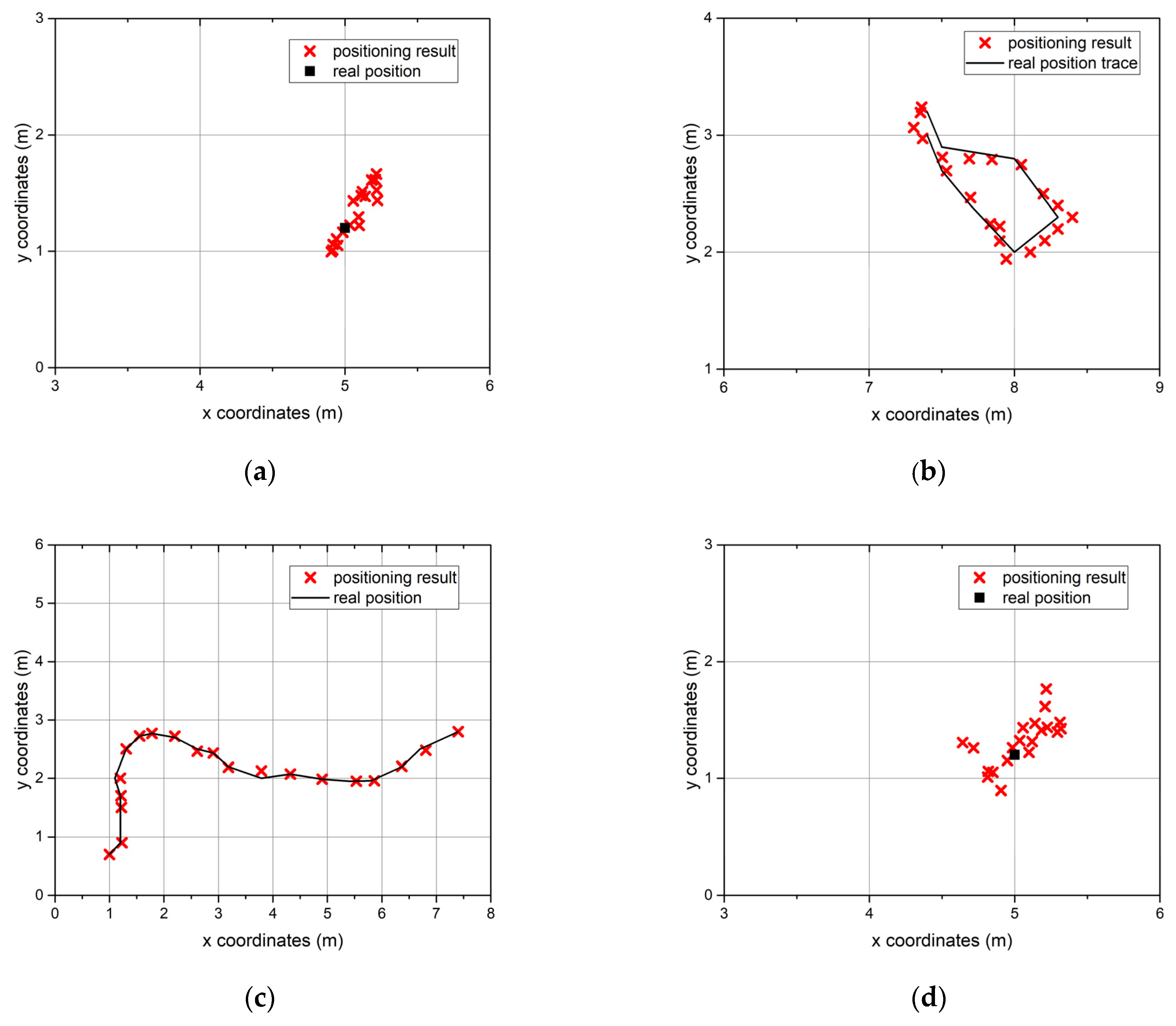

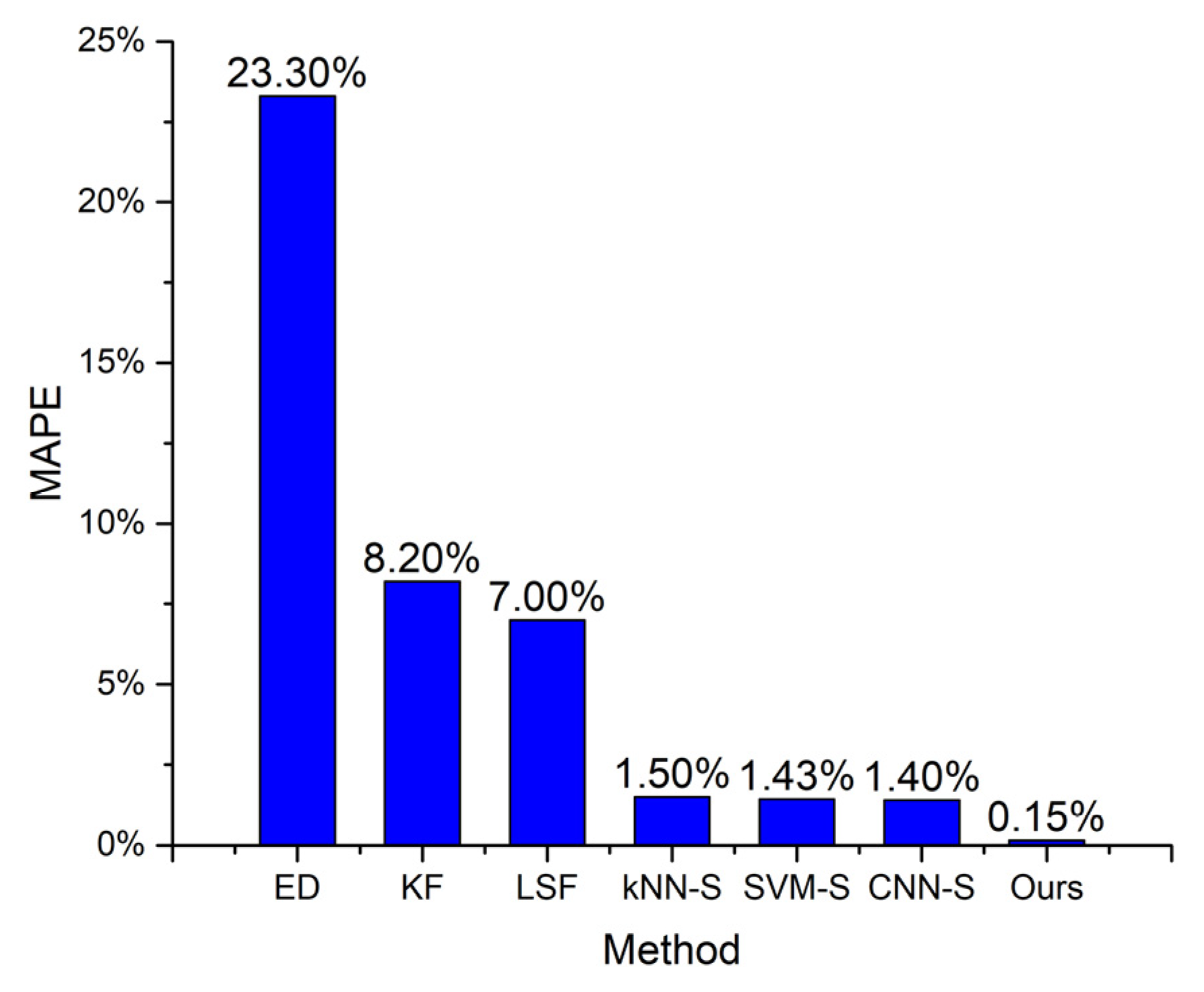

4.4. Distance Estimation Results

4.4.1. Metrics

4.4.2. Comparison with Other Distance Estimating Methods

- ED, which calculates the Euclidean distance between consecutive points of the raw trace and takes the sum of these distances as the estimated distance;

- KF, which processes the raw trace with a Kalman filter and takes the length of the processed trace as the estimated distance;

- LSF, which processes the raw trace with least-squares fitting and takes the length of the processed trace as the estimated distance;

- kNN-S, which divides the raw trace into segments, classifies the movement status of each segment with kNN, and applies status-based estimation to obtain the final distance;

- SVM-S, which divides the raw trace into segments, classifies the movement status of each segment with SVM, and applies status-based estimation to obtain the final distance;

- CNN-S, which divides the raw trace into segments, classifies the movement status of each segment with a CNN, and applies status-based estimation to obtain the final distance.
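The ED and KF baselines listed above can be sketched in Python as follows. This is a minimal illustration, not the authors' implementation: the constant-velocity motion model, filtering each axis independently, the noise parameters `q` and `r`, the sampling interval `dt`, and the fixed-length `split_into_segments` helper are all assumptions made for the example.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def euclidean_distance(trace: Sequence[Point]) -> float:
    """ED: sum of straight-line distances between consecutive points."""
    return sum(math.dist(p, q) for p, q in zip(trace, trace[1:]))


def kalman_smooth_1d(zs: Sequence[float], dt: float, q: float, r: float) -> List[float]:
    """Filter one coordinate with a constant-velocity Kalman filter (assumed model).

    State is [position, velocity]; q scales the process noise (white
    acceleration) and r is the position-measurement variance.
    """
    x, v = zs[0], 0.0            # start at the first fix with zero velocity
    p00, p01, p11 = r, 0.0, 1.0  # assumed initial covariance
    out = [x]
    for z in zs[1:]:
        # Predict: x <- F x, P <- F P F^T + Q with F = [[1, dt], [0, 1]].
        x += v * dt
        p00 += dt * (2 * p01 + dt * p11) + q * dt**3 / 3
        p01 += dt * p11 + q * dt**2 / 2
        p11 += q * dt
        # Update with the measured position z (H = [1, 0]).
        s = p00 + r
        k0, k1 = p00 / s, p01 / s
        innovation = z - x
        x += k0 * innovation
        v += k1 * innovation
        p00, p01, p11 = (1 - k0) * p00, (1 - k0) * p01, p11 - k1 * p01
        out.append(x)
    return out


def kalman_distance(trace: Sequence[Point], dt: float = 1.0,
                    q: float = 0.1, r: float = 1.0) -> float:
    """KF: filter each axis independently, then measure the smoothed trace."""
    xs = kalman_smooth_1d([p[0] for p in trace], dt, q, r)
    ys = kalman_smooth_1d([p[1] for p in trace], dt, q, r)
    return euclidean_distance(list(zip(xs, ys)))


def split_into_segments(trace: Sequence[Point], size: int) -> List[Sequence[Point]]:
    """Hypothetical fixed-length, non-overlapping segmentation (the paper's scheme may differ)."""
    return [trace[i:i + size] for i in range(0, len(trace), size)]


if __name__ == "__main__":
    # A person standing still: positioning fixes jitter between x = 0 and x = 1.
    jitter = [(float(i % 2), 0.0) for i in range(50)]
    print("ED:", euclidean_distance(jitter))
    print("KF:", round(kalman_distance(jitter), 2))
    print("segments:", len(split_into_segments(jitter, 10)))
```

On a trace from a stationary person, ED accumulates the positioning jitter at every step, while the Kalman filter damps it, so KF reports a shorter distance for the same trace. Neither baseline knows whether the person is actually moving, which is the gap the segment-based (-S) methods address by classifying movement status first.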

5. Conclusions and Future Directions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Method | Precision | Recall | F1-Score |
|---|---|---|---|
| kNN | 83.53% | 82.69% | 83.11% |
| SVM | 86.13% | 85.75% | 85.94% |
| CNN | 88.05% | 87.76% | 87.90% |
| Our classifier | 97.81% | 97.78% | 97.79% |
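Assuming F1 is the harmonic mean of precision and recall, F1 = 2PR/(P + R), each F1-Score in the table can be recovered from the Precision and Recall columns to two decimal places. The short check below recomputes them; the `f1` helper and the `reported` dictionary are illustrative names, not taken from the paper.

```python
def f1(precision: float, recall: float) -> float:
    """F1 as the harmonic mean of precision and recall (same units as the inputs)."""
    return 2 * precision * recall / (precision + recall)


# (precision, recall, reported F1) in percent, copied from the table above.
reported = {
    "kNN": (83.53, 82.69, 83.11),
    "SVM": (86.13, 85.75, 85.94),
    "CNN": (88.05, 87.76, 87.90),
    "Our classifier": (97.81, 97.78, 97.79),
}

for name, (p, r, f) in reported.items():
    print(f"{name}: recomputed F1 = {f1(p, r):.2f}%, reported F1 = {f:.2f}%")
```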

| Method | Training Time | Average Classification Time per Segment |
|---|---|---|
| kNN | / | 1.32 s |
| SVM | 6.3 s | 0.83 s |
| CNN | 451 s | 0.0079 s |
| Our classifier | 876 s | 0.0079 s |
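The per-segment times imply that the CNN and the proposed classifier classify a segment roughly 167 times faster than kNN (1.32 s / 0.0079 s) and roughly 105 times faster than SVM (0.83 s / 0.0079 s), while the proposed classifier needs about twice the CNN's training time (876 s vs. 451 s). A minimal Python sketch of how such a per-segment average might be measured is given below; the measurement protocol is not described in this section, and `average_time_per_segment` is a hypothetical helper.

```python
import time
from typing import Callable, Sequence, TypeVar

Segment = TypeVar("Segment")


def average_time_per_segment(classify: Callable[[Segment], object],
                             segments: Sequence[Segment]) -> float:
    """Wall-clock seconds per call of `classify`, averaged over all segments."""
    if not segments:
        raise ValueError("need at least one segment")
    start = time.perf_counter()
    for segment in segments:
        classify(segment)
    return (time.perf_counter() - start) / len(segments)


# Per-segment classification times (seconds) copied from the table above.
per_segment = {"kNN": 1.32, "SVM": 0.83, "CNN": 0.0079, "Our classifier": 0.0079}
for name, t in per_segment.items():
    ratio = t / per_segment["Our classifier"]
    print(f"{name}: {ratio:.1f}x the proposed classifier's time per segment")
```

Timing a whole batch and dividing by its size, as here, averages out timer resolution; for a fair comparison each model would also need to be loaded and warmed up before the clock starts.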

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ma, Z.; Zhang, W.; Shi, K. Improving the Accuracy of Estimates of Indoor Distance Moved Using Deep Learning-Based Movement Status Recognition. Sensors 2022, 22, 346. https://doi.org/10.3390/s22010346
