A Novel Method of Aircraft Detection under Complex Background Based on Circular Intensity Filter and Rotation Invariant Feature

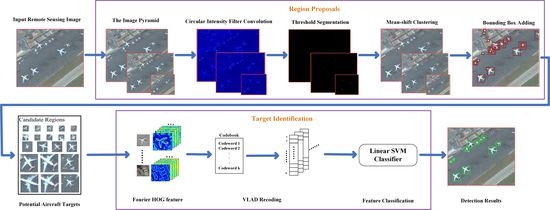

Abstract

1. Introduction

2. Aircraft Target Center Determination Based on Circular Intensity Filtering

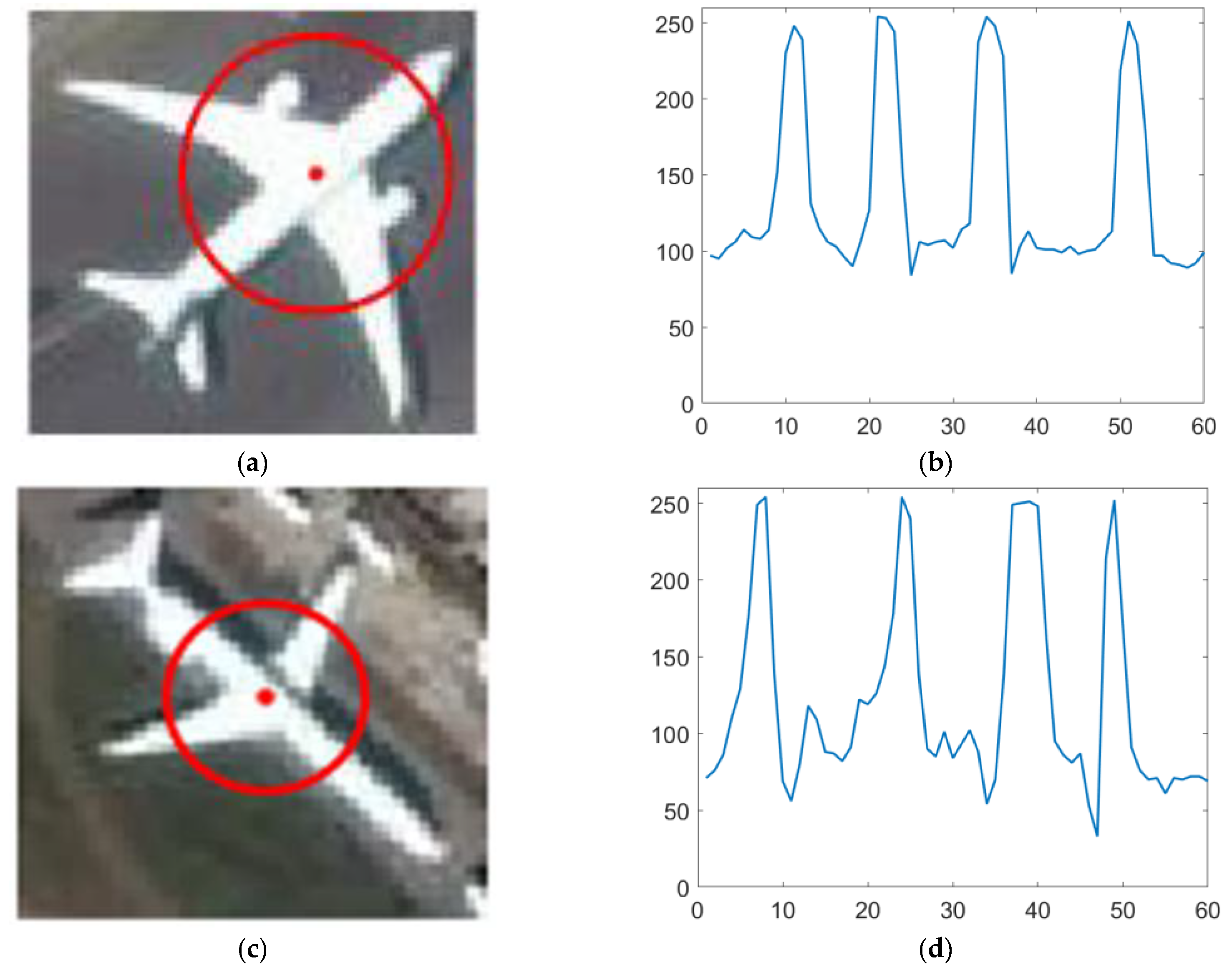

2.1. Circular Intensity Filter

2.2. Centroid Clustering

2.3. Multi-Scale RSIs Pyramid

3. Rotation-Invariant Feature Based on Fourier HOG Feature and VLAD

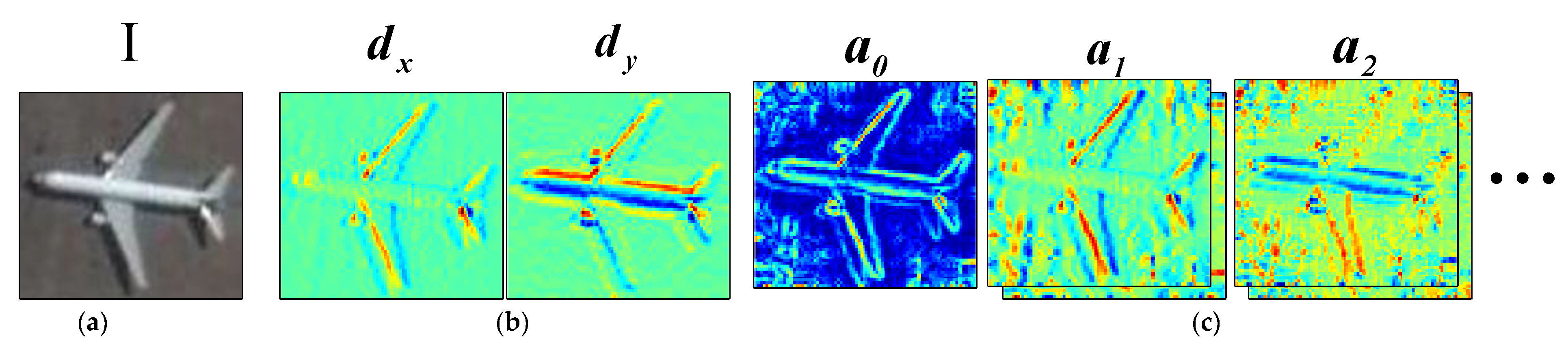

3.1. Fourier HOG

3.2. VLAD Representation

4. Experiments

4.1. Dataset and Evaluation Criteria

4.2. Parameter Settings and Comparison Experiments for Region Proposals

4.2.1. The Size of Bounding Boxes

4.2.2. Thresholds Determination in the Segmentation

4.2.3. The Comparative Experiments of Region Proposal Method

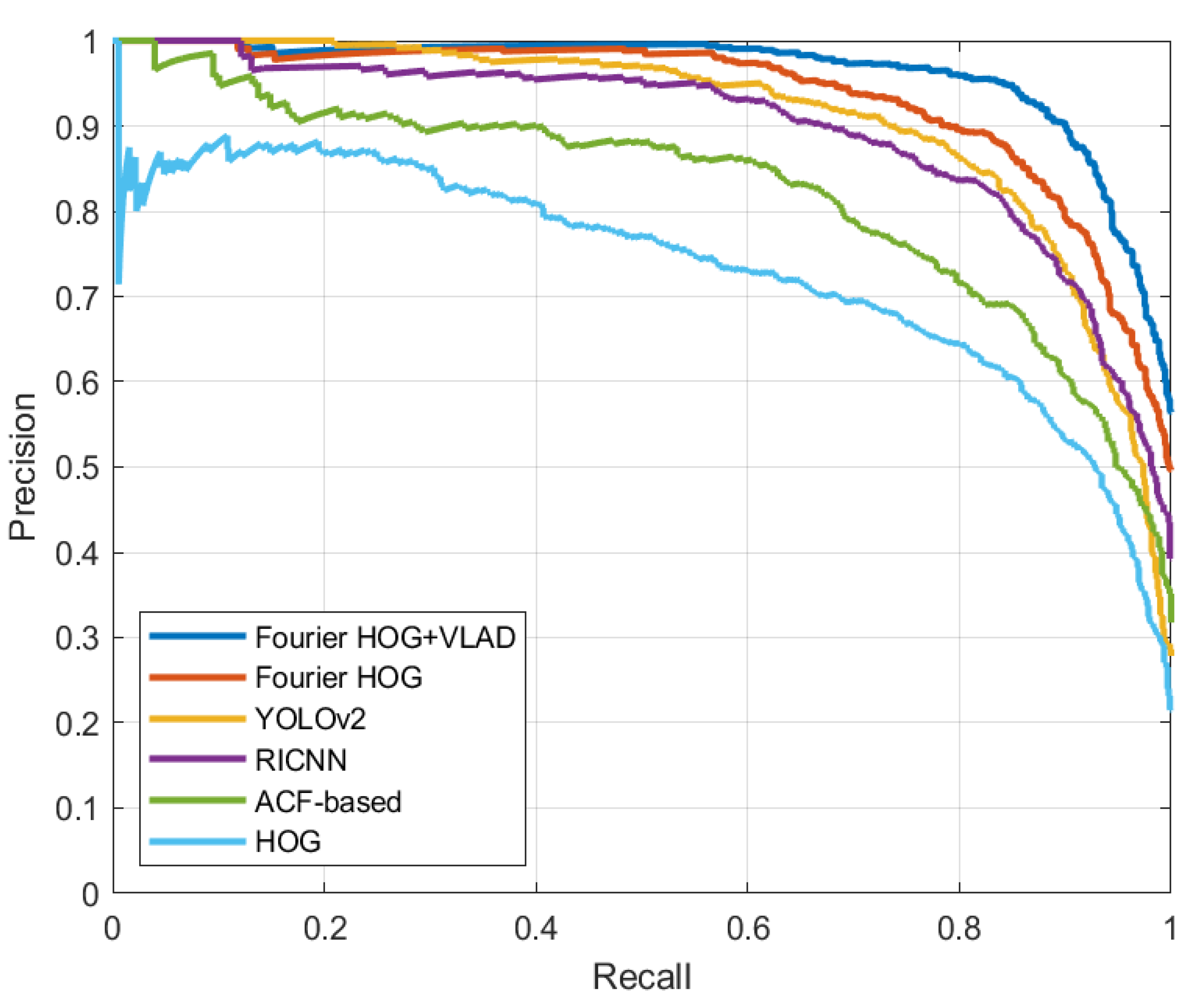

4.3. Comparison of Overall Detection Performances

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Combinations of thresholds (t0, t1, t2) | (0.2, 0.2, 0.4) | (0.3, 0.3, 0.4) | (0.3, 0.3, 0.5) | (0.35, 0.35, 0.5) | (0.4, 0.4, 0.4) | (0.4, 0.4, 0.5) |
|---|---|---|---|---|---|---|
| Number of candidate regions per image | 235 | 181 | 163 | 127 | 78 | 73 |
| Recall | 0.984 | 0.976 | 0.976 | 0.935 | 0.911 | 0.91 |

| Method | Number of Candidate Regions per Image | Recall | Time (s) per Image |
|---|---|---|---|
| EdgeBoxes | 6109 | 0.928 | 0.426 |
| Selective Search | 3892 | 0.941 | 11.446 |
| Proposed | 155 | 0.953 | 0.513 |
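As a quick arithmetic check on the region proposal comparison above, the following sketch computes how many times fewer candidate regions the proposed method produces than each baseline, along with the difference in recall. The numbers are copied from the table; the dictionary layout and the helper name `compare_to_proposed` are illustrative assumptions and do not come from the original work.

```python
# Values copied from the region proposal comparison table:
# method -> (candidate regions per image, recall, time in seconds per image)
proposals = {
    "EdgeBoxes": (6109, 0.928, 0.426),
    "Selective Search": (3892, 0.941, 11.446),
    "Proposed": (155, 0.953, 0.513),
}

ref_regions, ref_recall, _ = proposals["Proposed"]


def compare_to_proposed(name):
    """Return (region reduction factor, recall gain) of the proposed method over `name`."""
    regions, recall, _ = proposals[name]
    return regions / ref_regions, ref_recall - recall


for name in ("EdgeBoxes", "Selective Search"):
    factor, gain = compare_to_proposed(name)
    print(f"{name}: {factor:.1f}x fewer regions, recall +{gain:.3f}")
```

On these figures the proposed method yields roughly 39 times fewer candidates than EdgeBoxes and roughly 25 times fewer than Selective Search, at slightly higher recall. The table also shows that EdgeBoxes remains marginally faster per image (0.426 s against 0.513 s).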

| Method | HOG | ACF-Based | RICNN | YOLOv2 | Fourier HOG | Proposed Method |
|---|---|---|---|---|---|---|
| AP | 0.697 | 0.808 | 0.874 | 0.881 | 0.905 | 0.934 |
| Mean Time (s) per Image | 0.72 | 2.23 | 8.84 | 0.15 | 2.37 | 1.31 |
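To read the accuracy and speed rows of the overall comparison together, a minimal sketch such as the one below ranks the methods by AP and by mean time per image. The values are copied from the table; the variable names and the choice to report the AP margin over the runner-up are assumptions made only for illustration.

```python
# Values copied from the overall detection comparison table:
# method -> (average precision, mean time in seconds per image)
results = {
    "HOG": (0.697, 0.72),
    "ACF-Based": (0.808, 2.23),
    "RICNN": (0.874, 8.84),
    "YOLOv2": (0.881, 0.15),
    "Fourier HOG": (0.905, 2.37),
    "Proposed Method": (0.934, 1.31),
}

# Rank by AP (highest first) and by mean time per image (fastest first).
by_ap = sorted(results, key=lambda m: results[m][0], reverse=True)
by_time = sorted(results, key=lambda m: results[m][1])

# AP margin between the best method and the runner-up.
best, runner_up = by_ap[0], by_ap[1]
ap_margin = results[best][0] - results[runner_up][0]

print("AP ranking:", by_ap)
print("Speed ranking:", by_time)
print(f"{best} leads {runner_up} by {ap_margin:.3f} AP")
```

With these numbers the proposed method has the highest AP, 0.029 ahead of Fourier HOG, and is the third fastest after YOLOv2 and HOG.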

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Chen, X.; Liu, J.; Xu, F.; Xie, Z.; Zuo, Y.; Cao, L. A Novel Method of Aircraft Detection under Complex Background Based on Circular Intensity Filter and Rotation Invariant Feature. Sensors 2022, 22, 319. https://doi.org/10.3390/s22010319
