5.1. Facial Expression Recognition

As discussed above, we used AffectNet [

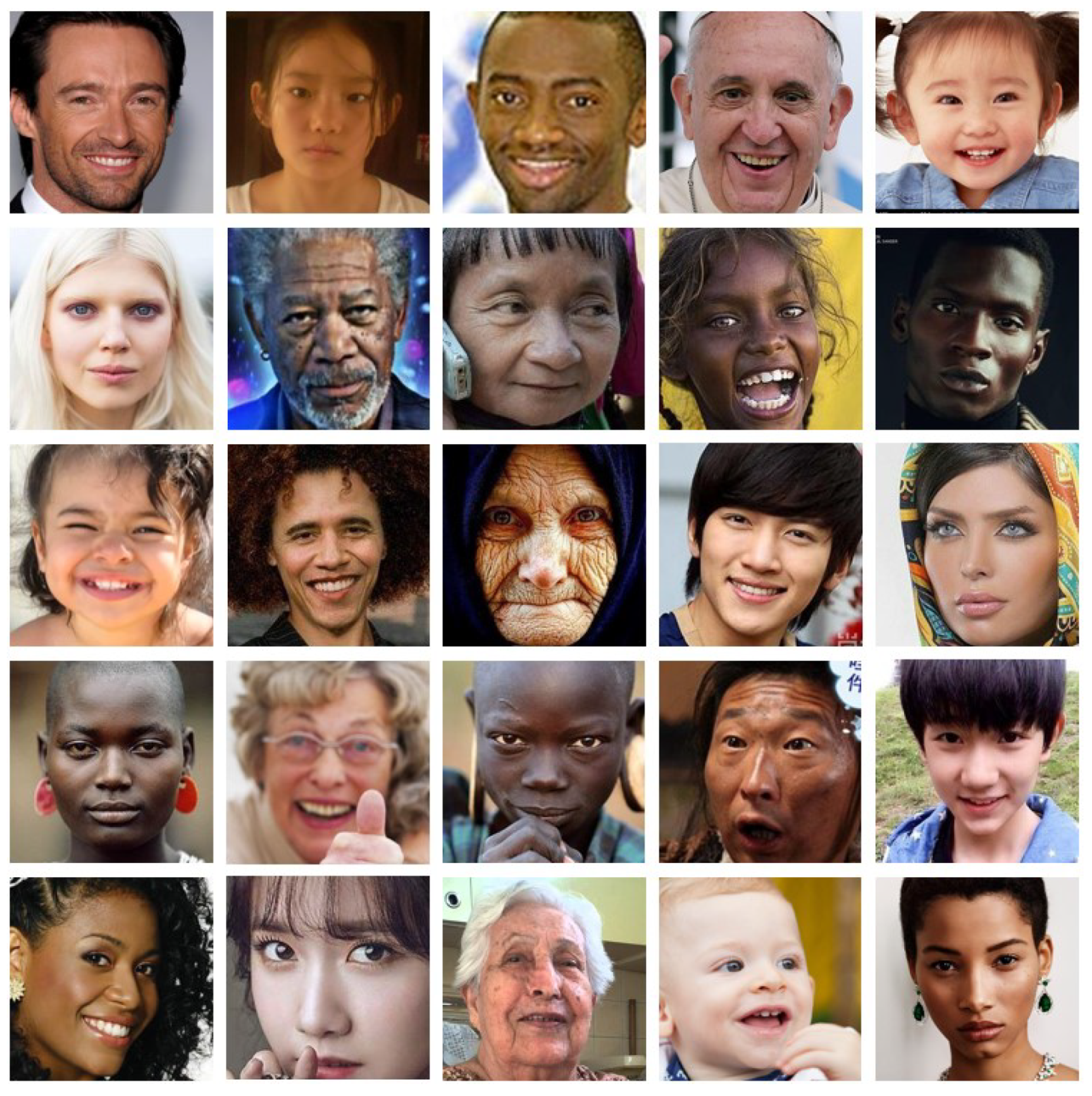

33], one of the largest databases for facial expressions, to train and evaluate our FER models. It contains approximately 1M facial images collected in the wild and annotation information for the FER. AffectNet contains a significant number of emotions on faces of people of different races, ages and gender.

Figure 5 shows sample images from AffectNet. Moreover, the main reason we used AffectNet is that it contains manually annotated intensities of valence and arousal. We used only the manually annotated images, comprising 320,739 training samples and 4500 validation samples, and excluded uncertain images. Unfortunately, the AffectNet test samples have not yet been released. Hence, we compare our validation set results with those of the AffectNet baseline methods in Section 6.1.

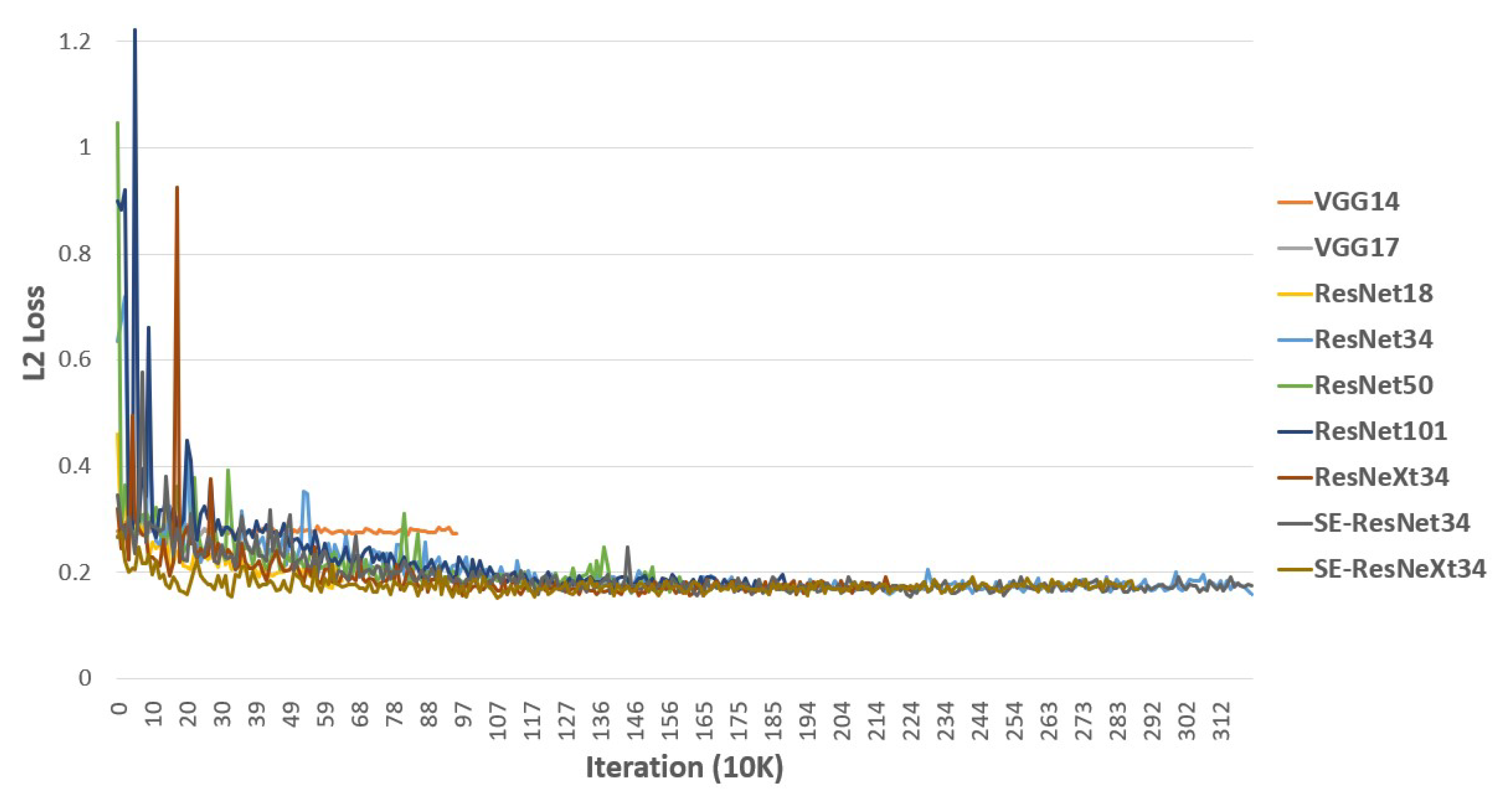

We proposed various FER models for a comparative experiment. Every proposed FER model, evaluated in this study, consists of input shape

, several CNN layers and one fully connected layer as the trainable layers. Because we used RGB images, we set the depth of the input image to 3; however, the depth of the input image can be changed to 1 when using the binary images from the NIR camera to secure more robustness against changes in illuminance, as proposed by Gao et al. [

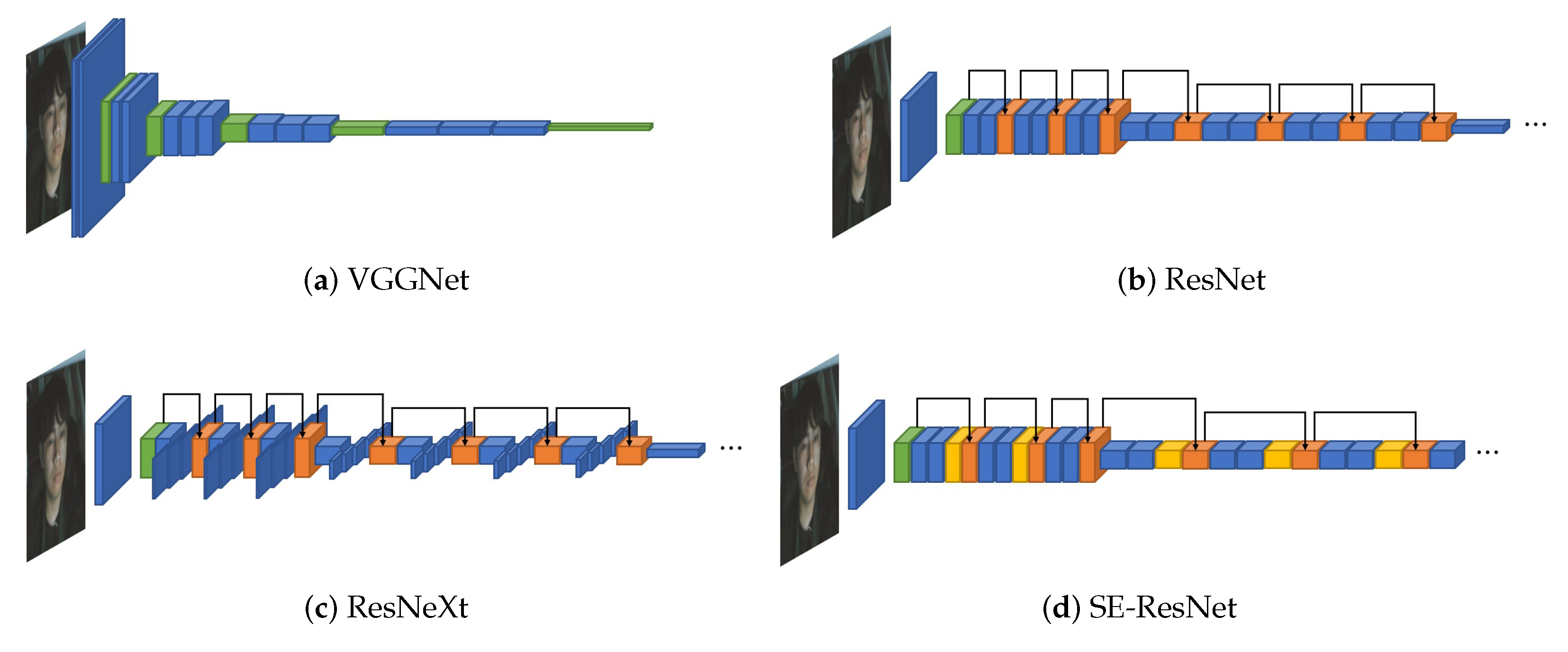

5]. In the following, we distinguished the models by their base architectures and number of trainable layers. To validate the effectiveness according to the model’s depth, we designed the models with different depths using VGGNet [

28] and ResNet [

29]. To validate the performance of the parallelization and the channel-wise attention of CNN layers, we applied ResNeXt [

30] and SE block [

31] to our FER models. All of our FER models are described as follows, and detailed configurations of each structure are outlined in

Table 2, one per column.

VGG14 is based on the VGGNet architecture and consists of 13 CNN layers. The last three fully connected layers are replaced with one fully connected layer with only two units, which represent valence and arousal, respectively. The model has 14 trainable layers; thus, it is called VGG14.

VGG17 is based on VGGNet and adds three more CNN layers to VGG14. It consists of 16 CNN layers and 1 fully connected layer.

ResNet18 is based on ResNet and has 18 trainable layers (17 CNN layers and 1 fully connected layer). Compared with VGG17, it has only one more CNN layer; however, ResNet18 has a shortcut connection for every two CNN layers, except the first CNN layer. The layers between shortcut connections are enclosed in curly brackets in Table 2.

ResNet34 is based on ResNet and has 34 trainable layers (33 CNN layers and 1 fully connected layer). It also has a shortcut connection for every two CNN layers, except the first CNN layer. The layers between shortcut connections are enclosed in curly brackets in Table 2.

ResNet50 is based on ResNet and has 50 trainable layers (49 CNN layers and 1 fully connected layer). It has a shortcut connection for every three CNN layers, except the first CNN layer. The layers between shortcut connections are enclosed in curly brackets in Table 2.

ResNet101 is based on ResNet and has 101 trainable layers (100 CNN layers and 1 fully connected layer). It also has a shortcut connection for every three CNN layers, except the first CNN layer. The layers between shortcut connections are enclosed in curly brackets in Table 2.
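The layer counts quoted for the ResNet variants can be checked with a short calculation. The sketch below assumes the per-stage block counts of the standard ResNet layouts (an assumption here; Table 2 gives the configurations actually used) and counts the first CNN layer, the CNN layers inside each shortcut block and the final fully connected layer. The names `STAGE_BLOCKS` and `trainable_layers` are illustrative only.

```python
# Per-stage block counts and CNN layers per shortcut block.
# Assumed to follow the standard ResNet layouts; Table 2 is authoritative.
STAGE_BLOCKS = {
    "ResNet18": ([2, 2, 2, 2], 2),
    "ResNet34": ([3, 4, 6, 3], 2),
    "ResNet50": ([3, 4, 6, 3], 3),
    "ResNet101": ([3, 4, 23, 3], 3),
}


def trainable_layers(name: str) -> tuple[int, int]:
    """Return (CNN layers, fully connected layers) for a ResNet variant."""
    blocks, convs_per_block = STAGE_BLOCKS[name]
    # The leading 1 is the first CNN layer, which sits outside the shortcuts.
    cnn_layers = 1 + convs_per_block * sum(blocks)
    return cnn_layers, 1


for name in STAGE_BLOCKS:
    cnn, fc = trainable_layers(name)
    print(f"{name}: {cnn} CNN + {fc} FC = {cnn + fc} trainable layers")
```

Under these assumptions the totals come out to 18, 34, 50 and 101, matching the model names.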

ResNeXt34 is based on ResNeXt and is composed of 34 trainable layers (33 CNN layers and 1 fully connected layer). The cardinality is set to 32: the last CNN layer between shortcut connections is split into 32 groups along the channel dimension. In Table 2, the shortcut connections are represented as curly brackets, and the splitting operation is applied at the last layer inside each pair of curly brackets.
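To illustrate what splitting a CNN layer into 32 groups along the channel dimension means for its size, the sketch below counts the weights of a 3 × 3 CNN layer with and without grouping. The channel width of 128 is a hypothetical value chosen for illustration, not a figure from Table 2, and biases are ignored.

```python
def conv_weights(in_ch: int, out_ch: int, kernel: int, groups: int = 1) -> int:
    """Weight count of a 2D CNN layer whose channels are split into `groups` paths."""
    if in_ch % groups or out_ch % groups:
        raise ValueError("channel counts must be divisible by the number of groups")
    # Each group maps in_ch / groups input channels to out_ch / groups output channels.
    return groups * (in_ch // groups) * (out_ch // groups) * kernel * kernel


CARDINALITY = 32  # cardinality stated in the text
WIDTH = 128       # hypothetical channel width, for illustration only

dense = conv_weights(WIDTH, WIDTH, 3)
grouped = conv_weights(WIDTH, WIDTH, 3, groups=CARDINALITY)
print(dense, grouped, dense // grouped)
```

For equal input and output widths, the grouped layer needs fewer weights by a factor equal to the cardinality.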

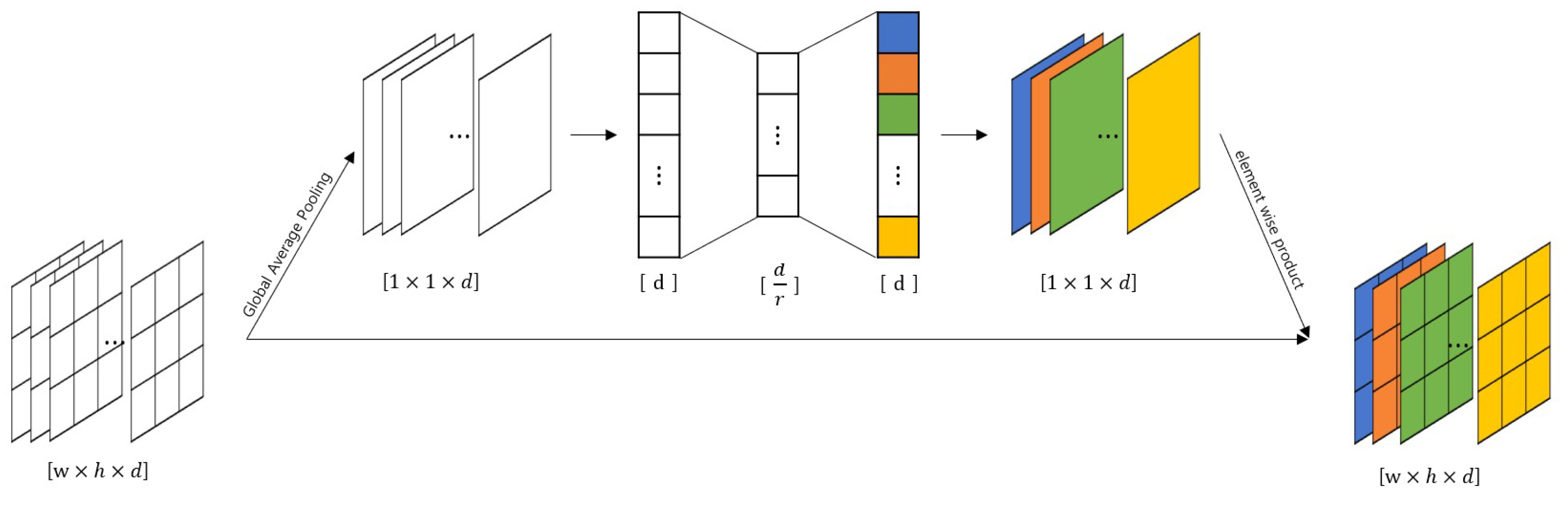

SE-ResNet34 applies the SE block to ResNet34. The SE blocks are positioned between the last CNN layer of each shortcut connection and the merge point of that shortcut connection, as shown in Table 2. The detailed structure is shown in Figure 3, and the reduction ratio r is set to 4.

SE-ResNeXt34 applies the SE block to ResNeXt34. The SE blocks are positioned between the last CNN layer of each shortcut connection and the merge point of that shortcut connection, as shown in Table 2. The structure is the same as the SE block of SE-ResNet34, and the reduction ratio r is also set to 4.
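The channel-wise attention performed by an SE block can be sketched in a few lines. This is a minimal illustration rather than the configuration in Figure 3: it uses plain Python lists, omits biases, and the channel count (8) and constant weights are placeholders. Only the reduction ratio r = 4 is taken from the text.

```python
import math


def se_block(feature_maps, w_reduce, w_expand):
    """Rescale each channel of `feature_maps` (channels x height x width lists)."""
    # Squeeze: global average pooling gives one descriptor per channel.
    squeezed = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in feature_maps]
    # Excitation, step 1: reduce C channels to C / r units, then apply ReLU.
    hidden = [max(0.0, sum(w * s for w, s in zip(row, squeezed))) for row in w_reduce]
    # Excitation, step 2: expand back to C units, then apply a sigmoid gate in (0, 1).
    gates = [1.0 / (1.0 + math.exp(-sum(w * h for w, h in zip(row, hidden))))
             for row in w_expand]
    # Scale: multiply every value of a channel by that channel's gate.
    return [[[g * v for v in row] for row in ch] for g, ch in zip(gates, feature_maps)]


C, R = 8, 4  # 8 channels is a toy value; r = 4 is the reduction ratio from the text
x = [[[float(c + 1)] * 2 for _ in range(2)] for c in range(C)]  # 8 channels of 2 x 2
w_reduce = [[0.1] * C for _ in range(C // R)]    # (C / r) x C placeholder weights
w_expand = [[0.1] * (C // R) for _ in range(C)]  # C x (C / r) placeholder weights
y = se_block(x, w_reduce, w_expand)
```

With r = 4, the bottleneck between the two fully connected steps has a quarter as many units as there are channels, which keeps the added parameter count small.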

We trained our FER models on AffectNet [33] to minimize the distance between the predicted ($\hat{v}$, $\hat{a}$) and true ($v$, $a$) values of valence and arousal. The L2 loss function measures this distance as follows:

$$L_2 = \frac{1}{n}\sum_{i=1}^{n}\left[(\hat{v}_i - v_i)^2 + (\hat{a}_i - a_i)^2\right]$$

where $n$ is the number of training samples, $\hat{v}_i$ is the predicted valence value of the $i$th training sample, $v_i$ is the true valence value of the $i$th training sample, $\hat{a}_i$ is the predicted arousal value of the $i$th training sample, and $a_i$ is the true arousal value of the $i$th training sample. We used the Adam algorithm [63], a popular optimizer, to optimize the model parameters. We set the learning rate to 0.001 and the exponential decay rates for the first and second moment estimates to 0.9 and 0.999, respectively. We trained for over 10 epochs (over 3,207,390 iterations), and training was terminated when the loss on the validation set became stable. To compare our models, we used the root mean squared error (RMSE) on the validation set:

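$$\mathrm{RMSE} = \sqrt{\frac{1}{m}\sum_{i=1}^{m}(\hat{y}_i - y_i)^2}$$

where $m$ is the number of validation samples, $\hat{y}_i$ is the predicted value of the $i$th validation sample and $y_i$ is the true value of the $i$th validation sample. The RMSE values of valence and arousal are compared separately.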
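The training objective, the evaluation metric and the optimizer update described above can be sketched as follows. This is an illustrative sketch, not the authors' training code: the averaging over n in `l2_loss`, the value of `eps` and the scalar-parameter form of `adam_step` are assumptions, and the sample values are made up. The learning rate and decay rates are the ones stated in the text.

```python
import math


def l2_loss(pred_v, true_v, pred_a, true_a):
    """Mean squared distance between predicted and true (valence, arousal) pairs."""
    n = len(true_v)
    return sum((pv - tv) ** 2 + (pa - ta) ** 2
               for pv, tv, pa, ta in zip(pred_v, true_v, pred_a, true_a)) / n


def rmse(pred, true):
    """Root mean squared error, computed separately for valence or arousal."""
    return math.sqrt(sum((p - t) ** 2 for p, t in zip(pred, true)) / len(true))


def adam_step(param, grad, m, v, t, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update of a scalar parameter; returns (param, m, v)."""
    m = beta1 * m + (1 - beta1) * grad       # first moment estimate
    v = beta2 * v + (1 - beta2) * grad ** 2  # second moment estimate
    m_hat = m / (1 - beta1 ** t)             # bias correction; t starts at 1
    v_hat = v / (1 - beta2 ** t)
    return param - lr * m_hat / (math.sqrt(v_hat) + eps), m, v


# Made-up predictions and labels for two samples.
pred_v, true_v = [0.5, -0.2], [0.3, 0.0]
pred_a, true_a = [0.1, 0.4], [0.1, 0.0]
print(l2_loss(pred_v, true_v, pred_a, true_a))
print(rmse(pred_v, true_v), rmse(pred_a, true_a))

# A single update from param = 1.0 with gradient 2.0.
new_param, m, v = adam_step(1.0, 2.0, m=0.0, v=0.0, t=1)
```

Because of the bias correction, the first Adam step moves the parameter by roughly the learning rate regardless of the gradient's magnitude.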

5.2. Sensor Fusion Emotion Recognition

Thirteen volunteers (six men and seven women) participated in this study, five times per participant. Eight emotions had to be induced in every participant; hence, it was impossible to conduct all experiments in a single day. We therefore grouped the emotions into pairs of similar emotions and induced one pair per session (2 emotions/session × 4 sessions = 8 emotions). One session was conducted as a pretest. All experiments were conducted after obtaining approval from Kookmin University’s IRB (KMU-202005-HR-235).

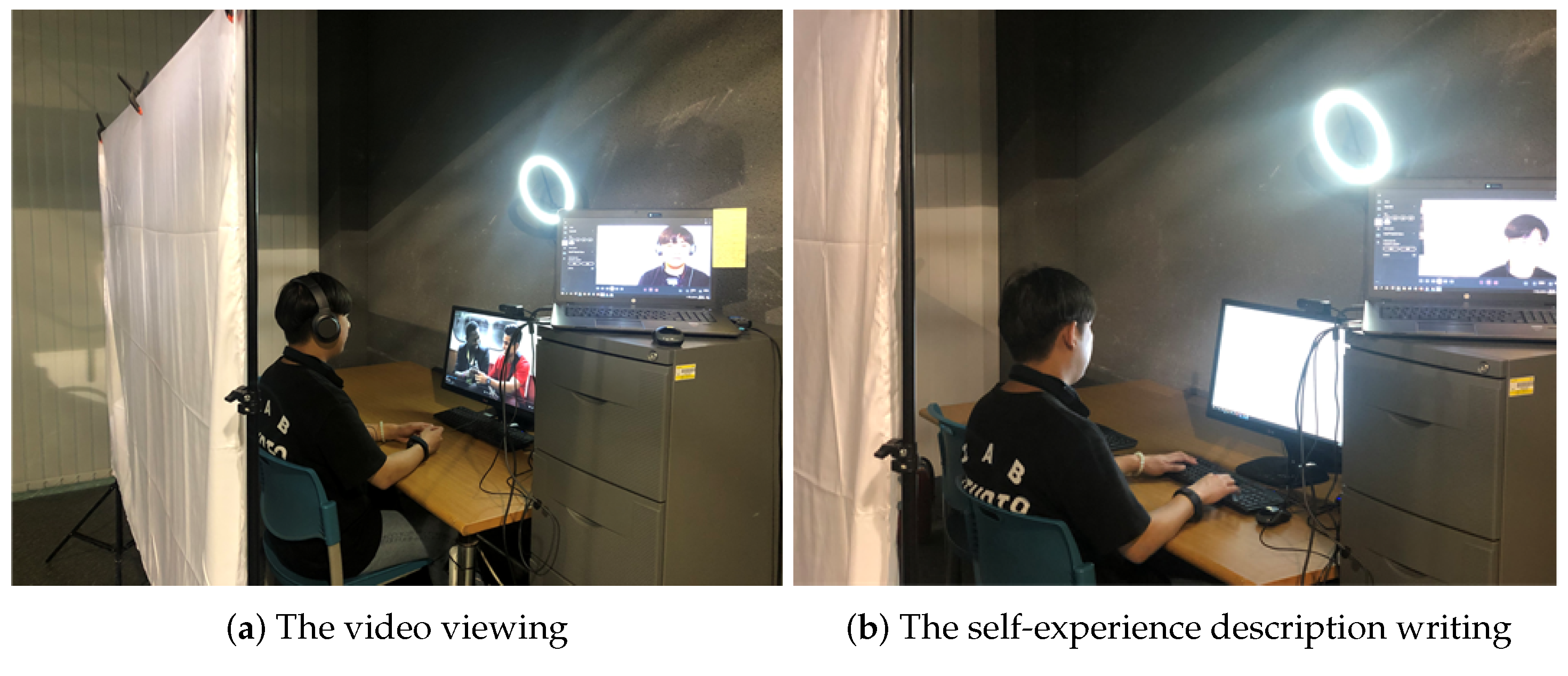

To study the eight emotions defined in Section 4.2, we needed to induce each emotional state in the participants. We applied a technique that combines film watching and writing passages, as shown in Figure 6. Each 4–6 min video chosen to induce a desired emotion was validated beforehand: the researchers asked 70 people who were not familiar with our study to watch the video online and gathered their opinions about their emotional state after viewing. After confirming that the emotion was induced as intended, the video was used in this experiment. To increase the duration of the emotions and reinforce the emotions induced through the video, we asked the participants to freely describe, for 13 min, their own experiences related to the induced emotions. Video viewing and self-experience description are two of the most valid emotion induction and reinforcement techniques [53]. During video viewing and self-experience description, we recorded the driver’s facial image and measured their EDA. After that, the participants reported their emotions through a survey. Then, the participants drove for 5 min in the driving simulator, during which we again recorded the driver’s facial image and measured their EDA. After the driving, the experimenters debriefed the participants on the purpose of the study. By neutralizing the participants’ emotions in this way, we made sure that their moods were close to the baseline level when they left the laboratory.

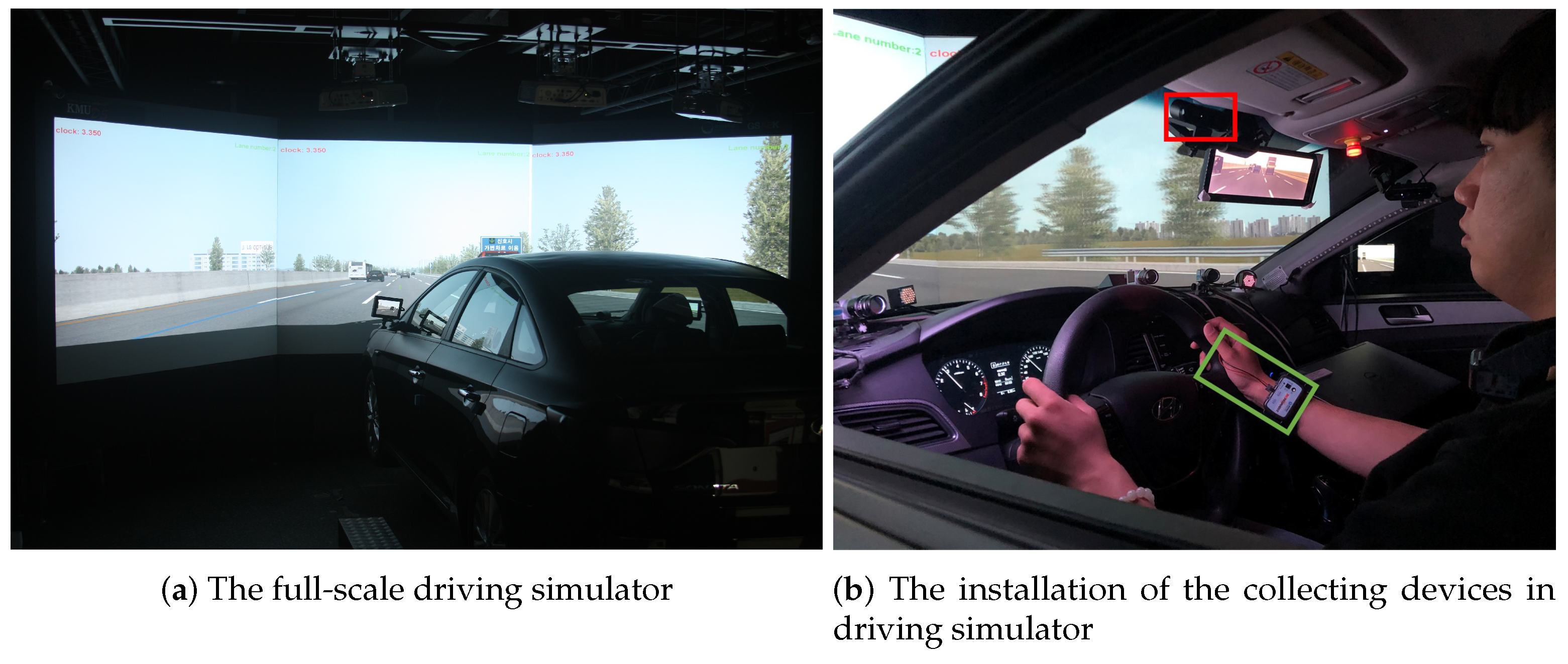

In the experiment, we used a full-scale driving simulator with a six-DOF motion base equipped with AV Simulation’s SCANeR Studio 1.7 (AVSimulation, Boulogne-Billancourt, France, https://www.avsimulation.com/ (accessed on 18 March 2021)). The cabin was an LF Sonata, a Hyundai midsize sedan. Three-channel projectors and three 2080 mm × 1600 mm screens were connected horizontally to visualize the driving scene. The participants’ EDA signals were collected using a BioPac bioinstrument (BIOPAC Systems, Inc., Goleta, CA, USA, https://www.biopac.com/ (accessed on 18 March 2021)). The bioinstrument guarantees a signal-to-noise ratio (SNR) in excess of 70 dB. To acquire a reliable EDA signal, we removed dead skin cells from the hand so that they would not interfere with signal collection and applied an isotonic electrode paste to the electrode to increase accuracy. In addition, before starting all experiments, we observed the EDA waveform to confirm that there was no visible noise in the signal. For the driver’s face image, we used a BRIO 4K camera (Logitech, Lausanne, Switzerland, https://www.logitech.com/ (accessed on 18 March 2021)) at 720 × 720 pixels and 30 fps during video viewing, self-experience description and driving in the simulator. Figure 7a shows the full-scale driving simulator. Figure 7b shows the installation of the BioPac bioinstrument and the camera in the driving simulator while the driver is driving. The camera was installed between the windshield and the headliner, in front of the sun visor, to prevent the driver’s face from being partially occluded by the steering wheel or a hand.

Among the data collected through simulation, the video and EDA data collected while driving were used for training and evaluating the SFER model, and the rest of the data were used for reference. The driving data were acquired while each volunteer drove for about 5 min per emotion. The driver’s facial expression images were acquired at a 30 Hz sampling rate, and the EDA data were acquired at a 100 Hz sampling rate. The acquired driving data cannot be used to train the FER models: training the FER models requires true valence and arousal values for each facial image, whereas the driving data carry only the induced emotion as the ground truth label.

To validate the effectiveness of the input features and model structure, we proposed various SFER models. Each SFER model evaluated in this study consists of fully connected layers: an input layer, multiple hidden layers and an output layer. Every output vector from the hidden layers passes through the ReLU activation function, and the output vector from the output layer passes through the softmax activation function. We set the number of output layer units to 8 because the driver’s emotional state C is defined in eight categories, as described in Section 4.2. The models are distinguished by their input features, their number of hidden layers and the maximum number of units in their hidden layers. In the following, the front part of the model name indicates the kind of input features. If the model name starts with VA or E, the model uses only the output of the FER model (valence and arousal) or only the EDA value E, respectively, as input. If the model name starts with VAE, both the output of the FER model and the EDA value E are used as inputs. The numbers in parentheses in the model name give the number of hidden layers and the maximum number of units, in that order. All the proposed SFER models are described below, and Table 3 presents their detailed configurations.

E(3, 64): This uses only the measured EDA value as the input. The number of input layer units is 1, and this SFER model recognizes the driver’s emotional state with only bio-physiological information. The number of hidden layers is 3 and the maximum number of units is 64.

E(8, 512): This uses only the measured EDA value, the same as E(3, 64). However, the model is deeper and wider than E(3, 64). The number of hidden layers is 8 and the maximum number of units is 512.

VA(3, 64): This uses only the valence and arousal values from the FER model as inputs. The number of input layer units is 2, and this SFER model recognizes the driver’s emotional state with only the FER information. The number of hidden layers is 3 and the maximum number of units is 64.

VA(8, 512): This uses only the valence and arousal values, the same as VA(3, 64). However, the model is deeper and wider than VA(3, 64). The number of hidden layers is 8 and the maximum number of units is 512.

VAE(3, 64): This uses the valence, arousal and EDA values as inputs. The number of input layer units is 3, and this SFER model recognizes the driver’s emotional state with both FER and bio-physiological information. The number of hidden layers is 3 and the maximum number of units is 64.

VAE(8, 512): This uses the valence, arousal and EDA values, the same as VAE(3, 64). However, the model is deeper and wider than VAE(3, 64). The number of hidden layers is 8 and the maximum number of units is 512.

VAE(9, 1024): This uses the valence, arousal and EDA values as inputs. The number of hidden layers is 9 and the maximum number of units is 1024.

VAE(9, 2048): This uses the valence, arousal and EDA values as inputs. The number of hidden layers is 9 and the maximum number of units is 2048.

VAE(11, 1024): This uses the valence, arousal and EDA values as inputs. The number of hidden layers is 11 and the maximum number of units is 1024.
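The structure shared by these models (fully connected layers, ReLU after each hidden layer, softmax after the output layer) can be sketched as a forward pass. The example below mirrors the smallest VAE configuration with 3 inputs, 3 hidden layers and 8 outputs. It assumes that every hidden layer uses the maximum width of 64, which may differ from Table 3, and it uses untrained random weights and made-up input values.

```python
import math
import random


def dense(x, weights, biases):
    """Fully connected layer: one weighted sum plus bias per output unit."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, biases)]


def relu(x):
    return [max(0.0, v) for v in x]


def softmax(x):
    shift = max(x)  # subtract the maximum for numerical stability
    exps = [math.exp(v - shift) for v in x]
    total = sum(exps)
    return [e / total for e in exps]


def init_mlp(sizes, seed=0):
    """Random (untrained) weights for consecutive layer sizes."""
    rng = random.Random(seed)
    return [([[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)],
             [0.0] * n_out)
            for n_in, n_out in zip(sizes, sizes[1:])]


def forward(x, layers):
    for weights, biases in layers[:-1]:
        x = relu(dense(x, weights, biases))      # hidden layers use ReLU
    weights, biases = layers[-1]
    return softmax(dense(x, weights, biases))    # output layer uses softmax


# 3 inputs (valence, arousal, EDA), 3 hidden layers, 8 emotion categories.
layers = init_mlp([3, 64, 64, 64, 8])
probs = forward([0.2, -0.1, 0.5], layers)
print(len(probs), sum(probs))
```

The E and VA variants differ only in the first entry of the size list (1 or 2 inputs instead of 3).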

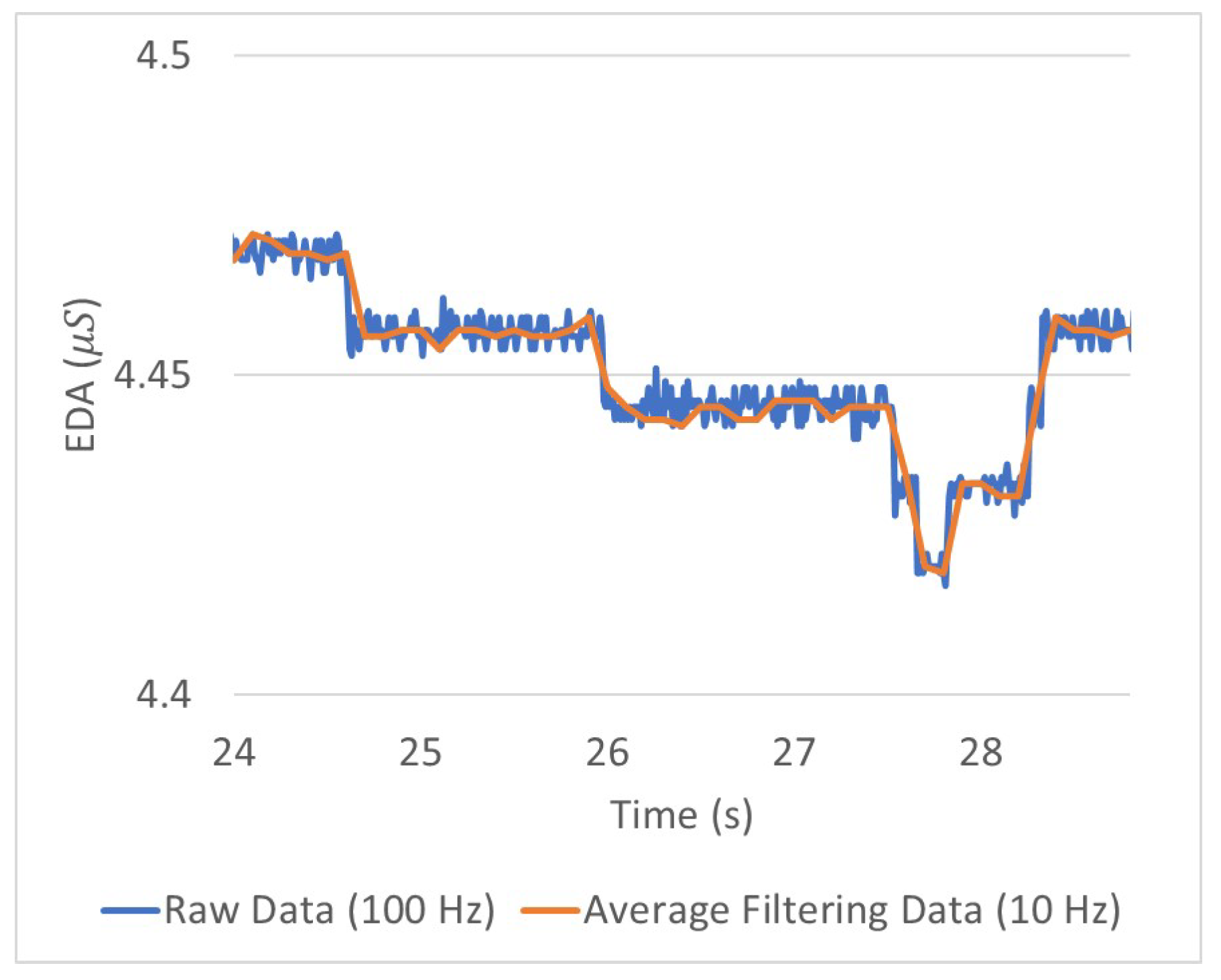

The minimum vehicle control cycle required by the industry is 10 Hz. To satisfy this requirement, all the proposed SFER models have a 10 Hz recognition frequency. The time window of the input data was set to 0.1 s because the driver’s emotions can change over the same period as the vehicle control state, which changes at a rate of at least 10 Hz. Hence, to train and evaluate the proposed SFER models, filtering was required for each input: the valence, the arousal and the EDA value E. The output of the FER model, which provides one valence and one arousal value per input image, has a sampling rate of 30 Hz. Therefore, every 0.1 s (that is, at 10 Hz), the filtered value was calculated as the average of the three valence values, and likewise of the three arousal values, from the previous 0.1 s. Because the EDA data were acquired at a 100 Hz sampling rate, the filtered EDA value was calculated as the average of the ten EDA values from the previous 0.1 s. The average filtering also reduces the fine residual noise remaining in the EDA waveform, as shown in Figure 8.
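The average filtering described above amounts to averaging consecutive, non-overlapping 0.1 s windows. A minimal sketch follows; the function name `block_average` and the sample values are illustrative, and dropping an incomplete trailing window is an assumption about edge handling.

```python
def block_average(samples, source_hz, target_hz=10):
    """Average consecutive non-overlapping windows so the output rate is target_hz."""
    if source_hz % target_hz:
        raise ValueError("source rate must be a multiple of the target rate")
    window = source_hz // target_hz                # 3 for 30 Hz, 10 for 100 Hz
    usable = len(samples) - len(samples) % window  # drop an incomplete trailing window
    return [sum(samples[i:i + window]) / window for i in range(0, usable, window)]


# 0.2 s of made-up data at each sampling rate.
valence_30hz = [0.25, 0.5, 0.75, -0.5, 0.0, 0.5]
eda_100hz = [float(k) for k in range(20)]

valence_10hz = block_average(valence_30hz, source_hz=30)
eda_10hz = block_average(eda_100hz, source_hz=100)
print(valence_10hz, eda_10hz)
```

Both streams end up with one value per 0.1 s, so each valence, arousal and EDA triple lines up on the same 10 Hz grid.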

After the average filtering with a time window of 0.1 s, the total number of input samples, each consisting of one valence, one arousal and one EDA value, is 310,389. The data were divided into training and test sets at an 8:2 ratio; 20% of the training set was used for validation to prevent overfitting. Hence, we trained the SFER models with training and validation sets containing 198,610 and 49,653 samples, respectively. We evaluated the trained SFER models with a test set containing 62,066 samples.

The proposed SFER models require all inputs and outputs to be numeric because they operate through a series of numerical operations from input to output. This means that the driver’s emotional state categories C must be converted to a numerical form, and the SFER models’ output must be converted back into the categories C. One-hot encoding, which is the most widespread approach for this conversion, creates a separate binary column for each possible category and inserts 1 into the corresponding column. The converted form of the $k$th category is as follows:

$$\mathbf{e}_k = (e_{k,1}, \ldots, e_{k,8}), \qquad e_{k,j} = \begin{cases} 1, & j = k \\ 0, & j \neq k \end{cases}$$

where $k = 1, \ldots, 8$ indexes the eight categories of C, as defined in Section 4.2. The emotion induced in the driver, Y, which takes values in C, is likewise converted into a one-hot vector $\mathbf{y}$. Hence, we can compute the cross-entropy loss between the induced emotion ($\mathbf{y}$) and the predicted emotion ($\hat{\mathbf{y}}$):

$$L_{CE} = -\frac{1}{n}\sum_{i=1}^{n}\sum_{k=1}^{8} y_{i,k}\log \hat{y}_{i,k}$$

where $n$ is the number of training samples, $\hat{\mathbf{y}}_i$ is the predicted emotion vector of the $i$th training sample, in the same eight-element layout, and $\mathbf{y}_i$ is the one-hot encoded induced emotion of the $i$th training sample. The sum of all elements of $\hat{\mathbf{y}}_i$ is 1 because the output vector passes through the softmax activation function. We used the Adam algorithm [63], the same as in the FER model training, to optimize the parameters of our proposed SFER models. We set the learning rate to 0.001 and the exponential decay rates for the first and second moment estimates to 0.9 and 0.999, respectively. We trained for over 30 epochs (over 5,958,300 iterations), and the accuracy of each model was evaluated once the loss on the validation set reached a stable point. For the trained model to output the recognized emotional state, we took the index of the largest element of $\hat{\mathbf{y}}$ and converted it to the emotional category with the corresponding index in C. Through this conversion, $\hat{\mathbf{y}}$ was converted back into the recognized real emotional state ($\hat{Y}$), which takes values in C. We compared the SFER models’ accuracy using the ratio of samples for which $\hat{Y}$ matches Y.
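The encoding, loss, decoding and accuracy steps described above can be sketched together. The category names below are placeholders, since the eight categories are defined in Section 4.2 rather than here. Averaging the loss over samples and the small `eps` guard inside the logarithm are assumptions, and the predicted vectors are made up.

```python
import math

# Placeholder names for the eight categories defined in Section 4.2.
CATEGORIES = [f"emotion_{k}" for k in range(1, 9)]


def one_hot(category):
    """Binary vector with a 1 in the column of `category`."""
    return [1.0 if c == category else 0.0 for c in CATEGORIES]


def cross_entropy(predicted, targets, eps=1e-12):
    """Mean cross-entropy between softmax outputs and one-hot targets."""
    total = 0.0
    for p_vec, y_vec in zip(predicted, targets):
        total -= sum(y * math.log(p + eps) for p, y in zip(p_vec, y_vec))
    return total / len(targets)


def decode(p_vec):
    """Map a softmax output back to the category with the largest value."""
    return CATEGORIES[max(range(len(p_vec)), key=p_vec.__getitem__)]


def accuracy(predicted, induced):
    """Ratio of samples whose decoded category equals the induced emotion."""
    hits = sum(decode(p) == y for p, y in zip(predicted, induced))
    return hits / len(induced)


induced = ["emotion_1", "emotion_3"]
predicted = [
    [0.65] + [0.05] * 7,             # largest value at index 0: correct
    [0.05, 0.65] + [0.05] * 6,       # largest value at index 1: incorrect
]
loss = cross_entropy(predicted, [one_hot(y) for y in induced])
acc = accuracy(predicted, induced)
print(loss, acc)
```

Because the targets are one-hot, only the predicted probability of the induced category contributes to each sample's loss.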