DeepLocBox: Reliable Fingerprinting-Based Indoor Area Localization

Abstract

1. Introduction

2. Related Work

2.1. Quantification of Localization Performance

2.2. Deep Learning for Fingerprinting

- Point estimation: Xiao et al. [31] modeled the problem as a regression task and applied a deep MLP model to estimate the position. Jaafar and Saab [32] realized point estimation using an MLP regression model after initial room classification. Using data collected while walking along a predefined path, Sahar and Han [33], Xu et al. [34], Elbes et al. [35], and Chen et al. [15] utilized an LSTM with a regression output layer to predict the exact position. Ibrahim et al. [36] applied a convolutional neural network (CNN) to RSS time-series data to estimate the coordinates on the lowest layer of their hierarchical prediction model (building and floor are predicted on the higher levels). In a multi-task deep learning system, Lin et al. [37] utilized an MLP with regression output at the final stage of their architecture to estimate the position of the user. Wang et al. [38] used Angle of Arrival (AoA) images extracted from channel state information (CSI) as input to train a CNN with regression output for point estimation. Li et al. [39] predicted the uncertainty of the fingerprint location estimate via an artificial neural network (ANN) and used this uncertainty to adapt the measurement noise in an extended Kalman filter that integrates the WLAN fingerprinting information.

- Reference point classification: Mittal et al. [41] as well as Sinha and Hwang [42] utilized a CNN to predict a unique reference point location, modeled as a classification problem. Li et al. [43] applied restricted Boltzmann machines (RBM) to CSI fingerprinting data to estimate a reference point location. Chen et al. [44] tackled device-free localization: they located a person within a room (determined the correct reference point) by applying a CNN to CSI data. Using geomagnetic field data, Al-homayani and Mahoor [45] classified the reference point of users carrying a smartwatch. Rizk et al. [46] utilized cellular data for deep learning-based reference point classification. In the work by Shao et al. [47], magnetic and WLAN data were combined using a CNN.

- Area classification: Whereas Liu et al. [48] estimated the probability over predefined areas, Laska et al. [18] proposed a framework for adaptive indoor area localization that uses deep learning to classify the correct segment from a set of predefined segments. Njima et al. [49] constructed 3D input images consisting of the RSS data and the kurtosis values derived from them. These images are fed to a CNN that predicts the correct area/region of a pre-segmented floor plan.

- Building/floor classification: Kim et al. [50] proposed a deep model consisting of stacked auto-encoders (SAE) and an MLP for hierarchical classification of buildings and floors. Gu et al. [51] utilized a combination of SAE on WLAN fingerprints and additional sensor data for floor identification. Song et al. [52] determined buildings and floors by combining SAE and a one-dimensional CNN. Additionally, they equipped their model with a standard regression head to estimate the position given a classified floor.

2.3. Technologies Applied for Fingerprinting

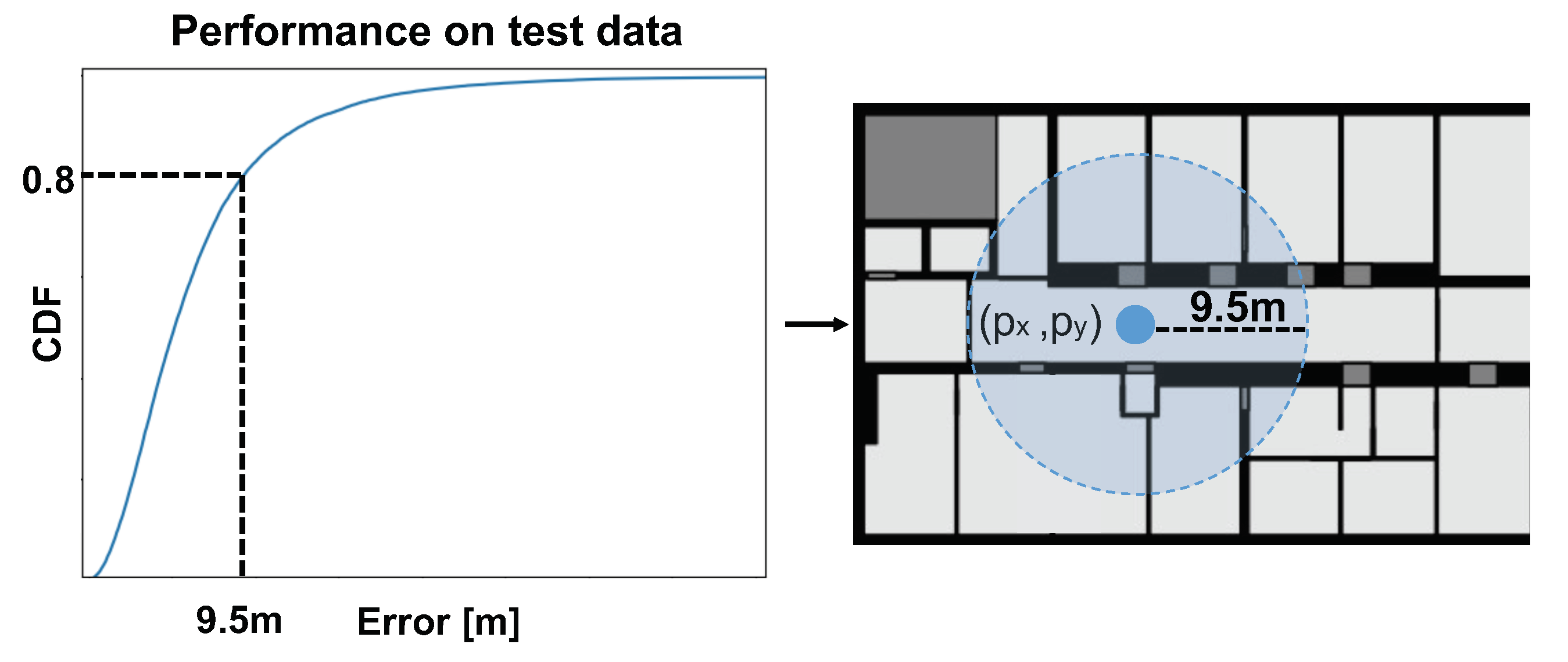

3. Quantification of Localization Performance

3.1. Space Estimation

3.2. Point Estimation

4. DeepLocBox (DLB)

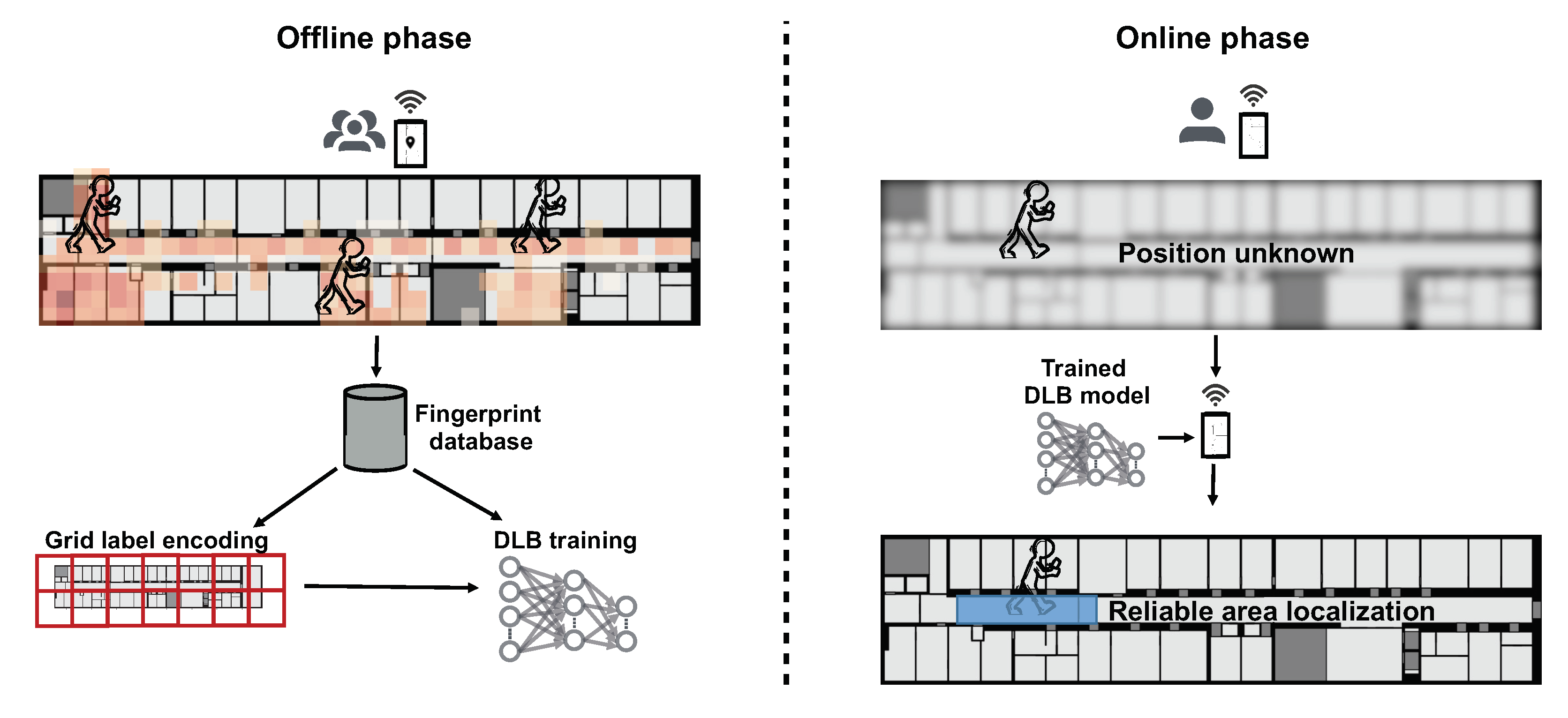

4.1. System Overview

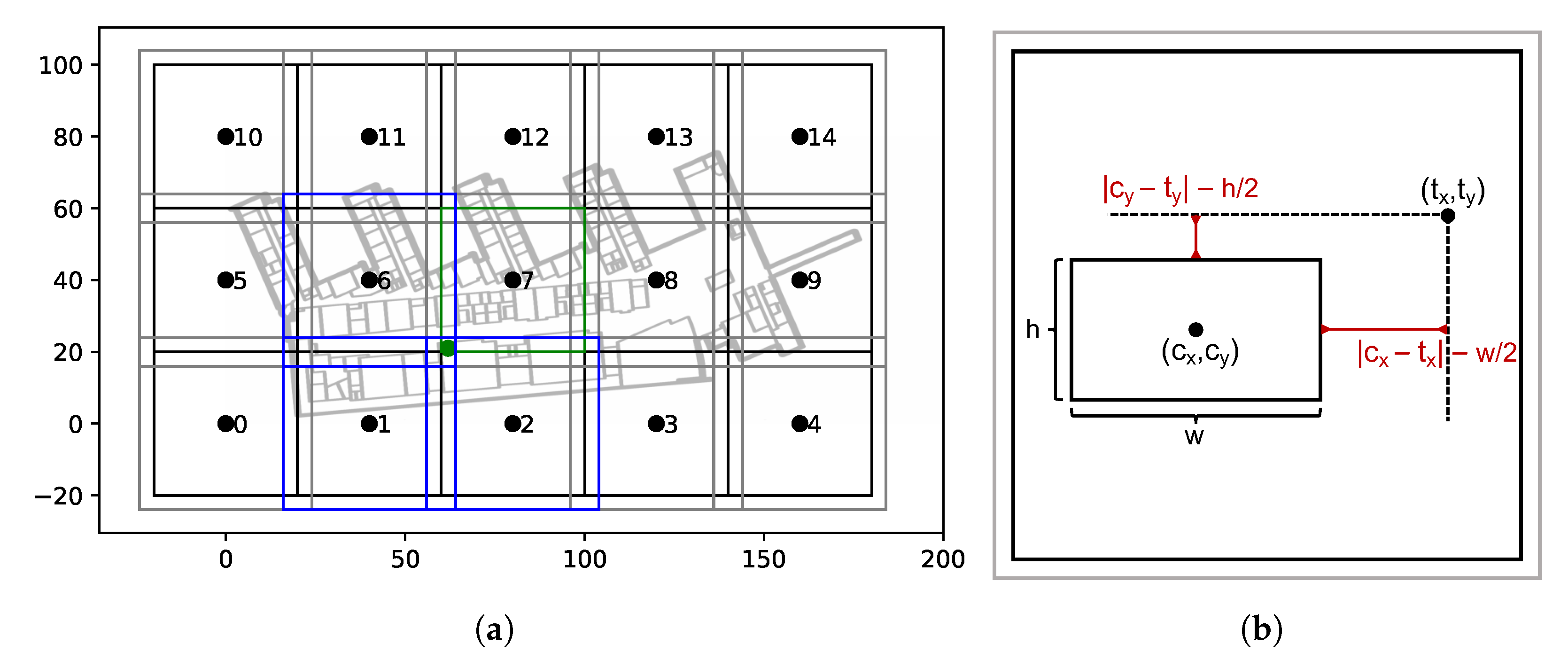

4.2. Model Description

- Center loss: The center loss captures the deviation of the predicted box center from the ground truth point. It is given as the squared distance between the two.

- Size loss: The size loss regulates the box dimensions. We define it as
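The center loss described above can be illustrated with a minimal sketch. The function name `center_loss` and the tuple representation of points are assumptions made for illustration and are not taken from the original notation. The size loss is not sketched, because its definition is not reproduced in this text.

```python
from typing import Tuple

Point = Tuple[float, float]


def center_loss(pred_center: Point, truth: Point) -> float:
    """Squared Euclidean distance between the predicted box center
    and the ground truth point (hypothetical helper, not the authors' code)."""
    dx = pred_center[0] - truth[0]
    dy = pred_center[1] - truth[1]
    return dx * dx + dy * dy


if __name__ == "__main__":
    # Predicted center 3 m east and 4 m north of the true position.
    print(center_loss((3.0, 4.0), (0.0, 0.0)))  # 25.0
```

Because the distance is squared, a prediction that is twice as far from the ground truth is penalized four times as much.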

4.3. Data Encoding and Label Augmentation

4.4. Derivatives of Loss Function

- Center loss: The center loss is independent of the width and the height of the predicted box; therefore, its partial derivatives with respect to both are zero. The partial derivatives with respect to the two center coordinates are analogous to each other.

- Size loss: The delta values of the size loss are given as
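As a worked illustration of the center-loss case, assume the predicted center is written $(c_x, c_y)$, the ground truth point $(x, y)$, and the box width and height $w$ and $h$. These symbols are assumptions, since the original notation is not reproduced here. The sketch differentiates the plain squared distance only and deliberately ignores the tanh output activation and any label encoding, both of which would contribute further chain-rule factors.

```latex
\begin{align}
L_{\text{center}} &= (c_x - x)^2 + (c_y - y)^2, \\
\frac{\partial L_{\text{center}}}{\partial w} &= \frac{\partial L_{\text{center}}}{\partial h} = 0, \\
\frac{\partial L_{\text{center}}}{\partial c_x} &= 2\,(c_x - x), \qquad
\frac{\partial L_{\text{center}}}{\partial c_y} = 2\,(c_y - y).
\end{align}
```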

5. Evaluation

5.1. Model Architecture and Preprocessing

5.2. Datasets

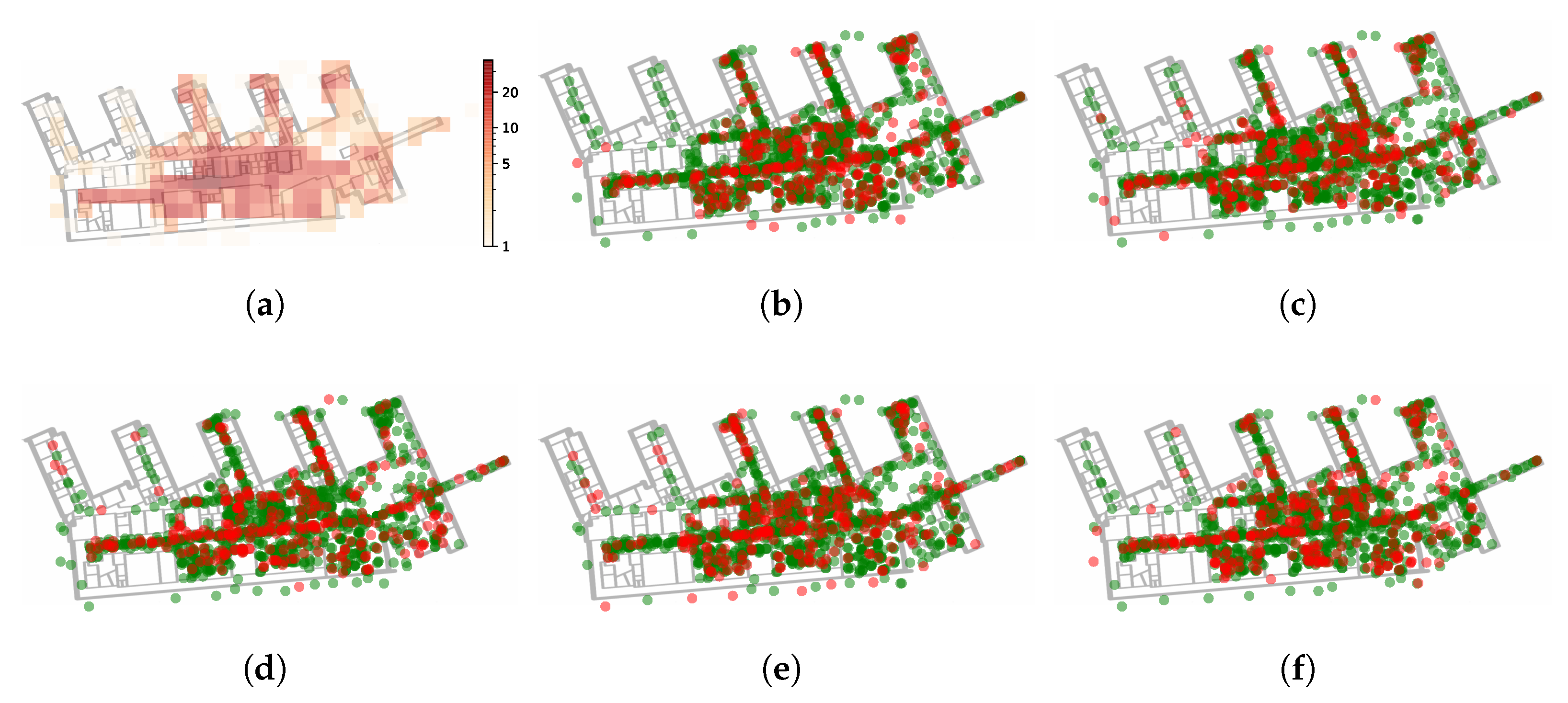

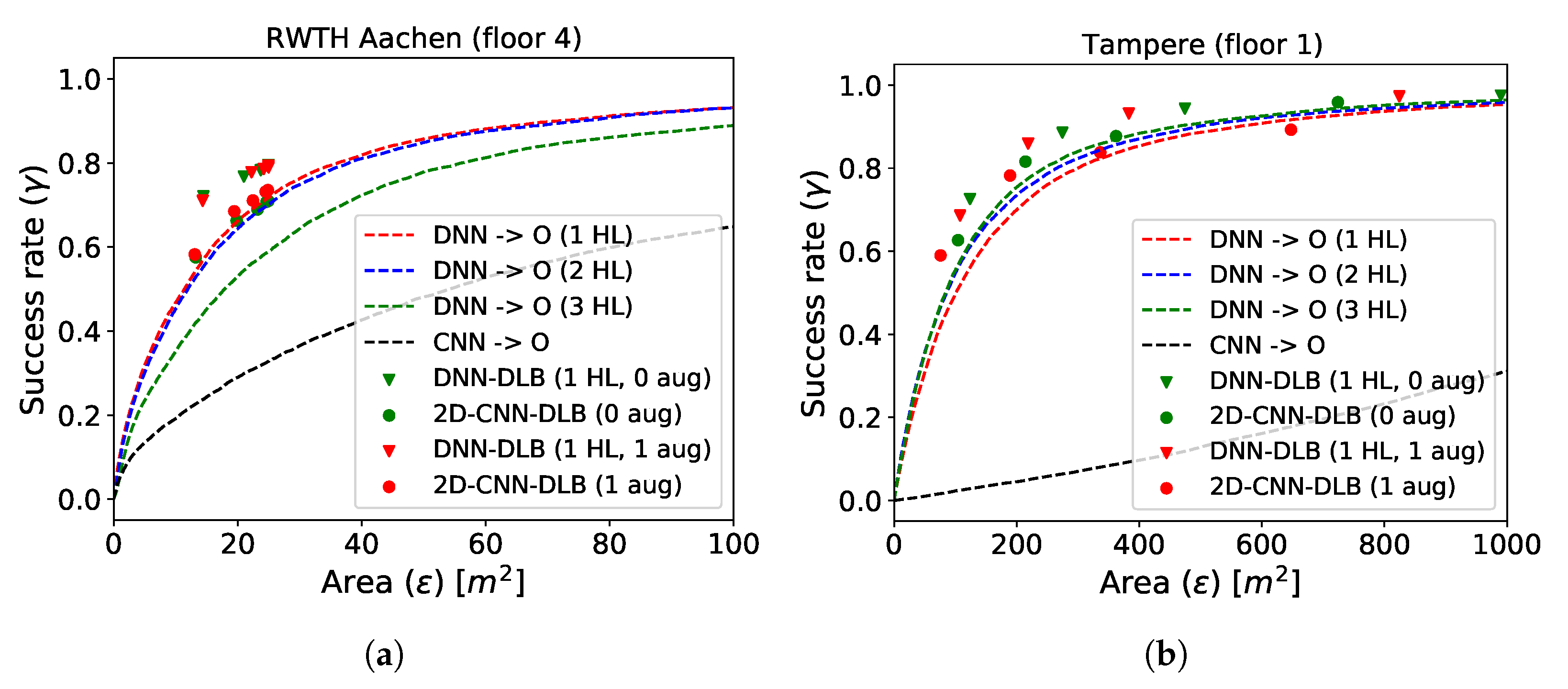

5.2.1. Dataset Collected in RWTH Aachen University

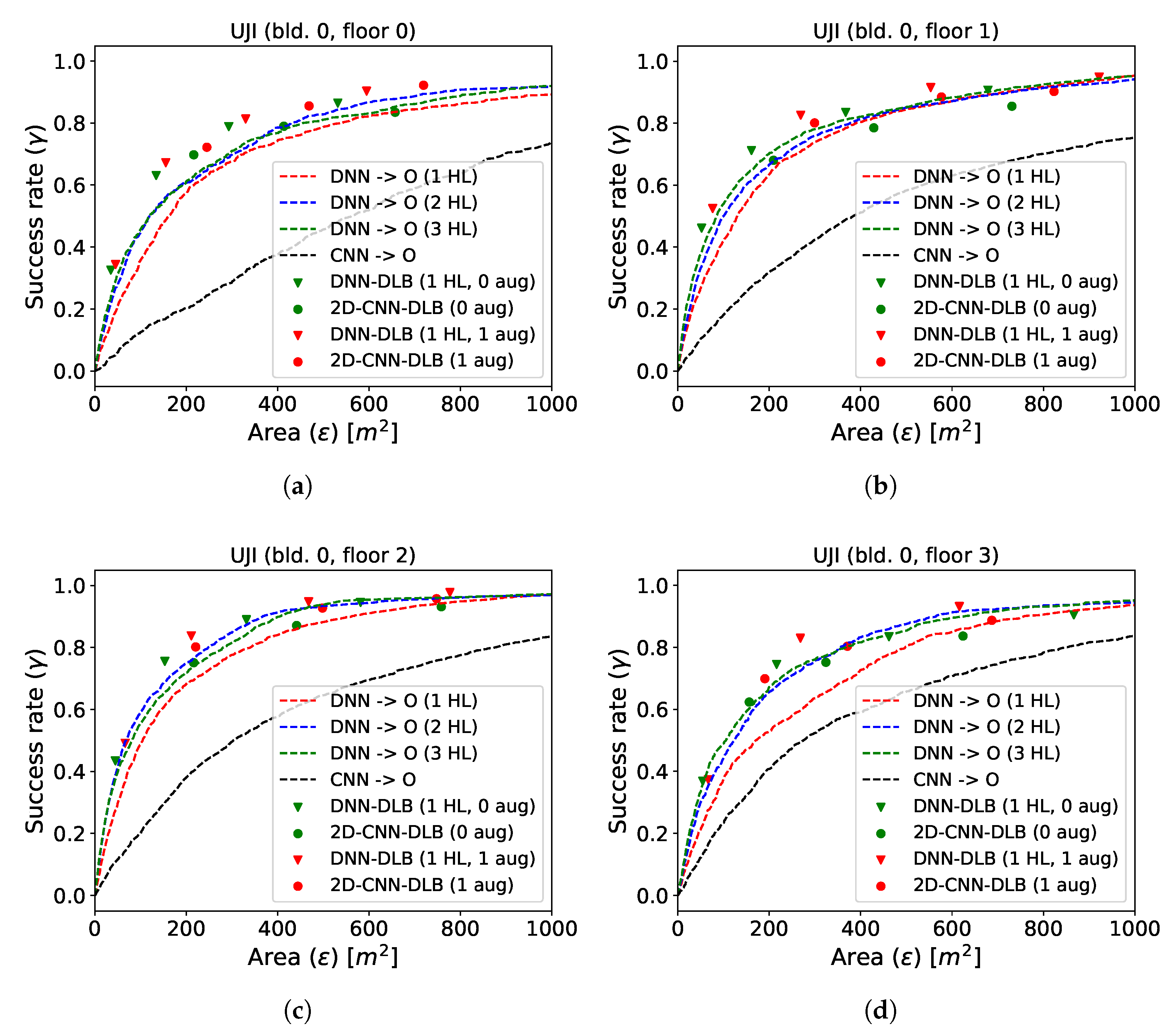

5.2.2. UJIIndoorLoc Dataset

5.2.3. Dataset Collected in Tampere, Finland

5.3. Performance Analysis

5.3.1. Single Building/Floor Positioning

5.3.2. Multi-Building/Multi-Floor Positioning

5.4. Discussion

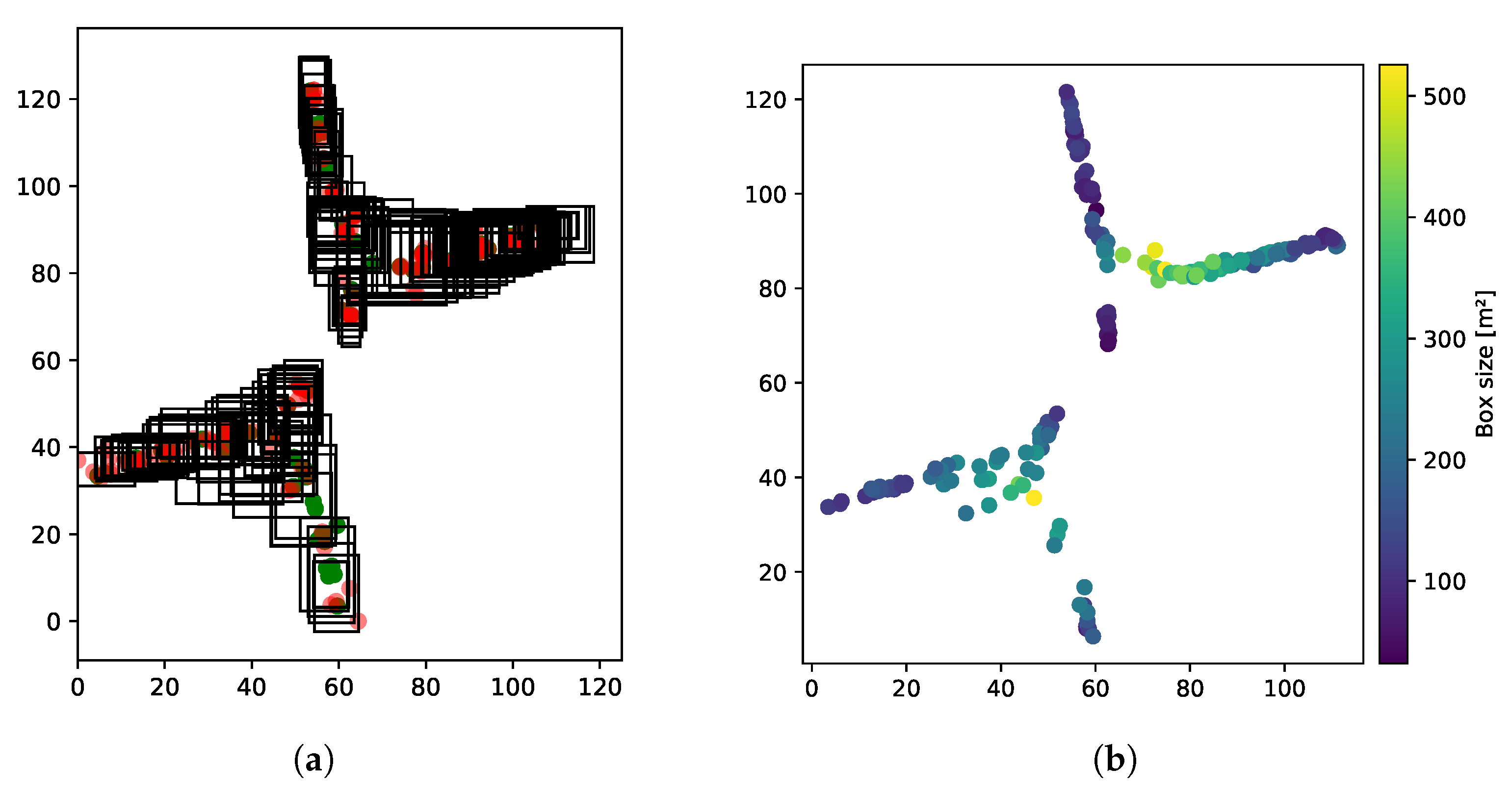

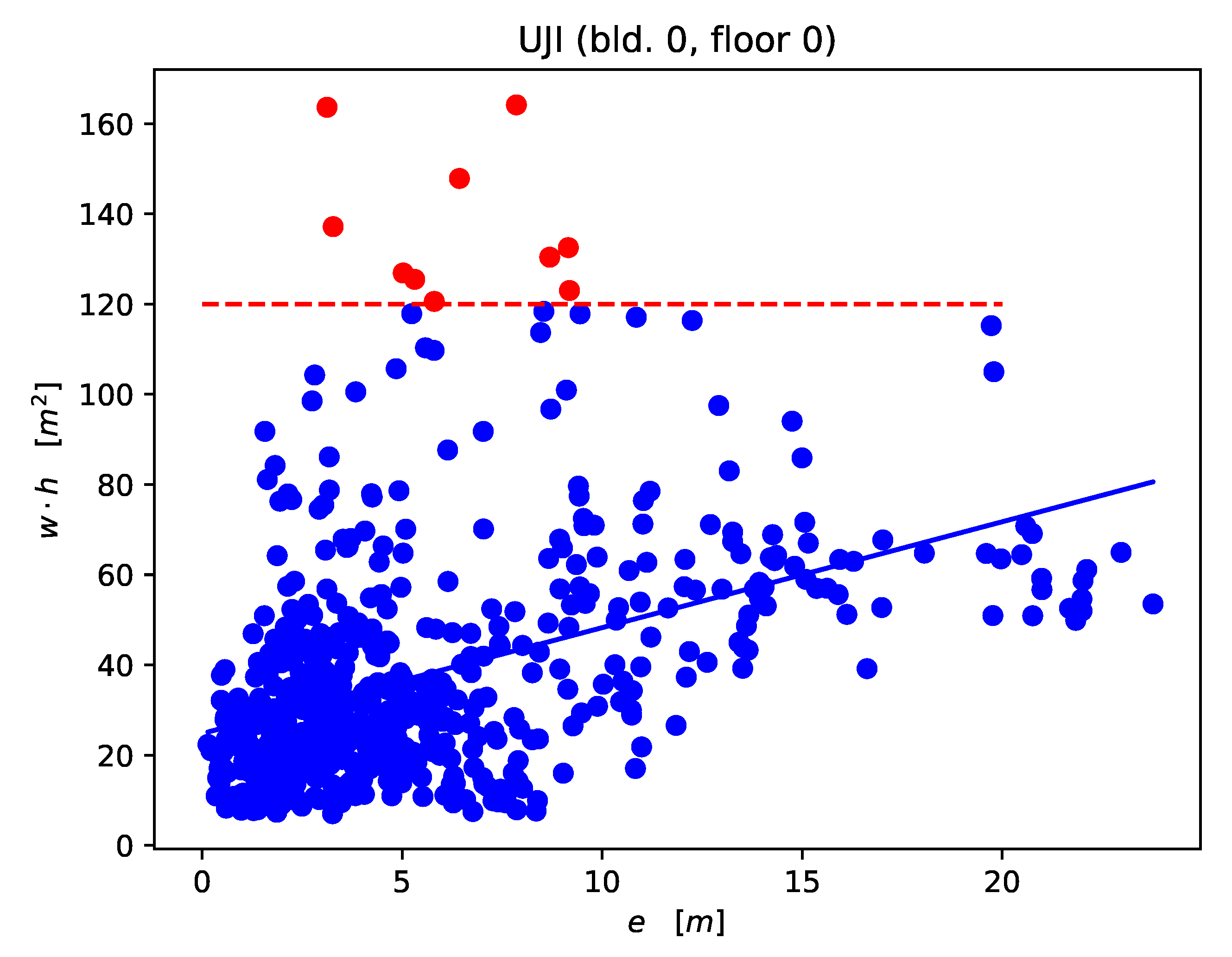

5.4.1. Correlation between Box Size and Center Error

- (1) A significant positive correlation between the box size and the center error of the predicted boxes exists.

- (2) The correlation between error and box size is larger for the same components as opposed to the opposite components.
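A relationship of this kind can be examined with an ordinary least-squares fit of a box dimension against an error component. The following is a minimal sketch, not the authors' evaluation code: the function `linear_fit` and the toy values are hypothetical, and the sketch reports only intercept, slope, and the coefficient of determination, without a significance test.

```python
from typing import Sequence, Tuple


def linear_fit(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float, float]:
    """Ordinary least-squares fit y = intercept + slope * x.

    Returns (intercept, slope, r_squared).
    """
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two equally long sequences with at least 2 points")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    syy = sum((yi - mean_y) ** 2 for yi in y)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    if sxx == 0 or syy == 0:
        raise ValueError("x and y must each contain at least two distinct values")
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    r_squared = (sxy * sxy) / (sxx * syy)
    return intercept, slope, r_squared


if __name__ == "__main__":
    # Hypothetical toy data: center error along x and predicted box width, both in meters.
    error_x = [0.5, 1.0, 2.0, 4.0, 8.0]
    box_width = [5.0, 5.5, 5.5, 6.5, 7.0]
    intercept, slope, r2 = linear_fit(error_x, box_width)
    print(f"width = {intercept:.2f} + {slope:.2f} * error_x, R^2 = {r2:.2f}")
```

A positive slope means that larger center errors tend to come with larger predicted boxes. Fitting the width against the error along the other axis would give the "opposite component" comparison.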

5.4.2. Multi-Building/Multi-Floor Performance

5.4.3. Limitations

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Basiri, A.; Lohan, E.S.; Moore, T.; Winstanley, A.; Peltola, P.; Hill, C.; Amirian, P.; e Silva, P.F. Indoor Location Based Services Challenges, Requirements and Usability of Current Solutions. Comput. Sci. Rev. 2017, 24, 1–12.

- Zafari, F.; Gkelias, A.; Leung, K.K. A Survey of Indoor Localization Systems and Technologies. IEEE Commun. Surv. Tutor. 2019, 21, 2568–2599.

- D’Aloia, M.; Cortone, F.; Cice, G.; Russo, R.; Rizzi, M.; Longo, A. Improving Energy Efficiency in Building System Using a Novel People Localization System. In Proceedings of the 2016 IEEE Workshop on Environmental, Energy, and Structural Monitoring Systems (EESMS), Bari, Italy, 13–14 June 2016; IEEE: Bari, Italy, 2016; pp. 1–6.

- Wang, Y.; Shao, L. Understanding Occupancy Pattern and Improving Building Energy Efficiency through Wi-Fi Based Indoor Positioning. Build. Environ. 2017, 114, 106–117.

- Xiao, J.; Zhou, Z.; Yi, Y.; Ni, L.M. A Survey on Wireless Indoor Localization from the Device Perspective. ACM Comput. Surv. 2016, 49, 1–31.

- Sanam, T.F.; Godrich, H. An Improved CSI Based Device Free Indoor Localization Using Machine Learning Based Classification Approach. In Proceedings of the 2018 26th European Signal Processing Conference (EUSIPCO), Roma, Italy, 3–7 September 2018; IEEE: Rome, Italy, 2018; pp. 2390–2394.

- Ahmetovic, D.; Murata, M.; Gleason, C.; Brady, E.; Takagi, H.; Kitani, K.; Asakawa, C. Achieving Practical and Accurate Indoor Navigation for People with Visual Impairments. In Proceedings of the 14th Web for All Conference on The Future of Accessible Work—W4A’17, Perth, Australia, 2–4 April 2017; ACM Press: Perth, Australia, 2017; pp. 1–10.

- Ho, T.W.; Tsai, C.J.; Hsu, C.C.; Chang, Y.T.; Lai, F. Indoor Navigation and Physician-Patient Communication in Emergency Department. In Proceedings of the 3rd International Conference on Communication and Information Processing—ICCIP’17, Tokyo, Japan, 24–26 November 2017; ACM Press: Tokyo, Japan, 2017; pp. 92–98.

- Real Ehrlich, C.; Blankenbach, J. Indoor Localization for Pedestrians with Real-Time Capability Using Multi-Sensor Smartphones. Geo Spat. Inf. Sci. 2019, 22, 73–88.

- Kárník, J.; Streit, J. Summary of Available Indoor Location Techniques. IFAC Pap. 2016, 49, 311–317.

- He, S.; Chan, S.H. Wi-Fi Fingerprint-Based Indoor Positioning: Recent Advances and Comparisons. IEEE Commun. Surv. Tutor. 2016, 18, 466–490.

- Xia, S.; Liu, Y.; Yuan, G.; Zhu, M.; Wang, Z. Indoor Fingerprint Positioning Based on Wi-Fi: An Overview. ISPRS Int. J. Geo Inf. 2017, 6, 135.

- Alarifi, A.; Al-Salman, A.; Alsaleh, M.; Alnafessah, A.; Al-Hadhrami, S.; Al-Ammar, M.; Al-Khalifa, H. Ultra Wideband Indoor Positioning Technologies: Analysis and Recent Advances. Sensors 2016, 16, 707.

- Li, T.; Wang, H.; Shao, Y.; Niu, Q. Channel State Information–Based Multi-Level Fingerprinting for Indoor Localization with Deep Learning. Int. J. Distrib. Sens. Netw. 2018, 14, 1550147718806719.

- Chen, Z.; Zou, H.; Yang, J.; Jiang, H.; Xie, L. WiFi Fingerprinting Indoor Localization Using Local Feature-Based Deep LSTM. IEEE Syst. J. 2019, 14, 3001–3010.

- Hsieh, C.H.; Chen, J.Y.; Nien, B.H. Deep Learning-Based Indoor Localization Using Received Signal Strength and Channel State Information. IEEE Access 2019, 7, 33256–33267.

- Haider, A.; Wei, Y.; Liu, S.; Hwang, S.H. Pre- and Post-Processing Algorithms with Deep Learning Classifier for Wi-Fi Fingerprint-Based Indoor Positioning. Electronics 2019, 8, 195.

- Laska, M.; Blankenbach, J.; Klamma, R. Adaptive Indoor Area Localization for Perpetual Crowdsourced Data Collection. Sensors 2020, 20, 1443.

- He, S.; Tan, J.; Chan, S.H.G. Towards Area Classification for Large-Scale Fingerprint-Based System. In Proceedings of the 2016 ACM International Joint Conference on Pervasive and Ubiquitous Computing, Heidelberg, Germany, 12–16 September 2016; pp. 232–243.

- López-pastor, J.A.; Ruiz-ruiz, A.J.; Martínez-sala, A.S.; Gómez-, J.L. Evaluation of an Indoor Positioning System for Added-Value Services in a Mall. In Proceedings of the 2019 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Pisa, Italy, 30 September–3 October 2019.

- Wei, J.; Zhou, X.; Zhao, F.; Luo, H.; Ye, L. Zero-Cost and Map-Free Shop-Level Localization Algorithm Based on Crowdsourcing Fingerprints. In Proceedings of the 5th IEEE Conference on Ubiquitous Positioning, Indoor Navigation and Location-Based Services, UPINLBS, Wuhan, China, 22–23 March 2018; Institute of Electrical and Electronics Engineers Inc.: New York, NY, USA, 2018.

- International Organization for Standardization. ISO 5725-1: 1994: Accuracy (Trueness and Precision) of Measurement Methods and Results-Part 1: General Principles and Definitions; International Organization for Standardization: Geneva, Switzerland, 1994.

- Liu, H.; Darabi, H.; Banerjee, P.; Liu, J. Survey of Wireless Indoor Positioning Techniques and Systems. IEEE Trans. Syst. Man Cybern. Part C Appl. Rev. 2007, 37, 1067–1080.

- Gu, Y.; Lo, A.; Niemegeers, I. A Survey of Indoor Positioning Systems for Wireless Personal Networks. IEEE Commun. Surv. Tutor. 2009, 11, 13–32.

- Potortì, F.; Park, S.; Ruiz, A.R.J.; Barsocchi, P.; Girolami, M.; Crivello, A.; Lee, S.Y.; Lim, J.H.; Torres-Sospedra, J.; Seco, F.; et al. Comparing the Performance of Indoor Localization Systems through the EvAAL Framework. Sensors 2017, 17, 2327.

- Haute, T.V.; Poorter, E.D.; Lemic, F.; Handziski, V.; Wiström, N.; Voigt, T.; Wolisz, A.; Moerman, I. Platform for Benchmarking of RF-Based Indoor Localization Solutions. IEEE Commun. Mag. 2015, 53, 126–133.

- Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2016.

- Li, J.; Li, Q.; Chen, N.; Wang, Y. Indoor Pedestrian Trajectory Detection with LSTM Network. In Proceedings of the 2017 IEEE International Conference on Computational Science and Engineering and IEEE/IFIP International Conference on Embedded and Ubiquitous Computing, CSE and EUC, Guangzhou, China, 21–24 July 2017.

- Hoang, M.T.; Yuen, B.; Dong, X.; Lu, T.; Westendorp, R.; Reddy, K. Recurrent Neural Networks for Accurate RSSI Indoor Localization. IEEE Internet Things J. 2019, 6, 10639–10651.

- Bishop, C.M. Pattern Recognition and Machine Learning; Information Science and Statistics; Springer: New York, NY, USA, 2006.

- Xiao, L.; Mathar, R. A Deep Learning Approach to Fingerprinting Indoor Localization Solutions. In Proceedings of the 2017 27th International Telecommunication Networks and Applications Conference (ITNAC), Melbourne, Australia, 22–24 November 2017.

- Jaafar, R.H.; Saab, S.S. A Neural Network Approach for Indoor Fingerprinting-Based Localization. In Proceedings of the 2018 9th IEEE Annual Ubiquitous Computing, Electronics Mobile Communication Conference (UEMCON), New York, NY, USA, 8–10 November 2018; pp. 537–542.

- Sahar, A.; Han, D. An LSTM-Based Indoor Positioning Method Using Wi-Fi Signals. In ACM International Conference Proceeding Series; Association for Computing Machinery: New York, NY, USA, 2018.

- Xu, B.; Zhu, X.; Zhu, H. An Efficient Indoor Localization Method Based on the Long Short-Term Memory Recurrent Neuron Network. IEEE Access 2019, 7, 123912–123921.

- Elbes, M.; Almaita, E.; Alrawashdeh, T.; Kanan, T.; AlZu’bi, S.; Hawashin, B. An Indoor Localization Approach Based on Deep Learning for Indoor Location-Based Services. In Proceedings of the 2019 IEEE Jordan International Joint Conference on Electrical Engineering and Information Technology (JEEIT), Amman, Jordan, 9–11 April 2019; pp. 437–441.

- Ibrahim, M.; Torki, M.; ElNainay, M. CNN Based Indoor Localization Using RSS Time-Series. In Proceedings of the 2018 IEEE Symposium on Computers and Communications (ISCC), Natal, Brazil, 25–28 June 2018; pp. 1044–1049.

- Lin, W.; Huang, C.; Duc, N.; Manh, H. Wi-Fi Indoor Localization Based on Multi-Task Deep Learning. In Proceedings of the 2018 IEEE 23rd International Conference on Digital Signal Processing (DSP), Shanghai, China, 19–21 November 2018; pp. 1–5.

- Wang, X.; Wang, X.; Mao, S. Deep Convolutional Neural Networks for Indoor Localization with CSI Images. IEEE Trans. Netw. Sci. Eng. 2018, 7, 316–327.

- Li, Y.; Gao, Z.; He, Z.; Zhuang, Y.; Radi, A.; Chen, R.; El-Sheimy, N. Wireless Fingerprinting Uncertainty Prediction Based on Machine Learning. Sensors 2019, 19, 324.

- Li, D.; Lei, Y. Deep Learning for Fingerprint-Based Outdoor Positioning via LTE Networks. Sensors 2019, 19, 5180.

- Mittal, A.; Tiku, S.; Pasricha, S. Adapting Convolutional Neural Networks for Indoor Localization with Smart Mobile Devices. In Proceedings of the 2018 on Great Lakes Symposium on VLSI, Chicago, IL, USA, 23–25 May 2018; Association for Computing Machinery: New York, NY, USA, 2018; pp. 117–122.

- Sinha, R.S.; Hwang, S.H. Comparison of CNN Applications for RSSI-Based Fingerprint Indoor Localization. Electronics 2019, 8, 989.

- Li, X.; Shi, J.; Zhao, J. DeFe: Indoor Localization Based on Channel State Information Feature Using Deep Learning. In Journal of Physics: Conference Series; Institute of Physics Publishing: Bristol, UK, 2019; Volume 1303.

- Chen, K.M.; Chang, R.Y.; Liu, S.J. Interpreting Convolutional Neural Networks for Device-Free Wi-Fi Fingerprinting Indoor Localization via Information Visualization. IEEE Access 2019, 7, 172156–172166.

- Al-homayani, F.; Mahoor, M. Improved Indoor Geomagnetic Field Fingerprinting for Smartwatch Localization Using Deep Learning. In Proceedings of the 2018 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Nantes, France, 24–27 September 2018; pp. 1–8.

- Rizk, H.; Torki, M.; Youssef, M. CellinDeep: Robust and Accurate Cellular-Based Indoor Localization via Deep Learning. IEEE Sensors J. 2019, 19, 2305–2312.

- Shao, W.; Luo, H.; Zhao, F.; Ma, Y.; Zhao, Z.; Crivello, A. Indoor Positioning Based on Fingerprint-Image and Deep Learning. IEEE Access 2018, 6, 74699–74712.

- Liu, H.X.; Chen, B.A.; Tseng, P.H.; Feng, K.T.; Wang, T.S. Map-Aware Indoor Area Estimation with Shortest Path Based on RSS Fingerprinting. In Proceedings of the 2015 IEEE 81st Vehicular Technology Conference (VTC Spring), Glasgow, UK, 11–14 May 2015.

- Njima, W.; Ahriz, I.; Zayani, R.; Terre, M.; Bouallegue, R. Deep CNN for Indoor Localization in IoT-Sensor Systems. Sensors 2019, 19, 3127.

- Kim, K.S.; Lee, S.; Huang, K. A Scalable Deep Neural Network Architecture for Multi-Building and Multi-Floor Indoor Localization Based on Wi-Fi Fingerprinting. Big Data Anal. 2018, 3, 4.

- Gu, F.; Blankenbach, J.; Khoshelham, K.; Grottke, J.; Valaee, S. ZeeFi: Zero-Effort Floor Identification with Deep Learning for Indoor Localization. In Proceedings of the 2019 IEEE Global Communications Conference (GLOBECOM), Waikoloa, HI, USA, 9–13 December 2019; IEEE: Waikoloa, HI, USA, 2019; pp. 1–6.

- Song, X.; Fan, X.; Xiang, C.; Ye, Q.; Liu, L.; Wang, Z.; He, X.; Yang, N.; Fang, G. A Novel Convolutional Neural Network Based Indoor Localization Framework With WiFi Fingerprinting. IEEE Access 2019, 7, 110698–110709.

- Yang, Z.; Zhou, Z.; Liu, Y. From RSSI to CSI. ACM Comput. Surv. 2013, 46, 1–32.

- Wang, X.; Wang, X.; Mao, S. CiFi: Deep Convolutional Neural Networks for Indoor Localization with 5 GHz Wi-Fi. In Proceedings of the 2017 IEEE International Conference on Communications (ICC), Paris, France, 21–25 May 2017; IEEE: Paris, France, 2017; pp. 1–6.

- Sinha, R.S.; Lee, S.M.; Rim, M.; Hwang, S.H. Data Augmentation Schemes for Deep Learning in an Indoor Positioning Application. Electronics 2019, 8, 554.

- Elezi, I.; Torcinovich, A.; Vascon, S.; Pelillo, M. Transductive Label Augmentation for Improved Deep Network Learning. In Proceedings of the 2018 24th International Conference on Pattern Recognition (ICPR), Beijing, China, 20–24 August 2018; IEEE: Beijing, China, 2018; pp. 1432–1437.

- Torres-Sospedra, J.; Montoliu, R.; Martinez-Uso, A.; Avariento, J.P.; Arnau, T.J.; Benedito-Bordonau, M.; Huerta, J. UJIIndoorLoc: A New Multi-Building and Multi-Floor Database for WLAN Fingerprint-Based Indoor Localization Problems. In Proceedings of the 2014 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Busan, Korea, 27–30 October 2014; IEEE: Busan, Korea, 2014; pp. 261–270.

- Lohan, E.; Torres-Sospedra, J.; Leppäkoski, H.; Richter, P.; Peng, Z.; Huerta, J. Wi-Fi Crowdsourced Fingerprinting Dataset for Indoor Positioning. Data 2017, 2, 32.

- Moreira, A.; Nicolau, M.J.; Meneses, F.; Costa, A. Wi-Fi Fingerprinting in the Real World - RTLS@UM at the EvAAL Competition. In Proceedings of the 2015 International Conference on Indoor Positioning and Indoor Navigation, IPIN 2015, Banff, AB, Canada, 13–16 October 2015; pp. 13–16.

- James, G.; Witten, D.; Hastie, T.; Tibshirani, R. (Eds.) An Introduction to Statistical Learning: With Applications in R; Number 103 in Springer Texts in Statistics; Springer: New York, NY, USA, 2013.

| Model | Base: HL | Base: HU | Base: Activation Fct. | Base: Dropout Prob. | Head: Output Layer | Head: Output Activation Fct. | Head: Loss Fct. |
|---|---|---|---|---|---|---|---|
| DNN | [1,2,3] | 512 | ReLU | 0.5 | 2 units | linear | MSE loss |
| DNN–DLB | [1,2,3] | 512 | ReLU | 0.5 | DLB output layer (Equation (8)) | tanh | DLB loss fct (Equation (9)) |

| Model | Base: Layer 1 | Base: Layer 2 | Base: Layer 3 | Base: Layer 4 | Head: Output Layer | Head: Loss Fct. |
|---|---|---|---|---|---|---|
| 2D-CNN | 16 × 16 Conv. + ReLU + 0.5 Dropout | 16 × 16 Conv. + ReLU + 8 × 8 Maxpool + 0.5 Dropout | 8 × 8 Conv. + ReLU + 4 × 4 Maxpool + 0.5 Dropout | Dense 128 + ReLU | 2 units + linear | MSE loss |
| 2D-CNN-DLB | 16 × 16 Conv. + ReLU + 0.5 Dropout | 16 × 16 Conv. + ReLU + 8 × 8 Maxpool + 0.5 Dropout | 8 × 8 Conv. + ReLU + 4 × 4 Maxpool + 0.5 Dropout | Dense 128 + ReLU | DLB output layer (Equation (8)) + tanh | DLB loss fct (Equation (9)) |

| Dataset | Additional Aug. Training Data | Size-Gain (No Aug.) [m] | Size-Gain (Aug.) [m] | SUCC-Gain (No Aug.) | SUCC-Gain (Aug.) |
|---|---|---|---|---|---|
| RWTH (floor 4) | 35.8% | −9.97 | −9.54 | 9.46% | 9.08% |
| Tampere (floor 1) | 49.80% | −179.23 | −207.33 | 5.48% | 6.66% |
| UJI (bld. 0) | 42.06% | −12.56 | −103.74 | 3.71% | 3.86% |
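The SUCC columns above refer to a success rate. A minimal sketch of how such a rate could be computed follows, assuming that SUCC denotes the share of test fingerprints whose ground truth position lies inside the predicted box, and that a box is described by its center, width, and height. The names `Box`, `contains`, and `success_rate` are hypothetical and not taken from the paper.

```python
from typing import NamedTuple, Sequence, Tuple


class Box(NamedTuple):
    """Axis-aligned box given by its center (cx, cy), width w, and height h."""
    cx: float
    cy: float
    w: float
    h: float


def contains(box: Box, point: Tuple[float, float]) -> bool:
    """True if the point lies inside the box or on its border."""
    x, y = point
    return abs(x - box.cx) <= box.w / 2 and abs(y - box.cy) <= box.h / 2


def success_rate(boxes: Sequence[Box], points: Sequence[Tuple[float, float]]) -> float:
    """Fraction of ground truth points covered by their predicted box
    (assumed definition of the success rate)."""
    if len(boxes) != len(points) or not boxes:
        raise ValueError("need equally many boxes and points, at least one each")
    hits = sum(contains(b, p) for b, p in zip(boxes, points))
    return hits / len(boxes)


if __name__ == "__main__":
    predicted = [Box(0.0, 0.0, 4.0, 2.0), Box(10.0, 10.0, 2.0, 2.0)]
    truth = [(1.5, 0.5), (12.0, 10.0)]
    print(success_rate(predicted, truth))  # 0.5
```

Under this definition, a larger box raises the success rate but carries less information, which is why box size and success rate are reported side by side.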

| Dataset | Model | Mean error [m] | Std [m] | Min [m] | 25% [m] | 50% [m] | 75% [m] | Max [m] |
|---|---|---|---|---|---|---|---|---|
| RWTH Aachen (floor 4) | DNN (1 HL) | 2.52 | 2.87 | 0.01 | 1.05 | 1.90 | 3.0 | 39.52 |
| RWTH Aachen (floor 4) | DNN–DLB (1 HL) | 2.04 | 3.53 | 0.0 | 0.65 | 1.25 | 2.37 | 63.38 |
| RWTH Aachen (floor 4) | 2D-CNN | 5.31 | 4.45 | 0.01 | 2.23 | 4.15 | 7.2 | 37.38 |
| RWTH Aachen (floor 4) | 2D-CNN-DLB | 2.36 | 3.4 | 0.01 | 0.84 | 1.54 | 2.9 | 60.11 |
| Tampere (floor 1) | DNN (3 HL) | 6.49 | 5.91 | 0.07 | 3.21 | 5.17 | 7.91 | 106.40 |
| Tampere (floor 1) | DNN–DLB (1 HL, aug) | 5.33 | 6.48 | 0.003 | 2.49 | 4.09 | 6.32 | 180.63 |
| Tampere (floor 1) | 2D-CNN | 22.10 | 9.08 | 0.11 | 16.37 | 21.49 | 26.94 | 94.04 |
| Tampere (floor 1) | 2D-CNN-DLB | 7.08 | 12.39 | 0.01 | 2.59 | 4.23 | 6.72 | 166.01 |
| UJI (bld. 0) | DNN (3 HL) | 6.89 | 5.90 | 0.02 | 2.96 | 5.32 | 9.16 | 79.63 |
| UJI (bld. 0) | DNN–DLB (1 HL, aug) | 6.31 | 5.91 | 0.01 | 2.63 | 4.75 | 8.09 | 121.32 |
| UJI (bld. 0) | 2D-CNN | 12.38 | 7.82 | 0.05 | 6.65 | 10.75 | 16.52 | 60.47 |
| UJI (bld. 0) | 2D-CNN-DLB | 6.76 | 6.93 | 0.04 | 2.84 | 4.94 | 8.06 | 111.66 |
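Summary columns like those in the error table above can be derived from per-sample Euclidean errors. The sketch below is an illustration only: the function `error_summary` is hypothetical, and the choice of population standard deviation and inclusive quartile interpolation is an assumption, since the text does not state which conventions were used.

```python
import math
import statistics
from typing import Dict, Sequence, Tuple

Point = Tuple[float, float]


def error_summary(predicted: Sequence[Point], truth: Sequence[Point]) -> Dict[str, float]:
    """Summarize Euclidean errors between predicted and true positions."""
    if len(predicted) != len(truth) or len(predicted) < 2:
        raise ValueError("need equally many predictions and truths, at least two")
    errors = [math.dist(p, t) for p, t in zip(predicted, truth)]
    # Quartiles with linear interpolation that includes both end points.
    q25, q50, q75 = statistics.quantiles(errors, n=4, method="inclusive")
    return {
        "mean": statistics.fmean(errors),
        "std": statistics.pstdev(errors),  # population std (assumed convention)
        "min": min(errors),
        "25%": q25,
        "50%": q50,
        "75%": q75,
        "max": max(errors),
    }


if __name__ == "__main__":
    # Toy predictions whose errors are 0, 1, 2, 3, and 4 m.
    predicted = [(0.0, 0.0), (1.0, 0.0), (0.0, 2.0), (3.0, 0.0), (0.0, 4.0)]
    truth = [(0.0, 0.0)] * 5
    for name, value in error_summary(predicted, truth).items():
        print(f"{name}: {value:.2f}")
```

Reporting quartiles next to the mean makes heavy tails visible, for example a small median combined with a very large maximum error.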

| Info | Model | Building Success Rate | Floor Success Rate | Mean Error | Median Error |
|---|---|---|---|---|---|
| IPIN 2015 results [59] (multi-model) | MOSAIC | 98.65% | 93.86% | 11.64 m | 6.7 m |
| IPIN 2015 results [59] (multi-model) | HFTS | 100% | 96.25% | 8.49 m | 7.0 m |
| IPIN 2015 results [59] (multi-model) | RTLS@UM | 100% | 93.74% | 6.20 m | 4.6 m |
| IPIN 2015 results [59] (multi-model) | ICSL | 100% | 86.93% | 7.67 m | 5.9 m |
| Recent multi-model | CNNLoc [52] | 100% | 96.03% | 11.78 m | - |
| Single DNN | Scalable DNN [50] | 99.82% | 91.27% | 9.29 m | - |
| Single DNN | DNN–DLB (best run) | 99.64% | 92.62% | 9.07 m | 6.32 m |
| Single DNN | DNN–DLB (avg of 10 runs) | 99.56% | 92.12% | 9.26 m | 6.41 m |

| Model: Label Aug. | Model: (Equation (6)) | Linear Regression: Y / X | | | p-Value | |
|---|---|---|---|---|---|---|
| No | 5.0 | e | 24.78 | 2.35 | 2.83 × 10^−28 | 0.18 |
| No | 5.0 | w | 5.45 | 0.28 | 6.83 × 10^−23 | 0.14 |
| No | 5.0 | h | 4.78 | 0.23 | 1.85 × 10^−23 | 0.15 |
| No | 5.0 | w | 5.64 | 0.26 | 1.56 × 10^−16 | 0.10 |
| No | 5.0 | h | 5.32 | 0.06 | 6.98 × 10^−3 | 0.01 |
| No | 10.0 | e | 105.85 | 6.37 | 1.34 × 10^−16 | 0.10 |
| No | 10.0 | w | 11.33 | 0.43 | 5.22 × 10^−15 | 0.09 |
| No | 10.0 | h | 9.50 | 0.32 | 6.50 × 10^−20 | 0.13 |
| No | 10.0 | w | 11.76 | 0.36 | 2.75 × 10^−9 | 0.06 |
| No | 10.0 | h | 10.33 | 0.06 | 5.69 × 10^−2 | 0.01 |
| No | 15.0 | e | 235.91 | 10.72 | 2.64 × 10^−14 | 0.09 |
| No | 15.0 | w | 16.46 | 0.60 | 2.38 × 10^−15 | 0.10 |
| No | 15.0 | h | 14.22 | 0.41 | 2.54 × 10^−16 | 0.10 |
| No | 15.0 | w | 17.41 | 0.38 | 3.93 × 10^−6 | 0.03 |
| No | 15.0 | h | 15.47 | 0.02 | 6.21 × 10^−1 | 0.00 |
| Yes | 5.0 | e | 30.61 | 2.37 | 1.72 × 10^−24 | 0.16 |
| Yes | 5.0 | w | 6.10 | 0.27 | 8.83 × 10^−26 | 0.17 |
| Yes | 5.0 | h | 4.97 | 0.24 | 4.34 × 10^−22 | 0.15 |
| Yes | 5.0 | w | 6.50 | 0.26 | 3.28 × 10^−12 | 0.08 |
| Yes | 5.0 | h | 5.52 | 0.06 | 1.65 × 10^−3 | 0.02 |
| Yes | 10.0 | e | 114.41 | 6.16 | 8.49 × 10^−20 | 0.13 |
| Yes | 10.0 | w | 11.61 | 0.47 | 1.27 × 10^−25 | 0.17 |
| Yes | 10.0 | h | 9.57 | 0.33 | 6.72 × 10^−19 | 0.12 |
| Yes | 10.0 | w | 12.57 | 0.37 | 2.53 × 10^−8 | 0.05 |
| Yes | 10.0 | h | 10.36 | 0.07 | 7.91 × 10^−3 | 0.01 |
| Yes | 15.0 | e | 242.37 | 14.05 | 2.05 × 10^−19 | 0.12 |
| Yes | 15.0 | w | 16.57 | 0.85 | 2.05 × 10^−29 | 0.19 |
| Yes | 15.0 | h | 14.11 | 0.40 | 1.32 × 10^−14 | 0.09 |
| Yes | 15.0 | w | 18.92 | 0.49 | 2.13 × 10^−5 | 0.03 |
| Yes | 15.0 | h | 14.94 | 0.11 | 3.21 × 10^−3 | 0.01 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Laska, M.; Blankenbach, J. DeepLocBox: Reliable Fingerprinting-Based Indoor Area Localization. Sensors 2021, 21, 2000. https://doi.org/10.3390/s21062000
