Abstract

The progress brought by deep learning technology over the last decade has inspired many research domains, such as radar signal processing and speech and audio recognition, to apply it to their respective problems. Most of the prominent deep learning models exploit data representations acquired with either Lidar or camera sensors, leaving automotive radars rarely used. This is despite the vital potential of radars in adverse weather conditions, as well as their ability to measure an object’s range and radial velocity simultaneously. As radar signals have not been exploited very much so far, there is a lack of available benchmark data. Recently, however, there has been a lot of interest in applying radar data as input to various deep learning algorithms, as more datasets are being provided. To this end, this paper presents a survey of various deep learning approaches that process radar signals to accomplish significant tasks in autonomous driving applications, such as detection and classification. We have organized the review by radar signal representation, as it is one of the critical aspects of using radar data with deep learning models. Furthermore, we give an extensive review of the recent deep learning-based multi-sensor fusion models exploiting radar signals and camera images for object detection tasks. We then provide a summary of the available datasets containing radar data. Finally, we discuss the gaps and important innovations in the reviewed papers and highlight some possible future research prospects.

1. Introduction

Over the last decade, autonomous driving and Advanced Driver Assistance Systems (ADAS) have been among the leading research domains explored with deep learning technology. Important progress has been realized, particularly in autonomous driving research, since its inception in 1980 and the DARPA Urban Challenge in 2007 [1,2]. However, developing a reliable autonomous driving system remains a challenge [3]. Object detection and recognition are among the challenging tasks involved in achieving accurate, robust, reliable, and real-time perception [4]. In this regard, perception systems are commonly equipped with multiple complementary sensors (e.g., camera, Lidar, and radar) for better precision and robustness in monitoring objects. In many instances, the complementary information from those sensors is fused to achieve the desired accuracy [5].

Due to the low cost of camera sensors and the abundant features (semantics) obtained from them, cameras have established themselves as the dominant sensors in 2D object detection [6,7,8,9,10] and 3D object detection [11,12,13,14] using deep learning frameworks. However, the camera’s performance is limited in challenging environments such as rain, fog, snow, dust, and strong/weak illumination conditions. While Lidars can provide depth information, they are relatively expensive.

On the other hand, radars can efficiently measure the range, relative radial velocity, and angle (i.e., both elevation and azimuth) of objects in the ego vehicle’s surroundings and are not affected by changes in environmental conditions [15,16,17]. However, radars can only detect objects within their measuring range; they cannot provide the category of the detected object (i.e., a vehicle or pedestrian). Additionally, radar detections are sparse compared to Lidar point clouds [18]. Hence, it is an arduous task to recognize/classify objects using radar data. Based on the sensors’ comparative working conditions mentioned above, we can deduce that they complement one another and can be fused to improve the performance and robustness of object detection/classification [5].

Multi-sensor fusion refers to the technique of combining different pieces of information from multiple sensors to achieve an accuracy and performance that cannot be attained using any one of the sensors alone. Readers can refer to [19,20,21,22] for detailed discussions about multi-sensor fusion and related problems. In conventional fusion algorithms using radar and vision data, the radar sensor is mostly used to make an initial prediction of objects in the surroundings, with bounding boxes drawn around them for later use. Then, machine learning or deep learning algorithms are applied to the bounding boxes over the vision data to confirm and validate the earlier radar detections [23,24,25,26,27,28]. Other fusion methods integrate both radar and vision detections using probabilistic tracking algorithms such as the Kalman filter [29] or particle filter [30], and then track the final fused results appropriately.

With the recent advances in deep learning technology, many research domains such as signal processing, natural language processing, healthcare, economics, and agriculture are adopting it to solve their respective problems, achieving promising results [20]. In this respect, many studies have been published in recent years pursuing multi-sensor fusion with various deep convolutional neural networks and obtaining state-of-the-art performance in object detection and recognition [31,32,33,34,35]. The majority of these systems concentrate on multi-modal deep sensor fusion with cameras and Lidars as input to the neural network classifiers, neglecting automotive radars, primarily due to the relative availability of publicly accessible annotated datasets and benchmarks for those two sensors. This is despite the robust capabilities of radar sensors, particularly in adverse or complex weather situations where Lidars and cameras are largely affected. Arguably, one of the reasons why radar signals are rarely processed with deep learning algorithms is their peculiar characteristics, which make them difficult to feed directly as input to many deep learning frameworks. Besides, the lack of open-access datasets and benchmarks containing radar signals has contributed to the smaller research output over the years [18]. As a result, many researchers developed their own radar signal datasets to test their proposed algorithms for object detection and classification using different radar data representations as inputs to the neural networks [36,37,38,39,40]. However, as these datasets are inaccessible, comparisons and evaluations are not possible.

In recent years, some radar signal datasets have been released for public use [41,42,43,44]. As a result, many researchers have begun to apply radar signals as inputs to various deep learning networks for object detection [45,46,47,48], object segmentation [49,50,51], object classification [52], and their combination with vision data for deep-learning-based multi-modal object detection [53,54,55,56,57,58]. This paper specifically reviews the recent articles on deep learning-based radar data processing for object detection and classification. In addition, we review the deep learning-based multi-modal fusion of radar and camera data for autonomous driving applications, together with the available datasets used in that respect.

The rest of the paper is structured as follows: Section 2 contains an overview of the conventional radar signal processing chain. An in-depth deep learning overview is presented in Section 3. Section 4 provides a review of different detection and classification algorithms exploiting radar signals on deep learning models. Section 5 reviews the deep learning-based multi-sensor fusion algorithms using radar and camera data for object detection. Datasets containing radar signals and other sensing data such as the camera and Lidar are presented in Section 6. Finally, discussions, conclusions, and possible research directions are given in Section 7.

2. Overview of the Radar Signal Processing Chain

This section gives a brief overview of radar signal detection processes. Specifically, we discuss range and velocity estimation for the different radar waveforms commonly employed in automotive radars, such as Frequency Modulated Continuous Wave (FMCW), Frequency Shift Keying (FSK), and Multiple Frequency Shift Keying (MFSK).

2.1. Range, Velocity, and Angle Estimation

Radio Detection and Ranging (radar) technology traces back to experiments with radio waves in the late 19th century and was developed into practical systems in the first half of the 20th century, mainly targeting military and surveillance-related security applications. Interest in radar usage has since expanded, particularly towards commercial, automotive, and industrial applications. The fundamental task of a radar system is to detect the targets in its surroundings and, at the same time, estimate their associated parameters, such as the range, radial velocity, and azimuth angle. The accuracy of the range and radial velocity measurements largely depends on the accuracy of the time delay and Doppler frequency estimation, respectively.

A radar system usually emits an electromagnetic wave signal and then receives the reflections of that signal from the targets along its propagation path [59]. In the majority of applications, radar sensors transmit either a continuous waveform or short sequences of pulses. Therefore, according to their waveforms, radars are conventionally divided into two general categories: pulse radars and continuous wave (CW) radars, with or without modulation.

Pulse radar transmits sequences of short pulse signals to estimate both the range and radial velocity of a moving target. The distance of the target from the radar sensor is calculated using the time delay that elapses between the transmitted and the intercepted pulse. In order to achieve better accuracy, shorter pulses are employed, while, to attain a better signal-to-noise ratio, longer pulses are necessary.
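As a rough illustration of the delay-based measurement described above, the following Python sketch converts a round-trip delay into a range and shows why shorter pulses give a finer range resolution. It assumes the standard relations for an unmodulated pulse, R = cτ/2 and ΔR = cT_p/2; the function names and numerical values are illustrative assumptions and are not taken from the reviewed papers.

```python
C = 299_792_458.0  # speed of light in m/s


def range_from_delay(delay_s):
    """Target range from the round-trip delay: R = c * tau / 2."""
    return C * delay_s / 2.0


def pulse_range_resolution(pulse_width_s):
    """Smallest range separation resolvable by an unmodulated pulse: c * T_p / 2."""
    return C * pulse_width_s / 2.0


if __name__ == "__main__":
    # Echo received 1 microsecond after transmission (assumed value).
    print(f"range: {range_from_delay(1e-6):.1f} m")
    # A shorter pulse resolves closer targets but carries less energy.
    for width in (100e-9, 50e-9):
        print(f"pulse {width * 1e9:.0f} ns -> resolution {pulse_range_resolution(width):.2f} m")
```

The second function makes the trade-off explicit: halving the pulse width halves the resolvable range separation, but also halves the transmitted energy per pulse, which lowers the signal-to-noise ratio.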

On the other hand, a CW radar operates by transmitting a constant unmodulated frequency to measure the target radial velocity but without range information. The transmitted signal from the CW radar antenna with a particular frequency is intercepted after it is reflected back from the target, with the change in its frequency known as the Doppler frequency shift. The velocity information is estimated based on the Doppler effect exhibited by the motion between the radar and the target. However, CW cannot measure the target range, which is one of its drawbacks.

The linear frequency modulated continuous wave (LFMCW) is another important radar waveform scheme. Unlike CW, the frequency of the transmitted waveform is modulated to simultaneously estimate the target’s range and radial velocity with high resolution. Most modern automotive radars operate based on the FMCW modulation scheme, which has been extensively studied in the literature [60,61]. FMCW radars have been gaining popularity, as they are among the leading sensing components employed in applications such as adaptive cruise control (ACC), autonomous driving, and industrial applications. Their main benefit is the ability to measure the range and radial velocity of moving objects simultaneously.

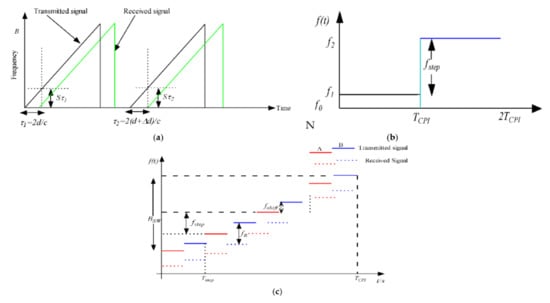

As depicted in Figure 1a, the FMCW radar transmits sequences of linear frequency modulated (LFM) signals, also called chirps, whose frequency increases linearly with time, within a bandwidth of up to 4 GHz and at a carrier frequency of 79 GHz [62], and then receives the reflected signals that bounce back from the targets. The received signals are mixed with the transmitted chirp in a mixer at the receiving end to obtain another signal, called the beat signal, whose frequency (the beat frequency) is given by:

$$f_b = \frac{2 S R}{c} \tag{1}$$

where $R$ is the distance of the object from the radar, $S$ is the slope of the chirp signal, and $c$ is the speed of light.
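The relation between beat frequency and range can be sketched in a few lines of Python. The sketch assumes the usual FMCW relation f_b = 2·S·R/c; the 4 GHz bandwidth is the value quoted in the text, while the 40 µs chirp duration and the 30 m target are assumptions chosen only for illustration.

```python
C = 299_792_458.0  # speed of light in m/s


def beat_frequency(range_m, slope_hz_per_s):
    """Beat frequency produced by a static target: f_b = 2 * S * R / c."""
    return 2.0 * slope_hz_per_s * range_m / C


def range_from_beat(beat_hz, slope_hz_per_s):
    """Invert the relation above to recover the range from a measured beat frequency."""
    return beat_hz * C / (2.0 * slope_hz_per_s)


if __name__ == "__main__":
    slope = 4e9 / 40e-6  # 4 GHz swept in an assumed 40 microsecond chirp
    f_b = beat_frequency(30.0, slope)
    print(f"beat frequency: {f_b / 1e6:.2f} MHz")
    print(f"recovered range: {range_from_beat(f_b, slope):.2f} m")
```

Because the mapping is linear, each spectral peak of the beat signal corresponds to exactly one range, which is what makes FFT-based range estimation possible.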

Figure 1. Range and velocity estimation schemes: (a) linear frequency modulated continuous waveform (LFMCW) scheme [52], (b) Frequency Shift Keying (FSK) waveform scheme [67], and (c) Multiple Frequency Shift Keying (MFSK) waveform scheme [68].

Using this frequency, we can infer the distance of the target to the radar sensor. A fast Fourier transform (FFT), called the range FFT, is usually performed on the beat signal to convert it to the frequency domain, thereby separating the individual peaks of the resolved objects. The range resolution of this procedure partly depends on the sweep bandwidth $B$ of the FMCW system [60] and is given by:

$$R_{res} = \frac{c}{2B} \tag{2}$$

The phase information of the beat signal is exploited to estimate the velocity of the target. As shown in Figure 1, the motion of the object relative to the radar results in a beat frequency shift over the received signal, given by:

$$\Delta f = \frac{2 v f_c}{c} \tag{3}$$

and a phase shift, given by [60]:

$$\Delta\varphi = 2\pi f_c \frac{2 v T_c}{c} = \frac{4\pi v T_c}{\lambda} \tag{4}$$

where $v$ is the object radial velocity, $f_c$ is the center frequency, $T_c$ is the chirp duration, and $\lambda$ is the wavelength.

Since the phase shift of mm-wave signals is much more sensitive to target movements than the beat frequency shift, the velocity FFT is usually conducted across the chirps to obtain the phase shift, which is then converted to the velocity. The velocity resolution can be expressed as [61]:

$$v_{res} = \frac{\lambda}{2 T_f} = \frac{\lambda}{2 N T_c} \tag{5}$$

where $N$ is the number of chirps in one frame, and $T_f = N T_c$ is the frame period.
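To give a feel for the magnitudes involved, the range and velocity resolutions can be evaluated for one illustrative parameter set. The 4 GHz bandwidth and 79 GHz carrier are the values quoted earlier in this section; the chirp count $N = 128$ and the chirp duration $T_c = 50\,\mu\text{s}$ are assumptions chosen only for this example, with the frame period taken as $N T_c$ and the standard expressions $c/(2B)$ and $\lambda/(2 N T_c)$ used for the two resolutions:

$$
\begin{aligned}
R_{res} &= \frac{c}{2B} = \frac{3\times10^{8}}{2 \cdot 4\times10^{9}} \approx 3.75\ \text{cm},\\
\lambda &= \frac{c}{f_c} = \frac{3\times10^{8}}{79\times10^{9}} \approx 3.8\ \text{mm},\\
v_{res} &= \frac{\lambda}{2 N T_c} = \frac{3.8\times10^{-3}}{2 \cdot 128 \cdot 50\times10^{-6}} \approx 0.30\ \text{m/s}.
\end{aligned}
$$

Under these assumptions, doubling the frame duration halves the velocity resolution cell, whereas the range resolution depends only on the swept bandwidth.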

To estimate the target’s position in space, the target’s azimuth and elevation angles are calculated by processing the received signals using array processing techniques. The most typical procedures include the digital beamforming [63], phase comparison monopulse [61], and the Multiple Signal Classification (MUSIC) algorithm [64].

With an FFT-based approach, the azimuth angle of a moving object is obtained by conducting a fast Fourier transform (angle FFT) along the spatial dimension across the receiver antennas. The angle resolution can be expressed as [61]:

$$\theta_{res} = \frac{\lambda}{N_r\, d \cos\theta} \tag{6}$$

where $N_r$ is the number of receiver antennas, $\theta$ is the azimuth angle between the distant object and the radar position, and $d$ is the distance between the receiver antenna pairs.
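The angle FFT can be illustrated with a small NumPy sketch. It assumes an idealized, noise-free uniform linear array with half-wavelength spacing and a single far-field target, so that the phase advances by 2π·d·sin(θ)/λ from one antenna to the next; the array size, FFT length, and function names are assumptions for illustration only. The last function evaluates the commonly quoted resolution expression λ/(N_r·d·cos θ).

```python
import numpy as np


def simulate_snapshot(theta_deg, n_rx=8, spacing_over_wavelength=0.5):
    """Noise-free signal across a uniform linear array for one far-field target."""
    n = np.arange(n_rx)
    omega = 2.0 * np.pi * spacing_over_wavelength * np.sin(np.radians(theta_deg))
    return np.exp(1j * omega * n)


def estimate_azimuth_deg(snapshot, spacing_over_wavelength=0.5, n_fft=256):
    """Zero-padded FFT across the antennas; the peak gives the phase step per antenna."""
    spectrum = np.fft.fftshift(np.fft.fft(snapshot, n=n_fft))
    # Spatial frequency axis in radians per antenna element, from -pi to pi.
    omega_axis = 2.0 * np.pi * np.fft.fftshift(np.fft.fftfreq(n_fft))
    omega_peak = omega_axis[np.argmax(np.abs(spectrum))]
    sin_theta = omega_peak / (2.0 * np.pi * spacing_over_wavelength)
    return float(np.degrees(np.arcsin(np.clip(sin_theta, -1.0, 1.0))))


def angle_resolution_rad(n_rx, spacing_over_wavelength, theta_deg=0.0):
    """Angular resolution lambda / (N_r * d * cos(theta)), in radians."""
    return 1.0 / (n_rx * spacing_over_wavelength * np.cos(np.radians(theta_deg)))


if __name__ == "__main__":
    snapshot = simulate_snapshot(20.0)
    print(f"estimated azimuth: {estimate_azimuth_deg(snapshot):.2f} deg")
    print(f"resolution at boresight: {np.degrees(angle_resolution_rad(8, 0.5)):.1f} deg")
```

Zero-padding only interpolates the spectrum; the ability to separate two nearby targets is still limited by the number of antennas, as the resolution function shows.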

The conventional linear frequency modulation (LFMCW) waveform scheme explained earlier delivers the desired range and velocity resolution. However, it usually encounters ambiguities in multi-target situations during the range and velocity estimations, which is also referred to as the ghost target problem. One of the most straightforward approaches to address this problem is applying multiple chirp signals (i.e., multiple chirp continuous waves, each with a different frequency of modulation, are transmitted) [65]. However, this method will also lead to another issue, as it increases the measurement time.

In this regard, many other waveforms have been proposed by the research community to overcome one issue or another, such as Frequency Shift Keying (FSK) [66], Chirp Sequence [67], and Multiple Frequency Shift Keying (MFSK) [68], to mention a few. However, it must be emphasized that the choice of radar waveform has always been a critical parameter dictating the performance of a radar system. It usually depends on the role, purpose, or mission of the radar application.

For instance, as an alternative to the FMCW method, the FSK waveform can provide a significant range and velocity resolution while simultaneously withstanding ghost target ambiguities. Its only drawback is that it does not resolve targets in the range direction. This system transmits two discrete frequency signals (i.e., $f_A$ and $f_B$) sequentially in an intertwined fashion within each time duration, as shown in Figure 1b. The difference between these two frequencies is called the step frequency and is defined as $f_{step} = f_B - f_A$. The step frequency is very small and is selected according to the maximum unambiguous target range to be measured.

In this scheme, the received echo signals are first down-converted into a baseband signal using the transmitted carrier frequency signal via a homodyne receiver and then sampled $N$ times. The output of the baseband signal conveys the Doppler frequency generated by the moving objects. A Fourier transform is conducted on the time-discrete received signal for each coherent processing interval (CPI), and moving targets are then detected by CFAR with an amplitude threshold. If the frequency step $f_{step}$ between the intertwined transmitted signals $f_A$ and $f_B$ is kept as small as possible, the Doppler frequencies obtained from the two baseband signal outputs are roughly the same, while the phase information at the spectrum’s peak differs. In this way, the moving object’s range is estimated using the phase difference at the peak ($\Delta\varphi = \varphi_B - \varphi_A$), as shown in Equation (7) based on [66]:

$$R = -\frac{c\,\Delta\varphi}{4\pi f_{step}} \tag{7}$$

where $\Delta\varphi$ is the phase difference measured at the Doppler spectrum peak, $R$ is the range of the moving target, $c$ is the speed of light, and $f_{step}$ is the step frequency.
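The phase-difference range measurement can be sketched as follows. The simulation is deliberately idealized and rests on several assumptions that are not from the reviewed papers: a single noise-free target, a two-way propagation phase of −4πfR/c at each transmit frequency, both frequencies sampled at the same instants (a real FSK radar interleaves them), and illustrative values for the carrier (24 GHz), step frequency (1 MHz), and sampling rate. The range is then recovered with R = −c·Δφ/(4π·f_step).

```python
import numpy as np

C = 299_792_458.0  # speed of light in m/s


def fsk_range(delta_phi_rad, f_step_hz):
    """Range from the phase difference: R = -c * delta_phi / (4 * pi * f_step)."""
    return -C * delta_phi_rad / (4.0 * np.pi * f_step_hz)


def max_unambiguous_range(f_step_hz):
    """Range at which the phase difference has wrapped by a full 2*pi."""
    return C / (2.0 * f_step_hz)


def simulate_phase_difference(range_m, velocity_mps, f_a_hz=24e9, f_step_hz=1e6,
                              n_samples=256, sample_rate_hz=20e3):
    """Phase difference (phi_B - phi_A) at the Doppler peak of an idealized FSK echo."""
    t = np.arange(n_samples) / sample_rate_hz
    spectra = []
    for f in (f_a_hz, f_a_hz + f_step_hz):
        # Two-way propagation phase of the baseband echo from a moving target.
        phase = -4.0 * np.pi * f * (range_m + velocity_mps * t) / C
        spectra.append(np.fft.fft(np.exp(1j * phase)))
    peak = np.argmax(np.abs(spectra[0]))  # Doppler bin of the target
    delta_phi = np.angle(spectra[1][peak] * np.conj(spectra[0][peak]))
    # Map the result to (-2*pi, 0] so that the estimated range is non-negative.
    return float(delta_phi - 2.0 * np.pi if delta_phi > 0 else delta_phi)


if __name__ == "__main__":
    d_phi = simulate_phase_difference(range_m=40.0, velocity_mps=10.0)
    print(f"estimated range: {fsk_range(d_phi, 1e6):.2f} m")
    print(f"maximum unambiguous range: {max_unambiguous_range(1e6):.1f} m")
```

The second function shows why the step frequency has to be matched to the required measurement range: a larger step gives a steeper phase-to-range slope but wraps around sooner.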

However, this scheme’s main drawback is that it cannot differentiate two targets with the same speed along the range dimension or when multiple targets are static. This is because multiple targets cannot be separated with phase information.

In the MFSK waveform scheme, a combination of the LFM and FSK waveforms is exploited to estimate the range and radial velocity of the target efficiently while, at the same time, avoiding the individual drawbacks of LFM and FSK [68]. The transmission signal waveform is a stepwise frequency modulated signal. In this case, the transmit waveform uses two linearly frequency modulated signals arranged in an intertwined sequence (e.g., $A$ and $B$) with the same bandwidth and slope, separated by a small frequency shift $f_{shift}$, as depicted in Figure 1c. As in the case of FSK and LFM, the received echo signals are down-converted to the baseband and sampled over each frequency step. Both signal sequences $A$ and $B$ are processed individually using the FFT and CFAR processing algorithms.

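Due to the coherent measurement procedure in both sequences $A$ and $B$, the phase information defined by $\Delta\varphi = \varphi_B - \varphi_A$ is used to estimate the target range and radial velocity. Analytically, the measured phase difference can be defined as given in [67] by Equation (8):

$$\Delta\varphi = \frac{\pi}{N-1}\cdot\frac{v}{\Delta v} - \frac{4\pi R f_{shift}}{c} \tag{8}$$

where $N$ defines the number of frequency shifts for each sequence $A$ and $B$, $v$ is the radial velocity, $\Delta v$ is the velocity resolution, $f_{shift}$ is the frequency shift, $c$ is the speed of light, and $R$ is the range of the target. Together with the beat frequency measured at the spectral peak, the phase difference provides two independent relations in $R$ and $v$, so both can be resolved unambiguously. Hence, it can be seen that MFSK does not encounter the ghost target problem that is present in the LFM system.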

Generally, selecting a radar waveform in a radar system design has always remained a challenging concern. It largely depends on many aspects, such as the role, purpose, and mission of the radar application. As such, a discussion about them is out of this study’s scope; however, more information can be found in [65,66,67,68,69].

2.2. Radar Signal Processing and Imaging

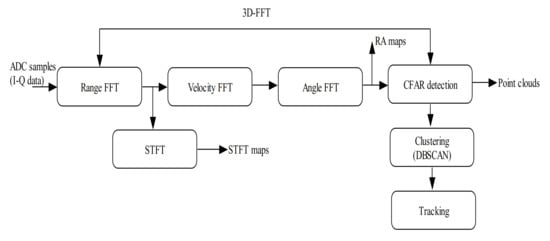

The complete procedure is depicted in Figure 2 and consists of seven functional processing blocks. A fast Fourier transform is usually conducted over a 3D tensor to resolve the object range, velocity, and angle. In the first stage, the received radar signals (ADC samples) within a single coherent processing interval (CPI) are stored in matrix frames, creating a 3D radar cube with three dimensions: fast time (ADC samples within a chirp), slow time (chirp index), and the spatial, or phase, dimension (TX/RX antenna pairs). In the second stage, an unambiguous range–velocity estimation is achieved via a 2D-FFT processing scheme on the 3D radar cube. Usually, the range FFT is first executed on the ADC time-domain signal to estimate the range. The second FFT (velocity FFT) is then performed across the chirps to estimate the relative radial velocity.

Figure 2. Radar signal processing and imaging. Adapted from [52].

After these two FFT stages, a 2D map of velocity/range points is obtained, with higher amplitude values indicating the target candidate. Further processing is required to identify the real target against clutter. In order to create the Range-Velocity-Azimuth map, a third FFT scheme (Angle FFT) is executed over the maximum Doppler peaks of each range bin. The complete procedure represents a 3-dimensional FFT (including the range FFT, velocity FFT, and angle FFT). Similarly, a short-time Fourier transform (STFT) over the range FFT output can create the spectrogram, illustrating the object’s velocity.
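The two FFT stages can be sketched in NumPy for a single receive channel. The simulation assumes one noise-free point target, complex baseband sampling, a beat frequency of 2·S·R/c along fast time, and a chirp-to-chirp phase step of 4π·v·T_c/λ along slow time; all parameter values (77 GHz carrier, 1 GHz bandwidth, 50 µs chirps, 256 samples, 64 chirps) and function names are assumptions for illustration, not settings from the reviewed papers.

```python
import numpy as np

C = 299_792_458.0  # speed of light in m/s


def simulate_chirp_frame(range_m, velocity_mps, carrier_hz=77e9, bandwidth_hz=1e9,
                         chirp_duration_s=50e-6, n_samples=256, n_chirps=64):
    """Beat signal of one point target, arranged as (slow time, fast time)."""
    slope = bandwidth_hz / chirp_duration_s
    sample_rate = n_samples / chirp_duration_s
    wavelength = C / carrier_hz
    beat_hz = 2.0 * slope * range_m / C
    phase_step = 4.0 * np.pi * velocity_mps * chirp_duration_s / wavelength
    fast_time = np.arange(n_samples) / sample_rate
    chirp_index = np.arange(n_chirps)
    return np.exp(1j * (2.0 * np.pi * beat_hz * fast_time[None, :]
                        + phase_step * chirp_index[:, None]))


def range_doppler_map(frame):
    """Range FFT along fast time, then velocity FFT along slow time."""
    range_fft = np.fft.fft(frame, axis=1)
    doppler_fft = np.fft.fftshift(np.fft.fft(range_fft, axis=0), axes=0)
    return np.abs(doppler_fft)


def peak_to_range_velocity(rd_map, carrier_hz=77e9, bandwidth_hz=1e9,
                           chirp_duration_s=50e-6):
    """Convert the strongest cell of the map to physical range and velocity."""
    n_chirps = rd_map.shape[0]
    doppler_bin, range_bin = np.unravel_index(np.argmax(rd_map), rd_map.shape)
    range_res = C / (2.0 * bandwidth_hz)
    velocity_res = (C / carrier_hz) / (2.0 * n_chirps * chirp_duration_s)
    # After fftshift, zero velocity sits at index n_chirps // 2.
    return range_bin * range_res, (doppler_bin - n_chirps // 2) * velocity_res


if __name__ == "__main__":
    frame = simulate_chirp_frame(range_m=12.0, velocity_mps=5.0)
    est_range, est_velocity = peak_to_range_velocity(range_doppler_map(frame))
    print(f"range ~ {est_range:.2f} m, velocity ~ {est_velocity:.2f} m/s")
```

The estimates are quantized to the bin spacing, so they differ from the true values by up to half a resolution cell in each dimension.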

The fourth stage is the target detection scheme, mainly performed using constant false alarm rate (CFAR) algorithms applied to the FFT outputs. A CFAR detection algorithm estimates the noise within the target vicinity and thereby provides a more accurate target detection. The CFAR technique was proposed in 1968 by Finn and Johnson [70]. Instead of using a fixed threshold during target detection, they proposed a variable threshold, which is adjusted by considering the noise variance in each cell’s neighborhood. Many different CFAR algorithms have since been published with various ways of computing the threshold, such as the cell averaging (CA), smallest-of (SO), greatest-of (GO), and ordered statistic (OS) CFAR [71,72]. Moreover, the 3D point clouds are generated by conducting an angle FFT on the CFAR detections obtained over the range–velocity bins.
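A minimal one-dimensional cell-averaging CFAR detector can be sketched as below. The sketch assumes square-law detected samples with exponentially distributed noise power, for which the textbook threshold factor is α = N·(P_fa^(−1/N) − 1) with N training cells; the window sizes, false alarm probability, and function name are illustrative assumptions.

```python
import numpy as np


def ca_cfar(power, n_train=8, n_guard=2, pfa=1e-3):
    """1D cell-averaging CFAR; returns a boolean detection mask.

    n_train and n_guard are the numbers of training and guard cells on EACH
    side of the cell under test.
    """
    power = np.asarray(power, dtype=float)
    n_cells = 2 * n_train
    # Threshold factor for exponentially distributed noise power.
    alpha = n_cells * (pfa ** (-1.0 / n_cells) - 1.0)
    half = n_train + n_guard
    detections = np.zeros(power.size, dtype=bool)
    for i in range(half, power.size - half):
        leading = power[i - half:i - n_guard]
        lagging = power[i + n_guard + 1:i + half + 1]
        noise_level = (leading.sum() + lagging.sum()) / n_cells
        detections[i] = power[i] > alpha * noise_level
    return detections


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    spectrum = rng.exponential(1.0, size=200)  # noise-only power samples
    spectrum[100] = 200.0                      # one strong target (assumed)
    print("detected cells:", np.flatnonzero(ca_cfar(spectrum)))
```

Cells closer to the edges than one window half-width are left untested in this sketch; practical implementations pad or shrink the window there.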

The density-based spatial clustering of applications with noise (DBSCAN) algorithm is also used to cluster the detected targets into groups in order to differentiate multiple targets [73]. Target tracking is the final stage in the radar signal processing chain, where algorithms such as the Kalman filter track the target position and trajectory to obtain a smoother estimate.
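The tracking stage can be illustrated with a small constant-velocity Kalman filter that smooths a sequence of noisy range measurements. This is a generic textbook filter under stated assumptions (one-dimensional state of range and range rate, white acceleration process noise, known measurement variance); the parameter values and function name are hypothetical and do not reproduce a tracker from the reviewed papers.

```python
import numpy as np


def kalman_track(measurements, dt=0.05, meas_var=0.25, accel_var=1.0):
    """Constant-velocity Kalman filter over noisy range measurements.

    Returns an array of shape (len(measurements), 2) holding the filtered
    range and range rate after each update.
    """
    F = np.array([[1.0, dt], [0.0, 1.0]])               # state transition
    H = np.array([[1.0, 0.0]])                          # only range is measured
    Q = accel_var * np.array([[dt**4 / 4, dt**3 / 2],
                              [dt**3 / 2, dt**2]])      # process noise
    R = np.array([[meas_var]])                          # measurement noise
    x = np.array([[measurements[0]], [0.0]])            # initial state
    P = np.diag([meas_var, 100.0])                      # velocity initially unknown
    estimates = []
    for z in measurements:
        # Predict
        x = F @ x
        P = F @ P @ F.T + Q
        # Update
        innovation = np.array([[z]]) - H @ x
        S = H @ P @ H.T + R
        K = P @ H.T @ np.linalg.inv(S)
        x = x + K @ innovation
        P = (np.eye(2) - K @ H) @ P
        estimates.append(x.ravel().copy())
    return np.array(estimates)


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    t = np.arange(200) * 0.05
    truth = 50.0 - 10.0 * t                              # target approaching at 10 m/s
    noisy = truth + rng.normal(0.0, 0.5, size=t.size)
    track = kalman_track(noisy)
    print(f"final range {track[-1, 0]:.2f} m, range rate {track[-1, 1]:.2f} m/s")
```

Besides smoothing the position, the filter also yields a range-rate estimate that was never measured directly, which is one reason such filters are popular for tracking.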

3. Overview of Deep Learning

This section provides an overview of the current neural network frameworks widely employed in computer vision and machine learning-related fields that could also be applied for processing radar signals. This spans across different models on object detection and classification.

Over the last decade, computer vision and machine learning have seen tremendous progress using deep learning algorithms. This is driven by the massive availability of publicly accessible datasets, as well as by graphics processing units (GPUs) that enable the parallelization of neural network training [74]. Encouraged by its successes across different domains, researchers now employ deep learning in many other fields, including signal processing [75], medical imaging [76], speech recognition [77,78], and much more challenging tasks in autonomous driving applications such as image classification and object detection [79,80].

However, before we dive into the deep learning discussion, it is important to briefly discuss traditional machine learning algorithms, as they are the foundation of deep learning models. While deep learning and machine learning are both specialized research fields in artificial intelligence, they have significant differences. Machine learning utilizes algorithms to analyze given data, learn from it, and provide a decision based on what it has learned. One of the famous problems solved by machine learning algorithms is classification, where the algorithm provides a discrete prediction response. Usually, the machine learning algorithm uses feature extraction algorithms to extract notable features from the given input data and subsequently makes a prediction using classifiers. Some examples of machine learning algorithms include symbolic methods such as support vector machines (SVM), Bayesian networks, and decision trees, and nonsymbolic methods such as genetic algorithms and neural networks.

On the other hand, a deep learning algorithm is structured as multiple layers of artificial neural networks, inspired by the way neurons in the human brain function. Neural networks learn high-level feature representations from the input data, which are used to make intelligent decisions. Some common deep learning networks include deep convolutional neural networks (DCNNs), recurrent neural networks (RNNs), and autoencoders.

The most significant distinction between deep learning and machine learning is how their performance scales with the amount of available data. When the training data is scarce, deep learning performs poorly, because deep models need a large volume of data to learn well. Classical machine learning methods, on the other hand, perform well with small datasets. Deep learning also depends on powerful high-end machines: deep models are composed of many parameters and require a long time to train, and the complex matrix multiplications they perform are readily parallelized and optimized on GPUs. In contrast, machine learning algorithms can work efficiently even on low-end machines with only CPUs.

Another important aspect of machine learning is feature engineering, which utilizes domain knowledge to create feature extractors that minimize the complexity of the data and make the patterns in the data visible for the learning algorithm. However, this process is very challenging and time-consuming. Generally, the machine learning algorithm’s performance depends heavily on how precisely the features are identified and extracted. On the other hand, deep learning learns high-level features from its single end-to-end network. There is no need to put in any mechanisms to evaluate the features or understand what best represents the input data. In other words, deep learning does not need feature engineering, as the features are extracted automatically. Hence, deep learning eliminates the need for developing a new feature extraction algorithm for every problem.

3.1. Machine Learning

Machine learning is an emerging discipline that is now widely applied in science, engineering, medicine, and other fields. It is a subset of artificial intelligence that relies on computational statistics to produce a model capturing the relations between the input and output data. The system uses mathematical models to learn significant high-dimensional data structures (i.e., how to perform a particular task) from given data and makes decisions/predictions based on the learned information. The learning method can be divided into three categories, namely supervised, unsupervised, and reinforcement learning. Classification and regression are the most typical tasks performed by machine learning algorithms. To solve a classification problem, the model is required to find which of $k$ categories an input corresponds to. Therefore, classification is required to discriminate an object from the list of all other object categories.

The first stage in the machine learning algorithm is feature extraction. The input data is processed and transformed into high-dimensional representations (i.e., features) that contain the most significant information about the objects, discarding irrelevant information. SIFT, HOG, Haar-like features, and Gaussian mixture models are some of the most widely employed traditional techniques for feature extraction. After the learning procedure, a decision can be reached using classifiers. In most cases, Naïve Bayes, K-nearest neighbor, and support vector machines (SVM) are the commonly exploited classifiers.

Machine learning is also used to learn important data structures from the radar data acquired for different moving targets. Many papers on radar target recognition using machine learning methods have been presented in the literature [81,82,83]. For instance, the author of [81] presented a classification of airborne targets based on supervised machine learning algorithms (SVM and Naïve Bayes). Airborne radar was used to provide the measurements of the aerial, sea surface, and ground moving targets. C. Abeynayake et al. [82] developed an automatic target recognition approach based on a machine learning algorithm applied to ground penetration radar data. Their system helps detect complex features that are relevant to a multitude of threat objects. In [83], a machine learning-based method for target detection using radar processors was proposed, in which the performance of machine learning-based classifiers (random decision forests) was compared with that of a deep learning algorithm (RNNs). The results demonstrated that machine learning classifiers could discriminate targets from clutter with good precision. The main disadvantage of the machine learning approach is that it requires a prior feature extraction procedure before the final decision-making. With the recent revolution brought about by deep learning technology, enabled by the availability of huge datasets and more powerful processing hardware (i.e., GPUs), machine learning models are now regarded as inferior in performance, though their computational complexity is lower, and a high performance can be achieved with a small amount of training data.

3.2. Deep Learning

Deep learning is a subset of machine learning that can be viewed as an extension of artificial neural networks (ANNs) applied to raw sensory data to capture and extract high-level features that can be mapped to the target variable. For example, given an image as the sensory data, the deep learning algorithm will extract the object’s features, like edges or texture, from the raw image pixels.

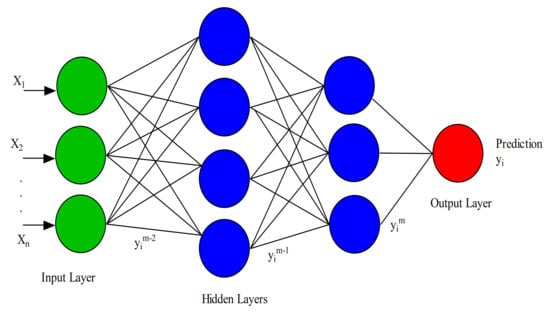

In general, a deep learning algorithm consists of ANNs with one or more intermediate layers. The network is considered “deep” after stacking several intermediate layers of ANNs. The ANNs are responsible for transforming the low-level data into a more abstract level of representation. In this network, the first layer’s output is passed as input to the intermediate layers before producing the final output. Through this process, the intermediate layers enable the network to learn a nonlinear relationship between the inputs and outputs by extracting more complex features, as depicted in Figure 3. Deep learning models consist of many different components stacked together to form the main network model (e.g., convolution layers, pooling layers, fully connected layers, gates, memory cells, encoders, and decoders), depending on the type of network architecture employed (e.g., CNNs, RNNs, or autoencoders).

Figure 3. A simple structure of neural networks: input layer in green, output in red, and the hidden layers in blue.

3.3. Training Deep Learning Models

Deep learning employs the Backpropagation algorithm to update the weights in each of the layers during the learning process. The weights of the network are usually initialized randomly with small values. Given a training sample, the predictions are obtained based on the current weight values, and the outputs are compared with the target variable. An objective function is utilized to make the comparison and estimate the error. The error obtained is propagated back through the network to update the network weights accordingly. More information on Backpropagation can be found in [84].
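The training loop described above can be sketched with a tiny two-layer network written directly in NumPy. The XOR task, sigmoid activations, mean squared error objective, plain gradient descent, and all hyperparameters are assumptions chosen to keep the example short; they illustrate the forward pass, error computation, and weight update, not a training recipe from the reviewed papers.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def train_xor(epochs=3000, lr=0.5, hidden=4, seed=0):
    """Train a 2-layer network on XOR with hand-written backpropagation.

    Returns the list of mean squared errors, one per epoch.
    """
    rng = np.random.default_rng(seed)
    x = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
    y = np.array([[0], [1], [1], [0]], dtype=float)
    # Small random initial weights, zero biases.
    w1 = rng.normal(0.0, 0.5, size=(2, hidden))
    b1 = np.zeros((1, hidden))
    w2 = rng.normal(0.0, 0.5, size=(hidden, 1))
    b2 = np.zeros((1, 1))
    losses = []
    for _ in range(epochs):
        # Forward pass: predictions from the current weights.
        h = sigmoid(x @ w1 + b1)
        out = sigmoid(h @ w2 + b2)
        # Objective function: mean squared error against the targets.
        losses.append(float(np.mean((out - y) ** 2)))
        # Backward pass: propagate the error through each layer (chain rule).
        d_out = 2.0 * (out - y) / len(x) * out * (1.0 - out)
        d_h = (d_out @ w2.T) * h * (1.0 - h)
        # Gradient descent update of every weight and bias.
        w2 -= lr * (h.T @ d_out)
        b2 -= lr * d_out.sum(axis=0, keepdims=True)
        w1 -= lr * (x.T @ d_h)
        b1 -= lr * d_h.sum(axis=0, keepdims=True)
    return losses


if __name__ == "__main__":
    history = train_xor()
    print(f"loss: {history[0]:.4f} -> {history[-1]:.4f}")
```

Deep learning frameworks automate the backward pass through automatic differentiation, but the quantities they compute are the same gradients written out by hand here.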

3.4. Deep Neural Network Models

Here, we provide an overview of some of the popular deep neural networks utilized by the research communities, which include the deep convolutional neural networks (DCNNs), recurrent neural networks (RNNs), long short-term memory (LSTM), encoder-decoder, and the generative adversarial networks (GANs).

3.4.1. Deep Convolutional Neural Networks

Deep convolutional neural networks (DCNNs) are one of the most prominent deep learning models utilized by research communities, especially in computer vision and related fields. DCNNs were first introduced by K. Fukushima [85], using the concept of a hierarchical representation of receptive fields from the visual cortex, as presented by Hubel and Wiesel. Afterward, Waibel et al. [86] proposed convolutional neural networks (CNNs) that share weights across temporal receptive fields and are trained with Backpropagation. Later, Y. LeCun [87] presented the first CNN architecture for document recognition. DCNN models typically accept 2D images or sequential data as the input.

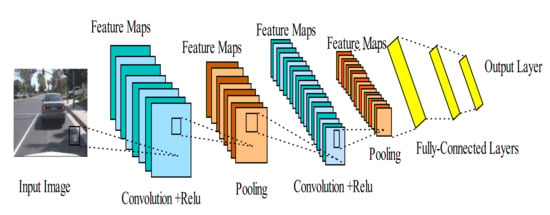

A DCNN consists of several convolutional layers, pooling layers, nonlinear layers, and multiple fully connected layers that are periodically stacked together to form the complete network, as shown in Figure 4. Within the convolutional layers, the CNN uses a set of filters (kernels) to convolve the input data (usually, a 2D image) and extract various feature maps. The nonlinear layers are mainly applied as activation functions to the feature maps. The pooling operation, in contrast, is used for down-sampling the extracted features and learning invariance to small input translations. Max-pooling is the most commonly employed pooling method. Lastly, the fully connected layers are used to transform the feature maps into a feature vector. Stacking these layers together forms a deep multi-level network, with the higher layers being composites of the lower layers. The network’s name is derived from the convolution operation that is spread across the layers of the whole network. A standard convolution operation in a simple CNN model involves the multiplication of a 2D image $I$ with a kernel filter $K$, as given in [87] and shown below:

$$S(i,j) = (I * K)(i,j) = \sum_{m}\sum_{n} I(m,n)\,K(i-m,\,j-n) \tag{9}$$

Figure 4.

A simple deep convolutional neural network (DCNN) architecture. Adapted from [87].

The process involved in the whole DCNN can be represented mathematically if we express $x$ as the input data of size $m \times m \times c$, with $m \times m$ representing the spatial size of $x$ and $c$ the number of channels. Similarly, we assume a $j$-th filter with its associated weights $w_j$ and bias $b_j$. Then, we can obtain the $j$-th output associated with the convolutional layer, as given in [87]:

$$y_j = f\left(\sum_{i=1}^{c} x_i * w_{j,i} + b_j\right)$$

where $x_i$ is the $i$-th input channel, $w_{j,i}$ is the slice of $w_j$ applied to that channel, and the activation function $f(\cdot)$ is employed to improve the network nonlinearity; at the moment, ReLU [79] is the most commonly used activation function in the literature.
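The layer operations described above can be illustrated with a minimal NumPy sketch. It assumes a single-channel input, stride 1, and no padding, and, like most deep learning libraries, it computes cross-correlation (the kernel is not flipped). The helper names `conv2d_valid`, `relu`, and `max_pool2d`, as well as the toy kernel and bias, are hypothetical and are not taken from [87].

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (stride 1, no padding) and sum the products."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.empty((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def relu(x):
    """Element-wise rectified linear activation."""
    return np.maximum(x, 0.0)

def max_pool2d(x, size=2):
    """Non-overlapping max-pooling; rows/columns that do not fit are dropped."""
    h, w = x.shape[0] // size, x.shape[1] // size
    return x[:h * size, :w * size].reshape(h, size, w, size).max(axis=(1, 3))

image = np.arange(36, dtype=float).reshape(6, 6)   # toy 6x6 single-channel input
kernel = np.array([[-1.0, 0.0], [0.0, 1.0]])       # hypothetical 2x2 filter
bias = 0.5                                         # hypothetical bias

feature_map = relu(conv2d_valid(image, kernel) + bias)  # convolution + activation
pooled = max_pool2d(feature_map)                        # down-sampled feature map
```

A multi-channel layer would sum such per-channel responses before adding the bias and applying the activation.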

DCNNs have been the most employed deep learning-based algorithms over the last decade in many applications. This is due to their strong capability to exploit the local connectivity of the input data through multiple combinations of convolution and pooling layers that automatically extract the features. Among the most popular DCNN architectures are Alex-Net [79], VGG-Net [88], Res-Net [89], Google-Net [90], Mobile-Net [91], and Dense-Net [92], to mention a few. The promising performance they achieved in image classification has led to their application in learning and recognizing radar signals. Furthermore, weight sharing and invariance to translation, scaling, rotation, and other transformations of the input data are essential in recognizing radar signals. Over the past few years, DCNNs have been employed to process various types of millimeter-wave radar data for object detection, recognition, human activity classification, and many more tasks [37,38,39,45,47], with excellent accuracy and efficiency. This is due to their ability to extract high-level abstracted features by exploiting the radar signal’s structural locality. Similarly, using DCNNs with radar signals allows features to be extracted according to their frequency and rate of change. However, DCNNs alone cannot model the temporal information in sequential data such as human motion, in which every type of activity consists of a specific sequence of postures.

3.4.2. Recurrent Neural Networks (RNNs) and Long Short-term Memory (LSTM)

Recurrent neural networks (RNNs) are network models designed specifically for processing sequential data. The output of an RNN depends on both the present input and the earlier outputs embedded in its hidden state [93]. This is because RNNs have a memory to store the earlier outputs, which multilayer perceptron neural networks lack. Equation (11) shows the update of the memory state according to [88]:

$$h_t = f\left(W x_t + U h_{t-1}\right) \tag{11}$$

where $f$ is a nonlinear transformation function such as tanh or ReLU, $x_t$ is the input to the network at time $t$, $h_t$ represents the hidden state at time $t$ and acts as the memory of the network, and $h_{t-1}$ represents the previous hidden state. Similarly, $W$ is the weight matrix that represents the RNN input-to-hidden connections, while $U$ is the weight matrix representing the hidden-to-hidden recurrent connections.
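As a minimal sketch of this recurrence, the NumPy snippet below applies the hidden-state update to a short random sequence. It assumes tanh as the nonlinearity and omits bias terms; the names `rnn_step`, `W_xh` (input-to-hidden weights), and `W_hh` (hidden-to-hidden weights), and all sizes, are hypothetical choices made for illustration.

```python
import numpy as np

def rnn_step(x_t, h_prev, W_xh, W_hh):
    """One recurrent update: new hidden state from current input and previous state."""
    return np.tanh(W_xh @ x_t + W_hh @ h_prev)

rng = np.random.default_rng(0)
input_size, hidden_size, seq_len = 3, 4, 5          # hypothetical sizes
W_xh = rng.normal(scale=0.1, size=(hidden_size, input_size))
W_hh = rng.normal(scale=0.1, size=(hidden_size, hidden_size))

sequence = rng.normal(size=(seq_len, input_size))   # toy input sequence
h = np.zeros(hidden_size)                           # initial hidden state
hidden_states = []
for x_t in sequence:
    h = rnn_step(x_t, h, W_xh, W_hh)                # the same weights are reused at every step
    hidden_states.append(h)
hidden_states = np.stack(hidden_states)             # shape (seq_len, hidden_size)
```

Because the same two matrices are reused at every time step, the sequence length does not need to be fixed in advance.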

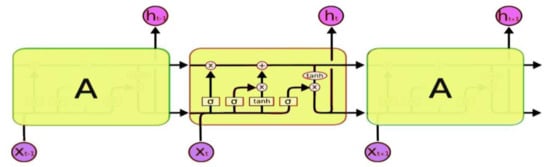

RNNs are also trained using the backpropagation algorithm and can be applied in many areas, such as natural language processing, speech recognition, and time series prediction. However, RNNs suffer from a deficiency called gradient instability, which means that, as the input sequence grows, the gradient vanishes or explodes. A long short-term memory (LSTM) network model was later proposed in [94] to overcome this problem and was then upgraded in [95]. LSTM adds a memory cell to the RNN to store each neuron’s state, which mitigates the vanishing-gradient problem. The LSTM architecture is shown in Figure 5 and consists of three different gates that control the memory cell’s data flow.

Figure 5.

Long short-term memory (LSTM) architecture. Image source [96].

Unlike DCNNs, which only process input data of predetermined sizes, RNNs and LSTMs can process sequences of varying length, and their predictions are refined as more data become available. Their output prediction changes with time, and they are accordingly sensitive to changes in the input data. For radar signal processing, especially human activity recognition, RNNs can exploit the temporal and spatial correlation characteristics of the radar signal, which is vital in human activity recognition [38].

As shown in Figure 5, LSTM has a chain-like structure, with repeated neural network blocks (yellow rectangles) having different structures. The neural network blocks are also called memory blocks or cells. Each cell accepts an input $x_t$ at time $t$ and produces an output $h_t$, called the hidden state. Two states are then transferred to the next cell, namely, the cell state $c_t$ and the hidden state $h_t$. These cells are responsible for remembering information, while their contents are manipulated using gates (i.e., the input gate, forget gate, and output gate) [95]. The input gate adds information to the cell state. The forget gate removes information from the cell state, while the output gate is responsible for creating a vector by applying a function (tanh) to the cell state and uses filters to regulate it before sending the output.
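The gate mechanism described above can be sketched as a single LSTM step in NumPy. This follows the common textbook formulation rather than any specific implementation from [94,95]; the stacked weight layout (`W` of shape `(4*H, D+H)`), the gate ordering, and the name `lstm_step` are assumptions made for illustration.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x_t, h_prev, c_prev, W, b):
    """One LSTM step. W has shape (4*H, D+H) and b has shape (4*H,)."""
    H = h_prev.shape[0]
    z = W @ np.concatenate([x_t, h_prev]) + b
    i = sigmoid(z[0:H])            # input gate: how much new content to add
    f = sigmoid(z[H:2 * H])        # forget gate: how much old cell state to keep
    o = sigmoid(z[2 * H:3 * H])    # output gate: how much of the cell to expose
    g = np.tanh(z[3 * H:4 * H])    # candidate cell content
    c_t = f * c_prev + i * g       # updated cell state
    h_t = o * np.tanh(c_t)         # updated hidden state
    return h_t, c_t

rng = np.random.default_rng(0)
D, H = 3, 2                                        # hypothetical input and hidden sizes
W = rng.normal(scale=0.1, size=(4 * H, D + H))
b = np.zeros(4 * H)
h, c = np.zeros(H), np.zeros(H)
for x_t in rng.normal(size=(6, D)):                # toy sequence of six inputs
    h, c = lstm_step(x_t, h, c, W, b)
```

The additive update of the cell state is what lets information, and gradients, persist across many time steps.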

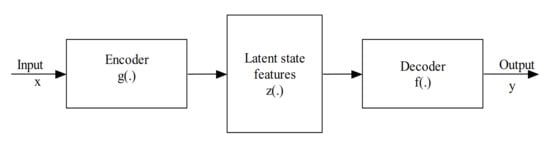

3.4.3. Encoder-Decoder

The encoder-decoder network is a model that uses a two-stage network to transform the input data into the output data. The encoder is represented by a function $z = f(x)$ that encodes the input information and transforms it into a higher-level representation. The decoder, given by $y = g(z)$, then tries to reconstruct the output from the encoded data [97].

The high-level representations used here refer to the feature maps that capture the essential discriminant information from the input data needed to predict the output. This model is particularly prevalent in image-to-image translation and natural language processing. The model is trained by minimizing the error between the real and the reconstructed data. Figure 6 illustrates a simplified block diagram of the encoder-decoder model.

Figure 6.

A simple encoder-decoder architecture.

Variants of the encoder-decoder model include the stacked autoencoder (SAE) and the convolutional autoencoder (CAE), among others. Such models have been applied in many application domains, including radar signal processing [98]. For instance, because of the benefit of localized feature extraction, as well as the unsupervised pretraining technique, CAE has been reported to outperform DCNN for moving object classification. However, most of these models are based on fully connected networks, and they cannot necessarily extract the structured features embedded in the radar data, especially those contained in the range cells of the high-resolution range profile (HRRP) of a wideband radar. This is because an HRRP reflects the distribution of the target scatterers along the range dimension.

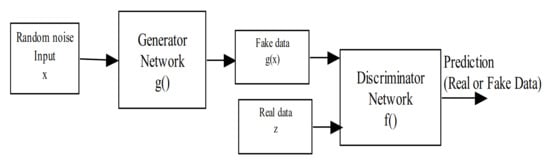

3.4.4. Generative Adversarial Networks (GANs)

Generative Adversarial Networks (GANs) are among the most prominent deep neural networks in the generative models family [99]. They are made of two network blocks, a generator and a discriminator, as shown in Figure 7. The generator usually receives random noise as its input and processes it to produce output samples that look similar to the data distribution (e.g., fake images). In contrast, the discriminator tries to distinguish between the real data samples and those produced by the generator.

Figure 7.

Generative adversarial network.
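The competition between the generator and the discriminator is commonly written as the minimax objective of the original GAN formulation. The symbols below follow the usual convention and are introduced here only for illustration: $G$ and $D$ denote the generator and discriminator, $p_{\text{data}}$ the real data distribution, and $p_z$ the noise prior.

$$\min_{G} \max_{D} V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]$$

The discriminator is trained to assign a high value of $D(x)$ to real samples and a low value to generated samples $G(z)$, while the generator is trained to make $D(G(z))$ as large as possible.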

One of the major bottlenecks in applying deep learning models to radar signals is the lack of accessible radar datasets with annotations. Labeling is one of the most challenging tasks in computer vision and its related applications; with unsupervised generative models such as GANs, one could generate a large amount of radar signal data and train on it in an unsupervised manner, removing the need for laborious labeling. GANs have been used over the years for many applications, but very few studies have been performed using GANs and radar signal data [100]. A crucial issue with GANs is training instability, which sometimes leads to collapse, although many variants have been proposed over the years to tackle this specific problem.

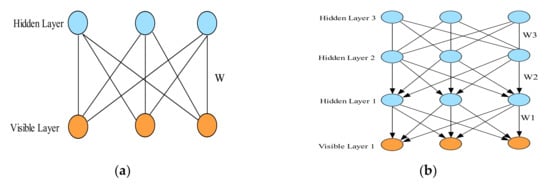

3.4.5. Restricted Boltzmann Machine (RBM) and Deep Belief Networks (DBNs)

Restricted Boltzmann Machines (RBMs) have received increasing attention in recent years, as they have been adopted as the essential building components of deep belief networks (DBNs) [101]. An RBM is a specialized Boltzmann Machine (BM) with the added restriction of discarding all the connections within each visible or hidden layer, as shown in Figure 8a. Thus, the model is described as a bipartite graph. An RBM can therefore be described as a probabilistic model consisting of a visible layer (units) and a hidden layer (units) that models the joint probability of the given data [102]. The two layers are connected using symmetric, undirected weights, while there are no intra-connections within either of the layers. The visible layer describes the input data (observable data) whose probability distribution is to be determined, while the hidden layer is trained to learn higher-order representations from the visible units.

Figure 8.

(a) Schematic of the Restricted Boltzmann Machine (RBM) architecture. (b) Schematic architecture of deep belief networks with one visible and three hidden layers [101].

The joint energy function of an RBM network according to the hidden and visible layers is determined using its weights and biases, as expressed in [102]:

$$E(v, h) = -\sum_{i} a_i v_i - \sum_{j} b_j h_j - \sum_{i} \sum_{j} v_i w_{ij} h_j$$

where $w_{ij}$ represents the symmetric weight, and $a_i$ and $b_j$ denote the biases of the visible unit $v_i$ and the hidden unit $h_j$, respectively. In the standard binary RBM, $v_i \in \{0, 1\}$ and $h_j \in \{0, 1\}$.

The model allocates a joint probability distribution to each vector combination in the layers based on the energy function defined in [102] and given by:

$$P(v, h) = \frac{1}{Z} e^{-E(v, h)}$$

where $Z$ is the normalization factor determined by adding up all the possible combinations of visible and hidden vectors, defined as:

$$Z = \sum_{v} \sum_{h} e^{-E(v, h)}$$
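For a very small RBM, the energy, the normalization factor, and the joint probability can be checked by brute-force enumeration. The sketch below assumes binary units; the names `W`, `a`, and `b` for the weights and the visible and hidden biases are conventional choices introduced here. The enumeration is only feasible for a handful of units, since the number of states grows exponentially.

```python
import itertools
import numpy as np

def energy(v, h, W, a, b):
    """Joint energy of a binary RBM: -a.v - b.h - v^T W h."""
    return -(a @ v) - (b @ h) - (v @ W @ h)

def partition_function(W, a, b):
    """Brute-force normalization factor: sum of exp(-E) over all binary states."""
    n_v, n_h = W.shape
    total = 0.0
    for v in itertools.product([0, 1], repeat=n_v):
        for h in itertools.product([0, 1], repeat=n_h):
            total += np.exp(-energy(np.array(v), np.array(h), W, a, b))
    return total

def joint_probability(v, h, W, a, b):
    """Probability the model assigns to one (visible, hidden) configuration."""
    return np.exp(-energy(v, h, W, a, b)) / partition_function(W, a, b)

# With all parameters at zero, every one of the 2^(2+3) = 32 states is equally likely.
W0, a0, b0 = np.zeros((2, 3)), np.zeros(2), np.zeros(3)
Z_uniform = partition_function(W0, a0, b0)
p_uniform = joint_probability(np.array([1, 0]), np.array([0, 1, 1]), W0, a0, b0)
```

In practice the normalization factor is intractable for realistic layer sizes, which is why RBM training relies on approximations rather than this exact sum.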

Deep belief networks (DBNs) are generative graphical deep learning models developed by R. Salakhutdinov and G. Hinton [103], who demonstrated that multiple RBMs could be stacked and trained in a specialized way (called the greedy approach). Figure 8b illustrates an example of a three-layer deep belief network. Unlike the RBM model, a DBN uses bidirectional connections (i.e., the same as in an RBM) only at its top layer, whereas the layers below use only top-down connections. The main reason behind the interest in this model is its training principle, called layer-wise pretraining (i.e., the greedy method). DBNs have been applied in many research domains, such as speech recognition, image classification, and audio classification.

The simplest and most familiar application of the DBN model is feature extraction. However, the complexity of the DBN’s learning procedure is high, as it learns the joint probability distribution of the data. DBNs also raise serious concerns about overfitting and about vanishing gradients, which affect training as the network depth increases.

3.5. Object Detection Models

Object detection can be viewed as the act of identifying and localizing one or multiple objects in a given scene. This usually involves estimating the classification probability (labels) in conjunction with calculating the object’s location or bounding boxes. DCNN-based object detectors fall into two groups: two-stage object detectors and one-stage object detectors.

3.5.1. One-Stage Object Detectors

This method uses a single-stage network model to extract the feature maps used to obtain the classification scores and bounding boxes. Many unified one-stage models have been proposed in the literature. For instance, the earlier models include the Single-Shot Multi-Box Detector (SSD) [7], which uses small CNN filters to predict multi-scale bounding boxes. This model is aimed at handling objects of different sizes. The YOLO object detector [8] is among the fastest of the single-stage family. It regresses the bounding boxes in conjunction with the classification score directly via a single CNN model.

3.5.2. Two-Stage Object Detectors

In these detectors, the object candidate region, also called the Region of Interest (ROI) or Region Proposal (RP), is first predicted from a given scene. The ROIs are then processed to acquire the classification score and the bounding boxes of the target objects. Examples of these types of object detectors are R-CNN [104], Fast-RCNN [105], Faster-RCNN [6], and Mask-R-CNN [9]. The region proposal generation generally helps these models to provide better accuracy than one-stage detectors.

However, this comes at the cost of more complex training and a higher inference time, making them relatively slower than their one-stage counterparts. In contrast, one-stage object detectors are easier to train and faster for real-time applications.

4. Detection and Classification of Radar Signals Using Deep Learning Algorithms

This section provides an in-depth review of the recent deep learning algorithms that employ various radar signal representations for object detection and classification in both ADAS and autonomous driving systems. One of the most challenging tasks in using radar signals with deep learning models is representing the radar signals to fit in as inputs to the various deep learning algorithms.

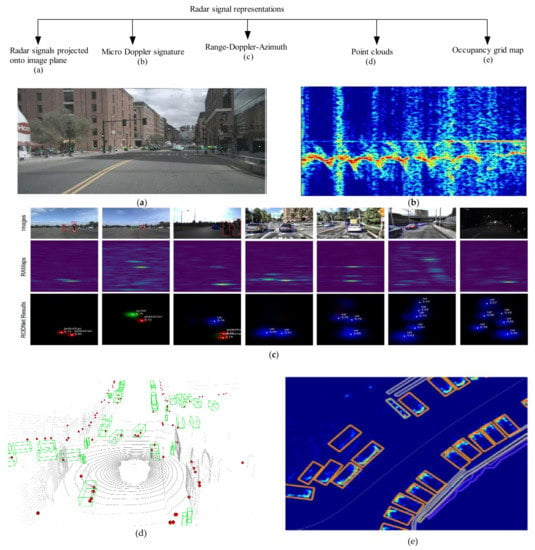

In this respect, many radar data representations have been proposed over the years. These include radar occupancy grid maps, Range-Doppler-Azimuth tensor, radar point clouds, micro-Doppler signature, etc. Each one of these radar data representations has its pros and cons. With the recent availability of accessible radar data, many studies have begun to explore radar data to understand them extensively. Thus, we based our review article on this direction. Figure 9 illustrates an example of the various types of radar signal representations.

Figure 9.

Examples of different radar signal representations. (a) Radar point clouds projected onto the camera image plane [58], with different colors depicting the depth information. (b) Spectrograms of a person walking away from the FMCW radar. (c) Samples of the Range-Doppler-Azimuth (RAMAP) representations from the CRUW dataset [98], with the first row showing the images from the scene and the second row depicting their equivalent RAMAP tensors. (d) Sample of radar point clouds (red) with 3D annotations (green) and Lidar point clouds (grey) from the nuScenes dataset. Image from [118]. (e) Example of a radar occupancy grid from a scene with multiple parked automobiles [119]. The white line represents the test vehicle driving path, and the rectangles represent the manually segmented objects along the grid.

4.1. Radar Occupancy Grid Maps

When a host vehicle equipped with radar sensors drives along a given road, the radar sensors can collect data about its motion in that environment. At every point in time, the radars can resolve the radial distance, the azimuth angle, and the radial velocity of the objects that fall within their field of view. Distance and angle (both elevation and azimuth) describe the target’s relative position (orientation) with respect to the ego vehicle coordinate system. Meanwhile, the target’s radial velocity obtained from the Doppler frequency shift aids in detecting moving targets.

Hence, based on the vehicle pose, radar return signals can be accumulated in the form of occupancy grid maps from which algorithms in machine learning and deep learning can be utilized to detect the objects surrounding the ego vehicle. In this way, both static and dynamic obstacles in front of the radar can be segmented, identified, and classified. The authors of [106] discussed different radar occupancy grid map representations. The grid map algorithm’s sole purpose is to determine the probability of whether each of the cells in the grids is empty or occupied.

Elfes reported the first occupancy grid map-based algorithm for robot perception and navigation [107]. In the beginning, most of the algorithms, especially in robotics, used laser sensor data. However, with the recent success of radar sensors, many occupancy grids employ radar data for different applications. In this case, assume we have an occupancy grid map, $M_t = \{m_1, m_2, \ldots, m_N\}$, consisting of $N$ grid cells $m_i$ that represent an environment as a 2D grid of equally spaced cells. Each of these cells is a random variable with a probability value in [0, 1] expressing its occupancy state over time. If $M_t$ is the grid map representation at time instance $t$ and the cells are assumed to be mutually independent of one another, then the occupancy map can be estimated based on the posterior probability [107]:

$$P(M_t \mid Z_{1:t}, X_{1:t}) = \prod_{i=1}^{N} P(m_i \mid Z_{1:t}, X_{1:t})$$

where $P(m_i \mid Z_{1:t}, X_{1:t})$ is the inverse sensor model, which represents the occupancy probability of the $i$-th cell, $Z_{1:t}$ denotes the sensor measurements, and $X_{1:t}$ is the dynamic object pose from the ego vehicle.

A Bayes filter is typically used to calculate the occupancy value for each cell. For convenience, a log-odds formulation of the posterior is mainly used to integrate each new measurement.
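A minimal sketch of such a per-cell Bayes update in log-odds form is given below. It assumes a uniform prior of 0.5 and a fixed inverse-sensor-model probability per observation; the function names and the probabilities 0.7 and 0.3 are hypothetical values chosen for illustration.

```python
import numpy as np

def logit(p):
    """Log-odds of a probability."""
    return np.log(p / (1.0 - p))

def update_log_odds(grid_log_odds, cell_indices, p_occ_given_z, prior=0.5):
    """Add the inverse-sensor-model evidence for the observed cells (in place)."""
    rows, cols = cell_indices
    grid_log_odds[rows, cols] += logit(p_occ_given_z) - logit(prior)
    return grid_log_odds

def to_probability(grid_log_odds):
    """Convert accumulated log-odds back to occupancy probabilities."""
    return 1.0 / (1.0 + np.exp(-grid_log_odds))

grid = np.zeros((4, 4))                       # log-odds 0 corresponds to p = 0.5
hit = (np.array([1]), np.array([2]))          # cell observed as occupied
free = (np.array([0]), np.array([0]))         # cell observed as free

for _ in range(3):                            # three consistent "occupied" measurements
    grid = update_log_odds(grid, hit, p_occ_given_z=0.7)
grid = update_log_odds(grid, free, p_occ_given_z=0.3)

prob = to_probability(grid)
```

In log-odds form, each new measurement is integrated with a single addition per cell, and repeated consistent measurements push the cell probability toward 0 or 1.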

Although CNNs perform extraordinarily well on images, they can also be applied to other sensors that yield image-like data [108]. The two-dimensional radar grid representations accumulated according to different occupancy grid map algorithms have already been exploited in deep learning domains for various autonomous system tasks, such as static object classification [109,110,111,112,113,114] and dynamic object classification [115,116,117]. In this case, the objects denote any road user within an autonomous system environment, such as pedestrians, vehicles, and motorcyclists.

For example, [109] is one of the earliest articles that employed machine learning techniques with a radar grid map. The authors proposed a real-time algorithm for detecting parallel and cross-parked vehicles using radar grid maps generated based on the occupancy grid reported by Elfes [107]. Candidate regions were extracted, and two random forest classifiers were trained to confirm the presence of parked vehicles. Subsequently, Lombacher et al. [110,111] presented a classification technique for static object recognition based on radar signals and DCNNs. The occupancy grid algorithm was used to accumulate the radar data into grid representations. Each occupancy grid cell was represented by a probability denoting whether it was occupied or not. Bounding boxes were labeled around each of the detected objects and applied to the classifiers as inputs. Bufler and Narayanan [112] also classified indoor targets with the aid of SVM. They generated their feature vectors using the radar cross-section and observation angles from the simulated and measured objects.

The authors of [113] illustrate how to perform static object classification based on a radar grid representation. Their work shows that semantic knowledge can be learned from the generated radar occupancy grids to accomplish cell-wise classification using a CNN. L. Sless et al. [114] proposed occupancy grid mapping using clustered radar data (point clouds). The authors formulated their approach as a computer vision task, namely, a three-class semantic segmentation problem covering the occupied, free, and unobserved spaces in front of or around vehicles. The fundamental idea behind their approach is the adoption of a deep learning model (i.e., an encoder-decoder) to learn the occupancy grid mapping from the clustered radar data. They showed that their approach outperformed the classical filtering methods commonly used in the literature.

In the ideal case, the occupancy grid algorithm isolates static objects by removing moving objects based on their Doppler velocity. However, for complex systems, such as autonomous vehicle systems, both static and dynamic objects need to be detected simultaneously for the whole system’s efficacy. Hence, a radar grid map representation may not be suitable for dynamic objects, as other features have to be exploited in order to recognize moving objects like pedestrians. For dynamic road users such as vehicles, pedestrians, and cyclists, a grid map algorithm would require a longer accumulation time. This is undesirable for applications like autonomous systems, where low latency is necessary.

Some authors, such as [115,116], applied feature-based methods for classification. Schumann et al. [117] utilized a random forest classifier and long short-term memory (LSTM) based on radar data to classify dynamically moving objects. Feature vectors are generated from clustered radar reflections and fed to the classifiers. They found LSTM useful for dynamic objects: it is challenging to transform such radar signals into the image-like data needed by CNN algorithms, whereas, for LSTM, successive feature vectors are simply grouped into a sequence.

The main problems with radar grid map representations with regard to deep learning and autonomous driving systems are:

- After the radar grid map generation, some significant information from the raw radar data may be lost, and thus, they cannot contribute to the classification task.

- The technique may result in a huge map, with many pixels in the grid map being empty, therefore adding more burden to the system complexity.

4.2. Radar Range-Velocity-Azimuth Maps

Given the drawbacks of the radar grid representations discussed in the previous section, especially in detecting moving targets, it is essential to explore other ways to represent the radar data so that more information can be retained to achieve a better performance. A radar image created via a multidimensional FFT can preserve more of the information in the radar signal and also conforms to the 2D grid data representation required by deep learning algorithms like CNNs.

Many kinds of radar image tensors can be generated from the raw radar signals (ADC samples). This includes the range map, the Range-Doppler map, and the Range-Doppler-Azimuth map. A range map is a two-dimensional map that reveals the range profile of the target signal over time. Therefore, it demonstrates how the target range changes over time and can be generated by performing one-dimensional FFT on the raw radar ADC samples.

In contrast, the Range-Doppler map is generated by conducting 2D FFT on the radar frames. The first FFT (also called range FFT) is performed across samples in the time domain signal, while the second FFT (the velocity FFT) is performed across the chirps. In this way, a 2D image of radar targets is created that resolves targets in both range and velocity dimensions.
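The two FFT stages can be sketched in NumPy as follows. The example assumes an idealized, noise-free frame of shape (chirps, samples) containing a single point target whose beat and Doppler frequencies fall exactly on FFT bins; the function name `range_doppler_map` and the bin values are hypothetical, and windowing is omitted for brevity.

```python
import numpy as np

def range_doppler_map(frame):
    """Magnitude Range-Doppler map from a (num_chirps, num_samples) radar frame."""
    range_fft = np.fft.fft(frame, axis=1)            # range FFT across samples of each chirp
    doppler_fft = np.fft.fft(range_fft, axis=0)      # velocity FFT across chirps
    return np.abs(np.fft.fftshift(doppler_fft, axes=0))  # zero velocity moved to the center row

num_chirps, num_samples = 64, 128
range_bin, doppler_bin = 20, 5                       # hypothetical target location in bin units

n = np.arange(num_samples)                           # fast-time sample index
m = np.arange(num_chirps)[:, None]                   # slow-time chirp index
frame = np.exp(2j * np.pi * (range_bin * n / num_samples + doppler_bin * m / num_chirps))

rd_map = range_doppler_map(frame)
peak_doppler, peak_range = np.unravel_index(np.argmax(rd_map), rd_map.shape)
```

The peak of the map appears at the target's range bin and, after the shift, at an offset of `doppler_bin` rows from the central zero-velocity row.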

The Range-Doppler-Azimuth map is interpreted as a 3D data cube. The first two dimensions denote range and velocity, and the third dimension contains information about the target position (i.e., azimuth angle). The tensor is created by conducting a 3-dimensional FFT (3D FFT), consisting of the range FFT, the velocity FFT, and the angle FFT, on the radar return samples sequentially to create the complete map. The range FFT is performed on the time domain signal to estimate the range to the radar. Subsequently, the velocity FFT is executed across the chirps of a frame to generate the Range-Doppler spectrum, which is then passed on to the CFAR detection algorithm to create a 2D sparse point cloud that can distinguish between real targets and the clutter. Finally, the angle FFT is performed on the maximum Doppler peak of each range bin (i.e., the detected Doppler), resulting in a 3D Range-Velocity-Azimuth map.
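The CFAR step mentioned above can be illustrated with a one-dimensional cell-averaging variant (CA-CFAR). The text does not specify which CFAR variant is used, so CA-CFAR is an assumption here, as are the function name and the window sizes and threshold scale.

```python
import numpy as np

def ca_cfar_1d(power, num_train=8, num_guard=2, scale=5.0):
    """Cell-averaging CFAR over a 1D power profile.

    For each cell under test, the noise level is estimated from `num_train`
    training cells on each side, skipping `num_guard` guard cells. Edge cells
    without a full window are never flagged.
    """
    n = len(power)
    detections = np.zeros(n, dtype=bool)
    half = num_train + num_guard
    for i in range(half, n - half):
        lead = power[i - half:i - num_guard]             # training cells before the test cell
        lag = power[i + num_guard + 1:i + half + 1]      # training cells after the test cell
        noise = (lead.sum() + lag.sum()) / (2 * num_train)
        detections[i] = power[i] > scale * noise         # adaptive threshold
    return detections

power = np.ones(64)      # flat noise floor
power[30] = 50.0         # hypothetical target return
mask = ca_cfar_1d(power)
```

Because the threshold adapts to the local noise estimate, the false alarm rate stays roughly constant as the clutter level changes, at the cost of discarding everything below the threshold.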

Most of the earlier studies using the Range-Doppler spectrums extracted from the automotive radar sensors performed either road user detection or classification using machine learning algorithms [36,120,121]. Reference [120] achieved pedestrian classification using a 24-GHz automotive radar sensor for city traffic application. Their system employed support vector machines, one of the most prominent machine learning algorithms, to discriminate pedestrians, vehicles, and other moving objects. Similarly, [121] presented an approach to detect and classify pedestrians and vehicles using a 24-GHz radar sensor with an intertwined Multi-Frequency Shift Keying (MFSK) waveform. Their system considered target features like the range profile and Doppler spectrum for the target recognition task.

S. Heuel and H. Rohling [36] presented a two-stage pedestrian classification system based on a 24-GHz radar sensor. In the first stage, they extracted both the Doppler spectrum and the range profile from the radar echo signal and fed it to the classifier. In the second stage, additional features were obtained from the tracking system and sent back to the recognition system to further improve the final system performance.

However, the machine learning techniques mentioned above require long accumulation times and careful feature selection to achieve a good performance from the handcrafted features extracted from Range-Doppler maps. Due to the success achieved by deep learning algorithms in different tasks, such as image detection and classification, many researchers have now begun to apply them in their domains to benefit from their better performance. With enough training samples and GPU processors, deep learning generally provides a much better performance than its classical machine learning counterparts.

The Range-Doppler-Azimuth spectra extracted from automotive radar sensors have been used frequently as 2D image inputs to various deep learning algorithms for different tasks, ranging from obstacle detection to segmentation, classification, and identification in autonomous driving systems [122,123,124,125,126]. The authors of [122] presented a method to recognize objects in Cartesian coordinates using a high-resolution 300-GHz scanning radar based on deep neural networks. They applied a fast Fourier transform (FFT) on each of the received signals to obtain the radar image. The radar image was then converted from polar radar coordinates to Cartesian coordinates and used as an input to the deep convolutional neural network. Patel et al. [123] proposed an object classification approach based on deep learning applied directly to automotive radar spectra for scene understanding. First, they used a multidimensional FFT on the radar spectra to obtain the 3D Range-Velocity-Azimuth maps. Second, a Region of Interest (ROI) was extracted from the Range-Azimuth maps and used as an input to the DCNNs. Their approach could be seen as a potential substitute for conventional radar signal processing and achieved better accuracy than the machine learning methods. This approach is particularly interesting, as most of the literature uses the full radar spectrum after the multidimensional FFT.

Similarly, Benco et al. [124] used radar signals to illustrate a deep learning-based vehicle detection system for autonomous driving applications. They represent the radar information as a 3D tensor using the first two spatial coordinates (i.e., Range-Azimuth) and then add the third dimension that contains the velocity information, therefore making it a complete Range-Azimuth-Doppler 3D radar tensor and forwarding it as the input to the LSTM. This is in contrast with the earlier approaches in the literature, where they first process the tensor using the CFAR algorithm to acquire 2D point clouds that distinguish the real targets from the surrounding clutter. However, this procedure may remove some important information from the original radar signal.

The authors of [125] presented a uniquely designed CNN, which they named RT-Cnet. This network takes as the input both the target-level (i.e., range, azimuth, RCS, and Doppler velocity) and low-level (Range-Azimuth-Doppler data cube) radar data for a multi-class road user detection system. The system uses a single radar frame and outputs both the classified radar targets and their object proposals created based on the DBSCAN clustering algorithm [68]. In a nutshell, RT-Cnet performs object classification based on both the low-level data and the target-level radar data. The inclusion of the low-level data (i.e., speed distribution) improved the road user classification compared with the clustering methods. The object detection task is achieved through a combination of RT-Cnet and a clustering algorithm that generates the bounding box proposals. A radar target detection scheme based on a four-dimensional space of Range-Doppler-Azimuth and elevation attributes acquired from radar sensors was studied in [126]. The main aim of that approach was to replace the entire conventional detection and beamforming module of the classical radar signal processing system.

Furthermore, radar Range-Velocity-Azimuth spectra generated after the 3D FFT have been applied successfully in many other tasks, such as human fall detection [127], human-robot classification [128], and pose estimation [129,130].

4.3. Radar Micro-Doppler Signatures

Moving objects within a radar field of view (FOV) generate a Doppler frequency shift in the returned radar signal, referred to as the Doppler effect. The Doppler shift is proportional to the target’s radial velocity. Moving objects often contain parts or components that vibrate, rotate, or oscillate, with a motion different from the bulk target motion trajectory. The rotation or vibration of these components may induce an additional frequency modulation on the returned radar signal and create sideband Doppler velocities known as the micro-Doppler signature. This signature provides an image-like representation that can potentially be utilized for target classification or identification using either machine learning or deep learning algorithms.
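As a rough worked example of this proportionality, the standard monostatic relation between the Doppler shift and the radial velocity can be used. The symbols $f_d$ (Doppler shift), $v_r$ (radial velocity), and $\lambda$ (carrier wavelength) are conventional notation introduced here for illustration.

$$f_d = \frac{2 v_r}{\lambda}$$

For a 77-GHz radar, $\lambda \approx 3.9$ mm, so a component moving at $v_r = 1$ m/s relative to the radar produces a shift of roughly 513 Hz. A swinging arm or leg whose radial velocity oscillates around the torso velocity therefore spreads the return over a band of Doppler frequencies around the bulk Doppler line.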

Therefore, this motion-induced Doppler modulation may be captured to determine the dynamic nature of objects. Typical examples of micro-Doppler signatures are the frequency modulation induced by the swinging arms and legs of a walking human and the modulation generated by the rotating propellers of helicopters or unmanned aerial vehicles (UAVs).

The authors of [131] introduced the idea of a micro-Doppler signature and went on to provide a detailed analysis and the mathematical formulation of different micro-Doppler modulation schemes [132,133]. There are various methods for micro-Doppler signature extraction in the literature [134]. The most well-known technique among them is the time-frequency analysis called the short-time Fourier transform (STFT). The STFT of a given signal is estimated mathematically, as expressed in [132] by:

$$\mathrm{STFT}(t, \omega) = \int_{-\infty}^{\infty} x(\tau)\, w(\tau - t)\, e^{-j \omega \tau}\, d\tau$$

where $w(\cdot)$ is the weighting function, and $x(\cdot)$ is the returned radar signal.

Compared to the standard Range-Velocity FFT, STFTs are calculated by dividing a long-time radar signal into shorter frames of equal lengths and then computing the FFT on the segmented frames. This procedure can be exploited to estimate the object’s velocity, representing the various Doppler signatures of the object’s moving parts. There are many methods for extracting radar micro-Doppler signatures with better resolutions than the STFT method; however, discussing them is not within the scope of this work.
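The framing-and-FFT procedure can be sketched in NumPy as shown below. The example assumes a Hann weighting function, a frame length of 64 samples with 50% overlap, and a synthetic complex signal whose frequency jumps from 100 Hz to 250 Hz halfway through; all of these values and the function name `stft` are hypothetical.

```python
import numpy as np

def stft(signal, frame_len=64, hop=32):
    """Split `signal` into overlapping windowed frames and take the FFT of each."""
    window = np.hanning(frame_len)                     # weighting function
    num_frames = 1 + (len(signal) - frame_len) // hop
    frames = np.stack([
        signal[k * hop:k * hop + frame_len] * window
        for k in range(num_frames)
    ])
    return np.fft.fft(frames, axis=1)                  # shape (num_frames, frame_len)

fs = 1000.0                                            # sampling rate in Hz
t = np.arange(1024) / fs
# Toy return whose Doppler frequency changes over time
signal = np.where(t < 0.512,
                  np.exp(2j * np.pi * 100.0 * t),
                  np.exp(2j * np.pi * 250.0 * t))

spec = np.abs(stft(signal)) ** 2                       # spectrogram (power)
freqs = np.fft.fftfreq(64, d=1.0 / fs)                 # frequency of each FFT bin
dominant = freqs[np.argmax(spec, axis=1)]              # strongest frequency per frame
```

Plotting `spec` against time and frequency yields the image-like spectrogram that is typically fed to the classifiers, with the frame length setting the trade-off between time and frequency resolution.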

Over the last decade, radar-based target classification using micro-Doppler features has gained significant research interest, especially with the recent prevalence of high-resolution radars (like the 77-GHz radar), resulting in much more distinct feature representations. In [135], different deep learning methods were applied to the micro-Doppler signatures obtained from Doppler radar for car, pedestrian, and cyclist classifications. The authors of [136,137] extracted and analyzed the micro-Doppler signature for pedestrian and vehicle classifications. In [138], the micro-Doppler spectrograms of different human gait motions were extracted for human activity classifications. Similarly, the authors of [139] performed both human detection and activity classification, exploiting radar micro-Doppler spectrograms generated from Doppler radar using DCNNs. However, without the range and angle dimensions, their system cannot spatially detect humans but only predict a human presence or absence from the radar signal.

Angelov et al. [38] demonstrated the capability of different DCNNs to recognize cars, people, and bicycles using micro-Doppler signatures extracted from an automotive radar sensor. The authors of [140] presented an approach based on hierarchical micro-Doppler information to classify vehicles into two groups (i.e., wheeled and tracked vehicles). Moreover, P. Molchanov et al. [141] presented an approach to recognize small UAVs and birds using their signatures measured with a 9.5-GHz radar. The features extracted from the signatures were evaluated with SVM classifiers.

4.4. Radar Point Clouds

The idea of radar point clouds is derived from computer vision domains concerning 3D point clouds obtained from Lidar sensors. Point clouds are unordered/scattered 3D data representations of information acquired by 3D scanners or Lidar sensors that can preserve the geometric information present in a 3D space and do not require any discretization [142]. This kind of 3D data representation provides high-resolution data that is very rich spatially and contains the depth information, compared to 2D grid image representations. Hence, they are the most commonly used representations for different scene understanding tasks, such as object segmentation, classification, detection, and many more.

Even though radar provides 2D data in polar coordinates, the radar signal can also be represented as a point cloud, albeit in a different way. In conventional radar signal processing, a 2D-FFT is usually conducted on the reflected radar signals to resolve the range and the velocity of the targets in front of the radar sensor. A CFAR detection is then applied to separate the targets from the surrounding clutter and noise. With this approach, the detected peaks of the targets after CFAR can be viewed (or represented) as a point cloud with associated attributes, such as the range, azimuth, RCS, and compensated Doppler velocity. Therefore, a radar point cloud can be defined as a set of independent points $p_i$, $i = 1, \ldots, n$, in which the order of the points is insignificant. For each radar detection, the radial distance $r$, azimuth angle $\phi$, radar cross-section (RCS) $\sigma$, and the ego-motion compensated Doppler velocity $v$ can be generated. Therefore, a four-dimensional radar point cloud is acquired.
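To make the detection step concrete, the following sketch applies a cell-averaging CFAR (one common CFAR variant, assumed here because the text does not name one) to a small synthetic range-Doppler power map and turns the surviving peaks into points. The map size, guard and training cell counts, threshold scale, and bin resolutions are all hypothetical. Azimuth estimation requires multiple antenna channels and is omitted, and the cell power is used only as a crude stand-in for the RCS.

```python
def ca_cfar_2d(power, guard=1, train=2, scale=8.0):
    """Cell-averaging CFAR: keep cells exceeding scale * mean of training cells."""
    rows, cols = len(power), len(power[0])
    half = guard + train
    detections = []
    for r in range(half, rows - half):
        for d in range(half, cols - half):
            total, count = 0.0, 0
            for i in range(r - half, r + half + 1):
                for j in range(d - half, d + half + 1):
                    # Skip the cell under test and its guard cells.
                    if abs(i - r) > guard or abs(j - d) > guard:
                        total += power[i][j]
                        count += 1
            if power[r][d] > scale * total / count:
                detections.append((r, d))
    return detections


# Hypothetical 16 x 16 range-Doppler power map: flat noise floor plus two targets.
N_RANGE, N_DOPPLER = 16, 16
power_map = [[1.0] * N_DOPPLER for _ in range(N_RANGE)]
power_map[5][7] = 40.0
power_map[10][3] = 60.0

RANGE_RES = 0.5      # metres per range bin (assumed)
VELOCITY_RES = 0.25  # metres per second per Doppler bin (assumed)

detections = ca_cfar_2d(power_map)

# Each detection becomes one point: (range, radial velocity, power).
# The Doppler axis is assumed to be centred, so bin N_DOPPLER // 2 is zero velocity.
points = [
    (r * RANGE_RES, (d - N_DOPPLER // 2) * VELOCITY_RES, power_map[r][d])
    for r, d in detections
]
```

Because `points` is just a collection of attribute tuples, shuffling it does not change the information it carries, which is the order-independence property that point-based networks rely on.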

Some studies have recently started implementing deep learning models using radar point clouds for different applications [45,49,50,51,143,144]. The authors of [51] presented the first article that employed radar point clouds for semantic segmentation. They used radar point clouds as the input to the classification algorithm, instead of feature vectors acquired from the clustered radar reflections. In essence, they assigned a class label to each of the measured radar reflections. In reference [45], Andreas Danzer et al. employed the PointNet++ [145] model using radar point clouds for 2D object classifications and bounding box estimations. They used the well-known PointNet family of models, which was originally designed to consume 3D Lidar point clouds, and adjusted it to fit radar point clouds with different attributes and characteristics.

O. Schumann et al. [50] proposed a new pipeline to segment both static and moving objects using automotive radar sensors for semantic (instance) segmentation applications. They used two separate modules to accomplish their task. In the first module, they employed a 2D CNN to segment the static objects using radar grid maps. To achieve that, they introduced a new grid map representation by integrating the radar cross-section (RCS) histogram into the occupancy grid algorithm proposed in [146] as a third additional dimension, which they named the RHG-Layer. In the second module, they introduced a novel recurrent network architecture that accepted radar point clouds as inputs for instance segmentation of the moving objects. The results from the two modules were merged at the final stage of the pipeline to create one complete semantic point cloud from the radar reflections. Zhaofei Feng et al. [49] presented object segmentation using radar point clouds and PointNet++. Their method explicitly detected and classified the lane marking, guardrail, and moving cars on a highway.

S. Lee [144] presented a deep learning-based, radar-only 3D object detection system trained on a public radar dataset. The work aimed to overcome the shortage of labeled radar data, which usually leads to overfitting during deep learning training. It introduced a novel augmentation method that transforms Lidar point clouds into radar-like point clouds and adopted Complex-YOLO [147] for one-stage 3D object detection.

4.5. Radar Signal Projection

In this method, radar detections or point clouds are usually transformed into a 2D image plane. The relationship between the radar coordinate system, the camera coordinate system, and the coordinate system in which the object is situated plays a vital role in this case. Therefore, the camera calibration matrices (i.e., intrinsic and extrinsic parameters) are used to transform the radar points (i.e., detections) from world coordinates into the camera image plane.

In this way, the generated radar image contains the radar detections and their characteristics superimposed on the 2D image grid and, as such, can be applied to deep learning classifiers. Many studies have used these radar signal representations as the input for various deep learning algorithms [28,30,54,58].
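The coordinate transformation can be illustrated with a pinhole camera model. The sketch below is not taken from any of the cited works: the axis conventions, the rotation and translation between the two sensors, and the intrinsic parameters are all hypothetical values chosen for illustration, and lens distortion is ignored.

```python
import math

# Assumed radar frame: x forward, y left, z up; detections lie in the z = 0 plane.
# Assumed camera frame: x right, y down, z forward (the usual pinhole convention).
R_RADAR_TO_CAM = [[0, -1, 0],
                  [0, 0, -1],
                  [1, 0, 0]]
T_RADAR_TO_CAM = [0.0, 0.5, 0.0]  # hypothetical: radar mounted 0.5 m below the camera
FX, FY, CX, CY = 1000.0, 1000.0, 640.0, 360.0  # hypothetical intrinsic parameters


def project(radial_distance, azimuth):
    """Map one radar detection to (u, v, depth), or None if it is behind the camera."""
    # Polar radar measurement -> Cartesian point in the radar frame.
    p = (radial_distance * math.cos(azimuth),
         radial_distance * math.sin(azimuth),
         0.0)
    # Extrinsic parameters: rotate and translate into the camera frame.
    cam = [
        sum(R_RADAR_TO_CAM[i][k] * p[k] for k in range(3)) + T_RADAR_TO_CAM[i]
        for i in range(3)
    ]
    if cam[2] <= 0:
        return None
    # Intrinsic parameters: perspective division, then focal length and offset.
    u = FX * cam[0] / cam[2] + CX
    v = FY * cam[1] / cam[2] + CY
    return u, v, cam[2]


ahead = project(10.0, 0.0)       # target 10 m straight ahead of the radar
behind = project(10.0, math.pi)  # target behind the sensor pair
```

A detection straight ahead lands on the image's vertical centre line, slightly below the principal point because the radar is assumed to sit below the camera.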

Table 1 summarizes the reviewed deep learning-based models employing various radar signal representations for ADAS and autonomous driving applications over the past few years.

Table 1. Summary of the deep-learning algorithms using different radar signal representations.

4.6. Summary

This section provided a comprehensive review of radar data processing based on deep learning models, covering different applications such as object classification, detection, and recognition. The review was itemized according to the radar signal representations used as the input to various deep learning algorithms. This organization was chosen mainly because radar signals are unique, with characteristics that differ from those of other data sources, such as 2D images and Lidar point clouds, which are frequently exploited in deep learning research.

5. Deep Learning-Based Multi-Sensor Fusion of Radar and Camera Data

To the best of our knowledge, no review paper has explicitly focused on the deep learning-based fusion of radar signals and camera information for the different challenging tasks involved in autonomous driving applications. This makes it somewhat challenging for beginners to venture into this research domain. In this respect, we provide a summary and discussion of papers published from 2015 to the present on the deep multi-sensor fusion of vision and radar information, organized according to their fusion algorithms, fusion architectures, fusion operations, and datasets. We also discuss the challenges, possible research directions, and potential open questions.

The improved performance achieved by neural networks in processing image-based data has tempted some researchers to incorporate additional sensing modalities, in the form of multimodal sensor fusion, to improve their performance further.

Therefore, by combining more than one sensor, the research community wants to achieve a more accurate, robust, real-time, and reliable performance in any task involved in environmental perception for autonomous driving systems. To this end, deep learning models are now being extended to perform deep multi-sensor fusion in order to benefit from the complementary data of multiple sensing modalities, particularly in complex environments such as those encountered in autonomous driving.

However, most of the recently published articles about DCNN fusion-based algorithms focused on combining camera and Lidar sensor data [31,33,34,35,148].

For instance, reference [31] performed Multiview 3D (MV3D) object detection by fusing the feature representations extracted from three different views of the Lidar and camera data, namely, the Lidar bird's-eye view, the Lidar front view, and the camera front view. Other studies directly fused point cloud features and image features. Among these studies is PointFusion [148], which utilizes ResNet [89] and PointNet [149] to generate image features and Lidar point cloud features, respectively, and then uses a global/dense fusion network to fuse them.

Some studies recently considered a neural networks-based fusion of radar signals with camera information to achieve different tasks in autonomous driving applications. The distinctiveness of radar signals and the lack of accessible datasets have contributed to insufficient studies, in that respect. Additionally, this could also be due to the high-sparsity characteristic of radar point clouds acquired with most automotive radars (typically, points).

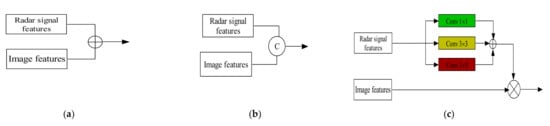

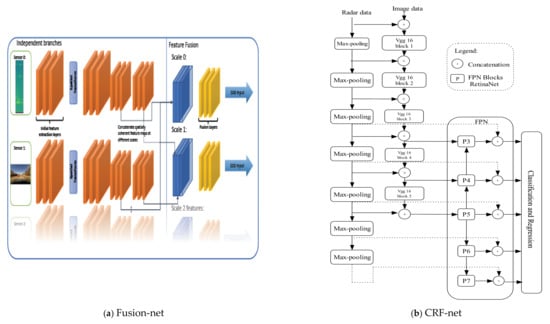

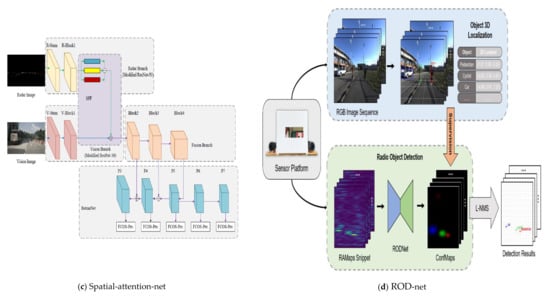

Generally, the main challenge in using radar signals with deep learning models is how to model the radar information to suit the 2D image representation required by the majority of deep learning algorithms. Many authors have proposed different radar signal representations in this respect, including Range-Doppler-Azimuth maps, radar grid maps, micro-Doppler signatures, radar signal projections, raw radar point clouds, etc. To this end, we itemized our review according to four fundamental aspects, namely, radar signal representations, fusion levels, deep learning-based fusion architectures, and fusion operations.

5.1. Radar Signal Representations

Radar signals are unique in that they represent the reflection points obtained from target objects within the radar sensor's field of view. These reflections are accompanied by their respective characteristics, such as the radial distance, radial velocity, RCS, angle, and amplitude. In their raw form, these signals are 1D signals that cannot be applied directly to DCNN models, which usually require grid map representations as the input for image recognition. Therefore, radar signals must be transformed into 2D image-like tensors so that they can be practically deployed together with camera images in deep learning-based fusion networks.

5.1.1. Radar Signal Projection

In the radar signal projection technique, the radar signals (usually, radar point clouds or detections) are transformed into either 2D image coordinates or a 3D bird's-eye view. Usually, the camera calibration matrices (both intrinsic and extrinsic) are employed to perform the transformation. In this way, a new pseudo-image is obtained that can be consumed efficiently by DCNN algorithms. A more in-depth discussion about millimeter-wave radar and camera sensor coordinate transformations can be found in [150]. Many deep learning-based fusion algorithms using vision and radar data projected onto various domains are reported in the literature [47,48,55,56,57,100,151,152,153,154,155,156].

To alleviate the computational burden that region proposal generation places on two-stage object detectors, R. Nabati and H. Qi [47] proposed a radar-based region proposal algorithm for object detection in autonomous vehicles. They generated object proposals and anchor boxes by mapping radar detections onto the image plane. By relying on radar detections to obtain region proposals, they avoided the computational steps of the vision-based region proposal method while achieving improved detection results. In order to accurately detect distant objects, reference [54] fused radar and vision sensors. First, the radar image representation was acquired by projecting the radar targets into the image plane and generating two additional image channels based on the range and radial velocity. After that, they used an SSD model [7] to extract the feature representations from both radar and vision sensors. Lastly, they used a concatenation method to fuse the two features.
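The idea of turning projected radar targets into extra image channels can be sketched as follows. This is only an illustration of the rasterization step under stated assumptions, not the procedure of [54]: the image size, the detection values, the single-pixel footprint, and the rule of keeping the nearest target when two fall on the same pixel are all hypothetical, and the subsequent feature extraction and concatenation are not reproduced.

```python
def rasterize(detections, height, width):
    """Build sparse range and radial-velocity channels from projected detections.

    Each detection is (u, v, range_m, radial_velocity) with (u, v) in pixels.
    Pixels without a radar target stay at 0.0.
    """
    range_ch = [[0.0] * width for _ in range(height)]
    velocity_ch = [[0.0] * width for _ in range(height)]
    for u, v, rng, vel in detections:
        col, row = int(round(u)), int(round(v))
        if not (0 <= row < height and 0 <= col < width):
            continue  # projected outside the image
        # Assumed tie-break: keep the nearest target on a shared pixel.
        if range_ch[row][col] == 0.0 or rng < range_ch[row][col]:
            range_ch[row][col] = rng
            velocity_ch[row][col] = vel
    return range_ch, velocity_ch


# Hypothetical projected detections on a tiny 4 x 6 image.
HEIGHT, WIDTH = 4, 6
projected = [
    (2.2, 1.0, 15.0, -3.0),  # lands on pixel (row 1, col 2)
    (2.4, 1.1, 8.0, 1.5),    # same pixel, but closer, so it wins
    (9.0, 9.0, 5.0, 0.0),    # outside the image, ignored
]

range_channel, velocity_channel = rasterize(projected, HEIGHT, WIDTH)
```

The two channels have the same height and width as the camera image, so they can be passed to a convolutional feature extractor in the same way as the RGB channels.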

The authors of [55] projected sparse radar data onto the camera image's vertical plane and proposed a fusion method based on a new neural network architecture for object detection. Their framework automatically learned the level at which fusing the sensor data most improved the detection performance. They also introduced a new training strategy, referred to as BlackIn, that selected the particular sensor to give preference to at a time to achieve better results. Similarly, the authors of [100] performed free space segmentation using an unsupervised deep learning model (GANs) incorporating the radar and camera data in 2D bird's-eye view representations. M. Meyer and G. Kuschk [56] conducted 3D object detection using radar point clouds and camera images based on a deep learning fusion method. They demonstrated how DCNNs could be employed for the low-level fusion of radar and camera data. The DCNNs were trained with the camera images and the BEV images generated from the radar point clouds to detect cars in 3D space. Their approach outperformed the Lidar and camera-based setting, even on a small dataset.