Abstract

Motor imagery (MI) induces recovery and neuroplasticity in neurophysical regulation. However, a non-negligible portion of users presents insufficient coordination skills of sensorimotor cortex control. Assessments of the relationship between wakefulness and task states are conducted to foster neurophysiological and mechanistic interpretation in MI-related applications. Thus, to understand the organization of information processing, measures of functional connectivity are used. Also, neural network regression models are becoming popular because they reduce the need for manual feature extraction. However, predicting the neurophysiological inefficiency of MI practice raises several problems, such as improving network regression performance under the risk of overfitting. Here, to increase the prediction performance, we develop a deep network regression model that includes three procedures: leave-one-out cross-validation combined with Monte Carlo dropout layers, subject clustering of MI inefficiency, and transfer learning between neighboring runs. Validation is performed using functional connectivity predictors extracted from two electroencephalographic databases acquired in conditions close to real MI applications (150 users), resulting in a high prediction of pretraining desynchronization and initial training synchronization with adequate physiological interpretability.

1. Introduction

Motor imagery (MI) is the dynamic cognitive capability of generating mental movements without executing them. This mental process triggers the neurocognitive mechanisms that underlie voluntary movement planning, similar to those engaged when the action is actually performed. MI has been proposed as a reliable tool for acquiring new motor skills to increase sports performance and in physical therapy [1,2,3,4], for learning professional motor skills [5], and for improving balance and mobility outcomes in older adults and in children with developmental coordination disorders [6,7], among others. There is sufficient experimental evidence that MI induces recovery and neuroplasticity in neurophysical regulation as the basis of motor learning [8] and in educational fields [9]. Concerning this aspect, the Media and Information Literacy approach has been proposed by the United Nations Educational, Scientific, and Cultural Organization (UNESCO) to gather several vital human development capabilities. In practice, MI tasks are commonly solved from electroencephalography (EEG) records, which provide noninvasive measures and flexible portability at a relatively low cost. However, EEG signals have limited spatial resolution, not to mention the inter- and intra-subject variability of the somatosensory cortex’s responses. Specifically, the patterns are not consistent across subjects. Moreover, variability arises within a session for the same subject because EEG signals are non-stationary, nonlinear, and have a low signal-to-noise ratio [10]. Together with the small sample datasets frequently used, all of these factors reduce the performance of EEG-based MI systems [11,12].

An enhanced approach to addressing this EEG data complexity is to conduct multiple training sessions to refine the modulation of sensorimotor rhythms (SMR). Nonetheless, inter-subject variability, together with uncertain long-term effects and the apparent failure of some individuals to achieve self-regulation, leads a non-negligible portion of users (between to ) to develop insufficient coordination skills even after long training sessions. This inadequate performance of most brain-computer interface (BCI) systems, termed BCI inefficiency, poses a challenge in MI research [13]. To address this problem, the BCI performance model is enhanced in two directions: (i) developing guidelines on neural testing set-ups, practice, and instructions to ensure better brain responses; and (ii) promoting evaluation tools that forecast system performance, which may help identify the core sources of variability and incorporate compensating actions for inefficiency when solving BCI-based tasks. In particular, a calibration strategy can be added that works hand in hand with the training stage. It is then possible to adapt the decoding scheme to an explicit brain pattern [14], highlighting relevant BCI predictors to decrease training efforts and encourage user-centered MI [15]. To date, several electrophysiological indicators have been reported to anticipate MI inefficiency, such as the direct assessment of the SMR, which extracts the power spectral density (PSD) from resting wakefulness at motor cortex locations [16]; a measure of the PSD uniformity of resting-state data using spectral entropy [17,18]; and PSD-based estimates of the dis/similarity (connectivity) of EEG signals at different locations, which attempt to capture the interdependency between functional and structural networks of the corresponding cortical structures (e.g., spectral coherence [19,20] or coherence-based correntropy spectral density [21]), among others. 
To tackle the influence of artifacts and of intertrial/inter-subject amplitude variability, phase-based relationships (phase synchronization) are preferable as functional connectivity (FC) measures between spatially distributed regions that interact dynamically while accomplishing a mental task [22]. It has been shown that FC features measured by the phase lag index (PLI), its weighted version (wPLI), and the phase-locking value (PLV) can discriminate between different MI tasks [23,24].

Therefore, motor performance can be predicted from resting motor-system functional connectivity, as in [16], which shows that efficient brain reconfiguration corresponds to better MI performance [25,26]. Nevertheless, several conditions can affect the correct estimation of FC and introduce spurious contributions, giving a potentially distorted measure of the real interactions (termed spurious connectivity) [27]. Moreover, FC estimates are highly time-dependent and fluctuate over multiple timescales, yielding inter-subject variations that remain a substantial problem [28]. Specifically, because of volume conduction and noise perturbations, thresholds are applied to phase-synchronization FC measures to improve the discriminative ability of the connection sets. However, threshold selection is generally far from being an automated procedure for big datasets [29]. Despite the promising evidence, there is still a need to understand the learning mechanisms and the brain network reorganization that support the efficiency of BCI systems [30].

As regards the prediction model, several regression methods are available for prognosticating MI accuracy from neurophysiological variables, such as simple and multiple linear regression [31,32], stepwise regression [33], kernel regression [34], and (kernel) support vector machine regression [35], among others. Additionally, regression approaches based on neural networks, which can be applied to raw EEG data, are increasingly used, simplifying BCI design pipelines by removing the need to extract features manually. However, several aspects degrade the performance of prediction models. First, FC measures are prone to outliers, which must be removed before calculating correlations [36]. Second, the inter-trial variability of MI data (which increases notably in subjects with low MI skills) restricts prediction models based on single-trial EEG data [37]. Third, the regression model is influenced by how users are categorized according to their SMR activity (predictor) and classifier performance (target response) during the MI runs. Users are frequently assigned to two partitions (skilled and non-skilled) separated by a single target value given in advance, as in [38]. Still, as the number of tested subjects increases, the range of FC changes also rises, and the partition-based method should be sensitive enough to detect predictor differences among subject clusters [39,40]. Therefore, the need for clustering into more partitions becomes more evident, as shown in [41]. Lastly, the correlation coefficient (reflected in R-squared) is often applied to assess the goodness of fit, while the p-value quantifies its statistical significance and can be obtained through several test procedures, as developed in [42]. A common issue in neural network regression models trained with the small samples of MI studies is their fitting to spurious residual variation (overfitting), apart from the controversial interpretation of p-values [43].

Here, to increase the prediction performance of the baseline linear regression models, we develop a deep network regression model devoted to prognosticating motor imagery skills using EEG functional connectivity indicators, appraising three procedures: leave-one-out cross-validation combined with Monte Carlo dropout layers, subject clustering of MI inefficiency, and transfer learning connecting neighboring runs. Our approach relies on functional connectivity predictors extracted from electroencephalographic signals to favor data interpretability. To deal with the risk of overfitted prediction assessments inherent to the deep learning framework, we intend to preserve as much information as possible from the measured scalp potentials. Thus, to reach competitive prediction errors under the leave-one-out cross-validation scheme, we introduce the following procedures: (i) Monte Carlo dropout layers, to decrease the probability that rules learned from specific training data cannot be generalized to new observations; (ii) subject efficiency clustering, to adapt the DRN estimator more effectively to the complex EEG measurements inherent to BCI-inefficient subjects; and (iii) for the prediction of initial-training synchronization, transfer learning of the weights inferred at the preceding run to deal with few-trial sets. Validation is performed on two MI databases (150 users) acquired in conditions close to real MI applications. The obtained results show that our approach can achieve a high prediction of pretraining desynchronization and initial training synchronization with adequate physiological interpretability. We further compared the prediction performance of the DRN (R-squared of 0.8 on average) with that of the linear regression models reported, at least for DBI, in the baseline work [44], which yield R-squared values not exceeding 0.54.

The rest of the paper is organized as follows: Section 2 briefly discusses the regression prediction model’s theoretical background. Section 3 describes the experimental set-up, including both datasets evaluated. Section 4 presents the assessment of Deep Regression Network performance and discusses the findings obtained to predict pretraining desynchronization and initial training synchronization. Lastly, Section 5 concludes the paper.

2. Methods

2.1. Electrophysiological Predictors Based on Phase Synchronization Relationships

Initially, we consider predictors based on the following two widely used FC measures of phase synchronization between every pair of EEG channels $c, c' \in \{1, \dots, C\}$, where $C$ is the number of channels:

Phase Locking Value (PLV): This phase coherence measure assesses the pairwise similarity relation between electrodes based on the recurrence density of their states. For single-trial analysis, PLV is computed by the following average over a time window [45]:

$$\mathrm{PLV}^{n}_{cc'}(f) = \left| \mathbb{E}_{t}\left\{ \exp\left(j\,\Delta\phi^{n}_{cc'}(t,f)\right) \right\} \right|, \quad (1)$$

where $\Delta\phi^{n}_{cc'}(t,f)$ is the instantaneous phase difference computed at time instant $t$ for the $n$-th trial ($n \in \{1, \dots, N\}$, where $N$ is the number of EEG trials), $j$ is the imaginary unit, and the notations $\mathbb{E}_{t}\{\cdot\}$ and $|\cdot|$ stand for the expectation operator (over time) and the magnitude, respectively. Note that, to remain physically meaningful, the phase signal must reflect only a given frequency oscillation $f$. Here, this is achieved by convolution with a narrow-band complex Morlet wavelet through the continuous wavelet transform [46].
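The following NumPy sketch illustrates Equation (1) for one channel pair and one trial. It is not the MNE implementation used in this work: the wavelet width (`n_cycles = 7`), the ±5σ wavelet support, and the helper names `morlet_phase` and `plv_single_trial` are illustrative assumptions.

```python
import numpy as np


def morlet_phase(x, sfreq, freq, n_cycles=7.0):
    """Instantaneous phase of the 1-D signal `x` at `freq` (Hz), obtained by
    convolving it with a complex Morlet wavelet (a single CWT scale).
    `n_cycles` is a hypothetical width, not a value reported in the text."""
    sigma_t = n_cycles / (2.0 * np.pi * freq)           # std of the Gaussian envelope (s)
    half = int(np.ceil(5.0 * sigma_t * sfreq))          # support of +/- 5 sigma, in samples
    t = np.arange(-half, half + 1) / sfreq               # symmetric time axis
    wavelet = np.exp(2j * np.pi * freq * t) * np.exp(-t ** 2 / (2.0 * sigma_t ** 2))
    coef = np.convolve(x, wavelet, mode="same")          # complex wavelet coefficients
    return np.angle(coef)


def plv_single_trial(x_c, x_cp, sfreq, freq):
    """Equation (1): magnitude of the time average of exp(j * phase difference)."""
    dphi = morlet_phase(x_c, sfreq, freq) - morlet_phase(x_cp, sfreq, freq)
    return np.abs(np.mean(np.exp(1j * dphi)))


if __name__ == "__main__":
    sfreq, freq = 250.0, 10.0                            # hypothetical rate and rhythm
    t = np.arange(0.0, 20.0, 1.0 / sfreq)
    a = np.sin(2 * np.pi * freq * t)
    b = np.sin(2 * np.pi * freq * t + 0.8)               # constant lag: phase-locked
    rng = np.random.default_rng(0)
    print(plv_single_trial(a, b, sfreq, freq))           # close to 1
    print(plv_single_trial(rng.standard_normal(t.size),
                           rng.standard_normal(t.size), sfreq, freq))  # much lower
```

With a constant phase lag between two 10 Hz sinusoids the PLV approaches 1, whereas two independent noise signals yield a much lower value.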

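Weighted Phase Lag Index (wPLI): This indicator quantifies the asymmetry of the pairwise phase difference distribution. It is computed by averaging over the trial ensemble, as follows:

$$\mathrm{wPLI}_{cc'}(t,f) = \frac{\left| \mathbb{E}_{n}\left\{ \Im\left\{S^{n}_{cc'}(t,f)\right\} \right\} \right|}{\mathbb{E}_{n}\left\{ \left| \Im\left\{S^{n}_{cc'}(t,f)\right\} \right| \right\}}, \quad (2)$$

where $S^{n}_{cc'}(t,f)$ is the cross-spectral density of the $n$-th trial based on Morlet wavelets, $\mathbb{E}_{n}\{\cdot\}$ averages over trials, and $\Im\{\cdot\}$ stands for the imaginary part of a complex-valued function. wPLI is designed to mitigate the effects of volume conduction, noise, and sample-size bias [47].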
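A minimal sketch of Equation (2), assuming the complex Morlet coefficients of both channels are already available at a fixed frequency, with trials along the first axis. The helper name `wpli` and the small `eps` guard are hypothetical additions, not part of the original formulation.

```python
import numpy as np


def wpli(coef_c, coef_cp, axis=0, eps=1e-12):
    """Equation (2): weighted phase lag index across trials.

    coef_c, coef_cp: complex wavelet coefficients of channels c and c' at one
    frequency, with the trial dimension on `axis` (e.g., shape (n_trials, n_times)).
    """
    cross = coef_c * np.conj(coef_cp)        # per-trial cross-spectrum S^n_cc'(t, f)
    im = np.imag(cross)
    num = np.abs(np.mean(im, axis=axis))     # |E_n{Im S}|
    den = np.mean(np.abs(im), axis=axis)     # E_n{|Im S|}
    return num / (den + eps)                 # eps (hypothetical) avoids 0/0 for real spectra


if __name__ == "__main__":
    n_trials = 40
    ref = np.ones(n_trials, dtype=complex)
    lagged = np.exp(1j * 0.5) * ref                       # same non-zero lag in every trial
    signs = np.where(np.arange(n_trials) % 2 == 0, 1.0, -1.0)
    flipping = np.exp(1j * 0.5 * signs)                   # lag sign alternates across trials
    print(wpli(lagged, ref))    # close to 1
    print(wpli(flipping, ref))  # 0: positive and negative lags cancel
```

Because only the sign consistency of the imaginary part matters, zero-lag (purely real) coupling, which is typical of volume conduction, contributes nothing to the numerator.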

2.2. Construction of Brain Graph Predictors

We also consider predictors that follow a generic approach to characterizing brain activity using undirected graphs. These predictors describe the properties of complex systems by quantifying the topology of their network representations. In large-scale brain networks, the node set (noted as $\mathcal{V}$) usually designates brain regions holding paired (undirected) links. The following weighted network indexes are extracted from the phase synchronization-based relationships (spatiotemporal dependences) [48]:

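- Strength is a local-scale property that accounts for the total weight of the links connected to each node, computed as follows:

$$s_{c} = \sum_{c' \neq c} w_{cc'}, \quad (3)$$

where $w_{cc'}$ is the FC weight between nodes $c$ and $c'$.

- Clustering Coefficient is a global-scale property that indicates the tendency of a network to form tightly connected neighborhoods, measuring the brain’s segregation ability for specialized processing within densely interconnected regions, computed as follows:

$$C_{c} = \frac{2\,t_{c}}{k_{c}\left(k_{c} - 1\right)}, \qquad k_{c} = \sum_{c' \neq c} a_{cc'}, \quad (4)$$

where the binarized connection value (connection status) is $a_{cc'} = 1$ if $w_{cc'} > 0$, and $a_{cc'} = 0$ otherwise, and $t_{c}$ is the number of triangles neighboring the $c$-th node.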
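Both graph indexes can be sketched in NumPy as below, assuming a symmetric weight matrix with zero diagonal (for example, PLV or wPLI values). The clustering routine weights each triangle by the geometric mean of its three links, which is, to our understanding, the definition behind the Brain Connectivity Toolbox function `clustering_coef_wu`; the function names here are illustrative, and small numerical differences from the toolbox are possible.

```python
import numpy as np


def node_strength(W):
    """Equation (3): total link weight attached to each node (zero diagonal assumed)."""
    return np.asarray(W, dtype=float).sum(axis=1)


def clustering_coef_wu(W):
    """Equation (4): weighted clustering coefficient of an undirected graph.

    Each triangle is weighted by the geometric mean of its three link weights,
    and k_c counts the binarized links (a_cc' = 1 if w_cc' > 0).
    """
    W = np.asarray(W, dtype=float)
    k = (W > 0).sum(axis=1).astype(float)    # k_c
    ws = np.cbrt(W)                          # cube root of every weight
    cyc3 = np.diag(ws @ ws @ ws)             # equals 2 * t_c (each triangle counted twice)
    denom = k * (k - 1.0)
    C = np.zeros_like(k)
    ok = denom > 0                           # nodes with fewer than 2 links keep C_c = 0
    C[ok] = cyc3[ok] / denom[ok]
    return C


if __name__ == "__main__":
    W = 0.5 * (np.ones((4, 4)) - np.eye(4))  # fully connected toy graph, weights 0.5
    print(node_strength(W))                  # [1.5 1.5 1.5 1.5]
    print(clustering_coef_wu(W))             # [0.5 0.5 0.5 0.5]
```

In a fully connected graph every possible triangle exists, so the weighted clustering coefficient reduces to the common link weight.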

2.3. Regression Network Models

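Let $\boldsymbol{x}$ (termed predictor) and $y$ (response) be a pair of random variables whose mutual dependence is assessed through the approximating function (termed regressor) $\varphi(\boldsymbol{x};\boldsymbol{\theta})$. Namely, given the corresponding composite observation set $\{(\boldsymbol{x}_m, y_m)\}_{m=1}^{M}$ across $M$ subjects, the following optimization framework fixes the regressor:

$$\boldsymbol{\theta}^{*} = \arg\min_{\boldsymbol{\theta}} \sum_{m=1}^{M} \left\| y_m - \varphi(\boldsymbol{x}_m;\boldsymbol{\theta}) \right\|_{2}^{2}, \quad (5)$$

where $\boldsymbol{\theta}$ is an unknown parameter vector fitting the data most closely in terms of the $\ell_2$-norm.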

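To implement the data-driven estimator in Equation (5), we employ the Deep Regression Network (DRN) developed in [49], which jointly extracts features and performs the regression analysis, as follows:

$$\hat{y}_m = \varphi_{J} \circ \varphi_{J-1} \circ \cdots \circ \varphi_{1}\left(\{\boldsymbol{x}^{f}_{m}\}_{f}\right), \quad (6)$$

where $\varphi_{j}$ is the $j$-th layer ($j \in \{1, \dots, J\}$) and $\circ$ stands for function composition. Notation $\boldsymbol{x}^{f}_{m}$ describes the connectivity predictors extracted in each frequency rhythm $f$, while $y_m$ contains the accuracy response of the $m$-th subject.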

3. Experimental Set-Up

The methodology for enhanced prediction of motor imagery skills using functional connectivity indicators is evaluated with a regression model that predicts the bi-class accuracy response of subjects, through the following stages: (i) estimation of the predicting capability of pre-training desynchronization under a conventional linear regression model, testing different input arrangements to improve system performance; (ii) prediction assessment of pre-training desynchronization under the data-driven network regression model; (iii) enhanced network prediction assessment using leave-one-out cross-validation combined with Monte Carlo dropout layers and clustering of subject inefficiency; and (iv) enhanced network regression prediction of initial-training synchronization with an additional transfer learning procedure.

The pre-training desynchronization assesses the relationship between the bi-class accuracy response and the electrophysiological indicators extracted from resting wakefulness data. For evaluation purposes, we employ either the resting-state recording or the task-negative interval before the cue onset of the conventional MI trial timing. As the target response, we compute each subject’s classifier accuracy in distinguishing the two MI classes using a short-time sliding feature set extracted by Common Spatial Patterns (CSP), which maximizes the variance of one class while minimizing that of the other. To accurately extract the subject’s EEG dynamics over time, the sliding window is adjusted to 2 s, having an overlap of .
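The target-response features can be sketched as follows: CSP filters obtained from the generalized eigenvalue problem between class covariances, normalized log-variance features, and sliding 2 s windows. This is an assumption-laden illustration rather than the exact pipeline: the number of filter pairs, the trace normalization, and the overlap fraction (a placeholder 0.5) are hypothetical choices, and the feature selection and LDA classification steps are omitted.

```python
import numpy as np
from scipy.linalg import eigh


def sliding_windows(n_samples, sfreq, win_s=2.0, overlap=0.5):
    """(start, stop) sample indices of windows lasting `win_s` seconds.
    `overlap` is a fraction in [0, 1); 0.5 is only a placeholder value."""
    win = int(round(win_s * sfreq))
    step = max(1, int(round(win * (1.0 - overlap))))
    return [(s, s + win) for s in range(0, n_samples - win + 1, step)]


def csp_filters(X_a, X_b, n_pairs=1):
    """CSP filters for two classes; X_a, X_b have shape (n_trials, n_channels, n_samples)
    and are assumed zero-mean (band-pass filtered).

    Solves C_a w = lambda (C_a + C_b) w; eigenvectors at both ends of the spectrum
    maximize the variance of one class relative to the other.
    """
    def mean_cov(X):
        return np.mean([x @ x.T / np.trace(x @ x.T) for x in X], axis=0)

    C_a, C_b = mean_cov(X_a), mean_cov(X_b)
    _, evecs = eigh(C_a, C_a + C_b)                     # eigenvalues in ascending order
    idx = np.r_[np.arange(n_pairs), np.arange(-n_pairs, 0)]
    return evecs[:, idx]                                # (n_channels, 2 * n_pairs)


def csp_features(X, W):
    """Normalized log-variance of the spatially filtered trials (one row per trial)."""
    Z = np.einsum("ck,ncs->nks", W, X)                  # project every trial
    var = Z.var(axis=2)
    return np.log(var / var.sum(axis=1, keepdims=True))
```

In a sliding-window setting, the features of each window `(s, e)` would be computed as `csp_features(X[:, :, s:e], W)`, producing one feature set per window over the trial timing.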

On the other hand, the initial-training synchronization predictor relies on the fact that the change in neural activity, intentionally evoked by a mental imagery task, shows certain regularities across training runs or sessions. Accordingly, the initial-training indicator of neural synchronization attempts to anticipate the MI responses evoked within the wakefulness data of every run.

3.1. MI Databases Description and Preprocessing

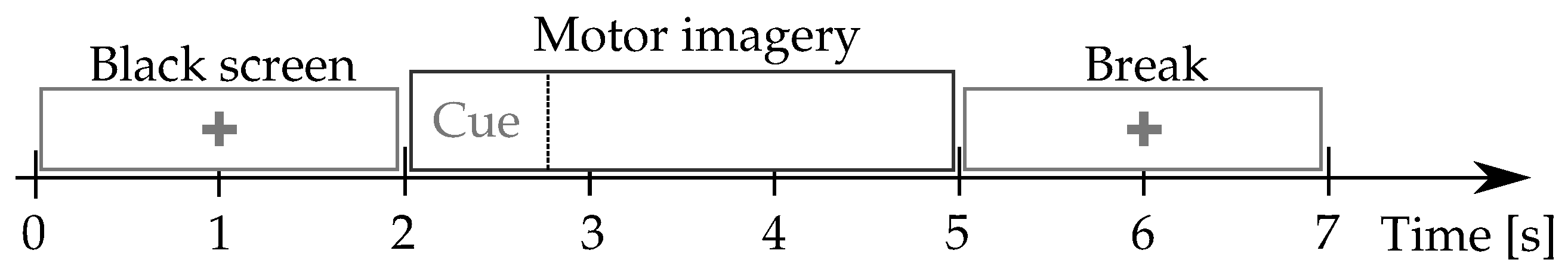

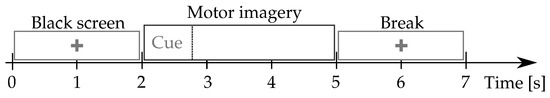

Giga-DBI: This MI dataset is publicly available at (http://gigadb.org/dataset/100295, accessed on 30 January 2021). It gathers EEG records from fifty subjects ($M = 50$), using the well-known electrode configuration with $C$ channels. The signal comprises s, sampled at 512 Hz. The MI protocol (see Figure 1) starts with a fixation cross shown on a black screen for 2 s. Then, a cue instruction is displayed depending on the MI class (label), which appears randomly within 3 s. Concretely, the cue asks the subject to imagine moving the fingers, starting from the index finger and ending with the little finger. Afterward, a blank screen is shown at the beginning of a break period (lasting randomly between and s). Each MI run comprises over 20 trials and a written cognitive quiz [50]. Every subject performed five runs (on average) and a single-trial resting-state recording lasting 60 s.

Figure 1.

MI protocol timing of Giga-DBI.

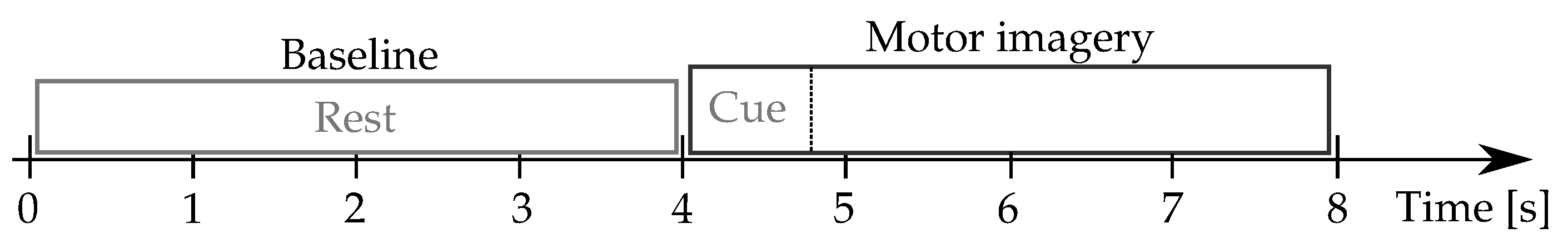

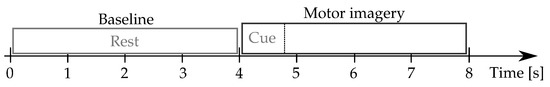

Physionet-DBII: This database, publicly available at (https://physionet.org/content/eegmmidb/1.0.0/, accessed on 30 January 2021), holds 105 volunteers who properly performed the left- and right-hand MI tasks, with an average total of trials per subject. In addition, two one-minute baseline runs are recorded as resting-state trials (with eyes open and eyes closed, respectively). The 64-channel EEG signals were recorded using the international 10-10 system and sampled at 160 Hz. Figure 2 describes the motor imagery timing.

Figure 2.

Motor imagery timing of Physionet-DBII.

Every raw EEG channel of either database was band-pass filtered in the frequency range [4–40] Hz, covering the sensorimotor rhythms considered. Then, the band-passed EEG data are spatially filtered by a Laplacian filter centered on the selected electrode to improve the spatial resolution of EEG recordings, reducing the influence of noise coming from neighboring channels and thus addressing the volume conduction problem (this filtering procedure was carried out using the Biosig Toolbox, freely available at http://biosig.sourceforge.net, accessed on 30 January 2021). Further, the electrophysiological indicator set based on phase synchronization is extracted using the MNE package in Python, while the graph predictors are estimated using the Brain Connectivity Toolbox (brain-connectivity-toolbox.net).
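For readers without the Biosig Toolbox, the two preprocessing steps can be approximated with SciPy as below. The Butterworth order, the zero-phase filtering, and the neighbor map of the small Laplacian are assumptions made for this sketch, not settings reported for the original pipeline.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt


def bandpass(eeg, sfreq, low=4.0, high=40.0, order=4):
    """Zero-phase Butterworth band-pass along the last (time) axis.
    The filter order is a hypothetical choice."""
    sos = butter(order, [low, high], btype="bandpass", fs=sfreq, output="sos")
    return sosfiltfilt(sos, eeg, axis=-1)


def small_laplacian(eeg, neighbors):
    """Surface Laplacian: each listed channel minus the mean of its neighbors.

    eeg: (n_channels, n_samples); neighbors: {channel_index: [neighbor indices]}
    (a hypothetical montage-specific map). Unlisted channels are left unchanged.
    """
    out = eeg.copy()
    for c, nb in neighbors.items():
        out[c] = eeg[c] - eeg[nb].mean(axis=0)
    return out
```

Subtracting the neighbor average removes activity shared by nearby electrodes, which is why the Laplacian attenuates volume-conducted components.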

3.2. Deep Network Regressor Set-Up and Performance Evaluation

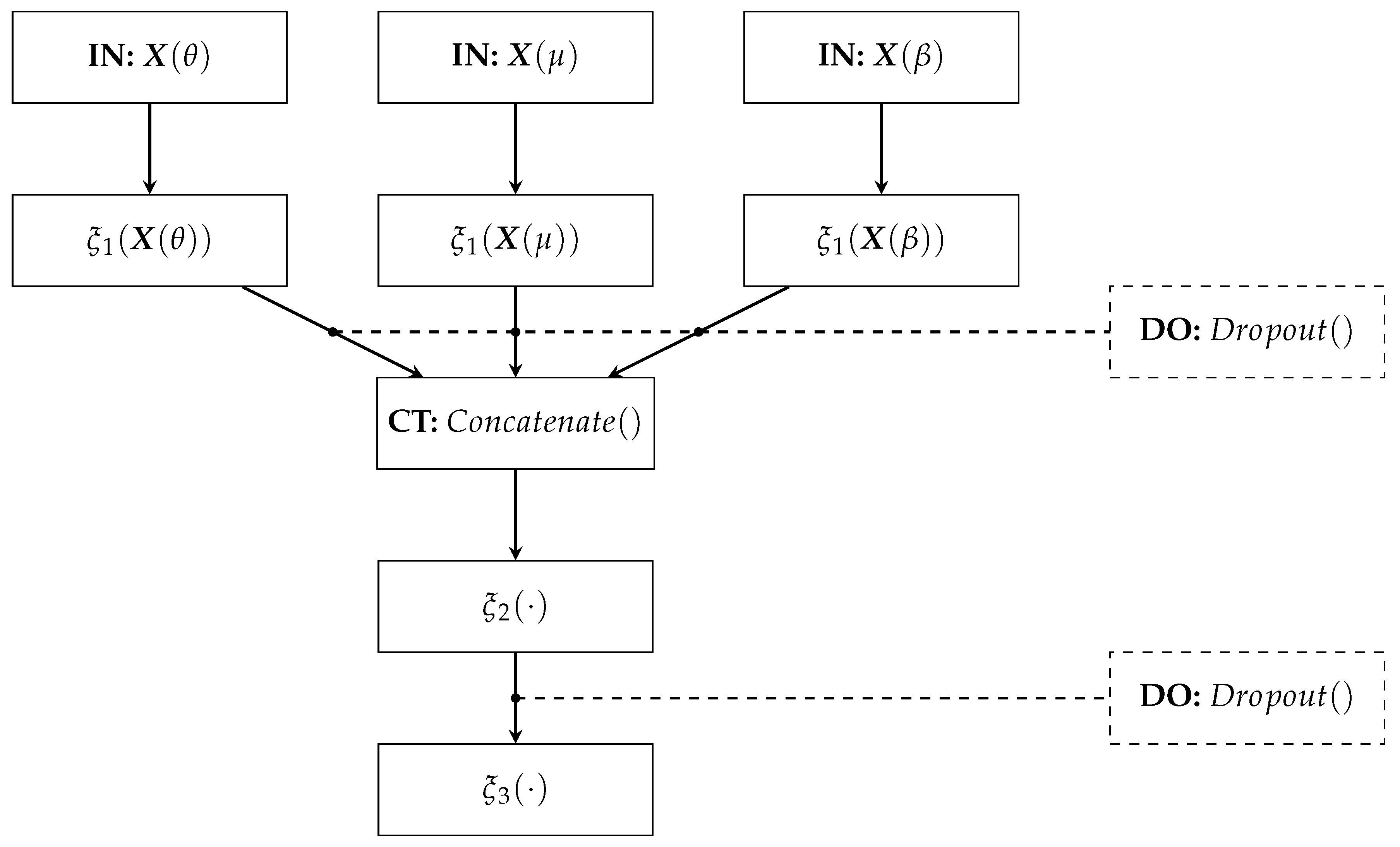

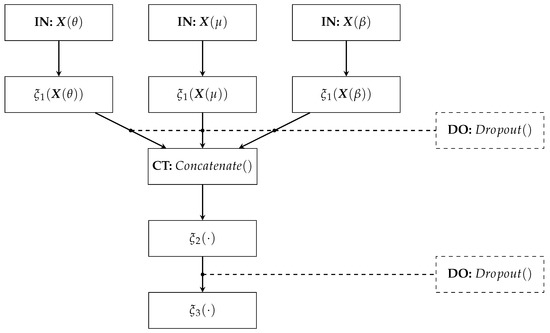

The proposed Deep Regression Network architecture comprises (see Figure 3):

Figure 3.

Deep Network Regressor’s architecture.

- IN: We consider two input-layer arrangements: multivariate indicator (where C is the number of electrodes when using graph indexes and the number of links when using FC measures).

- : The first dense layer encodes the relevant input patterns from phase synchronization features. Here, we fix the number of neurons as neurons, where ⌈·⌉ stands for the ceiling operator. A tanh activation is employed to reveal non-linear relationships.

- CT: A concatenation layer appends the feature maps resulting from the set of patterns extracted in . In particular, all phase synchronization-based features (coded as connectivity matrices) are stacked into a single block, sizing .

- : This fully connected layer aims to preserve the predicted patterns assembled in the CT layer to feed a linear regressor. The number of neurons is fixed as . Again, tanh is used as the activation function.

- : A one-neuron layer with linear activation is used to predict the MI skill value .

- DO: This dropout layer randomly skips neurons according to the drop rate, which we fix empirically at 0.2.
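Read as the composition in Equation (6), the layer stack above can be sketched as a NumPy forward pass. This is an untrained illustration only: the hidden sizes `h1` and `h2` stand in for the ceiling-based neuron counts, dropout is assumed to follow each tanh dense layer, and all function names are hypothetical. Keeping dropout active at prediction time and averaging several passes gives a Monte Carlo dropout estimate.

```python
import numpy as np


def init_drn(input_dims, h1, h2, seed=0):
    """Random (untrained) weights: one tanh branch per rhythm input, a tanh
    dense layer after concatenation, and a one-neuron linear output.
    h1 and h2 are hypothetical stand-ins for the neuron counts."""
    rng = np.random.default_rng(seed)

    def dense(n_in, n_out):
        return rng.normal(0.0, 1.0 / np.sqrt(n_in), (n_in, n_out)), np.zeros(n_out)

    return {"branches": [dense(d, h1) for d in input_dims],
            "hidden": dense(h1 * len(input_dims), h2),
            "out": dense(h2, 1)}


def dropout(h, rate, rng):
    """Inverted dropout: zero each unit with probability `rate`, rescale the rest."""
    keep = rng.random(h.shape) >= rate
    return h * keep / (1.0 - rate)


def drn_forward(inputs, params, rate=0.2, rng=None):
    """Equation (6) as a layer composition; dropout is applied only if `rng` is given."""
    def maybe_drop(h):
        return dropout(h, rate, rng) if rng is not None else h

    branch_out = [maybe_drop(np.tanh(x @ W + b))            # first dense layer per rhythm
                  for x, (W, b) in zip(inputs, params["branches"])]
    h = np.concatenate(branch_out, axis=-1)                 # CT: concatenation layer
    W, b = params["hidden"]
    h = maybe_drop(np.tanh(h @ W + b))                      # second dense layer
    W, b = params["out"]
    return (h @ W + b)[:, 0]                                # linear one-neuron output


def mc_dropout_predict(inputs, params, rate=0.2, n_iter=50, seed=0):
    """Monte Carlo dropout: mean and spread of `n_iter` stochastic forward passes."""
    rng = np.random.default_rng(seed)
    draws = np.stack([drn_forward(inputs, params, rate, rng) for _ in range(n_iter)])
    return draws.mean(axis=0), draws.std(axis=0)
```

Training (for example, minimizing Equation (5) by gradient descent) is deliberately left out; the sketch only shows how the branches, concatenation, dense layers, and dropout compose.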

To measure the relationship between the response variable and the composite predictor, we build the set $\{(y_m, \hat{y}_m)\}_{m=1}^{M}$, where $\hat{y}_m$ is computed by our DRN following a leave-one-out cross-validation strategy over the $M$ subjects. Namely, to compute each value $\hat{y}_m$, the $m$-th individual is held out as the testing set and the remaining ones form the training set, so that each estimate reflects how well the regressor predicts an individual unseen during training. Then, the coefficient of determination (noted as $R^2$) is computed. Besides, a p-value is computed from a two-sided t-test whose null hypothesis is that the regression slope is zero [44]. This hypothesis test is used, as in state-of-the-art works [38,44], because our DRN aims to encode the lack of consistency in brain patterns among different subjects to favor a linear dependency between $y_m$ and $\hat{y}_m$. Moreover, to provide a comparison with neural network-based regression strategies, the real-valued measures of Mean Absolute Error (MAE) and Root Mean Squared Error (RMSE) are also assessed, as carried out in [51,52]:

where stands for the variance operator.
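The leave-one-out protocol and the reported metrics can be sketched as follows. SciPy’s `linregress` returns the correlation coefficient and a two-sided p-value for the null hypothesis of a zero slope, matching the test described above; here $R^2$ is assumed to be the squared correlation between $y_m$ and $\hat{y}_m$, and MAE and RMSE use their usual definitions. An ordinary least-squares model stands in for the DRN so that the sketch runs on its own; `loo_predictions`, `evaluate`, `ols_fit`, and `ols_predict` are illustrative names.

```python
import numpy as np
from scipy.stats import linregress


def loo_predictions(X, y, fit, predict):
    """Leave-one-subject-out: y_hat[m] comes from a model trained on the other M - 1 subjects."""
    M = len(y)
    y_hat = np.empty(M)
    for m in range(M):
        train = np.arange(M) != m
        model = fit(X[train], y[train])
        y_hat[m] = predict(model, X[m:m + 1])[0]
    return y_hat


def evaluate(y, y_hat):
    """R^2 (assumed: squared correlation), two-sided slope p-value, MAE, and RMSE."""
    res = linregress(y_hat, y)               # H0: slope of y on y_hat is zero
    err = y - y_hat
    return {"r2": res.rvalue ** 2, "p": res.pvalue,
            "mae": np.mean(np.abs(err)), "rmse": np.sqrt(np.mean(err ** 2))}


def ols_fit(X, y):
    """Hypothetical stand-in regressor (least squares with intercept) in place of the DRN."""
    A = np.column_stack([np.ones(len(X)), X])
    return np.linalg.lstsq(A, y, rcond=None)[0]


def ols_predict(coef, X):
    return np.column_stack([np.ones(len(X)), X]) @ coef
```

Swapping `ols_fit`/`ols_predict` for a training and inference routine of a network model leaves the evaluation loop unchanged.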

4. Results and Discussion

4.1. Baseline Linear Regression of Pre-Training Desynchronization

Here, we consider two scenarios of input predictor arrangements: (i) matrix indicator, which computes one network vector per individual reflecting the contribution of each electrode (termed multichannel); and (ii) vector indicator, holding a single scalar FC value per subject. For comparison with similar reported works, we analyze two vector indicator approaches extracted from each individual: (a) average, which obtains the mean value over the electrode set; and (b) the channel with the best R-squared (best channel).

To evaluate the linear regression model, Table 1 displays the values of R-squared and their significance (p-value), which are calculated using the scikit-learn package in Python. As seen, both the multichannel and average approaches perform below the best channel extraction from the resting-state data, regardless of the brain graph predictor employed. Thus, selecting the best channel achieves higher R-squared values with lower p-values within the considered frequency rhythms. For the predictors directly extracted from the FC measures, PLV and wPLI, the upper triangular matrix is vectorized to feed the regression, yielding a performance similar to that of the graph indexes. Note that the best channel approach is not reported for PLV and wPLI because of difficulties in its implementation. Consequently, these results show that DBI achieves a poor performance in predicting the pre-training desynchronization, at least with the baseline linear regression. Besides, the DBII collection gives a much worse prediction than DBI, since the former contains fewer trials (22 vs. 100 per label) and more subjects (105 vs. 50) and was acquired at a much lower sampling frequency (160 vs. 512 Hz). Still, considering EEG collections of greater complexity remains important, since they are closer to the requirements of real MI applications.

Table 1.

Predicting performance of the FC predictors extracted from the resting-state data, employing the matrix indicator, the average, and the best index computed across the whole channel set. Notation mean stands for the indicator averaged across the frequency bands. The abbreviation na stands for not applicable.
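The baseline input arrangements can be sketched as below: vectorizing the upper triangle of a PLV or wPLI matrix, averaging a node-level index over electrodes, and picking the best channel by univariate R-squared. The text reports using scikit-learn; here SciPy’s `linregress` is swapped in for the univariate fits, and the function names are hypothetical.

```python
import numpy as np
from scipy.stats import linregress


def upper_triangle(fc):
    """Vectorize symmetric FC matrices (..., C, C) into their C(C-1)/2 links."""
    iu = np.triu_indices(fc.shape[-1], k=1)
    return fc[..., iu[0], iu[1]]


def average_indicator(node_values):
    """'Average' scenario: node_values (M subjects, C electrodes) -> one scalar per subject."""
    return node_values.mean(axis=1)


def best_channel(node_values, y):
    """'Best channel' scenario: univariate regression per electrode, keep the best R^2."""
    r2 = np.array([linregress(node_values[:, c], y).rvalue ** 2
                   for c in range(node_values.shape[1])])
    c_best = int(np.argmax(r2))
    return c_best, r2[c_best]
```

Only the upper triangle is kept because PLV and wPLI matrices are symmetric, so the lower triangle and the diagonal carry no extra information.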

We also consider multiple linear regression involving both graph index predictors. As Table 2 shows, the benefit of multiple regression remains doubtful for the tested EEG data, because no suitable R-squared values are achieved with either input arrangement.

Table 2.

Prediction performance of the multiple linear regression for the graph indexes extracted from the resting-state data.

4.2. Network Regression Prediction of Pre-training Desynchronization

Next, we employ the multichannel matrix indicator extracted from the whole subject set to predict the pre-training desynchronization. We also analyze the joint characterization of both graph indexes (strength and clustering coefficient), concatenating their vector representations for each subject into a single supervector. Besides, we take advantage of the wide path to feed the Wide&Deep neural network simultaneously with different input sets, which are learned separately by the first layer. The next layer merges all input predictor sets, exploring common relations among them. In particular, we contrast the network regression fed by the connectivity indicators extracted within all three frequency rhythms against the widely used approach that extracts them from and .

Besides the R-squared value, the MAE and RMSE estimates are also computed to evaluate the DRN performance, accounting for the influence of the adopted leave-one-out cross-validation strategy. Concerning the examined graph indexes, Table 3 shows that strength and clustering coefficient yield similar prediction performance, and their combination performs comparably to their separate training. In turn, the indicators directly based on phase synchronization produce assessments comparable to those of the graph indexes, meaning that the network regression can handle FC indicators at a lower computational cost.

Table 3.

Obtained prediction performance of the Wide&Deep neural network regression fed by the tested functional connectivity predictors extracted from the resting-state data, contrasting two different wide path configurations of rhythm extraction: all considered rhythms and .

Overall, the network regression reaches an R-squared value as high as on average across the FC indicators extracted from DBI and from DBII, respectively, outperforming all previous baseline linear regression outcomes displayed in Table 1. Moreover, the joint use of all frequency bands achieves better predictive performance than the two-rhythm ensemble in each evaluated EEG collection. Nevertheless, the prediction errors assessed by MAE and RMSE are still high, and they are higher for DBII than for DBI because of its greater variability.

Still, the DRN performance can be enhanced by improving its robustness against noisy input sets, which make the data-driven regressor produce overfitted prediction assessments. To cope with this issue, we consider two strategies. Firstly, we incorporate the thresholding method usually performed in functional connectivity analysis at the preprocessing stage to remove false connections and noise. Following the procedure in [53], we fix the proportional thresholding rule to , preserving a sufficient amount of links under a value of p ≤ 0.1. Secondly, the leave-one-out cross-validation is further refined by incorporating Monte Carlo dropout layers, whose neurons have a probability of being ignored during both training and validation. Therefore, both assessments in Equations (8) and (9) are recomputed by averaging over Q iterations of the Monte Carlo dropout applied to the dense DRN layers. Note that the dropout rate is expected to be low because of the relatively small amount of input data in both tested databases. So, we fix the dropout rate heuristically to while the number of iterations is set to .
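Proportional thresholding keeps only the strongest fraction of links. A NumPy sketch, assuming a symmetric weight matrix and a hypothetical fraction `p` (the value used in this work is not reproduced here); the Brain Connectivity Toolbox offers a `threshold_proportional` function for the same purpose, whose tie handling may differ from this version.

```python
import numpy as np


def threshold_proportional(W, p):
    """Keep the strongest fraction `p` of the links of a symmetric weight matrix
    and set the remaining weights (and the diagonal) to zero."""
    W = np.array(W, dtype=float)                     # work on a copy
    np.fill_diagonal(W, 0.0)
    iu = np.triu_indices(W.shape[0], k=1)
    w = W[iu]                                        # each undirected link once
    n_keep = int(round(p * w.size))
    out = np.zeros_like(W)
    if n_keep > 0:
        keep = np.argsort(w)[::-1][:n_keep]          # positions of the largest weights
        out[iu[0][keep], iu[1][keep]] = w[keep]
        out = out + out.T                            # restore symmetry
    return out
```

Because the rule fixes the fraction of retained links rather than an absolute weight, every subject keeps the same number of connections, which keeps graph indexes comparable across subjects.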

Table 4 displays the regression performance computed by leave-one-out cross-validation together with Monte Carlo dropout, revealing that the prediction improves notably when neurons have a probability of being ignored during training and validation. On average, the R-squared value decreases by for DBI, but the MAE and RMSE prediction errors fall by nearly half. For DBII, the R-squared value rises by while the prediction errors shrink by almost . Once again, extraction from three frequency rhythms is more effective. Additionally, the thresholding procedure (input predictor sets denoted with *) seems to improve the prediction assessment, but only to some extent. As a result, the improved validation procedure combined with thresholding reduces the overfitting effect, especially for input measures with higher variability.

Table 4.

Prediction performance of the Wide&Deep neural network regression fed by the tested functional connectivity indicators extracted from the resting-state data, employing the validation procedure that includes the Monte Carlo dropout layers combined with the threshold procedure. The input predictor sets denoted with * are thresholded. Bold numbers show the best result of each experiment.

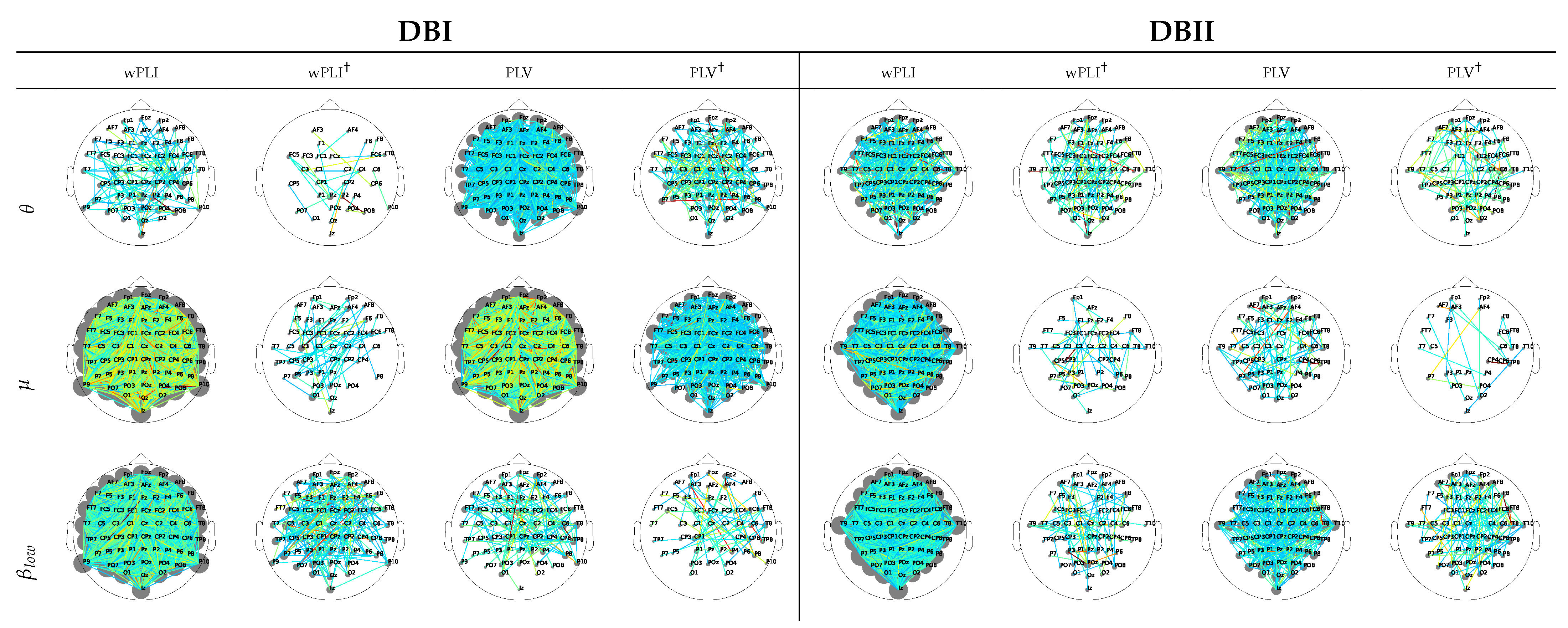

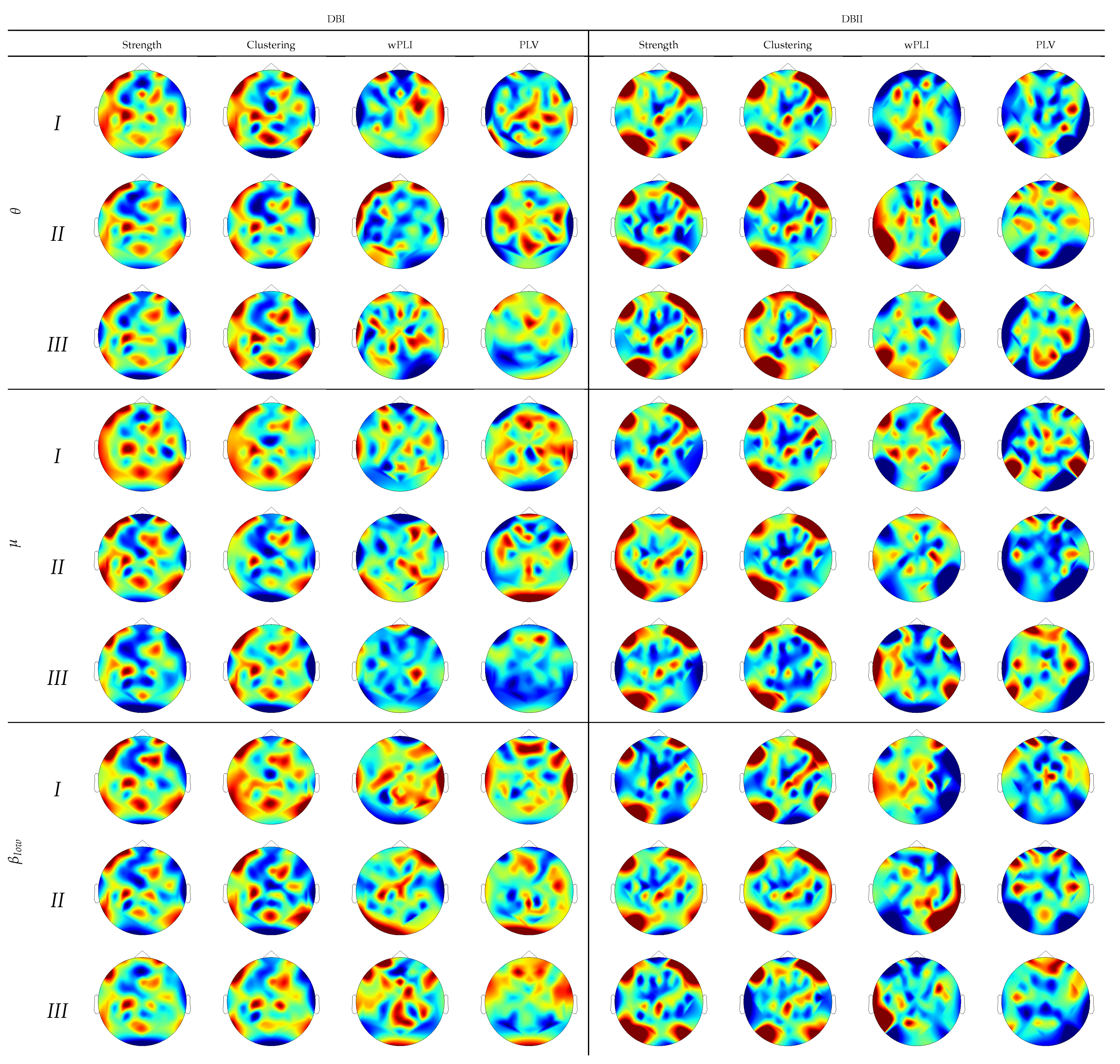

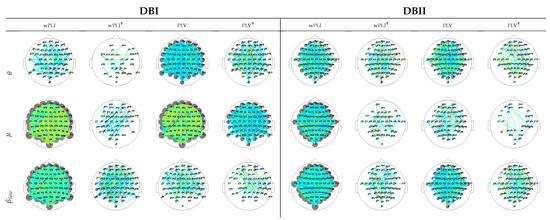

For interpretation purposes, Figure 4 depicts the DRN weights that contribute most to the prediction performance, produced by wPLI and PLV after introducing the leave-one-out cross-validation. For DBI, the weights of the former measure (first column) are robust and spread all over the scalp, as happens with the latter FC measure (third column). DBII faces a similar situation (fifth and seventh columns), though providing fewer estimates, which may be explained by the higher complexity of DBII. Next, the use of leave-one-out cross-validation with Monte Carlo dropout (noted with †) makes the number of contributing links decrease sharply, thereby reducing DRN overfitting, as seen in all even columns regardless of the validated data collection. Note that in this case, all rhythms contribute, though to different extents. Besides, most of the relevant links appear over the frontal-occipital and parietal-occipital areas directly related to MI responses [54].

Figure 4.

DRN weights contributing most to the prediction performance, learned for wPLI and PLV predictors using two validation scenarios: leave-one-out cross-validation and leave-one-out cross-validation with Monte Carlo dropout (noted with †).

4.3. Enhanced DRN Prediction Assessment Using Subject Efficiency Clustering

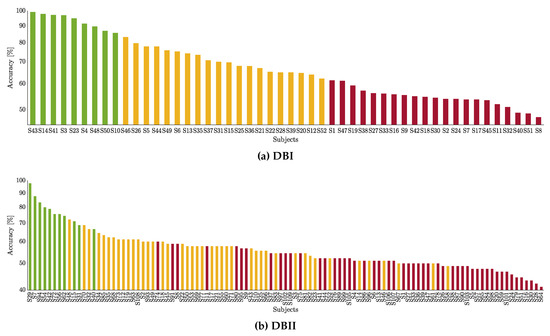

To improve the regression performance, we consider the differences in neural responses among users categorized by their motor skills as a critical factor affecting the data-driven estimator in Equation (6). Therefore, we find the number of subject groups from the MI classification performance using a clustering approach: the silhouette score is used to select the number of clusters, yielding three, and the k-means algorithm then computes the membership of each subject.
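The subject-clustering step can be sketched on the one-dimensional vector of per-subject accuracies. To stay self-contained, this sketch implements k-means and the silhouette score directly in NumPy (scikit-learn’s `KMeans` and `silhouette_score` are common alternatives); the candidate range of k, the quantile-based initialization, and the function names are assumptions.

```python
import numpy as np


def kmeans_1d(x, k, n_iter=100):
    """Lloyd's k-means on a 1-D array, initialized at evenly spaced quantiles."""
    centers = np.quantile(x, (np.arange(k) + 0.5) / k)
    for _ in range(n_iter):
        labels = np.argmin(np.abs(x[:, None] - centers[None, :]), axis=1)
        new = np.array([x[labels == j].mean() if np.any(labels == j) else centers[j]
                        for j in range(k)])
        if np.allclose(new, centers):
            break
        centers = new
    return labels, centers


def silhouette(x, labels):
    """Mean silhouette coefficient using absolute differences as distances."""
    groups = np.unique(labels)
    if groups.size < 2:
        return 0.0
    D = np.abs(x[:, None] - x[None, :])
    s = np.zeros(len(x))
    for i in range(len(x)):
        same = labels == labels[i]
        if same.sum() < 2:
            continue                                   # singletons score 0
        a = D[i, same].sum() / (same.sum() - 1)        # mean intra-cluster distance
        b = min(D[i, labels == g].mean() for g in groups if g != labels[i])
        s[i] = (b - a) / max(a, b) if max(a, b) > 0 else 0.0
    return s.mean()


def cluster_subjects(acc, k_values=(2, 3, 4, 5)):
    """Choose k by the highest silhouette, then return k and the k-means labels."""
    scores = {k: silhouette(acc, kmeans_1d(acc, k)[0]) for k in k_values}
    k_best = max(scores, key=scores.get)
    return k_best, kmeans_1d(acc, k_best)[0]
```

The silhouette rewards partitions whose members are close to their own cluster and far from the nearest other cluster, so it penalizes both merging distinct accuracy levels and splitting a compact group.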

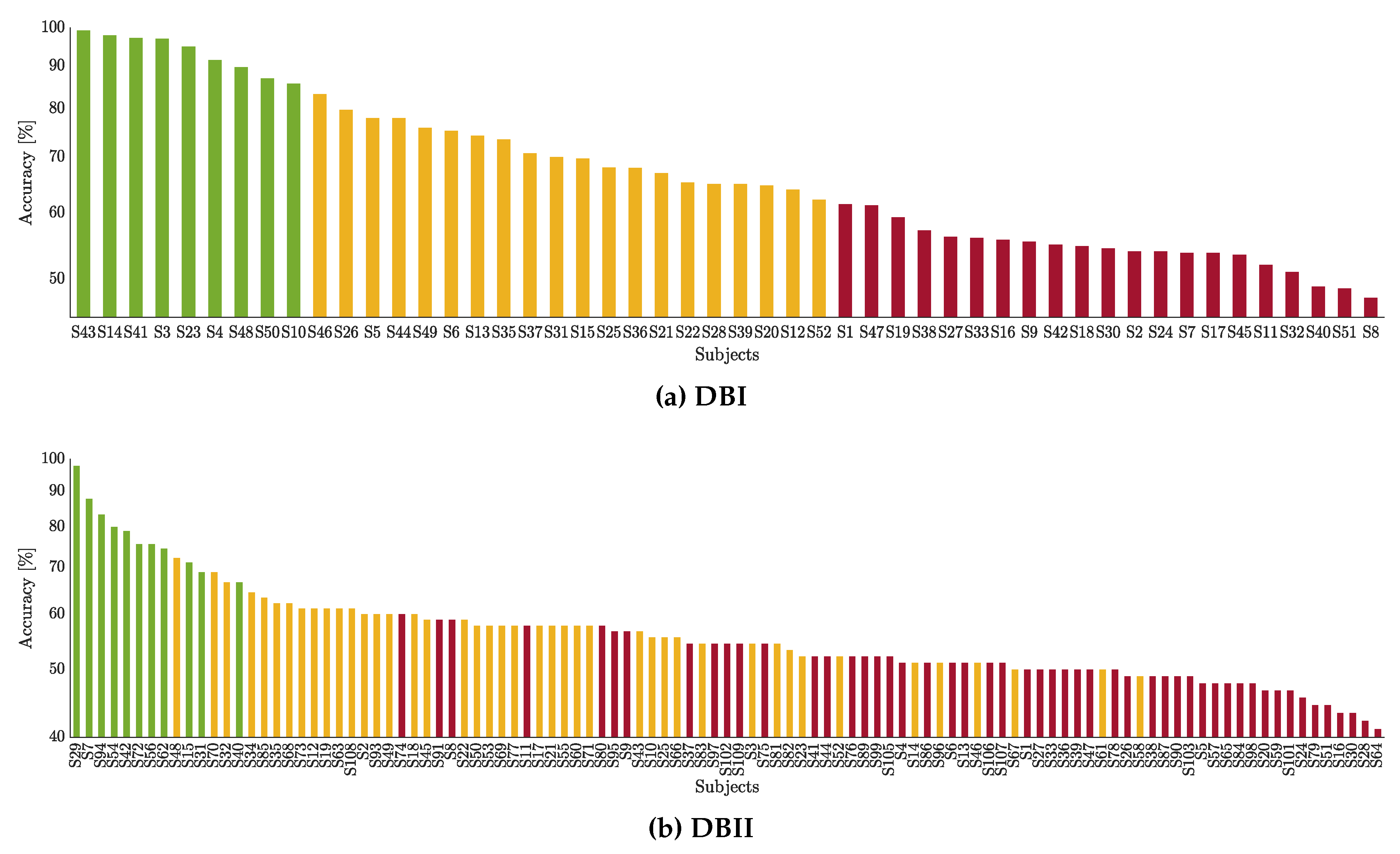

Figure 5 presents the classification performance for the studied databases. A feature selection strategy is applied over the well-known CSP-based features to predict the MI label with a Linear Discriminant Analysis classifier, adopting a -fold cross-validation scheme. The three obtained groups are depicted as color bars: Group I contains the best-performing subjects (green), Group II the subjects with intermediate accuracy (yellow), and Group III the worst-performing subjects (red). As seen, while DBI holds compactly distributed clusters, neighboring groups are mixed in DBII. Of note, Group I includes the lowest number of individuals ( in DBII), whereas Groups II and III comprise the rest.

Figure 5.

Partitions of individuals clustered by CSP-based accuracy within the motor imagery interval. Each subject’s performance is colored according to the assigned inefficiency partition: Group I (green), Group II (yellow), and Group III (red).

For each subject partition, we then recompute the DNR prediction. That is, we test the functional connectivity indicators of all three frequency rhythms extracted from resting-state data, using leave-one-out cross-validation with Monte Carlo dropout layers. Table 5 shows that the prediction improves (R-squared values increase while errors decrease) regardless of the subject group under consideration. Moreover, the improvement is larger for DBII, suggesting that partition-based regression analysis can also be effective for databases with more complex EEG measurements.
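The three scores used to judge this improvement can be written out explicitly. The sketch below assumes that R-squared is the coefficient of determination, 1 - SS_res/SS_tot, and that MAE and RMSE are averaged over subjects; the function name and the toy numbers are illustrative only.

```python
import math
from typing import Dict, Sequence


def regression_scores(y_true: Sequence[float], y_pred: Sequence[float]) -> Dict[str, float]:
    """R-squared (coefficient of determination), MAE, and RMSE of a set of predictions."""
    n = len(y_true)
    mean_y = sum(y_true) / n
    residuals = [t - p for t, p in zip(y_true, y_pred)]
    ss_res = sum(r * r for r in residuals)           # unexplained variation
    ss_tot = sum((t - mean_y) ** 2 for t in y_true)  # total variation around the mean
    return {
        "R2": 1.0 - ss_res / ss_tot,
        "MAE": sum(abs(r) for r in residuals) / n,
        "RMSE": math.sqrt(ss_res / n),
    }


# Hypothetical accuracies of four subjects and their predicted values.
scores = regression_scores([0.6, 0.7, 0.8, 0.9], [0.62, 0.68, 0.81, 0.88])
```

An improvement in the sense of Table 5 corresponds to R2 moving toward 1 while MAE and RMSE shrink; RMSE penalizes large individual misses more than MAE does.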

Table 5.

DNR performance of the functional connectivity (FC) predictors derived from the resting-state data, achieved by each subject partition. Prediction is carried out employing Monte Carlo dropout and the wide path configuration of all extracted rhythms ().

The next aspect concerns the assessment of resting-state activation with a reduced number of electrodes. To this end, we evaluate the DNR performance for the predictor sets extracted over the sensorimotor area, selecting the following electrodes, as suggested in [44]: FC1, FC2, FC3, FC4, FC5, FC6, Cz, C1, C2, C3, C4, C5, C6, CPz, CP1, CP2, CP3, CP4, CP5, and CP6. In Table 5, the lower part of each database presents the assessments computed for the sensorimotor zone (denoted with ⋆), showing that the channel selection strategy also improves the performance of every subject partition compared with the corresponding values in Table 4, obtained for the whole set of individuals. Nevertheless, using all electrodes improves the DNR prediction to a greater degree.
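Restricting the predictors to the sensorimotor area amounts to taking a sub-matrix of each connectivity matrix before vectorizing its links. The sketch below assumes channels-by-channels FC matrices and uses the electrode list given above; the helper names and the toy montage are hypothetical. With the 20 listed electrodes, each rhythm contributes 20 × 19 / 2 = 190 unique links, against 2016 for a 64-channel montage.

```python
from typing import List, Sequence, Tuple

# Sensorimotor electrodes listed in the text.
SENSORIMOTOR = [
    "FC1", "FC2", "FC3", "FC4", "FC5", "FC6",
    "Cz", "C1", "C2", "C3", "C4", "C5", "C6",
    "CPz", "CP1", "CP2", "CP3", "CP4", "CP5", "CP6",
]


def select_channels(
    fc: Sequence[Sequence[float]],
    channels: Sequence[str],
    keep: Sequence[str] = SENSORIMOTOR,
) -> Tuple[List[List[float]], List[str]]:
    """Restrict a channels x channels FC matrix to the requested electrodes (absent ones are skipped)."""
    idx = [channels.index(ch) for ch in keep if ch in channels]
    sub = [[fc[i][j] for j in idx] for i in idx]
    return sub, [channels[i] for i in idx]


def upper_triangle(matrix: Sequence[Sequence[float]]) -> List[float]:
    """Vectorize the unique links (i < j) of a symmetric FC matrix as regression predictors."""
    n = len(matrix)
    return [matrix[i][j] for i in range(n) for j in range(i + 1, n)]


# Toy five-channel montage; entry (i, j) is simply 10 * i + j for easy checking.
montage = ["Fp1", "FC1", "C3", "Cz", "O1"]
toy_fc = [[10.0 * i + j for j in range(5)] for i in range(5)]
sub_fc, kept = select_channels(toy_fc, montage)
```

Channels are returned in the order of the selection list, so the vectorized links keep the same meaning across subjects regardless of how each recording ordered its electrodes.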

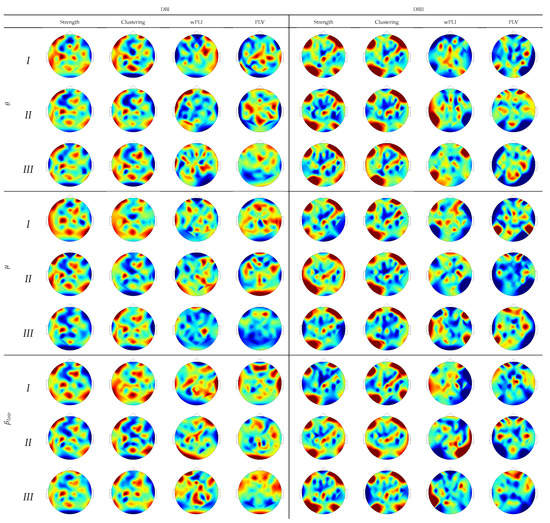

To further interpret the learned regression weights, Figure 6 maps their estimates over the scalp for each subject partition. In DBI, the topograms of Groups I and II are similar regardless of the extracted rhythm, especially for the relevance values of both graph indexes. In contrast, Group III behaves differently: its weights tend to be smaller and more spread out, as noticed in the topograms of either connectivity measure. Note that the FC measures emphasize the sensorimotor area more than the graph indexes, which highlight the occipital and frontal zones related to attention and visual tasks. The DBII topograms are comparable to those of DBI but show more variability.

Figure 6.

Topograms depicting the DNR weights learned for each inefficiency subject partition using FC predictors.

4.4. Network Regression Prediction of Initial-Training Synchronization

To assess initial-training synchronization, we evaluate how well the DNR predicts the MI accuracy at each run, which holds few trials and is affected by learning-related changes. We consider two wakefulness data segments for extracting the FC predictor (namely, the interval before the cue onset of the conventional MI trial timing, noted as , and the resting state, noted as ). As the FC predictor, we consider only the PLV measure, which, together with the single-run target accuracy vector, feeds the DNR estimator. PLV is chosen because it can be extracted in single-trial mode from wakefulness data.
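For reference, a single-trial PLV between two channels can be obtained by averaging the unit phasors of their instantaneous phase difference over the samples of one trial. The sketch below assumes the instantaneous phases are already available (for example, from the analytic signal of band-pass filtered EEG); the function name and toy phases are hypothetical.

```python
import cmath
import math
from typing import Sequence


def plv_single_trial(phase_a: Sequence[float], phase_b: Sequence[float]) -> float:
    """Time-averaged phase-locking value between two channels within one trial (0 to 1)."""
    if len(phase_a) != len(phase_b) or not phase_a:
        raise ValueError("phase series must be non-empty and equally long")
    phasor_sum = sum(cmath.exp(1j * (a - b)) for a, b in zip(phase_a, phase_b))
    return abs(phasor_sum) / len(phase_a)


# A constant phase lag gives perfect locking; phase differences spread uniformly give none.
locked = plv_single_trial([0.1 * t for t in range(100)], [0.1 * t - 0.7 for t in range(100)])
unlocked = plv_single_trial([2 * math.pi * k / 8 for k in range(8)], [0.0] * 8)
```

Because the average runs over time rather than over trials, a value exists for every trial, which is what makes PLV usable when a run contains only a handful of trials.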

Table 6 presents the prediction capability for the synchronization behavior appraised by the DNR in each subject partition. Compared with the performance on the whole set, the FC extracted from resting-state data increases each group's prediction, meaning that the network can, to some extent, predict with a reduced number of trials per run. The alternative of extracting PLV from enables a further improvement in each group's prediction capability. It is worth noting that the R-squared value remains high over the run sequence, particularly in Group III, which contains the subjects who need more guidance to develop BCI skills. Overall, this result for raises the possibility of carrying out the initial-training assessment without additional EEG data acquisition. In DBII, which has considerable variability, however, the target response vector computed within each run yields very low accuracy due to the lack of statistics (only 14 trials per run), so the DNR performance drops noticeably, resulting in R-squared values below .
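A back-of-the-envelope check shows why 14 trials per run give so little statistics. Assuming each trial is an independent correct/incorrect outcome, a per-run accuracy can only change in steps of 1/14 ≈ 0.07, and its binomial standard error is about 0.12 for a hypothetical true accuracy of 0.7; both figures are illustrative, not values from the study.

```python
import math


def accuracy_resolution(n_trials: int) -> float:
    """Smallest possible change in a per-run accuracy: one trial out of n."""
    return 1.0 / n_trials


def accuracy_standard_error(p: float, n_trials: int) -> float:
    """Binomial standard error of an accuracy p estimated from n independent trials."""
    return math.sqrt(p * (1.0 - p) / n_trials)


# Compare a 14-trial run with a hypothetical 100-trial run at the same true accuracy.
for n in (14, 100):
    print(n, round(accuracy_resolution(n), 3), round(accuracy_standard_error(0.7, n), 3))
```

An uncertainty of roughly ±0.12 on the target itself is comparable to the spread between subjects, which limits how much variance any regressor can explain.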

Table 6.

DNR performance in predicting initial-training synchronization, employing Monte Carlo dropout and the wide path configuration of all extracted rhythms (). The network regression is fed by the PLV predictor as the only single-trial FC indicator.

To cope with this issue, we train the DNR estimator with a transfer-learning approach that combines the values learned from each run's MI data with the weights inferred at the preceding run. Table 6 also displays the outcomes achieved on DBI and DBII (extracting PLV from ) and shows that, in all three subject partitions, this DNR prediction outperforms the strategies previously evaluated for predicting initial-training synchronization. As a result, each subject's competence can be predicted accurately enough after each run to carry out procedures aimed at improving their performance in MI tasks.
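One plausible reading of this run-to-run transfer is a warm start: the regressor for a given run is initialized with the weights learned at the preceding run and then fine-tuned on the few trials of the current run. The sketch below shows that idea on a linear model trained by full-batch gradient descent; the linear model, learning rate, epoch counts, and data are assumptions standing in for the DNR, not the authors' configuration.

```python
from typing import List, Optional, Sequence, Tuple

Params = Tuple[List[float], float]  # (weights, bias)


def predict(params: Params, x: Sequence[float]) -> float:
    w, b = params
    return sum(wj * xj for wj, xj in zip(w, x)) + b


def mse(params: Params, X: Sequence[Sequence[float]], y: Sequence[float]) -> float:
    return sum((predict(params, xi) - yi) ** 2 for xi, yi in zip(X, y)) / len(y)


def train_run(
    X: Sequence[Sequence[float]],
    y: Sequence[float],
    init: Optional[Params] = None,
    lr: float = 0.1,
    epochs: int = 50,
) -> Params:
    """Full-batch gradient descent on MSE, optionally warm-started from a previous run."""
    d, n = len(X[0]), len(X)
    w = list(init[0]) if init is not None else [0.0] * d
    b = init[1] if init is not None else 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for xi, yi in zip(X, y):
            err = predict((w, b), xi) - yi
            for j in range(d):
                grad_w[j] += 2.0 * err * xi[j] / n
            grad_b += 2.0 * err / n
        w = [wj - lr * gj for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


def train_sequential_runs(runs, **kwargs) -> List[Params]:
    """Train one model per run, each initialized with the previous run's weights."""
    history: List[Params] = []
    params: Optional[Params] = None
    for X, y in runs:
        params = train_run(X, y, init=params, **kwargs)
        history.append(params)
    return history


def target(a: float, b: float) -> float:
    return 0.5 * a + 0.2 * b + 0.1  # hypothetical relation shared by both runs


run1_X = [[i / 9, (7 * i % 10) / 9] for i in range(10)]
run1_y = [target(a, b) for a, b in run1_X]
run2_X = [[0.15, 0.8], [0.6, 0.3], [0.9, 0.55]]  # a run with very few trials
run2_y = [target(a, b) for a, b in run2_X]

previous = train_run(run1_X, run1_y, epochs=500)
warm = train_run(run2_X, run2_y, init=previous, epochs=5)  # transfer from the preceding run
cold = train_run(run2_X, run2_y, epochs=5)                 # same budget, no transfer
```

With the same short training budget on the few-trial run, the warm-started model starts close to a good solution, whereas the cold-started one is still far from it; this is the benefit the transfer is meant to provide when each run holds few trials.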

It is worth noting that, unlike the power-based predictors extracted from sensorimotor rhythms, the use of functional connectivity measures in BCI inefficiency prediction is still at an exploratory stage. Table 7 compares several recently presented works that employ correlates between accuracy and SMR or FC indicators, showing that the latter predictors combined with DNR are promising.

Table 7.

Performance comparison with works recently presented that employ correlates between accuracy and sensorimotor rhythms (SMR) or FC indicators. S denotes the number of subjects in respective datasets.

5. Concluding Remarks

Here, we develop a methodology for predicting the neurophysiological inefficiency of MI practice using EEG phase synchronization measures. A deep network regression model, evaluated on over 150 subjects, assesses the capability to predict pre-training desynchronization and initial-training synchronization. The prediction estimates should help determine whether a specific user needs to undergo additional calibration, while supplying an interpretation of the subjects' learning properties. Although training can be time-consuming and grows considerably with database size, this stage can be carried out offline. Once the Deep Network Regressor's weights are learned, evaluating our predictor is as fast as evaluating baseline models, enabling real-time applications such as the run-based prediction of initial-training synchronization.

From the obtained results of validation, the following aspects are to be emphasized:

Electrophysiological predictors based on functional connectivity. We explore the Phase Locking Value and the weighted Phase Lag Index as connectivity measures, together with their brain graph predictors (strength and clustering coefficient), to build a predictive regression model of BCI control. From the results obtained for the linear regression models (simple regression in Table 1 and multiple regression in Table 2), we conclude that the FC predictors extracted from resting-state data yield only fair prediction performance (R-squared below ) with notable variations, regardless of the input arrangement employed. This behavior worsens in DBII, an EEG collection with greater structural variability.
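The two graph predictors can be sketched for a weighted, undirected connectivity matrix. The sketch assumes node strength as the sum of a node's link weights and the weighted clustering coefficient in the geometric-mean form of Onnela et al., with non-negative weights normalized by the maximum; the paper's exact definitions may differ, and the function names are illustrative.

```python
from typing import List, Sequence

Matrix = Sequence[Sequence[float]]


def node_strength(W: Matrix) -> List[float]:
    """Sum of each node's link weights (self-connections ignored)."""
    return [sum(w for j, w in enumerate(row) if j != i) for i, row in enumerate(W)]


def weighted_clustering(W: Matrix) -> List[float]:
    """Weighted clustering coefficient: geometric mean of triangle weights (Onnela-style)."""
    n = len(W)
    w_max = max((W[i][j] for i in range(n) for j in range(n) if i != j), default=0.0)
    if w_max <= 0:
        return [0.0] * n
    # Cube roots of max-normalized, non-negative weights, with the diagonal zeroed.
    c = [
        [(max(W[i][j], 0.0) / w_max) ** (1.0 / 3.0) if i != j else 0.0 for j in range(n)]
        for i in range(n)
    ]
    coeffs = []
    for i in range(n):
        degree = sum(1 for j in range(n) if j != i and W[i][j] > 0)
        if degree < 2:
            coeffs.append(0.0)
            continue
        # Ordered pairs (j, h) count each triangle twice, matching degree * (degree - 1).
        total = sum(
            c[i][j] * c[j][h] * c[h][i]
            for j in range(n)
            for h in range(n)
            if len({i, j, h}) == 3
        )
        coeffs.append(total / (degree * (degree - 1)))
    return coeffs


# Fully connected toy graph versus a star graph (hypothetical unit weights).
complete = [[0.0 if i == j else 1.0 for j in range(4)] for i in range(4)]
star = [[1.0 if (i == 0) != (j == 0) else 0.0 for j in range(4)] for i in range(4)]
```

In a fully connected graph every pair of a node's neighbors is itself linked, so each node scores 1; in a star, no two leaves are linked, so every coefficient is 0 even though the hub has the largest strength.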

With regard to DNR, all considered FC predictors perform similarly, reaching more competitive prediction values (see Table 3, Table 4 and Table 5). In terms of interpretation, the DNR weights that contribute most to the prediction performance are comparable for the wPLI and PLV predictors (see Figure 4). One more consideration is the limited effectiveness of the thresholding method, usually applied to remove false connections and noise. Its performance may be jeopardized by the high intrasubject variability, demanding subject-specific tuning algorithms. Because the network regression learns directly from the connectivity values, it eases the need for elaborate feature extraction procedures based on functional connectivity analysis. It is worth noting that the network regression estimator benefits from all considered rhythms (i.e., ), though each contributes differently.
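For context, a common form of such thresholding is proportional thresholding, which keeps only a fixed fraction of the strongest links and zeroes the rest. The sketch below assumes that variant; the function name and toy weights are hypothetical, and the density is a free parameter, precisely the kind of setting that may need subject-specific tuning.

```python
from typing import List, Sequence


def proportional_threshold(W: Sequence[Sequence[float]], density: float) -> List[List[float]]:
    """Keep the strongest `density` fraction of undirected links; zero the others."""
    if not 0.0 <= density <= 1.0:
        raise ValueError("density must lie in [0, 1]")
    n = len(W)
    links = sorted(((W[i][j], i, j) for i in range(n) for j in range(i + 1, n)), reverse=True)
    n_keep = round(density * len(links))
    out = [[0.0] * n for _ in range(n)]
    for w, i, j in links[:n_keep]:
        out[i][j] = out[j][i] = w  # keep the matrix symmetric
    return out


# Toy symmetric FC matrix with six distinct links.
toy = [
    [0.0, 0.9, 0.1, 0.5],
    [0.9, 0.0, 0.7, 0.2],
    [0.1, 0.7, 0.0, 0.3],
    [0.5, 0.2, 0.3, 0.0],
]
kept_half = proportional_threshold(toy, 0.5)
```

A single global density treats a noisy subject and a clean one alike, which illustrates why a fixed threshold can discard informative links in subjects with high intrasubject variability.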

Quality of network regression models. While standard procedures can appraise the statistical significance of linear regression models, assessing and enhancing the prediction quality of network regression models is much more challenging because of the risk of overfitting [56]. Here, we propose leave-one-out cross-validation that includes Monte Carlo dropout layers (in which each neuron has a probability of being ignored during training and validation) to decrease the probability that rules learned from specific training data fail to generalize to new observations. As a result, the DNR prediction errors (MAE and RMSE) fall by nearly half (see Table 4). Furthermore, including the Monte Carlo dropout layers allows selecting a reduced set of FC links that enhances the prediction performance (see Figure 4), which improves the physiological interpretability of network regression models.
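Monte Carlo dropout can be illustrated on a toy regressor: dropout is left active at prediction time, the same input is passed through the network T times, and the mean and standard deviation of the outputs serve as the prediction and its spread. The two-layer network, its weights, the dropout rate, and T below are hypothetical and far smaller than a DNR; the sketch covers only the inference side, not training.

```python
import math
import random
from typing import List, Sequence, Tuple

Params = Tuple[List[List[float]], List[float], List[float], float]  # W1, b1, w2, b2


def dense(x: Sequence[float], W: Sequence[Sequence[float]], b: Sequence[float]) -> List[float]:
    return [sum(wij * xj for wij, xj in zip(row, x)) + bi for row, bi in zip(W, b)]


def dropout(h: Sequence[float], p: float, rng: random.Random) -> List[float]:
    """Inverted dropout: zero each unit with probability p and rescale survivors by 1/(1-p)."""
    return [0.0 if rng.random() < p else v / (1.0 - p) for v in h]


def mc_dropout_predict(
    x: Sequence[float], params: Params, p: float = 0.2, T: int = 200, seed: int = 0
) -> Tuple[float, float]:
    """Mean and standard deviation over T stochastic forward passes (T >= 2)."""
    if not 0.0 <= p < 1.0:
        raise ValueError("dropout rate must lie in [0, 1)")
    W1, b1, w2, b2 = params
    rng = random.Random(seed)
    samples = []
    for _ in range(T):
        h = [max(0.0, v) for v in dense(x, W1, b1)]  # ReLU hidden layer
        h = dropout(h, p, rng)                       # dropout stays active at inference
        samples.append(sum(wi * hi for wi, hi in zip(w2, h)) + b2)
    mean = sum(samples) / T
    std = math.sqrt(sum((s - mean) ** 2 for s in samples) / (T - 1))
    return mean, std


toy_params: Params = ([[1.0, 0.5], [0.3, -0.2], [0.8, 0.8]], [0.1, 0.0, -0.1], [0.6, -0.4, 0.9], 0.05)
mc_mean, mc_spread = mc_dropout_predict([1.0, 2.0], toy_params, p=0.5)
```

With p = 0 every pass is identical and the spread collapses to zero; with dropout active, the spread gives a rough indication of how strongly a prediction depends on particular units, which is the behavior that discourages the network from relying on a few over-fitted links.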

In practice, resting-state activation is frequently assessed with a reduced number of electrodes to lower the computational complexity and the set-up time. To this end, we evaluate the DNR performance for the predictor sets extracted over the sensorimotor area, showing that the channel selection strategy underperforms the use of the whole electrode set. This issue becomes more manifest in subjects with more prominent EEG variability (that is, high BCI inefficiency). As suggested in [57], the learned network weights depend on the variability resulting from the channel selection used, making the prediction performance vary notably from one subject partition to another.

Regression assessments using subject clustering of BCI inefficiency. One more issue impacting the regression prediction is the users' categorization according to their SMR activity and classifier performance during the MI runs. The obtained results show that the prediction performance improves (R-squared values increase while errors decrease) regardless of the subject group under consideration. Since the improvement is larger in DBII, we hypothesize that regression analysis using partitions may be more effective in databases with complex EEG measurements. Consequently, combining clustering with DNR models can enhance the understanding of the factors influencing the accuracy of subjects with significant BCI inefficiency.

DNR prediction with transfer learning. We also assess the DNR performance of predicting the MI accuracy at each run using the single-trial PLV predictor of wakefulness data. To deal with the small number of trials per run, we combine the values learned from each run's MI data with the weights inferred at the preceding run. Compared with the performance on the whole set, the initial-training synchronization prediction then increases in each group of individuals.

For future work, the authors plan to enhance FC predictors’ feature extraction, providing a better understanding of their impact and interaction on BCI-related tasks to identify potential non-learners.

To profit from the learning progression in MI-based BCIs, dynamic network regression models should be developed to capture the latent trends of the regression sequence. In this line of analysis, the cluster-based enhancing procedure and the accuracy response vector should also account for the dynamic behavior of the FC predictors. To improve the DNR prediction, an extended panel of standardized and validated psychological questionnaires is to be included within the network estimator, accounting for users' specific characteristics such as daily motor activity and age.

Another aspect to explore is adapting the DNR pipeline to learn the weights supporting prediction in a broader class of clinical applications, relying on the ability of deep learning architectures to extract complex random structures from EEG data.

6. Author Resume

- Julian Caicedo-Acosta received his undergraduate degree in electronic engineering (2018) and his M.Sc. degree in engineering industrial automation (2019) from the Universidad Nacional de Colombia. Currently, he is a PhD student at the same university. His research interests include machine learning, signal processing and bioengineering.

- German A. Castaño received his undergraduate degrees in economics (1986) and business administration (1987), and his specialization in informatics administration (1993) from the Universidad Nacional de Colombia. Currently, he is a Professor in the Department of Administration at the Universidad Nacional de Colombia – Manizales. In addition, he is Chairman of the “Grupo de investigación Cultura de la Calidad en la Educación” at the same university. His research interests include quality of education, peace, and post-conflict.

- Carlos Acosta-Medina received a B.S. degree in Mathematics from Universidad de Sucre in Colombia in 1996. In 2000, he received an M.Sc. degree in mathematics, and in 2008 a Ph.D. in mathematics, both from Universidad Nacional de Colombia – Sede Medellín. Currently, he is an Associate Professor at Universidad Nacional de Colombia – Sede Manizales. His research interests are Regularization, Conservation Laws, and Discrete Mollification.

- Andres Alvarez-Meza received his undergraduate degree in electronic engineering (2009), his M.Sc. degree in engineering industrial automation (2011), and his Ph.D. in engineering—automatics (2015) from the Universidad Nacional de Colombia. Currently, he is a Professor in the Department of Electrical, Electronic, and Computation Engineering at the Universidad Nacional de Colombia – Manizales. His research interests include machine learning and signal processing.

- German Castellanos-Dominguez received his undergraduate degree in radiotechnical systems and his Ph.D. in processing devices and systems from the Moscow Technical University of Communications and Informatics, in 1985 and 1990, respectively. Currently, he is a Professor in the Department of Electrical, Electronic, and Computation Engineering at the Universidad Nacional de Colombia, Manizales. In addition, he is Chairman of the GCPDS at the same university. His teaching and research interests include information and signal theory, digital signal processing, and bioengineering.

Author Contributions

Conceptualization, C.A.-M., A.A.-M., and G.C.-D.; methodology, J.C.-A., A.A.-M., and G.C.-D.; software, J.C.-A.; validation, J.C.-A. and A.A.-M.; formal analysis, C.A.-M., G.A.C., A.A.-M., and G.C.-D.; investigation, J.C.-A.; resources, G.A.C.; data curation, J.C.-A.; writing—original draft preparation, J.C.-A., G.C.-D.; writing—review and editing, A.A.-M., C.A.-M., and G.A.C.; visualization, J.C.-A.; supervision, A.A.-M. and G.C.-D.; project administration, C.A.-M. and G.A.C.; funding acquisition, G.A.C. All authors have read and agreed to the published version of the manuscript.

Funding

This manuscript is the result of the research developed by the research program “RECONSTRUCCIÓN DEL TEJIDO SOCIAL EN ZONAS DE POSCONFLICTO EN COLOMBIA” (Reconstruction of the Social Fabric in Post-Conflict Zones in Colombia), SIGP code 57579, within the research project “Fortalecimiento docente desde la alfabetización mediática Informacional y la CTel, como estrategia didáctico-pedagógica y soporte para la recuperación de la confianza del tejido social afectado por el conflicto” (Strengthening teachers through media and information literacy and science, technology, and innovation as a didactic-pedagogical strategy and support for recovering the trust of the social fabric affected by the conflict), SIGP code 58950. Funded under the Colombia Científica call, Contract No. FP44842-213-2018.

Institutional Review Board Statement

Ethical review and approval were waived for this study because it uses public datasets that previously underwent ethical review.

Informed Consent Statement

Not applicable, since this study uses duly anonymized public databases.

Data Availability Statement

The databases used in this study are public and can be found at the following links: DBI: http://gigadb.org/dataset/100295, accessed on 30 January 2021; DBII: https://physionet.org/content/eegmmidb/1.0.0/, accessed on 30 January 2021.

Conflicts of Interest

The authors declare that this research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

- Kimura, T.; Nakano, W. Repetition of a cognitive task promotes motor learning. Hum. Mov. Sci. 2019, 66, 109–116.

- Agosti, V.; Sirico, M. Motor imagery as a tool for motor learning and improving sports performance: A mini review on the state of the art. Sport Sci. 2020, 13, 13–17.

- Kraeutner, S.; Eppler, S.; Stratas, A.; Boe, S. Generate, maintain, manipulate? Exploring the multidimensional nature of motor imagery. Psychol. Sport Exerc. 2020, 48, 101673.

- Bunno, Y. Effectiveness of Motor Imagery on Physical Therapy: Neurophysiological Aspects of Motor Imagery. In Physical Therapy Effectiveness; IntechOpen: London, UK, 2019.

- Sirico, F.; Romano, V. Effect of Video Observation and Motor Imagery on Simple Reaction Time in Cadet Pilots. J. Funct. Morphol. Kinesiol. 2020, 5, 89.

- Nicholson, V.; Watts, N.; Chani, Y.; Keogh, J. Motor imagery training improves balance and mobility outcomes in older adults: A systematic review. J. Physiother. 2019, 65, 200–207.

- Bhoyroo, R.; Hands, B.; Wilmut, K.; Hyde, C.; Wigley, A. Motor planning with and without motor imagery in children with Developmental Coordination Disorder. Acta Psychol. 2019, 199, 102902.

- Teixeira-Machado, L.; Arida, R.; de Jesus Mari, J. Dance for neuroplasticity: A descriptive systematic review. Neurosci. Biobehav. Rev. 2019, 96, 232–240.

- Canepa, P.; Sbragi, A.; Saino, F.; Biggio, M.; Bove, M.; Bisio, A. Thinking Before Doing: A Pilot Study on the Application of Motor Imagery as a Learning Method During Physical Education Lesson in High School. Front. Sport. Act. Living 2020, 2, 126.

- Asensio-Cubero, J.; Gan, J.; Palaniappan, R. Multiresolution analysis over simple graphs for brain computer interfaces. J. Neural Eng. 2013, 10 4, 046014.

- Singh, A.; Lal, S.; Guesgen, H. Small Sample Motor Imagery Classification Using Regularized Riemannian Features. IEEE Access 2019, 7, 46858–46869.

- Miladinović, A.; Ajčević, M.; Jarmolowska, J.; Marusic, U.; Coluss, M.; Silveri, G.; Battaglini, P.; Accardo, A. Effect of power feature covariance shift on BCI spatial-filtering techniques: A comparative study. Comput. Methods Programs Biomed. 2021, 198, 105808.

- Saha, S.; Baumert, M. Intra- and Inter-subject Variability in EEG-Based Sensorimotor Brain Computer Interface: A Review. Front. Comput. Neurosci. 2020, 13, 87.

- Zakkay, E.; Abu-Rmileh, A.; Geva, A.B.; Shriki, O. Asynchronous Brain Computer Interfaces Using Echo State Networks. In Proceedings of the 2020 International Joint Conference on Neural Networks (IJCNN), Glasgow, UK, 19–24 July 2020; pp. 1–8.

- Botrel, L.; Acqualagna, L.; Blankertz, B.; Kubler, A. Short progressive muscle relaxation or motor coordination training does not increase performance in a brain-computer interface based on sensorimotor rhythms (SMR). Int. J. Psychophysiol. Off. J. Int. Organ. Psychophysiol. 2017, 121, 29–37.

- Herszage, J.; Dayan, E.; Sharon, H.; Censor, N. Explaining Individual Differences in Motor Behavior by Intrinsic Functional Connectivity and Corticospinal Excitability. Front. Neurosci. 2020, 14, 76.

- Zhang, R.; Xu, P.; Chen, R.; Li, F.; Guo, L.; Li, P.; Zhang, T.; Yao, D. Predicting Inter-session Performance of SMR-Based Brain–Computer Interface Using the Spectral Entropy of Resting-State EEG. Brain Topogr. 2015, 28, 680–690.

- Velásquez-Martínez, L.; Caicedo-Acosta, J.; Castellanos-Domínguez, G. Entropy-Based Estimation of Event-Related De/Synchronization in Motor Imagery Using Vector-Quantized Patterns. Entropy 2020, 22, 703.

- Bonita, J.; Ambolode, L.; Rosenberg, B.; Cellucci, C.; Watanabe, T.; Rapp, P.; Albano, A. Time domain measures of inter-channel EEG correlations: A comparison of linear, nonparametric and nonlinear measures. Cogn. Neurodynamics 2013, 8, 1–15.

- Corsi, M.; Chavez, M.; Schwartz, D.; George, N.; Hugueville, L.; Kahn, A.; Dupont, S.; Bassett, D.; De Vico, F. Functional disconnection of associative cortical areas predicts performance during BCI training. NeuroImage 2020, 209, 116500.

- Bakhshali, M.; Ebrahimi-Moghadam, A.; Khademi, A.; Moghimi, S. Coherence-based correntropy spectral density: A novel coherence measure for functional connectivity of EEG signals. Measurement 2019, 140, 354–364.

- Gonuguntla, V.; Wang, Y.; Veluvolu, V. Event-Related Functional Network Identification: Application to EEG Classification. IEEE J. Sel. Top. Signal Process. 2016, 10, 1284–1294.

- Filho, C.; Attux, R.; Castellano, G. Can graph metrics be used for EEG-BCIs based on hand motor imagery? Biomed. Signal Process. Control. 2018, 40, 359–365.

- Feng, F.; Qian, L.; Hu, H.; Sun, Y. Functional Connectivity for Motor Imaginary Recognition in Brain-computer Interface. In Proceedings of the 2020 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Toronto, ON, Canada, 11–14 October 2020; pp. 3678–3682.

- Manuel, A.; Guggisberg, A.; Thézé, R.; Turri, F.; Schnider, A. Resting-state connectivity predicts visuo-motor skill learning. NeuroImage 2018, 176, 446–453.

- Yoon, J.; Lee, M. Effective Correlates of Motor Imagery Performance based on Default Mode Network in Resting-State. In Proceedings of the 2020 8th International Winter Conference on Brain-Computer Interface (BCI), Gangwon, Korea, 26–28 February 2020; pp. 1–5.

- Gonzalez-Astudillo, J.; Cattai, T.; Bassignana, G.; Corsi, M.; De Vico Fallani, F. Network-based brain computer interfaces: Principles and applications. J. Neural Eng. 2020.

- Hamedi, H.; Salleh, S.; Noor, A. Electroencephalographic Motor Imagery Brain Connectivity Analysis for BCI: A Review. Neural Comput. 2016, 28, 999–1041.

- Li, X.; Wu, Y.; Wei, M.; Guo, Y.; Yu, Z.; Wang, H.; Li, Z.; Fan, H. A novel index of functional connectivity: Phase lag based on Wilcoxon signed rank test. Cogn. Neurodynamics 2020, 1–16.

- Orsborn, L.; Pesaran, B. Parsing learning in networks using brain–machine interfaces. Curr. Opin. Neurobiol. 2017, 46, 76–83.

- Robinson, N.; Thomas, K.; Vinod, A. Neurophysiological predictors and spectro-spatial discriminative features for enhancing SMR-BCI. J. Neural Eng. 2018, 15 6, 066032.

- Sugata, H.; Yagi, K.; Yazawa, S.; Nagase, Y.; Tsuruta, K.; Ikeda, T.; Nojima, I.; Hara, M.; Matsushita, M.; Kawakami, K.; et al. Role of beta-band resting-state functional connectivity as a predictor of motor learning ability. NeuroImage 2020, 210, 116562.

- Wang, S.; Williams, J.; Wilmut, K. Constraints on motor planning across the life span: Physical, cognitive, and motor factors. Psychol. Aging 2019, 35, 421.

- He, T.; Kong, R.; Holmes, A.; Nguyen, M.; Sabuncu, M.; Eickhoff, S.; Bzdok, D.; Feng, J.; Yeo, B. Deep neural networks and kernel regression achieve comparable accuracies for functional connectivity prediction of behavior and demographics. NeuroImage 2020, 206, 116276.

- Cha, H.; Han, C.; Im, C. Prediction of Individual User’s Dynamic Ranges of EEG Features from Resting-State EEG Data for Evaluating Their Suitability for Passive Brain–Computer Interface Applications. Sensors 2020, 20, 988.

- Li, F.; Yi, C.; Song, L.; Jiang, Y.; Peng, W.; Si, Y.; Zhang, T.; Zhang, R.; Yao, D.; Zhang, Y.; et al. Brain Network Reconfiguration During Motor Imagery Revealed by a Large-Scale Network Analysis of Scalp EEG. Brain Topogr. 2018, 32, 304–314.

- Hayashi, M.; Tsuchimoto, S.; Mizuguchi, N.; Miyatake, M.; Kasuga, S.; Ushiba, J. Two-stage regression of high-density scalp electroencephalograms visualizes force regulation signaling during muscle contraction. J. Neural Eng. 2019, 16, 056020.

- Shu, X.; Chen, S.; Yao, L.; Sheng, X.; Zhang, D.; Jiang, N.; Jia, J.; Zhu, X. Fast Recognition of BCI-Inefficient Users Using Physiological Features from EEG Signals: A Screening Study of Stroke Patients. Front. Neurosci. 2018, 12, 93.

- G, C.; Ward, B.; Xie, C.; Li, W.; Chen, G.; Goveas, J.; P, A.; Li, S. A clustering-based method to detect functional connectivity differences. NeuroImage 2012, 61, 56–61.

- Kim, Y.; Lee, S.; Kim, H.; Lee, S.; Lee, S.; Kim, D. Reduced Burden of Individual Calibration Process in Brain-Computer Interface by Clustering the Subjects based on Brain Activation. In Proceedings of the 2019 IEEE International Conference on Systems, Man and Cybernetics (SMC), Toronto, ON, Canada, 6–9 October 2019; pp. 2139–2143.

- Sannelli, C.; Vidaurre, C.; Müller, K.; Blankertz, B. A large scale screening study with a SMR-based BCI: Categorization of BCI users and differences in their SMR activity. PLoS ONE 2019, 14, e0207351.

- Fiederer, L.; Völker, M.; Schirrmeister, R.; Burgard, W.; Boedecker, J.; Ball, T. Hybrid Brain-Computer-Interfacing for Human-Compliant Robots: Inferring Continuous Subjective Ratings With Deep Regression. Front. Neurorobotics 2019, 13, 76.

- Andrade, C. The P Value and Statistical Significance: Misunderstandings, Explanations, Challenges, and Alternatives. Indian J. Psychol. Med. 2019, 41, 210–215.

- Lee, M.; Yoon, Y.; Lee, S. Predicting Motor Imagery Performance From Resting-State EEG Using Dynamic Causal Modeling. Front. Hum. Neurosci. 2020, 14, 321.

- Mormann, F.; Lehnertz, K.; David, P.; Elger, C.E. Mean phase coherence as a measure for phase synchronization and its application to the EEG of epilepsy patients. Phys. Nonlinear Phenom. 2000, 144, 358–369.

- Bruns, A. Fourier-, Hilbert-and wavelet-based signal analysis: Are they really different approaches? J. Neurosci. Methods 2004, 137, 321–332.

- Vinck, M.; Oostenveld, R.; Van Wingerden, M.; Battaglia, F.; Pennartz, C.M. An improved index of phase-synchronization for electrophysiological data in the presence of volume-conduction, noise and sample-size bias. Neuroimage 2011, 55, 1548–1565.

- García-Prieto, J.; Bajo, R.; Pereda, E. Efficient computation of functional brain networks: Towards real-time functional connectivity. Front. Neuroinform. 2017, 11, 8.

- Cheng, H.; Koc, L.; Harmsen, J.; Shaked, T.; Aradhye, H.; Anderson, G.; Corrado, G.; Chai, W.; Ispir, M.; Anil, R.; et al. Wide & deep learning for recommender systems. In Proceedings of the 1st Workshop on Deep Learning for Recommender Systems, Boston, MA, USA, 15 September 2016; pp. 7–10.

- Cho, H.; Ahn, M.; Ahn, S.; Kwon, M.; Jun, S. EEG datasets for motor imagery brain-computer interface. GigaScience 2017, 6, gix034.

- Mingjun, L.; Junxing, W. An Empirical Comparison of Multiple Linear Regression and Artificial Neural Network for Concrete Dam Deformation Modelling. Math. Probl. Eng. 2019, 2019, 13.

- Chen, Y.; Meng, L.; Zhang, J. Graph Neural Lasso for Dynamic Network Regression. arXiv 2019, arXiv:1907.11114.

- Padilla-Buritica, J.; Hurtado, J.; Castellanos-Dominguez, G. Supervised piecewise network connectivity analysis for enhanced confidence of auditory oddball tasks. Biomed. Signal Process. Control 2019, 52, 341–346.

- Zhang, Y.; Xu, P.; Guo, D.; Yao, D. Prediction of SSVEP-based BCI performance by the resting-state EEG network. J. Neural Eng. 2013, 10, 066017.

- Vidaurre, C.; Haufe, S.; Jorajuría, T.; Müller, K.R.; Nikulin, V.V. Sensorimotor functional connectivity: A neurophysiological factor related to BCI performance. Front. Neurosci. 2020, 14, 1278.

- Calesella, F.; Testolin, A.; De Filippo De Grazia, M.; Zorzi, M. A Systematic Assessment of Feature Extraction Methods for Robust Prediction of Neuropsychological Scores from Functional Connectivity Data. In Brain Informatics; Mahmud, M., Vassanelli, S.O., Kaiser, M., Zhong, N., Eds.; Springer International Publishing: Cham, Switzerland, 2020; pp. 29–40.

- Baig, M.; Aslam, N.; Shum, H. Filtering techniques for channel selection in motor imagery EEG applications: A survey. Artif. Intell. Rev. 2019, 53, 1207–1232.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).