1. Introduction

Increasing interest in eco-friendly and sustainable power usage has shifted the paradigm of energy management [1] from a supply-based to a demand-oriented policy that encourages efficient energy usage by consumers, alongside continued efforts to create new industries. The convergence of industries is accelerating with the advent of Internet of Things (IoT) technology, and big data collected through various devices is essential for creating new opportunities and value. Energy services that combine IoT technology and big data are evolving into intelligent services and related smart objects [2]. Thus, integrating information and communication technology (ICT) with existing smart grid technology is becoming increasingly important. In particular, the smart meter is an essential platform for the advanced, active management of energy data [3]. As smart metering systems are deployed on a large scale worldwide, they must process large volumes of data from numerous nodes connected in a network [2]. In addition, improving smart metering systems [9,10,11,12] could improve the quality of services delivered to consumers and the performance of various applications, such as dynamic pricing [4], demand-side management [5,6], and load forecasting [7,8].

The energy data generated by smart meters are characterized by a high sampling rate and periodic collection. These high-sampling-rate data are used in various research fields, such as non-intrusive load monitoring (NILM), user segmentation, and the analysis and prediction of power consumption patterns. Improved performance has been confirmed by downsampling based on micropatterns in high-sampling-rate data [13]. The sampling rate of the sensor installed in a smart meter is given in its specifications and is not fixed; accordingly, the volume of generated data can reach several hundred terabytes per year [14]. Such an excessive amount of data imposes additional burdens, such as traffic problems on the transmission channel from the advanced metering infrastructure (AMI) to the cloud server. Therefore, compression and data pruning techniques for efficient transmission are becoming more important for saving bandwidth and storage costs [2]. In addition, a generalized structure for the compressed and pruned data is needed to communicate efficiently with other agents in the grid. In this respect, further compression is possible by exploiting the correlation structure of the data generated by the user's power consumption or by other agents in the community [15].
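The sketch below shows how such volumes arise. It multiplies fleet size, sampling rate, channel count, and sample width. The fleet of one million meters, the 1 Hz rate, the single channel, and the 8-byte sample width are hypothetical values chosen for illustration. They are not figures taken from [14].

```python
# Back-of-the-envelope estimate of yearly raw (uncompressed) smart meter data volume.
# All parameter values used below are illustrative assumptions, not figures from [14].

SECONDS_PER_YEAR = 365 * 24 * 60 * 60  # 31,536,000 s (non-leap year)


def yearly_volume_bytes(num_meters: int, sample_rate_hz: float,
                        channels: int, bytes_per_sample: int) -> float:
    """Raw bytes produced per year by a fleet of identical meters."""
    samples_per_meter = sample_rate_hz * SECONDS_PER_YEAR * channels
    return num_meters * samples_per_meter * bytes_per_sample


if __name__ == "__main__":
    # Hypothetical fleet: one million meters, 1 Hz, one channel, 8-byte floats.
    volume = yearly_volume_bytes(num_meters=1_000_000, sample_rate_hz=1.0,
                                 channels=1, bytes_per_sample=8)
    print(f"{volume / 1e12:.1f} TB per year")  # prints: 252.3 TB per year
```

The volume scales linearly with each factor. Raising the sampling rate or recording several quantities per meter (e.g., voltage, current, and power) multiplies the total accordingly.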

Compression techniques are divided into lossless and lossy compression. The choice between them depends on the intended use and involves a trade-off between the compression ratio (CR) and the information loss of the data [16]. Lossless compression is used for high-resolution or nonpersistent data with no visible pattern. Representative lossless compression methods include entropy coding, dictionary coding, and deflate coding [17]. In [18], a lossless compression method that compresses waveforms using a Gaussian approximation was proposed. Lossy compression is applied in error-tolerant scenarios, such as when a high CR is needed or in wireless communication environments [2]. Lossy compression is also the main approach for efficiently compressing sensor data such as smart meter data. Its primary purpose there is to reduce the costs arising from limited bandwidth, energy, and storage during transmission in IoT environments [18]. Lossy compression methods include the discrete wavelet transform (DWT) [19], singular value decomposition (SVD) [20], principal component analysis (PCA) [21], and compressive sensing (CS) [22]. Recently, a compression method that uses PCA and CS to capture the interdependence of the multiple variables generated by a smart meter has been proposed [2]. Compression methods based on deep learning techniques, such as the autoencoder (AE), have also been proposed [23].
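The sketch below makes the trade-off between CR and information loss concrete. It compresses one load profile in two ways. The lossless path uses deflate (Python's standard `zlib` module). The lossy path uses a multilevel Haar DWT that keeps only the k largest coefficients. Several choices are illustrative assumptions and are not the methods of [17] or [19]:

- the synthetic profile;
- the Haar wavelet;
- the top-k thresholding rule;
- the (uint32 index, float64 value) storage format used to count compressed bytes.

```python
import math
import random
import struct
import zlib


def haar_forward(x):
    """Multilevel orthonormal Haar DWT; len(x) must be a power of two."""
    c = list(x)
    n = len(c)
    while n > 1:
        half = n // 2
        avg = [(c[2 * i] + c[2 * i + 1]) / math.sqrt(2) for i in range(half)]
        det = [(c[2 * i] - c[2 * i + 1]) / math.sqrt(2) for i in range(half)]
        c[:n] = avg + det  # approximations first, details after
        n = half
    return c


def haar_inverse(c):
    """Inverse of haar_forward (rebuilds from the coarsest level upward)."""
    x = list(c)
    n = 1
    while n < len(x):
        out = []
        for a, d in zip(x[:n], x[n:2 * n]):
            out += [(a + d) / math.sqrt(2), (a - d) / math.sqrt(2)]
        x[:2 * n] = out
        n *= 2
    return x


def lossless_cr(x):
    """Deflate (zlib) on raw float64 bytes: bit-exact round trip."""
    raw = struct.pack(f"<{len(x)}d", *x)
    packed = zlib.compress(raw, 9)
    restored = list(struct.unpack(f"<{len(x)}d", zlib.decompress(packed)))
    assert restored == list(x)  # lossless: nothing is lost
    return len(raw) / len(packed)


def lossy_dwt(x, k):
    """Keep the k largest Haar coefficients; return (CR, RMSE)."""
    c = haar_forward(x)
    keep = sorted(range(len(c)), key=lambda i: abs(c[i]), reverse=True)[:k]
    sparse = [0.0] * len(c)
    for i in keep:
        sparse[i] = c[i]
    x_hat = haar_inverse(sparse)
    rmse = math.sqrt(sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x))
    cr = (8 * len(x)) / (12 * k)  # raw float64 bytes / stored (index, value) bytes
    return cr, rmse


if __name__ == "__main__":
    rng = random.Random(0)
    # Hypothetical 256-sample load profile (W): 100 W base load, a 1.5 kW
    # appliance on during samples 64-127, plus small measurement noise.
    load = [(1500.0 if 64 <= t < 128 else 100.0) + rng.gauss(0, 5)
            for t in range(256)]
    print(f"lossless CR: {lossless_cr(load):.2f}")
    for k in (4, 16, 64):
        cr, rmse = lossy_dwt(load, k)
        print(f"lossy k={k}: CR={cr:.1f}, RMSE={rmse:.2f} W")
```

The lossless path reproduces the input bit for bit, but its CR on noisy floating-point readings is typically small. The lossy path trades a controllable RMSE for a CR that k sets directly.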

For AE, compression, sparsity or another data structure is not required; instead, the appropriate structure is learned from the data within the range of the allowed compressions using nonlinear techniques. In addition, it can be argued that it shows better performance than other linear operation-based compression methods such as PCA since AE applies a neural network (NN) to select an appropriate nonlinear activation function that performs a nonlinear operation on the data [

24]. Moreover, nonlinear transformation, multiplication, and summation are included in the process to reconstruct the data efficiently. Thus, it secures the possibility of generalization of the trained model when applied to other datasets [

25]. However, experiments confirmed that the AE compression method had an adverse effect on the reconstruction due to severe fluctuation in the data collected with high sampling. In addition, most differential coding-based compression techniques tend to be less efficient because they are sensitive to small differences in consecutive values of smart meter data. The reason is that compressing household power data of high resolution in smart meters is a real challenge due to the rapidly changing load patterns [

23,

26]. In [

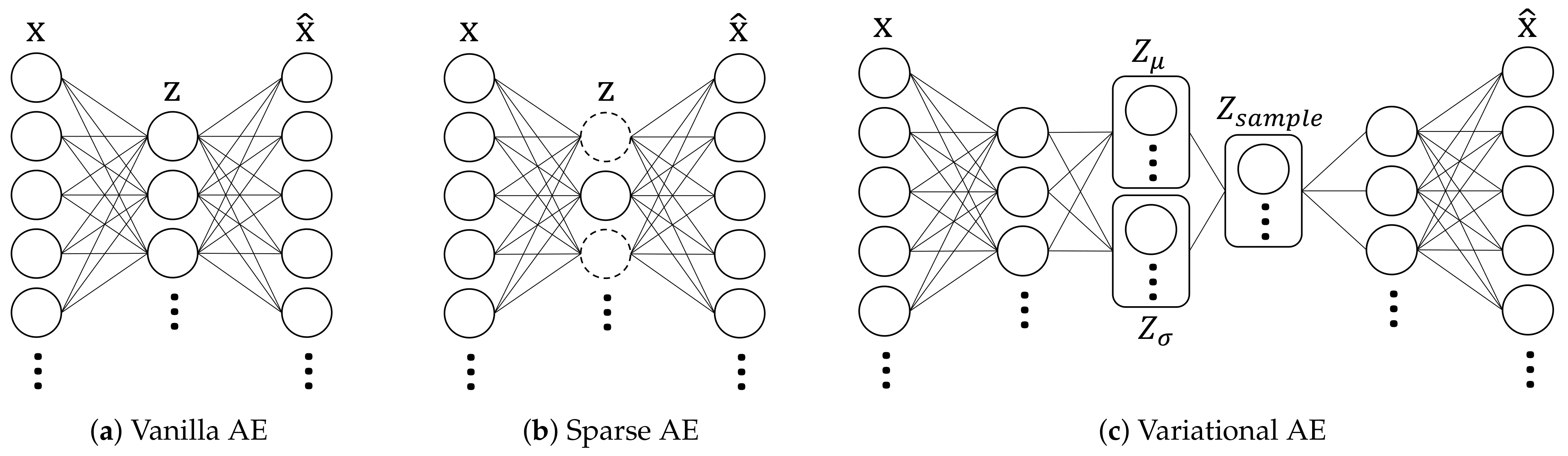

23], a method of selectively operating a customized compression model of each signal was proposed to improve efficiency. The stacked, sparse, variational, and convolutional AE models [

27], which are commonly used models were applied to the Dutch residential energy dataset (DRED) [

28], one of the public datasets of energy to investigate data-dependent characteristics. Results of the analysis show that the reconstruction performance is excellent for simple waveforms such as pulses. On the other hand, waveforms with high-frequencies such as noise signals showed high errors due to fluctuation or nonperiodicity, resulting in reduced reconstruction performance.
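A generic delta encoder shows why differential coding struggles with fluctuating data. The sketch applies delta coding, then zigzag mapping, then variable-length (varint) byte packing. This is a common textbook construction, assumed here for illustration. It is not the specific differential coder evaluated in [23] or [26], and both integer-valued test signals are synthetic.

```python
import random


def zigzag(v: int) -> int:
    """Map signed ints to unsigned so small magnitudes stay small: 0,-1,1,-2 -> 0,1,2,3."""
    return (v << 1) if v >= 0 else ((-v << 1) - 1)


def delta_encode(samples):
    """Keep the first value verbatim, then store consecutive differences."""
    return [samples[0]] + [b - a for a, b in zip(samples, samples[1:])]


def delta_decode(deltas):
    """Running sum restores the original samples exactly."""
    out = [deltas[0]]
    for d in deltas[1:]:
        out.append(out[-1] + d)
    return out


def varint_bytes(u: int) -> int:
    """Bytes for an unsigned LEB128-style varint (7 payload bits per byte)."""
    return max(1, (u.bit_length() + 6) // 7)


def delta_cost(samples):
    """Total encoded size in bytes of the zigzag-mapped deltas."""
    return sum(varint_bytes(zigzag(d)) for d in delta_encode(samples))


if __name__ == "__main__":
    rng = random.Random(0)
    smooth = [1000 + t for t in range(1000)]                       # slow ramp (W)
    noisy = [1000 + rng.randint(-500, 500) for _ in range(1000)]   # rapid fluctuation (W)
    assert delta_decode(delta_encode(noisy)) == noisy              # lossless round trip
    print("smooth:", delta_cost(smooth), "bytes")  # prints: smooth: 1001 bytes
    print("noisy:", delta_cost(noisy), "bytes")
```

For the smooth ramp, almost every delta fits in one byte. Random fluctuations of a few hundred watts push most deltas into two bytes, so the encoded size grows substantially even though the number of samples is unchanged.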

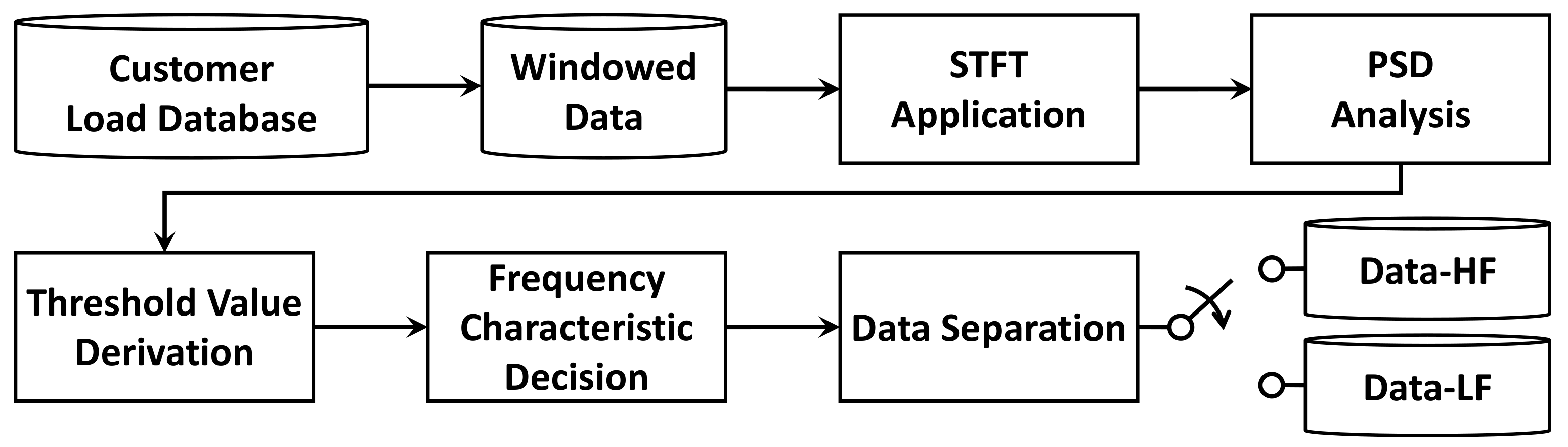

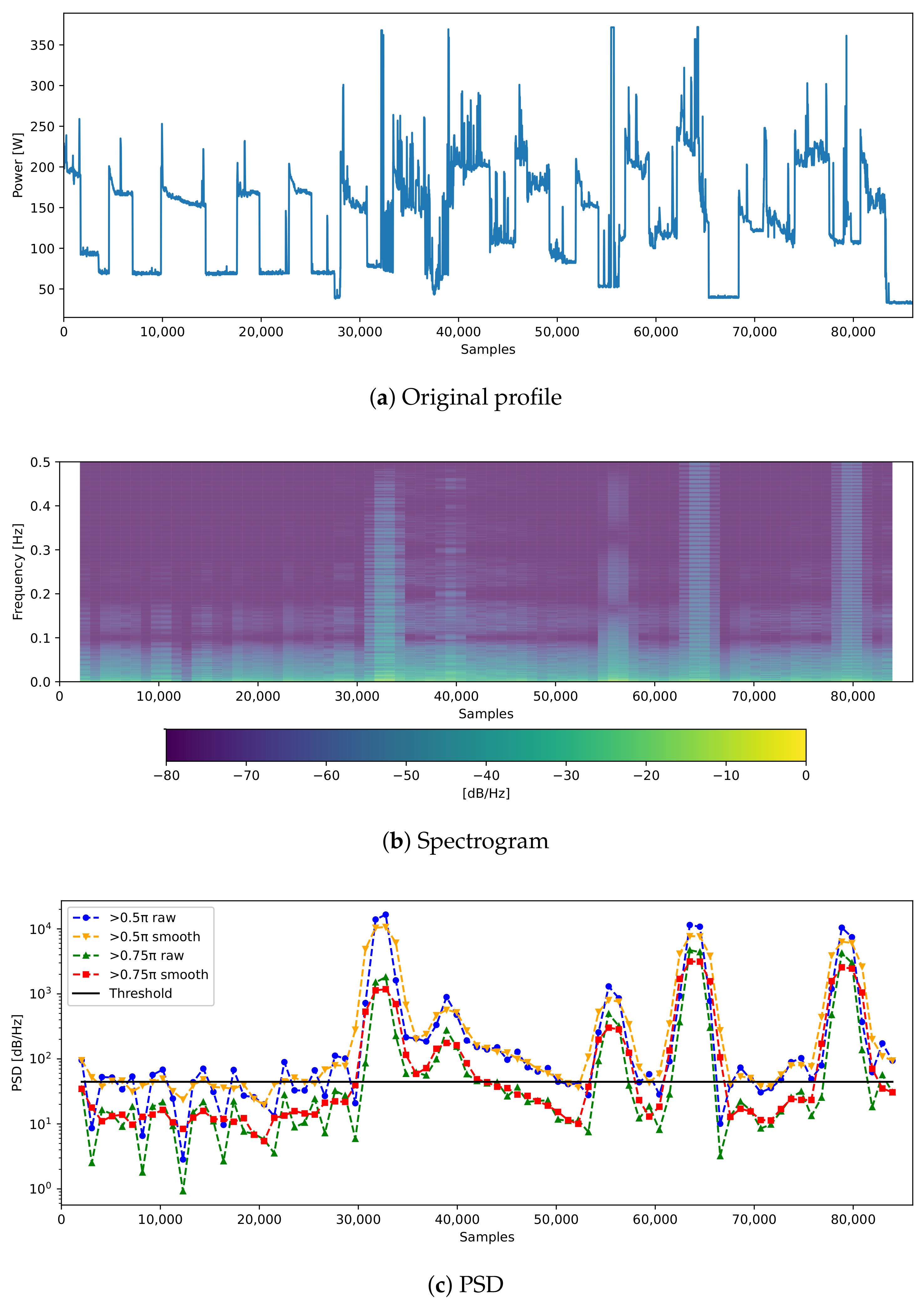

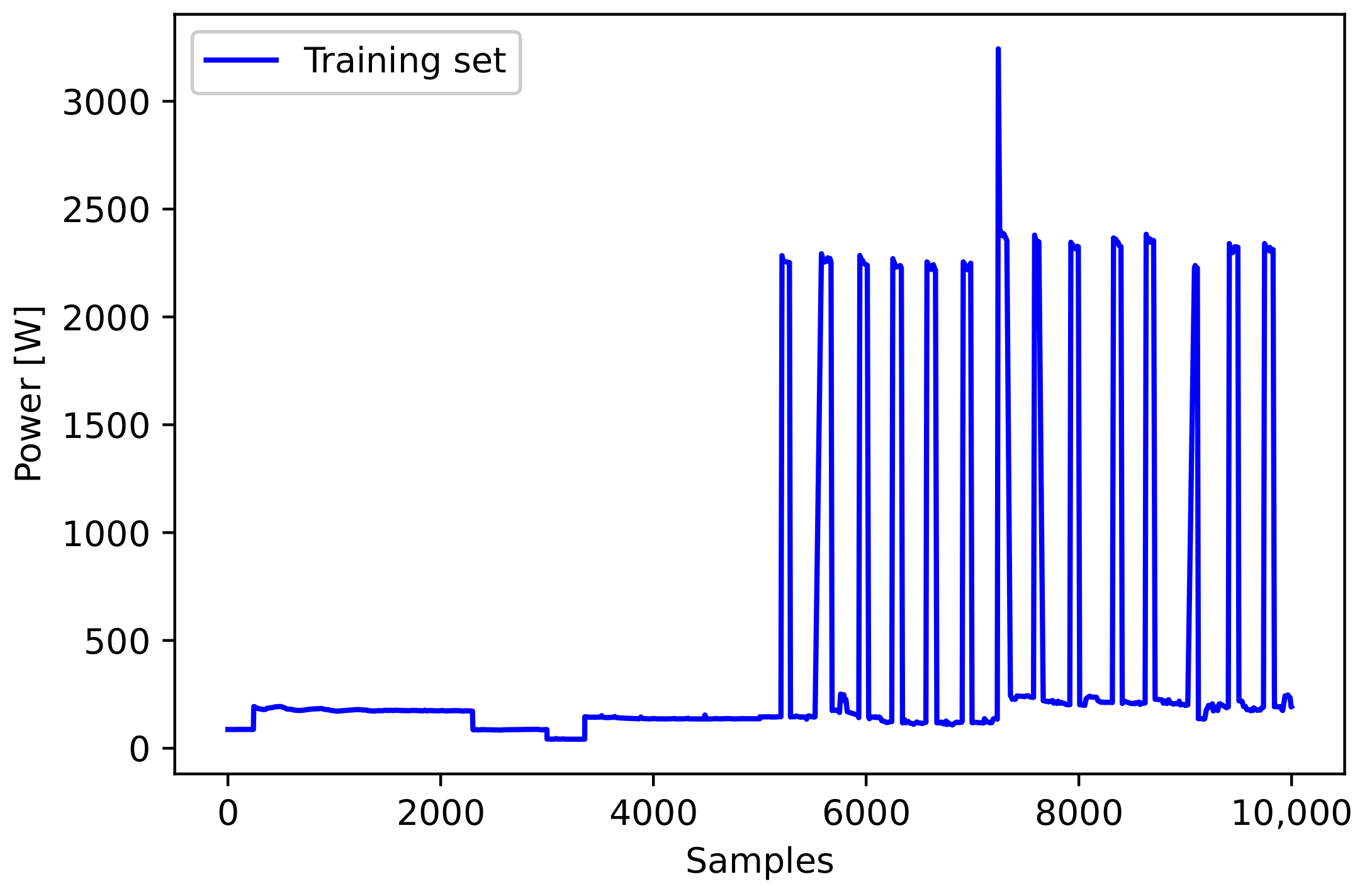

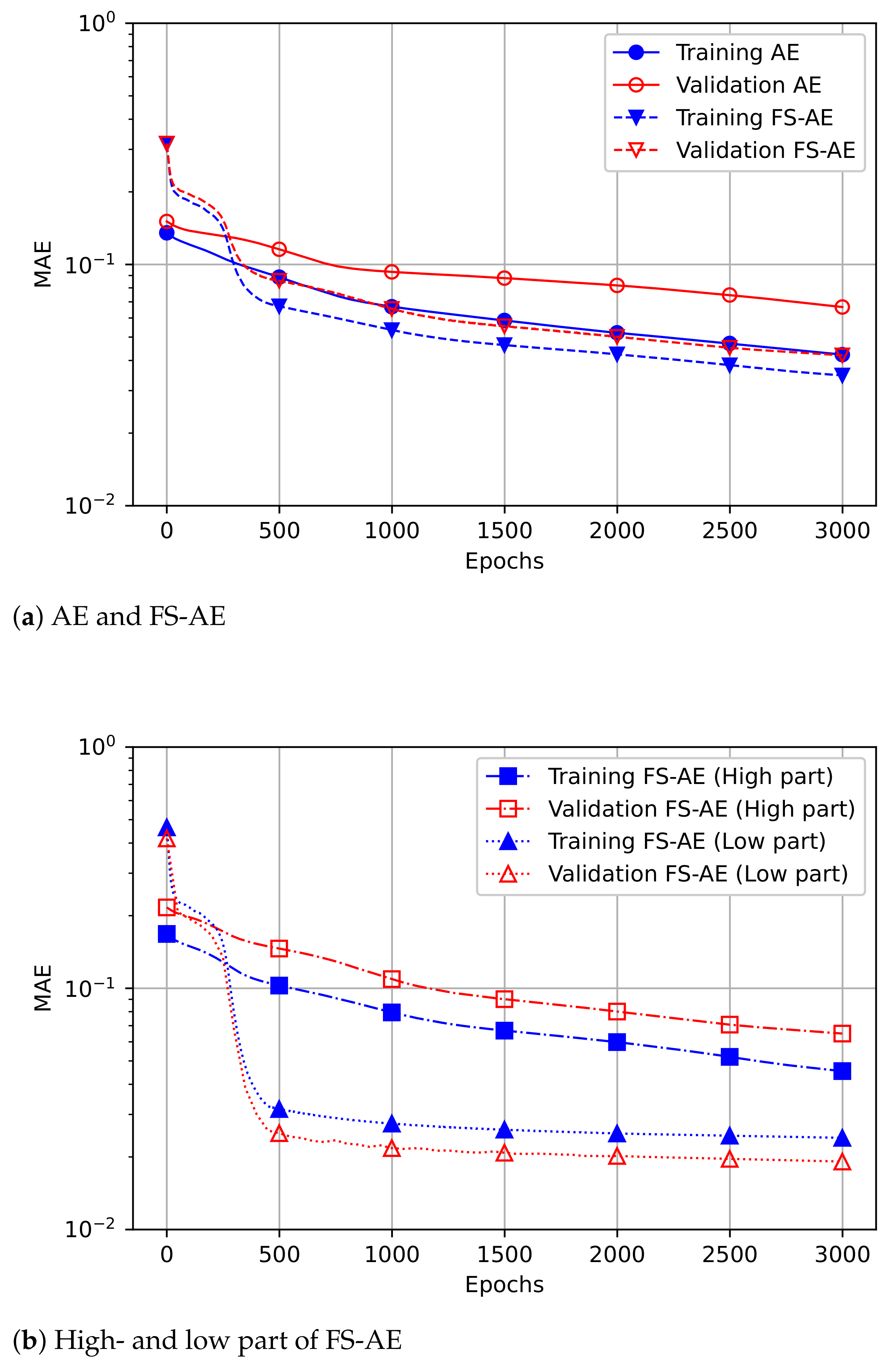

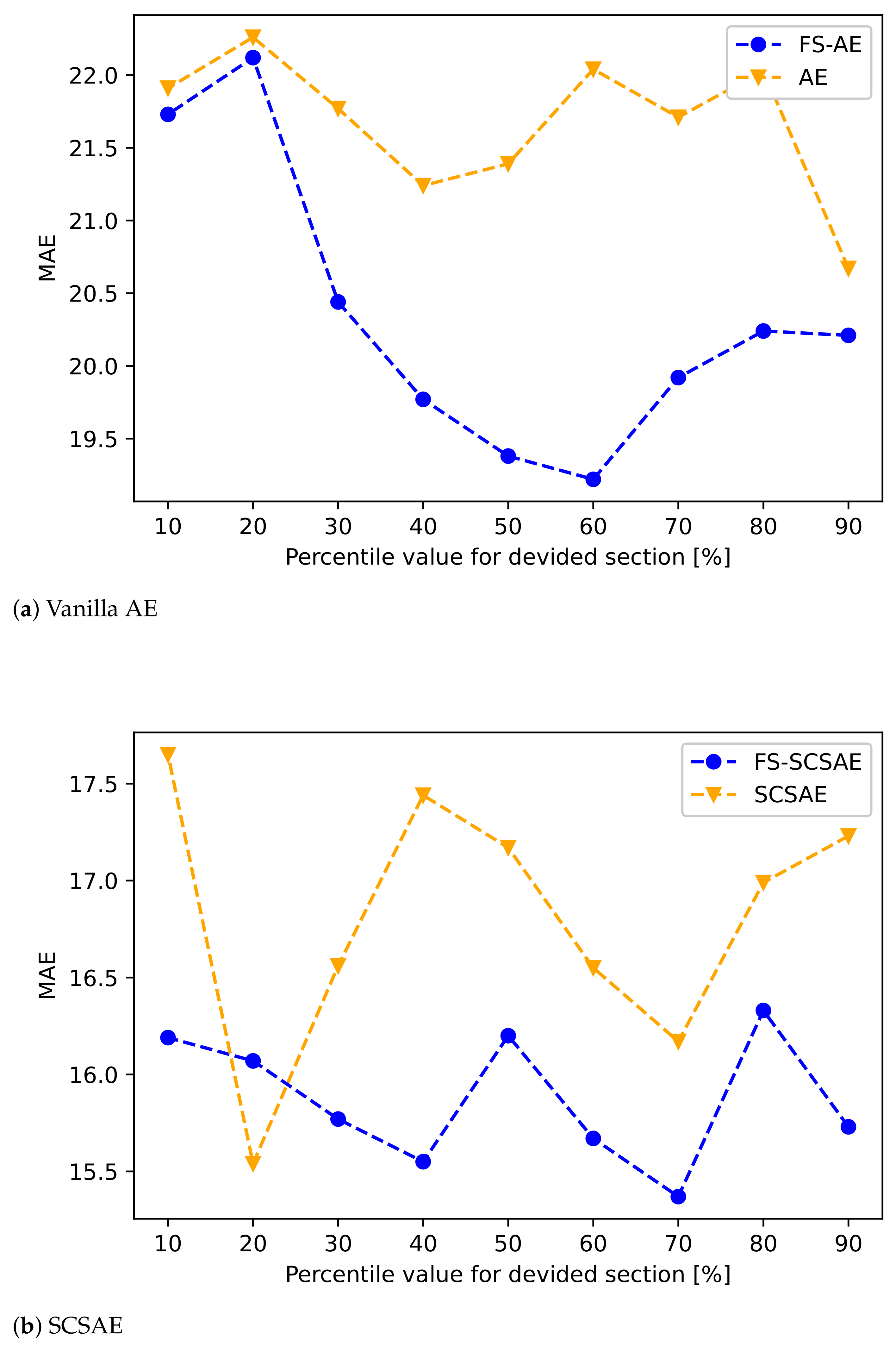

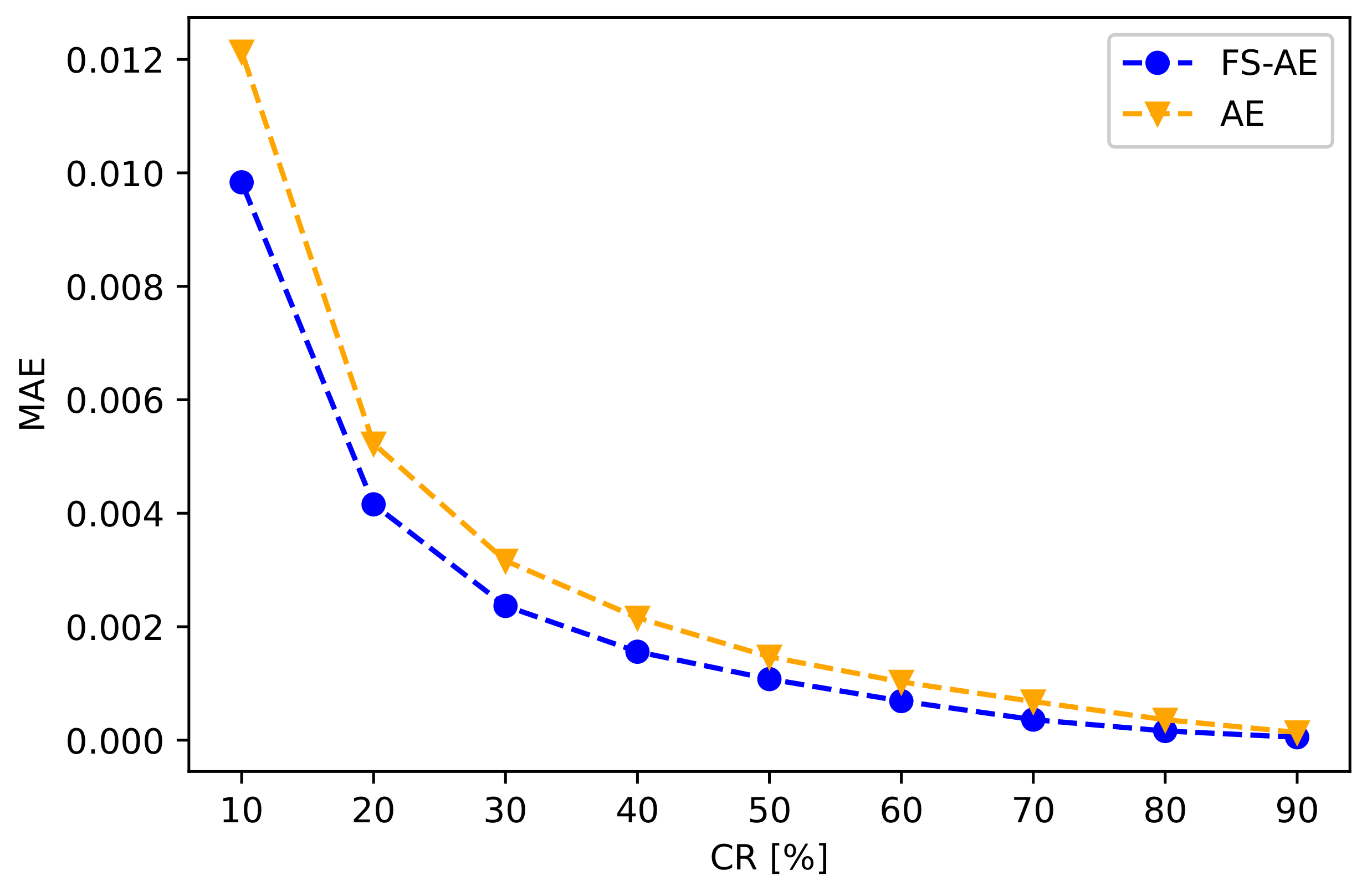

This paper proposes a compression structure that reflects the data-dependent features of smart meter data. It aims to achieve efficient compression with a lightweight model, such as an AE, while improving the low reconstruction quality caused by severe fluctuations. The frequency characteristics of smart meter data are first analyzed with a signal processing technique; the data are then separated, and a deep learning-based compression method is applied. First, to improve reconstruction performance, the proposed method separates the data in the frequency domain using a specific threshold on the power spectral density (PSD) [29]. Grouping data with similar frequency characteristics in this way is shown to benefit the training of the deep learning model. In addition, the reconstruction performance improves over the existing method at the same CR. Second, an analysis of the learning process of the NN model used in the proposed method confirms that computational complexity is reduced. The learning curves show faster convergence than those of the existing method. Various AE models are applied within the proposed method to verify its effectiveness.
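The first step can be sketched as follows: each window is routed to a frequency group by thresholding its PSD. This section does not specify the PSD estimator, the cutoff frequency, or the threshold. The following are therefore assumptions for illustration:

- the one-sided periodogram estimator;
- the hypothetical `cutoff_hz` and `threshold` parameters;
- the "fraction of power above the cutoff" routing rule;
- the 1 Hz sampling rate.

```python
import cmath
import math


def periodogram(x, fs):
    """One-sided periodogram PSD via a direct DFT (O(n^2); fine for short windows)."""
    n = len(x)
    mean = sum(x) / n
    xc = [v - mean for v in x]  # remove DC so the base load does not dominate
    freqs, psd = [], []
    for k in range(n // 2 + 1):
        X = sum(xc[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        p = abs(X) ** 2 / (fs * n)
        if 0 < k < n / 2:  # fold negative frequencies into the one-sided estimate
            p *= 2
        freqs.append(k * fs / n)
        psd.append(p)
    return freqs, psd


def high_freq_ratio(x, fs, cutoff_hz):
    """Fraction of (non-DC) power located above cutoff_hz."""
    freqs, psd = periodogram(x, fs)
    total = sum(psd)
    if total == 0:
        return 0.0
    return sum(p for f, p in zip(freqs, psd) if f > cutoff_hz) / total


def split_by_psd(windows, fs, cutoff_hz, threshold):
    """Route each window to a 'low' or 'high' frequency group (hypothetical rule)."""
    groups = {"low": [], "high": []}
    for w in windows:
        key = "high" if high_freq_ratio(w, fs, cutoff_hz) > threshold else "low"
        groups[key].append(w)
    return groups


if __name__ == "__main__":
    fs = 1.0  # hypothetical 1 Hz sampling
    pulse = [100.0] * 32 + [1500.0] * 32                             # appliance switching on
    flicker = [100.0 if t % 2 == 0 else 1500.0 for t in range(64)]   # rapid alternation
    groups = split_by_psd([pulse, flicker], fs, cutoff_hz=0.1, threshold=0.5)
    print({k: len(v) for k, v in groups.items()})  # prints: {'low': 1, 'high': 1}
```

Windows dominated by slow changes, such as appliance on/off pulses, fall into the low-frequency group. Rapidly alternating windows land in the high-frequency group. Each group could then be handled by its own compression model.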

Section 2 introduces the characteristics of smart meter data, the signal processing techniques applied in the proposed method, and various types of compression techniques. Section 3 presents the data preprocessing and the network architectures used in the proposed method. Section 4 provides the results of the feasibility test of the proposed method and the compression performance of the benchmark models, which confirm the applicability and effectiveness of the proposed method. Finally, Section 5 summarizes the conclusions.