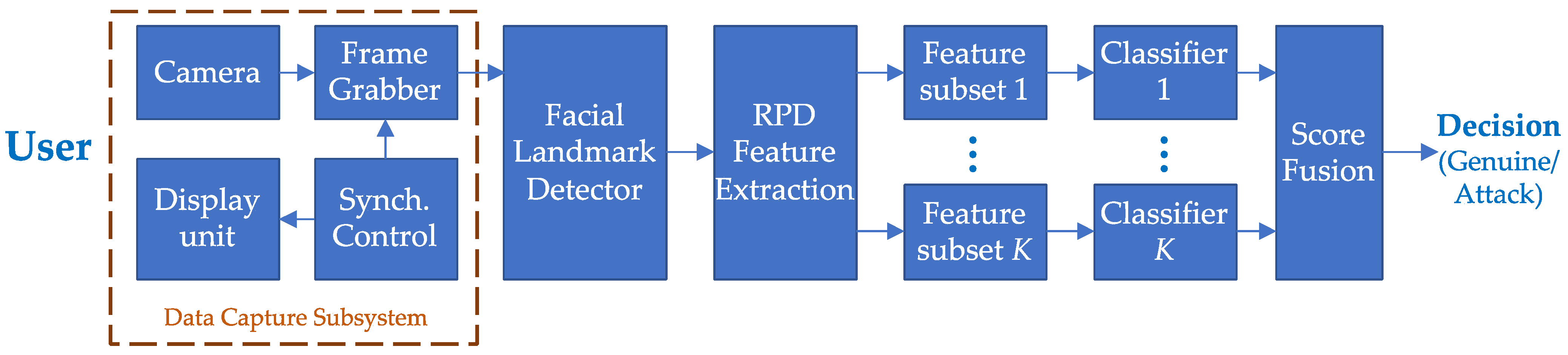

Directed Gaze Trajectories for Biometric Presentation Attack Detection

Abstract

1. Introduction

2. State of the Art

2.1. Passive Techniques

2.2. Active Techniques

3. Proposed Technique

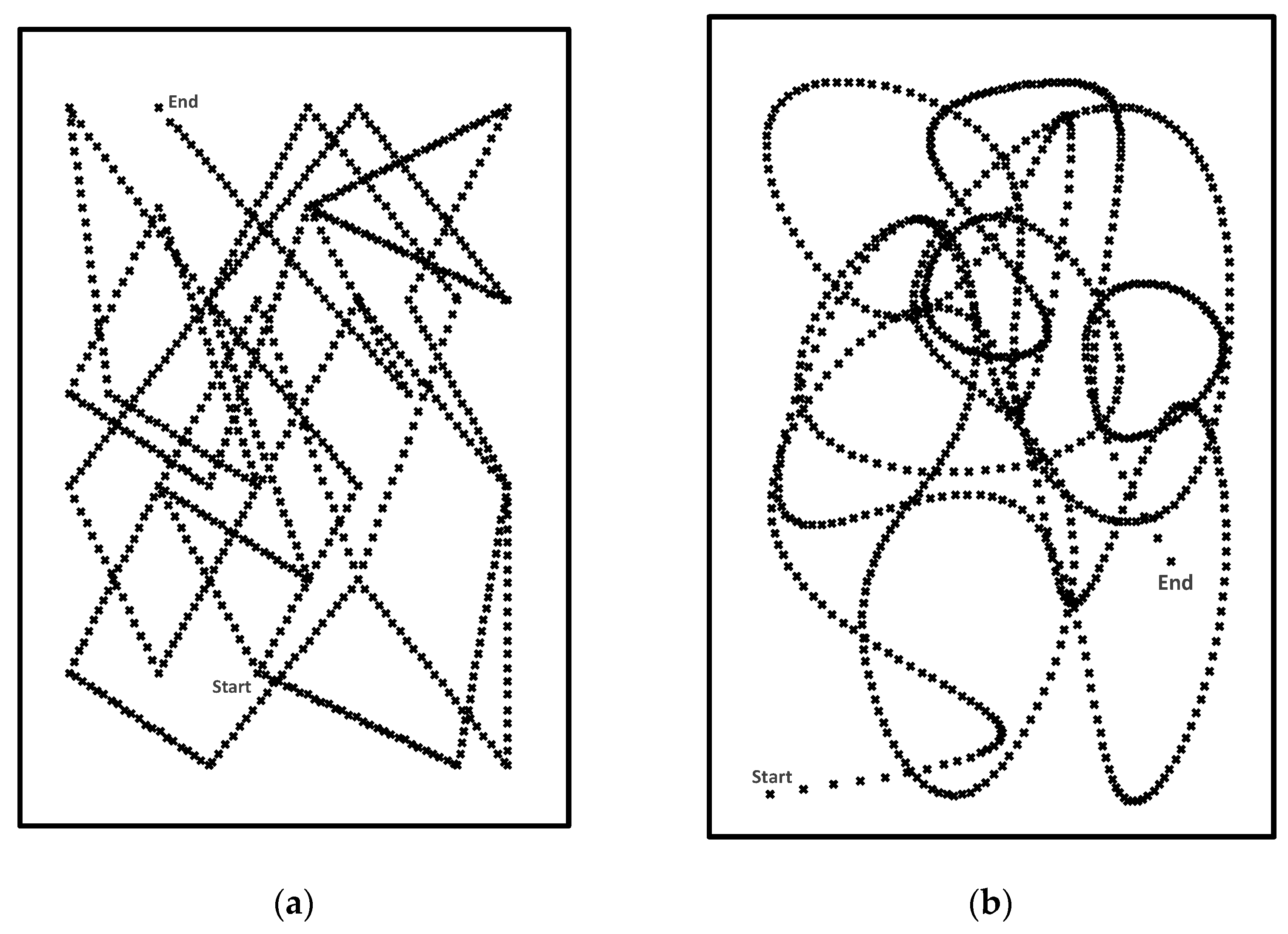

3.1. Challenge Trajectories

3.2. Facial Landmark Detection, Feature Extraction and Classification

4. Experimental Evaluation

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Format | Attack Type | TPR (%) @FPR = 0.01 | TPR (%) @FPR = 0.02 | TPR (%) @FPR = 0.03 | TPR (%) @FPR = 0.05 | TPR (%) @FPR = 0.10 |
|---|---|---|---|---|---|---|
| Tablet | Photo | 69.5 | 76.4 | 80.2 | 85.6 | 91.0 |
| Tablet | 2D Mask | 93.2 | 96.8 | 97.4 | 97.8 | 98.2 |
| Tablet | 3D Mask | 90.4 | 93.6 | 95.6 | 97.6 | 98.7 |
| Phone | Photo | 16.1 | 27.0 | 35.0 | 47.0 | 66.0 |
| Phone | 2D Mask | 98.5 | 99.0 | 99.3 | 99.6 | 99.7 |
| Phone | 3D Mask | 93.2 | 96.2 | 97.4 | 98.2 | 99.1 |
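
The results are reported as true positive rates (TPR) at fixed false positive rates (FPR). As a minimal sketch of how such an operating point can be read off from classifier scores, the following assumes that a higher score means "more likely positive" and that a sample is labelled positive when its score is strictly above the threshold. The function name `tpr_at_fpr` and the example scores are hypothetical and are not taken from the paper.

```python
import math


def tpr_at_fpr(positive_scores, negative_scores, target_fpr):
    """Return (threshold, tpr, fpr) at the most permissive threshold
    whose empirical FPR does not exceed target_fpr.

    Assumption: higher score = more likely positive, and a sample is
    labelled positive when score > threshold.
    """
    if not positive_scores or not negative_scores:
        raise ValueError("both score lists must be non-empty")
    if not 0.0 <= target_fpr <= 1.0:
        raise ValueError("target_fpr must lie in [0, 1]")

    n_neg = len(negative_scores)
    # Largest number of negatives we may misclassify; the small epsilon
    # guards against floating-point results such as 28.999999999999996.
    max_fp = int(target_fpr * n_neg + 1e-9)

    # Placing the threshold on the (max_fp + 1)-th largest negative score
    # leaves at most max_fp negatives strictly above it.
    ranked = sorted(negative_scores, reverse=True)
    threshold = ranked[max_fp] if max_fp < n_neg else -math.inf

    false_pos = sum(s > threshold for s in negative_scores)
    true_pos = sum(s > threshold for s in positive_scores)
    return threshold, true_pos / len(positive_scores), false_pos / n_neg


# Hypothetical scores, for illustration only.
example_negatives = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9, 0.95]
example_positives = [0.85, 0.92, 0.97, 0.5, 0.99]
threshold, tpr, fpr = tpr_at_fpr(example_positives, example_negatives, 0.10)
```

Tied scores at the threshold are treated conservatively here: they are never counted as positive, so the achieved FPR can fall below the target rather than exceed it.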

| Format | Attack Type | TPR (in %) | | |
|---|---|---|---|---|
| | | 3 | 5 | 10 |
| Tablet | Photo | 88.3 | 91.0 | 88.4 |
| Tablet | 2D Mask | 98.4 | 98.2 | 98.4 |
| Tablet | 3D Mask | 97.9 | 98.7 | 97.9 |
| Phone | Photo | 58.0 | 66.0 | 76.0 |
| Phone | 2D Mask | 99.3 | 99.7 | 99.8 |
| Phone | 3D Mask | 97.1 | 99.1 | 99.1 |

| Format | Attack Type | TPR (%) @FPR = 0.01 | TPR (%) @FPR = 0.02 | TPR (%) @FPR = 0.03 | TPR (%) @FPR = 0.05 | TPR (%) @FPR = 0.10 |
|---|---|---|---|---|---|---|
| Tablet | Photo | 24.0 | 33.9 | 41.5 | 51.5 | 68.0 |
| Tablet | 2D Mask | 80.0 | 87.8 | 90.5 | 93.6 | 97.6 |
| Tablet | 3D Mask | 53.0 | 64.8 | 72.1 | 81.0 | 90.0 |
| Phone | Photo | 42.1 | 55.8 | 62.5 | 71.0 | 83.0 |
| Phone | 2D Mask | 94.0 | 97.0 | 98.0 | 98.9 | 99.5 |
| Phone | 3D Mask | 72.5 | 82.0 | 87.0 | 91.9 | 96.2 |

| Format | Attack Type | TPR (in %) | | |
|---|---|---|---|---|
| | | 3 | 5 | 10 |
| Tablet | Photo | 69.5 | 68.0 | 74.4 |
| Tablet | 2D Mask | 96.8 | 97.6 | 99.6 |
| Tablet | 3D Mask | 87.2 | 90.0 | 95.2 |
| Phone | Photo | 59.4 | 83.0 | 86.0 |
| Phone | 2D Mask | 96.2 | 99.5 | 99.4 |
| Phone | 3D Mask | 91.3 | 96.2 | 98.9 |

| Format | Attack Type | TPR (%) @FPR = 0.01 | TPR (%) @FPR = 0.02 | TPR (%) @FPR = 0.03 | TPR (%) @FPR = 0.05 | TPR (%) @FPR = 0.10 |
|---|---|---|---|---|---|---|
| Tablet | Photo | 68.2 | 77.1 | 82.1 | 88.0 | 94.0 |
| Tablet | 2D Mask | 96.7 | 98.2 | 98.5 | 98.8 | 99.0 |
| Tablet | 3D Mask | 95.5 | 97.2 | 98.1 | 98.7 | 99.4 |
| Phone | Photo | 34.0 | 47.0 | 55.0 | 64.8 | 78.0 |
| Phone | 2D Mask | 99.3 | 99.6 | 99.7 | 99.8 | 99.9 |
| Phone | 3D Mask | 94.4 | 96.7 | 97.7 | 98.7 | 99.4 |

| Format | TPR (%) @FPR = 0.01 | TPR (%) @FPR = 0.02 | TPR (%) @FPR = 0.03 | TPR (%) @FPR = 0.05 | TPR (%) @FPR = 0.10 |
|---|---|---|---|---|---|
| Tablet | 73.5 | 83.0 | 87.8 | 91.5 | 96.0 |
| Phone | 64.1 | 78.0 | 84.0 | 90.5 | 95.7 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Ali, A.; Hoque, S.; Deravi, F. Directed Gaze Trajectories for Biometric Presentation Attack Detection. Sensors 2021, 21, 1394. https://doi.org/10.3390/s21041394
