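Our main goal in this section is to study the impact of fatigue on the collected data, the number of significant features, and the models’ performance. In addition, we evaluate the impact of fatigue on subject-specific and cross-subject models. Specifically, we address the following research questions:

- RQ1: How does fatigue impact the collected data?
- RQ2: What impact can fatigue impose on the extracted features?
- RQ3: What is the impact of fatigue on subject-specific biceps repetitions models?
- RQ4: What is the impact of fatigue on cross-subject biceps repetitions models?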

Next, we detail the motivation, approach, and the findings for each research question.

4.1. RQ1: How Does Fatigue Impact the Collected Data?

Motivation: We believe that fatigue impacts the collected data by changing its patterns, which has a snowball effect on the extracted features and the HAR models’ performance. Hence, we first want to capture the data pattern changes that might occur during the data collection process.

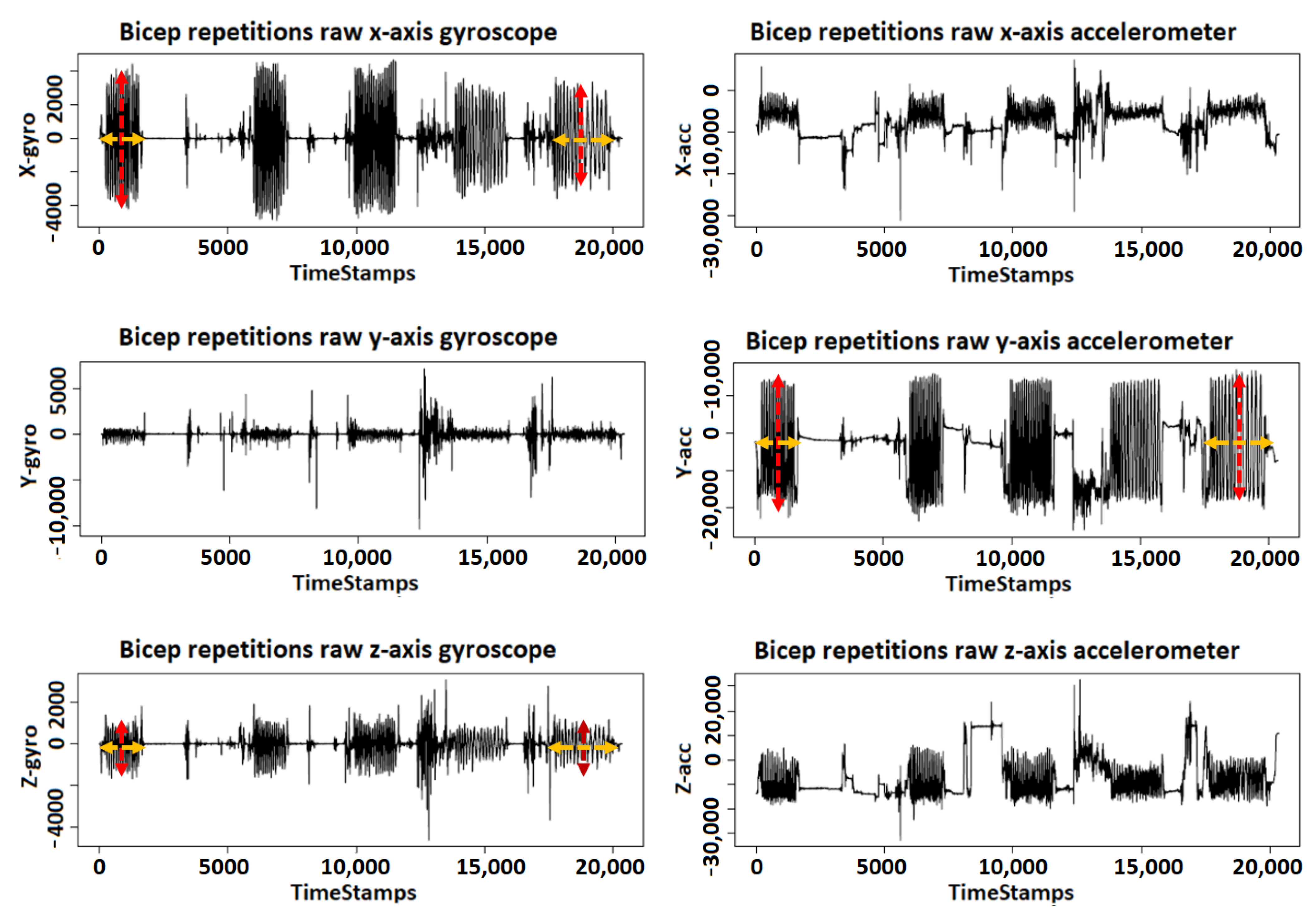

Approach: To address this research question, we look into the data provided by the IMU that contains the 3-axis gyroscope and accelerometer. We excluded the magnetometer for simplicity as it did not show any significant changes in the magnetic field regarding direction or strength during the exercise. We started with a visualization of the impact of fatigue on collected data to evaluate the data pattern changes.

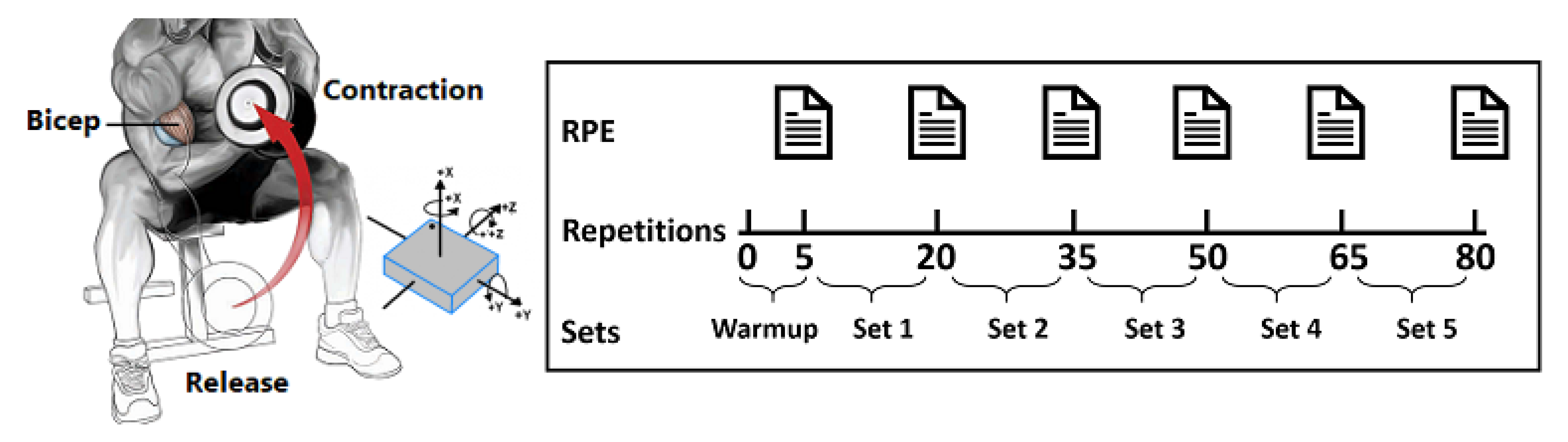

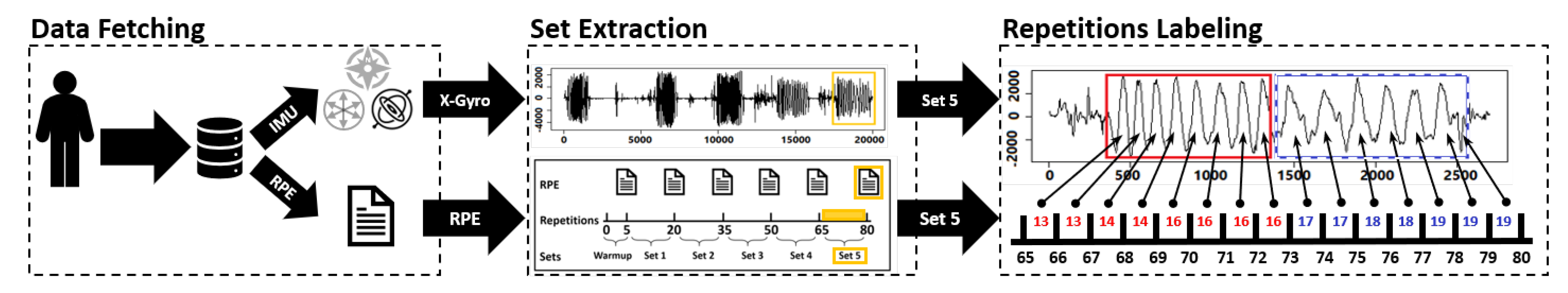

Figure 6 shows an example of the five sets of biceps repetitions using the gyroscope and the accelerometer signals. The X-axis represents the vertical displacement, which is the distance between the highest and lowest positions of the volunteer’s hand during bicep extension and flexion. The Y-axis represents the horizontal displacement, which is the sideways vibration of the volunteer’s hand during bicep extension and flexion. The Z-axis represents the depth displacement, which is the distance between the farthest and nearest positions of the volunteer’s hand from their body during bicep extension and flexion. We select the X-axis from the gyroscope and the Y-axis from the accelerometer because they provide the best visualization of the angular velocity and the sideways vibration of the volunteer’s hand, respectively. Similarly, we showcase the Z-axis from the gyroscope as it provides the best visualization of the farthest and nearest positions of the volunteer’s hand. We use the first set of bicep repetitions as a reference set against which to measure the data pattern changes. The rationale is that the first set usually contains the fewest fatigue repetitions, so the changes in the data patterns become distinguishable as fatigue accumulates in later sets. The first set also always contains 15 biceps repetitions for all 20 volunteers.

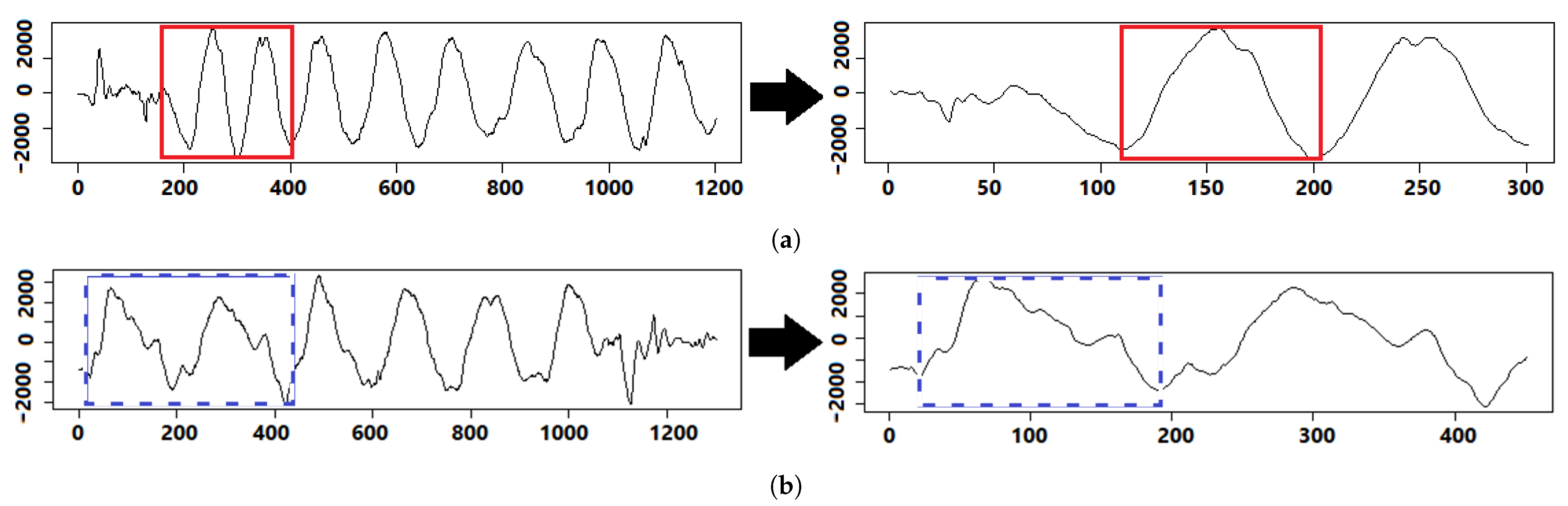

We look for data pattern changes along two axes of the plots: the horizontal axis, which indicates changes in completion time, and the vertical axis, which indicates changes in angular velocity and thus in muscular endurance [36]. To analyze the changes along the horizontal axis, we measure the time each volunteer requires to complete the first set of biceps repetitions, i.e., the time interval from the 1st repetition until the end of the 15th repetition. We repeat the same approach to measure the completion time of each remaining set separately. Then, we calculate the difference in completion time between each set and the first set. To analyze the changes along the vertical axis, we measure the absolute magnitude of each repetition in the first set to calculate the muscular endurance [36] during that set. We repeat the same approach to measure the muscular endurance of each remaining set separately. Then, we calculate the drop in muscular endurance between each set and the first set.
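The two per-set comparisons described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function names, the sample values, and the use of the mean absolute peak per repetition as a stand-in for the muscular-endurance measure of [36] are all assumptions.

```python
from statistics import mean

def percent_change(value, reference):
    """Relative change of `value` with respect to `reference`, in percent."""
    return (value - reference) / reference * 100.0

def completion_time(rep_starts, rep_ends):
    """Time from the start of the first repetition to the end of the last one."""
    return rep_ends[-1] - rep_starts[0]

def set_endurance(rep_peaks):
    """Mean absolute peak magnitude over one set (assumed stand-in for [36])."""
    return mean(abs(p) for p in rep_peaks)

# Hypothetical values for one volunteer (seconds and deg/s), for illustration only.
set1 = {"starts": [0.0, 2.0, 4.0], "ends": [2.0, 4.0, 6.0],
        "peaks": [200.0, -198.0, 202.0]}
set5 = {"starts": [0.0, 2.5, 5.0], "ends": [2.5, 5.0, 7.5],
        "peaks": [190.0, -188.0, 192.0]}

# Both measures are expressed relative to the first (reference) set.
time_increase = percent_change(
    completion_time(set5["starts"], set5["ends"]),
    completion_time(set1["starts"], set1["ends"]),
)
endurance_change = percent_change(
    set_endurance(set5["peaks"]), set_endurance(set1["peaks"])
)
```

A positive `time_increase` means the later set took longer than the reference set, and a negative `endurance_change` means a drop in the endurance measure.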

Result:

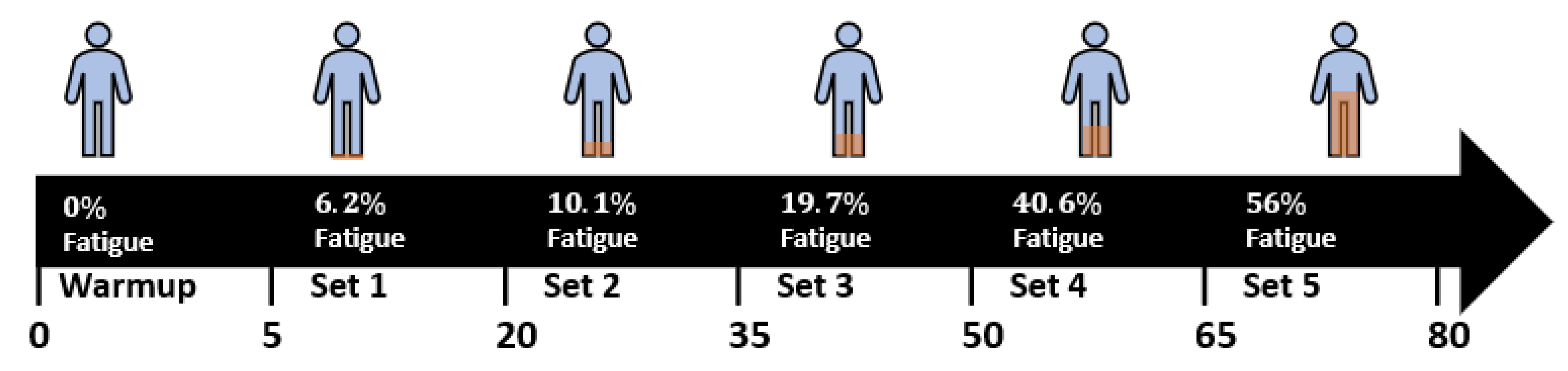

Table 3 shows the increase in completion time for each set relative to the first set. In the 2nd set, the average increase in completion time is 1.7%, a comparatively small change from the 1st set. The reason is that the 2nd set is usually the introductory stage of fatigue, where fatigue first occurs in the last 1 or 2 repetitions. In the 3rd set, the average increase in completion time rises to 8.1%. In the 4th set, volunteers take on average 14.3% more time to finish their exercises than they took in the 1st set. The 4th set contains almost twice as many fatigue repetitions as the 3rd set, resulting in a substantial increase in completion time, from 8.1% to 14.3%. In the 5th set, the average increase in completion time is 31.0%, more than twice the increase observed in the 4th set. The reason is that the 5th set contains at least eight fatigue repetitions, which indicates that fatigue impacts later sets to a much larger extent, slowing down bicep movements and increasing the completion time of the set. As a result, fixed-size, non-overlapping windows are no longer suitable for capturing full repetitions because their fit is too narrow, especially in the 4th and 5th sets.
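To illustrate why a fixed window stops fitting, the sketch below applies the average increases quoted above to a hypothetical repetition duration. The 2-second base duration, the 10% tolerance margin, and the assumption that a set's slowdown is spread evenly over its repetitions are ours and are not taken from the study.

```python
# Average increase in set completion time relative to the 1st set,
# as quoted in the text for Table 3.
TIME_INCREASE_PCT = {1: 0.0, 2: 1.7, 3: 8.1, 4: 14.3, 5: 31.0}

def sets_exceeding_window(base_rep_seconds, margin_pct,
                          increase_pct=TIME_INCREASE_PCT):
    """Return the sets whose average repetition no longer fits a fixed window.

    Assumes the window is sized to the 1st-set repetition duration plus a
    tolerance margin, and that each set's slowdown is spread evenly over
    its repetitions (both are illustrative assumptions).
    """
    window = base_rep_seconds * (1 + margin_pct / 100.0)
    too_long = []
    for set_no, pct in sorted(increase_pct.items()):
        rep_seconds = base_rep_seconds * (1 + pct / 100.0)
        if rep_seconds > window:
            too_long.append(set_no)
    return too_long

# Hypothetical 2-second repetition and a window with a 10% tolerance margin.
overflow = sets_exceeding_window(base_rep_seconds=2.0, margin_pct=10.0)
```

Under these assumptions the average repetition of the 4th and 5th sets overruns the window, which matches the sets singled out above.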

In Table 4, we present the changes in muscular endurance for each of the five sets as fatigue accumulates during repetitions in the later sets. Muscular endurance can be measured using the angular velocity from the gyroscope [36] rather than the accelerometer. Accordingly, we observe that fatigue changes the muscular endurance measured on the X- and Z-axes of the gyroscope by an average of −2.4% and −3.9%, respectively. However, we do not observe a substantial decrease in muscular endurance on the Y-axis of the accelerometer, which shows a small average change of only 0.4%. Overall, the average muscular endurance decreases in the later sets as fatigue accumulates in the repetitions. For example, the 2nd and 3rd sets maintain a muscular endurance similar to that of the 1st set. However, the muscular endurance decreases by an average of 5.5% in the 4th set and 4.1% in the 5th set. This could negatively impact data filtering, especially peak filtering, because such a filter may exclude a complete bicep repetition that does not meet the peak threshold, especially in the 4th and 5th sets.
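The peak-filtering risk can be made concrete with a small sketch. The peak values and the rule for choosing the threshold are hypothetical; the study does not specify a particular peak filter.

```python
def peak_filter(rep_peaks, threshold):
    """Keep only repetitions whose absolute peak reaches the threshold."""
    return [p for p in rep_peaks if abs(p) >= threshold]

# Hypothetical peak angular velocities (deg/s).
first_set_peaks = [200.0, 201.0, 199.0]
late_set_peaks = [198.0, 189.0, 187.0]  # the last two are weakened by fatigue

# Assumed rule: allow 3% tolerance below the weakest peak of the 1st set.
threshold = 0.97 * min(first_set_peaks)

kept_first = peak_filter(first_set_peaks, threshold)
kept_late = peak_filter(late_set_peaks, threshold)
```

With a threshold tuned on the first set, every first-set repetition passes, while the two weakened repetitions of the later set are discarded even though they are complete repetitions.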

4.2. RQ2: What Impact Can Fatigue Impose on the Extracted Features?

Motivation: We believe that if fatigue affects the collected data, it may also affect the features extracted from that data. In other words, some features may appear to be significant in detecting biceps repetitions without fatigue, but become less significant in later sets, where fatigue often occurs. A factor such as fatigue can deform the patterns of these features and reduce their correlation with biceps repetitions; hence, some extracted features may be more sensitive to fatigue than others.

Approach: To address this research question, we analyze the effects of fatigue on the extracted features. To that end, we extract three main feature types from our complete dataset and contrast them with the same feature types extracted from the non-fatigue subset. Our goal is to investigate whether fatigue influences the number of significant features of a HAR model. The three feature types are mean, MAD, and SD. Both our complete dataset and the non-fatigue subset contain 12 data signals: 9 are collected from the three axes of the gyroscope, accelerometer, and magnetometer, and 3 represent the rotations about the X-, Y-, and Z-axes, which are roll, pitch, and yaw. In total, we have 36 extracted features (3 feature types × 12 signals) in both the complete dataset and the non-fatigue subset. We use Spearman’s rank correlation coefficient with a significance level of 0.1 to show how these extracted features correlate with bicep repetitions [37].
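The feature extraction and the significance check can be sketched as below, using only the Python standard library. Several choices are assumptions rather than the authors' method: MAD is read as the mean absolute deviation around the mean, SD is the population standard deviation, and a permutation test stands in for the analytic p-value of Spearman's coefficient. All names and sample values are hypothetical.

```python
import random
from statistics import mean, pstdev

ALPHA = 0.1  # significance level used in the text

def mean_abs_dev(xs):
    """Mean absolute deviation around the mean (assumed reading of MAD)."""
    m = mean(xs)
    return mean(abs(x - m) for x in xs)

def window_features(window):
    """Map {signal_name: samples} to mean, MAD, and SD features per signal."""
    feats = {}
    for name, xs in window.items():
        feats[f"{name}_mean"] = mean(xs)
        feats[f"{name}_mad"] = mean_abs_dev(xs)
        feats[f"{name}_sd"] = pstdev(xs)  # population SD (assumption)
    return feats

def ranks(xs):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(xs)), key=lambda i: xs[i])
    r = [0.0] * len(xs)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and xs[order[j + 1]] == xs[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            r[order[k]] = avg
        i = j + 1
    return r

def pearson(a, b):
    """Pearson correlation; returns 0.0 if either input is constant."""
    ma, mb = mean(a), mean(b)
    num = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    den = (sum((x - ma) ** 2 for x in a) * sum((y - mb) ** 2 for y in b)) ** 0.5
    return num / den if den else 0.0

def spearman(a, b):
    """Spearman's rho: Pearson correlation of the rank-transformed inputs."""
    return pearson(ranks(a), ranks(b))

def spearman_permutation_p(a, b, n_perm=2000, seed=0):
    """Two-sided permutation p-value (stand-in for the analytic p-value)."""
    rng = random.Random(seed)
    observed = abs(spearman(a, b))
    shuffled = list(b)
    hits = 0
    for _ in range(n_perm):
        rng.shuffle(shuffled)
        if abs(spearman(a, shuffled)) >= observed:
            hits += 1
    return (hits + 1) / (n_perm + 1)

# Hypothetical values of one feature across 8 windows and their labels
# (1 = repetition, 0 = non-repetition).
feature = [0.9, 1.1, 1.0, 1.2, 0.2, 0.1, 0.3, 0.15]
labels = [1, 1, 1, 1, 0, 0, 0, 0]
rho = spearman(feature, labels)
significant = spearman_permutation_p(feature, labels) < ALPHA
```

Applying `window_features` to a window holding all 12 signals yields the 36 features mentioned above; repeating the correlation test per feature, once on the non-fatigue subset and once on the complete dataset, would give the two sets of significant features that are compared.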

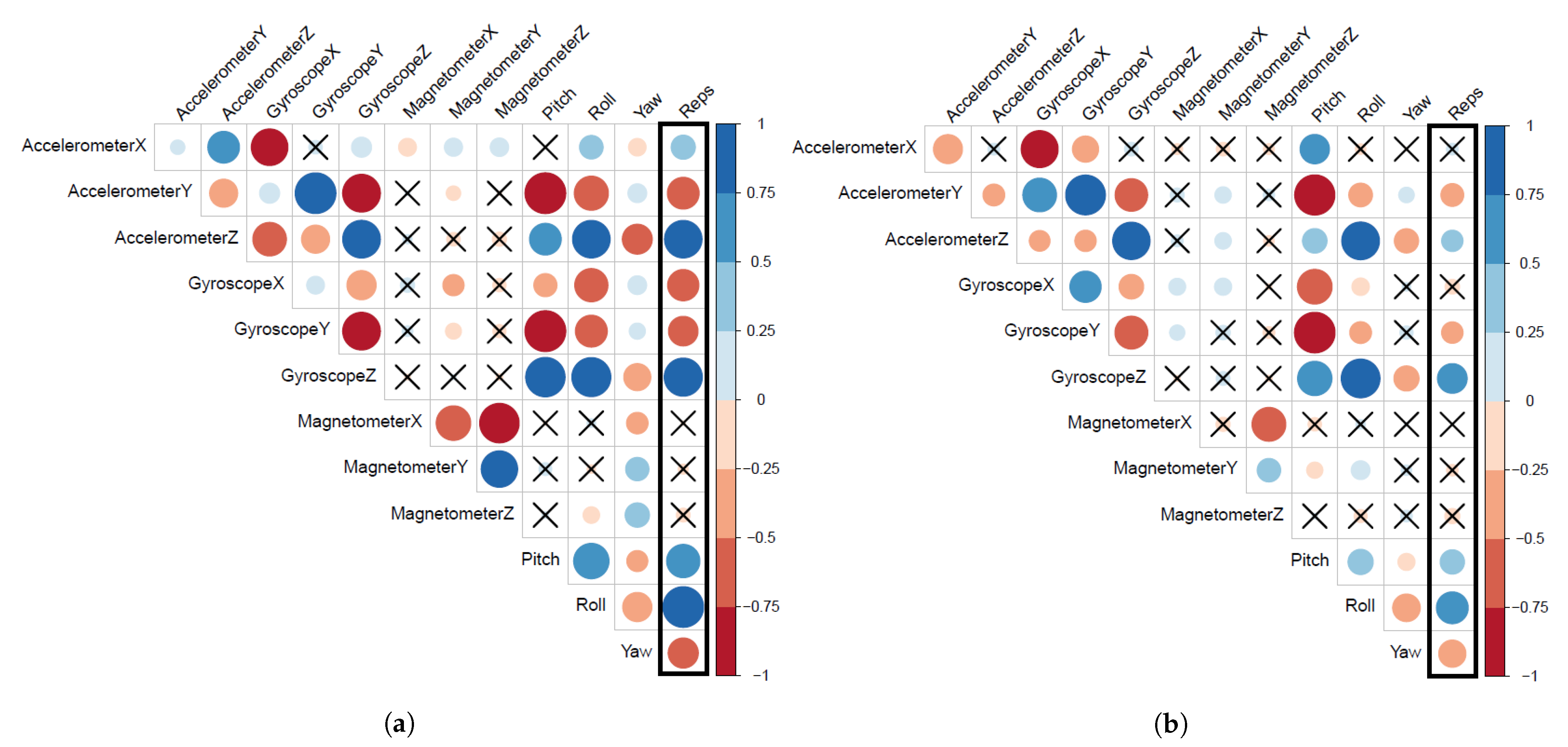

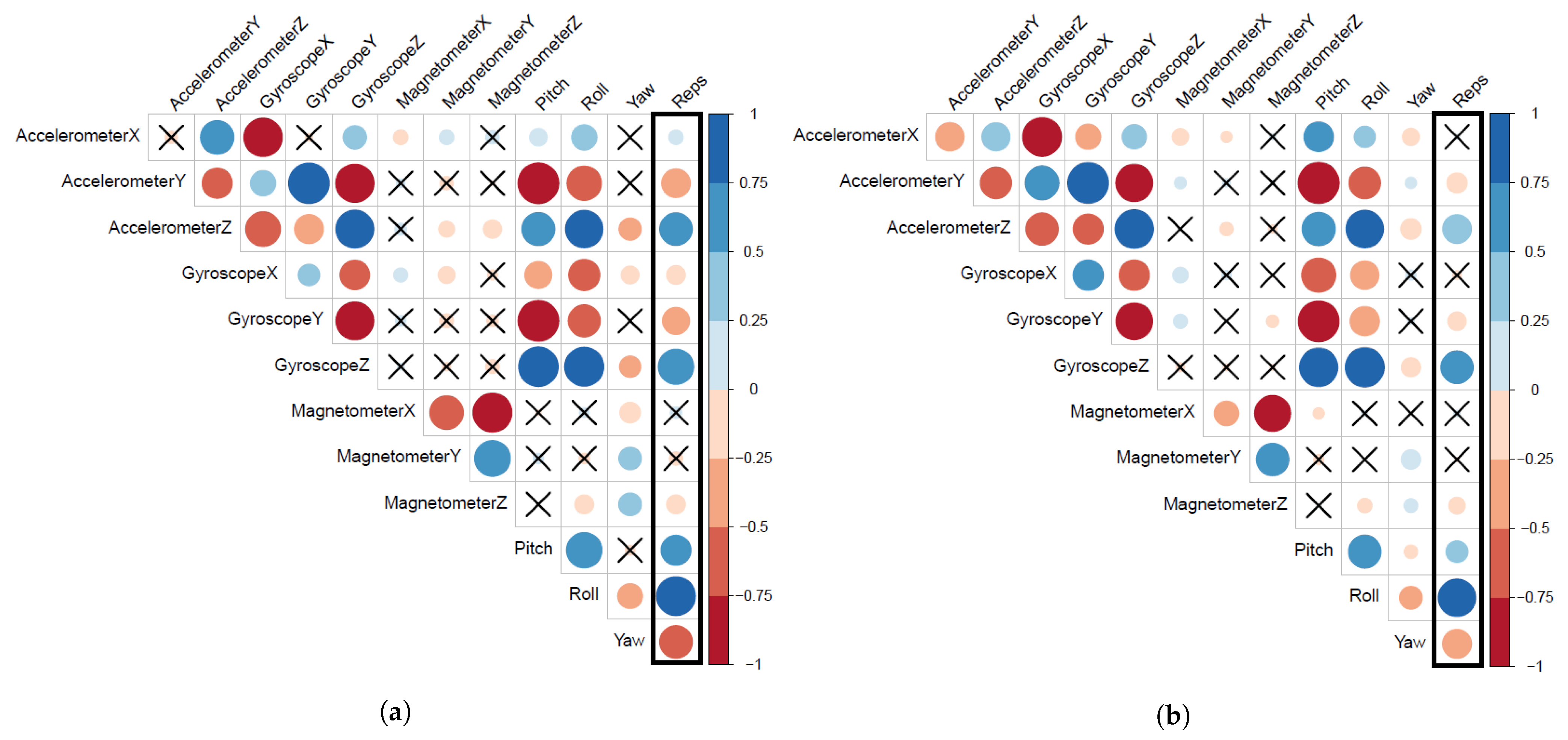

Figure 7 presents the correlation matrix for the 12 mean features and the bicep repetitions. Positive correlations are displayed in blue and negative correlations in red. The color intensity and the size of each circle are proportional to the correlation coefficient, whereas insignificant correlations are marked with ×.

Figure 7a shows that bicep repetitions have significant correlations with 9 out of 12 mean features extracted from the non-fatigue subset. These 9 significant features are (X,Y,Z)-accelerometer, (X,Y,Z)-gyroscope, pitch, roll, and yaw. On the other hand, Figure 7b shows that bicep repetitions have significant correlations with 7 out of 12 mean features extracted from our complete dataset, where fatigue exists during the exercise. These 7 significant features are (Y,Z)-accelerometer, (Y,Z)-gyroscope, pitch, roll, and yaw. We can observe two impacts of fatigue on the extracted features. First, the correlations of some mean features with bicep repetitions became insignificant, such as X-accelerometer and X-gyroscope. Second, the correlation coefficient values dropped overall for all mean features, as shown by the fainter color intensity and the smaller circle sizes.

If fatigue is indeed the cause of these impacts, we expect similar observations for the MAD and SD features, which would strengthen the evidence.

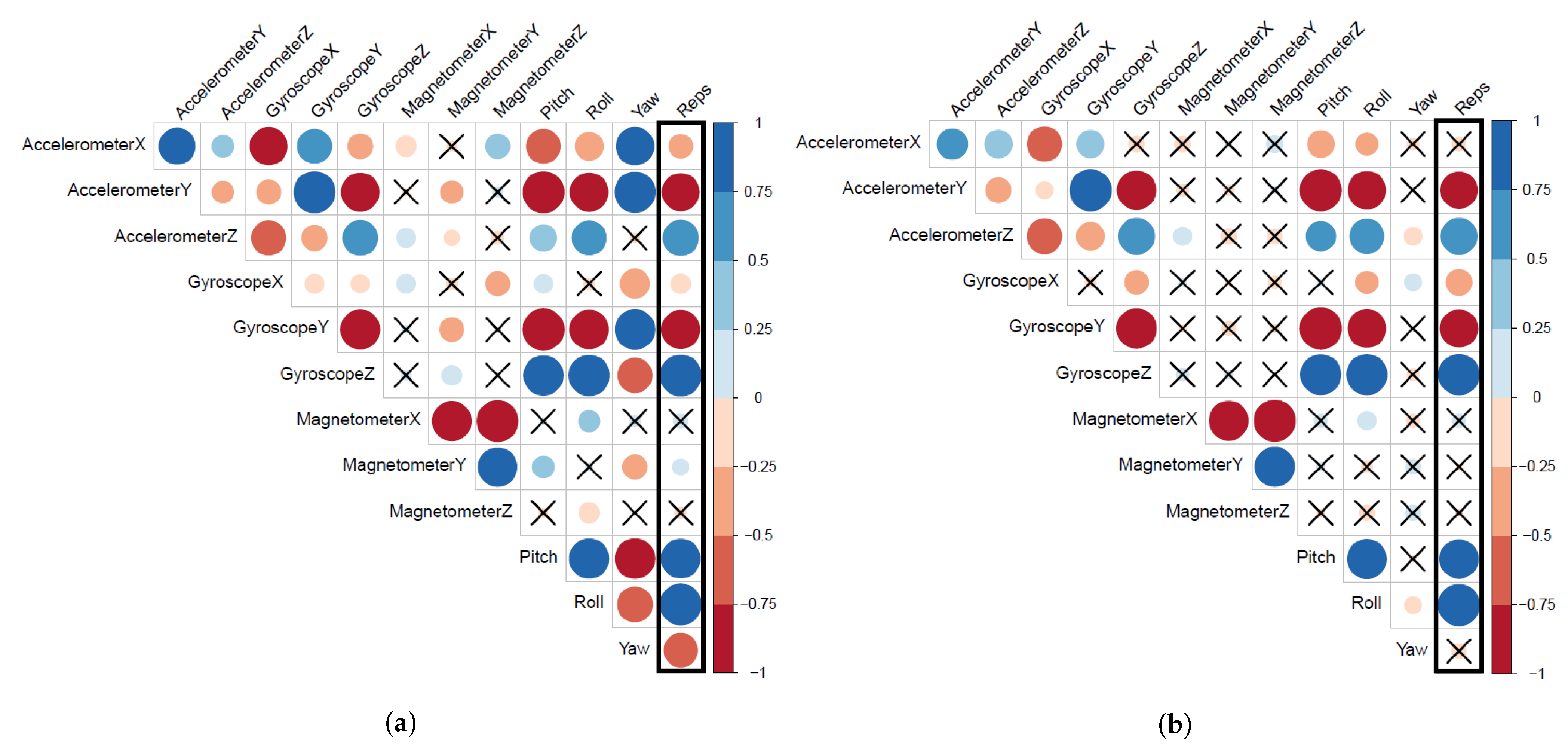

Figure 8 presents the correlation matrix for the 12 MAD features and the bicep repetitions in our complete dataset and the non-fatigue subset. Figure 8a shows that bicep repetitions have significant correlations with 10 out of 12 MAD features extracted from the non-fatigue subset. On the other hand, Figure 8b shows that bicep repetitions have significant correlations with 7 out of 12 MAD features extracted from our complete dataset. Again, we encounter an effect similar to the one observed for the mean features: the correlations of some MAD features with bicep repetitions became insignificant, such as X-accelerometer, Y-magnetometer, and yaw. However, we did not observe a major drop in the correlation coefficient values of all MAD features; only the three newly insignificant features suffered a drop in their correlation coefficient values.

Figure 9 presents the correlation matrix for the 12 SD features and the biceps repetitions in our complete dataset and the non-fatigue subset. Figure 9a shows that bicep repetitions have significant correlations with 10 out of 12 SD features extracted from the non-fatigue subset. On the other hand, Figure 9b shows that bicep repetitions have significant correlations with 8 out of 12 SD features extracted from our complete dataset. Once more, the correlations of some SD features with bicep repetitions became insignificant, such as the X-axis for both the accelerometer and the gyroscope. We also observe a slight drop in the correlation coefficient values for all SD features, as shown by the fainter color intensity and the smaller circle sizes. At this point, we clearly observe the same recurring effects when fatigue is introduced to the data, which indicates that fatigue impacts the significant features of a HAR model.

Table 5 shows the features extracted from each dataset. In RQ1, we pointed out that the X-axis represents the vertical displacement, which has the largest angle of movement and linear acceleration. However, fatigue often affects acceleration more than the angle of movement due to the nature of the biceps muscle’s movement [38]. Therefore, changes in the linear acceleration, measured by the accelerometer, affected the significance of its extracted features. On the other hand, the angular velocity, measured by the gyroscope, remains relatively steady because of the fixed angle of movement of the bicep muscles. Regarding the low number of significant features from the magnetometer, we believe that fatigue did not impact these features. Rather, the magnetometer is not an optimal sensor for this task, as it did not reveal any significant readings about the magnetic field’s direction or strength, as previously mentioned in RQ1. Most of the features extracted from the magnetometer were insignificant.

Result: Our findings show that fatigue significantly impacts the extracted features, lowering their correlation coefficient values to the extent of turning some significant features into insignificant ones. We were able to extract 9 mean, 10 MAD, and 10 SD features from the non-fatigue subset, for a total of 29 significant features at a significance level of 0.1. However, once fatigue was introduced into the data, we were only able to extract 7 mean, 7 MAD, and 8 SD features, for a total of 22 significant features, as shown in Table 5. This indicates that fatigue, once introduced in the dataset, rendered 7 features insignificant (24% of the 29 originally significant features).

4.3. RQ3: What Is the Impact of Fatigue on Subject-Specific Biceps Repetitions Models?

Motivation: Given that fatigue impacted the extracted features, we want to investigate how it could affect the biceps detection models’ performance. The presence of fatigue has the potential to decrease the model’s performance in recognizing human activities. Therefore, we start with subject-specific models to examine their ability to detect biceps concentration curl in a dataset with a progressive inclusion of fatigue repetitions.

Approach: To address this research question, we use the five detection models mentioned in Section 3.2. These models use the 22 significant features extracted from our complete dataset, which eliminates weak features that become insignificant once fatigue occurs. We use these models to detect biceps repetitions in our dataset. Then, we calculate the accuracy using the confusion matrix shown in Table 6, where non-repetition represents an incomplete repetition or any random movement, and repetition represents a completed repetition, whether it contains fatigue or not. We perform six 10-fold cross-validation runs using the non-fatigue subset, where each run after the first replaces a further 10% of the non-fatigue repetitions in the subset with fatigue repetitions from our complete dataset. Note that all models are trained and tested with similar levels of fatigue to simulate a realistic use case. Since fatigue is a natural consequence of any physical activity, it is expected to occur in the training data of models as well as when end users use wearable devices for HAR. These runs are done over all participants and then averaged, as shown in Table 7. This allows us to observe the effects of fatigue on the detection models gradually, as more fatigue repetitions are added to the dataset. We use the first 10-fold cross-validation run as a reference point because it contains no fatigue repetitions to hinder the models’ performance. Then, we calculate the accuracy (1), precision (2), recall (3), and F1 (4) for each run.
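The progressive inclusion of fatigue and the four metrics can be sketched as follows. This is an illustration under assumptions: the function names, the random selection of which repetitions to replace, and the sample data are ours, and the metric formulas are the standard confusion-matrix definitions with "repetition" as the positive class, which Equations (1) to (4) are presumed to follow.

```python
import random

# Six runs: 0% (reference), then 10% more fatigue repetitions per run.
FATIGUE_LEVELS = [0.0, 0.1, 0.2, 0.3, 0.4, 0.5]

def inject_fatigue(non_fatigue_reps, fatigue_reps, fraction, seed=0):
    """Replace `fraction` of the non-fatigue repetitions with fatigue ones."""
    rng = random.Random(seed)
    total = len(non_fatigue_reps)
    n_replace = round(total * fraction)
    if n_replace > len(fatigue_reps):
        raise ValueError("not enough fatigue repetitions to draw from")
    # Randomly choose which non-fatigue repetitions survive (assumption).
    keep_idx = set(rng.sample(range(total), total - n_replace))
    kept = [r for i, r in enumerate(non_fatigue_reps) if i in keep_idx]
    return kept + rng.sample(fatigue_reps, n_replace)

def scores(tp, fp, fn, tn):
    """Standard accuracy, precision, recall, and F1 from confusion counts."""
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}

# Hypothetical repetition identifiers for one participant.
clean = [f"clean_{i}" for i in range(20)]
tired = [f"fatigue_{i}" for i in range(20)]
mixes = {level: inject_fatigue(clean, tired, level) for level in FATIGUE_LEVELS}

# Hypothetical confusion-matrix counts for one run.
example = scores(tp=8, fp=2, fn=2, tn=8)
```

Each entry of `mixes` keeps the dataset size constant while raising the share of fatigue repetitions, so a 10-fold cross-validation on each mix trains and tests the models at the same fatigue level.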

Table 7 shows the performance averages for the six 10-fold cross-validation runs over all participants per model. Each △ F1* row shows the difference in the model’s performance, compared to the performance obtained in the first run (no fatigue).

Result: Our findings show that the more fatigue is added to the dataset, the steeper the decline in the performance of the five models. In fact, Table 7 shows that replacing as little as 10% of the repetitions with fatigue repetitions can drop the performance of the GLM models by 8% and of the DT models by 11%. If we replace an additional 10% of the repetitions with fatigue repetitions, the performance of all models decreases by at least 21% (FNN and DT). The decrease can be as severe as 30% for the RF model. Such findings indicate that, for some models (e.g., GLM, LR, and RF), it only takes 20% of fatigue repetitions to decrease a model’s performance by more than 20%. The impact on the models’ performance is even larger when we reach 40% and 50% of fatigue repetitions. With half the repetitions containing fatigue, the models lose between 47% (DT) and 57% (GLM) of their original performance, which may compromise the reliability of HAR systems that do not properly take fatigue into account.

4.4. RQ4: What Is the Impact of Fatigue on Cross-Subject Biceps Repetitions Models?

Motivation: We observed that the impact of fatigue on subject-specific models was significant, hindering the performance of models by at least 20% when fatigue is present in a fifth of all repetitions. We believe cross-subject models may be similarly affected, perhaps even to a greater extent, given that these models tend to underperform the subject-specific models. Hence, we assess the impact of fatigue on the generality of the models and their ability to detect biceps repetitions across different subjects.

Approach: Our approach used to answer RQ4 is similar to RQ3. We use the 22 significant features extracted from our complete dataset and use the five detection models aforementioned in

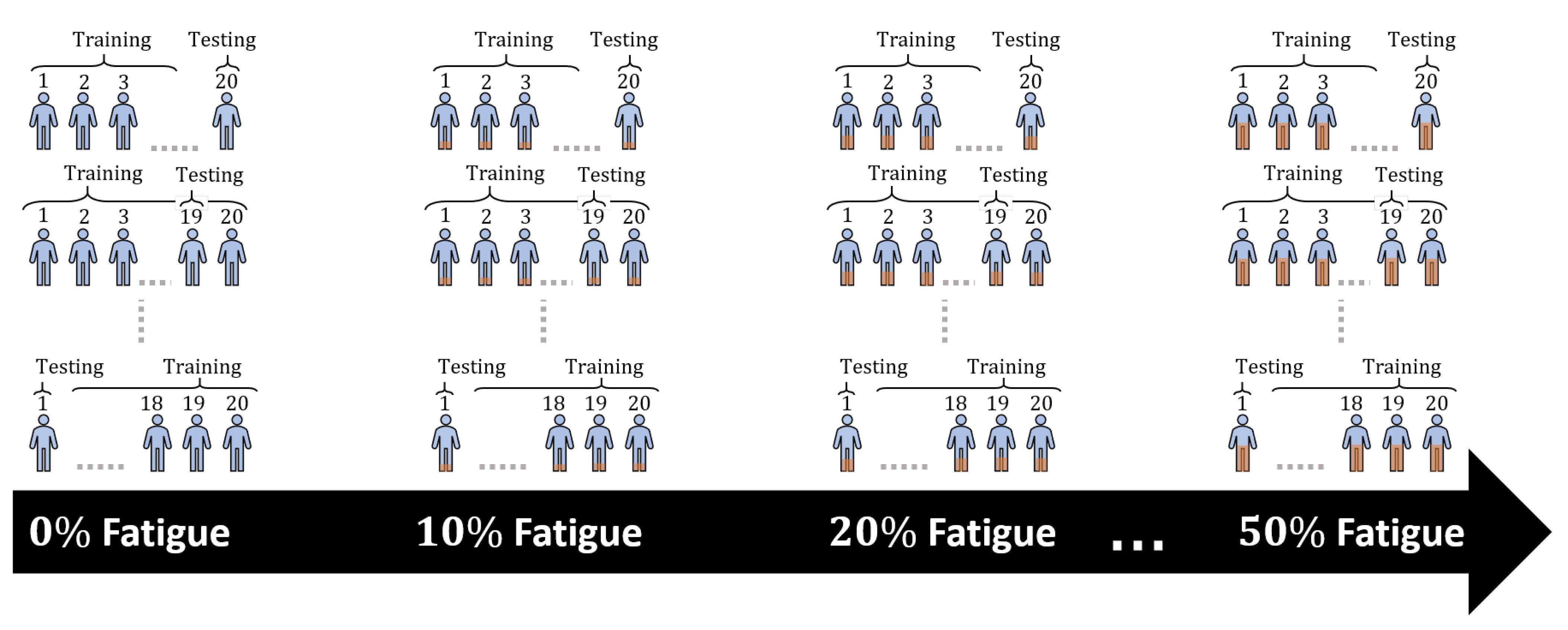

Section 3.2. We perform six Leave-One-Out Cross-Validations (LOOCV) runs where K equals the number of volunteers, K = 20.

Figure 10 shows a partial representation of the six LOOCV runs with K = 20, using the data of the 20 volunteers individually. Each LOOCV run consists of 20 iterations. In the 1st iteration, we use the data from 19 volunteers to train our models and then use the 20th volunteer’s data to test the models. Finally, by the 20th iteration, every volunteer except the 1st has served as the test subject; we therefore train the models on the data of the other 19 volunteers and use the 1st volunteer’s data for testing. We calculate the precision, recall, accuracy, and F1-score per iteration and then report the averages. We repeat the LOOCV run, each time after replacing a further 10% of each volunteer’s data with fatigue repetitions from that individual’s own data. We use the first LOOCV run as a reference point because there are no fatigue repetitions in the individuals’ data to affect the models’ performance. As in RQ3, all models are trained and tested with the same share of fatigue repetitions to simulate a realistic use case. Then, we calculate the accuracy (1), precision (2), recall (3), and F1 (4) for each run.
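The iteration scheme of one LOOCV run can be sketched as below. The function names are hypothetical, and the ordering (testing on the 20th volunteer first and on the 1st volunteer last) simply mirrors the description above.

```python
def leave_one_subject_out(subject_ids):
    """Yield (train_ids, test_id); the first iteration tests on the last subject."""
    for test_id in reversed(subject_ids):
        train_ids = [s for s in subject_ids if s != test_id]
        yield train_ids, test_id

def average(rows):
    """Average a list of per-iteration metric dicts into a single dict."""
    keys = rows[0].keys()
    return {k: sum(r[k] for r in rows) / len(rows) for k in keys}

volunteers = list(range(1, 21))  # K = 20 volunteers
splits = list(leave_one_subject_out(volunteers))

# Hypothetical per-iteration scores, only to show how a run is summarized.
summary = average([{"f1": 0.8}, {"f1": 0.6}])
```

In a full run, each of the 20 splits would train the five models on `train_ids` and test on `test_id`, and `average` would summarize the per-iteration metrics; the run is then repeated for each fatigue level.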

Table 8 shows the performance averages for the six LOOCV runs per model. Each △ F1* row compares the model’s performance against the performance obtained in the first run (no fatigue repetitions).

Result: Similar to RQ3, our findings indicate that fatigue significantly impacts the performance of all five models. Table 8 shows that replacing as little as 10% of the repetitions with fatigued ones can drop a model’s performance by 6% for RF and by up to 13% for GLM and LR. If we replace an additional 10% of the repetitions with fatigued ones, the models’ performance decreases by 20% for FNN and DT and by up to 25% for LR. Once fatigue reaches 30% of the repetitions, we see a sharp decrease of at least 30% in all models. This trend continues: once fatigue repetitions reach 50% of the dataset, the HAR models’ performance decreases by at least 41%. We observe a negative linear effect on some models’ performance as fatigue increases. For instance, the performance of the DT models decreases by an average of 10% for every 10% increase of fatigue in the dataset.