Mitigating Cold Start Problem in Serverless Computing with Function Fusion

Abstract

1. Introduction

- We propose a model of the workflow response time in the cold start and the warm start modes as well as in sequential and parallel runs (Section 3).

- We propose a function fusion scheme that handles branch and parallel execution fusing (Section 4).

- We present a practical fusion automation process for stateless functions. Although it is implemented for AWS Lambda, the proposed process can be adapted to other serverless platforms (Section 4).

- We evaluate the performance of the proposed method through experiments in a real cloud environment (i.e., AWS Lambda).

2. Background and Motivation

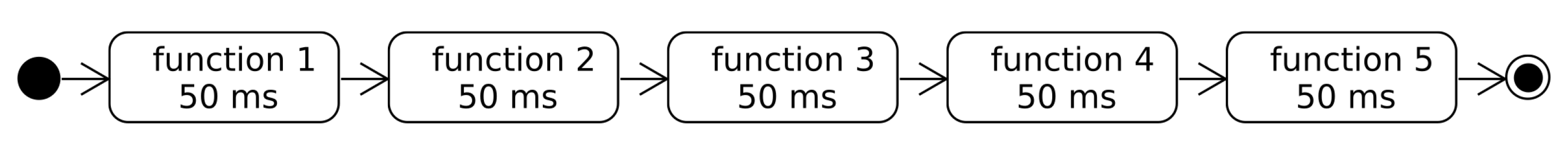

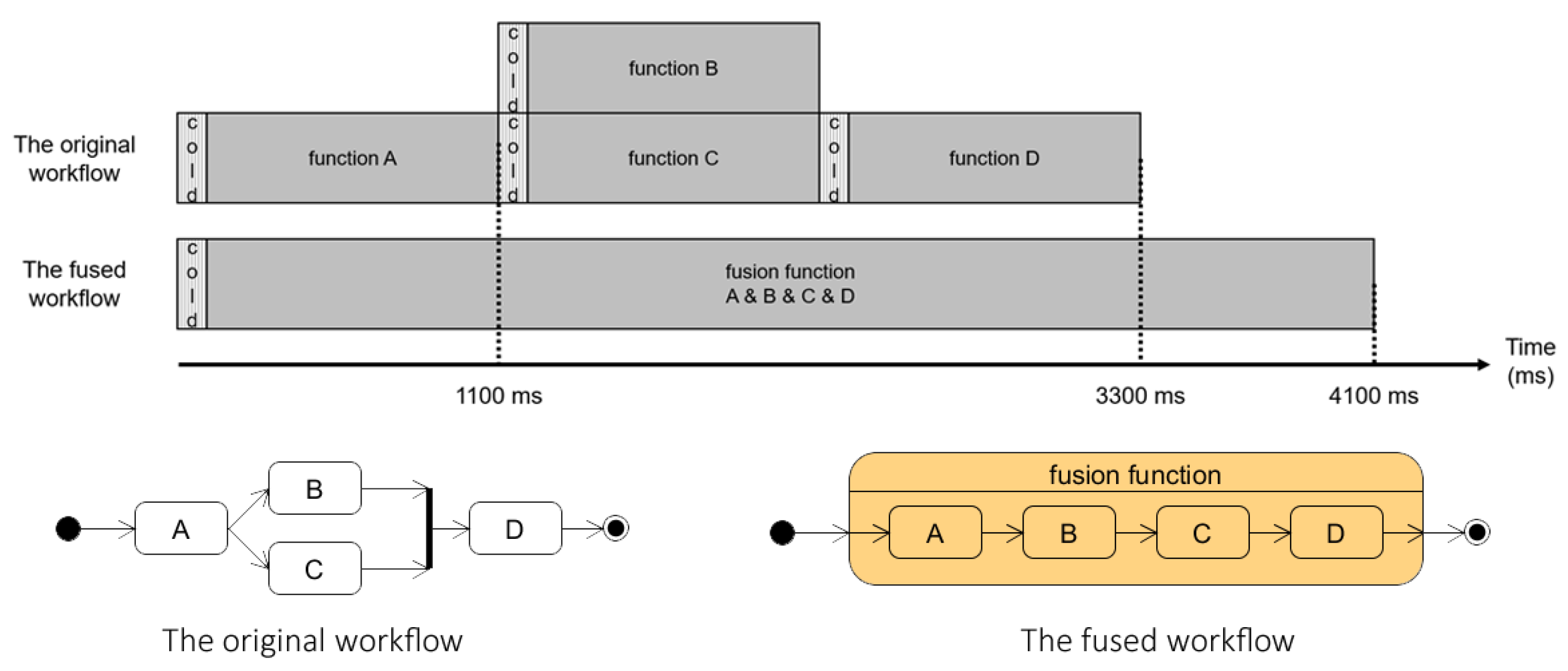

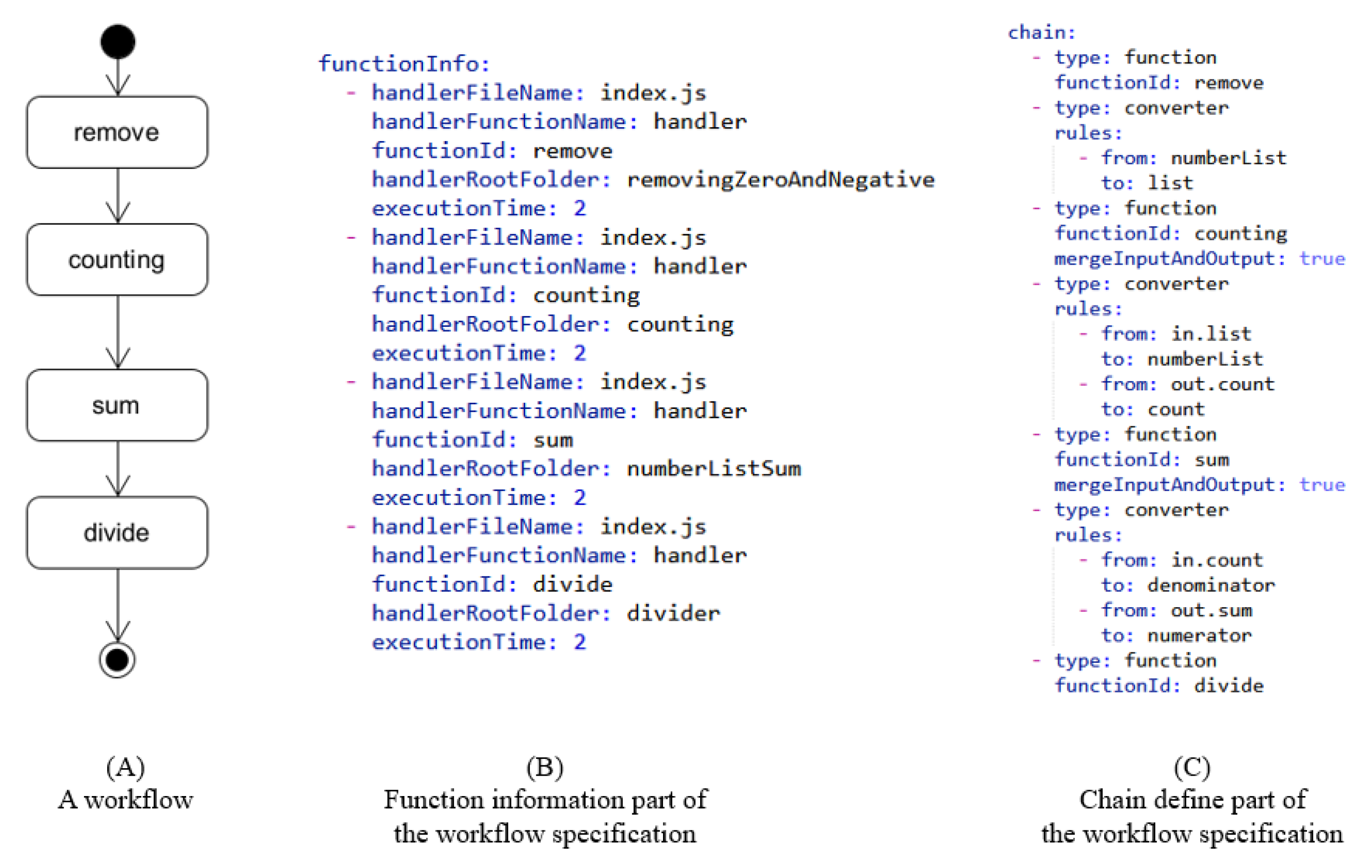

2.1. Composing a Workflow Using Function Fusion

2.2. Cold Start and Warm Start

3. Problem Modeling

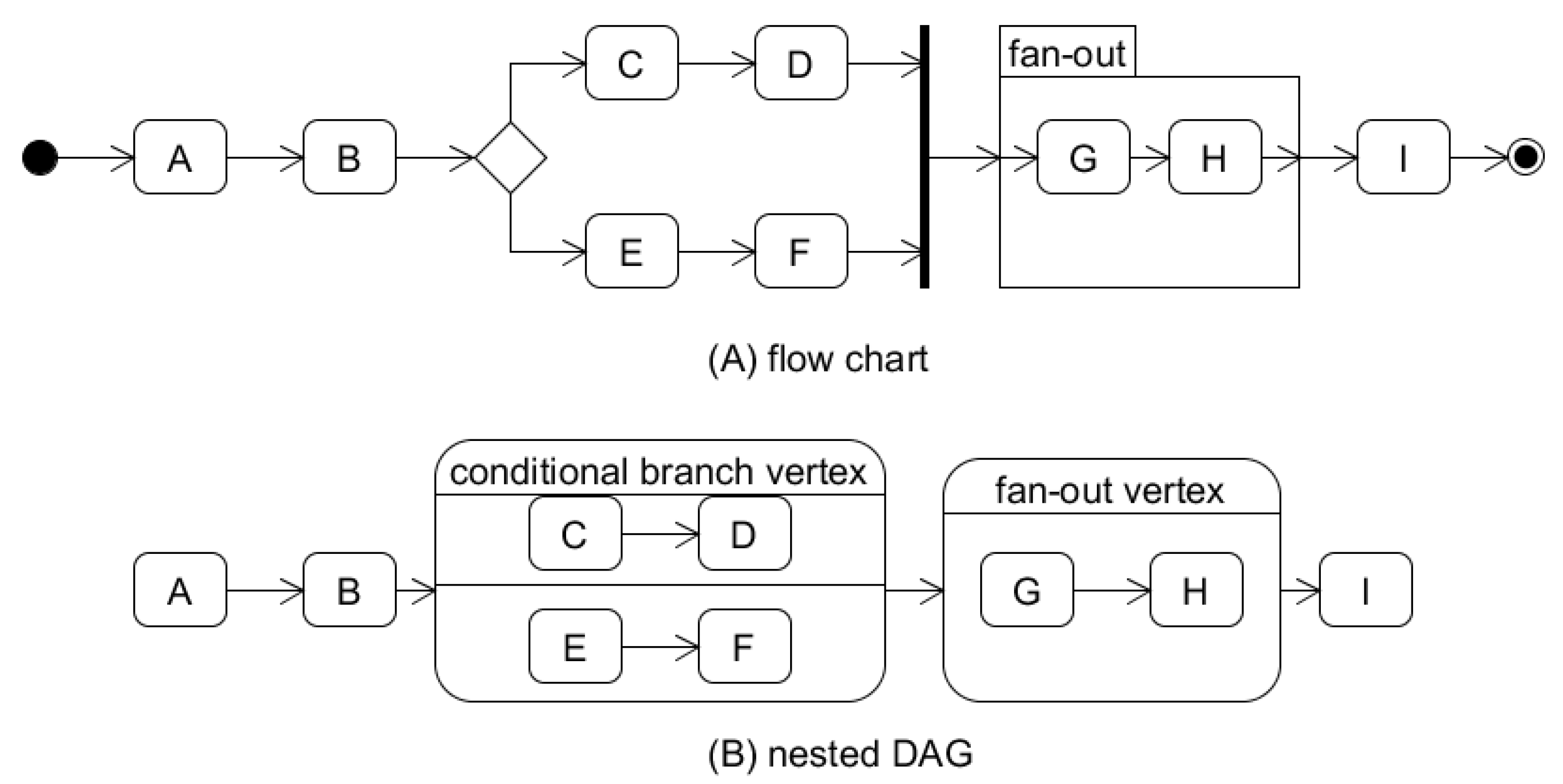

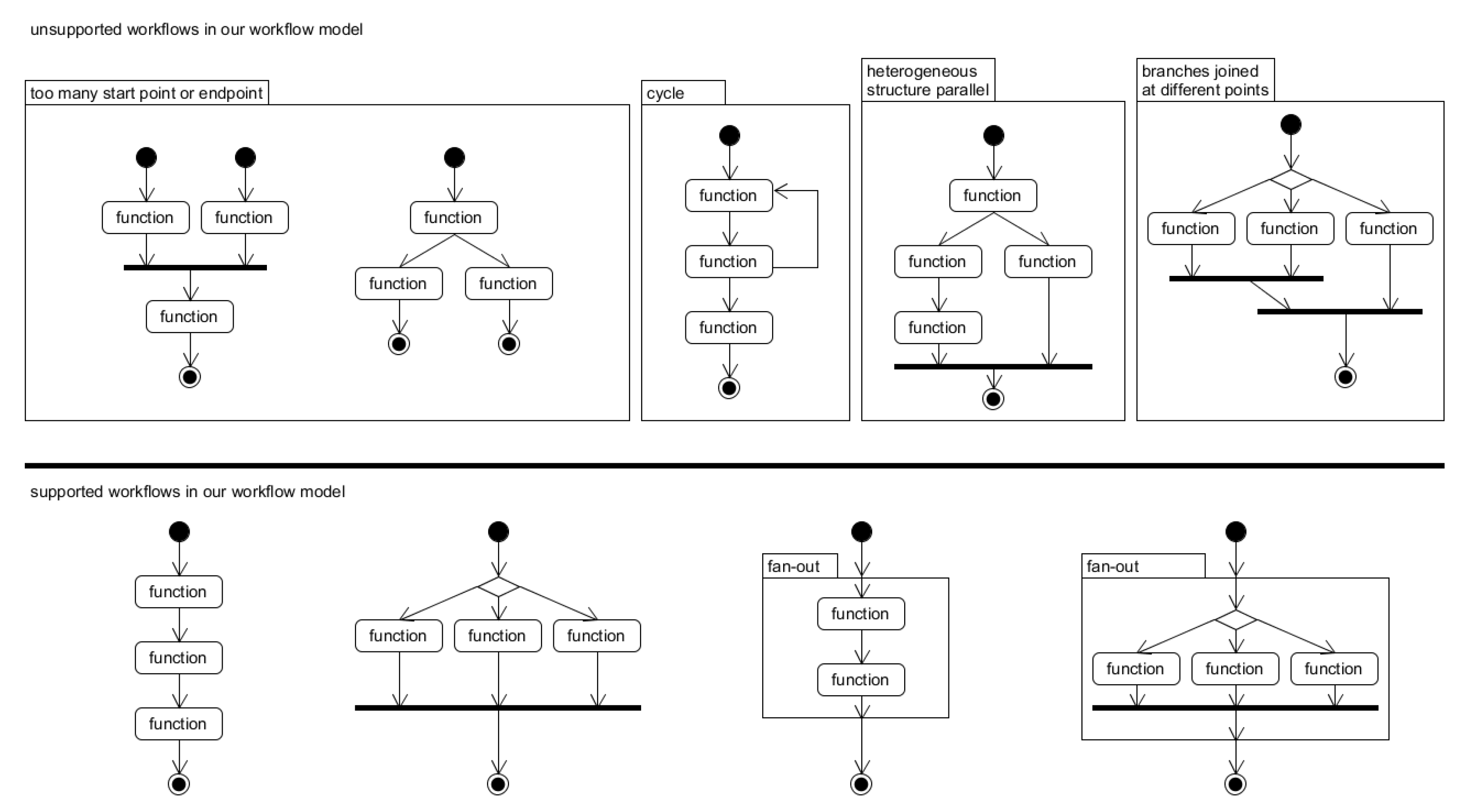

3.1. Graph Representation of a Workflow

- There can be only a single starting point as well as a single endpoint in the workflow.

- An execution flow may not split into flows that end at different points. Likewise, two execution flows that start from different points may not merge in the middle. Hence, the graph has exactly one source vertex and one sink vertex.

- Reversing the workflow is not allowed.

- Only parallel execution of the same structure (fan-out) is possible. If parallel execution of different structures is required, fan-out with a conditional branch should be used.

- Each branch of a conditional branch should be joined at the same point.
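Some of the structural constraints above can be checked mechanically. The following Python sketch is only an illustration under stated assumptions: the edge-list representation and the name `check_workflow` are hypothetical, and "reversing the workflow is not allowed" is interpreted here as "the graph contains no cycle". It verifies that a workflow graph has exactly one source, exactly one sink, and no cycle; it does not check the fan-out or conditional branch rules.

```python
from collections import deque


def check_workflow(edges):
    """Validate a workflow given as (from_vertex, to_vertex) pairs.

    Returns (source, sink) when the graph has exactly one source, exactly
    one sink, and no cycle; raises ValueError otherwise.
    """
    nodes = {n for edge in edges for n in edge}
    successors = {n: [] for n in nodes}
    indegree = {n: 0 for n in nodes}
    for u, v in edges:
        successors[u].append(v)
        indegree[v] += 1

    sources = [n for n in nodes if indegree[n] == 0]
    sinks = [n for n in nodes if not successors[n]]
    if len(sources) != 1 or len(sinks) != 1:
        raise ValueError("workflow needs exactly one source and one sink")

    # Kahn's algorithm: a vertex that is never released lies on a cycle.
    queue = deque(sources)
    visited = 0
    while queue:
        u = queue.popleft()
        visited += 1
        for v in successors[u]:
            indegree[v] -= 1
            if indegree[v] == 0:
                queue.append(v)
    if visited != len(nodes):
        raise ValueError("workflow contains a cycle")
    return sources[0], sinks[0]
```

For example, a conditional branch whose two branches rejoin at the same vertex, such as `[("a", "b"), ("a", "c"), ("b", "d"), ("c", "d")]`, passes the check, whereas `[("a", "b"), ("a", "c")]` is rejected because it ends at two different points.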

3.2. Workflow Items

3.2.1. Function

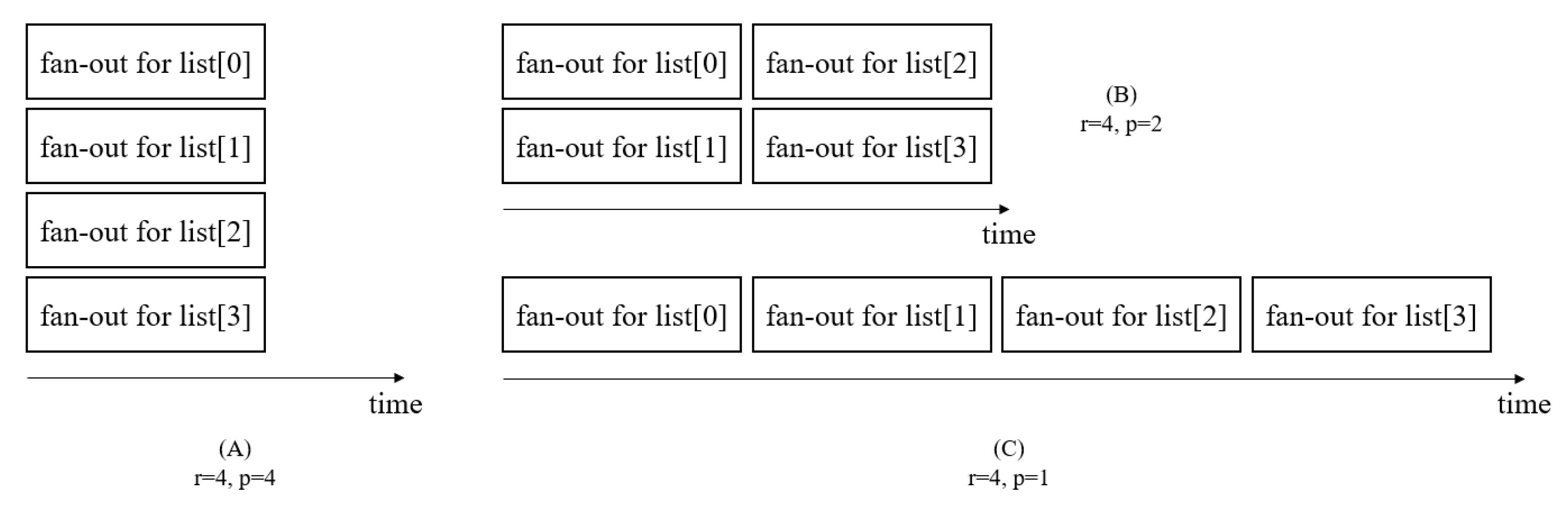

3.2.2. Fan-Out

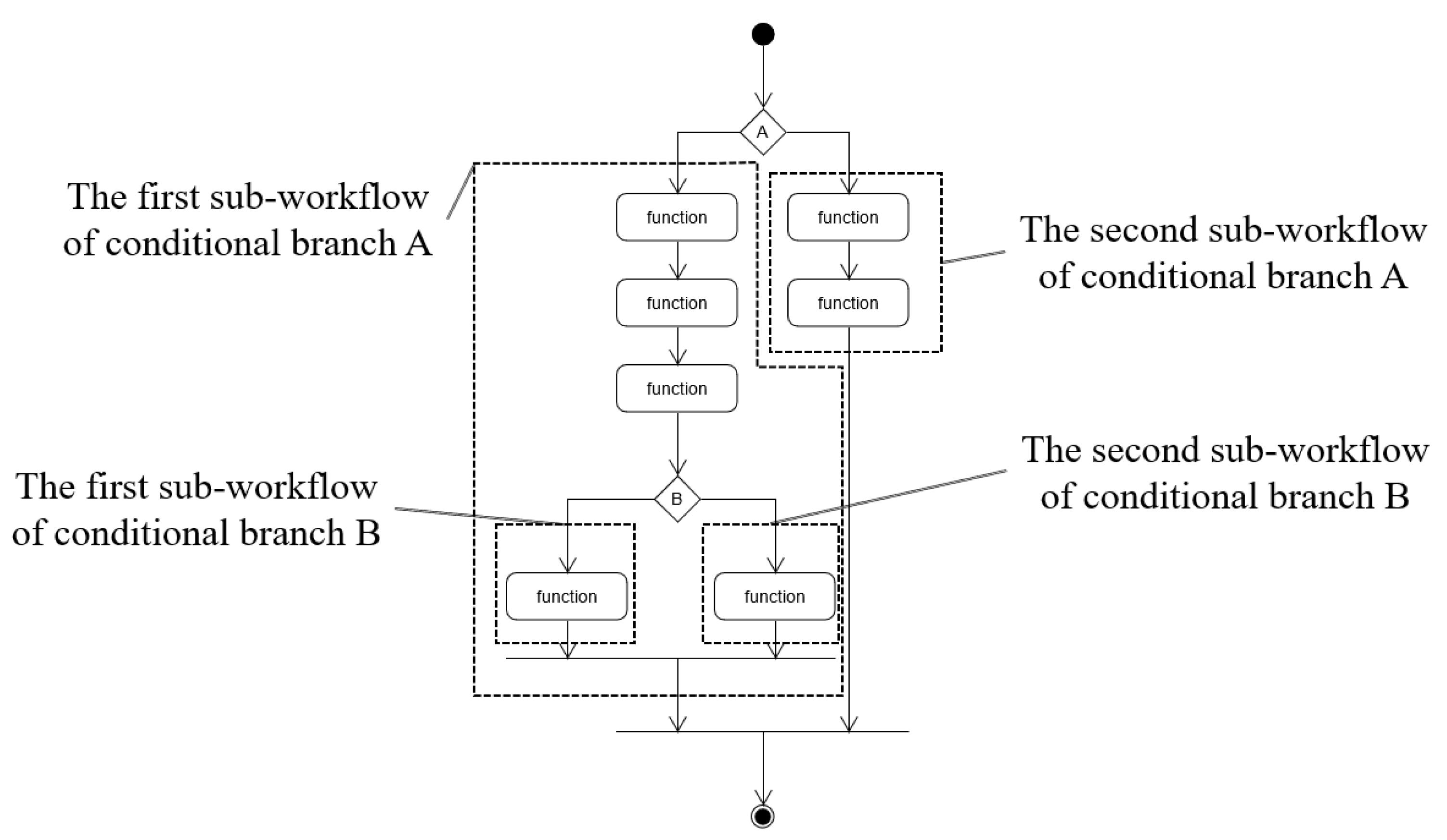

3.2.3. Conditional Branch

3.3. Latency

- Cold start latency: We define the cold start latency as the difference in the response time of a single function between a cold start and a warm start. As mentioned in the Background section, we assume that the cold start latency is the same for all functions. This latency disappears at a warm start or when functions are fused.

- Invocation latency: In a workflow, calling the following function incurs a latency because the serverless platform encodes the result of the previous function into a general data format (usually JSON) and decodes it to call the following function. We define this latency as the difference between the end time of the previous function and the start time of the following function in the warm start mode. This latency appears at both the cold start and the warm start. However, fusing can remove it because function calls are then performed directly in a thread with almost zero delay.

- Fan-out latency: When an execution flow reaches a fan-out, time is needed to split the list data into its elements and to initiate the sub-workflow of the fan-out. We call this the fan-out latency and define it as the difference between the end time of the previous workflow item and the earliest start time among all instances of the sub-workflow of the fan-out. We assume that the fan-out latency is the same for all fan-outs. This latency appears at both the cold start and the warm start but can be removed by fusion.
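As a rough illustration of how the cold start and invocation latencies defined above combine, the following Python sketch estimates the response time of a purely sequential chain of functions. It is a simplification for illustration only, not the response time model of Section 3.4. The name `chain_response_time`, its parameters, and the millisecond values in the usage note are hypothetical, and the sketch assumes that a fully fused chain pays at most one cold start and no invocation latency. Fan-outs and conditional branches are not covered.

```python
def chain_response_time(exec_times, cold_latency, invoke_latency,
                        cold=False, fused=False):
    """Estimate the response time of a sequential chain of functions.

    exec_times     -- execution time of each function, in order
    cold_latency   -- cold start latency, assumed equal for all functions
    invoke_latency -- latency between two consecutive unfused functions
    cold           -- True for the cold start mode, False for warm start
    fused          -- True if the whole chain is fused into one function
    """
    n = len(exec_times)
    total = sum(exec_times)
    if fused:
        # Assumption: the fused chain is deployed as a single function, so
        # it pays one cold start at most and no inter-function invocations.
        return total + (cold_latency if cold else 0)
    cold_cost = n * cold_latency if cold else 0
    invoke_cost = max(n - 1, 0) * invoke_latency
    return total + cold_cost + invoke_cost
```

With made-up values of 100, 200, and 50 ms for the execution times, 300 ms for the cold start latency, and 40 ms for the invocation latency, the sketch gives 1330 ms for an unfused cold start, 430 ms for an unfused warm start, 650 ms for a fused cold start, and 350 ms for a fused warm start.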

3.4. Workflow Response Time Model

4. Mitigating Cold Start Problem with Function Fusion by Considering Conditional Branch Selection and Parallelism

4.1. Fusion Decision on a Function

4.2. Decision for a Fusion on a Fan-Out

4.3. Fusion Decision on a Conditional Branch

4.4. The Overall Process

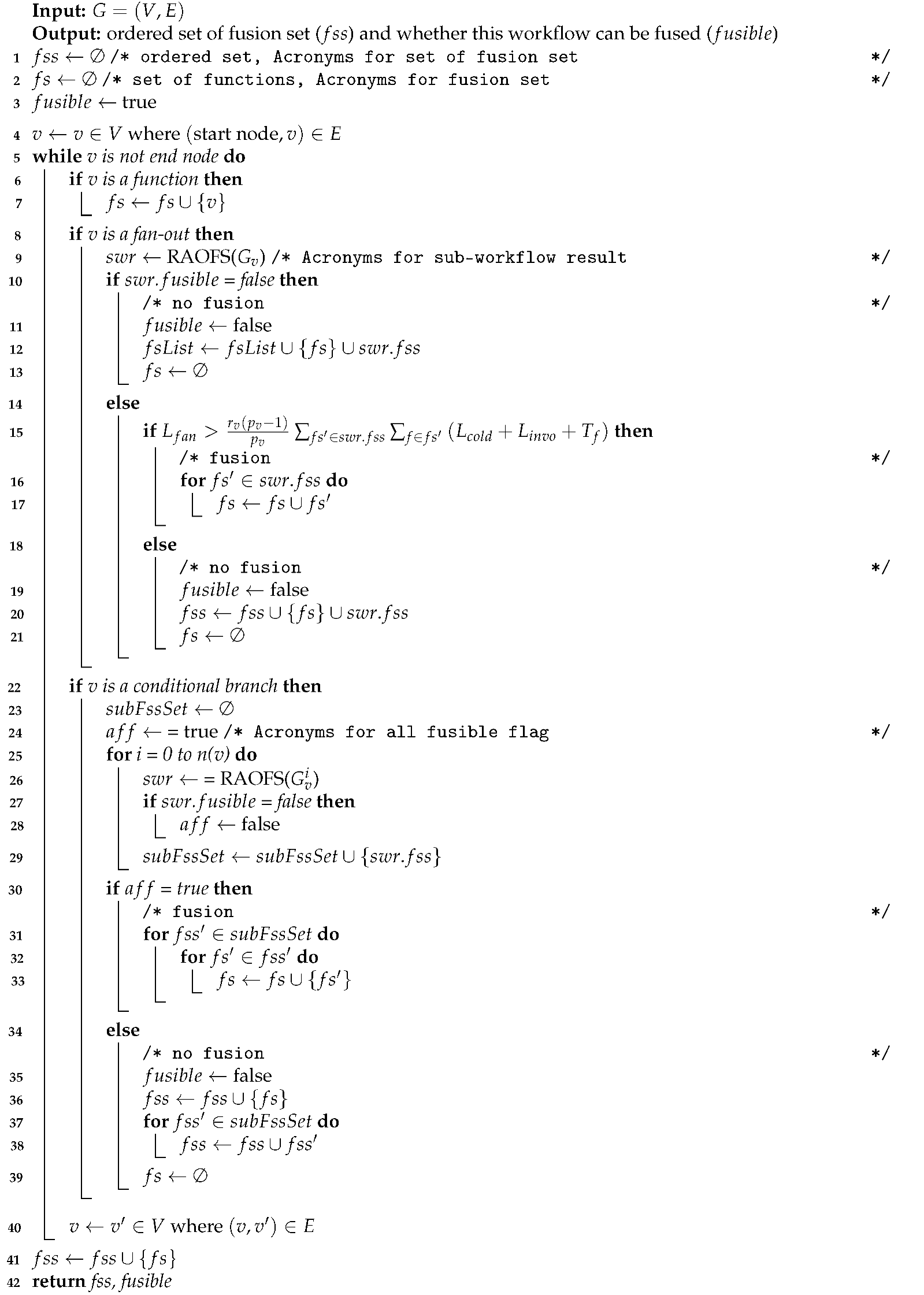

Algorithm 1: RAOFS: The Recursive Algorithm for Optimal Fusion Strategy

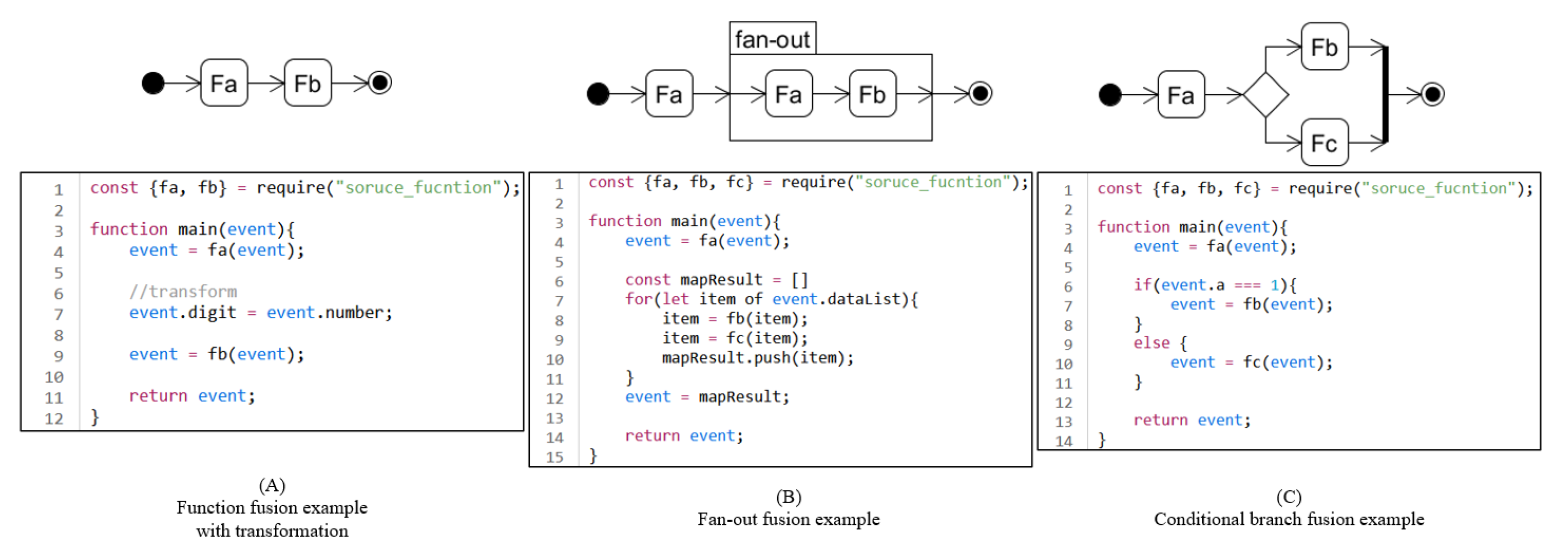

4.5. Writing a Fused Function

- All source functions are stateless. They do not store any state data for future use.

- The input and output of a source function are a single object that may contain multiple properties.

- If a source function contains an asynchronous call, execution blocks until that call is resolved.
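The three assumptions above are enough to compose source functions mechanically. The following Python sketch shows one way this could look; it is not the fusion automation process described in this section, and it is written in Python rather than for the Node.js runtime used in the experiments. The helper `fuse` and the source functions `resize` and `tag` are hypothetical. Each source function takes and returns a single object, keeps no state, and any asynchronous result is awaited before the next function runs.

```python
import asyncio
import inspect


def fuse(*functions):
    """Return one handler that runs the source functions in order."""

    async def run(event):
        result = event
        for fn in functions:
            result = fn(result)
            # Block on asynchronous source functions until they resolve.
            if inspect.isawaitable(result):
                result = await result
        return result

    def handler(event, context=None):
        # Direct in-process calls replace the platform's function invocations.
        return asyncio.run(run(event))

    return handler


# Hypothetical stateless source functions: one object in, one object out.
def resize(event):
    return {**event, "width": event["width"] // 2}


async def tag(event):
    await asyncio.sleep(0)  # stands in for an asynchronous call
    return {**event, "tagged": True}


fused = fuse(resize, tag)
```

Calling `fused({"width": 640})` returns `{"width": 320, "tagged": True}`. Because the source functions keep no state, the order of calls and the input object fully determine the result.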

5. Experiment

5.1. Experimental Setting

- Memory: 128 MB.

- Region: ap-northeast-2.

- Runtime: Node.js 14.x.

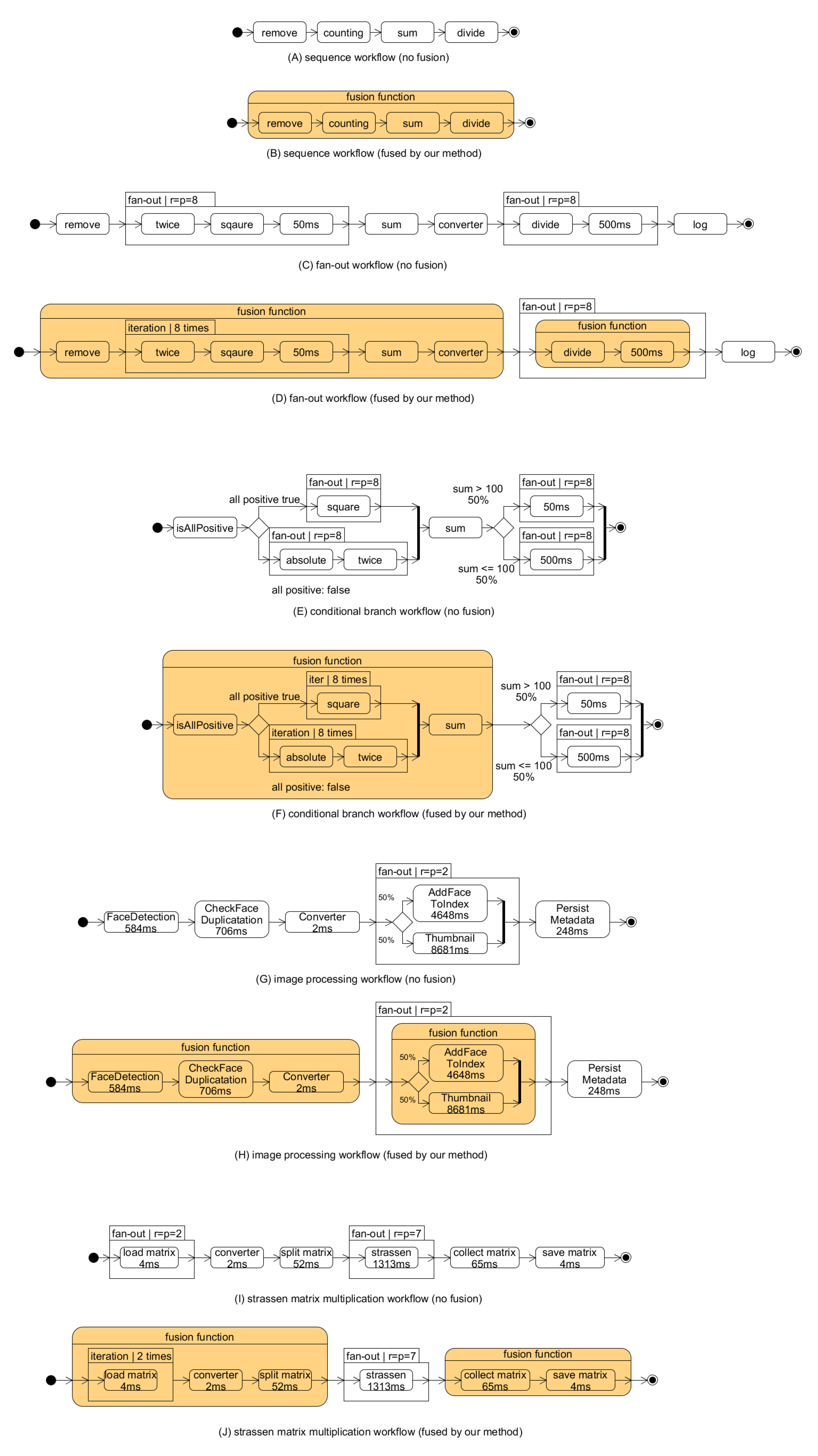

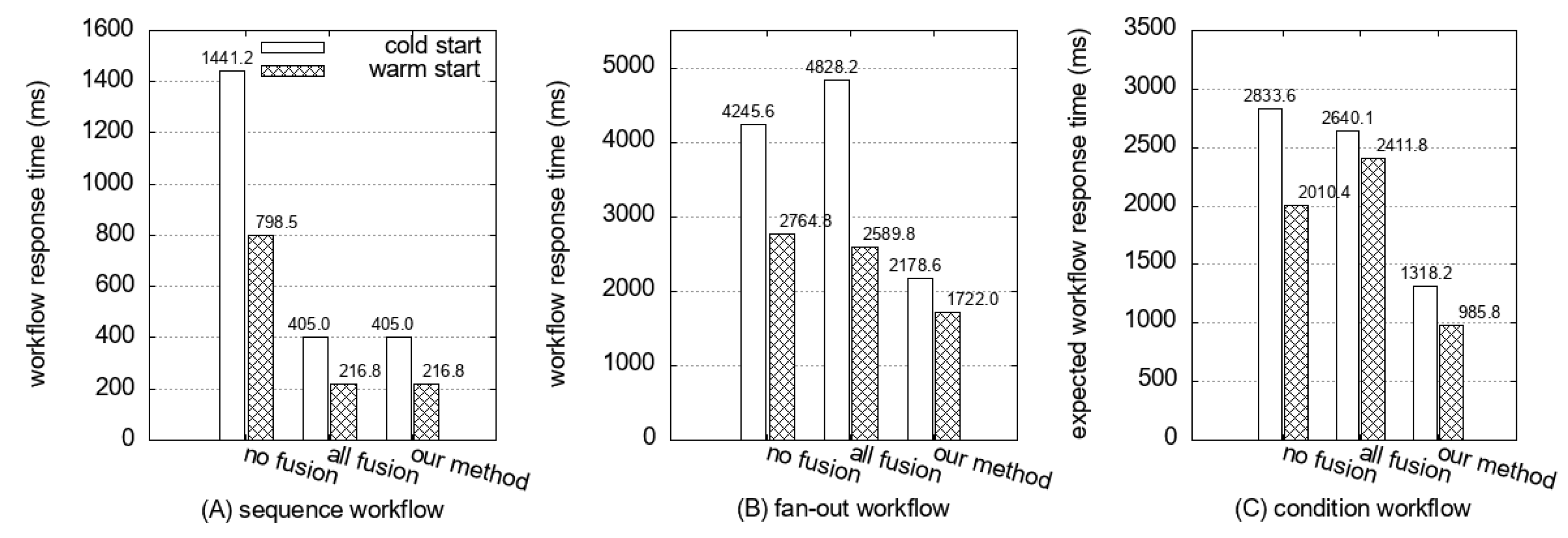

5.2. Example Workflows

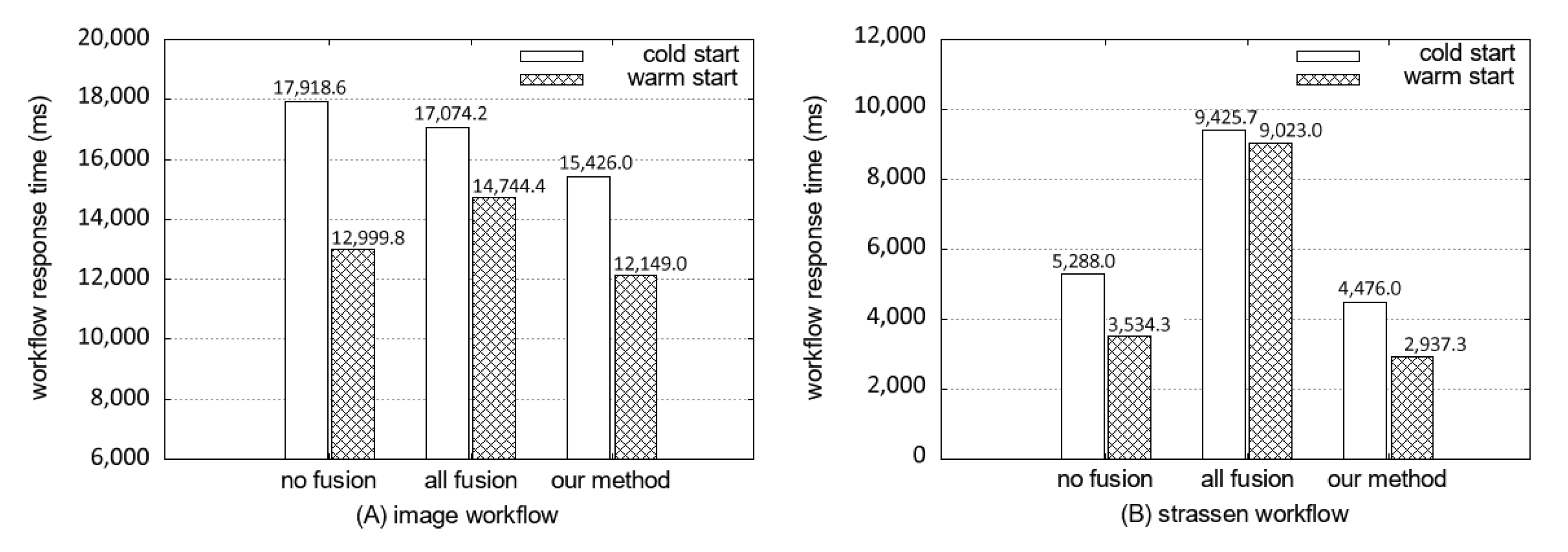

5.3. Real-World Workflow

5.3.1. Face Detection

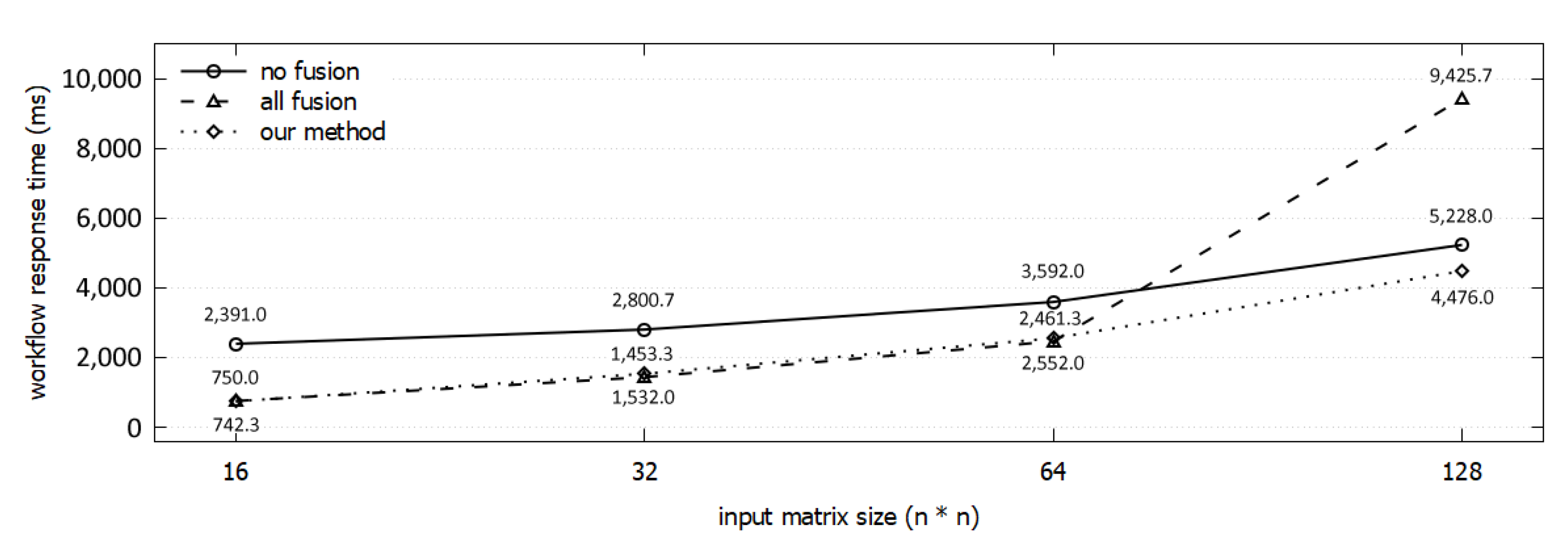

5.3.2. Matrix Multiplication

6. Related Works

6.1. Workflow in Grid/Cloud and Serverless Computing

6.2. Mitigating Cold Start by Function Preloading

6.3. Reducing the Cost of Serverless Computing by Function Fusion

6.4. Workflows Management and Optimizations for Serverless Computing

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Symbol | Meaning |
|---|---|
| | execution time of function f |
| | a workflow represented as a DAG |
| | response time of workflow G |
| | the number of requests to a fan-out o |
| | the maximum concurrency of a fan-out o |
| | the sub-workflow of a fan-out o |
| | the branch probability of the ith branch of a conditional branch |
| | the ith sub-workflow of a conditional branch |
| | the cold start latency |
| | the function invocation delay of the workflow system |
| | the fan-out delay to initiate a fan-out of the workflow system |
| | the number of sub-workflows of a conditional branch |


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lee, S.; Yoon, D.; Yeo, S.; Oh, S. Mitigating Cold Start Problem in Serverless Computing with Function Fusion. Sensors 2021, 21, 8416. https://doi.org/10.3390/s21248416
