Abstract

Unmanned Aerial Vehicles (UAVs) are an emerging technology for landform investigation, monitoring, and evolution analysis based on long-term repeated observation. However, owing to complex topographic environments, fluctuating terrain and incomplete field observations, significant differences have been found in 3D measurement accuracy and in the resulting Digital Surface Models (DSMs). In this study, a DJI Phantom 4 RTK UAV was used to capture images of complex pit-rim landforms with significant elevation undulations. A repeated observation data acquisition scheme was proposed in which a small number of oblique-view images were acquired in addition to the ortho-view observation. Subsequently, 3D scenes and DSMs were generated with Structure from Motion (SfM) and Multi-View Stereo (MVS) algorithms. The 3D measurement accuracy was then compared and analyzed in terms of internal and external precision using checkpoint and DSM of Difference (DoD) error analysis methods. The results indicated the following. (1) The plane accuracy of the 3D scenes for both imaging types reached the centimeter level, whereas the elevation accuracy of the orthophoto datasets alone reached only the decimeter level (0.3049 m). However, oblique images amounting to only 6.30% of the total number of images were sufficient to improve the elevation accuracy by one order of magnitude (0.0942 m). (2) The internal accuracy of the oblique imaging-assisted datasets varied insignificantly. In particular, SfM-MVS technology exhibited high reproducibility for repeated observations. By changing the number and position of oblique images, the external precision was effectively increased, and the elevation error distribution became more concentrated and stable. Accordingly, a repeated observation method that includes only a few oblique images was proposed and demonstrated in this study, which optimizes the elevation results and improves accuracy. The research results could provide a practical and effective technical reference for geomorphological surveys and repeated observation analyses in complex mountain environments.

1. Introduction

With the rapid development of UAV technology, UAVs have become increasingly widespread in civilian applications. In addition, UAV Remote Sensing (UAV-RS), which uses UAVs as platforms carrying imaging or non-imaging sensors, is increasingly used to capture high-resolution remote sensing images, SAR images, and high-precision laser point clouds [1,2,3]. Since small low-altitude UAVs offer several advantages (e.g., mobility, flexibility, low cost, and rich data products) [4], they have been adopted to generate 3D scenes by integrating Structure from Motion (SfM) and Multi-View Stereo (MVS) algorithms [5] for landslide disaster monitoring [6], flood hazard assessment [7], geomorphological evolution analysis [8], and crop monitoring [9].

Geomorphology refers to the various undulating forms of the earth’s surface, created under constant shaping by internal and external geological forces. It is one of the critical factors for explaining and analyzing the physical geographic environment [10]. UAVs have been adopted extensively to capture images of different landforms in different periods. The constructed 3D scenes, Digital Elevation Models (DEMs), and Digital Surface Models (DSMs) are applied for rapid, non-contact geomorphological surveys, geomorphological feature extraction and change analysis [11,12,13,14]. However, the 3D-scene measurement accuracy, the elevation error and the instrument error form the basis for qualitative and quantitative analyses of geomorphological surveys and of morphological evolution over long time series of repeated observations. As reported by existing studies, 3D scenes constructed from images acquired by UAVs equipped with low-accuracy Global Navigation Satellite System (GNSS) positioning fail to meet 3D measurement accuracy requirements [15], and the resulting data are of limited value for multi-phase geomorphological evolution analysis [16]. To increase 3D-scene accuracy, Ground Control Points (GCPs) have primarily been applied as constraints to improve the exterior orientation elements of the original images in aerial triangulation and to achieve absolute orientation [17,18]. Moreover, data processing methods that integrate GCPs and UAV images are capable of monitoring centimeter-scale variations of collapse erosion landforms [19], open-pit mine dump erosion zones [20], and wetland tidal flats [21]. Likewise, the measurement accuracy requirements of glacial landform [22], outcrop geology [23], and other studies can be met.

However, the mountainous environment of the Yunnan plateau is complicated, and different landscape types are located in a wide variety of environments, so GCPs are difficult to lay. An unreasonable GCP layout reduces the accuracy of the 3D scene and makes reliable repeated observation impossible. For complex terrain environments, UAVs equipped with a Real-Time Kinematic (RTK) system can capture images and exploit differential correction data provided by Continuously Operating Reference Stations (CORS) to acquire high-precision positioning information [24] and achieve aerial triangulation without GCPs [25,26]. On that basis, 3D scene accuracy is improved, and operational risks and the dependence on GCPs are reduced [27]. Most existing studies of 3D scene accuracy combine different data processing software and different GCP layout schemes and assess the accuracy of a single image dataset using checkpoints [28,29]. However, when RTK UAVs are adopted to analyze geomorphological evolution over long time series, the accuracy of the 3D scenes from repeated observations may change significantly. Furthermore, the repeated observation accuracy of RTK UAVs has rarely been investigated, and error analyses without GCP constraints in complex mountain terrain are scarce.

Thus, for complex mountain pit-rim landforms with no topographic change during the survey period and without GCP constraints, a DJI Phantom 4 RTK UAV was employed in this study to perform repeated ortho-view observations at a fixed altitude and overlap, supplemented on each observation by a small number of oblique-view images acquired along a single flight section. The 3D scenes were constructed by applying SfM-MVS technology to the different datasets obtained from the repeated observations. Using checkpoints and DoD, the accuracy was analyzed by comparing the datasets with each other in terms of internal and external precision.

2. Study Area

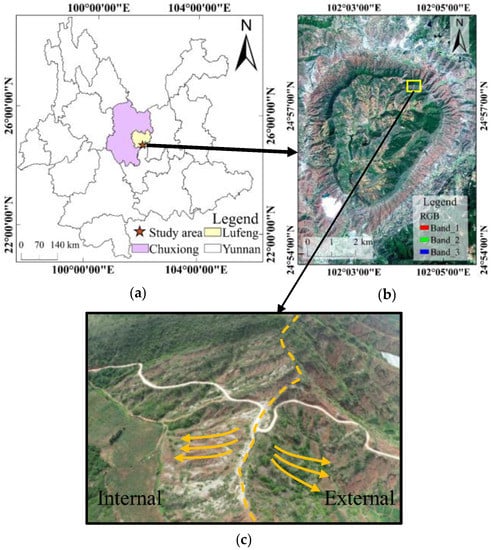

The study area is a typical circular pit-rim landscape at the southern edge of Dinosaur Valley on the west side of Lufeng Jurassic Dinosaur Site Park in Lufeng, Chuxiong, Yunnan (Figure 1a). It is nearly 3 km wide from east to west, 10 km long from north to south, and 4 km in diameter. It is surrounded by steep mountains that connect to form a bar-shaped radial ridge in contrast to the central terrain. The complex pit-rim at the highest entrance in the northeast is the subject of the investigation (Figure 1b). The area slopes significantly downward from the external structure, and the topography and surface features differ significantly between the interior and exterior of the ridge (Figure 1c). Thus, the study area is a promising test area for geomorphological data collection and repeated observation accuracy analyses.

Figure 1.

(a) Location of Lufeng; (b) Satellite image of the study area; (c) Complex pit-rim at the entrance.

3. Material and Methods

3.1. Data Acquisition

3.1.1. UAV Images Collection

The DJI Phantom 4 RTK (P4R) UAV is equipped with a multi-frequency, multi-system high-precision RTK GNSS receiver and an FC6310R camera (20-megapixel RGB sensor). It also carries an actively stabilized camera gimbal, which compensates for unstable flight conditions and helps ensure sharp images. DJI GS RTK ground station control software was used for route planning and image acquisition in the test area. Table 1 lists the specifications of the aircraft and camera.

Table 1.

Aircraft and camera specifications.

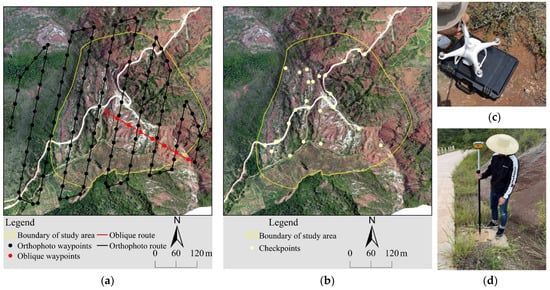

The test area covered 0.06 km². The aerial survey campaign was conducted on 21 July 2021. The flight altitude was set to 200 m above the take-off point. To avoid gaps in image coverage and excessive flight time, the main route angle was set to 68°, so that the route direction was perpendicular to the edge of the pit-rim. The forward and side overlaps were both 80%. Moreover, the RTK status was kept fixed so that the UAV received the differential corrections from the CORS station in real time. The traffic conditions and the UAV take-off and landing points were determined using the Google Earth platform and field reconnaissance.
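The relation between flight altitude, overlap and exposure spacing can be illustrated with a short sketch. It is not the DJI GS RTK planning algorithm. It assumes flat terrain at the take-off elevation, a nadir pinhole camera, an image size of 5472 × 3648 pixels (an assumption, not stated in the text), the short image side aligned with the flight direction, and the focal length of about 3707.94 pix reported from self-calibration in Section 5. All function and variable names are hypothetical.

```python
def ground_sample_distance(altitude_m, focal_px):
    """Ground size of one pixel (m) for a nadir pinhole camera over flat terrain."""
    return altitude_m / focal_px


def exposure_spacing(footprint_m, overlap):
    """Distance between neighbouring exposures (m) for a given overlap fraction."""
    return footprint_m * (1.0 - overlap)


ALTITUDE_M = 200.0                     # flight altitude above the take-off point (from the text)
FOCAL_PX = 3707.94                     # self-calibrated focal length in pixels (from the text)
IMAGE_W_PX, IMAGE_H_PX = 5472, 3648    # assumed image size for a 20-megapixel sensor
OVERLAP = 0.80                         # forward and side overlap (from the text)

gsd = ground_sample_distance(ALTITUDE_M, FOCAL_PX)
footprint_w = gsd * IMAGE_W_PX         # across-track footprint (assumed long side)
footprint_h = gsd * IMAGE_H_PX         # along-track footprint (assumed short side)

forward_spacing = exposure_spacing(footprint_h, OVERLAP)
side_spacing = exposure_spacing(footprint_w, OVERLAP)

print(f"GSD: {gsd * 100:.2f} cm/pixel")
print(f"Forward spacing: {forward_spacing:.1f} m, side spacing: {side_spacing:.1f} m")
```

Under these assumptions the ground sampling distance is roughly 5.4 cm and the forward exposure spacing roughly 39 m. Actual values vary with the terrain relief beneath the aircraft.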

First, the lens inclination angle was set to −90° to collect orthophotos. Subsequently, it was set to −45°, and the UAV was flown along a straight line connecting the last waypoint to the center of the route-planning area to acquire partially oblique images (Figure 3a). The lens direction was kept parallel to the straight-line direction. Several camera parameters (e.g., ISO, exposure value and shutter) were automatically regulated by complying with the real brightness of the scene at the time of flight. The UAV flight parameters were selected as shown in Table 2.

Table 2.

UAV flight parameters.

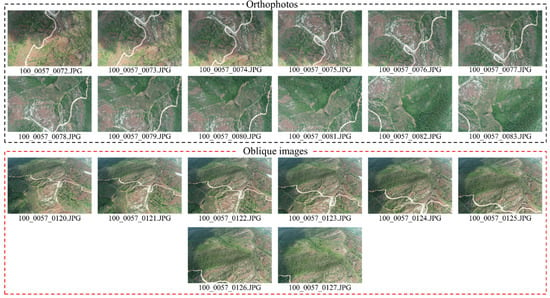

Three flights were conducted based on the identical flight parameters, two of which were repeated observations, i.e., . Six datasets were developed by combining the data types “orthophotos” () or “oblique imaging-assisted” () (Table 3). To be specific, to distinguish different datasets, repeated observation numbers and image data types were adopted to name the respective dataset, e.g., represents the orthophoto dataset of the first observation, denotes the orthophotos and oblique imaging-assisted dataset of the second observation. Using the image dataset as an example, some orthophotos and oblique images are presented in Figure 2. The UAV type is shown in Figure 3c.

Table 3.

Characteristics of datasets resulting from the test setup.

Figure 2.

Partial orthophotos and oblique images ( dataset as an example).

Figure 3.

(a) UAV flight routes; (b) Location of checkpoints; (c) UAV type; (d) RTK data acquisition.

3.1.2. Checkpoint Acquisition

Influenced by the significant difference between the inside and outside of the pit-rim landforms, the obvious feature points were adopted as checkpoints to analyze the measurement accuracy comprehensively. Overall, 27 checkpoints (Figure 3b) were acquired with the Hi-Target V90 RTK device (Figure 3d) by connecting to the CORS network, and the coordinate system used was CGCS 2000. This RTK equipment achieved a planimetric accuracy of ±8 mm + 1 ppm and an elevation measurement accuracy of ±15 mm + 1 ppm.

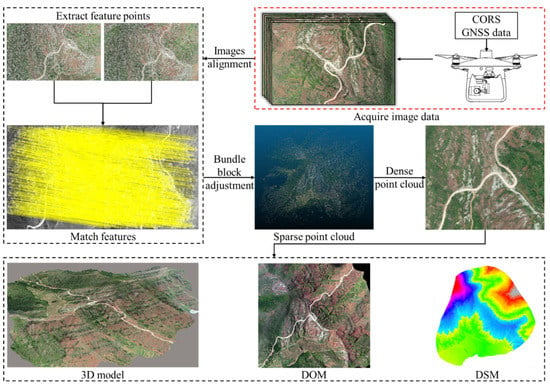

3.2. 3D Scene Construction Based on SfM-MVS

The 3D scenes were constructed using SfM-MVS technology [30] in Agisoft Metashape Professional 1.6.4. Using an identical processing workflow (Figure 4), the six datasets (Table 3) were processed to generate 3D scenes, Digital Orthophoto Maps (DOMs), and DSMs at a resolution of 0.1 m.

Figure 4.

3D scene construction process of SfM-MVS technology.

First, in the SfM stage, the Scale Invariant Feature Transform (SIFT) algorithm [31] was employed to extract feature points from each image, and the scale, position, and orientation of the key points in each image were determined. Subsequently, key points on overlapping images were matched by combining the image attitude and spatial position information with the camera focal length and the radial and tangential distortion model parameters. Lastly, Bundle Block Adjustment (BBA) was performed [32]. The key point positions and camera parameters were optimized by minimizing the reprojection error between the observed locations of key points on the images and their predicted locations [33], generating a sparse point cloud with coordinate and color information.
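The quantity minimized in the bundle adjustment can be illustrated with a short sketch. The code below is not Metashape's implementation. It assumes a pinhole camera with Brown-type radial (k1, k2) and tangential (p1, p2) distortion in an OpenCV-style parameterization, and all function names, camera values and points are hypothetical.

```python
import numpy as np


def project(points_w, R, C, f, cx, cy, k1=0.0, k2=0.0, p1=0.0, p2=0.0):
    """Project world points into pixel coordinates.

    R is the world-to-camera rotation, C the camera centre in world
    coordinates, f the focal length in pixels, (cx, cy) the principal point.
    """
    pc = (np.asarray(points_w, dtype=float) - np.asarray(C, dtype=float)) @ R.T
    x = pc[:, 0] / pc[:, 2]            # normalized image coordinates
    y = pc[:, 1] / pc[:, 2]
    r2 = x * x + y * y
    radial = 1.0 + k1 * r2 + k2 * r2 * r2
    xd = x * radial + 2.0 * p1 * x * y + p2 * (r2 + 2.0 * x * x)
    yd = y * radial + p1 * (r2 + 2.0 * y * y) + 2.0 * p2 * x * y
    return np.column_stack([f * xd + cx, f * yd + cy])


def rms_reprojection_error(observed_px, predicted_px):
    """Root mean square distance (pixels) between observed and predicted key points."""
    d = np.asarray(observed_px, dtype=float) - np.asarray(predicted_px, dtype=float)
    return float(np.sqrt(np.mean(np.sum(d ** 2, axis=1))))


# Hypothetical camera at the origin looking along +Z, no distortion.
points = [[0.0, 0.0, 10.0], [1.0, 0.0, 10.0]]
predicted = project(points, np.eye(3), np.zeros(3), f=1000.0, cx=2736.0, cy=1824.0)

# Hypothetical observations: the first key point is measured 0.5 px away.
observed = predicted + np.array([[0.3, 0.4], [0.0, 0.0]])
rms = rms_reprojection_error(observed, predicted)
print(predicted)
print(f"RMS reprojection error: {rms:.4f} px")
```

A bundle adjustment would vary the camera parameters and point coordinates to drive this error down over all images simultaneously; the sketch only evaluates it for fixed values.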

The MVS algorithm first iteratively propagated and filtered the sparse point cloud to generate the dense point cloud [34]. Second, the dense point cloud was segmented into blocks, and a triangulated irregular mesh was constructed for each block. Subsequently, an untextured (white) model was obtained from the mesh, and the optimal texture for each triangle was automatically selected and mapped onto the triangulated network. Lastly, a 3D scene in the same coordinate system as the dense point cloud was obtained.

3.3. Accuracy Assessment Methods

3.3.1. 3D Scene Absolute Accuracy Assessment

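To analyze the absolute accuracy of the 3D scenes from the different datasets, the deviation between the model value and the measured value of each checkpoint was determined. The mean error ($ME$) and Root Mean Square Error ($RMSE$) were adopted to quantify the plane accuracy and elevation accuracy. They were calculated as:

$$ME = \frac{1}{n}\sum_{i=1}^{n}\left(V_i - \hat{V}_i\right)$$

$$RMSE = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(V_i - \hat{V}_i\right)^2}$$

$$RMSE_{XY} = \sqrt{RMSE_X^2 + RMSE_Y^2}, \qquad RMSE_{XYZ} = \sqrt{RMSE_X^2 + RMSE_Y^2 + RMSE_Z^2}$$

where $n$ denotes the number of checkpoints; $V_i$ represents the image solution value of checkpoint $i$ in the respective direction; $\hat{V}_i$ denotes the measured value of checkpoint $i$ in the respective direction; and $RMSE_X$, $RMSE_Y$, $RMSE_Z$ are the $RMSE$ of each direction of the checkpoints.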
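As an illustration of how checkpoint statistics of this kind are commonly computed, the following is a minimal sketch. It assumes that the plane and 3D errors are obtained by combining the per-axis RMSE values in quadrature, which is the usual convention but is an assumption here. The function name and the checkpoint coordinates are hypothetical.

```python
import numpy as np


def checkpoint_errors(model_xyz, measured_xyz):
    """Per-axis mean error and RMSE, plus plane and 3D RMSE, for n checkpoints."""
    d = np.asarray(model_xyz, dtype=float) - np.asarray(measured_xyz, dtype=float)
    me = d.mean(axis=0)                        # signed mean error per axis
    rmse = np.sqrt((d ** 2).mean(axis=0))      # RMSE per axis (X, Y, Z)
    return {
        "ME": me,
        "RMSE": rmse,
        "RMSE_plane": float(np.hypot(rmse[0], rmse[1])),
        "RMSE_3D": float(np.sqrt((rmse ** 2).sum())),
    }


# Hypothetical RTK-measured checkpoints (m) and offsets of the model values (m).
measured = np.array([[0.0, 0.0, 100.0], [10.0, 0.0, 101.0], [0.0, 10.0, 102.0]])
offsets = np.array([[0.03, 0.04, 0.10], [-0.03, 0.04, -0.10], [0.03, -0.04, 0.10]])
model = measured + offsets

errors = checkpoint_errors(model, measured)
print(errors["RMSE"], errors["RMSE_plane"], errors["RMSE_3D"])
```

Reporting the signed mean error alongside the RMSE helps distinguish a systematic offset (for example, a vertical shift of the whole model) from random scatter.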

3.3.2. Internal and External Precision Assessment

The accuracy of the terrain 3D scene constructed by SfM-MVS technology was analyzed in terms of internal and external precision [30], which revealed the differences that led to the sources of errors in 3D scenes and the mechanisms of their transmission features. In this study, the internal precision was the error generated by the repeated observation data in the SfM-MVS technology, while the external precision was the error caused by the change of the number and position of the oblique images.

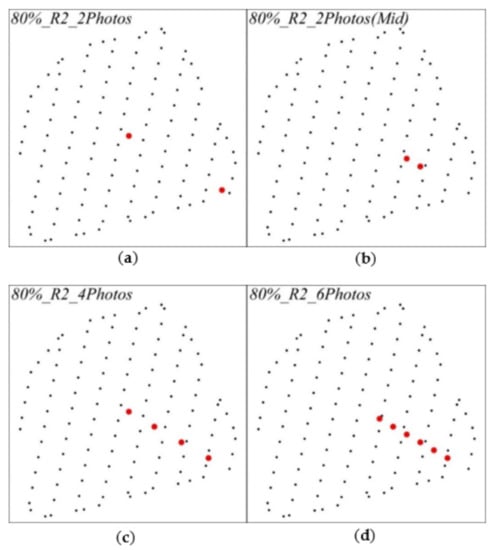

To be specific, the internal precision between pairs of repeated observations was compared, while the dataset type remained unchanged, as follows: , , for the orthophoto datasets, , , , for the oblique imaging-assisted datasets. The external precision complied with the dataset to compare the numbers and location schemes of four different oblique imaging datasets (Figure 5): (1) two images in the end or middle of the oblique flight route, i.e., . (2) Four images were selected at equal intervals, i.e., . (3) Six consecutive images were selected in the middle, i.e., .

Figure 5.

4 different oblique imaging numbers and location schemes, (a) 2 images in the end of the oblique flight route, (b) 2 images in the middle of the oblique flight route, (c) 4 images at equal intervals of the oblique flight route, (d) 6 consecutive images in the middle of the oblique flight route. (Black points are orthophoto waypoints, red points are oblique imaging waypoints).

Furthermore, before the quantitative analysis of the internal and external precision of the repeated observation datasets, the differences between the checkpoint model values in the different 3D scenes were computed, and the RMSE was calculated for each axis, for the plane, and in 3D. Moreover, the DoD can visualize the variations in surface morphology between DSMs from different periods and quantify the elevation deviation [35]. Accordingly, the DoDs were computed in ArcMap, and histogram statistics were derived from the results.
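The DoD computation and its summary statistics can be sketched as follows. This is not the ArcMap workflow used in the study. It assumes two DSMs that are already co-registered on the same grid and loaded as NumPy arrays, with NaN marking no-data cells. The function names and the small example grids are hypothetical.

```python
import numpy as np


def dsm_of_difference(dsm_new, dsm_ref):
    """Cell-by-cell elevation difference between two co-registered DSMs."""
    a = np.asarray(dsm_new, dtype=float)
    b = np.asarray(dsm_ref, dtype=float)
    if a.shape != b.shape:
        raise ValueError("DSMs must share the same grid")
    return a - b


def dod_statistics(dod, bins=50):
    """Mean, mean absolute value, standard deviation and histogram of a DoD."""
    valid = dod[np.isfinite(dod)]              # drop no-data cells
    counts, edges = np.histogram(valid, bins=bins)
    return {
        "mean": float(valid.mean()),
        "mean_abs": float(np.abs(valid).mean()),
        "std": float(valid.std()),
        "counts": counts,
        "edges": edges,
    }


# Hypothetical 2 x 2 DSMs (m); one reference cell has no data.
dsm_ref = np.array([[100.0, 101.0], [102.0, np.nan]])
dsm_new = np.array([[100.1, 100.9], [102.2, 103.0]])

dod = dsm_of_difference(dsm_new, dsm_ref)
stats = dod_statistics(dod, bins=4)
print(stats["mean"], stats["mean_abs"], stats["std"])
```

The mean absolute value summarizes the size of the elevation deviation, while the standard deviation and histogram describe how concentrated the error distribution is.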

4. Results and Analysis

4.1. 3D Scene Absolute Accuracy Analysis

The 3D scene accuracy assessment results for the different datasets were analyzed using checkpoints (Table 4). The plane errors of the six datasets were of the same order of magnitude, whereas the elevation errors differed significantly. For each image data type, the error results of the repeated observations were consistent.

Table 4.

3D scenes accuracy assessment results for different datasets.

For the three repeated observations of orthophoto datasets (), the average plane error was 0.0445 m, the average 3D error was 0.3082 m, and the elevation error was 0.3049 m. For oblique imaging-assisted datasets (), the average plane error was 0.0339 m, the average 3D error was 0.1005 m, the elevation error reached 0.0942 m, and the plane and elevation errors were found to be within centimeters. In the orthophoto datasets (), the elevation accuracy could only reach the decimeters. However, by adding the oblique images (), in the three repeated observations, only the last () elevation error reached the decimeters, whereas the others (, ) were within centimeters. The overall elevation accuracy was improved by one order of magnitude.

Both types of imaging datasets achieved centimeter-level plane accuracy for complex mountainous terrain. Adding a small number of oblique images improved the overall elevation accuracy, which reduces the fieldwork required and significantly improves work efficiency and safety.

4.2. Internal Precision Analysis

To analyze the effect of UAV and SfM-MVS technology on the internal accuracy of repeated observations, 3D scene checkpoint model values and DoD were analyzed for repeated observations under the identical data type (Table 5).

Table 5.

The difference results of 3D scene checkpoint model values of different datasets under the identical data type.

Compared with the repeated observation results under the orthophoto datasets (), which achieved the average plane error of 0.0405 m and the average 3D error of 0.1035 m, the oblique imaging-assisted datasets () had a mean plane error of 0.0417 m and an average 3D error of 0.0693 m, for which the elevation deviation of repeated observations was smaller.

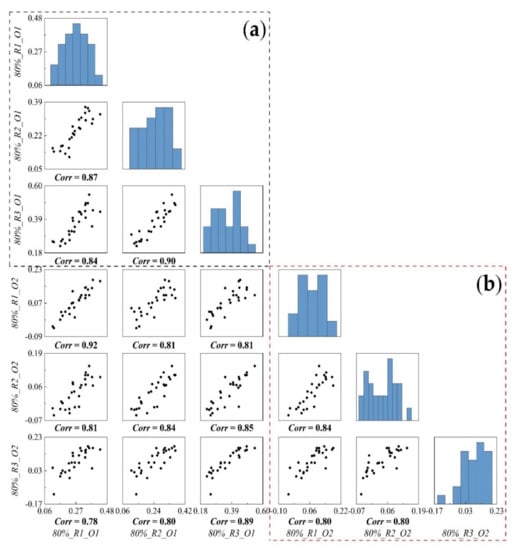

Because the elevation differed markedly between datasets, the elevation error fully reflects the stability of the UAV and SfM-MVS technology for repeated observations. The correlation between repeated observations of orthophoto datasets () (Figure 6a) exceeded 0.84, the correlation between oblique imaging-assisted datasets () (Figure 6b) exceeded 0.80, and the correlation between was 0.92, which revealed that the elevation error between repeated observations of the P4R UAV for the 3D reconstruction of terrain based on SfM-MVS technology was stable.

Figure 6.

The elevation error correlation results of different datasets under repeated observations, Corr is the correlation coefficient, (a) represents the orthophoto datasets, (b) represents the oblique imaging datasets.
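The correlation between the checkpoint elevation errors of two repeated observations can be computed as sketched below. The text does not state which coefficient was used, so the Pearson correlation coefficient is assumed here. The function name and error values are hypothetical.

```python
import numpy as np


def elevation_error_correlation(err_a, err_b):
    """Pearson correlation between checkpoint elevation errors of two observations."""
    a = np.asarray(err_a, dtype=float)
    b = np.asarray(err_b, dtype=float)
    if a.shape != b.shape or a.size < 2:
        raise ValueError("need two equally sized error vectors with at least 2 values")
    return float(np.corrcoef(a, b)[0, 1])


# Hypothetical elevation errors (m) at the same checkpoints in two observations.
errors_first = [0.10, 0.20, 0.30, 0.25]
errors_second = [0.12, 0.18, 0.33, 0.24]

corr = elevation_error_correlation(errors_first, errors_second)
print(f"Corr = {corr:.2f}")
```

A coefficient close to 1 indicates that the same checkpoints carry similar elevation errors in both observations, which is the sense in which the repeated reconstructions are described as stable.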

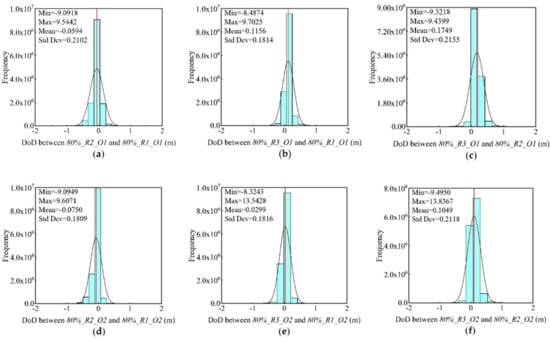

In addition, according to the DoD results of different datasets under repeated observations (Figure 7, Table 6), the mean absolute value of error between DSMs of orthophoto datasets () was 0.13 m and the mean standard deviation reached 0.2024 (Figure 7a–c). For oblique imaging-assisted datasets (), the mean absolute value was 0.07 m, and the mean standard deviation was 0.1914 (Figure 7d–f). Combined with the standard deviation and normal distribution curves (Figure 7), no significant difference was reported in the dispersion of the internal precision error of repeated observations between the two imaging data types. However, assisting the oblique images could reduce the error, and the internal precision of repeated observation was stable.

Figure 7.

DoD results of different datasets under repeated observations. Histograms (a–c) represent the orthophoto datasets results. Histograms (d–f) represent the oblique imaging datasets.

Table 6.

Statistics of DoD internal precision results of different datasets under repeated observations.

4.3. External Precision Analysis

Before analyzing the effect of oblique imaging-assisted datasets on external precision, the checkpoint errors of the 3D scenes for the 4 different oblique image number and position schemes were analyzed (Table 7). The plane error of the 4 schemes was better than 0.04 m, reaching the centimeter level, and the elevation error was better than 0.14 m. Compared with the results of the orthophoto datasets (), adding 2 oblique images reduced the elevation error by a factor of 2.28 (from 0.3049 m to 0.1338 m). A few oblique images could thus effectively improve the elevation precision. Compared with the results for 2 oblique images, the elevation error increased to 0.1385 m when 4 oblique images were added, indicating that extracting oblique images at equal intervals could not effectively reduce the elevation error.

Table 7.

3D scenes accuracy assessment results of 4 different oblique imaging numbers and position schemes.

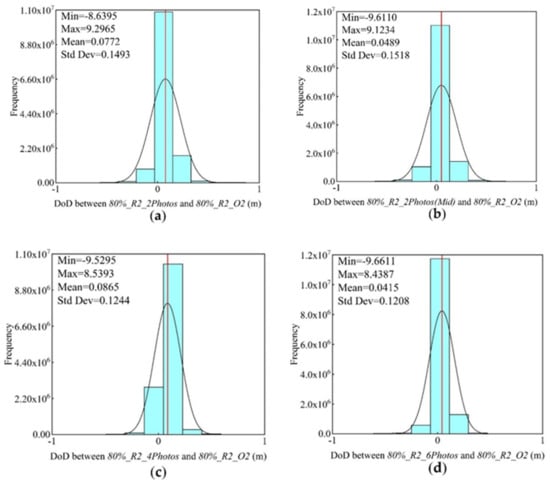

Using DSM as a reference, the DoD results of different schemes (Figure 8, Table 8) revealed that the mean absolute values of elevation deviations between different datasets ranged from 0.0415 m to 0.0772 m, the standard deviations ranged from 0.1208 to 0.1518, and the number of oblique images gradually decreased from 6 to 2. When 2 oblique images were added, the average absolute value was 0.0772 m. When the positions of the 2 images were changed, the average absolute value was 0.0489 m, and the absolute value difference was insignificant, whereas the standard deviation increased to 0.1518, and the error distribution was relatively discrete. When 4 oblique images were added, the average absolute value was 0.0865 m. The scheme exerted the worst effect on elevation optimization. The average absolute values of 6 and 2 oblique images were similar, and the elevation error was the smallest. For the 3D reconstruction of a complex mountain environment, the elevation accuracy could be optimized by adding 2 oblique images located in the middle of the oblique route, which could also meet the precision requirements of a correlation analysis.

Figure 8.

DoD results of different oblique imaging numbers and location schemes. Histogram (a) represents the result of 2 images in the end of the oblique flight route, histogram (b) represents the result of 2 images in the middle of the oblique flight route, histogram (c) represents the result of 4 images at equal intervals of the oblique flight route, histogram (d) represents the result of 6 consecutive images in the middle of the oblique flight route.

Table 8.

Statistics of DoD external precision results of different oblique imaging numbers and location schemes.

5. Discussion

At present, most studies in various fields focus on the measurement accuracy of different types of UAVs under GCP constraints. The measurement accuracy is largely dependent on GCPs, whereas the possibility of RTK UAV measurement without GCPs is commonly overlooked. Thus, this study employed a repeated observation method in which ortho-view imaging is assisted by a small number of oblique-view images, and analyzed the measurement accuracy of the RTK UAV without GCPs. To conduct a targeted analysis, a typical pit-rim landform with significant undulation at the southern edge of Lufeng Dinosaur Valley was adopted as the test area for a comparative study. Based on the elevation deviations and histogram statistics obtained from checkpoint errors and DoD, the feasibility of optimizing the accuracy of repeated 3D measurements was assessed for the various datasets in terms of internal and external precision.

As indicated by the checkpoint error analysis, the plane error of the 3D scenes for both types of datasets reached the centimeter level. The RTK UAV effectively improved the plane accuracy, and the effect was significant for complex mountainous terrain. However, the elevation values of the two imaging types were significantly different, and the results of the oblique imaging-assisted datasets () were better than those of the orthophoto datasets (). Moreover, there was a vertical offset between the DSMs obtained by repeated observations, which was partially attributable to the change of the estimated focal length () in self-calibration. Table 9 lists the statistical results of the focal length estimates for different datasets.

Table 9.

The results of focal length estimates for different datasets.

As shown in Table 9, the repeated observations of the orthophoto datasets () yielded the same camera focal length estimate (3707.94 pix), whereas the estimates for the oblique imaging-assisted datasets () averaged 3708.14 pix. According to the checkpoint error results, adding oblique images provided a wider range of viewing perspectives and effectively constrained the camera focal length estimate during the solving process. The estimation results of the oblique imaging-assisted datasets () were found to be more accurate.

Though the elevation deviation of the two image data types is significant, the elevation error correlation is found to be more intense between the repeated observation datasets of the respective image data type, where the internal accuracy is stable, and SfM-MVS technology exhibits a high reproducibility for the 3D scene construction of terrain and its derived results. UAVs are capable of capturing high-resolution images with a considerable amount of image information, and complete details of ground objects. Moreover, the datasets obtained using SfM-MVS technology have low network roughness and provide an optimized data perspective, which effectively reduces the size of the shadow [36]. Accordingly, SfM-MVS technology underpins an effective and quantitative change in the monitoring of different landforms, while obtaining high-precision and high-quality results. To be specific, Alfredsen et al. [37] successfully achieved feature-element extraction and an accurate calculation of river ice. Pagan et al. [38] analyzed the historical evolution of dunes and their relationship with coastal erosion. Yang et al. [39] analyzed the landslide evolution by obtaining multi-period landslide images and extracting feature elements of landslides in different periods.

By analyzing the external precision of different oblique imaging numbers and position change schemes, the elevation error tends to decrease, and the error distribution is found to be more concentrated and stable with an increase in the oblique imaging number. To further analyze the effect of the image position on the external accuracy, the distance between 2 images was determined by complying with the Position and Orientation System (POS) information, and the mean distance of the respective scheme was obtained. As impacted by the change of image position, it also represents the change of overlap on the oblique route. The overlap between images was calculated through the feature matching method [40]. The results are listed in Table 10.
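The mean spacing between consecutive oblique images can be derived from the POS positions as sketched below. The sketch assumes that the positions have already been projected to planar coordinates in meters and are ordered along the flight route. The function name and coordinates are hypothetical.

```python
import math


def mean_image_spacing(positions_xy):
    """Mean planar distance (m) between consecutive image positions."""
    if len(positions_xy) < 2:
        raise ValueError("at least two image positions are required")
    distances = [
        math.dist(p, q) for p, q in zip(positions_xy, positions_xy[1:])
    ]
    return sum(distances) / len(distances)


# Hypothetical projected POS positions (m) of three consecutive oblique images.
oblique_positions = [(0.0, 0.0), (30.0, 40.0), (60.0, 80.0)]

spacing = mean_image_spacing(oblique_positions)
print(f"Mean image spacing: {spacing:.1f} m")
```

Only the planar distance is used here; including the flight height would change the result very little for images taken at a constant altitude.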

Table 10.

The mean image spacing and overlap results under different oblique imaging numbers and position schemes.

Table 10 lists the mean image spacing and overlap results under different oblique imaging numbers and position schemes. For the better-performing schemes (two images in the middle and six consecutive images), the image spacing was less than 40 m, and the image overlap exceeded 80%. For the scheme with two images at the ends of the route, the image spacing was so large that no overlap could be determined. For the four images extracted at equal intervals, the image spacing was doubled, and the overlap was reduced to less than 67%. The image spacing therefore affects the elevation error: if the image overlap is not lower than the preset parameter, the images are more concentrated and the error is reduced. For these reasons, the data acquisition method optimizes the elevation accuracy and effectively improves the overall 3D measurement accuracy.

6. Conclusions

In this study, a DJI Phantom 4 RTK UAV was employed to collect repeated observation images of complex mountain pit-rim landforms from the ortho-view, supplemented by a small number of repeated oblique-view images. For the various imaging datasets, SfM-MVS technology was adopted to construct the 3D scenes. Checkpoint and DoD comparative analysis methods were employed to compare the different datasets and to assess the 3D measurement accuracy of the repeated observations in terms of internal and external precision. As indicated by the checkpoint comparison, with other parameters unchanged, the plane error reaches the centimeter level regardless of whether the 3D scene is built from orthophotos alone or with the assistance of oblique images, whereas the elevation error differs significantly. The average elevation error of the 3D scenes constructed from the orthophoto datasets was 0.3049 m, while that of the oblique imaging-assisted datasets was only 0.0942 m. The elevation accuracy was thus significantly improved, although the oblique images accounted for only 6.3% of the total number of images. This means that the proposed method of RTK UAV orthophotography assisted by a small amount of oblique imaging can effectively improve elevation accuracy when constructing 3D scenes of complex surface environments. This method is capable of reducing the dependence on GCPs and the field workload. Moreover, the empirical study indicates that terrain 3D reconstruction using SfM-MVS technology is highly reproducible. The elevation deviation between repeated observations of the oblique imaging-assisted datasets was smaller, and the internal precision was stable. When the number or location of oblique images was changed, the external precision was effectively improved: the elevation error and standard deviation were gradually reduced, and the error distribution became more concentrated and stable. With a smaller distance between oblique images, the elevation accuracy can be further optimized.

This study primarily analyzed repeated observations under constant flight altitude, the same overlap, as well as oblique-imaging data. However, UAVs are affected by the natural environment when collecting images, which causes blurred images and inconsistent color and thus affects the extraction of image feature points to a certain extent. The effects of different flight altitudes, overlap and different oblique imaging collection methods on the repeated observation accuracy require further analysis.

Author Contributions

R.B. performed the research and methodology, analyzed the data, and wrote the manuscript. S.G. (Shu Gan) designed the framework of the research, mastered the conceptualization. S.G. (Shu Gan) and X.Y. have given many suggestions for improving and modifying this paper. R.L. and W.L. participated in data collection and investigation. S.G. (Sha Gao) and L.H. contributed to data processing, visualization analysis. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (No. 41861054 and No. 41561083).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data are not publicly available as they involve the subsequent application of other studies.

Conflicts of Interest

The authors declare no conflict of interest.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).