Behind-The-Scenes (BTS): Wiper-Occlusion Canceling for Advanced Driver Assistance Systems in Adverse Rain Environments

Abstract

1. Introduction

- Acquisition of a real-world driving dataset recorded in adverse rainy weather with the windshield wipers in operation;

- Implementation of an optical flow-based model, fine-tuned on a synthesized dataset, that detects precise windshield-wiper-occlusion regions;

- Conception and realization of wiper-free rain images for autonomous driving datasets.

2. Related Work

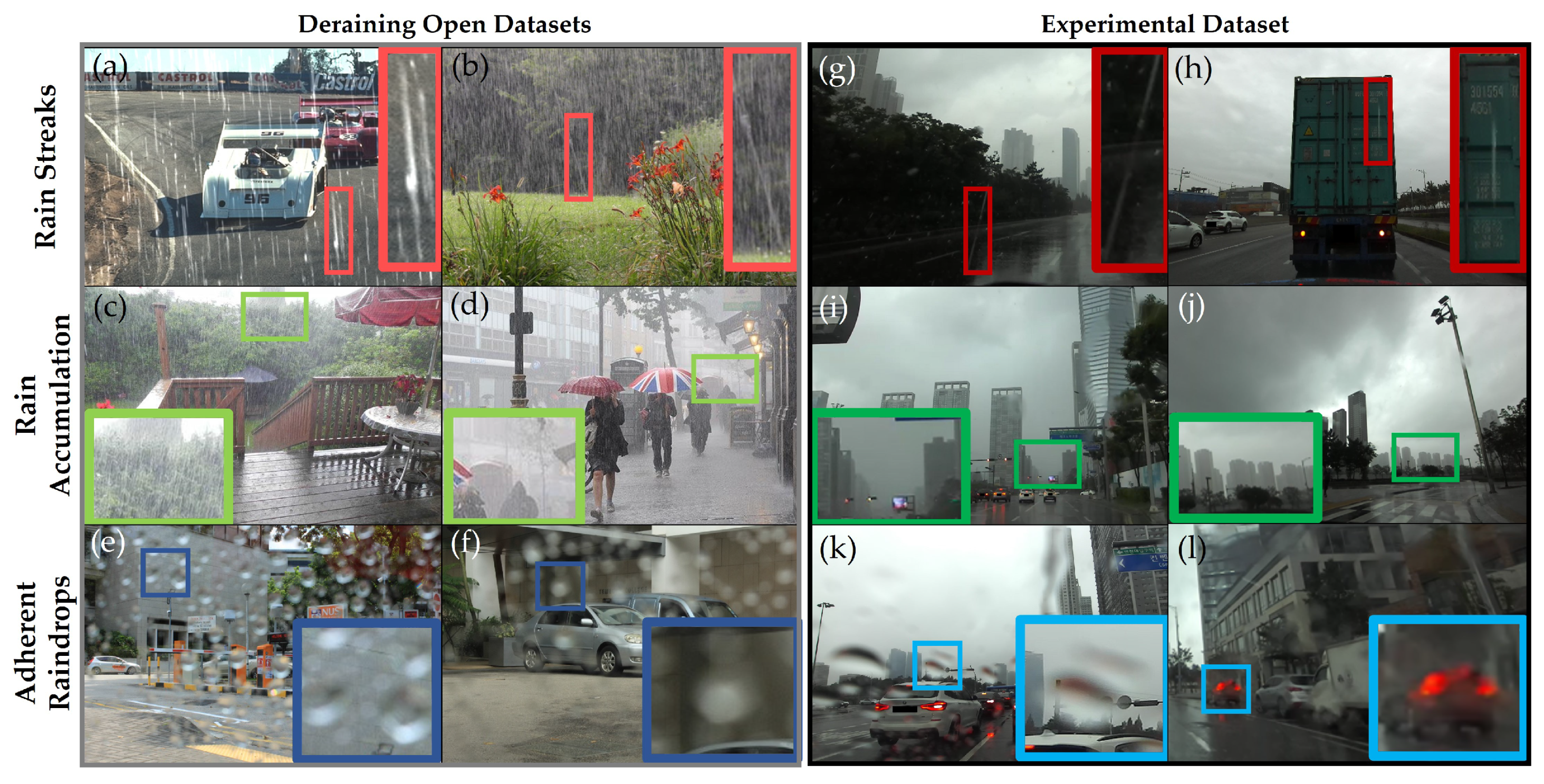

2.1. Deraining

2.1.1. Model-Driven Approaches

2.1.2. Data-Driven Approaches

2.1.3. Deraining Datasets

2.2. Driving in Rainy Weather Conditions

2.2.1. Deraining in Driving

2.2.2. Wiper Removal

2.2.3. Autonomous Driving Datasets

2.3. Optical Flow

2.3.1. Deep Learning-Based Approaches

2.3.2. Optical Flow Datasets

3. Approach

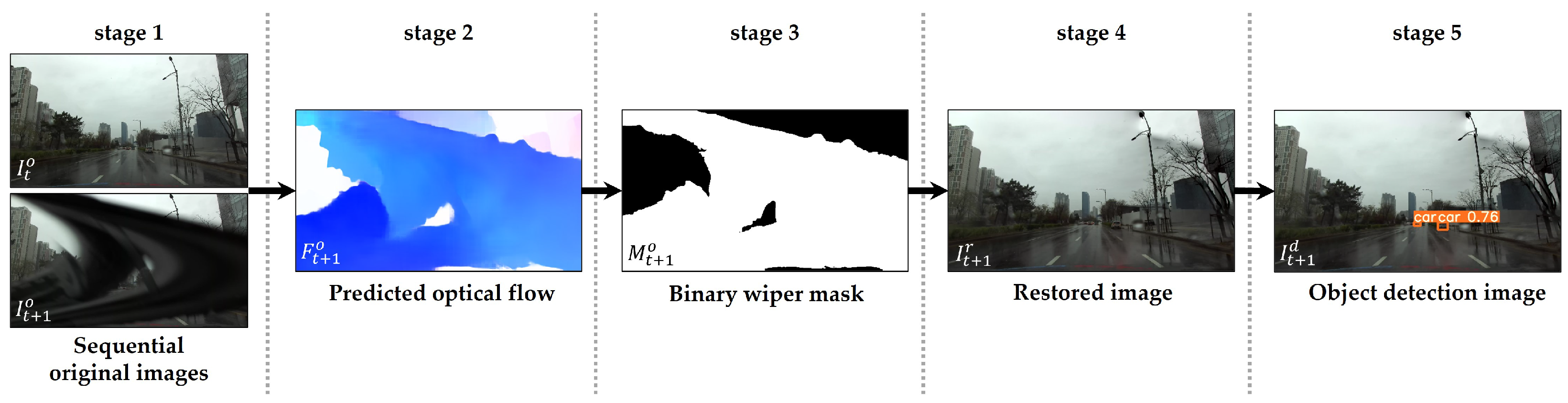

3.1. System Overview

3.2. Data Acquisition

3.2.1. Hardware Setup

3.2.2. Recording Environment

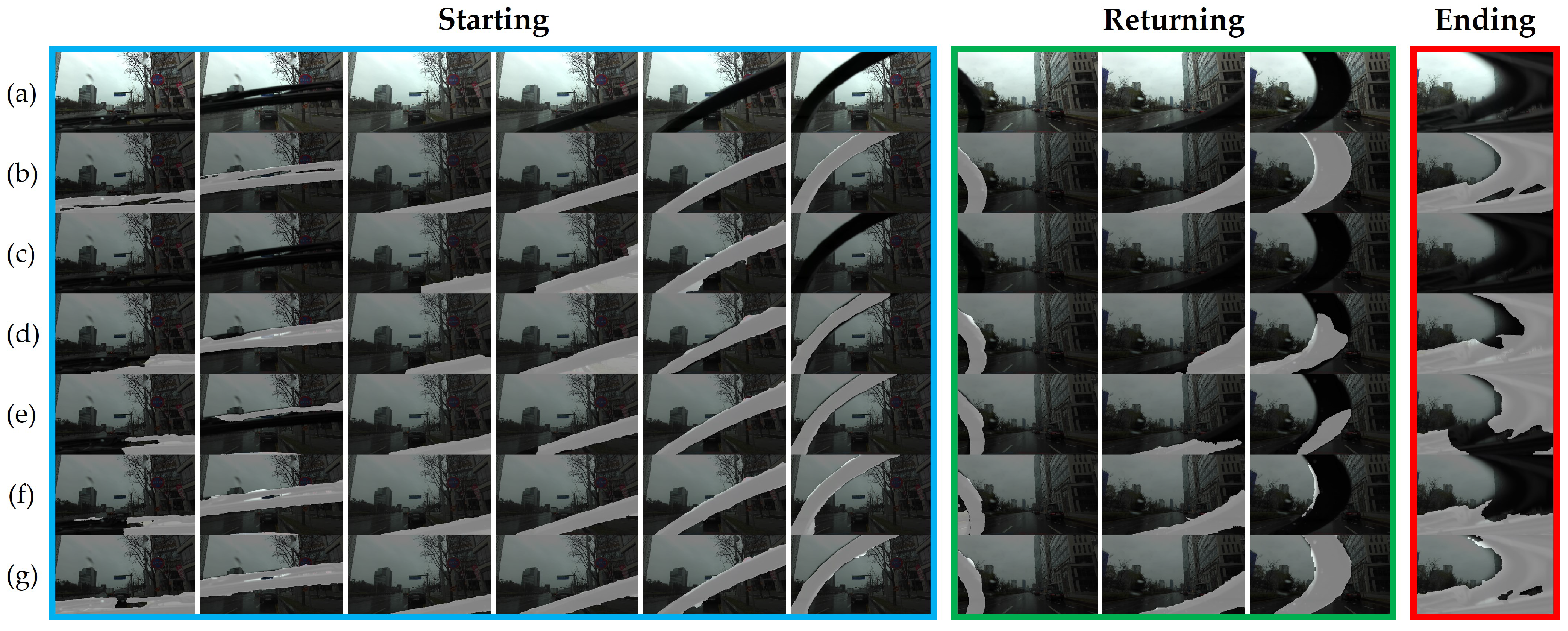

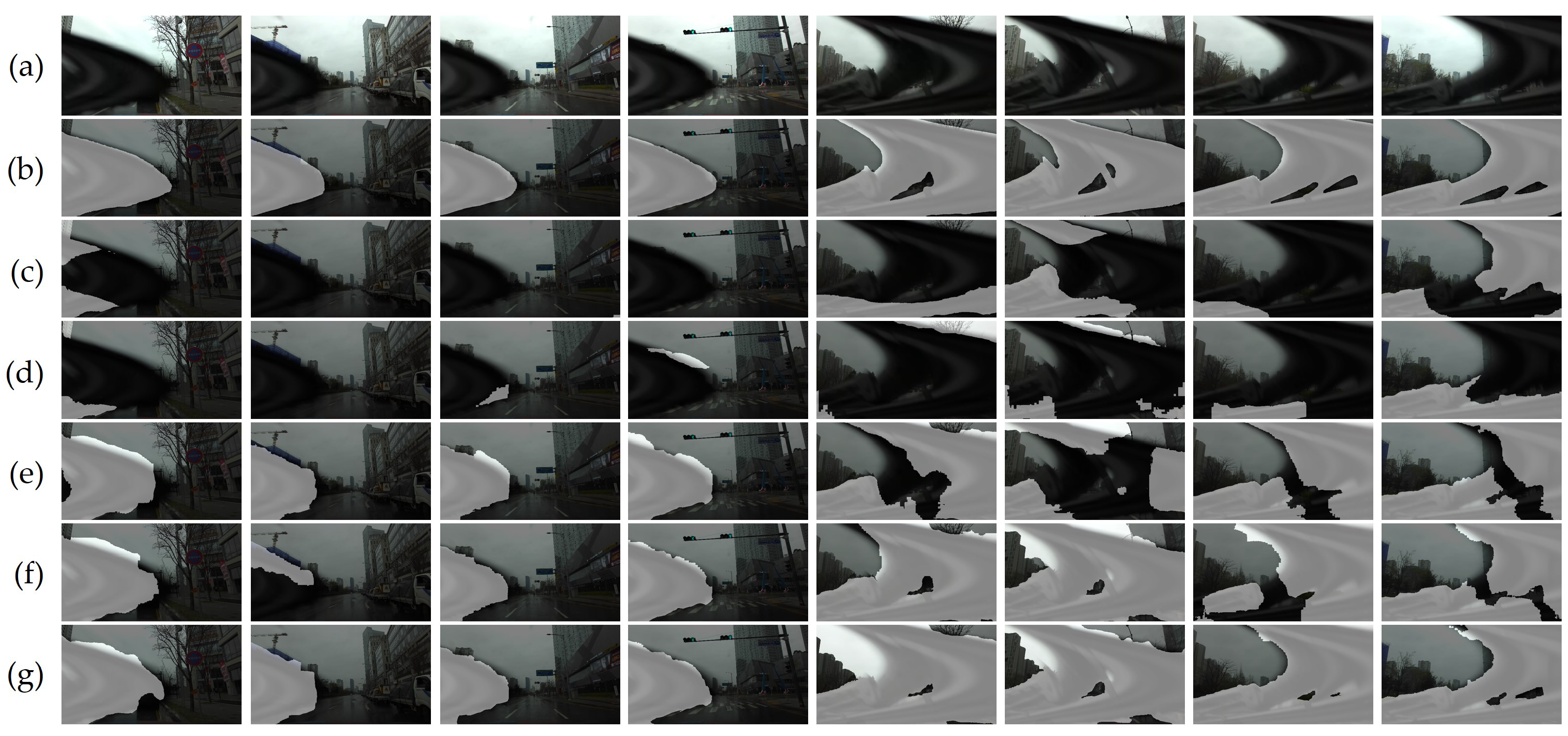

3.2.3. Hand-Crafted Ground Truth

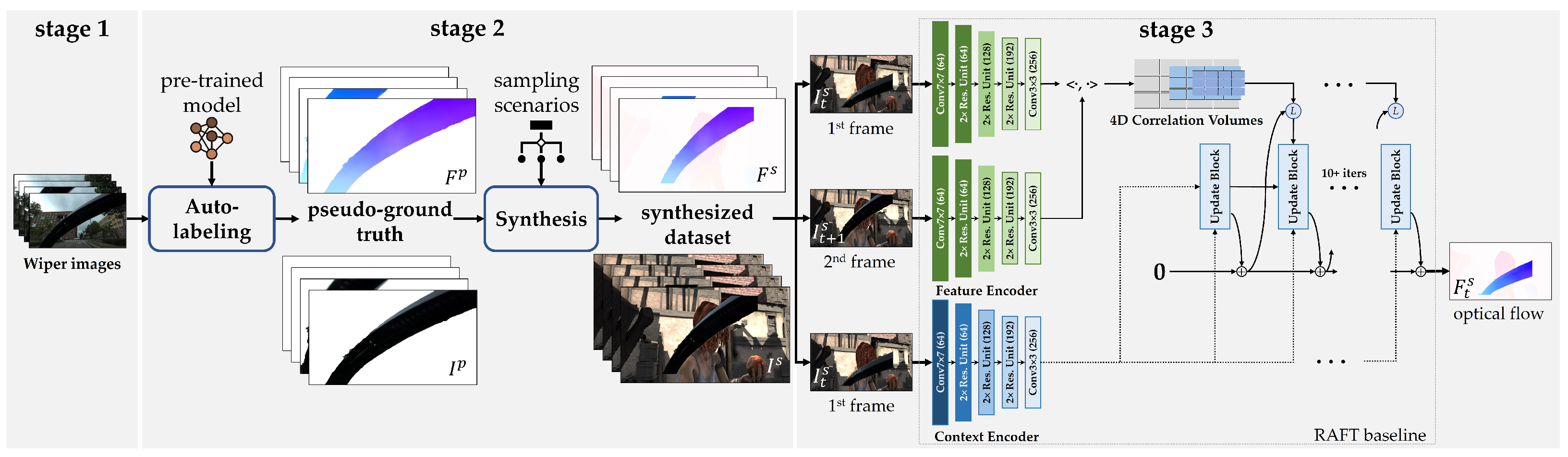

3.3. Data Synthesis

3.3.1. Pseudo-Ground Truth

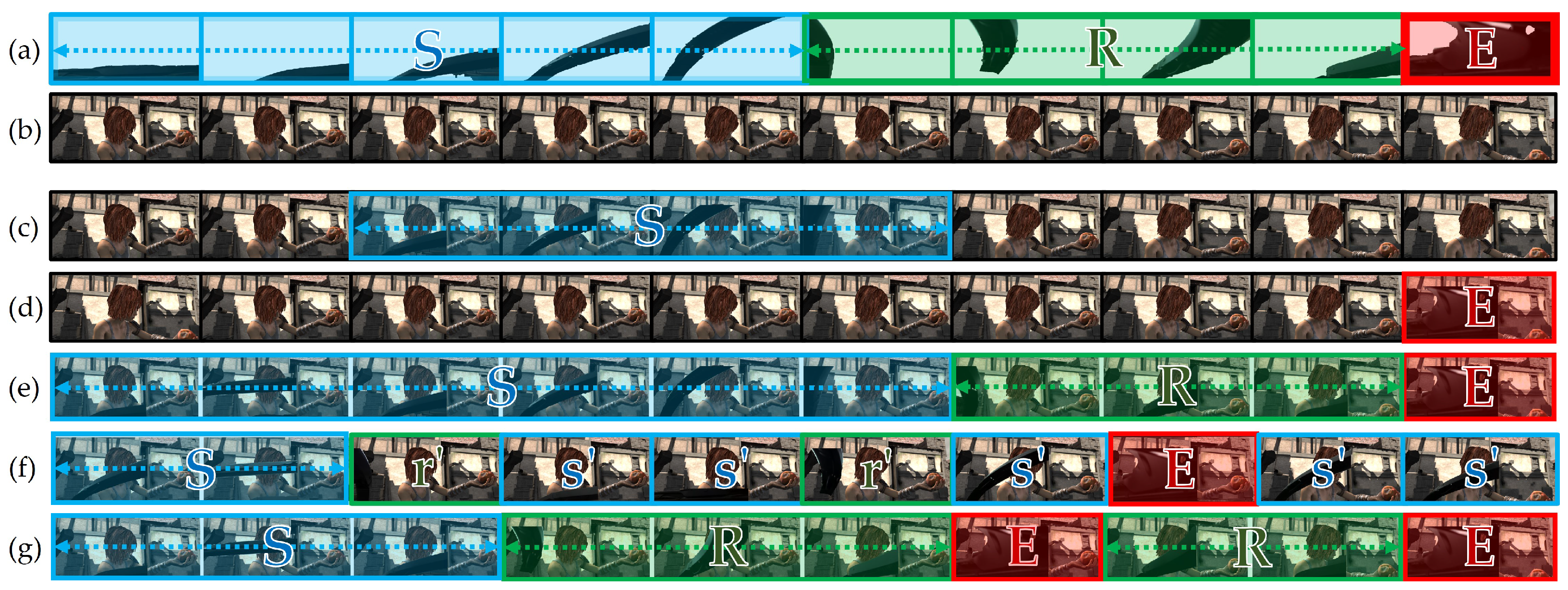
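Section 3.3 builds training data with pseudo-ground truth by synthesizing wiper occlusions. The wiper model used there is not described in this text, so the Python sketch below rests on a stated assumption: a straight, opaque blade that rotates rigidly about a pivot by `dtheta` radians between two frames. It pastes the blade into both frames, overwrites the ground-truth flow inside the frame-1 blade with the rotation's displacement, and returns the binary wiper mask as a by-product. Every name and default (`blade_alpha`, `synthesize_pair`, `length`, `half_width`, `color`) is hypothetical, not taken from the paper.

```python
import numpy as np

# Hypothetical wiper model (an assumption, not the paper's): a rigid straight
# blade rotating about a fixed pivot between frame 1 and frame 2.

def _pixel_grid(h: int, w: int):
    """Return x and y pixel coordinates as float arrays of shape (h, w)."""
    ys, xs = np.mgrid[0:h, 0:w].astype(np.float64)
    return xs, ys

def rotation_flow(h, w, pivot, dtheta):
    """Flow (H, W, 2) that rotates every pixel by dtheta radians about pivot=(x, y)."""
    xs, ys = _pixel_grid(h, w)
    dx, dy = xs - pivot[0], ys - pivot[1]
    c, s = np.cos(dtheta), np.sin(dtheta)
    # Rotated position minus original position gives the (u, v) displacement.
    return np.stack([c * dx - s * dy - dx, s * dx + c * dy - dy], axis=-1)

def blade_alpha(h, w, pivot, theta, length, half_width):
    """Hypothetical blade: an opaque straight band leaving the pivot at angle theta."""
    xs, ys = _pixel_grid(h, w)
    dx, dy = xs - pivot[0], ys - pivot[1]
    along = dx * np.cos(theta) + dy * np.sin(theta)     # distance along the blade axis
    across = -dx * np.sin(theta) + dy * np.cos(theta)   # signed distance from the axis
    inside = (along >= 0) & (along <= length) & (np.abs(across) <= half_width)
    return inside.astype(np.float64)

def synthesize_pair(frame1, frame2, flow_gt, pivot, theta, dtheta,
                    length=200.0, half_width=6.0, color=(20.0, 20.0, 20.0)):
    """Composite the blade over both frames and patch the GT flow (frame 1 -> 2)."""
    h, w = frame1.shape[:2]
    a1 = blade_alpha(h, w, pivot, theta, length, half_width)[..., None]
    a2 = blade_alpha(h, w, pivot, theta + dtheta, length, half_width)[..., None]
    color = np.asarray(color, dtype=np.float64)
    img1 = a1 * color + (1.0 - a1) * frame1              # alpha-composite frame 1
    img2 = a2 * color + (1.0 - a2) * frame2              # blade moved by dtheta in frame 2
    mask = a1[..., 0] > 0.5                              # binary wiper mask for frame 1
    flow = np.array(flow_gt, dtype=np.float64)           # copy of the background flow
    flow[mask] = rotation_flow(h, w, pivot, dtheta)[mask]
    return img1, img2, flow, mask
```

Pixels that the blade newly covers in frame 2 keep their background flow, which matches the Sintel convention of providing flow for occluded pixels as well.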

3.3.2. Synthesis Scenario

4. Experiments

4.1. Implementation Details

4.2. Quantitative Evaluation

4.3. Qualitative Evaluation

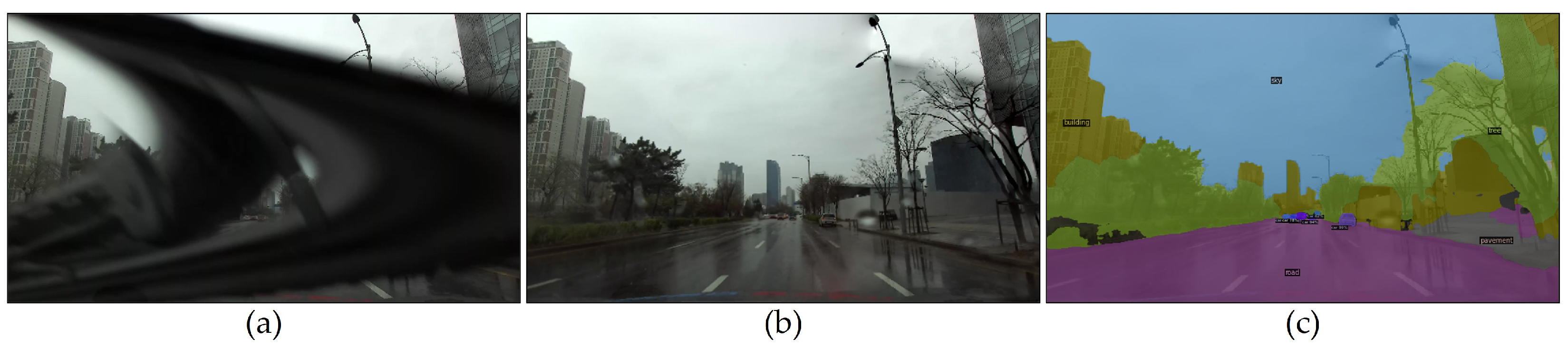

4.4. Applications

4.4.1. Image Restoration

4.4.2. Object Detection

5. Discussion

6. Conclusions

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


Training schedule for each stage (crop size in pixels).

| Schedule | Dataset | #Iterations | Batch Size | Crop Size | Learning Rate | Weight Decay |
|---|---|---|---|---|---|---|
| 1 | FlyingChairs | 100 k | 12 | 368 × 496 | 0.0004 | 0.0001 |
| 2 | FlyingThings3D | 100 k | 6 | 400 × 720 | 0.000125 | 0.0001 |
| 3 | SintelWipers | 100 k | 6 | 368 × 768 | 0.000125 | 0.00001 |

Wiper mask detection (WMD) and wiper scene detection (WSD) results by model and training data (precision, recall, and F1 in %).

| Model | Dataset | WMD SSIM (Average) | WMD SSIM (Std. Dev.) | WMD Precision | WMD Recall | WMD F1 Score | WSD Precision | WSD Recall | WSD F1 Score |
|---|---|---|---|---|---|---|---|---|---|
| raft-chairs | C | 0.833 | 0.112 | 63.4 | 13.7 | 22.5 | 74.5 | 25.6 | 38.1 |
| raft-things | C + T | 0.937 | 0.087 | 88.3 | 76.9 | 82.2 | 76.8 | 85.0 | 80.7 |
| raft-sintel | C + T + S | 0.934 | 0.094 | 92.8 | 71.6 | 80.8 | 73.6 | 84.7 | 78.8 |
| raft-kitti | C + T + S/K | 0.884 | 0.079 | 75.7 | 53.7 | 62.8 | 68.9 | 73.0 | 70.9 |
| BTS | C + T + Sw | 0.962 | 0.027 | 87.6 | 96.0 | 91.6 | 87.4 | 89.2 | 88.3 |
| BTS-kitti | C + T + Sw/K | 0.890 | 0.075 | 68.8 | 79.4 | 72.5 | 85.8 | 84.1 | 84.9 |

Datasets: FlyingChairs (C), FlyingThings3D (T), Sintel (S), KITTI (K), SintelWipers (Sw).
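The table above reports SSIM, precision, recall, and F1 for wiper-mask and wiper-scene detection, but the aggregation is not spelled out here. The Python sketch below assumes pixel-level counts for mask precision and recall (the same formula would apply to per-frame wiper/no-wiper labels for WSD) and evaluates the SSIM index over one global window, a simplification of the usual sliding Gaussian window. All helper names are illustrative. As a sanity check, `f1_from_pr(87.6, 96.0)` rounds to the 91.6 reported for BTS.

```python
from typing import Sequence, Tuple

def f1_from_pr(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (same units in and out)."""
    return 2 * precision * recall / (precision + recall) if precision + recall else 0.0

def precision_recall_f1(pred: Sequence[bool], target: Sequence[bool]) -> Tuple[float, float, float]:
    """Pixel-level precision, recall and F1 (in %) for two flattened binary masks."""
    if len(pred) != len(target):
        raise ValueError("masks must contain the same number of pixels")
    tp = sum(1 for p, t in zip(pred, target) if p and t)        # wiper predicted and present
    fp = sum(1 for p, t in zip(pred, target) if p and not t)    # wiper predicted, absent
    fn = sum(1 for p, t in zip(pred, target) if t and not p)    # wiper missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return 100 * precision, 100 * recall, f1_from_pr(100 * precision, 100 * recall)

def _mean(x: Sequence[float]) -> float:
    return sum(x) / len(x)

def _cov(a: Sequence[float], b: Sequence[float]) -> float:
    """Population covariance of two equally long sequences."""
    ma, mb = _mean(a), _mean(b)
    return sum((x - ma) * (y - mb) for x, y in zip(a, b)) / len(a)

def global_ssim(a: Sequence[float], b: Sequence[float], data_range: float = 1.0) -> float:
    """SSIM index computed over a single global window (simplified, not windowed SSIM)."""
    a, b = [float(v) for v in a], [float(v) for v in b]
    c1, c2 = (0.01 * data_range) ** 2, (0.03 * data_range) ** 2  # standard stabilizers
    ma, mb = _mean(a), _mean(b)
    num = (2 * ma * mb + c1) * (2 * _cov(a, b) + c2)
    den = (ma * ma + mb * mb + c1) * (_cov(a, a) + _cov(b, b) + c2)
    return num / den
```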

Optical flow accuracy on the Sintel and KITTI 2015 training sets (EPE in pixels; Fl-all in %).

| Model | Sintel (Train) Clean EPE | Sintel (Train) Final EPE | KITTI 2015 (Train) EPE | KITTI 2015 (Train) Fl-all |
|---|---|---|---|---|
| raft-chairs | 2.24 | 4.51 | 9.85 | 37.6 |
| raft-things | 1.46 | 2.78 | 5.00 | 17.4 |
| raft-sintel | 0.75 | 1.22 | 1.21 | 5.6 |
| raft-kitti | 4.55 | 6.15 | 0.63 | 1.5 |
| BTS | 0.93 | 1.49 | 4.37 | 13.5 |
| BTS-kitti | 5.41 | 6.68 | 0.67 | 1.7 |
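The optical flow table uses the standard end-point error (EPE, the mean Euclidean distance between predicted and ground-truth flow vectors) and the KITTI 2015 Fl-all outlier rate, where a pixel counts as an outlier if its EPE exceeds both 3 px and 5% of the ground-truth flow magnitude. A minimal NumPy sketch of both metrics, assuming flows stored as (H, W, 2) arrays; official benchmark scripts may differ in details such as per-image averaging.

```python
from typing import Optional, Tuple

import numpy as np

def epe_and_fl_all(flow_pred: np.ndarray, flow_gt: np.ndarray,
                   valid: Optional[np.ndarray] = None) -> Tuple[float, float]:
    """Return (mean EPE in px, Fl-all in %) for (H, W, 2) flow fields."""
    epe = np.linalg.norm(flow_pred - flow_gt, axis=-1)   # per-pixel end-point error
    mag = np.linalg.norm(flow_gt, axis=-1)               # ground-truth flow magnitude
    if valid is None:
        valid = np.ones(epe.shape, dtype=bool)           # evaluate every pixel
    epe, mag = epe[valid], mag[valid]
    # Outlier: EPE > 3 px AND EPE > 5% of |flow_gt| (written without division).
    outlier = (epe > 3.0) & (epe > 0.05 * mag)
    return float(epe.mean()), 100.0 * float(outlier.mean())
```

The optional `valid` mask matters for KITTI, whose ground truth is sparse; Sintel provides dense flow, so every pixel can be evaluated.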

Effect of the wiper synthesis scenario on wiper mask detection (WMD) and wiper scene detection (WSD) (precision, recall, and F1 in %).

| Proportion | Method | WMD SSIM (Average) | WMD SSIM (Std. Dev.) | WMD Precision | WMD Recall | WMD F1 | WSD Precision | WSD Recall | WSD F1 |
|---|---|---|---|---|---|---|---|---|---|
| Orig. Sintel | | 0.934 | 0.094 | 92.8 | 71.6 | 80.8 | 73.6 | 84.7 | 78.8 |
| Partial | Single seq. | 0.922 | 0.095 | 93.4 | 62.9 | 75.2 | 71.8 | 76.5 | 74.1 |
| Partial | Single end. | 0.938 | 0.070 | 92.4 | 74.1 | 82.2 | 95.2 | 76.0 | 84.5 |
| Partial | Sequential | 0.953 | 0.045 | 88.6 | 87.7 | 88.1 | 90.8 | 80.1 | 85.1 |
| Complete | Random | 0.956 | 0.032 | 87.9 | 90.5 | 89.2 | 91.3 | 82.6 | 86.7 |
| Complete | Rand. Seq. | 0.962 | 0.027 | 87.6 | 96.0 | 91.6 | 87.4 | 89.2 | 88.3 |

Models: raft-sintel trained on the original Sintel (first row); raft-things fine-tuned on Sintel synthesized with each wiper scenario (remaining rows).

Object detection on original and restored images.

| Image Type | Model for Mask Generation | Average Precision (AP, %) @ IoU = 0.5 |
|---|---|---|
| original | none | 23.30 |
| restored | raft-things | 53.26 |
| restored | BTS | 69.87 |
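The detection results are reported as average precision at an IoU threshold of 0.5. The evaluation toolkit is not named here, so the following is a generic single-class sketch in the PASCAL VOC style: score-sorted detections are greedily matched to unmatched ground-truth boxes, then the all-point interpolated area under the precision-recall curve is taken. Boxes are assumed to be `(x1, y1, x2, y2)` tuples, and the function names are illustrative.

```python
from typing import Dict, List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), with x2 > x1 and y2 > y1

def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def average_precision(dets: Sequence[Tuple[str, float, Box]],
                      gts: Dict[str, List[Box]], thr: float = 0.5) -> float:
    """Single-class AP (%) at IoU >= thr; dets are (image_id, score, box)."""
    n_gt = sum(len(boxes) for boxes in gts.values())
    matched = {img: [False] * len(boxes) for img, boxes in gts.items()}
    hits = []
    for img, _, box in sorted(dets, key=lambda d: d[1], reverse=True):
        overlaps = [iou(box, g) for g in gts.get(img, [])]
        j = max(range(len(overlaps)), key=overlaps.__getitem__, default=-1)
        if j >= 0 and overlaps[j] >= thr and not matched[img][j]:
            matched[img][j] = True   # true positive: claim this ground-truth box
            hits.append(1)
        else:
            hits.append(0)           # false positive: low IoU or duplicate detection
    precision, recall, tp = [], [], 0
    for k, hit in enumerate(hits, start=1):
        tp += hit
        precision.append(tp / k)
        recall.append(tp / n_gt if n_gt else 0.0)
    # All-point interpolation: make precision non-increasing, then sum the area.
    for k in range(len(precision) - 2, -1, -1):
        precision[k] = max(precision[k], precision[k + 1])
    ap, prev_r = 0.0, 0.0
    for p, r in zip(precision, recall):
        ap += (r - prev_r) * p
        prev_r = r
    return 100.0 * ap
```

Counting a second detection on an already matched object as a false positive is what penalizes duplicate boxes; a mask-guided restoration that removes wiper artifacts would mainly help by turning missed objects into matches.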

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Jhung, J.; Kim, S. Behind-The-Scenes (BTS): Wiper-Occlusion Canceling for Advanced Driver Assistance Systems in Adverse Rain Environments. Sensors 2021, 21, 8081. https://doi.org/10.3390/s21238081
