Wearable Assistive Robotics: A Perspective on Current Challenges and Future Trends

Abstract

1. Introduction

1.1. Importance of Wearable Assistive Robotics

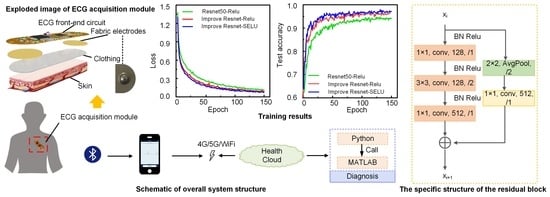

1.2. Clinical Need—Target User Groups

2. Wearable Assistive Robots Technologies

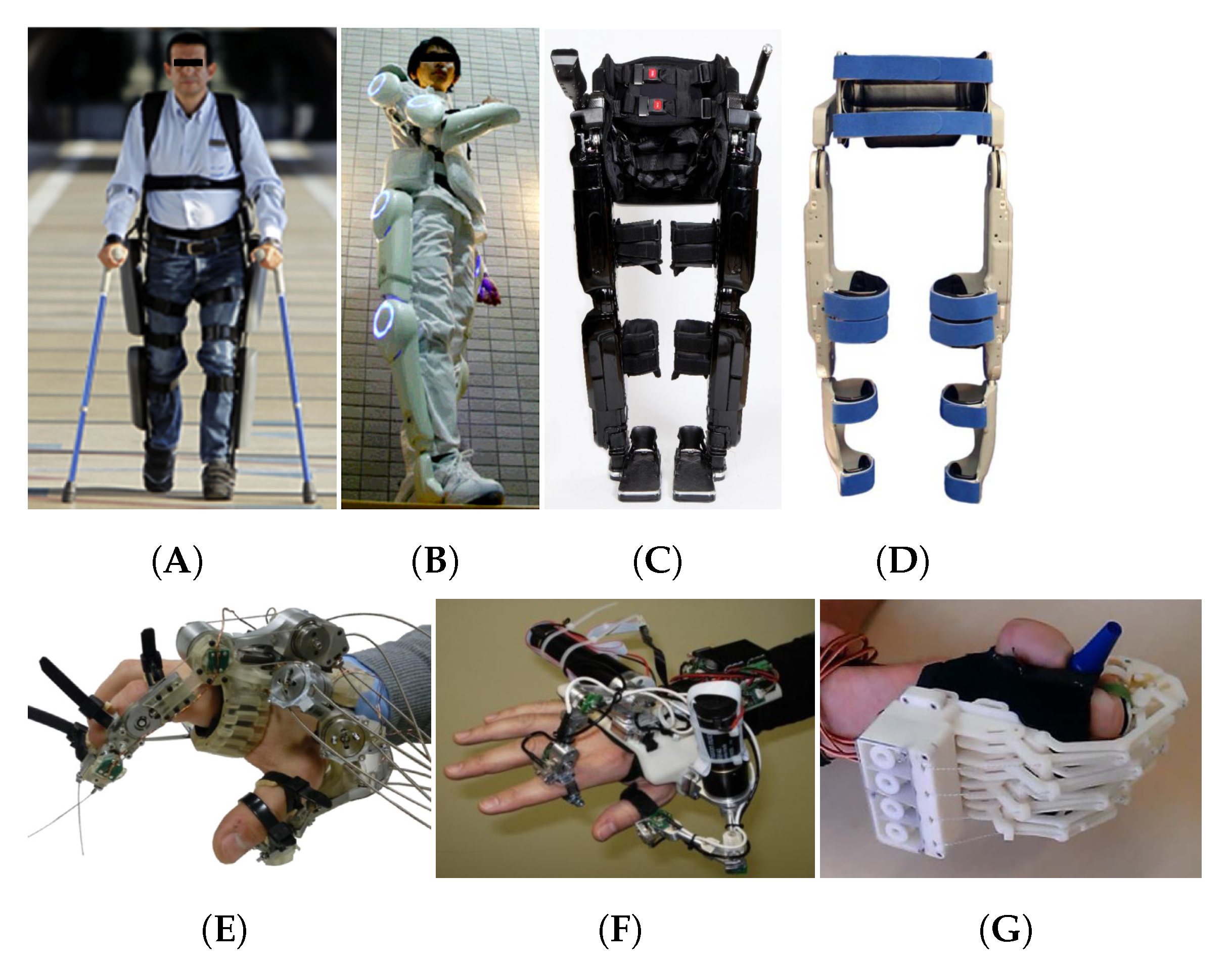

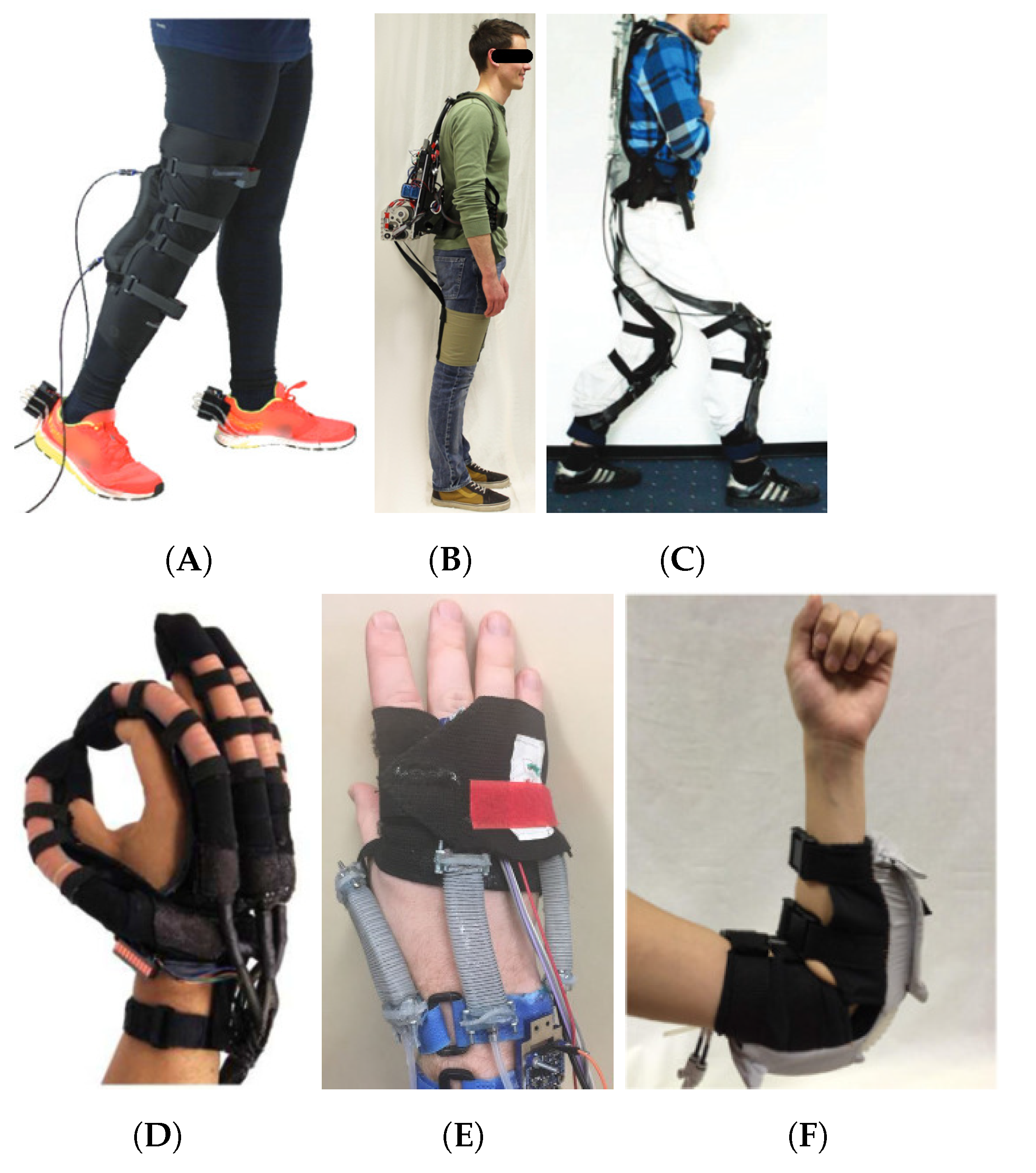

2.1. Rigid Materials in Wearable Robotics

2.2. Soft Materials in Wearable Robotics

3. Wearable Sensing Technologies

4. Machine Learning in Wearable Assistive Robots

4.1. Traditional Machine Learning Methods

4.2. Deep Learning

4.3. Sensor Fusion

5. Current Challenges in Wearable Assistive Robots

5.1. Device Adaptability to the User (Personalised Robotics)

5.2. Translational Issues from the Lab to the Real World

5.3. Wearable Sensing Technology

5.4. User Acceptability

6. Forecasting Future Trends on Wearable Assistive Robots

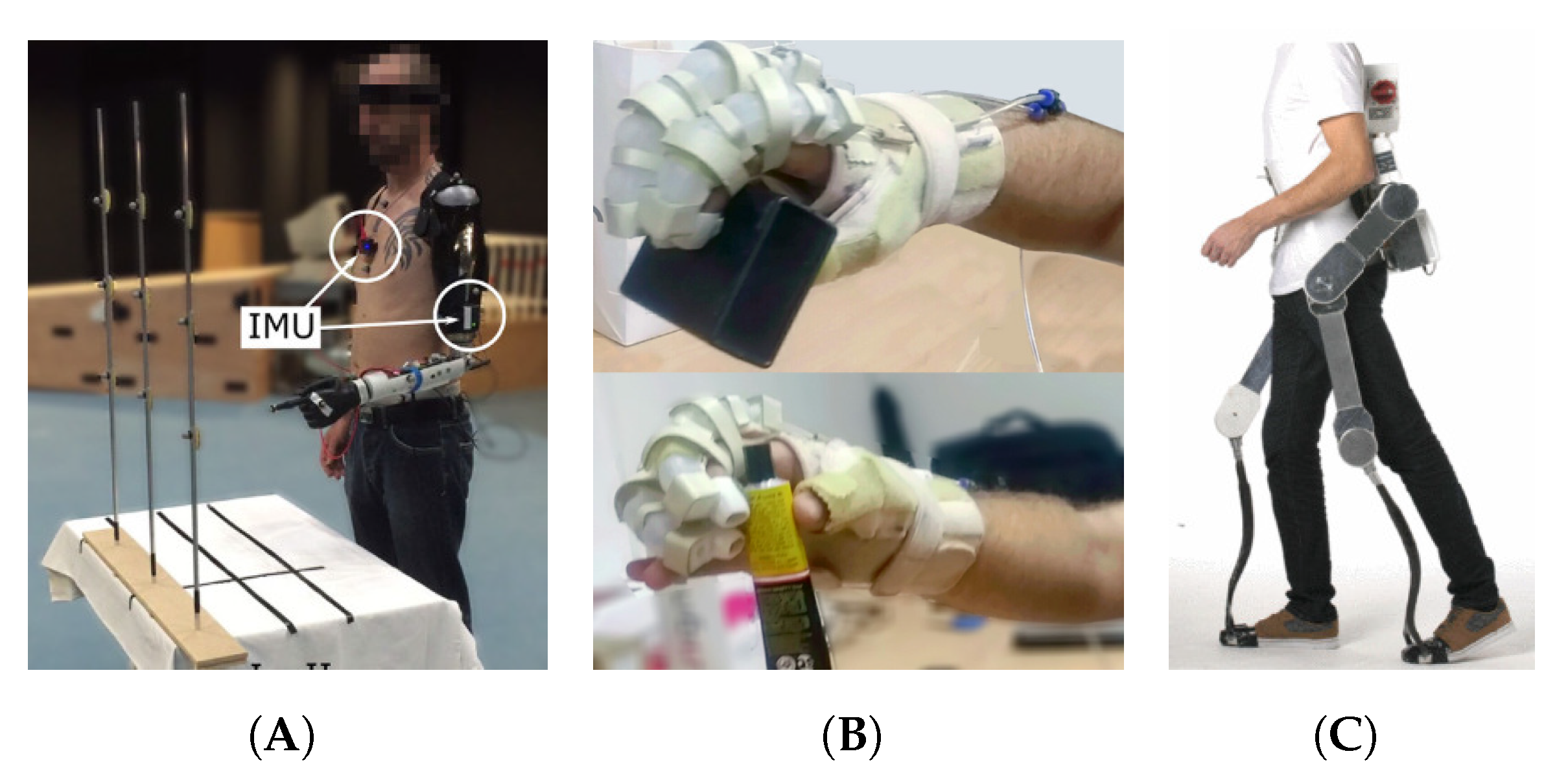

6.1. Hybrid Wearable Assistive Systems

6.2. Learning and Adaptability to the User

6.3. Datasets and Standards

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Sultan, N. Reflective thoughts on the potential and challenges of wearable technology for healthcare provision and medical education. Int. J. Inf. Manag. 2015, 35, 521–526. [Google Scholar] [CrossRef]

- Chen, T.L.; Ciocarlie, M.; Cousins, S.; Grice, P.M.; Hawkins, K.; Hsiao, K.; Kemp, C.C.; King, C.H.; Lazewatsky, D.A.; Leeper, A.E.; et al. Robots for humanity: Using assistive robotics to empower people with disabilities. IEEE Robot. Autom. Mag. 2013, 20, 30–39. [Google Scholar] [CrossRef]

- Park, S.; Jayaraman, S. Enhancing the quality of life through wearable technology. IEEE Eng. Med. Biol. Mag. 2003, 22, 41–48. [Google Scholar] [CrossRef] [PubMed]

- Lee, J.; Kim, D.; Ryoo, H.Y.; Shin, B.S. Sustainable wearables: Wearable technology for enhancing the quality of human life. Sustainability 2016, 8, 466. [Google Scholar] [CrossRef]

- Merad, M.; De Montalivet, É.; Touillet, A.; Martinet, N.; Roby-Brami, A.; Jarrassé, N. Can we achieve intuitive prosthetic elbow control based on healthy upper limb motor strategies? Front. Neurorobot. 2018, 12, 1. [Google Scholar] [CrossRef] [PubMed]

- Jiryaei, Z.; Alvar, A.A.; Bani, M.A.; Vahedi, M.; Jafarpisheh, A.S.; Razfar, N. Development and feasibility of a soft pneumatic-robotic glove to assist impaired hand function in quadriplegia patients: A pilot study. J. Bodyw. Mov. Ther. 2021, 27, 731–736. [Google Scholar] [CrossRef]

- Chung, J.; Heimgartner, R.; O’Neill, C.T.; Phipps, N.S.; Walsh, C.J. Exoboot, a soft inflatable robotic boot to assist ankle during walking: Design, characterization and preliminary tests. In Proceedings of the 2018 7th IEEE International Conference on Biomedical Robotics and Biomechatronics (Biorob), Enschede, The Netherlands, 26–29 August 2018; pp. 509–516. [Google Scholar]

- Kapsalyamov, A.; Jamwal, P.K.; Hussain, S.; Ghayesh, M.H. State of the art lower limb robotic exoskeletons for elderly assistance. IEEE Access 2019, 7, 95075–95086. [Google Scholar] [CrossRef]

- Huo, W.; Mohammed, S.; Moreno, J.C.; Amirat, Y. Lower limb wearable robots for assistance and rehabilitation: A state of the art. IEEE Syst. J. 2014, 10, 1068–1081. [Google Scholar] [CrossRef]

- Oguntosin, V.; Harwin, W.S.; Kawamura, S.; Nasuto, S.J.; Hayashi, Y. Development of a wearable assistive soft robotic device for elbow rehabilitation. In Proceedings of the 2015 IEEE International Conference on Rehabilitation Robotics (ICORR), Singapore, 11–14 August 2015; pp. 747–752. [Google Scholar]

- Rahman, M.H.; Rahman, M.J.; Cristobal, O.; Saad, M.; Kenné, J.P.; Archambault, P.S. Development of a whole arm wearable robotic exoskeleton for rehabilitation and to assist upper limb movements. Robotica 2015, 33, 19–39. [Google Scholar] [CrossRef]

- Chen, B.; Ma, H.; Qin, L.Y.; Gao, F.; Chan, K.M.; Law, S.W.; Qin, L.; Liao, W.H. Recent developments and challenges of lower extremity exoskeletons. J. Orthop. Transl. 2016, 5, 26–37. [Google Scholar] [CrossRef] [PubMed]

- Koo, S.H. Design factors and preferences in wearable soft robots for movement disabilities. Int. J. Cloth. Sci. Technol. 2018, 30, 477–495. [Google Scholar] [CrossRef]

- Lee, H.R.; Riek, L.D. Reframing assistive robots to promote successful aging. ACM Trans. Hum.-Robot Interact. (THRI) 2018, 7, 1–23. [Google Scholar] [CrossRef]

- WHO. Assistive Technology. 2018. Available online: https://www.who.int/news-room/fact-sheets/detail/assistive-technology (accessed on 31 January 2021).

- Garçon, L.; Khasnabis, C.; Walker, L.; Nakatani, Y.; Lapitan, J.; Borg, J.; Ross, A.; Velazquez Berumen, A. Medical and assistive health technology: Meeting the needs of aging populations. Gerontologist 2016, 56, S293–S302. [Google Scholar] [CrossRef] [PubMed]

- Porciuncula, F.; Roto, A.V.; Kumar, D.; Davis, I.; Roy, S.; Walsh, C.J.; Awad, L.N. Wearable movement sensors for rehabilitation: A focused review of technological and clinical advances. PM&R 2018, 10, S220–S232. [Google Scholar]

- Tapu, R.; Mocanu, B.; Zaharia, T. Wearable assistive devices for visually impaired: A state of the art survey. Pattern Recognit. Lett. 2018, 137, 37–52. [Google Scholar] [CrossRef]

- Moyle, W. The promise of technology in the future of dementia care. Nat. Rev. Neurol. 2019, 15, 353–359. [Google Scholar] [CrossRef]

- MacLachlan, M.; McVeigh, J.; Cooke, M.; Ferri, D.; Holloway, C.; Austin, V.; Javadi, D. Intersections between systems thinking and market shaping for assistive technology: The smart (Systems-Market for assistive and related technologies) thinking matrix. Int. J. Environ. Res. Public Health 2018, 15, 2627. [Google Scholar] [CrossRef]

- Tebbutt, E.; Brodmann, R.; Borg, J.; MacLachlan, M.; Khasnabis, C.; Horvath, R. Assistive products and the sustainable development goals (SDGs). Glob. Health 2016, 12, 1–6. [Google Scholar] [CrossRef]

- Layton, N.; Murphy, C.; Bell, D. From individual innovation to global impact: The Global Cooperation on Assistive Technology (GATE) innovation snapshot as a method for sharing and scaling. Disabil. Rehabil. Assist. Technol. 2018, 13, 486–491. [Google Scholar] [CrossRef]

- WHO. Priority Assistive Products List. 2016. Available online: https://apps.who.int/iris/rest/bitstreams/920804/retrieve (accessed on 31 January 2021).

- Patel, S.; Park, H.; Bonato, P.; Chan, L.; Rodgers, M. A review of wearable sensors and systems with application in rehabilitation. J. Neuroeng. Rehabil. 2012, 9, 1–17. [Google Scholar] [CrossRef]

- Moon, N.W.; Baker, P.M.; Goughnour, K. Designing wearable technologies for users with disabilities: Accessibility, usability, and connectivity factors. J. Rehabil. Assist. Technol. Eng. 2019, 6, 1–12. [Google Scholar] [CrossRef]

- Vollmer Dahlke, D.; Ory, M.G. Emerging issues of intelligent assistive technology use among people with dementia and their caregivers: A US Perspective. Front. Public Health 2020, 8, 191. [Google Scholar] [CrossRef] [PubMed]

- Zeagler, C.; Gandy, M.; Baker, P. The Assistive Wearable: Inclusive by Design. Assist. Technol. Outcomes Benefits (ATOB) 2018, 12, 11–36. [Google Scholar]

- Herr, H. Exoskeletons and orthoses: Classification, design challenges and future directions. J. Neuroeng. Rehabil. 2009, 6, 1–9. [Google Scholar] [CrossRef]

- Esquenazi, A.; Talaty, M.; Packel, A.; Saulino, M. The ReWalk powered exoskeleton to restore ambulatory function to individuals with thoracic-level motor-complete spinal cord injury. Am. J. Phys. Med. Rehabil. 2012, 91, 911–921. [Google Scholar] [CrossRef]

- Barbareschi, G.; Richards, R.; Thornton, M.; Carlson, T.; Holloway, C. Statically vs. dynamically balanced gait: Analysis of a robotic exoskeleton compared with a human. In Proceedings of the 2015 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Milan, Italy, 25–29 August 2015; pp. 6728–6731. [Google Scholar]

- Sankai, Y. HAL: Hybrid assistive limb based on cybernics. In Robotics Research; Springer: Berlin/Heidelberg, Germany, 2010; pp. 25–34. [Google Scholar]

- Birch, N.; Graham, J.; Priestley, T.; Heywood, C.; Sakel, M.; Gall, A.; Nunn, A.; Signal, N. Results of the first interim analysis of the RAPPER II trial in patients with spinal cord injury: Ambulation and functional exercise programs in the REX powered walking aid. J. Neuroeng. Rehabil. 2017, 14, 1–10. [Google Scholar] [CrossRef]

- Yan, T.; Cempini, M.; Oddo, C.M.; Vitiello, N. Review of assistive strategies in powered lower-limb orthoses and exoskeletons. Robot. Auton. Syst. 2015, 64, 120–136. [Google Scholar] [CrossRef]

- Marconi, D.; Baldoni, A.; McKinney, Z.; Cempini, M.; Crea, S.; Vitiello, N. A novel hand exoskeleton with series elastic actuation for modulated torque transfer. Mechatronics 2019, 61, 69–82. [Google Scholar] [CrossRef]

- Iqbal, J.; Khan, H.; Tsagarakis, N.G.; Caldwell, D.G. A novel exoskeleton robotic system for hand rehabilitation-conceptualization to prototyping. Biocybern. Biomed. Eng. 2014, 34, 79–89. [Google Scholar] [CrossRef]

- Bianchi, M.; Cempini, M.; Conti, R.; Meli, E.; Ridolfi, A.; Vitiello, N.; Allotta, B. Design of a series elastic transmission for hand exoskeletons. Mechatronics 2018, 51, 8–18. [Google Scholar] [CrossRef]

- Brokaw, E.B.; Holley, R.J.; Lum, P.S. Hand spring operated movement enhancer (HandSOME) device for hand rehabilitation after stroke. In Proceedings of the 2010 Annual International Conference of the IEEE Engineering in Medicine and Biology, Buenos Aires, Argentina, 31 August–4 September 2010; pp. 5867–5870. [Google Scholar]

- Chiri, A.; Vitiello, N.; Giovacchini, F.; Roccella, S.; Vecchi, F.; Carrozza, M.C. Mechatronic design and characterization of the index finger module of a hand exoskeleton for post-stroke rehabilitation. IEEE/ASmE Trans. Mechatron. 2011, 17, 884–894. [Google Scholar] [CrossRef]

- Gasser, B.W.; Bennett, D.A.; Durrough, C.M.; Goldfarb, M. Design and preliminary assessment of vanderbilt hand exoskeleton. In Proceedings of the 2017 International Conference on Rehabilitation Robotics (ICORR), London, UK, 17–20 July 2017; pp. 1537–1542. [Google Scholar]

- Majidi, C. Soft robotics: A perspective—Current trends and prospects for the future. Soft Robot. 2014, 1, 5–11. [Google Scholar] [CrossRef]

- Thalman, C.; Artemiadis, P. A review of soft wearable robots that provide active assistance: Trends, common actuation methods, fabrication, and applications. Wearable Technol. 2020, 1, e3. [Google Scholar] [CrossRef]

- Sridar, S.; Qiao, Z.; Muthukrishnan, N.; Zhang, W.; Polygerinos, P. A soft-inflatable exosuit for knee rehabilitation: Assisting swing phase during walking. Front. Robot. AI 2018, 5, 44. [Google Scholar] [CrossRef]

- Park, Y.L.; Chen, B.R.; Young, D.; Stirling, L.; Wood, R.J.; Goldfield, E.C.; Nagpal, R. Design and control of a bio-inspired soft wearable robotic device for ankle-foot rehabilitation. Bioinspir. Biomim. 2014, 9, 016007. [Google Scholar] [CrossRef]

- Asbeck, A.T.; Schmidt, K.; Walsh, C.J. Soft exosuit for hip assistance. Robot. Auton. Syst. 2015, 73, 102–110. [Google Scholar] [CrossRef]

- Asbeck, A.T.; De Rossi, S.M.; Holt, K.G.; Walsh, C.J. A biologically inspired soft exosuit for walking assistance. Int. J. Robot. Res. 2015, 34, 744–762. [Google Scholar] [CrossRef]

- Khomami, A.M.; Najafi, F. A survey on soft lower limb cable-driven wearable robots without rigid links and joints. Robot. Auton. Syst. 2021, 144, 103846. [Google Scholar] [CrossRef]

- Polygerinos, P.; Wang, Z.; Galloway, K.C.; Wood, R.J.; Walsh, C.J. Soft robotic glove for combined assistance and at-home rehabilitation. Robot. Auton. Syst. 2015, 73, 135–143. [Google Scholar] [CrossRef]

- Skorina, E.H.; Luo, M.; Onal, C.D. A soft robotic wearable wrist device for kinesthetic haptic feedback. Front. Robot. AI 2018, 5, 83. [Google Scholar] [CrossRef]

- Koh, T.H.; Cheng, N.; Yap, H.K.; Yeow, C.H. Design of a soft robotic elbow sleeve with passive and intent-controlled actuation. Front. Neurosci. 2017, 11, 597. [Google Scholar] [CrossRef]

- Ge, L.; Chen, F.; Wang, D.; Zhang, Y.; Han, D.; Wang, T.; Gu, G. Design, modeling, and evaluation of fabric-based pneumatic actuators for soft wearable assistive gloves. Soft Robot. 2020, 7, 583–596. [Google Scholar] [CrossRef]

- Gerez, L.; Chen, J.; Liarokapis, M. On the development of adaptive, tendon-driven, wearable exo-gloves for grasping capabilities enhancement. IEEE Robot. Autom. Lett. 2019, 4, 422–429. [Google Scholar] [CrossRef]

- Yun, S.S.; Kang, B.B.; Cho, K.J. Exo-glove PM: An easily customizable modularized pneumatic assistive glove. IEEE Robot. Autom. Lett. 2017, 2, 1725–1732. [Google Scholar] [CrossRef]

- Hadi, A.; Alipour, K.; Kazeminasab, S.; Elahinia, M. ASR glove: A wearable glove for hand assistance and rehabilitation using shape memory alloys. J. Intell. Mater. Syst. Struct. 2018, 29, 1575–1585. [Google Scholar] [CrossRef]

- Valdastri, P.; Rossi, S.; Menciassi, A.; Lionetti, V.; Bernini, F.; Recchia, F.A.; Dario, P. An implantable ZigBee ready telemetric platform for monitoring of physiological parameters. Sens. Actuators A Phys. 2008, 142, 369–378. [Google Scholar] [CrossRef]

- Malhi, K.; Mukhopadhyay, S.C.; Schnepper, J.; Haefke, M.; Ewald, H. A zigbee-based wearable physiological parameters monitoring system. IEEE Sens. J. 2010, 12, 423–430. [Google Scholar] [CrossRef]

- Díaz, S.; Stephenson, J.B.; Labrador, M.A. Use of wearable sensor technology in gait, balance, and range of motion analysis. Appl. Sci. 2020, 10, 234. [Google Scholar] [CrossRef]

- Tan, C.; Dong, Z.; Li, Y.; Zhao, H.; Huang, X.; Zhou, Z.; Jiang, J.W.; Long, Y.Z.; Jiang, P.; Zhang, T.Y.; et al. A high performance wearable strain sensor with advanced thermal management for motion monitoring. Nat. Commun. 2020, 11, 1–10. [Google Scholar] [CrossRef] [PubMed]

- Khan, Y.; Han, D.; Ting, J.; Ahmed, M.; Nagisetty, R.; Arias, A.C. Organic multi-channel optoelectronic sensors for wearable health monitoring. IEEE Access 2019, 7, 128114–128124. [Google Scholar] [CrossRef]

- Nisar, H.; Khan, M.B.; Yi, W.T.; Voon, Y.V.; Khang, T.S. Contactless heart rate monitor for multiple persons in a video. In Proceedings of the 2016 IEEE International Conference on Consumer Electronics-Taiwan (ICCE-TW), Nantou, Taiwan, 27–29 May 2016; pp. 1–2. [Google Scholar]

- Maestre-Rendon, J.R.; Rivera-Roman, T.A.; Fernandez-Jaramillo, A.A.; Guerron Paredes, N.E.; Serrano Olmedo, J.J. A non-contact photoplethysmography technique for the estimation of heart rate via smartphone. Appl. Sci. 2020, 10, 154. [Google Scholar] [CrossRef]

- Lee, H.; Chung, H.; Ko, H.; Lee, J. Wearable multichannel photoplethysmography framework for heart rate monitoring during intensive exercise. IEEE Sens. J. 2018, 18, 2983–2993. [Google Scholar] [CrossRef]

- Yüzer, A.; Sümbül, H.; Polat, K. A novel wearable real-time sleep apnea detection system based on the acceleration sensor. IRBM 2020, 41, 39–47. [Google Scholar] [CrossRef]

- Surrel, G.; Aminifar, A.; Rincón, F.; Murali, S.; Atienza, D. Online obstructive sleep apnea detection on medical wearable sensors. IEEE Trans. Biomed. Circuits Syst. 2018, 12, 762–773. [Google Scholar] [CrossRef]

- Haji Ghassemi, N.; Hannink, J.; Roth, N.; Gaßner, H.; Marxreiter, F.; Klucken, J.; Eskofier, B.M. Turning analysis during standardized test using on-shoe wearable sensors in Parkinson’s disease. Sensors 2019, 19, 3103. [Google Scholar] [CrossRef] [PubMed]

- Mariani, B.; Jiménez, M.C.; Vingerhoets, F.J.; Aminian, K. On-shoe wearable sensors for gait and turning assessment of patients with Parkinson’s disease. IEEE Trans. Biomed. Eng. 2012, 60, 155–158. [Google Scholar] [CrossRef] [PubMed]

- Chen, B.R.; Patel, S.; Buckley, T.; Rednic, R.; McClure, D.J.; Shih, L.; Tarsy, D.; Welsh, M.; Bonato, P. A web-based system for home monitoring of patients with Parkinson’s disease using wearable sensors. IEEE Trans. Biomed. Eng. 2010, 58, 831–836. [Google Scholar] [CrossRef] [PubMed]

- Monje, M.H.; Foffani, G.; Obeso, J.; Sánchez-Ferro, Á. New sensor and wearable technologies to aid in the diagnosis and treatment monitoring of Parkinson’s disease. Annu. Rev. Biomed. Eng. 2019, 21, 111–143. [Google Scholar] [CrossRef] [PubMed]

- Silva de Lima, A.L.; Smits, T.; Darweesh, S.K.; Valenti, G.; Milosevic, M.; Pijl, M.; Baldus, H.; de Vries, N.M.; Meinders, M.J.; Bloem, B.R. Home-based monitoring of falls using wearable sensors in Parkinson’s disease. Mov. Disord. 2020, 35, 109–115. [Google Scholar] [CrossRef]

- Bae, C.W.; Toi, P.T.; Kim, B.Y.; Lee, W.I.; Lee, H.B.; Hanif, A.; Lee, E.H.; Lee, N.E. Fully stretchable capillary microfluidics-integrated nanoporous gold electrochemical sensor for wearable continuous glucose monitoring. ACS Appl. Mater. Interfaces 2019, 11, 14567–14575. [Google Scholar] [CrossRef] [PubMed]

- Bandodkar, A.J.; Wang, J. Non-invasive wearable electrochemical sensors: A review. Trends Biotechnol. 2014, 32, 363–371. [Google Scholar] [CrossRef]

- Wang, R.; Zhai, Q.; Zhao, Y.; An, T.; Gong, S.; Guo, Z.; Shi, Q.; Yong, Z.; Cheng, W. Stretchable gold fiber-based wearable electrochemical sensor toward pH monitoring. J. Mater. Chem. B 2020, 8, 3655–3660. [Google Scholar] [CrossRef] [PubMed]

- Edwards, J. Wireless sensors relay medical insight to patients and caregivers [special reports]. IEEE Signal Process. Mag. 2012, 29, 8–12. [Google Scholar] [CrossRef]

- Castillejo, P.; Martinez, J.F.; Rodriguez-Molina, J.; Cuerva, A. Integration of wearable devices in a wireless sensor network for an E-health application. IEEE Wirel. Commun. 2013, 20, 38–49. [Google Scholar] [CrossRef]

- Mukhopadhyay, S.C. Wearable sensors for human activity monitoring: A review. IEEE Sens. J. 2014, 15, 1321–1330. [Google Scholar] [CrossRef]

- Nag, A.; Mukhopadhyay, S.C.; Kosel, J. Wearable flexible sensors: A review. IEEE Sens. J. 2017, 17, 3949–3960. [Google Scholar] [CrossRef]

- Stavropoulos, T.G.; Papastergiou, A.; Mpaltadoros, L.; Nikolopoulos, S.; Kompatsiaris, I. IoT wearable sensors and devices in elderly care: A literature review. Sensors 2020, 20, 2826. [Google Scholar] [CrossRef] [PubMed]

- Chen, X.; Gong, L.; Wei, L.; Yeh, S.C.; Da Xu, L.; Zheng, L.; Zou, Z. A Wearable Hand Rehabilitation System with Soft Gloves. IEEE Trans. Ind. Inform. 2021, 17, 943–952. [Google Scholar] [CrossRef]

- Stefanou, T.; Chance, G.; Assaf, T.; Dogramadzi, S. Tactile Signatures and Hand Motion Intent Recognition for Wearable Assistive Devices. Front. Robot. AI 2019, 6, 124. [Google Scholar] [CrossRef]

- Badawi, A.A.; Al-Kabbany, A.; Shaban, H. Multimodal human activity recognition from wearable inertial sensors using machine learning. In Proceedings of the 2018 IEEE-EMBS Conference on Biomedical Engineering and Sciences (IECBES), Kuching, Malaysia, 3–6 December 2018; pp. 402–407. [Google Scholar]

- Garcia-Ceja, E.; Galván-Tejada, C.E.; Brena, R. Multi-view stacking for activity recognition with sound and accelerometer data. Inf. Fusion 2018, 40, 45–56. [Google Scholar] [CrossRef]

- Martinez-Hernandez, U.; Dehghani-Sanij, A.A. Probabilistic identification of sit-to-stand and stand-to-sit with a wearable sensor. Pattern Recognit. Lett. 2019, 118, 32–41. [Google Scholar] [CrossRef]

- Zhao, H.; Wang, Z.; Qiu, S.; Wang, J.; Xu, F.; Wang, Z.; Shen, Y. Adaptive gait detection based on foot-mounted inertial sensors and multi-sensor fusion. Inf. Fusion 2019, 52, 157–166. [Google Scholar] [CrossRef]

- Roman, R.C.; Precup, R.E.; Petriu, E.M. Hybrid data-driven fuzzy active disturbance rejection control for tower crane systems. Eur. J. Control 2021, 58, 373–387. [Google Scholar] [CrossRef]

- Zhang, H.; Liu, X.; Ji, H.; Hou, Z.; Fan, L. Multi-agent-based data-driven distributed adaptive cooperative control in urban traffic signal timing. Energies 2019, 12, 1402. [Google Scholar] [CrossRef]

- Yoon, J.G.; Lee, M.C. Design of tendon mechanism for soft wearable robotic hand and its fuzzy control using electromyogram sensor. Intell. Serv. Robot. 2021, 14, 119–128. [Google Scholar] [CrossRef]

- Parri, A.; Yuan, K.; Marconi, D.; Yan, T.; Crea, S.; Munih, M.; Lova, R.M.; Vitiello, N.; Wang, Q. Real-time hybrid locomotion mode recognition for lower limb wearable robots. IEEE/ASME Trans. Mechatron. 2017, 22, 2480–2491. [Google Scholar] [CrossRef]

- Jamwal, P.K.; Xie, S.Q.; Hussain, S.; Parsons, J.G. An adaptive wearable parallel robot for the treatment of ankle injuries. IEEE/ASME Trans. Mechatron. 2012, 19, 64–75. [Google Scholar] [CrossRef]

- Hatcher, W.G.; Yu, W. A Survey of Deep Learning: Platforms, Applications and Emerging Research Trends. IEEE Access 2018, 6, 24411–24432. [Google Scholar] [CrossRef]

- Liu, S.; Zhang, J.; Zhang, Y.; Zhu, R. A wearable motion capture device able to detect dynamic motion of human limbs. Nat. Commun. 2020, 11, 1–12. [Google Scholar] [CrossRef]

- Oniga, S.; József, S.S. Optimal Recognition Method of Human Activities Using Artificial Neural Networks. Meas. Sci. Rev. 2015, 15, 323–327. [Google Scholar] [CrossRef][Green Version]

- Yang, G.; Deng, J.; Pang, G.; Zhang, H.; Li, J.; Deng, B.; Pang, Z.; Xu, J.; Jiang, M.; Liljeberg, P.; et al. An IoT-Enabled Stroke Rehabilitation System Based on Smart Wearable Armband and Machine Learning. IEEE J. Transl. Eng. Health Med. 2018, 6, 1. [Google Scholar] [CrossRef]

- Lin, Y.; Wang, K.; Yi, W.; Lian, S. Deep learning based wearable assistive system for visually impaired people. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops, Seoul, Korea, 27 October–2 November 2019. [Google Scholar]

- Yang, J.B.; Nguyen, M.N.; San, P.P.; Li, X.L.; Krishnaswamy, S. Deep convolutional neural networks on multichannel time series for human activity recognition. In Proceedings of the IJCAI International Joint Conference on Artificial Intelligence, Buenos Aires, Argentina, 25–31 July 2015; Volume 2015, pp. 3995–4001. [Google Scholar]

- Xu, W.; Pang, Y.; Yang, Y.; Liu, Y. Human Activity Recognition Based on Convolutional Neural Network. In Proceedings of the International Conference on Pattern Recognition, Beijing, China, 20–24 August 2018; Institute of Electrical and Electronics Engineers Inc.: Piscataway, NJ, USA, 2018; Volume 2018, pp. 165–170. [Google Scholar] [CrossRef]

- Lee, S.M.; Yoon, S.M.; Cho, H. Human activity recognition from accelerometer data using Convolutional Neural Network. In Proceedings of the 2017 IEEE International Conference on Big Data and Smart Computing, BigComp 2017, Jeju, Korea, 13–16 February 2017; Institute of Electrical and Electronics Engineers Inc.: Piscataway, NJ, USA, 2017; pp. 131–134. [Google Scholar] [CrossRef]

- Suri, K.; Gupta, R. Convolutional Neural Network Array for Sign Language Recognition Using Wearable IMUs. In Proceedings of the 2019 6th International Conference on Signal Processing and Integrated Networks, SPIN 2019, Noida, India, 7–8 March 2019; Institute of Electrical and Electronics Engineers Inc.: Piscataway, NJ, USA, 2019; pp. 483–488. [Google Scholar] [CrossRef]

- Ha, S.; Choi, S. Convolutional neural networks for human activity recognition using multiple accelerometer and gyroscope sensors. In Proceedings of the International Joint Conference on Neural Networks, Vancouver, BC, Canada, 24–29 July 2016; Institute of Electrical and Electronics Engineers Inc.: Piscataway, NJ, USA, 2016; Volume 2016, pp. 381–388. [Google Scholar] [CrossRef]

- Hammerla, N.Y.; Halloran, S.; Ploetz, T. Deep, Convolutional, and Recurrent Models for Human Activity Recognition using Wearables. In Proceedings of the IJCAI International Joint Conference on Artificial Intelligence, New York, NY, USA, 9–15 July 2016; Volume 2016, pp. 1533–1540. [Google Scholar]

- Ordóñez, F.J.; Roggen, D. Deep convolutional and LSTM recurrent neural networks for multimodal wearable activity recognition. Sensors 2016, 16, 115. [Google Scholar] [CrossRef]

- Xu, C.; Chai, D.; He, J.; Zhang, X.; Duan, S. InnoHAR: A deep neural network for complex human activity recognition. IEEE Access 2019, 7, 9893–9902. [Google Scholar] [CrossRef]

- Wang, K.; He, J.; Zhang, L. Sequential Weakly Labeled Multi-Activity Recognition and Location on Wearable Sensors using Recurrent Attention Network. arXiv 2020, arXiv:2004.05768. [Google Scholar]

- Josephs, D.; Drake, C.; Heroy, A.M.; Santerre, J. sEMG Gesture Recognition with a Simple Model of Attention. arXiv 2020, arXiv:2006.03645. [Google Scholar]

- Chung, S.; Lim, J.; Noh, K.J.; Kim, G.; Jeong, H. Sensor data acquisition and multimodal sensor fusion for human activity recognition using deep learning. Sensors 2019, 19, 1716. [Google Scholar] [CrossRef] [PubMed]

- Little, K.; Pappachan, K.B.; Yang, S.; Noronha, B.; Campolo, D.; Accoto, D. Elbow Motion Trajectory Prediction Using a Multi-Modal Wearable System: A Comparative Analysis of Machine Learning Techniques. Sensors 2021, 21, 498. [Google Scholar] [CrossRef] [PubMed]

- Fung, M.L.; Chen, M.Z.Q.; Chen, Y.H. Sensor fusion: A review of methods and applications. In Proceedings of the 29th Chinese Control Furthermore, Decision Conference (CCDC), Chongqing, China, 28–30 May 2017; pp. 3853–3860. [Google Scholar] [CrossRef]

- Gravina, R.; Alinia, P.; Ghasemzadeh, H.; Fortino, G. Multi-sensor fusion in body sensor networks: State-of-the-art and research challenges. Inf. Fusion 2017, 35, 1339–1351. [Google Scholar] [CrossRef]

- Mitchell, H.B. Data Fusion: Concepts and Ideas; Springer: Berlin/Heidelberg, Germany, 2012; pp. 1–344. [Google Scholar] [CrossRef]

- Muzammal, M.; Talat, R.; Sodhro, A.H.; Pirbhulal, S. A multi-sensor data fusion enabled ensemble approach for medical data from body sensor networks. Inf. Fusion 2020, 53, 155–164. [Google Scholar] [CrossRef]

- Male, J.; Martinez-Hernandez, U. Recognition of human activity and the state of an assembly task using vision and inertial sensor fusion methods. In Proceedings of the IEEE International Conference on Industrial Technology, Valencia, Spain, 10–12 March 2021. [Google Scholar]

- Qin, Z.; Zhang, Y.; Meng, S.; Qin, Z.; Choo, K.K.R. Imaging and fusing time series for wearable sensor-based human activity recognition. Inf. Fusion 2020, 53, 80–87. [Google Scholar] [CrossRef]

- Liang, X.; Li, H.; Wang, W.; Liu, Y.; Ghannam, R.; Fioranelli, F.; Heidari, H. Fusion of Wearable and Contactless Sensors for Intelligent Gesture Recognition. Adv. Intell. Syst. 2019, 1, 1900088. [Google Scholar] [CrossRef]

- Smith, R. Adaptivity as a Service (AaaS): Personalised Assistive Robotics for Ambient Assisted Living. In Proceedings of the International Symposium on Ambient Intelligence, L’Aquila, Italy, 7–9 October 2020; pp. 239–242. [Google Scholar]

- Martins, G.S.; Santos, L.; Dias, J. User-adaptive interaction in social robots: A survey focusing on non-physical interaction. Int. J. Soc. Robot. 2019, 11, 185–205. [Google Scholar] [CrossRef]

- Chen, L.; Nugent, C.D.; Wang, H. A knowledge-driven approach to activity recognition in smart homes. IEEE Trans. Knowl. Data Eng. 2011, 24, 961–974. [Google Scholar] [CrossRef]

- Chen, L.; Nugent, C.; Okeyo, G. An ontology-based hybrid approach to activity modeling for smart homes. IEEE Trans. Hum.- Syst. 2013, 44, 92–105. [Google Scholar] [CrossRef]

- Hu, B.; Rouse, E.; Hargrove, L. Benchmark datasets for bilateral lower-limb neuromechanical signals from wearable sensors during unassisted locomotion in able-bodied individuals. Front. Robot. AI 2018, 5, 14. [Google Scholar] [CrossRef]

- Young, A.J.; Simon, A.M.; Fey, N.P.; Hargrove, L.J. Intent recognition in a powered lower limb prosthesis using time history information. Ann. Biomed. Eng. 2014, 42, 631–641. [Google Scholar] [CrossRef]

- Berryman, N.; Gayda, M.; Nigam, A.; Juneau, M.; Bherer, L.; Bosquet, L. Comparison of the metabolic energy cost of overground and treadmill walking in older adults. Eur. J. Appl. Physiol. 2012, 112, 1613–1620. [Google Scholar] [CrossRef]

- Al-dabbagh, A.H.; Ronsse, R. A review of terrain detection systems for applications in locomotion assistance. Robot. Auton. Syst. 2020, 133, 103628. [Google Scholar] [CrossRef]

- Miyatake, T.; Lee, S.; Galiana, I.; Rossi, D.M.; Siviy, C.; Panizzolo, F.A.; Walsh, C.J. Biomechanical analysis and inertial sensing of ankle joint while stepping on an unanticipated bump. In Wearable Robotics: Challenges and Trends; Springer: Berlin/Heidelberg, Germany, 2017; pp. 343–347. [Google Scholar]

- Bahk, J.H.; Fang, H.; Yazawa, K.; Shakouri, A. Flexible thermoelectric materials and device optimization for wearable energy harvesting. J. Mater. Chem. C 2015, 3, 10362–10374. [Google Scholar] [CrossRef]

- Zhu, X.; Li, Q.; Chen, G. APT: Accurate outdoor pedestrian tracking with smartphones. In Proceedings of the IEEE INFOCOM 2013, Turin, Italy, 14–19 April 2013; pp. 2508–2516. [Google Scholar]

- Blobel, B.; Pharow, P.; Sousa, F. PHealth 2012: Proceedings of the 9th International Conference on Wearable Micro and Nano Technologies for Personalized Health, PHealth 2012, Porto, Portugal, 26–28 June 2012; IOS Press: Washington, DC, USA, 2012; Volume 177. [Google Scholar]

- Phillips, S.M.; Cadmus-Bertram, L.; Rosenberg, D.; Buman, M.P.; Lynch, B.M. Wearable technology and physical activity in chronic disease: Opportunities and challenges. Am. J. Prev. Med. 2018, 54, 144. [Google Scholar] [CrossRef] [PubMed]

- McAdams, E.; Krupaviciute, A.; Géhin, C.; Grenier, E.; Massot, B.; Dittmar, A.; Rubel, P.; Fayn, J. Wearable sensor systems: The challenges. In Proceedings of the 2011 Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Boston, MA, USA, 30 August–3 September 2011; pp. 3648–3651. [Google Scholar]

- Biddiss, E.; Chau, T. Upper-limb prosthetics: Critical factors in device abandonment. Am. J. Phys. Med. Rehabil. 2007, 86, 977–987. [Google Scholar] [CrossRef] [PubMed]

- Smail, L.C.; Neal, C.; Wilkins, C.; Packham, T.L. Comfort and function remain key factors in upper limb prosthetic abandonment: Findings of a scoping review. Disabil. Rehabil. Assist. Technol. 2020, 16, 1–10. [Google Scholar] [CrossRef] [PubMed]

- Rodgers, M.M.; Alon, G.; Pai, V.M.; Conroy, R.S. Wearable technologies for active living and rehabilitation: Current research challenges and future opportunities. J. Rehabil. Assist. Technol. Eng. 2019, 6, 2055668319839607. [Google Scholar] [CrossRef]

- Sugawara, A.T.; Ramos, V.D.; Alfieri, F.M.; Battistella, L.R. Abandonment of assistive products: Assessing abandonment levels and factors that impact on it. Disabil. Rehabil. Assist. Technol. 2018, 13, 716–723. [Google Scholar] [CrossRef] [PubMed]

- Holloway, C.; Dawes, H. Disrupting the world of disability: The next generation of assistive technologies and rehabilitation practices. Healthc. Technol. Lett. 2016, 3, 254–256. [Google Scholar] [CrossRef]

- van den Kieboom, R.C.; Bongers, I.M.; Mark, R.E.; Snaphaan, L.J. User-driven living lab for assistive technology to support people with dementia living at home: Protocol for developing co-creation–based innovations. JMIR Res. Protoc. 2019, 8, e10952. [Google Scholar] [CrossRef]

- Callari, T.C.; Moody, L.; Magee, P.; Yang, D. ‘Smart–not only intelligent!’Co-creating priorities and design direction for ‘smart’footwear to support independent ageing. Int. J. Fash. Des. Technol. Educ. 2019, 12, 313–324. [Google Scholar] [CrossRef]

- Ugulino, W.C.; Fuks, H. Prototyping wearables for supporting cognitive mapping by the blind: Lessons from co-creation workshops. In Proceedings of the 2015 Workshop on Wearable Systems and Applications, Florence, Italy, 18 May 2015; pp. 39–44. [Google Scholar]

- van Dijk-de Vries, A.; Stevens, A.; van der Weijden, T.; Beurskens, A.J. How to support a co-creative research approach in order to foster impact. The development of a Co-creation Impact Compass for healthcare researchers. PLoS ONE 2020, 15, e0240543. [Google Scholar] [CrossRef]

- Van der Zijpp, T.; Wouters, E.; Sturm, J. To use or not to use: The design, implementation and acceptance of technology in the context of health care. In Assistive Technologies in Smart Cities; InTechOpen: London, UK, 2018. [Google Scholar]

- Ran, M.; Banes, D.; Scherer, M.J. Basic principles for the development of an AI-based tool for assistive technology decision making. Disabil. Rehabil. Assist. Technol. 2020, 1–4. [Google Scholar] [CrossRef]

- Seyhan, A.A. Lost in translation: The valley of death across preclinical and clinical divide – identification of problems and overcoming obstacles. Transl. Med. Commun. 2019, 4, 1–19. [Google Scholar] [CrossRef]

- Ortiz, J.; Poliero, T.; Cairoli, G.; Graf, E.; Caldwell, D.G. Energy efficiency analysis and design optimization of an actuation system in a soft modular lower limb exoskeleton. IEEE Robot. Autom. Lett. 2017, 3, 484–491. [Google Scholar] [CrossRef]

- Kim, J.; Lee, G.; Heimgartner, R.; Revi, D.A.; Karavas, N.; Nathanson, D.; Galiana, I.; Eckert-Erdheim, A.; Murphy, P.; Perry, D.; et al. Reducing the metabolic rate of walking and running with a versatile, portable exosuit. Science 2019, 365, 668–672. [Google Scholar] [CrossRef] [PubMed]

- Kim, J.; Moon, J.; Kim, J.; Lee, G. Compact Variable Gravity Compensation Mechanism With a Geometrically Optimized Lever for Maximizing Variable Ratio of Torque Generation. IEEE/ASME Trans. Mechatron. 2020, 25, 2019–2026. [Google Scholar] [CrossRef]

- Lin, P.Y.; Shieh, W.B.; Chen, D.Z. A theoretical study of weight-balanced mechanisms for design of spring assistive mobile arm support (MAS). Mech. Mach. Theory 2013, 61, 156–167. [Google Scholar] [CrossRef]

- Lenzo, B.; Zanotto, D.; Vashista, V.; Frisoli, A.; Agrawal, S. A new Constant Pushing Force Device for human walking analysis. In Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–7 June 2014; pp. 6174–6179. [Google Scholar]

- Cherelle, P.; Grosu, V.; Beyl, P.; Mathys, A.; Van Ham, R.; Van Damme, M.; Vanderborght, B.; Lefeber, D. The MACCEPA actuation system as torque actuator in the gait rehabilitation robot ALTACRO. In Proceedings of the 2010 3rd IEEE RAS & EMBS International Conference on Biomedical Robotics and Biomechatronics, Tokyo, Japan, 26–29 September 2010; pp. 27–32. [Google Scholar]

- Moltedo, M.; Bacek, T.; Junius, K.; Vanderborght, B.; Lefeber, D. Mechanical design of a lightweight compliant and adaptable active ankle foot orthosis. In Proceedings of the 2016 6th IEEE International Conference on Biomedical Robotics and Biomechatronics (BioRob), Singapore, 26–29 June 2016; pp. 1224–1229. [Google Scholar]

- Niknejad, N.; Ismail, W.B.; Mardani, A.; Liao, H.; Ghani, I. A comprehensive overview of smart wearables: The state of the art literature, recent advances, and future challenges. Eng. Appl. Artif. Intell. 2020, 90, 103529. [Google Scholar] [CrossRef]

- Lee, Y.; Kozar, K.A.; Larsen, K.R. The technology acceptance model: Past, present, and future. Commun. Assoc. Inf. Syst. 2003, 12, 50. [Google Scholar] [CrossRef]

- Arias, O.; Wurm, J.; Hoang, K.; Jin, Y. Privacy and security in internet of things and wearable devices. IEEE Trans. Multi-Scale Comput. Syst. 2015, 1, 99–109. [Google Scholar] [CrossRef]

- Glikson, E.; Woolley, A.W. Human trust in artificial intelligence: Review of empirical research. Acad. Manag. Ann. 2020, 14, 627–660. [Google Scholar] [CrossRef]

- Cheng, M.; Nazarian, S.; Bogdan, P. There is hope after all: Quantifying opinion and trustworthiness in neural networks. Front. Artif. Intell. 2020, 3, 54. [Google Scholar] [CrossRef]

- Stokes, A.A.; Shepherd, R.F.; Morin, S.A.; Ilievski, F.; Whitesides, G.M. A hybrid combining hard and soft robots. Soft Robot. 2014, 1, 70–74. [Google Scholar] [CrossRef]

- Yang, H.D.; Asbeck, A.T. A new manufacturing process for soft robots and soft/rigid hybrid robots. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 8039–8046. [Google Scholar]

- Yu, S.; Huang, T.H.; Wang, D.; Lynn, B.; Sayd, D.; Silivanov, V.; Park, Y.S.; Tian, Y.; Su, H. Design and control of a high-torque and highly backdrivable hybrid soft exoskeleton for knee injury prevention during squatting. IEEE Robot. Autom. Lett. 2019, 4, 4579–4586. [Google Scholar] [CrossRef]

- Rose, C.G.; O’Malley, M.K. Hybrid rigid-soft hand exoskeleton to assist functional dexterity. IEEE Robot. Autom. Lett. 2018, 4, 73–80. [Google Scholar] [CrossRef]

- Araromi, O.A.; Walsh, C.J.; Wood, R.J. Hybrid carbon fiber-textile compliant force sensors for high-load sensing in soft exosuits. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; pp. 1798–1803. [Google Scholar]

- Gerez, L.; Dwivedi, A.; Liarokapis, M. A hybrid, soft exoskeleton glove equipped with a telescopic extra thumb and abduction capabilities. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 9100–9106. [Google Scholar]

- Park, S.; Meeker, C.; Weber, L.M.; Bishop, L.; Stein, J.; Ciocarlie, M. Multimodal sensing and interaction for a robotic hand orthosis. IEEE Robot. Autom. Lett. 2018, 4, 315–322. [Google Scholar] [CrossRef]

- Chiri, A.; Cempini, M.; De Rossi, S.M.M.; Lenzi, T.; Giovacchini, F.; Vitiello, N.; Carrozza, M.C. On the design of ergonomic wearable robotic devices for motion assistance and rehabilitation. In Proceedings of the 2012 Annual International Conference of the IEEE Engineering in Medicine and Biology Society, San Diego, CA, USA, 28 August–1 September 2012; pp. 6124–6127. [Google Scholar]

- Heerink, M.; Krose, B.; Evers, V.; Wielinga, B. Measuring acceptance of an assistive social robot: A suggested toolkit. In Proceedings of the 18th IEEE International Symposium on Robot and Human Interactive Communication, RO-MAN 2009, Toyama, Japan, 27 September–2 October 2009; pp. 528–533. [Google Scholar]

- Zhang, B.; Wang, S.; Zhou, M.; Xu, W. An adaptive framework of real-time continuous gait phase variable estimation for lower-limb wearable robots. Robot. Auton. Syst. 2021, 143, 103842. [Google Scholar] [CrossRef]

- Umbrico, A.; Cesta, A.; Cortellessa, G.; Orlandini, A. A holistic approach to behavior adaptation for socially assistive robots. Int. J. Soc. Robot. 2020, 12, 617–637. [Google Scholar] [CrossRef]

- Cangelosi, A.; Invitto, S. Human-Robot Interaction and Neuroprosthetics: A review of new technologies. IEEE Consum. Electron. Mag. 2017, 6, 24–33. [Google Scholar] [CrossRef]

- Cesta, A.; Cortellessa, G.; Orlandini, A.; Umbrico, A. A cognitive loop for assistive robots-connecting reasoning on sensed data to acting. In Proceedings of the 2018 27th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), Nanjing, China, 27–31 August 2018; pp. 826–831. [Google Scholar]

- Moreno, J.C.; Asin, G.; Pons, J.L.; Cuypers, H.; Vanderborght, B.; Lefeber, D.; Ceseracciu, E.; Reggiani, M.; Thorsteinsson, F.; Del-Ama, A.; et al. Symbiotic wearable robotic exoskeletons: The concept of the biomot project. In Proceedings of the International Workshop on Symbiotic Interaction, Berlin, Germany, 7–8 October 2015; pp. 72–83. [Google Scholar]

- Khattak, A.M.; La, V.; Hung, D.V.; Truc, P.T.H.; Hung, L.X.; Guan, D.; Pervez, Z.; Han, M.; Lee, S.; Lee, Y.K.; et al. Context-aware human activity recognition and decision making. In Proceedings of the 12th IEEE International Conference on e-Health Networking, Applications and Services, Lyon, France, 1–3 July 2010; pp. 112–118. [Google Scholar]

- Riboni, D.; Bettini, C. COSAR: Hybrid reasoning for context-aware activity recognition. Pers. Ubiquitous Comput. 2011, 15, 271–289. [Google Scholar] [CrossRef]

- Wongpatikaseree, K.; Ikeda, M.; Buranarach, M.; Supnithi, T.; Lim, A.O.; Tan, Y. Activity recognition using context-aware infrastructure ontology in smart home domain. In Proceedings of the 2012 Seventh International Conference on Knowledge, Information and Creativity Support Systems, Melbourne, VIC, Australia, 8–10 November 2012; pp. 50–57. [Google Scholar]

- Cumin, J.; Lefebvre, G.; Ramparany, F.; Crowley, J.L. A dataset of routine daily activities in an instrumented home. In Proceedings of the International Conference on Ubiquitous Computing and Ambient Intelligence, Philadelphia, PA, USA, 7–10 November 2017; pp. 413–425. [Google Scholar]

- Alshammari, T.; Alshammari, N.; Sedky, M.; Howard, C. SIMADL: Simulated activities of daily living dataset. Data 2018, 3, 11. [Google Scholar] [CrossRef]

- Winfield, A.F.; Jirotka, M. Ethical governance is essential to building trust in robotics and artificial intelligence systems. Philos. Trans. R. Soc. A Math. Phys. Eng. Sci. 2018, 376, 20180085. [Google Scholar] [CrossRef]

- Boden, M.; Bryson, J.; Caldwell, D.; Dautenhahn, K.; Edwards, L.; Kember, S.; Newman, P.; Parry, V.; Pegman, G.; Rodden, T.; et al. Principles of robotics: Regulating robots in the real world. Connect. Sci. 2017, 29, 124–129. [Google Scholar] [CrossRef]

- Veruggio, G. The EURON roboethics roadmap. In Proceedings of the 6th IEEE-RAS International Conference on Humanoid Robots, Genova, Italy, 4–6 December 2006; pp. 612–617. [Google Scholar]

- International Organization for Standardization. ISO 13482:2014. In Robots and Robotic Devices: Safety Requirements for Personal Care Robots; International Organization for Standardization: Geneva, Switzerland, 2014. [Google Scholar]

- British Standards Institution. BS 8611:2016. In Robots and Robotic Devices: Guide to the Ethical Design and Application of Robots and Robotic Systems; British Standards Institution: London, UK, 2016. [Google Scholar]

- Spiekermann, S. IEEE P7000—The first global standard process for addressing ethical concerns in system design. Proceedings 2017, 1, 159. [Google Scholar] [CrossRef]

| Assistive System | Application | Material Structure | Degrees of Freedom (DoF) | Assisted Body Segments | Actuation Type | Weight |
|---|---|---|---|---|---|---|
| ReWalk [12] | Assistance to stand upright and walk | Rigid materials | 6 DoF | Hip flexion/extension; knee flexion/extension | Electric motors | 23 kg |
| HAL [28] | Human gait rehabilitation, strength, augmentation | Rigid materials | 4 DoF | Hip flexion/extension; knee flexion/extension | Electric motors | 21 kg |
| REX [32] | Human locomotion in forward and backward directions, turning and stair climbing | Rigid materials | 10 DoF | Hip flexion/extension; knee flexion/extension; posture support | Electric motors | 38 kg |
| Vanderbilt exoskeleton [33] | Assistance for walking, sitting, standing, walking up and down stairs | Rigid materials | 4 DoF | Hip flexion/extension; knee flexion/extension | Electric motors | 12 kg |
| HandeXos-Beta [34] | Hand motion rehabilitation for multiple grip configurations | Rigid materials | 5 DoF | Index finger flexion/extension; thumb flexion/extension and circumduction | Electric motors | 0.42 g |
| HexoSYS [35] | Hand motion rehabilitation | Rigid materials | 4 DoF | All fingers flexion/extension and abduction/adduction | Electric motors | 1 kg |
| HES Hand [36] | Hand motion rehabilitation to recover hand motor skills | Rigid materials | 5 DoF | All fingers flexion/extension | Electric motors | 1.5 kg |
| Soft-inflatable knee exosuit [42] | Gait training for stroke rehabilitation | Soft pneumatic materials | 1 DoF | Knee flexion | Pneumatic system, inflatable actuators | 0.16 kg |
| Soft hip exosuit [44] | Assistance for level-ground walking | Soft textile materials | 1 DoF | Hip extension | Electric motors, fabric bands | 0.17 kg |
| Multi-articular hip and knee exosuit [46] | Assistance to gait impairments in sit-to-stand and stair ascent | Soft materials and Bowden cables | 1 DoF | Hip and knee extension | Electric motors, Bowden cables | - |
| Soft robotic glove for assistance at home [47] | Assistance to hand rehabilitation for grasping movements | Soft elastomeric chambers | 3 DoF | All fingers flexion/extension | Pneumatic system | 0.5 kg |
| Soft wearable wrist [48] | Assistance for rehabilitation of wrist movement | Soft reverse pneumatic artificial muscles | 2 DoF | Wrist flexion/extension and abduction/adduction | Pneumatic system | - |
| Soft robotic elbow sleeve [49] | Assistance for rehabilitation of elbow movements | Elastomeric and fabric-based pneumatic actuators | 2 DoF | Elbow flexion and extension | Pneumatic system | - |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Martinez-Hernandez, U.; Metcalfe, B.; Assaf, T.; Jabban, L.; Male, J.; Zhang, D. Wearable Assistive Robotics: A Perspective on Current Challenges and Future Trends. Sensors 2021, 21, 6751. https://doi.org/10.3390/s21206751
