Finger-Vein Recognition Using Heterogeneous Databases by Domain Adaption Based on a Cycle-Consistent Adversarial Network

Abstract

1. Introduction

2. Related Work

2.1. Non-Training-Based Methods

2.2. Training-Based Methods

3. Contributions

- This is the first study to examine GAN-based domain adaptation as a way to address the performance deterioration of finger-vein recognition systems in heterogeneous cross-dataset settings.

- Domain adaptation was performed with a CycleGAN so that an existing training-based finger-vein recognition method can handle unobserved data. Because each finger-vein dataset has a different number of classes, paired images across datasets are not available; we therefore used CycleGAN, which can be trained on unpaired datasets.

- The proposed finger-vein recognition system does not need to be retrained when unobserved data are input into the system.

- Experiments with two open databases, SDUMLA-HMT-DB and HKPolyU-DB, showed that the equal error rate (EER) of finger-vein recognition was 0.85% when training with SDUMLA-HMT-DB and testing with HKPolyU-DB, an improvement of 33.1% over the second-best method. When training with HKPolyU-DB and testing with SDUMLA-HMT-DB, the EER was 3.4%, an improvement of 14.1% over the second-best method.

- The CycleGAN-based domain adaptation models proposed in this study, and the finger-vein recognition models trained with our domain-adapted dataset, are made available [25] so that other researchers can assess performance fairly. The website (http://dm.dgu.edu/link.html) described in [25] includes instructions on how other researchers can obtain these models.
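
The percentages in the fourth contribution can be reproduced from the EER comparison tables in this article if "improvement" is read as the relative reduction in EER, (baseline − proposed) / baseline. Under that reading, 33.1% corresponds to Joseph et al. [48] (EER 1.27%) and 14.1% to Yang et al. [50] (EER 3.96%). The helper below is a hypothetical sketch for checking such figures, not the authors' code.

```python
def relative_reduction(baseline_eer: float, proposed_eer: float) -> float:
    """Relative EER reduction in percent: (baseline - proposed) / baseline * 100."""
    return 100.0 * (baseline_eer - proposed_eer) / baseline_eer


# EER values (%) copied from the comparison tables in this article.
print(round(relative_reduction(1.27, 0.85), 1))  # Joseph et al. [48], SDUMLA-HMT-DB -> HKPolyU-DB: 33.1
print(round(relative_reduction(3.96, 3.40), 1))  # Yang et al. [50], HKPolyU-DB -> SDUMLA-HMT-DB: 14.1
```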

4. Proposed Method

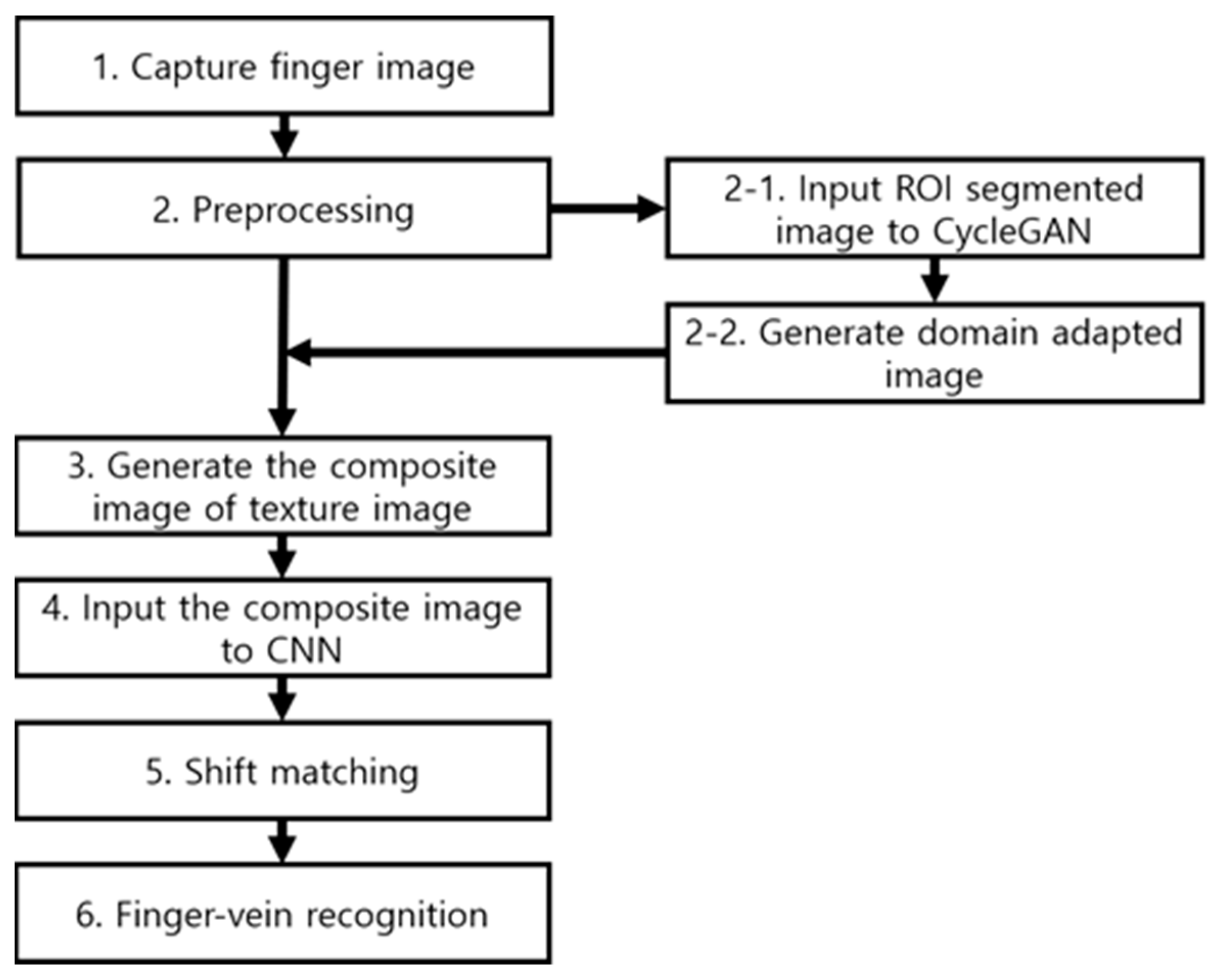

4.1. Overview of the Proposed Method

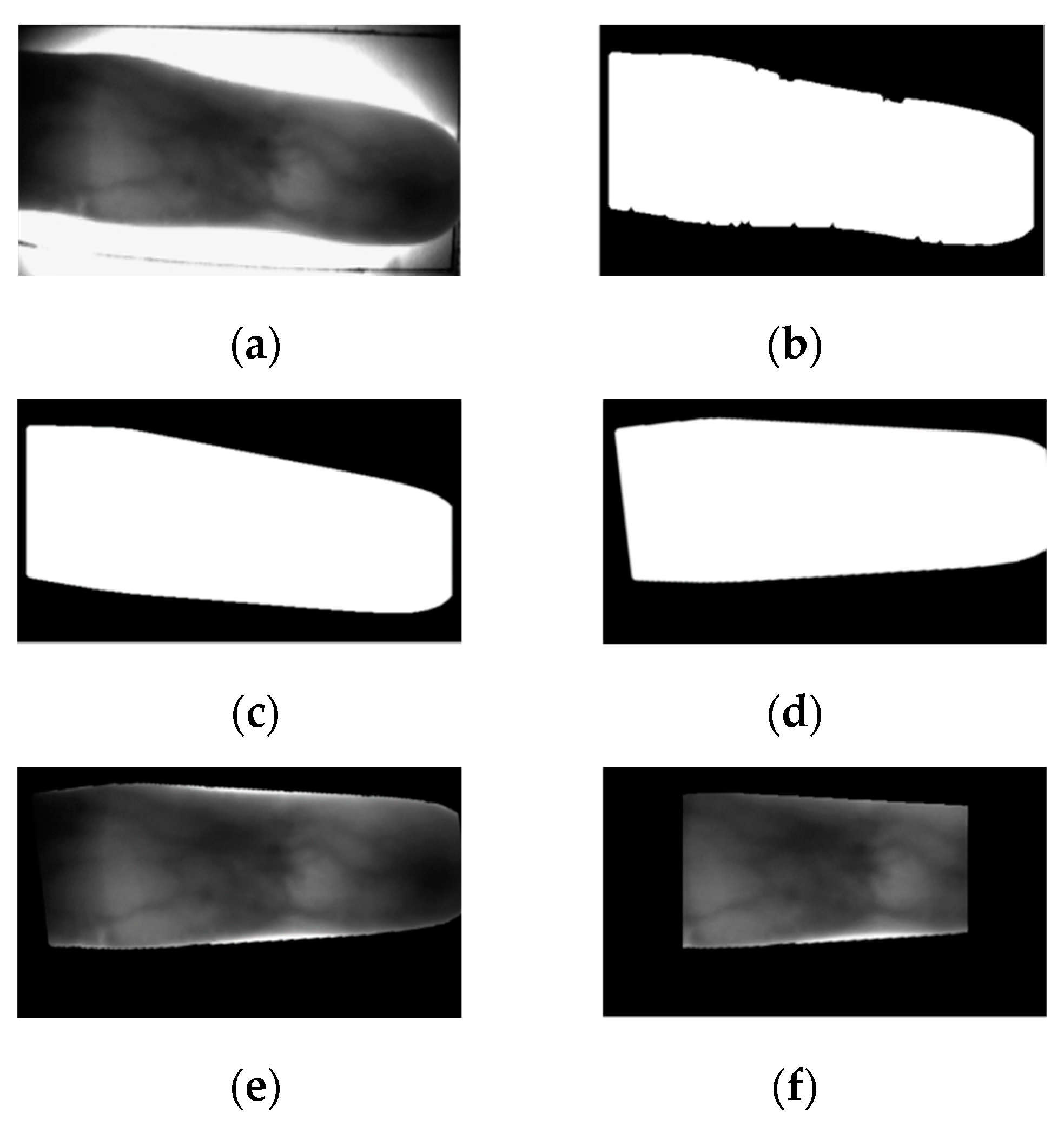

4.2. Preprocessing

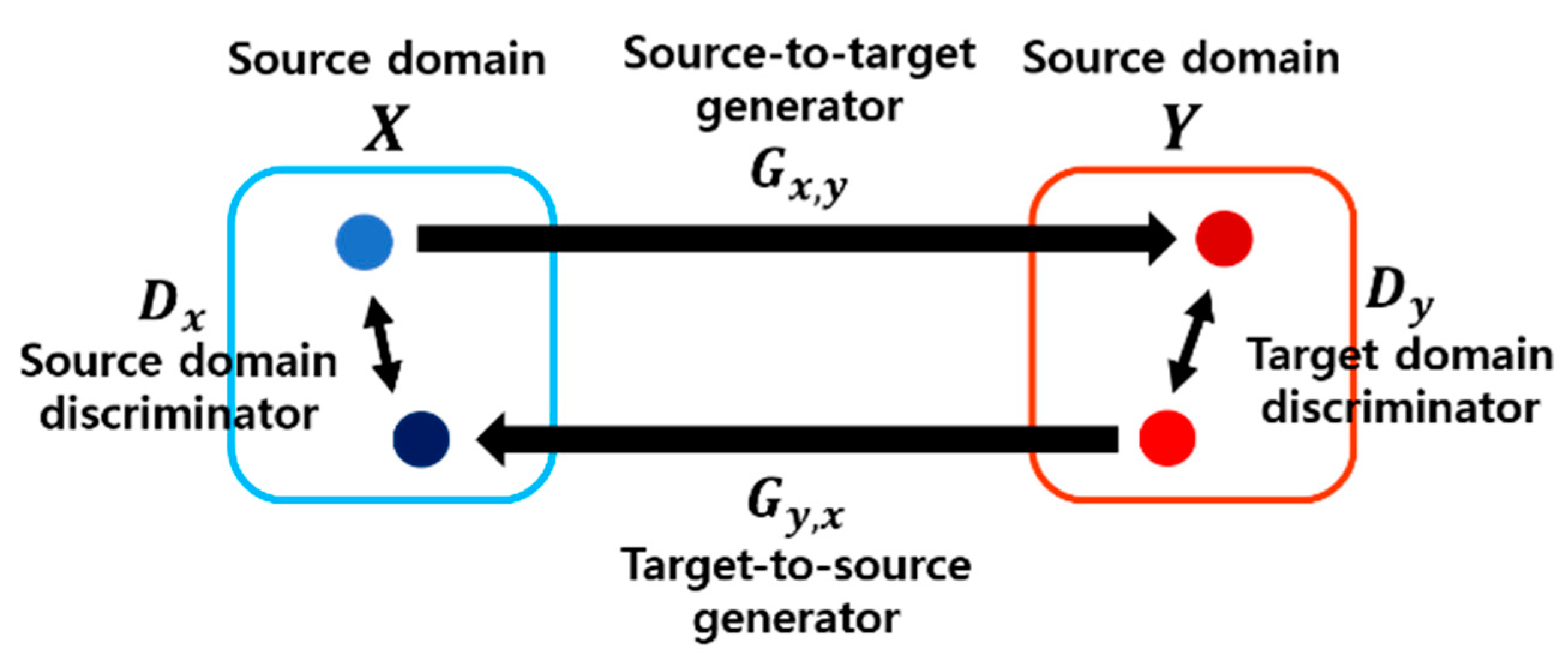

4.3. Domain Adaptation

4.3.1. CycleGAN Architecture

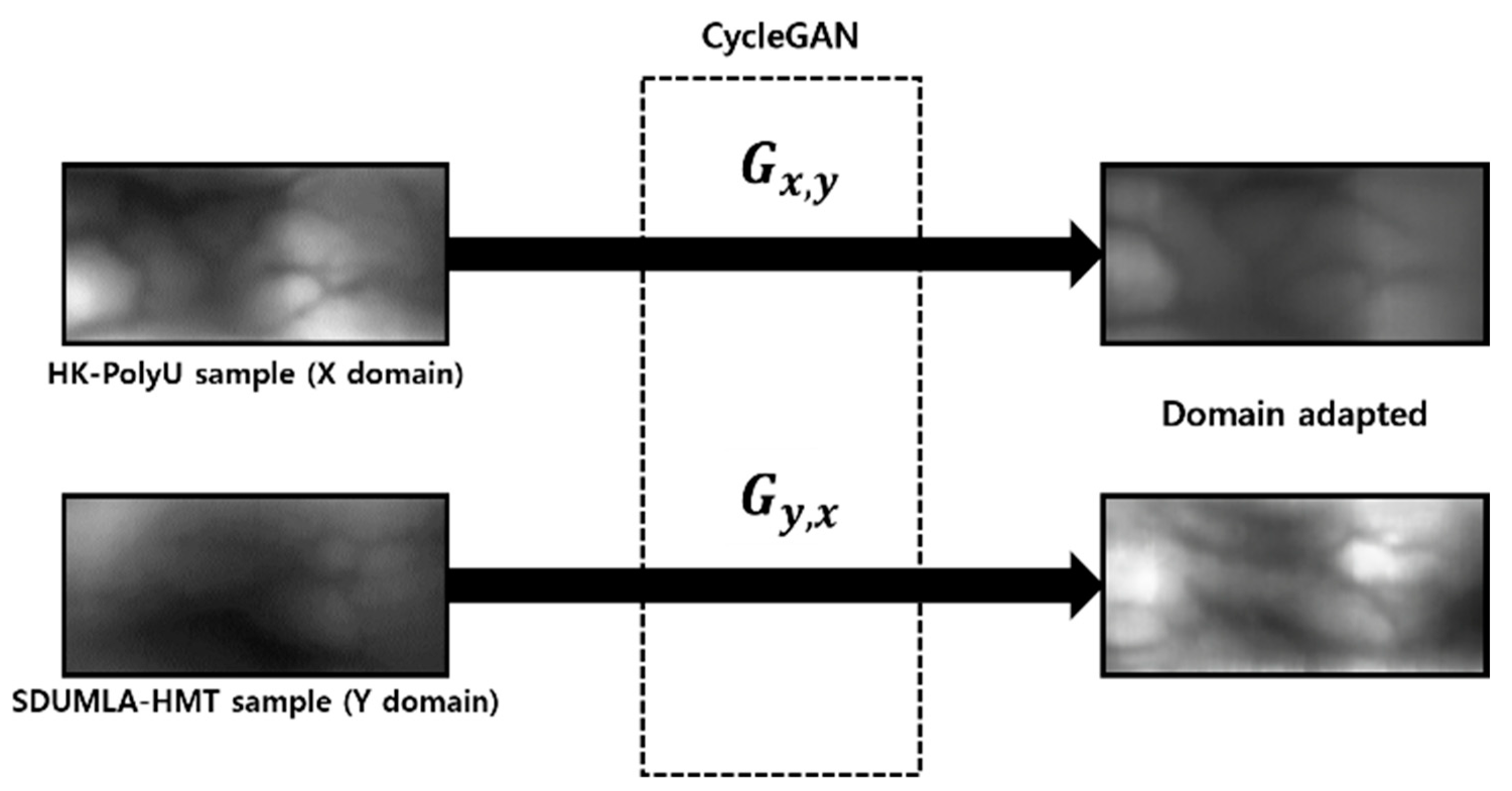

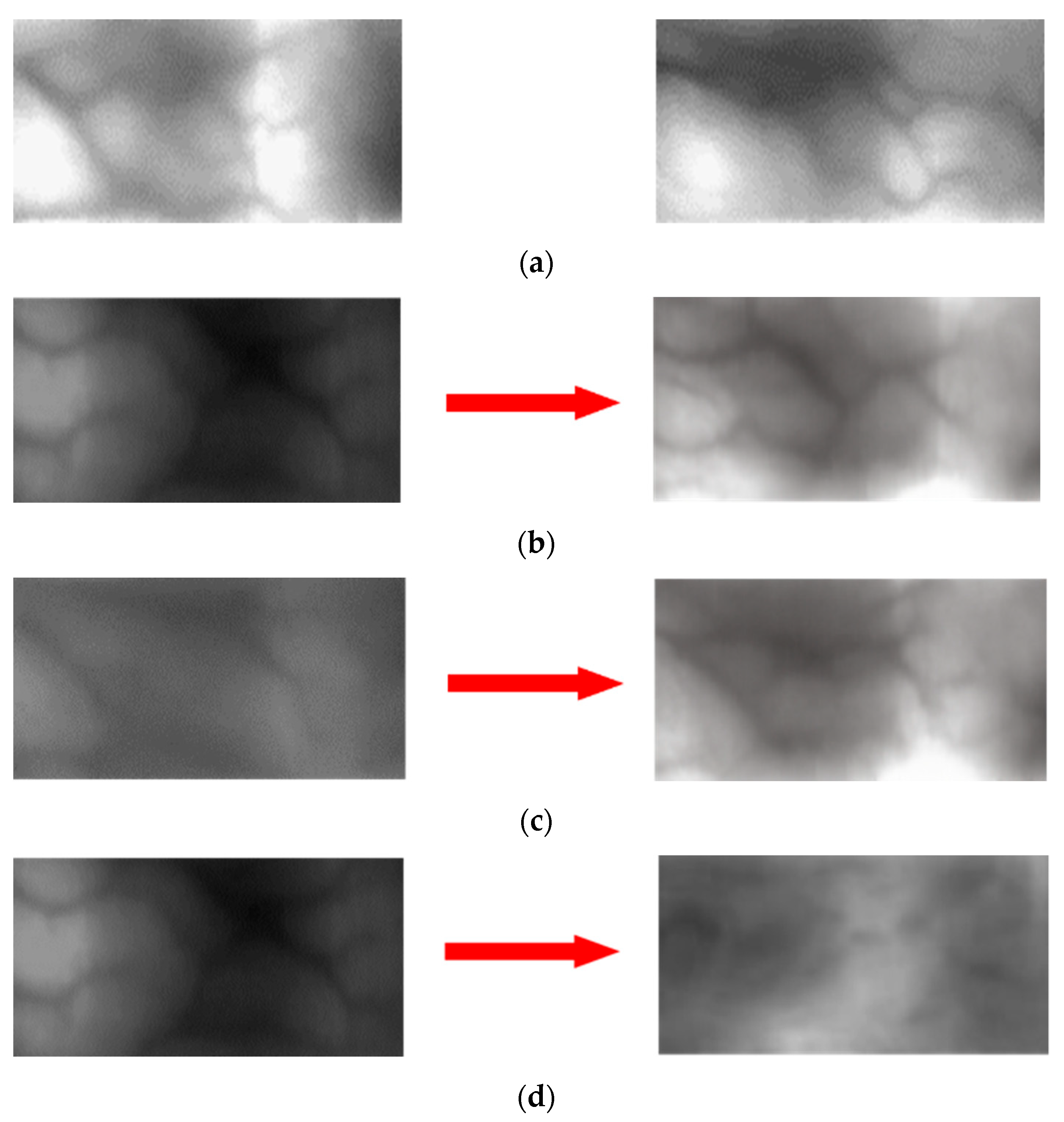

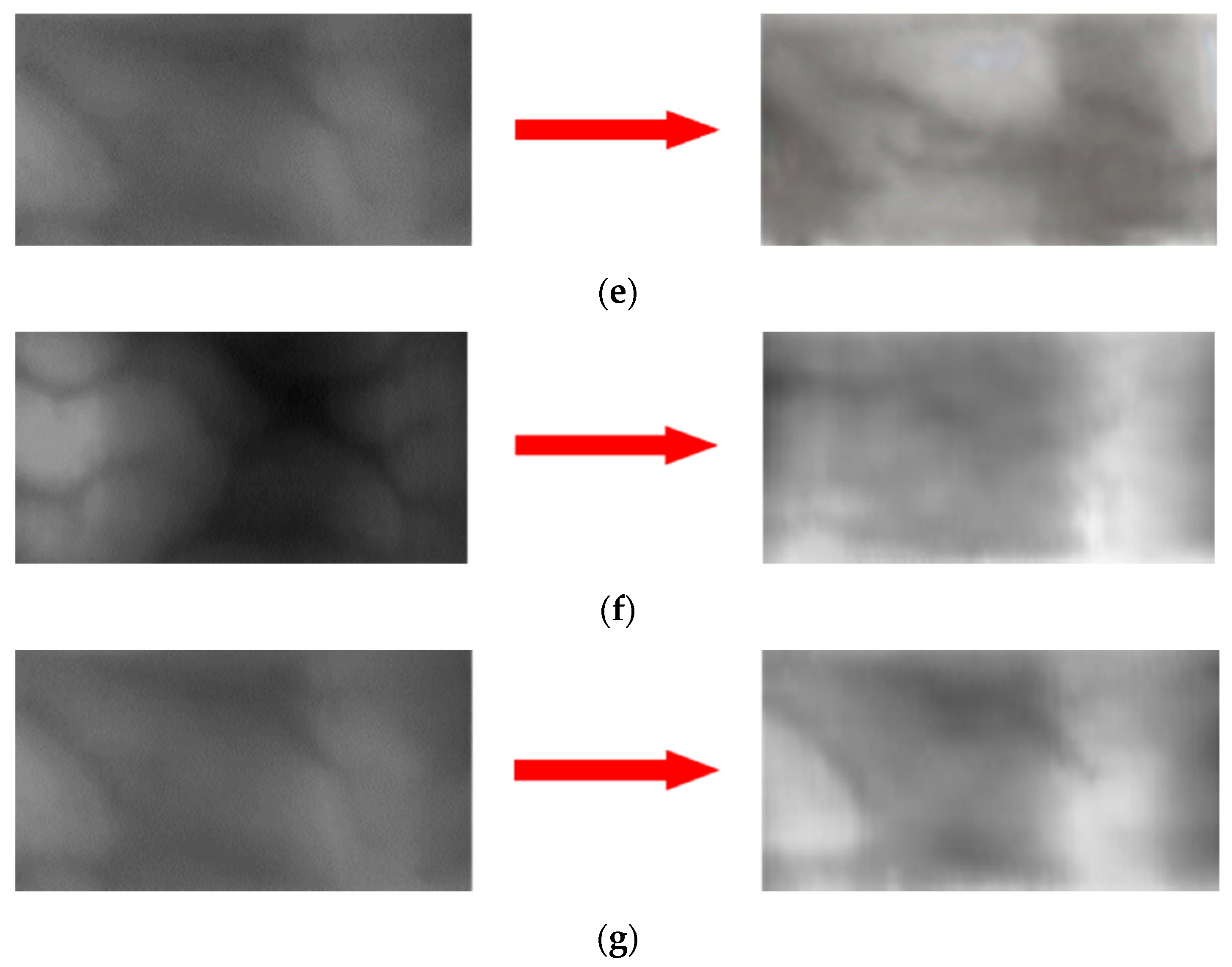

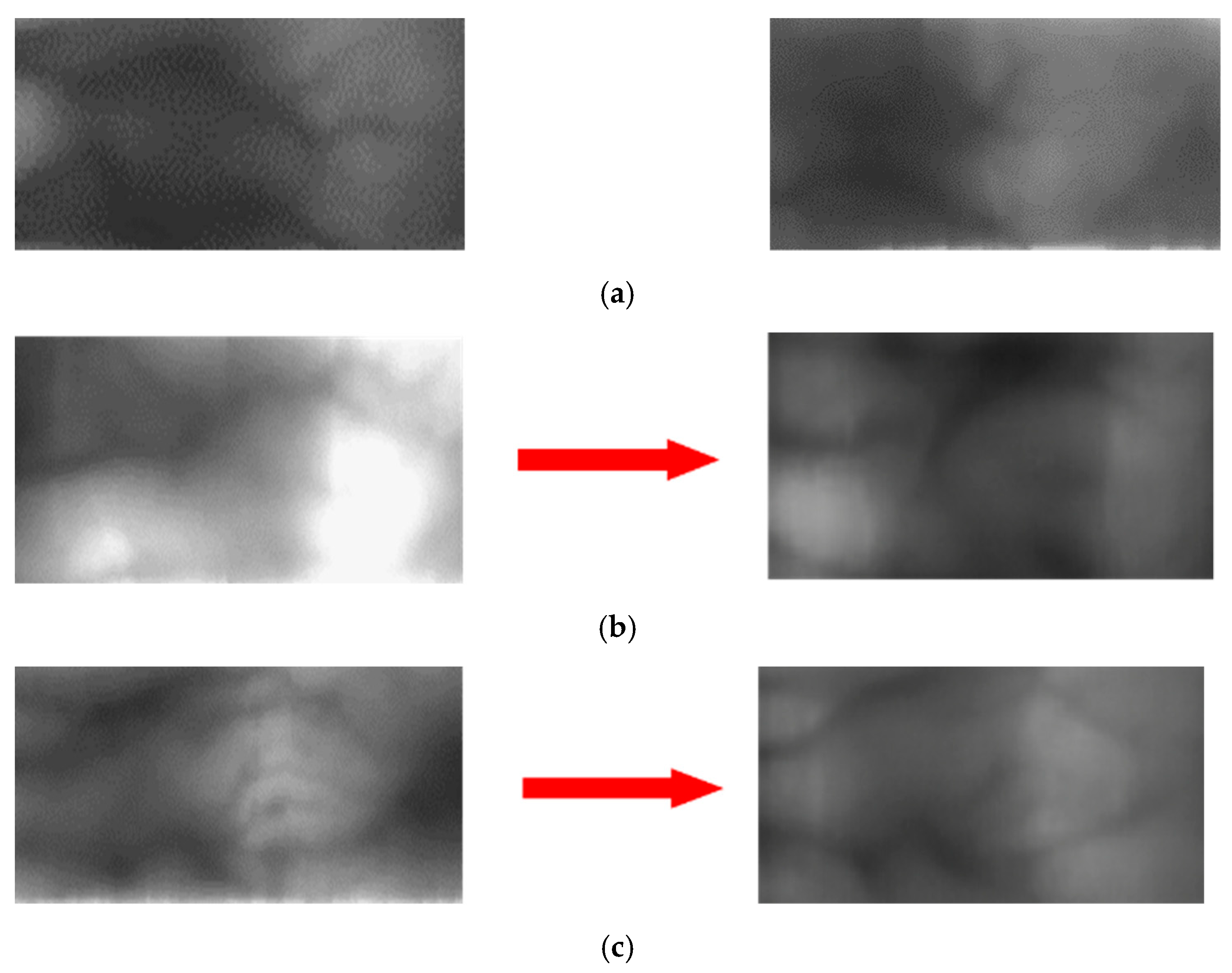

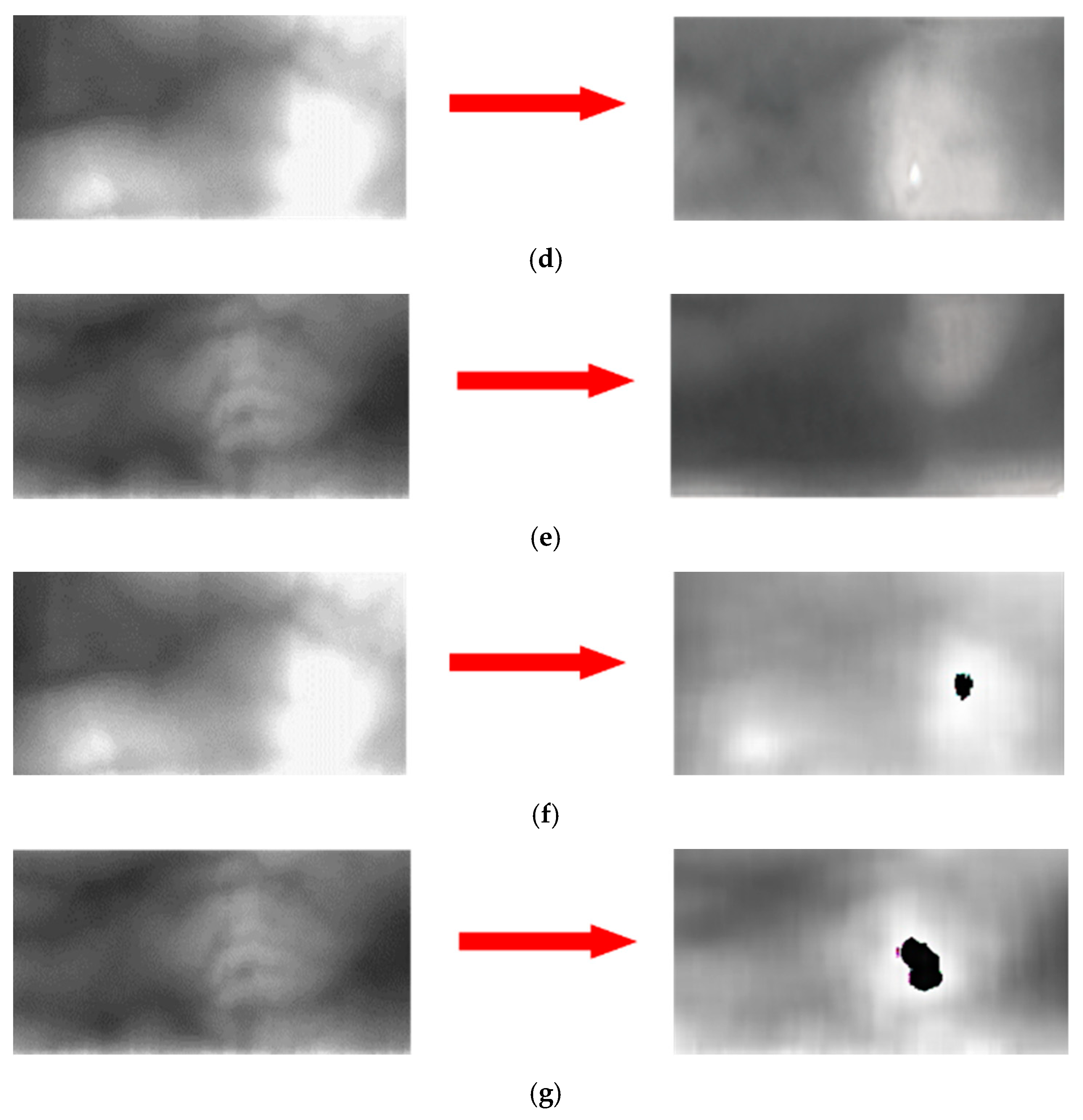
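
As stated in the contributions, CycleGAN is used because the two finger-vein databases have different classes and therefore no paired images. The objective that makes unpaired training possible, as defined by Zhu et al. [24], combines adversarial losses for the two mappings G: X → Y and F: Y → X with a cycle-consistency loss that asks F(G(x)) ≈ x and G(F(y)) ≈ y. The NumPy sketch below computes the generator-side objective, assuming the least-squares adversarial loss and the cycle weight λ = 10 used in [24]; this excerpt does not state which loss form or weight the authors used, and the toy generators and discriminators are hypothetical stand-ins.

```python
import numpy as np


def lsgan_generator_loss(d_fake: np.ndarray) -> float:
    # Least-squares adversarial term: the generator wants D(fake) close to 1.
    return float(np.mean((d_fake - 1.0) ** 2))


def cycle_consistency_loss(x, x_rec, y, y_rec) -> float:
    # L1 error after a full cycle in both directions: F(G(x)) ~ x and G(F(y)) ~ y.
    return float(np.mean(np.abs(x_rec - x)) + np.mean(np.abs(y_rec - y)))


def generator_objective(G, F, D_X, D_Y, x, y, lam: float = 10.0) -> float:
    fake_y = G(x)  # e.g. an HKPolyU-DB image rendered in the SDUMLA-HMT-DB style
    fake_x = F(y)
    adversarial = lsgan_generator_loss(D_Y(fake_y)) + lsgan_generator_loss(D_X(fake_x))
    cycle = cycle_consistency_loss(x, F(fake_y), y, G(fake_x))
    return adversarial + lam * cycle


rng = np.random.default_rng(0)
x = rng.uniform(-1.0, 1.0, size=(2, 256, 256, 3))  # batch from domain X
y = rng.uniform(-1.0, 1.0, size=(2, 256, 256, 3))  # batch from domain Y


def shift_up(a):  # hypothetical toy G
    return a + 0.1


def shift_down(a):  # hypothetical toy F, the exact inverse of shift_up
    return a - 0.1


def fooled_patch_d(a):  # hypothetical discriminator scoring every 30 x 30 patch as real
    return np.ones((a.shape[0], 30, 30, 1))


# Near zero: the cycle is perfect and both discriminators are fooled.
print(generator_objective(shift_up, shift_down, fooled_patch_d, fooled_patch_d, x, y))
```

The cycle term is what lets the mappings be learned without pixel-aligned image pairs: each translated image must carry enough of the original vein structure to be mapped back.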

4.3.2. Generating a Domain Adapted Finger-Vein Image

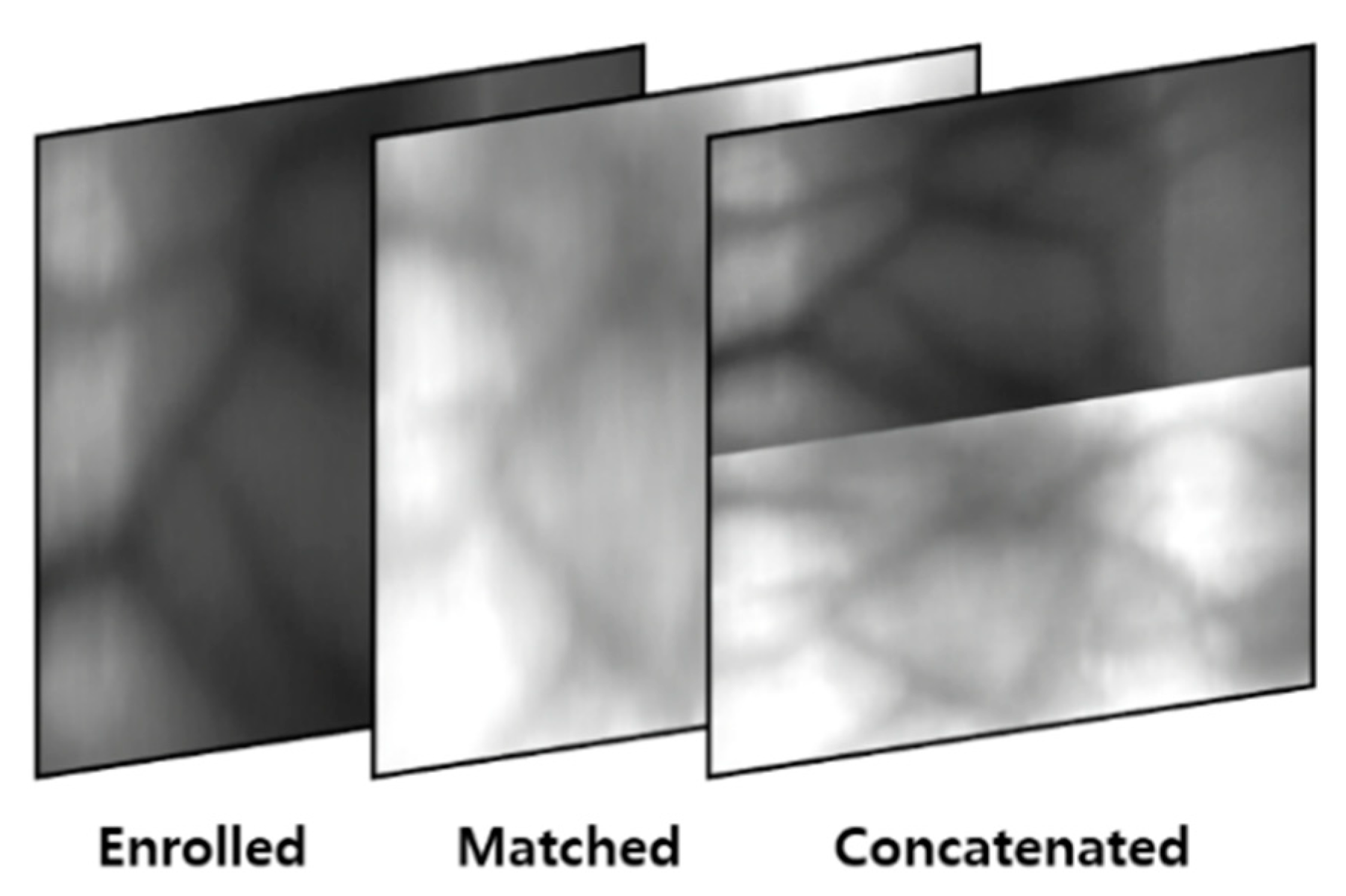

4.4. Generating Composite Image

4.5. Finger-Vein Recognition Based on Deep DenseNet and Shift Matching

5. Experimental Results

5.1. Experimental Environments

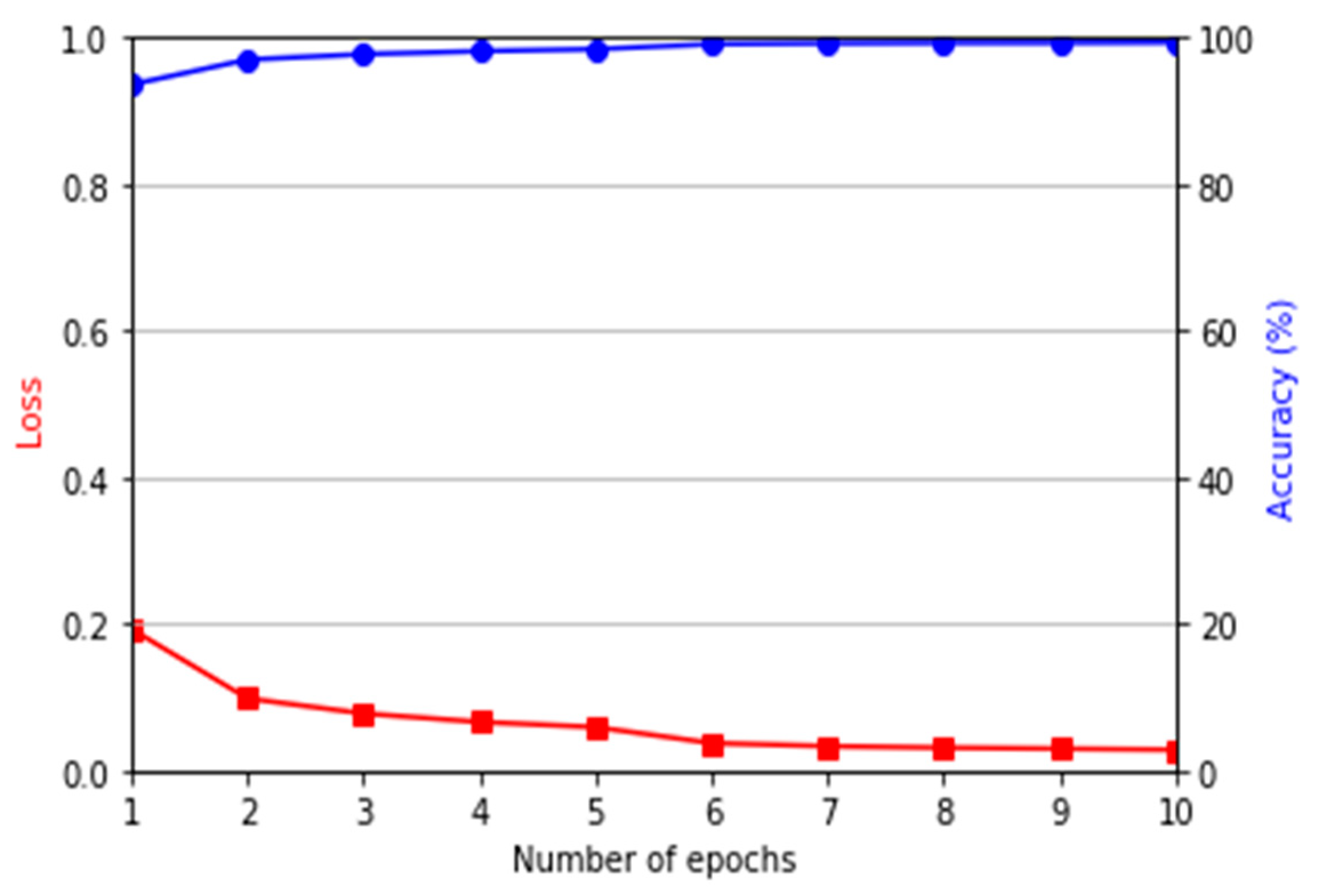

5.2. Training of the Domain Adaptation Model

5.3. Training of Finger-Vein Recognition Model

5.4. Evaluation Metrics

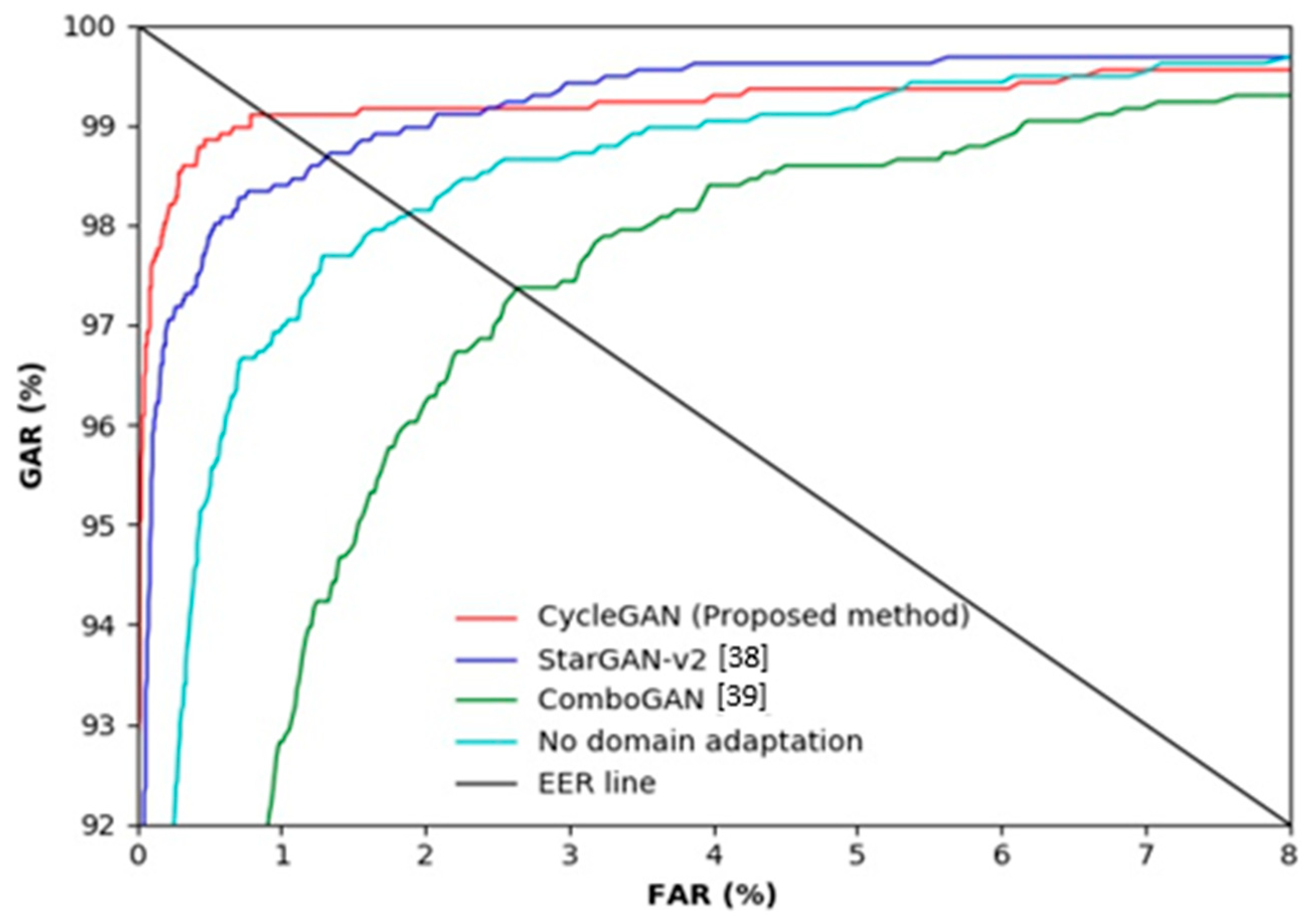
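
The EER reported throughout this article is the operating point at which the false acceptance rate (FAR) equals the false rejection rate (FRR). A minimal threshold-sweep sketch follows; it assumes distance-like matching scores (lower means more likely genuine) and reports the mean of FAR and FRR at the threshold where they are closest, which is one common approximation when no threshold makes them exactly equal. `compute_eer` and the sample scores are hypothetical.

```python
import numpy as np


def compute_eer(genuine, impostor) -> float:
    """Approximate EER in percent from distance scores (accept if score <= threshold)."""
    genuine = np.asarray(genuine, dtype=float)
    impostor = np.asarray(impostor, dtype=float)
    best_gap, eer = np.inf, 1.0
    for t in np.unique(np.concatenate([genuine, impostor])):
        far = np.mean(impostor <= t)  # impostor pairs wrongly accepted
        frr = np.mean(genuine > t)    # genuine pairs wrongly rejected
        gap = abs(far - frr)
        if gap < best_gap:
            best_gap, eer = gap, (far + frr) / 2.0
    return 100.0 * float(eer)


genuine_scores = np.array([0.1, 0.2, 0.3, 0.6])
impostor_scores = np.array([0.5, 0.7, 0.8, 0.9])
print(compute_eer(genuine_scores, impostor_scores))  # 25.0
```

For similarity scores, such as a genuine-class probability, negate the scores before calling the function.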

5.5. Testing with HKPolyU-DB after Training with SDUMLA-HMT-DB (including Ablation Study)

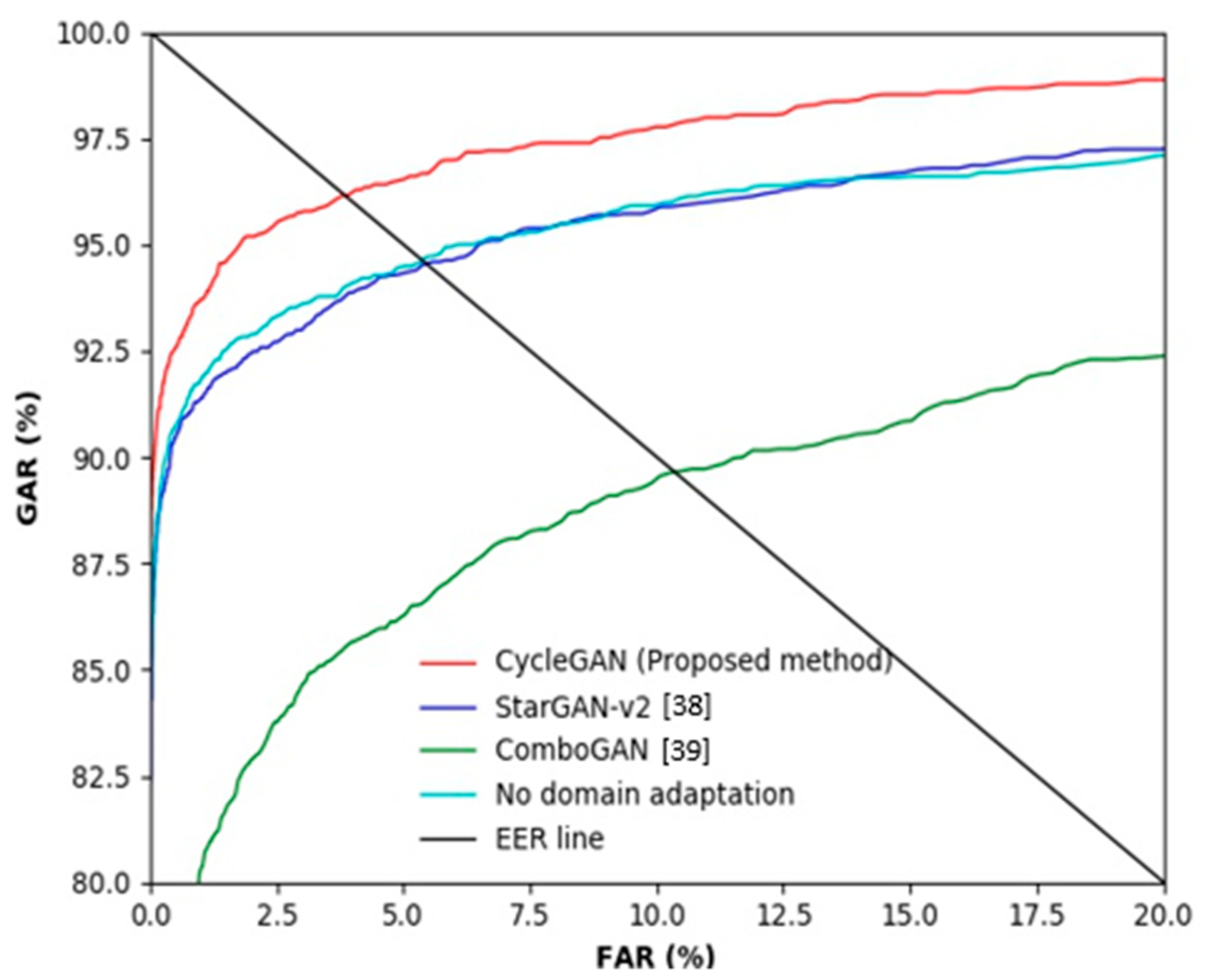

5.6. Testing with SDUMLA-HMT-DB after Training with HKPolyU-DB (including Ablation Study)

6. Discussion

7. Conclusions

Author Contributions

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Lee, E.C.; Jung, H.; Kim, D. New Finger Biometric Method Using Near Infrared Imaging. Sensors 2011, 11, 2319–2333.

- Meng, X.; Yang, G.; Yin, Y.; Xiao, R. Finger Vein Recognition Based on Local Directional Code. Sensors 2012, 12, 14937–14952.

- Peng, J.; Wang, N.; El-Latif, A.A.A.; Li, Q.; Niu, X. Finger-vein Verification Using Gabor Filter and SIFT Feature Matching. In Proceedings of the 8th International Conference on Intelligent Information Hiding and Multimedia Signal Processing, Piraeus, Greece, 18–20 July 2012; pp. 45–48.

- Song, J.M.; Kim, W.; Park, K.R. Finger-vein Recognition Based on Deep DenseNet Using Composite Image. IEEE Access 2019, 7, 66845–66863.

- Kim, W.; Song, J.M.; Park, K.R. Multimodal Biometric Recognition Based on Convolutional Neural Network by the Fusion of Finger-vein and Finger Shape Using Near-Infrared (NIR) Camera Sensor. Sensors 2018, 18, 2296.

- Lu, Z.; Li, M.; Zhang, J. Automatic Illumination Control Algorithm for Capturing the Finger Vein Image. In Proceedings of the 13th World Congress on Intelligent Control and Automation, Changsha, China, 4–8 July 2018; pp. 881–886.

- Jia, W.; Hu, R.-X.; Gui, J.; Zhao, Y.; Ren, X.-M. Palmprint recognition across different devices. Sensors 2012, 12, 7938–7964.

- Wang, Y.; Zheng, X.; Wang, C. Dorsal Hand Vein Recognition across Different Devices. In Proceedings of the Chinese Conference on Biometric Recognition, Chengdu, China, 14–16 October 2016; pp. 307–316.

- Wang, Y.; Zheng, X. Cross-device Hand Vein Recognition Based on Improved SIFT. Int. J. Wavelets Multiresolution Inf. Process. 2018, 16, 1840010.

- Alshehri, H.; Hussain, M.; Aboalsamh, H.A.; Zuair, M.A.A. Cross-Sensor Fingerprint Matching Method Based on Orientation, Gradient, and Gabor-HoG Descriptors with Score Level Fusion. IEEE Access 2018, 6, 28951–28968.

- Ghiani, L.; Mura, V.; Tuveri, P.; Marcialis, G.L. On the Interoperability of Capture Devices in Fingerprint Presentation Attacks Detection. In Proceedings of the First Italian Conference on Cybersecurity, Venice, Italy, 17–20 January 2017; pp. 66–75.

- Kute, R.S.; Vyas, V.; Anuse, A. Cross Domain Association Using Transfer Subspace Learning. Evol. Intell. 2019, 12, 201–209.

- Gajawada, R.; Popli, A.; Chugh, T.; Namboodiri, A.; Jain, A.K. Universal Material Translator: Towards Spoof Fingerprint Generalization. In Proceedings of the 2019 International Conference on Biometrics, Crete, Greece, 4–9 June 2019; pp. 1–8.

- Anand, V.; Kanhangad, V. Unsupervised Domain Adaptation for Cross-sensor Pore Detection in High-resolution Fingerprint Images. arXiv 2020, arXiv:1908.10701v2. Available online: https://arxiv.org/abs/1908.10701 (accessed on 12 December 2020).

- Shao, H.; Zhong, D.; Li, Y. PalmGAN for Cross-domain Palmprint Recognition. In Proceedings of the 2019 IEEE International Conference on Multimedia and Expo, Shanghai, China, 8–12 July 2019; pp. 1390–1395.

- Shao, H.; Zhong, D.; Du, X. Cross-domain Palmprint Recognition Based on Transfer Convolutional Autoencoder. In Proceedings of the 2019 IEEE International Conference on Image Processing, Taipei, Taiwan, 22–25 September 2019; pp. 1153–1157.

- Malhotra, A.; Sankaran, A.; Vatsa, M.; Singh, R. On Matching Finger-selfies Using Deep Scattering Networks. IEEE Trans. Biometrics Behav. Identit. Sci. 2020, 2, 350–362.

- Jalilian, E.; Uhl, A. Finger-vein Recognition Using Deep Fully Convolutional Neural Semantic Segmentation Networks: The Impact of Training Data. In Proceedings of the 2018 IEEE International Workshop on Information Forensics and Security, Hong Kong, China, 11–13 December 2018; pp. 1–8.

- Dabouei, A.; Kazemi, H.; Iranmanesh, S.M.; Dawson, J.; Nasrabadi, N.M. ID Preserving Generative Adversarial Network for Partial Latent Fingerprint Reconstruction. In Proceedings of the 2018 IEEE 9th International Conference on Biometrics Theory, Applications and Systems, Redondo Beach, CA, USA, 22–25 October 2018; pp. 1–10.

- Nogueira, R.F.; de Alencar Lotufo, R.; Machado, R.C. Fingerprint Liveness Detection Using Convolutional Neural Networks. IEEE Trans. Inf. Forensics Secur. 2016, 11, 1206–1213.

- Chugh, T.; Cao, K.; Jain, A.K. Fingerprint Spoof Buster: Use of Minutiae-centered Patches. IEEE Trans. Inf. Forensics Secur. 2018, 13, 2190–2202.

- Chui, K.T.; Lytras, M.D.; Vasant, P. Combined Generative Adversarial Network and Fuzzy C-Means Clustering for Multi-Class Voice Disorder Detection with an Imbalanced Dataset. Appl. Sci. 2020, 10, 4571.

- Isola, P.; Zhu, J.-Y.; Zhou, T.; Efros, A.A. Image-to-image Translation with Conditional Adversarial Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1125–1134.

- Zhu, J.-Y.; Park, T.; Isola, P.; Efros, A.A. Unpaired Image-to-image Translation Using Cycle-consistent Adversarial Networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2223–2232.

- Dongguk CycleGAN-Based Domain Adaptation and DenseNet-Based Finger-Vein Recognition Models (DCDA&DFRM) with Algorithms. Available online: http://dm.dgu.edu/link.html (accessed on 9 August 2020).

- Noh, K.J.; Choi, J.; Hong, J.S.; Park, K.R. Finger-vein Recognition Based on Densely Connected Convolutional Network Using Score-level Fusion with Shape and Texture Images. IEEE Access 2020, 8, 96748–96766.

- Gonzalez, R.C.; Woods, R.E. Digital Image Processing, 3rd ed.; Prentice Hall: Upper Saddle River, NJ, USA, 2010.

- Kumar, A.; Zhang, D. Personal Recognition Using Hand Shape and Texture. IEEE Trans. Image Process. 2006, 15, 2454–2461.

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely Connected Convolutional Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2261–2269.

- Yin, Y.; Liu, L.; Sun, X. SDUMLA-HMT: A Multimodal Biometric Database. In Proceedings of the 6th Chinese Conference on Biometric Recognition, Beijing, China, 3–4 December 2011; pp. 260–268.

- Kumar, A.; Zhou, Y. Human Identification Using Finger Images. IEEE Trans. Image Process. 2012, 21, 2228–2244.

- NVIDIA GeForce GTX 1070. Available online: https://www.nvidia.com/en-in/geforce/products/10series/geforce-gtx-1070/ (accessed on 10 July 2020).

- CUDA. Available online: https://developer.nvidia.com/cuda-90-download-archive (accessed on 10 July 2020).

- CUDNN. Available online: https://developer.nvidia.com/cudnn (accessed on 10 July 2020).

- TensorFlow: The Python Deep Learning Library. Available online: https://www.tensorflow.org/versions/r1.15/api_docs/python/tf (accessed on 10 July 2020).

- Python. Available online: https://www.python.org/downloads/release/python-371 (accessed on 10 July 2020).

- Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. In Proceedings of the 3rd International Conference on Learning Representations, San Diego, CA, USA, 7–9 May 2015; pp. 1–15.

- Choi, Y.; Uh, Y.; Yoo, J.; Ha, J.-W. StarGAN v2: Diverse Image Synthesis for Multiple Domains. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 8188–8197.

- Anoosheh, A.; Agustsson, E.; Timofte, R.; Van Gool, L. ComboGAN: Unrestrained Scalability for Image Domain Translation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 18–22 June 2018; pp. 783–790.

- Huang, B.; Dai, Y.; Li, R.; Tang, D.; Li, W. Finger-vein Authentication Based on Wide Line Detector and Pattern Normalization. In Proceedings of the 20th International Conference on Pattern Recognition, Istanbul, Turkey, 23–26 August 2010; pp. 1269–1272.

- Miura, N.; Nagasaka, A.; Miyatake, T. Extraction of Finger-vein Patterns Using Maximum Curvature Points in Image Profiles. IEICE Trans. Inf. Syst. 2007, E90-D, 1185–1194.

- Liu, F.; Yang, G.; Yin, Y.; Wang, S. Singular Value Decomposition Based Minutiae Matching Method for Finger Vein Recognition. Neurocomputing 2014, 145, 75–89.

- Gupta, P.; Gupta, P. An Accurate Finger Vein Based Verification System. Digit. Signal Process. 2015, 38, 43–52.

- Miura, N.; Nagasaka, A.; Miyatake, T. Feature Extraction of Finger-vein Patterns Based on Repeated Line Tracking and Its Application to Personal Identification. Mach. Vis. Appl. 2004, 15, 194–203.

- Dong, L.; Yang, G.; Yin, Y.; Liu, F.; Xi, X. Finger Vein Verification Based on a Personalized Best Patches Map. In Proceedings of the IEEE International Joint Conference on Biometrics, Clearwater, FL, USA, 29 September–2 October 2014; pp. 1–8.

- Liu, F.; Yin, Y.; Yang, G.; Dong, L.; Xi, X. Finger Vein Recognition with Superpixel-based Features. In Proceedings of the IEEE International Joint Conference on Biometrics, Clearwater, FL, USA, 29 September–2 October 2014; pp. 1–8.

- Xi, X.; Yang, L.; Yin, Y. Learning Discriminative Binary Codes for Finger Vein Recognition. Pattern Recognit. 2017, 66, 26–33.

- Joseph, R.B.; Ezhilmaran, D. An Efficient Approach to Finger Vein Pattern Extraction Using Fuzzy Rule-based System. In Proceedings of the 5th Innovations in Computer Science and Engineering, Hyderabad, India, 16–17 August 2017; pp. 435–443.

- Pham, T.D.; Park, Y.H.; Nguyen, D.T.; Kwon, S.Y.; Park, K.R. Nonintrusive Finger-vein Recognition System Using NIR Image Sensor and Accuracy Analyses According to Various Factors. Sensors 2015, 15, 16866–16894.

- Yang, L.; Yang, G.; Xi, X.; Meng, X.; Zhang, C.; Yin, Y. Tri-branch Vein Structure Assisted Finger vein Recognition. IEEE Access 2017, 5, 21020–21028.

| Layer | Filter (Number/Size/Stride) | Input Size | Output Size |
|---|---|---|---|
| Input layer | 256 × 256 × 3 (×2) | 256 × 256 × 6 | |
| Conv1 * | 64/4 × 4 × 3/2 | 256 × 256 × 6 | 128 × 128 × 64 |
| Conv2 * | 128/4 × 4 × 64/2 | 128 × 128 × 64 | 64 × 64 × 128 |
| Conv3 * | 256/4 × 4 × 128/2 | 64 × 64 × 128 | 32 × 32 × 256 |
| Conv4 * | 512/4 × 4 × 256/1 | 32 × 32 × 256 | 31 × 31 × 512 |
| Conv5 | 1/4 × 4 × 512/1 | 31 × 31 × 512 | 30 × 30 × 1 |
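
The output sizes in the discriminator table above can be reproduced with the standard convolution size formula, floor((n + 2p − k) / s) + 1. This is a minimal sketch that assumes a padding of 1 on every 4 × 4 layer; the padding is not listed in the table, and the helper names are hypothetical.

```python
def conv_out(size: int, kernel: int, stride: int, padding: int = 1) -> int:
    """Output height/width of a convolution: floor((n + 2p - k) / s) + 1."""
    return (size + 2 * padding - kernel) // stride + 1


def trace_discriminator(size: int = 256) -> list[tuple[str, int]]:
    # (layer name, kernel size, stride) as listed in the table; padding of 1 is assumed.
    layers = [("Conv1", 4, 2), ("Conv2", 4, 2), ("Conv3", 4, 2),
              ("Conv4", 4, 1), ("Conv5", 4, 1)]
    trace = []
    for name, kernel, stride in layers:
        size = conv_out(size, kernel, stride)
        trace.append((name, size))
    return trace


for name, size in trace_discriminator():
    print(f"{name}: {size} x {size}")
```

Because Conv4 and Conv5 use stride 1, each trims only one pixel, which is why the final score map is 30 × 30 rather than a power of two.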

| Layer | Filter (Number/Size/Stride) | Input Size | Output Size |
|---|---|---|---|
| Input layer | 256 × 256 × 3 | 256 × 256 × 3 | |
| Conv1 | 64/7 × 7 × 3/1 | 256 × 256 × 3 | 256 × 256 × 64 |
| Conv2 * | 128/3 × 3 × 64/2 | 256 × 256 × 64 | 128 × 128 × 128 |
| Conv3 * | 256/3 × 3 × 128/2 | 128 × 128 × 128 | 64 × 64 × 256 |
| Res1 | (256/3 × 3 × 256/1) × 3 ** | 64 × 64 × 256 | 64 × 64 × 256 |
| Res2 | (256/3 × 3 × 256/1) × 3 ** | 64 × 64 × 256 | 64 × 64 × 256 |
| Res3 | (256/3 × 3 × 256/1) × 3 ** | 64 × 64 × 256 | 64 × 64 × 256 |
| Res4 | (256/3 × 3 × 256/1) × 3 ** | 64 × 64 × 256 | 64 × 64 × 256 |
| Res5 | (256/3 × 3 × 256/1) × 3 ** | 64 × 64 × 256 | 64 × 64 × 256 |
| Res6 | (256/3 × 3 × 256/1) × 3 ** | 64 × 64 × 256 | 64 × 64 × 256 |
| Res7 | (256/3 × 3 × 256/1) × 3 ** | 64 × 64 × 256 | 64 × 64 × 256 |
| Res8 | (256/3 × 3 × 256/1) × 3 ** | 64 × 64 × 256 | 64 × 64 × 256 |
| Res9 | (256/3 × 3 × 256/1) × 3 ** | 64 × 64 × 256 | 64 × 64 × 256 |
| Up-conv1 | 128/3 × 3 × 256/2 | 64 × 64 × 256 | 128 × 128 × 128 |
| Up-conv2 | 64/3 × 3 × 256/2 | 128 × 128 × 128 | 256 × 256 × 64 |
| Conv4 | 3/7 × 7 × 3/1 | 256 × 256 × 64 | 256 × 256 × 3 |
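
The spatial sizes in the generator table above follow from the standard convolution formula, floor((n + 2p − k) / s) + 1, and, for the two up-convolutions, the transposed-convolution formula (n − 1)s − 2p + k + output_padding. This sketch assumes padding 3 for the 7 × 7 layers, padding 1 for the 3 × 3 layers, and an output padding of 1 for the up-convolutions; these are common choices for CycleGAN-style ResNet generators but are not stated in the table, and the function names are hypothetical.

```python
def conv_out(n: int, k: int, s: int, p: int) -> int:
    # Convolution: floor((n + 2p - k) / s) + 1
    return (n + 2 * p - k) // s + 1


def deconv_out(n: int, k: int, s: int, p: int, output_padding: int) -> int:
    # Transposed convolution: (n - 1) * s - 2p + k + output_padding
    return (n - 1) * s - 2 * p + k + output_padding


def trace_generator(n: int = 256, num_res_blocks: int = 9) -> dict[str, int]:
    sizes = {}
    n = conv_out(n, 7, 1, 3)
    sizes["Conv1"] = n
    n = conv_out(n, 3, 2, 1)
    sizes["Conv2"] = n
    n = conv_out(n, 3, 2, 1)
    sizes["Conv3"] = n
    for i in range(1, num_res_blocks + 1):
        # 3 x 3 stride-1 convs with padding 1 inside a residual block keep the size
        n = conv_out(n, 3, 1, 1)
        sizes[f"Res{i}"] = n
    n = deconv_out(n, 3, 2, 1, 1)
    sizes["Up-conv1"] = n
    n = deconv_out(n, 3, 2, 1, 1)
    sizes["Up-conv2"] = n
    n = conv_out(n, 7, 1, 3)
    sizes["Conv4"] = n
    return sizes


for layer, size in trace_generator().items():
    print(f"{layer}: {size} x {size}")
```

With these assumptions, each stride-2 convolution halves the size and each up-convolution doubles it exactly, so the translated image returns to 256 × 256.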

| Layer | Filter (Number/Size/Stride) | Input Size | Output Size |
|---|---|---|---|
| Input layer | 224 × 224 × 3 | 224 × 224 × 3 | |
| Conv | (96/7 × 7 × 96/2) | 224 × 224 × 3 | 112 × 112 × 96 |
| Max pool | (96/2 × 2 × 1/2) | 112 × 112 × 96 | 57 × 57 × 96 |
| Dense block | (6/(1 × 1 × 192, 3 × 3 × 48)/1) | 57 × 57 × 96 | 57 × 57 × 384 |
| Transition block | (1/(1 × 1 × 192, 2 × 2 × 192) */1) | 57 × 57 × 384 | 29 × 29 × 192 |
| Dense block | (12/(1 × 1 × 192, 3 × 3 × 48)/1) | 29 × 29 × 192 | 29 × 29 × 768 |
| Transition block | (1/(1 × 1 × 384, 2 × 2 × 384) */1) | 29 × 29 × 768 | 15 × 15 × 384 |
| Dense block | (36/(1 × 1 × 192, 3 × 3 × 48)/1) | 15 × 15 × 384 | 15 × 15 × 2112 |
| Transition block | (1/(1 × 1 × 1056, 2 × 2 × 1056) */1) | 15 × 15 × 2112 | 8 × 8 × 1056 |
| Dense block | (24/(1 × 1 × 192, 3 × 3 × 48)/1) | 8 × 8 × 1056 | 8 × 8 × 2208 |
| Global average pool | (2208/8 × 8 × 1/1) | 8 × 8 × 2208 | 1 × 1 × 2208 |
| Fully connected layer | | 1 × 1 × 2208 | 1 × 1 × 2 |
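
The channel counts in the recognition network table above follow DenseNet's growth rule: each dense layer concatenates 48 new feature maps, and each transition block halves both the channels and the spatial size. The block sizes (6, 12, 36, 24), growth rate 48, and 96 initial channels match the DenseNet-161 configuration of Huang et al. [29]. This sketch assumes the transition pooling rounds odd sizes up, which reproduces 57 → 29 → 15 → 8; `trace_densenet` is a hypothetical helper.

```python
import math

GROWTH_RATE = 48  # feature maps added per dense layer (the "3 × 3 × 48" conv in the table)


def trace_densenet(channels: int = 96, size: int = 57,
                   block_layers: tuple[int, ...] = (6, 12, 36, 24)) -> list[tuple[str, int, int]]:
    """Return (stage, spatial size, channels) after each dense/transition block."""
    trace = []
    for i, num_layers in enumerate(block_layers):
        channels += num_layers * GROWTH_RATE  # dense connectivity: concatenate new maps
        trace.append(("Dense block", size, channels))
        if i < len(block_layers) - 1:  # no transition after the last dense block
            channels //= 2  # 1 x 1 conv compresses channels by half
            size = math.ceil(size / 2)  # 2 x 2 pooling, assumed to round up
            trace.append(("Transition block", size, channels))
    return trace


for stage, size, channels in trace_densenet():
    print(f"{stage}: {size} x {size} x {channels}")
```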

| Database | Subset | Classes | Number of Original Images | Number of Augmented Images |
|---|---|---|---|---|
| HKPolyU-DB | Training | 156 | 936 | 4680 |
| | Test | 156 | 936 | - |
| SDUMLA-HMT-DB | Training | 318 | 1908 | 9540 |
| | Test | 318 | 1908 | - |
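
The counts in the dataset table above are internally consistent: both databases contribute six images per class to each subset, and each augmented training set is five times the size of the original training set. The augmentation operations themselves are not described in this excerpt, so the snippet only checks these ratios; the variable names are illustrative.

```python
# (classes, original training images, augmented training images) from the table above.
training_subsets = {
    "HKPolyU-DB": (156, 936, 4680),
    "SDUMLA-HMT-DB": (318, 1908, 9540),
}

for name, (classes, original, augmented) in training_subsets.items():
    images_per_class = original / classes
    augmentation_factor = augmented / original
    print(f"{name}: {images_per_class:g} images/class, x{augmentation_factor:g} after augmentation")
```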

| Training of CycleGAN | Image Generation by CycleGAN | Training of Finger-Vein Recognition Model | Testing of Finger-Vein Recognition Model |
|---|---|---|---|
| Using the training data of HKPolyU-DB (input domain) and SDUMLA-HMT-DB (target domain) | Using the testing data of HKPolyU-DB | Using the training data of SDUMLA-HMT-DB | Using the images generated by CycleGAN (similar to SDUMLA-HMT-DB) |

| Training of Finger-Vein Recognition Model | Testing of Finger-Vein Recognition Model | EER (%) |
|---|---|---|
| HKPolyU-DB | HKPolyU-DB | 0.58 |
| SDUMLA-HMT-DB | HKPolyU-DB | 1.80 |

| Method | EER (%) |
|---|---|
| No domain adaptation | 1.80 |
| StarGAN-v2 [38] | 1.34 |
| ComboGAN [39] | 2.77 |
| CycleGAN (proposed method) | 0.85 |

| Method | EER (%) |
|---|---|
| Huang et al. [40] | 9.46 |
| Miura et al. [41] | 6.49 |
| Liu et al. [42] | 5.01 |
| Gupta et al. [43] | 4.47 |
| Miura et al. [44] | 4.45 |
| Dong et al. [45] | 3.53 |
| Liu et al. [46] | 1.47 |
| Xi et al. [47] | 1.44 |
| Joseph et al. [48] | 1.27 |
| Proposed method | 0.85 |

| Training of Finger-Vein Recognition Model | Testing of Finger-Vein Recognition Model | EER (%) |
|---|---|---|
| SDUMLA-HMT-DB | SDUMLA-HMT-DB | 2.17 |
| HKPolyU-DB | SDUMLA-HMT-DB | 4.42 |

| Method | EER (%) |
|---|---|
| No domain adaptation | 4.42 |
| StarGAN-v2 [38] | 4.43 |
| ComboGAN [39] | 8.96 |
| CycleGAN (proposed method) | 3.40 |

| Method | EER (%) |
|---|---|
| Jalilian et al. [18] | 3.57 |
| Pham et al. [49] | 8.09 |
| Miura et al. [44] | 5.46 |
| Miura et al. [41] | 4.54 |
| Yang et al. [50] | 3.96 |
| CycleGAN (proposed method) | 3.40 |

| Categories | Considering the Cross-Domain Problem | Method | Modality | Advantage | Disadvantage |
|---|---|---|---|---|---|
| Non-training-based | No | Wide line detector and pattern normalization [40] | Finger-vein | Simpler and more computationally efficient than training-based methods | Lower performance than training-based methods |
| | | Maximum curvature points [41] | | | |
| | | Minutiae matching [42] | | | |
| | | Multi-scale matched filter [43] | | | |
| | | Repeated line tracking [44] | | | |
| | | Personalized best patches map [45] | | | |
| | | Superpixel-based [46] | | | |
| | | Discriminative binary codes [47] | | | |
| | | Fuzzy rule-based [48] | | | |
| | | Local binary pattern [49] | | | |
| | | Tri-branch vein structure [50] | | | |
| | Yes | Dimension reduction and orientation coding algorithm [7] | Palmprint | | |
| | | SIFT [8] | Dorsal hand-vein | | |
| | | Improved SIFT [9] | | | |
| | | BGP and Gabor-HoG [10] | Fingerprint | | |
| | | Least square-based domain transformation function [11] | | | |
| Training-based | No | VGG-16 and CNN [20] | Fingerprint | Preprocessing is not required | Does not consider the heterogeneous data problem |
| | | Patch-based MobileNet [21] | | | |
| | | CGAN [19] | | | Does not perform well in cross-sensor environments |
| | | FCN [18] | Finger-vein | Using compact information in the recognition stage increases generality | Unreliable label data were used |
| | Yes | FLDA [12] | Face and fingerprint | Simple method for domain adaptation | Requires multimodal data from the same people |
| | | Universal material translator wrapper [13] | Fingerprint | Uses a simple style transfer network | Generated images cannot deal with level 3 features |
| | | DeepDomainPore network [14] | | Can exploit level 3 features using low-resolution input | Long preprocessing time and ground truth required for source data |
| | | PalmGAN [15] | Palmprint | Automatically generates label data for the target domain | Long preprocessing time and ground truth required for source data; segmentation method is unstable |
| | | Auto-encoder [16] | | Automatically generates label data for the target domain; simple method for domain matching with good matching performance | |
| | | DeepScatNet and RDF [17] | Finger-selfie | | |
| | | CycleGAN-based (proposed method) | Finger-vein | High performance for domain adaptation; does not need ground truth for source data | Intensive training of CycleGAN is required |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Noh, K.J.; Choi, J.; Hong, J.S.; Park, K.R. Finger-Vein Recognition Using Heterogeneous Databases by Domain Adaption Based on a Cycle-Consistent Adversarial Network. Sensors 2021, 21, 524. https://doi.org/10.3390/s21020524
