Robot-Assisted Gait Self-Training: Assessing the Level Achieved

Abstract

1. Introduction

2. Requirements

2.1. Methodological–Technical Aspects

2.2. User Perspective

2.3. Health Economic Aspects

3. State-of-the-Art

3.1. Approaches from Science

3.2. Related Products on the Market

4. Mobile Gait Self-Training under Real Clinical Environment Conditions

4.1. Training Application

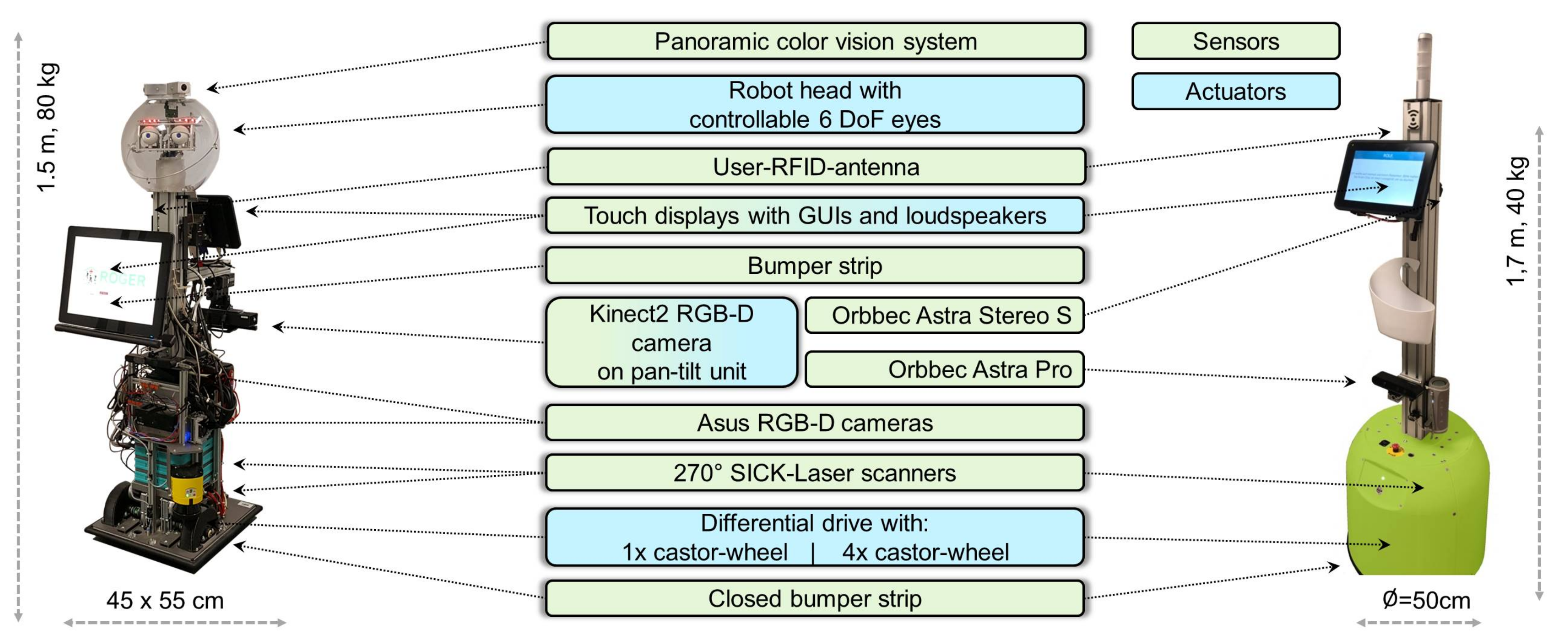

4.2. Robot Platforms Used

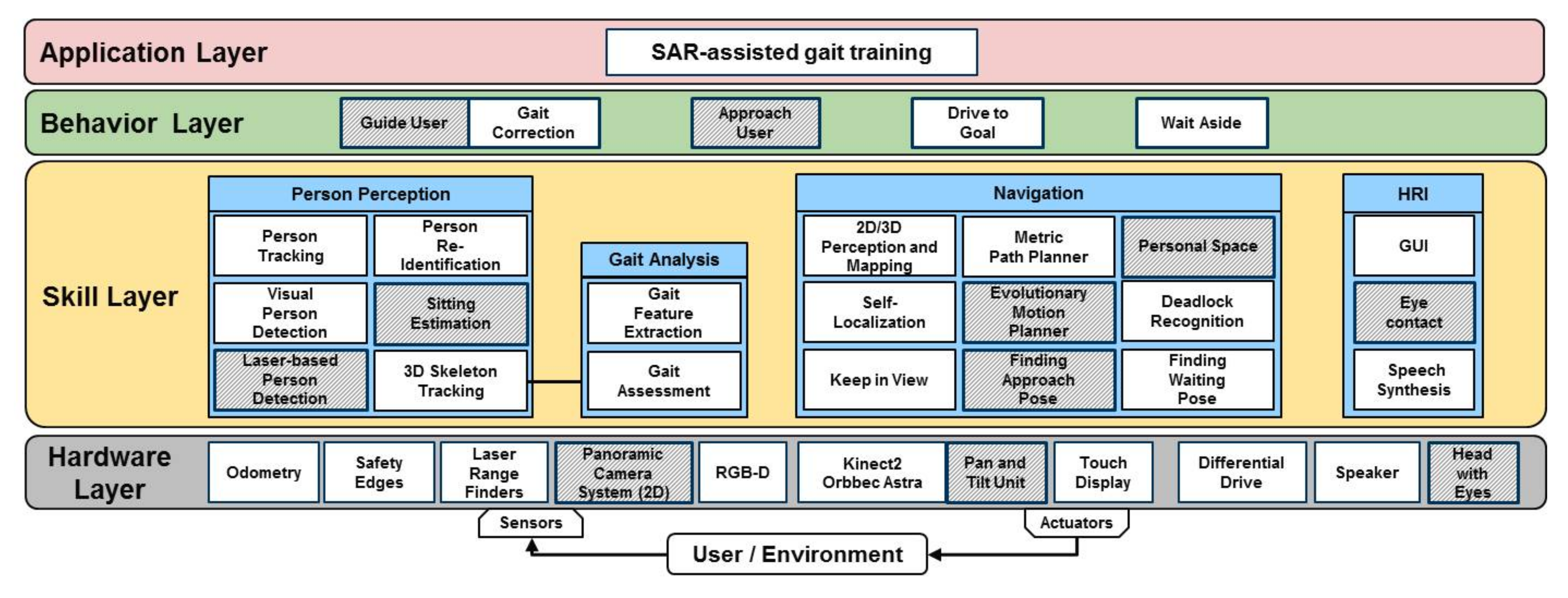

4.3. System Architectures for Both Robot Platforms

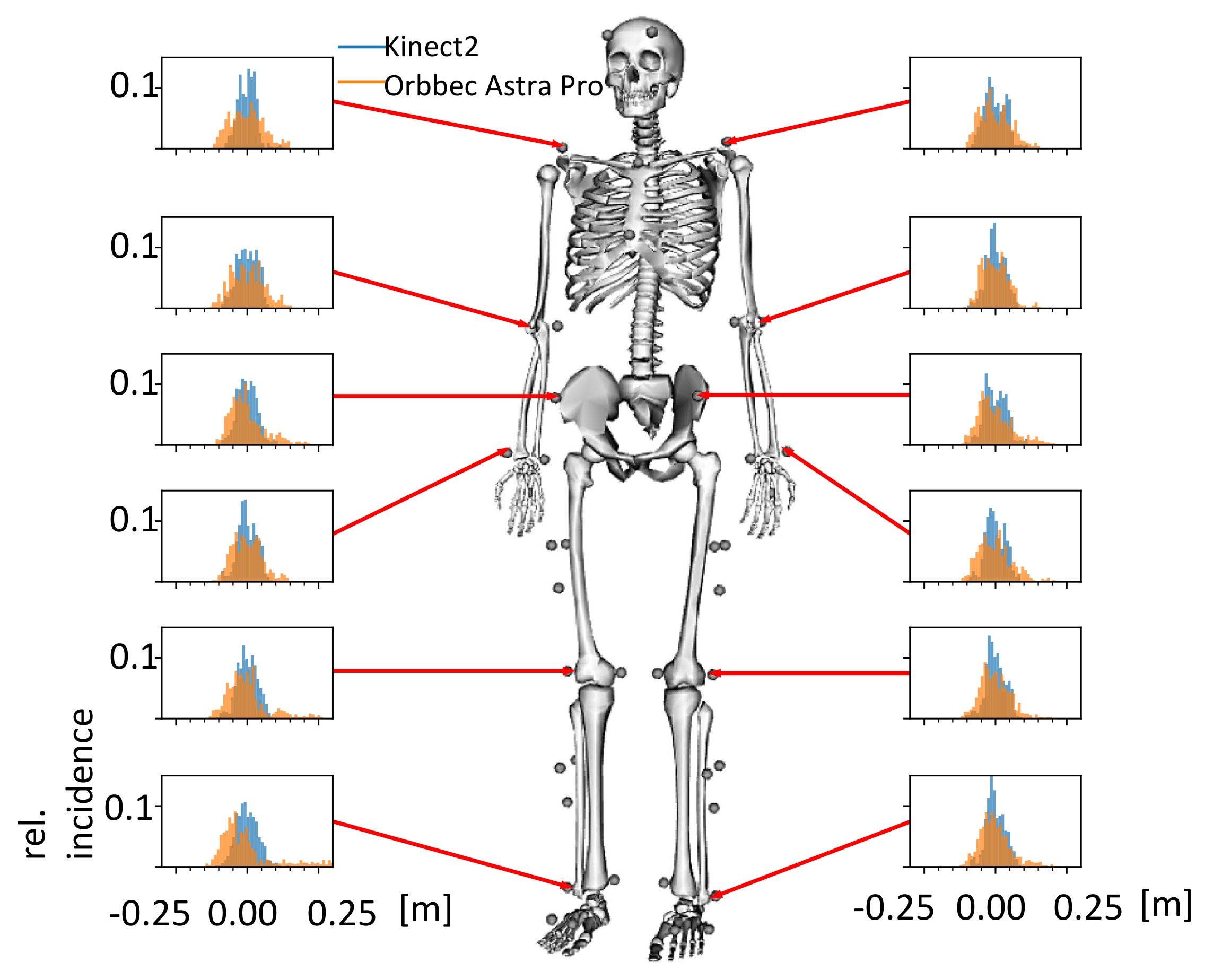

5. State of Development from a Methodological–Technical Point of View

5.1. Technical Benchmarking

5.1.1. Product Prototype Platform

5.1.2. Research Platform

6. State of Development from the Users’ Point of View

7. State of Development from an Economic Perspective

8. Conclusions on the Questions of the Article and Outlook

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Acknowledgments

Conflicts of Interest


Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Scheidig, A.; Schütz, B.; Trinh, T.Q.; Vorndran, A.; Mayfarth, A.; Sternitzke, C.; Röhner, E.; Gross, H.-M. Robot-Assisted Gait Self-Training: Assessing the Level Achieved. Sensors 2021, 21, 6213. https://doi.org/10.3390/s21186213
