A Spatiotemporal Deep Learning Approach for Automatic Pathological Gait Classification

Abstract

1. Introduction

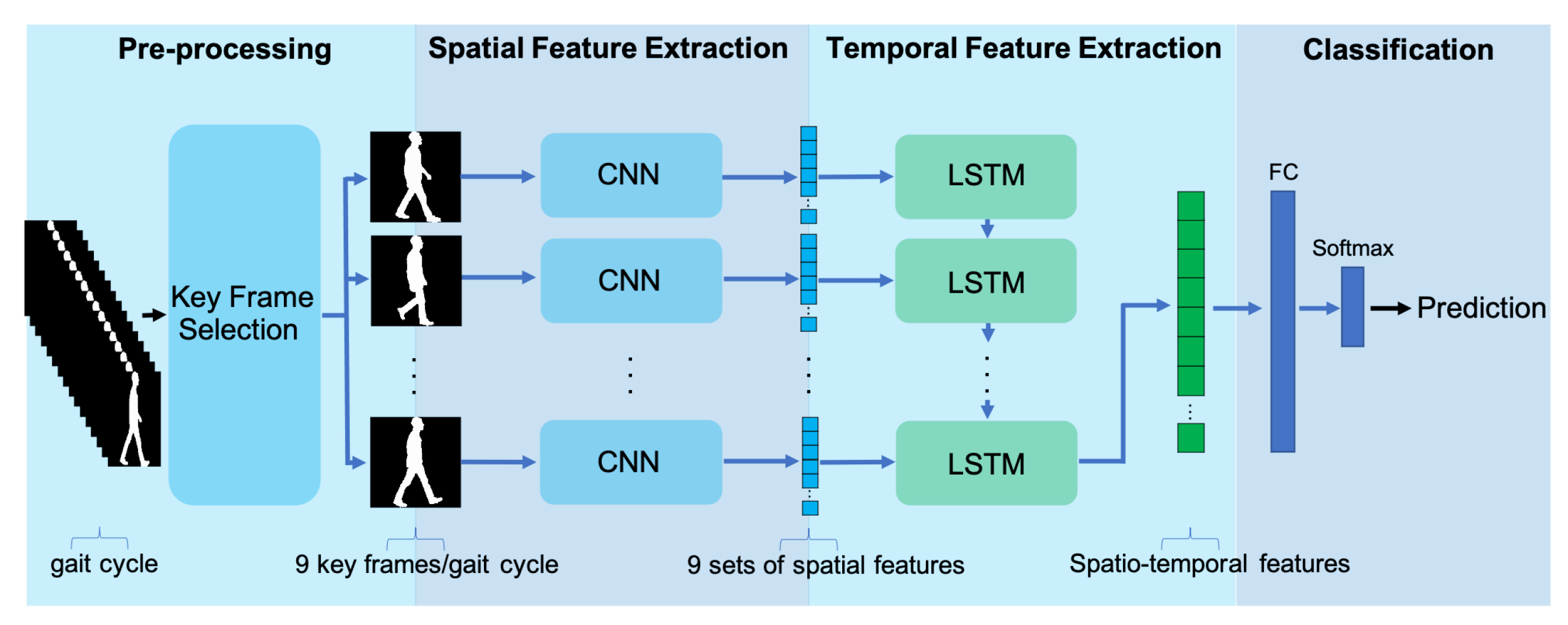

- Representation by a selection of key frames—A complete gait cycle is represented by a set of key silhouette frames obtained by the proposed frame selection module. These key frames are selected with reference to the functional phases expected in normal gait, according to the clinical gait literature [33]: initial contact, midstance, terminal stance, preswing, initial swing, midswing, and terminal swing. This representation preserves more information than the compact representations often adopted by state-of-the-art solutions, such as the Gait Energy Image (GEI). In particular, it enables a deeper analysis of the temporal dependencies between consecutive instants of human locomotion;

- Spatiotemporal deep learning framework for classification—The proposed pathological gait classification framework combines convolutional and recurrent neural networks to extract both spatial and temporal features from the set of key frames representing a gait cycle. A CNN extracts spatial features from each key frame, that is, at individual time instants. An RNN, specifically a bidirectional LSTM, then learns the temporal patterns of these spatial features along the gait cycle. Finally, the gait is classified into one of five classes: diplegic, hemiplegic, neuropathic, Parkinsonian, or healthy/normal gait.
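The GEI is the pixel-wise average of aligned binary silhouettes over one gait cycle (Han and Bhanu [22]). The NumPy sketch below shows why such an average cannot capture temporal order. It uses a hypothetical toy cycle of nine 3×9 frames in which a single foreground pixel moves one column per frame. Reversing the frames changes the key-frame sequence but leaves the GEI unchanged. The helper `gait_energy_image` is illustrative and is not code from this work.

```python
import numpy as np


def gait_energy_image(silhouettes: np.ndarray) -> np.ndarray:
    """Pixel-wise mean of aligned binary silhouettes over one gait cycle.

    silhouettes: array of shape (T, H, W) with values in {0, 1}.
    Returns an (H, W) float image with values in [0, 1].
    """
    return silhouettes.astype(np.float64).mean(axis=0)


# Hypothetical toy cycle of T=9 key frames, each 3x9 pixels: a single
# foreground pixel (standing in for a foot) moves one column per frame.
T, H, W = 9, 3, 9
forward = np.zeros((T, H, W), dtype=np.uint8)
for t in range(T):
    forward[t, 1, t] = 1

# The same frames in reverse order describe the opposite motion.
backward = forward[::-1]

# The key-frame representation keeps both the frames and their order...
print(forward.shape)                      # (9, 3, 9)
print(np.array_equal(forward, backward))  # False

# ...while the GEI collapses them into one image that is identical for both.
print(gait_energy_image(forward).shape)   # (3, 9)
print(np.array_equal(gait_energy_image(forward),
                     gait_energy_image(backward)))  # True
```

Any two orderings of the same frames produce the same GEI. A sequence model operating on the key frames can, in principle, tell them apart.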

2. Materials and Methods: Proposed Gait Analysis System

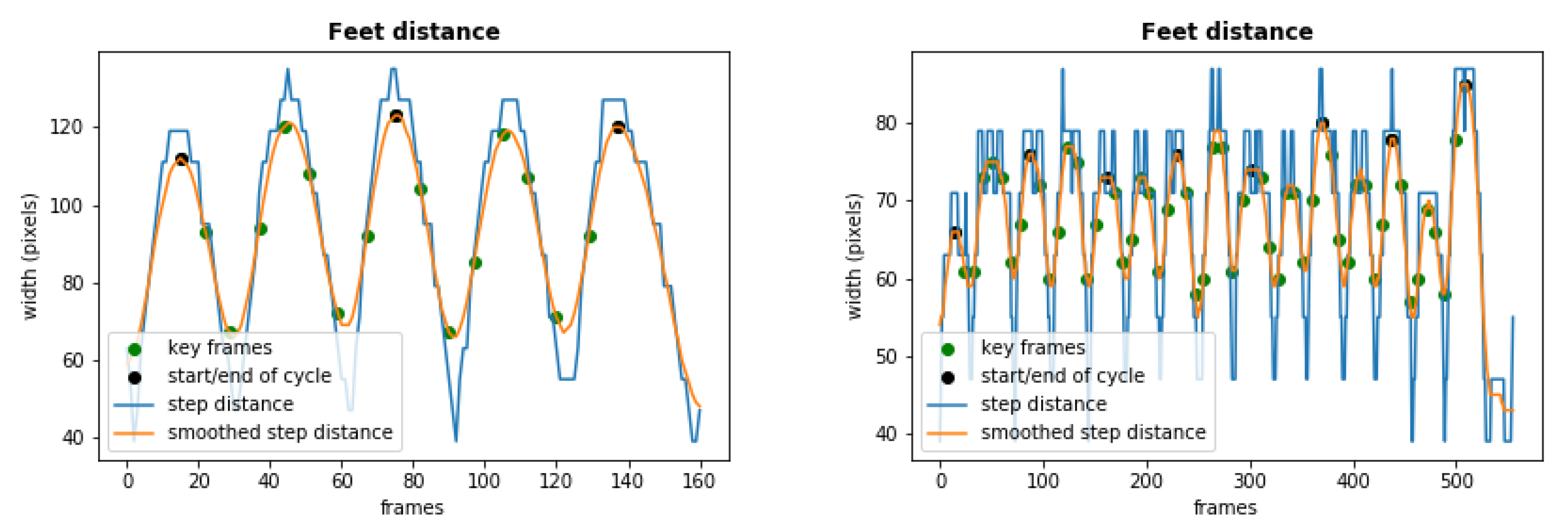

- Key frame selection—This module selects 9 key frames to represent 1 gait cycle, with each key frame containing a binary silhouette;

- Spatial feature extraction—This module processes each of the 9 selected key frames individually with a pretrained CNN, obtaining 9 sets of 2D spatial features, each flattened into a 1D vector;

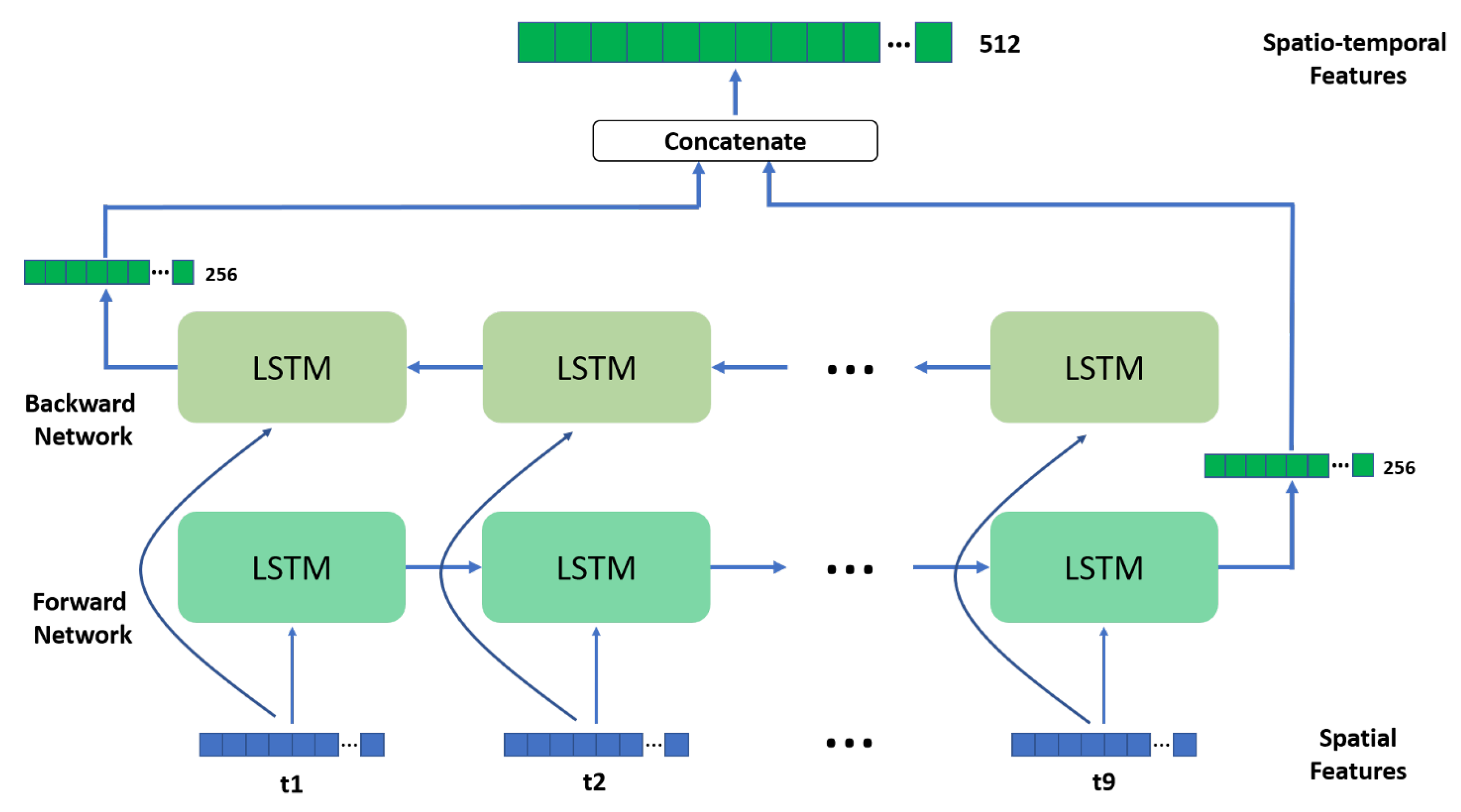

- Temporal feature extraction—This module processes the sequence of spatial features extracted from the 9 key frames representing one gait cycle, using a bidirectional LSTM network, to obtain the desired spatiotemporal features;

- Classification—The final module feeds the spatiotemporal features extracted by the previous module to a dense neural network of fully connected layers, which outputs the classification decision.
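The following minimal NumPy sketch traces the data flow and tensor shapes through the modules for one gait cycle. It rests on several assumptions.

- The pretrained CNN is replaced by a hypothetical random linear projection followed by ReLU.
- The frame size (64×44), the feature size (32), and the LSTM size (16) are arbitrary.
- The dense classifier is reduced to a single layer.
- All weights are random and untrained.

It illustrates the per-frame CNN features, then the bidirectional LSTM, then the dense classifier. It is not the authors' implementation, and the names `init_params`, `lstm_last_state`, and `classify_cycle` are hypothetical.

```python
import numpy as np

CLASSES = ["diplegic", "hemiplegic", "neuropathic", "normal", "parkinsonian"]
rng = np.random.default_rng(0)


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def init_lstm(in_dim, hidden):
    # Input weights, recurrent weights and bias for the 4 stacked gates.
    return {"W": rng.normal(0.0, 0.1, (4 * hidden, in_dim)),
            "U": rng.normal(0.0, 0.1, (4 * hidden, hidden)),
            "b": np.zeros(4 * hidden)}


def lstm_last_state(xs, p):
    # Standard LSTM recurrence; gates stacked as [input, forget, cell, output].
    hidden = p["U"].shape[1]
    h, c = np.zeros(hidden), np.zeros(hidden)
    for x in xs:
        z = p["W"] @ x + p["U"] @ h + p["b"]
        i = sigmoid(z[:hidden])
        f = sigmoid(z[hidden:2 * hidden])
        g = np.tanh(z[2 * hidden:3 * hidden])
        o = sigmoid(z[3 * hidden:])
        c = f * c + i * g
        h = o * np.tanh(c)
    return h  # final hidden state after reading the whole sequence


def init_params(frame_shape, feat_dim=32, hidden=16):
    n_pixels = frame_shape[0] * frame_shape[1]
    return {
        # Hypothetical stand-in for the pretrained CNN (not a real CNN).
        "proj": rng.normal(0.0, 0.05, (feat_dim, n_pixels)),
        "fwd": init_lstm(feat_dim, hidden),
        "bwd": init_lstm(feat_dim, hidden),
        "dense": rng.normal(0.0, 0.1, (len(CLASSES), 2 * hidden)),
    }


def classify_cycle(key_frames, params):
    # 1) Spatial features: one flattened 1D vector per key frame -> (T, feat_dim).
    feats = np.stack([np.maximum(0.0, params["proj"] @ f.reshape(-1))
                      for f in key_frames.astype(np.float64)])
    # 2) Temporal features: one LSTM reads the cycle forward, another backward;
    #    their final states are concatenated -> (2 * hidden,).
    st = np.concatenate([lstm_last_state(feats, params["fwd"]),
                         lstm_last_state(feats[::-1], params["bwd"])])
    # 3) Classification: one fully connected layer + softmax over the 5 classes.
    logits = params["dense"] @ st
    e = np.exp(logits - logits.max())
    return e / e.sum()


# Usage: 9 toy binary "silhouettes" of 64x44 pixels, untrained weights.
frames = (rng.random((9, 64, 44)) > 0.5).astype(np.uint8)
params = init_params(frames.shape[1:])
probs = classify_cycle(frames, params)
print(probs.shape)                   # (5,)
print(CLASSES[int(probs.argmax())])  # arbitrary, since weights are untrained
```

In this sketch the bidirectional LSTM is built from two independent unidirectional passes. Their final hidden states are concatenated, which is one common way of summarizing a whole sequence for classification.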

2.1. Key Frame Selection

2.2. Spatial Feature Extraction

2.3. Temporal Feature Extraction

2.4. Classification

3. Results

3.1. Cross-Validation Results

3.2. Cross-Dataset Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Gafurov, D. A survey of biometric gait recognition: Approaches, security and challenges. In Proceedings of the Annual Norwegian Computer Science Conference, Oslo, Norway, 19–21 November 2007.

2. Bouchrika, I. A survey of using biometrics for smart visual surveillance: Gait recognition. In Surveillance in Action: Technologies for Civilian, Military and Cyber Surveillance; Karampelas, P., Bourlai, T., Eds.; Springer International Publishing: Cham, Switzerland, 2018; pp. 3–23.

3. Verlekar, T.; Correia, P.; Soares, L. Gait recognition using normalized shadows. In Proceedings of the 2017 25th European Signal Processing Conference (EUSIPCO), Kos, Greece, 28 August–2 September 2017; pp. 936–940.

4. Muro-De-La-Herran, A.; Garcia-Zapirain, B.; Mendez-Zorrilla, A. Gait analysis methods: An overview of wearable and non-wearable systems, highlighting clinical applications. Sensors 2014, 14, 3362–3394.

5. Kessler, E.; Malladi, V.V.S.; Tarazaga, P.A. Vibration-based gait analysis via instrumented buildings. Int. J. Distrib. Sens. Netw. 2019, 15.

6. Chaparro-Rico, B.D.M.; Cafolla, D.; Tortola, P.; Galardi, G. Assessing Stiffness, Joint Torque and ROM for Paretic and Non-Paretic Lower Limbs during the Subacute Phase of Stroke Using Lokomat Tools. Appl. Sci. 2020, 10, 6168.

7. Tao, W.; Liu, T.; Zheng, R.; Feng, H. Gait Analysis Using Wearable Sensors. Sensors 2012, 12, 2255–2283.

8. Hssayeni, M.D.; Jimenez-Shahed, J.; Burack, M.A.; Ghoraani, B. Wearable Sensors for Estimation of Parkinsonian Tremor Severity during Free Body Movements. Sensors 2019, 19, 4215.

9. Marsico, M.D.; Mecca, A. A Survey on Gait Recognition via Wearable Sensors. ACM Comput. Surv. 2019, 52.

10. Steinert, A.; Sattler, I.; Otte, K.; Röhling, H.; Mansow-Model, S.; Müller-Werdan, U. Using New Camera-Based Technologies for Gait Analysis in Older Adults in Comparison to the Established GAITRite System. Sensors 2020, 20, 125.

11. Rocha, A.P.; Choupina, H.; Fernandes, J.M.; Rosas, M.J.; Vaz, R.; Silva Cunha, J.P. Kinect v2 based system for Parkinson’s disease assessment. In Proceedings of the 2015 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Milan, Italy, 25–29 August 2015; pp. 1279–1282.

12. Ilić, T. A vision-based system for movement analysis in medical applications: The example of Parkinson disease. In Proceedings of the International Conference on Computer Vision Systems, Copenhagen, Denmark, 6–9 July 2015; pp. 424–434.

13. Vanrenterghem, J.; Gormley, D.; Robinson, M.; Lees, A. Solutions for representing the whole-body centre of mass in side cutting manoeuvres based on data that is typically available for lower limb kinematics. Gait Posture 2010, 31, 517–521.

14. Serrano, M.; Chen, Y.; Howard, A.; Vela, P. Automated feet detection for clinical gait assessment. In Proceedings of the 38th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Orlando, FL, USA, 16–20 August 2016; pp. 2161–2164.

15. Ortells, J.; Herrero-Ezquerro, M.; Mollineda, R. Vision-based gait impairment analysis for aided diagnosis. Med. Biol. Eng. Comput. 2018, 56, 1553–1564.

16. Nieto-Hidalgo, M.; Ferrández-Pastor, F.; Valdivieso-Sarabia, R.; Mora-Pascual, J.; García-Chamizo, J. Vision based extraction of dynamic gait features focused on feet movement using RGB camera. In Ambient Intelligence for Health; Springer: Berlin/Heidelberg, Germany, 2015; pp. 155–166.

17. Leu, A.; Ristić-Durrant, D.; Gräser, A. A robust markerless vision-based human gait analysis system. In Proceedings of the 2011 6th IEEE International Symposium on Applied Computational Intelligence and Informatics (SACI), Timisoara, Romania, 19–21 May 2011; pp. 415–420.

18. Chaaraoui, A.A.; Padilla-López, J.R.; Flórez-Revuelta, F. Abnormal gait detection with RGB-D devices using joint motion history features. In Proceedings of the 2015 11th IEEE International Conference and Workshops on Automatic Face and Gesture Recognition (FG), Ljubljana, Slovenia, 4–8 May 2015; Volume 07, pp. 1–6.

19. Nambiar, A.; Correia, P.L.; Soares, L.D. Frontal Gait Recognition Combining 2D and 3D Data. In Proceedings of the ACM Workshop on Multimedia and Security, Coventry, UK, 6–7 September 2012; pp. 145–150.

20. Chaparro-Rico, B.D.M.; Cafolla, D. Test-Retest, Inter-Rater and Intra-Rater Reliability for Spatiotemporal Gait Parameters Using SANE (an eaSy gAit aNalysis systEm) as Measuring Instrument. Appl. Sci. 2020, 10, 5781.

21. Verlekar, T.; Soares, L.; Correia, P. Automatic classification of gait impairments using a markerless 2D video-based system. Sensors 2018, 18, 2743.

22. Han, J.; Bhanu, B. Individual recognition using gait energy image. IEEE Trans. Pattern Anal. Mach. Intell. 2005, 28, 316–322.

23. Nieto-Hidalgo, M.; García-Chamizo, J. Classification of pathologies using a vision based feature extraction. In Proceedings of the International Conference on Ubiquitous Computing and Ambient Intelligence, Philadelphia, PA, USA, 7–10 November 2017; Springer: Berlin/Heidelberg, Germany, 2017; pp. 265–274.

24. Zhao, G.; Liu, G.; Li, H.; Pietikainen, M. 3D gait recognition using multiple cameras. In Proceedings of the 7th International Conference on Automatic Face and Gesture Recognition (FGR06), Southampton, UK, 10–12 April 2006; pp. 529–534.

25. Firmino, J. Using Deep Learning for Gait Abnormality Classification. Master’s Thesis, Instituto Superior Técnico, Lisbon, Portugal, 2019.

26. Cao, Z.; Hidalgo, G.; Simon, T.; Wei, S.E.; Sheikh, Y. OpenPose: Realtime multi-person 2D pose estimation using Part Affinity Fields. arXiv 2018, arXiv:1812.08008.

27. Verlekar, T.; Correia, P.; Soares, L. Using transfer learning for classification of gait pathologies. In Proceedings of the 2018 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), Madrid, Spain, 3–6 December 2018; pp. 2376–2381.

28. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

29. Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Li, F.F. ImageNet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

30. Loureiro, J.; Correia, P. Using a skeleton gait energy image for pathological gait classification. In Proceedings of the 2020 15th IEEE International Conference on Automatic Face and Gesture Recognition (FG 2020), Buenos Aires, Argentina, 16–20 November 2020; pp. 410–414.

31. Khokhlova, M.; Migniot, C.; Morozov, A.; Sushkova, O.; Dipanda, A. Normal and pathological gait classification LSTM model. Artif. Intell. Med. 2019, 94, 54–66.

32. Mutegeki, R.; Han, D.S. A CNN-LSTM approach to human activity recognition. In Proceedings of the 2020 International Conference on Artificial Intelligence in Information and Communication (ICAIIC), Fukuoka, Japan, 19–21 February 2020; pp. 362–366.

33. Perry, J.; Schoneberger, B. Gait Analysis: Normal and Pathological Function; Slack: San Francisco, CA, USA, 1992.

34. Albuquerque, P.; Machado, J.; Verlekar, T.T.; Soares, L.D.; Correia, P.L. Remote Pathological Gait Classification System. arXiv 2021, arXiv:2105.01634.

35. Hochreiter, S.; Schmidhuber, J. Long Short-Term Memory. Neural Comput. 1997, 9, 1735–1780.

36. Li, C.; Min, X.; Sun, S.; Lin, W.; Tang, Z. DeepGait: A learning deep convolutional representation for view-invariant gait recognition using joint Bayesian. Appl. Sci. 2017, 7, 210.

37. Rezende, E.; Ruppert, G.; Carvalho, T.; Theophilo, A.; Ramos, F.; de Geus, P. Malicious software classification using VGG16 deep neural network’s bottleneck features. In Information Technology – New Generations; Latifi, S., Ed.; Springer International Publishing: Cham, Switzerland, 2018; pp. 51–59.

38. Awais, M.; Müller, H.; Tang, T.B.; Meriaudeau, F. Classification of SD-OCT images using a deep learning approach. In Proceedings of the 2017 IEEE International Conference on Signal and Image Processing Applications (ICSIPA), Kuching, Malaysia, 12–14 September 2017; pp. 489–492.

39. Schuster, M.; Paliwal, K. Bidirectional recurrent neural networks. IEEE Trans. Signal Process. 1997, 45, 2673–2681.

40. Singh, B.; Marks, T.; Jones, M.; Tuzel, O.; Shao, M. A multi-stream bi-directional recurrent neural network for fine-grained action detection. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1961–1970.

41. Graves, A.; Schmidhuber, J. Framewise phoneme classification with bidirectional LSTM and other neural network architectures. Neural Netw. 2005, 18, 602–610.

42. Srivastava, N.; Hinton, G.; Krizhevsky, A.; Sutskever, I.; Salakhutdinov, R. Dropout: A Simple Way to Prevent Neural Networks from Overfitting. J. Mach. Learn. Res. 2014, 15, 1929–1958.

43. Kingma, D.; Ba, J. Adam: A method for stochastic optimization. In Proceedings of the 3rd International Conference on Learning Representations, ICLR 2015, Conference Track Proceedings, San Diego, CA, USA, 7–9 May 2015; pp. 1–15.

44. Dozat, T. Incorporating Nesterov Momentum into Adam. In Proceedings of the 4th International Conference on Learning Representations, ICLR 2016, Workshop Track Proceedings, San Juan, Puerto Rico, 2–4 May 2016.

45. Shi, X.; Chen, Z.; Wang, H.; Yeung, D.Y.; Wong, W.K.; Woo, W.C. Convolutional LSTM network: A machine learning approach for precipitation nowcasting. Adv. Neural Inf. Process. Syst. 2015, 2015, 802–810.

46. Sepas-Moghaddam, A.; Etemad, A.; Pereira, F.; Correia, P. Long Short-Term Memory With Gate and State Level Fusion for Light Field-Based Face Recognition. IEEE Trans. Inf. Forensics Secur. 2021, 16, 1365–1379.

| True Class / Predicted Class | Diplegic | Hemiplegic | Neuropathic | Normal | Parkinsonian |
|---|---|---|---|---|---|
| Diplegic | 92 (10) | 4 | 0 | 0 | 4 |
| Hemiplegic | 1 | 96 (2) | 3 | 0 | 0 |
| Neuropathic | 0 | 2 | 98 (4) | 0 | 0 |
| Normal | 0 | 0 | 1 | 99 (3) | 0 |
| Parkinsonian | 1 | 0 | 0 | 0 | 98 (3) |
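Assume each row of the confusion matrix is expressed as a percentage of that true class's samples. The rows sum to 100, or 99 for Parkinsonian, presumably because of rounding. Under that assumption, each diagonal entry is the per-class recall. The short NumPy check below copies the values from the table and omits the parenthesized values, whose meaning is not stated here.

```python
import numpy as np

classes = ["Diplegic", "Hemiplegic", "Neuropathic", "Normal", "Parkinsonian"]
# Rows: true class; columns: predicted class. Values copied from the table,
# parenthesized values omitted.
cm = np.array([
    [92,  4,  0,  0,  4],
    [ 1, 96,  3,  0,  0],
    [ 0,  2, 98,  0,  0],
    [ 0,  0,  1, 99,  0],
    [ 1,  0,  0,  0, 98],
])

# Assumption: rows are percentages of each true class, so they sum to ~100.
print(cm.sum(axis=1))  # [100 100 100 100  99]

# Under that assumption the diagonal is the per-class recall (in %).
recall = np.diag(cm)
for name, r in zip(classes, recall):
    print(f"{name}: {r}%")
print(f"mean per-class recall: {recall.mean():.1f}%")  # 96.6%
```

The unweighted mean of the diagonal treats all five classes equally. It matches overall accuracy only if the classes have equal numbers of test samples.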

| Pathological Gait Classification System | Input Type | Accuracy (%) |
|---|---|---|
| VGG-19 [25] | GEI | 94.0 |
| VGG-19 [25] | SEI | 93.6 |
| VGG-16-BiLSTM | Binary Silhouettes | 96.5 |

| Pathological Gait Classification System | Input Type | Accuracy (%) |
|---|---|---|
| VGG-19 [25] | GEI | 86.4 |
| VGG-19 [25] | SEI | 85.1 |
| VGG-16-BiLSTM | Binary Silhouettes | 91.4 |
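The two accuracy tables can be summarized by two numbers. The first is the absolute gain of VGG-16-BiLSTM over the best VGG-19 baseline. The second is the fraction of that baseline's errors (100 minus accuracy) that is removed. Below is a small Python sketch of this arithmetic. The values are copied from the tables, and the helper name `gain_over_best_baseline` is hypothetical.

```python
# Accuracies (%) copied from the two tables, in row order.
tables = {
    "first table":  {"VGG-19 (GEI)": 94.0, "VGG-19 (SEI)": 93.6, "VGG-16-BiLSTM": 96.5},
    "second table": {"VGG-19 (GEI)": 86.4, "VGG-19 (SEI)": 85.1, "VGG-16-BiLSTM": 91.4},
}


def gain_over_best_baseline(acc, proposed="VGG-16-BiLSTM"):
    """Return (absolute gain in percentage points, % of baseline errors removed)."""
    best = max(v for k, v in acc.items() if k != proposed)
    gain = acc[proposed] - best          # absolute gain, percentage points
    rel = 100.0 * gain / (100.0 - best)  # share of the baseline's errors removed
    return gain, rel


for name, acc in tables.items():
    gain, rel = gain_over_best_baseline(acc)
    print(f"{name}: +{gain:.1f} pp, {rel:.1f}% fewer errors than the best baseline")
```

For these values the sketch prints a gain of +2.5 pp (41.7% fewer errors) for the first table and +5.0 pp (36.8% fewer errors) for the second.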

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Albuquerque, P.; Verlekar, T.T.; Correia, P.L.; Soares, L.D. A Spatiotemporal Deep Learning Approach for Automatic Pathological Gait Classification. Sensors 2021, 21, 6202. https://doi.org/10.3390/s21186202
