Abstract

In this work, we present an eye-image acquisition device that can be used as an image acquisition front-end application in compact, low-cost, and easy-to-integrate products for smart-city access control applications, based on iris recognition. We present the advantages and disadvantages of iris recognition compared to fingerprint- or face recognition. We also present the main drawbacks of the existing commercial solutions and propose a concept device design for door-mounted access control systems based on iris recognition technology. Our eye-image acquisition device was built around a low-cost camera module. An integrated infrared distance measurement was used for active image focusing. FPGA image processing was used for raw-RGB to grayscale demosaicing and passive image focusing. The integrated visible light illumination meets the IEC62471 photobiological safety standard. We report the operation of the distance-measurement subsystem, the operation of the image-focusing subsystem, examples of acquired images of an artificial toy eye under different illumination conditions, and the calculation of illumination exposure hazards. We managed to acquire a sharp image of an artificial toy eye sized 22 mm in diameter from an approximate distance of 10 cm, with 400 pixels over the iris diameter, an average acquisition time of 1 s, and illumination below hazardous exposure levels.

1. Introduction

Computer vision technology uses digital image processing to extract information from images, which is then used in decision algorithms employed by many applications. State-of-the-art multi-vision systems represent an extension of monocular vision systems, where a wider range of 3D geometric information is obtained [1]. For example, in a recent object detection application, Tang et al. [2] used a dynamic real-time, mark-free, four-ocular stereoscopic visual tracking system to measure the surface deformation of large-field recycled concrete-filled steel tube columns. Computer vision technology can also be used to automate biometrics processes that are used for the identification and authentication of individuals by measuring and analyzing their personal traits: fingerprints, iris, hand geometry, voice, face, vascular pattern, palm-print, or behavioral characteristics (e.g., signature, typing pattern, and gait). Automated biometric technology provides an advanced methodology with an advantage over traditional access control methods. PIN codes or passwords can be forgotten, and identification cards or keys may be lost or stolen. Biometric traits are difficult to steal or forget. Owing to its unique characteristics and high security, biometric technology is used in a variety of applications [3]. In a verification application scenario, an acquired biometric sample is compared against previously stored samples for matching. In an identification scenario, a biometric sample is acquired with the task of identifying the unknown sample as matching a previously acquired known sample. In both scenarios, there are four possible matching outcomes: true accept, false accept, true reject, or false reject [4].

The overall global biometrics market is expected to grow rapidly from USD 19.5 billion in 2020 to USD 44.1 billion by 2026, registering a compound annual growth rate (CAGR) of 14.8% [5]. The global market for contactless biometrics will generate more than USD 9 billion in revenue during 2021, and it is expected to grow at a robust 16% CAGR over the next decade [6]. The COVID-19 pandemic has affected the demand for contact-based biometric systems, which is likely to fall drastically as users seek to avoid spreading the coronavirus. Conversely, contactless biometric systems, such as face recognition, iris recognition, and voice recognition, are expected to witness a boost in demand post-COVID-19 [7].

1.1. Iris, Fingerprint, and Face Recognition

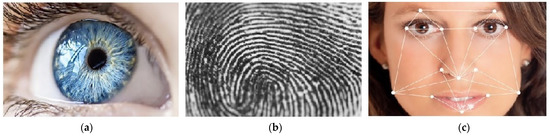

Iris, fingerprint, and face recognition are the most well-known forms of biometric security. As shown in Figure 1 [8], when comparing iris textures to fingerprint patterns or to face images, iris textures have the highest diversity. This feature makes iris recognition the most accurate and, therefore, also the most reliable non-invasive biometric system. However, what are the other pros and cons of these three systems?

Figure 1.

Diversity and accuracy of biometrics systems: (a) iris texture—high diversity and accuracy; (b) fingerprint pattern—middle diversity and accuracy; (c) face image—low diversity and accuracy.

Iris recognition is a method of biometric authentication that uses pattern recognition techniques based on high-resolution images of the irises of an individual’s eye. The iris is formed in the early stages of life, and once it is fully formed, its texture remains stable throughout a person’s life. Since the iris is an internal organ, it is well protected against damage and wear. Iris recognition is an excellent security technique, especially if it is performed using infrared illumination to reduce specular reflection from the convex cornea. Its efficiency is rarely impeded by the presence of glasses or contact lenses. However, it is difficult to perform iris recognition from a distance further than a few meters. A frequently encountered problem when the technology is introduced is resistance from users. People usually undergo an unpleasant experience when having their eyes scanned. They must also adopt a certain position, which can cause discomfort. Iris scanners are also relatively expensive compared to other recognition modalities [8,9].

Fingerprint recognition is the oldest and most widely used recognition modality. The best-known examples of its application include mobile phones and laptops, building access, and car doors. Fingerprints are typically composed of ridges and furrows, and their uniqueness is determined by the patterns made by the ridges, minutiae points, and furrows, which remain unchanged throughout the life of an individual. An identification system based on fingerprint recognition looks for specific characteristics in the pattern. However, fingerprints may be damaged or even worn away and, consequently, cannot be recognized or recorded. On the other hand, fingerprint identification is already familiar to the public and is widely accepted. It can be easily integrated into existing applications. Users can easily learn to use this system, as it is intuitive and needs no special training. The advancements of technology have led to small and inexpensive fingerprint readers, and the deployment of these systems has increased in a wide range of applications [8,9].

Face recognition has developed rapidly in recent years and is an excellent candidate if a system is needed for remote recognition. Although it may not have high accuracy, one primary advantage of this system is that it does not need the cooperation of the person under identification. Face recognition may work even when the subject is unaware of being scanned. A face recognition system analyzes the shape and position of different parts of the face to determine a match. It is a hands-free and non-intrusive identification method that can be utilized in static or dynamic applications. However, there are several factors that can affect the accuracy of facial biometrics: it might not work well under poor lighting conditions; the face of a person changes over time; the shape of the face is also affected by variations in facial expressions [8,9].

1.2. Iris Recognition Standards

The most important work in the early history of iris biometrics is that of Daugman [4]. In 1993, Daugman [10] published the first academic paper proposing an actual method for iris recognition, just before his corresponding US patent [11] was also issued. Today, Daugman’s IrisCode algorithm is implemented in most systems deployed worldwide, which operate as licensed executables [12]. The basic principle of an iris recognition system is shown in Figure 2. First, a sharp image of the eye is acquired. Then, the acquired image is segmented, and the iris image is extracted. In the iris coding phase, some mathematical transformation is applied on the extracted iris segment. In the code-matching phase, the results of this transformation are used in search algorithms for the best-matching candidate in a database of stored iris codes [13].

Figure 2.

Basic principles of an iris recognition system.
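
As a rough illustration of the code-matching phase, the sketch below searches a small database for the stored code closest to a probe code. The text above does not specify the comparison measure; the fractional Hamming distance used here is the common choice in Daugman-style systems and is an assumption, as are the function names, the toy 16-bit codes, and the illustrative 0.32 decision threshold. Occlusion masks and rotation compensation are omitted.

```python
def hamming_fraction(code_a: int, code_b: int, n_bits: int) -> float:
    """Fraction of bit positions in which two n_bits-long codes differ."""
    mask = (1 << n_bits) - 1
    return bin((code_a ^ code_b) & mask).count("1") / n_bits


def best_match(probe: int, database: dict, n_bits: int, threshold: float = 0.32):
    """Return (user, distance) for the closest stored code.

    The user is None when even the closest code is farther away than the
    (illustrative, assumed) threshold, i.e. the probe is rejected.
    """
    user, stored = min(
        database.items(),
        key=lambda item: hamming_fraction(probe, item[1], n_bits),
    )
    distance = hamming_fraction(probe, stored, n_bits)
    return (user if distance <= threshold else None), distance


# Toy 16-bit codes with hypothetical user names; real iris codes are far longer.
db = {"alice": 0b1010110011110000, "bob": 0b0101001100001111}
print(best_match(0b1010110011110001, db, n_bits=16))
```

A linear scan is adequate for a local database of a few dozen users; larger deployments would need an indexed search.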

A well-structured and widely implemented standard can create entirely new markets and promote competition. The ISO/IEC19794-x family of standards for biometric data interchange formats consists of 14 parts. These standards are needed for applications where biometric data, which are stored in a standard format, must be processed using compatible equipment. The most widely implemented standard from this family is ISO/IEC19794-5, which specifies the format requirements for the storage of facial images. It is used by national passport issuers and passport readers at immigration checkpoints. Iris recognition interoperability requires that data records are both syntactically and semantically understood by the receiving system. Iris recognition standardization efforts began in 2002, after the September 11 attacks. At that time, the only commercial entity in the field was Iridian, which held Daugman’s patent rights. Iridian volunteered the first standard draft; in 2005, this led to the almost identical ISO/IEC19794-6 standard [14]. The standard specifies the image data format, image properties, image quality, and image capture recommendations. For example, the standard specifies an image size of 640 × 480 pixels and an acquired iris diameter of 200 pixels.

Exposure to optical radiation has been linked with several reactions that fall within the category of photobiological effects and have been shown to be of risk to the skin and eye. The IEC62471 standard is recognized in many countries as the key standard addressing photobiological safety issues. According to this standard, individuals in the vicinity of lamp systems must not be exposed to levels exceeding the exposure limits, which represent the conditions to which it is believed that nearly all individuals in the general population may be repeatedly exposed without adverse health effects [15].

1.3. Commercial Solutions for Smart-City Access Control Applications and the Market Opportunity

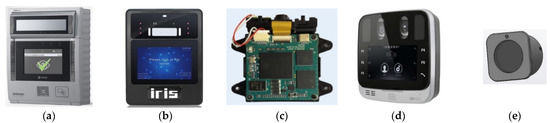

To access our smart-city homes using iris recognition technology, a compact, low-cost, and easy-to-integrate solution is required. Several commercial solutions using access-control devices based on iris recognition, intended for smart-city applications, exist on the market today. Figure 3a–d shows four examples of such solutions, and Table 1 lists their typical technical characteristics [16,17,18,19]. The main drawback of existing commercial solutions is that they are designed to be mounted on a wall, near the entrance door. Their price, the cost of installation excluded, is in the range of USD 1000. They are not designed for simple push-in-door installation, which would simplify the integration and maintenance for door manufacturers. Fingerprint readers are also important competitors. They are widely adopted, small, inexpensive, and designed to be integrated into the door or even within the doorknob. Therefore, an affordable solution that can replace fingerprint readers is required. Figure 3e shows our proposed solution, which is a design concept. The last row in Table 1 shows its basic technical characteristics. Our design will be compact and intended for direct door-mounting. The device will of course conform to illumination exposure limits. It will use a local, non-standardized database of 30 users, which will be based on images that are 800 × 600 in size. Since we use an off-the-shelf, low-cost color camera, visible illumination will be used. The capture distance will be from 7 to 13 cm. The target access time will be less than 2 s, which is comparable to fingerprint readers. The target production cost should be less than USD 200 at the production level of 5000 units per year in the first production year.

Figure 3.

Examples of existing access control devices, based on iris recognition, intended for smart-city applications: (a) IRISID-iCAM7; (b) GRANDING-IR7pro; (c) IRITECH-MO2121; (d) THOMAS-TA-EF-45; (e) proposed concept design.

Table 1.

Typical technical characteristics of products from smart-city access control applications, based on iris recognition.

1.4. Purpose, Goal, and Innovation of This Work

The arguments discussed in Section 1.3 led us to the purpose of this work, which was to verify if a compact access control device, based on iris recognition technology and intended for easy integration into smart-city doors, can be built at a reasonable cost.

Our project is divided into two stages. In this work, we present the results of the first stage. The main goal of this work was to develop a device that performs FPGA-based real-time image acquisition of a sharp grayscale image of an illuminated eye, using a camera module that integrates a Bayer-based color image sensor and a motorized lens. In the second stage, the device will be further miniaturized, and other iris recognition phases (iris segmentation, iris coding, code matching) will be added as the software upgrades.

The main innovation of this work is the method for real-time processing of the raw RGB image frames acquired from the camera module. In this work, we present a combined real-time image-processing technique that performs two image-processing tasks that are executed in real-time and in parallel on an FPGA. The first task is image conversion from raw RGB to grayscale. The second task is image-focusing, which is performed on raw RGB images. To the best of our knowledge, the described image-processing method has not yet been presented in any iris image acquisition or iris recognition applications.

1.5. Structure of This Work

This paper is organized as follows. In Section 2, the scientific background and the related research work are presented. This section is divided into four subsections: iris illumination and acquisition, iris image quality metrics and image focusing, image sensor demosaicing, and image conversion from color to grayscale. Section 3 presents the research method and materials used. In Section 4, the results of the research are presented and discussed. Conclusions and future work are presented in Section 5.

2. Scientific Background and Related Research Work

2.1. Iris Illumination and Acquisition

Eye color does not vary smoothly across the iris. Instead, it is arranged in clusters of similar colors that can have abrupt boundaries arising from structures within the iris. The perception of iris color, based on light reflection, light scattering, light diffraction, and light absorption on the iris, is a complex phenomenon, combining the effects of pigmentation, texture, fibrous tissue, and blood vessels in the iris. Shorter wavelengths of light are commonly reflected or scattered, while the longer wavelengths are typically absorbed by the iris. The amount of texture information, extractable from a given iris, is dependent on the wavelength under which it is illuminated. Traditionally, only a narrow band of the near-infrared (NIR) spectrum (700–900 nm) is utilized for iris recognition, since this alleviates any physical discomfort from illumination, reduces specular reflections, and increases the amount of texture captured for certain iris colors. The diffuse reflectivity of an iris is approximately 10% in the NIR band. High NIR illumination levels may be hazardous because the eye does not instinctively respond with its natural protective mechanisms, such as aversion, blinking, and pupil contraction. On the other hand, low visible light illumination levels, in unconstrained imaging setups, can degrade the quality of captured data [20,21,22].

The constraints in all commercial iris recognition systems are mostly driven by the image acquisition process, which imposes the subject’s position and motion during the recognition process. The metrics for the required degree of cooperation are the capture volume, the standoff distance, and the acquisition time. At any distance from which they are taken, the iris images must provide sufficient information for the task of iris recognition. An iris-imaging system captures a single or dual iris image at a given distance using illumination that is over a prescribed illumination wavelength band. Iris cameras that operate at approximately 30 cm or less from the subject typically resolve 200 pixels across the iris diameter. All commercial iris imaging systems conform to eye-safety standards that limit the irradiance and radiance values [23,24].

Matey et al. [23] presented the results of the “Iris on the Move” project. The developed system was able to capture iris images of sufficient quality for iris recognition, while the subject was moving at a normal walking speed, through a confining portal. The acquisition camera was at a distance of approximately 3 m, the image capture volume was 0.2 m × 0.4 m × 0.1 m, and a synchronized NIR LED illumination was used. Images with approximately 100 pixels across the iris were obtained. Most subjects were able to wear eyeglasses or contact lenses. The overall recognition rate for all subjects was 78%. Yoon et al. [25] proposed the usage of a pan-tilt-zoom camera at a distance. The system covered a capture volume of 1 m × 1 m × 1 m at a stand-off distance ranging between 1.5 m and 2.5 m. Two NIR illuminators were used and a stepping motor was used to control the focus. To acquire an iris, the face was first detected, using the Viola-Jones algorithm. Then, the pan-tilt system was used to place the face in the center of the image. Lastly, the iris was detected by the circular edge-detector algorithm. Dong et al. [26] presented an iris image acquisition system that was self-adaptive to users. Two low-resolution cameras were used, one for face acquisition and the other for iris acquisition. Once the face region was detected by the face camera, the iris was captured by the iris camera. The subject was not moving, and the capture volume was 0.6 m × 0.4 m × 0.4 m. The average capture time was 5.1 s. He et al. [27] designed an iris camera with the goal of being more economical than commercial alternatives while still acquiring high-quality images. They used a 480 K pixel image sensor with a custom lens and a fixed focus at 25 cm, together with 880 nm NIR LED illumination and an NIR-pass filter to minimize specular reflections on the iris. Hu and Si [28] presented an image acquisition and real-time detection system using a convolutional neural network. Image acquisition and processing were achieved with an Altera Cyclone IV FPGA, a DDR memory, and an OV7725 digital image sensor. Image resolution was set to 640 × 480 pixels, and the RGB565 image output data format was used. The system was connected to a PC through a USB interface. NIR illumination and a manually adjustable focus lens were used to collect iris images at distances of 1.5 m, 1.6 m, and 1.7 m.

2.2. Iris Image Quality Metrics and Image Focusing

Iris sample quality has several applications. It can be used at different processing levels in iris recognition systems, for example, at the image acquisition stage, at the image enhancement stage, or at the matching stage. Iris image quality is evaluated by means of quality metrics to quantify what information an iris image contains, or if the image should be discarded or further processed. The metrics can be broadly divided into two groups. The first group of metrics includes environmental and camera effects. The second group of metrics relates to the unconstrained presentation of a subject, for example, insufficient illumination, defocus blur, off-angle presentation, and occlusion [29]. The image quality metric can improve a system’s performance. However, there is no generally accepted measure of overall iris image quality [4]. Most authors from the research field of iris image acquisition use the image focus metric for assessing motion or defocus blur. Active auto-focusing (AF) or passive AF may be used to capture a sharp image in digital cameras. While active AF uses a distance sensor to measure the distance between the lens and the object and adjusts the focus lens position accordingly, passive AF calculates the image sharpness value using pixel processing [30,31]. Different methods for image sharpness evaluation exist in the literature. In evaluations based on gradient, focused images have larger gray or color-change levels than those that are defocused. In evaluations based on correlation, blurred images have a stronger pixel correlation, whereas, in evaluations based on statistics, the clearest image contains the maximum amount of entropy. Transform-based evaluations use Fourier or other transforms to evaluate the frequency spectrum of an image. Focused images contain more high-frequency components because they contain sharper and clearer edges [32].
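
The passive AF principle can be sketched as a sweep over lens positions that keeps the position with the highest gradient-based sharpness value. This is a minimal sketch under stated assumptions: `capture_at` is a hypothetical callback that returns a grayscale frame for a given lens position, and `simulated_capture` fakes defocus by attenuating a fine stripe pattern, which mimics how blur suppresses fine detail.

```python
def gradient_sharpness(image):
    """Sum of absolute horizontal pixel differences; larger means sharper."""
    return sum(
        abs(row[x + 1] - row[x])
        for row in image
        for x in range(len(row) - 1)
    )


def passive_autofocus(capture_at, lens_positions):
    """Full sweep: grab a frame at each lens position, keep the sharpest one."""
    return max(
        lens_positions,
        key=lambda pos: gradient_sharpness(capture_at(pos)),
    )


def simulated_capture(pos, best_pos=5):
    """Hypothetical camera stand-in: stripe amplitude falls away from best_pos."""
    amplitude = max(0, 100 - 20 * abs(pos - best_pos))
    row = [128 + amplitude, 128 - amplitude] * 3
    return [row] * 4


print(passive_autofocus(simulated_capture, range(10)))
```

A full sweep is the simplest search strategy; a distance measurement, as used in active AF, can narrow the range of lens positions that has to be swept.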

Daugman’s system assessed the focus of the image in real time by looking at the power in the middle and upper bands of the 2D Fourier spectrum. He used an 8 × 8 convolution kernel to calculate the Fourier transform [4,10]. He et al. [33] developed a clear iris image acquisition system with a fixed focus lens and best focus distance of 30 cm. An infrared sensor was used for distance measurement. They then used the 2D Fourier transform of the image to evaluate the power of the high-frequency components in the image. Kang and Park [34] proposed a 5 × 5 convolution kernel, similar to Daugman’s kernel. To test the focus assessment method, they artificially generated blurred iris images of the Casia 1.0 iris image database, using a Gaussian kernel mask with various σ values. Yousefi et al. [35] evaluated the following gradient-based sharpness functions: Vollath’s F4, Vollath’s F5, the variance of the image, squared gradient, sum of differences across rows, sum of differences across rows and columns, and the sum of Laplacians, in terms of accuracy and computation time on a PC platform. The accuracy was measured as a percentage of matching the true focus position. The sum of differences method gave the fastest computational time, with a 92% accuracy, while the square gradient method was more than three times slower, with a 77% accuracy. Yang and Wang [36] proposed an iris image quality evaluation method based on a gray-level evaluation, where iris image quality was reflected by evaluating a weighted average of three quality metrics: iris position, iris visibility, and iris clarity. The proposed quality index was tested on the images from the Casia 1.0 iris image database, where they correctly detected 95.7% of irises.
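
For orientation, the sketch below implements generic textbook forms of four of the sharpness functions named above and compares them on a sharp stripe pattern and a blurred copy. The exact definitions evaluated in [35] may differ; the function names, the test pattern, and the 3-tap box blur used to imitate defocus are assumptions made for illustration.

```python
def sum_of_differences(img):
    """Sum of absolute differences between horizontally adjacent pixels."""
    return sum(abs(r[x + 1] - r[x]) for r in img for x in range(len(r) - 1))


def squared_gradient(img):
    """Sum of squared differences between horizontally adjacent pixels."""
    return sum((r[x + 1] - r[x]) ** 2 for r in img for x in range(len(r) - 1))


def variance(img):
    """Variance of all pixel values in the image."""
    pixels = [p for r in img for p in r]
    mean = sum(pixels) / len(pixels)
    return sum((p - mean) ** 2 for p in pixels) / len(pixels)


def sum_of_laplacians(img):
    """Sum of the absolute 4-neighbour Laplacian over interior pixels."""
    h, w = len(img), len(img[0])
    return sum(
        abs(img[y - 1][x] + img[y + 1][x] + img[y][x - 1] + img[y][x + 1]
            - 4 * img[y][x])
        for y in range(1, h - 1)
        for x in range(1, w - 1)
    )


def box_blur_rows(img):
    """3-tap horizontal box blur with edge replication (fake defocus only)."""
    out = []
    for r in img:
        padded = [r[0]] + list(r) + [r[-1]]
        out.append([(padded[i] + padded[i + 1] + padded[i + 2]) // 3
                    for i in range(len(r))])
    return out


measures = (sum_of_differences, squared_gradient, variance, sum_of_laplacians)
sharp = [[0, 0, 255, 255, 0, 0, 255, 255]] * 4
blurred = box_blur_rows(sharp)
for measure in measures:
    print(measure.__name__, measure(sharp), measure(blurred))
```

All four measures drop on the blurred copy, but they differ in arithmetic cost: the sum of differences needs only subtractions and additions, which is consistent with it being the fastest method in the comparison above.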

2.3. Image Sensor Demosaicing

Each pixel in a digital color image is composed of red, green, and blue (RGB) color channels. A color digital camera would require three image sensors, one for each color channel. To reduce costs, digital camera manufacturers employ a single CMOS image sensor and a color filter array (CFA) to record one of the three color channels at each pixel location. The primary output of these image sensors is raw RGB. CFA image interpolation, also known as demosaicing, is required to reconstruct a full-color RGB image from raw RGB pixel data [37]. The most common CFA pattern is the Bayer pattern [38], as shown in Figure 4.

Figure 4.

Bayer color filter array (CFA) pattern, as widely used in color CMOS sensors [38].
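
The sampling rule of a Bayer CFA can be written as a small lookup on the row and column parity. The sketch assumes an RGGB tile in the top-left corner; the actual phase of the pattern depends on the sensor and is not stated here, and the function names are hypothetical.

```python
def bayer_color(row: int, col: int) -> str:
    """Color sampled at (row, col), assuming an RGGB tile at the top-left."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


def count_colors(width: int, height: int) -> dict:
    """Count how many sensor pixels sample each color channel."""
    counts = {"R": 0, "G": 0, "B": 0}
    for y in range(height):
        for x in range(width):
            counts[bayer_color(y, x)] += 1
    return counts


# For an 800 x 600 frame, half of the pixels are green.
print(count_colors(800, 600))
# -> {'R': 120000, 'G': 240000, 'B': 120000}
```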

Demosaicing methods can be grouped into two groups. The first group of algorithms works on each color channel separately. They include nearest-neighbor replication, bilinear interpolation, and cubic spline interpolation. The second group of algorithms exploits inter-channel correlation. One approach in this group is smooth hue transition, which assumes that the hue does not change abruptly between neighboring pixel locations. In the first step, these algorithms interpolate the luminance (green) channel, which is usually achieved using bilinear interpolation. The chrominance channels (red and blue) are estimated from the bilinearly interpolated “red hue” (red-to-green ratio) and “blue hue” (blue-to-green ratio). Another approach that exploits inter-channel correlation is an edge-directed interpolation. Here, the bilinear interpolation of the green channel is replaced by adaptive interpolation to prevent interpolation across edges [39].
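
The two steps of the smooth hue transition approach can be sketched as follows. This is a simplified illustration for interior pixels only, with hypothetical function names; the green values passed to `smooth_hue_red` at the red sites are assumed to have been interpolated beforehand, and zero green values are not handled.

```python
def bilinear_green(raw, y, x):
    """Green estimate at a red or blue site: mean of its four green neighbours."""
    return (raw[y - 1][x] + raw[y + 1][x] + raw[y][x - 1] + raw[y][x + 1]) / 4


def smooth_hue_red(red_left, green_left, red_right, green_right, green_here):
    """Red estimate at a green site lying between two red sites on one row.

    The red-to-green ratio ("red hue") is interpolated bilinearly and then
    rescaled by the local green value. Green values must be non-zero.
    """
    hue = (red_left / green_left + red_right / green_right) / 2
    return hue * green_here


# 3 x 3 raw patch whose centre is a red site surrounded by four green samples.
patch = [
    [10, 100, 12],
    [104, 200, 96],
    [11, 100, 13],
]
print(bilinear_green(patch, 1, 1))             # -> 100.0
print(smooth_hue_red(200, 100, 100, 50, 80))   # -> 160.0
```

In the second call the red value halves from left to right, but so does the green value, so the hue is constant and the red estimate simply follows the local green level.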

Gunturk et al. [39] presented a new demosaicing algorithm that uses inter-channel correlation in an alternating-projections scheme. The proposed algorithm was compared to bilinear interpolation and six other demosaicing algorithms; it demonstrated an outstanding performance both visually and in terms of mean square error at reasonable computational complexity. Kimmel [40] presented a simplified image formation model, used to construct an algorithm for image reconstruction with a CCD sensor, based on Bayer CFA. The performance of the developed interpolation algorithm was tested on four benchmark images. Bilinear interpolation was used as the reference method to show the visual improvement offered by the proposed scheme. Lukac et al. [41] presented a cost-effective edge-sensing correlation–correction interpolation method for digital still cameras that uses edge-sensing weighted coefficients. To measure the efficiency of this method, a color image was first transformed into a Bayer image. The efficiency of the interpolation methods was measured using the mean square error and the normalized color difference criterion. The method was compared with seven other methods on twenty color images. On average, they achieved a 20–30% improvement. Bailey et al. [42] implemented a demosaicing algorithm using an Altera Cyclone V FPGA. They recommend integrating the Bayer interpolation within the FPGA image-processing pipeline when the pixels are streamed from the image sensor. In the first stage of their three-stage algorithm, the missing green pixels were first estimated using a high-order interpolation in four directions, with a 7 × 7 interpolation window. The second stage used a simple first-order interpolation and interpolated diagonally to estimate the missing blue and red pixels. The final stage was used to interpolate the red and blue channels both horizontally and vertically. Images with a resolution of 800 × 600 and an 8-bit pixel depth were captured and displayed on a VGA monitor. In the results, they compared the resource requirements of the proposed algorithm with five other algorithms. The quality of the processed images was estimated visually, and the authors observed significantly better quality, although at the cost of increased resource utilization.
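
The evaluation procedure used in several of these studies (convert a full-color image into a Bayer image, demosaic it, and compare the result with the original) can be sketched as below. The RGGB layout, the function names, and the nearest-neighbour replication used as a stand-in demosaicing method are assumptions; only the mean square error is computed, and image dimensions are assumed to be even.

```python
def mosaic_rggb(rgb):
    """Keep one channel per pixel according to an assumed RGGB layout."""
    def channel(y, x):
        if y % 2 == 0 and x % 2 == 0:
            return 0  # red site
        if y % 2 == 1 and x % 2 == 1:
            return 2  # blue site
        return 1      # green site
    return [[px[channel(y, x)] for x, px in enumerate(row)]
            for y, row in enumerate(rgb)]


def demosaic_nearest(raw):
    """Stand-in method: each 2 x 2 tile reuses its own R, first G, and B sample."""
    out = []
    for y in range(len(raw)):
        ty = y - y % 2
        row = []
        for x in range(len(raw[0])):
            tx = x - x % 2
            row.append((raw[ty][tx], raw[ty][tx + 1], raw[ty + 1][tx + 1]))
        out.append(row)
    return out


def mse(img_a, img_b):
    """Mean square error over all pixels and all three channels."""
    diffs = [(a - b) ** 2
             for row_a, row_b in zip(img_a, img_b)
             for px_a, px_b in zip(row_a, row_b)
             for a, b in zip(px_a, px_b)]
    return sum(diffs) / len(diffs)


flat = [[(10, 20, 30)] * 4 for _ in range(2)]
edge = [[(0, 0, 0)] + [(255, 255, 255)] * 3 for _ in range(2)]
for name, image in (("flat", flat), ("edge", edge)):
    print(name, mse(image, demosaic_nearest(mosaic_rggb(image))))
```

The flat image is reconstructed without error, while the vertical edge that cuts through a 2 x 2 tile produces a large error; this is the kind of artifact that edge-sensing methods aim to reduce.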

2.4. Color to Grayscale Image Conversion

In most image-recognition applications, digital image processing is conducted on grayscale images. Edge detection is one of the most frequently used procedures in digital image processing. Many researchers have proposed FPGA implementations of edge detection algorithms, and their first step is usually RGB-to-grayscale conversion. Color edge detection is rare, because its calculation requirements are three times greater than in the case of a grayscale image, and 90% of the edges are approximately the same in grayscale and color images [43]. Color images are converted to grayscale for various reasons, such as reproduction on monochrome devices. Color-to-grayscale conversions reduce three-dimensional color data into a single dimension. During this process, some loss of information is inevitable. The goal is to preserve as much information as possible and to produce perceptibly plausible grayscale results. Various approaches have been proposed. One simple, widely used, and computationally efficient approach is based on neglecting the chrominance channels (red and blue) and taking the luminance channel (green) as a representation of the original color image [44]. In 2005, Gooch et al. [45] presented the Color2Gray algorithm with the aim of improving what were at that time considered conventional algorithms (CIECAM97 Lum, L*a*b* Lum, XYZ Lum, YCrCb Lum, Photoshop Auto Contrast) for converting RGB images to grayscale. These algorithms, which employ a simple pixel-weighted sum to map a 3D color space to a single dimension, are ineffective at preserving chrominance differences between isoluminant pixels. The Color2Gray algorithm encodes differences from a color image into luminance differences in grayscale. The Color2Gray algorithm does not provide large improvements for scenes with a high dynamic range. However, the method does improve any image that contains large isoluminant regions with a small number of different chrominance values. The algorithm is computationally very intensive. In 2005, using an Athlon 64 3200+ processor, computing images with full neighborhoods required 12.7 s for a 100 × 100-pixel image.
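
The limitation of a pixel-weighted sum can be seen with two pixels of clearly different color that map to the same gray level. The sketch uses the Rec. 601 luma weights as one common example of such a weighted sum (an assumption, since the conventional algorithms listed above each use their own weighting) and contrasts it with the green-only approach; the function names and pixel values are illustrative.

```python
def luma_weighted(r, g, b):
    """Pixel-weighted sum with Rec. 601 luma weights (one common choice)."""
    return round(0.299 * r + 0.587 * g + 0.114 * b)


def green_only(r, g, b):
    """Green channel taken directly as the grayscale value."""
    return g


gray_pixel = (100, 100, 100)
reddish_pixel = (200, 60, 44)

# Both pixels receive the same weighted-sum gray level, so the color
# difference between them disappears in the grayscale image.
print(luma_weighted(*gray_pixel), luma_weighted(*reddish_pixel))  # -> 100 100
print(green_only(*gray_pixel), green_only(*reddish_pixel))        # -> 100 60
```

The green-only conversion happens to separate this particular pair, but it fails in the same way for any two colors that share a green value; its appeal lies in needing no arithmetic at all.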

3. Methods and Materials

The FPGA image-processing block is the functional core of our device. The device performs the function of sharp grayscale image acquisition for an illuminated eye, using a camera module that integrates a color-image sensor and a motorized lens. The FPGA image-processing block is supported by the IR distance measurement circuit and by the visible light illumination. Both light sources conform to the IEC62471 safety standard. In the following section, we first present the FPGA image-processing block; then, we present the supporting hardware of the eye-image acquisition device, the experimental setup, and the test procedures.

3.1. FPGA Image-Processing Block

The FPGA image-processing block performs two very important tasks:

- Image conversion from 10-bit raw RGB to 8-bit grayscale;

- Image sharpness value calculation.

Both image-processing blocks run in parallel and in real time on the FPGA. The combined result of both operations is a sharp 8-bit grayscale image from the camera module that is based on a low-cost 10-bit color image sensor.

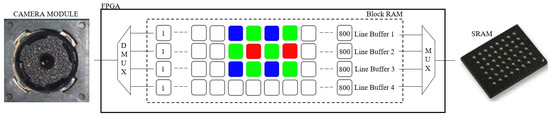

The pixel-data flow from the camera module to the external SRAM, through the FPGA real-time image-processing block, is shown in Figure 5. See also Figure 4. In this block, four line-buffers (LBs) are implemented as block RAM (BRAM) cells inside the FPGA. Each LB holds an entire SVGA line of 800 pixels. LBs are filled with SVGA lines sequentially, line by line. When the fourth LB is full, the next SVGA line is written in the first LB, etc., until all 600 lines are acquired and processed. Each LB’s cell location can be accessed (write-to LB cell or read-from LB cell) in a single FPGA clock cycle. For example, when writing 10 bits of pixel data to any cell of the fourth LB, 10 bits of pixel data can be simultaneously read from any cell in the first, second, or third LB. This is the basis of our FPGA image-processing block. Each processed pixel is written to the external SRAM for further processing. In our case, this entails displaying the processed image on the SVGA display or sending the processed image to the PC over the UART-USB interface.

Figure 5.

FPGA real-time image processing block.
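
The rotation of the four line-buffers can be modeled in software as a ring indexed by the line number modulo four. This is only a behavioral sketch with hypothetical class and method names; it does not model the BRAM timing or the simultaneous single-cycle read and write access described above.

```python
class LineBuffers:
    """Behavioral model of N line-buffers that are reused in rotation."""

    def __init__(self, n_buffers=4, line_width=800):
        self.n_buffers = n_buffers
        self.lines = [[0] * line_width for _ in range(n_buffers)]

    def write_line(self, line_index, pixels):
        """Store an incoming line; it overwrites the line written N lines ago."""
        self.lines[line_index % self.n_buffers] = list(pixels)

    def read(self, line_index, x):
        """Read pixel x of a line that is still held in one of the buffers."""
        return self.lines[line_index % self.n_buffers][x]


# Short 8-pixel lines for the demonstration; each pixel stores its line number.
lb = LineBuffers(n_buffers=4, line_width=8)
for line in range(6):
    lb.write_line(line, [line] * 8)

# After line 5 has arrived, lines 2 to 5 are available; lines 0 and 1 are gone.
print([lb.read(line, 0) for line in (2, 3, 4, 5)])  # -> [2, 3, 4, 5]
```

Holding four lines at once is what gives a pixel-processing stage access to the rows above and below the pixel being processed while the next line is still arriving.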

The camera module was set to the raw-RGB image data format. Each acquired pixel had a 10-bit intensity value of either red, green, or blue color. Half of all of the pixels in the image sensor were green. Therefore, the spatial resolution of the green pixels was twice that of the red or blue pixels. We interpolated the missing green pixels to obtain an 8-bit green monochrome image, which approximates the grayscale image that we would obtain from an 8-bit monochrome image sensor. To interpolate the missing green pixels, we used an edge-directed bilinear interpolation. Figure 6a and Equations (1) and (2) show the pixels involved in an edge-directed bilinear interpolation of the missing green pixels.

Figure 6. Pixels involved in real-time FPGA image processing: (a) edge-directed green color bilinear interpolation; (b) image sharpness value calculation from red and blue pixels.

Green color interpolation was calculated at every red pixel position and at every blue pixel position. The image sharpness value was calculated at every green pixel position as a blue-gradient and red-gradient calculation. By doing this, the two image-processing operations were interleaved on a single image frame. To the best of our knowledge, the described image processing method has not yet been presented in any iris image acquisition application. Figure 6b and Equations (3)–(5) show how the green pixels’ positions are used to calculate the image sharpness of an image frame. For an image frame with 480,000 pixels, there are 240,000 pixel-sharpness calculations. Better focused images have higher image sharpness values.

Interpolating a green pixel at the red-R0 pixel position into a grayscale 8-bit value:

$$
G_{\mathrm{interp}} =
\begin{cases}
(G_0 + G_5)/8, & \text{if } |G_2 - G_3| > |G_0 - G_5| \\
(G_2 + G_3)/8, & \text{if } |G_2 - G_3| < |G_0 - G_5|
\end{cases}
\tag{1}
$$

Interpolating a green pixel at the blue-B4 pixel position into a grayscale 8-bit value:

$$
G_{\mathrm{interp}} =
\begin{cases}
(G_3 + G_8)/8, & \text{if } |G_5 - G_6| > |G_3 - G_8| \\
(G_5 + G_6)/8, & \text{if } |G_5 - G_6| < |G_3 - G_8|
\end{cases}
\tag{2}
$$
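The edge-directed rule can be sketched in Python as follows. Dividing the sum of two 10-bit values by 8 combines the averaging (divide by 2) with the 10-bit to 8-bit reduction (divide by 4). The function name and argument names are hypothetical, and the handling of the tie case (equal gradients) is an assumption of this sketch, since that case is not recoverable from the text here.

```python
def interpolate_green(g_a1: int, g_a2: int, g_b1: int, g_b2: int) -> int:
    """Edge-directed green interpolation from two pairs of 10-bit neighbors.

    Pair a is, for example, (G2, G3) and pair b is (G0, G5) at the red-R0
    position. The result is an 8-bit value in the range 0..255.
    """
    grad_a = abs(g_a1 - g_a2)
    grad_b = abs(g_b1 - g_b2)
    if grad_a > grad_b:
        # Stronger change across pair a: average along pair b.
        return (g_b1 + g_b2) // 8
    if grad_a < grad_b:
        # Stronger change across pair b: average along pair a.
        return (g_a1 + g_a2) // 8
    # ASSUMPTION (not from the source): on a tie, average all four neighbors.
    return (g_a1 + g_a2 + g_b1 + g_b2) // 16


if __name__ == "__main__":
    print(interpolate_green(100, 900, 400, 420))
```

In the example call, the gradient across the first pair (800) is much larger than across the second (20), so the value is averaged along the second pair.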

Pixel sharpness calculation at the green-G3 pixel position:

$$
\mathrm{pixel\_sharpness}(k) = |R_0 - R_1| + |B_1 - B_4| \tag{3}
$$

Pixel sharpness calculation at the green-G5 pixel position:

$$
\mathrm{pixel\_sharpness}(k) = |B_3 - B_4| + |R_0 - R_2| \tag{4}
$$

$$
\mathrm{Image\ sharpness} = \frac{1}{64} \sum_{k} \mathrm{pixel\_sharpness}(k) \tag{5}
$$
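A minimal Python sketch of the sharpness metric is given below. It assumes integer arithmetic (the division by 64 is modeled as a floor division, as a right shift would do in hardware); the function names and the way the neighboring red and blue values are passed in are illustrative choices, not the authors' implementation.

```python
from typing import Iterable, Tuple


def pixel_sharpness(red_pair: Tuple[int, int], blue_pair: Tuple[int, int]) -> int:
    """Sum of the red gradient and the blue gradient around one green pixel."""
    return abs(red_pair[0] - red_pair[1]) + abs(blue_pair[0] - blue_pair[1])


def image_sharpness(pixel_values: Iterable[int]) -> int:
    """Accumulate all pixel sharpness values of a frame and scale by 1/64.

    ASSUMPTION: integer (floor) division, equivalent to a 6-bit right shift.
    """
    return sum(pixel_values) // 64


if __name__ == "__main__":
    values = [pixel_sharpness((10, 30), (100, 60)) for _ in range(240_000)]
    print(image_sharpness(values))
```

With 240,000 green positions per SVGA frame and 10-bit inputs, each pixel sharpness value is at most 2046, so the accumulated sum stays below 2^29 before scaling.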

3.2. Supporting Hardware of the Eye-Image Acquisition Device

The developed eye-image acquisition device was designed around a camera module. We used a small, low-cost, and easy-to-purchase camera module with an integrated image sensor and motorized lens. Figure 7 shows the functional block diagram of the developed eye-image acquisition device, with the following core components:

Figure 7. Functional block diagram of the developed eye image acquisition device.

- Camera module [46] as the image acquisition device;

- Microcontroller (MCU) [47] as the system controller;

- FPGA [48] with 2 MB external SRAM memory [49] as the image processing core;

- Illumination subsystem for object illumination based on RGB LEDs [50];

- Distance measurement subsystem to measure the distance between the object and the camera module based on IR emitting LEDs [51] and IR detecting photodiodes [52];

- External PC that is connected to the FPGA through the external UART/USB chip interface [53], for off-line MATLAB [54] image processing and testing;

- External 8.4” SVGA display [55] that is connected to the FPGA through the external SVGA/LVDS chip interface [56], for real-time image debugging. The display is used in the prototype device for debug purposes only, and it will not be integrated into the final product.

The camera module integrates a 5-megapixel CMOS color image sensor and a motorized lens to adjust the camera focus. The image size, the image data format, and the focus lens position were adjusted over the I2C bus by the MCU. In our design, the image size was set to SVGA (800 × 600 pixels), and the image data format was set to raw RGB, where each pixel was 10 bits deep. The image frames were captured by the FPGA over the digital video port (DVP).

Figure 8a shows the camera module mounted on the MCU board. The SVGA image size was selected to obtain eye images with a sufficient resolution that would still fit the display's size. However, this also means that our device is not compliant with the ISO/IEC 19794-6 standard. Since we use a local on-chip database that is not accessible from outside the device, this is not an issue.

Figure 8. Image acquisition electronics: (a) camera module mounted on the MCU PCB; (b) IR distance/RGB illumination PCB mounted on the MCU PCB; (c) red RGB LED turned on; (d) green RGB LED turned on; (e) blue RGB LED turned on.

The image pixel clock was set to 12 MHz, which gives an approximate frame rate of 5 frames per second. At this pixel clock, the frame duration was 195 ms. This frame rate was selected due to the timing requirements of the FPGA real-time image-processing block. The FPGA chip runs at 200 MHz. This provides the processing block with 16 clock cycles to process each incoming pixel in real time.
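The timing budget stated above follows directly from the two clock frequencies and the frame duration. The short Python check below reproduces the arithmetic; the constant names are chosen here for illustration only.

```python
PIXEL_CLOCK_HZ = 12_000_000    # camera pixel clock (from the text)
FPGA_CLOCK_HZ = 200_000_000    # FPGA system clock (from the text)
FRAME_DURATION_S = 0.195       # measured frame duration (from the text)

# Whole FPGA clock cycles available per incoming pixel (200 / 12 = 16.67).
cycles_per_pixel = FPGA_CLOCK_HZ // PIXEL_CLOCK_HZ

# Frame rate implied by the frame duration.
frame_rate_fps = 1.0 / FRAME_DURATION_S

if __name__ == "__main__":
    print(cycles_per_pixel)           # 16
    print(round(frame_rate_fps, 2))   # 5.13
```

The ratio of the two clocks is about 16.67, so 16 complete FPGA cycles are available per pixel, and a 195 ms frame corresponds to roughly 5 frames per second.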

The image sharpness was adjusted by changing the position of the integrated motorized lens. To speed up the focusing process, the camera's lens position was first set roughly, according to the measured distance between the camera module and the object. This was achieved by the IR distance measurement circuit, whose main components are four IR LEDs and four IR photodiodes. When an object was placed in front of the camera module, the IR light emitted by the LEDs and reflected by the object was detected by the IR photodiodes. The distance measurement circuit, arranged around the camera module, is shown in Figure 8b.

Since we used a camera module with an integrated CFA-based image sensor, which is sensitive to red, blue, and green light wavelengths, a three-color RGB LED illumination was used, with peak wavelengths at 634 nm, 522 nm and 465 nm for the red, green, and blue illumination channels, respectively. As can be seen in Figure 8b, there were eight RGB LEDs arranged symmetrically around the camera module. The illumination intensity for each illumination channel can be adjusted independently by the MCU, according to the measured distance from the object, while considering the eye-safety illumination limits. In Figure 8c–e, the red, green, and blue LEDs are turned on. The advantage of using visible illumination over IR illumination is that it can be used for eye liveness detection, since the eye pupil changes in size when the visible light illumination level is changed. The eye image was acquired at a short distance of 7–13 cm. Therefore, the visible illumination should ensure capturing enough iris structure detail for the iris recognition process.

To acquire a sharp 8-bit grayscale image using our eye-image acquisition device, we used a simple experimental setup, as shown in Figure 9a. The setup was composed of two wooden boards, four threaded metal rods, and four nuts under the top wooden board. The distance between the camera module and the top board was adjusted manually by adjusting the position of the nuts. Instead of acquiring real-eye images, a plastic artificial toy eye with a diameter of 22 mm [57], as shown in Figure 9b, was used. At this stage of the project, we did not use real human eyes, since we were interested in the core functionality and the concept of the proposed eye-image acquisition device.

Figure 9. Eye image acquisition experimental setup: (a) developed prototype electronics under the toy eye; (b) toy eye glued on the top board.

3.3. Experimental Setup and Test Procedures

In the first step, the experimental setup was used to calibrate the IR distance measurement circuit. In the second step, the sharpness function as a function of distance was measured. In the last step, the experimental setup was used for testing the proposed eye-image acquisition scheme. Using the calibrated IR distance measurement circuit, the focus lens position is first set roughly. Then, the fine-tuning of the lens position starts. At this point, the RGB illumination is turned on, with its level set according to the measured distance from the eye, and the passive focusing algorithm is executed. Image sharpness values are calculated from sequentially acquired frames, and the camera's lens position is finely adjusted based on the current and previous image sharpness values. The lens position is adjusted by the MCU over the I2C bus in the range from 0 to 1023 digits. The image-focusing process finishes after at most six consecutive frames have been acquired. At a frame duration of 195 ms, the whole image-focusing process therefore never takes more than 1200 ms. At this point, the image with the maximum image sharpness value has been acquired.
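The fine-focusing stage can be sketched as a bounded hill-climbing search. The text specifies only that the search uses current and previous sharpness values, that the lens range is 0 to 1023 digits, and that at most six frames are used; the step size, the step-halving rule, and the function names below are assumptions of this sketch and not the authors' algorithm.

```python
from typing import Callable, Tuple

LENS_MIN, LENS_MAX = 0, 1023   # lens position range in digits (from the text)
MAX_FRAMES = 6                 # frame budget per focusing run (from the text)
FRAME_DURATION_MS = 195        # frame duration (from the text)


def clamp(position: int) -> int:
    """Keep a lens position inside the valid range."""
    return max(LENS_MIN, min(LENS_MAX, position))


def fine_focus(measure_sharpness: Callable[[int], int],
               coarse_position: int,
               step: int = 32) -> Tuple[int, int, int]:
    """Hill-climb from the coarse position; each measurement costs one frame.

    ASSUMPTION: on a non-improving frame the direction is reversed and the
    step is halved. Returns (best position, best sharpness, frames used).
    """
    best_position = clamp(coarse_position)
    best_sharpness = measure_sharpness(best_position)
    frames = 1
    direction = 1
    while frames < MAX_FRAMES and step > 0:
        candidate = clamp(best_position + direction * step)
        sharpness = measure_sharpness(candidate)
        frames += 1
        if sharpness > best_sharpness:
            best_position, best_sharpness = candidate, sharpness
        else:
            direction = -direction
            step //= 2
    return best_position, best_sharpness, frames


if __name__ == "__main__":
    # Hypothetical sharpness curve with a single peak at 590 digits.
    synthetic = lambda p: 229_000 - (p - 590) ** 2
    print(fine_focus(synthetic, coarse_position=560))
    print(MAX_FRAMES * FRAME_DURATION_MS, "ms worst case")
```

With the synthetic curve, the search ends within two digits of the peak after six frames, and six frames of 195 ms give a worst case of 1170 ms, which is consistent with the 1200 ms bound stated above.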

To measure the image sharpness function as a function of distance from the camera, the ISO12233 chart was used as the focusing target image. A PDF image file version of this chart is shown in Figure 10. The chart was obtained from [58], printed, and fixed on the bottom side of the top wooden board of the mechanical setup.

Figure 10. ISO-12233 chart for image sharpness evaluation [58].

It is important that the RGB illumination circuit and the IR distance measurement circuit comply with the IEC62471 standard. To calculate the total hazard exposure level of a single RGB LED at a 10-mm distance and the hazard exposure level of a single IR LED at a 10-mm distance, the radiant power Φ [W] of the red, green, blue, and IR illumination was measured first. The standard IEC62471 [59] and the power-meter setup from Gentec [60,61] were used for this purpose. Worst-case illumination scenarios were assumed, with the eye centered 10 mm above an LED and illuminated for 5 s. Equations (6) and (7) show the calculation of the retinal blue light hazard exposure limit (EB × t, EB) of a small light source for the spectral region from 300 to 700 nm. Equations (8) and (9) show the calculation of the infrared radiation hazard exposure limit (EIR) for the eye for the IR spectral region from 780 to 3000 nm. E(λ) is the spectral irradiance (W·m⁻²·nm⁻¹), defined as the quotient of the radiant power dΦ(λ) in a wavelength interval dλ, incident on an element of a surface, by the area dA of that element and by the wavelength interval dλ. λ is the wavelength (nm), Δt is the exposure time (s), Δλ is the bandwidth (nm), and B(λ) is the blue-light hazard weighting function (constant).

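$$
E_B \cdot t = \sum_{\lambda} \sum_{t} E(\lambda)\, B(\lambda)\, \Delta t\, \Delta\lambda \le 100\ \mathrm{J\,m^{-2}} \quad \text{valid for } t \le 100\ \mathrm{s} \tag{6}
$$

$$
E_B = \sum_{\lambda} E(\lambda)\, B(\lambda)\, \Delta\lambda \le 1\ \mathrm{W\,m^{-2}} \quad \text{valid for } t > 100\ \mathrm{s} \tag{7}
$$

$$
E_{IR} = \sum_{\lambda} E(\lambda)\, \Delta\lambda \le 18{,}000\, t^{-0.75}\ \mathrm{W\,m^{-2}} \quad \text{valid for } t \le 1000\ \mathrm{s} \tag{8}
$$

$$
E_{IR} = \sum_{\lambda} E(\lambda)\, \Delta\lambda \le 100\ \mathrm{W\,m^{-2}} \quad \text{valid for } t > 1000\ \mathrm{s} \tag{9}
$$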
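The time-dependent limits can be evaluated numerically for the assumed 5 s worst-case exposure. The Python sketch below only encodes the right-hand sides of the limit expressions; the function names are chosen here for illustration.

```python
def ir_irradiance_limit(t_s: float) -> float:
    """Infrared eye-hazard irradiance limit in W/m^2 for exposure time t_s."""
    if t_s <= 0:
        raise ValueError("exposure time must be positive")
    if t_s <= 1000:
        return 18000.0 * t_s ** -0.75
    return 100.0


def blue_light_irradiance_limit(t_s: float) -> float:
    """Blue-light weighted irradiance limit in W/m^2 for a small source.

    For t <= 100 s the dose limit of 100 J/m^2 is expressed as an
    irradiance by dividing by the exposure time.
    """
    if t_s <= 0:
        raise ValueError("exposure time must be positive")
    if t_s <= 100:
        return 100.0 / t_s
    return 1.0


if __name__ == "__main__":
    print(round(ir_irradiance_limit(5.0)))      # 5383
    print(blue_light_irradiance_limit(5.0))     # 20.0
```

For a 5 s exposure, the infrared limit evaluates to about 5383 W/m², and the blue-light dose limit of 100 J/m² corresponds to a weighted irradiance of 20 W/m².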

4. Results and Discussion

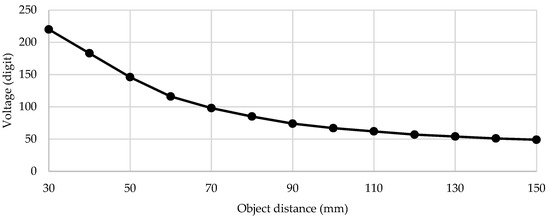

In this section, we present the results of the tests that were obtained using the experimental setup presented in Section 3. Figure 11 shows the measured voltage/distance characteristic. The voltage of the IR detection circuit was sampled by the MCU’s 10-bit ADC at a 3.3 V reference voltage. A look-up table and linear interpolation between calibration points were used in the code for voltage-to-distance conversion. Figure 12 shows the measured image sharpness function of the developed imaging system in terms of image sharpness versus object distance. As specified by the manufacturer, the maximum value of the sharpness function was at a 100-mm distance. Figure 13 shows the measured image sharpness as a function of the lens position at three fixed distances from the object: 70 mm, 100 mm and 130 mm. As expected, the best performance of the image-sharpness circuit was measured at a 100-mm distance. This is the optimal standoff acquisition distance of the system.
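The look-up table with linear interpolation can be sketched in Python as follows. The calibration points below are hypothetical placeholders expressed in ADC digits, not the measured characteristic of Figure 11, and the function name is chosen for illustration; readings outside the calibrated range are clamped to the nearest end point, which is also an assumption.

```python
from bisect import bisect_right

# HYPOTHETICAL calibration points: (ADC code, distance in mm), sorted by code.
CALIBRATION = [(300, 130.0), (320, 100.0), (350, 70.0)]


def adc_to_distance_mm(code: int, table=CALIBRATION) -> float:
    """Convert an ADC reading to a distance by piecewise-linear interpolation."""
    codes = [point[0] for point in table]
    if code <= codes[0]:
        return table[0][1]
    if code >= codes[-1]:
        return table[-1][1]
    i = bisect_right(codes, code)        # first calibration code above the reading
    (c0, d0), (c1, d1) = table[i - 1], table[i]
    return d0 + (d1 - d0) * (code - c0) / (c1 - c0)


if __name__ == "__main__":
    print(adc_to_distance_mm(310))   # halfway between 130 mm and 100 mm
```

A reading halfway between two calibration codes maps to the distance halfway between the corresponding calibration distances.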

Figure 11. Measured voltage/distance characteristics of the distance measurement subsystem.

Figure 12. Measured image sharpness as a function of distance from the object.

Figure 13. Measured image sharpness as a function of lens position at 3 fixed distances from the object: 70 mm, 100 mm, and 130 mm.

The developed eye-image acquisition device was tested at a distance range of 7–13 cm from the target. The measured span of the distance measurement subsystem in this range was approximately 50 digits. The achieved distance measurement accuracy was sufficient to perform active focusing in this application.

To estimate the overall performance of the proposed eye-image acquisition device, a subjective visual assessment and the image sharpness value metric were used. Figure 14 shows three images acquired under different illumination conditions in the best focus position. Image sharpness improved significantly with higher illumination levels. In ten attempts, the average sharp image acquisition time was 1 s, with acquisition times ranging from 800 to 1200 ms. The diameter of the acquired iris was approximately 400 pixels, which is twice the standardized 200 pixels. This will be an advantage when the whole iris recognition system is implemented. The undesired illumination artifacts were well concentrated inside the pupil area.

Figure 14. Acquired 8-bit grayscale images of the artificial eye at a distance of 100 mm, at a focus lens position of 590 digits, and under different illumination conditions: (a) no illumination (only the infrared distance measurement circuit is active), image sharpness = 136,000; (b) low RGB illumination level, image sharpness = 195,000; (c) high RGB illumination level, image sharpness = 229,000.

Table 2 shows the calculation of the total retinal blue hazard exposure of a single RGB LED. Table 3 shows the calculation of the infrared radiation hazard exposure of the eye to a single IR LED. Both calculated hazard exposure levels were below their hazard exposure level limits. The retinal blue hazard exposure level limit, EB × t, is 100 J/m². The infrared radiation hazard exposure level limit, EIR, calculated for the assumed 5 s exposure (18,000 × 5^−0.75), is 5383 W/m².

Table 2. Total retinal blue hazard exposure calculation of a single RGB LED.

Table 3. Infrared radiation hazard exposure for the eye of a single IR LED.

5. Conclusions and Future Work

The purpose of this work was to verify if a compact access-control device based on iris recognition technology, intended for easy integration into smart-city doors, could be built at a reasonable cost. In every iris recognition system, the image acquisition subsystem represents the most complex and expensive part. Therefore, the project was divided into two stages. In this work, we presented the results of the first stage, where the goal was to develop and test just the eye-image acquisition part of the system.

The results showed a good performance by the developed device. We managed to acquire a sharp image of an artificial toy eye sized 22 mm in diameter from an approximate distance of 10 cm, achieving 400 pixels over the iris diameter, with an average acquisition time of 1 s, and with illumination levels below hazard exposure levels.

The main drawback of this work was the limitation imposed by the use of an artificial toy eye and, consequently, the significantly constrained iris image acquisition conditions. We were unable to measure the performance of a complete iris recognition system; this would require real eye images, less constrained eye image acquisition, and the implementation of all iris recognition phases.

Nevertheless, we obtained enough positive data to continue our work, which will be focused on the development of a complete iris recognition system for smart-city access control applications. In the second stage, the device will be further miniaturized and other iris recognition phases (iris segmentation, iris coding, code-matching) will be added as software upgrades. The optimal visible illumination levels for different iris colors as a function of the distance from the camera will also be studied and implemented. The performance of the whole iris recognition system will be measured in terms of its access control accuracy metrics, such as the false accept rate (FAR) and false reject rate (FRR).

Author Contributions

Conceptualization, D.Z.; methodology, D.Z. and A.Ž.; hardware/software, D.Z.; validation, A.Ž.; formal analysis, D.Z. and A.Ž.; investigation, D.Z.; resources, D.Z. and A.Ž.; data curation, D.Z. and A.Ž.; writing—original draft preparation, D.Z. and A.Ž.; writing—review and editing, D.Z. and A.Ž.; supervision, A.Ž.; project administration, D.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Data are available upon request.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Chen, M.; Tang, Y.; Zou, X.; Huang, K.; Li, L.; He, Y. High-accuracy multi-camera reconstruction enhanced by adaptive point cloud correction algorithm. Opt. Lasers Eng. 2019, 122, 170–183.

- Tang, Y.; Li, L.; Wang, C.; Chen, M.; Feng, W.; Zou, X.; Huang, K. Real-time detection of surface deformation and strain in recycled aggregate concrete-filled steel tubular columns via four-ocular vision. Robot. Comput.-Integr. Manuf. 2019, 59, 36–46.

- Biometric Technology Market Outlook: 2022. 2021. Available online: https://www.alliedmarketresearch.com/biometric-technology-market (accessed on 17 August 2021).

- Bowyer, K.W.; Hollingsworth, K.; Flynn, P.J. Image understanding for iris biometrics: A survey. Comput. Vis. Image Underst. 2008, 110, 281–307.

- Biometrics Market Forecast for $44B by 2026, Industry Survey Sees Digital Identity Focus. 2021. Available online: https://www.biometricupdate.com/202107/biometrics-market-forecast-for-44b-by-2026-industry-survey-sees-digital-identity-focus (accessed on 17 August 2021).

- Contactless Biometrics Estimated to Bring in over $9B This Year. 2021. Available online: https://www.biometricupdate.com/202106/contactless-biometrics-estimated-to-bring-in-over-9b-this-year (accessed on 17 August 2021).

- COVID-19 Impact on the Biometric System Market. 2021. Available online: https://www.marketsandmarkets.com/Market-Reports/next-generation-biometric-technologies-market-697.html (accessed on 17 August 2021).

- Five Common Biometric Techniques Compared. 2021. Available online: https://www.recogtech.com/en/knowledge-base/5-common-biometric-techniques-compared (accessed on 17 August 2021).

- Top Five Biometrics (Face, Fingerprint, Iris, Palm and Voice) Modalities Comparison. 2021. Available online: https://www.bayometric.com/biometrics-face-finger-iris-palm-voice (accessed on 17 August 2021).

- Daugman, J.G. High confidence visual recognition of persons by a test of statistical independence. IEEE Trans. Pattern Anal. Mach. Intell. 1993, 15, 1148–1161.

- Daugman, J.G. Biometric Personal Identification System Based on Iris Analysis. U.S. Patent 5291560A, 1 March 1994.

- Daugman, J.G. Introduction to Iris Recognition. University of Cambridge: Faculty of Computer Science and Technology. Available online: https://www.cl.cam.ac.uk/~jgd1000/iris_recognition.html (accessed on 12 June 2021).

- Wildes, R.P. Iris recognition: An emerging biometric technology. Proc. IEEE 1997, 85, 1348–1363.

- Quinn, G.; Grother, P.; Tabassi, E. Standard iris storage formats. In Handbook of Iris Recognition; Burge, M.J., Bowyer, K.W., Eds.; Springer: London, UK, 2013; pp. 55–66.

- Assessing the Photobiological Safety of LEDs; Underwriters Laboratories: Oak Brook, IL, USA, 2012; Available online: https://code-authorities.ul.com/wp-content/uploads/sites/40/2015/02/UL_WP_Final_Assessing-the-Photobiological-Safety-of-LEDs_v3_HR.pdf (accessed on 13 September 2021).

- IrisID, iCAM7. 2021. Available online: https://www.irisid.com/productssolutions/hardwareproducts/icam7-series (accessed on 20 August 2021).

- Granding, IR7pro. 2021. Available online: https://www.grandingteco.com/top-suppliers-biometric-scanner-iris-recognition-access-control-and-time-attendance-system-ir7-pro-granding-product (accessed on 20 August 2021).

- Iritech. MO2121. 2021. Available online: https://www.iritech.com/products/hardware/irishield%E2%84%A2-series (accessed on 20 August 2021).

- Thomas. TA-EF-45. 2021. Available online: https://www.kmthomas.ca/biometric-/950-iris-recognition-reader.html (accessed on 20 August 2021).

- Monaco, M.K. Color Space Analysis for Iris Recognition. Master’s Thesis, West Virginia University, Morgantown, WV, USA, 2007.

- Burge, M.J.; Monaco, M. Multispectral iris fusion and cross-spectrum matching. In Handbook of Iris Recognition; Burge, M.J., Bowyer, K.W., Eds.; Springer: London, UK, 2013; pp. 171–182.

- Proença, H. Iris recognition: On the segmentation of degraded images acquired in the visible wavelength. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 1502–1516.

- Matey, J.R.; Naroditsky, O.; Hanna, K.; Kolczynski, R.; LoIacono, D.J.; Mangru, S.; Tinker, M.; Zappia, T.M.; Zhao, W.Y. Iris on the Move: Acquisition of Images for Iris Recognition in Less Constrained Environments. Proc. IEEE 2006, 94, 1936–1947.

- Ackerman, D.A. Optics of iris imaging systems. In Handbook of Iris Recognition; Burge, M.J., Bowyer, K.W., Eds.; Springer: London, UK, 2013; pp. 367–393.

- Yoon, S.; Bae, K.; Park, K.R.; Kim, J. Pan-tilt-zoom based iris image capturing system for unconstrained user environments at a distance. In International Conference on Biometrics; Springer: Berlin/Heidelberg, Germany, 2007; pp. 653–662.

- Dong, W.; Sun, Z.; Tan, T.; Qiu, X. Self-adaptive iris image acquisition system. In Proceeding SPIE 6944, Biometric Technology for Human Identification V; International Society for Optics and Photonics: Washington, DC, USA, 2008; p. 694406.

- He, X.; Yan, J.; Chen, G.; Shi, P. Contactless Autofeedback Iris Capture Design. IEEE Trans. Instrum. Meas. 2008, 57, 1369–1375.

- Hu, Y.; Si, X. Iris image acquisition and real-time detection system using convolutional neural network. ResearchSquare 2021, preprint.

- Schmid, N.A.; Zuo, J.; Nicolo, F.; Wechsler, H. Iris quality metrics for adaptive authentication. In Handbook of Iris Recognition; Burge, M.J., Bowyer, K.W., Eds.; Springer: London, UK, 2013; pp. 67–84.

- Weideman, D. Automated focus determination. In Proceedings of the IEEE 1993 National Aerospace and Electronics Conference-NAECON 1993, Dayton, OH, USA, 24–28 May 1993; Volume 2, pp. 996–1002.

- Kuo, C.J.; Chiu, C. Improved auto-focus search algorithms for CMOS image-sensing module. J. Inf. Sci. Eng. 2011, 27, 1377–1393.

- Cao, D.; Gao, Y.; Li, H. Auto-focusing evaluation functions in digital image system. In Proceedings of the 2010 3rd International Conference on Advanced Computer Theory and Engineering, Chengdu, China, 20–22 August 2010; Volume 5, pp. 331–334.

- He, Y.; Cui, J.; Tan, T.; Wang, Y. Key techniques and methods for imaging iris in focus. In Proceedings of the 18th International Conference on Pattern Recognition, Hong Kong, China, 20–26 August 2006; pp. 557–561.

- Kang, B.J.; Park, K.R. Real-time image restoration for iris recognition systems. IEEE Trans. Syst. Man Cybern. B 2007, 37, 1555–1566.

- Yousefi, S.; Rahman, M.; Kehtarnavaz, N.; Gamadia, M. A new auto-focus sharpness function for digital and smart-phone cameras. In Proceedings of the 2011 IEEE International Conference on Consumer Electronics, Las Vegas, NV, USA, 9–12 January 2011; pp. 475–476.

- Yang, B.; Wang, J. Iris image quality evaluation method research based on gradation features. In Proceedings of the 2016 IEEE International Conference on Signal and Image Processing, Beijing, China, 13–15 August 2016; pp. 108–112.

- Lien, C.; Yang, F.; Chen, P.; Fang, Y. Efficient VLSI architecture for edge-oriented demosaicking. IEEE Trans. Circuits Syst. Video Technol. 2018, 28, 2038–2047.

- Bayer, B.E. Color Imaging Array. U.S. Patent 3971065, 20 July 1976.

- Gunturk, B.K.; Altunbasak, Y.; Mersereau, R.M. Color plane interpolation using alternating projections. IEEE Trans. Image Process. 2002, 11, 997–1013.

- Kimmel, R. Demosaicing: Image reconstruction from color CCD samples. IEEE Trans. Image Process. 1999, 8, 1221–1228.

- Lukac, R.; Plataniotis, K.N.; Hatzinakos, D.; Aleksic, M. A novel cost effective demosaicing approach. IEEE Trans. Consum. Electron. 2004, 50, 256–261.

- Bailey, D.; Randhawa, S.; Li, J.S.J. Advanced Bayer demosaicing on FPGAs. In Proceedings of the 2015 International Conference on Field Programmable Technology (FPT), Queenstown, New Zealand, 7–9 December 2015; pp. 216–220.

- Hagara, M.; Stojanović, R.; Bagala, T.; Kubinec, P.; Ondráček, O. Grayscale image formats for edge detection and for its FPGA implementation. Microprocess. Microsyst. 2020, 75, 103056.

- Cadik, M. Perceptual evaluation of color-to-grayscale image conversions. Comput. Graph. Forum 2008, 27, 1745–1754.

- Gooch, A.A.; Olsen, S.C.; Tumblin, J.; Gooch, B. Color2Gray: Salience-preserving color removal. ACM Trans. Graph. 2005, 24, 634–639.

- Camera Module KLT-M6MPA-OV56040 V3.4 NIR. Kai Lap Technologies. 2021. Available online: http://www.kailaptech.com/Product.aspx?id=2014&l1=712 (accessed on 13 September 2021).

- SAM E70/S70/V70/V71 Family Data Sheet. Microchip Technology. 2020. Available online: https://www.microchip.com/en-us/product/ATSAMS70J19#document-table (accessed on 13 September 2021).

- 7 Series FPGAs Data Sheet: Overview. Xilinx. 2020. Available online: https://www.xilinx.com/content/dam/xilinx/support/documentation/data_sheets/ds180_7Series_Overview.pdf (accessed on 13 September 2021).

- 2048K × 8bit High Speeed Cmos Sram. Lyontek. 2014. Available online: http://www.lyontek.com.tw/pdf/hs/LY61L20498A-1.0.pdf (accessed on 13 September 2021).

- PLCC-4 Tricolor Black Surface LED, ASMB-MTB0-0A3A2. Avago Technologies. 2015. Available online: https://docs.broadcom.com/doc/AV02-4186EN (accessed on 13 September 2021).

- High Speed Infrared Emitting Diodes 940 nm, VSMB2020X01. Vishay. 2011. Available online: https://www.vishay.com/docs/81930/vsmb2000.pdf (accessed on 13 September 2021).

- Silicon PIN Photodiode, VEMD2520X01. Vishay. Rev. 1.2, Document Number: 83294. 2011. Available online: https://www.vishay.com/docs/83294/vemd2500.pdf (accessed on 13 September 2021).

- FT232R Usb Uart Ic. FTDI. 2020. Available online: https://ftdichip.com/wp-content/uploads/2020/08/DS_FT232R.pdf (accessed on 13 September 2021).

- Matlab. Mathworks. 2021. Available online: https://uk.mathworks.com/products/matlab.html (accessed on 2 June 2021).

- IDK-1108R-45SVA1E. Advantech. 2012. Available online: https://www.advantech.com/products/60f5fbd2-6b02-490c-98bc-88cd13279638/idk-1108/mod_d36e2e00-0217-4c24-ae52-9366a1d62d2f (accessed on 13 September 2021).

- DS90C385A. Texas Instruments. 2013. Available online: https://www.ti.com/lit/ds/symlink/ds90c385a.pdf?ts=1631464072720&ref_url=https%253A%252F%252Fwww.google.com%252F (accessed on 13 September 2021).

- Etsy, Eyes for Dolls. Available online: https://www.etsy.com/listing/871244018/eyes-for-dolls-23-colors-11-sizes-good?ga_order=most_relevant&ga_search_type=all&ga_view_type=gallery&ga_search_query=toy+eyes&ref=sr_gallery-2-8&bes=1&col=1 (accessed on 24 August 2021).

- Westin, S.H. ISO 12233 Chart. Cornell University. Available online: https://www.graphics.cornell.edu/~westin/misc/res-chart.html (accessed on 17 June 2021).

- European Standard EN-62471:2008; CENELEC: Brussels, Belgium, 2008.

- Touchscreen Display Device for Power & Energy Measurement, Maestro, P/N 201235B. Gentec. Available online: https://www.gentec-eo.com/products/maestro (accessed on 19 June 2021).

- Photodiode Detector for Laser Power Measurements up to 300 mW, PH100-SI-HA-OD1-D0, P/N 202683. Gentec. Available online: https://www.gentec-eo.com/products/ph100-si-ha-od1-d0 (accessed on 19 June 2021).

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).