Depth-Camera-Aided Inertial Navigation Utilizing Directional Constraints

Abstract

1. Introduction

- We use the directional constraint of the non-metric visual translation to aid the inertial solution. This builds on our preliminary work [5] and provides more thorough results using a public dataset as well as outdoor experiments.

- Our Inertial-Kinect odometry system integrates both the full 6-degrees-of-freedom (6DOF) information (rotation and translation) and the partial 5DOF information (rotation and scale-ambiguous translation) from the Kinect to estimate the pose of an aerial vehicle. Most existing works have been directed at indoor applications in which the full 6DOF Kinect poses are available.

- We demonstrate real-time, front-end odometry while the back-end pose-graph SLAM supports low-priority multi-threaded processing for the keyframe optimization. The real-time odometry outputs are subsequently used for hovering flight control in a cluttered outdoor environment.
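To make the idea of a scale-ambiguous (5DOF) translation concrete, the following is a minimal sketch of one common way to write a directional constraint. It is an illustration under assumptions, not the measurement model used in this paper: the function names (`unit`, `cross`, `directional_residual`) are hypothetical, and the residual is taken as the cross product between the unit direction of the visual translation and the translation predicted by the inertial solution. The residual vanishes when the two are parallel, whatever the unknown visual scale.

```python
import math


def unit(v):
    """Return v scaled to unit length (v must be non-zero)."""
    norm = math.sqrt(sum(x * x for x in v))
    if norm == 0.0:
        raise ValueError("direction vector must be non-zero")
    return [x / norm for x in v]


def cross(a, b):
    """Cross product of two 3-vectors."""
    return [
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    ]


def directional_residual(visual_translation, predicted_translation):
    """Residual of an assumed directional constraint.

    Only the direction of the visual translation is used, so its
    unknown scale has no effect on the result.
    """
    direction = unit(visual_translation)
    return cross(direction, predicted_translation)


# The visual translation is known only up to scale: both calls agree.
predicted = [0.10, 0.02, 0.00]          # metres, from inertial integration
residual_a = directional_residual([1.0, 0.0, 0.0], predicted)
residual_b = directional_residual([5.0, 0.0, 0.0], predicted)
```

Note that a cross-product residual is also zero for anti-parallel vectors, so a practical filter would need an additional sign check or a different parameterization of the direction.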

2. Related Work

3. Methods

3.1. Inertial Odometry

- is the Earth rotation rate in the navigation frame;

- is the acceleration due to gravity;

- is the accelerometer measurement in the body frame;

- is the gyroscope measurement in the body frame;

- is the accelerometer bias in the body frame;

- is the gyroscope bias in the body frame;

- is a direction cosine matrix transforming a vector from the body frame to the navigation frame;

- is a matrix transforming a body rate to an Euler angle rate,

where $s_{(\cdot)}$, $c_{(\cdot)}$, and $t_{(\cdot)}$ are shorthand notations for $\sin(\cdot)$, $\cos(\cdot)$, and $\tan(\cdot)$, respectively.
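The quantities listed above can be combined into a single propagation step, sketched below. This is a minimal illustration under stated assumptions rather than the paper's implementation: it assumes a ZYX (yaw-pitch-roll) Euler convention, a north-east-down navigation frame, simple first-order (Euler) integration, and it drops the Earth rotation term for brevity. All function and parameter names are hypothetical.

```python
import math


def dcm_body_to_nav(roll, pitch, yaw):
    """Direction cosine matrix, body -> navigation (ZYX convention assumed)."""
    sr, cr = math.sin(roll), math.cos(roll)
    sp, cp = math.sin(pitch), math.cos(pitch)
    sy, cy = math.sin(yaw), math.cos(yaw)
    return [
        [cp * cy, sr * sp * cy - cr * sy, cr * sp * cy + sr * sy],
        [cp * sy, sr * sp * sy + cr * cy, cr * sp * sy - sr * cy],
        [-sp, sr * cp, cr * cp],
    ]


def euler_rate_matrix(roll, pitch):
    """Matrix mapping a body rate to an Euler angle rate (singular at pitch = +/-90 deg)."""
    sr, cr = math.sin(roll), math.cos(roll)
    tp, cp = math.tan(pitch), math.cos(pitch)
    return [
        [1.0, sr * tp, cr * tp],
        [0.0, cr, -sr],
        [0.0, sr / cp, cr / cp],
    ]


def matvec(m, v):
    """Multiply a 3x3 matrix by a 3-vector."""
    return [sum(m[i][j] * v[j] for j in range(3)) for i in range(3)]


def propagate(pos, vel, euler, accel_meas, gyro_meas,
              accel_bias, gyro_bias, gravity, dt):
    """One first-order strapdown step; the Earth rotation term is neglected here."""
    f_b = [a - b for a, b in zip(accel_meas, accel_bias)]   # bias-corrected specific force
    w_b = [w - b for w, b in zip(gyro_meas, gyro_bias)]     # bias-corrected body rate
    c_bn = dcm_body_to_nav(*euler)
    acc_n = [f + g for f, g in zip(matvec(c_bn, f_b), gravity)]
    euler_dot = matvec(euler_rate_matrix(euler[0], euler[1]), w_b)
    new_pos = [p + v * dt for p, v in zip(pos, vel)]
    new_vel = [v + a * dt for v, a in zip(vel, acc_n)]
    new_euler = [e + de * dt for e, de in zip(euler, euler_dot)]
    return new_pos, new_vel, new_euler


# A level, stationary sensor: the measured specific force cancels gravity.
state = propagate([0.0, 0.0, 0.0], [0.0, 0.0, 0.0], [0.0, 0.0, 0.0],
                  [0.0, 0.0, -9.81], [0.0, 0.0, 0.0],
                  [0.0, 0.0, 0.0], [0.0, 0.0, 0.0],
                  [0.0, 0.0, 9.81], 0.01)
```

In this sketch the accelerometer and gyroscope biases are simply subtracted from the raw measurements; in a filter they would be part of the estimated state.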

3.2. Visual Pose Measurement

6DOF Pose Measurement

3.3. 5DOF Measurement Using Directional Constraints

3.4. Integration Filter with Directional Constraints

3.5. Pose-Graph Optimization

3.6. Observability of the System

4. Results and Discussion

4.1. Depth Calibration

4.2. Indoor Experiment

4.3. Public Indoor Dataset

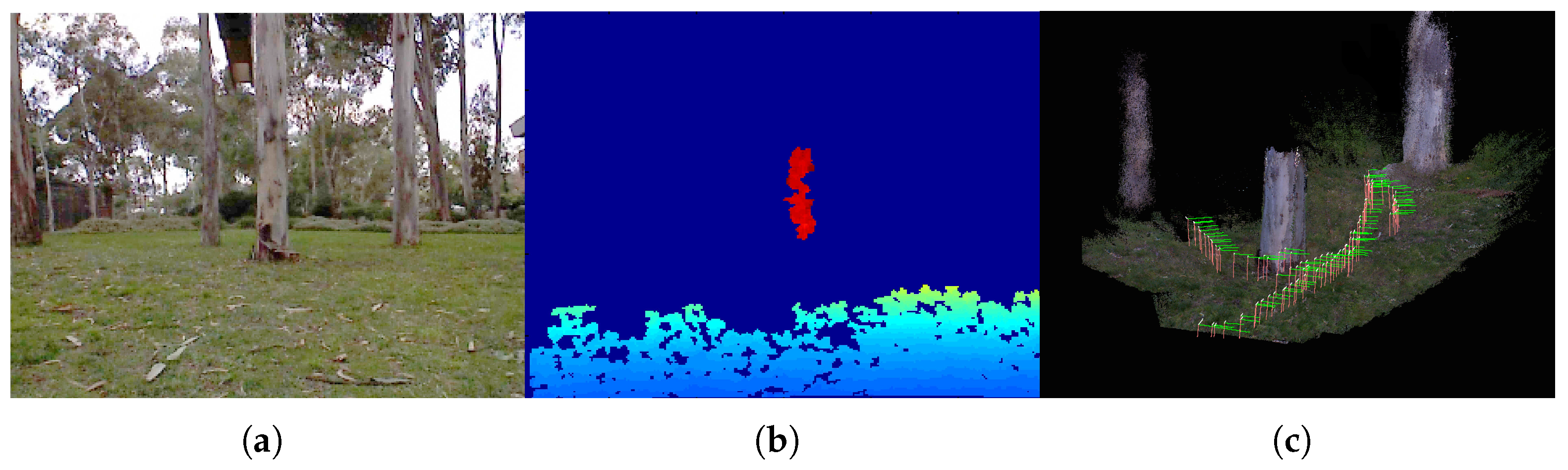

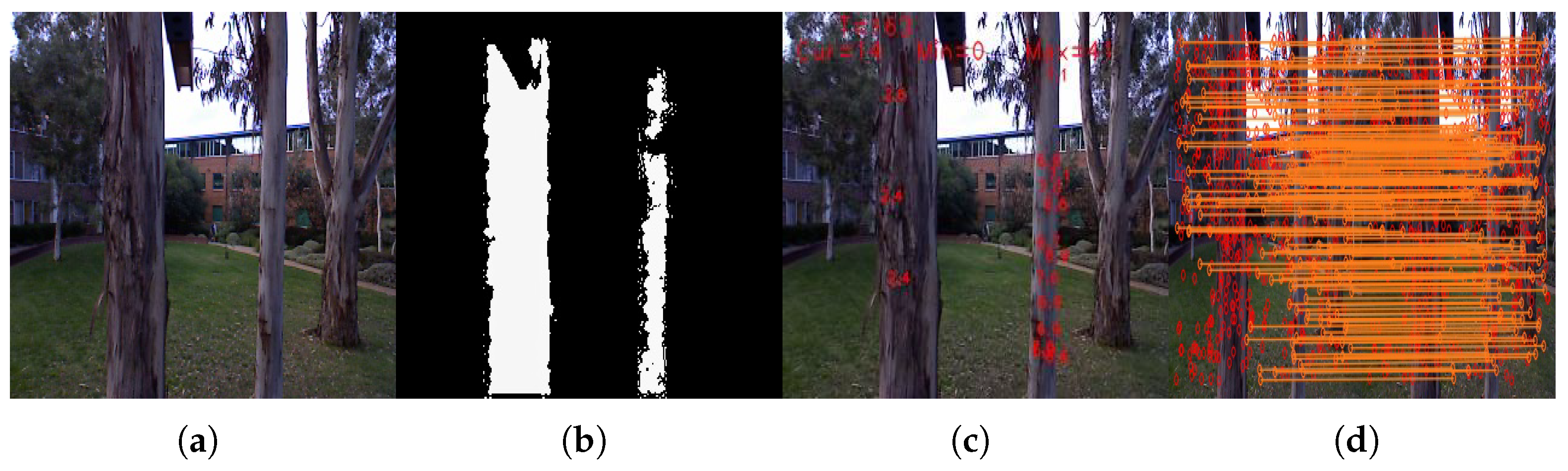

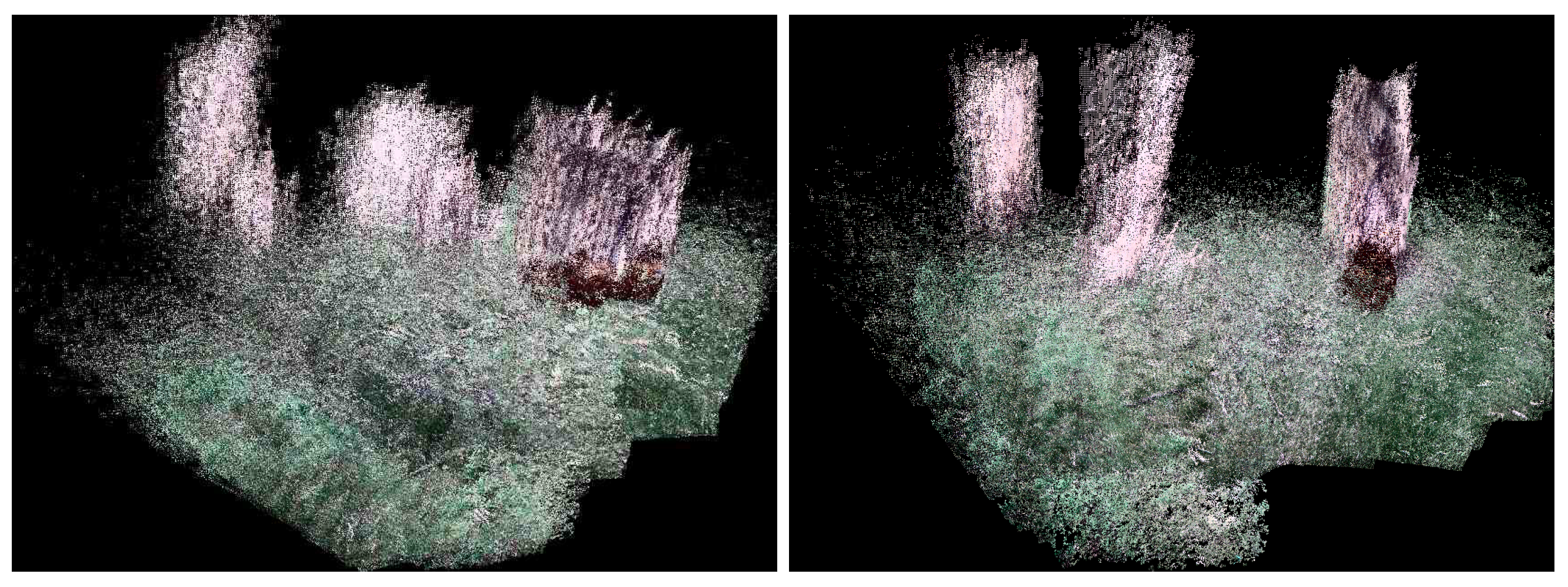

4.4. Outdoor Experiment

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest

References

- Zhang, H.; Ye, C. DUI-VIO: Depth Uncertainty Incorporated Visual Inertial Odometry based on an RGB-D Camera. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Las Vegas, NV, USA, 24 October–24 January 2021; pp. 5002–5008.

- Li, H.; Wen, I.D.X.; Guo, H.; Yu, M. Research into Kinect/Inertial Measurement Units Based on Indoor Robots. Sensors 2018, 18, 839.

- Chai, W.; Chen, C. Enhanced Indoor Navigation Using Fusion of IMU and RGB-D Camera. In Proceedings of the International Conference on Computer Information Systems and Industrial Applications (CISIA), Bangkok, Thailand, 28–29 June 2015.

- Cho, H.; Yeon, S.; Choi, H.; Doh, N. Detection and Compensation of Degeneracy Cases for IMU-Kinect Integrated Continuous SLAM with Plane Features. Sensors 2018, 18, 935.

- Qayyum, U.; Kim, J. Inertial-Kinect Fusion for Outdoor 3D Navigation. In Proceedings of the Australasian Conference on Robotics and Automation, Sydney, Australia, 2–4 December 2013.

- Dai, X.; Mao, Y.; Huang, T.; Li, B.; Huang, D. Navigation of Simultaneous Localization and Mapping by Fusing RGB-D Camera and IMU on UAV. In Proceedings of the CAA Symposium on Fault Detection, Supervision and Safety for Technical Processes, Xiamen, China, 5–7 July 2019; pp. 6–11.

- Diel, D.D.; DeBitetto, P.; Teller, S. Epipolar Constraints for Vision-Aided Inertial Navigation. In Proceedings of the Seventh IEEE Workshops on Applications of Computer Vision, Breckenridge, CO, USA, 5–7 January 2005; Volume 1, pp. 221–228.

- Fang, W.; Zheng, L. Rapid and robust initialization for monocular visual inertial navigation within multi-state Kalman filter. Chin. J. Aeronaut. 2018, 31, 148–160.

- Pire, T.; Fischer, T.; Castro, G.; Cristóforis, P.D.; Civera, J.; Berlles, J.J. S-PTAM: Stereo Parallel Tracking and Mapping. Robot. Auton. Syst. 2017, 93, 27–42.

- Huang, S.; Bachrach, A.; Henry, P.; Krainin, M.; Maturana, D.; Fox, D.; Roy, N. Visual Odometry and Mapping for Autonomous Flight Using an RGB-D Camera. In Robotics Research, Proceedings of the 15th International Symposium on Robotics Research (ISRR), Flagstaff, AZ, USA, 28 August–1 September 2011; Springer: Cham, Switzerland, 2011.

- Izadi, S.; Kim, D.; Hilliges, O.; Molyneaux, D.; Newcombe, R.; Kohli, P.; Shotton, J.; Hodges, S.; Freeman, D.; Davison, A.; et al. KinectFusion: Real-Time 3D reconstruction and interaction using a moving depth camera. In Proceedings of the 24th Annual ACM Symposium on User Interface Software and Technology, Santa Barbara, CA, USA, 16–19 October 2011.

- Dryanovski, I.; Valenti, R.; Xiao, J. Fast Visual Odometry and Mapping from RGB-D Data. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Karlsruhe, Germany, 6–10 May 2013.

- Scherer, S.A.; Dube, D.; Zell, A. Using depth in visual simultaneous localization and mapping. In Proceedings of the Robotics and Automation (ICRA), Saint Paul, MN, USA, 14–18 May 2012; pp. 5216–5221.

- Whelan, T.; McDonald, J.; Johannsson, H.; Kaess, M.; Leonard, J. Robust Real-Time Visual Odometry for Dense RGB-D Mapping. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Karlsruhe, Germany, 6–10 May 2013.

- Hu, G.; Huang, S.; Zhao, L.; Alempijevic, A.; Dissanayake, G. A robust RGB-D SLAM algorithm. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vilamoura-Algarve, Portugal, 7–12 October 2012; pp. 1714–1719.

- Qin, T.; Li, P.; Shen, S. VINS-Mono: A Robust and Versatile Monocular Visual-Inertial State Estimator. IEEE Trans. Robot. 2018, 34, 1004–1020.

- Fu, D.; Xia, H.; Qiao, Y. Monocular Visual-Inertial Navigation for Dynamic Environment. Remote Sens. 2021, 13, 1610.

- Yang, Y.; Geneva, P.; Zuo, X.; Eckenhoff, K.; Liu, Y.; Huang, G. Tightly-Coupled Aided Inertial Navigation with Point and Plane Features. In Proceedings of the International Conference on Robotics and Automation, Montreal, QC, Canada, 20–24 May 2019; pp. 6094–6100.

- Jones, E.; Soatto, S. Visual-inertial navigation, mapping and localization: A scalable real-time causal approach. Int. J. Robot. Res. 2011, 30, 407–430.

- Mourikis, I.; Roumeliotis, S. A multistate constraint Kalman filter for vision-aided inertial navigation. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Rome, Italy, 10–14 April 2007.

- Konolige, K.; Agrawal, M.; Sola, J. Large scale visual odometry for rough terrain. In Proceedings of the International Symposium on Research in Robotics (ISRR), Hiroshima, Japan, 26–29 November 2007.

- Bouvrie, B. Improving RGBD Indoor Mapping with IMU Data. Master’s Thesis, Delft University of Technology, Delft, The Netherlands, 2011.

- Ovren, H.; Forssen, P.; Tornqvist, D. Why Would I Want a Gyroscope on my RGB-D Sensor? In Proceedings of the IEEE Winter Vision Meetings, Workshop on Robot Vision (WoRV13), Clearwater Beach, FL, USA, 15–17 January 2013.

- Weiss, S.; Siegwart, R. Real-Time Metric State Estimation for Modular Vision-Inertial Systems. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Shanghai, China, 9–13 May 2011.

- Nuetzi, G.; Weiss, S.; Scaramuzza, D.; Siegwart, R. Fusion of IMU and Vision for Absolute Scale Estimation in Monocular SLAM. J. Intell. Robot. Syst. 2011, 61, 287–299.

- Horn, B. Closed-form solution of absolute orientation using unit quaternions. J. Opt. Soc. Am. A 1987, 4, 629–642.

- Herrera, C.; Kannala, D.; Heikkila, J. Joint depth and color camera calibration with distortion correction. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 2058–2064.

- Kelly, J.; Sukhatme, S. Visual-inertial sensor fusion: Localization, mapping and sensor-to-sensor self-calibration. Int. J. Robot. Res. 2011, 30, 56–79.

- Qayyum, U.; Kim, J. Seamless aiding of inertial-slam using Visual Directional Constraints from a monocular vision. In Proceedings of the Intelligent Robot Systems (IROS), Vilamoura-Algarve, Portugal, 7–12 October 2012; pp. 4205–4210.

- Sturm, J.; Engelhard, N.; Endres, F.; Burgard, W.; Cremers, D. A benchmark for the evaluation of RGB-D SLAM systems. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vilamoura-Algarve, Portugal, 7–12 October 2012.

- Khoshelham, K.; Elberink, S.O. Accuracy and Resolution of Kinect Depth Data for Indoor Mapping Applications. Sensors 2012, 12, 1437.

| () | () | () | ||||

|---|---|---|---|---|---|---|

| Monocular Visual-Inertial [29] | ||||||

| Inertial-Kinect w/o directional constraints | ||||||

| Inertial-Kinect with directional constraints |

| Dataset | Trans (m/s) | Max Trans (m/s) | Rotation (°/s) | Max Rotation (°/s) |
|---|---|---|---|---|
| fr1/xyz | 0.120 | 0.053 | 1.342 | 5.415 |
| fr1/desk | 0.011 | 0.372 | 4.219 | 9.173 |
| fr2/xyz | 0.301 | 0.017 | 0.321 | 4.310 |
| fr2/desk | 0.147 | 0.172 | 3.102 | 3.999 |

| Algorithm | fr1 Desk (Median) | fr1 Desk (Max) | fr2 Desk (Median) | fr2 Desk (Max) | fr1 Room (Median) | fr1 Room (Max) |
|---|---|---|---|---|---|---|
| RGB-D SLAM [14] | 0.068 | 0.231 | 0.118 | 0.346 | 0.152 | 0.419 |
| Monocular SLAM [29] | 0.931 | 1.763 | 0.982 | 1.621 | 2.531 | 0.792 |
| REVO-KF [31] | - | 0.547 | - | 0.095 | - | 0.288 |
| REVO-FF [31] | - | 0.186 | - | 0.329 | - | 0.305 |
| FOVIS [10] | 0.221 | 0.799 | 0.112 | 0.217 | −0.238 | 0.508 |
| Proposed Approach | 0.024 | 0.214 | 0.012 | 0.092 | 0.133 | 0.317 |

| Module | Processing Time per Frame (ms) |

|---|---|

| Data acquisition | |

| Feature detection and matching | |

| Motion estimation | |

| Pose update |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Qayyum, U.; Kim, J. Depth-Camera-Aided Inertial Navigation Utilizing Directional Constraints. Sensors 2021, 21, 5913. https://doi.org/10.3390/s21175913