Abstract

Since remote sensing images are one of the main sources from which people obtain the information they need, image quality is particularly important. Nevertheless, noise inevitably exists in the image, and the targets are usually blurred during acquisition by the imaging system, which degrades image quality. In this paper, a novel preprocessing algorithm is proposed that simultaneously smooths noise and enhances edges, improving the visual quality of remote sensing images. It consists of an improved adaptive spatial filter, a weighted filter that integrates both noise removal and edge sharpening. Its processing parameters are flexible and can be adjusted for different images. The experimental results confirm that the proposed method outperforms the existing spatial algorithms both visually and quantitatively. It can play an important role in the remote sensing field by helping to extract more information about targets of interest.

1. Introduction

The remote sensing image is an important source from which people obtain a variety of useful information; thus, many airborne and spaceborne imaging systems, based on photoelectric detectors with high sensitivity, have been developed to acquire high-quality remote sensing images. Image quality is particularly important because it affects the accurate interpretation and perception of the image. Nevertheless, noise inevitably exists in the image, and the targets are usually blurred to a certain extent during acquisition by the imaging system, which degrades image quality [1,2,3,4]. This makes it hard for human observers to discriminate the fine details of the images, such as edges and other features.

Many kinds of noise interfere with the imaging system, principally originating from the photoelectric detectors: for example, photon shot noise, dark current noise, and thermal noise. The images acquired by the system always include noise, which affects their final display quality [5,6,7]. Therefore, image preprocessing techniques can be applied to obtain image data of high definition and high signal-to-noise ratio (SNR) by denoising and edge sharpening. High-quality images carry more information and are more valuable. The desired processing should both smooth the noise in uniform regions to improve the SNR and sharpen the edges in target regions to achieve clearer images [8,9]. Thus, the design of the preprocessing algorithm is particularly critical. It should neither amplify the noise nor obscure useful edge information.

Currently, there are many image preprocessing methods, which can be broadly divided into two kinds: space domain and transform domain. The objective of any filtering method is to simultaneously remove noise and retain the important features of the image. The space domain methods mainly include the Wiener filter, Gaussian filter, bilateral filter, neighborhood median filter, average filter, and so on [5,10,11,12,13,14,15,16,17]. They operate directly on the grayscale of each pixel to achieve the desired effect. The transform domain methods usually rely on the discrete wavelet transform (DWT), the discrete cosine transform (DCT), the Fourier transform, etc. [5,11,18,19,20,21,22,23]. The image is first transformed into the frequency domain, where the processing operations are carried out. Then, the inverse transform is performed to obtain the resultant image. Some of these approaches are quite computationally intensive. By contrast, the space domain methods are easier to implement because they require no transforms. They can smooth the noise effectively, but most of them only preserve the edge information of the images and are unable to enhance it. The high-frequency region of an image often plays a vital role in its visual appearance, with respect to edges and contrast. The classic linear unsharp masking filter (UMF) is a popular sharpening technique that is capable of magnifying the high-frequency content, but it is highly sensitive to the noise present in the original image [15,16,24,25,26]. Although the algorithm presented in Reference [27], which combines the bilateral filter and the UMF, can sharpen the edges, the resultant images are not satisfactory because they exhibit overshoot and undershoot artifacts.

In this work, a novel spatial preprocessing algorithm is proposed, called the improved adaptive spatial filter (IASF). It is capable of both edge sharpening and noise smoothing, and its processing parameters are flexible and can be adjusted for different images. It can enhance the edges in the target region without artifacts and smooth the noise in the uniform region without reducing the useful information. This makes the IASF well suited to processing remote sensing images. Its performance in edge sharpening and denoising is analyzed and compared with that of the commonly used spatial algorithms. The experimental results indicate that the proposed method performs better in both visual effect and objective data. It can play an important role in the remote sensing field in making images clearer with higher SNR and better display quality.

2. Related Work

In this section, we mainly discuss the spatial filtering techniques that are the most related to our proposed algorithm.

2.1. Wiener Filter

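It is a classic statistics-based approach that filters out the noise present in the image while preserving the image's details [5,12]. Its filtering principle is stated as follows:

$$\hat{f}(i,j) = \mu + \frac{\sigma^2 - \sigma_n^2}{\sigma^2}\,\bigl(g(i,j) - \mu\bigr) \qquad (1)$$

where $g(i,j)$ is the intensity value of pixel (i,j) and $\mu$ is the average intensity of pixels in the M×N window centered at (i,j). $\sigma_n^2$ and $\sigma^2$ represent the variances of the noise and the actual image, respectively. Its performance can be analyzed in two cases.

Case 1: “Target region.” The variance $\sigma^2$ is far greater than $\sigma_n^2$, that is, $\sigma^2 \gg \sigma_n^2$, and we can obtain $\sigma_n^2/\sigma^2 \approx 0$ and $(\sigma^2 - \sigma_n^2)/\sigma^2 \approx 1$; thus, $\hat{f}(i,j) \approx g(i,j)$. This means that the filter can preserve the edge information of the image.

Case 2: “Uniform region.” The variance $\sigma^2$ approximates $\sigma_n^2$, that is, $\sigma^2 \approx \sigma_n^2$, and we can obtain $\sigma_n^2/\sigma^2 \approx 1$ and $(\sigma^2 - \sigma_n^2)/\sigma^2 \approx 0$; thus, $\hat{f}(i,j) \approx \mu$. This means that the filter becomes an average filter in order to smooth the noise of the image.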

Therefore, it can be concluded that the Wiener filter is an edge-preserving smoothing filter with advantages of self-adaptation and easy-implementation. However, it only tries to preserve the edges instead of enhancing them.
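The two limiting cases can be checked numerically. The following is a minimal pure-Python sketch, assuming the standard local-statistics form of the adaptive Wiener filter (local mean plus a variance-dependent gain applied to the deviation from that mean). The function names, the clipped border handling, and the externally supplied noise variance are illustrative assumptions rather than details taken from the paper.

```python
def local_stats(img, i, j, radius):
    """Mean and variance of the window centered at (i, j), clipped at the borders."""
    rows, cols = len(img), len(img[0])
    vals = [img[k][l]
            for k in range(max(0, i - radius), min(rows, i + radius + 1))
            for l in range(max(0, j - radius), min(cols, j + radius + 1))]
    mean = sum(vals) / len(vals)
    var = sum((v - mean) ** 2 for v in vals) / len(vals)
    return mean, var


def wiener_filter(img, noise_var, radius=1):
    """Adaptive Wiener filter: local mean + gain * (pixel - local mean)."""
    out = []
    for i, row in enumerate(img):
        out_row = []
        for j, g in enumerate(row):
            mean, var = local_stats(img, i, j, radius)
            # The gain is clamped at 0 so that windows whose variance does not
            # exceed the noise variance fall back to the local mean.
            gain = max(var - noise_var, 0.0) / var if var > 0 else 0.0
            out_row.append(mean + gain * (g - mean))
        out.append(out_row)
    return out
```

When `noise_var` is much smaller than the local variance, the gain approaches 1 and the pixel is left essentially unchanged (Case 1); when `noise_var` is at or above the local variance, the gain is 0 and the output is the local mean (Case 2).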

2.2. Bilateral Filter

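The denoising bilateral filter builds on the low-pass Gaussian filter and considers both the distance between pixels and the intensity variations of the image [10,16]; these two components are the domain filter and the range filter, respectively. Its working principle can be expressed as follows:

$$\hat{f}(i,j) = \sum_{(k,l) \in \Omega_{i,j}} h(i,j;k,l)\, g(k,l) \qquad (2)$$

where $\hat{f}$ is the restored image, $g$ is the input image, and $h(i,j;k,l)$ is the response at (i,j) to an impulse at (k,l) [10]. The specific definition of $h(i,j;k,l)$ can be expressed as follows:

$$h(i,j;k,l) = r_{i,j}^{-1} \exp\left(-\frac{(i-k)^2 + (j-l)^2}{2\sigma_d^2}\right) \exp\left(-\frac{\bigl(g(k,l) - g(i,j)\bigr)^2}{2\sigma_r^2}\right) \qquad (3)$$

where (i,j) is the central pixel of the window $\Omega_{i,j}$, with $(k,l) \in \Omega_{i,j}$; $\sigma_d$ and $\sigma_r$ are the standard deviations of the domain and range Gaussian filters, respectively; and $r_{i,j}$ is the normalization factor that keeps the average intensity of the whole image unchanged. Its definition is given by the following.

$$r_{i,j} = \sum_{(k,l) \in \Omega_{i,j}} \exp\left(-\frac{(i-k)^2 + (j-l)^2}{2\sigma_d^2}\right) \exp\left(-\frac{\bigl(g(k,l) - g(i,j)\bigr)^2}{2\sigma_r^2}\right) \qquad (4)$$

The domain low-pass Gaussian filter gives higher weight to pixels that are spatially close to the central pixel. The range low-pass Gaussian filter gives higher weight to pixels that are similar to the central pixel in intensity. Thus, the performance of the bilateral filter mainly depends on two parameters, $\sigma_d$ and $\sigma_r$, when the size of the window is fixed.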
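The interplay of the domain and range weights can be sketched in a few lines of pure Python. This is a minimal illustration of the conventional bilateral filter, not the paper's implementation; the function name, the `radius` parameter, and the clipped border handling are assumptions.

```python
import math


def bilateral_filter(img, sigma_d, sigma_r, radius=1):
    """Conventional bilateral filter on a 2-D list of intensities."""
    rows, cols = len(img), len(img[0])
    out = [[0.0] * cols for _ in range(rows)]
    for i in range(rows):
        for j in range(cols):
            num = 0.0   # weighted sum of neighbor intensities
            norm = 0.0  # normalization factor (sum of weights)
            for k in range(max(0, i - radius), min(rows, i + radius + 1)):
                for l in range(max(0, j - radius), min(cols, j + radius + 1)):
                    # Domain weight: larger for spatially close pixels.
                    w_d = math.exp(-((i - k) ** 2 + (j - l) ** 2) / (2 * sigma_d ** 2))
                    # Range weight: larger for pixels of similar intensity.
                    w_r = math.exp(-((img[k][l] - img[i][j]) ** 2) / (2 * sigma_r ** 2))
                    num += w_d * w_r * img[k][l]
                    norm += w_d * w_r
            # norm is always positive: the center pixel contributes weight 1.
            out[i][j] = num / norm
    return out
```

With a small `sigma_r`, pixels on the far side of a strong edge receive a negligible range weight, so the edge is preserved while flat regions are averaged.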

Zhang and Allebach focused on the characteristics of the range filter, introducing an offset ε to sharpen the edge information [10]. The weighted factor of the range filter then becomes the following:

$$w_r(i,j;k,l) = \exp\left(-\frac{\bigl(g(k,l) - g(i,j) - \varepsilon(i,j)\bigr)^2}{2\sigma_r^2}\right) \qquad (5)$$

where $\varepsilon(i,j)$ represents the grayscale offset of the center pixel $g(i,j)$. When $\varepsilon = 0$, $w_r$ is the conventional weighted factor of the range filter. If $g(k,l)$ is similar to the center pixel $g(i,j)$, its weighted factor will be larger. Therefore, the filtered grayscale of the pixel stays close to $g(i,j)$, which preserves the edges. When $\varepsilon \neq 0$, the filter gives higher weight to pixels that are similar to $g(i,j) + \varepsilon$. Thus, this filter makes the grayscale of the pixel approach $g(i,j) + \varepsilon$. It can be observed that this filtering algorithm can enhance the edges, provided a reasonable $\varepsilon$ is selected.
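The effect of the offset on the range weight can be illustrated with a short sketch. It assumes the offset enters the Gaussian range kernel as a shift of the center intensity, as in the adaptive bilateral filter of Reference [10]; the function and argument names are hypothetical.

```python
import math


def range_weight(center, neighbor, sigma_r, offset=0.0):
    """Range-filter weight of `neighbor` in a window centered on `center`.

    With offset = 0 this is the conventional range weight; a nonzero offset
    moves the peak of the weight from `center` to `center + offset`.
    """
    diff = neighbor - center - offset
    return math.exp(-(diff * diff) / (2.0 * sigma_r ** 2))
```

For example, with `offset=10` a neighbor at intensity `center + 10` receives the maximum weight of 1, whereas a neighbor equal to the center is down-weighted, so the filtered value is pulled toward `center + 10`.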

2.3. UMF Filter

The unsharp mask filter (UMF), a high-pass linear filtering method, is a typical edge-enhancing algorithm with a very low computational cost [24,25,26]. The neighborhood of the filtering operator is a fixed 3 × 3 matrix, as shown in Equation (6), where the parameter $\alpha$ determines the direction of the edge sharpening and belongs to the interval [0, 1]. If $\alpha = 0$, the filter sharpens the image in the horizontal and vertical directions. If $\alpha = 1$, the sharpening direction changes to both diagonals. If $0 < \alpha < 1$, the edges are sharpened in a superposition of these directions. Although UMF can enhance the edge information effectively, it also amplifies the noise in the image.
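A sketch of how such a direction-dependent 3 × 3 kernel can be built is given below. It assumes that Equation (6) follows the common parameterized unsharp kernel (identity minus a parameterized Laplacian, the form used for example by MATLAB's `fspecial('unsharp', alpha)`); this correspondence and the function name are assumptions, not statements from the paper.

```python
def unsharp_kernel(alpha):
    """3x3 unsharp-masking kernel for a shape parameter alpha in [0, 1]."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    scale = 1.0 / (alpha + 1.0)
    corner = -alpha * scale          # diagonal neighbors
    edge = (alpha - 1.0) * scale     # horizontal and vertical neighbors
    center = (alpha + 5.0) * scale   # central pixel
    return [[corner, edge, corner],
            [edge, center, edge],
            [corner, edge, corner]]
```

With `alpha = 0` only the horizontal and vertical neighbors carry nonzero weights, with `alpha = 1` only the diagonal neighbors do, and intermediate values mix both. The coefficients always sum to 1, so flat regions keep their brightness.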

3. Improved Adaptive Spatial Filter (IASF) for Image Sharpening and Denoising

A weighted normalization filtering algorithm, called the improved adaptive spatial filter (IASF), is proposed to suppress noise efficiently, further enhancing the SNR, and to sharpen the main edge information at the same time. It introduces an improved range filter and combines it with the average filter. The principle is as follows:

The output is a weighted sum of the output of the improved range filter and the output of the average filter. The parameters a and b are the normalized weighted factors of the improved range filter and the average filter, respectively, which make the filter adaptable to different image data.

Therefore, the proposed method has the advantages of both filters mentioned above. The average filter can smooth the noise effectively, and the improved range filter can sharpen the edges of the image. Consequently, the IASF filter integrates the abilities of both noise suppressing and edge sharpening, which meets the processing need of remote sensing image data.

The performance of the proposed algorithm is closely related to the design of the improved range filter and the reasonable selections of weighted factors a and b. These will be analyzed and discussed in the following part.

3.1. Design of the Improved Range Filter

It is necessary to select a reasonable grayscale offset for each pixel in order to enhance the edge information, which will be analyzed in the following two cases. There is a mean grayscale value, denoted as MEAN, in the neighborhood .

- (a)

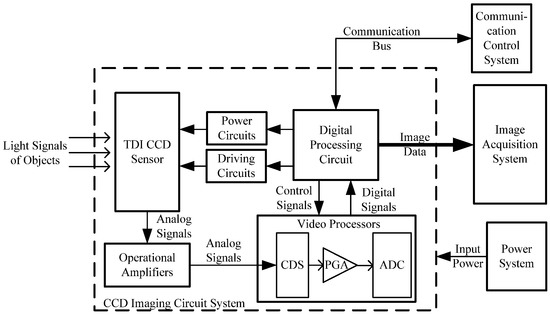

- . The pixel moves to MEAN, resulting in a blurred image, because each pixel tends to the average value of its neighborhood in the image, as shown in Figure 1b.

Figure 1. The different results of the improved range filter with different grayscale offsets. (a) Original image; (b); (c).

Figure 1. The different results of the improved range filter with different grayscale offsets. (a) Original image; (b); (c).

- (b)

- . The pixel moves away from MEAN, making each pixel tend to , resulting in the sharpening effect, as shown in Figure 1c. It can be divided into three cases. If , that is , the grayscale of the pixel decreases. Otherwise, if , , the grayscale of the pixel increases. Otherwise, if , , it becomes a conventional range filter.

According to the above analysis, the improved range filter can effectively reduce the number of transition pixels at the edges and increase the gradient of the grayscale variation, thereby achieving edge enhancement, when the pixel moves away from MEAN. The proposed method therefore adopts the offset of case (b), which moves the pixel away from MEAN, as the grayscale offset in order to sharpen edges.

3.2. Determination of the Weighted Factors of IASF

The weighted factors a and b take fixed values within each window. In order to determine reasonable weighted factors, a cost function is employed to minimize the difference between the filter output and the input image within the window:

where the regularization parameter is a positive real number. Its specific meaning is discussed in the following part. Equation (8) is a linear ridge regression model, and its solution can be expressed as follows.

Therefore, substituting Equation (9) into Equation (7), we can obtain the following equation.

The performance of the IASF can be analyzed in two typical cases based on Equation (10).

Case 1: “Edge region.” The image data change considerably within the window, so the local variance is far larger than the regularization parameter. Thus, the weighted factors satisfy a ≈ 1 and b ≈ 0, and the output approaches that of the improved range filter. This makes the IASF sharpen the edge information.

Case 2: “Uniform region.” The image variance is far less than the regularization parameter. Thus, the weighted factors satisfy a ≈ 0 and b ≈ 1, and the output approaches the local average. The IASF turns out to be an average filter for smoothing the noise.

In summary, the weighted factors of the IASF adjust themselves according to the image content so that edge sharpening and noise suppression are carried out simultaneously. More specifically, whether a region is treated as an “edge region” or a “uniform region” is determined by the regularization parameter. Regions with variance far less than the regularization parameter are smoothed, whereas those with variance much larger than it are enhanced. The effect of the regularization parameter in the IASF is similar to that of the variance in the range filter: both determine whether an edge region should be enhanced or preserved. Because the two parameters play equivalent roles, we empirically set the regularization parameter equal to the variance of the range filter [13].
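The adaptive behavior of the two weights can be illustrated with a small sketch. It assumes that the ridge-regression solution has the closed form familiar from guided filtering, a = var / (var + reg) with b = 1 − a; this closed form, the function names, and the argument names are assumptions chosen to reproduce the two limiting cases, not the paper's Equations (9) and (10).

```python
def iasf_weights(local_var, reg):
    """Normalized weights (a, b) of the range-filter and average-filter outputs.

    Assumed form: a = var / (var + reg), b = 1 - a, with reg > 0.
    """
    if reg <= 0:
        raise ValueError("the regularization parameter must be positive")
    a = local_var / (local_var + reg)
    return a, 1.0 - a


def iasf_output(range_out, local_mean, local_var, reg):
    """Weighted combination of the improved range filter and the average filter."""
    a, b = iasf_weights(local_var, reg)
    return a * range_out + b * local_mean
```

With a local variance far above `reg` the output follows the improved range filter (edge region); with a local variance far below `reg` it follows the local mean (uniform region).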

In order to further improve the display quality of the remote sensing images and to strengthen the edge sharpening, the grayscale offset is scaled by the adjusting factor kp in this method. Thus, the weighted factor of the improved range filter becomes the following.

At the same time, in order to improve the adaptability of the algorithm, we introduced the rational parameter kr to optimize the functions of edge sharpening and denoising for different images. Thus, the filtering algorithm can be expressed as follows.

The function and selection of parameters kp and kr are described below.

3.3. Parameter kp

The parameter kp is an adjusting factor of the grayscale offset that strengthens edge sharpening. The higher its value, the larger the grayscale offset and the more pronounced the sharpening effect. However, if kp is too large, it causes grayscale overshoot. Generally, kp lies in the range 1 ≤ kp ≤ 2 and can be adjusted according to the actual image data. Here, kp is set to 1.5, a value tuned on the actual images.

3.4. Parameter kr

The parameter kr is a weighted factor that controls the strength of noise smoothing. The smaller its value, the weaker the smoothing effect and the stronger the sharpening effect, and vice versa. We set two thresholds, a and b (not to be confused with the weighted factors a and b of Section 3.2), to divide kr into three segments. In order to simplify the computation, we employ three linear expressions to represent kr. When the local variance does not exceed the lower threshold a, kr is set to 1, so that edge sharpening and noise smoothing are balanced according to the actual image. When the local variance reaches the upper threshold b, kr is set to 0.01, indicating that the neighborhood is mainly an edge region and performing stronger edge sharpening. In the other cases, kr is given by a linear expression. Consequently, we have the following.

The thresholds a and b are selected according to the variance of images with different features. Here, we set a = 1.5 and b = 8, values tuned on the actual images.
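The three-segment rule can be sketched as a small function. The sketch assumes that the segmenting variable is the local variance, that kr equals 1 at or below the lower threshold and 0.01 at or above the upper threshold, and that the middle segment is the straight line joining these two values; the paper's exact linear expression is not reproduced here, and all names are hypothetical.

```python
def k_r(local_var, low=1.5, high=8.0, k_max=1.0, k_min=0.01):
    """Piecewise-linear smoothing weight kr as a function of local variance.

    `low` and `high` play the role of the thresholds a and b in the text.
    """
    if local_var <= low:
        return k_max          # uniform region: keep full smoothing
    if local_var >= high:
        return k_min          # edge region: favor sharpening
    # Linear interpolation between (low, k_max) and (high, k_min).
    t = (local_var - low) / (high - low)
    return k_max + t * (k_min - k_max)
```

The interpolation keeps kr continuous at both thresholds, which avoids abrupt changes in filter behavior between neighboring windows.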

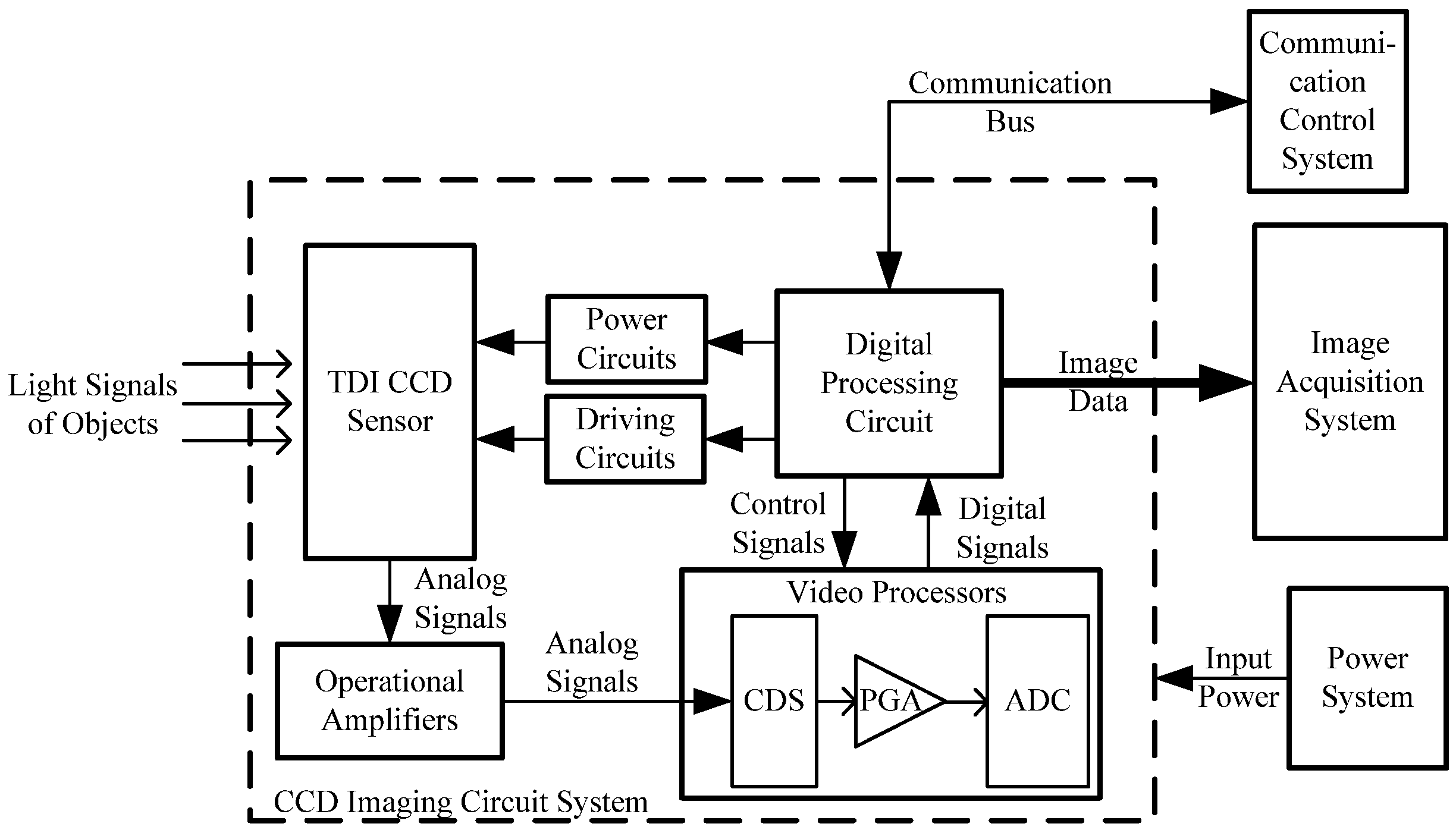

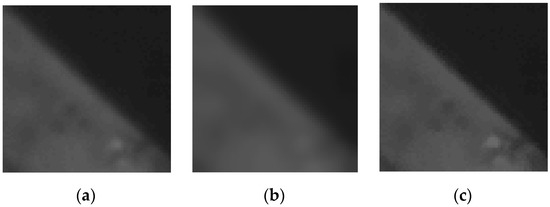

4. Verification and Discussion

The test images were acquired by a time-delayed integration (TDI) charge-coupled device (CCD) imaging system, which employs a linear array photoelectric detector. The detector accumulates charge by superposition, offering high sensitivity, high dynamic range, and low noise. The imaging system is composed of a TDI CCD sensor, amplifiers, video processors, a digital processing circuit, and so on, as shown in Figure 2. It handles the driving of the TDI CCD, the power supply, signal quantization, and output of the image data. The communication control system is in charge of supervising and monitoring the imaging system. The image data are captured and displayed by the image acquisition system. The main technical indicators of the imaging system are shown in Table 1.

Figure 2. Test block diagram of the TDI CCD imaging system.

Table 1. The main technical indicators of the imaging system.

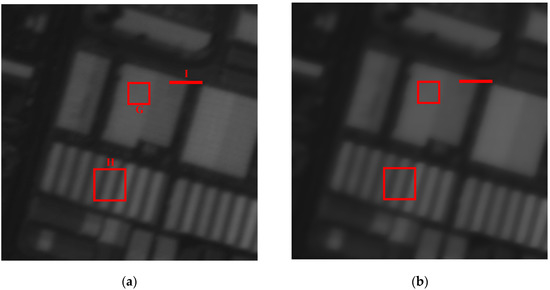

Then, we use the imaging system to acquire multiple images of different targets and choose three of them, each with a size of 256 pixels × 256 pixels, to validate the performance of the proposed method. Various spatial filters are applied to the test images for comparison.

Several performance indexes are used for quantitative evaluation in order to compare the processing effects. Two commonly used objective indexes are the mean value μ and the standard deviation (STD) σ [5,11]. Their definitions are given by the following:

$$\mu = \frac{1}{MN} \sum_{i=1}^{M} \sum_{j=1}^{N} f(i,j) \qquad (14)$$

$$\sigma = \sqrt{\frac{1}{MN} \sum_{i=1}^{M} \sum_{j=1}^{N} \bigl(f(i,j) - \mu\bigr)^2} \qquad (15)$$

where $f(i,j)$ is the intensity of pixel (i, j) and (M,N) is the size of the image region. The mean value μ is the average of all intensities, indicating the average brightness of the image. The STD σ is the deviation of intensities relative to the mean value, denoting the distribution uniformity of the pixels.

The gray mean gradient (GMG) is employed for measuring the edge sharpening effect, which is an image quality evaluation method based on the image gradient. Its expression is given by the following.

The GMG indicates the variance rate of the grayscale of the image, representing the sharpness extent with higher sensitivity. The greater the value of GMG, the better the image quality; that is, the edges become sharper with more information of the targets. However, if there is a lot of noise in the image, the GMG may be bigger as well because both the noise and the edge are presented as high frequency component. Therefore, we apply the evaluation method by combining subjective visual effects and objective data comparisons in order to analyze the resultant images.
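The three objective indexes can be computed with a few lines of pure Python. This is a minimal sketch: it assumes 1/(MN) normalization for the STD and a commonly used GMG definition, namely the average over the image of the root mean square of the horizontal and vertical forward differences. The exact GMG expression used in the paper is not reproduced here, and the function names are hypothetical.

```python
import math


def mean_std(img):
    """Mean and (population) standard deviation of a 2-D list of intensities."""
    vals = [v for row in img for v in row]
    mu = sum(vals) / len(vals)
    sigma = math.sqrt(sum((v - mu) ** 2 for v in vals) / len(vals))
    return mu, sigma


def gmg(img):
    """Gray mean gradient: mean RMS of horizontal and vertical differences."""
    rows, cols = len(img), len(img[0])
    total = 0.0
    for i in range(rows - 1):
        for j in range(cols - 1):
            dx = img[i][j + 1] - img[i][j]   # horizontal forward difference
            dy = img[i + 1][j] - img[i][j]   # vertical forward difference
            total += math.sqrt((dx * dx + dy * dy) / 2.0)
    return total / ((rows - 1) * (cols - 1))
```

Because the GMG responds to any local intensity change, isolated noisy pixels raise it just as edges do, which is why it has to be read together with the visual result.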

The size of the neighborhood window should be chosen carefully. If it is too small, it cannot cover most of the edge transitions; if it is too large, it increases the computation time. Considering these factors, we chose a 7 × 7 window. The standard deviation σr of the IASF determines how selective the filter is in choosing the pixels that are similar enough in intensity, and σr is set to 2 here.

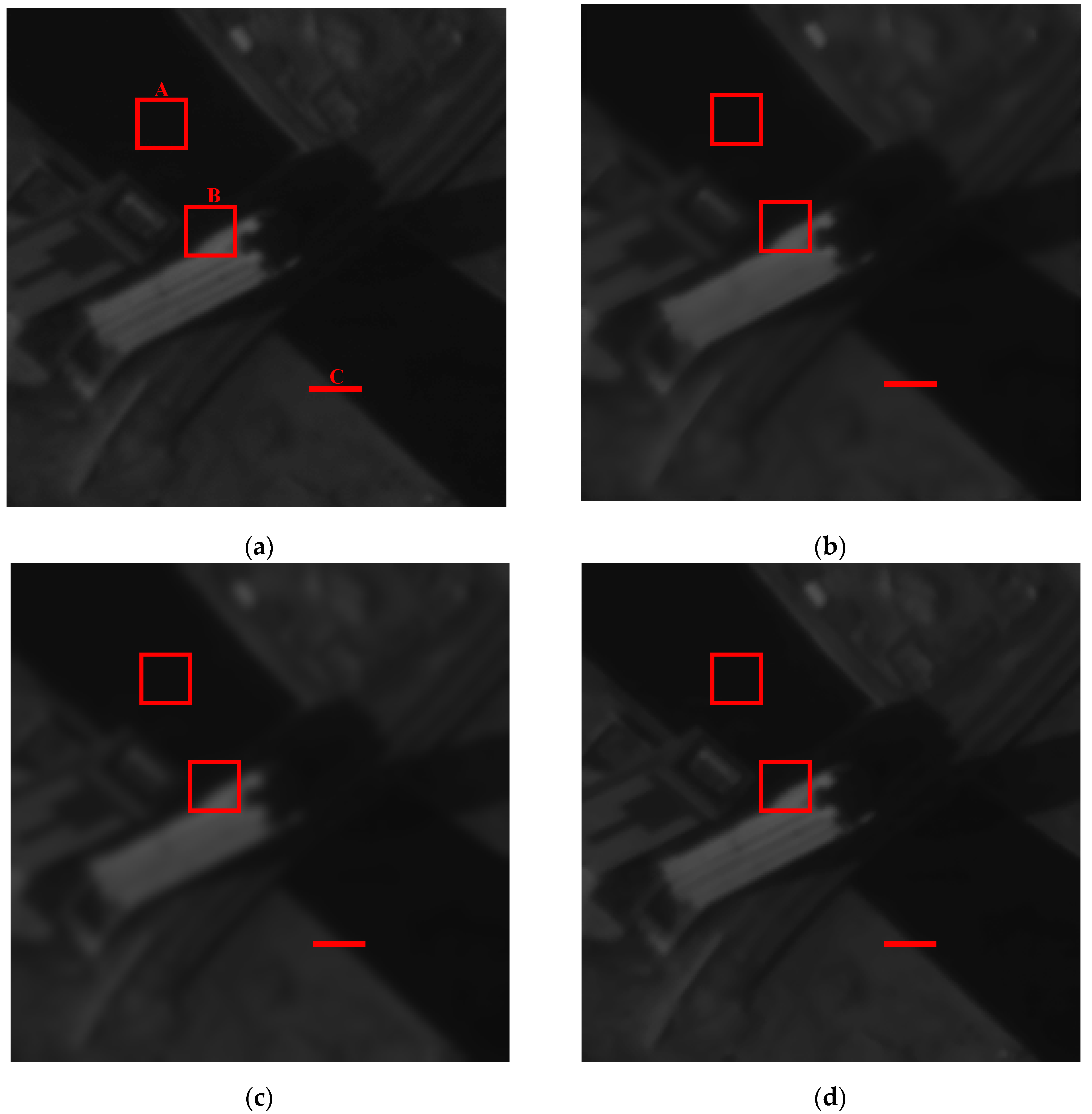

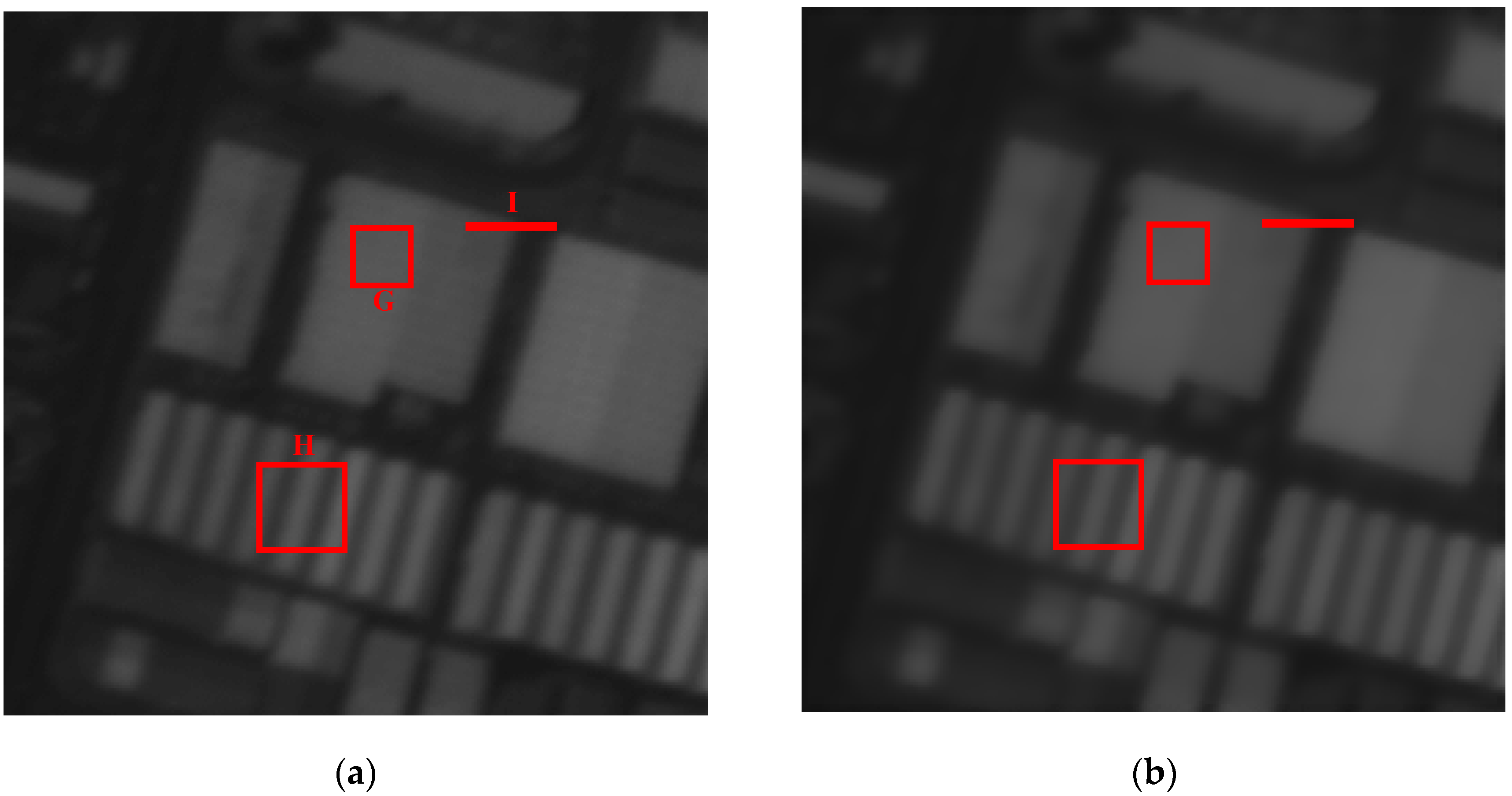

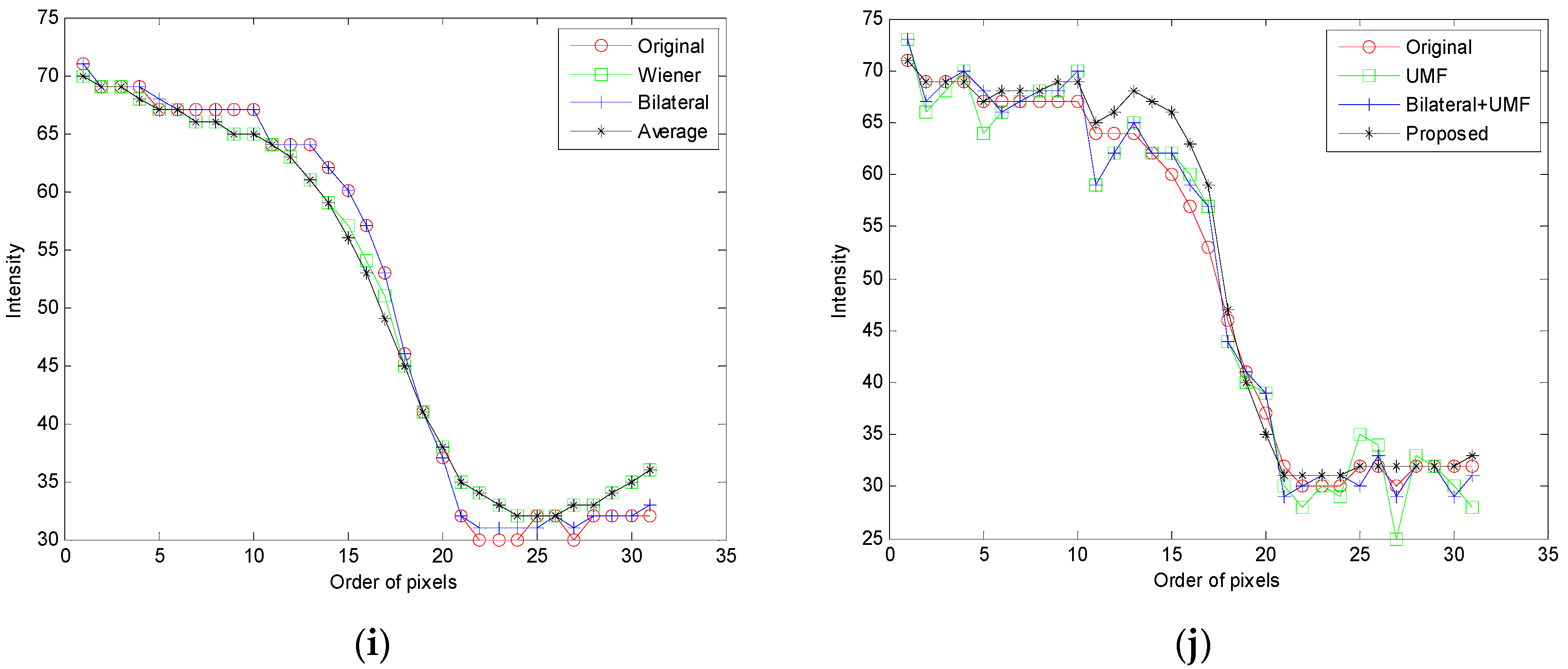

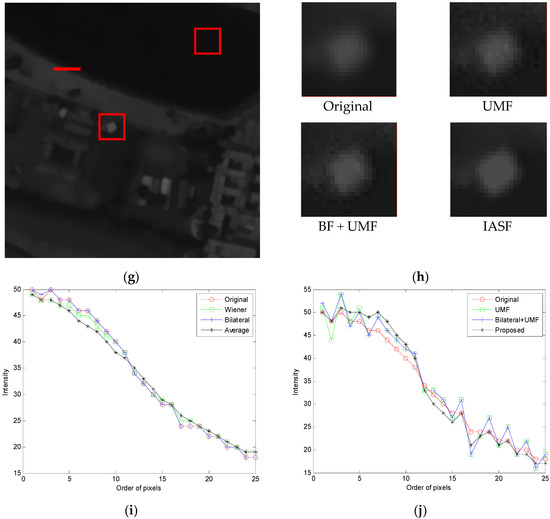

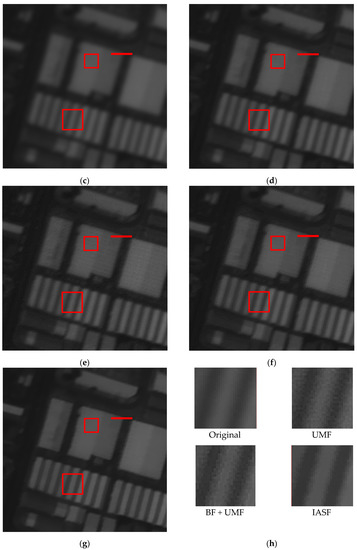

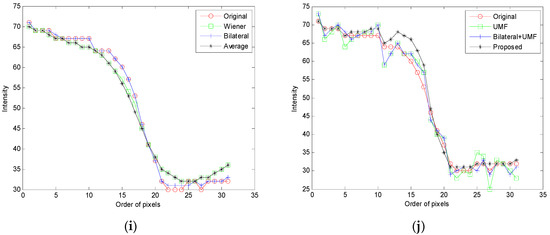

Figure 3a is the raw image data of target 1 “building”, which is processed by different spatial filters as shown in Figure 3b–g. Box A shows a uniform region while box B is an edge region. The zoomed in images of box B for UMF, BF + UMF, and IASF are shown in Figure 3h. The different gray level distributions of edge C are depicted in Figure 3i,j.

Figure 3. The raw image data of target 1 and its processed resultant images by different filters: (a) original data; (b) Wiener filter (WF); (c) average filter (AF); (d) bilateral filter (BF); (e) UMF; (f) BF + UMF; (g) IASF; (h) zoomed in images of box B; (i) gray level distributions of edge C for (a–d); (j) gray level distributions of edge C for (e–g).

Compared with the raw image in Figure 3a, Figure 3b–d are blurred, and some details are visibly lost. From Figure 3i, it can be observed that the average filter (AF) blurs the image the most: it increases the number of transition pixels and reduces the slope of the grayscale variation. The Wiener filter (WF) gives results similar to those of the average filter, whereas the bilateral filter (BF) blurs the image only slightly and stays close to the original. The edge sharpening effect is realized in Figure 3e–g, but Figure 3h shows that the UMF clearly amplifies the noise in the image, resulting in a visually less pleasing enhancement. The combined BF + UMF algorithm achieves a slightly better result than UMF owing to the noise suppression by BF, but some noise still remains in the image. By contrast, the IASF sharpens the edges without amplifying noise, producing a better visual effect, as shown in Figure 3g,h.

The grayscale variations of the three filters for edge C are described in Figure 3j. The UMF, sensitive to the noise, produces large overshoot and undershoot in grayscale, causing the undesired artifacts, although the slope of the edge increases. The BF + UMF method can restrain the overshoot to a certain extent, but the processed result is still unsatisfactory. Distinct from UMF, the IASF is not sensitive to the noise, and it can adjust the grayscale of edges in the spatial domain to increase the slope without generating overshoot. Thus, the IASF can enhance the edge information effectively to improve the overall appearance of the image.

Next, the image data of target 1 processed by the different algorithms are compared objectively, as shown in Table 2. The average intensity produced by each filter is nearly the same as that of the original. The STDs are calculated for the red boxes A and B, which represent the uniform region and the edge region, respectively, each with a size of 25 pixels × 25 pixels, and the GMGs for box B are also reported.

Table 2. The comparison results for image data of target 1.

It can be observed that the WF, the AF, and the IASF have the same STD results when processing the uniform region A, which are better than that of the BF. This indicates that the IASF has good capability for noise smoothing. From the GMG results for the edge region B, we can observe that the WF, BF, and AF have smaller values than the original image, indicating that they retain less information about the targets and only perform edge preservation, while the UMF, BF + UMF, and IASF have larger values than the original. Thus, these three filters can enhance the edges in order to obtain more information. We can draw the same conclusion from the STD results. However, the UMF and BF + UMF also amplify the noise at the same time, resulting in a less pleasing appearance. Since the IASF is not sensitive to noise, it can achieve an ideal enhancing effect without artifacts. Consequently, the IASF can simultaneously achieve good performance in edge sharpening and noise smoothing. The filtering characteristics of each algorithm are summarized in Table 2.

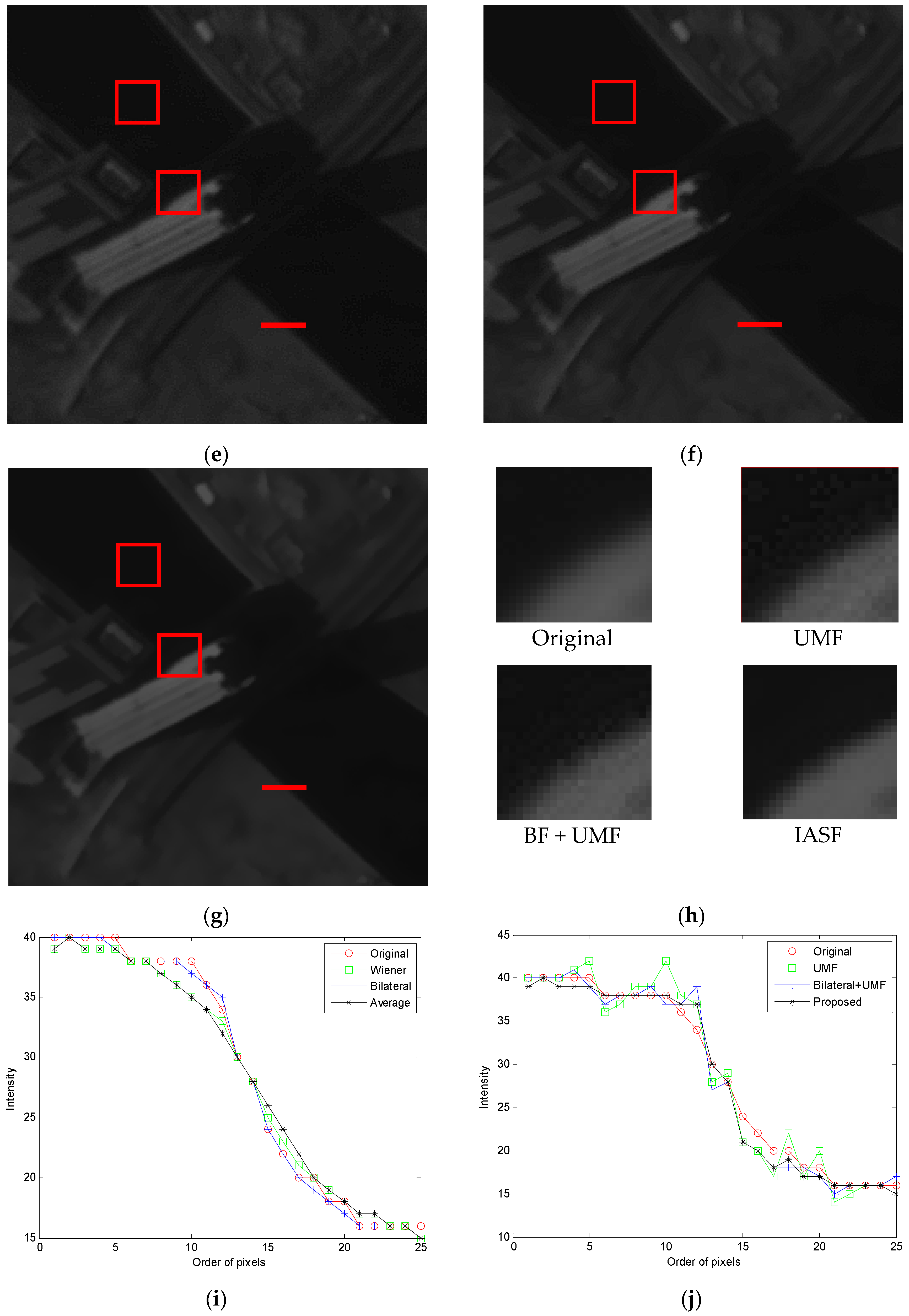

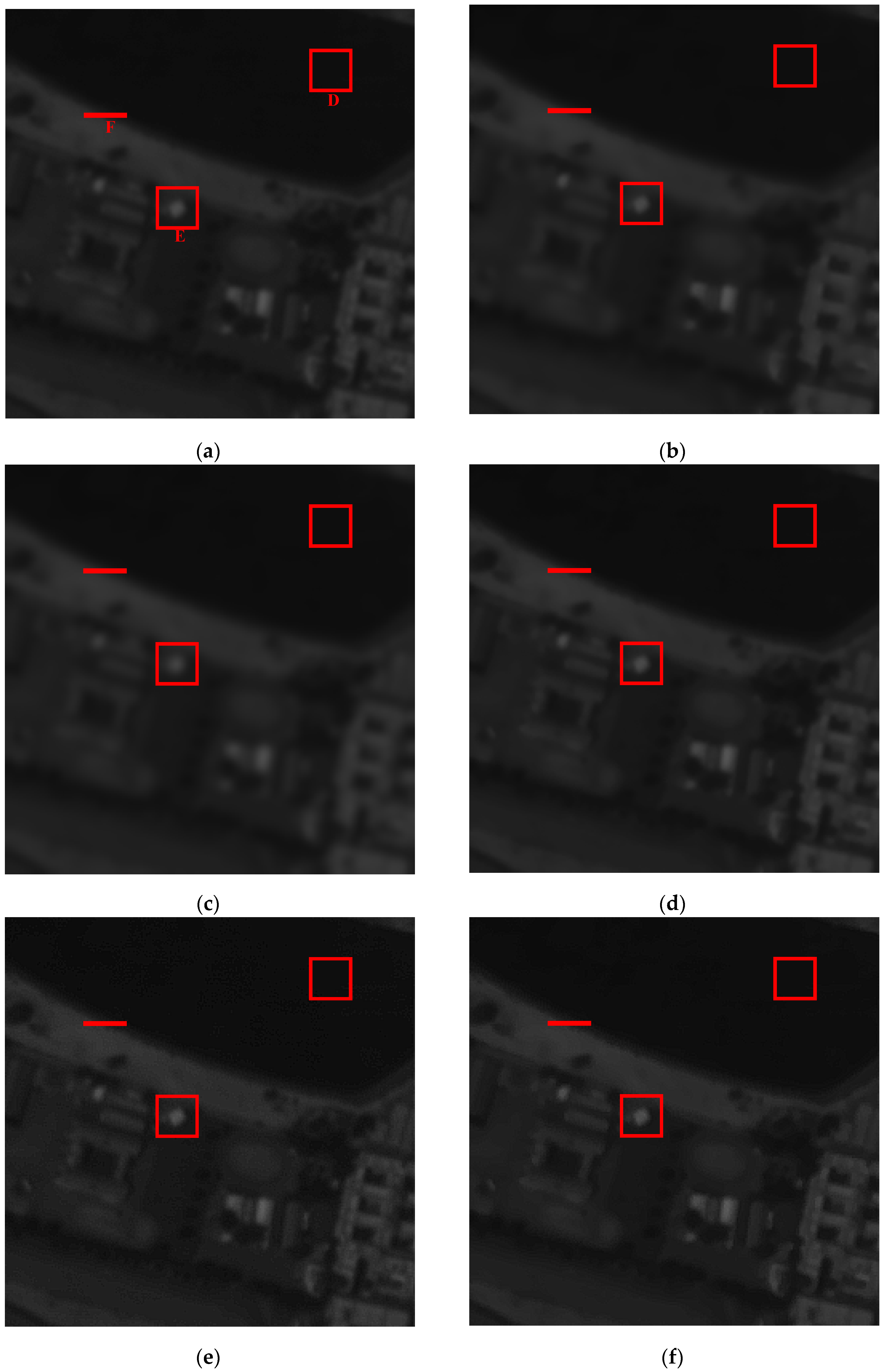

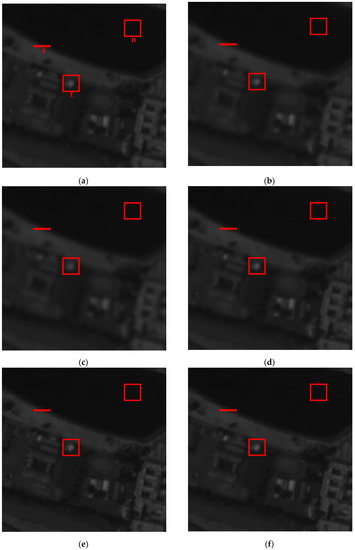

We then turn to another image to further validate the proposed method. Figure 4a is the raw image data of target 2 “beach”, and the images processed by the different spatial filters are shown in Figure 4b–g. Box D shows a uniform region while box E is an edge region. The zoomed in images of box E for UMF, BF + UMF, and IASF are shown in Figure 4h. The gray level distributions of edge F are depicted in Figure 4i,j.

Figure 4. The raw image data of target 2 and its processed resultant images by different filters: (a) original data; (b) WF; (c) AF; (d) BF; (e) UMF; (f) BF + UMF; (g) IASF; (h) zoomed in images of box E; (i) gray level distributions of edge F for (a–d); (j) gray level distributions of edge F for (e–g).

It can be observed that, compared with the raw image Figure 4a, Figure 4b–d become blurred while Figure 4e–g become clearer with their edges enhanced. In terms of Figure 4h, since UMF is sensitive to the noise, it has a poor visual appearance. The BF + UMF obtained a slightly better result than UMF, but some noise still remained in the image. The IASF sharpens the edge information effectively without obvious noise, achieving a better visual appearance. From Figure 4i, we know that AF has the lowest slope of grayscale variation, resulting in the most blurred image. WF obtained a similar result relative to AF, while BF obtained a little blurred image, similar to the original.

The grayscale variations of the three filters for edge F are depicted in Figure 4j. Similarly to Figure 3j, the UMF produces large overshoot and undershoot, resulting in the undesired artifacts. Although the overshoot can be suppressed to a certain extent by BF, the BF + UMF method still cannot obtain a more pleasing result. Nevertheless, the IASF can adjust the grayscale of edges in spatial domain to increase the slope without producing artifacts through the reasonable selection of parameters kp and kr. Thus, the IASF can sharpen the edges effectively to improve the overall appearance of the image.

The objective data comparison results for the image data of target 2 are shown in Table 3. The average intensity of each filter is nearly the same as the original one. The STDs for the red boxes D and E are calculated, each with a size of 25 pixels × 25 pixels, and the GMGs for box E are also displayed.

Table 3. The comparison results for image data of target 2.

It can be observed that the IASF has nearly the same STD result as the WF and AF when processing the uniform region D, which is better than that of the BF. Thus, the IASF has a good capability for noise smoothing. From the GMG and STD results for the edge region E, we can observe that the UMF, BF + UMF, and IASF have higher values than the original, indicating that the three filters can enhance the edges to obtain more information. However, the UMF and BF + UMF also amplify the noise, resulting in less pleasing effects. The IASF can achieve an ideal enhancing effect without artifacts since it is not sensitive to noise.

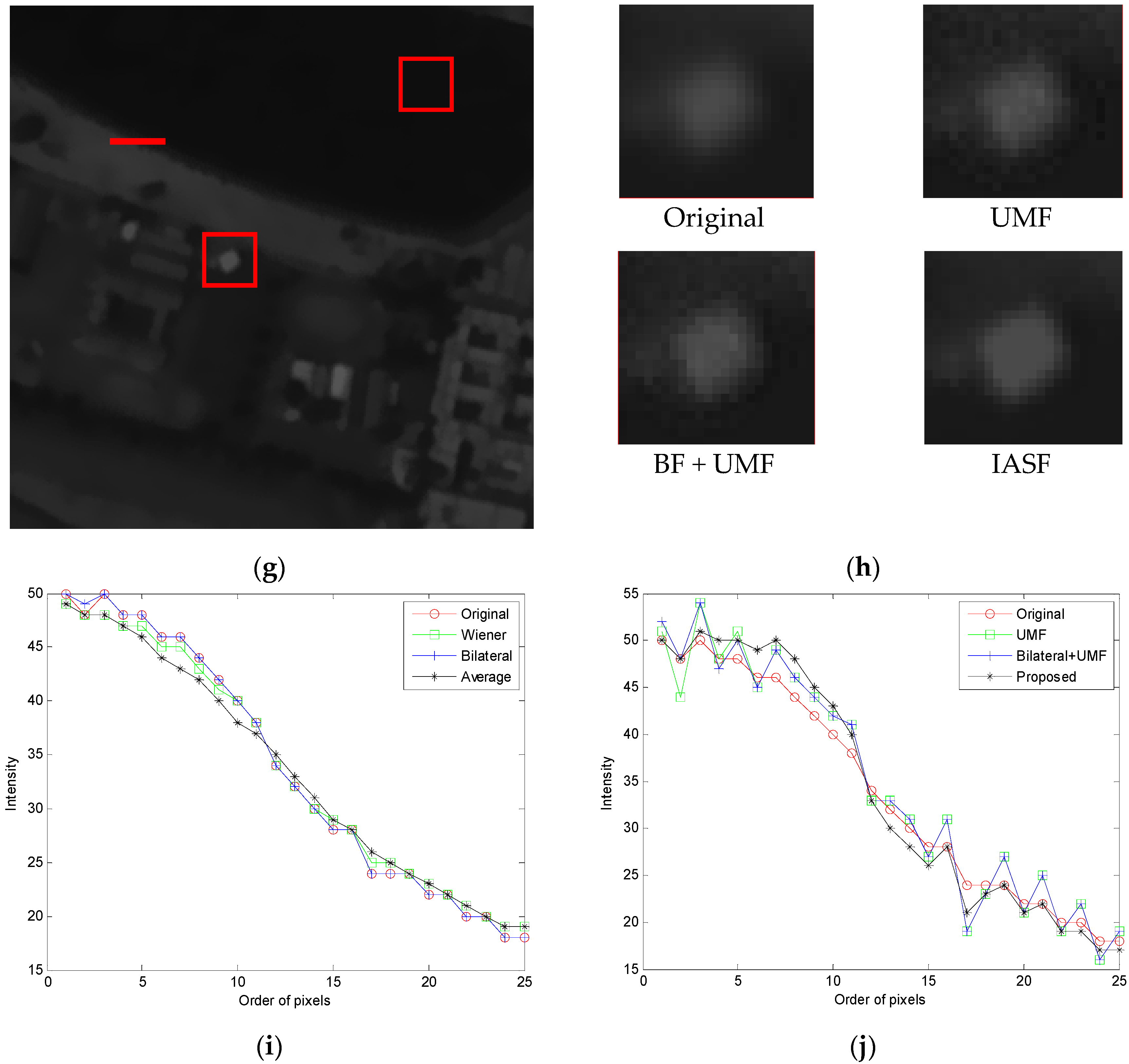

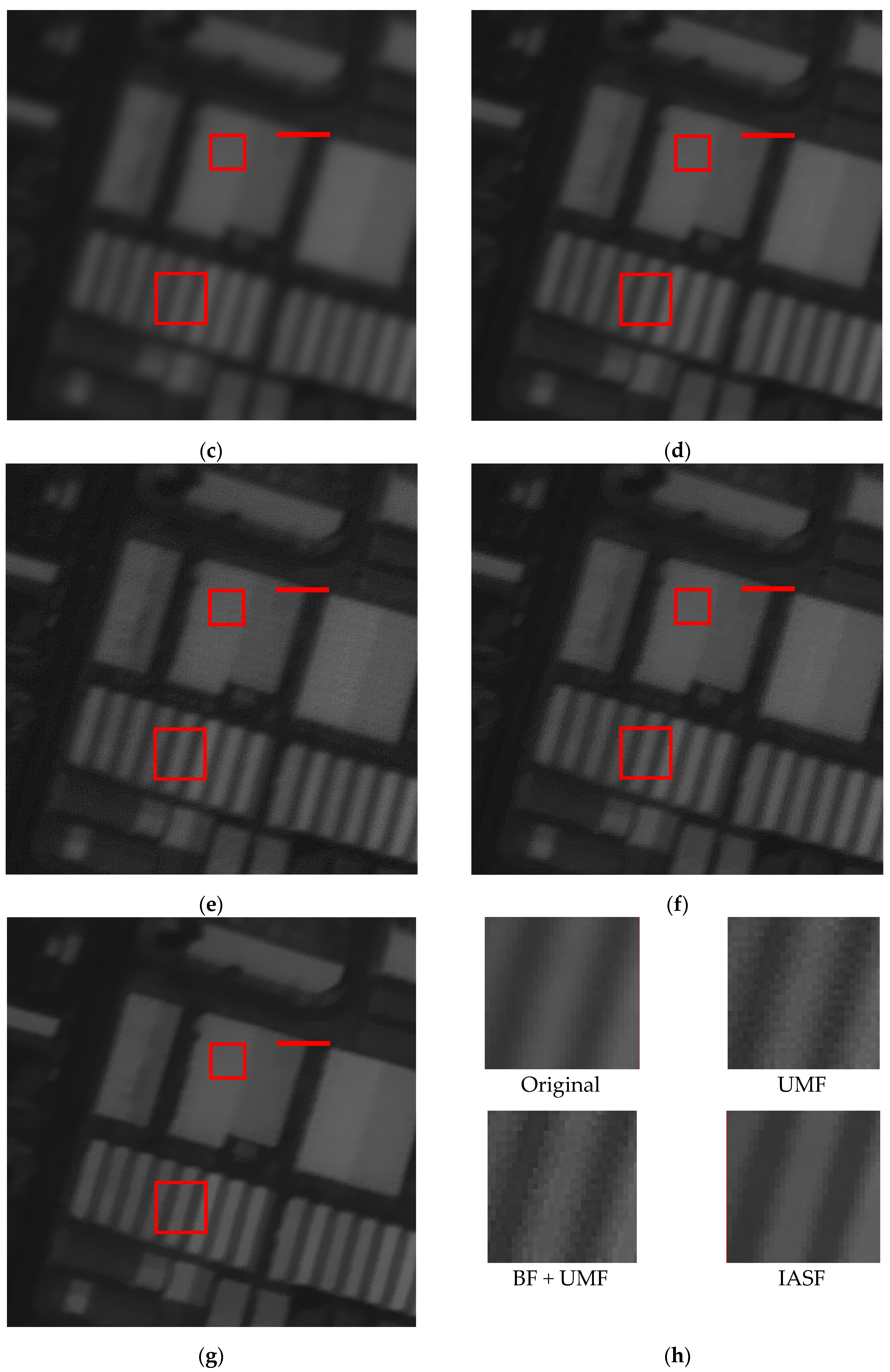

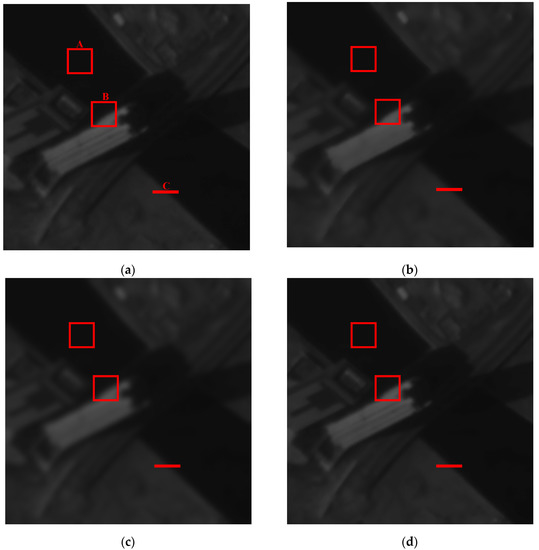

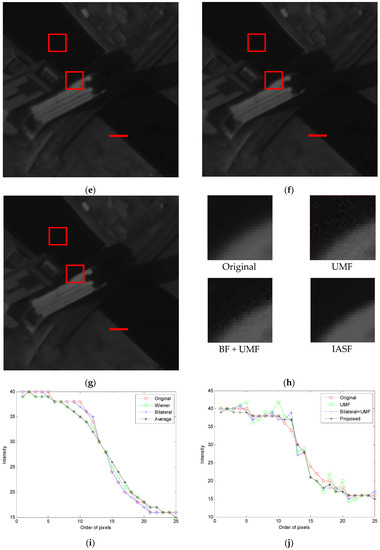

Figure 5a is the raw image data of target 3 “town”, and the images processed by the different spatial filters are shown in Figure 5b–g. Box G shows a uniform region while box H is an edge region. The zoomed in images of box H for UMF, BF + UMF, and IASF are shown in Figure 5h. The gray level distributions of edge I are depicted in Figure 5i,j.

Figure 5. The raw image data of target 3 and its processed resultant images by different filters: (a) original data; (b) WF; (c) AF; (d) BF; (e) UMF; (f) BF + UMF; (g) IASF; (h) zoomed in images of box H; (i) gray level distributions of edge I for (a–d); (j) gray level distributions of edge I for (e–g).

A similar conclusion can be drawn that, compared with the raw image Figure 5a, Figure 5b–d become blurred while Figure 5e–g become clearer with their edges enhanced. The UMF and the BF + UMF are sensitive to noise, which produces large overshoot and undershoot, resulting in poor visual appearances. However, the IASF can adjust the grayscale of edges in the spatial domain in order to sharpen edge information effectively without obvious noise, achieving better visual appearance.

The objective data comparison results for the image data of target 3 are shown in Table 4. It can be observed that the IASF has nearly the same STD result as the WF and AF when processing the uniform region G and is better than the BF, indicating a good capability for noise smoothing. According to the GMG and STD results for the edge region H, the UMF, BF + UMF, and IASF enhance the edge information, yielding higher values. The IASF achieves an ideal enhancing effect, whereas the UMF and BF + UMF also amplify noise. Consequently, the IASF can simultaneously achieve good performance in edge sharpening and noise smoothing.

Table 4. The comparison results for image data of target 3.

The information entropy of the image data is also employed to evaluate the effect of edge sharpening, as shown in Table 5. We can observe that the information entropy increases to different degrees for the three filters. However, the UMF and BF + UMF not only sharpen the edges but also amplify the noise, which results in higher values. The IASF is not sensitive to noise; thus, it can achieve better enhancing results.
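For reference, the information entropy of an image can be computed from its gray-level histogram. The sketch below assumes the usual Shannon entropy in bits over the observed gray levels; the function name is hypothetical, and the paper does not state which logarithm base or histogram binning it uses.

```python
import math
from collections import Counter


def image_entropy(img):
    """Shannon entropy (in bits) of the gray-level distribution of an image."""
    vals = [v for row in img for v in row]
    n = len(vals)
    # p = c / n is the relative frequency of each observed gray level.
    return -sum((c / n) * math.log2(c / n) for c in Counter(vals).values())
```

A constant image has zero entropy, and the entropy grows as the gray levels spread more evenly, which is why both sharpened edges and amplified noise raise it.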

Table 5. The comparison results of entropy for three different images.

In summary, the performance of the proposed preprocessing algorithm is verified and analyzed based on three different target images, and its related properties are described quantitatively from Table 2 to Table 5. We can perceive that the IASF has excellent capabilities in both edge sharpening and noise smoothing.

The two parameters kp and kr are key factors affecting the filtering performance. The parameter kp determines the output of the improved range filter in Equation (12), which sharpens the edges of the image. If kp is too large (that is, the grayscale offset is too large), the IASF produces a drastic sharpening effect, and the image appears over-sharpened. If kp is smaller than 1, the sharpening effect is too weak to meet the preprocessing purpose. The parameter kr is complementary to kp and determines the normalized weighted factors of the improved range filter and the average filter. If we increase kr, the weight of the improved range filter decreases and that of the average filter increases, so the sharpening effect becomes weaker and the smoothing effect stronger. Therefore, we should select reasonable values for kp and kr in order to achieve the desired results for the specific image data.

The IASF is not sensitive to noise. It adjusts the grayscale of local pixels, thereby transforming the histogram of the image. If we set kp = 0, the improved range filter reduces to a conventional range filter. The IASF then becomes a low-pass filtering algorithm, because both the range filter and the average filter possess low-pass characteristics. In particular, if we further set kr = 0, the proposed algorithm reduces to the range filter alone.

Both kp and kr take values greater than or equal to 0. The filtering effect varies with the parameter values, as summarized in Table 6.

Table 6. Different filtering effects with different parameter values.

5. Conclusions

To meet the preprocessing requirements of remote sensing images, a novel spatial algorithm is proposed for simultaneously smoothing noise and enhancing edges. The weighted normalization filtering algorithm integrates the improved range filter and the average filter, and its processing parameters are flexible and can be adjusted for different images. We compared our algorithm with other commonly used spatial filters: the Wiener filter, bilateral filter, average filter, UMF, and the bilateral filter followed by UMF. The experimental results indicate that our algorithm performs better than these filters in both subjective and quantitative analysis. The proposed algorithm performs well in both edge sharpening and noise removal. It can play an important role in the remote sensing field for achieving clearer images with higher SNR.

Author Contributions

Conceptualization, L.Z. and W.X.; methodology, L.Z.; writing original draft preparation, L.Z.; writing—review and editing, W.X.; funding acquisition, W.X. Both authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Key Research and Development Program of China under Grant 2016YBF0501202.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

All data will be made available upon request to the corresponding author’s email with appropriate justification.

Acknowledgments

The authors would like to acknowledge the intelligent imaging laboratory for their hard experimental work and active involvement in the project.

Conflicts of Interest

No potential conflicts of interest are reported by the authors.

References

- Al-Hamadani, A.H.; Zainulabdeen, F.S.; Karam, G.S.; Nasir, E.Y.; Al-Saedi, A. Effects of atmospheric turbulence on the imaging performance of optical system. In Proceedings of the AIP Conference, Beirut, Lebanon, 1–3 February 2018; p. 030071. [Google Scholar]

- Yau, H.; Du, X. Robust deep learning-based multi-image super-resolution using inpainting. J. Electron. Imaging 2021, 30, 013005.

- Muller, T. Extended retinex and optimized image sharpening for hidden detail uncovering, visual range improvement, and image enhancement. In Proceedings of the SPIE: Computational Imaging V, Online, 27 April–8 May 2020; p. 113960A.

- Qian, Y.; Li, Q.; Liu, H.; Wang, Y.; Zhu, J. A preprocessing algorithm for hyperspectral images of vessels. In Proceedings of the 2014 7th International Conference on Biomedical Engineering and Informatics, Dalian, China, 14–16 October 2014; pp. 19–23.

- Zheng, L.; Jin, G.; Xu, W.; Qu, H. An improved adaptive preprocessing method for TDI CCD images. Optoelectron. Lett. 2018, 14, 76–80.

- Zheng, L.; Jin, G.; Xu, W.; Qu, H.; Wu, Y. Noise model of a multispectral TDI CCD imaging system and its parameter estimation of piecewise weighted least square fitting. IEEE Sens. J. 2017, 17, 3656–3668.

- Zhang, L.; He, J.; Dai, H.; Wan, C. Noise processing technology of a TDI CCD sensor. In Proceedings of the 2010 International Conference on Computer, Mechatronics, Control and Electronic Engineering, Changchun, China, 24–26 August 2010; pp. 395–398.

- Krieger, E.; Asari, V.K.; Arigela, S. Color image enhancement of low-resolution images captured in extreme lighting conditions. In Proceedings of the Mobile Multimedia/Image Processing, Security, and Applications, Baltimore, MD, USA, 22 May 2014; Volume 9120, p. 91200Q.

- Awasthi, N.; Kalva, S.K.; Pramanik, M.; Yalavarthy, P.K. Image-guided filtering for improving photoacoustic tomographic image reconstruction. J. Biomed. Opt. 2018, 23, 091413.

- Zhang, B.; Allebach, J.P. Adaptive bilateral filter for sharpness enhancement and noise removal. IEEE Trans. Image Process. 2008, 17, 664–678.

- Wu, X.; Hu, S.; Zhao, J.; Li, Z.; Li, J.; Tang, Z.; Xi, Z. Comparative analysis of different methods for image enhancement. J. Cent. South Univ. 2014, 21, 4563–4570.

- Dhanushree, M.; Priyadharsini, R.; Sharmila, T.S. Acoustic image denoising using various spatial filtering techniques. Int. J. Inform. Technol. 2019, 11, 659–665.

- He, K.; Sun, J.; Tang, X. Guided image filtering. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 1397–1409.

- Khetkeeree, S.; Thanakitivirul, P. Hybrid filtering for image sharpening and smoothing simultaneously. In Proceedings of the ITC-CSCC 2020–35th International Technical Conference on Circuits/Systems, Computers and Communications, Nagoya, Japan, 3–6 July 2020; pp. 367–371.

- Joseph, J.; Periyasamy, R. Nonlinear sharpening of MR images using a locally adaptive sharpness gain and a noise reduction parameter. Pattern Anal. Appl. 2019, 22, 273–283.

- Pham, C.C.; Ha, S.V.U.; Jeon, J.W. Adaptive guided image filtering for sharpness enhancement and noise reduction. In Proceedings of the Advances in Image and Video Technology: 5th Pacific-Rim Symposium on Video and Image Technology (PSIVT), Gwangju, Korea, 20–23 November 2011; Volume 7087, pp. 323–334.

- Xie, Z.; Lau, R.W.H.; Gui, Y.; Chen, M.; Ma, L. A gradient-domain-based edge-preserving sharpen filter. IEEE Trans. Vis. Comput. Graph. 2012, 28, 1195–1207.

- Shi, H.; Wang, H.; Zhao, C.; Shen, Z. Sonar image preprocessing based on morphological wavelet transform. In Proceedings of the 2010 IEEE International Conference on Information and Automation, Harbin, China, 20–23 June 2010; pp. 1113–1117.

- Yoon, H.; Lee, H.; Lee, Y.Y.; Jung, H. Multi-scale synthetic filtering method for ultrasonic image enhancement. In Proceedings of the 2012 IEEE International Ultrasonics Symposium, Dresden, Germany, 7–10 October 2012; pp. 699–702.

- Archana, J.N.; Aishwarya, P. Geographic data visualization using wavelet based unsharp masking. In Proceedings of the 2015 International Conference on Control, Instrumentation, Communication and Computational Technologies (ICCICCT), Kumaracoil, India, 18–19 December 2015; pp. 490–494.

- Chi, J.; Eramian, M. Wavelet-based texture-characteristic morphological component analysis for colour image enhancement. In Proceedings of the 2016 IEEE International Conference on Image Processing (ICIP), Phoenix, AZ, USA, 25–28 September 2016; pp. 4097–4101.

- Pandey, P.; Dewangan, K.K.; Dewangan, D.K. Enhancing the quality of satellite images by preprocessing and contrast enhancement. In Proceedings of the 2017 International Conference on Communication and Signal Processing (ICCSP), Chennai, India, 6–8 April 2017; pp. 56–60.

- Basile, M.C.; Bruni, V.; Vitulano, D. A CSF-based preprocessing method for image deblurring. In Proceedings of the International Conference on Advanced Concepts for Intelligent Vision Systems (ACIVS) 2017, Antwerp, Belgium, 18–21 September 2017; Volume 10617, pp. 602–614.

- Kim, S.H.; Allebach, J.P. Optimal unsharp mask for image sharpening and noise removal. J. Electron. Imaging 2005, 14, 023005.

- Ramponi, G.; Strobel, N.; Mitra, S.K.; Yu, T. Nonlinear unsharp masking methods for image contrast enhancement. J. Electron. Imaging 1996, 5, 353–366.

- Guan, R.; Wan, Y. An improved unsharp masking sharpening algorithm for image enhancement. In Proceedings of the Eighth International Conference on Digital Image Processing (ICDIP 2016), Chengdu, China, 20–23 May 2016; Volume 10033, p. 100332A.

- Shi, H.; Kwok, N. An integrated bilateral and unsharp masking filter for image contrast enhancement. In Proceedings of the 2013 International Conference on Machine Learning and Cybernetics, Tianjin, China, 14–17 July 2013; pp. 907–912.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).