Virtual Namesake Point Multi-Source Point Cloud Data Fusion Based on FPFH Feature Difference

Abstract

1. Introduction

- We introduce Fast Point Feature Histograms (FPFH) features and use a CNN to learn from the F2 distance between FPFH features and output probabilities, which improves robustness to point cloud noise and to differences in point cloud resolution.

- We generate virtual points from voxels that capture the spatial information around each point, which increases the accuracy and stability of the corresponding-point search.

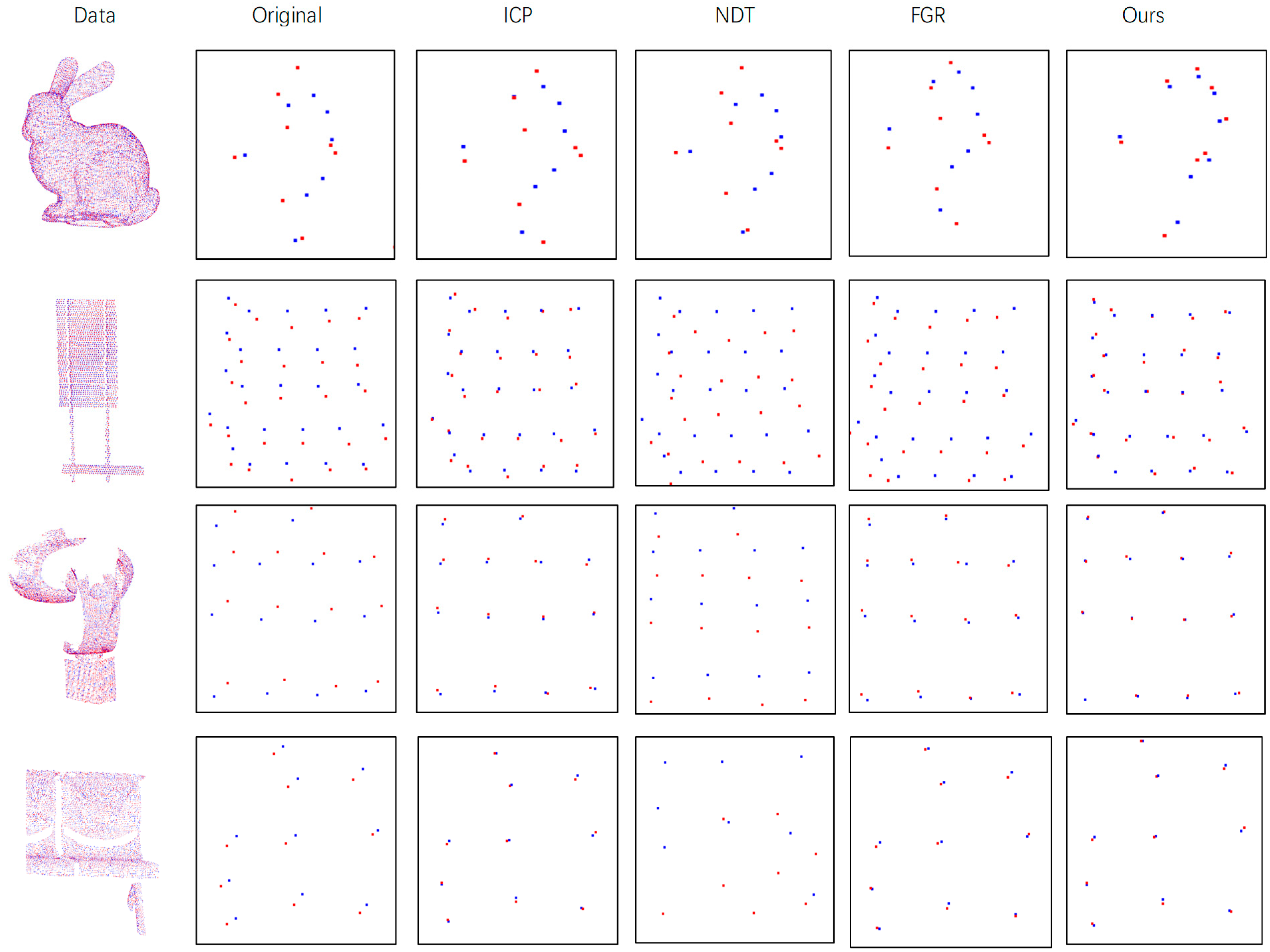

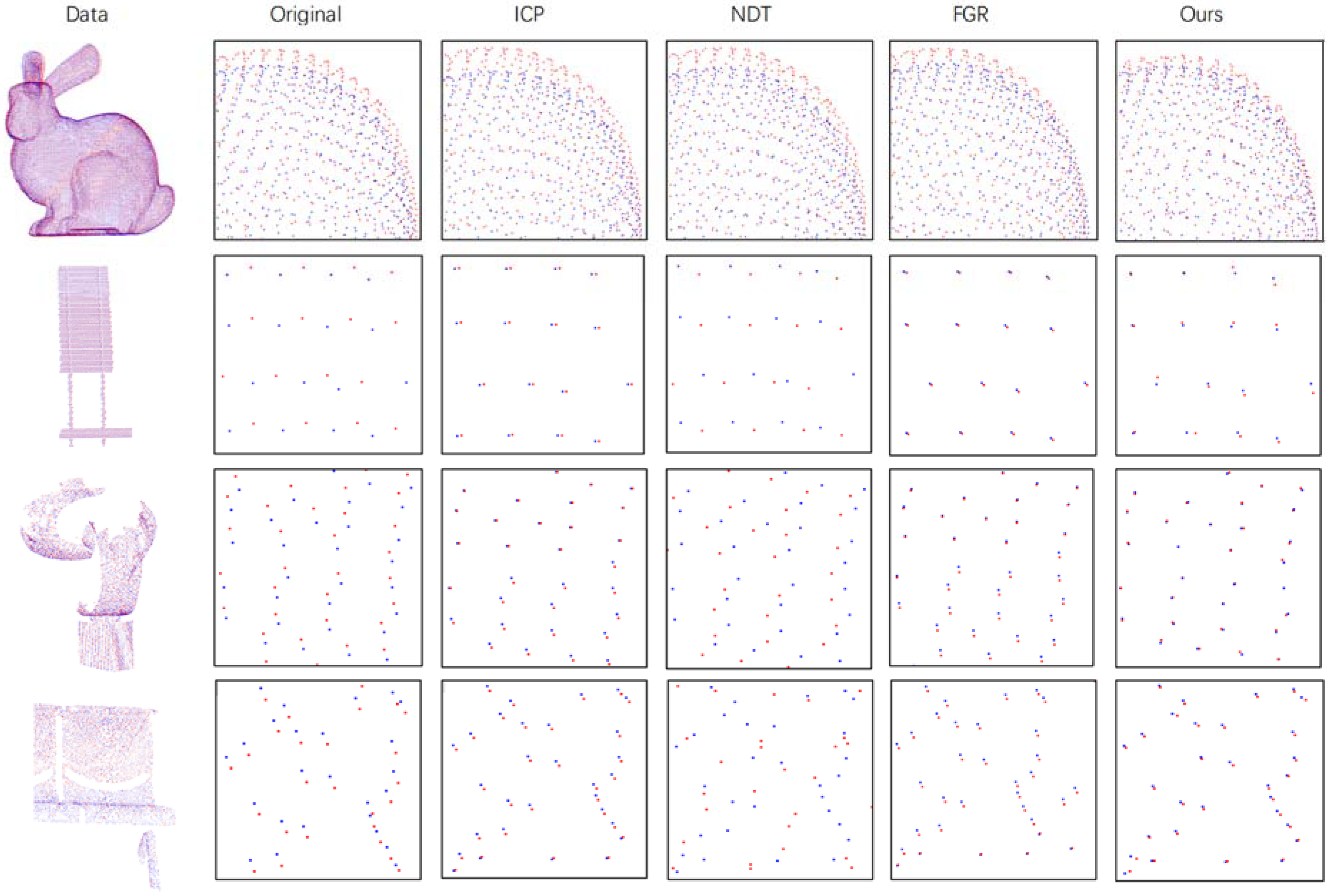

- We compare our results with existing methods in experimental evaluations on clean, noisy, different-resolution, and distorted datasets.

2. Related Work

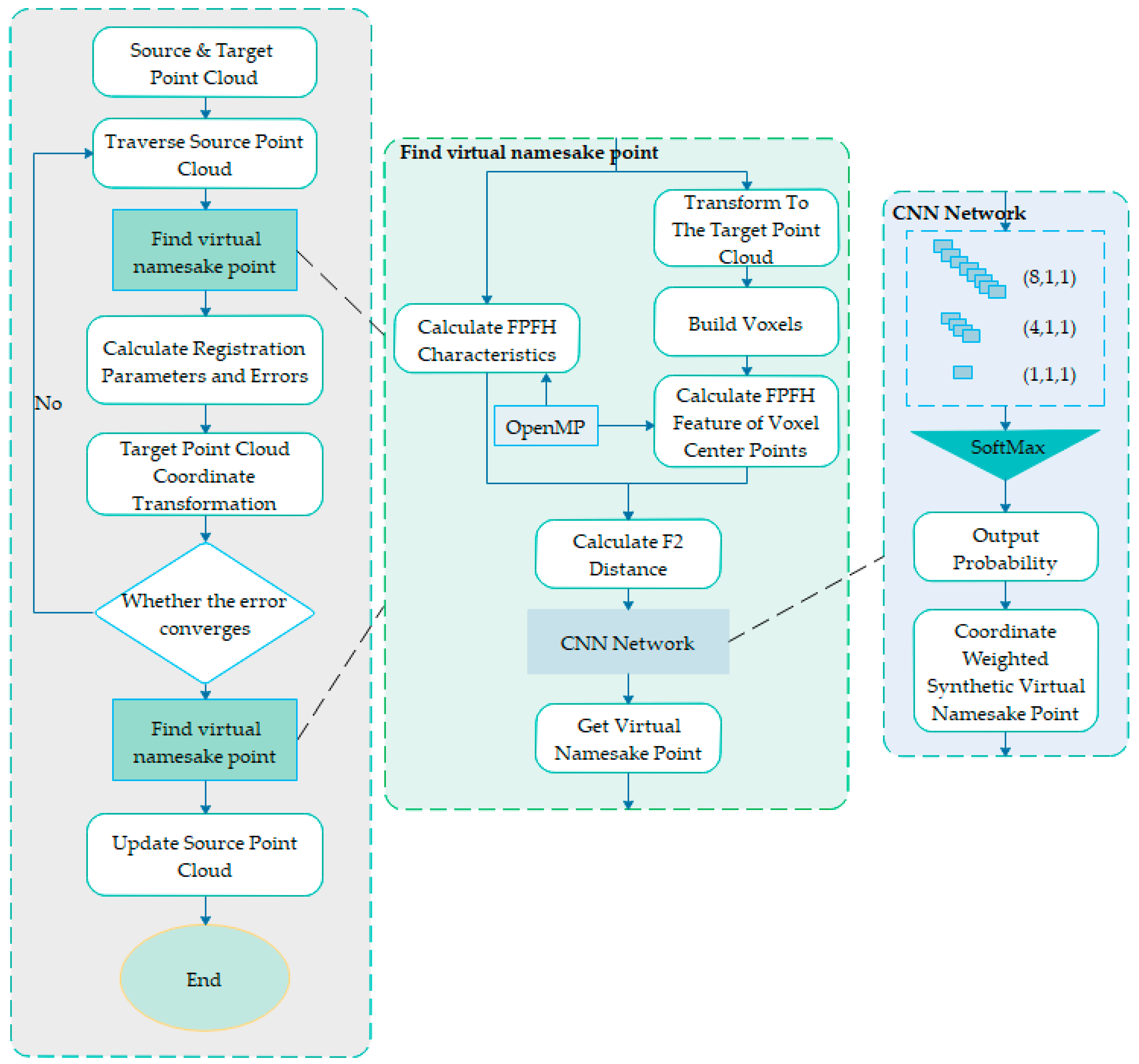

3. Methodology

- (1)

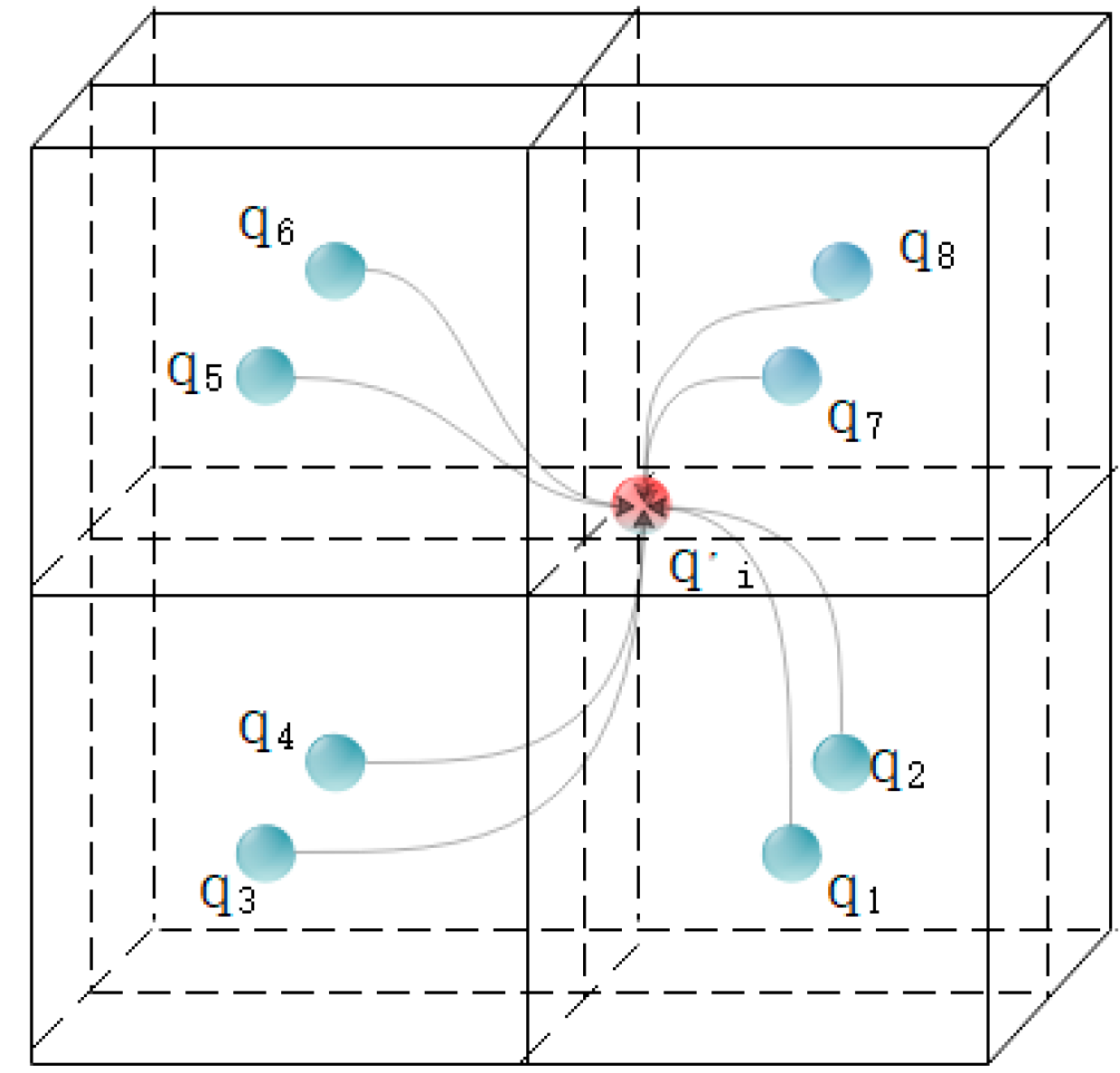

- Finding virtual namesake points based on FPFH feature difference: calculate the FPFH eigenvector value of the current point in the source point cloud , and then convert the current point to the target point cloud to acquire the point , and construct a 2*2*2 voxel around the point to acquire 8 voxel center points, the center point of each voxel is set to point the above 8 voxel center points are, respectively, interpolated into the target point cloud , and the FPFH value of the voxel center point is calculated after interpolation. This will obtain the feature vector value of calculate the F2 distance of and respectively, will acquire 8 F2 distances and input them into the constructed CNN convolutional neural network, and output the probability corresponding to the center point of each voxel, thus according to the coordinate of the prime center point and the corresponding probability form a virtual point , and the virtual point is selected as the corresponding point of the current point in the source point cloud.

- (2) Point cloud registration algorithm based on virtual namesake points: building on the ICP algorithm, the step that takes the closest point as the namesake (corresponding) point is replaced by the virtual namesake point search from (1), and the source point cloud is then registered to the target point cloud.

- (3)

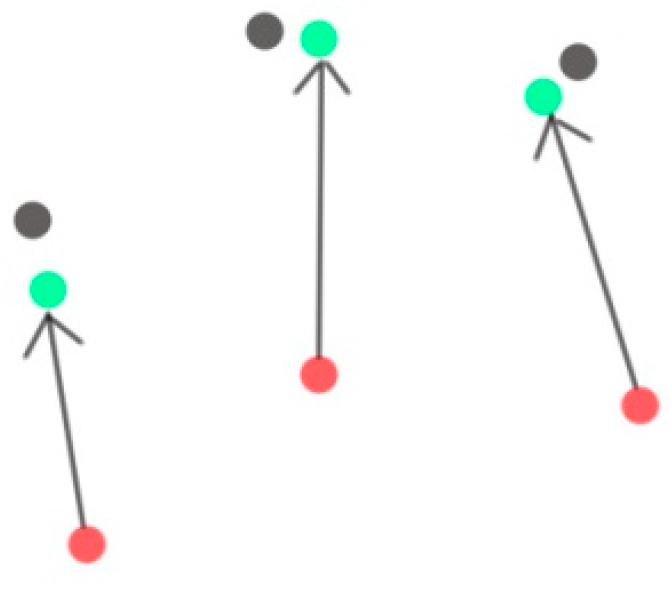

- Further correction of overlapping area point cloud: After the overall best point cloud registration of the low-precision point cloud, further precision corrections can be made to the details. Once again, we acquire virtual namesake points as we did in step 1, then replace the low precision points with these virtual namesake points. Correct the accuracy of the points in the low-precision point cloud to improve the accuracy of the low-precision point cloud.
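The virtual point construction in step (1) can be sketched as follows. This is a minimal illustration rather than the authors' implementation: it assumes that the virtual point is the probability-weighted average of the 8 voxel center coordinates (the text only says the point is formed from the coordinates and the probabilities), and `voxel_size` and the probability values are hypothetical stand-ins for the voxel edge length and the CNN output.

```python
import numpy as np

def voxel_centers(point, voxel_size):
    """Return the 8 centers of a 2x2x2 voxel block centered on `point`."""
    # Each center sits half a voxel away from `point` along every axis.
    offsets = np.array([[dx, dy, dz]
                        for dx in (-0.5, 0.5)
                        for dy in (-0.5, 0.5)
                        for dz in (-0.5, 0.5)])
    return np.asarray(point, dtype=float) + voxel_size * offsets

def virtual_point(centers, probabilities):
    """Combine voxel centers into one point (assumed: weighted average)."""
    p = np.asarray(probabilities, dtype=float)
    p = p / p.sum()          # normalize so the weights sum to 1
    return p @ centers       # (8,) @ (8, 3) -> (3,)

# Hypothetical usage: uniform probabilities give back the block center.
centers = voxel_centers([1.0, 2.0, 3.0], voxel_size=0.1)
vp = virtual_point(centers, np.full(8, 1.0 / 8.0))
```

With a one-hot probability vector the virtual point collapses onto a single voxel center, so the weighted form covers a hard assignment as a special case.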

3.1. Finding Virtual Namesake Point Based on FPFH Feature Difference

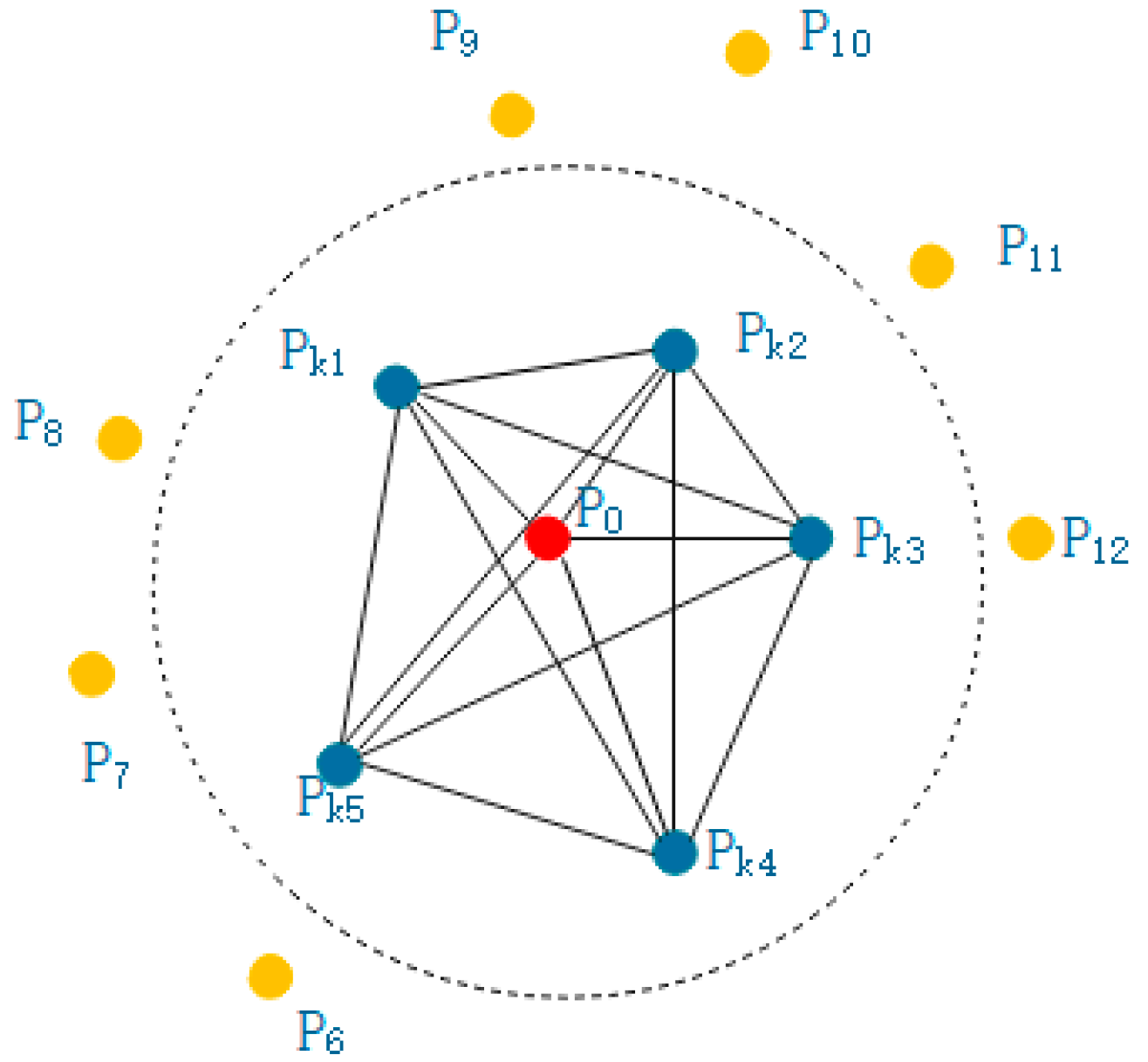

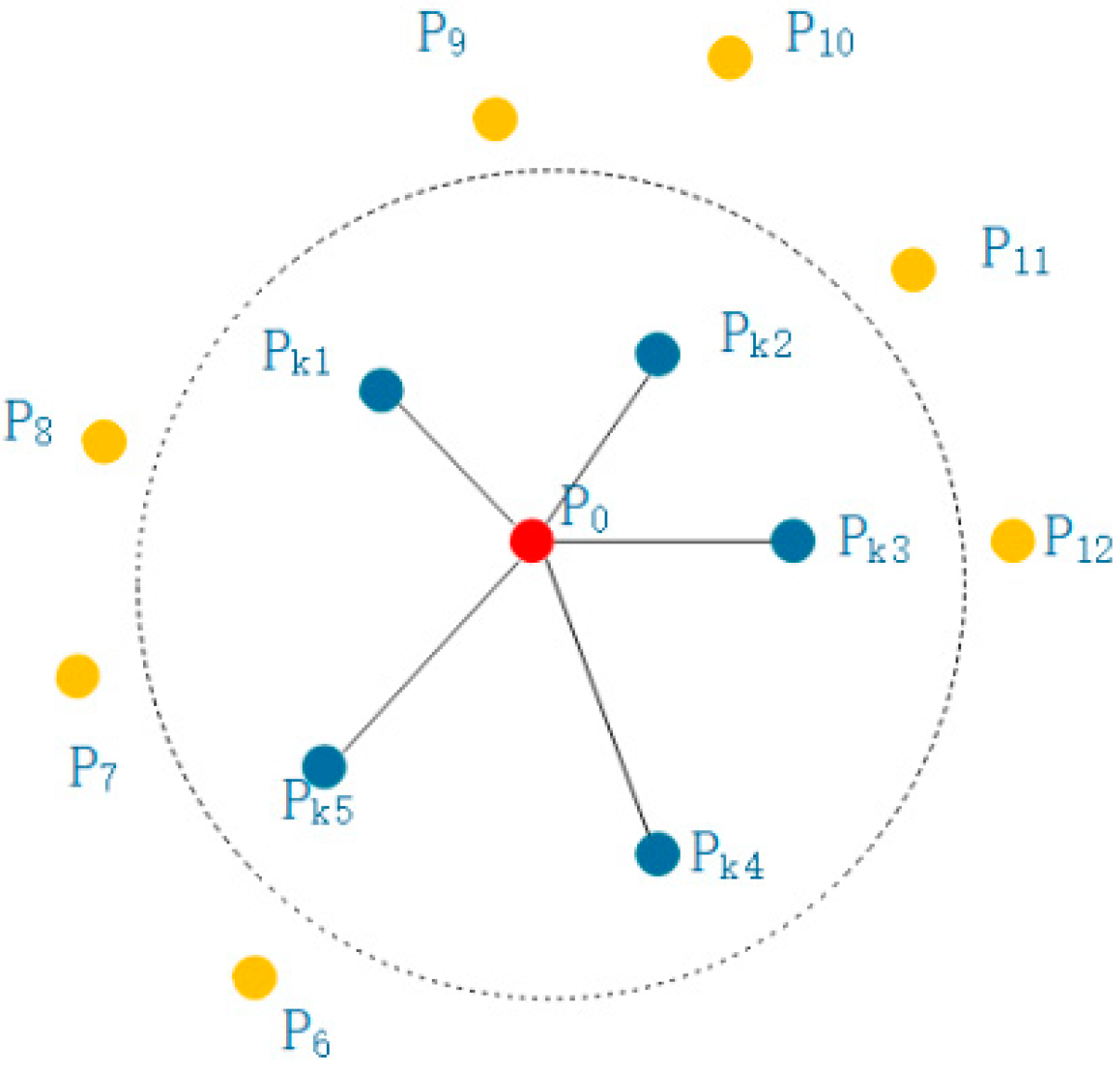

- (1) Suppose a point in the point cloud is given, with known coordinates and a known neighborhood. The neighborhood is defined by a sphere centered at the point with a given radius: the points enclosed by the sphere are the neighborhood of the point, as shown in Figure 2. The points in the neighborhood are connected in pairs, so that each forms a point pair with every other.

- (2) Construct the local coordinate system of the point pair and its coordinate axes, as shown in Figure 3.

- (3) From the normal vectors and the local coordinate system of the point pair, calculate the four feature values of the point pair.
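Steps (2) and (3) can be illustrated with the pair features as they are commonly defined in the PFH/FPFH literature. The formulas of this section are not reproduced in the text above, so the sketch below is an assumption: it uses the convention u = n_s, v = u × (p_t − p_s)/d, w = u × v, and returns the angular features α, φ, θ together with the distance d. The function name and argument names are hypothetical, and the normals are assumed to be unit vectors.

```python
import numpy as np

def pair_features(p_s, n_s, p_t, n_t):
    """Local frame (u, v, w) and four features for one point pair."""
    p_s, n_s, p_t, n_t = (np.asarray(a, dtype=float)
                          for a in (p_s, n_s, p_t, n_t))
    d_vec = p_t - p_s
    d = np.linalg.norm(d_vec)            # distance between the two points
    u = n_s                              # first axis: source normal
    v = np.cross(u, d_vec / d)           # second axis
    v_norm = np.linalg.norm(v)
    if v_norm == 0.0:
        raise ValueError("source normal is parallel to the pair direction")
    v = v / v_norm
    w = np.cross(u, v)                   # third axis completes the frame
    alpha = np.dot(v, n_t)
    phi = np.dot(u, d_vec) / d
    theta = np.arctan2(np.dot(w, n_t), np.dot(u, n_t))
    return alpha, phi, theta, d

# Two points on a flat patch with identical normals: all angles vanish.
features = pair_features([0, 0, 0], [0, 0, 1], [1, 0, 0], [0, 0, 1])
```

In FPFH the angular features of all pairs around a point are binned into histograms. That binning step is omitted here.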

3.2. Point Cloud Registration Algorithm Based on Virtual Namesake Point

- (1) First, select two point cloud datasets of different accuracy. Take the low-precision point cloud as the source point cloud and the relatively high-precision point cloud as the target point cloud, and use the points in the source point cloud as candidate points.

- (2) Apply the initial conversion matrix to all points in the source point cloud to obtain the conversion points.

- (3) Place all conversion points into the target point cloud and find the nearest neighbor of each conversion point in the target point cloud. Set a threshold and compare it with the Euclidean distance between each conversion point and its nearest neighbor. A distance greater than the threshold indicates that the conversion point lies outside the overlapping area, so the point is deleted; conversion points whose distance is below the threshold are kept.

- (4) Calculate the FPFH feature vector of each retained conversion point in the source point cloud.

- (5)

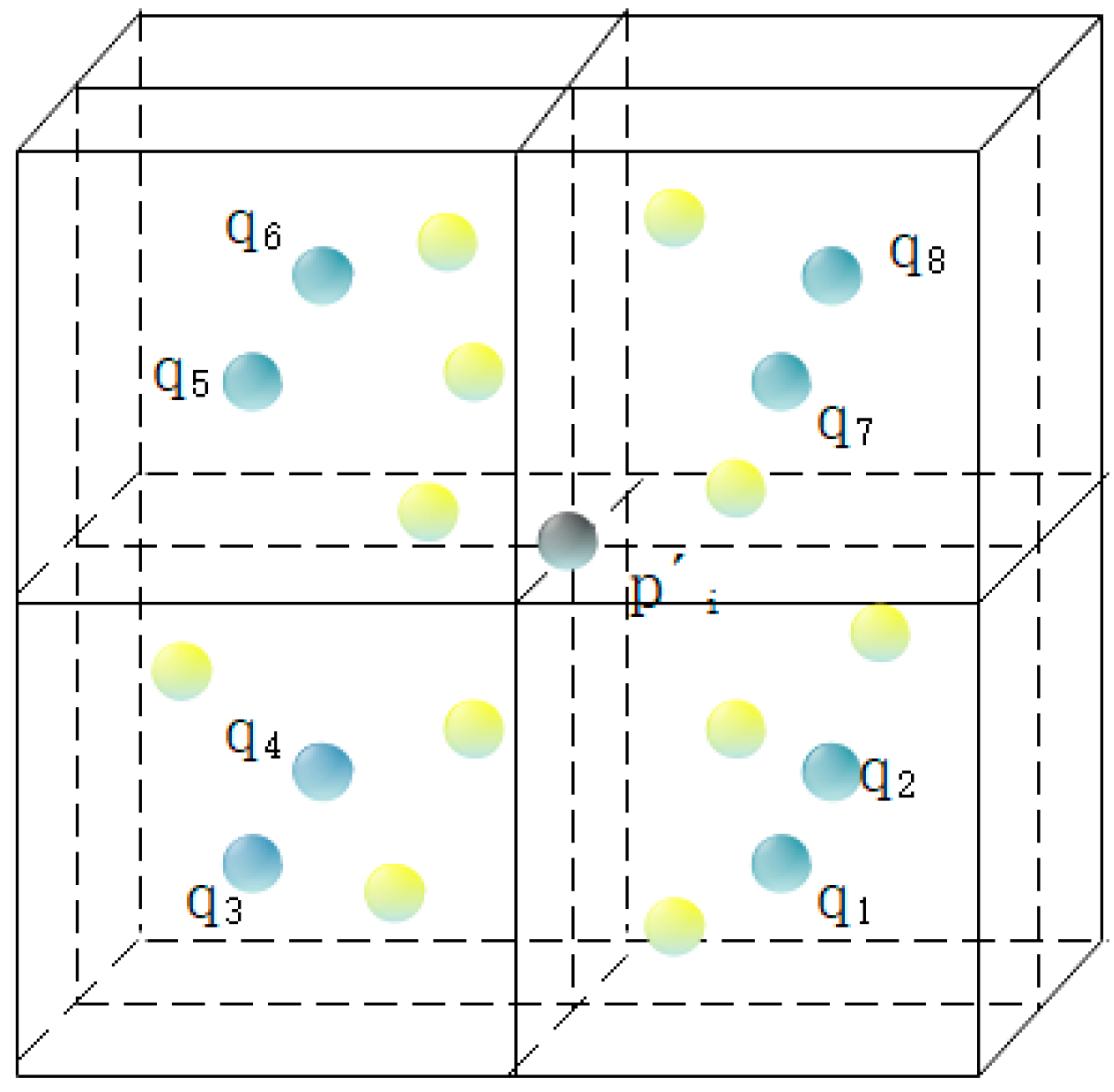

- Find the neighborhood of the conversion point in the target point cloud and divide the voxel to obtain the voxel center point .

- (6) Calculate the FPFH feature vector of each voxel center point, and then its F2 distance from the FPFH feature vector obtained in (4).

- (7) Feed the F2 distances obtained in (6) into the CNN to obtain the probability of each voxel center point.

- (8) Use the probabilities from (7) to calculate the virtual point corresponding to the conversion point.

- (9) After obtaining the corresponding points, calculate the conversion matrix by least squares, that is, by minimizing the objective function of Formula (6).

- (10) Repeat the above steps until the maximum number of iterations is reached or the objective function no longer changes appreciably.
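Two of the steps above, the overlap filter in (3) and the least-squares solve in (9), can be sketched with NumPy. Formula (6) is not reproduced in this text, so the sketch assumes the standard point-to-point objective, the sum over i of ‖R p_i + t − q_i‖², and solves it with the well-known SVD closed form. Whether the authors use this particular solver is not stated. The function names and the brute-force neighbor search are illustrative choices only.

```python
import numpy as np

def filter_overlap(converted, target, threshold):
    """Keep converted points whose nearest target point is within `threshold`."""
    # Brute-force pairwise distances; adequate for a small illustration,
    # a k-d tree would be the usual choice for real point clouds.
    diffs = converted[:, None, :] - target[None, :, :]
    nearest = np.linalg.norm(diffs, axis=2).min(axis=1)
    return converted[nearest < threshold]

def least_squares_rigid(src, dst):
    """R, t minimizing sum ||R @ src_i + t - dst_i||^2 for paired points."""
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    H = (src - src_c).T @ (dst - dst_c)      # 3x3 cross-covariance
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so that R is a rotation, not a reflection.
    sign = 1.0 if np.linalg.det(Vt.T @ U.T) >= 0 else -1.0
    R = Vt.T @ np.diag([1.0, 1.0, sign]) @ U.T
    t = dst_c - R @ src_c
    return R, t

# Hypothetical check: recover a known rotation about z and a translation.
rng = np.random.default_rng(0)
src = rng.normal(size=(20, 3))
angle = np.deg2rad(30.0)
R_true = np.array([[np.cos(angle), -np.sin(angle), 0.0],
                   [np.sin(angle),  np.cos(angle), 0.0],
                   [0.0, 0.0, 1.0]])
t_true = np.array([0.1, -0.2, 0.3])
dst = src @ R_true.T + t_true
R_est, t_est = least_squares_rigid(src, dst)
```

In the registration loop, `dst` would hold the virtual points from step (8) rather than nearest neighbors, and the estimated transform would be applied to the source before the next iteration.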

3.3. Point Cloud in Overlapping Areas for Further Correction

4. Experiment and Result Discussion

4.1. Experimental Data and Baseline Algorithms

4.2. Evaluation Metrics

4.3. Experiment Analysis

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Data | Method | Rotation Err. (°) | Translation Err. (m) | CD (m) |
|---|---|---|---|---|
| Bunny | ICP | 0.002 | 0.00001 | 0.00000 |
| | NDT | 0.105 | 0.01832 | 0.00076 |
| | FGR | 0.001 | 0.00001 | 0.00000 |
| | Ours | 0.015 | 0.00321 | 0.00002 |
| Sign Board | ICP | 0.448 | 0.04120 | 0.00123 |
| | NDT | 0.228 | 0.08271 | 0.00227 |
| | FGR | 0.094 | 0.04397 | 0.00008 |
| | Ours | 0.195 | 0.05780 | 0.00025 |
| Sculpture | ICP | 0.001 | 0.00002 | 0.00000 |
| | NDT | 0.337 | 0.07969 | 0.00249 |
| | FGR | 0.017 | 0.00117 | 0.00000 |
| | Ours | 0.008 | 0.00204 | 0.00000 |
| Chair | ICP | 0.003 | 0.00000 | 0.00000 |
| | NDT | 2.373 | 0.09056 | 0.00232 |
| | FGR | 0.018 | 0.00128 | 0.00000 |
| | Ours | 0.022 | 0.00189 | 0.00000 |

| Data | Method | Rotation Err. (°) | Translation Err. (m) | CD (m) |
|---|---|---|---|---|
| Bunny | ICP | 0.044 | 0.01323 | 0.00316 |
| | NDT | 0.201 | 0.01667 | 0.00366 |
| | FGR | 0.083 | 0.00559 | 0.00315 |
| | Ours | 0.032 | 0.00413 | 0.00310 |
| Sign Board | ICP | 0.111 | 0.02589 | 0.00141 |
| | NDT | 0.150 | 0.08755 | 0.00178 |
| | FGR | 0.414 | 0.27841 | 0.00321 |
| | Ours | 0.085 | 0.07789 | 0.00148 |
| Sculpture | ICP | 0.131 | 0.01742 | 0.00044 |
| | NDT | 0.448 | 0.06963 | 0.00228 |
| | FGR | 0.297 | 0.04777 | 0.00052 |
| | Ours | 0.201 | 0.01555 | 0.00046 |
| Chair | ICP | 0.027 | 0.00186 | 0.00002 |
| | NDT | 2.076 | 0.09368 | 0.00243 |
| | FGR | 0.201 | 0.01737 | 0.00243 |
| | Ours | 0.039 | 0.00260 | 0.00002 |

| Data | Method | Rotation Err. (°) | Translation Err. (m) | CD (m) |
|---|---|---|---|---|
| Bunny | ICP | 0.004 | 0.00024 | 0.00126 |
| | NDT | 0.152 | 0.01740 | 0.00202 |
| | FGR | 0.080 | 0.00101 | 0.00132 |
| | Ours | 0.043 | 0.00810 | 0.00112 |
| Sign Board | ICP | 0.026 | 0.02363 | 0.00020 |
| | NDT | 0.211 | 0.09656 | 0.00197 |
| | FGR | 0.257 | 0.09921 | 0.00067 |
| | Ours | 0.115 | 0.08282 | 0.00018 |
| Sculpture | ICP | 0.016 | 0.00025 | 0.00001 |
| | NDT | 0.355 | 0.06781 | 0.00168 |
| | FGR | 0.197 | 0.01182 | 0.00002 |
| | Ours | 0.330 | 0.02407 | 0.00001 |
| Chair | ICP | 0.002 | 0.00003 | 0.00051 |
| | NDT | 2.442 | 0.09082 | 0.00227 |
| | FGR | 0.027 | 0.00197 | 0.00063 |
| | Ours | 0.012 | 0.00151 | 0.00055 |

| Data | Method | CD (m) |
|---|---|---|
| Bunny | ICP | 0.00913 |
| | NDT | 0.01203 |
| | FGR | 0.01077 |
| | Ours | 0.00600 |
| Sign Board | ICP | 0.00007 |
| | NDT | 0.00169 |
| | FGR | 0.00010 |
| | Ours | 0.00018 |
| Sculpture | ICP | 0.00022 |
| | NDT | 0.00040 |
| | FGR | 0.00025 |
| | Ours | 0.00009 |
| Chair | ICP | 0.00015 |
| | NDT | 0.00166 |
| | FGR | 0.00016 |
| | Ours | 0.00010 |

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zheng, L.; Li, Z. Virtual Namesake Point Multi-Source Point Cloud Data Fusion Based on FPFH Feature Difference. Sensors 2021, 21, 5441. https://doi.org/10.3390/s21165441
