Affective Communication for Socially Assistive Robots (SARs) for Children with Autism Spectrum Disorder: A Systematic Review

Abstract

1. Introduction

2. Background

2.1. Autism Spectrum Disorder (ASD)

2.2. What Is an Emotion?

2.3. Emotional Intelligence

2.4. Affective Interaction with Social Robots

- Knowledge representation, which is related to past, present, and future events as beliefs, desires, plans, and intentions.

- Cognitive operators are related to computer metaphors, which can be cognitive, perceptual, or motor.

- Appraisals, which follow appraisal theories: each cognitive operator is represented through a causal interpretation in which an event can be past, present, or future.

- Emotions, mood, and focus of attention, which are appraisal patterns related to emotion labels.

- Coping strategies, which determine how the agent responds to the events.
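The five components listed above can be sketched as a small appraisal and coping loop. This is a minimal illustration, not the implementation of any model covered by the review: the `Event` and `Agent` classes, the numeric scales, the emotion labels, and the coping rules are all assumptions chosen for readability.

```python
from dataclasses import dataclass, field


@dataclass
class Event:
    """A past, present, or future event held in the agent's knowledge."""
    name: str
    tense: str           # "past", "present", or "future"
    desirability: float  # hypothetical scale: -1.0 (unwanted) to 1.0 (wanted)
    likelihood: float    # 0.0 to 1.0; use 1.0 for events that already happened


@dataclass
class Agent:
    beliefs: list = field(default_factory=list)  # knowledge representation
    mood: float = 0.0                            # slow-moving summary of appraisals

    def appraise(self, event: Event) -> str:
        """Map an appraisal pattern to an emotion label (toy rules)."""
        if event.tense == "future":
            return "hope" if event.desirability > 0 else "fear"
        return "joy" if event.desirability > 0 else "distress"

    def cope(self, event: Event, emotion: str) -> str:
        """Pick a coping strategy for the appraised event (toy rules)."""
        if emotion == "fear":
            return "plan_avoidance" if event.likelihood > 0.5 else "monitor"
        if emotion == "distress":
            return "seek_support"
        return "continue"

    def step(self, event: Event):
        """Store the event, appraise it, update mood, and choose a response."""
        self.beliefs.append(event)
        emotion = self.appraise(event)
        intensity = event.desirability * event.likelihood
        # Mood drifts toward the intensity of the latest appraisal.
        self.mood = 0.8 * self.mood + 0.2 * intensity
        return emotion, self.cope(event, emotion)


agent = Agent()
print(agent.step(Event("loud noise", "future", -0.8, 0.9)))
# prints ('fear', 'plan_avoidance')
```

Keeping appraisal and coping as separate methods mirrors the separation in the list: the first labels the emotion, the second decides how the agent responds to the event.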

3. Objectives

- What is an intelligent method of affective communication for a social robot?

- What theories/modules have been used to develop these models of affective communication?

- Which of the proposed affective communication models have been used for children with ASD?

- What are the differences between the affective communication models for children with ASD and those without ASD?

- Can affective communication be achieved by SARs for children with ASD?

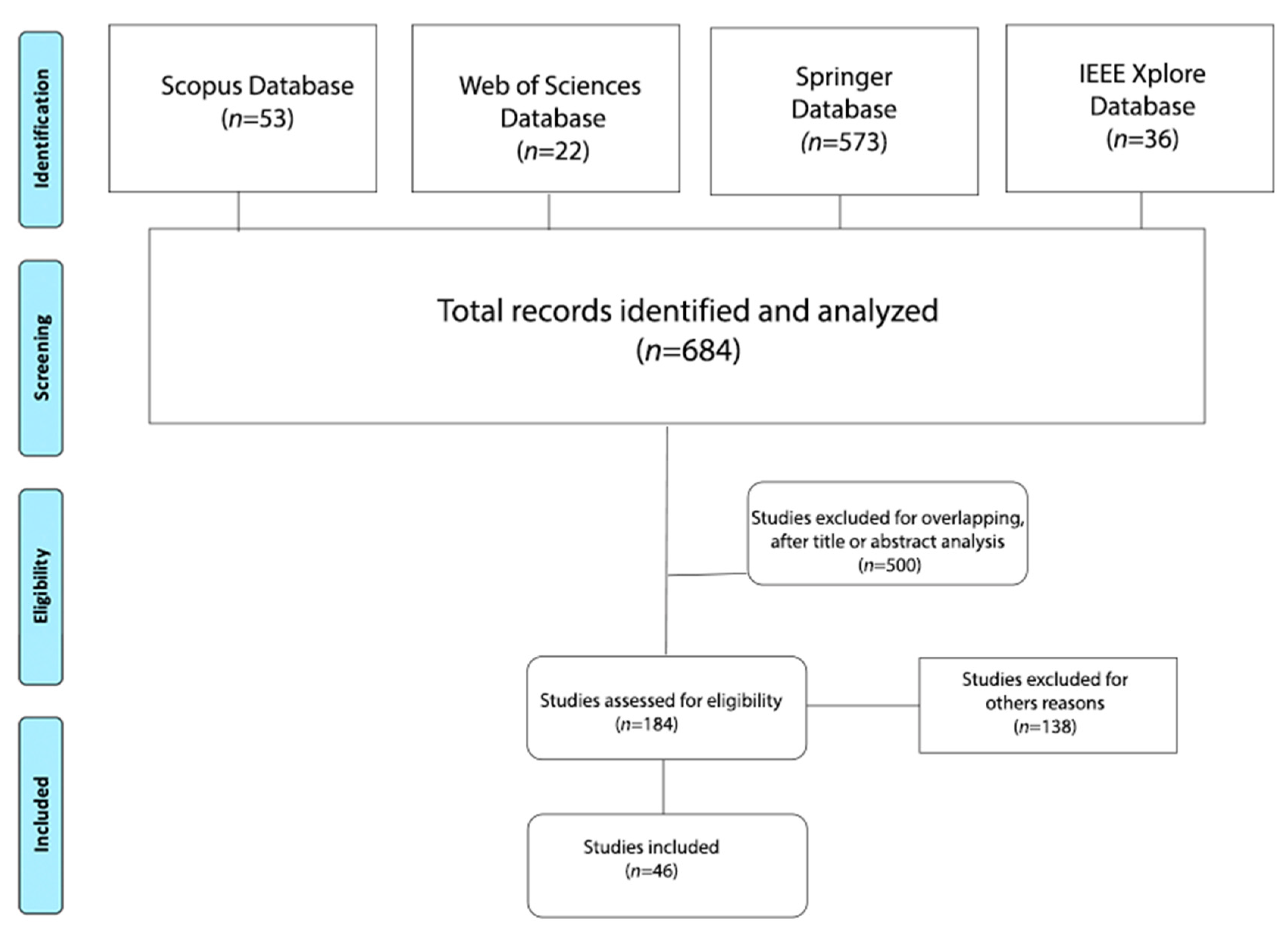

4. Methods

4.1. Review of Terms

4.2. Inclusion/Exclusion Criteria Selection of Studies

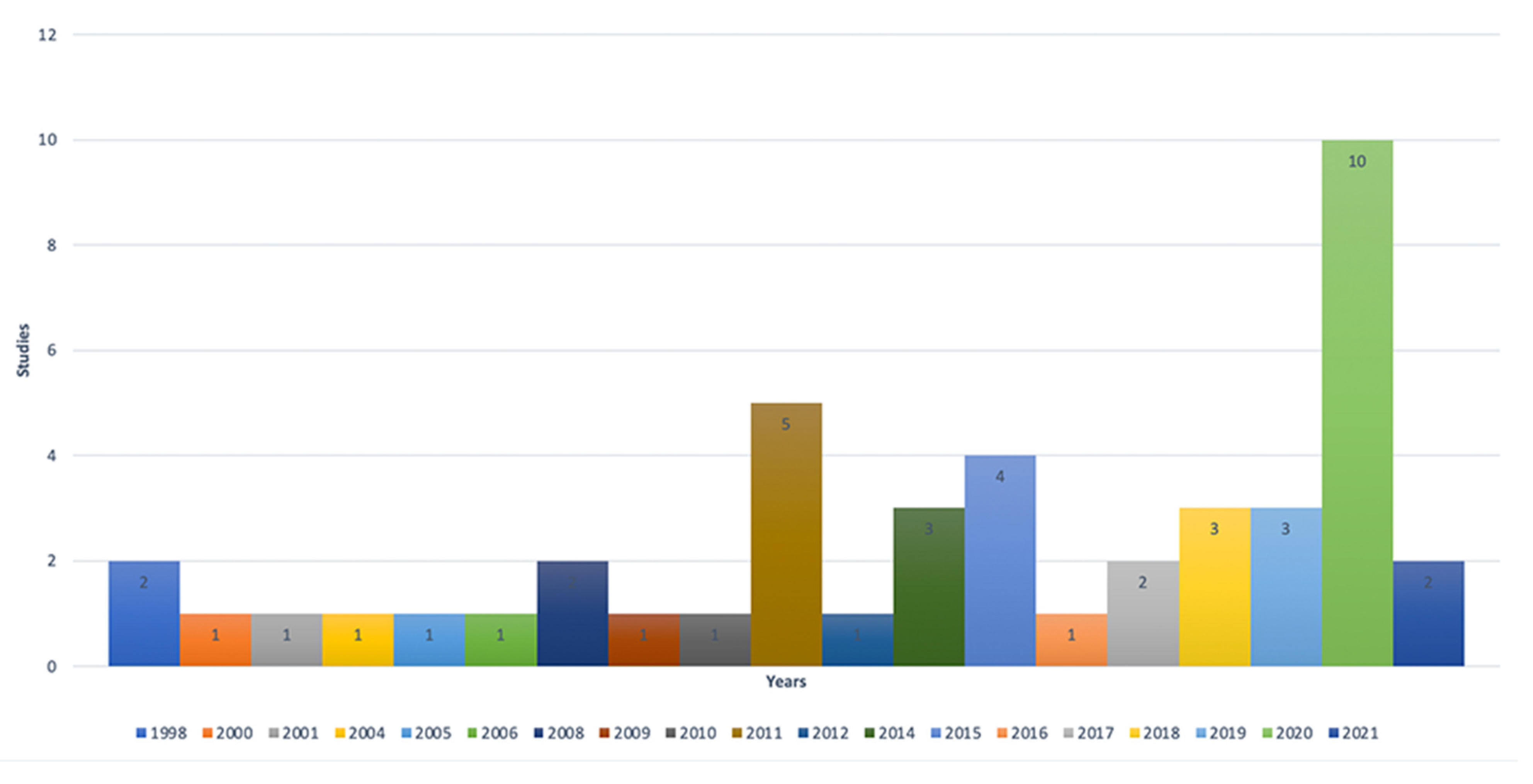

5. Results

Data Extraction

- The robot does not require a standardized environment.

- The interface of the robot is not limited.

- The communication scenario is not predefined for the robot.

- Perception, which captures sensory data, including sound, vision, touch, and acceleration.

- A processor, which functions to analyze data and apply techniques of artificial intelligence, amongst others.

- Outputs through various channels: vision, audio, color, and motion.
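The perception, processor, and output stages listed above can be read as a simple pipeline. The sketch below is only an illustration under stated assumptions: the function names, the threshold rule used as the "processor", the state labels, and the output settings are hypothetical and do not come from any of the reviewed systems.

```python
from typing import Dict

# Sensory channels named in the list above.
CHANNELS = ("sound", "vision", "touch", "acceleration")


def perceive(raw: Dict[str, float]) -> Dict[str, float]:
    """Perception: keep the four channels, defaulting missing ones to 0.0."""
    return {c: float(raw.get(c, 0.0)) for c in CHANNELS}


def process(percept: Dict[str, float]) -> str:
    """Processor: a toy threshold rule standing in for real AI techniques."""
    if percept["touch"] > 0.5 or percept["acceleration"] > 0.5:
        return "startled"
    if percept["sound"] > 0.7:
        return "alert"
    if percept["vision"] > 0.5:
        return "engaged"
    return "calm"


# Output: one hypothetical setting per output channel for each state.
OUTPUTS = {
    "startled": {"vision": "wide eyes", "audio": "gasp", "color": "red", "motion": "back away"},
    "alert": {"vision": "raised brows", "audio": "chirp", "color": "yellow", "motion": "turn head"},
    "engaged": {"vision": "smile", "audio": "hum", "color": "green", "motion": "lean in"},
    "calm": {"vision": "neutral", "audio": "silence", "color": "blue", "motion": "idle"},
}


def express(state: str) -> Dict[str, str]:
    """Look up what the robot shows on each output channel."""
    return OUTPUTS[state]


def run_once(raw: Dict[str, float]) -> Dict[str, str]:
    """One pass through perception, processing, and output."""
    return express(process(perceive(raw)))


print(run_once({"sound": 0.9})["color"])  # prints yellow
```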

- Emotion detection, which detects and recognizes an emotion from facial expressions.

- Reinforcement learning algorithms, through which the robot learns over time to select the empathic behaviors that comfort users in different emotional states.

- Empathic behavior provider, which applies selected behaviors to the robot to react to users’ emotions.
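The learning step in the list above can be illustrated with a very small value-learning loop. The sketch assumes that emotion detection has already produced a label, and it uses an epsilon-greedy bandit with optimistic initial values as a simple stand-in; it is not the algorithm of any reviewed model. The behavior names, the reward signal, and `simulated_feedback` are hypothetical.

```python
import random
from collections import defaultdict


class EmpathicBehaviorSelector:
    """Learns which behavior comforts the user in each detected emotion."""

    def __init__(self, behaviors, epsilon=0.1, alpha=0.5, seed=0):
        self.behaviors = list(behaviors)
        self.epsilon = epsilon  # probability of trying a random behavior
        self.alpha = alpha      # learning rate
        self.rng = random.Random(seed)
        # Optimistic start (1.0) so every behavior gets tried at least once.
        self.q = defaultdict(lambda: 1.0)

    def best(self, emotion):
        """Behavior with the highest estimated value for this emotion."""
        return max(self.behaviors, key=lambda b: self.q[(emotion, b)])

    def select(self, emotion):
        """Epsilon-greedy choice: mostly exploit, sometimes explore."""
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.behaviors)
        return self.best(emotion)

    def update(self, emotion, behavior, reward):
        """Move the estimate toward the observed reward."""
        key = (emotion, behavior)
        self.q[key] += self.alpha * (reward - self.q[key])


def simulated_feedback(emotion, behavior):
    """Hypothetical user: rewards one preferred behavior per emotion."""
    preferred = {"sadness": "comfort", "happiness": "mirror_joy"}
    return 1.0 if preferred.get(emotion) == behavior else 0.0


selector = EmpathicBehaviorSelector(["comfort", "mirror_joy", "distract"])
for _ in range(200):
    for emotion in ("sadness", "happiness"):
        behavior = selector.select(emotion)
        selector.update(emotion, behavior, simulated_feedback(emotion, behavior))

print(selector.best("sadness"), selector.best("happiness"))
```

In a real interaction the reward would have to come from the user, for example from a change in the detected emotion after the robot acts; the simulated feedback here only makes the loop runnable.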

- The moderator represents the cognitive internal emotional state. It builds a list of emotional experiences according to personality and mood.

- The emotional experiences selector represents the emotional state. It builds a list of emotional experiences as a function of the words of the discourse.

- The emotional experiences generator represents cognitive internal emotion.

- The behavior module chooses a reaction according to the best emotional experiences.

- An emotion can be induced by several sensorial channels: visual, auditory, and tactile, amongst others.

- Signal processing in the different sensory channels can activate neural sites that are preset to respond to that particular channel.

- An emotion can be manifested in different psycho-physiological responses.

- Changes in body state are represented in both subcortical and cortical regions by first-order neural maps.

- A neutral emotional state is represented by second-order neural structures.

| Year | Title | Model/Architecture (Modules/Name) | Theories Inspired | Emotions | Outputs/Inputs | Robot | Child | Child with ASD |
|---|---|---|---|---|---|---|---|---|
| 1998 [72] | Modelling emotions and other motivations in synthetic agents. | Cathexis | Damasio’s theory: decision-making; ethology theories; artificial intelligence theories. | Anger, fear, distress/sadness, enjoyment/happiness, disgust, and surprise. | Battery, temperature, energy, interest levels; cameras, audio, IR sensors for obstacles, air pressure sensor | Yuppy | No | No |
| 1998 [127] | Intelligent agent system for human–robot interaction through artificial emotion | - | Multimodal environment, model of artificial emotion using Kohonen’s Self-Organizing Map (SOM). | Tranquil, happy, melancholy, angry. | Movement, light and acoustic (music and sound); camera, ultrasound sensor | Pioneer 1 Mobile Robot | No | No |
| 2000 [71] | Emotional communication robot: WAMOEBA | Endocrine system | Behavior of robots could be interpreted as feelings, based on the Urge theory of emotion and cognition proposed by Toda [128], model of endocrine system of humans. | Anger, sadness, pleasure, expectation. | Actuator speed, LCD color, cooling fan; camera, sound (volume, speed, pitch) | WAMOEBA-2R | No | No |
| 2001 [129] | Robot learning driven by emotions | - | Perception, reinforcement, and control triggering. Emotions influence the feelings through a hormone system. | Emotions: happiness, sadness, fear, and anger. Feelings: hunger, pain, restlessness, temperature, eating, smell, warmth, proximity. | Battery, light, motor speed; proximity sensor | - | No | No |
| 2001 [130] | Model of knowledge, emotion, and intention | K.E.I | Q-learning algorithm to learn a series of behavior patterns. Fuzzy Cognitive Maps [131]. | Anger, fear, abandonment, avoidance, troublesome, anxiety, approach-forward. | Camera | - | No | No |
| 2004 [60] | Can a robot empathize with people? | Robotogenetic | Theory of mind, development of empathy of the child. | Reading of desired or negative emotions of the infant. | 29 actuators (face and body), speech synthesizer; cameras and microphones | Infanoid, Keepon | Yes | Yes |
| 2005 [75] | An embodied computational model of social referencing | - | Damasio’s theory, OCC theory, dimensional theory (arousal, valence), and human infants. | Happiness, surprise, contempt, sadness, fear, disgust, and anger. | 65 actuators, facial and body expressions; camera, microphone (vocal intonation) | Leonardo | Yes | No |
| 2006 [74] | EMOBOT: A Robot Control Architecture Based on Emotion-Like Internal Values | EMOBOT | Neuronal network and neuronal learning paradigms. Control theory, Damasio’s psychological theory. | Primary internal states (drives): fatigue, hunger, homesickness, and curiosity. Secondary internal states (emotions): fear, anger, boredom, and happiness. | Movement directions (motors); ultrasonic sensor, ambient light, infrared | - | No | No |
| 2008 [117] | An affective model applied in playmate robots for children | - | Based on HMM. | Happiness, anger, and sadness. | - | - | Yes | No |
| 2008 [132] | Multi-dimensional emotional engine with personality using intelligent service robot for children | - | Dimensional theory of emotions and personality model using five factor models in psychology [48]. | Happy, sad, surprise, disgust, fear, angry. | Temperature, speech, facial expression, humidity; camera | iRobi-Q | Yes | No |
| 2009 [88] | Emotion-Based Architecture for Social Interactive Robots | - | Theory of social interaction Watzlawick [133], theory of motivation [89], iB2C architecture [90]. | Anger, disgust, fear, happiness, sadness, surprise. Motivation such as: obeying humans, self-protection, energy consumption, avoid fatigue, communication, exploration, and entertainment. | Facial expressions, head (up/down); camera, microphones | ROMAN | No | No |
| 2010 [87] | Robo-CAMAL: a BDI, motivational robot. | CAMAL | Psychological (belief–desire–intention) BDI model, CRIBB model (children’s reasoning about intentions, beliefs, and behavior) [134]. | Drives, goals, desire, intentions, and attitudes. | Movement directions; camera, microphone | Mobile robot | No | No |
| 2011 [91] | Children recognize emotions of EmI companion robot | iGrace | Based on the GRACE model. The EMFACS system is used for the facial expression of emotions. | Joy, surprise, sadness, anger, fear, disgust. | Facial expression (mouth, eyebrows, ears, eyes), tone of voice, posture (movement, speed); camera, microphone | EmI | Yes | No |
| 2011 [59] | Artificial emotion model based on reinforcement learning mechanism of neural network | Homeostasis and extrinsic motivation, appraisal, and intrinsic motivation. Reward and value function and hard-wired connections from sensations. | Reinforcement learning, based on the hierarchical structure of the human brain. | Emotional polarity | - | - | Yes | No |
| 2011 [66] | TAME: Time-Varying Affective Response for Humanoid Robots | - | Personality, emotion, mood, and attitude areas of psychology. | Fear, anger, disgust, sadness, joy and interest. Personality: openness, conscientiousness, extraversion, agreeableness, and neuroticism. | Facial expressions; body expression (head, ears, movement), LED | AIBO, NAO | No | No |
| 2011 [135] | A layered model of artificial emotion merging with attitude | AME (Attitude Mood Emotion) | OCC theory, PAD (Pleasure Arousal Dominance) emotion space. | Happiness, dependence, calm, mildness. | - | FuNiu | No | No |
| 2011 [136] | Emotions as a dynamical system: the interplay between the meta-control and communication function of emotions | - | Cannon–Bard theory, model of emotions of FACS (Facial Action Coding System). | Interest, excitation, satisfaction, joy, hunger, fear, shame, and disgust. | Movements; camera | Mobile robot | No | No |
| 2012 [78] | A Multidisciplinary Artificial Intelligence Model of an Affective Robot | - | Dynamic Bayesian network. | Happiness, sadness, disgust, surprise, anger, and fear. | 2D motion, audio, color, tilt and height | Lovotics | No | No |
| 2014 [67] | Empathic Robots for Long-Term Interaction | - | Hoffman theory of empathy, Scherer’s theory, framework of Cutrona et al. [96]. | Empathic expressions: stronger reward, expected reward, weaker reward, unexpected reward, stronger punishment, expected punishment, weaker punishment, unexpected punishment. | Speech, facial expressions; camera | iCat | Yes | No |
| 2014 [79] | An Emotional Model for Social Robots: Late-Breaking Report | - | Reinforcement learning algorithm. | Joy and anger. | Actuators; body postures | - | No | No |
| 2014 [126] | Development of First Social Referencing Skills: Emotional Interaction to Regulate Robot Behavior | - | Deep learning techniques, visual attention concepts. | Sadness, surprise, happiness, hunger and neutral. | Actuators; camera | Katana arm | No | No |
| 2015 [84] | A Cognitive and Affective Architecture for Social Human–Robot Interaction | CAIO (Cognitive and Affective Interaction-Oriented) | BDI architecture, BIGRE mental states. | Regret, disappointment, guilt, reproach, moral satisfaction, admiration, rejoicing, and gratitude. | Actuators (body postures); camera, microphones | NAO | Yes | No |
| 2015 [32] | Cognitive Emotional Regulation Model in Human–Robot Interaction | - | Emotional regulation, based on Gross’s re-evaluation-based emotional regulation. | Angry, sober, controlled, friendly, calm, dominant, painful, interested, humble, excited, stiff, and influential. | Facial expressions, body postures (head, arms) | - | Yes | No |
| 2015 [61] | The Affective Loop: A Tool for Autonomous and Adaptive Emotional Human-Robot Interaction | Affective loop | Definition of empathy by Goleman and Hoffman. | Sadness, anger, disgust, surprise, joy, fear. | Body postures; Kinect | NAO | Yes | No |
| 2015 [101] | EMIA: Emotion Model for Intelligent Agent | EMIA | Fuzzy logic, appraisal theories of emotions, emotion regulation theory, and multistore human memory model. | Happiness, anger, fear, sadness, disgust, and surprise. | - | - | No | No |
| 2016 [102] | A cognitive-affective architecture for ECAs | ECA | Affective model inspired by ALMA. | PAD model. | - | - | No | No |
| 2017 [99] | Towards an Affective Cognitive Architecture for Human–Robot Interaction for the iCub Robot | - | Inspired by cognitive architectures. | Neutral, interested, and bored. | - | iCub, NAO | No | No |
| 2017 [124] | Animating the Adelino robot with ERIK: the expressive robotics inverse kinematics | ERIK (Expressive Robotics Inverse Kinematics) | Animation and kinematics. | - | Actuators | Adelino | No | No |
| 2018 [64] | SEAI: Social Emotional Artificial Intelligence Based on Damasio’s Theory of Mind | SEAI | Damasio’s theory. | - | Actuators (facial and body expression) | Face robot | No | No |
| 2018 [105] | Multimodal expression of Artificial Emotion in Social Robots Using Color, Motion, and Sound | Expressions of emotions | Theory of metaphor and emotion. | Joy, sadness, fear, and anger. | Light, motors, and sound. | Probe | No | No |
| 2019 [95] | A multimodal affective computing approach for children companion robots | - | Three-dimensional PAD space theory, OCC model, RULER theory. | Happy, angry, and upset. | Text, pronunciation intonation, eye-gaze, gesture, body posture, visual and dialogue interaction | - | Yes | No |
| 2019 [116] | Empathic robot for group learning | - | Artificial robotic tutors. | - | Body expressions; camera, microphone | Nao Torso | Yes | No |
| 2019 [111] | Artificial emotion modelling in PAD emotional space and human–robot interactive experiment | - | PAD emotion space, OCC theory. | Angry, bored, curious, dignified, elated, hungry, inhibited, loved, puzzled, sleepy, violent. | Actuators; camera, microphone | Fuwa | No | No |
| 2020 [81] | An Autonomous Cognitive Empathy Model (ACEM) Responsive to Users’ Facial Emotion Expressions | - | Empathy theories. | Happiness, sadness, fear, anger, surprise, disgust. | Speech, eye color, motion; camera, microphone | Pepper | No | No |
| 2020 [107] | Social and Emotional Skills Training with Embodied Moxie | START Evidence based therapeutic strategies | ABA (applied behavior analysis) therapy, CBT (cognitive behavioral therapy) for children with ASD. | - | Speech, facial expressions; camera, microphone | Moxie | Yes | Yes |
| 2020 [56] | Deep interaction: wearable robot-assisted emotion communication for enhancing perception and expression ability of children with autism spectrum disorder | - | Deep learning and multimodal data. | Happiness, anger and fear. | - | - | Yes | Yes |
| 2020 [108] | A Multimodal Emotional Human–Robot Interaction Architecture for Social Robots Engaged in Bidirectional Communication | - | Multimodal data fusion, OCC model. | Happy, interested, sad, worried, and angry. | LEDs, actuators, speech synthesis; Kinect, microphone, touch sensor, camera | NAO | No | No |
| 2020 [112] | An affective decision-making model with applications to social robotics | - | Based on Gomez and Rios’s affective model for social agent [115]. | Hope, fear, joy, sadness, anger. | - | - | No | No |
| 2020 [109] | On Designing Expressive Robot Behavior: The Effect of Affective Cues on Interaction | - | Multimodal data. | Sadness, disgust, happiness, anger, and fear. | - | ALICE | No | No |
| 2020 [77] | Abel: Integrating Humanoid Body, Emotions, and Time Perception to Investigate Social Interaction and Human Cognition | - | Extension of SEAI, Damasio’s theory. | - | Facial expressions | ABEL | Yes | Yes |
| 2020 [137] | Creating and capturing artificial emotions in autonomous robots and software agents | ARTEMIS | Scherer theory, PAD model, memory of Dorner’s Psi theory. | Novelty, valence, goal, certainty, urgency, goal congruence, coping, norms. | - | - | No | No |
| 2020 [81] | Toward a reinforcement learning-based framework for learning cognitive empathy in human–robot interactions | - | Reinforcement learning. | Anger, happiness, and surprise. | Actuators, eye color, speech; camera, microphone | Pepper | No | No |
| 2021 [138] | FATiMA Toolkit: Toward an effective and accessible tool for the development of intelligent virtual agents and social robots | - | FATiMA extended. | 22 emotions of OCC theory. | - | - | No | No |
| 2021 [82] | Cognitive emotional interaction model of robot based on reinforcement learning | - | Reinforcement learning, PAD model, psychology theory of interpersonal communication. | Happiness, anger, fear, sadness, disgust, and surprise. | - | - | No | No |
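To query the table programmatically, for instance to list which models were evaluated with children with ASD, its rows can be held as simple records. The sketch below transcribes only a handful of rows (year, reference number, robot, and the two yes/no columns) as an illustration; the `Study` class and its field names are assumptions, and the subset is not the full table.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Study:
    year: int
    ref: int              # reference number as cited in the table
    robot: str            # "-" where the table gives no robot
    child: bool           # "Child" column
    child_with_asd: bool  # "Child with ASD" column


# A subset of rows copied from the table above.
STUDIES = [
    Study(1998, 72, "Yuppy", False, False),
    Study(2004, 60, "Infanoid, Keepon", True, True),
    Study(2005, 75, "Leonardo", True, False),
    Study(2014, 67, "iCat", True, False),
    Study(2020, 107, "Moxie", True, True),
    Study(2020, 56, "-", True, True),
    Study(2020, 77, "ABEL", True, True),
    Study(2021, 82, "-", False, False),
]

asd_studies = [s for s in STUDIES if s.child_with_asd]
children_only = [s for s in STUDIES if s.child and not s.child_with_asd]

print([s.ref for s in asd_studies])    # prints [60, 107, 56, 77]
print([s.ref for s in children_only])  # prints [75, 67]
```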

6. Discussion

- The appearance of the physical robot can help it to empathize with the child.

- Communication channels (verbal and non-verbal) to express an appropriate emotional state.

- Types of sensors to perceive emotions, and techniques to recognize a target’s emotion.

- Theories of psychology that can support learning socio-emotional skills.

- Empathic behavior responses are autonomous.

7. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Tapus, A.; Tapus, C.; Mataric, M.J. The use of socially assistive robots in the design of intelligent cognitive therapies for people with dementia. In Proceedings of the 2009 IEEE International Conference on Rehabilitation Robotics, Kyoto, Japan, 23–26 June 2009; pp. 924–929.

- Liu, C.; Conn, K.; Sarkar, N.; Stone, W. Online Affect Detection and Robot Behavior Adaptation for Intervention of Children with Autism. IEEE Trans. Robot. 2008, 24, 883–896.

- Eshraghi, A.A. COVID-19: Overcoming the challenges faced by people with autism and their families. Lancet Psychiatry 2020, 7, 481–483.

- American Psychiatric Association. Diagnostic and Statistical Manual of Mental Disorders, 5th ed.; American Psychiatric Association: Washington, DC, USA, 2013.

- Picard, R.W. Rosalind Picard: Affective Computing. User Model. User-Adapted Interact. 2002, 12, 85–89.

- Velásquez, J.D.; Maes, P. Cathexis: A Computational Model of Emotions. In Proceedings of the First International Conference on Autonomous Agents, AGENTS 97, Marina del Rey, CA, USA, 5–8 February 1997; ACM: New York, NY, USA, 1997; pp. 518–519.

- Velásquez, J.D. When robots weep: Emotional memories and decision-making. In American Association for Artificial Intelligence Proceedings; AAAI Press: Palo Alto, CA, USA, 1998; pp. 70–75.

- Esau, N.; Kleinjohann, L.; Kleinjohann, B. Emotional Communication with the Robot Head MEXI. In Proceedings of the 2006 9th International Conference on Control, Automation, Robotics and Vision, Singapore, 5–8 December 2006; pp. 1–7.

- Paiva, A.; Leite, I.; Ribeiro, T. Emotion Modeling for Social Robots. In The Oxford Handbook of Affective Computing, Psychology Affective Science; Oxford Library of Psychology: Oxford, UK, 2015.

- Ojha, S.; Vitale, J.; Williams, M.-A. Computational Emotion Models: A Thematic Review. Int. J. Soc. Robot. 2020, 2020, 1–27.

- Nation, K.; Penny, S. Sensitivity to eye gaze in autism: Is it normal? Is it automatic? Is it social? Dev. Psychopathol. 2008, 20, 79–97.

- Carter, A.S.; Davis, N.O.; Klin, A.; Volkmar, F.R. Social Development in Autism. In Handbook of Autism and Pervasive Developmental Disorders: Diagnosis, Development, Neurobiology, and Behavior; Volkmar, F.R., Paul, R., Klin, A., Cohen, D., Eds.; John Wiley & Sons Inc.: Hoboken, NJ, USA, 2005; pp. 312–334.

- Bennett, T.A.; Szatmari, P.; Bryson, S.; Duku, E.; Vaccarella, L.; Tuff, L. Theory of Mind, Language and Adaptive Functioning in ASD: A Neuroconstructivist Perspective. J. Can. Acad. Child Adolesc. Psychiatry 2013, 22, 13–19.

- Black, M.H.; Chen, N.T.; Iyer, K.K.; Lipp, O.V.; Bölte, S.; Falkmer, M.; Tan, T.; Girdler, S. Mechanisms of facial emotion recognition in autism spectrum disorders: Insights from eye tracking and electroencephalography. Neurosci. Biobehav. Rev. 2017, 80, 488–515.

- Behrmann, M.; Thomas, C.; Humphreys, K. Seeing it differently: Visual processing in autism. Trends Cogn. Sci. 2006, 10, 258–264.

- Fox, E. Emotion Science: Cognitive and Neuroscientific Approaches to Understanding Human Emotions; Palgrave Macmillan: Basingstoke, UK, 2008.

- Ekman, P.; Friesen, W.V.; O’Sullivan, M.; Chan, A.; Diacoyanni-Tarlatzis, I.; Heider, K.; Krause, R.; Lecompte, W.A.; Pitcairn, T.; Ricci-Bitti, P.E.; et al. Universals and cultural differences in the judgments of facial expressions of emotion. J. Pers. Soc. Psychol. 1987, 53, 712–717.

- Calvo, R.A.; D’Mello, S.; Gratch, J.; Kappas, A. The Oxford Handbook of Affective Computing; Oxford Library of Psychology: Oxford, UK, 2015.

- Russell, J.A. A circumplex model of affect. J. Pers. Soc. Psychol. 1980, 39, 1161–1178.

- James, W. What is an Emotion? Mind 1884, 9, 188–205.

- Lange, C.G.; James, W. The Emotions; Williams & Wilkins Co.: Baltimore, MD, USA, 1922; Volume 1.

- Schachter, S.; Singer, J. Cognitive, social, and physiological determinants of emotional state. Psychol. Rev. 1962, 69, 379–399.

- Cannon, B.; Walter, B. Cannon: Personal reminiscences. In The Life and Contributions of Walter Bradford Cannon 1871–1945: His Influence on the Development of Physiology in the Twentieth Century; Brooks, C.M., Koizumi, K., Pinkston, J.O., Eds.; State University of New York: New York, NY, USA, 1975; pp. 151–169.

- Lazarus, R.S. Emotion and Adaptation; Oxford University Press: Oxford, UK, 1991.

- Frijda, N.H. The Emotions; Cambridge University Press: Cambridge, UK, 1986.

- Roseman, I.J.; Jose, P.E.; Spindel, M.S. Appraisals of emotion-eliciting events: Testing a theory of discrete emotions. J. Personal. Soc. Psychol. 1990, 59, 899–915.

- Ortony, A.; Clore, G.; Collins, A. The Cognitive Structure of Emotions; Cambridge University Press: Cambridge, UK, 1988.

- Damasio, A. Descartes’ Error: Emotion, Reason, and the Human Brain; Grosset/Putnam: New York, NY, USA, 1994.

- Goleman, D. Emotional Intelligence: Why It Can Matter More Than IQ; Bantam Books: New York, NY, USA, 1995.

- Christopher, S.; Shakila, C. Social Skills in Children with Autism. Indian J. Appl. Res. J. 2015, 5, 139–141.

- Gross, J.J. Emotion regulation: Affective, cognitive, and social consequences. Psychophysiology 2002, 39, 281–291.

- Liu, X.; Xie, L.; Liu, A.; Li, D. Cognitive Emotional Regulation Model in Human-Robot Interaction. Discret. Dyn. Nat. Soc. 2015, 2015, 1–8.

- Gross, J.J. Emotion regulation: Taking stock and moving forward. Emotion 2013, 13, 359–365.

- Salovey, P.; Mayer, J.D. Emotional Intelligence. Imagin. Cogn. Pers. 1990, 9, 185–211.

- Bar-On, R. The Emotional Quotient inventory (EQ-i): A Test of Emotional Intelligence; Multi-Health Systems: Toronto, ON, Canada, 1997.

- Petrides, K.V.; Furnham, A. Trait emotional intelligence: Psychometric investigation with reference to established trait taxonomies. Eur. J. Pers. 2001, 15, 425–448.

- Mayer, J.D.; Salovey, P. What is emotional intelligence? In Emotional Development and Emotional Intelligence: Implications for Educators; Salovey, P., Sluyter, D., Eds.; Basic Books: New York, NY, USA, 1997; pp. 3–31.

- Goleman, D. An EI-based theory of performance. In The Emotionally Intelligent Workplace: How to Select for, Measure, and Improve Emotional Intelligence in Individuals, Groups, and Organizations; Cherniss, C., Goleman, D., Eds.; Jossey-Bass: San Francisco, CA, USA, 2001; pp. 27–44.

- Hegel, F.; Spexard, T.; Wrede, B.; Horstmann, G.; Vogt, T. Playing a different imitation game: Interaction with an Empathic Android Robot. In Proceedings of the 2006 6th IEEE-RAS International Conference on Humanoid Robots, Genova, Italy, 4–6 December 2006; pp. 56–61.

- Moualla, A.; Boucenna, S.; Karaouzene, A.; Vidal, D.; Gaussier, P. Is it useful for a robot to visit a museum? Paladyn J. Behav. Robot. 2018, 9, 374–390.

- El-Nasr, M.S.; Yen, J.; Ioerger, T.R. FLAME - Fuzzy Logic Adaptive Model of Emotions. Auton. Agents Multi-Agent Syst. 2000, 3, 219–257.

- Roseman, I.J.; Antoniou, A.A.; Jose, P.E. Appraisal determinants of emotions: Constructing a more accurate and comprehensive theory. Cogn. Emot. 1996, 10, 241–278.

- Bolles, R.C.; Fanselow, M.S. A perceptual-defensive-recuperative model of fear and pain. Behav. Brain Sci. 1980, 3, 291–301.

- El-Nasr, M.S.; Ioerger, T.; Yen, J. PETEEI: A PET with evolving emotional intelligence. In Proceedings of the Third International Conference on Autonomous Agents, Seattle, WA, USA, 1–5 May 1999.

- Aylett, R.S.; Louchart, S.; Dias, J.; Paiva, A.; Vala, M. Fearnot! - An experiment in emergent narrative. In Intelligent Virtual Agents; Panayiotopoulos, T., Gratch, J., Aylett, R., Ballin, D., Olivier, P., Rist, T., Eds.; Springer: Berlin/Heidelberg, Germany, 2005; pp. 305–316. Available online: https://link.springer.com/chapter/10.1007/11550617_26 (accessed on 27 June 2021).

- Gebhard, P. Alma: A layered model of affect. In Proceedings of the Fourth International Joint Conference on Autonomous Agents and Multiagent Systems, Utrecht, The Netherlands, 25–29 July 2005; ACM: New York, NY, USA, 2005; pp. 29–36.

- Mehrabian, A. Pleasure-arousal-dominance: A general framework for describing and measuring individual differences in temperament. Curr. Psychol. 1996, 14, 261–292.

- Digman, J.M. Personality structure: Emergence of the five-factor model. Annu. Rev. Psychol. 1990, 41, 417–440.

- Gratch, J.; Marsella, S. A domain-independent framework for modeling emotion. Cogn. Syst. Res. 2004, 5, 269–306.

- Smith, C.A.; Lazarus, R.S. Emotion and adaptation. In Theory and Research, Handbook of Personality; Guilford: New York, NY, USA, 1990; pp. 609–637.

- Yoo, S.; Jeong, O. EP-Bot: Empathetic Chatbot Using Auto-Growing Knowledge Graph. Comput. Mater. Contin. 2021, 67, 2807–2817.

- Morris, C. The Use of Self-Service Technologies in Stress Management: A Pilot Project. Master’s Thesis, University of St. Thomas, St. Thomas, MO, USA, 2012.

- Laranjo, L.; Dunn, A.; Tong, H.L.; Kocaballi, A.B.; Chen, J.; Bashir, R.; Surian, D.; Gallego, B.; Magrabi, F.; Lau, A.Y.; et al. Conversational agents in healthcare: A systematic review. J. Am. Med. Inform. Assoc. 2018, 25, 1248–1258.

- Hoermann, S.; McCabe, K.L.; Milne, D.N.; Calvo, R.A. Application of Synchronous Text-Based Dialogue Systems in Mental Health Interventions: Systematic Review. J. Med. Internet Res. 2017, 19, e267.

- Moher, D.; Liberati, A.; Tetzlaff, J.; Altman, D.G. The PRISMA Group Preferred Reporting Items for Systematic Reviews and Meta-Analyses: The PRISMA Statement. PLoS Med. 2009, 6, e1000097.

- Xiao, W.; Li, M.; Chen, M.; Barnawi, A. Deep interaction: Wearable robot-assisted emotion communication for enhancing perception and expression ability of children with Autism Spectrum Disorders. Future Gener. Comput. Syst. 2020, 108, 709–716.

- Chen, M.; Zhou, P.; Fortino, G. Emotion Communication System. IEEE Access 2016, 5, 326–337.

- Hirokawa, M.; Funahashi, A.; Itoh, Y.; Suzuki, K. Design of affective robot-assisted activity for children with autism spectrum disorders. In Proceedings of the 23rd IEEE International Symposium on Robot and Human Interactive Communication, Edinburgh, UK, 25–29 August 2014; pp. 365–370.

- Shi, X.-F.; Wang, Z.-L.; Ping, A.; Zhang, L.-K. Artificial emotion model based on reinforcement learning mechanism of neural network. J. China Univ. Posts Telecommun. 2011, 18, 105–109.

- Kozima, H.; Nakagawa, C.; Yano, H. Can a robot empathize with people? Artif. Life Robot. 2004, 8, 83–88.

- Vircikova, M.; Magyar, G.; Sincak, P. The Affective Loop: A Tool for Autonomous and Adaptive Emotional Human-Robot Interaction. In Robot Intelligence Technology and Applications 3. Advances in Intelligent Systems and Computing; Kim, J.H., Yang, W., Jo, J., Sincak, P., Myung, H., Eds.; Springer: Cham, Switzerland, 2015; Volume 345.

- Picard, R.W. Affective Computing. In Media Laboratory Perceptual Computing Section Technical Report No. 321; MIT Media Lab: Cambridge, MA, USA, 1995.

- Bagheri, E.; Esteban, P.G.; Cao, H.-L.; De Beir, A.; Lefeber, D.; Vanderborght, B. An Autonomous Cognitive Empathy Model Responsive to Users’ Facial Emotion Expressions. ACM Trans. Interact. Intell. Syst. 2020, 10, 1–23.

- Cominelli, L.; Mazzei, D.; De Rossi, D.E. Social Emotional Artificial Intelligence Based on Damasio’s Theory of Mind. Front. Robot. AI 2018, 5, 6.

- Tielman, M.; Neerincx, M.; Meyer, J.-J.; Looije, R. Adaptive emotional expression in robot-child interaction. In Proceedings of the 2014 9th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Bielefeld, Germany, 3–6 March 2014; pp. 407–414.

- Moshkina, L.; Park, S.; Arkin, R.C.; Lee, J.K.; Jung, H. TAME: Time-Varying Affective Response for Humanoid Robots. Int. J. Soc. Robot. 2011, 3, 207–221.

- Leite, I.; Castellano, G.; Pereira, A.; Martinho, C.; Paiva, A. Empathic Robots for Long-term Interaction. Int. J. Soc. Robot. 2014, 6, 329–341.

- Cañamero, L. Modeling motivations and emotions as a basis for intelligent behavior. In Proceedings of the 1st International Conference on Autonomous Agents (AGENTS 97), Marina del Rey, CA, USA, 5–8 February 1997; pp. 148–155.

- Gadanho, S. Reinforcement learning in autonomous robots: An empirical investigation of the role of emotions. In Emotions in Human and Artifacts; MIT Press: Cambridge, MA, USA, 2002.

- Murphy, R.R.; Lisetti, C.L.; Tardif, R.; Irish, L.; Gage, A. Emotion-based control of cooperating heterogeneous mobile robots. IEEE Trans. Robot. Autom. 2002, 18, 744–757.

- Ogata, T.; Sugano, S. Emotional Communication Robot: WAMOEBA-2R Emotion Model and Evaluation Experiments. In Proceedings of the International Conference on Humanoid Robots, Boston, MA, USA, 7–8 September 2000.

- Velásquez, J. Modeling emotions and other motivations in synthetic agents. In Proceedings of the Fourteenth National Conference on Artificial Intelligence and Ninth Conference on Innovative Applications of Artificial Intelligence (AAAI’97/IAAI’97), Providence, RI, USA, 27–31 July 1997; pp. 10–15.

- Izard, C.E. Four Systems for Emotion Activation: Cognitive and Noncognitive Processes. Psychol. Rev. 1993, 100, 68–90.

- Goerke, N. EMOBOT: A Robot Control Architecture Based on Emotion-Like Internal Values, Mobile Robotics, Moving Intelligence; Jonas Buchli; IntechOpen: Berlin, Germany, 2006; Available online: https://www.intechopen.com/books/mobile_robotics_moving_intelligence/emobot_a_robot_control_architecture_based_on_emotion-like_internal_values (accessed on 27 June 2021).

- Thomaz, A.; Berlin, M.; Breazeal, C. An embodied computational model of social referencing. In Proceedings of the ROMAN 2005, IEEE International Workshop on Robot and Human Interactive Communication, Nashville, TN, USA, 13–15 August 2005; pp. 591–598.

- Minsky, M. The Society of Mind; Simon & Schuster: New York, NY, USA, 1986.

- Cominelli, L.; Hoegen, G.; De Rossi, D. Abel: Integrating Humanoid Body, Emotions, and Time Perception to Investigate Social Interaction and Human Cognition. Appl. Sci. 2021, 11, 1070.

- Samani, H.A.; Saadatian, E. A Multidisciplinary Artificial Intelligence Model of an Affective Robot. Int. J. Adv. Robot. Syst. 2012, 9, 6.

- Truschzinski, M.; Müller, N. An Emotional Model for Social Robots. In Proceedings of the 9th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Bielefeld, Germany, 3–6 March 2014; pp. 304–305.

- Bagheri, E.; Roesler, O.; Cao, H.-L.; Vanderborght, B. A Reinforcement Learning Based Cognitive Empathy Framework for Social Robots. Int. J. Soc. Robot. 2020, 2020, 1–15.

- Bagheri, E.; Roesler, O.; Vanderborght, B. Toward a Reinforcement Learning Based Framework for Learning Cognitive Empathy in Human-Robot Interactions. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems, Las Vegas, NV, USA, 25–29 October 2020.

- Huang, H.; Li, J.; Hu, M.; Tao, Y.; Kou, L. Cognitive Emotional Interaction Model of Robot Based on Reinforcement Learning. J. Electron. Inf. Technol. 2021, 43, 1781–1788.

- Davis, M.H. Empathy. In Handbook of the Sociology of Emotions; Springer: New York, NY, USA, 2006; pp. 443–466.

- Johal, W.; Pellier, D.; Adam, C.; Fiorino, H.; Pesty, S. A Cognitive and Affective Architecture for Social Human-Robot Interaction. In Proceedings of the Tenth Annual ACM/IEEE International Conference on Human-Robot Interaction Extended Abstracts (HRI’15 Extended Abstracts); Association for Computing Machinery: New York, NY, USA, 2015; pp. 71–72.

- Rao, A.S.; Georgeff, M.P. BDI-agents: From theory to practice. In Proceedings of the First International Conference on Multiagent Systems, San Francisco, CA, USA, 12–14 June 1995.

- Bratman, M.E. Intention, Plans, and Practical Reason; Cambridge University Press: Cambridge, MA, USA, 1987. [Google Scholar]

- Davis, D.; Gwatkin, J. robo-CAMAL: A BDI Motivational Robot. Paladyn J. Behav. Robot. 2010, 1, 116–129. [Google Scholar] [CrossRef]

- Hirth, J.; Berns, K. Emotion-based Architecture for Social Interactive Robots; Robots, H., Choi, B., Eds.; InTech: Kaiserlautern, Germany, 2009; ISBN 978-953-7619-44-2. Available online: http://www.intechopen.com/books/humanoid_robots/emotion-based_architecture_for_social_interactive_robots (accessed on 23 June 2021).

- Hobmair, H.; Altenhan, S.; Betcher-Ott, S.; Dirrigl, W.; Gotthardt, W.; Ott, W. Psychologie; Bildungsverlag EINS: Troisdorf, Germany, 2003. [Google Scholar]

- Proetzsch, M.; Luksch, T.; Berns, K. The Behaviour-Based Control Architecture iB2C for Complex Robotic Systems. In Proceedings of the German Conference on Artificial Intelligence (KI), Osnabrück, Germany, 10–13 September 2007; pp. 494–497. [Google Scholar]

- Saint-Aime, S.; Le Pévédic, B.; Duhaut, D. Children recognize emotions of EmI companion robot. In Proceedings of the 2011 IEEE International Conference on Robotics and Biomimetics, Karon Beach, Thailand, 7–11 December 2011; pp. 1153–1158. [Google Scholar] [CrossRef]

- Dang, T.H.H.; Letellier-Zarshenas, S.; Duhaut, D. GRACE—Generic Robotic Architecture to Create Emotions. In Proceedings of the 11th International Conference on Climbing and Walking Robots and the Support Technologies for Mobile Machines—CLAWAR 2008, Coimbra, Portugal, 8–10 September 2008. [Google Scholar]

- Scherer, K.R. Appraisal theory. In Handbook of Cognition and Emotion; Dalgleish, T., Power, M.J., Eds.; John Wiley & Sons Ltd.: Hoboken, NJ, USA, 1999. [Google Scholar]

- Isabel, B.-M.; Mary, M. Manual: A Guide to the Development and Use of the Myers-Briggs Type Indicator; Consulting Psychologists Press: Mountain View, CA, USA, 1985. [Google Scholar]

- Chen, J.; She, Y.; Zheng, M.; Shu, Y.; Wang, Y.; Xu, Y. A multimodal affective computing approach for children companion robots. In Proceedings of the Seventh International Symposium of Chinese CHI (Chinese CHI 19), Xiamen, China, 27–30 June 2019; Association for Computing Machinery: New York, NY, USA, 2019; pp. 57–64. [Google Scholar] [CrossRef]

- Brackett, M.A.; Bailey, C.; Hoffmann, J.D.; Simmons, D.N. RULER: A Theory-Driven, Systemic Approach to Social, Emotional, and Academic Learning. Educ. Psychol. 2019, 54, 144–161. [Google Scholar] [CrossRef]

- Hoffman, M.L. Toward a comprehensive empathy-based theory of prosocial moral development. In Constructive & Destructive Behavior: Implications for Family, School, & Society; Bohart, A.C., Stipek, D.J., Eds.; American Psychological Association: Worcester, MA, USA, 2003; pp. 61–86. [Google Scholar] [CrossRef]

- Cutrona, C.; Suhr, J.; MacFarlane, R. Interpersonal transactions and the psychological sense of support. In Personal Relationships and Social Support; Sage Publications: London, UK, 1990; pp. 30–45. [Google Scholar]

- Tanevska, A.; Rea, F.; Sandini, G.; Sciutti, A. Towards an Affective Cognitive Architecture for Human-Robot Interaction for the iCub Robot. In Proceeding of the 1st Workshop on Behavior, Emotion and Representation: Building Blocks of Interaction, Bielefeld, Germany, 17 October 2017. [Google Scholar]

- Ghiglino, D.; De Tommaso, D.; Maggiali, M.; Parmiggiani, A.; Wykowska, A. Setup Prototype for Safe Inte Action between a Humanoid Robot (iCub) and Children with Autism-Spectrum Condition. 2020. Available online: https://osf.io/vk5cm/ (accessed on 23 June 2021).

- Jain, S.; Asawa, K. EMIA: Emotion Model for Intelligent Agent. J. Intell. Syst. 2015, 24, 449–465. [Google Scholar] [CrossRef]

- Pérez, J.; Cerezo, E.; Serón, F.J.; Rodriguez, L.-F. A cognitive-affective architecture for ECAs. Biol. Inspired Cogn. Arch. 2016, 18, 33–40. [Google Scholar] [CrossRef]

- Laird, J.E. Extending the soar cognitive architecture. In Frontiers in Artificial Intelligence and Applications; Wang, P., Goertzel, B., Franklin, S., Eds.; IOS Press: Amsterdam, The Netherlands, 2008; Volume 171, pp. 224–235. Available online: http://dblp.uni-trier.de/db/conf/agi/agi2008.html#Laird08 (accessed on 23 June 2021).

- Ribeiro, T.; Paiva, A. Animating the adelino robot with ERIK: The expressive robotics inverse kinematics. In Proceedings of the 19th ACM International Conference on Multimodal Interaction (ICMI 17), Glasgow, UK, 13–17 November 2017; Association for Computing Machinery: New York, NY, USA, 2017; pp. 388–396. [Google Scholar] [CrossRef]

- Löffler, D.; Schmidt, N.; Tscharn, R. Multimodal Expression of Artificial Emotion in Social Robots Using Color, Motion and Sound. In Proceedings of the 2018 ACM/IEEE International Conference on Human-Robot Interaction (HRI 18), Chicago, IL, USA, 5–8 March 2018; Association for Computing Machinery: New York, NY, USA, 2018; pp. 334–343. [Google Scholar] [CrossRef]

- Kövecses, Z. Metaphor and Emotionâăŕ: Language, Culture, and the Body in Human Feeling; Cambridge University Press: Cambridge, UK, 2003. [Google Scholar]

- Hurst, N.; Clabaugh, C.; Baynes, R.; Cohn, J.; Mitroff, D.; Scherer, S. Social and Emotional Skills Training with Embodied Moxie. arXiv 2020, arXiv:2004.12962. [Google Scholar]

- Hong, A.; Lunscher, N.; Hu, T.; Tsuboi, Y.; Zhang, X.; Alves, S.F.D.R.; Nejat, G.; Benhabib, B. A Multimodal Emotional Human-Robot Interaction Architecture for Social Robots Engaged in Bidirectional Communication. IEEE Trans. Cybern. 2020, 2020, 1–15. [Google Scholar] [CrossRef]

- Aly, A.; Tapus, A. On Designing Expressive Robot Behavior: The Effect of Affective Cues on Interaction. SN Comput. Sci. 2020, 1, 1–17. [Google Scholar] [CrossRef]

- Ekman, P.; Friesen, W.V. Facial Action Coding System: A Technique for the Measurement of Facial Movement; Consulting Psychologists Press: Mountain View, CA, USA, 1987. [Google Scholar]

- Qingji, G.; Kai, W.; Haijuan, L. A Robot Emotion Generation Mechanism Based on PAD Emotion Space. In Proceedings of the International Conference on Intelligent Information Processing, Beijing, China, 19–22 October 2008; Springer: Boston, MA, USA, 2008; pp. 138–147. [Google Scholar] [CrossRef]

- Liu, S.; Insua, D.R. An affective decision-making model with applications to social robotics. Eur. J. Decis. Process. 2019, 8, 13–39. [Google Scholar] [CrossRef]

- Scherer, K.R. Emotions are emergent processes: They require a dynamic computational architecture. Philos. Trans. R. Soc. B Biol. Sci. 2009, 364, 3459–3474. [Google Scholar] [CrossRef] [PubMed]

- Baron-Cohen, S.; Golan, O.; Ashwin, E. Can emotion recognition be taught to children with autism spectrum conditions? Philos. Trans. R. Soc. B Biol. Sci. 2009, 364, 3567–3574. [Google Scholar] [CrossRef] [PubMed]

- Mazzei, D.; Billeci, L.; Armato, A.; Lazzeri, N.; Cisternino, A.; Pioggia, G.; Igliozzi, R.; Muratori, F.; Ahluwalia, A.; De Rossi, D. The FACE of autism. In Proceedings of the 19th International Symposium in Robot and Human Interactive Communication, Viareggio, Italy, 12–15 September 2010; pp. 791–796. [Google Scholar] [CrossRef]

- Oliveira, P.A.; Sequeira, P.; Melo, F.S.; Castellano, G.; Paiva, A. Empathic Robot for Group Learning. ACM Trans. Hum. Robot Interact. 2019, 8, 1–34. [Google Scholar] [CrossRef]

- Yu, J.; Xie, L.; Wang, Z.; Xia, Y. An Affective Model Applied in Playmate Robot for Children. In Advances in Neural Networks—ISNN 2008; Lecture Notes in Computer Science; Sun, F., Zhang, J., Tan, Y., Cao, J., Yu, W., Eds.; Springer: Berlin/Heidelberg, Germany, 2008; Volume 5264. [Google Scholar] [CrossRef]

- Feinman, S.; Roberts, D.; Hsieh, K.F.; Sawyer, D.; Swanson, K. A critical review of social referencing in infancy. In Social Referencing and the Social Construction of Reality in Infancy; Feinman, S., Ed.; Plenum Press: New York, NY, USA, 1992. [Google Scholar]

- Davies, M.; Stone, T. Introduction. In Folk Psychology: The Theory of Mind Debate; Davies, M., Stone, T., Eds.; Cambridge: Blackwell, UK, 1995. [Google Scholar]

- Young, J.E.; Hawkins, R.; Sharlin, E. Igarashi, T. Toward Acceptable Domestic Robots: Applying Insights from Social Psychology. Int. J. Soc. Robot. 2008, 1, 95–108. [Google Scholar] [CrossRef]

- Meltzoff, A. The human infant as imitative generalist: A 20-year progress report on infant imitation with implications for comparative psychology. In Social Learning in Animals: The Roots of Culture; Heyes, B.G.C.M., Ed.; Academic Press: San Diego, CA, USA, 1996. [Google Scholar]

- Charman, T. Why is joint attention a pivotal skill in autism? Philos. Trans. R. Soc. B Biol. Sci. 2003, 358, 315–324. [Google Scholar] [CrossRef]

- Cibralic, S.; Kohlhoff, J.; Wallace, N.; McMahon, C.; Eapen, V. A systematic review of emotion regulation in children with Autism Spectrum Disorder. Res. Autism Spectr. Disord. 2019, 68, 101422. [Google Scholar] [CrossRef]

- Mayadunne, M.M.M.S.; Manawadu, U.A.; Abeyratne, K.R.; De Silva, P.R.S. A Robotic Companion for Children Diagnosed with Autism Spectrum Disorder. In Proceedings of the 2020 International Conference on Image Processing and Robotics (ICIP), Abu Dhabi, United Arab Emirates, 25–28 September 2020; pp. 1–6. [Google Scholar] [CrossRef]

- Cañamero, L. Embodied Robot Models for Interdisciplinary Emotion Research. IEEE Trans. Affect. Comput. 2019, 12, 340–351. [Google Scholar] [CrossRef]

- Boucenna, S.; Gaussier, P.; Hafemeister, L. Development of First Social Referencing Skills: Emotional Interaction as a Way to Regulate Robot Behavior. IEEE Trans. Auton. Ment. Dev. 2013, 6, 42–55. [Google Scholar] [CrossRef]

- Suzuki, K.; Camurri, A.; Ferrentino, P.; Hashimoto, S. Intelligent agent system for human-robot interaction through artificial emotion. In Proceedings of the SMC’98 Conference, 1998 IEEE International Conference on Systems, Man, and Cybernetics (Cat. No. 98CH36218), San Diego, CA, USA, 14 October 1998; Volume 2, pp. 1055–1060. [Google Scholar] [CrossRef]

- Toda, M. The Urge Theory of Emotion and Cognition; Technical Report (1994); School of Computer and Cognitive Sciences, Chukyo University: Nagoya, Japan, 1994. [Google Scholar]

- Gadanho, S.C.; Hallam, J. Robot Learning Driven by Emotions. Adapt. Behav. 2001, 9, 42–64. [Google Scholar] [CrossRef]

- Yamaguchi, T.; Ando, N. Intelligenrobot system using “model of knowledge, emotion and intention” and “information sharing architecture”. In Proceedings of the IECON’01, 27th Annual Conference of the IEEE Industrial Electronics Society (Cat. No. 37243), Danver, CO, USA, 29 November–2 December 2001. [Google Scholar] [CrossRef]

- Diehl, J.J.; Schmitt, L.M.; Villano, M.; Crowell, C.R. The clinical use of robots for individuals with Autism Spectrum Disorders: A critical review. Res. Autism Spectr. Disord. 2012, 6, 249–262. [Google Scholar] [CrossRef]

- Ahn, H.S.; Baek, Y.M.; Na, J.H.; Choi, J.Y. Multi-dimensional emotional engine with personality using intelligent service robot for children. In Proceedings of the 2008 International Conference on Control, Automation and Systems, Seoul, Korea, 2–5 December 2008; pp. 2020–2025. [Google Scholar] [CrossRef]

- Watzlawick, P.; Beavin, J.H.; Jackson, D.D. Menschliche Kommunikation; Bern: Huber, Switzerland, 2000. [Google Scholar]

- Bartsch, K.; Wellman, H. Young children’s attribution of action to beliefs and desires. Child Dev. 1989, 60, 946–964. [Google Scholar] [CrossRef]

- Luo, Q.; Zhao, A.; Zhang, H. A Layered Model of Artificial Emotion Merging with Attitude. In Foundations of Intelligent Systems. Advances in Intelligent and Soft Computing; Wang, Y., Li, T., Eds.; Springer: Berlin/Heidelberg, Germany, 2011; Volume 122. [Google Scholar] [CrossRef]

- Hasson, C.; Gaussier, P.; Boucenna, S. Emotions as a dynamical system: The interplay between the meta-control and communication function of emotions. Paladyn J. Behav. Robot. 2011, 2, 111–125. [Google Scholar] [CrossRef]

- Hoffmann, C.; Vidal, M.-E. Creating and Capturing Artificial Emotions in Autonomous Robots and Software Agents. In Proceedings of the International Conference on Web Engineering, Helsinki, Finland, 9–12 June 2020; Springer: Cham, Switzerland, 2020; pp. 277–292. [Google Scholar] [CrossRef]

- Mascarenhas, S.; Guimaraes, M.; Santos, P.A.; Dias, J.; Prada, R.; Paiva, A. FAtiMA Toolkit—Toward an effective and accessible tool for the development of intelligent virtual agents and social robots. arXiv 2021, arXiv:2103.03020. [Google Scholar]

- Breazeal, C. Emotion and sociable humanoid robots. Int. J. Hum. Comput. Stud. 2003, 59, 119–155. [Google Scholar] [CrossRef]

- Dautenhahn, K.; Ogden, B.; Quick, T. From embodied to socially embedded agents—Implications for interaction-aware robots. Cogn. Syst. Res. 2002, 3, 397–428. [Google Scholar] [CrossRef]

- Robert, L.; Alahmad, R.; Esterwood, C.; Kim, S.; You, S.; Zhang, Q. A Review of Personality in Human Robot Interactions. 2020. Available online: https://ssrn.com/abstract=3528496 (accessed on 23 June 2021).

- Alnajjar, F.; Cappuccio, M.; Renawi, A.; Mubin, O.; Loo, C.K. Personalized Robot Interventions for Autistic Children: An Automated Methodology for Attention Assessment. Int. J. Soc. Robot. 2020, 13, 67–82. [Google Scholar] [CrossRef]

- Drimalla, H.; Baskow, I.; Behnia, B.; Roepke, S.; Dziobek, I. Imitation and recognition of facial emotions in autism: A computer vision approach. Mol. Autism 2021, 12, 1–15. [Google Scholar] [CrossRef]

- Robins, B.; Dautenhahn, K.; Dubowski, J. Does appearance matter in the interaction of children with autism with a humanoid robot? Interact. Stud. 2006, 7, 479–512. [Google Scholar] [CrossRef]

- Rodrigues, S.; Mascarenhas, S.; Dias, J.; Paiva, A. “I can feel it too!”: Emergent empathic reactions between synthetic characters. In Proceedings of the International Conference on Affective Computing & Intelligent Interaction (ACII), Amsterdam, The Netherlands, 10–12 September 2009. [Google Scholar]

- Toyohashi University of Technology. Humans can empathize with robots: Neurophysiological evidence for human empathy toward robots in perceived pain. ScienceDaily, 3 November 2015. [Google Scholar]

- Duquette, A.; Michaud, F.; Mercier, H. Exploring the use of a mobile robot as an imitation agent with children with low-functioning autism. Auton. Robot. 2007, 24, 147–157. [Google Scholar] [CrossRef]

- Boucenna, S.; Narzisi, A.; Tilmont, E.; Muratori, F.; Pioggia, G.; Cohen, D.; Chetouani, M. Interactive Technologies for Autistic Children: A Review. Cogn. Comput. 2014, 6, 722–740. [Google Scholar] [CrossRef]

- Cavallo, F.; Semeraro, F.; Fiorini, L.; Magyar, G.; Sinčák, P.; Dario, P. Emotion Modelling for Social Robotics Applications: A Review. J. Bionic Eng. 2018, 15, 185–203. [Google Scholar] [CrossRef]

- Hashimoto, S.; Narita, S.; Kasahara, H.; Shirai, K.; Kobayashi, T.; Takanishi, A.; Sugano, S.; Yamaguchi, J.; Sawada, H.; Takanobu, H.; et al. Humanoid Robots in Waseda University—Hadaly-2 and WABIAN. Auton. Robot. 2002, 12, 25–38. [Google Scholar] [CrossRef]

- Salmeron, J.L. Fuzzy cognitive maps for artificial emotions forecasting. Appl. Soft Comput. 2012, 12, 3704–3710. [Google Scholar] [CrossRef]

| Research Keywords |
|---|
| “intelligent” AND “emotions” AND “robots” AND “children” |
| “intelligent” AND “emotions” AND “robots” AND “autism” |
| “empathy” AND “robot” AND “children” |
| “empathy” AND “robot” AND “autism” |
| “emotional model computational” AND “robots” AND “children” |
| “affective” AND “architecture” AND “robots” AND “children” |

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Cano, S.; González, C.S.; Gil-Iranzo, R.M.; Albiol-Pérez, S. Affective Communication for Socially Assistive Robots (SARs) for Children with Autism Spectrum Disorder: A Systematic Review. Sensors 2021, 21, 5166. https://doi.org/10.3390/s21155166
