Abstract

It has been proven that Logarithmic Image Processing (LIP) models provide a suitable framework for visualizing and enhancing digital images acquired from various sources. The most visible (although simplified) result of using such a model is that LIP allows graylevel addition, subtraction and multiplication by scalars to be computed within a fixed graylevel range, without clipping. We claim that a generalized LIP framework (i.e., a parameterized family of LIP models) can be constructed on the basis of the fuzzy modelling of graylevel addition as an accumulation process described by the Hamacher conorm. All the existing LIP and LIP-like models are obtained as particular cases of the proposed framework, within the range corresponding to real-world digital images.

1. Introduction

Logarithmic Image Processing (LIP) models provide a suitable framework for visualizing and enhancing digital images acquired from various sources and obtained by transmitted/reflected light through absorbing/reflecting media, where the effects are naturally multiplicative. Such an approach was pioneered by the work of Stockham [1], who proposed an enhancement method based on the homomorphic theory introduced by Oppenheim [2,3]. The key to this approach is a homomorphism that transforms products into sums (by means of the logarithm), thus allowing classical linear filtering to be used in the presence of multiplicative components.

The initial motivation for introducing such models was the need to deal with multiplicative phenomena (as in the case of X-ray images, where the image values represent the transparency/opacity of the real objects). It was later proven that LIP models have a precise mathematical structure and are, hence, suitable for various image processing applications, not necessarily of a multiplicative nature. The most widely used logarithmic representation, derived from the multiplicative properties of light transmission and of human visual perception, was developed by Jourlin and Pinoli [4,5] in a well elaborated and rigorous mathematical form. The model was later extended to color images [6].

The practical importance of LIP models also comes from another perspective. As one may easily notice, the image functions currently in use are bounded (they take values in a bounded interval). During image processing, the mathematical operations defined on real-valued functions, which implicitly use the algebra of real numbers (i.e., of the whole real axis), may produce results that no longer belong to the given value interval, which contains the only values with physical meaning. Such an approach was discussed, for instance, in [7] for X-ray image enhancement and in [8] for the creation of high dynamic range images by bracketing. The most recent and comprehensive review of the use of LIP in various image processing and computer vision applications is presented in [9].

In this paper, we show that a generalized LIP framework (i.e., a parameterized family of LIP models) can be constructed based on the fuzzy modelling of graylevel addition as an accumulation process described by fuzzy conorms. All the existing LIP and LIP-like models are obtained as particular cases of the proposed framework when the Hamacher conorm is used, within the range corresponding to real-world digital images. Thus, the paper makes the following claims: it introduces (i) a new, fuzzy logic-based model for generating a parametric set of logarithmic-type image processing models that (ii) generalizes the main existing LIP models and (iii) can provide, for some fundamental image processing operations, marginally better performance than the classical LIP.

The remainder of the paper is organized as follows: Section 2 describes the existing LIP models; Section 3 presents the background of the proposed fuzzy logic aggregation approach to graylevel addition and the subsequent construction of the resulting image processing model, which is proven to generalize the existing LIP models; and Section 4 suggests some applications of the proposed parametric LIP model family. The paper ends with conclusions and suggestions for further research.

2. Logarithmic Image Processing Models

In this section, we briefly present the key points of the existing logarithmic image processing models, namely the classical model introduced by Jourlin and Pinoli [5,10] and the model introduced by Pătraşcu [11], together with the pseudo-LIP model introduced in [12] and two parametric LIP models. This presentation employs the original notation of the operators, as proposed by their respective authors.

2.1. The Classical LIP Model

The classical LIP model, introduced in [5,10], starts by modelling the scalar intensity image by a gray tone function with values in a bounded domain. The basic arithmetic operations (addition and subtraction) between two gray tone functions (i.e., images) and the multiplication of a gray tone function by a real-valued scalar are defined as pixel-level operations, in terms of classical real-number operations. Thus, image addition, subtraction and scalar multiplication are defined by operations on the pixel (scalar) values, generally denoted as v.

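The addition of gray tones (pixel values) $v_1$ and $v_2$, denoted by the operator $\mathbin{\triangle\!\!\!\!\!+}$, is defined as:

$$v_1 \mathbin{\triangle\!\!\!\!\!+} v_2 = v_1 + v_2 - \frac{v_1 v_2}{D}, \tag{1}$$

where $D$ is the upper bound of the gray tone range.

The subtraction of (or difference between) gray tones (pixel values) $v_1$ and $v_2$, denoted by the operator $\mathbin{\triangle\!\!\!\!\!-}$, is defined as:

$$v_1 \mathbin{\triangle\!\!\!\!\!-} v_2 = D\,\frac{v_1 - v_2}{D - v_2}. \tag{2}$$

The multiplication of a gray tone (pixel value) $v$ by a real scalar $\lambda$, denoted by the operator $\mathbin{\triangle\!\!\!\!\!\times}$, is defined as:

$$\lambda \mathbin{\triangle\!\!\!\!\!\times} v = D - D\left(1 - \frac{v}{D}\right)^{\lambda}. \tag{3}$$

The key mathematical foundation for implementing the LIP operations defined above via classical real-number operations is the isomorphism between the vector space of gray tone functions and the vector space of real numbers, $\mathbb{R}$. The isomorphism is realized through the function $T$, defined as:

$$T(v) = -D \ln\left(1 - \frac{v}{D}\right). \tag{4}$$
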
The particular nature of this isomorphism induces the logarithmic character of the mathematical model. In the following, we will consider only the nonnegative part of the functional domain of transform T above, since this is the only part in which gray tones correspond to real-world digital images (that is, images with bounded, positive values), similarly to the observation in [13].
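To make the classical operations concrete, the following Python sketch implements the classical LIP addition, subtraction, scalar multiplication and isomorphism (Equations (1) to (4)) on scalar gray tones, and checks that each operation is simply the real-axis operation transported by T. The upper bound D = 256 is an assumed value chosen for illustration, and the function names are hypothetical.

```python
import math

# Classical (Jourlin-Pinoli) LIP operations on scalar gray tones v in [0, D).
# D = 256 is an assumed upper bound used only for illustration.
D = 256.0

def lip_add(v1, v2):
    """Classical LIP addition: v1 + v2 - v1*v2/D."""
    return v1 + v2 - v1 * v2 / D

def lip_sub(v1, v2):
    """Classical LIP subtraction: D*(v1 - v2)/(D - v2)."""
    return D * (v1 - v2) / (D - v2)

def lip_mul(lam, v):
    """Classical LIP scalar multiplication: D - D*(1 - v/D)**lam."""
    return D - D * (1.0 - v / D) ** lam

def T(v):
    """Isomorphism onto the real axis: -D*ln(1 - v/D)."""
    return -D * math.log(1.0 - v / D)

def T_inv(y):
    """Inverse isomorphism: D*(1 - exp(-y/D))."""
    return D * (1.0 - math.exp(-y / D))

if __name__ == "__main__":
    a, b = 200.0, 180.0
    s = lip_add(a, b)
    print(f"real sum = {a + b}, LIP sum = {s:.3f}")  # real sum = 380.0, LIP sum = 239.375
    # Each LIP operation equals the real-axis operation carried through T:
    print(math.isclose(s, T_inv(T(a) + T(b))))
    print(math.isclose(lip_sub(a, b), T_inv(T(a) - T(b))))
    print(math.isclose(lip_mul(2.5, a), T_inv(2.5 * T(a))))
```

The real sum of the two gray tones leaves the admissible range, whereas the LIP sum stays below D without any clipping.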

Recently, logarithmic adaptive neighborhood image processing (LANIP) was proposed [14]; this approach is based on LIP and on general adaptive neighborhood image processing (GANIP) [15,16]. The logarithmic part of the model uses LIP operations instead of the real-axis arithmetic operations. The authors report an impressive collection of applications arising from the intensity and spatial properties of human brightness perception, which are mathematically modelled and implemented through the combination of logarithmic arithmetic and adaptive selection of the spatial support of the operations.

2.2. The Homomorphic LIP Model

The logarithmic model introduced by Pătraşcu (presented, for instance, in [11]) works with bounded, symmetric real sets: the gray-tone values of the involved images are linearly transformed into a standard symmetric interval by:

where and .

This interval plays the central role in the model: it is endowed with the structure of a linear (moreover, Euclidean) space over the scalar field of real numbers, $\mathbb{R}$. In this space, the addition of two graylevels $v_1$ and $v_2$ is defined as:

The multiplication of a graylevel $v$ by a real scalar $\lambda$ is:

The difference between two graylevels $v_1$ and $v_2$ is given by:

The vector space of graylevels is isomorphic to the space of real numbers via the function defined as:

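A short Python sketch of this homomorphic model follows. It assumes that the standard set is the open interval (−1, 1) and that the isomorphism is the inverse hyperbolic tangent, φ(v) = ½ ln((1 + v)/(1 − v)); under these assumptions the addition, scalar multiplication and difference take the closed forms below. A different positive scale factor in front of the logarithm would induce exactly the same operations. The function names are hypothetical.

```python
import math

# Homomorphic (Patrascu-type) operations on the open interval (-1, 1), ASSUMING the
# isomorphism phi(v) = atanh(v) = 0.5*ln((1 + v)/(1 - v)).

def h_add(v1, v2):
    """Addition: (v1 + v2)/(1 + v1*v2)."""
    return (v1 + v2) / (1.0 + v1 * v2)

def h_sub(v1, v2):
    """Difference: (v1 - v2)/(1 - v1*v2)."""
    return (v1 - v2) / (1.0 - v1 * v2)

def h_mul(lam, v):
    """Scalar multiplication: ((1+v)^lam - (1-v)^lam)/((1+v)^lam + (1-v)^lam)."""
    a, b = (1.0 + v) ** lam, (1.0 - v) ** lam
    return (a - b) / (a + b)

def phi(v):
    return math.atanh(v)

def phi_inv(y):
    return math.tanh(y)

# Each operation is the real-axis operation transported by phi.
v1, v2, lam = 0.8, 0.7, 1.7
assert math.isclose(h_add(v1, v2), phi_inv(phi(v1) + phi(v2)))
assert math.isclose(h_sub(v1, v2), phi_inv(phi(v1) - phi(v2)))
assert math.isclose(h_mul(lam, v1), phi_inv(lam * phi(v1)))
print(h_add(v1, v2))  # stays inside (-1, 1) although v1 + v2 = 1.5
```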
2.3. The Pseudo-LIP Model

Another LIP-like model was introduced in [12] under the name pseudo-LIP. The processed graylevels are considered within a bounded range, which is isomorphic to a real-valued space via a transform defined as:

The transform defined above in (10) merely resembles a logarithmic function (we note that the functions used to define the isomorphisms in the classical LIP model (4) and in the homomorphic LIP model (9) are logarithmic functions), thus justifying the pseudo-LIP name.

Under the proposed isomorphism, the addition of graylevels $v_1$ and $v_2$, denoted by the operator ⊕, is defined as:

The subtraction of (or difference between) graylevels $v_1$ and $v_2$ (with $v_1 \ge v_2$), denoted by the operator ⊖, is defined as:

The multiplication of a graylevel $v$ by a real scalar $\lambda$, denoted by the operator ⊗, is defined as:

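Assuming that the pseudo-LIP transform (10) is φ(v) = v/(1 − v) on normalized graylevels v ∈ [0, 1) (a rational function that behaves like −ln(1 − v) near 0), the operators ⊕, ⊖ and ⊗ can be derived by transporting the real-axis operations through φ. The Python sketch below writes out the resulting closed forms and checks them numerically; the function names are hypothetical.

```python
import math

# Pseudo-LIP operations, ASSUMING the transform phi(v) = v / (1 - v) on normalized
# graylevels v in [0, 1). The closed forms come from mapping the operands through phi,
# operating on the real axis, and mapping back with phi_inv.

def phi(v):
    return v / (1.0 - v)

def phi_inv(y):
    return y / (1.0 + y)

def pseudo_add(v1, v2):
    """Assumed pseudo-LIP addition."""
    return (v1 + v2 - 2.0 * v1 * v2) / (1.0 - v1 * v2)

def pseudo_sub(v1, v2):
    """Assumed pseudo-LIP subtraction, intended for v1 >= v2."""
    return (v1 - v2) / (1.0 + v1 * v2 - 2.0 * v2)

def pseudo_mul(lam, v):
    """Assumed pseudo-LIP scalar multiplication."""
    return lam * v / (1.0 + (lam - 1.0) * v)

# The closed forms agree with the transported real operations.
v1, v2, lam = 0.7, 0.4, 2.5
assert math.isclose(pseudo_add(v1, v2), phi_inv(phi(v1) + phi(v2)))
assert math.isclose(pseudo_sub(v1, v2), phi_inv(phi(v1) - phi(v2)))
assert math.isclose(pseudo_mul(lam, v1), phi_inv(lam * phi(v1)))
print(pseudo_add(v1, v2), pseudo_sub(v1, v2), pseudo_mul(lam, v1))
```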
2.4. The Multiparametric LIP

A first parametric LIP (PLIP) model was introduced in [18] by means of a functional modification of the upper range of the values involved in the computations. Thus, instead of normalizing by the upper range value, say D, the model proposed in [18] uses a set of functions (including k) that depend on the upper range of graylevels, D, as described in the equations below.

The difference between two graylevels $v_1$ and $v_2$ is given by:

The authors recommend that the functions (including k) used in (14) and (16) be affine functions, determined experimentally by optimizing the operations for the given problem.

In order to establish a link between the classical LIP and linear arithmetic operations, an extended parametric model, the PLIP model, was further proposed by Panetta et al. [19], using five parameters (including k) that allow for fine tuning of the classical LIP model, giving users greater control over the result. This model can switch between linear arithmetic operations and the classical LIP for various parameter values. The basic PLIP operations are defined as follows:

and, respectively:

The difference between two graylevels $v_1$ and $v_2$ is given by:

It can be shown that the graylevel interval is isomorphic to a real-valued space via the transform T defined as:

One may notice that the proposed multi-parametrization is cumbersome, unrelated to any physical or theoretical setup, and intrinsically subject to unstated constraints.

2.5. The Gigavision-Camera LIP Model

More recently, Deng [20] proposed a generalization of the classical LIP model of Jourlin and Pinoli, resulting from the interpretation of the newly proposed Gigavision sensor model [21]. At first glance, the paper suggests a bridge between two apparently different image models and proves that the classical LIP can be obtained, under particular conditions, from the functions that describe the Gigavision sensor.

In the Gigavision camera, each pixel of the sensor consists of N identical subpixels, each of them receiving the same amount of light, say . The output of the sensor, at each pixel, is the number of subpixels that have received at least a fixed amount T of incoming light, which is

The equation above involves the complete and incomplete Gamma functions. The bridge between the models comes from setting the number of graylevels (the maximal graylevel range) of the LIP equal to the number of subpixels of the Gigavision sensor model. The proposed generalization simply accommodates different numbers of subpixels for the various pixels being processed, under various Gigavision subpixel threshold values. In particular, for T = 1 one obtains the classical LIP isomorphism; for T > 1, the transforms defining the model are not analytical.
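The following Python sketch illustrates the link between the two models. It assumes that each subpixel receives a Poisson-distributed number of photons whose mean equals the incoming light, and that the threshold T is a positive integer; under these assumptions the expected sensor output is N times the regularized lower incomplete Gamma function, computed here as a finite Poisson tail sum. The value N = 256 and the function name are illustrative.

```python
import math

def expected_gigavision_output(light, n_subpixels, threshold):
    """Expected number of subpixels that collect at least `threshold` photons,
    ASSUMING Poisson arrivals with mean `light` per subpixel and an integer
    threshold >= 1. P(X >= T) = 1 - sum_{k<T} exp(-light) light**k / k!,
    i.e. the regularized lower incomplete Gamma function gamma(T, light)/Gamma(T)."""
    tail = sum(math.exp(-light) * light ** k / math.factorial(k) for k in range(threshold))
    return n_subpixels * (1.0 - tail)

N = 256  # assumed number of subpixels, identified with the graylevel range
for light in (0.1, 1.0, 3.0):
    out = expected_gigavision_output(light, N, threshold=1)
    # T = 1 gives N*(1 - exp(-light)): the inverse classical LIP isomorphism
    # D*(1 - exp(-y/D)) with D = N and y = N*light.
    assert math.isclose(out, N * (1.0 - math.exp(-light)))
    print(f"light = {light}: T=1 -> {out:.2f}, "
          f"T=3 -> {expected_gigavision_output(light, N, 3):.2f}")
```

For higher thresholds the response is still monotonic in the incoming light, but its inverse no longer has the closed logarithmic form of the T = 1 case.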

2.6. The Spherical Color Coordinates Model

Within the framework of a very specific application (image compositing), Grundland et al. [22] propose new operations for colors such that the color space becomes an ordered field. The original RGB color components are mapped by a new isomorphism of logarithmic nature with a fuzzy logic background, namely the generator of the Frank T-conorm. The proposed isomorphism is:

The original color components are first scaled into a bounded interval; all the basic mathematical operations (addition, subtraction, scalar multiplication) are defined through the proposed isomorphism and applied to image blending, in conjunction with contrast enhancement, saliency computation and multiscale processing. This application is further discussed in Section 4.4.

3. Fuzzy Aggregation of Graylevels

All image processing algorithms must deal with the imprecision and vagueness that naturally arise in the digital representation of visual information. Noise, quantization, sampling errors and the tolerance of the human visual system are some of the causes of this imprecision. This strongly suggests that fuzzy models could be used to deal with these challenges, as confirmed by the large number of reported fuzzy image processing applications. Image content fuzziness has been thoroughly investigated over the last two decades [23,24,25], and fuzzy image processing applications now range from image enhancement to segmentation and recognition.

Another quality of fuzzy logic, less exploited in the literature, is its ability to model the accumulation of items (such as image graylevels) within fixed bounds. The addition of graylevels can be viewed as the accumulation of the contributions of the individual pixels into a global contribution (the result of the addition). Classically, this accumulation is modelled as a “stockpile” and described by a sum, the real-number algebra operation, which may clearly exceed the interval of values with physical meaning. We propose here to view the above accumulation of pixel values as a fuzzy interaction model that groups together the individual pixel contributions (an idea previously used in the construction of fuzzy histograms in the context of content-based image retrieval [26,27]). In fuzzy theory, such an accumulation process (or aggregation of individual entities) is modelled, at the simplest level, by a fuzzy T-conorm [28].

3.1. Fuzzy T-Conorms

Formally, any fuzzy T-conorm S, applied to the fuzzy values a and b, is defined as the dual of its associated T-norm T [28], such that:

$$S(a, b) = 1 - T(1 - a,\, 1 - b).$$

In a more theoretical manner, a continuous Archimedean T-norm can also be constructed from an additive generator function, that is, a strictly decreasing function that vanishes at 1. The T-norm is then defined as:

It follows that the associated T-conorm S is given, for all $a, b \in [0, 1]$, by:

Over time, several T-conorms have been proposed, such as those introduced by Zadeh, Lukasiewicz and Hamacher [28]. In the remainder of this work, only the Hamacher form, introduced in the late 1970s, is retained. The Hamacher T-conorm [28] is obtained from the following additive generator function, parametrized by p:

One can easily compute the inverse of the Hamacher parametric additive generator function from Equation (26) above, obtaining:

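As an illustration of this construction, the Python sketch below builds the Hamacher T-norm from an additive generator, obtains the T-conorm by duality, and compares it with the well-known closed form S_p(a, b) = (a + b + (p − 2)ab)/(1 + (p − 1)ab). It assumes the standard parametrization of the Hamacher generator, g_p(x) = ln((p + (1 − p)x)/x) for p > 0 and g_0(x) = (1 − x)/x, which may differ in form from Equation (26); the function names are hypothetical.

```python
import math

# Hamacher T-norm / T-conorm from an additive generator, ASSUMING the standard
# parametrization (p > 0: g(x) = ln((p + (1 - p) x) / x); p = 0: g(x) = (1 - x) / x).
# g is strictly decreasing with g(1) = 0 and g(0+) = +inf, so its pseudo-inverse
# coincides with the ordinary inverse on [0, +inf).

def gen(x, p):
    if p == 0:
        return (1.0 - x) / x
    return math.log((p + (1.0 - p) * x) / x)

def gen_inv(y, p):
    if p == 0:
        return 1.0 / (1.0 + y)
    return p / (math.exp(y) - 1.0 + p)

def t_norm(a, b, p):
    """T(a, b) = g^{-1}(g(a) + g(b)), for a, b in (0, 1]."""
    return gen_inv(gen(a, p) + gen(b, p), p)

def t_conorm(a, b, p):
    """Dual T-conorm S(a, b) = 1 - T(1 - a, 1 - b), for a, b in [0, 1)."""
    return 1.0 - t_norm(1.0 - a, 1.0 - b, p)

def hamacher_conorm_closed(a, b, p):
    """Closed-form Hamacher T-conorm."""
    return (a + b + (p - 2.0) * a * b) / (1.0 + (p - 1.0) * a * b)

# Accumulating 0.7 and 0.6: the real sum (1.3) leaves [0, 1), the conorm does not.
for p in (0.0, 1.0, 2.0, 5.0):
    s = t_conorm(0.7, 0.6, p)
    assert math.isclose(s, hamacher_conorm_closed(0.7, 0.6, p)) and s < 1.0
    print(f"p = {p}: S(0.7, 0.6) = {s:.4f}")
```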
3.2. Hamacher T-Conorm Induced Parametric LIP

The original graylevels g of an image can be transformed into fuzzy values by a typicality approach, normalizing all values by the upper end of the graylevel range, D, namely $v = g/D$. The fuzzy value v obtained in this way measures how close (or typical, or representative) the given graylevel g is with respect to white (the brightest graylevel). This type of graylevel fuzzification is the closest to the notion of “gray tone function” introduced in the classical LIP model. Any other fuzzification procedure may be applied to g, but discussing this matter is beyond the scope of the current contribution.

As a result of the above fuzzification, the addition of any two graylevels can be defined as the fuzzy Hamacher T-conorm of their fuzzified values, say $v_1$ and $v_2$. The following expression holds for all $v_1, v_2 \in [0, 1)$:

The operation in (28) is called the generalized addition. Similarly to the way definition (3) is obtained in the classical LIP model, as explained in [10] (by generalizing an inductive approach), the scalar multiplication of a fuzzified graylevel can be defined as:

Replacing the generator in the equations above by the analytical expression of the Hamacher generator (26) yields the analytical form of the generalized parametric graylevel addition and scalar multiplication:

The detailed derivations of Equations (30) and (31) are presented in Appendix A and Appendix B, respectively. One can easily check that the basic properties of addition and of scalar multiplication hold for the new operations defined in (30) and (31).

The graylevel subtraction can be introduced in a similar manner, under the constraint that $v_1 \ge v_2$, by:

If one compares this subtraction with that of the classical LIP model, which can yield both positive and negative results, it is obvious that the proposed FLIP subtraction cannot yield negative results. This is related to a more complex question: what is the nature of the graylevel difference? Is this difference a graylevel, or does it have a different nature (such as edge intensity)? Throughout this paper, the graylevel difference is seen as another graylevel and is thus constrained to nonnegative values (that is, we compute the difference of $v_1$ and $v_2$ only if $v_1 \ge v_2$). If needed for applications that require negative values (such as Canny edge extraction), one can redefine the subtraction for any pair of values as follows:

The basic operations defined in Equations (30)–(32) are linked to the real axis by the generator function (fundamental isomorphism) of the corresponding class of LIP models, given by:

This set of models, parametrized by p, is named Fuzzy Logarithmic Image Processing model set and denoted by FLIP.
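A possible Python implementation of the FLIP operations is sketched below. It assumes the standard Hamacher generator g_p(x) = ln((p + (1 − p)x)/x) for p > 0 and g_0(x) = (1 − x)/x, and takes the fundamental isomorphism as φ_p(v) = g_p(1 − v); the closed forms of the addition, subtraction and scalar multiplication are derived from these assumptions and need not match the exact notation of (30) to (34). The checks confirm that each operation is the corresponding real-axis operation transported by φ_p, and that p = 1 reproduces the classical LIP with the upper bound normalized to 1. The function names are hypothetical.

```python
import math

# FLIP operations on normalized graylevels v in [0, 1), ASSUMING the standard Hamacher
# generator g_p and the fundamental isomorphism phi_p(v) = g_p(1 - v).

def flip_phi(v, p):
    if p == 0:
        return v / (1.0 - v)
    return math.log((1.0 + (p - 1.0) * v) / (1.0 - v))

def flip_phi_inv(y, p):
    if p == 0:
        return y / (1.0 + y)
    e = math.exp(y)
    return (e - 1.0) / (e - 1.0 + p)

def flip_add(v1, v2, p):
    """Hamacher T-conorm of the two fuzzified graylevels."""
    return (v1 + v2 + (p - 2.0) * v1 * v2) / (1.0 + (p - 1.0) * v1 * v2)

def flip_sub(v1, v2, p):
    """Subtraction, defined here only for v1 >= v2 (nonnegative result)."""
    if v1 < v2:
        raise ValueError("FLIP subtraction requires v1 >= v2")
    return (v1 - v2) / (1.0 + (p - 2.0) * v2 - (p - 1.0) * v1 * v2)

def flip_mul(lam, v, p):
    """Scalar multiplication; p = 0 uses the limit form of the general expression."""
    if p == 0:
        return lam * v / (1.0 + (lam - 1.0) * v)
    a = (1.0 + (p - 1.0) * v) ** lam
    b = (1.0 - v) ** lam
    return (a - b) / (a + (p - 1.0) * b)

v1, v2, lam = 0.6, 0.25, 1.8
for p in (0.0, 0.5, 1.0, 2.0, 4.0):
    assert math.isclose(flip_add(v1, v2, p), flip_phi_inv(flip_phi(v1, p) + flip_phi(v2, p), p))
    assert math.isclose(flip_sub(v1, v2, p), flip_phi_inv(flip_phi(v1, p) - flip_phi(v2, p), p))
    assert math.isclose(flip_mul(lam, v1, p), flip_phi_inv(lam * flip_phi(v1, p), p))
# p = 1 recovers the classical LIP addition with the upper bound normalized to 1.
assert math.isclose(flip_add(v1, v2, 1.0), v1 + v2 - v1 * v2)
```

Under these assumptions, p = 0 gives the rational transform v/(1 − v) and p = 2 gives ln((1 + v)/(1 − v)), which is twice the inverse hyperbolic tangent.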

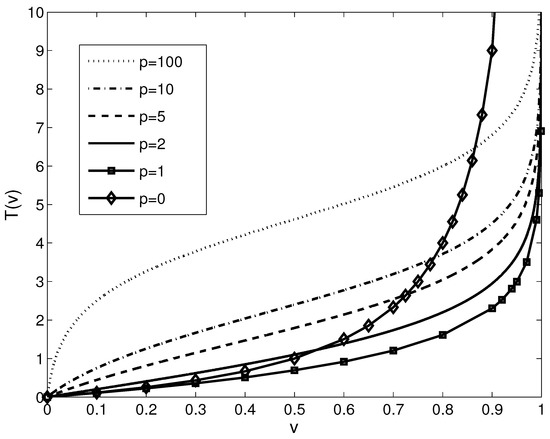

The generator function is bijective and strictly monotonic; hence, it is more than an isomorphism: it is a homeomorphism. Still, in order to keep the same terminology as the various references used for comparison, the function in (34) will be called an isomorphism. The isomorphism in (34) is parametrized by p; specific choices of p are presented in Table 1 and shown graphically in Figure 1.

Table 1.

Particular cases of the proposed FLIP framework.

Figure 1.

Plot of the fundamental isomorphisms for some of the discussed LIP models particularized from the proposed FLIP: pseudo-logarithmic model (continuous line with diamond marks, ), Jourlin and Pinoli’s classical model (4) (lower continuous line with square marks, ), Pătraşcu’s model (9) (continuous line, ), and new LIP models (dashed line, , dash-dotted line, and upper dotted line, ).

The proof that the classical LIP, the homomorphic LIP and the pseudo-logarithmic model are particular cases of the proposed FLIP, with the p parameters chosen according to the values in Table 1, is straightforward. Still, one must take into account that, for the proposed FLIP, the graylevel range is [0, 1), so the maximal allowed graylevel becomes 1; this value must be considered when evaluating the equivalence of (30) and (31) with the classical LIP addition (1) and multiplication (3).

One may notice that the result of the addition of any two graylevels increases with the order p of the FLIP model, while the difference between two graylevels decreases with it.
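Under the standard Hamacher parametrization (an assumption here), the FLIP addition takes the form (v₁ + v₂ + (p − 2)v₁v₂)/(1 + (p − 1)v₁v₂) and the difference, for v₁ ≥ v₂, takes the form (v₁ − v₂)/(1 + (p − 2)v₂ − (p − 1)v₁v₂). The monotonic behavior then follows from two partial derivatives with respect to p:

```latex
\frac{\partial}{\partial p}\left[\frac{v_1 + v_2 + (p-2)\,v_1 v_2}{1 + (p-1)\,v_1 v_2}\right]
  = \frac{v_1 v_2\,(1 - v_1)(1 - v_2)}{\bigl(1 + (p-1)\,v_1 v_2\bigr)^{2}} \;\ge\; 0,
\qquad
\frac{\partial}{\partial p}\Bigl[1 + (p-2)\,v_2 - (p-1)\,v_1 v_2\Bigr] = v_2\,(1 - v_1) \;\ge\; 0 .
```

The first derivative is nonnegative for all $v_1, v_2 \in [0, 1)$, so the sum grows with p. The second shows that the (positive) denominator of the difference grows with p, so the nonnegative difference shrinks.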

4. Applications

Although the main contribution of this paper is theoretical, as it presents a unifying framework that encompasses most LIP models under the proposed FLIP, several applications may be developed on the practical side. A few obvious ones, such as dynamic range enhancement, noise reduction and edge detection based on linear derivative filtering, are briefly discussed in the following subsections.

4.1. Dynamic Range Enhancement

The problem of dynamic range enhancement was addressed within the classical LIP framework (i.e., the corresponding particular case of FLIP) in [5], which proposed an optimal derivation (with analytical proof) of a single scalar multiplication constant for the entire image that maximizes the overall dynamic range. The problem can be expressed as follows: for a given image f, find the real scalar such that the dynamic range obtained by graylevel amplification (multiplication by the scalar) is maximized. As proposed within the classical LIP in [4], the analytical solution for the optimal scalar was:

The multiplication by the scalar is implemented with the generalized FLIP scalar multiplication from (31); the problem can now be formulated as maximizing, for the given image f, the dynamic range defined as:

The FLIP form of the dynamic range is obtained by replacing the scalar multiplication in Equation (36) with its analytical form from (31); after minor algebraic simplifications (explained in Appendix C), one obtains:

where

Determining the maximum achievable dynamic range for a given image f means optimizing with respect to both the scalar and p. No analytical solution is available for this optimization, so numerical optimization was used instead. Simple tests show that, at least under some conditions, FLIP models of other orders may perform better than the classical LIP. In the following, the order of the best FLIP model, that is, the one achieving the maximal dynamic range for a given image f, is defined as:

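The numerical optimization can be sketched in Python as a plain grid search. The sketch assumes that the dynamic range is the real difference between the FLIP-amplified extreme graylevels, DR(λ, p) = λ⊗v_max − λ⊗v_min, on graylevels normalized to [0, 1), with the FLIP scalar multiplication written in the standard Hamacher parametrization; the image extremes, the λ grid and the p grid are hypothetical. For p = 1 (classical LIP), this assumed criterion has the closed-form optimum λ₀ = ln(ln(1 − v_max)/ln(1 − v_min)) / ln((1 − v_min)/(1 − v_max)), which serves as a sanity check.

```python
import math

def flip_mul(lam, v, p):
    """FLIP scalar multiplication (standard Hamacher parametrization assumed)."""
    if p == 0:
        return lam * v / (1.0 + (lam - 1.0) * v)
    a = (1.0 + (p - 1.0) * v) ** lam
    b = (1.0 - v) ** lam
    return (a - b) / (a + (p - 1.0) * b)

def dynamic_range(lam, p, v_min, v_max):
    # ASSUMED criterion: real difference between the amplified extreme graylevels.
    return flip_mul(lam, v_max, p) - flip_mul(lam, v_min, p)

def best_lambda(p, v_min, v_max, lams):
    return max(lams, key=lambda lam: dynamic_range(lam, p, v_min, v_max))

v_min, v_max = 0.05, 0.30                    # hypothetical underexposed image
lams = [0.01 * k for k in range(1, 3001)]    # lambda grid in (0, 30]
ps = [0.0, 0.5, 1.0, 2.0, 3.0, 5.0]          # hypothetical grid of FLIP orders

# Sanity check for p = 1 against the closed-form classical LIP optimum.
a, b = 1.0 - v_min, 1.0 - v_max
lam0 = math.log(math.log(b) / math.log(a)) / math.log(a / b)
assert abs(best_lambda(1.0, v_min, v_max, lams) - lam0) <= 0.01

results = {p: dynamic_range(best_lambda(p, v_min, v_max, lams), p, v_min, v_max) for p in ps}
p_best = max(results, key=results.get)
print(f"lambda0 (p = 1) = {lam0:.3f}; best p on the grid = {p_best}; "
      f"DR ratio vs p = 1: {results[p_best] / results[1.0]:.3f}")
```

A grid search is used only for transparency; any bounded two-dimensional optimizer could replace it.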
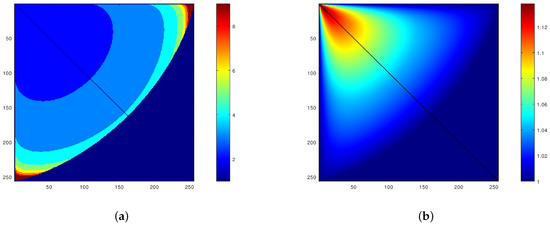

The optimal solution of (37) was tested extensively over the entire range of possible image graylevels. Figure 2a,b show the most important results: the order of the best FLIP model (Figure 2a) and the ratio of the best FLIP dynamic range to the best classical LIP dynamic range (Figure 2b). This ratio is mostly greater than 1, showing that in 67% of the image cases a larger dynamic range can be achieved using a FLIP model other than the classical LIP. The dynamic range increase ranges from to , with an average of .

Figure 2.

(a) Order of the FLIP model that achieves optimal dynamic range as a function of the minimal (horizontal axis) and maximal (vertical axis) values within the image (data is obviously symmetric with respect to the diagonal). (b) Ratio of the optimal dynamic range of the FLIP model to the optimal dynamic range of the classical LIP model as a function of the minimal (horizontal axis) and maximal (vertical axis) values within the image (data is obviously symmetric with respect to the diagonal). The parameter and ratio values are color-coded.

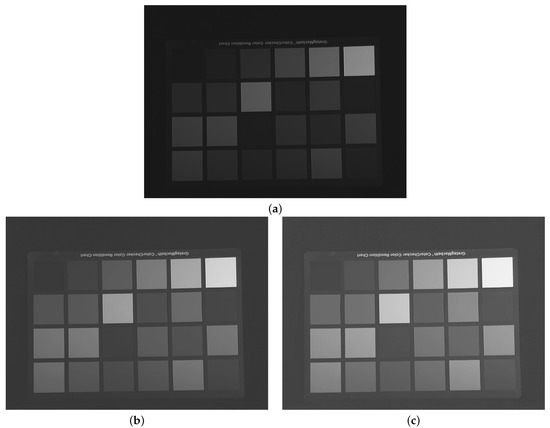

The images in Figure 3 show the result of such a dynamic range enhancement applied to the luminance component of a typical poorly illuminated image, taken from the Data for Computer Vision and Computational Colour Science set, described in [29].

Figure 3.

From top to bottom: (a) original underexposed image; (b) enhanced image under the classical LIP model; (c) enhanced image under the proposed FLIP model. The original image in (a) is taken from the Data for Computer Vision and Computational Colour Science set, described in [29].

4.2. Average-Based Noise Reduction

The simplest linear noise reduction method is averaging, which is suited to white, additive, Gaussian noise (WAGN). It is known that, for a given window, linear averaging provides the highest noise reduction among all linear filters with fixed weights summing to one. Replacing the linear averaging with FLIP additions and multiplications can provide extra performance with respect to the classical LIP, using FLIP models with higher p values. A simple experiment was performed by applying arithmetic averaging, implemented with the FLIP models, within a filtering window sliding across various natural images corrupted by WAGN. The FLIP averaging operation at each pixel within the chosen filtering window is described in Equation (40) below:

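A minimal Python sketch of sliding-window FLIP averaging follows. It assumes that the FLIP mean of n values is (1/n)⊗(v₁ ⊕ v₂ ⊕ ... ⊕ vₙ), with the FLIP operations written in the standard Hamacher parametrization; the 3 × 3 window (radius = 1), the replicated-border handling and the function names are assumptions made for illustration.

```python
from functools import reduce

def flip_add(v1, v2, p):
    """FLIP addition (Hamacher T-conorm, standard parametrization assumed)."""
    return (v1 + v2 + (p - 2.0) * v1 * v2) / (1.0 + (p - 1.0) * v1 * v2)

def flip_mul(lam, v, p):
    """FLIP scalar multiplication (standard parametrization assumed)."""
    if p == 0:
        return lam * v / (1.0 + (lam - 1.0) * v)
    a = (1.0 + (p - 1.0) * v) ** lam
    b = (1.0 - v) ** lam
    return (a - b) / (a + (p - 1.0) * b)

def flip_mean(values, p):
    """FLIP average: (1/n) 'times' the FLIP sum of the n values (0 is the neutral element)."""
    total = reduce(lambda s, v: flip_add(s, v, p), values, 0.0)
    return flip_mul(1.0 / len(values), total, p)

def flip_average_filter(img, p, radius=1):
    """Sliding-window FLIP averaging of a 2-D list of graylevels in [0, 1),
    using a (2*radius+1) x (2*radius+1) window with replicated borders."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            window = [img[min(max(y + dy, 0), h - 1)][min(max(x + dx, 0), w - 1)]
                      for dy in range(-radius, radius + 1)
                      for dx in range(-radius, radius + 1)]
            out[y][x] = flip_mean(window, p)
    return out

img = [[0.2, 0.2, 0.8], [0.2, 0.5, 0.8], [0.2, 0.2, 0.8]]
print(flip_average_filter(img, p=2.0)[1][1])
```

For p = 1 this reduces to one minus the geometric mean of (1 − vᵢ), i.e., the classical LIP average.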
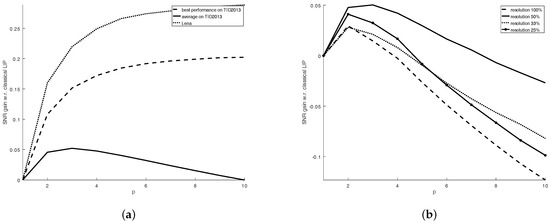

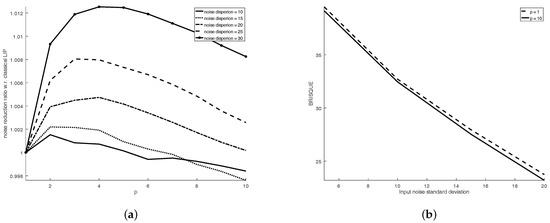

In the large majority of cases (as Figure 4a shows for a selection of images from the classical TID2013 image quality database [30]), the filtering performance (in terms of SNR) increased when FLIP models with a high p value were used. Figure 4a depicts the SNR improvement, that is, the difference between the SNR obtained with higher-order FLIP-based averaging and the SNR obtained with classical LIP-based averaging. The improvements are marginal, but non-negligible. Another estimate of the filtering quality can be obtained with a no-reference image quality measure. One such measure is BRISQUE (Blind/Referenceless Image Spatial QUality Evaluator) [31], which computes a score claimed to integrate the naturalness losses of images due to various distortion types. Thus, the smaller the BRISQUE score, the more natural-looking (and distortion-free) the image. The BRISQUE scores computed for the averaged TID2013 database images show that an increased value of the parameter p can bring a very slight improvement in image naturalness: the BRISQUE values of the images average-filtered by the higher-order FLIP model (32.45 on average over the entire database) are consistently smaller than those of the images average-filtered by the classical LIP (32.71 on average over the entire database).

Figure 4.

(a) Plot of the SNR improvement () of the FLIP models with with respect to the SNR obtained by the classical LIP model (FLIP with ) for various images affected by WAGN with a standard deviation of 7. The SNR improvement is defined as the SNR obtained for minus the SNR obtained for . (b) Plot of the SNR improvement () of the FLIP models with with respect to the SNR obtained by the classical LIP model (FLIP with ) for various resolutions of images affected by WAGN with a standard deviation of 7.

The same natural images from TID2013, degraded with WAGN, were filtered by averaging at various resolutions. The results, presented in Figure 4b, show that the performance increase of the FLIP implementations appears mainly when there is a balance between the size of the uniform areas and the details within the images. Furthermore, this test shows that the most promising FLIP models are obtained for . This experiment suggests that averaging via FLIP can increase the noise reduction performance of the classical LIP.

To investigate the relative noise reduction power of the FLIP models with respect to the classical LIP, a simple experiment was set up. A fully uniform image was degraded by WAGN (with various dispersions) and filtered by the averaging filter implemented according to (40). In the filtered image, the dispersion of the remaining noise was measured, and the ratio between the noise dispersion after FLIP filtering and the noise dispersion after filtering with the classical LIP was computed. This noise reduction factor, as a function of the noise level and of the order of the FLIP model, is presented in Figure 5, showing that for a significant range of models (i.e., p values) and noise dispersions, the classical LIP model is outperformed by other FLIP models.
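The uniform-image experiment can be sketched in Python as follows. For brevity, the FLIP mean is computed in the transformed domain as φ_p⁻¹(mean of φ_p(vᵢ)), which equals the FLIP average when the standard Hamacher parametrization is assumed; non-overlapping groups of nine independent noisy samples stand in for 3 × 3 windows of a uniform image, which is acceptable because the noise is white. The graylevel, the noise level and the number of windows are hypothetical, and the same random seed is used for every p so that all models filter identical noise.

```python
import math
import random
import statistics

def phi(v, p):
    """Assumed FLIP isomorphism (standard Hamacher parametrization)."""
    if p == 0:
        return v / (1.0 - v)
    return math.log((1.0 + (p - 1.0) * v) / (1.0 - v))

def phi_inv(y, p):
    if p == 0:
        return y / (1.0 + y)
    e = math.exp(y)
    return (e - 1.0) / (e - 1.0 + p)

def residual_noise_std(level, sigma, p, n_windows=2000, n=9, seed=0):
    """Standard deviation of FLIP-averaged noisy samples of a uniform graylevel."""
    rng = random.Random(seed)  # same seed -> identical noise for every p
    outputs = []
    for _ in range(n_windows):
        # n i.i.d. noisy samples, clipped to stay inside [0, 1).
        window = [min(max(level + rng.gauss(0.0, sigma), 0.0), 0.999) for _ in range(n)]
        mean_phi = sum(phi(v, p) for v in window) / n
        outputs.append(phi_inv(mean_phi, p))
    return statistics.pstdev(outputs)

level, sigma = 0.3, 0.05
ref = residual_noise_std(level, sigma, p=1.0)  # classical LIP
for p in (0.0, 2.0, 4.0):
    ratio = residual_noise_std(level, sigma, p) / ref
    print(f"p = {p}: residual noise ratio vs classical LIP = {ratio:.3f}")
```

A ratio below 1 indicates that the corresponding FLIP model leaves less residual noise than the classical LIP for that graylevel and noise level.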

Figure 5.

(a) Plot of the noise reduction ratio of the FLIP models with with respect to the classical LIP model (FLIP with ) for various WAGN dispersions. (b) Average BRISQUE value (smaller is better) of the average filtered images from the TID2013 database for various WAGN dispersions, for images processed by the classical LIP model () and FLIP with .

4.3. Gradient-Based Edge Detection

One of the simplest edge detection techniques is based on measuring image derivatives, as an indication of pixel value variation along specific directions. The usual implementations of such edge detectors are based on linearly implemented first- and second-order derivatives. We experiment with implementing such operators under the FLIP model, considering two common examples: the Sobel smoothed gradient, as a first-order, linear, classical derivative edge intensity detector, and the Laplacian, as a second-order, linear, classical derivative edge intensity detector. Both are expressed by FLIP operations, and their performance is investigated according to the two important criteria established by Canny: noise rejection and edge localization. It will be shown how the use of the proposed FLIP can increase the performance of a LIP-based derivative edge extraction operator.

The classical Sobel derivative filter is implemented under the FLIP framework as:

The LIP-based Laplacian operator has already been investigated (for instance, in [12]) as a continuation of the work of Deng and Pinoli [32]. The Laplacian used in the experiments is based on the neighborhood and is implemented, for the graylevel image f, at each pixel location, in the classical, linear case as:

and in the FLIP case as:

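The FLIP Sobel and Laplacian responses can be sketched in Python as below. Positive kernel weights become FLIP scalar multiplications, sums become FLIP additions, and the final difference uses an assumed antisymmetric extension of the FLIP subtraction (a ⊖ b for a ≥ b, −(b ⊖ a) otherwise) so that signed derivatives are available. The standard Hamacher parametrization, the 4-neighbor Laplacian, the restriction to interior pixels and the function names are all assumptions made for illustration.

```python
def flip_add(a, b, p):
    """FLIP addition (standard Hamacher parametrization assumed)."""
    return (a + b + (p - 2.0) * a * b) / (1.0 + (p - 1.0) * a * b)

def flip_mul(lam, v, p):
    """FLIP scalar multiplication (standard Hamacher parametrization assumed)."""
    if p == 0:
        return lam * v / (1.0 + (lam - 1.0) * v)
    a = (1.0 + (p - 1.0) * v) ** lam
    b = (1.0 - v) ** lam
    return (a - b) / (a + (p - 1.0) * b)

def flip_sub_signed(a, b, p):
    """ASSUMED extension to any pair: antisymmetric use of the a >= b subtraction."""
    if a >= b:
        return (a - b) / (1.0 + (p - 2.0) * b - (p - 1.0) * a * b)
    return -flip_sub_signed(b, a, p)

def flip_sum(values, p):
    s = 0.0
    for v in values:
        s = flip_add(s, v, p)
    return s

def flip_sobel_x(f, x, y, p):
    """Horizontal Sobel response at interior pixel (x, y): right column minus left column."""
    right = flip_sum([f[y - 1][x + 1], flip_mul(2.0, f[y][x + 1], p), f[y + 1][x + 1]], p)
    left = flip_sum([f[y - 1][x - 1], flip_mul(2.0, f[y][x - 1], p), f[y + 1][x - 1]], p)
    return flip_sub_signed(right, left, p)

def flip_laplacian(f, x, y, p):
    """4-neighbor Laplacian at interior pixel (x, y): 4 'times' the center minus the neighbors."""
    center = flip_mul(4.0, f[y][x], p)
    neighbors = flip_sum([f[y - 1][x], f[y + 1][x], f[y][x - 1], f[y][x + 1]], p)
    return flip_sub_signed(center, neighbors, p)

step = [[0.2, 0.2, 0.6, 0.6] for _ in range(3)]  # vertical step edge
print(flip_sobel_x(step, 1, 1, 2.0), flip_laplacian(step, 1, 1, 2.0))
```

The edge intensity map is then the absolute value of these responses, as described next.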
From the derivative values, an edge intensity map is computed as their absolute value, and this map is adaptively thresholded by a value computed with the Otsu method.
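For completeness, a plain-Python sketch of Otsu's method applied to a list of edge intensities (for example, absolute FLIP derivative values) is given below. The 256-bin histogram is an assumed choice, and pixels whose intensity is greater than or equal to the returned threshold would be labeled as edges.

```python
def otsu_threshold(values, n_bins=256):
    """Otsu's threshold for a list of nonnegative edge intensities: the histogram
    bin edge that maximizes the between-class variance of the two classes."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return lo
    width = (hi - lo) / n_bins
    hist = [0] * n_bins
    for v in values:
        hist[min(int((v - lo) / width), n_bins - 1)] += 1
    total = len(values)
    centers = [lo + (i + 0.5) * width for i in range(n_bins)]
    sum_all = sum(h * c for h, c in zip(hist, centers))
    w0, sum0 = 0, 0.0
    best_var, best_t = -1.0, lo
    for i in range(n_bins - 1):
        w0 += hist[i]                      # class 0: bins 0..i
        sum0 += hist[i] * centers[i]
        w1 = total - w0                    # class 1: bins i+1..n_bins-1
        if w0 == 0 or w1 == 0:
            continue
        m0, m1 = sum0 / w0, (sum_all - sum0) / w1
        between = w0 * w1 * (m0 - m1) ** 2  # proportional to the between-class variance
        if between > best_var:
            best_var, best_t = between, lo + (i + 1) * width
    return best_t

intensities = [0.02, 0.03, 0.05, 0.04, 0.61, 0.72, 0.03, 0.66]
t = otsu_threshold(intensities)
print(t, [v >= t for v in intensities])
```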

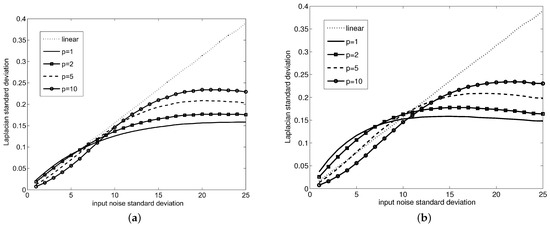

The main issue with linear derivative filters is that any noise existing within the image is amplified, in the sense that the variation of the noise is also measured by differentiation, sometimes exceeding the intrinsic variations of the original image signal. A linear dependency exists between the noise power within the image and the noise power remaining in the linearly filtered image. We show with simple experiments that derivative operators based on the newly introduced logarithmic image processing operations can reduce the noise power in the filtered image, compared to the result obtained via classical real-number implementations or classical LIP implementations of the same derivatives. We consider the case of a fully uniform image degraded by white, zero-mean, additive Gaussian noise (WAGN), which is filtered by a Laplacian operator (a second-order derivative operator, and thus prone to high noise amplification) implemented under two paradigms: the classical linear model and the FLIP model. The noise standard deviation is used as the measure of noise power in both the input and the filtered images. The plots in Figure 6 present the behavior of several FLIP implementations of the Laplacian for initial uniform images with different values, showing the overall good behavior of the proposed FLIP implementation. Ideally, a noise-stable Laplacian has its noise standard deviation curve in Figure 6 as low as possible and below the curve corresponding to the classical, linear Laplacian. Typically, the Laplacian implemented via the FLIP models exhibits lower output noise dispersion than the classical, linear Laplacian for WAGN with higher standard deviation. In this high-noise range, the best performance is achieved by low-order FLIP models, such as the classical LIP.
In the lower noise range, some of the FLIP models perform worse than the classical, linear Laplacian; still, high-order FLIP models perform better than the classical Laplacian.

Figure 6.

Output WAGN standard deviation vs. input WAGN standard deviation for the classical Laplacian (dotted line), Laplacian under the logarithmic-like model from [12] (continuous line with diamond marks), Laplacian under the classical Jourlin-Pinoli logarithmic model [5] (continuous line), Laplacian under the Pătraşcu logarithmic model [11] (dash-dotted line), Laplacian under the proposed FLIP with (continuous line with square marks) and Laplacian under the proposed FLIP with (continuous line with circle marks) for two constant images with gray levels (a) 75 and (b) 150.

The edge localization property is based on the distance between the actual contour and the detected contour, measured in the thresholded edge intensity map. The classical Pratt figure of merit (FOM) is a commonly used tool for characterizing edge localization. The FOM is computed as:

$$\mathrm{FOM} = \frac{1}{\max(N_D, N_I)} \sum_{i=1}^{N_D} \frac{1}{1 + \alpha\, d_i^{2}}$$

In the equation above, $N_D$ and $N_I$ are the numbers of detected and ideal edge pixels, $d_i$ is the distance between the $i$-th detected edge pixel and the closest ideal edge pixel, and $\alpha$ is a scaling constant (commonly set to 1/9). The FOM is computed for test images composed of vertical/horizontal edges separating perfectly uniform regions, affected by white, additive, Gaussian noise with various dispersions.
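A direct Python transcription of Pratt's figure of merit, with edge maps given as sets of (row, column) coordinates, is sketched below. The brute-force nearest-neighbor search is chosen for clarity rather than speed, and α = 1/9 is the customary value, assumed here.

```python
def pratt_fom(detected, ideal, alpha=1.0 / 9.0):
    """Pratt's figure of merit: sum over detected edge pixels of 1/(1 + alpha*d^2),
    where d is the distance to the closest ideal edge pixel, normalized by
    max(number of detected, number of ideal edge pixels)."""
    detected, ideal = list(detected), list(ideal)
    if not detected or not ideal:
        return 0.0
    total = 0.0
    for (r, c) in detected:
        d2 = min((r - ri) ** 2 + (c - ci) ** 2 for (ri, ci) in ideal)
        total += 1.0 / (1.0 + alpha * d2)
    return total / max(len(detected), len(ideal))

ideal = {(r, 5) for r in range(10)}        # ideal vertical edge at column 5
shifted = {(r, 6) for r in range(10)}      # detection displaced by one pixel
print(pratt_fom(ideal, ideal), pratt_fom(shifted, ideal))
```

A perfect detection scores 1, while a one-pixel displacement of the whole edge lowers the score to 1/(1 + α).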

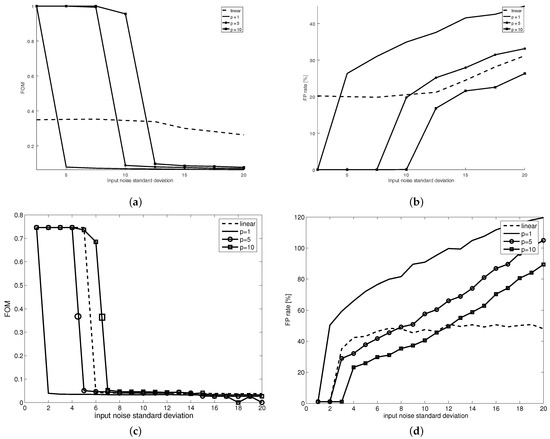

As Figure 7 shows, the FOM increases (i.e., the Laplacian performs better) as the FLIP model order p increases, for all noise dispersions; for high-order FLIP models, the performance of the classical, linear Laplacian is matched and sometimes exceeded. Figure 7b shows the false positive edge pixel ratio for the same test images: while the false positive ratio naturally increases with the noise standard deviation, the FLIP models with can perform better than the classical, linear Laplacian.

Figure 7.

Pratt’s figure of merit (a,c) and false positive rates (b,d) with respect to the input noise dispersion for the classical, linear model (dashed line), for the classical Jourlin–Pinoli logarithmic model [5] () (continuous line), for the proposed FLIP with (continuous line with circular marks) and for the proposed FLIP with (continuous line with square marks) for a synthetic test image with a gray-level difference of 100 across the edge, for two standard derivative operators: the Sobel gradient (a,b) and the Laplacian (c,d).

Finally, typical visual edge extraction results are shown in Figures A1 and A2 of Appendix D. The order of the FLIP model acts as a trimming factor that selects the most important edges in the picture and rejects false positive edges caused by noise.

4.4. Image Blending

Image blending and High Dynamic Range (HDR) image creation are prime applications in computer graphics; linear operators, used on their own, have been shown to fade colors and create false colors. Some approaches, among other pre- and postprocessing steps, replace the linear operators with more suitable ones; examples include the logarithmic HDR proposed in [8] and the image blending via the spherical color coordinates model proposed in [22]. These two approaches use logarithmic-type operators for the addition and scalar multiplication of gray or RGB color components.

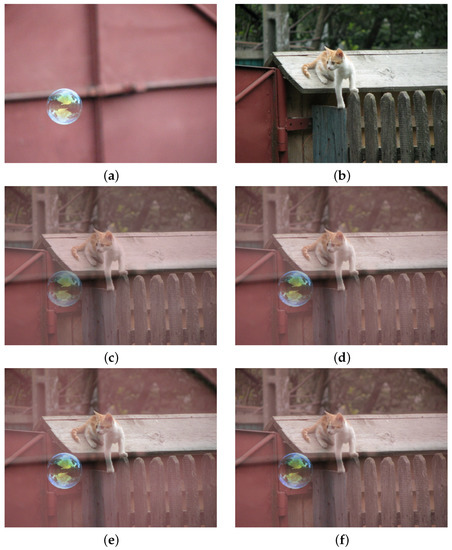

In the following example, shown in Figure 8, we compare the simple blending of two images, with equal weights, via linear and various LIP, LIP-like and FLIP models. Namely, the images and are composed into image J, for each color channel independently, as:

Figure 8.

Image blending with equal weights of the original color images in (a,b). The blending is performed according to: (c) linear operations (BRISQUE = 23.60); (d) Grundland et al. without contrasting, pyramid processing and saliency (BRISQUE = 26.13); (e) FLIP operations with (classical LIP, BRISQUE = 30.84); (f) FLIP operations with (BRISQUE = 23.72). One can notice that for pixel-based blending, the FLIP models with offer the best visual results.

Since there is no reference image for a blended result, objective evaluation of the blending results can only be performed with a no-reference image quality measure. We again use BRISQUE [31] to estimate the naturalness of the various blending models (lower BRISQUE scores indicate more natural images). In Figure 8, each blended image was evaluated by BRISQUE (the score is included in the caption); this evaluation shows that using FLIP operations with the resulting image looks more natural than with the classical LIP or with the method of Grundland et al., and is close to the linear blending. Subjective evaluation also shows that the FLIP model with produces better results than the classical LIP, and that the results are comparable to those of [22] (see, for instance, the overall appearance of the soap bubble).
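For equal weights, the blend of two images (called I1 and I2 here for illustration) can be written per color channel as J = (½ ⊗ I1) ⊕ (½ ⊗ I2), where ⊕ and ⊗ are the addition and scalar multiplication of the chosen model. The sketch below illustrates this under stated assumptions. Gray levels are mapped to [0, 1) by v = f/M with M = 256, and no gray-tone inversion is applied. The FLIP operations are assumed to be generated by the standard additive generator of the Hamacher t-conorm with parameter p ≥ 0, which is our own restatement and may differ in parameterization from the paper's equations. With this generator, p = 1 reproduces the classical LIP. All helper names are hypothetical.

```python
import numpy as np

M = 256.0  # assumed upper bound of the gray-level range

def gen(x, p):
    """Assumed additive generator of the Hamacher t-conorm (x in [0, 1), p >= 0)."""
    if p == 0:
        return x / (1.0 - x)
    return np.log((1.0 + (p - 1.0) * x) / (1.0 - x))

def gen_inv(y, p):
    """Inverse of gen for y >= 0."""
    if p == 0:
        return y / (1.0 + y)
    e = np.exp(y)
    return (e - 1.0) / (e + p - 1.0)

def blend(f1, f2, p=None, w=0.5):
    """Pixel-wise blend of two gray/RGB arrays with weights w and 1 - w.

    p=None uses the linear operations; otherwise J = (w (x) f1) (+) ((1 - w) (x) f2),
    both operations being expressed through the generator.
    """
    f1 = np.asarray(f1, dtype=float)
    f2 = np.asarray(f2, dtype=float)
    if p is None:
        return w * f1 + (1.0 - w) * f2
    v = gen_inv(w * gen(f1 / M, p) + (1.0 - w) * gen(f2 / M, p), p)
    return M * v

a = np.array([[40.0, 200.0]])
b = np.array([[120.0, 250.0]])
print(blend(a, b))         # linear average
print(blend(a, b, p=1.0))  # classical LIP case of the assumed family
print(blend(a, b, p=3.0))  # another member of the assumed family
```

Because the weights sum to one, blending an image with itself returns the same image for every p, and the result always stays inside [0, M) without clipping.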

5. Conclusions

This paper presented a new parametric class of logarithmic image processing (LIP) models, which allows the computation of graylevel addition, subtraction and multiplication with scalars within a fixed graylevel range without clipping. This class of models was named FLIP (Fuzzy Logarithmic Image Processing) models. To summarize, this work proposed FLIP as (i) a new, fuzzy logic-based model for generating a parametric set of logarithmic-type image processing models that (ii) generalizes the main existing LIP models and (iii) can provide, in some particular cases, marginally better performance than the classical LIP for various image processing operations.

The derivation of the proposed model is based on interpreting graylevel addition as a process of accumulation (or union), and thus on modelling it by a fuzzy t-conorm (in particular, the Hamacher family). The existing LIP models can be obtained as particular cases of the proposed parametric family. It should be noted that the proposed approach has more limited mathematical properties than the classical LIP models, the most obvious limitation being that the graylevel difference may not yield a negative result. The additional choice of a FLIP model (by choosing a particular value of the parameter p) may improve the results of some algorithmic approaches (such as the simple dynamic range enhancement application presented here).

Author Contributions

Conceptualization, C.V.; methodology, C.V.; software, C.V., C.F. and L.F.; validation, C.V., C.F. and L.F.; formal analysis, C.V.; investigation, C.V., C.F. and L.F.; resources, C.V., C.F. and L.F.; data curation, C.V., C.F. and L.F.; writing—original draft preparation, C.V.; writing—review and editing, C.V., C.F. and L.F.; visualization, C.V., C.F. and L.F.; supervision, C.V.; project administration, C.V.; funding acquisition, C.V. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Derivation of the FLIP Addition

We defined the FLIP addition as the aggregation of the fuzzified gray tones and in Equation (28) as:

The equation is particularized by replacing the analytical expressions of the Hamacher generator function and its inverse , as defined in (26) and (27):

We develop the algebraic computation in the case of and , respectively. First, for :

In the case of , we have:

Finally, we can write compactly that , (which is Equation (30)):
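The algebra above can be cross-checked numerically. The sketch assumes two textbook expressions: the closed form of the Hamacher t-conorm, S_p(a, b) = (a + b + (p − 2)ab) / (1 + (p − 1)ab), and its additive generator, g_p(x) = ln((1 + (p − 1)x)/(1 − x)) for p > 0 and g_0(x) = x/(1 − x). These are not a transcription of Equations (26)–(30), whose parameterization may differ. Gray levels are assumed to be normalized by M = 256. With p = 1, the addition reduces to u + v − uv/M, the classical LIP addition.

```python
import numpy as np

M = 256.0  # assumed gray-level bound

def hamacher_conorm(a, b, p):
    """Closed-form Hamacher t-conorm on [0, 1) with parameter p >= 0."""
    return (a + b + (p - 2.0) * a * b) / (1.0 + (p - 1.0) * a * b)

def gen(x, p):
    """Assumed additive generator of the Hamacher t-conorm."""
    if p == 0:
        return x / (1.0 - x)
    return np.log((1.0 + (p - 1.0) * x) / (1.0 - x))

def gen_inv(y, p):
    """Inverse of gen for y >= 0."""
    if p == 0:
        return y / (1.0 + y)
    e = np.exp(y)
    return (e - 1.0) / (e + p - 1.0)

def flip_add(u, v, p):
    """Hypothetical FLIP-style addition of gray levels u, v in [0, M) via the generator."""
    return M * gen_inv(gen(u / M, p) + gen(v / M, p), p)

u, v = 90.0, 170.0
for p in (0.0, 1.0, 2.0, 10.0):
    via_gen = flip_add(u, v, p)
    closed = M * hamacher_conorm(u / M, v / M, p)
    print(f"p={p:4.1f}  generator={via_gen:8.4f}  closed form={closed:8.4f}")
```

The two columns agree for every p, and the sum never reaches M, which is the "no clipping" property in its fuzzy form.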

Appendix B. Derivation of the FLIP Scalar Multiplication

We defined the FLIP scalar multiplication as the repeated addition of a fuzzified gray tone v by a real scalar in Equation (29) as:

The equation is particularized by replacing the analytical expressions of the Hamacher generator function and its inverse , as defined in (26) and (27). We develop the algebraic computation in the case of and , respectively. First, for :

In the case of , we have:

Finally, we can write compactly that , (which is Equation (31)):
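Under the same assumed Hamacher generator g_p, extending repeated addition to a real scalar λ gives λ ⊗ x = g_p⁻¹(λ g_p(x)) on normalized gray levels x = f/M. For p = 0, this is λx/(1 + (λ − 1)x). For p > 0, it is (A^λ − 1)/(A^λ + p − 1), with A = (1 + (p − 1)x)/(1 − x). This is our own derivation and not necessarily the paper's Equation (31). The sketch checks that, for an integer λ, the closed form coincides with repeated Hamacher addition, and that p = 1 gives the classical LIP scalar multiplication M − M(1 − f/M)^λ. The function names are hypothetical.

```python
import numpy as np

M = 256.0  # assumed gray-level bound

def hamacher_conorm(a, b, p):
    """Closed-form Hamacher t-conorm on [0, 1) with parameter p >= 0."""
    return (a + b + (p - 2.0) * a * b) / (1.0 + (p - 1.0) * a * b)

def flip_scalar_mul(lam, f, p):
    """Hypothetical closed-form scalar multiplication lam (x) f for gray levels f in [0, M)."""
    x = f / M
    if p == 0:
        return M * lam * x / (1.0 + (lam - 1.0) * x)
    a = ((1.0 + (p - 1.0) * x) / (1.0 - x)) ** lam
    return M * (a - 1.0) / (a + p - 1.0)

def repeated_add(n, f, p):
    """f (+) f (+) ... (+) f, n times, using the Hamacher t-conorm."""
    x = acc = f / M
    for _ in range(n - 1):
        acc = hamacher_conorm(acc, x, p)
    return M * acc

f = 100.0
for p in (0.0, 1.0, 4.0):
    print(p, flip_scalar_mul(3.0, f, p), repeated_add(3, f, p))
```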

Appendix C. Derivation of the Formula for the Dynamic Range

The dynamic range of an image f is the difference between its maximal and minimal values. After a simple image stretching performed under the FLIP model, the resulting dynamic range is given in Equation (36):

The FLIP scalar multiplication, for , as expressed in Equation (31), is:

For simplicity, we can compactly denote in the equation above

Then, the scalar multiplication can be expressed as:

The dynamic range can be simply rewritten, in order to obtain Equation (37):
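The sketch below finds the best λ with a simple grid search. It assumes that the stretching is λ ⊗ f, that the dynamic range is the ordinary difference between the stretched maximum and minimum, and that scalar multiplication takes the closed form derived from the assumed Hamacher generator. For p = 1, the result can be compared with the analytic LIP optimum λ* = ln(ln(1 − b)/ln(1 − a)) / ln((1 − a)/(1 − b)), where a and b are the normalized minimum and maximum. The function names, the λ range and the grid resolution are illustrative choices.

```python
import numpy as np

M = 256.0  # assumed gray-level bound

def flip_scalar_mul(lam, f, p):
    """Hypothetical closed-form scalar multiplication (assumed Hamacher generator)."""
    x = f / M
    if p == 0:
        return M * lam * x / (1.0 + (lam - 1.0) * x)
    a = ((1.0 + (p - 1.0) * x) / (1.0 - x)) ** lam
    return M * (a - 1.0) / (a + p - 1.0)

def best_stretch(f_min, f_max, p, lam_max=20.0, steps=20000):
    """Grid search for the lambda maximizing (lam (x) f_max) - (lam (x) f_min)."""
    lams = np.linspace(lam_max / steps, lam_max, steps)
    dr = flip_scalar_mul(lams, f_max, p) - flip_scalar_mul(lams, f_min, p)
    k = int(np.argmax(dr))
    return float(lams[k]), float(dr[k])

f_min, f_max = 0.3 * M, 0.6 * M
for p in (0.0, 1.0, 3.0):
    lam, dr = best_stretch(f_min, f_max, p)
    print(f"p={p}: lambda={lam:.3f}, dynamic range {f_max - f_min:.1f} -> {dr:.1f}")
```

A closed-form optimum such as Equation (37) would replace the grid search; the search is used here only because it works for any p without further algebra.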

Appendix D. Typical Edge Extraction Results for Natural Images

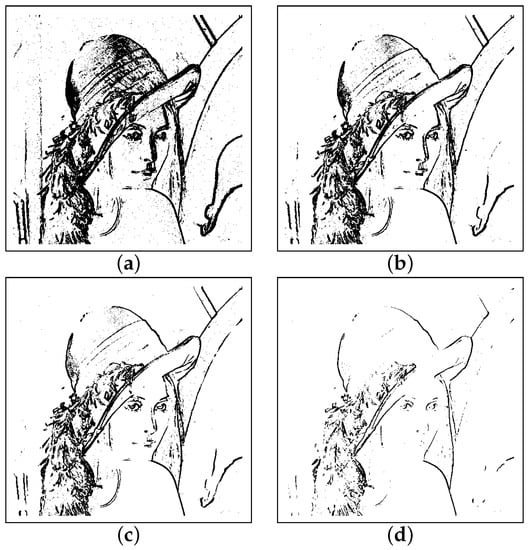

Figure A1.

FLIP Laplacian binary edge images of the classical Lena picture computed for various FLIP models: (a) (classical LIP); (b) ; (c) ; (d) . All the edge maps are obtained by the Otsu thresholding of the Laplacian. One can notice that as p increases, small, low-contrast contours disappear.

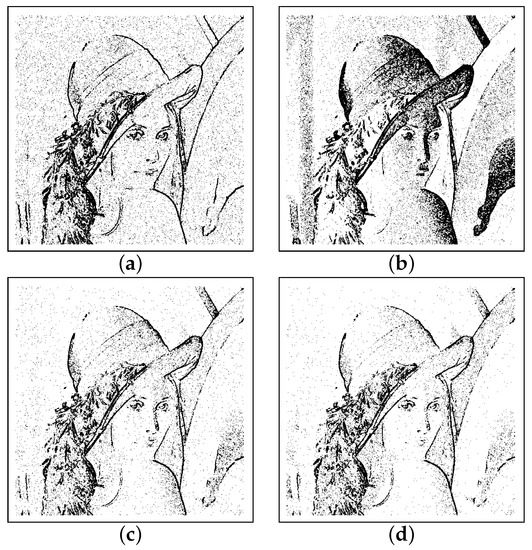

Figure A2.

FLIP Laplacian binary edge images of the classical Lena picture, degraded with WAGN of standard deviation 7, computed for various FLIP models: (a) classical, linear Laplacian; (b) (classical LIP); (c) ; (d) . All the edge maps are obtained by the Otsu thresholding of the Laplacian. One can notice that as p increases, the number of false positive edge points produced by the noise decreases (image (d) is much cleaner than image (b)).
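The edge maps above are obtained by Otsu thresholding of the Laplacian. Below is a minimal NumPy sketch of Otsu's method, which chooses the histogram split that maximizes the between-class variance. Applying it to the absolute Laplacian response (for example `np.abs(lap) > otsu_threshold(np.abs(lap))`) is our assumption, since the sign handling is not specified here. `otsu_threshold` is a hypothetical helper name.

```python
import numpy as np

def otsu_threshold(values: np.ndarray, bins: int = 256) -> float:
    """Otsu's threshold: the histogram split that maximizes the between-class variance."""
    hist, edges = np.histogram(np.ravel(values), bins=bins)
    centers = 0.5 * (edges[:-1] + edges[1:])
    w0 = np.cumsum(hist).astype(float)          # class 0 size for a split after bin k
    w1 = w0[-1] - w0                            # class 1 size (bins k+1 .. end)
    m0 = np.cumsum(hist * centers)              # class 0 first moment
    mu0 = m0 / np.maximum(w0, 1.0)
    mu1 = (m0[-1] - m0) / np.maximum(w1, 1.0)
    between = w0 * w1 * (mu0 - mu1) ** 2        # proportional to the between-class variance
    k = int(np.argmax(between))
    return float(edges[k + 1])                  # values at or above this edge form class 1

# Usage on a synthetic bimodal response: weak "background" vs strong "edge" values.
rng = np.random.default_rng(1)
low = rng.normal(10.0, 1.0, 1000)
high = rng.normal(50.0, 1.0, 1000)
t = otsu_threshold(np.concatenate([low, high]))
print(t)  # falls in the gap between the two clusters
```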

References

- Stockham, T.G. Image processing in the context of visual models. Proc. IEEE 1972, 60, 828–842.

- Oppenheim, A.V. Generalized Superposition. Inf. Control 1967, 11, 528–536.

- Oppenheim, A.V.; Schafer, R.W.; Stockham, T.G., Jr. Nonlinear Filtering of Multiplied and Convolved Signals. IEEE Trans. Audio Electroacoustics 1968, 16, 437–466.

- Jourlin, M.; Pinoli, J.C. Image dynamic range enhancement and stabilization in the context of the logarithmic image processing model. Signal Process. 1995, 41, 225–237.

- Jourlin, M.; Pinoli, J.C. A model for logarithmic image processing. J. Microsc. 1988, 149, 21–35.

- Jourlin, M.; Breugnot, J.; Itthirad, F.; Bouabdellah, M.; Closs, B. Logarithmic image processing for color images. Adv. Imaging Electron Phys. 2011, 168, 65–107.

- Deng, G.; Cahill, L.W.; Tobin, G.R. The Study of Logarithmic Image Processing Model and Its Application to Image Enhancement. IEEE Trans. Image Process. 1995, 4, 506–512.

- Florea, C.; Vertan, C.; Florea, L. Logarithmic Model-based Dynamic Range Enhancement of Hip X-ray Images. In Lecture Notes in Computer Science (LNCS); Springer: Berlin/Heidelberg, Germany, 2007; pp. 587–596.

- Jourlin, M. Logarithmic Image Processing: Theory and Applications. In Advances in Imaging and Electron Physics; Academic Press (Elsevier): Amsterdam, The Netherlands, 2016; Volume 195.

- Jourlin, M.; Pinoli, J.C. Logarithmic image processing. The mathematical and physical framework for the representation and processing of transmitted images. Adv. Imaging Electron Phys. 2001, 115, 129–196.

- Pătraşcu, V.; Buzuloiu, V.; Vertan, C. Fuzzy image enhancement in the framework of logarithmic models. In Fuzzy Filters in Image Processing; Series Studies in Fuzziness and Soft Computing; Physica Verlag: Heidelberg, Germany, 2003; Chapter 10; pp. 219–237.

- Vertan, C.; Oprea, A.; Florea, C.; Florea, L. A Pseudo-Logarithmic Framework for Edge Detection. In Lecture Notes in Computer Science (LNCS); Springer: Berlin/Heidelberg, Germany, 2008; pp. 637–644.

- Palomares, J.; Gonzalez, J.; Ros, E.; Prieto, A. General Logarithmic Image Processing Convolution. IEEE Trans. Image Process. 2006, 15, 3602–3608.

- Pinoli, J.C.; Debayle, J. Logarithmic adaptive neighborhood image processing (LANIP): Introduction, connections to human brightness perception, and application issues. EURASIP J. Appl. Signal Process. 2007, 2007, 114–135.

- Debayle, J.; Pinoli, J.C. Generalized Adaptive Neighborhood Image Processing: Part I: Introduction and Theoretical Aspects. J. Math. Imaging Vis. 2006, 25, 245–266.

- Debayle, J.; Pinoli, J.C. Generalized Adaptive Neighborhood Image Processing: Part II: Practical Application Examples. J. Math. Imaging Vis. 2006, 25, 267–284.

- Berehulyak, O.; Vorobel, R. The Algebraic Model with an Asymmetric Characteristic of Logarithmic Transformation. In Proceedings of the IEEE 15th International Conference on Computer Sciences and Information Technologies (CSIT), Zbarazh, Ukraine, 23–26 September 2020; Volume 2, pp. 119–122.

- Panetta, K.; Wharton, E.; Agaian, S. Human Visual System-Based Image Enhancement and Logarithmic Contrast Measure. IEEE Trans. Syst. Man Cybern. Part B Cybern. 2008, 38, 174–188.

- Panetta, K.; Agaian, S.; Zhou, Y.; Wharton, E. Parameterized Logarithmic Framework for Image Enhancement. IEEE Trans. Syst. Man Cybern. Part B Cybern. 2011, 41, 460–473.

- Deng, G. A Generalized Logarithmic Image Processing Model Based on the Gigavision Sensor Model. IEEE Trans. Image Process. 2012, 21, 1406–1414.

- Sbaiz, L.; Yang, F.; Charbon, E.; Susstrunk, S.; Vetterli, M. The Gigavision Camera. In Proceedings of the IEEE ICASSP, Taipei, Taiwan, 19–24 April 2009; pp. 1093–1096.

- Grundland, M.; Vohra, R.; Williams, G.P.; Dodgson, N.A. Cross Dissolve Without Cross Fade: Preserving Contrast, Color and Salience in Image Compositing. Comput. Graph. Forum 2006, 25, 577–586.

- Bezdek, J.C.; Pal, S.K. Fuzzy Models for Pattern Recognition; IEEE Press: New York, NY, USA, 1992.

- Tizhoosh, M. Fuzzy Image Processing; Springer: Berlin/Heidelberg, Germany, 1997.

- Nachtegael, M.; Van der Weken, D.; Van De Ville, D.; Kerre, E.E. (Eds.) Fuzzy Filters in Image Processing; Series Studies in Fuzziness and Soft Computing; Physica Verlag: Heidelberg, Germany, 2003.

- Vertan, C.; Boujemaa, N. Using Fuzzy Histograms and Distances for Color Image Retrieval. In Proceedings of the CIR, Brighton, UK, 3–5 May 2000.

- Vertan, C.; Boujemaa, N. Embedding Fuzzy Logic in Content Based Image Retrieval. In Proceedings of the NAFIPS, Atlanta, GA, USA, 13–15 July 2000; pp. 85–90.

- Zimmermann, H.J. Fuzzy Sets Theory and Its Applications; Kluwer Academic Publisher: Boston, MA, USA, 1996.

- Barnard, K.; Martin, L.; Funt, B.; Coath, A. A Data Set for Color Research. Color Res. Appl. 2002, 27, 148–152.

- Ponomarenko, N.; Jin, L.; Ieremeiev, O.; Lukin, V.; Egiazarian, K.; Astola, J.; Vozel, B.; Chehdi, K.; Carli, M.; Battisti, F.; et al. Image database TID2013: Peculiarities, results and perspectives. Signal Process. Image Commun. 2015, 30, 57–77.

- Mittal, A.; Moorthy, A.K.; Bovik, A.C. No-reference image quality assessment in the spatial domain. IEEE Trans. Image Process. 2012, 21, 4695–4708.

- Deng, G.; Pinoli, J.C. Differentiation-based Edge Detection Using the Logarithmic Image Processing Model. J. Math. Imaging Vis. 1998, 8, 161–180.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).