Abstract

Nighttime light remote sensing has unique advantages on reflecting human activities, and thus has been used in many fields including estimating population and GDP, analyzing light pollution and monitoring disasters and conflict. However, the existing nighttime light remote sensors have many limitations because they are subject to one or more shortcomings such as coarse spatial resolution, restricted swath width and lack of multi-spectral data. Therefore, we propose an optical system of imaging spectrometer based on linear variable filter. The imaging principle, optical specifications, optical design, imaging performance analysis and tolerance analysis are illustrated. The optical system with a focal length of 100 mm, F-number 4 and 43° field of view in the spectrum range of 400–1000 nm is presented, and excellent image quality is achieved. The system can obtain the multi-spectral images of eight bands with a spatial resolution of 21.5 m and a swath width of 320 km at the altitude of 500 km. Compared with the existing nighttime light remote sensors, our system possesses the advantages of high spatial and high spectral resolution, wide spectrum band and wide swath width simultaneously, greatly making up for the shortage of the present systems. The result of tolerance analysis shows our system satisfy the requirements of fabrication and alignment.

1. Introduction

Nighttime light remote sensing can obtain various light information on the earth’s surface at cloudless night, most of which are sent out by artificial lights. In contrast to common remote sensing satellite imageries which are mainly used to monitor the physicochemical characteristics of the earth, nighttime light remote sensing imageries have higher correlation with human activities and possess the advantages of spatiotemporal continuity, independence and objectivity. Therefore, they have been widely used in the field of social science such as gross national product, greenhouse gas emissions, poverty index, urbanization monitoring and evaluation, urban agglomeration evolution analysis and light pollution analysis. In addition to reflecting the lights from urban areas, nighttime light remote sensing can also capture the lights of fishing boats, natural gas combustion, forest fires, etc., so it can also provide important support for many other research fields such as fishery monitoring, major event estimation and natural disaster risk assessment [1,2,3,4,5].

Up to now, the Defense Meteorological Satellite Program’s Operational Linescan System (DMSP/OLS) data and the Visible Infrared Imaging Radiometer Suite (VIIRS) instrument onboard the Suomi National Polar Partnership (NPP) satellite are the two most common sources of nighttime light data [6]. The DMSP/OLS has a rich database and can detect the nighttime light radiance from 1.54 × 10−9 to 3.17 × 10−7 W·cm −2·sr −1 with a swath width of 3000 km [7,8]. However, it has a coarse spatial resolution of 2700 m and can only obtain panchromatic images, limiting its use in fine application [9]. Other shortcomings such as lack of on-board calibration, blooming effects and saturation in urban cores make its application further limited [10]. The NPP-VIIRS has a radiometric range of 3 × 10−9 to 2 × 10−2 W·cm−2·sr−1 and swath width of 3000 km [11,12,13]. As the successor to the DMSP/OLS, it has a certain degree of upgrade such as finer resolution of 740 m and on-board radiometric calibration [8,14,15]. However, the resolution is still very low and only panchromatic images can be obtained [16]. Luojia 1–01 satellite launched by Wuhan University in China, has the spatial resolution of 130 m and swath width of 250 km [17]. However, like its predecessors, it cannot capture spectral images [18]. On 9 January 2017, Chang-guang Satellite Technology Co., Ltd. launched the JL1-3B satellite. It provides significant improvements over the previous satellite remote sensors, in terms of increased spatial resolution (0.92 m) and multispectral bands including 430–512 nm, 489–585 nm and 580–720 nm [19]. However, its swath width is only 11 km, and the number of wavebands is too few to provide more detailed information. Imaging information with high spatial resolution and high spectral resolution in a wide waveband is imperative to identify the type of the light source and their spatial arrangement [20]. Since the light from different areas such as residential, commercial and war area shows different spatial and spectral characteristics, high-resolution datasets have unique advantages over the coarse resolution imagery in monitoring, analyzing, evaluating, and predicting the changes on the earth’s surface and thus contribute to quantify and track the dynamic of human activities and their impacts on environment and economy [21,22]. In sum, a nighttime light remote sensor that can capture imageries with high spectral and spatial resolution in a wide waveband and swath width is worth studying.

In this paper, we present an optical system of imaging spectrometer based on linear variable filter for nighttime light remote sensing that can obtain the multi-spectral images of eight spectral bands in the range of 400–1000 nm with a spatial resolution of 21.5 m and a swath width of 320 km. Compared with the imaging spectrometers based on prism, prism-grating and prism-grating-prism dispersion module which consist of two optical system namely, the telescopic system and the spectroscopic system, our system possess the advantages of compactness, light weight, and low cost, although the spectral resolution is lower. For example, the imaging spectrometer based on prism-grating-prism developed by Xue consists of telescope system and spectral imaging system [23]. The imaging spectrometer contains 24 lenses and has a total length of 300 mm. The imaging spectrometer based on prism-grating by Chen contains 22 lenses [24]. In contrast, our system contains 15 lenses, and the total lenses is 250 mm. Although spectral shift may occur to our spectrometer due to the inherent limitation of linear variable filter, it can be greatly alleviated by spectral calibration. Compared with the existing nighttime light remote sensors, our system meeting the requirements of high spatial and high spectral resolution, wide working band and wide swath width simultaneously, greatly making up for the shortage of the present systems. For an imaging spectrometer for nighttime light remote sensing, it is necessary to maintain illumination uniformity of imaging plane to ensure sufficient SNR [25,26,27]. By analyzing the energy distribution of the focal plane and pupil aberration, we apply to the pupil coma to improve the illumination of the edge field of view. Since the waveband of our system is too wide, it is essential to correct the chromatic aberration and secondary spectrum to get excellent image quality. In order to obtain an apochromatic system, some special materials such as crystals and liquids are usually used [28,29]. However, the disadvantages of these materials such as fragility, instability and poor availability prevent their applications in aerospace. Fortunately, the Buchdahl dispersion model can help to provide an effective method to select glass materials for apochromatic systems [30]. In the design process of our system, the chromatic aberration and secondary spectrum are corrected by properly choosing glass for certain lenses with the dispersion vector method based on the Buchdahl dispersion model. Other aberrations such as astigmatism and distortion are reduced by adjusting the position of aperture stop and shape and bending factors of certain lenses. After iterative optimization in software ZEMAX, we get the final optical system with excellent image quality. We have carried out the tolerance analysis and the result shows that the system is qualified for practical application.

2. Imaging Principle

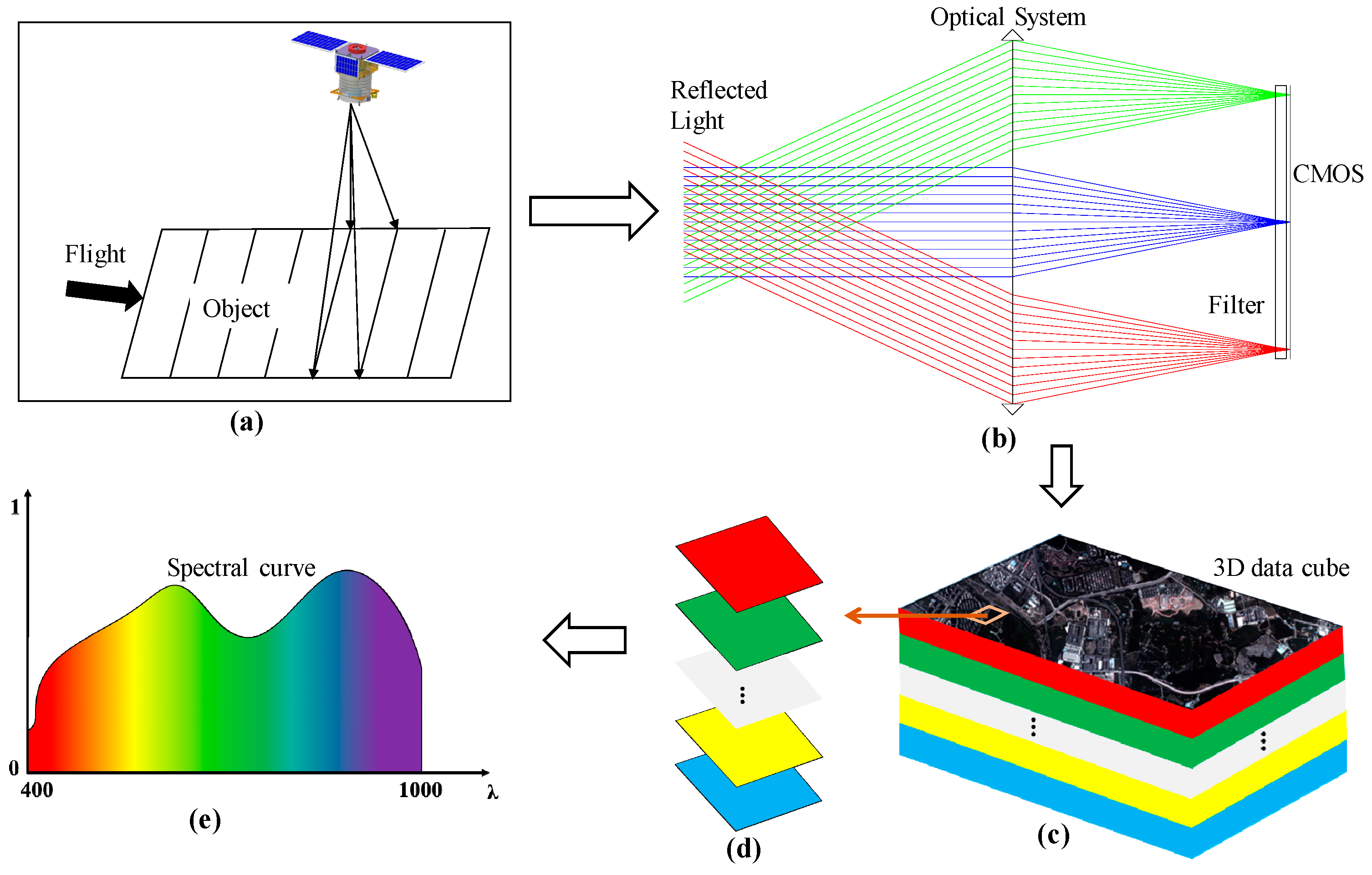

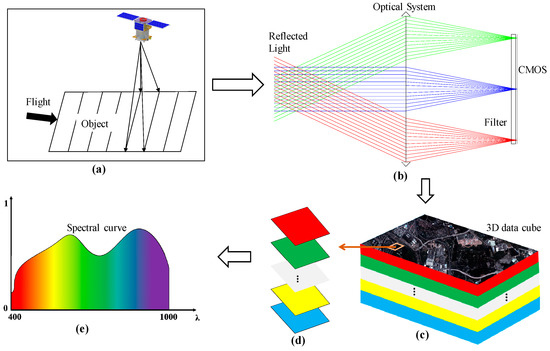

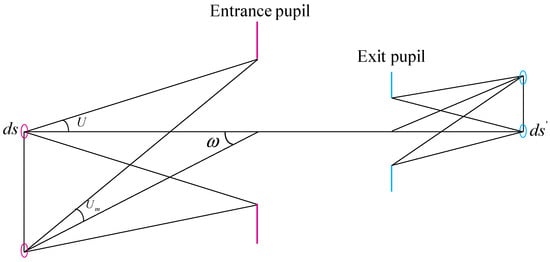

A schematic diagram of the imaging spectrometer’s optical system and its imaging principle is shown in Figure 1. The system consists of an optical system and a filter which is placed in proximity to the CMOS, with the coated side towards the pixels. The imaging spectrometer is a push-broom system and the working principle of this type of system is shown in Figure 1a. The light from the ground target is imaged by the optical system. After passing through the filter, the light forms an image on the focal plane and is finally received by the CMOS, as shown in Figure 1b, where the lights with different color represent different fields of view. We can therefore obtain multiple linear images of different wavebands which can be expanded into plane images using the push-broom imaging mode of the spectrometer.

Figure 1.

Schematic diagram of the imaging spectrometer’s optical system and its push-broom imaging principle. (a) Push-broom imaging principle, (b) Structure of the imaging spectrometer, (c) Three-dimensional data cube, (d) Data column in the data cube, (e) Spectrum curve of the object.

The data captured by the imaging spectrometer is referred to as a three-dimensional data cube denoted by two-dimensional space and one-dimensional spectrum [31]. The data cube contains spectral and spatial information of the targets within the whole scanning range of the imaging spectrometer, as shown in Figure 1c. Each target in the scanning range corresponds to a column in the data cube which can be converted into a spectral curve for spectrum characteristics analysis, as shown in Figure 1d,e.

3. Optical Specifications

The imaging spectrometer is mounted on a low-orbit satellite at the altitude of 500 km and the technical index requires that the ground sample distance (GSD) is less than 21.5 m. Such sampling frequency is sufficient to distinguish the adjacent street lamps on most roads [32]. Besides, it can help to distinguish large buildings by detecting all the artificial light on them such as billboards and neon lights [33]. The focal length of the optical system can be given by

where is the size of the CMOS pixel, H is the detection distance. The CMOS image sensor GSENSE5130 produced by Gpixel Inc with 5056 × 2968 pixels and 4.25 μm × 4.25 μm pixel size is chosen as the detector of our system [34]. It is calculated that the focal length is 98.8 mm. In order to have abundant allowance, we choose 100 mm as the focal length of our system. It should be noted that the ground sample distance of 21.5 m corresponds to the nadir pixels. For the marginal pixels, the corresponding ground sample distance will be slightly larger.

The width coverage L of our imaging spectrometer is required to be more than 320 km to be sufficient to cover two cities during one shot. Besides, such a wide coverage can reduce the number of revisiting when capture a large area. It can be calculated by the following equation that the width of the image plane l is 64 mm.

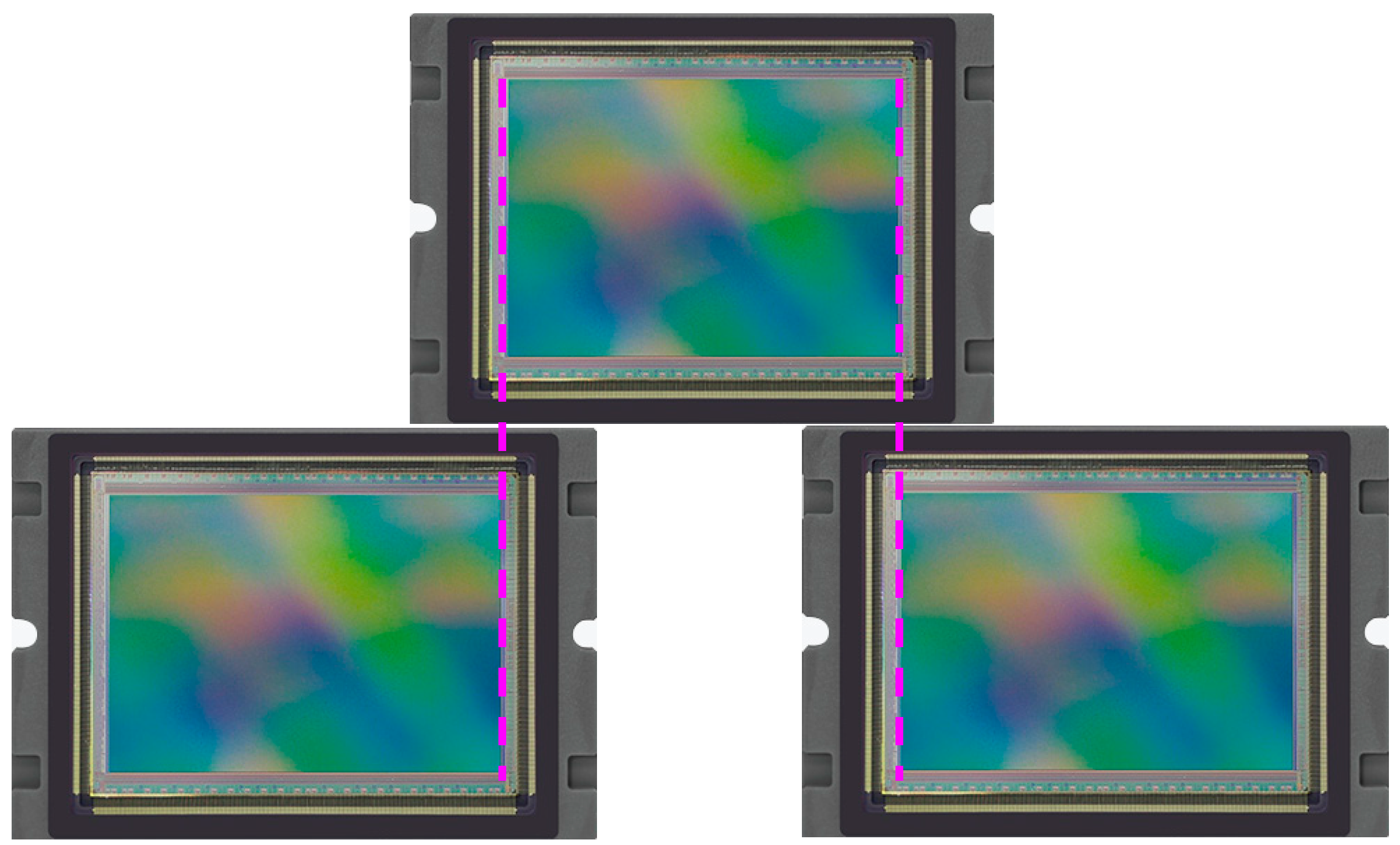

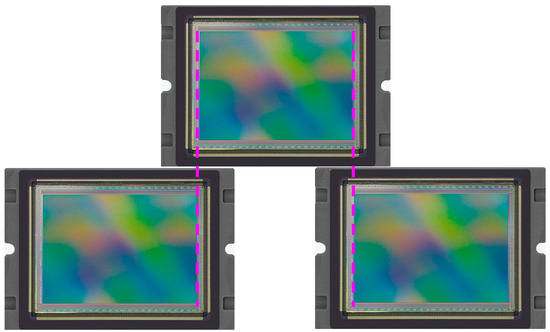

Since the width of the image sensor GSENSE5130 is 21.488 mm, less than that of the image plane, we use three image sensors to splice the image plane in the form of Figure 2. In order to avoid the chink between adjacent sensors in the assembly process, an overlap area of 50 pixels will be set between them. In this case, the width coverage can be calculated as 320.2 km by

where, P1 = 5006, and which are the effective number of pixels of the three sensors, is the ground sample distance calculated by Equation (1) when the focal length is 100 mm.

Figure 2.

Schematic diagram of image plane spliced by three image sensors.

Through geometry calculation, we can get that the circumcircle diameter of the spliced image plane is 77 mm. Then the half-field angle of the optical system can be calculated as follows:

where is the circumcircle diameter of the spliced image plane. We set to 21.5° to have abundant allowance.

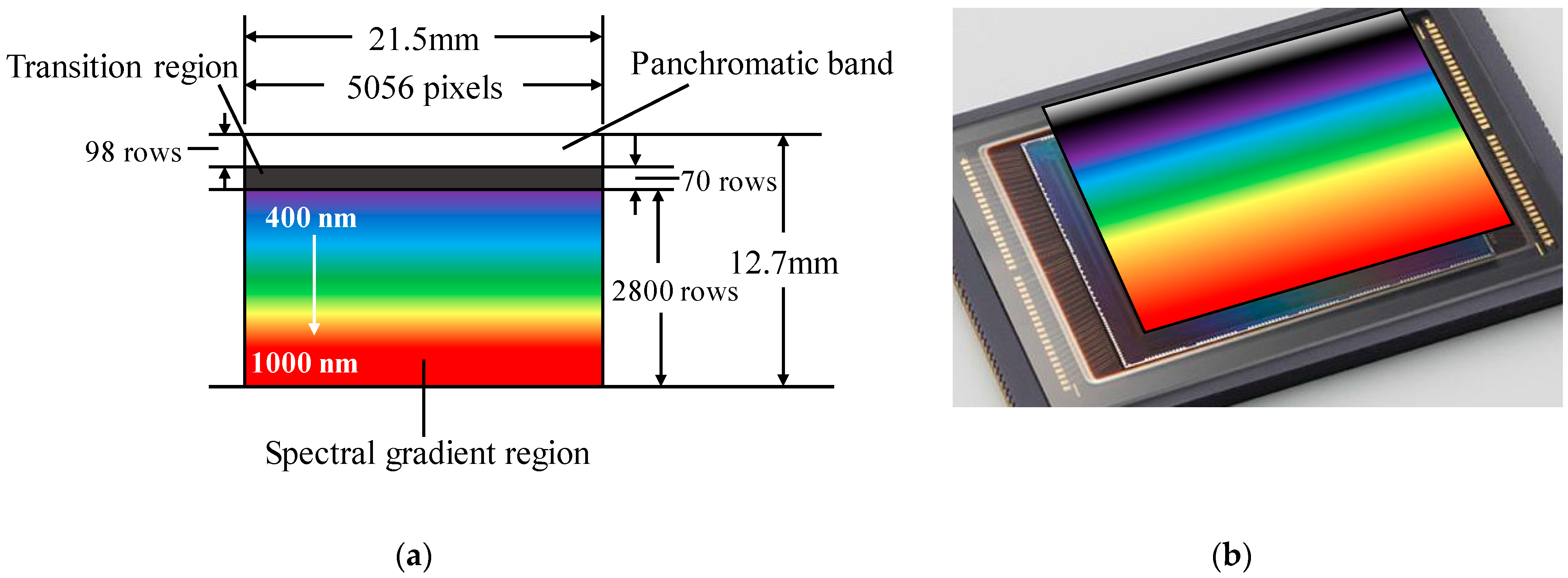

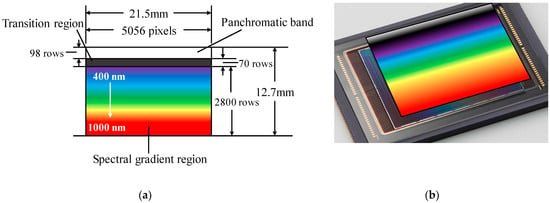

In order to match the detector in size, the size of the linear variable filter we choose is 33.2 mm × 33.2 mm × 1.2 mm and the effective coating area is 21.5 mm × 12.7 mm. The corresponding relationship between the filter and the detector pixels is shown in Figure 3. The area of the panchromatic band corresponds to the first 98 rows pixels of the sensor and the size is about 0.42 mm × 12.7 mm. The next 70 rows of pixels correspond to the transition region of the filter whose size is about 0.3 mm × 12.7 mm. The remaining area of the filter corresponding to the next 2800 rows of pixels is the spectral gradient region in the range of 400–1000 nm. The central wavelength varies along the longitudinal direction of the filter. The full width half maximum (FWHM) of filter is around 5% of the center wavelength and the transmittance is above 90%. Accurate co-registration of bands and different rows of pixels will be realized by spectral calibration. After co-registration of bands and detectors, spectral images can be obtained by integrating the data of corresponding pixels in the push-broom mode. The system can obtain eight spectral images and one panchromatic image at one time and thus can achieve accurate detection [35]. The bandwidth of spectral image can be set between 20 nm and 40 nm according to practical need. However, since the FWHM of the filter increases with the central wavelength increasing, the spectral images in the waveband near 1000 nm will have wider bandwidth. It should be noted that the spectral bands can be reset on orbit because of the use of the linear variable filter.

Figure 3.

(a) The corresponding relationship between filter and detector. (b) schematic of the structure.

In order to ensure the resolution and accuracy of measurement, the modulation transfer function (MTF) of the system should be greater than 0.12 at the Nyquist frequency which can be calculated as 118 lp/mm by

where N is the Nyquist frequency, is the size of the CMOS pixel. The MTF can be calculated as follows [36,37]:

where is the MTF of design value of the optical system, is the MTF of optical system manufacturing which can be set as 0.8 based on engineering experience, is the MTF of detector whose value is 0.5. After calculation, should be greater than 0.3.

High signal-to-noise ratio (SNR) of imaging spectrometer is necessary for nighttime light remote sensing, as it enables system to distinguish the target from the background. The SNR [38] is defined as the ratio of signal power to noise power, which can be calculated by

where Se is the number of signal electronics, is the number of noise electronics, , is the readout noise, , is the dark current.

The number of signal electrons within the integrated period is obtained by:

where is the area of a single pixel, is the integration number of our CMOS whose range is from 1 to 186, is the integration time of the system, is the radiance at the entrance pupil, which can be obtained by the software MODTRAN, is the transmittance of the optical system. We expect to use 15 lenses made of optical glass with a transmittance of 0.995 when the thickness is 10 mm in the spectral range of 500–1000 nm. The transmittance in the range of 400–500 nm will be lower; we must improve the quality of corresponding images by the increase in the integration number or specific image processing algorithm. All surfaces of the lenses will be coated with the anti-reflective film which guarantees a transmittance of more than 0.995 of each surface. Since the average transmittance of the linear variable filter is 0.9, the transmittance of the optical system can be calculated as 0.718. We set as 0.7 to have abundant allowance. is the quantum efficiency of the detector, is the F-number of the optical system, is Plank’s constant, is the frequency. The values of the variables used to calculate the SNR are listed as below: , , .

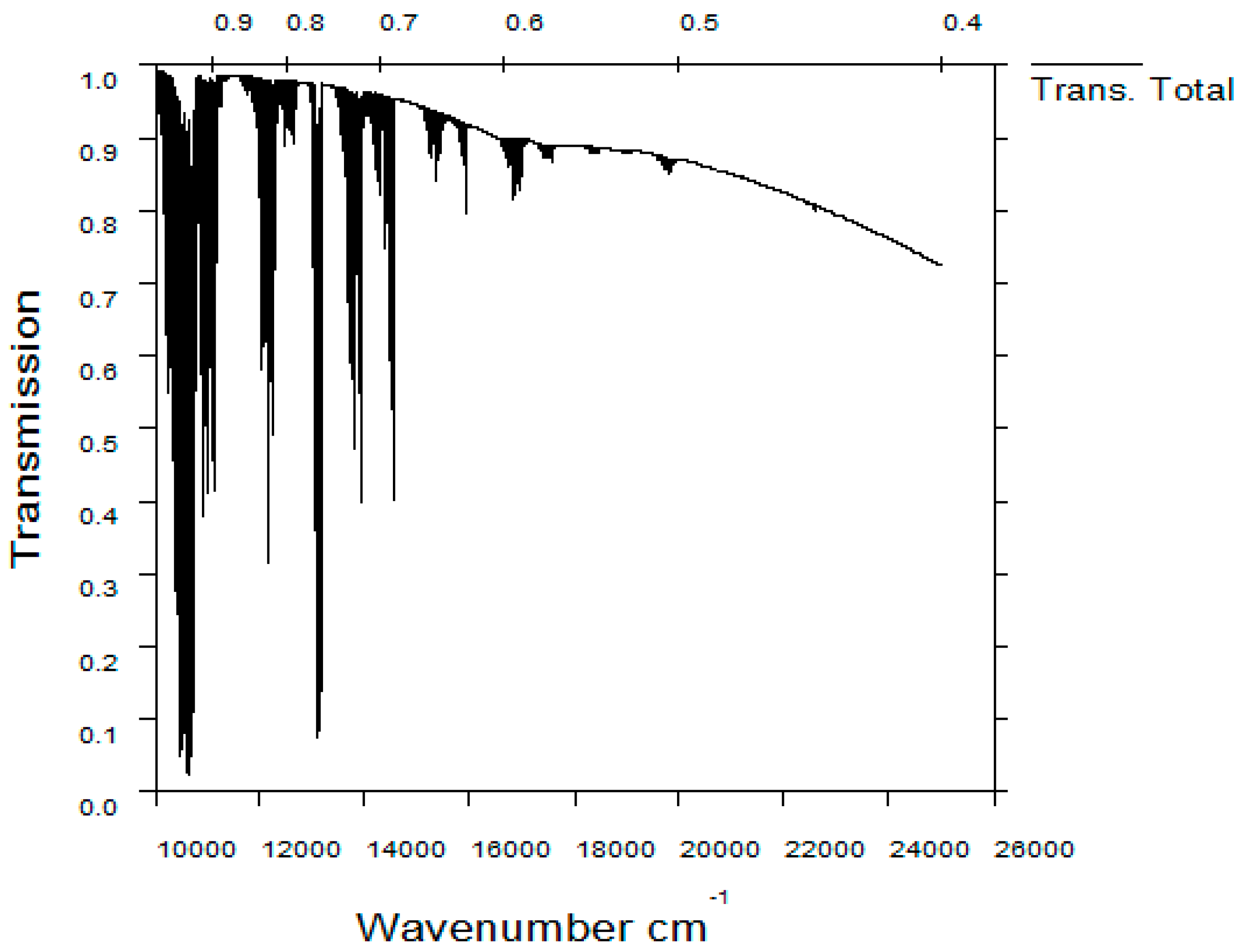

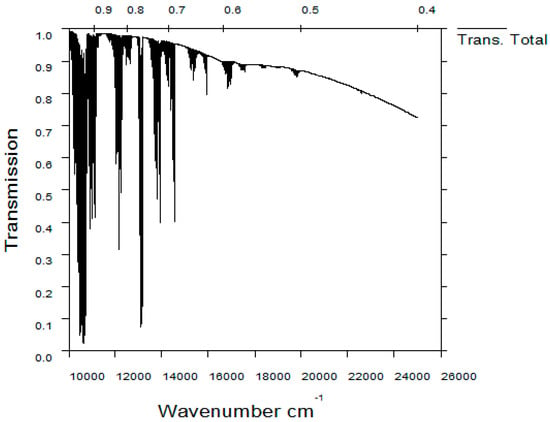

The technical index requires that the SNR of the system is more than 10 when the radiance of the target is 1E-7 W/cm2/sr in each imaging spectral band to distinguish most human activities [22]. The atmospheric transmittance per wavelength in the spectral range of 400–1000 nm obtained from the software MODTRAN is shown in Figure 4. The main parameters used to calculate the transmittance are shown in Table 1. Ignore the band with extremely low transmittance, we take the average transmittance as 85%. According to Figure 4, the wavelength range 400 nm to 450 nm should also be ignored when the radiance of object is quite low due to the poor atmospheric transmittance. Calculated by the above formulas, when F-number and integration number are 4 and 86, respectively, the SNR is 10. When the integration number is 86, the stability of the system should be controlled within 0.001 degree, which is feasible to the existing satellite. Besides, image motion compensation needs to be applied to avoid the image blurring caused by the relative motion between the object and detector. Spatial calibration is also necessary to achieve high-precision detection. In order to balance the performance and volume and weight of the system, we set the F-number to 4. The technical specifications of the optical system are listed in Table 2.

Figure 4.

The atmospheric transmittance per wavelength in the spectral range of 400–1000 nm.

Table 1.

Main parameters of MODTRAN used to calculate transmittance.

Table 2.

Technical Specifications of the optical system.

4. Image Plane Illumination Analysis

Relative illumination is the ratio of the illumination of the edge of the image plane to that of the center point. Nighttime light remote sensing require that the relative illumination of image plane should be greater than 80% for all fields of view to ensure sufficient SNR. However, the image plane illumination decreases as the field of view increases. Since the half field of view of our system is 21.5°, it is necessary to maintain the illumination uniformity of the image plane. In order to take effective measures to solve this problem, it is essential to determine the relation between the image-plane illumination and other parameters of optical system.

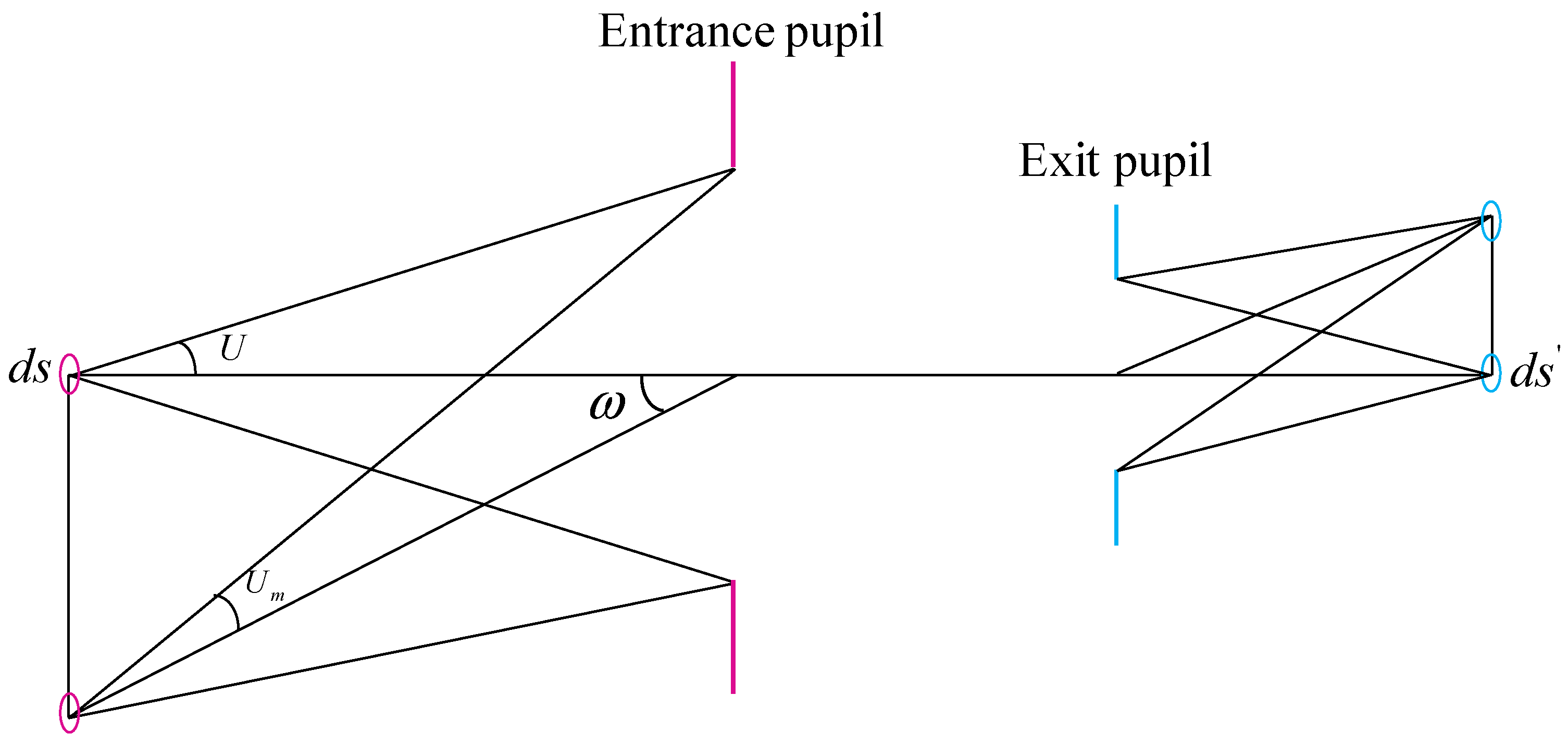

Figure 5 shows the transfer relation between object and image in optical system. Here is a radiation element on the object plane, which is imaged on the image plane as by the optical system. The radiation flux [39] received by the system from is

where is the radiance brightness of the object, is the aperture angle in object space. If the transmittance of the system is , the radiation flux of is

Figure 5.

Transfer relation between object and image in optical system.

Thus, the illumination of is

where is the lateral magnification of the optical system.

For the off-axis field of view, according to the geometric principle, the relationship between on-axis and off-axis aperture angles can be obtained as

Thus, the illumination of off-axis point on image plane is

As analyzed above, the illumination is proportional to the fourth power of the cosine of the angle of half field of view. Therefore, for the optical system with large field of view, the illumination at the edge of image plane is much lower than that at the center position, and we have to adjust the system to improve the uniformity of illumination on image plane.

According to Equation (10), when the half field of view increases, in order to keep the detection ability of the system unchanged, we can only reduce the lateral magnification to improve the illumination. Barrel distortion is the kind of aberration that compresses the edge of the image plane and thus it can make the lateral magnification decrease with the increase in the field of view. The barrel distortion could then be employed to improve the illumination of the edge field of view. However, for an imaging spectrometer based on linear variable filter, too much distortion will lead to sever spectrum crosstalk between different optical channels. Thus, taking the use of barrel distortion to promote the uniformity of image plane illumination is not desirable, and we have to find other ways.

Unlike paraxial optical system, pupil aberrations increase sharply in the optical system with large field of view [40]. Pupil aberrations in the optical system with large field of view will make the location and size of the entrance pupil at the off-axis field of view different from that at the paraxial field of view. Among several pupil aberrations, pupil spherical aberration and pupil coma have the greatest influence on the entrance pupil. Pupil spherical aberration makes the location of the entrance pupil at the edge of the field of view deviate from the ideal position, thus it mainly affects the calibration of the chief ray and has less influence on the relative illumination. Pupil coma can make the beam width of the off-axis field at the entrance pupil larger than that of the center field when both of them completely fill the stop aperture [41]. Such vignetting effect of pupil aberration can ensure that the off-axis object has a larger aperture than the on-axis object, so it is very beneficial to improve the relative illumination. Thus, we will improve the image plane illumination of the edge field of view by taking advantage of pupil coma.

5. Optical System Design and Optimization

5.1. Initial Optical Structure Design

According to the technical specifications of our system calculated in Section 3, we selected an inverse telephoto structure with 13 lenses as the starting point for further design. The first element of the system is set as a negative meniscus lens to compress the angle of the light in the off-axis field of view relative to the optical axis to reduce the diameter of the other elements. Besides, taking a negative meniscus lens as the first element contributes to correct chromatic aberration and generate pupil coma which is helpful to improve the image plane relative illumination. Due to the potential for spectral shift of the linear variable filter, the system should possess a telecentric optical path in image space to ensure that the chief ray in each field of view can be perpendicular incident on the filter and focal plane. In order to realize the telecentric characteristic of the system, a positive lens group is arranged in front of the focal plane to compress the emergent light so that it is parallel to the optical axis. two separated positive and negative lens groups are introduced to the system to emend the field curvature. Since the working band of the system is too wide, it is important to eliminate chromatic aberration and secondary spectrum. In order to achieve excellent chromatic correction, cemented doublet composed of a low dispersion crown glass and a short flint glass is usually used. However, cemented doublet is structurally unstable and rarely used in aerospace camera. Thus, we choose two air-spaced doublets that present the same optical properties in the middle of the system for apochromatism. In order to increase the transmittance of the system, we chose the glass with the high transmittance in the range of 400–1000 nm and controlled the angle between the light and the normal of each surface as small as possible to reduce the reflection of the light. For the purpose of increasing the optimization degrees of freedom, we added two lenses to the system.

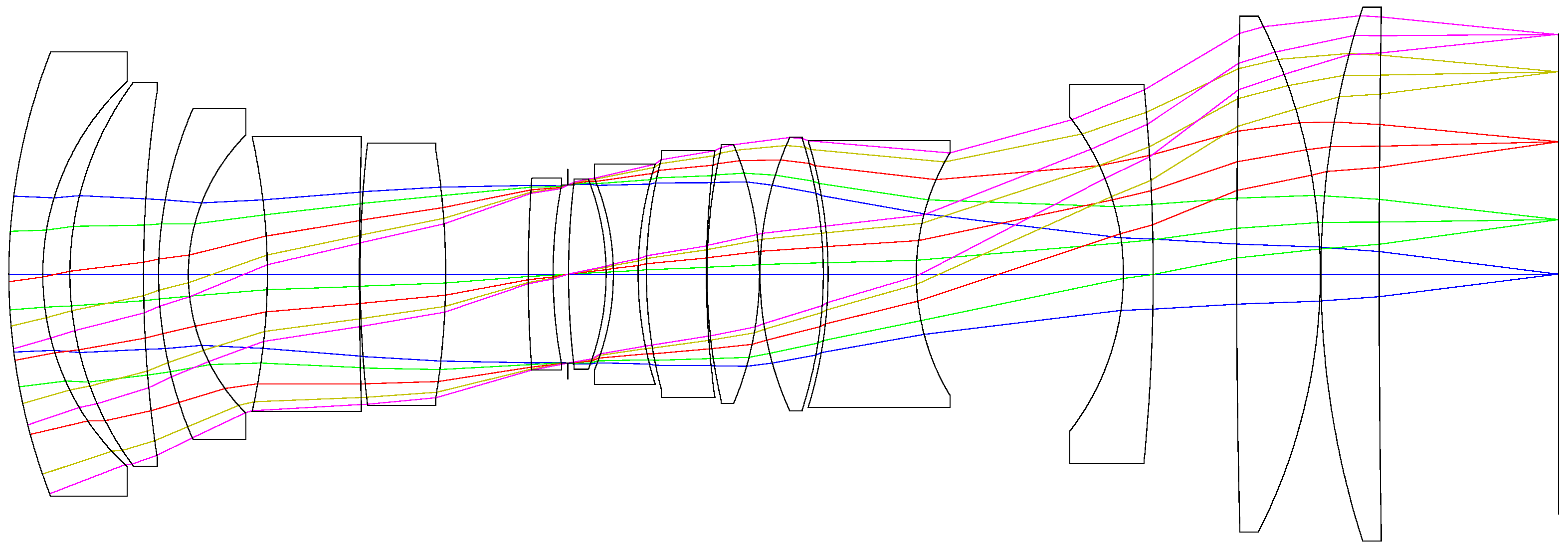

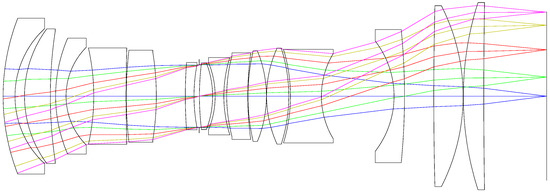

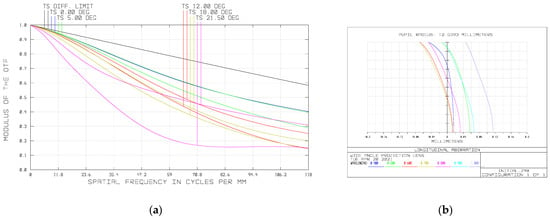

We then optimized the system with optical design software ZEMAX. After several iterations of optimization, the initial optical system was obtained, as shown in Figure 6. This is a quasi-symmetric system with a telecentric light path in image space. The MTF and longitudinal aberration of the system is shown in Figure 7. It can be seen that the MTF of the marginal field of view is very low, which means a poor image quality. We take the chromatic aberration at the pupil of 0.707 as the major reference, because achromatism of such pupil makes the chromatic aberration at the full pupil close to that of the paraxial area and thus achieve the best achromatic effect. In the figure of the longitudinal aberration curves, the wavelengths of 400 nm, 700 nm, 1000 nm do not converge in a point at the pupil of 0.707 and the chromatic aberration of the system at this zone is 0.0718 mm, which means that the system does not achieve apochromatism. To enhance the apochromatic ability, we need to replace the inappropriate glass in the system. Therefore, material selection method is the key to the subsequent design. In this paper, we will use the Buchdahl model to analyze the dispersion characteristics of materials and select proper glass for our system.

Figure 6.

Optical configuration of the initial system.

Figure 7.

(a) MTF; (b) longitudinal aberration of the initial system.

5.2. Buchdahl Dispersion Model

Dispersion is defined as the variation of refractive index with wavelength, and thus accurate description of the refractive index of glass materials is the premise of studying dispersion. The most commonly used model to calculate the refractive index of glass materials is

where is the refractive index when the wavelength is , is the coefficients of glass material.

The dispersive properties of glass can be modeled using the Taylor series expansion, which is expressed as

where , , represents the base wavelength. It can be seen that Equation (11) describes the relationship between the square of refractive index and wavelength, which will cause the calculation process of Equation (12) very complicated. Besides, Equation (12) converges very slowly even for a small value of , leading to a large number of terms to be calculated to obtain accurate result [42]. For the theoretical analysis of dispersive properties, Equations (11) and (12) are too cumbersome for practical application. In order to simplify the calculation process and ensure the accuracy, fast convergent formula of refractive index is essential.

Buchdahl model [43] simplifies the dispersion equation by changing the wavelength into chromatic coordinate , which is defined as

where is a constant that makes Buchdahl refractive index equation converge fast. It can be calculated by

Here the unit of is μm. , which is the Hartman formula constant and can be used to model for all types of glass [44]

By replacing the wavelength with chromatic coordinate, the Buchdahl’s equation for the refractive index is given by

where is the Buchdahl dispersion coefficients, which characterize the dispersion of the glass and the value is unique to each glass. Buchdahl’s equation for the refractive index converges very fast. When used to model the dispersive properties of the glasses, a quadratic equation is sufficient to achieve satisfactory accuracy. Thus, only and are necessary to be determined and the calculation process can be found in reference [43].

5.3. Method of Glass Selection for Apochromatic

As a function of wavelength, dispersion power [45] of an optical glass is defined as

Through proper variables’ transformation, the dispersion power can be expressed by Buchdahl model as

where is called the dispersion coefficient, n is the order of the

equation.

The expression for the lateral chromatic aberration of an optical system with the object at infinity can be expressed by Buchdahl model as

where TAchc is the lateral chromatic aberration, is the dispersion power of the whole system, is the ray height of the i-th lens, is the focal power of the i-th lens, is the focal power of the whole system, m is the number of lenses in the optical system. Taking the quadratic Buchdahl model into Equation (20), we can get the following equations:

where and are the primary and secondary dispersion coefficients of the whole system, and are primary and secondary dispersion coefficients of the i-th lens, is scale factor. If we take the dispersion properties of each glass as a vector in the coordinate system denoted by and , then the vector representing the dispersion of the whole system can be calculated by

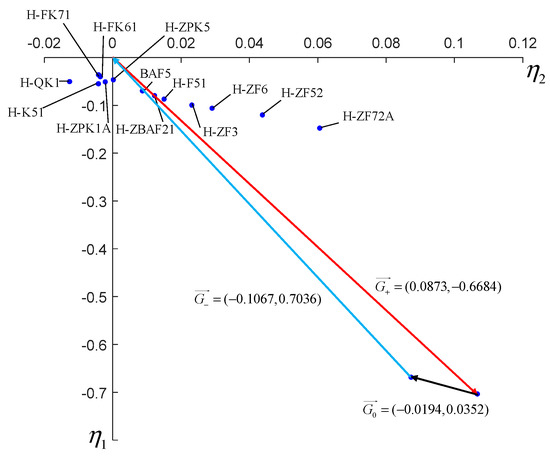

where , . We can then take the module of as the criterion to measure the apochromatic ability of the optical system. In order to obtain an apochromatic optical system, we should adjust the scale factors and change the glass in certain lenses to shorten the module of the vector .

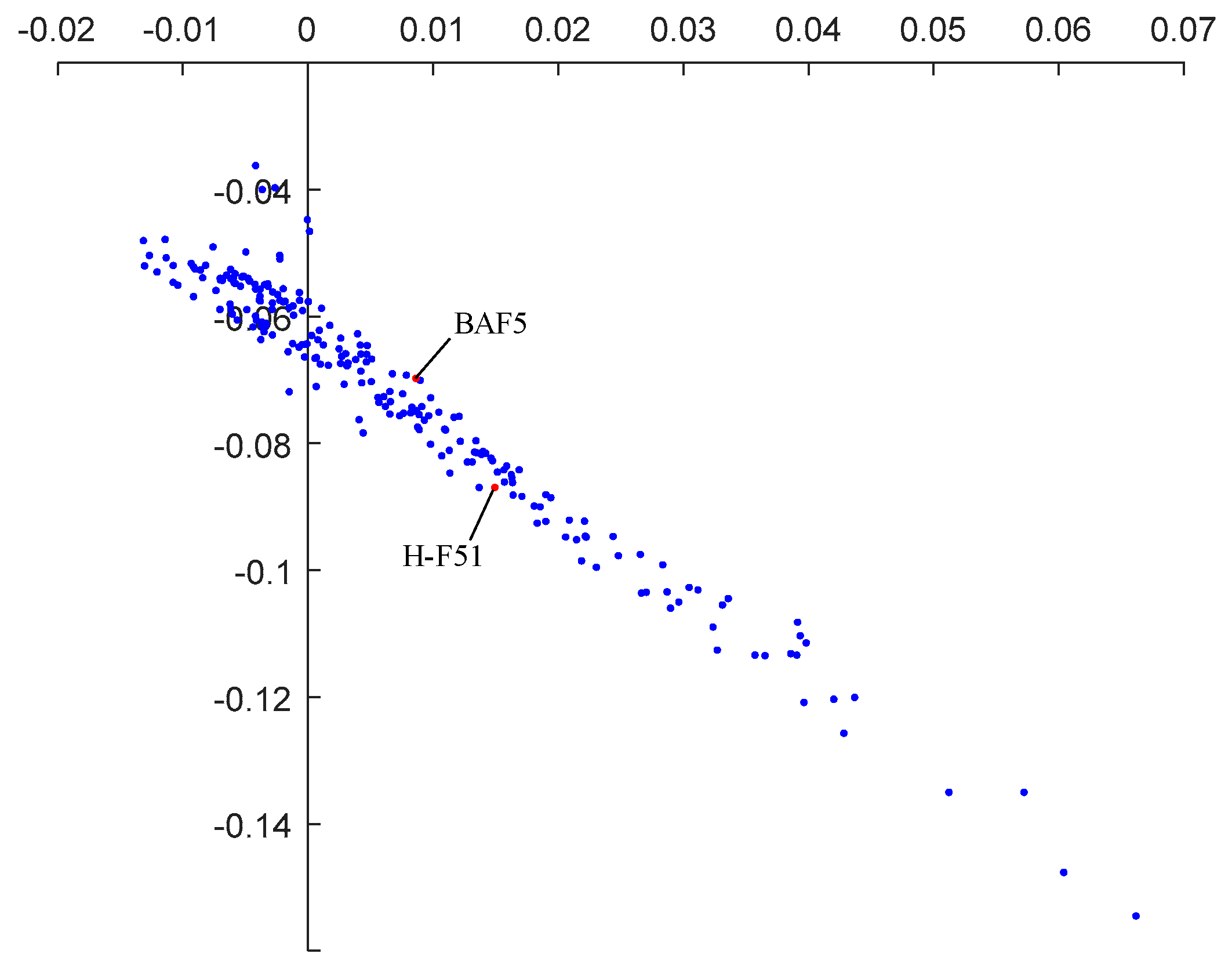

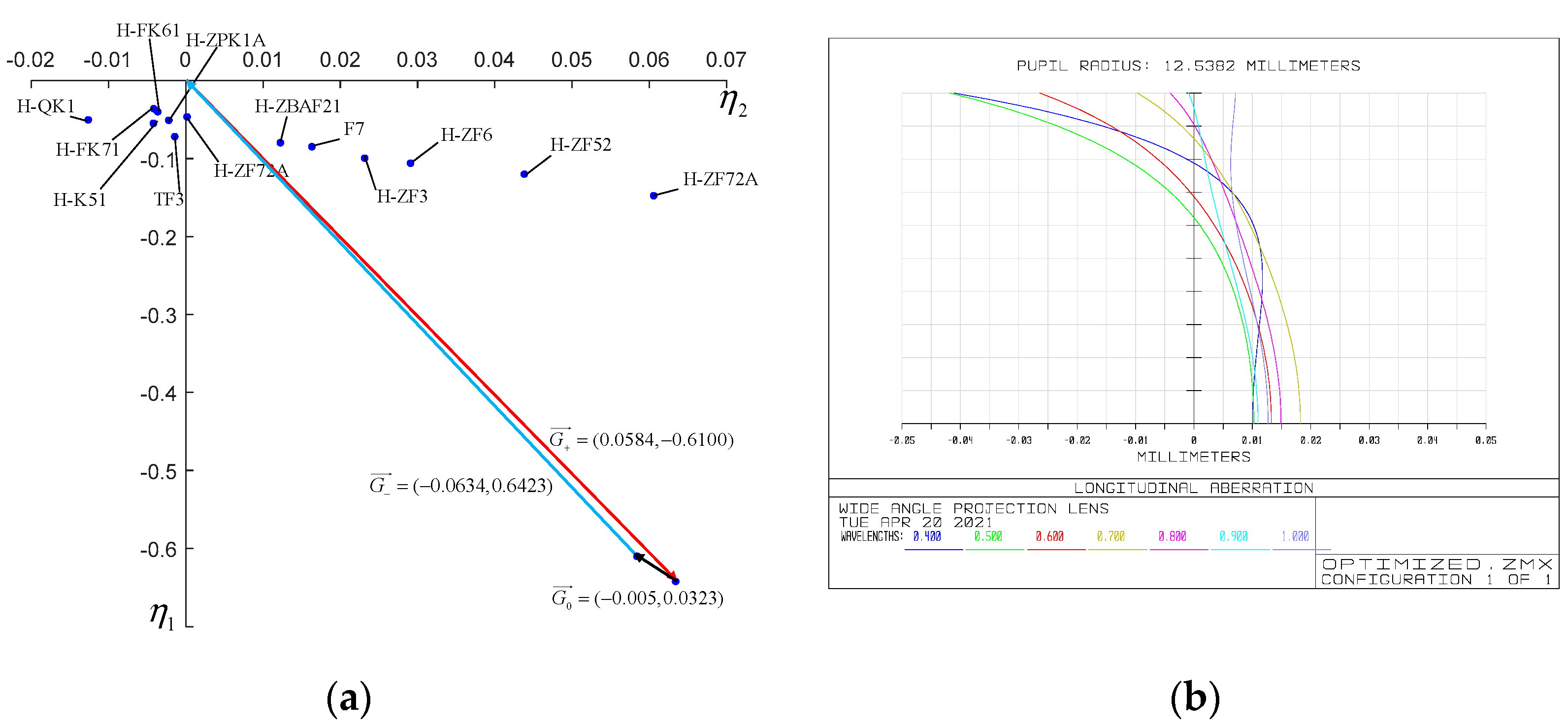

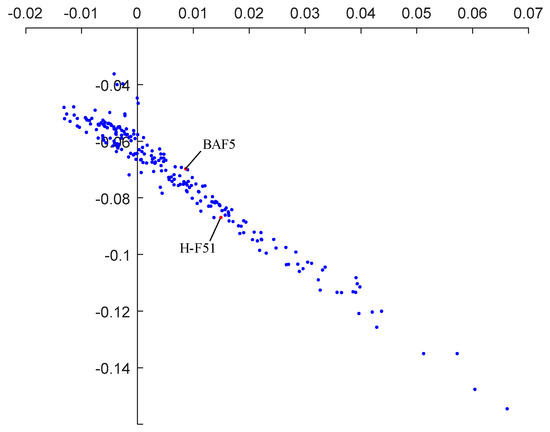

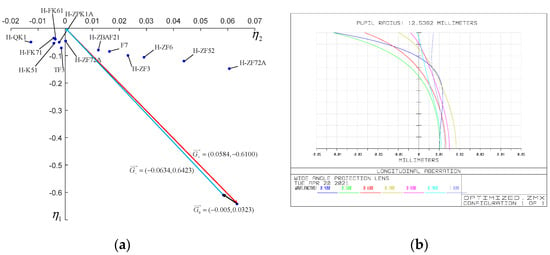

5.4. Dispersion Vector Analysis and Glass Replacement

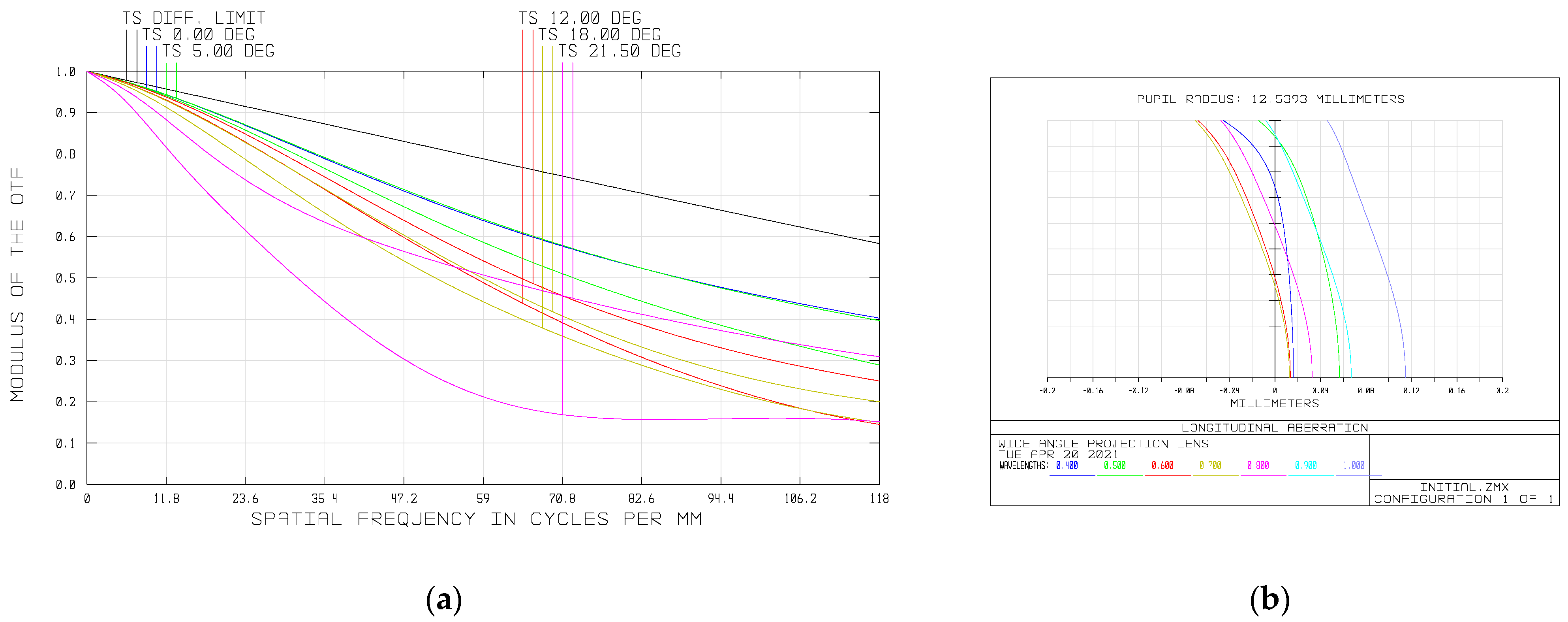

We have conducted raytracing to our initial optical system and the focal power, ray height, refractive index at the reference wavelength, scale factor of dispersion vector and dispersion coefficient of each lens are listed in Table 3. The coordinate of the dispersion coefficient of each lens and the dispersion vector of the whole system in the two-dimensional coordinate system are shown in Figure 6. The dispersion vector of the whole system is and its module is 0.0402. It can be seen from Figure 8 that the sum dispersion vector of the negative lenses is far away from that of the positive lenses, which lead to a large module of and thus an excessive chromatic aberration. In order to reduce the module of , we need to replace the glass material of some lenses. In consideration of the need to maintain the stability of the focal power, the glass far from the origin in the dispersion vector coordinate system cannot be replaced. However, the glass close to the origin has little effect on the chromatic aberration of the system. Thus, we should change the glass in the middle of Figure 8. It can be seen that BAF5, H-ZBAF21, H-F51 and H-ZF3 are in the middle place of Figure 8. However, H-ZF3 and H-ZBAF21 correspond to the second lens and the fifteenth lens of the system, respectively. In our system, the second lens is used to cooperate with the first lens to compress the incident light and reduce the primary aberration and thus has a great influence on the light path. Therefore, the material of the second lens cannot be replaced. As we mentioned in Section 5.1, the fifteenth lens is arranged to compress the emergent light to realize the telecentric characteristic of the system. If the material of the fifteenth lens is changed, the light path will be affected, leading to the loss of telecentric characteristic. BAF5 and H-F51 correspond to the eighth and twelfth lenses, and they are all part of the air-spaced doublets used to reduce chromatic aberration, as mentioned in Section 5.1. Therefore, we choose to replace the glass materials of the eighth and twelfth lenses. The new glass we select should be close to the original ones in the coordinate system so that their coefficients do not differ too much and thus prevent the increase of the other aberrations and excessive change of focal power. Figure 9 shows the dispersion coefficient of all glasses produced by CDGM. According to the principles above, glass materials TF3 and F7 are selected to replace BAF5 and H-F51. After the materials’ replacement, we optimize the system with the software ZEMAX and the raytracing parameters of the optimized system are listed in Table 4. The dispersion vector of the optimized optical system is shown in Figure 10a, from which we can see that the module of the vector is 0.0326, much smaller than the former system. The longitudinal aberration curves of the system are shown in Figure 10b. It can be seen that the wavelengths of 400 nm, 700 nm, 1000 nm converge in a point at the pupil of 0.707. The chromatic aberration and secondary spectrum of the system at the pupil of 0.707 is 0.06 μm and 0.1 μm, respectively, much less than that of the initial system. Thus, the system actualizes the apochromatism.

Table 3.

Ray-tracing parameters of the initial system.

Figure 8.

Dispersion coefficient of each lens and the dispersion vector of the initial system.

Figure 9.

Dispersion coefficient of each lens and the dispersion vector of the initial system.

Table 4.

Ray-tracing parameters of the optimized system.

Figure 10.

(a) Dispersion coefficient of each lens and the dispersion vector of the optimized system; (b) Longitudinal aberration curves of the optimized system.

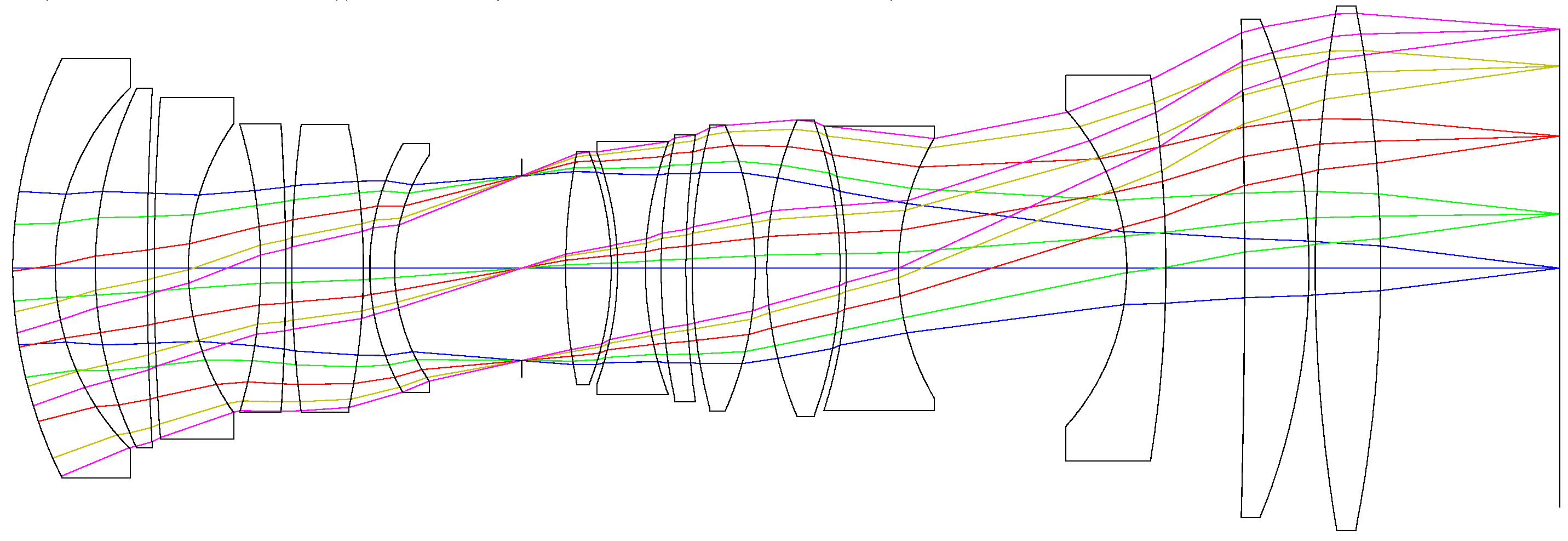

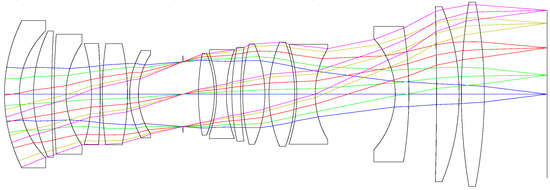

5.5. Final System

The secondary spectrum is only related to the focal length of the whole system and relative partial dispersion and Abbe number of the glass. Therefore, when the focal length and the glass in the system are fixed, the secondary spectrum will not increase when optimizing the structure parameters of the system. The position of the stop aperture plays an important role in determining the lens size and the correction of distortion and field curvature, and thus, we have to adjust it for a better image quality. Besides, we should also alter the shape and bending factors of the lenses to control the distortion of the system at a low level. There must be enough distance between different elements to provide adequate clearance for mechanical spacer and to correct the coma and astigmatism. In order to improve the optical transmittance of the system, all surfaces of the lenses will be coated with the anti-reflective film. The thickness of each lens is controlled within a reasonable range to reduce the weight and improve machinability. We then optimized the system using the software ZEMAX after setting the operands to constrain the geometric parameters and optical characteristics. The total axial length of the final system is about 249 mm, and the weight is 1.03 kg. The transmittance of the final system (including linear variable filter) is 0.723 and 0.463 in the spectral range of 500–1000 nm and 400–500 nm, respectively. As mentioned in Section 3, we will improve the quality of images in the range of 400–500 nm by the increase of the integration number or specific image processing algorithm. The optical layout is shown in Figure 11.

Figure 11.

Optical layout of the final system.

6. Image Performance and Tolerance Analysis

6.1. Image Performance

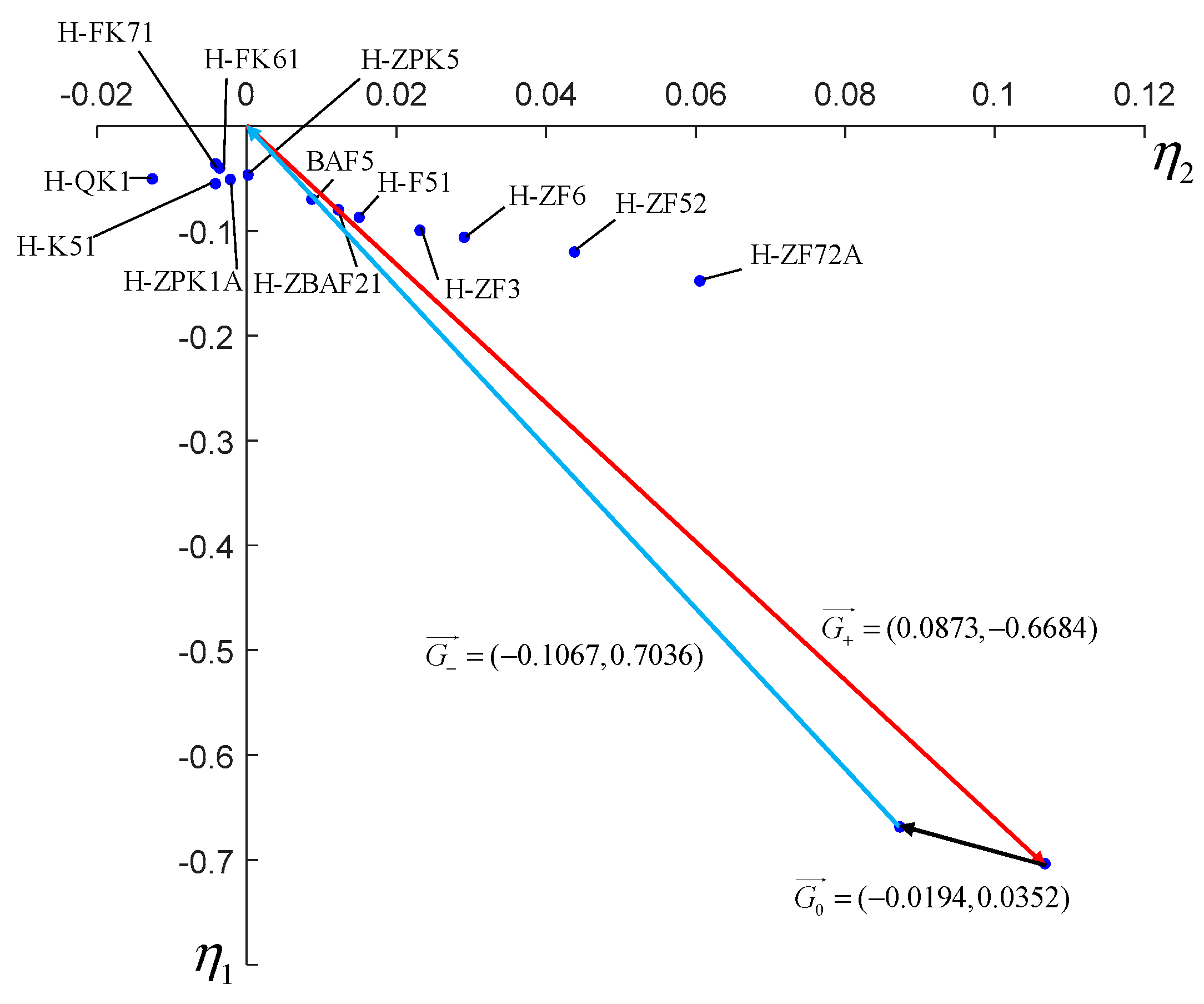

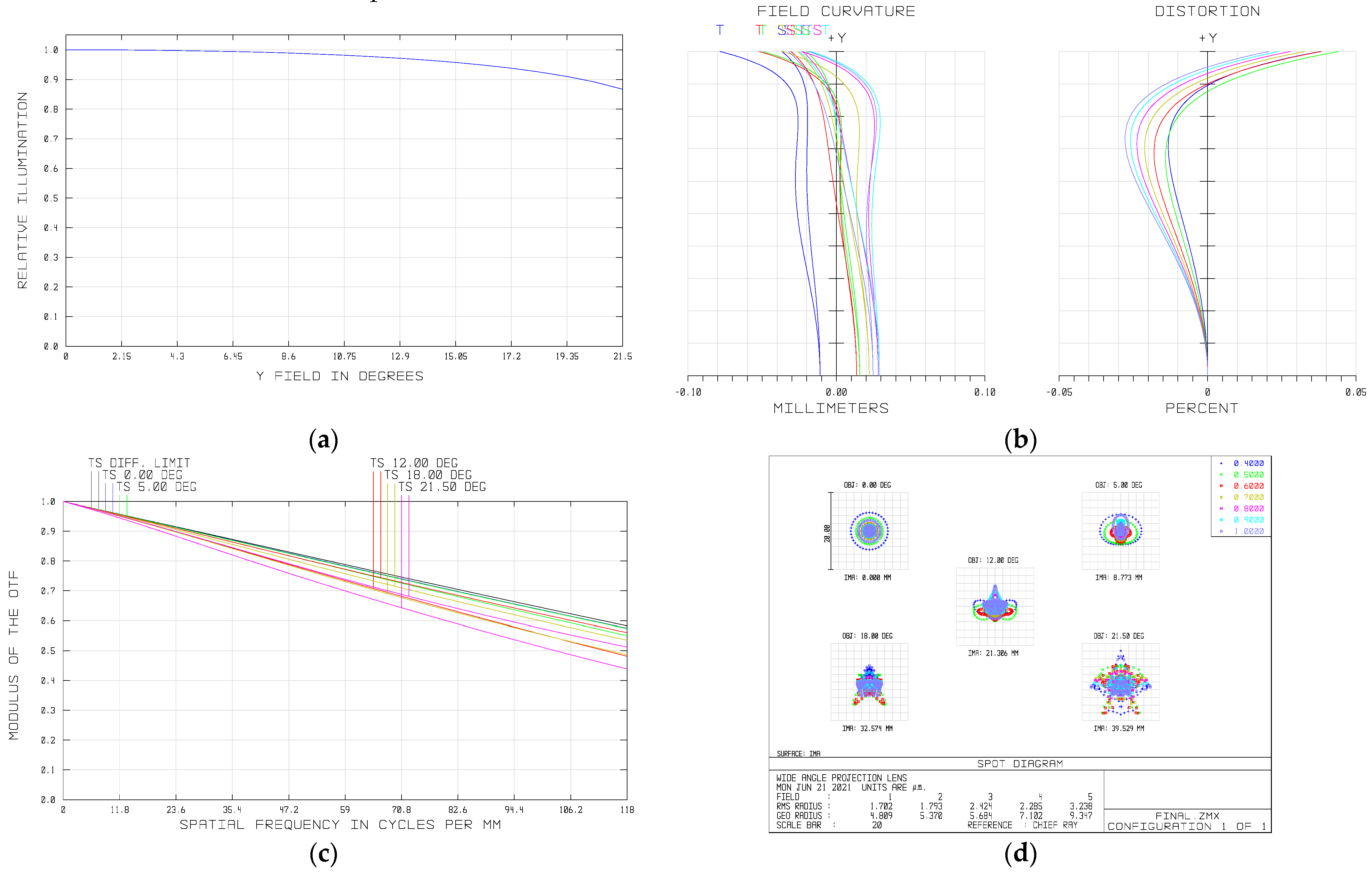

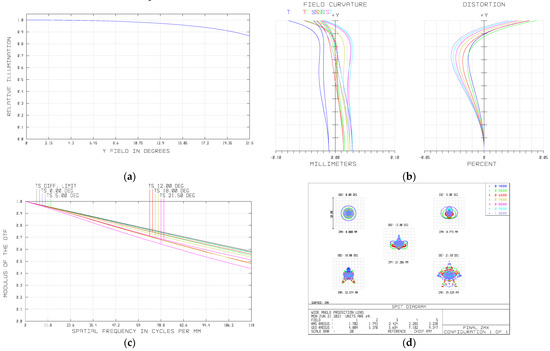

We have known from Section 4 that the relative illumination of the image plane decrease with the fourth power of the cosine value of the half field of view. Our system has a large field of view, and the illumination uniformity must be taken into account. As analyzed in Section 4, we apply to the pupil coma to improve the illumination of the off-axis field of view. The relative illumination curve is shown in Figure 12a, and it can be seen that the relative illumination of the edge field of view is above 85%, better than the design requirement.

Figure 12.

(a) Relative illumination of the image plane; (b) Field curvature and distortion of the final system; (c) MTF of the final system; (d) Spot diagram of the final system.

As an imaging spectrometer, the distortion must be controlled within a reasonable range. Figure 12b shows the curves of the distortion and field curvature of the system, from which we can see that the maximum distortion is less than 0.05% and the distortion correction is excellent, ensuring high-precision detection of the system. The field curvature of all bands is less than 0.08 mm, meaning a good correction.

The MTF represents the image quality of the optical system. As is shown in Figure 12c, the MTF of the full field of view exceeds 0.4 at 118 lp/mm, better than the design requirement. Therefore, the system possesses a good contrast ratio and the image quality of on axis and off axis exhibits good consistency. The spot diagram is shown in Figure 12d. It can be seen that the root mean square (RMS) diameter is less than on single pixel size (4.25 μm) over the whole field of view, meaning a high resolution of the system.

6.2. Tolerance Analysis

Tolerance analysis is used to systematically analyze the influence of slight error or chromatic dispersion on optical system performance. The purpose of tolerance analysis is to determine the types and values of the errors and introduce them into the optical system to analyze whether the system performance meets the practical requirement. In our system, we take the average diffraction MTF at 118 lp/mm as the evaluation criterion and allocate the tolerance as Table 5. According to the result of tolerance analysis, the average diffraction MTF at 118 lp/mm is above a value of 0.26 with a probability of 90%, which means that the system meets the requirement of practical fabrication and alignment.

Table 5.

Tolerance Allocation.

7. Discussion and Conclusions

We have designed an apochromatic optical system with a wide field of view for spectral remote sensing of nighttime light. Compared with the existing nighttime light remote sensors, our system meeting the requirements of high spatial and high spectral resolution, wide working band and wide swath width simultaneously. It can obtain the spectral information of eight bands in the range of 400 nm to 1000 nm with the ground sample distance of 21.5 m while the radiance of the target is above 1E-7 W/cm2/sr. Besides, the width coverage of our system reaches 320 km. We first determined the parameters of the system according to the practical requirement. Then we designed the initial structure of the optical system. In the optimization process, we applied the pupil coma to improve the relative illumination of the edge field of view. In order to achieve apochromatism, we employed the vector operation based on Buchdahl model to analyze the dispersion characteristics of glass and replace the glass materials of certain lenses in the system. After the final optimization, the system possesses excellent image quality. The RMS spot sizes of different field of view are all less than 4.25 μm, within one-pixel size. The MTF is above 0.4 at the Nyquist frequency of 118 lp/mm. The field curvature and distortion are also well corrected. The result of tolerance analysis shows that the system meets the requirement of fabrication and alignment.

The scheme of combining three lenses together can also enlarge the field of view. Such scheme can simplify the process of optical design. However, the volume and weight of the whole system will increase significantly, and the higher stability of the platform will be required. In contrast, our single lens scheme possesses the advantages of compactness and lightweight. Besides, it needs less balancing weight, promoting the stability of the whole system. Another scheme is to introduce aspheric surface to the optical system to use less glass and thus improve the transmittance. However, considering the price of an aspheric lens is about ten times that of spherical lens, the cost will increase sharply. The reflective optical system has a transmittance close to 1 but has a high cost and is difficult to realize the telecentric characteristics.

Author Contributions

Conceptualization, Y.X.; Formal analysis, Y.X.; Funding acquisition, C.L.; Methodology, Y.X.; Project administration, C.L.; Resources, S.L.; Supervision, C.L.; Validation, S.L. and X.F.; Writing—original draft, Y.X.; Writing—review and editing, C.L. All authors have read and agreed to the published version of the manuscript.

Funding

This paper was supported by the National Key Research and Development Program of China (Grant No. 2016YFB0501200).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available on request from the corresponding author. The data are not publicly available due to technical secrets.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Levin, N.; Kyba, C.C.M.; Zhang, Q.L.; de Miguel, A.S.; Roman, M.O.; Li, X.; Portnov, B.A.; Molthan, A.L.; Jechow, A.; Miller, S.D.; et al. Remote sensing of nighttime lights: A review and an outlook for the future. Remote Sens. Environ. 2020, 237, 33. [Google Scholar] [CrossRef]

- Levin, N.; Kyba, C.C.M.; Zhang, Q. Remote Sensing of Nighttime lights-Beyond DMSP. Remote Sens. 2019, 11, 1472. [Google Scholar] [CrossRef] [Green Version]

- Li, X.; Elvidge, C.; Zhou, Y.Y.; Cao, C.Y.; Warner, T. Remote sensing of night-time light. Int. J. Remote Sens. 2017, 38, 5855–5859. [Google Scholar] [CrossRef] [Green Version]

- Zheng, Q.M.; Weng, Q.H.; Wang, K. Developing a new cross-sensor calibration model for DMSP-OLS and Suomi-NPP VIIRS night-light imageries. ISPRS J. Photogramm. Remote Sens. 2019, 153, 36–47. [Google Scholar] [CrossRef]

- Li, D.R.; Zhao, X.; Li, X. Remote sensing of human beings—A perspective from nighttime light. Geo-Spat. Inf. Sci. 2016, 19, 69–79. [Google Scholar] [CrossRef] [Green Version]

- Zhao, M.; Zhou, Y.Y.; Li, X.C.; Cao, W.T.; He, C.Y.; Yu, B.L.; Li, X.; Elvidge, C.D.; Cheng, W.M.; Zhou, C.H. Applications of Satellite Remote Sensing of Nighttime Light Observations: Advances, Challenges, and Perspectives. Remote Sens. 2019, 11, 1971. [Google Scholar] [CrossRef] [Green Version]

- Elvidge, C.D.; Imhoff, M.L.; Baugh, K.E.; Hobson, V.R.; Nelson, I.; Safran, J.; Dietz, J.B.; Tuttle, B.T. Night-time lights of the world: 1994–1995. ISPRS J. Photogramm. Remote Sens. 2001, 56, 81–99. [Google Scholar] [CrossRef]

- Elvidge, C.D.; Baugh, K.E.; Zhizhin, M.; Hsu, F.C. Why VIIRS data are superior to DMSP for mapping nighttime lights. Proc. Asia-Pac. Adv. Netw. 2013, 35, 62–69. [Google Scholar] [CrossRef] [Green Version]

- Baugh, K.; Elvidge, C.; Ghosh, T.; Ziskin, D. Development of a 2009 Stable Lights Product using DMSP-OLS data. Proc. Asia-Pac. Adv. Netw. 2010, 30, 114. [Google Scholar] [CrossRef]

- Elvidge, C.D.; Baugh, K.E.; Dietz, J.B.; Bland, T.; Sutton, P.C.; Kroehl, H.W. Radiance calibration of DMSP-OLS low-light imaging data of human settlements. Remote Sens. Environ. 1999, 68, 77–88. [Google Scholar] [CrossRef]

- Miller, S.D.; Straka, W., III; Mills, S.P.; Elvidge, C.D.; Lee, T.F.; Solbrig, J.; Walther, A.; Heidinger, A.K.; Weiss, S.C. Illuminating the Capabilities of the Suomi National Polar-Orbiting Partnership (NPP) Visible Infrared Imaging Radiometer Suite (VIIRS) Day/Night Band. Remote Sens. 2013, 5, 6717–6766. [Google Scholar] [CrossRef] [Green Version]

- Liao, L.B.; Weiss, S.; Mills, S.; Hauss, B. Suomi NPP VIIRS day-night band on-orbit performance. J. Geophys. Res. Atmos. 2013, 118, 12705–12718. [Google Scholar] [CrossRef]

- Liang, C.K.; Mills, S.; Hauss, B.I.; Miller, S.D. Improved VIIRS Day/Night Band Imagery with Near-Constant Contrast. IEEE Trans. Geosci. Remote Sens. 2014, 52, 6964–6971. [Google Scholar] [CrossRef]

- Shi, K.F.; Yu, B.L.; Huang, Y.X.; Hu, Y.J.; Yin, B.; Chen, Z.Q.; Chen, L.J.; Wu, J.P. Evaluating the Ability of NPP-VIIRS Nighttime Light Data to Estimate the Gross Domestic Product and the Electric Power Consumption of China at Multiple Scales: A Comparison with DMSP-OLS Data. Remote Sens. 2014, 6, 1705–1724. [Google Scholar] [CrossRef] [Green Version]

- Small, C.; Elvidge, C.D.; Baugh, K. Mapping Urban Structure and Spatial Connectivity with VIIRS and OLS Nighttime light Imagery. In Proceedings of the Joint Urban Remote Sensing Event 2013, Sao Paulo, Brazil, 21–23 April 2013; pp. 230–233. [Google Scholar] [CrossRef]

- Hillger, D.; Kopp, T.; Lee, T.; Lindsey, D.; Seaman, C.; Miller, S.; Solbrig, J.; Kidder, S.; Bachmeier, S.; Jasmin, T.; et al. First-Light Imagery from Suomi NPP VIIRS. Bull. Amer. Meteorol. Soc. 2013, 94, 1019–1029. [Google Scholar] [CrossRef]

- Li, X.; Zhao, L.X.; Li, D.R.; Xu, H.M. Mapping Urban Extent Using Luojia 1-01 Nighttime Light Imagery. Sensors 2018, 18, 3665. [Google Scholar] [CrossRef] [Green Version]

- Li, X.; Li, X.Y.; Li, D.R.; He, X.J.; Jendryke, M. A preliminary investigation of Luojia-1 night-time light imagery. Remote Sens. Lett. 2019, 10, 526–535. [Google Scholar] [CrossRef]

- Zheng, Q.M.; Weng, Q.H.; Huang, L.Y.; Wang, K.; Deng, J.S.; Jiang, R.W.; Ye, Z.R.; Gan, M.Y. A new source of multi-spectral high spatial resolution night-time light imagery-JL1-3B. Remote Sens. Environ. 2018, 215, 300–312. [Google Scholar] [CrossRef]

- Levin, N.; Johansen, K.; Hacker, J.M.; Phinn, S. A new source for high spatial resolution night time images—The EROS-B commercial satellite. Remote Sens. Environ. 2014, 149, 1–12. [Google Scholar] [CrossRef]

- Kuechly, H.U.; Kyba, C.C.M.; Ruhtz, T.; Lindemann, C.; Wolter, C.; Fischer, J.; Holker, F. Aerial survey and spatial analysis of sources of light pollution in Berlin, Germany. Remote Sens. Environ. 2012, 126, 39–50. [Google Scholar] [CrossRef]

- Elvidge, C.D.; Cinzano, P.; Pettit, D.R.; Arvesen, J.; Sutton, P.; Small, C.; Nemani, R.; Longcore, T.; Rich, C.; Safran, J.; et al. The Nightsat mission concept. Int. J. Remote Sens. 2007, 28, 2645–2670. [Google Scholar] [CrossRef]

- Xue, Q.S.; Tian, Z.T.; Yang, B.; Liang, J.S.; Li, C.; Wang, F.P.; Li, Q. Underwater hyperspectral imaging system using a prism-grating-prism structure. Appl. Opt. 2021, 60, 894–900. [Google Scholar] [CrossRef] [PubMed]

- Chen, J.J.; Yang, J.; Liu, J.N.; Liu, J.L.; Sun, C.; Li, X.T.; Bayanheshig; Cui, J.C. Optics design of a short-wave infrared prism-grating imaging spectrometer. Appl. Opt. 2018, 57, F8–F14. [Google Scholar] [CrossRef]

- Zhu, C.X.; Hobbs, M.J.; Grainger, M.P.; Willmott, J.R. Design and realization of a wide field of view infrared scanning system with an integrated micro-electromechanical system mirror. Appl. Opt. 2018, 57, 10449–10457. [Google Scholar] [CrossRef] [PubMed]

- Chen, L.; Yuan, Q.; Ye, J.F.; Xu, N.Y.; Cao, X.; Gao, Z.S. Design of a compact dual-view endoscope based on a hybrid lens with annularly stitched aspheres. Opt. Commun. 2019, 453, 8. [Google Scholar] [CrossRef]

- Liang, D.; Liang, D.T.; Wang, X.Y.; Li, P. Flexible fluidic lens with polymer membrane and multi-flow structure. Opt. Commun. 2018, 421, 7–13. [Google Scholar] [CrossRef]

- Grammatin, A.P.; Kolesnik, E.V. Calculations for optical systems, using the method of simulation modelling. J. Opt. Technol. 2004, 71, 211–213. [Google Scholar] [CrossRef]

- Sigler, R.D. Apochromatic color correction using liquid lenses. Appl. Opt. 1990, 29, 2451–2459. [Google Scholar] [CrossRef] [PubMed]

- Sigler, R.D. Glass selection for airspaced apochromats using the Buchdal dispersion equation. Appl. Opt. 1986, 25, 4311–4320. [Google Scholar] [CrossRef]

- Pawlowski, M.E.; Dwight, J.G.; Thuc Uyen, N.; Tkaczyk, T.S. High performance image mapping spectrometer (IMS) for snapshot hyperspectral imaging applications. Opt. Express 2019, 27, 1597–1612. [Google Scholar] [CrossRef]

- Fotios, S.; Gibbons, R. Road lighting research for drivers and pedestrians: The basis of luminance and illuminance recommendations. Lighting Res. Technol. 2018, 50, 154–186. [Google Scholar] [CrossRef]

- Kyba, C.C.M.; Garz, S.; Kuechly, H.; de Miguel, A.S.; Zamorano, J.; Fischer, J.; Holker, F. High-Resolution Imagery of Earth at Night: New Sources, Opportunities and Challenges. Remote Sens. 2015, 7, 1–23. [Google Scholar] [CrossRef] [Green Version]

- Gpixel. Available online: https://www.gpixel.com/ (accessed on 10 June 2021).

- De Meester, J.; Storch, T. Optimized Performance Parameters for Nighttime Multispectral Satellite Imagery to Analyze Lightings in Urban Areas. Sensors 2020, 20, 3313. [Google Scholar] [CrossRef] [PubMed]

- Yao, Y.; Xu, Y.; Ding, Y.; Yuan, G. Optical-system design for large field-of-view three-line array airborne mapping camera. Opt. Precis. Eng. 2018, 26, 2334–2343. [Google Scholar] [CrossRef]

- Zhang, Z.D.; Zheng, W.B.; Gong, D.; Li, H.Z. Design of a zoom telescope optical system with a large aperture, long focal length, and wide field of view via a catadioptric switching solution. J. Opt. Technol. 2021, 88, 14–20. [Google Scholar] [CrossRef]

- Shi, Z.; Yu, L.; Cao, D.; Wu, Q.; Yu, X.; Lin, G. Airborne ultraviolet imaging system for oil slick surveillance: Oil-seawater contrast, imaging concept, signal-to-noise ratio, optical design, and optomechanical model. Appl. Opt. 2015, 54, 7648–7655. [Google Scholar] [CrossRef]

- Mouroulis, P.; Macdonald, J. Geometrical Optics and Optical Design; Oxford University Press: Oxford, UK, 1997. [Google Scholar]

- Reshidko, D.; Sasian, J. Role of aberrations in the relative illumination of a lens system. Opt. Eng. 2016, 55, 12. [Google Scholar] [CrossRef] [Green Version]

- Johnson, T.P.; Sasian, J. Image distortion, pupil coma, and relative illumination. Appl. Opt. 2020, 59, G19–G23. [Google Scholar] [CrossRef]

- Robb, P.N.; Mercado, R.I. Calculation of refractive indices using Buchdahl’s chromatic coordinate. Appl. Opt. 1983, 22, 1198–1215. [Google Scholar] [CrossRef]

- Reardon, P.J.; Chipman, R.A. Buchdahl’s glass dispersion coefficients calculated from Schott equation constants. Appl. Opt. 1989, 28, 3520–3523. [Google Scholar] [CrossRef]

- Chipman, R.A.; Reardon, P.J. Buchdahl’s glass dispersion coefficients calculated in the near-infrared. Appl. Opt. 1989, 28, 694–698. [Google Scholar] [CrossRef] [PubMed]

- Wang, W.; Zhong, X.; Liu, J.; Wang, X.H. Apochromatic lens design in he short-wave infrared band using the Buchdahl dispersion mode. Appl. Opt. 2019, 58, 892–903. [Google Scholar] [CrossRef] [PubMed]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).