Abstract

This paper proposes a high-efficiency super-resolution frequency-modulated continuous-wave (FMCW) radar algorithm based on estimation by fast Fourier transform (FFT). In FMCW radar systems, the maximum number of samples is generally determined by the maximum detectable distance. However, targets are often closer than the maximum detectable distance. In this case, even if the number of samples is reduced, the ranges of targets can be estimated without degrading the performance. Based on this property, the proposed algorithm adaptively selects the number of samples used as input to the super-resolution algorithm, depending on the ranges of targets coarsely estimated using the FFT. That is, the input to the super-resolution algorithm is a number of samples reduced according to the FFT-estimated distance, instead of the maximum number of samples set by the maximum detectable distance. By doing so, the proposed algorithm achieves performance similar to that of the conventional multiple signal classification (MUSIC) algorithm, which is a representative super-resolution algorithm. Simulation results demonstrate the feasibility and performance improvement provided by the proposed algorithm; that is, the proposed algorithm achieves an average complexity reduction compared to the conventional MUSIC algorithm while achieving similar performance. Moreover, the improvement provided by the proposed algorithm was verified in practical conditions, as evidenced by our experimental results.

1. Introduction

Radar sensors are a subject of research in various fields, such as defense, space, and vehicles, given their robustness against several conditions, including wind, rain, fog, light, humidity, and temperature [1,2,3,4,5,6]. Ultra-wideband (UWB) radar systems, with their high resolution and high precision, have been in the spotlight as a representative radar system [3]. UWB radar systems employ a very narrow pulse width and thus require a very wide bandwidth [7,8], which is the reason for their high complexity. Hence, UWB radar systems are mainly used in fields that are less sensitive to cost, such as defense and space [9]. As the application range of radar gradually expands, low-cost, low-complexity systems have been placed in the research spotlight among the many types of radar systems. The continuous-wave (CW) radar is a representative low-complexity radar system [10,11]. CW radar systems use only the difference between the carrier frequencies at the transmitter and receiver to estimate the velocity of the target. Since only the sine wave corresponding to this carrier frequency difference needs to be sampled, the signal can be converted into a digital signal with a low complexity burden. However, the use of CW radar is limited because it cannot measure the distance to the target.

As an alternative, studies on frequency-modulated continuous-wave (FMCW) radar systems have been reported [12,13,14,15,16,17,18]. FMCW radar systems are capable of estimating the range, Doppler, and angle of targets, despite their low-cost, low-complexity hardware, as their signal processing is performed in a low frequency band after mixing. As the applications of radar sensors increase, FMCW radar technology is considered one of the most promising technologies. For example, FMCW radar systems have been applied to surveillance applications [19,20,21]. In [19,20], the authors presented the design and test of a radar sensing platform based on FMCW radar for transport systems. In addition, they showed that a better trade-off can be found between power consumption and detectable range. In [21], the authors proposed a solution to rapidly detect moving targets by utilizing the difference between two FMCW chirp signals. In [22,23,24,25], the FMCW radar was identified as one of the most promising techniques for non-contact monitoring of vital signs, such as heart and respiration rates. In [23], human identification was demonstrated by measuring respiration patterns using an FMCW radar and a deep learning algorithm. In [25], the authors presented a vital sign monitoring system using a 120 GHz FMCW radar. In [26], a low-complexity FMCW radar algorithm was proposed that reduces the dimension of 2D data. Meanwhile, FMCW radar systems have also been utilized for vehicles [27,28,29]. In [27], a randomized switched antenna array FMCW radar was introduced for automotive applications, in an attempt to solve the delay-space coupling problem of traditional switched antenna array systems. In [28], the authors proposed a method to simultaneously detect and classify objects by applying a deep learning model, specifically you only look once (YOLO), to pre-processed automotive FMCW radar signals.

In FMCW radar systems, meanwhile, fast Fourier transform (FFT)-based estimators are widely employed [19]. In [16], a novel direction of arrival (DOA) estimation algorithm was proposed for FMCW radar systems. This algorithm virtually extends the number of arrays using simple multiplications. The FFT is employed in this algorithm, and thus, the computational complexity of this algorithm is very low compared with other high-resolution algorithms. However, it does not provide a large resolution improvement, although the resolution provided by this algorithm is higher than that of conventional FFT-based estimation algorithms. In [18], an algorithm employing only regions of interest in the total samples was proposed, in order to estimate the distance and the velocity of targets in an attempt to reduce redundant complexity. However, an improvement in resolution was not expected, as this algorithm was also based on the FFT. In other words, it is difficult for FFT-based estimators to distinguish between multiple adjacent targets.

To overcome this disadvantage of FFT-based estimators in FMCW radar systems, several algorithms have been proposed. In [30,31,32,33,34,35,36,37,38,39], various super-resolution algorithms have been proposed, such as the multiple signal classification (MUSIC) and estimation of signal parameters via rotational invariance technique (ESPRIT) algorithms. These algorithms employ eigenvalue decomposition (EVD) or singular-value decomposition (SVD) of the correlation matrix obtained from the received signal, in order to distinguish signal and noise subspaces. The parameters corresponding to the desired signals are accurately estimated using the relationship that the subspace of the signal and the subspace of the noise are orthogonal to each other. However, their computational complexity drastically increases as the number of input samples increases. Thus, these algorithms may not be suitable when the number of input samples is large. Oh et al. [35] employed the inverse of the covariance matrix, instead of the EVD or SVD, to reduce the complexity of super-resolution algorithms. However, under a low signal-to-noise ratio (SNR), the performance of this algorithm was significantly degraded [36]. In [37], a low-complexity MUSIC algorithm for DOA estimation was proposed. This algorithm properly uses the trade-off between the field-of-view (FOV) and the angular resolution. Hence, this algorithm attempts to reduce the computational complexity of the MUSIC algorithm. In this case, however, DOA estimation is considered; thus, the number of inputs to the MUSIC algorithm to be considered is not large, as the maximum number of samples is the same as the number of arrays.

To reduce the computational complexity while exploiting the high-resolution features of super-resolution-based estimators, the algorithm proposed in this paper reduces the number of samples used as input to the MUSIC algorithm, based on the beat frequency estimated by the FFT. In other words, in the proposed algorithm, the number of samples is set based on the distance estimated by FFT, instead of the maximum detectable distance (as in the conventional MUSIC algorithm). Because only some of the samples of a given beat signal are used as the input to the MUSIC algorithm, the overall complexity of the proposed algorithm is decreased. In contrast to [37], the number of samples used as input to the MUSIC algorithm is adapted to the estimated distance, rather than being set by a single threshold condition. To this end, we derive how many samples are required to maintain the same performance, as a function of the ratio of the distance estimated by the FFT to the maximum detectable distance. Our simulation results confirm the improvement produced by the proposed algorithm; that is, the proposed algorithm can achieve similar performance to the conventional MUSIC algorithm, despite its considerably lower complexity. Moreover, our experimental results verify that the proposed algorithm can operate well in a real environment.

The remainder of this paper is structured as follows: in Section 2, we describe the system model considered in this study. Then, the proposed low-complexity MUSIC algorithm is described in Section 3. In Section 4, through simulations, the performance of the proposed algorithm is compared to that of the conventional MUSIC algorithm and their computational complexities are evaluated. In Section 5, the experimental setup is introduced and the experiment results are provided, which confirm the performance of the proposed algorithm in practical environments. Finally, we conclude this paper in Section 6.

2. System Model for FMCW Radar Systems

The system model of an FMCW radar system consisting of one transmitting (TX) antenna and one receiving (RX) antenna is considered in this section.

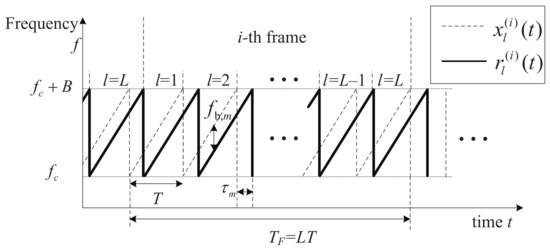

The TX signal at the frame of the FMCW radar is denoted by , which is transmitted from the TX antenna during frames, as shown in Figure 1.

Figure 1. System model of an FMCW radar.

The TX FMCW signal, , is composed of a total of L ramp signals, and is expressed as [17,18]

where is a ramp signal, expressed as

where is the center frequency and is the sweep rate of the ramp signal. Let B and T denote the system bandwidth and the time duration of the ramp signal , respectively. Hence, is calculated as . The TX signal is reflected on M targets and is then received by the RX antenna. Let denote the RX signal corresponding to the ramp signal, expressed as follows:

where is the complex amplitude component corresponding to the target, is the Doppler frequency due to the movement of the target, is the time delay due to the distance between the radar and the target, and is the complex additive white Gaussian noise (AWGN) component. By multiplying the conjugate of (i.e., ) by , the beat signal is obtained and expressed as [37]

After analog/digital conversion (ADC), changes into the sampled beat signal with a sampling time interval , expressed as

where is the beat frequency (i.e., ). Here, the number of total samples is denoted by , and is expressed as

where is the sampling frequency (i.e., ) and is the floor operator. From Equation (6), it can be shown that the number of total samples is determined by the sampling frequency and T. In addition, is determined by the maximum detectable distance . Using the relation between and [40,41], the minimum sampling frequency is as follows:

where c is the speed of the electromagnetic wave. Hence, the minimum number of samples is denoted by , expressed as

For simplicity, the sampled beat signals are considered for only one frame; thus, the frame index i is omitted. For example, , , and are changed to , , and , respectively. By redefining the coefficient term (i.e., ), the sampled beat signal can be simply expressed as [37]

To effectively denote the variables, the variable is expressed, in vector form, as [37]

where is a vector form of (i.e., ), where is the transpose operator, and and are the matrix and vector corresponding to the beat signal and amplitude , respectively, where and are denoted by complex and real matrices, respectively, and is an AWGN vector. The beat signal matrix, , is composed of M beat signal column vectors for , and is expressed as

The amplitude vector and amplitude matrix are expressed as

As the range of the target is estimated based on the time delay , the range of the target can be obtained in the FMCW radar system by estimating the beat frequency of , which is denoted by . Let denote the estimated range, which is calculated using the time delay as follows:

By substituting into Equation (14), can be obtained by estimating , such that:

The range resolution, , is inversely proportional to the TX waveform bandwidth, B, as follows [42]:

In pulsed radar systems, which are among the representative radar systems, the bandwidth determines the range resolution, so increasing T or cannot improve the range resolution. From Equation (17), however, improving the resolution performance in FMCW radar systems requires an increase in the duration of the FMCW TX signal T or an increase in the number of samples for a given (i.e., to decrease ) [40,41]. This implies that the computational complexity inevitably increases when improving the resolution performance.
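As a brief numerical illustration of the relations in this section, the following sketch converts between range and beat frequency and evaluates the bandwidth-limited range resolution. It relies only on the standard FMCW relations implied by the text: a sweep rate equal to B/T, a beat frequency equal to the sweep rate multiplied by the round-trip delay, and a resolution of c/(2B). The function names, the symbol `mu` for the sweep rate, and the ramp duration used in the demonstration are assumptions made for illustration and are not taken from the paper.

```python
C = 299_792_458.0  # speed of light in m/s


def sweep_rate(bandwidth_hz, ramp_time_s):
    """Sweep rate of a linear ramp: bandwidth divided by ramp duration."""
    return bandwidth_hz / ramp_time_s


def beat_from_range(range_m, mu):
    """Beat frequency of a stationary target: sweep rate times round-trip delay."""
    return mu * (2.0 * range_m / C)


def range_from_beat(f_b_hz, mu):
    """Invert the relation above to map a beat frequency back to a range."""
    return C * f_b_hz / (2.0 * mu)


def range_resolution(bandwidth_hz):
    """Bandwidth-limited range resolution, c / (2B)."""
    return C / (2.0 * bandwidth_hz)


if __name__ == "__main__":
    T = 100e-6  # assumed ramp duration in seconds (illustrative, not from the paper)
    for B in (1.54e9, 768e6, 384e6):
        mu = sweep_rate(B, T)
        f_b_max = beat_from_range(50.0, mu)  # beat frequency at a 50 m range
        print(f"B = {B / 1e6:7.1f} MHz: resolution = {range_resolution(B):.3f} m, "
              f"beat frequency at 50 m = {f_b_max / 1e3:.1f} kHz")
```

The beat frequency at the maximum range is what bounds the sampling frequency, which is why the number of samples grows with the maximum detectable distance.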

3. Proposed Super-Resolution Algorithm Using FFT-Estimated Ranges

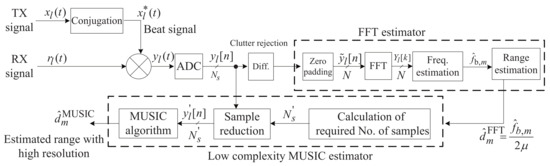

This section describes the proposed low-complexity super-resolution FMCW radar algorithm. Figure 2 shows a block diagram of the proposed algorithm. The proposed algorithm consists of two steps: first, a simple clutter rejection is applied and the ranges are coarsely estimated using the FFT; second, the number of samples used as input to the super-resolution algorithm is reduced using the estimation results from the first step, thus reducing the computational complexity of the super-resolution algorithm. For this study, we employed the MUSIC algorithm, which is a representative super-resolution algorithm, for comparative purposes. The details of each step are provided in the following subsections.

Figure 2. Block diagram of the proposed super-resolution algorithm.

3.1. Simple Clutter Rejection and Coarse Range Estimation Using FFT

As mentioned above, the proposed algorithm performs simple clutter rejection and coarse range estimation using the FFT. To achieve simple clutter rejection, the proposed algorithm determines the difference between and , which are the partial matrices composed of for and , respectively. Let denote the difference between the two partial matrices; that is, . In general, stationary clutter does not generate a Doppler shift. This means that is the same, regardless of l, if the effect of the noise component is ignored. Therefore, in , only the signals corresponding to targets whose velocity is not zero remain. Then, the FFT operation is performed on the sampled beat signal, , in order to estimate the range of targets. For convenience of notation, the ramp signal of is denoted as . The FFT output of is represented by , and is calculated as

where N is the size of the FFT, which is a power of 2. The FFT output is expressed, in vector form, as

where is an matrix for the discrete Fourier transform operation, which consists of L column vectors; that is, , where is the column vector of (i.e., for ). Furthermore, is vector concatenating and for zero-padding (i.e., , where is a zero vector). By peak detection of the magnitude of the FFT outputs, the estimated beat frequency, , can be obtained. By substituting into Equation (15), the estimated range, , is obtained.
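The two operations of this subsection can be sketched as follows, under several assumptions. The clutter rejection is implemented here as the difference between consecutive ramps, which is one plausible reading of the "difference between two partial matrices". The beat signal is simulated without noise, and the sampling frequency is chosen high enough that all beat frequencies fall in the first half of the FFT. The function names and all numerical parameters (ramp duration, Doppler frequency, amplitudes) are hypothetical and chosen only for illustration.

```python
import numpy as np

C = 299_792_458.0  # speed of light in m/s


def simulate_beat(ranges_m, dopplers_hz, amps, mu, fs, n_samples, n_ramps, ramp_time_s):
    """Noise-free sampled beat signals with shape (n_ramps, n_samples)."""
    n = np.arange(n_samples)[None, :]   # fast-time sample index
    l = np.arange(n_ramps)[:, None]     # ramp (slow-time) index
    y = np.zeros((n_ramps, n_samples), dtype=complex)
    for d, f_d, a in zip(ranges_m, dopplers_hz, amps):
        f_b = 2.0 * mu * d / C          # beat frequency of this reflector
        y += a * np.exp(2j * np.pi * (f_b * n / fs + f_d * ramp_time_s * l))
    return y


def reject_clutter(y):
    """Subtract consecutive ramps; reflectors without Doppler cancel out."""
    return y[1:] - y[:-1]


def coarse_range(y_ramp, mu, fs, n_fft=1024):
    """Zero-padded FFT of one ramp, peak detection, and beat-to-range conversion."""
    spectrum = np.abs(np.fft.fft(y_ramp, n=n_fft))
    k = int(np.argmax(spectrum[: n_fft // 2]))  # assumes beat frequencies below fs / 2
    return C * (k * fs / n_fft) / (2.0 * mu)


if __name__ == "__main__":
    B, T, fs = 384e6, 100e-6, 4e6       # assumed bandwidth, ramp time, sampling rate
    mu = B / T
    # Strong stationary clutter at 30 m and a weaker moving target at 7.1 m.
    y = simulate_beat([30.0, 7.1], [0.0, 500.0], [5.0, 1.0], mu, fs,
                      n_samples=400, n_ramps=8, ramp_time_s=T)
    print("without clutter rejection:", coarse_range(y[0], mu, fs))
    print("with clutter rejection:   ", coarse_range(reject_clutter(y)[0], mu, fs))
```

Without the subtraction, the peak detector locks onto the stronger stationary reflector; after the subtraction, only the reflector with a non-zero Doppler remains, and its range is recovered to within one FFT bin.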

3.2. Fine Range Estimation Using MUSIC

As shown in Figure 2, after coarse range estimation using the FFT, fine range estimation is performed by the MUSIC algorithm, which achieves a significantly higher resolution than the FFT by using the orthogonality between the noise and signal subspaces. Let denote the matrix form of the beat signal (i.e., ). Let denote the correlation matrix of , expressed as follows [18]:

where is the Hermitian operator and is the element at the row and column of . By performing SVD on , the signal and noise subspaces can be separated as [18]

where is the subspace of the signal (i.e., ); corresponds to the subspace of the AWGN component (i.e., ); and is a diagonal matrix based on eigenvalues (i.e., , where is the eigenvalue of and is the diagonal matrix operator). The eigenvalue, , is given as

where corresponds to the eigenvalue of the considered signal part and corresponds to the noise variance. The region of the beat frequency is divided into a grid of values (i.e., ), where and are the considered minimum and maximum values of the beat frequency, respectively. Hence, the steering vector is denoted by and expressed as , where for . We employ the orthogonal property between the steering vector and the subspace of the noise term , as follows:

Therefore, using Equation (23), the pseudo-spectrum of the MUSIC algorithm, , is calculated as

By using Equation (15) and the estimated beat frequency with high resolution in Equation (24), close targets that could not be distinguished in the FFT-based estimation can be successfully distinguished.
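The MUSIC steps described above (correlation matrix, subspace separation by SVD, steering vectors on a frequency grid, and the pseudo-spectrum) can be sketched as follows. This is a generic textbook form rather than the authors' implementation. It assumes that the snapshots are the columns of the input matrix, that the number of targets is known, and that the target amplitudes vary across snapshots so that the signal subspace has full rank. The function names `music_spectrum` and `pick_peaks`, and the normalized frequencies in the demonstration, are illustrative.

```python
import numpy as np


def music_spectrum(snapshots, n_targets, freq_grid_hz, fs):
    """MUSIC pseudo-spectrum on a frequency grid.

    snapshots: complex array of shape (n_samples, n_snapshots).
    """
    n_samples, n_snap = snapshots.shape
    r = snapshots @ snapshots.conj().T / n_snap   # sample correlation matrix
    u, _, _ = np.linalg.svd(r)                    # singular values in descending order
    u_noise = u[:, n_targets:]                    # noise subspace
    n = np.arange(n_samples)[:, None]
    steering = np.exp(2j * np.pi * freq_grid_hz[None, :] * n / fs)
    proj = u_noise.conj().T @ steering            # projection onto the noise subspace
    return 1.0 / np.sum(np.abs(proj) ** 2, axis=0)


def pick_peaks(spectrum, n_peaks):
    """Indices of the n_peaks largest local maxima, in ascending order."""
    mid = spectrum[1:-1]
    is_peak = (mid > spectrum[:-2]) & (mid >= spectrum[2:])
    idx = np.flatnonzero(is_peak) + 1
    best = idx[np.argsort(spectrum[idx])[::-1][:n_peaks]]
    return np.sort(best)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n_samples, n_snap = 64, 200
    f_true = np.array([0.200, 0.208])             # spacing of about half an FFT bin (1/64)
    n = np.arange(n_samples)[:, None]
    a = np.exp(2j * np.pi * f_true[None, :] * n)  # beat signal matrix, one column per target
    s = (rng.standard_normal((2, n_snap)) + 1j * rng.standard_normal((2, n_snap))) / np.sqrt(2)
    w = 0.03 * (rng.standard_normal((n_samples, n_snap))
                + 1j * rng.standard_normal((n_samples, n_snap)))
    grid = np.linspace(0.15, 0.25, 2001)
    p = music_spectrum(a @ s + w, n_targets=2, freq_grid_hz=grid, fs=1.0)
    print("estimated normalized beat frequencies:", grid[pick_peaks(p, 2)])
```

The cost of this procedure is dominated by the decomposition of an n_samples by n_samples matrix, which is why the number of input samples matters so much for the overall complexity.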

In this procedure, the proposed algorithm performs the MUSIC algorithm for only a part of , instead of performing the MUSIC algorithm for all samples of . As shown in Equation (7), the sampling frequency, , is determined by . However, as this is based on the worst case (i.e., ), can be reduced if the target is not . The proposed algorithm employs the reduced sampling frequency based on , instead of . The reduced sampling frequency, , is calculated as

In most cases, is smaller than . In Equation (17), by setting the ratio of and equal to the ratio of and , the proposed algorithm achieves the same as the case based on with .
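The sample reduction described above can be sketched as a decimation of the sampled beat signal. The sketch assumes that the reduced sampling frequency scales linearly with the ratio of the estimated range to the maximum detectable range, and that the reduction is realized by keeping every k-th sample with an integer factor k; the exact rule used in the paper may differ. The function names and the `margin` parameter are hypothetical.

```python
import numpy as np


def decimation_factor(d_est_m, d_max_m, margin=1.0):
    """Integer factor by which the sampling frequency can be lowered.

    margin > 1 keeps extra headroom above the coarsely estimated range
    (hypothetical parameter, for illustration only).
    """
    ratio = d_max_m / (margin * d_est_m)
    return max(1, int(np.floor(ratio)))


def reduce_samples(y, factor):
    """Keep every factor-th sample along the last (fast-time) axis."""
    return y[..., ::factor]


if __name__ == "__main__":
    d_max, d_est = 50.0, 12.5
    k = decimation_factor(d_est, d_max)
    y = np.exp(2j * np.pi * 0.05 * np.arange(400))  # one sampled beat tone
    y_reduced = reduce_samples(y, k)
    print(f"factor = {k}, samples: {y.size} -> {y_reduced.size}")
```

Because the ramp duration is unchanged, the reduced signal spans the same observation time with fewer samples. Rounding the factor down keeps the reduced sampling frequency at or above the value implied by the estimated range.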

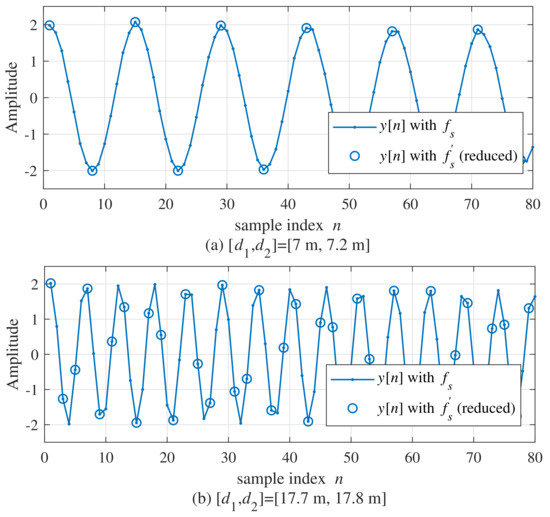

Figure 3 compares the waveforms of between (reduced) and . The ranges of the two targets are 7 and 7.2 m in Figure 3a and 17.7 and 17.8 m in Figure 3b, respectively. In this simulation, the considered maximum range was set to 50 m and the range estimated by FFT was 7.1 m in Figure 3a. Hence, can be set about 2.82 times lower than . In the case of Figure 3b, as the range estimated by FFT was 17.75 m, can be set about 1.94 times lower than .

Figure 3. Comparison of waveforms between with (reduced) and ((a), (b)).

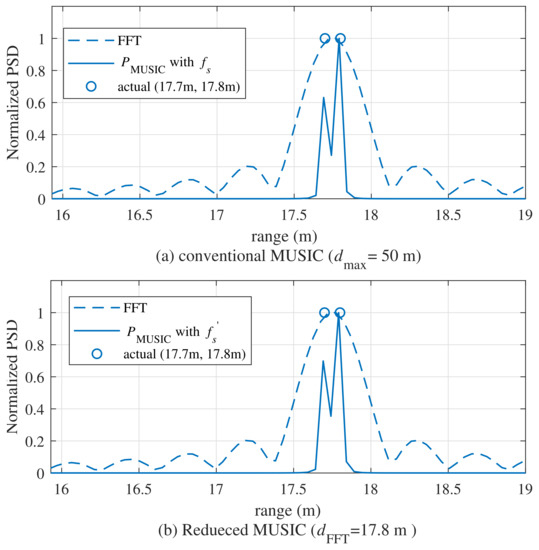

Figure 4 compares the range estimation results by between the conventional MUSIC algorithm and the proposed algorithm with a reduced sample, where and . In Figure 4, as can be observed from the power spectral density (PSD) result using the FFT, the two targets were estimated as a single target. In contrast to the FFT, both the conventional MUSIC algorithm and the proposed MUSIC algorithm could resolve the two adjacent targets. In Figure 4b, the proposed MUSIC algorithm achieved similar estimation performance to the conventional MUSIC algorithm, despite using a reduced number of samples.

Figure 4. Comparison of between the conventional MUSIC algorithm and the reduced-sample MUSIC algorithm: (a) conventional MUSIC algorithm; (b) reduced-sample MUSIC algorithm.

4. Performance Evaluation

4.1. Simulation Results

Here, we discuss the results of our simulations, which were conducted to verify the performance of the proposed super-resolution algorithm. For the simulations, the parameters and the maximum distance were set to 24 GHz and 50 m, respectively. The number of targets was set to 2 (i.e., ) and the ranges of the two targets, and , were selected to be independent and uniformly distributed between 1 and . For the initial estimate, we performed a 1024-point FFT. To generate various sample sizes, the bandwidth B was set to 1.54 GHz, 768 MHz, and 384 MHz (leading to ). As a measure of the performance difference between the conventional high-complexity algorithm and the proposed low-complexity algorithm, we calculated the root mean square error (RMSE) of the range estimation. To calculate the RMSE, we performed simulations. The RMSE was calculated as .
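For reference, the RMSE of the range estimates over a set of trials can be computed as in the following minimal sketch. It uses the standard definition and assumes that the error is averaged over both trials and targets and that the estimates are matched to the true ranges by sorting. The function name `range_rmse` and the array layout are assumptions made for illustration.

```python
import numpy as np


def range_rmse(true_ranges_m, est_ranges_m):
    """RMSE over trials and targets; inputs have shape (n_trials, n_targets)."""
    t = np.sort(np.asarray(true_ranges_m, dtype=float), axis=-1)
    e = np.sort(np.asarray(est_ranges_m, dtype=float), axis=-1)
    return float(np.sqrt(np.mean((e - t) ** 2)))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    d_true = rng.uniform(1.0, 50.0, size=(1000, 2))              # two targets per trial
    d_est = d_true + 0.05 * rng.standard_normal(d_true.shape)    # synthetic estimation error
    print(f"RMSE = {range_rmse(d_true, d_est):.3f} m")
```

Sorting both arrays per trial avoids penalizing an estimator that returns the two targets in the opposite order.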

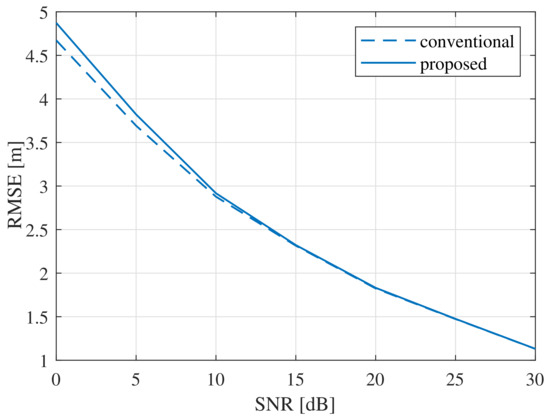

Figure 5 shows the RMSE of the range estimations by the conventional and proposed MUSIC algorithms. In the low-SNR region (i.e., SNR = 0 dB), the RMSE of the proposed algorithm was about 4.5% higher, compared with that of the conventional MUSIC algorithm. However, the RMSE results of the two algorithms were almost the same when SNR ≥ 0 dB, despite the significantly lower computational complexity of the proposed MUSIC algorithm.

Figure 5. RMSE comparison for various sample sizes: .

4.2. Complexity Comparison

In this section, the computational complexity of the proposed and conventional MUSIC algorithms was analyzed and compared. To measure the complexity of each algorithm, we compared the required number of multiplications for the generation of noise subspace and the SVD operation [43]. Let and denote the required number of multiplications in the conventional MUSIC algorithm and the proposed MUSIC algorithm, respectively. The case of the conventional algorithm, , was calculated as follows:

For the proposed MUSIC algorithm, the number of samples decreases with the initial estimated range ; however, for the initial range estimation, the FFT operation is additionally required. Hence, was calculated as

where is the reduced number of samples (i.e., ).
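The trend of this comparison can be illustrated with a deliberately simplified cost model. The sketch assumes that the subspace decomposition of an N-sample input costs on the order of N³ multiplications, that an N_FFT-point FFT costs (N_FFT/2)·log2(N_FFT) multiplications, and that the reduced number of samples scales linearly with the ratio of the estimated range to the maximum range. These are generic textbook orders of magnitude and not the exact multiplication counts of the paper; the function names and the sample count in the demonstration are hypothetical.

```python
import math


def conventional_cost(n_samples):
    """Assumed cubic cost of the decomposition on the full sample set."""
    return n_samples ** 3


def proposed_cost(n_samples, d_est_m, d_max_m, n_fft=1024):
    """Assumed cost with a range-dependent sample reduction plus the initial FFT."""
    n_reduced = min(n_samples, math.ceil(n_samples * d_est_m / d_max_m))
    fft_cost = (n_fft // 2) * int(math.log2(n_fft))
    return n_reduced ** 3 + fft_cost


def reduction(n_samples, d_est_m, d_max_m, n_fft=1024):
    """Relative saving of the proposed scheme under the assumed cost model."""
    return 1.0 - proposed_cost(n_samples, d_est_m, d_max_m, n_fft) / conventional_cost(n_samples)


if __name__ == "__main__":
    n_samples, d_max = 512, 50.0  # illustrative values
    for d_est in (10.0, 20.0, 50.0):
        print(f"d_est = {d_est:4.1f} m: reduction = {100 * reduction(n_samples, d_est, d_max):.2f} %")
```

Under this model, the saving grows rapidly as the estimated range shrinks, while at the maximum range the proposed scheme is slightly more expensive because of the additional FFT.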

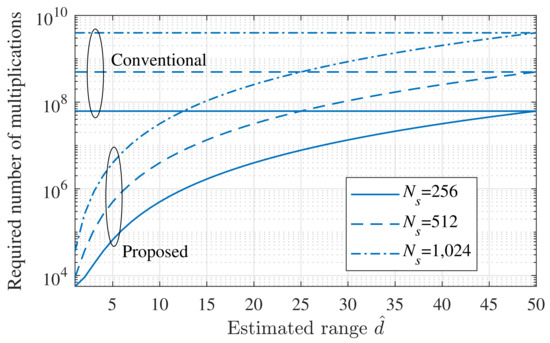

Figure 6 shows and , with respect to the initial estimation of the range. In the worst case (i.e., ), the complexity of the two algorithms was almost the same. However, as decreased, compared with , the complexity drastically decreased. In the case , the proposed algorithm achieved a 99.17% complexity reduction, compared with the conventional algorithm. In addition, when , the proposed algorithm achieved a 93.33% complexity reduction, compared with the conventional algorithm. In the general case, as d is smaller than , the complexity of the proposed algorithm was expected to be significantly lower, compared with the existing MUSIC algorithm. Assuming that the target distance was uniformly distributed between 1 m and , the average range was . Assuming these conditions, the complexity of the proposed algorithm was reduced by about , compared with the conventional algorithm.

Figure 6. Required number of multiplications, according to the estimated range .

5. Experiments

In this section, we describe the experiments we conducted using a real FMCW radar system, in order to verify the performance of the proposed MUSIC algorithm in a practical environment. First, we introduce the modules and equipment used in the experiment and their specifications; then, the measurement results are provided and analyzed.

5.1. Experimental Set-Up

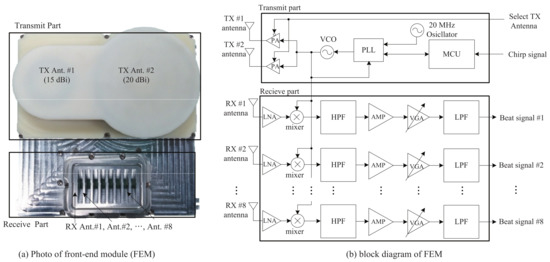

Figure 7a,b show photos of the actual structure of the front-end module (FEM). As shown in Figure 7a,b, the FEM was composed of two parts, a TX part and an RX part. Two TX antennas were located on the top of the FEM, with gains of 15 and 20 dBi. The azimuth and elevation angles of the RX antennas were 99.6° and 9.9°, respectively. A power amplifier (PA), voltage-controlled oscillator (VCO), phase-locked loop (PLL), 20 MHz oscillator, and micro-controller unit (MCU) were included in the TX part. The frequency synthesizer with the PLL was controlled by the MCU, and one of the two TX antennas was selected. The azimuth angles of the first and second TX antennas were 26° and 12°, respectively. The RX part was located at the bottom of the FEM. There were eight RX antennas, and the distance between two adjacent RX antennas was half a wavelength. In the RX part, low-noise amplifiers (LNAs) were included for noise reduction. In addition, a mixer to obtain the beat signals and two kinds of filters, namely high-pass filters (HPFs) and low-pass filters (LPFs), were included. The amplifier (AMP) was used to amplify the weak signal, and the variable-gain amplifier (VGA) was used to control the gain according to the input. The RX signals from the RX antennas passed through the LNAs, which reduced the noise terms in the RX signals. Then, the output of each LNA was multiplied by the TX signal, synchronized by the PLL. The mixed signals were input to the 150 kHz HPFs and then amplified by the AMPs. Finally, the outputs of the AMPs were passed through the 1.7 MHz LPFs.

Figure 7. Photo and structure of the FEM.

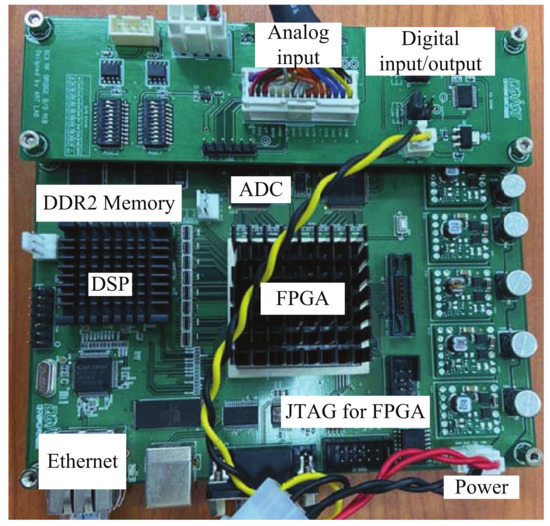

Figure 8 shows a photo of the back-end module (BEM). As shown in Figure 8, a field-programmable gate array (FPGA) and a digital signal processor (DSP) were included in the BEM. The analog signal from the FEM was converted into a digital signal, at a 20 MHz sample rate, using an analog-to-digital converter (ADC). The converted signal was stored in two 512 Mbyte external memory banks (DDR2 SDRAMs) for the DSP. When the two DDR2 SDRAMs were filled with data, the stored data were moved to a personal computer (PC) using an Ethernet cable.

Figure 8. Photo of the BEM.

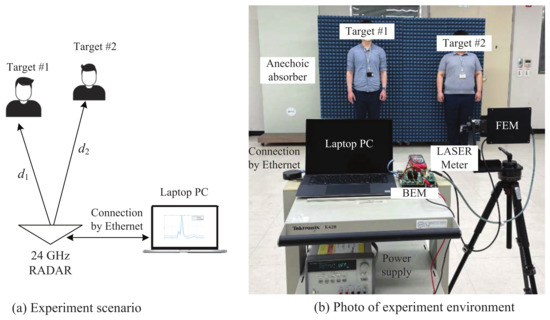

Figure 9 shows the scenario and environment used for the experiment. As shown in Figure 9a,b, the targets were two people, who were and meters away from the radar, respectively. As mentioned above, the sampled beat signal data produced by the ADC were transmitted to the PC by an Ethernet cable. Using the software installed for the experiment, shown in Figure 9b, we set the parameters, such as the sampling rate and the number of ramps. Then, the performance of each algorithm was verified by applying the proposed algorithm and the conventional algorithm to the same data.

Figure 9. Scenario and environment used for the experiment: (a) experiment scenario; (b) photograph of the experiment environment.

5.2. Experimental Results

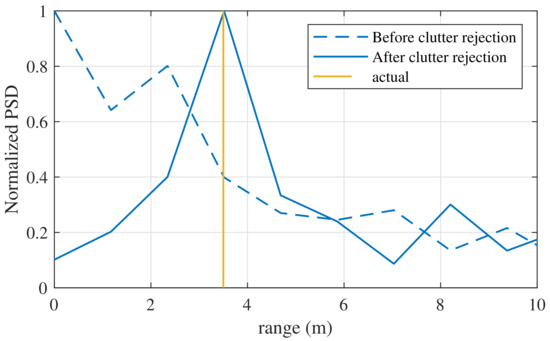

Figure 10 shows the estimation results using FFT with the clutter rejection algorithm. The two targets were located at the same range of 3.5 m, and each angle was located at . The two targets were humans rather than stationary objects, so their chests moved with breathing. This respiration caused a Doppler change, so the target signals remained while the stationary clutter was easily canceled. In the case without the clutter rejection algorithm, we observed dominant peaks at 0 and 2.2 m, as shown in Figure 10. Hence, the ranges without clutter rejection were estimated as 1 m and 2.2 m. In contrast, according to the results of the clutter rejection algorithm, the dominant peaks at 0 and 2.2 m were removed. The result of the range estimation was almost identical to the actual range.

Figure 10. Comparison with the algorithm without clutter rejection.

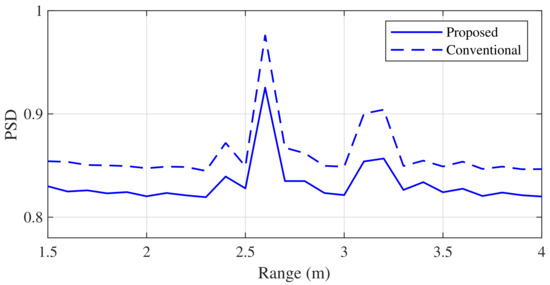

Figure 11 and Figure 12 show the results of the range estimation experiment using the proposed and conventional MUSIC algorithms. The simple clutter rejection algorithm was applied to both algorithms. In Figure 11, the ranges of the two targets, and , were 2.7 and 3.2 m, respectively. From these results, we observed that the two adjacent targets were properly distinguished by the proposed algorithm, despite its low complexity.

Figure 11. Comparison of the range estimation experiment results (2.7 m and 3.2 m).

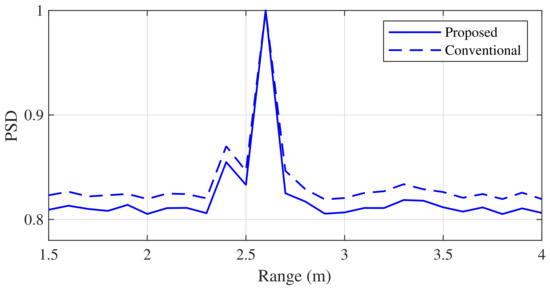

Figure 12. Comparison of the range estimation experiment results (2.4 m and 2.6 m).

Figure 12 shows the experimental results when the distance between the two targets was closer than that in Figure 11 (i.e., ). From these results, we found that two adjacent targets could be distinguished by the proposed algorithm, similarly to the conventional algorithm, even when the two targets were very close.

6. Conclusions

We constructed a low-complexity MUSIC algorithm based on the FFT-estimated beat frequency, and analyzed and compared the complexity of the proposed and conventional MUSIC algorithms. The proposed algorithm achieved a complexity reduction of 10 to 100 times, while producing similar performance to the conventional MUSIC algorithm. In addition, we experimentally confirmed the performance improvement provided by the proposed algorithm in a practical environment.

Author Contributions

B.-s.K. proposed the idea for this paper, verified it through simulations, and wrote this paper; Y.J. and J.L. performed the experiments using the FMCW radar system and contributed to the construction of the FMCW radar system; S.K. discovered and verified the idea for this paper with B.-s.K. and edited the paper. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the DGIST R&D Program of the Ministry of Science, ICT and Future Planning, Korea (21-IT-02).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Chen, X.; Chen, B.; Guan, J.; Huang, Y.; He, Y. Space-Range-Doppler Focus-Based Low-observable Moving Target Detection Using Frequency Diverse Array MIMO Radar. IEEE Access 2018, 6, 43892–43904.

- Munoz-Ferreras, J.M.; Perez-Martinez, F.; Calvo-Gallego, J.; Asensio-Lopez, A.; Dorta-Naranjo, B.P.; Blanco-del-Campo, A. Traffic Surveillance System Based on a High-Resolution Radar. IEEE Trans. Geosci. Remote Sens. 2008, 46, 1624–1633.

- Mahafza, B.R. Radar Systems Analysis and Design Using MATLAB, 3rd ed.; CRC Press: Boca Raton, FL, USA, 2013.

- Richards, M.A. Fundamentals of Radar Signal Processing; Tata McGraw-Hill Education: New York, NY, USA, 2005.

- Skolnik, M.I. Introduction to Radar Systems; Tata McGraw-Hill Education: New York, NY, USA, 2001. [Google Scholar]

- Felguera-Martin, D.; Gonzalez-Partida, J.-T.; Almorox-Gonzalez, P.; Burgos-Garcia, M. Vehicular Traffic Surveillance and Road Lane Detection Using Radar Interferometry. IEEE Trans. Veh. Technol. 2012, 61, 959–970. [Google Scholar] [CrossRef]

- Wang, D.; Yoo, S.; Cho, S. Experimental Comparison of IR-UWB Radar and FMCW Radar for Vital Signs. Sensors 2020, 22, 6695. [Google Scholar] [CrossRef] [PubMed]

- Qi, R.; Li, X.; Zhang, Y.; Li, Y. Multi-Classification Algorithm for Human Motion Recognition Based on IR-UWB Radar. IEEE Sensors J. 2020, 21, 12848–12858. [Google Scholar] [CrossRef]

- Pandeirada, J.; Bergano, M.; Neves, J.; Marques, P.; Barbosa, D.; Coelho, B.; Ribeiro, V. Development of the First Portuguese Radar Tracking Sensor for Space Debris. Signals 2021, 2, 122–137. [Google Scholar] [CrossRef]

- Petrovic, V.L.; Jankovic, M.M.; Lupsic, A.V.; Mihajlovic, V.R.; Popovic-Bozovic, J.S. High-Accuracy Real-Time Monitoring of Heart Rate Variability Using 24 GHz Continuous-Wave Doppler Radar. IEEE Access 2019, 7, 74721–74733. [Google Scholar] [CrossRef]

- Kim, S.; Lee, K. A Low Complexity Based Spectrum Partitioning-ESPRIT for Noncontact Vital Radar. Elektron. Elektrotechnika 2017, 23, 54–58. [Google Scholar] [CrossRef][Green Version]

- Geibig, T.; Shoykhetbrod, A.; Hommes, A.; Herschel, R.; Pohl, N. Compact 3D Imaging Radar Based On FMCW Driven Frequency-Scanning Antennas. In Proceedings of the 2016 IEEE Radar Conference, Philadelphia, PA, USA, 2–6 May 2016. [Google Scholar]

- Uysal, F. Phase-Coded FMCW Automotive Radar: System Design and Interference Mitigation. IEEE Trans. Veh. Technol. 2019, 69, 270–281. [Google Scholar] [CrossRef]

- Scherr, S.; Afroz, R.; Ayhan, S.; Thomas, S.; Jaeschke, T.; Marahrens, S.; Bhutani, A.; Pauli, M.; Pohl, N.; Zwick, T. Influence of Radar Targets on the Accuracy of FMCW Radar Distance Measurements. IEEE Trans. Microw. Theory Tech. 2017, 65, 3640–3647. [Google Scholar] [CrossRef]

- Hyun, E.; Jin, Y.; Lee, J. A Pedestrian Detection Scheme Using A Coherent Phase Difference Method Based on 2D Range-Doppler FMCW Radar. Sensors 2016, 16, 124.

- Kim, B.; Kim, S.; Lee, J. A Novel DFT-based DOA Estimation by A Virtual Array Extension Using Simple Multiplications for FMCW Radar. Sensors 2018, 18, 1560.

- Kim, B.; Jin, Y.; Kim, S.; Lee, J. A Low-complexity FMCW Surveillance Radar Algorithm Using Two Random Beat Signals. Sensors 2018, 19, 608.

- Kim, B.; Jin, Y.; Lee, J.; Kim, S. Low-complexity MUSIC-based Direction-of-arrival Detection Algorithm for Frequency-modulated Continuous-wave Vital Radar. Sensors 2020, 20, 4295.

- Saponara, S.; Neri, B. Radar Sensor Signal Acquisition and Multidimensional FFT Processing for Surveillance Applications in Transport Systems. IEEE Trans. Instrum. Meas. 2017, 66, 604–615.

- Saponara, S.; Neri, B. Radar Sensor Signal Acquisition and 3D FFT Processing for Smart Mobility Surveillance Systems. In Proceedings of the 2016 IEEE Sensors Applications Symposium (SAS), Catania, Italy, 20–22 April 2016; pp. 1–6.

- Jin, Y.; Kim, B.; Kim, S.; Lee, J. Design and Implementation of FMCW Surveillance Radar Based on Dual Chirps. Elektron. Elektrotechnika 2018, 24, 60–66.

- Islam, S.M.M.; Motoyama, N.; Pacheco, S.; Lubecke, V.M. Non-Contact Vital Signs Monitoring for Multiple Subjects Using a Millimeter Wave FMCW Automotive Radar. In Proceedings of the 2020 IEEE/MTT-S International Microwave Symposium (IMS), Los Angeles, CA, USA, 4–6 August 2020.

- Kim, S.; Kim, B.; Jin, Y.; Lee, J. Human Identification by Measuring Respiration Patterns Using Vital FMCW Radar. J. Electromagn. Eng. Sci. 2020, 4, 302–306.

- Cunlong, L.; Weimin, C.; Gang, L.; Rong, Y.; Hengyi, X.; Yi, Q. A Noncontact FMCW Radar Sensor for Displacement Measurement in Structural Health Monitoring. Sensors 2015, 4, 7412–7433.

- Wenjie, L.; Wangdong, H.; Xipeng, L.; Jungang, M. Non-Contact Monitoring of Human Vital Signs Using FMCW Millimeter Wave Radar in the 120 GHz Band. Sensors 2021, 8, 2732.

- Kim, S.; Lee, K. Low-Complexity Joint Extrapolation-MUSIC-Based 2-D Parameter Estimator for Vital FMCW Radar. IEEE Access 2019, 6, 2205–2216.

- Hu, C.; Liu, Y.; Meng, H.; Wang, X. Randomized Switched Antenna Array FMCW Radar for Automotive Applications. IEEE Trans. Veh. Technol. 2014, 63, 3624–3641.

- Kim, W.; Cho, H.; Kim, J.; Kim, B.; Lee, S. YOLO-based Simultaneous Target Detection and Classification in Automotive FMCW Radar Systems. Sensors 2020, 10, 2897.

- Lee, M.; Kim, Y. Design and Performance of a 24-GHz Switch-Antenna Array FMCW Radar System for Automotive Applications. IEEE Trans. Veh. Technol. 2010, 59, 2290–2297.

- Nie, W.; Xu, K.; Feng, D.; Wu, C.Q.; Hou, A.; Yin, X. A Fast Algorithm for 2D DOA Estimation Using An Omnidirectional Sensor Array. Sensors 2017, 17, 515.

- Basikolo, T.; Arai, H. APRD-MUSIC Algorithm DOA Estimation for Reactance Based Uniform Circular Array. IEEE Trans. Antennas Propag. 2016, 64, 4415–4422.

- Schmidt, R.O. Multiple Emitter Location and Signal Parameter Estimation. IEEE Trans. Antennas Propag. 1986, 34, 276–280.

- Li, J.; Jiang, D.; Zhang, X. DOA Estimation Based on Combined Unitary ESPRIT for Coprime MIMO Radar. IEEE Commun. Lett. 2017, 21, 96–99.

- Meng, Z.; Zhou, W. Direction-of-Arrival Estimation in Coprime Array Using the ESPRIT-Based Method. Sensors 2019, 19, 707.

- Oh, D.; Lee, J. Low-complexity Range-azimuth FMCW Radar Sensor Using Joint Angle and Delay Estimation Without SVD and EVD. IEEE Sens. J. 2015, 15, 4799–4811.

- Li, B.; Wang, S.; Zhang, J.; Cao, X.; Zhao, C. Fast MUSIC Algorithm for mm-Wave Massive-MIMO Radar. arXiv 2019, arXiv:1911.07434.

- Kim, B.; Kim, S.; Jin, Y.; Lee, J. Low-complexity Joint Range and Doppler FMCW Radar Algorithm Based on Number of Targets. Sensors 2020, 20, 51.

- Li, Y.; Choi, B.; Chong, J.; Oh, D. 3D Target Localization of Modified 3D MUSIC for a Triple-Channel K-Band Radar. Sensors 2018, 5, 1634.

- Nam, H.; Li, Y.; Choi, B.; Oh, D. 3D-Subspace-Based Auto-Paired Azimuth Angle, Elevation Angle, and Range Estimation for 24 G FMCW Radar with an L-Shaped Array. Sensors 2018, 4, 1113.

- Milovanovic, V. On Fundamental Operating Principles and Range-Doppler Estimation in Monolithic Frequency-Modulated Continuous-Wave Radar Sensors. Facta Univ. Ser. Electron. Energ. 2018, 31, 547–570.

- Rao, S. Introduction to mmWave Sensing: FMCW Radars; Texas Instruments Training Document; Texas Instruments: Dallas, TX, USA, 2017.

- Piper, S.O. Homodyne FMCW Radar Range Resolution Effects with Sinusoidal Nonlinearities in the Frequency Sweep. In Proceedings of the International Radar Conference 1995, Alexandria, VA, USA, 8–11 May 1995.

- Nauman, A.B.; Mohammad, B.M. Comparison of Direction of Arrival Estimation Techniques for Closely Spaced Targets. Int. J. Future Comput. Commun. 2013, 2, 654–659.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).