Pose Estimation of Omnidirectional Camera with Improved EPnP Algorithm

Abstract

1. Introduction

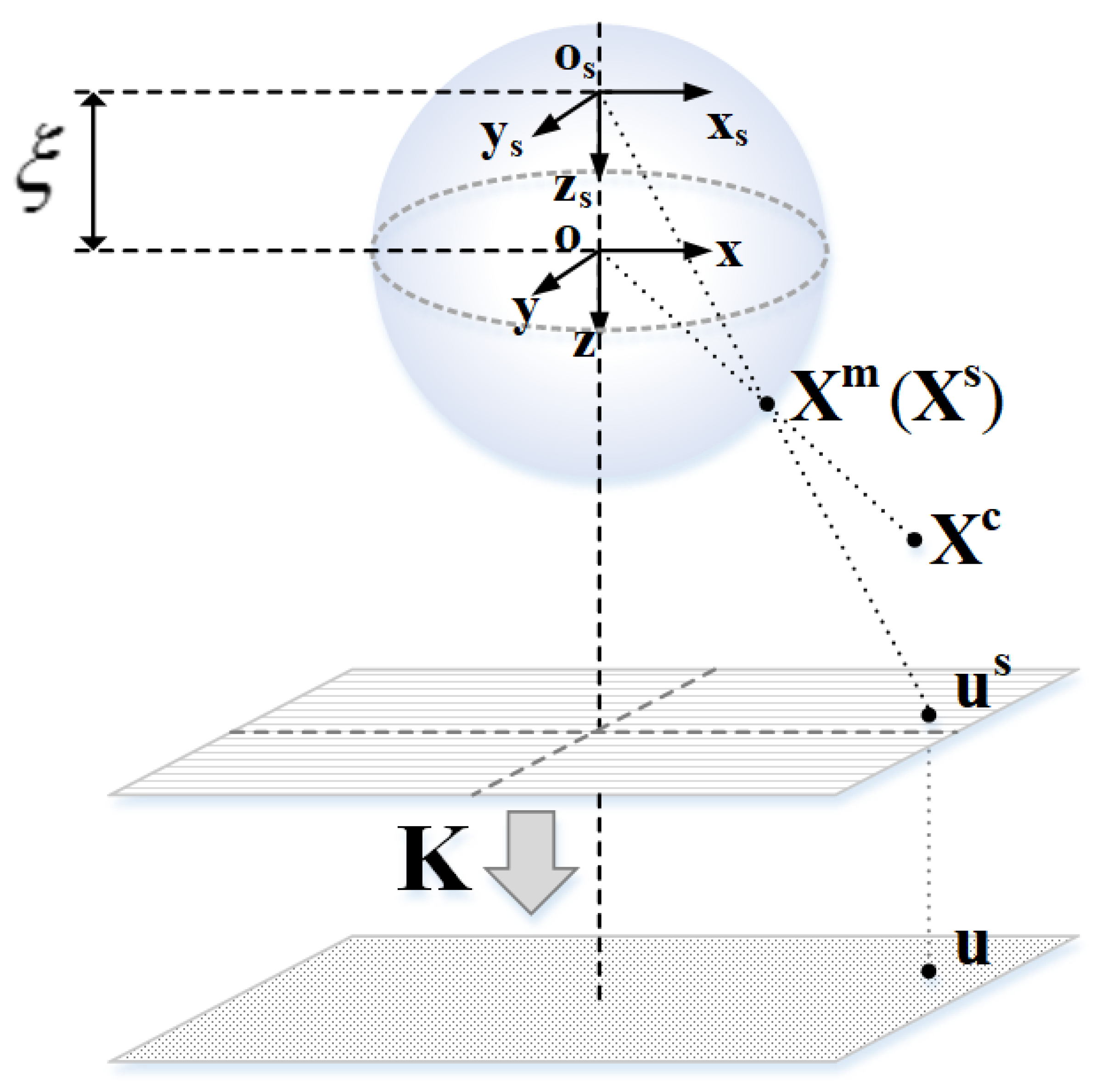

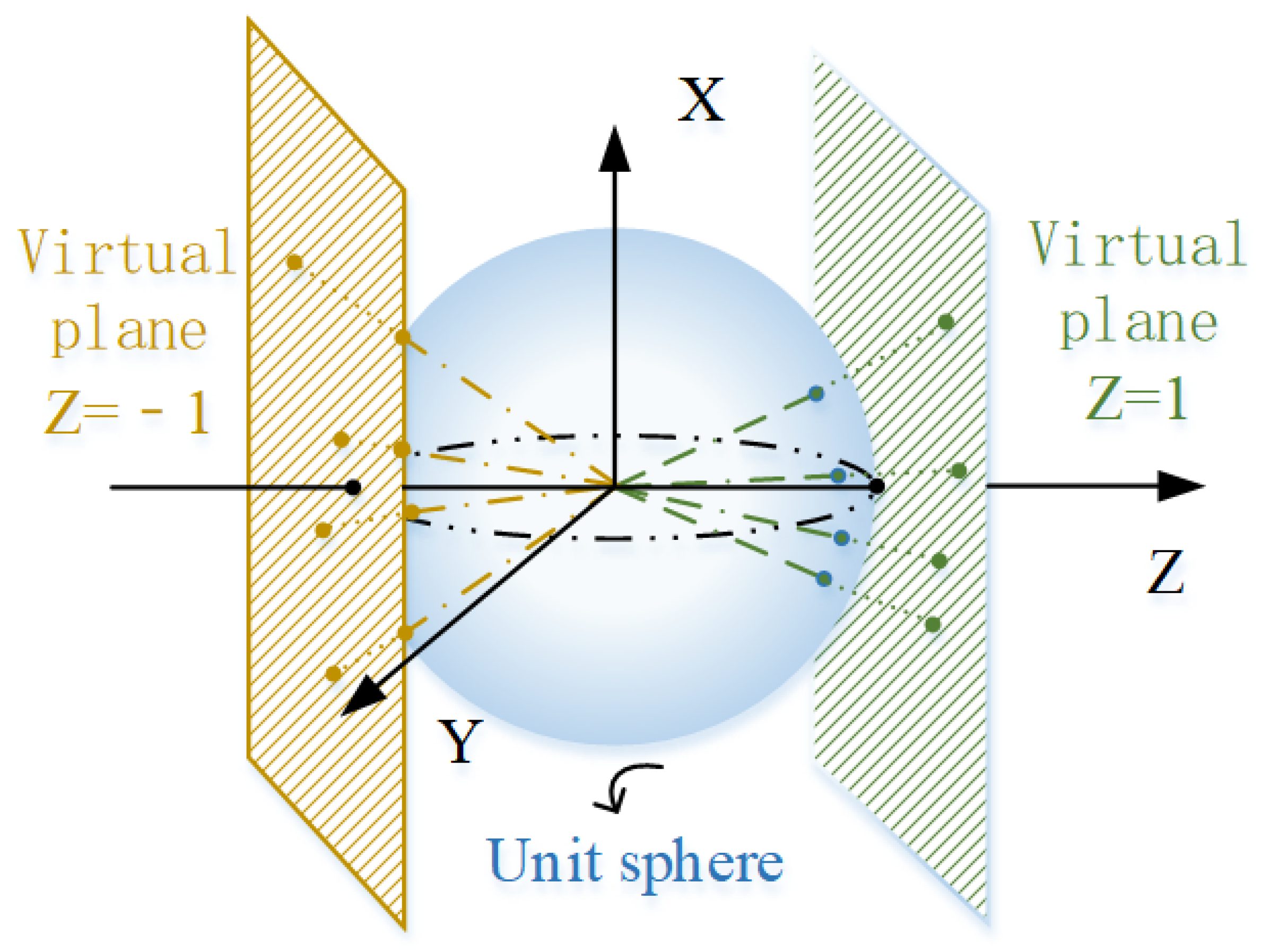

2. Omnidirectional Camera Spherical Model

3. Pose Estimation Algorithm

| Algorithm 1. Logic of the omnidirectional camera pose estimation algorithm. |
|---|
| 1. Data acquisition. Extract the corner points u and obtain their coordinates Xw in the world coordinate system. |
| 2. Determine cameras. Determine the number and location of the virtual cameras. |
| 3. Coordinate transformation. Convert the corner coordinates to virtual image plane coordinates. |
| 4. Calculate Xvir. Use the modified EPnP algorithm to calculate the virtual camera coordinates Xvir. |
| 5. Coordinate transformation. Convert the virtual camera coordinates to camera coordinates Xc. |
| 6. Calculate pose. Calculate the camera pose from Xc and Xw. |
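
Step 6 of Algorithm 1 recovers a rigid transform from matched 3D points. The following is a minimal sketch of that step, assuming the pose is defined by Xc = R Xw + t and using an SVD-based least-squares alignment (Kabsch style) as a stand-in. The source does not state which closed-form solver it uses, and the function name `estimate_pose` is hypothetical.

```python
import numpy as np


def estimate_pose(Xw, Xc):
    """Return (R, t) minimizing sum_i ||R @ Xw[i] + t - Xc[i]||^2.

    Xw: (n, 3) points in the world frame.
    Xc: (n, 3) the same points in the camera frame.
    Assumes n >= 3 and that the points are not collinear.
    """
    Xw = np.asarray(Xw, dtype=float)
    Xc = np.asarray(Xc, dtype=float)

    # Centroids of both point sets.
    cw = Xw.mean(axis=0)
    cc = Xc.mean(axis=0)

    # 3x3 cross-covariance of the centered point sets.
    H = (Xw - cw).T @ (Xc - cc)
    U, _, Vt = np.linalg.svd(H)

    # Flip the last axis if needed so that R is a proper rotation
    # (determinant +1) rather than a reflection.
    d = 1.0 if np.linalg.det(Vt.T @ U.T) >= 0 else -1.0
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T

    # Translation that maps the world centroid onto the camera centroid.
    t = cc - R @ cw
    return R, t
```

With noise-free correspondences the function should reproduce the transform that generated Xc. With noisy correspondences it returns the least-squares fit, so its accuracy depends on how well steps 4 and 5 recover Xc.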

4. Results

4.1. Simulation

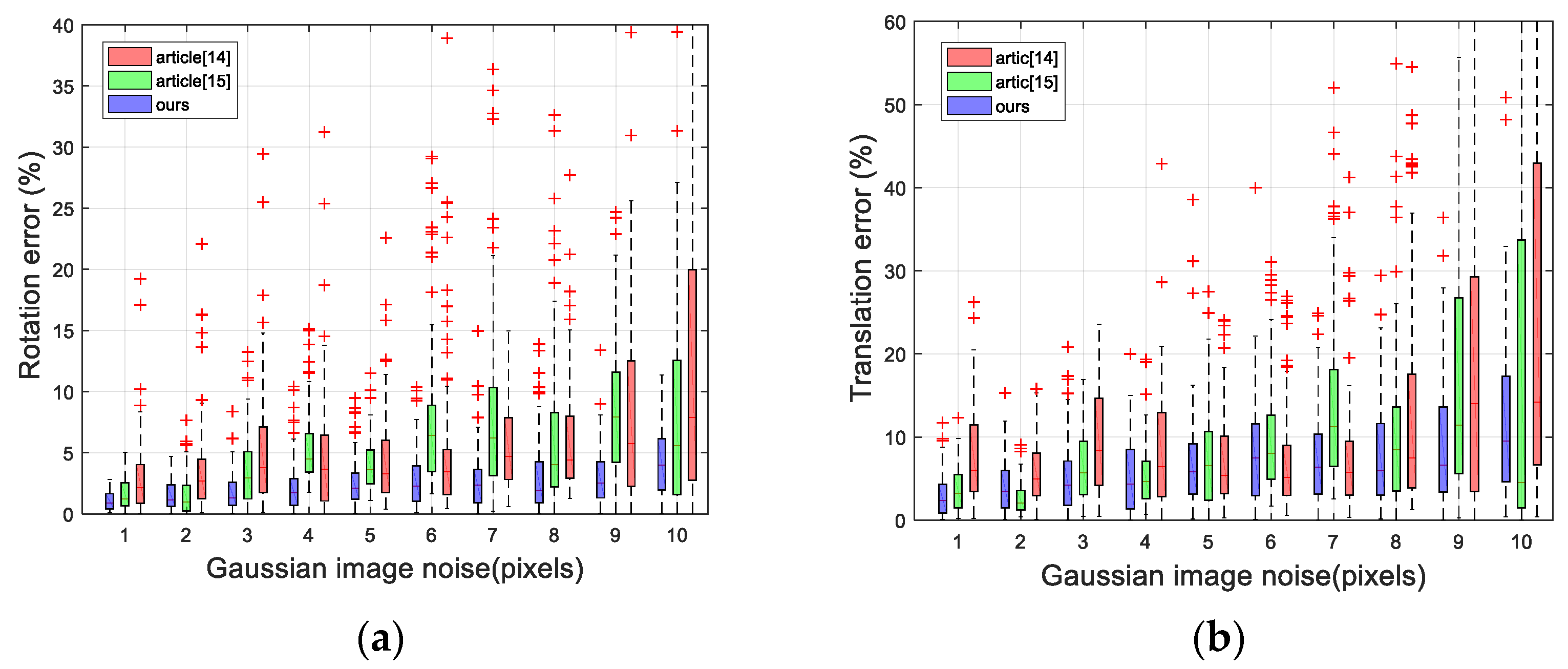

4.1.1. Synthetic Experiments on Accuracy under Noise

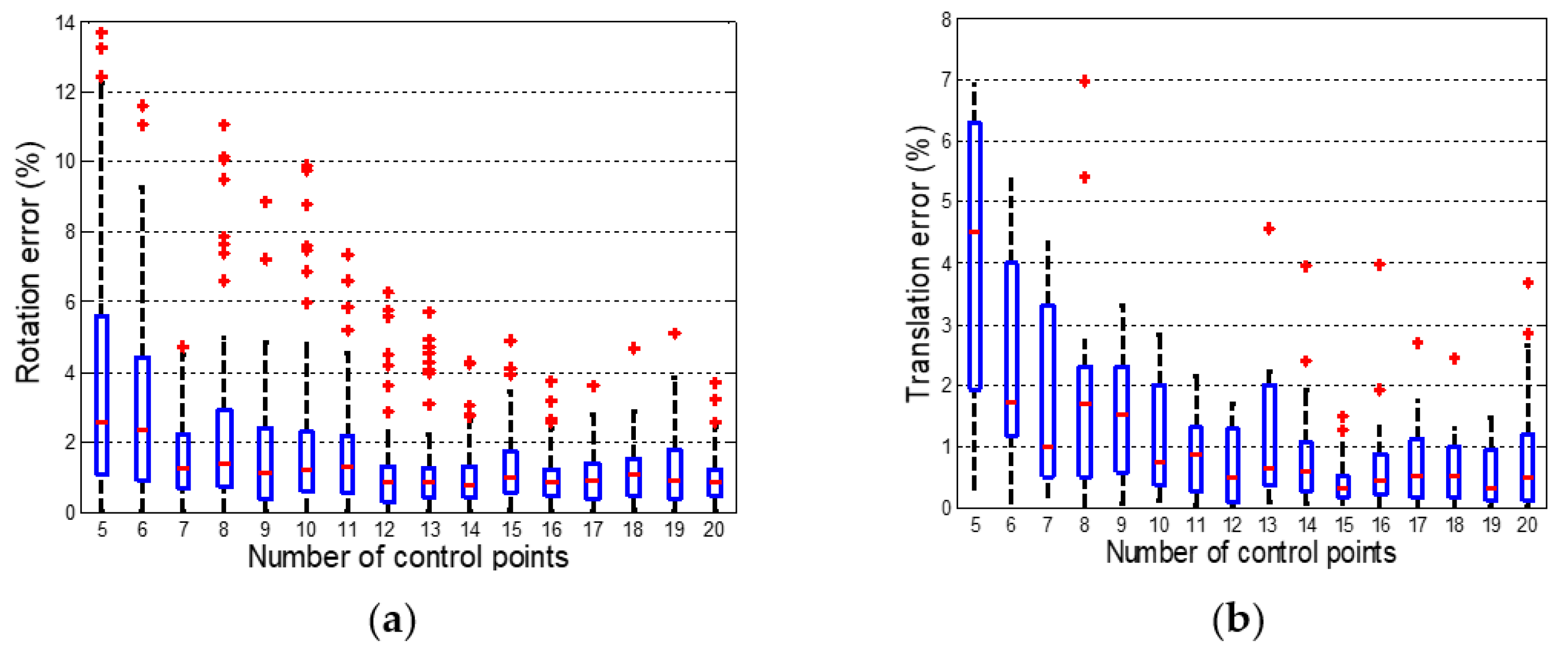

4.1.2. Synthetic Experiments on Accuracy versus the Number of Control Points

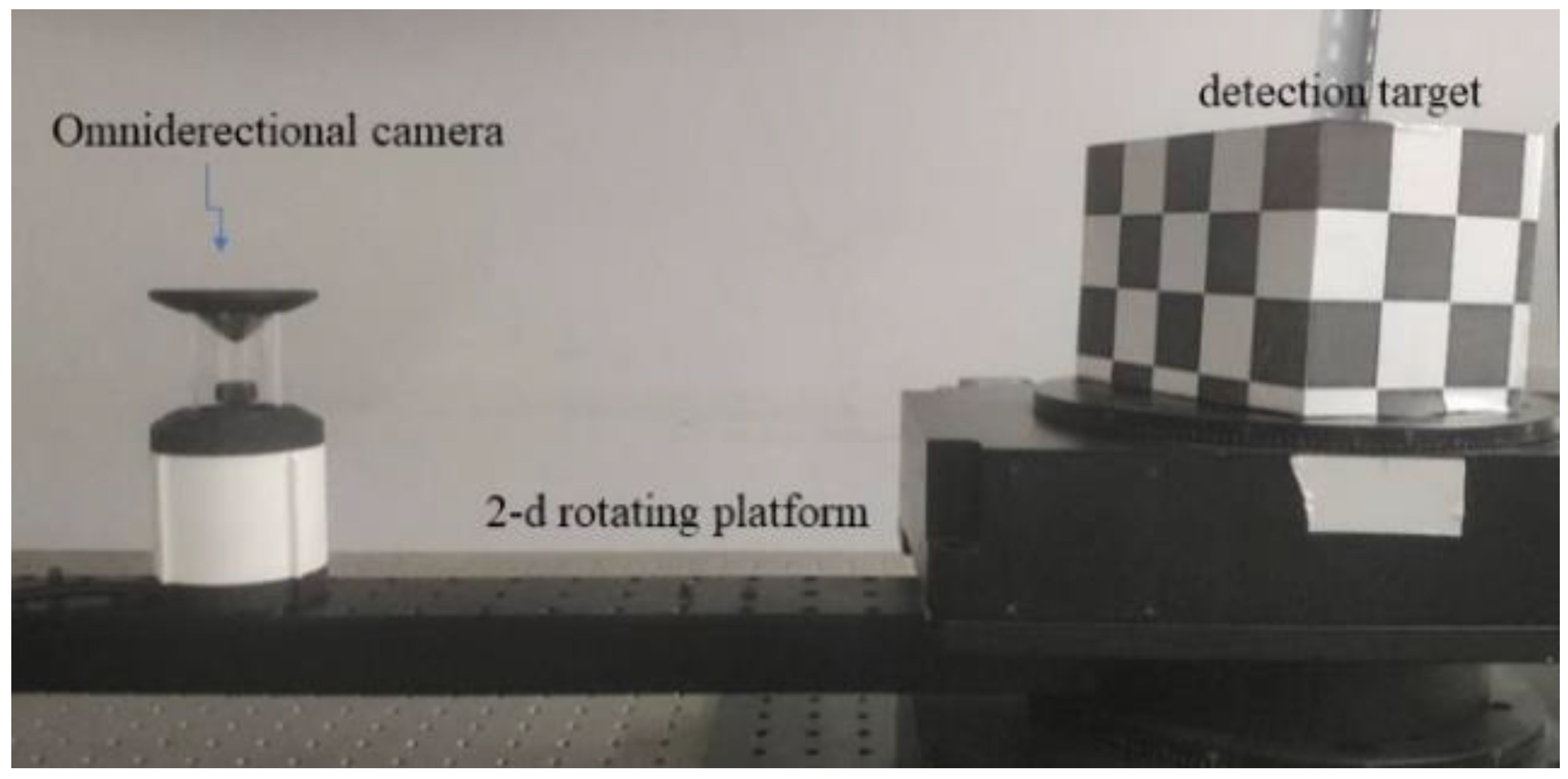

4.2. Real Images

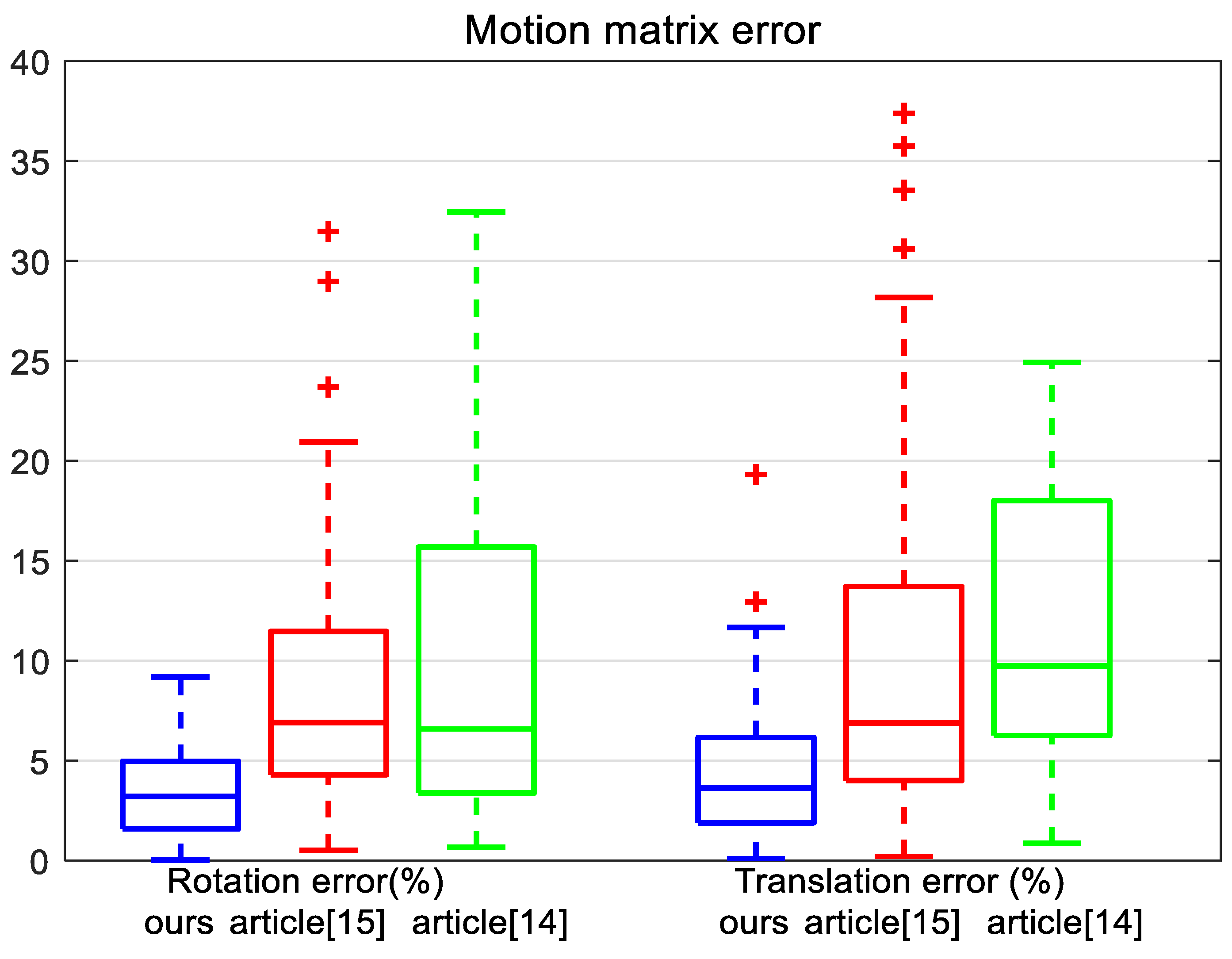

4.2.1. Calculating Rotation Angle

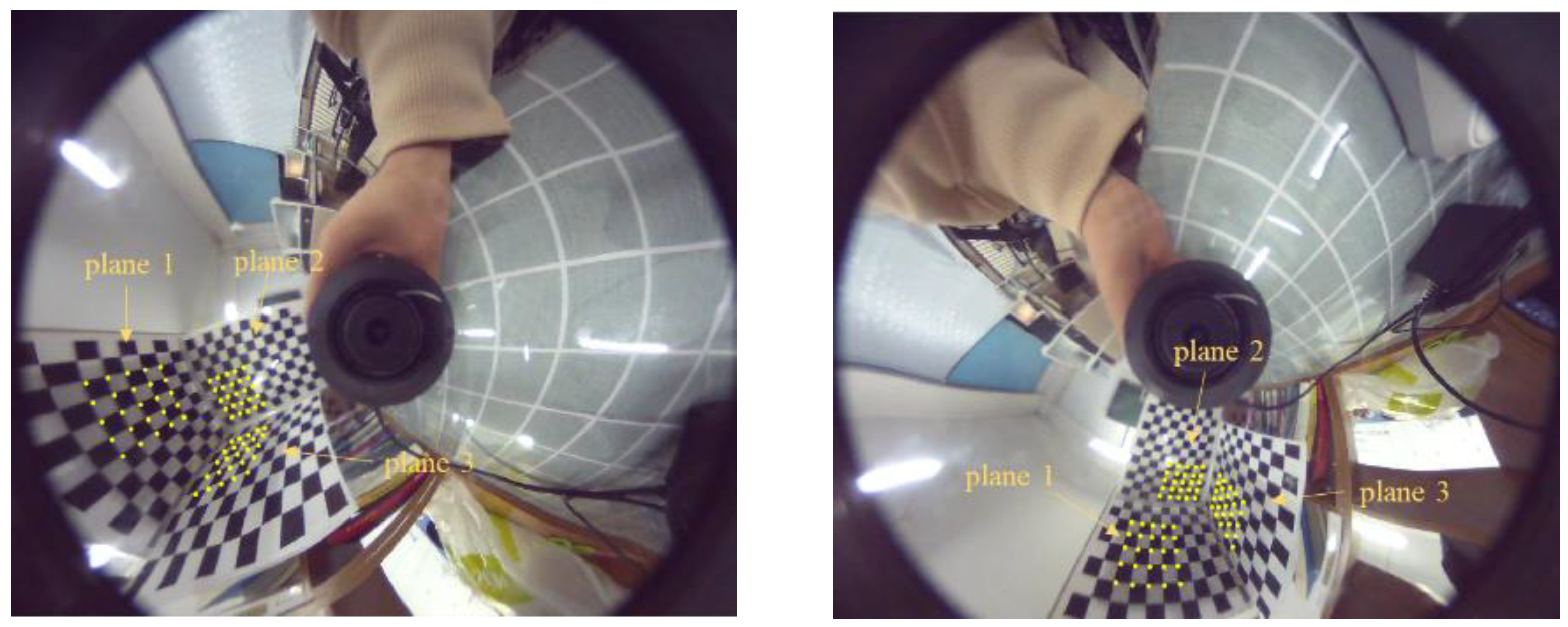

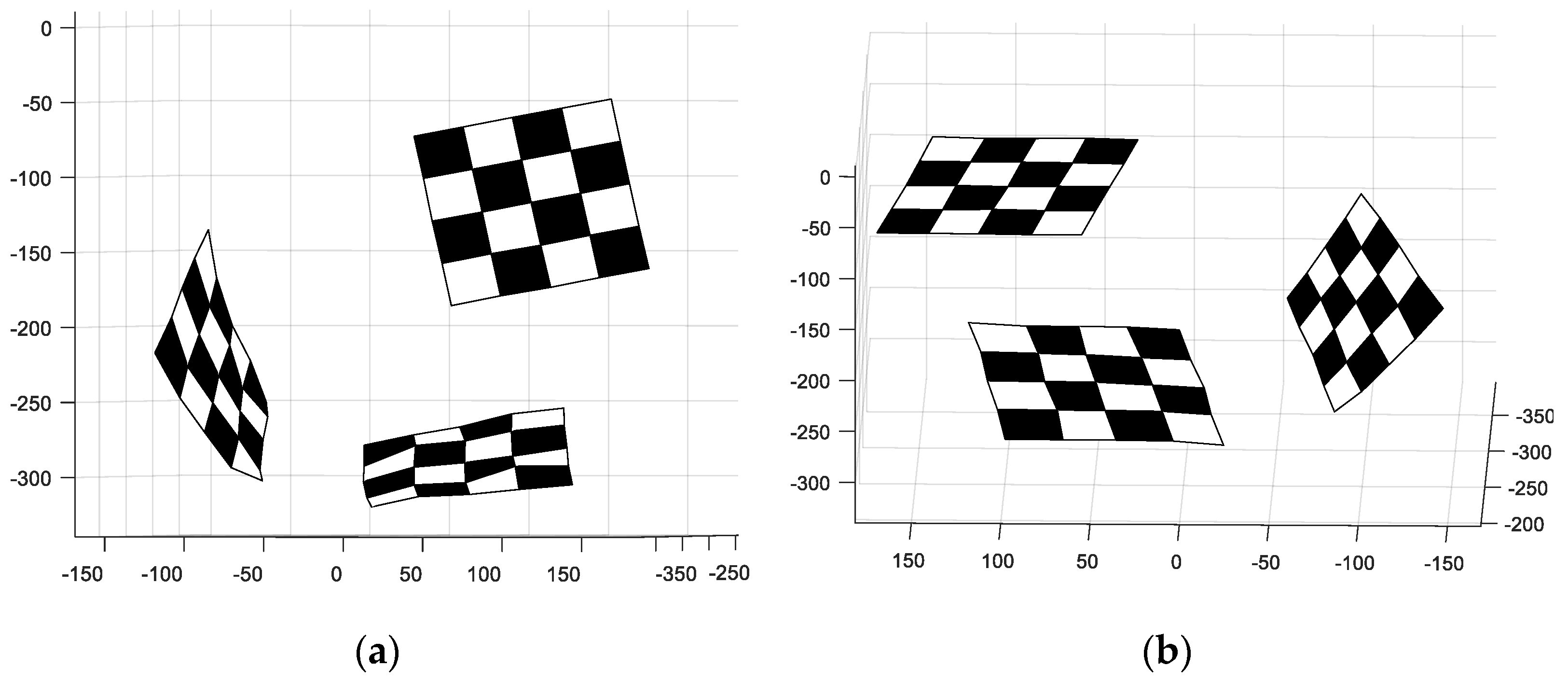

4.2.2. Reconstruction

4.3. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Parameter | | |
|---|---|---|
| Parabola | 1 | −2p |
| Hyperbola | | |
| Ellipse | | |
| Planar | 0 | −1 |

| Parameter | fx | fy | u0 | v0 | |
|---|---|---|---|---|---|
| Value | 260.1 | 259.6 | 517.1 | 385.8 | 0.97 |

| Parameter | fx | fy | u0 | v0 | |
|---|---|---|---|---|---|
| Value | 370.647 | 370.018 | 807.551 | 597.126 | 1.027 |
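
Intrinsics such as fx, fy, u0 and v0 are what allow a pixel to be back-projected onto the unit sphere. The sketch below assumes the unified sphere model in the Mei and Rives form, with no skew and no lens distortion. The mirror parameter `xi` and both function names are hypothetical, and the unlabeled last column of the table is not interpreted here.

```python
import math


def project_unified(point, fx, fy, u0, v0, xi):
    """Project a 3D point (camera frame) to a pixel under the unified model."""
    x, y, z = point
    norm = math.sqrt(x * x + y * y + z * z)
    # 1) Project the point onto the unit sphere.
    xs, ys, zs = x / norm, y / norm, z / norm
    # 2) Perspective projection from a center shifted by xi along the axis.
    denom = zs + xi
    # 3) Apply the pinhole intrinsics (assumed: no skew, no distortion).
    return fx * xs / denom + u0, fy * ys / denom + v0


def lift_to_sphere(pixel, fx, fy, u0, v0, xi):
    """Back-project a pixel to a unit-length ray on the sphere."""
    u, v = pixel
    # Undo the intrinsics to get normalized image coordinates.
    mx = (u - u0) / fx
    my = (v - v0) / fy
    r2 = mx * mx + my * my
    # Closed-form scale that places the ray on the unit sphere.
    # For xi > 1 the square root is only real for small enough r2.
    factor = (xi + math.sqrt(1.0 + (1.0 - xi * xi) * r2)) / (r2 + 1.0)
    return factor * mx, factor * my, factor - xi
```

Projecting a point and lifting the resulting pixel should return the normalized direction of that point, which gives a quick consistency check for any calibrated parameter set. The lifted rays are the natural input for reprojecting corners into a virtual perspective image plane.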

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Gong, X.; Lv, Y.; Xu, X.; Wang, Y.; Li, M. Pose Estimation of Omnidirectional Camera with Improved EPnP Algorithm. Sensors 2021, 21, 4008. https://doi.org/10.3390/s21124008
