3.3.1. Two-Stage Methodology

The two-stage methodology transforms the original raw dataset into a new one by means of normalisation and feature weighting, so that the transformed features represent the relative importance of each feature for classifying the samples into the different classes.

(1) Normalisation. Normalisation is generally considered to equalise the contribution of each feature to the calculations of the ML algorithm [36]. This is why normalisation methods are commonly applied during preprocessing: they prevent a subset of features from over-contributing because of differences in magnitude. However, each normalisation method transforms the dataset differently. In addition, each feature is compressed or expanded depending on the normalisation method and on the feature's statistics [37], which can ultimately condition the influence of each feature on the calculations of the ML algorithm and, hence, its performance. Since no single normalisation method is suitable for all problems, three of the most commonly employed approaches are selected in this work. All of them are linear transformations based on location and dispersion statistics.

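In the following, $x_j$ denotes the $j$-th feature of the dataset and $x'_j$ its normalised version.

Standardisation (ST): $x'_j = \dfrac{x_j - \bar{x}_j}{s_j}$, where $\bar{x}_j$ and $s_j$ are the mean and the standard deviation of $x_j$. The resulting features are centred at zero mean and have a standard deviation equal to 1.

Min–max normalisation (MM): $x'_j = \dfrac{x_j - \min(x_j)}{R_j}$, where $R_j = \max(x_j) - \min(x_j)$ is the range of $x_j$. The samples of the resulting features take values in $[0, 1]$.

Median Absolute Deviation normalisation (MAD): $x'_j = \dfrac{x_j - \mathrm{Me}(x_j)}{\mathrm{MAD}(x_j)}$, where $\mathrm{MAD}(x_j) = \mathrm{Me}\left(\left|x_j - \mathrm{Me}(x_j)\right|\right)$ and Me denotes the median. In contrast to the other techniques, MAD normalisation is considered a robust transformation [38], since the median Me is hardly affected by the presence of outliers.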

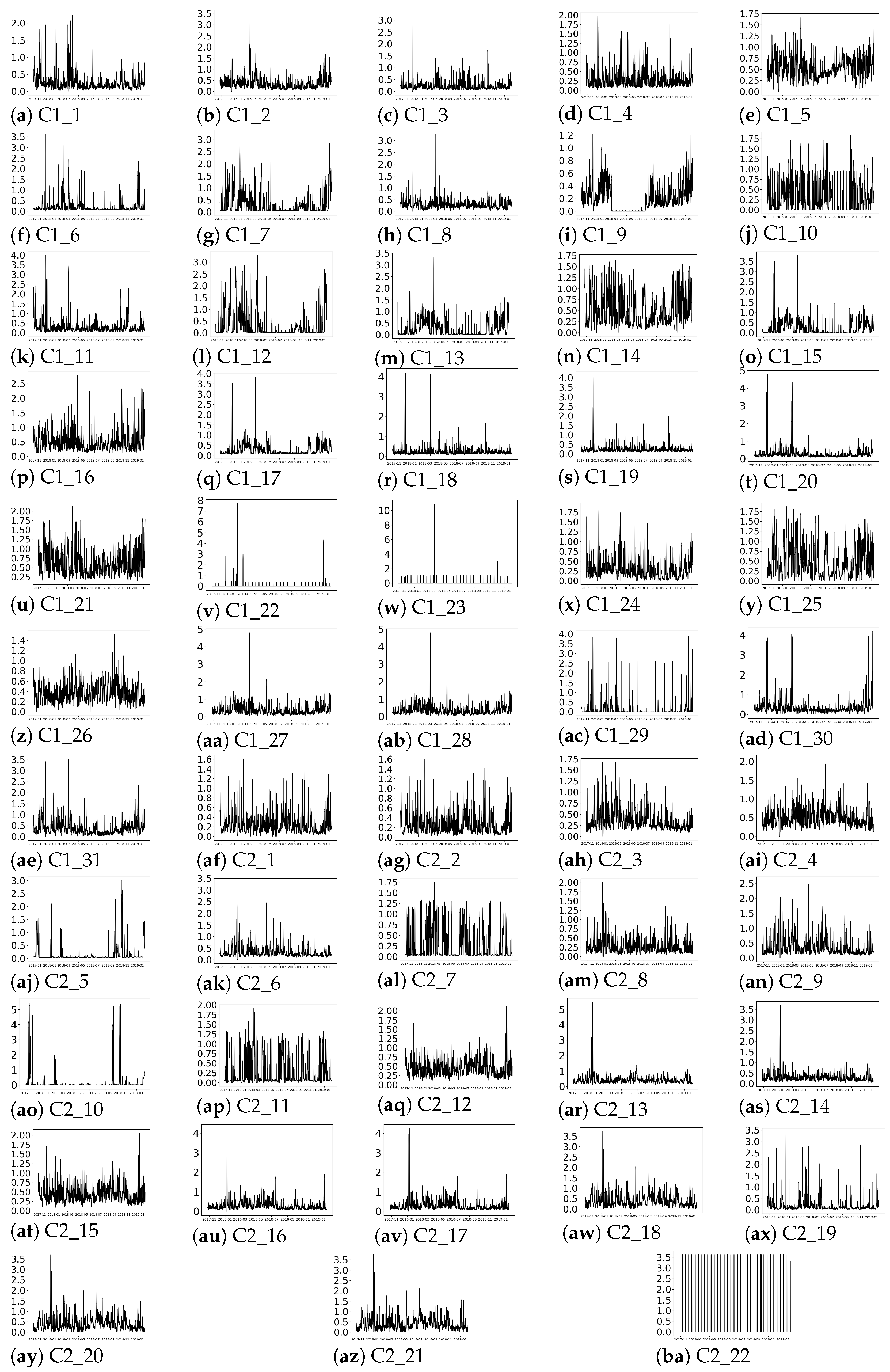

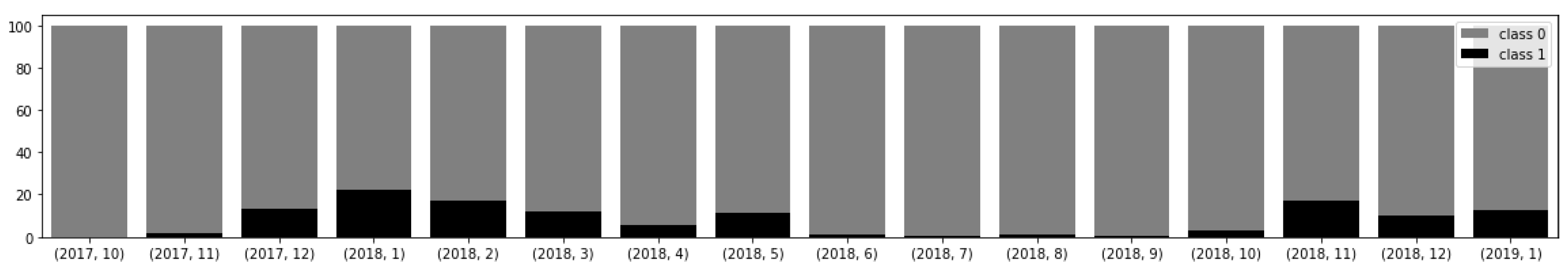

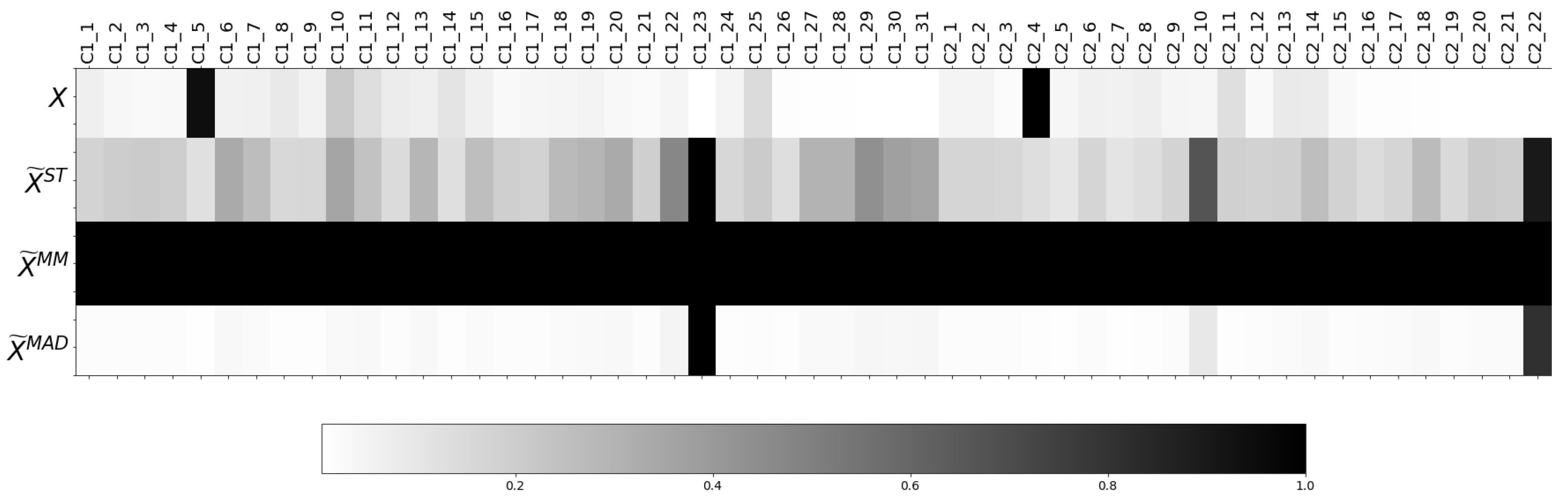
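The three normalisations can be sketched column-wise with NumPy, where rows of `X` are samples and columns are features. This is a minimal illustration under stated assumptions: the function names and toy data are hypothetical, the population standard deviation (`ddof=0`) is used, and no feature is assumed to be constant (non-zero range and MAD).

```python
import numpy as np


def standardise(X: np.ndarray) -> np.ndarray:
    """ST: centre each column on its mean and scale it to unit standard deviation."""
    return (X - X.mean(axis=0)) / X.std(axis=0)  # population std (ddof=0), an assumption


def min_max(X: np.ndarray) -> np.ndarray:
    """MM: subtract each column's minimum and divide by its range R_j, mapping it to [0, 1]."""
    col_min = X.min(axis=0)
    col_range = X.max(axis=0) - col_min
    return (X - col_min) / col_range


def mad_normalise(X: np.ndarray) -> np.ndarray:
    """MAD: centre each column on its median Me and divide by its median absolute deviation."""
    me = np.median(X, axis=0)
    mad = np.median(np.abs(X - me), axis=0)
    return (X - me) / mad


# Hypothetical toy data: the second feature has a larger magnitude and an outlier (1000).
X = np.array([[1.0, 10.0],
              [2.0, 20.0],
              [3.0, 30.0],
              [4.0, 1000.0]])

for name, normalise in [("ST", standardise), ("MM", min_max), ("MAD", mad_normalise)]:
    print(name, normalise(X).round(2).tolist())
```

In this toy example the outlier inflates the mean, standard deviation and range of the second feature, so ST and MM squeeze its first three values together, whereas the median and MAD are unaffected and MAD keeps them at the same spacing as those of the first feature.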

Since each normalisation method (ST, MM and MAD) relies on different statistics, and each feature is transformed differently depending on its statistical characteristics, a different subset of features is expected to predominate in each normalised dataset. Therefore, in this work, the range of each feature is employed as an indicator of its influence on the algorithm performance [39]. To facilitate the comparison between features of the same dataset, each range is divided by the maximum range of that dataset, i.e., the normalised range of feature $j$ is $R_j / \max_k R_k$, where $R_j = \max(x_j) - \min(x_j)$. In this way, the most influential features, whose ranges are close to the maximum one, present a normalised range close to 1, whereas features whose ranges are much smaller than the maximum one present a normalised range close to 0.
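The normalised range indicator can be computed in a few lines. The sketch below is illustrative: the function name and the example matrix are hypothetical, and the input is any already normalised feature matrix.

```python
import numpy as np


def normalised_range(Xn: np.ndarray) -> np.ndarray:
    """Range of each feature (column) divided by the largest range in the same dataset."""
    ranges = Xn.max(axis=0) - Xn.min(axis=0)
    return ranges / ranges.max()


# Hypothetical normalised dataset: ranges 4.0, 1.0 and 0.2.
Xn = np.array([[0.0, 0.0, 5.0],
               [2.0, 1.0, 5.1],
               [4.0, 0.5, 5.2]])
print(normalised_range(Xn))  # approximately [1.0, 0.25, 0.05]

# After min-max normalisation every (non-constant) feature has range 1,
# so all normalised ranges are equal to 1.
Xmm = (Xn - Xn.min(axis=0)) / (Xn.max(axis=0) - Xn.min(axis=0))
print(normalised_range(Xmm))
```

The second call illustrates why the indicator depends on the normalisation method: MM gives every feature the same range by construction, whereas ST and MAD generally do not.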

(2) Feature Weighting. Feature weighting (FW) methods transform the features of the dataset so that they represent the relative information each one provides for estimating the output. This transformation is performed with a vector $\mathbf{w}$ of feature weights, whose components represent the relative importance of each feature. Each weight takes a value between 0 and 1, and the weights of all the features sum to 1. Among FW methods, filter approaches calculate the feature weights independently of the ML algorithm. If label information is used to compute the weights, the FW approach is considered supervised. Three supervised filter FW methods are applied in this research: two well-known methods based on Information Theory, Random Forest and Mutual Information, and the Adapted Pearson correlation [37], a statistics-based method previously proposed by the authors. Random Forest calculates the feature weights jointly, whereas Mutual Information and Adapted Pearson correlation estimate the weight of each feature independently, i.e., without considering the remaining ones. The three FW methods are briefly described below:

- Adapted Pearson correlation (P): this statistics-based FW method is an adaptation of the Pearson correlation coefficient for handling categorical and continuous features. It aims to estimate the relative importance of each feature for separating the classes in classification problems. To that end, the proposal presented in [37] uses the labels of the dataset to group the samples by class, and each label is encoded as the centroid of the samples belonging to that class. Then, for each feature, the weight is the absolute value of the Pearson correlation coefficient between the feature and the corresponding component of the encoded label. Finally, the weights are divided by their sum to obtain the weight vector $\mathbf{w}_{\mathrm{P}}$, whose components therefore sum to 1.

- Random Forest classifier (RF): Random Forest [40] is an ensemble-learning ML algorithm based on decision trees, used for tasks such as classification and regression. It is also widely employed to calculate the relevance of the features for estimating the output, according to their contribution to the trees that make up the forest. Each tree in the ensemble is trained on a bootstrap sample; bootstrapping, together with a large number of trees and the random selection of candidate features at each split, introduces randomness that decreases the variance of the estimates. In this work, each RF employed as the FW method consists of 100 decision trees, and the final feature weight vector $\mathbf{w}_{\mathrm{RF}}$ is calculated as the mean of the feature importances of 30 RF-based models, so that 3000 decision trees are considered in total. At each split, a random subset of features of size equal to the square root of the total number of features of the dataset is considered. A leaf node requires a minimum of one sample, while any node with more than one sample can be split further and become an internal node. The sample used to train each tree has the same size as the original dataset but, since bootstrapping is applied, it is drawn with replacement. Once the algorithm is trained, the relative importance of each feature is calculated by the Mean Decrease Gini [41], which computes the mean of the weighted impurity decrease over all the nodes of all the trees where the feature is used. In this work, the scikit-learn Python package [42] is used to estimate $\mathbf{w}_{\mathrm{RF}}$.

- Mutual Information (MI): this FW method measures the mutual dependence between a feature and the labels, which can be interpreted as the amount of information they share. Computing MI requires the joint and marginal probability distributions, which are generally unknown in real problems and must be estimated from the data. Again, the scikit-learn Python package [42] is used; it adds a small amount of noise to continuous variables in order to remove repeated values and employs a nearest-neighbour method [43,44] to estimate the MI. In this work, the number of neighbours k is set to 3, since a small k reduces systematic errors [43,44]. For each feature, the weight ranges from 0 to 1, and the higher its value, the stronger the relationship between the feature and the labels. To make the components of the weight vector $\mathbf{w}_{\mathrm{MI}}$ sum to 1, the estimated weights are divided by their sum.
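The three FW methods can be sketched with NumPy and scikit-learn, assuming a numeric feature matrix `X` and binary labels `y`. `RandomForestClassifier` and `mutual_info_classif` are real scikit-learn APIs, configured here as described above; note that scikit-learn's `max_features="sqrt"` draws the candidate features at each split. The Adapted Pearson function is only our reading of the textual description, not the authors' implementation from [37], and the function names and synthetic data are hypothetical.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.feature_selection import mutual_info_classif


def adapted_pearson_weights(X, y):
    """P (our reading of [37]): encode each label as its class centroid, then take
    |Pearson r| between feature j and component j of the encoded label."""
    centroids = {c: X[y == c].mean(axis=0) for c in np.unique(y)}
    Y_enc = np.vstack([centroids[label] for label in y])  # shape (N, m)
    r = [np.corrcoef(X[:, j], Y_enc[:, j])[0, 1] for j in range(X.shape[1])]
    w = np.abs(r)
    return w / w.sum()  # components sum to 1


def rf_weights(X, y, n_models=30, n_trees=100):
    """RF: mean Gini importance (Mean Decrease Gini) over n_models forests."""
    importances = []
    for seed in range(n_models):
        rf = RandomForestClassifier(
            n_estimators=n_trees,
            max_features="sqrt",   # sqrt(m) candidate features at each split
            min_samples_leaf=1,    # a leaf may hold a single sample
            min_samples_split=2,   # any node with more than one sample may be split
            bootstrap=True,        # N samples drawn with replacement per tree
            random_state=seed,
        )
        importances.append(rf.fit(X, y).feature_importances_)
    w = np.mean(importances, axis=0)
    return w / w.sum()


def mi_weights(X, y, k=3, seed=0):
    """MI: k-nearest-neighbour MI estimate per feature, rescaled to sum to 1."""
    mi = mutual_info_classif(X, y, n_neighbors=k, random_state=seed)
    return mi / mi.sum()


# Hypothetical synthetic binary problem with 5 features.
X, y = make_classification(n_samples=200, n_features=5, n_informative=3,
                           n_redundant=0, random_state=0)
print("P ", adapted_pearson_weights(X, y).round(3))
print("RF", rf_weights(X, y, n_models=5).round(3))  # 5 forests instead of 30 to keep the demo fast
print("MI", mi_weights(X, y).round(3))
```

For two classes, component j of the encoded label is an affine function of the 0/1 label (provided the two class centroids differ in that feature), so under this reading the P weights reduce to normalised absolute point-biserial correlations between each feature and the label.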

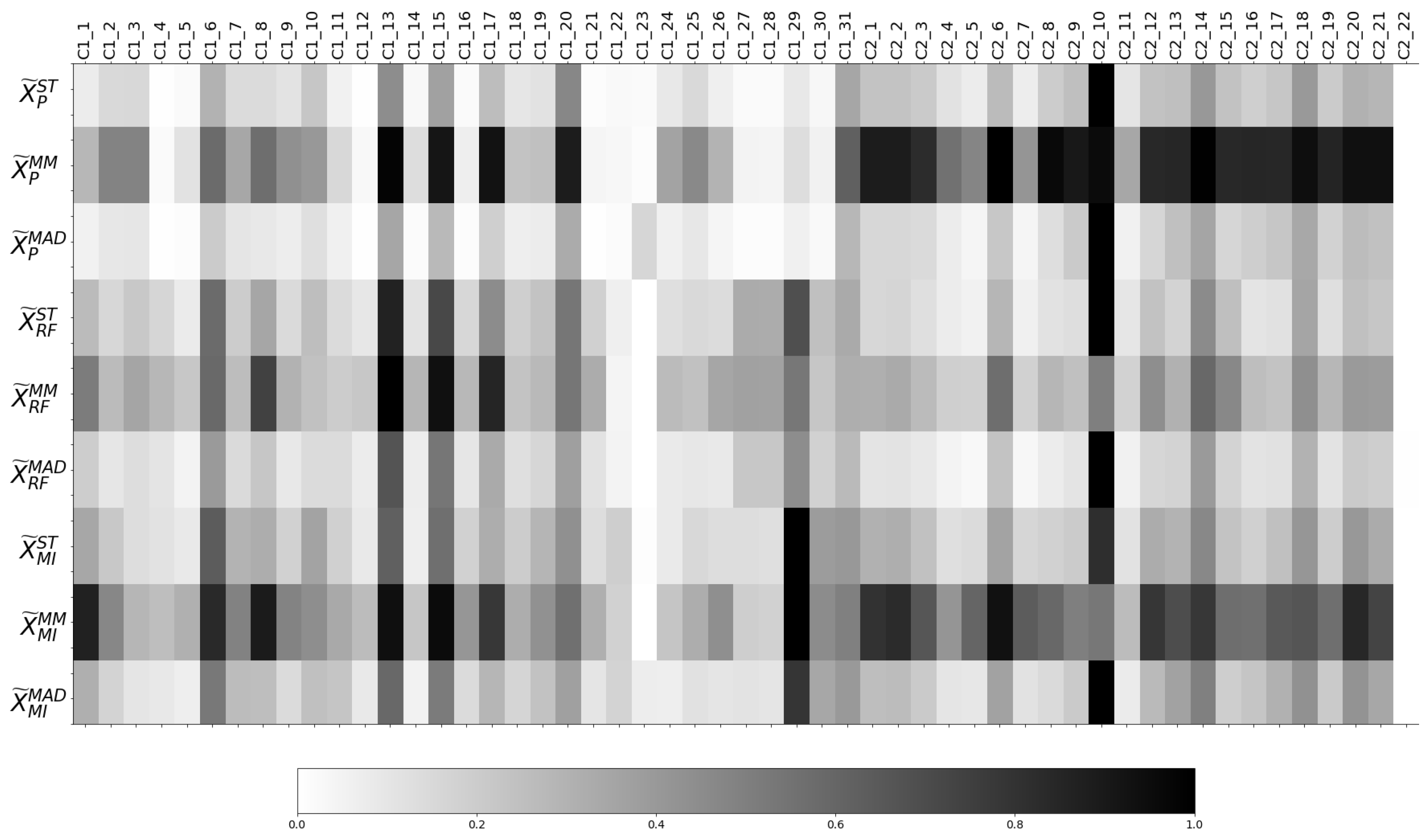

The feature weight vectors, together with the normalisation approaches described above, are used to create the transformed datasets, as explained next.

(3) Transformed Dataset Calculation. As depicted in Figure 3, the two-stage methodology consists of combining normalisation and feature weighting to intelligently transform the raw dataset. In this way, normalisation first removes the magnitude differences among the features, so that the subsequent weighting makes the scale of each feature reflect its relative importance.

Then, the two-stage transformation multiplies each normalised feature by its corresponding feature weight, i.e., the $j$-th normalised feature $x'_j$ is multiplied by $w_j$, for $j = 1, \dots, m$, where $m$ is the number of features.

This transformation can be expressed in matrix notation as follows: given the normalised dataset $\mathbf{X}_N \in \mathbb{R}^{N \times m}$, with $N$ samples and $m$ features, and the diagonal matrix $\mathbf{W} = \operatorname{diag}(w_1, \dots, w_m)$ formed by the elements of the weight vector $\mathbf{w}$, the transformed dataset $\mathbf{X}_T$ is calculated as

$$\mathbf{X}_T = \mathbf{X}_N \mathbf{W}. \qquad (1)$$

Each column of the matrix resulting from Equation (1) contains a weighted normalised feature. Thus, in this work, combining each of the three selected normalisation methods (ST, MM, MAD), represented by $\mathbf{X}_N$ in Equation (1), with each of the weight vectors $\mathbf{w}$ generated by the three FW approaches (P, RF, MI) yields a total of nine transformed datasets: (ST, P), (ST, RF), (ST, MI), (MM, P), (MM, RF), (MM, MI), (MAD, P), (MAD, RF) and (MAD, MI).
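Equation (1) and the nine combinations can be sketched with NumPy. The normalised matrix and the weight vectors below are hypothetical placeholders (the same matrix stands in for the ST, MM and MAD datasets), chosen only to show the mechanics of the product and of the enumeration.

```python
import numpy as np


def two_stage(Xn: np.ndarray, w: np.ndarray) -> np.ndarray:
    """Equation (1): X_T = X_N W with W = diag(w), i.e. column j is scaled by w_j."""
    return Xn @ np.diag(w)


# Hypothetical normalised data (2 samples, 3 features) and weight vector summing to 1.
Xn = np.array([[0.0, 1.0, 0.5],
               [1.0, 0.0, 0.25]])
w = np.array([0.5, 0.3, 0.2])
XT = two_stage(Xn, w)
print(XT)
# Broadcasting gives the same matrix without building the m x m diagonal matrix.
assert np.allclose(XT, Xn * w)

# The nine combinations: every normalised dataset paired with every weight vector.
normalised = {"ST": Xn, "MM": Xn, "MAD": Xn}                # placeholders for the three datasets
weights = {"P": w, "RF": w[::-1], "MI": np.full(3, 1 / 3)}  # placeholder weight vectors
transformed = {(norm, fw): two_stage(Xn_, w_)
               for norm, Xn_ in normalised.items()
               for fw, w_ in weights.items()}
print(sorted(transformed))
```

In practice the element-wise product `Xn * w` is the cheaper way to apply Equation (1); the explicit diagonal matrix is kept here only to mirror the notation.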

3.3.2. Machine Learning Algorithms

Once the original data have been transformed by Equation (1), yielding datasets whose features represent their relative importance for discriminating the class labels, different ML classification algorithms are applied.

Specifically, seven ML classification algorithms [27] from scikit-learn [42] are employed: Quadratic Discriminant Analysis (QDA), K-Nearest Neighbours (KNN), Support Vector Classification (SVC), Ridge Regression (RID), Logistic Regression (LOG), MultiLayer Perceptron (MLP) with one hidden layer and Stochastic Gradient Descent (SGD).

QDA uses a quadratic decision surface to separate the classes, assuming that the density function of each class follows a multivariate Gaussian distribution. It estimates a separate covariance matrix for each class, which is regularised by a hyper-parameter. KNN classifies each sample according to the class membership of its k neighbours, i.e., the k closest samples in terms of Euclidean distance. In contrast, SVC builds a hyperplane, placed between the support vectors, to separate the samples of the two classes. It includes a soft-margin hyper-parameter C that controls the misclassification cost. In addition, SVC relies on the kernel trick, which allows it to operate in a higher-dimensional space through inner products between pairs of samples, and a kernel hyper-parameter regulates the influence of the samples selected by the model as support vectors. RID is a regularised version of the Ordinary Least Squares regression model, in which a regularisation hyper-parameter controls the magnitude of the regression parameters. LOG employs the logistic function to classify the samples and, like SVC, includes a hyper-parameter C. The MLP employed in this work is a feedforward artificial neural network with a single hidden layer composed of a user-defined number of neurons. Each neuron applies an activation function to a weighted linear sum of its inputs, and the final output is obtained from a weighted sum. Finally, SGD refers to regularised linear models fitted with stochastic gradient descent, an iterative optimisation algorithm for minimising a loss function, where a hyper-parameter controls the strength of the regularisation.
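The seven algorithms can be instantiated with their scikit-learn counterparts roughly as follows. This is a sketch under assumptions: the hyper-parameter values are illustrative only (not those of Table 1), the RBF kernel for SVC is assumed, and `RidgeClassifier` is assumed to be the scikit-learn classification counterpart of RID; the synthetic data are hypothetical.

```python
from sklearn.datasets import make_classification
from sklearn.discriminant_analysis import QuadraticDiscriminantAnalysis
from sklearn.linear_model import LogisticRegression, RidgeClassifier, SGDClassifier
from sklearn.neighbors import KNeighborsClassifier
from sklearn.neural_network import MLPClassifier
from sklearn.svm import SVC

# Illustrative hyper-parameter values only (not those of Table 1).
models = {
    "QDA": QuadraticDiscriminantAnalysis(reg_param=0.1),  # covariance regularisation
    "KNN": KNeighborsClassifier(n_neighbors=5),           # Minkowski p=2, i.e. Euclidean
    "SVC": SVC(C=1.0, kernel="rbf", gamma="scale"),       # soft margin C, kernel coefficient gamma
    "RID": RidgeClassifier(alpha=1.0),                    # L2 penalty strength
    "LOG": LogisticRegression(C=1.0, max_iter=1000),      # C is the inverse regularisation strength
    "MLP": MLPClassifier(hidden_layer_sizes=(10,), activation="relu",
                         max_iter=2000, random_state=0),  # one hidden layer of 10 neurons
    "SGD": SGDClassifier(alpha=1e-4, random_state=0),     # regularisation strength
}

X, y = make_classification(n_samples=300, n_features=6, n_informative=4,
                           n_redundant=0, random_state=0)
for name, model in models.items():
    # Training accuracy, printed only to show that every model fits and predicts.
    print(name, round(model.fit(X, y).score(X, y), 3))
```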

Once the algorithms and their hyper-parameters have been described, a grid search (GS) is employed to select the hyper-parameters that maximise the performance metric described in Section 3.3.3.

Table 1 lists the hyper-parameter values explored in the GS for each ML algorithm, together with the total number of possible combinations.

Some of the selected algorithms, namely MLP and SGD, are stochastic and depend on their initialisation. Hence, 10 random initialisations are launched for each combination of hyper-parameters, and the mean value of the performance measure is calculated. The combination with the highest mean value is then selected to configure the ML algorithm. Once the optimal hyper-parameters have been selected, the results of the ML algorithms are reported as the maximum, mean, standard deviation and minimum performance values over 30 random initialisations.
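The selection procedure for a stochastic algorithm can be sketched with `SGDClassifier`, precision as the score, 10 initialisations per combination and 30 for the final summary. The grid, the synthetic imbalanced data and the train/validation/test split are hypothetical assumptions, since the validation scheme is not detailed here; keeping a separate test set for the final summary is a design choice of the sketch.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import SGDClassifier
from sklearn.metrics import precision_score
from sklearn.model_selection import ParameterGrid, train_test_split

# Hypothetical imbalanced data split into train / validation / test sets.
X, y = make_classification(n_samples=600, n_features=6, weights=[0.8, 0.2], random_state=0)
X_tmp, X_te, y_tmp, y_te = train_test_split(X, y, test_size=0.25, stratify=y, random_state=0)
X_tr, X_va, y_tr, y_va = train_test_split(X_tmp, y_tmp, test_size=0.25,
                                          stratify=y_tmp, random_state=0)

grid = {"alpha": [1e-4, 1e-3, 1e-2], "penalty": ["l2", "l1"]}  # hypothetical grid


def precision_run(params, seed, X_eval, y_eval):
    """Train one SGD model with a given random initialisation and return its precision."""
    model = SGDClassifier(**params, random_state=seed).fit(X_tr, y_tr)
    return precision_score(y_eval, model.predict(X_eval), zero_division=0)


# Grid search: mean validation precision over 10 random initialisations per combination.
scored = [(np.mean([precision_run(p, s, X_va, y_va) for s in range(10)]), p)
          for p in ParameterGrid(grid)]
best_mean, best_params = max(scored, key=lambda item: item[0])

# Selected configuration: summarise 30 random initialisations on held-out data.
final = np.array([precision_run(best_params, s, X_te, y_te) for s in range(30)])
summary = {"max": final.max(), "mean": final.mean(), "std": final.std(), "min": final.min()}
print(best_params, {k: round(v, 3) for k, v in summary.items()})
```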

In order to validate the suitability of the two-stage methodology, the classification results of the nine transformed datasets it produces (each of ST, MM and MAD combined with each of P, RF and MI) are compared with those obtained from the raw dataset and from the normalised datasets.

3.3.3. Precision Analysis

After the application of the ML algorithms described in

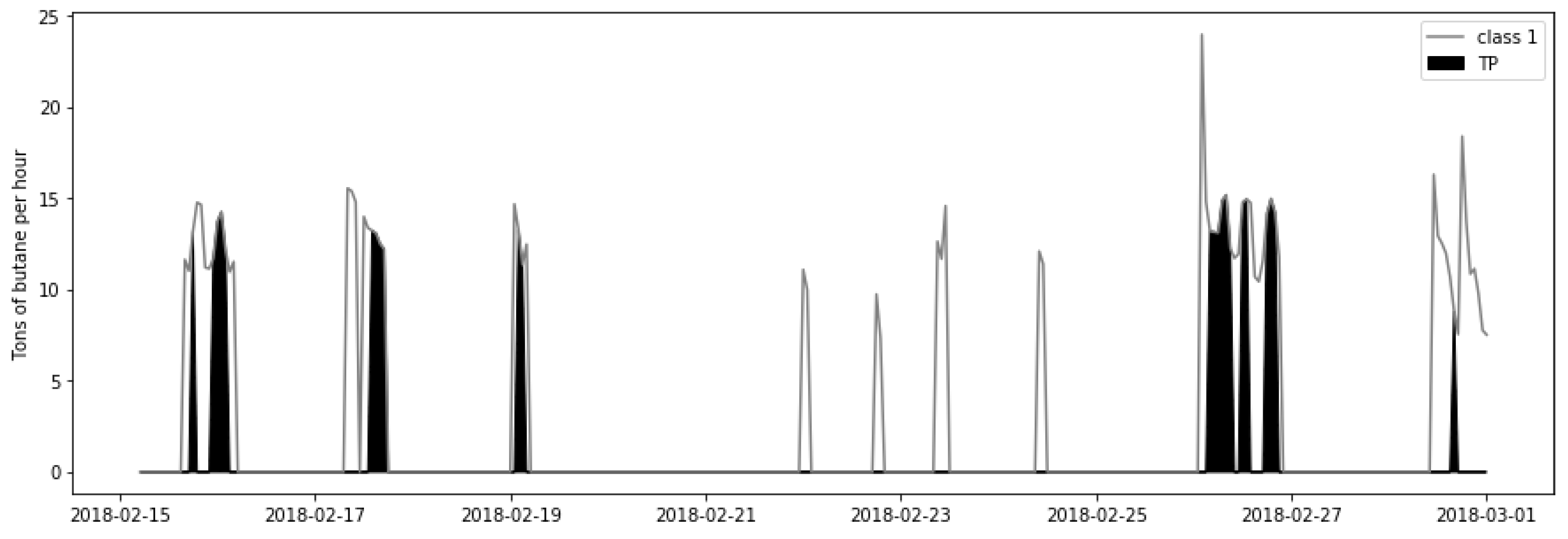

Section 3.3.2 to the nine resultant datasets from the two-stage methodology and to the raw and the normalised ones, the performance of the algorithms over each dataset is evaluated for comparison purposes. The classification ability of each model can be visualised through the confusion matrix; it reflects the relationship between the predicted classes and the real ones. Thus, in the diagonal, the number of samples correctly predicted as class 0 or 1 are collected, which are also known as true negative (TN) and true positive (TP), respectively. In contrast, the elements out of the diagonal represent the samples wrongly classified. More concretely, the cell (0,1) collects the number of samples classified as 1 with 0 their real label, known as false positive (FP) samples; and the cell (1,0) presents the false negative (FN) cases, those erroneously classified as 0 when they really belong to the class 1. The sum of the elements of the confusion matrix TP+TF+FP+FN=N is the total number of classified samples.

From the elements of the confusion matrix, different performance metrics can be derived. A commonly employed metric is the accuracy, $\mathrm{Acc} = (\mathrm{TP} + \mathrm{TN})/N$, defined as the proportion of samples correctly classified. However, it is not recommended for imbalanced datasets, since a high overall accuracy can be reached at the expense of the minority class. Other metrics focus specifically on the classification performance for class 1, such as precision, $\mathrm{Pr} = \mathrm{TP}/(\mathrm{TP} + \mathrm{FP})$, and recall, $\mathrm{Re} = \mathrm{TP}/(\mathrm{TP} + \mathrm{FN})$. Precision measures the proportion of samples predicted by the model as class 1 that actually belong to that class; thus, the higher the precision, the fewer class-0 samples are misclassified as 1. In contrast, recall represents the proportion of class-1 samples detected by the model, so a low recall corresponds to a model with poor ability to recognise the samples of class 1.
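The metrics can be checked on a small hypothetical example with scikit-learn, whose `confusion_matrix` for labels `[0, 1]` is laid out as `[[TN, FP], [FN, TP]]`, matching the cell convention above. The labels and predictions are invented purely for illustration.

```python
from sklearn.metrics import accuracy_score, confusion_matrix, precision_score, recall_score

# Hypothetical imbalanced example: 8 samples of class 0 and 2 of class 1.
y_true = [0] * 8 + [1] * 2
y_pred = [0] * 8 + [1, 0]  # one class-1 sample detected, one missed

# Rows are real classes, columns predicted ones: [[TN, FP], [FN, TP]].
tn, fp, fn, tp = confusion_matrix(y_true, y_pred, labels=[0, 1]).ravel()
n = tn + fp + fn + tp
accuracy = (tp + tn) / n
precision = tp / (tp + fp)
recall = tp / (tp + fn)
print(accuracy, precision, recall)  # 0.9 1.0 0.5

# A trivial model that always predicts class 0 still reaches 80 % accuracy
# while detecting no class-1 sample at all.
y_zero = [0] * 10
print(accuracy_score(y_true, y_zero), recall_score(y_true, y_zero))  # 0.8 0.0
```

For the trivial model, precision is undefined (no sample is predicted as class 1), which scikit-learn handles through the `zero_division` argument of `precision_score`.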

The main interest of the refinery is to complement the operators' decision-making with a highly reliable predictor that detects when a subproduct of improvable quality (class 1) is being produced, with a minimum of false alarms, so that high-cost operational changes are avoided. Therefore, for the automatic creation of the soft sensor within the autoML approach, precision is selected as the principal performance measure.