1. Introduction

Space particle radiation poses a serious threat to the health of astronauts, whereas intelligent robots are not restricted by human physiological conditions. Using such robots to assist astronauts in space utilization and detection in harsh environments is therefore an inevitable step in the development of space station automation technology. This trend is also an important part of the development plans for the international and Chinese space stations [1, 2, 3, 4]. The space station orbits the Earth approximately every 90 min. The air is thin, and the side facing the Sun is unobstructed and exposed to direct light, whereas the other side faces darkness, where the illuminance drops sharply. Furthermore, the unique space environment (e.g., excessive brightness or darkness) and the mirror-like coating of space equipment can cause multiple reflections, particle radiation, and other complex interference [5], which can severely degrade the apparent characteristics of the operation target. Accurate target recognition by intelligent robots is therefore challenging. Pre-processing each captured frame, especially low-illumination frames, helps the robot identify the target accurately. In addition to the four color cameras on the head of the Robonaut 2 robot astronaut developed by the National Aeronautics and Space Administration (NASA), an infrared time-of-flight camera is attached to the robot to provide depth information. Resolving optical image interference in the complex space environment remains challenging [6]. In 2016, NASA adapted the visual image processing algorithm of Robonaut 2 to the complex space environment, replacing the algorithm used by the existing visual sensor, to help Robonaut 2 reliably identify handles, tools, and other moving targets [7]. The Beijing Institute of Technology conducted simulation experiments on the ground and practical applications in the Tiangong-2 laboratory under stable light conditions. The results show that low-illumination image enhancement enables accurate identification of the target and improves the robot's accuracy in grasping the blob [8]. However, an image processing algorithm for the complex interference caused by poor lighting in space has not yet been developed. Thus, improving the quality of the images obtained by intelligent space robots and increasing the accuracy of subsequent target recognition are urgent problems.

This study investigates the negative effects of low-illumination environments, which tend to conceal target feature information. The commonly used processing methods fall into three categories. The first category is histogram equalization (HE), which balances the histogram of the whole image. Gamma correction [9] is another method that enhances contrast and brightness by simultaneously expanding dark regions and compressing bright ones. However, the main drawback of this method is that each pixel is processed individually, without regard to its neighborhood, so the results can be inconsistent with the real scene. Image equalization techniques have been refined to address this problem. Jenifer et al. [10] proposed a fuzzy clipped contrast-limited adaptive HE (CLAHE) algorithm to enhance the local contrast of the image while maintaining image brightness. Singh [11] introduced an HE-based image enhancement method with highly adaptive swarm intelligence optimization to improve the overall enhancement effect and retain the inherent detail information. Khan [12] used the wavelet transform to decompose the image into low- and high-frequency portions. The contrast of the low-frequency part is adjusted using CLAHE, and the resulting image is processed using fuzzy contrast enhancement to maintain the spectral information of the image. Fu et al. [13] utilized a dual-branch network to compensate for global color distortion and local contrast reduction and designed a compressed HE to supplement the data. In addition, variational methods that apply different regularization terms to the histogram have been proposed. For example, contextual and variational contrast enhancement [14] attempts to find a histogram mapping that yields large gray-level differences.

The second category is based on the Retinex theory proposed by Land and McCann in 1971 [15]. The central assumption of Retinex theory is that an image can be decomposed into reflectance and illumination. Retinex theory has been widely studied and applied over the past four decades. The classic single-scale Retinex (SSR) [16], multiscale Retinex (MSR) [17], and multiscale Retinex with color restoration (MSRCR) [18] approaches have been continuously improved and extended to recover additional image information. Similar to the difference-of-Gaussian function, which is widely used in natural vision science, SSR is based on the center/surround Retinex and treats the reflectance as the final enhanced result. MSR is the weighted sum of several SSR outputs at different scales. MSR not only maintains image fidelity and compresses the dynamic range of the image but also achieves color enhancement and invariance. MSRCR adds a color restoration factor to MSR that adjusts the ratio among the color channels, thereby addressing the color distortion caused by local contrast enhancement. NASA also uses Retinex-based techniques to process related images [19, 20]. Several new algorithms based on Retinex theory have since been proposed. Park et al. [21] used a variational optimization-based Retinex algorithm to enhance low-illumination images. Li et al. [22] used a recursive bilateral filter instead of the traditional Gaussian function as the brightness estimation function. Jung et al. [23] used illumination normalization filtering to achieve human eye detection under a wide range of lighting conditions. Fu et al. [24] proposed a weighted variational model called simultaneous reflectance and illumination estimation, which jointly estimates the illumination and reflectance components.

The last category comprises methods based on the dehazing model. Inspired by the dark channel method for dehazing, the authors of [25] observed that an inverted low-illumination image resembles a hazy image. They removed the haze from the inverted low-illumination image by using the method proposed in [26] and then inverted the result again to obtain the final output. Xiao and Gan [27] used a guided joint bilateral filter to refine the initial atmospheric scattered light and generate a new atmospheric veil. They also utilized an atmospheric attenuation model to restore the scene brightness. Salazar et al. [28] proposed an efficient dehazing algorithm based on morphological operations and Gaussian filters. The abovementioned traditional algorithms provide important theoretical support for research on low-illumination image enhancement and improve visual quality to a certain extent.
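
The first two categories discussed above can be illustrated with a minimal sketch. It is not the implementation of any cited work: it assumes 8-bit grayscale images stored as plain Python lists of rows, uses equal weights for MSR, and the function names and default parameters (`gamma=0.5`, `sigmas=(1.0, 2.0, 4.0)`) are hypothetical choices made only for illustration. A practical pipeline would more likely rely on an array library.

```python
import math

def gamma_correct(img, gamma=0.5):
    """Point-wise gamma correction; gamma < 1 expands dark regions.
    Each pixel is mapped independently of its neighborhood."""
    lut = [round(255 * (v / 255) ** gamma) for v in range(256)]
    return [[lut[p] for p in row] for row in img]

def equalize_hist(img):
    """Global histogram equalization via the cumulative histogram."""
    hist = [0] * 256
    for row in img:
        for p in row:
            hist[p] += 1
    cdf, running = [], 0
    for count in hist:
        running += count
        cdf.append(running)
    total = cdf[-1]
    cdf_min = next(c for c in cdf if c > 0)
    if total == cdf_min:  # constant image: nothing to equalize
        return [row[:] for row in img]
    lut = [max(0, round(255 * (c - cdf_min) / (total - cdf_min))) for c in cdf]
    return [[lut[p] for p in row] for row in img]

def gaussian_blur(img, sigma):
    """Separable Gaussian blur with clamped borders (the 'surround')."""
    radius = max(1, int(3 * sigma))
    offsets = range(-radius, radius + 1)
    raw = [math.exp(-k * k / (2 * sigma * sigma)) for k in offsets]
    norm = sum(raw)
    kernel = [v / norm for v in raw]
    height, width = len(img), len(img[0])

    def clamp(i, n):
        return min(max(i, 0), n - 1)

    # Horizontal pass, then vertical pass.
    rows = [[sum(kernel[k + radius] * row[clamp(x + k, width)] for k in offsets)
             for x in range(width)] for row in img]
    return [[sum(kernel[k + radius] * rows[clamp(y + k, height)][x] for k in offsets)
             for x in range(width)] for y in range(height)]

def single_scale_retinex(img, sigma):
    """SSR: log(image) minus log(Gaussian surround), taken as reflectance.
    The +1 offset is an assumption that avoids log(0)."""
    surround = gaussian_blur(img, sigma)
    return [[math.log(p + 1.0) - math.log(s + 1.0) for p, s in zip(row, srow)]
            for row, srow in zip(img, surround)]

def multi_scale_retinex(img, sigmas=(1.0, 2.0, 4.0)):
    """MSR: equally weighted sum of SSR outputs at several scales."""
    outputs = [single_scale_retinex(img, s) for s in sigmas]
    weight = 1.0 / len(outputs)
    height, width = len(img), len(img[0])
    return [[weight * sum(out[y][x] for out in outputs) for x in range(width)]
            for y in range(height)]

if __name__ == "__main__":
    dark = [[10, 12, 15], [11, 40, 14], [10, 13, 12]]
    print(gamma_correct(dark))
    print(equalize_hist(dark))
    print(multi_scale_retinex(dark))
```

The gamma and HE functions operate on each pixel through a lookup table, which is exactly why they ignore neighborhood information, whereas the Retinex functions compare each pixel with a blurred surround. The Retinex outputs are log-domain values and would still need to be rescaled to a displayable range.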

The application of deep learning to image classification, recognition, and tracking has yielded positive results [29, 30, 31, 32]. Deep learning builds network models that imitate the neural networks of the human brain and uses efficient learning strategies to obtain results through multilevel analysis and computation. The work in [33] used convolutional neural networks (CNNs) to extract features at different levels, enhanced them through multiple subnets, and produced the output image through multibranch fusion. Chao et al. used a four-layer convolutional network, AR-CNN, to demonstrate that a deep model can be effectively trained with the features learned in a shallow network [34]. The work in [35] presented a feed-forward fully convolutional residual network trained within a generative adversarial network (GAN) framework. These results confirm the feasibility of deep learning methods. Several deep learning-based image enhancement algorithms have also been proposed and have developed rapidly in the past few years. Huang et al. [36] proposed a novel frame-wise filtering method based on CNNs. A novel multiframe CNN, which takes a non-peak-quality frame and its two nearest peak-quality frames (PQFs) as input, was designed to improve image quality [37]. Zhang et al. proposed recursive residual CNN (RRCNN)-based in-loop filtering to further improve the quality of reconstructed intra frames while reducing the bitrate [38]. Chen et al. [39] proposed a low-illumination image processing pipeline based on the end-to-end training of a fully convolutional network, which can jointly handle noise and color distortion. However, this pipeline is specific to RAW-format data, which limits its applicability. Shen et al. argued that MSR is equivalent to a feedforward CNN with different Gaussian convolution kernels and built a CNN called MSR-net [40] to learn the end-to-end mapping between dark and bright images. Wei et al. designed a deep network called Retinex-Net, which combines image decomposition and illumination mapping [41].

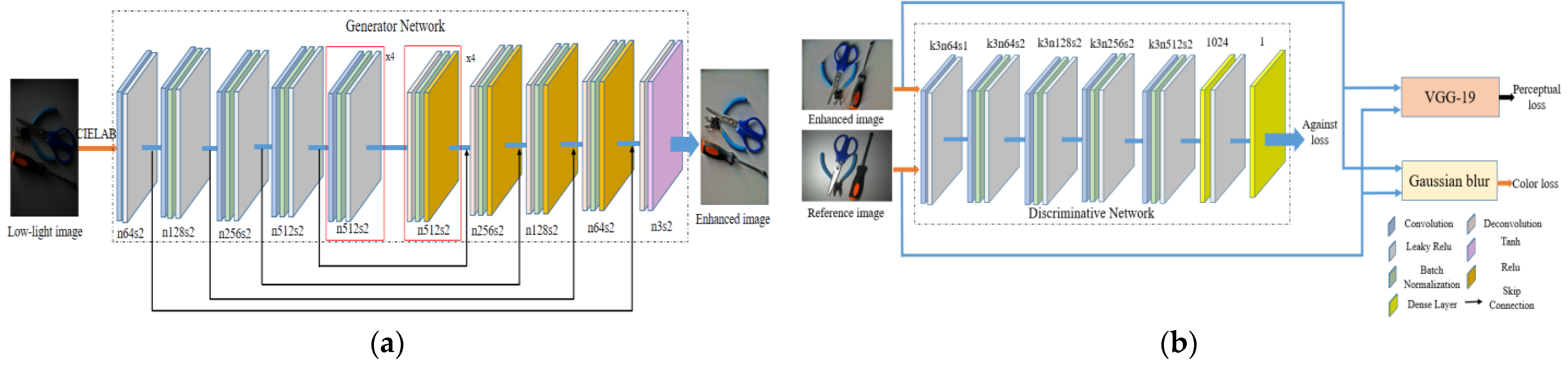

The above algorithms, whether traditional or based on deep learning, achieve excellent results. However, more flexible approaches are needed for processing low-illumination images in space environments with complex lighting. This study builds on the deep convolutional GAN (DCGAN) and performs enhancement in the CIELAB color space to simulate the effect observed by the human eye. The contributions of this study are threefold: (1) a deep neural network-based low-light image enhancement method that improves both objective and subjective image quality is proposed; (2) the use of the CIELAB color space, which is consistent with the mechanism of human color perception, enables the color of the entire image to be recovered to some extent; (3) the proposed method produces bright and natural results with sharp textures and rich details. Moreover, quantitative and qualitative evaluations demonstrate that the proposed method largely outperforms the other methods.
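
To make the CIELAB representation mentioned above concrete, the following sketch converts a single 8-bit sRGB pixel to CIELAB using the standard sRGB linearization and a D65 reference white. The function name `srgb_to_lab` is hypothetical, and the choice of sRGB input and D65 white point is an assumption; this section does not state which RGB space or white point the proposed method uses, nor how the network consumes the resulting channels.

```python
def srgb_to_lab(r, g, b):
    """Convert one 8-bit sRGB pixel to CIELAB (assumed D65 reference white)."""

    def linearize(c):
        # Undo the sRGB transfer curve to obtain linear-light values in [0, 1].
        c = c / 255.0
        return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4

    rl, gl, bl = linearize(r), linearize(g), linearize(b)

    # Linear sRGB -> CIE XYZ (D65).
    x = 0.4124564 * rl + 0.3575761 * gl + 0.1804375 * bl
    y = 0.2126729 * rl + 0.7151522 * gl + 0.0721750 * bl
    z = 0.0193339 * rl + 0.1191920 * gl + 0.9503041 * bl

    def f(t):
        # Cube root with a linear segment near zero, as in the CIELAB definition.
        delta = 6.0 / 29.0
        if t > delta ** 3:
            return t ** (1.0 / 3.0)
        return t / (3.0 * delta ** 2) + 4.0 / 29.0

    # Normalize by the D65 white point before applying f.
    fx, fy, fz = f(x / 0.95047), f(y / 1.0), f(z / 1.08883)
    lightness = 116.0 * fy - 16.0
    a_star = 500.0 * (fx - fy)
    b_star = 200.0 * (fy - fz)
    return lightness, a_star, b_star

if __name__ == "__main__":
    for pixel in [(0, 0, 0), (30, 30, 30), (200, 60, 40), (255, 255, 255)]:
        print(pixel, srgb_to_lab(*pixel))
```

In this representation the lightness channel is separated from the two chromatic channels, which is one reason CIELAB is often considered closer to human color perception than RGB.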

The remainder of this paper is organized as follows.

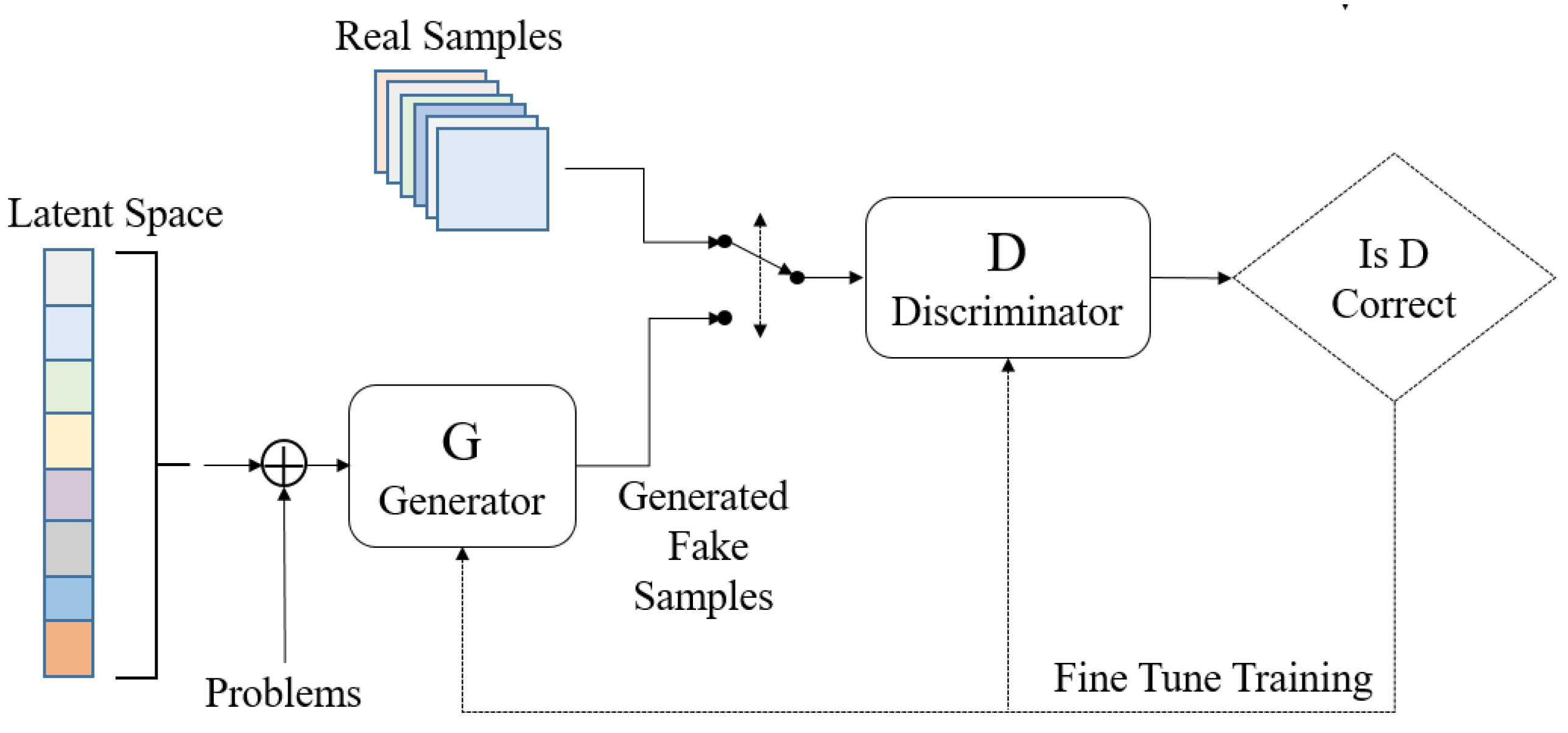

Section 2 reviews work related to the algorithms used in this study, mainly the GAN, DCGAN, and Wasserstein GAN (WGAN).

Section 3 describes the proposed network model, which is based on the WGAN loss function; the loss function is further improved to address unstable GAN training.

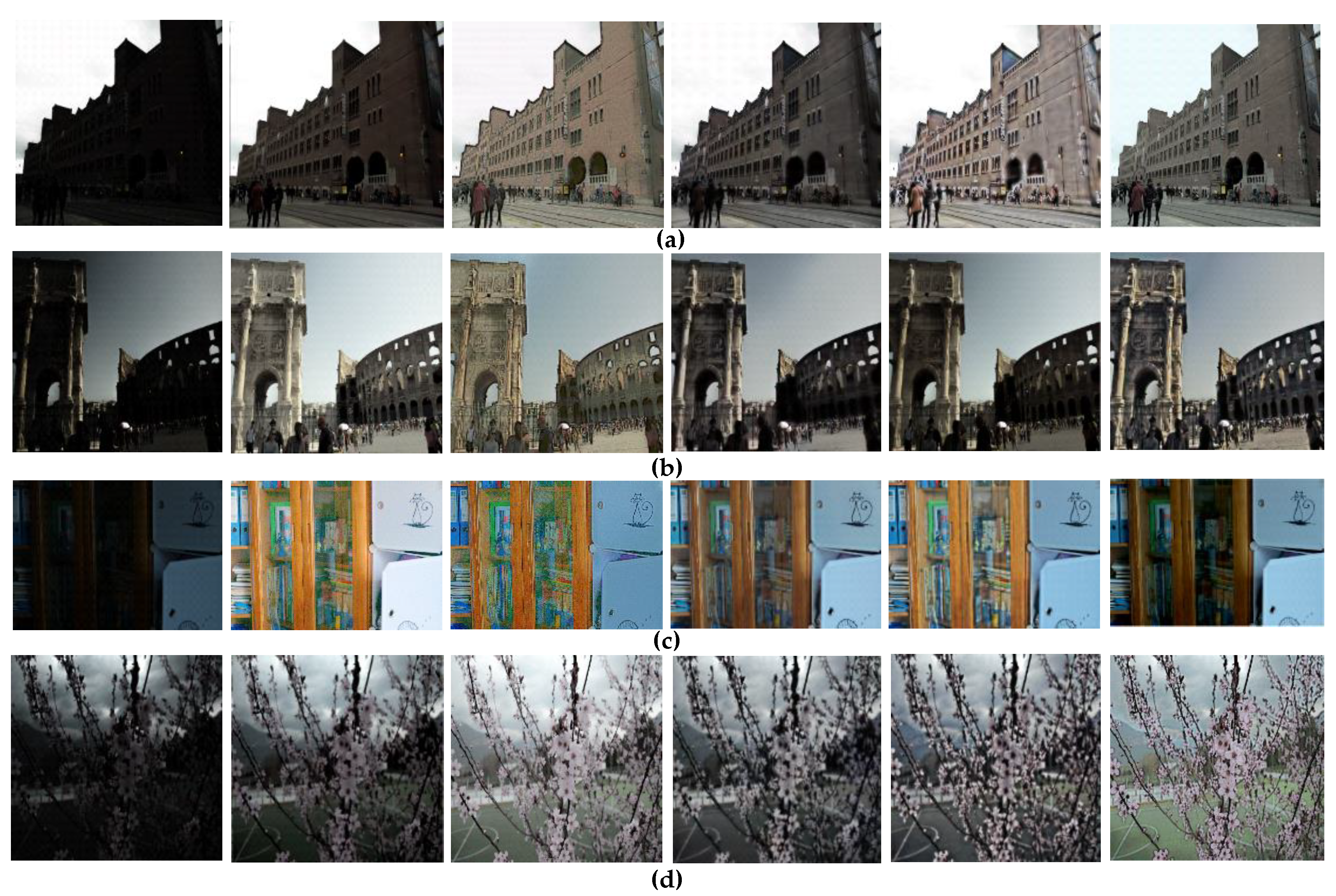

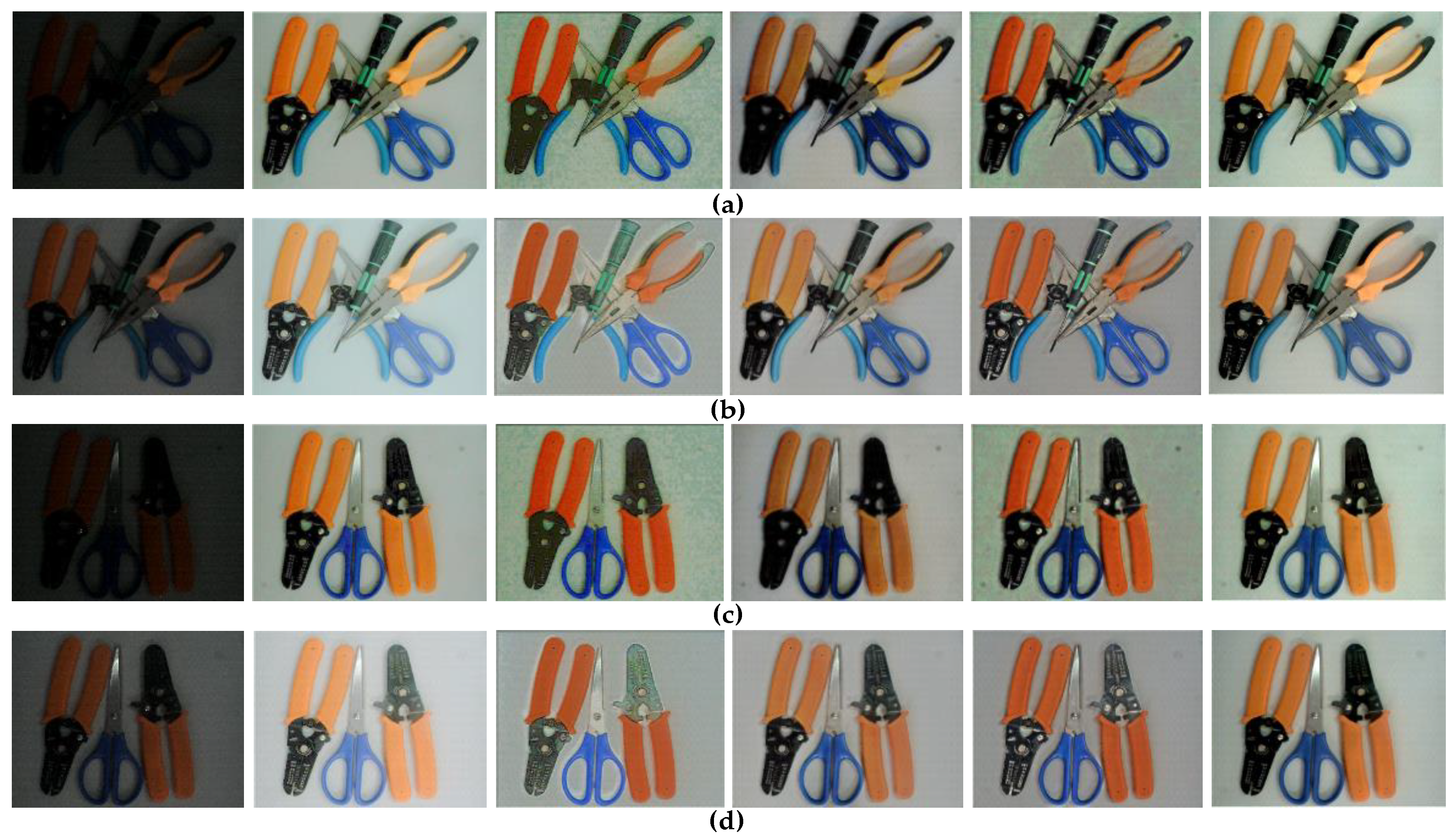

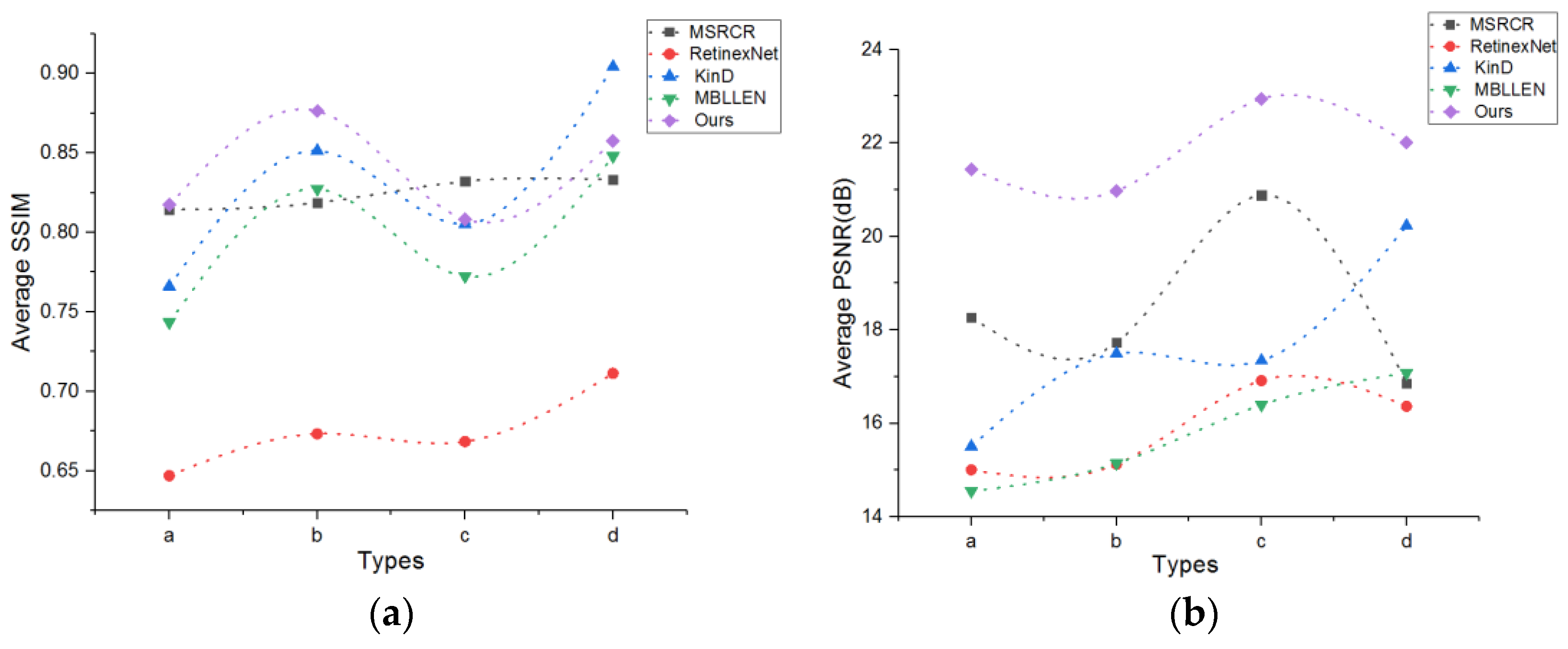

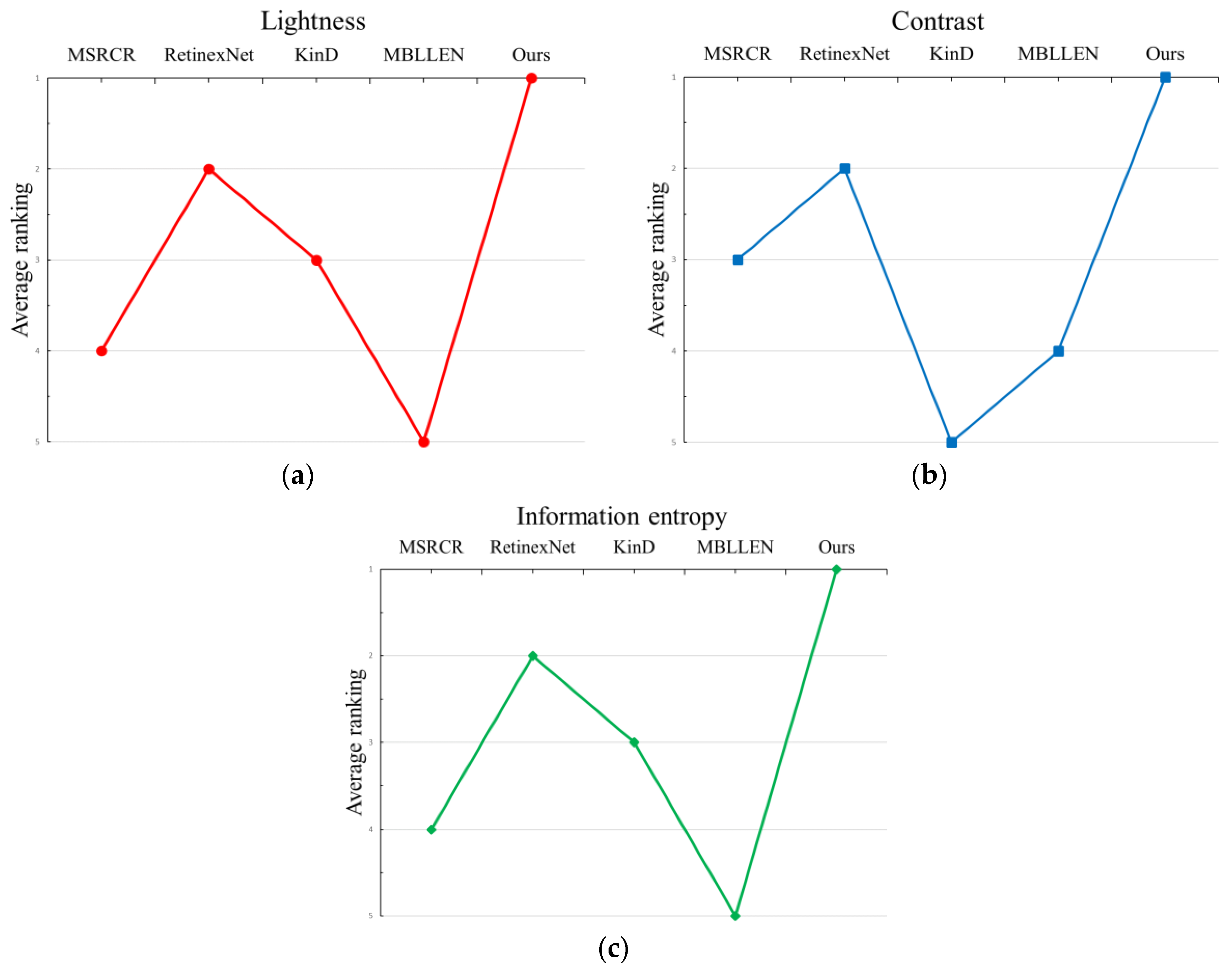

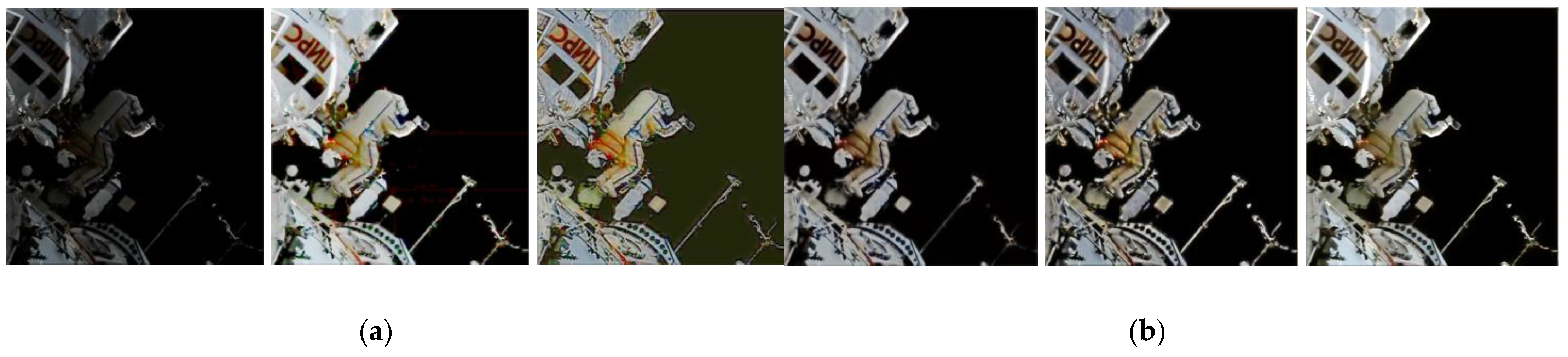

Section 4 introduces and compares different low-illumination image processing algorithms and analyzes the results of low-illumination image processing in three situations (general, special, and actual images).

Section 5 presents the conclusions drawn from this research.