Perception of a Haptic Stimulus Presented Under the Foot Under Workload

Abstract

1. Introduction

2. Background

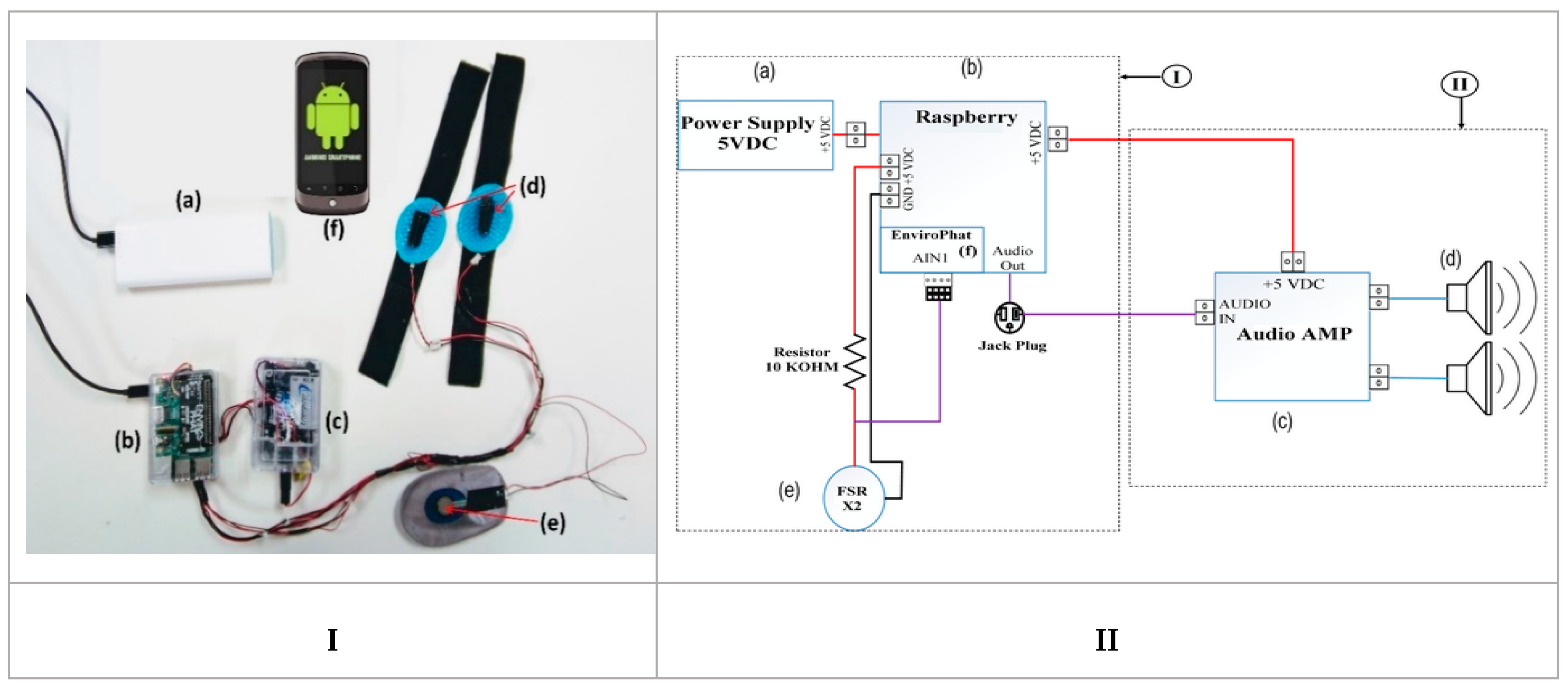

3. Apparatus: Enactive Shoe

3.1. Wearable Device

3.2. Haptic Stimulus Used

4. Experiment

4.1. Participants

4.2. Experimental Setup

4.2.1. Test Environment

4.2.2. Experimental Conditions

4.3. Experimental Sessions: Control and Experimental

4.4. Familiarization Phase

4.5. Test Phase

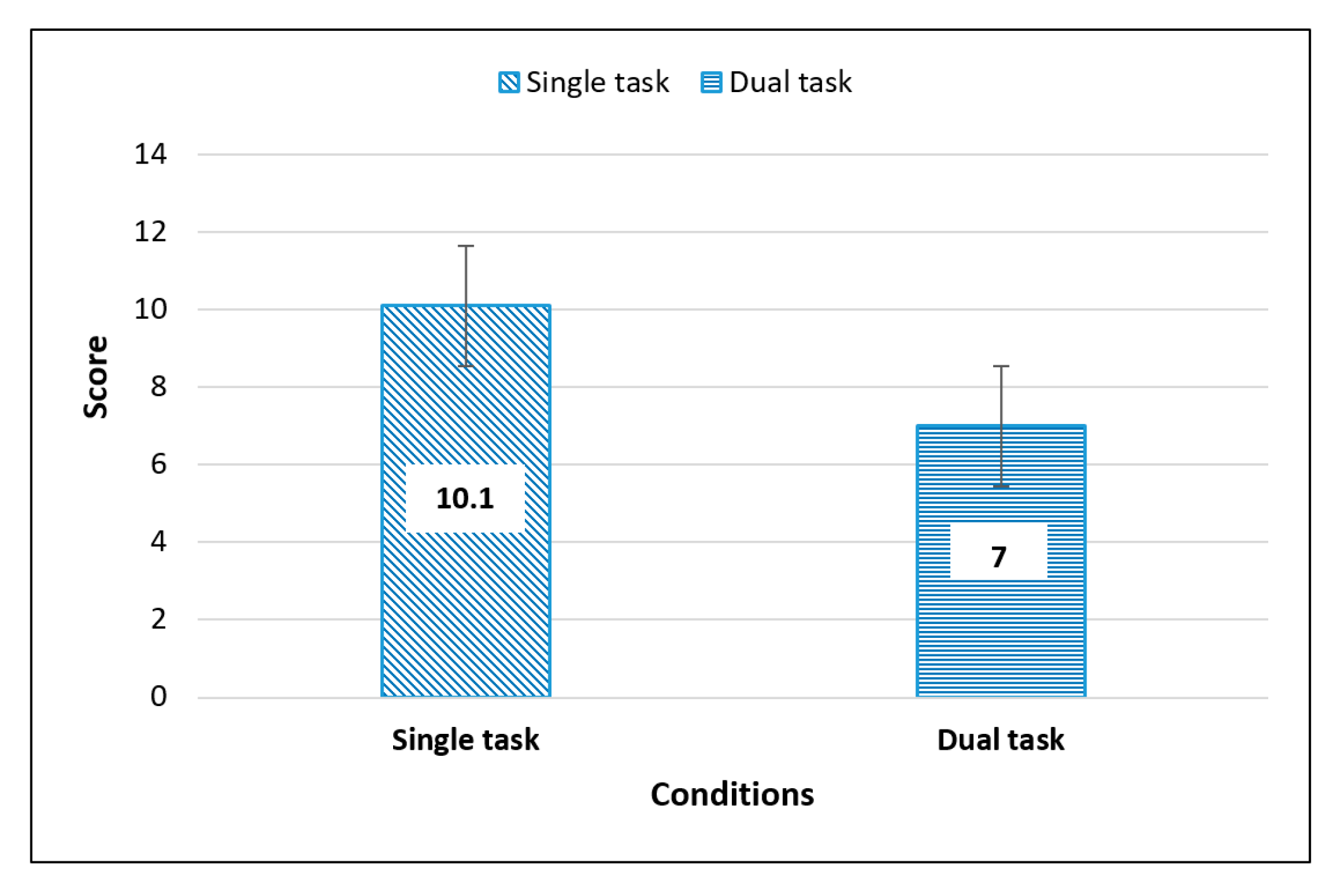

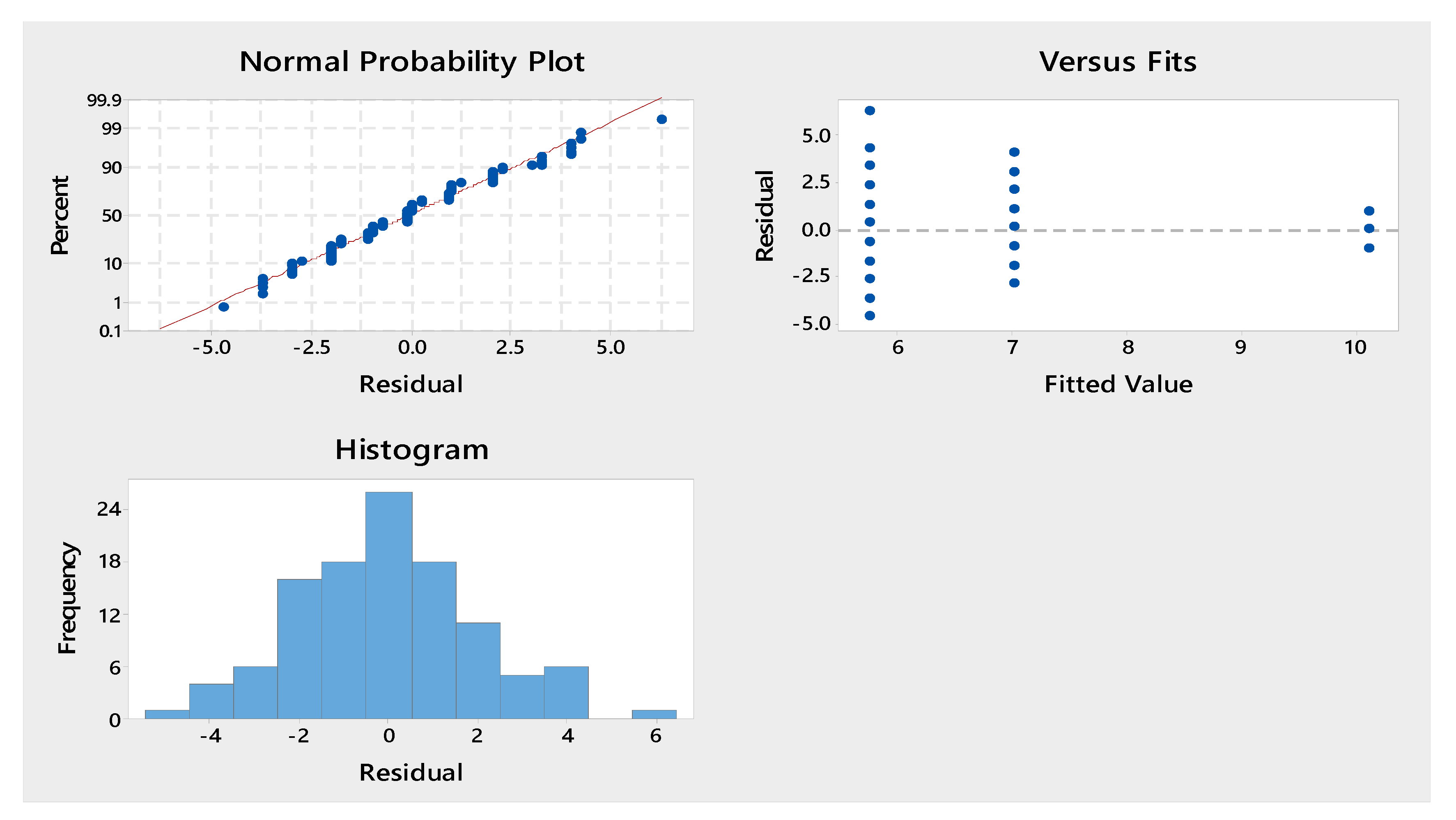

5. Results

6. Discussion

6.1. Influence of Walking on the Perception of a Vibrotactile Stimulus

6.2. Influence of Cognitive Tasks on Vibrotactile Stimulus Perception

6.3. Implications of the Results of This Study

7. Conclusions and Future Work

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Characteristic | Value |
|---|---|
| Age (years) | 26.45 ± 4.45 * |
| Height (cm) | 162.95 ± 29.42 * |
| Weight (kg) | 78.98 ± 33.26 * |
| Gender | Men (n = 14); women (n = 14) |

| Session | Condition | Phase | Distraction | Positioning |
|---|---|---|---|---|
| Control | 1: At rest | Familiarization phase | None | Static: at rest |
| | | Test phase | None | Static: at rest |
| Experimental | 2: Counting forwards | Familiarization phase | Counting forwards | At rest |
| | | Test phase | Counting forwards | At rest |
| | 3: Counting backwards | Familiarization phase | Counting backwards | At rest |
| | | Test phase | Counting backwards | At rest |
| | 4: Walking | Familiarization phase | Walking | Moving |
| | | Test phase | Walking | Moving |

| Comparison | Difference of Means | SE of Difference | 95% CI | T-Value | Adjusted p-Value |
|---|---|---|---|---|---|
| CF–At rest | −3.107 | 0.550 | (−4.541; −1.673) | −5.65 | < 0.001 |
| CB–At rest | −3.714 | 0.550 | (−5.148; −2.280) | −6.76 | < 0.001 |
| Walking–At rest | −4.357 | 0.550 | (−5.791; −2.923) | −7.93 | < 0.001 |
| CB–CF | −0.607 | 0.550 | (−2.041; 0.827) | −1.10 | 0.687 |
| Walking–CF | −1.250 | 0.550 | (−2.684; 0.184) | −2.27 | 0.11 |
| Walking–CB | −0.643 | 0.550 | (−2.077; 0.791) | −1.17 | 0.647 |

CF: counting forwards; CB: counting backwards.
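As an arithmetic check on the pairwise comparisons (CF: counting forwards; CB: counting backwards), the following minimal sketch recomputes each T-value as the difference of means divided by its SE. It also recovers the multiplier implied by each 95% CI half-width and confirms that the differences between two distracted conditions follow from their differences to the at-rest baseline. The values are copied from the table. The function names are hypothetical. The multiplier is only inferred from the reported intervals, since the adjustment method behind the adjusted p-values is not stated here.

```python
"""Arithmetic checks on the pairwise comparisons reported in the table.

Values are copied from the table; the function names are illustrative only.
"""

# (comparison, difference of means, SE of difference, 95% CI lower, 95% CI upper)
ROWS = [
    ("CF-At rest", -3.107, 0.550, -4.541, -1.673),
    ("CB-At rest", -3.714, 0.550, -5.148, -2.280),
    ("Walking-At rest", -4.357, 0.550, -5.791, -2.923),
    ("CB-CF", -0.607, 0.550, -2.041, 0.827),
    ("Walking-CF", -1.250, 0.550, -2.684, 0.184),
    ("Walking-CB", -0.643, 0.550, -2.077, 0.791),
]


def t_value(diff: float, se: float) -> float:
    """T statistic of one comparison: difference of means divided by its SE."""
    return diff / se


def implied_multiplier(se: float, lower: float, upper: float) -> float:
    """CI half-width divided by the SE, i.e. the critical value the interval used."""
    return (upper - lower) / 2 / se


def baseline_residuals(rows) -> dict:
    """Compare each between-distraction difference with the one derived from the
    at-rest baseline, e.g. (Walking-CF) = (Walking-At rest) - (CF-At rest).
    Returns the discrepancy per comparison (0 means fully consistent)."""
    diff = {label: d for label, d, *_ in rows}
    pairs = {"CB-CF": ("CB", "CF"), "Walking-CF": ("Walking", "CF"), "Walking-CB": ("Walking", "CB")}
    return {
        label: (diff[f"{a}-At rest"] - diff[f"{b}-At rest"]) - diff[label]
        for label, (a, b) in pairs.items()
    }


if __name__ == "__main__":
    for label, d, se, lo, hi in ROWS:
        print(f"{label:<16} t = {t_value(d, se):6.2f}   multiplier = {implied_multiplier(se, lo, hi):.3f}")
    for label, residual in baseline_residuals(ROWS).items():
        print(f"{label:<16} residual = {residual:+.3f}")
```

Because the SE is reported to only three decimals, recomputed T-values can differ from the table in the last digit (for example, −6.75 versus −6.76). Every interval has the same half-width (1.434). This gives an implied multiplier of about 2.607, which is larger than the unadjusted two-sided 95% normal critical value of 1.96. That difference is consistent with simultaneous (family-wise) intervals.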

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Chapwouo Tchakoute, L.D.; Menelas, B.-A.J. Perception of a Haptic Stimulus Presented Under the Foot Under Workload. Sensors 2020, 20, 2421. https://doi.org/10.3390/s20082421
