Abstract

The smooth variable structure filter (SVSF) is a filter based on sliding-mode concepts that offers good stability and robustness against modeling uncertainties and errors. However, the SVSF is less effective at suppressing Gaussian noise. This paper presents a novel smooth variable structure smoother (SVSS) based on the SVSF, which addresses this drawback and improves the SVSF estimation accuracy. State estimation for a linear Gaussian system with the SVSS is divided into two steps. First, the SVSF state estimate and covariance are computed during the forward pass in time. Then, the smoothed state estimate is computed during the backward pass using the measurement innovation and the estimated covariance matrix. In simulations of maneuvering target tracking, the SVSS performs better than another SVSF-based smoother and the Kalman smoother in different tracking scenarios. Therefore, the SVSS proposed in this paper could be widely applied to state estimation in dynamic systems.

1. Introduction

State and parameter estimation of dynamic systems plays a key role in various application fields. In practice, real-time state estimation alone is often not enough: a higher-precision estimate is also needed, which a smoother obtains by using additional measurements made after the time of the estimated state vector [1,2,3,4]. For example, in a radar system, a smoother is used to obtain a more precise track for battlefield situation estimation and to estimate aircraft performance more accurately. Smoothers are generally divided into fixed-point, fixed-interval, and fixed-lag smoothers [5,6,7]; which type to use depends on the attributes of the application.

In a dynamic system, the real-time sampled data contains various interferences and noises, and it is difficult to obtain the true state of the system. In 1960, a recursive solution for the linear Gaussian case was obtained using the Kalman filter (KF) [8]. This method is considered the best under a linear, unbiased, minimum mean square error condition. However, the KF estimates are imprecise and unstable when the system is nonlinear. To address these problems, several methods have been proposed, for example, the extended Kalman filter (EKF) [9], the unscented Kalman filter (UKF) [10], and the cubature Kalman filter (CKF) [11,12]. The EKF treats a very small region of a nonlinear system as a linear system, which is equivalent to a first-order Taylor approximation. The UKF is equivalent to a second-order Taylor approximation and does not require calculating the Jacobian matrix. In contrast, the CKF is based on a third-degree spherical-radial cubature rule that provides a set of cubature points, scaling linearly with the state-vector dimension, to approximate the state expectation and covariance of a nonlinear system. CKF is equivalent to the fourth-order Taylor approximation and is more stable. For nonlinear and non-Gaussian systems, research on the particle filter is very active, but its heavy computational load limits its scope of application [13,14].

One of the main problems of KF-based filters is that estimation performance degrades in the presence of modeling and parameter uncertainties. For example, state noises and measurement noises have heavy-tailed and/or skewed non-Gaussian distributions when the measurements contain much clutter [15,16,17]. To deal with such unknown noises, some robust methods have been presented [18,19]. For systems with heavy-tailed or skewed measurement noise, robust Kalman filters have been proposed that model the measurement noise with the Student's t or skew-t distribution [15,20]. A filter based on a detect-and-reject idea has been proposed for measurements that are partially disturbed by outliers [21]. To improve the robustness and performance of the EKF, optimization algorithms [22], an adaptive step size control method [23], and an online expectation-maximization approach [24] have been applied; other approaches include optimal [25] and minimax estimators [26]. These filters perform poorly in a system with an uncertain model, because the theoretical signal model of the filter then mismatches the varying state model of the system; they are effective only when the theoretical model is consistent with the actual state model. When the system is a hybrid system that contains a finite number of models, multi-model methods, which differ from single-model strategies, have been proposed to estimate the system state. Popular multi-model methods include the interactive multi-model method and the variable structure interactive multi-model method [27,28,29,30,31]. So far, state estimation of dynamic systems with uncertain models and parameters remains a difficult problem that is worth further study.

The smooth variable structure filter (SVSF) was proposed in 2007 based on variable structure theory, and it has advantages in dealing with uncertain modeling and noise interference [32]. Like the KF, the SVSF is a predictor-corrector strategy. Its gain is a discontinuous correction gain that keeps the state estimate within a small deviation of the true state trajectory, which improves the stability and robustness of the estimation [32]. The SVSF is known to be a sub-optimal filter [10,12], and different methods have been proposed to enrich the theory [33,34,35,36], such as a higher-order version and a revised version [37] of the SVSF. Gadsden derived the state covariance of the SVSF, which opened up further applications [38]. References [10,12] used the state covariance to calculate the optimal smoothing boundary layer and thereby decide whether to use the SVSF or another filter (e.g., EKF, UKF, CKF), and obtained better filtering results. The SVSF has developed significantly and has been applied in various fields, such as vehicle navigation [39,40], battery management [41,42], fault detection and diagnosis [43,44,45], and artificial intelligence [45,46,47]. However, the ability of the SVSF to eliminate noise does not meet the estimation requirements of many applications, such as target tracking; a smoother can improve this. Few works have studied the smoothing problem of the SVSF. The earliest related articles [48,49] presented a two-pass smoother based on the SVSF (labeled SVSTPS), which uses the SVSF gain. As mentioned above, the SVSF gain is considered suboptimal in linear cases [10,12]. Therefore, the performance of SVSF smoothing can be further improved.

This paper is inspired by the Kalman smoother [5,6] and derives the proposed SVSS algorithm for the linear case. Instead of using the SVSF gain, the proposed SVSS method obtains a better state estimate by using the innovation sequence and projection theory [8]. Our previous work focused on maneuvering target tracking [50,51,52,53], so the SVSS is also applied to maneuvering target tracking here. The rest of the paper is structured as follows. Section 2 reviews the SVSF. Section 3 presents the smoothing theory and the SVSS algorithm. Section 4 presents the simulations and analysis. Section 5 summarizes the main findings of this paper.

2. The SVSF Strategies

Sliding-mode control and estimation is a traditional technique [54] that is widely used because it is easy to implement and robust to modeling uncertainties and errors. Based on sliding-mode concepts, Habibi first proposed the variable structure filter (VSF) in 2003 [54], a new type of predictor-corrector estimator that is applied to linear systems. The SVSF, as an improved form of the VSF, can be used for linear or nonlinear systems [32].

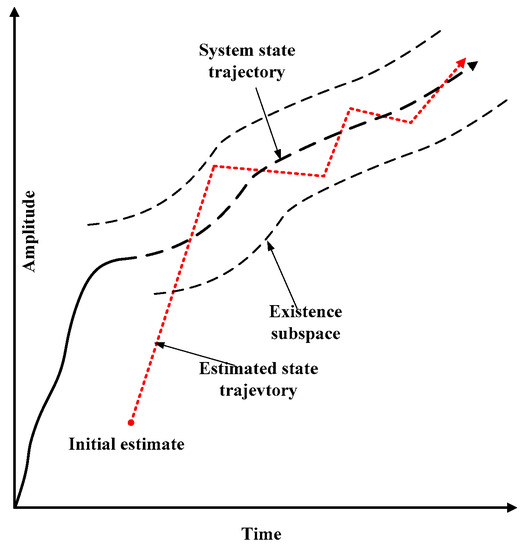

The trajectory of the state variable over time is shown in Figure 1. In the SVSF concept, the system state trajectory is generated by uncertain modeling. The estimated state trajectory approaches the true trajectory until it reaches the existence subspace. Once the estimated state is in the existence subspace, it is forced to switch back and forth across the true state trajectory. If modeling errors and noises are bounded, the estimates remain within this limit. The SVSF strategy is therefore stable and robust to modeling uncertainties and errors.

Figure 1.

SVSF estimation concept [32].

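In the case of a linear dynamic system under zero-mean Gaussian noise, the state equation and observation equation are given by Equation (1):

$$x_{k+1} = F x_k + w_k, \qquad z_{k+1} = H x_{k+1} + v_{k+1} \tag{1}$$

where $x_k$ is the state, $z_k$ is the measurement, $F$ is the system transition matrix, $H$ is the measurement matrix, and $w_k$ and $v_k$ are the system noise and measurement noise, respectively.

The iterative process of SVSF is as follows:

The predicted state estimate and measurement estimate are calculated as:

$$\hat{x}_{k+1|k} = F \hat{x}_{k|k} \tag{2}$$

$$\hat{z}_{k+1|k} = H \hat{x}_{k+1|k} \tag{3}$$

The measurement innovation is expressed by:

$$e_{z,k+1|k} = z_{k+1} - \hat{z}_{k+1|k} \tag{4}$$

Based on Reference [32], the SVSF gain is given by:

$$K_{k+1} = H^{+} \left( \left| e_{z,k+1|k} \right| + \gamma \left| e_{z,k|k} \right| \right) \circ \mathrm{sat}\left( \bar{\psi}^{-1} e_{z,k+1|k} \right) \tag{5}$$

where $K_{k+1}$ is a gain function, $H^{+}$ is the generalized inverse matrix of $H$, $\gamma$ ($0 < \gamma < 1$) is the SVSF “memory” or convergence rate, $|e|$ is the absolute value of $e$, and $\circ$ represents the multiplication of the corresponding elements of the two matrices (Hadamard product). Besides,

$$\mathrm{sat}\left( \bar{\psi}^{-1} e_{z,k+1|k} \right)_i = \begin{cases} 1, & e_{z_i,k+1|k}/\psi_i \ge 1 \\ e_{z_i,k+1|k}/\psi_i, & -1 < e_{z_i,k+1|k}/\psi_i < 1 \\ -1, & e_{z_i,k+1|k}/\psi_i \le -1 \end{cases} \tag{6}$$

and

$$\bar{\psi}^{-1} = \mathrm{diag}\left( \frac{1}{\psi_1}, \ldots, \frac{1}{\psi_m} \right) \tag{7}$$

where $\psi_i$ ($i = 1, \ldots, m$) represents the $i$-th element of the vector of smoothing boundary values, and $m$ is the measurement dimension. The state estimate is updated by:

$$\hat{x}_{k+1|k+1} = \hat{x}_{k+1|k} + K_{k+1} \tag{8}$$

The posterior measurement estimate and its innovation are described as:

$$\hat{z}_{k+1|k+1} = H \hat{x}_{k+1|k+1} \tag{9}$$

$$e_{z,k+1|k+1} = z_{k+1} - \hat{z}_{k+1|k+1} \tag{10}$$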

The SVSF estimation process converges to the existence subspaces as shown in Figure 1 and Figure 2. The width of the existence subspace is an uncertain dynamic and time-varying function. In general, the width of is not completely known, but an upper bound (i.e., ) can be chosen based on prior knowledge.
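The predictor-corrector cycle of the SVSF can be sketched in a few lines of Python with NumPy. This is a minimal sketch, not the authors' implementation: it assumes the gain form of Reference [32] with an element-wise saturation function, a measurement matrix `H` whose pseudo-inverse maps the measurement error back to the state, and illustrative names (`svsf_step`, `psi`, `gamma`) that do not appear in the paper.

```python
import numpy as np

def sat(v):
    """Element-wise saturation of v to the interval [-1, 1]."""
    return np.clip(v, -1.0, 1.0)

def svsf_step(x_post, e_post, z, F, H, psi, gamma):
    """One SVSF predict/correct cycle for a linear system (illustrative sketch).

    x_post : previous a posteriori state estimate
    e_post : previous a posteriori measurement error
    z      : new measurement
    psi    : smoothing boundary layer widths, one per measurement (assumed > 0)
    gamma  : SVSF "memory" (convergence rate), assumed 0 < gamma < 1
    """
    x_pred = F @ x_post                    # predicted state
    e_pred = z - H @ x_pred                # a priori measurement error (innovation)
    # Gain: H^+ [ (|e_pred| + gamma * |e_post|) o sat(e_pred / psi) ]
    corr = (np.abs(e_pred) + gamma * np.abs(e_post)) * sat(e_pred / psi)
    gain = np.linalg.pinv(H) @ corr
    x_new = x_pred + gain                  # corrected state
    e_new = z - H @ x_new                  # a posteriori measurement error
    return x_new, e_new
```

When an innovation component exceeds its boundary layer width, the saturation equals ±1 and the full error is corrected (the switching regime); inside the boundary layer the correction is scaled down, which is what suppresses chattering.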

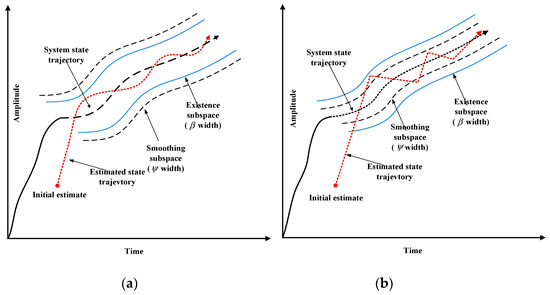

Figure 2.

Smoothing boundary layer concept [10,32] (a) Smoothed estimated trajectory ; (b) Presence of chattering effect .

In the existence subspace, the SVSF gain forces the estimated state to switch back and forth along the true state trajectory. The high-frequency switching caused by the SVSF gain is called chattering [32]. Chattering makes the estimation result inaccurate, and it can be minimized with a suitably chosen smoothing boundary layer width. As shown in Figure 2a, when the smoothing boundary layer is larger than the existence subspace boundary layer, the estimated state trajectory is smoothed. When the smoothing boundary layer is smaller than the existence subspace boundary layer, the chattering remains because the model uncertainty is underestimated, as shown in Figure 2b. Therefore, the SVSF algorithm is an effective estimation strategy for a system that has no explicit model.

SVSF is generally used for systems in which the state and the measurement have the same dimension. If the dimension of the state is larger than the dimension of the measurement, “artificial” measurements need to be added [4]. For example, to estimate the speed in target tracking, “artificial” speed measurements must be added.

3. Smoothing Theories and the Proposed Algorithms

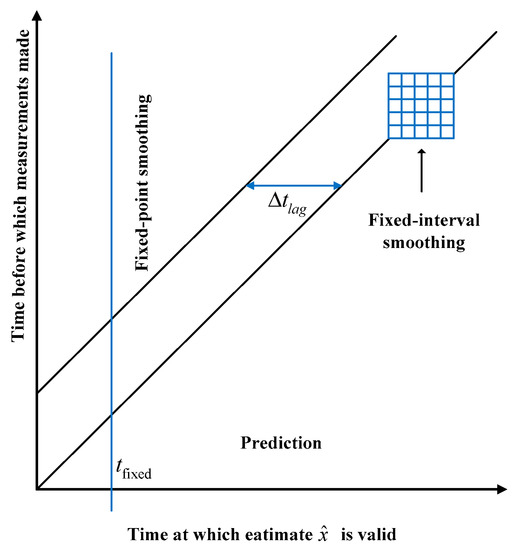

Smoothing is a practical strategy that uses additional measurements made after the time of the estimated state vector to obtain better estimates than those attainable by filtering alone. Unfortunately, it is often overlooked. Since the KF algorithm was proposed in 1960 [8], optimal smoothing methods have been derived from the same model using Kalman theory [55]. Smoothers are the algorithmic or analog implementations of smoothing methods. The relation among the smoother, predictor, and filter is illustrated in Figure 3. Fixed-interval, fixed-lag, and fixed-point smoothers are the most typical smoothers, each developed to solve a different type of application problem. The fixed-interval smoother is suitable for trajectory optimization problems.

Figure 3.

Estimate/measurement timing constraints [55].

SVSF is a novel model-based robust state estimation method that is effective at reducing the influence of disturbances and uncertainties. However, SVSF is less efficient at noise elimination than the Kalman filter [10]. Therefore, if its noise-canceling performance can be improved, SVSF could be developed further and used more widely in state estimation. Previous research indicates that the influence of noise can be reduced effectively by following a filter with an additional smoothing process. Therefore, this paper studies the fixed-interval smoother based on SVSF.

3.1. The Kalman Smoother

The popular Rauch–Tung–Striebel (RTS) two-pass smoother is one of the fixed-interval Kalman smoothers [55]; it is labeled KS here. KS is widely used for its fast iteration and high precision, and its iterative process is described below. When the current measurements are obtained, the system state and covariance are calculated by the Kalman filter in real time; they are then used in the smoothing process to obtain a more accurate estimate [55].

The smoothing process is operated backward from the last estimation to the first one [55], and the corresponding iterations are given by:

Equations (11)–(17) summarize the Rauch–Tung–Striebel (RTS) Two-pass Smoother (the KS algorithm), which is an iterative process.
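The two passes of a Kalman smoother can be sketched as follows. This is a minimal sketch of the textbook RTS recursion for a linear time-invariant model, written under the assumption that it corresponds to the KS described here; the function names and the predict-then-update indexing (the prior `x0`, `P0` refers to the step before the first measurement) are illustrative choices, not taken from the paper.

```python
import numpy as np

def kalman_filter(zs, F, H, Q, R, x0, P0):
    """Forward pass: store predicted and filtered means/covariances."""
    xs_pred, Ps_pred, xs_filt, Ps_filt = [], [], [], []
    x, P = x0, P0
    for z in zs:
        x_pred = F @ x                          # state prediction
        P_pred = F @ P @ F.T + Q                # covariance prediction
        S = H @ P_pred @ H.T + R                # innovation covariance
        K = P_pred @ H.T @ np.linalg.inv(S)     # Kalman gain
        x = x_pred + K @ (z - H @ x_pred)       # measurement update
        P = (np.eye(len(x)) - K @ H) @ P_pred
        xs_pred.append(x_pred); Ps_pred.append(P_pred)
        xs_filt.append(x); Ps_filt.append(P)
    return (np.array(xs_pred), np.array(Ps_pred),
            np.array(xs_filt), np.array(Ps_filt))

def rts_smoother(xs_pred, Ps_pred, xs_filt, Ps_filt, F):
    """Backward pass: refine each filtered estimate with later information."""
    xs_s, Ps_s = xs_filt.copy(), Ps_filt.copy()
    for k in range(len(xs_filt) - 2, -1, -1):
        # Smoother gain relating step k to step k + 1
        C = Ps_filt[k] @ F.T @ np.linalg.inv(Ps_pred[k + 1])
        xs_s[k] = xs_filt[k] + C @ (xs_s[k + 1] - xs_pred[k + 1])
        Ps_s[k] = Ps_filt[k] + C @ (Ps_s[k + 1] - Ps_pred[k + 1]) @ C.T
    return xs_s, Ps_s
```

The last smoothed estimate equals the last filtered estimate, since no later measurement is available there; every earlier estimate has a covariance no larger than its filtered counterpart.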

3.2. The Proposed SVSS Algorithm

The covariance does not necessarily have to be calculated in the SVSF, but it is essential for the smoother [38]. The linear system is described by Equation (1). Similar to the KF, the predicted state covariance matrix of the SVSF is given by:

where represents the posterior state covariance at time, and represents the system transition matrix, is the white noise involved in the system and is calculated by:

The state estimate is given by

According to updated estimates and real measurement , the innovation can be expressed by:

The state estimate is updated as:

The updated measurement innovation is calculated by:

The posterior state covariance at time can be written as:

The relationship between the measurement noises and state estimates is depicted as Equation (26) under the linear Gaussian system:

where means the measurement noise covariance matrix. In addition, follows a Gaussian distribution, i.e.

According to Equations (25) and (26), Equation (25) can be rewritten as:

Equations (18)–(28) describe the SVSF algorithm together with the iterative update of the covariance matrix.

The earliest article about SVSF smoothing [48] presented a two-pass smoother (labeled SVSTPS) based on the Rauch–Tung–Striebel (RTS) two-pass smoother. Its smoothing process uses Equation (17); in other words, it is based directly on the SVSF gain. However, the estimation result is not satisfactory, so the SVSS using innovation sequences is proposed here. The innovation sequence is an orthogonal sequence containing the same statistical information as the original random sequence, so the innovation sequence is a white noise sequence with zero mean. The smoothing process in the proposed smoother (SVSS) is updated with the innovation sequence according to the projection theorem (minimum mean square error criterion), and it performs better at eliminating Gaussian noise. The recursive equation relates the smoothed estimates and (with ), as follows:

where and are calculated by and respectively.

Proof.

When and , Equation (29) can be written as:

where the smoothing gain is defined in projection theory as:

And according to Equation (1) and Equation (21), the innovation is given by:

Furthermore, according to Reference [56], the true state error is calculated by:

where and are defined as:

Suppose n > 0, then according to Equation (19) and Equation (26), when and , the expectation can be calculated as:

The true state value is expressed as and , substituting (33) into (36) yields

From Equation (28), Equation (37) can be written as:

Substituting Equation (38) and Equation (39) into Equation (30) yields:

So Equation (30) is written as:

When and , Equation (41) can be written as:

where and are calculated by ,. □

Further details of the derivation are given in Appendix A. Moreover, the validity of this derivation can be verified against Reference [6].

Equations (18)–(29) summarize the SVSS algorithm proposed in this paper. The pseudo-code is given as follows.

| The SVSS Algorithm |
|---|
| Input: {} and the measurement sequence {} |
| Step 1: filter |
| Step 2: smoothing |
| Output: {} |

4. Simulation

4.1. A Classic Target Tracking Scenario

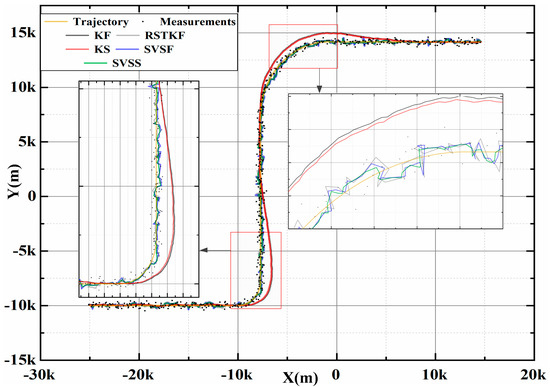

This classic simulation scenario is commonly used in References [10,30,57]. It is a tracking problem in a generic air traffic control (ATC) scenario in which the target position is provided by a radar system. The system noise obeys a Gaussian distribution with standard deviation. Figure 4 shows that an aircraft spent 125 s to move from the initial position of at , and flew with a speed of along the x-axis direction and remained along the y-axis direction. Then it turned at a rate of for . Next, the target flew in a straight line for , and maneuvered at a rate of for . Finally, the target flew straight for until the end.

Figure 4.

Position trajectory of one experiment.

In the ATC scenario, the behavior of the civilian aircraft could be modeled by a uniform motion (UM) [10,30,57], i.e.,

where refers to the sampling rate and denotes the system noise. The state vector of the systems is defined by:

where and represent the position of the aircraft along the x-axis and y-axis directions, and represent its speed, respectively. In the target tracking processing system, generally, radar only provides position measurements without target speed measurements. Therefore, the measurement model can be expressed as:

In the simulation, the state of the filter parameter is initialized as . Moreover, measurement covariance , state covariance and process noise covariance are set as follows:

where represents the power spectral density and is defined as 0.16 [10,30,57]. Besides, the SVSF “memory” (i.e., convergence rate) was set to , which is tuned based on some knowledge of the system uncertainties, such as the noise, to decrease the estimation errors [58]. The methods are coded in MATLAB and the simulations are run on a laptop computer with an Intel Core i5-3230M CPU at 2.40 GHz. Five hundred Monte Carlo trials are performed in each simulation, and the estimation results are expressed in terms of the following figures of merit: root mean square error (RMSE), accumulative RMSE, and the average value.
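The uniform motion model and the per-step RMSE over Monte Carlo trials can be sketched as below. This is a hedged sketch, not the paper's MATLAB code: the state ordering `[x, vx, y, vy]`, the white-noise-acceleration form of the process noise covariance scaled by the power spectral density `q`, and the helper names `um_model` and `rmse_per_step` are all assumptions made for illustration, and the sampling time used in the usage line is a hypothetical value.

```python
import numpy as np

def um_model(T, q):
    """Uniform-motion (constant-velocity) model, assumed state [x, vx, y, vy].

    T : sampling time in seconds
    q : power spectral density of the process noise
    """
    F1 = np.array([[1.0, T],
                   [0.0, 1.0]])                      # one-axis transition
    Q1 = q * np.array([[T**3 / 3, T**2 / 2],
                       [T**2 / 2, T]])               # one-axis process noise
    F = np.kron(np.eye(2), F1)                       # block-diagonal over x and y
    Q = np.kron(np.eye(2), Q1)
    H = np.array([[1.0, 0.0, 0.0, 0.0],
                  [0.0, 0.0, 1.0, 0.0]])             # position-only measurements
    return F, H, Q

def rmse_per_step(estimates, truth):
    """RMSE at each time step across Monte Carlo trials.

    estimates : array of shape (trials, steps)
    truth     : array of shape (steps,)
    """
    err = np.asarray(estimates, dtype=float) - np.asarray(truth, dtype=float)
    return np.sqrt(np.mean(err**2, axis=0))

# Usage with a hypothetical sampling time of 5 s and q = 0.16
F, H, Q = um_model(5.0, 0.16)
```

Building the two-axis matrices with a Kronecker product keeps the x and y channels decoupled, which matches a model in which the radar measures the two positions independently.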

(1) Comparison with KF

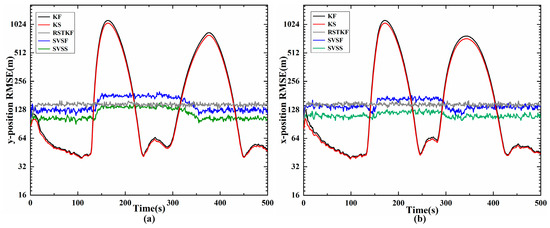

In this simulation, the Kalman filter (KF), Kalman smoother (KS), robust Student’s t-based Kalman filter (RSTKF) [20], SVSF, and the proposed SVSS are tested. The smooth boundary layer widths of the SVSF and SVSS are set to . In the RSTKF, the prior parameters are set as: .

Figure 4 shows the target tracking position trajectory of one experiment; the closer the tracking trajectory is to the real trajectory, the more accurate the tracking method. The trajectory of SVSS is closer to the true trajectory than that of SVSF, so SVSS reduces the chattering phenomenon. The state estimation results of KF, KS, SVSF, and the proposed SVSS are shown in Figure 5 and Table 1. When the system model agrees with the target motion, the KF and KS perform better than RSTKF, SVSF, and SVSS. But when the target motion model changes, the tracking errors of KF and KS increase dramatically, while the estimates of RSTKF, SVSF, and SVSS still maintain high accuracy. In Figure 5, the aircraft flies in a straight line after 155 s, yet the RMSE of SVSF does not decrease, because the SVSF algorithm cannot directly estimate the speed and therefore does not correct the speed estimate. How to use “artificial” velocity measurements to correct the speed estimate is described in Section 4.2. As shown in Figure 5 and Table 1, for both KF and SVSF the tracking errors of the smoothers are smaller than those of the filters. In addition, Table 1 shows that the position accumulative RMSE of SVSS is lower than those of KF, KS, RSTKF, and SVSF. SVSS improves tracking accuracy by about 22% compared with SVSF and RSTKF, while KS improves it by only about 7% compared with KF. The reason is that SVSF is weaker than KF at dealing with noise, so the SVSS can eliminate more noise from its state estimates than the KS can. KS and SVSS consume similar time in the smoothing process, SVSF takes slightly more time than KF, and RSTKF takes the most time. To sum up, SVSS has better robustness and higher tracking accuracy than the others.

Figure 5.

Position RMSE on the x-axis and y-axis (m): (a) position RMSE on the x-axis; (b) position RMSE on the y-axis.

Table 1.

The position accumulative RMSE on the x-axis and y-axis (m).

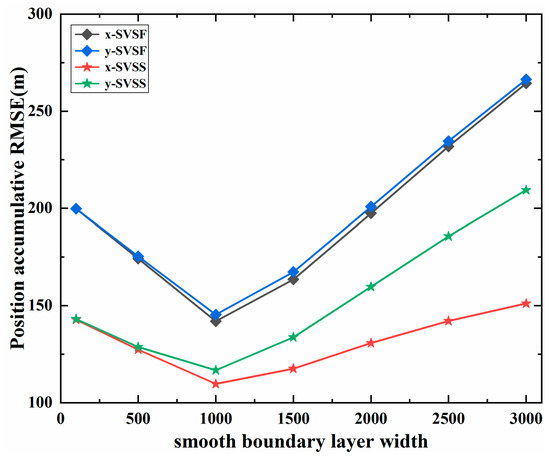

(2) Results under different smooth boundary layer widths

Figure 6 and Table 2 show the position accumulative RMSE under different smooth boundary layer widths. SVSS performs better than SVSF, because the ability of SVSF to eliminate noise is almost the same under different smooth boundary layer widths. In addition, the smooth boundary layer width is an important parameter in SVSF. If it is not set properly, both the filter accuracy and stability deteriorate. The accumulative RMSE of the SVSF estimate is high when the smooth boundary layer width is too large or too small. As shown in Figure 6, when the smoothing boundary layer is selected as , the proposed method performs better under system measurement noise with a standard deviation of . These parameters are selected based on the distribution of the system and measurement noises. Generally, the smoothing boundary layer width is set to 5 times the maximum measurement noise width. The SVSF “memory” or convergence rate is related to the rate of decay , such that , where is the sampling time [32]. As shown in Figure 6, when the smooth boundary layer is wide, the accumulated position RMSE of SVSS differs between the x-axis and y-axis; the reason is the same as above: the speed estimate is not corrected.

Figure 6.

The position accumulative RMSE on the x-axis and y-axis (m) for different smooth boundary layer widths.

Table 2.

The position accumulative RMSE on the x-axis and y-axis (m) for different smooth boundary layer widths.

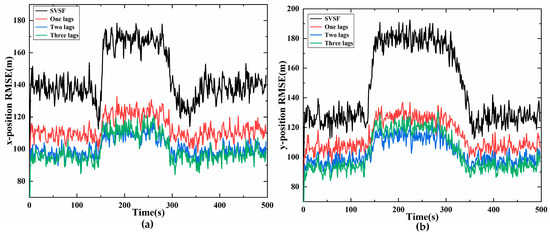

(3) Results under different lag fixed intervals

Simulations under different lag fixed intervals are also considered. The width of the smooth boundary layer is , and the other experimental parameters are the same as mentioned above.

Figure 7 shows the estimation results of SVSS under three different lags and of SVSF. Two lags and three lags perform better than one lag. Compared with two lags, three lags improves the tracking accuracy slightly when the target moves with a constant velocity, but when the target turns, the accuracy of three lags is lower than that of two lags. When the system model is consistent with the actual motion, the proposed SVSS, which is derived under linear Gaussian noise, becomes more accurate as the number of smoothing lag steps increases. However, if the models are inconsistent, the performance of the SVSS is likely to degrade and become unstable as the number of lag steps increases, because the innovation used by the SVSS then contains more modeling error. As the lag of SVSS increases, the computational complexity also becomes higher. The computational complexity of lags is ; the detailed derivation is given in Appendix B, and the simulation time cost of different lags is shown in Table A2 of Appendix B. Therefore, users can choose the appropriate number of lag steps according to the needs of the system.

Figure 7.

Comparison of the SVSF smoother under different lags: (a) position RMSE on the x-axis; (b) position RMSE on the y-axis.

4.2. A Complex Maneuvering Environment Scenario

In this scenario, the maneuver of the target is more complicated, with motion modes including uniform motion, turning motion, uniform acceleration motion, and angular acceleration motion. The initial position of the aircraft is also set to . The target flies at a high speed of along the x-axis direction and along the y-axis direction for . Then the aircraft maneuvers at a rate of for . Next, the target turns at an initial angular velocity of with an angular acceleration of for . Finally, the target continues to fly at an acceleration of along the x-axis direction and along the y-axis direction for .

The process noise obeys a Gaussian distribution with standard deviation. The process noise and measurement noise ( and ) are considered Gaussian, with zero mean and variances and , respectively. The initial state covariance , measurement noise covariance , and process noise covariance are defined, respectively, as follows:

where is given by Equation (48) and is defined as 15. The SVSF and its smoothers use the UM model in their estimation processes, and the smoothing boundary layer widths were defined as ; the SVSF “memory” or convergence rate was set to . To meet practical demands, the speed is also estimated. As discussed earlier, the system then needs an additional “artificial” velocity measurement: the measurement matrix of Equation (45) is transformed into a square matrix (i.e., the identity), and the “artificial” velocity measurement is calculated from the position measurements. For example, the measurement , which contains the artificial velocity measurements, is given by:

The accuracy of Equation (51) depends on the sampling rate . A total of 500 Monte Carlo runs were performed.
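One simple way to build such an augmented measurement is a finite difference of consecutive position measurements. The sketch below is an assumption-laden illustration, not a transcription of Equation (51): it uses a backward difference, sets the first velocity sample to zero because no earlier position exists, and orders the output as `[x, vx, y, vy]`; the function name `augment_with_velocity` is hypothetical.

```python
import numpy as np

def augment_with_velocity(positions, T):
    """Append "artificial" velocity measurements to position measurements.

    positions : array of shape (N, 2) holding [x, y] radar measurements
    T         : sampling time in seconds
    Returns an (N, 4) array ordered as [x, vx, y, vy] (assumed ordering).
    """
    pos = np.asarray(positions, dtype=float)
    vel = np.zeros_like(pos)
    # Backward difference; the first sample has no predecessor and stays 0
    vel[1:] = (pos[1:] - pos[:-1]) / T
    return np.column_stack([pos[:, 0], vel[:, 0], pos[:, 1], vel[:, 1]])
```

Because the difference of two noisy positions is divided by `T`, a shorter sampling time amplifies the measurement noise in the artificial velocity, while a longer one lags behind maneuvers; this is the trade-off behind the dependence on the sampling rate.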

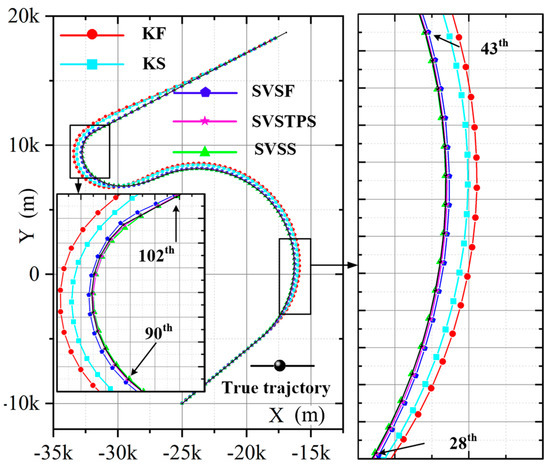

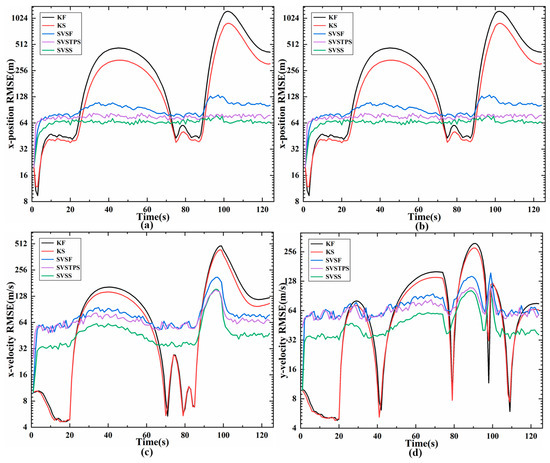

The results of the different estimation methods are shown in the following figures and Table 3. The KS algorithm performs best when the model matches the actual motion (0 s to 20 s), where the position RMSE and speed RMSE of KS are significantly smaller than those of the two SVSF smoothers. Once the models do not match, for example when the high-speed target changes from uniform motion to a turn, angular acceleration, or acceleration, KS becomes unstable and its RMSE increases, whereas SVSTPS and SVSS still overcome the uncertainties and retain high accuracy and robustness. Table 3 shows that, relative to SVSF, SVSTPS and SVSS improve the position (velocity) accumulative RMSE by about 21% (15%) and 31% (33%), respectively. This is because SVSTPS uses the SVSF gain, whereas the proposed SVSS uses the innovation, which is better suited to smoothing because it contains as much noise information as the original measurements. Thus more noise error can be eliminated by SVSS. However, there is a special case in which SVSS does not perform as well as SVSTPS: when modeling errors affect it more than Gaussian noise does. For example, at around 95 s in Figure 8 and Figure 9, the target is flying at a high speed with an angular velocity of and an angular acceleration of , so the accuracy of SVSS is lower than that of SVSTPS. Overall, SVSS exhibits the best performance among the above filters.

Table 3.

The state estimation accumulative RMSE on x-axis and y-axis (m) for different algorithms.

Figure 8.

Average position trajectory.

Figure 9.

RMSE of state estimation (m): (a) position RMSE on the x-axis; (b) position RMSE on the y-axis; (c) velocity RMSE on the x-axis; (d) velocity RMSE on the y-axis.

5. Conclusions

An SVSS algorithm is presented to improve the accuracy of SVSF state estimation in dynamic systems with model uncertainty. Based on projection theory and the SVSF, the smoothing recurrence formula of the SVSS is derived using the innovation. SVSS, KS, and SVSTPS are compared in aircraft trajectory tracking scenarios. According to the simulation results, the proposed SVSS performs better than SVSF under different smoothing boundary layer widths. In addition, compared with the popular KS algorithm, the SVSS is able to overcome inaccuracies and yield a stable solution in the presence of modeling uncertainties. Theory and simulations also show that the proposed SVSS has higher accuracy and better robustness than SVSTPS.

Author Contributions

Data processing and writing, Y.C.; supervision, L.X.; writing—review and editing, B.Y. and C.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by National Natural Science Foundation of China under Grant 61701383, the Natural Science Basic Research Plan in Shaanxi Province of China under Grant 2018JQ6100 and China Postdoctoral Science Foundation under Grant 2019M663633.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

The proof is based on orthogonal projection theory:

Appendix A.1. Definition 1

Given the random measurement variable , the estimate , which denotes the linear minimum variance estimate of , is a linear function of that minimizes the following index:

where denotes the expectation and denotes the transpose.

Appendix A.2. Definition 2

The linear minimum variance estimate is uniquely given by the following formula:

Property 1

Property 2

Property 3

where and are uncorrelated random variables. The estimate is recorded as and is called the projection of on .

Appendix A.3. Definition 3

Based on the random variables , the linear minimum variance estimate of the random variable is defined as:

The estimate is called the projection of on or .

Appendix A.4. Definition 4

If the random variable has a second moment, its innovation is defined as:

The predicted estimate of is defined as:

Appendix A.5. Definition 5

For the estimate of the random variable , when the random variable has a second moment, the following recursive projection formula (A14) holds (projective recurrence theorem):

The derivation of the SVSS algorithm is also based on the above theory.

(1) One lag fixed interval

For the linear Gaussian system given by Equation (1), under the linear minimum mean square deviation criterion, the state estimate value of SVSS is given by the following formula:

From (A2) to (A15), the state estimate value is obtained as:

(2) Two lags fixed interval

As in the one-lag case, the state estimate value of SVSS is given by the following formula:

According to (A23)–(A26), the state estimate value of SVSS is calculated by:

(3) N lags fixed interval

where is obtained from the orthogonality of the projection theory:

From (A29) to (A33), the n-lag state estimate value of SVSS is calculated by:

Equation (A34) can be written as:

where and are calculated by ,.

Appendix B

The smoothing process is expressed by Equation (42). The state and the measurement dimension are i and j respectively, and the complexity of matrix computation is shown in Table A1.

Table A1.

The complexity of matrix computation.


| Term | Complexity | Term | Complexity |
|---|---|---|---|
| PF | O(i^3) | C_i | O(2i^3 + mi^3) |
| PH | O(ji^2) | B_N | O(2ji^2 + 2ij^2 + mj^3) |
| P^−1 | O(mi^3) | | |

Ignoring the lower-order terms, the computational complexity of one-lag smoothing is .

The computational complexity of n-lag smoothing is . The implementation times of SVSS under different lag fixed intervals are shown in Table A2.

Table A2.

Implementation Times of SVSS under different lag fixed intervals.


| Different Lags | SVSF | One Lag | Two Lags | Three Lags |
|---|---|---|---|---|
| Single step run time (μs) | 48 | 72 | 120 | 180 |

References

- Di Viesti, P.; Vitetta, G.M.; Sirignano, E. Double Bayesian Smoothing as Message Passing. IEEE Trans. Signal Process. 2019, 67, 5495–5510.
- Gao, R.; Tronarp, F.; Sarkka, S. Iterated Extended Kalman Smoother-Based Variable Splitting for L-1-Regularized State Estimation. IEEE Trans. Signal Process. 2019, 67, 5078–5092.
- Wang, Y.H.; Zhang, H.B.; Mao, X.; Li, Y. Accurate Smoothing Methods for State Estimation of Continuous-Discrete Nonlinear Dynamic Systems. IEEE Trans. Autom. Control 2019, 64, 4284–4291.
- Huang, Y.; Zhang, Y.; Li, N.; Chambers, J. Robust Student’s t based nonlinear filter and smoother. IEEE Trans. Aerosp. Electron. Syst. 2016, 52, 2586–2596.
- Rauch, H.E. Linear Estimation of Sampled Stochastic Processes with Random Parameters; Stanford Univ Ca Stanford Electronics Labs: Stanford, CA, USA, 1962.
- Rauch, H. Solutions to the linear smoothing problem. IEEE Trans. Autom. Control 1963, 8, 371–372.
- Huang, Y.; Zhang, Y.; Zhao, Y.; Mihaylova, L.; Chambers, J. Robust Rauch-Tung-Striebel smoothing framework for heavy-tailed and/or skew noises. IEEE Trans. Aerosp. Electron. Syst. 2020, 56, 415–441.
- Kalman, R.E. A new approach to linear filtering and prediction problems. J. Basic Eng. 1960, 82, 35–45.
- Munguia, R.; Urzua, S.; Grau, A. EKF-Based Parameter Identification of Multi-Rotor Unmanned Aerial Vehicles Models. Sensors 2019, 19, 4174.
- Gadsden, S.A.; Habibi, S.; Kirubarajan, T. Kalman and Smooth Variable Structure Filters for Robust Estimation. IEEE Trans. Aerosp. Electron. Syst. 2014, 50, 1038–1050.
- Arasaratnam, I.; Haykin, S. Cubature Kalman filters. IEEE Trans. Autom. Control 2009, 54, 1254–1269.
- Gadsden, S.A.; Al-Shabi, M.; Arasaratnam, I.; Habibi, S.R. Combined cubature Kalman and smooth variable structure filtering: A robust nonlinear estimation strategy. Signal Process. 2014, 96, 290–299.
- Zhang, K.W.; Hao, G.; Sun, S.L. Weighted Measurement Fusion Particle Filter for Nonlinear Systems with Correlated Noises. Sensors 2018, 18, 3242.
- Zhou, H.; Xia, Y.; Deng, Y. A new particle filter based on smooth variable structure filter. Int. J. Adapt. Control Signal Process. 2020, 34, 32–41.
- Huang, Y.; Zhang, Y.; Shi, P.; Wu, Z.; Qian, J.; Chambers, J.A. Robust Kalman filters based on Gaussian scale mixture distributions with application to target tracking. IEEE Trans. Syst. Man Cybern. Syst. 2019, 49, 2082–2096.
- Huang, Y.; Zhang, Y.; Xu, B.; Wu, Z.; Chambers, J. A new outlier-robust Student’s t based Gaussian approximate filter for cooperative localization. IEEE Trans. Mechatron. 2017, 22, 2380–2386.
- Huang, Y.; Zhang, Y.; Zhao, Y.; Chambers, J.A. A Novel Robust Gaussian–Student’s t Mixture Distribution Based Kalman Filter. IEEE Trans. Signal Process. 2019, 67, 3606–3620.
- Huang, Y.; Zhang, Y.; Chambers, J.A. A Novel Kullback–Leibler Divergence Minimization-Based Adaptive Student’s t-Filter. IEEE Trans. Signal Process. 2019, 67, 5417–5432.
- Huang, Y.; Zhang, Y.; Wu, Z.; Li, N.; Chambers, J. A novel adaptive Kalman filter with inaccurate process and measurement noise covariance matrices. IEEE Trans. Autom. Control 2017, 63, 594–601.
- Huang, Y.; Zhang, Y.; Li, N.; Wu, Z.; Chambers, J.A. A Novel Robust Student’s t-Based Kalman Filter. IEEE Trans. Aerosp. Electron. Syst. 2017, 53, 1545–1554.
- Wang, H.; Li, H.; Fang, J.; Wang, H. Robust Gaussian Kalman filter with outlier detection. IEEE Signal Process. Lett. 2018, 25, 1236–1240.
- Skoglund, M.A.; Hendeby, G.; Axehill, D. Extended Kalman filter modifications based on an optimization view point. In Proceedings of the 2015 18th International Conference on Information Fusion (Fusion), Washington, DC, USA, 6–9 July 2015; pp. 1856–1861.
- Bakhshande, F.; Söffker, D. Adaptive Step Size Control of Extended/Unscented Kalman Filter using Event Handling Concept. Front. Mech. Eng. 2019, 5, 74.
- Huang, Y.; Zhang, Y.; Xu, B.; Wu, Z.; Chambers, J.A. A new adaptive extended Kalman filter for cooperative localization. IEEE Trans. Aerosp. Electron. Syst. 2017, 54, 353–368.
- Milanese, M.; Tempo, R. Optimal algorithms theory for robust estimation and prediction. IEEE Trans. Autom. Control 1985, 30, 730–738.
- Zhuk, S.M. Minimax state estimation for linear discrete-time differential-algebraic equations. Automatica 2010, 46, 1785–1789.
- Farrell, W.J. Interacting multiple model filter for tactical ballistic missile tracking. IEEE Trans. Aerosp. Electron. Syst. 2008, 44, 418–426.
- Mazor, E.; Averbuch, A.; Bar-Shalom, Y.; Dayan, J. Interacting multiple model methods in target tracking: A survey. IEEE Trans. Aerosp. Electron. Syst. 1998, 34, 103–123.
- Li, X.-R.; Bar-Shalom, Y. Multiple-model estimation with variable structure. IEEE Trans. Autom. Control 1996, 41, 478–493.
- Li, X.R.; Jilkov, V.P. Survey of maneuvering target tracking. Part V. Multiple-model methods. IEEE Trans. Aerosp. Electron. Syst. 2005, 41, 1255–1321.
- Goodman, J.M.; Wilkerson, S.A.; Eggleton, C.; Gadsden, S.A. A Multiple Model Adaptive SVSF-KF Estimation Strategy. In Proceedings of the Signal Processing, Sensor/Information Fusion, and Target Recognition XXVIII, Baltimore, MD, USA, 15–17 April 2019.
- Habibi, S. The smooth variable structure filter. Proc. IEEE 2007, 95, 1026–1059.
- Lee, A.; Gadsden, S.A.; Wilkerson, S.A. An Adaptive Smooth Variable Structure Filter based on the Static Multiple Model Strategy. In Proceedings of the Signal Processing, Sensor/Information Fusion, and Target Recognition XXVIII, Baltimore, MD, USA, 15–17 April 2019.
- Gadsden, S.A.; Habibi, S.R. A New Robust Filtering Strategy for Linear Systems. J. Dyn. Syst. Meas. Control Trans. 2013, 135, 014503.
- Afshari, F.H.; Gadsden, S.A.; Habibi, S. A nonlinear second-order filtering strategy for state estimation of uncertain systems. Signal Process. 2019, 155, 182–192.
- Zhou, H.; Xu, L.; Chen, W.; Guo, K.; Shen, F.; Guo, Z. A novel robust filtering strategy for systems with Non-Gaussian noises. AEU Int. J. Electron. Commun. 2018, 97, 154–164.
- Spiller, M.; Bakhshande, F.; Söffker, D. The uncertainty learning filter: A revised smooth variable structure filter. Signal Process. 2018, 152, 217–226.
- Gadsden, S.A.; Habibi, S.R. A new form of the smooth variable structure filter with a covariance derivation. In Proceedings of the 49th IEEE Conference on Decision and Control (CDC), Atlanta, GA, USA, 15–17 December 2010; pp. 7389–7394.
- Demim, F.; Nemra, A.; Louadj, K.; Hamerlain, M.; Bazoula, A. Cooperative SLAM for multiple UGVs navigation using SVSF filter. Automatika 2017, 58, 119–129.
- Demim, F.; Nemra, A.; Boucheloukh, A.; Kobzili, E.; Hamerlain, M.; Bazoula, A. SLAM based on Adaptive SVSF for Cooperative Unmanned Vehicles in Dynamic environment. IFAC Pap. 2019, 52, 73–80.
- Kim, T.; Wang, Y.; Sahinoglu, Z.; Wada, T.; Hara, S.; Qiao, W. State of charge estimation based on a realtime battery model and iterative smooth variable structure filter. In Proceedings of the 2014 IEEE Innovative Smart Grid Technologies-Asia (ISGT ASIA), Kuala Lumpur, Malaysia, 20–23 May 2014; pp. 132–137.
- Gadsden, S.A.; Al-Shabi, M.; Habibi, S. Estimation strategies for the condition monitoring of a battery system in a hybrid electric vehicle. ISRN Signal Process. 2011, 2011, 120351.
- Gadsden, S.A.; Song, Y.; Habibi, S.R. Novel Model-Based Estimators for the Purposes of Fault Detection and Diagnosis. IEEE Trans. Mechatron. 2013, 18, 1237–1249.
- Gadsden, S.A.; Kirubarajan, T. Development of a Variable Structure-Based Fault Detection and Diagnosis Strategy Applied to an Electromechanical System. In Proceedings of the Signal Processing, Sensor/Information Fusion, and Target Recognition XXVI, Anaheim, CA, USA, 10–12 April 2017.
- Ahmed, R.; El Sayed, M.; Gadsden, S.A.; Tjong, J.; Habibi, S. Automotive Internal-Combustion-Engine Fault Detection and Classification Using Artificial Neural Network Techniques. IEEE Trans. Veh. Technol. 2015, 64, 21–33.
- Ahmed, R.; El Sayed, M.; Gadsden, S.A.; Tjong, J.; Habibi, S. Artificial neural network training utilizing the smooth variable structure filter estimation strategy. Neural Comput. Appl. 2016, 27, 537–554.
- Ismail, M.; Attari, M.; Habibi, S.; Ziada, S. Estimation theory and Neural Networks revisited: REKF and RSVSF as optimization techniques for Deep-Learning. Neural Netw. 2018, 108, 509–526.
- Gadsden, S.A.; Lee, A.S. Advances of the smooth variable structure filter: Square-root and two-pass formulations. J. Appl. Remote Sens. 2017, 11, 015018.
- Gadsden, S.; Al-Shabi, M.; Kirubarajan, T. Two-Pass Smoother Based on the SVSF Estimation Strategy; SPIE: Bellingham, WA, USA, 2015; Volume 9474.
- Yan, B.; Xu, N.; Wang, G.; Yang, S.; Xu, L.P. Detection of Multiple Maneuvering Extended Targets by Three-Dimensional Hough Transform and Multiple Hypothesis Tracking. IEEE Access 2019, 7, 80717–80732.
- Yan, B.; Xu, N.; Zhao, W.B.; Li, M.Q.; Xu, L.P. An Efficient Extended Targets Detection Framework Based on Sampling and Spatio-Temporal Detection. Sensors 2019, 19, 2912.
- Yan, B.; Xu, N.; Zhao, W.-B.; Xu, L.-P. A Three-Dimensional Hough Transform-Based Track-Before-Detect Technique for Detecting Extended Targets in Strong Clutter Backgrounds. Sensors 2019, 19, 881.
- Yan, B.; Zhao, X.Y.; Xu, N.; Chen, Y.; Zhao, W.B. A Grey Wolf Optimization-Based Track-Before-Detect Method for Maneuvering Extended Target Detection and Tracking. Sensors 2019, 19, 1577.
- Habibi, S.R.; Burton, R. The variable structure filter. J. Dyn. Syst. Meas. Control Trans. 2003, 125, 287–293.
- Grewal, M.; Andrews, A. Kalman Filtering: Theory and Practice with MATLAB, 4th ed.; John Wiley & Sons: Hoboken, NJ, USA, 2015.
- Sun, S.L.; Ma, J. Optimal filtering and smoothing for discrete-time stochastic singular systems. Signal Process. 2007, 87, 189–201.
- Bar-Shalom, Y.; Li, X.R.; Kirubarajan, T. Estimation with Applications to Tracking and Navigation: Theory Algorithms and Software; John Wiley & Sons: Hoboken, NJ, USA, 2004.
- Gadsden, S.A. Smooth Variable Structure Filtering: Theory and Applications. Ph.D. Thesis, McMaster University, Hamilton, ON, Canada, 2011.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).