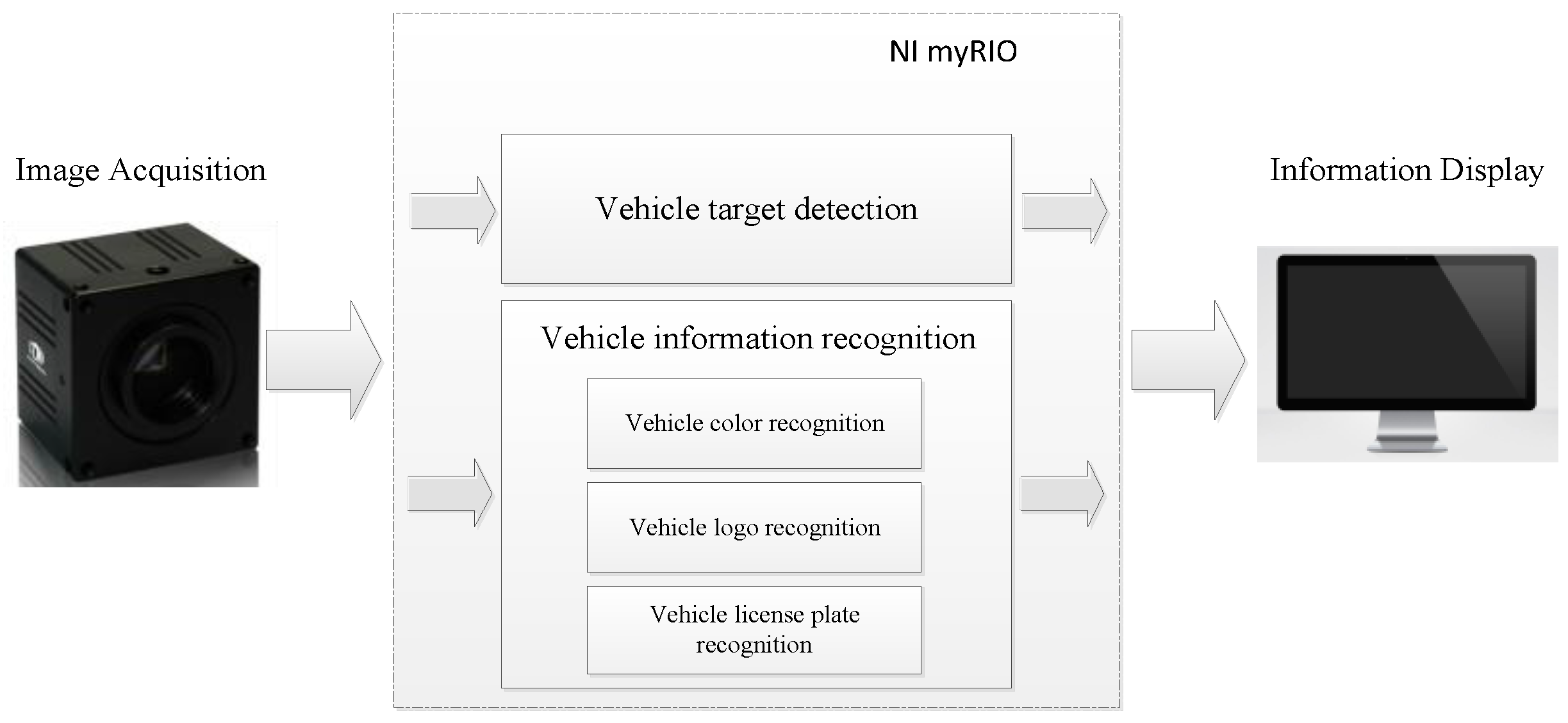

Research and Implementation of Vehicle Target Detection and Information Recognition Technology Based on NI myRIO

Abstract

1. Introduction

2. Materials and Methods

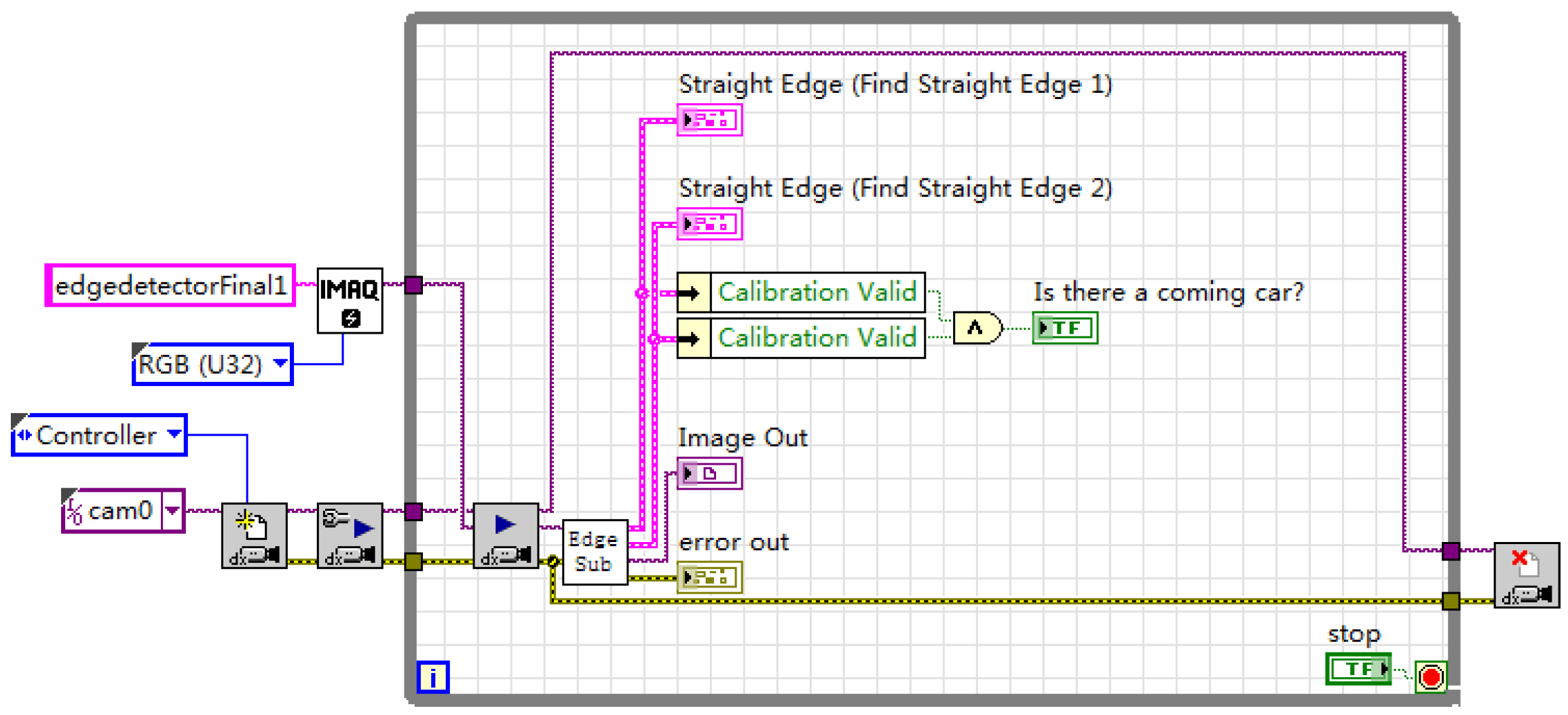

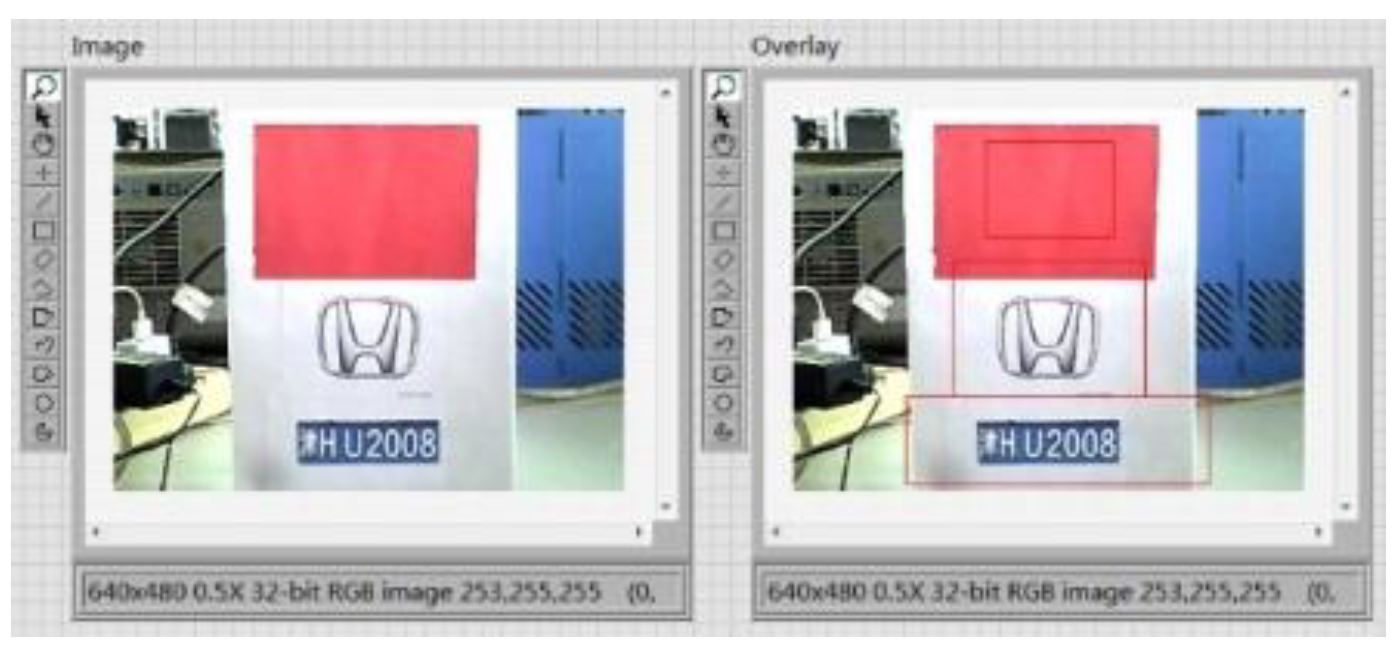

3. Vehicle Target Detection Scheme and Implementation

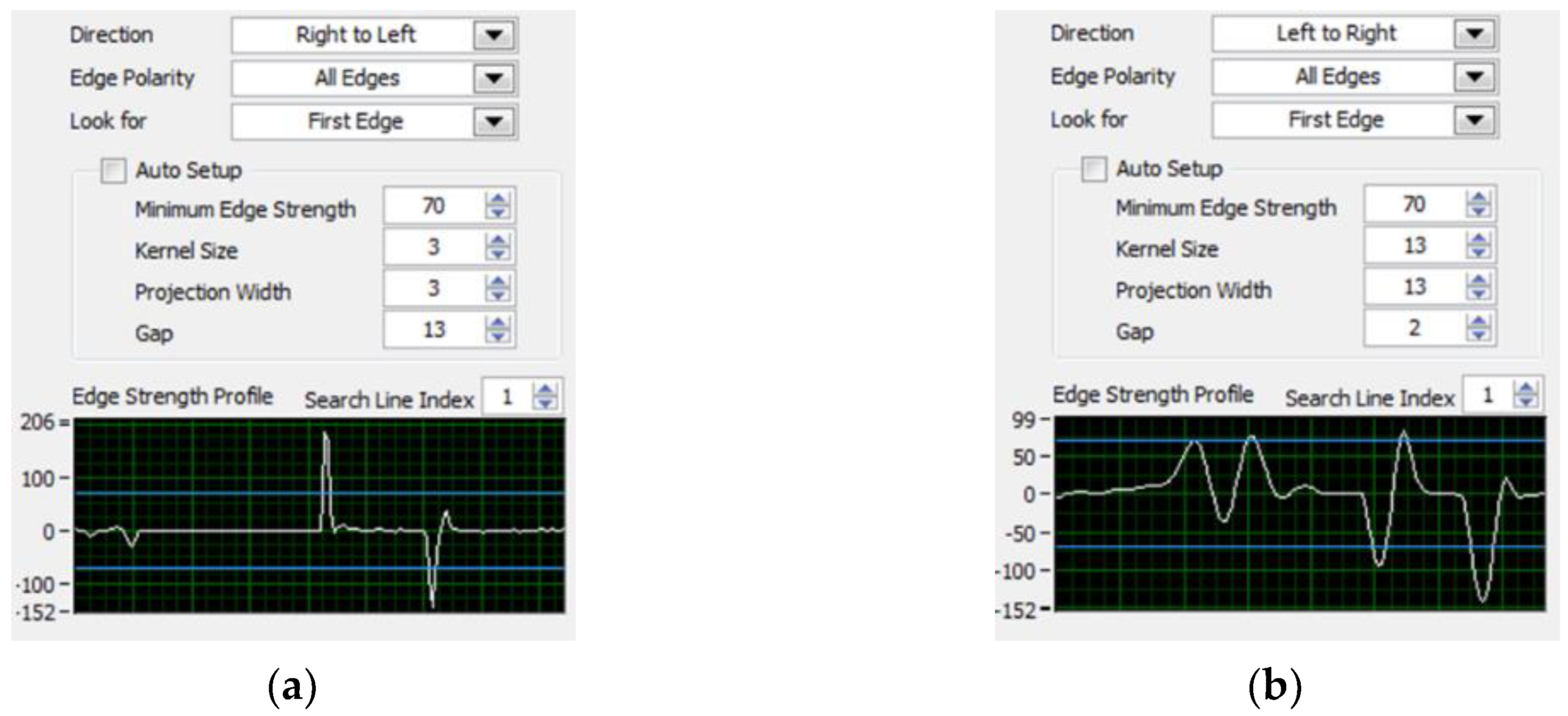

3.1. Edge Detection Method

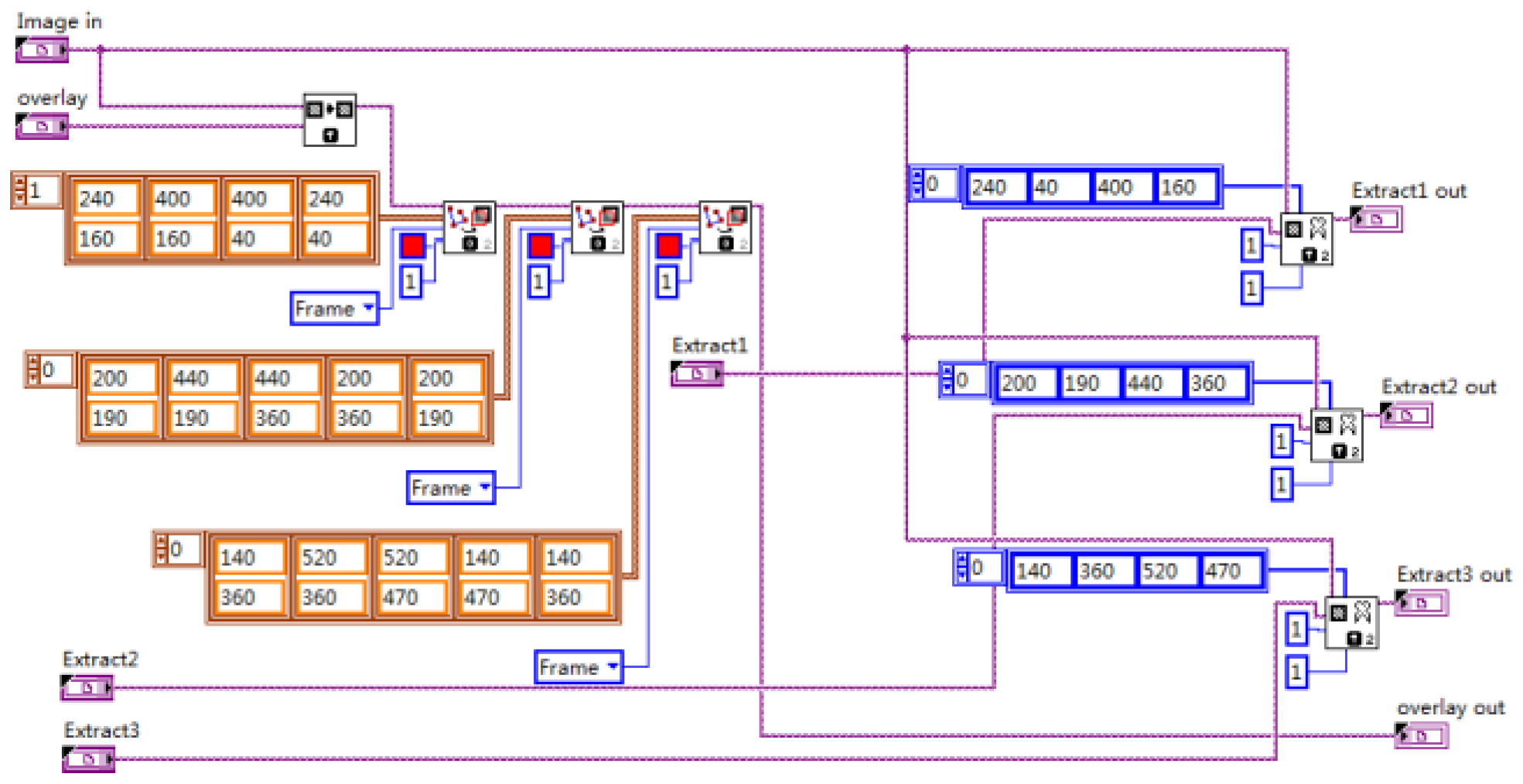

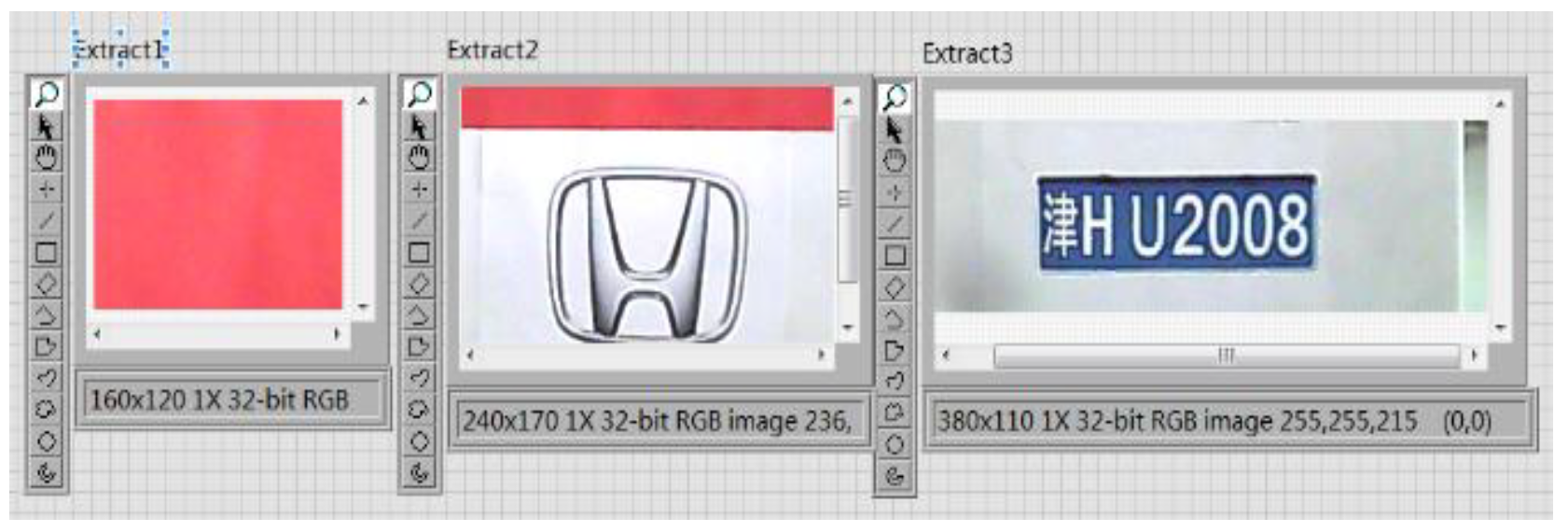

3.2. Vehicle Target Recognition Method

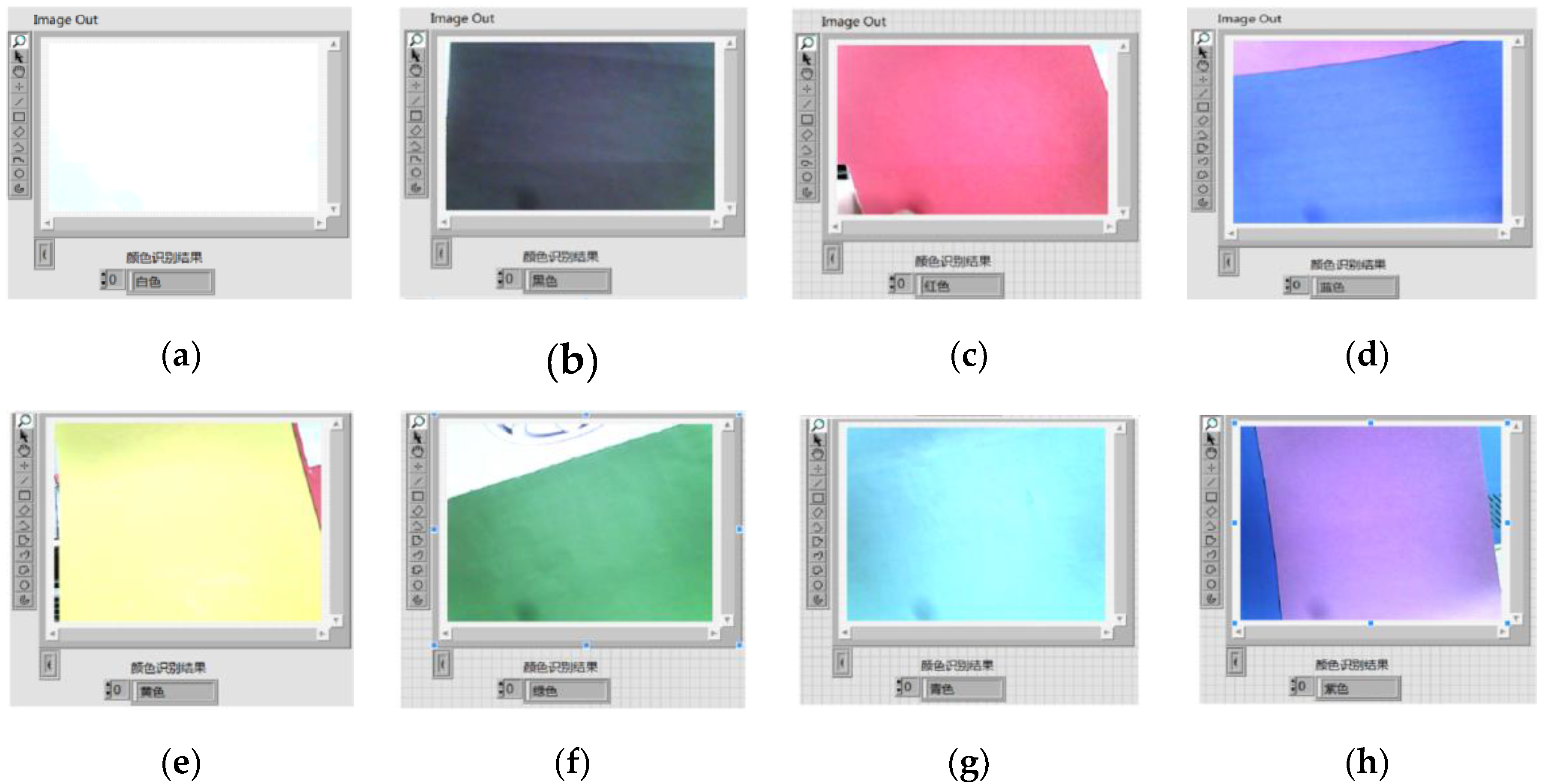

4. Vehicle Color Recognition Scheme and Implementation

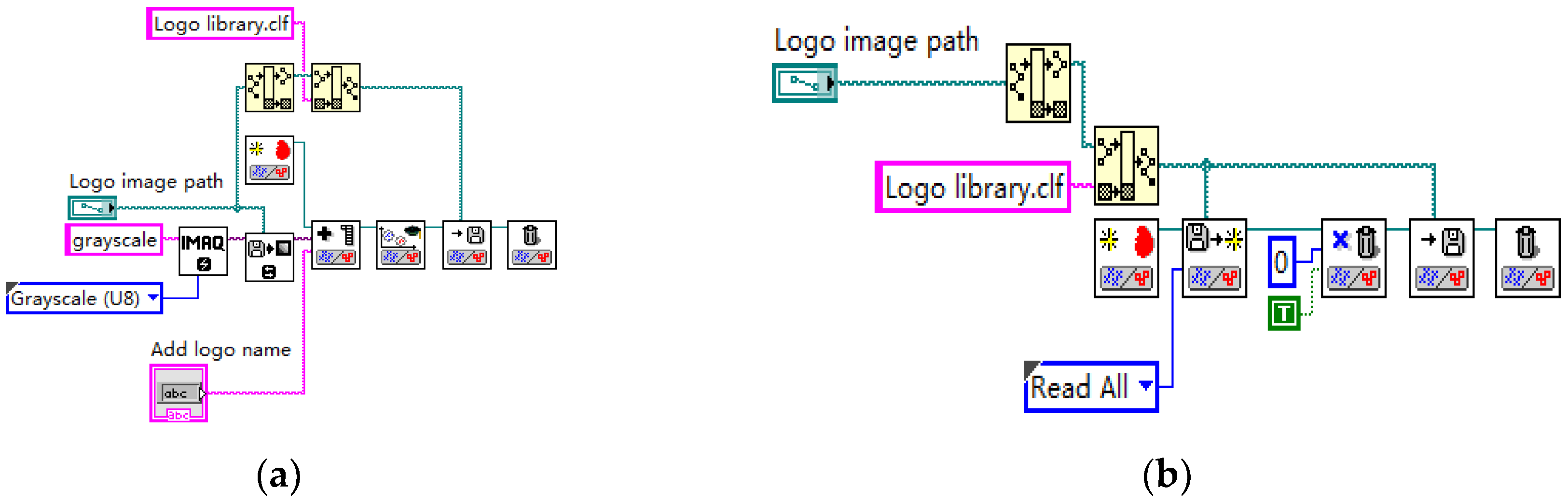

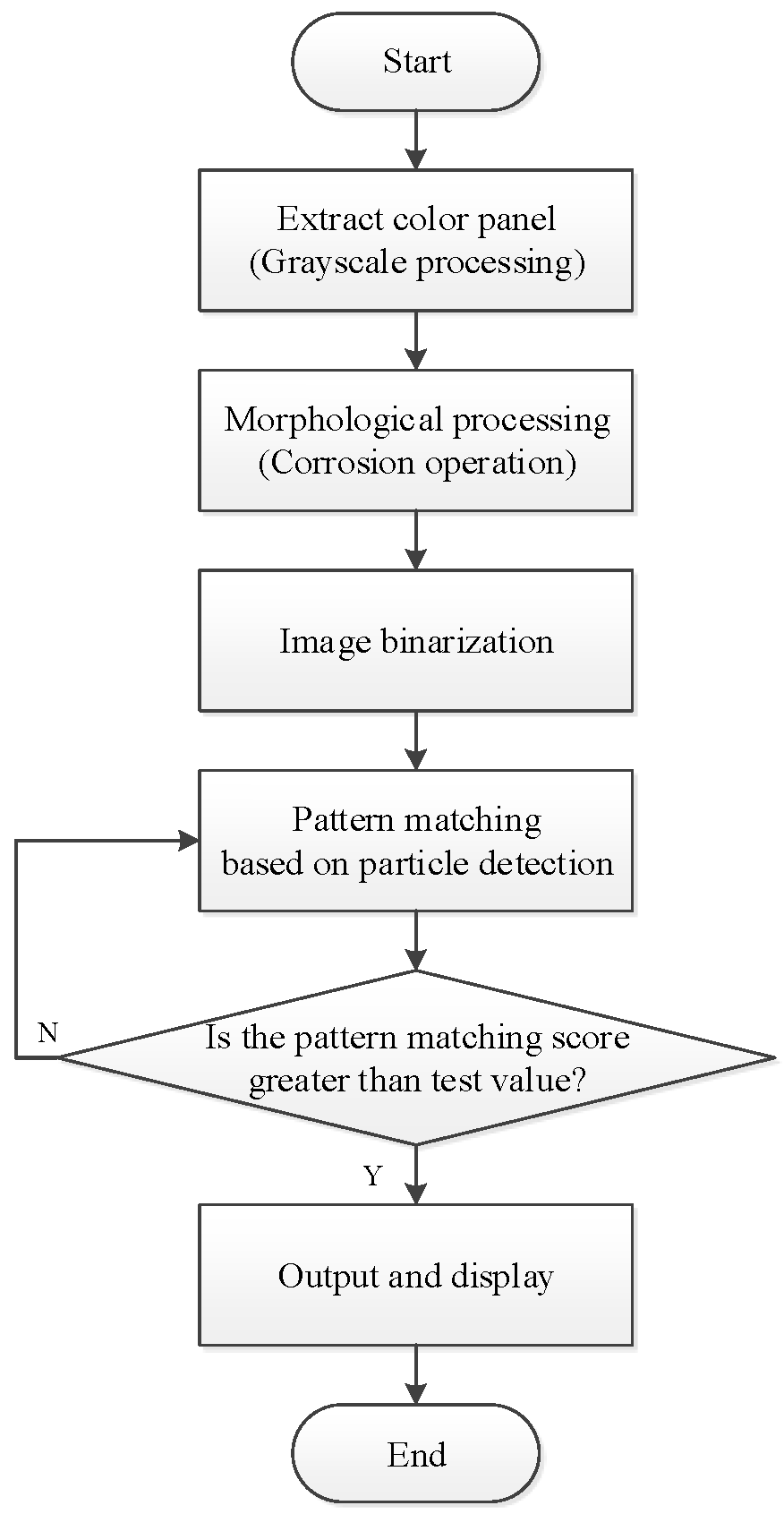

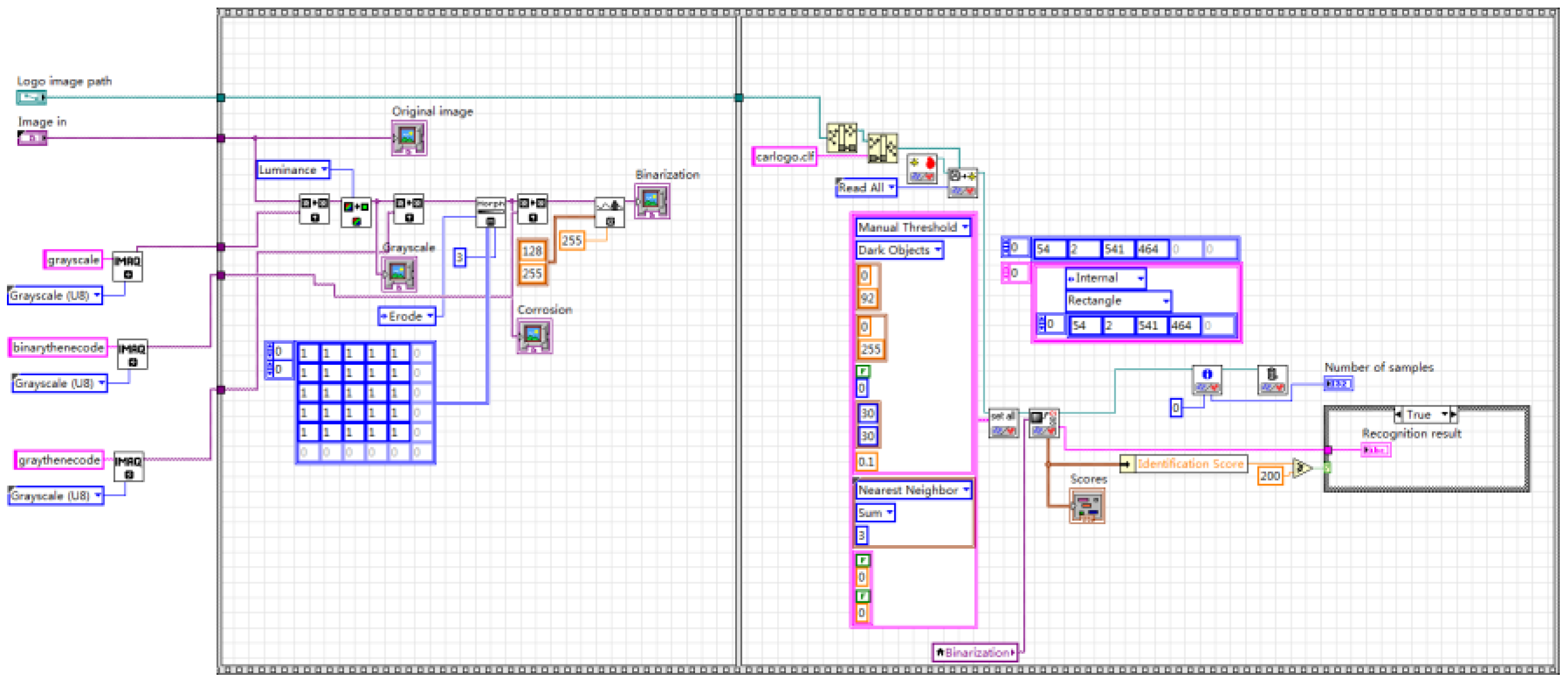

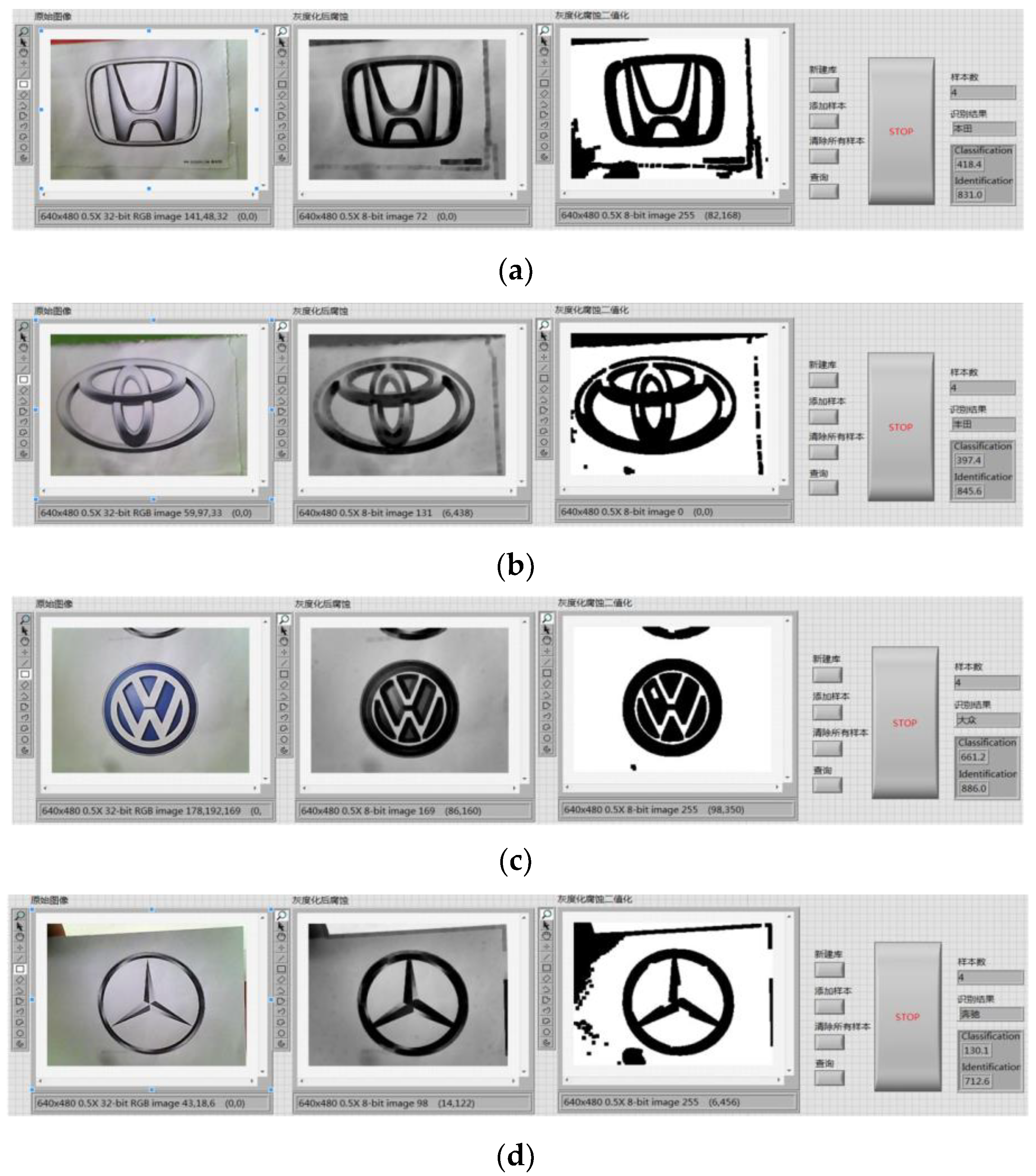

5. Vehicle Logo Recognition Scheme and Implementation

5.1. Classification Algorithm Introduction

The K-nearest neighbor (KNN) algorithm classifies a test sample in five steps:

- (1) Calculate the distance between the test sample and each training sample;

- (2) Sort the training samples in increasing order of distance;

- (3) Select the K points with the smallest distances;

- (4) Count how often each category occurs among these K points;

- (5) Return the most frequent category among the K points as the predicted category of the test sample.
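The five steps above can be sketched in Python as follows. This is a minimal sketch, not the authors' implementation. It assumes that each vehicle logo has already been reduced to a fixed-length numeric feature vector, and it uses Euclidean distance (`math.dist`, Python 3.8+). The distance metric, the function name `knn_predict`, the feature values and the labels `logo_A`/`logo_B` are all hypothetical.

```python
import math
from collections import Counter
from typing import Hashable, Sequence


def knn_predict(
    train_x: Sequence[Sequence[float]],
    train_y: Sequence[Hashable],
    test_point: Sequence[float],
    k: int,
) -> Hashable:
    """Predict the category of test_point with a plain K-nearest-neighbor vote."""
    if len(train_x) != len(train_y):
        raise ValueError("train_x and train_y must have the same length")
    if not 1 <= k <= len(train_x):
        raise ValueError("k must be between 1 and the number of training samples")

    # Step (1): Euclidean distance from the test point to every training sample
    # (assumption: the metric used in the paper is not stated here).
    scored = [(math.dist(test_point, x), label) for x, label in zip(train_x, train_y)]

    # Step (2): sort by increasing distance (stable sort keeps input order on ties).
    scored.sort(key=lambda pair: pair[0])

    # Step (3): keep the K nearest training samples.
    nearest = scored[:k]

    # Step (4): count how often each category occurs among the K neighbors.
    votes = Counter(label for _, label in nearest)

    # Step (5): return the most frequent category; on a tie, most_common(1)
    # returns the category encountered first, i.e. the one with the nearer neighbor.
    return votes.most_common(1)[0][0]


if __name__ == "__main__":
    # Hypothetical 2-D logo feature vectors and labels, for illustration only.
    train_x = [(1.0, 1.0), (1.2, 0.8), (5.0, 5.0), (5.2, 4.9), (4.8, 5.1)]
    train_y = ["logo_A", "logo_A", "logo_B", "logo_B", "logo_B"]
    print(knn_predict(train_x, train_y, (1.1, 0.9), k=3))  # → logo_A
```

The full sort mirrors steps (2) and (3) literally and costs O(n log n) per query. For larger training sets, `heapq.nsmallest(k, ...)` would select the K nearest points directly. The choice of K also matters. With K = 5 in the toy data above, the three distant `logo_B` samples outvote the two nearby `logo_A` samples.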

5.2. Vehicle Logo Recognition Scheme

6. License Plate Recognition Scheme and Implementation

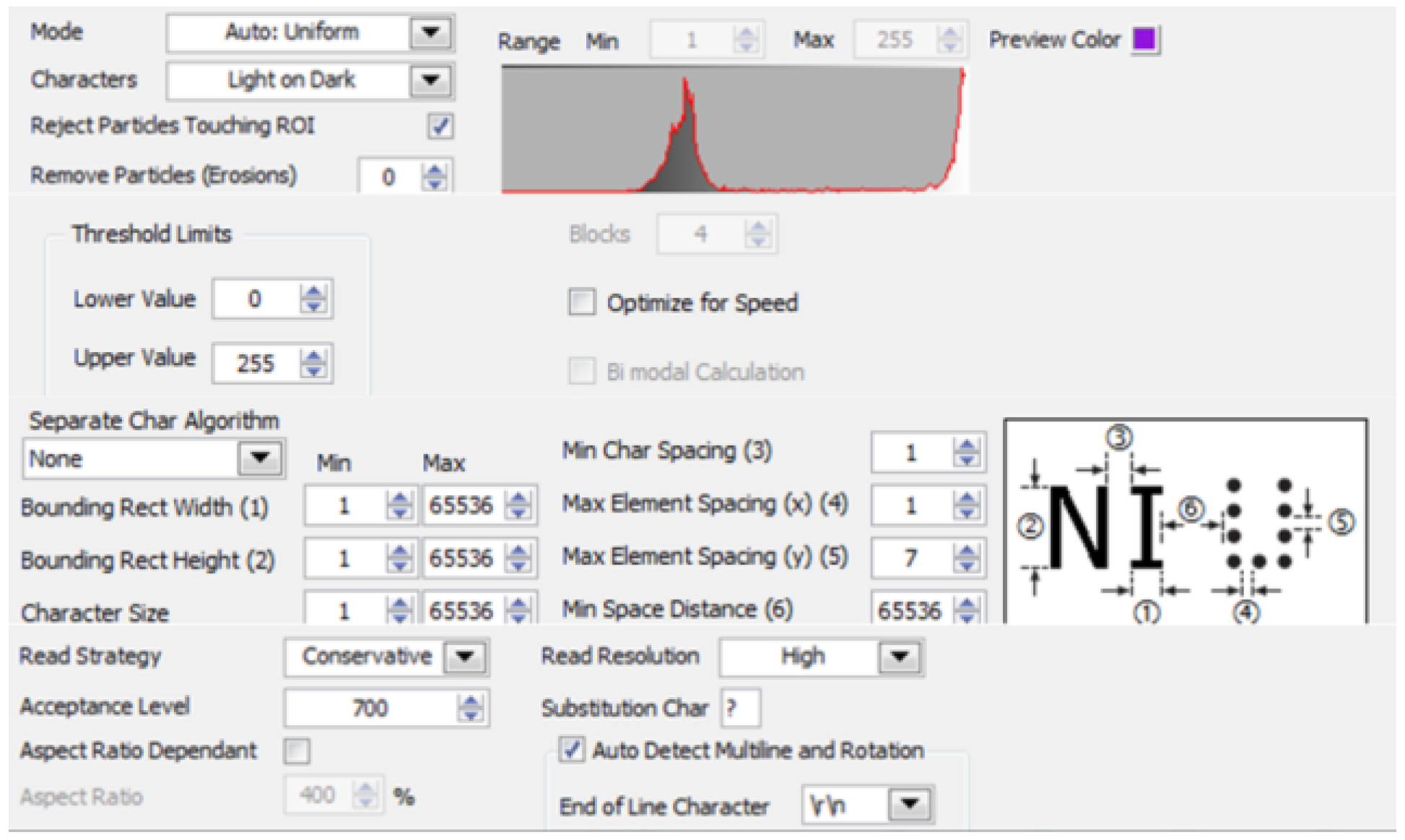

6.1. OCR Character Recognition

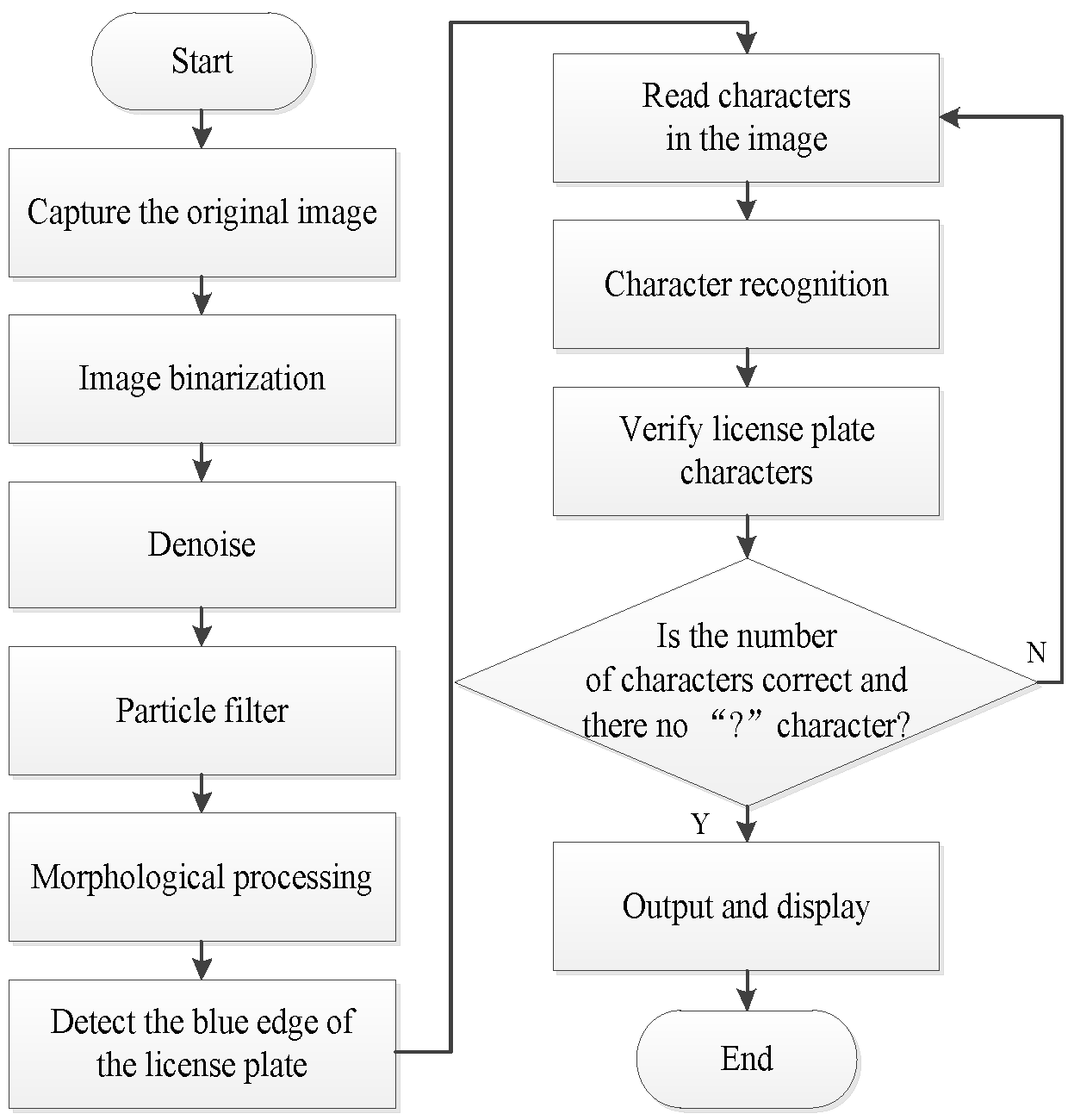

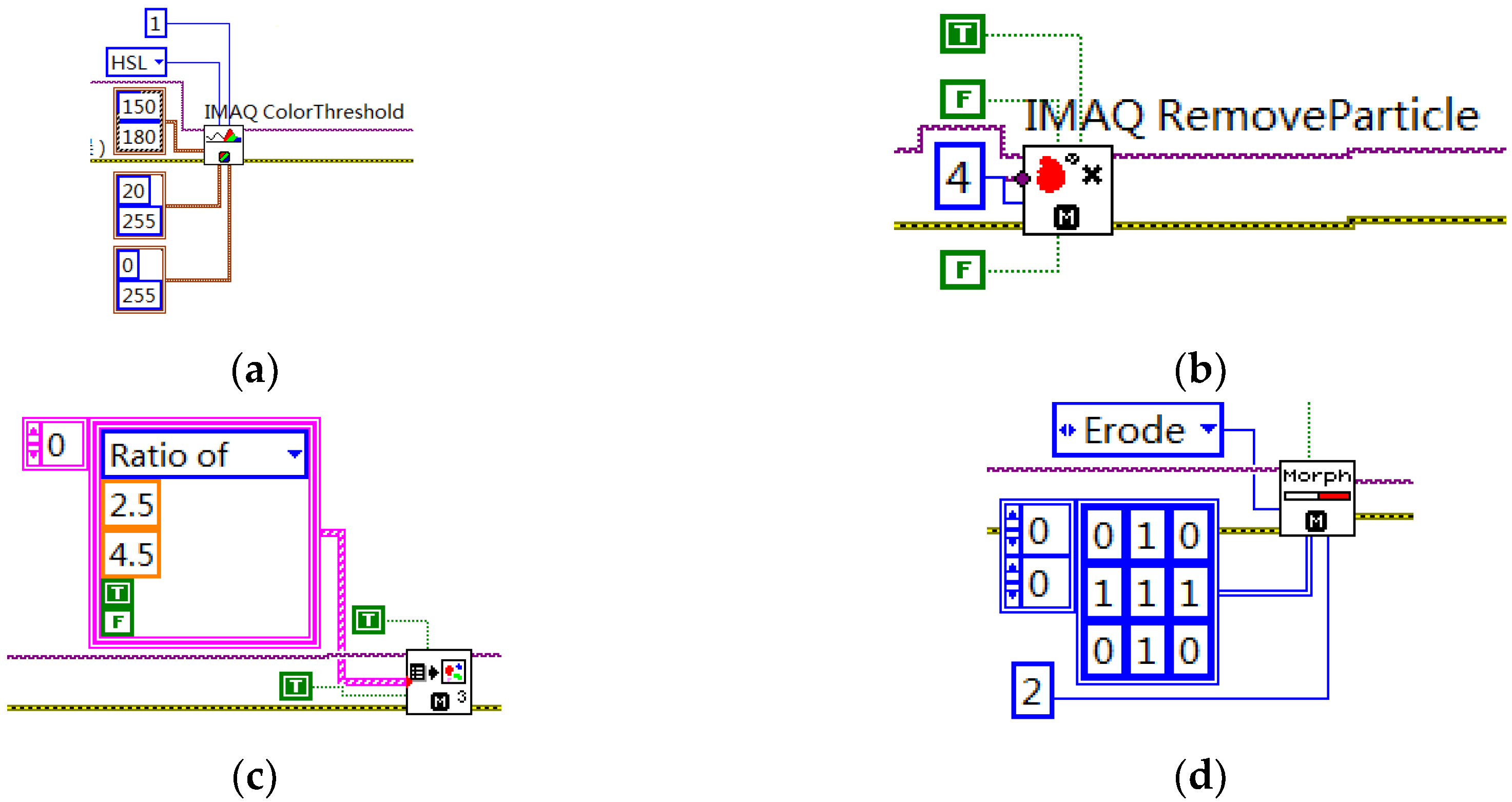

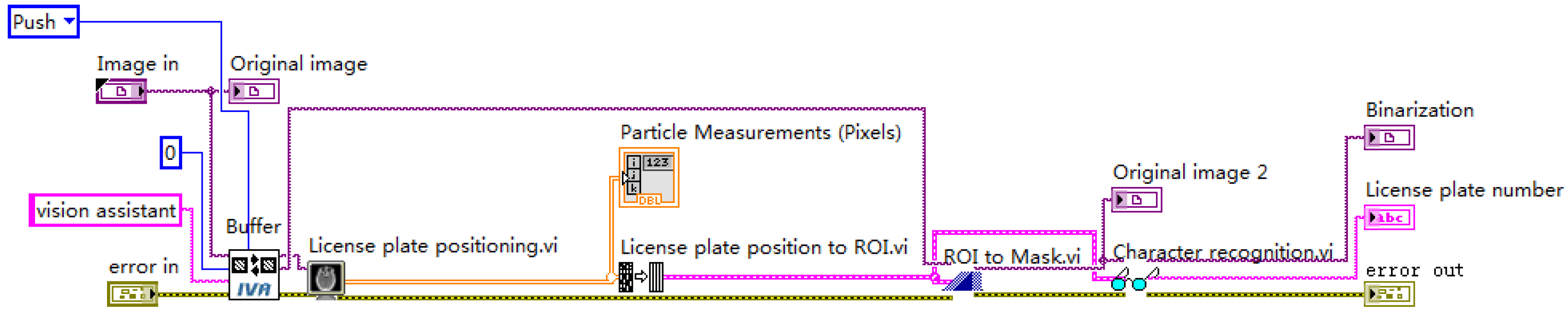

6.2. License Plate Recognition Scheme

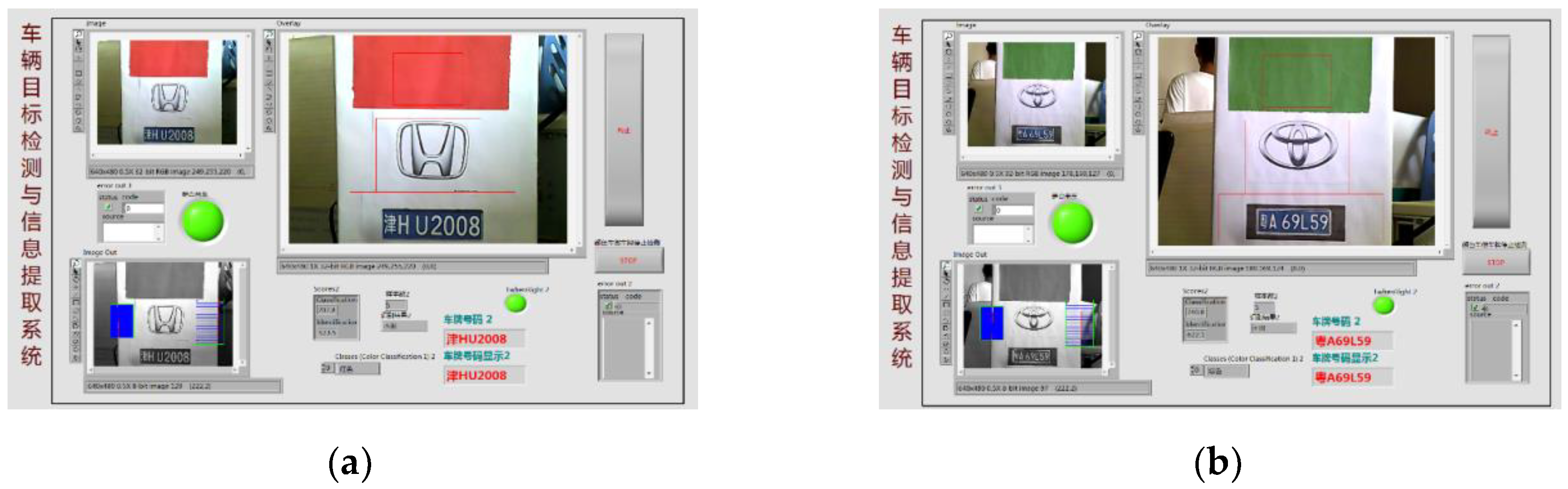

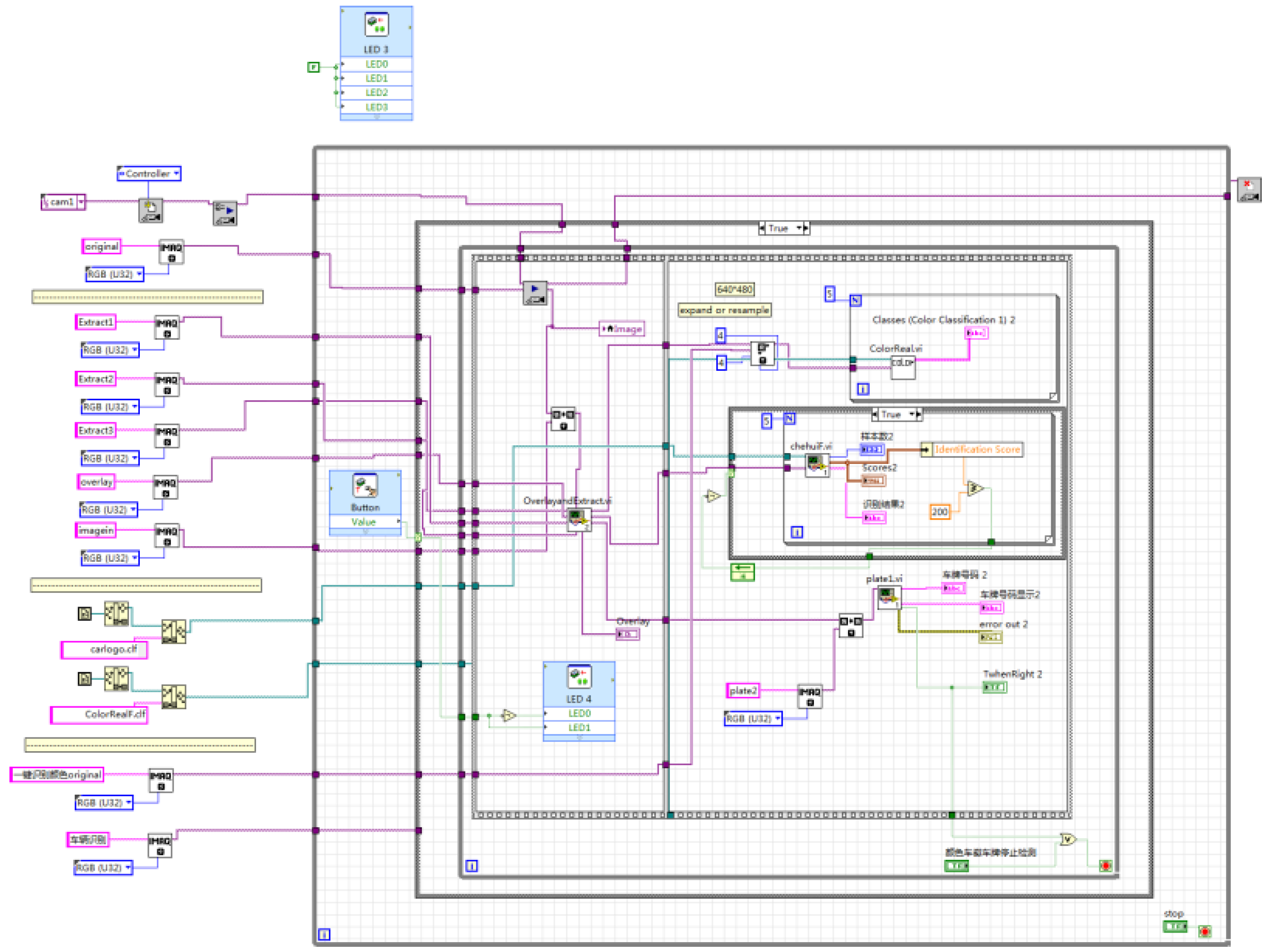

7. System Integration and Performance Analysis

8. Conclusions

Author Contributions

Funding

Conflicts of Interest

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Citation: Wang, Hongliang; He, Shuang; Yu, Jiashan; Wang, Luyao; Liu, Tao. Research and Implementation of Vehicle Target Detection and Information Recognition Technology Based on NI myRIO. Sensors 2020, 20(6), 1765. https://doi.org/10.3390/s20061765