1. Introduction

Electromyography (EMG) is a technique for recording electrical signals from the muscles during neuromuscular activity [1]. Apart from clinical diagnosis, EMG signals have a wide range of applications in rehabilitation devices [2], electronically controlled chairs [3] and human-computer interaction [4]. In EMG-based upper-limb prostheses, these signals serve as the main control source.

Different techniques have been used in upper limb artificial devices to provide natural and intuitive control for upper limb amputees: (1) on–off control, (2) proportional control, (3) direct control, (4) finite state machine control, (5) pattern recognition-based control, (6) posture control schemes and (7) regression control [5,6]. Although these control methods have shown great success, they can only control one device at a time, such as a wrist, hand or elbow, so they have limited functionality. In these techniques, the amplitude of the EMG signal or its rate of change is used as a control function to change the state of the device.

Machine learning techniques have evolved over time to provide natural myoelectric control to amputees. These techniques (linear discriminant analysis (LDA), k-nearest neighbor (KNN), support vector machine (SVM), artificial neural network (ANN)) assume that each motion of the hand generates a distinct and repeatable set of signals that can be recognized by the pattern recognition (PR) technique. In the literature, some PR techniques are preferred over others based on their signal presentation or feature set. Many studies have focused on optimizing feature sets to bridge the gap between the EMG signals and prosthetic control. Though successful [7], machine learning (ML) algorithms have two major limitations, i.e., feature extraction (features have to be explicitly computed and fed to the network) and the inability to handle large datasets efficiently [8].

The advent of deep learning (an extension of ML) has addressed both of these limitations. Deep learning algorithms can intrinsically extract important features for classification and have shown improved efficiency with large datasets [9]. As robustness of the classifier over time is the end goal, deep learning algorithms can be used as an alternative to both conventional and ML algorithms. Most recently, deep learning methods have outperformed both conventional methods and ML algorithms [9].

With advancements in deep learning, many studies have explored EMG-based hand gesture classification, relying on the inherent ability of the networks to extract useful features. Park and Lee [10] introduced a user-adaptive multi-layered CNN for classification of surface EMG (sEMG) from the NinaPro database and concluded that it outperformed SVM by 12%–18%. Atzori et al. [11] showed CNN’s potential compared to traditional techniques (KNN, SVM and LDA). Allard et al. [12] showed CNN’s performance in a real-time application using a wearable EMG sensor (Myo armband) and achieved 97.8% classification accuracy. In [13], CNN’s robustness over time was compared to LDA and to the auto-encoders SSAE-f (Stacked Sparse Auto-Encoders with features) and SSAE-r (Stacked Sparse Auto-Encoders with raw samples) for data collected over seven days, and CNN showed improved performance over conventional methods. Studies showed that the classification accuracies of the system changed over time [14]. Zhai [15] proposed a self-calibrating CNN to improve stability and performance over time on the NinaPro dataset and achieved an improvement of 10.18% on DB2 (intact, 50 motions) and 2.99% on DB3 (amputee, 10 motions) compared to an uncalibrated classifier. Chen [16] proposed a “compact” strategy (EMGNet) to reduce the number of parameters involved in designing a CNN on NinaPro DB5 and achieved slightly better performance compared to classical machine learning algorithms. Huang [17] used spectrograms in conjunction with a CNN-LSTM (Long Short-Term Memory network) combination and showed improved classification (from 77.167% to 79.329%) on the NinaPro dataset. Tsinganos [18] proposed a modified CNN and achieved an improvement of 3% on the NinaPro dataset. Pinzón-Arenas [19] used a CNN to recognize six hand gestures using a wearable EMG recording device (Myo Armband, Thalmic Labs) and achieved a validation accuracy of 98.4% and a testing accuracy of 99%.

Normally, the convergence of the network and its variation across the considered range are not discussed, often because more importance is given to the best possible performance (error reduction) of the network. However, a network may converge at multiple points, and which one it reaches is random [20]. So, another aspect of this study was to consider performance trends for different sets of hyper-parameters, determined by statistically analyzing the performance of each combination (p-values < 0.05 were considered significant). Lastly, classification results per learning rate were compared for each individual motion to determine a feasible control strategy (general vs. subject-specific).

2. Materials and Methods

2.1. Subjects

The dataset used for the case study was recorded from 18 healthy male subjects (right-handed, aged 20–35 yrs, mean age 26.2 yrs). None of the participating subjects had a history of neuromuscular disease or congenital upper limb deformities. All subjects volunteered for the experiment and gave written consent before the experiment. A reference electrode (wristband electrode) was placed on the non-dominant hand near the carpus. The data acquisition protocol was approved by the NUST ethical committee (Ref# NUST/SMME/BMES/ETH/092019/0042).

2.2. Data Acquisition

The surface EMG (sEMG) signals were recorded with six bipolar Ag/AgCl electrodes from the following muscles: extensor carpi radialis, extensor digitorum, extensor carpi ulnaris, flexor carpi radialis, palmaris longus and flexor digitorum superficialis. Signals were sampled at 8 kHz, bandpass filtered (5–500 Hz) and amplified (gain set to 2000; AnEMG12, OT Bioelettronica).

2.3. Experiment Protocol

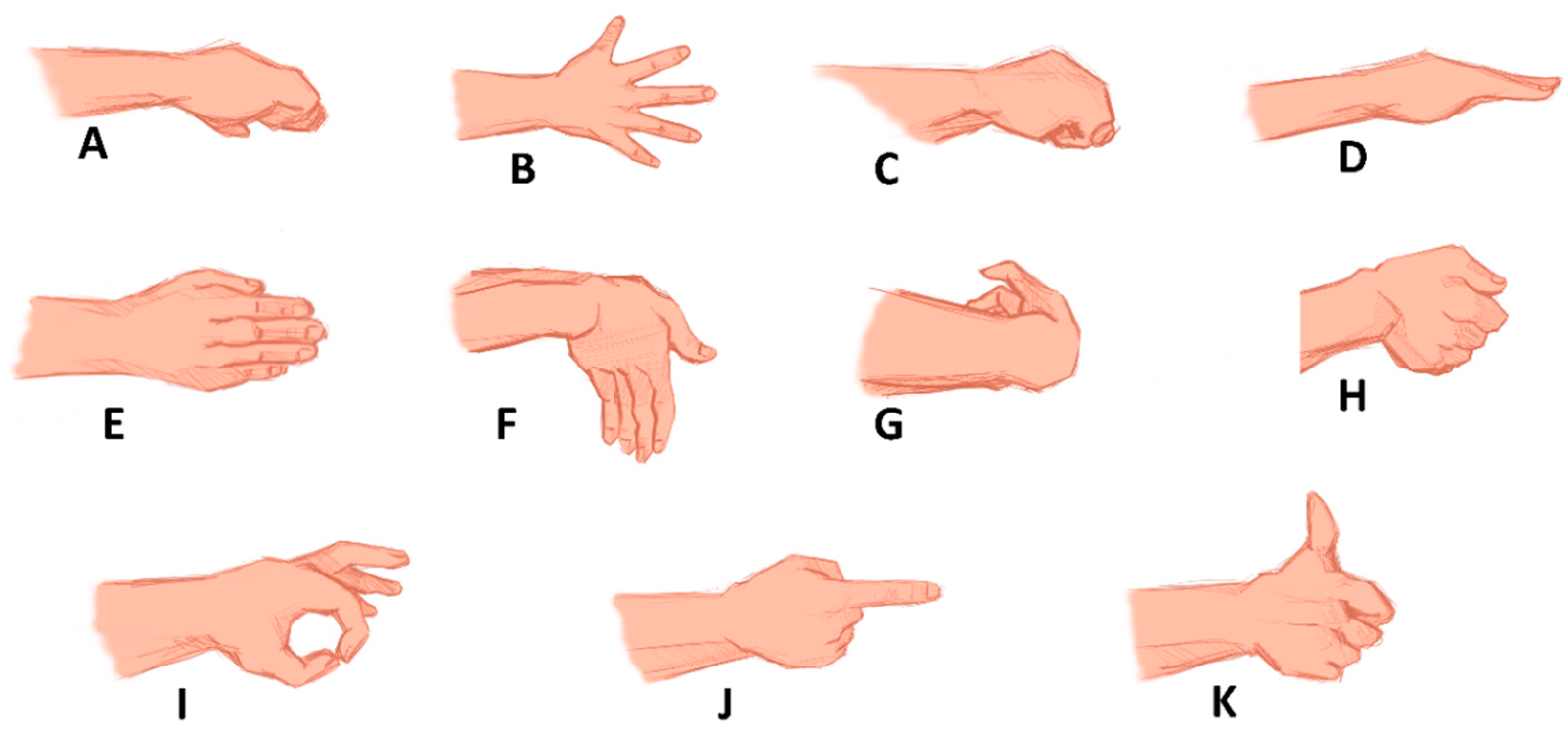

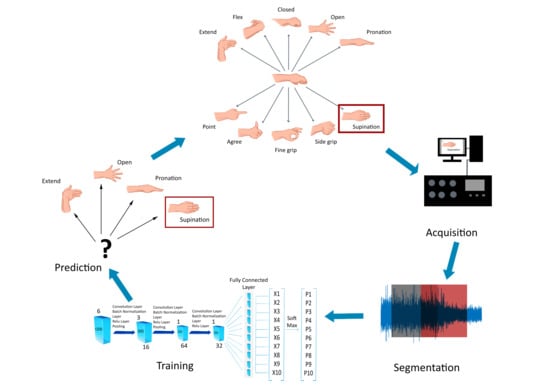

The subjects were asked to perform the following 10 active upper extremity movements: hand open, hand close, wrist flexion, wrist extension, forearm pronation, forearm supination, side grip (gripping the object perpendicular to the forearm with all fingers flexed and thumb around the object), fine grip, agree and pointer. The motions are displayed in Figure 1.

Each session comprised four repetitions, in which the subject performed a 6 s contraction for each movement, holding the contraction for three to four seconds (at medium intensity), as is usual in data acquisition protocols [21,22]. The contraction time allows a sufficient interval for the subject to see the visual cue, initiate the contraction, stabilize it and then release it to transition into a rest state. The subjects were visually cued to perform each movement by an image of the motion shown through BioPatRec [22], an open-source acquisition graphical user interface (GUI) for pattern recognition. The resting time between consecutive contractions was four seconds. In each repetition, the subjects were asked to execute all motions in random order. The acquisition time for each subject was 400 s ((6 s contraction + 4 s rest) × 10 motions × 4 repetitions), and the total acquisition time for the complete experiment (18 subjects) was around 3 h.

2.4. Convolutional Neural Network (CNN)

Convolutional neural networks are traditional ANNs extended with convolutional layers and a dropout layer, the latter used essentially to avoid overfitting [23]. Traditional classification and machine learning techniques require feature extraction: the features are decided and computed explicitly and then fed into the network. A CNN instead develops several feature detectors on its own, normally known as convolutional layers, and during training sorts out the important features required to improve accuracy. This is achieved by convolving the filters with patches of the input, creating what is called a receptive field. Receptive fields allow an individual filter to apply the same learned weights to all input patches. The result is then fed to the activation function [13].

For the network to identify the input more effectively, the classifier needs what we can refer to as “spatial invariance”. The network gains this ability through pooling. Not only does pooling help to tolerate distortions, but it also reduces the dimensionality of the image, reducing the number of parameters to account for. In order to reduce the vanishing gradient problem and improve training speed, rectified linear units (ReLU) were used as the activation function [24].
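The chain of filtering, activation and pooling described above can be illustrated with a small pure-Python sketch. It is not the network used in this study: the 6 × 8 input values, the 3 × 3 filter weights and the helper names (`conv2d_valid`, `relu`, `max_pool`) are assumptions made only for illustration, and the filter is applied without padding.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the feature map.

    As in most CNN libraries, this is technically a cross-correlation:
    the kernel is not flipped before it is applied.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def relu(feature_map):
    """Rectified linear unit: negative responses are set to zero."""
    return [[max(0.0, v) for v in row] for row in feature_map]


def max_pool(feature_map, size=2, stride=2):
    """Keep the largest value in each size x size region."""
    out_h = (len(feature_map) - size) // stride + 1
    out_w = (len(feature_map[0]) - size) // stride + 1
    return [
        [
            max(feature_map[r * stride + i][c * stride + j]
                for i in range(size) for j in range(size))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


# Toy 6 x 8 input; the 6 rows stand in for 6 EMG channels, values are made up.
toy_input = [[float((r * 8 + c) % 5 - 2) for c in range(8)] for r in range(6)]
# Hypothetical 3 x 3 filter; in a trained CNN these weights are learned.
toy_kernel = [[1.0, 0.0, -1.0], [1.0, 0.0, -1.0], [1.0, 0.0, -1.0]]

features = relu(conv2d_valid(toy_input, toy_kernel))  # 4 x 6 feature map
pooled = max_pool(features, size=2, stride=2)         # 2 x 3 after pooling
print(len(features), len(features[0]), len(pooled), len(pooled[0]))
```

The same nine filter weights are reused at every position of the input, and pooling shrinks the 4 × 6 feature map to 2 × 3, which is the dimensionality reduction referred to in the text.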

Because a CNN primarily uses images as input, the segmented data needs to be “morphed” into a suitable input layer for the network. Since the acquired signal was sampled at 8 kHz and recorded for 40 s, segmentation yielded matrices of 6 × 1200, where 6 is the number of channels and 1200 is the number of samples in each 150 ms window (8000 Hz × 0.15 s).
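The window arithmetic can be checked with a short sketch. The sampling rate, window length and channel count come from the text; the non-overlapping windows, the synthetic all-zero recording and the function name `segment` are assumptions for illustration, since the overlap used in the study is not stated here.

```python
FS_HZ = 8000        # sampling rate stated in the text
WINDOW_S = 0.15     # 150 ms analysis window
N_CHANNELS = 6      # six bipolar electrode channels


def segment(recording, window_len):
    """Split a channels x samples recording into non-overlapping
    channels x window_len matrices (trailing samples are dropped)."""
    n_samples = len(recording[0])
    n_windows = n_samples // window_len
    return [
        [channel[w * window_len:(w + 1) * window_len] for channel in recording]
        for w in range(n_windows)
    ]


window_len = round(FS_HZ * WINDOW_S)  # 1200 samples per window

# Synthetic stand-in for 3 s of six-channel sEMG (zeros, not real data).
recording = [[0.0] * (FS_HZ * 3) for _ in range(N_CHANNELS)]
windows = segment(recording, window_len)

print(window_len, len(windows), len(windows[0]), len(windows[0][0]))
```

Each element of `windows` is one 6 × 1200 matrix of the kind passed to the input layer.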

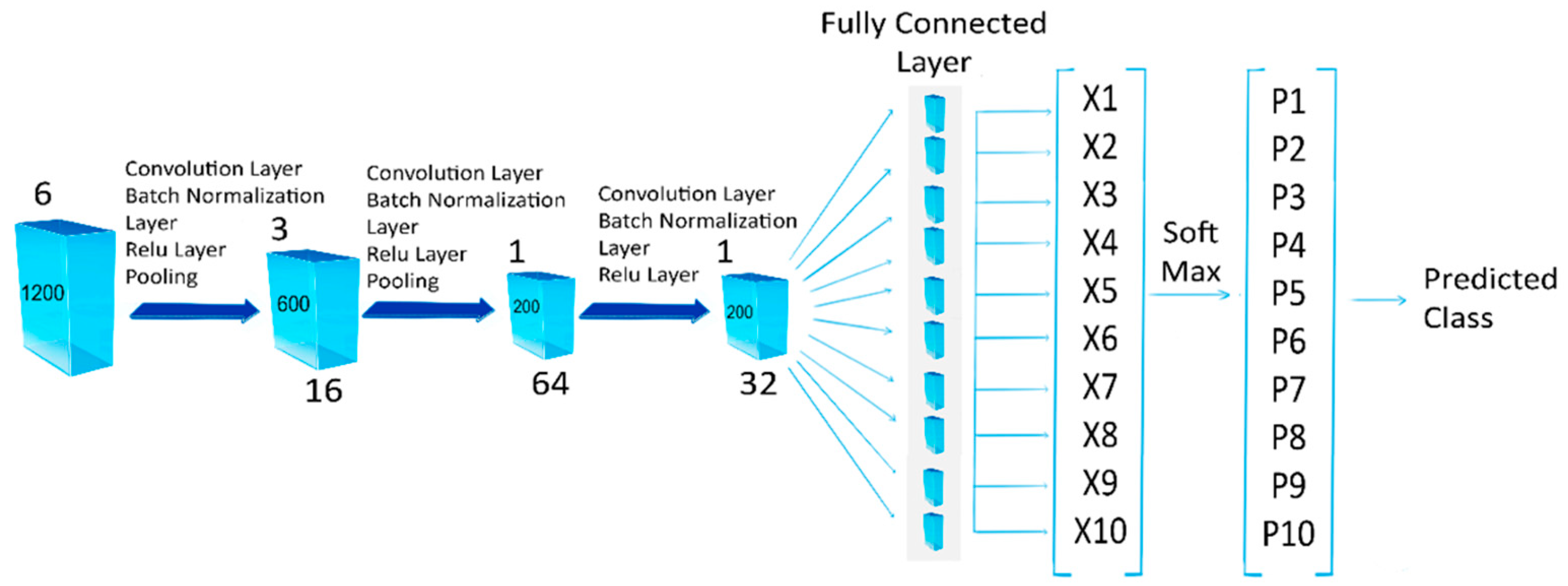

The architecture of the network comprises 15 layers. The network has an input layer and three convolution layers with 16, 64 and 32 filters (3 × 3), respectively. The network has three normalization layers and two pooling layers of 2 × 2 and 3 × 3 regions, respectively, each with a stride of 2. The network also has three ReLU layers, a fully connected layer, a SoftMax classification layer and an output layer.

The training algorithm for the network was stochastic gradient descent with momentum (sgdm). The validation frequency was set to twice in each epoch. The mini-batch size was set to 128 since lower batch values increased the training time. These layers and parameters were chosen empirically.

Each motion was labeled as a different class, making a total of 10 classes (Figure 2).

2.5. Parametric Optimization

Although deep learning neural networks produce exceptional results, training a neural network mostly requires empirical methods to tune hyper-parameters.

Such tuning can never be conclusive since the nature of the signal at hand is completely random and varies greatly from subject to subject; therefore, a generalized set of parameters cannot be obtained. However, the selection of these hyper-parameters greatly affects the obtained results [23].

Typically, a deep learning neural network (DLNN) is trained by stochastic gradient descent, in which the weights $\theta$ are updated as

$$\theta_{t+1} = \theta_{t} - \alpha \, \frac{\partial L}{\partial \theta_{t}},$$

where $L$ is the loss function and $\alpha$ is the learning rate [23].
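As a minimal sketch of this update rule, the snippet below applies repeated gradient steps to a toy one-dimensional loss, L(theta) = (theta - 3)^2. The loss, the starting point, the step count and the three learning rates are assumptions chosen for illustration. The sketch also omits the mini-batch sampling and momentum term of the sgdm algorithm used in this study.

```python
def loss_gradient(theta):
    """Gradient of the toy loss L(theta) = (theta - 3)^2."""
    return 2.0 * (theta - 3.0)


def sgd(alpha, steps=50, theta=0.0):
    """Apply theta <- theta - alpha * dL/dtheta for a fixed number of steps."""
    for _ in range(steps):
        theta = theta - alpha * loss_gradient(theta)
    return theta


# The minimum of the toy loss is at theta = 3.
for alpha in (0.001, 0.1, 1.1):
    print(f"alpha={alpha}: theta after 50 steps = {sgd(alpha):.4g}")
```

On this toy problem, the smallest rate has barely moved toward the minimum after 50 steps, the middle rate settles close to it, and the largest rate overshoots further on every step.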

A smaller learning rate results in slow convergence of the network; conversely, a larger learning rate tends to cause divergence. Determining a suitable value can therefore take many trials. For example, Smith et al. have introduced a Cyclic Learning Rate (CLR) method that tends to reduce the number of iterations required to determine a suitable learning rate. Other methods use Bayesian optimization to determine hyper-parameters [24].
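For readers unfamiliar with CLR, the following is a sketch of the triangular cyclical schedule, in which the learning rate rises linearly from a lower bound to an upper bound and back again. The bounds and the half-cycle length (`step_size`) are illustrative assumptions and were not used in this study.

```python
import math


def triangular_clr(iteration, base_lr, max_lr, step_size):
    """Triangular cyclical learning rate.

    The rate climbs linearly from base_lr to max_lr over `step_size`
    iterations, then descends back to base_lr over the next `step_size`.
    """
    cycle = math.floor(1 + iteration / (2 * step_size))
    x = abs(iteration / step_size - 2 * cycle + 1)
    return base_lr + (max_lr - base_lr) * max(0.0, 1.0 - x)


# Illustrative bounds only; not values taken from the study.
schedule = [triangular_clr(i, base_lr=1e-5, max_lr=1e-1, step_size=100)
            for i in range(0, 401, 50)]
print(schedule)
```

Sweeping the rate this way within a single training run is what lets the method narrow down a usable range without a separate run per candidate value.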

Another important parameter to be considered is the number of training cycles (epochs). Tuning this parameter is perhaps the easiest of all: given the principle of early stopping, and provided all the other parameters are set, one can observe during training the number of cycles sufficient for the network to train. Early stopping and regularization both minimize overfitting, with early stopping being the more efficient choice. Although grid search can take several iterations to tune hyper-parameters, it does have one benefit compared to other methods: it is parallelizable, meaning that, provided enough computing power is available, it is possible to search for a suitable model that meets the requirements of the analysis [25].
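The early stopping principle can be sketched as a patience rule on the validation loss. The loss values and the patience of five epochs below are made up for illustration, and the function name `early_stopping_epoch` is hypothetical.

```python
def early_stopping_epoch(val_losses, patience=5):
    """Return (best_epoch, stop_epoch), both 1-indexed.

    Training stops once `patience` epochs have passed without the
    validation loss improving on its best value so far.
    """
    best_loss = float("inf")
    best_epoch = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best_loss:
            best_loss, best_epoch = loss, epoch
        elif epoch - best_epoch >= patience:
            return best_epoch, epoch
    return best_epoch, len(val_losses)


# Made-up validation-loss curve: improves, then plateaus and rises.
val_losses = [0.90, 0.60, 0.45, 0.40, 0.38, 0.39,
              0.41, 0.40, 0.42, 0.43, 0.44, 0.50]
best_epoch, stop_epoch = early_stopping_epoch(val_losses, patience=5)
print(best_epoch, stop_epoch)
```

In practice the weights from `best_epoch` would be kept, which is why observing the validation curve is enough to choose the number of training cycles once the other parameters are fixed.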

For this specific study, the learning rate was incremented logarithmically, so that the learning rates are equally spaced on a logarithmic scale across the range. However, in the future, the CLR method can also be employed for the selection of learning rates to improve efficiency. Since the dataset is relatively small, a parallel grid search for a suitable learning rate and adequate training cycles is possible. It can serve as a decent starting point for getting a general idea of parameter vs. performance and help pave the way for later optimization of multiple parameters with different methods.

The algorithm will grid search through all the possible combinations at different learning rates and different epoch values for each learning rate. Each network is then tested for classification accuracy and the performance metric is the mean classification error (MCE). Lower scores indicate better performance. Obtained results will then be statistically compared and analyzed.
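The performance metric can be sketched as follows, assuming that the MCE is the fraction of misclassified windows per subject, averaged over subjects. The exact averaging used in the study is not spelled out here, so that definition, the function names and the labels in the example are all assumptions.

```python
def classification_error(true_labels, predicted_labels):
    """Fraction of samples whose predicted class differs from the true class."""
    wrong = sum(t != p for t, p in zip(true_labels, predicted_labels))
    return wrong / len(true_labels)


def mean_classification_error(per_subject_results):
    """Average the per-subject classification error (assumed MCE definition)."""
    errors = [classification_error(t, p) for t, p in per_subject_results]
    return sum(errors) / len(errors)


# Made-up predictions for two hypothetical subjects.
results = [
    (["open", "close", "flexion", "extension"],
     ["open", "close", "flexion", "flexion"]),    # 1 of 4 wrong
    (["open", "close", "flexion", "extension"],
     ["open", "close", "flexion", "extension"]),  # none wrong
]
mce = mean_classification_error(results)
print(mce)
```

Under this definition the MCE is simply one minus the mean classification accuracy, so lower values indicate better performance.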

The experiment was performed on a laptop equipped with a quad-core CPU, 16 GB RAM and 8 GB NVIDIA GTX 1070M GPU.

2.6. Hyper-Parameters

The number of training iterations can be adjusted almost freely since it is one of the more convenient parameters to optimize [25].

The default training iterations in MATLAB are 30. So, a range of 20 to 100 training iterations with increments of 20 is proposed to observe whether or not further training would enhance performance. One hundred epochs were selected as the upper threshold as it does provide sufficient training time and further training yielded negligible performance improvement if any.

In contrast, if there is only one parameter that deserves attention in terms of optimizing for improving the overall performance of the network, it is the learning rate. The normal value for the learning rate ranges from less than 1 to greater than 10⁻⁶, with 0.01 considered a standard value for most networks [25]. Hence, the range of 0.00001 to 0.1 was selected.

Scanning all of the values in this range would require extensive computation and time, and the chance of convergence of the network is random; for this reason, multiple algorithms have been developed to optimize the learning rate (CLR, grid search). The values were incremented logarithmically, resulting in a learning rate array of commonly chosen values, [0.00001, 0.0001, 0.001, 0.01, 0.1], in order to observe the general trend of performance of a multi-layered neural network across the range of suggested values.
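A minimal sketch of the resulting search grid is given below. The learning rates and epoch values follow the text; `toy_evaluate` is a hypothetical stand-in for training and testing the CNN, and the error it returns is made up, so the "best" combination it selects says nothing about the results of this study.

```python
import math
from itertools import product

# Logarithmically spaced learning rates: 1e-5, 1e-4, 1e-3, 1e-2, 1e-1.
LEARNING_RATES = [10.0 ** exponent for exponent in range(-5, 0)]
# Training iterations from 20 to 100 in increments of 20.
EPOCHS = list(range(20, 101, 20))


def grid_search(evaluate, learning_rates, epoch_values):
    """Evaluate every (learning rate, epochs) pair and return the pair
    with the lowest error together with all scores."""
    scores = {
        (lr, epochs): evaluate(lr, epochs)
        for lr, epochs in product(learning_rates, epoch_values)
    }
    best = min(scores, key=scores.get)
    return best, scores


def toy_evaluate(lr, epochs):
    """Hypothetical placeholder for 'train the network, return its MCE'."""
    return abs(math.log10(lr) + 3) * 0.1 + 1.0 / epochs


best, scores = grid_search(toy_evaluate, LEARNING_RATES, EPOCHS)
print(len(scores), best)
```

Because each of the 25 combinations is evaluated independently, the loop can be distributed across workers, which is the parallelizability that makes grid search practical for a small dataset.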

2.7. Analysis

For performance metrics, the mean classification error for each learning rate and mean classification accuracy for individual subjects were considered. For statistical analysis, a two-way analysis of variance (ANOVA) followed by multiple comparison was implemented to determine performance variation among learning rates. Performance variations were reported significant for p-values less than 0.05.

4. Discussion

The selection of the considered parameters is crucial to the overall efficiency of the network; a suitable method would be to automate the selection of the learning rate by employing a sequential or adaptive technique [23,24,25]. This study is centered not only on the achieved performance of the network but also on its effect on each individual motion. It is observable that selecting a suitable learning rate yields a significant change in classification accuracy. Even though the learning rate primarily dictates the overall validation success rate and, in turn, the classification accuracy of the network, it is also essential to determine whether an even smaller validation loss (error) can be obtained beyond the selected number of training cycles. This is why it is necessary to train beyond the set number of epochs to validate this aspect.

During this study, another possible set of parameters was a learning rate of 0.0001 with 100 epochs, which gave results similar to those for 0.001 with 80 training iterations (average difference of 1.4%; p-value of 0.58 in the multiple comparison test).

The trend shown in Figure 2 clearly indicates that, for a smaller learning rate, the number of epochs must be significantly larger for the network to converge [25]. Another interesting validation can be seen when the learning rate is larger, i.e., 0.1. Here the network has completely diverged, resulting in larger values of validation loss and poor training performance. Because of the inherent scaling issues of grid search over multiple parameters, an alternative is to use random sampling for tuning hyper-parameters [25,26,27].

Higher accuracies have been achieved for multiple degrees of freedom through multiple techniques. However, primarily, the need here is to develop patient-specific control strategies. Since it is difficult to obtain a generalized solution for multiple subjects simultaneously (due to the limited number of subjects for testing), the use of adaptive control schemes based on deep learning architectures has become a more viable solution.

Current studies mostly deal with pattern recognition (PR) using different feature sets, or with non-invasive proportional control using sEMG, for improved myoelectric control. However, an increased number of classes results in performance degradation [27]. A CNN eliminates the need for traditional feature extraction but at the same time requires multi-layered data as a valid input, which requires extra computation for conversion. A suitable substitute for biosignals would be a similarly layered architecture that takes one-dimensional signals as input to reduce computational cost. Since the performance of DLNNs depends on the architecture and on optimum parameter selection, an adequate method would be either sequential optimization algorithms [28] or adaptive algorithms that select optimum parameters.

An extended study could include the selection of multiple hyper-parameters through some sequential or adaptive methods.

For real-time (myoelectric) control, the true limiting variable is time, more specifically the response time of the control system. The response time needs to be adequately small, roughly 300 ms [29], to be imperceptible to the user. As far as time complexity is concerned, the ratio of training to testing time per image is 3:1, due to one forward and two backward propagations [30]. Multiple architectures can have the same time complexity, and the run-times are greatly influenced by the GPU’s computing ability. This, in turn, greatly depends on the hardware and application. An attempt [21] at a real-time convolutional neural network discussed its potential. Although the study showed that the CNN’s potential rivaled that of the standard SVM algorithm, the robustness of CNN over time in comparison to standard pattern recognition methods, and with respect to changes in limb or electrode placement, still needs to be explored. The true obstacle to portability, however, remains the limited processing power available in wearable embedded systems. Currently, wearable embedded systems are not powerful enough to reproduce the same results as a dedicated GPU, but faster-learning neural networks with optimized parameters can be used to reduce the computational time, which can allow complex models to be implemented on low-power devices.

The aim of this study was to observe the performance variation of a multi-layered neural network on raw acquired data of several hand motions and to observe whether the network shows similar improvement for all motions or favors some motions over others. The results have shown that the network does tend to perform better when classifying certain motions; however, further investigation is possible by increasing the number of subjects. The aim here is to observe the behavior and then, in the future, devise a strategy for improving performance relatively uniformly across all motions.

The study here was constructed around one network architecture and dataset. Further studies may include multiple architectures and performance can be analyzed among various networks across multiple datasets.