A Repeated Game Freeway Lane Changing Model

Abstract

1. Introduction

2. Literature Review

2.1. Lane-Changing Decision-Making Models

2.2. Game Theory-Based Lane-Changing Decision-Making Model

2.3. Motivation and Contribution of the Paper

3. Merging Decision-Making Model Using a Repeated Game Concept

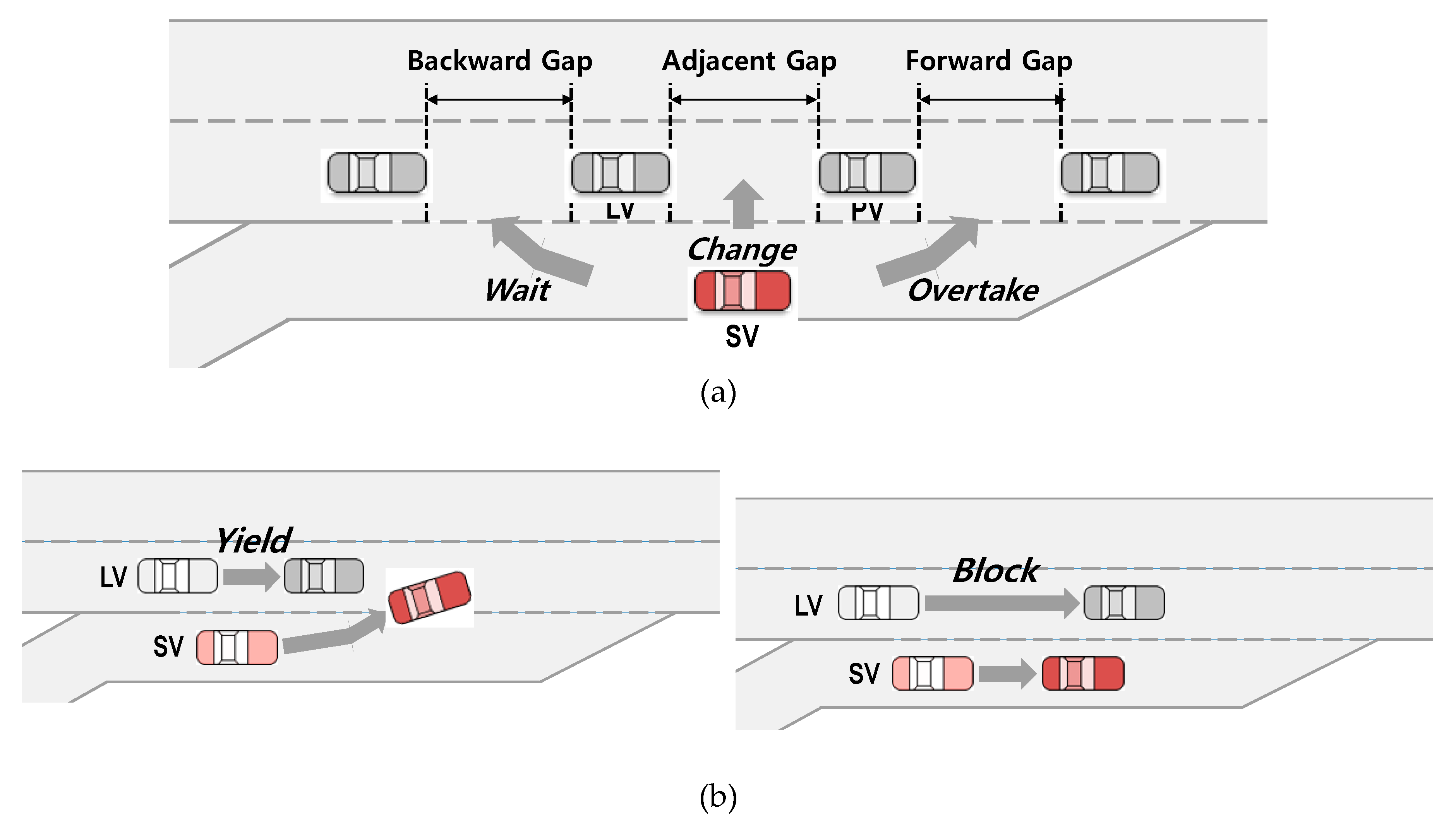

3.1. Stage Game Design

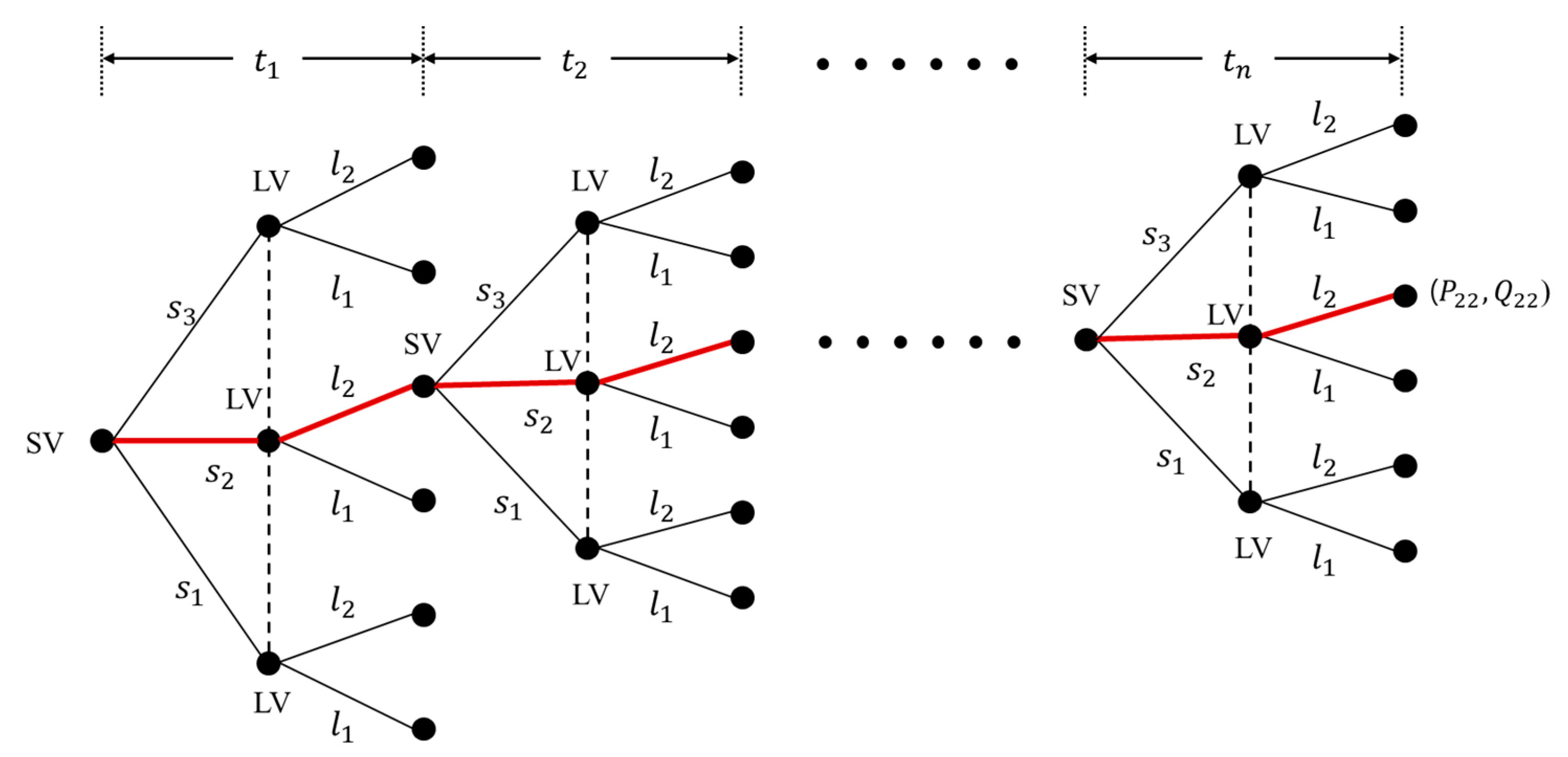

3.2. Repeated Game Design

3.3. Reformulated Payoff Functions

3.3.1. Safety Payoff

3.3.2. Forced Merging Payoff for the Driver of SV

3.3.3. Payoff Functions for the Drivers of the SV and LV

4. Model Calibration and Validation

4.1. Preparation of Observation Dataset

4.2. Model Calibration

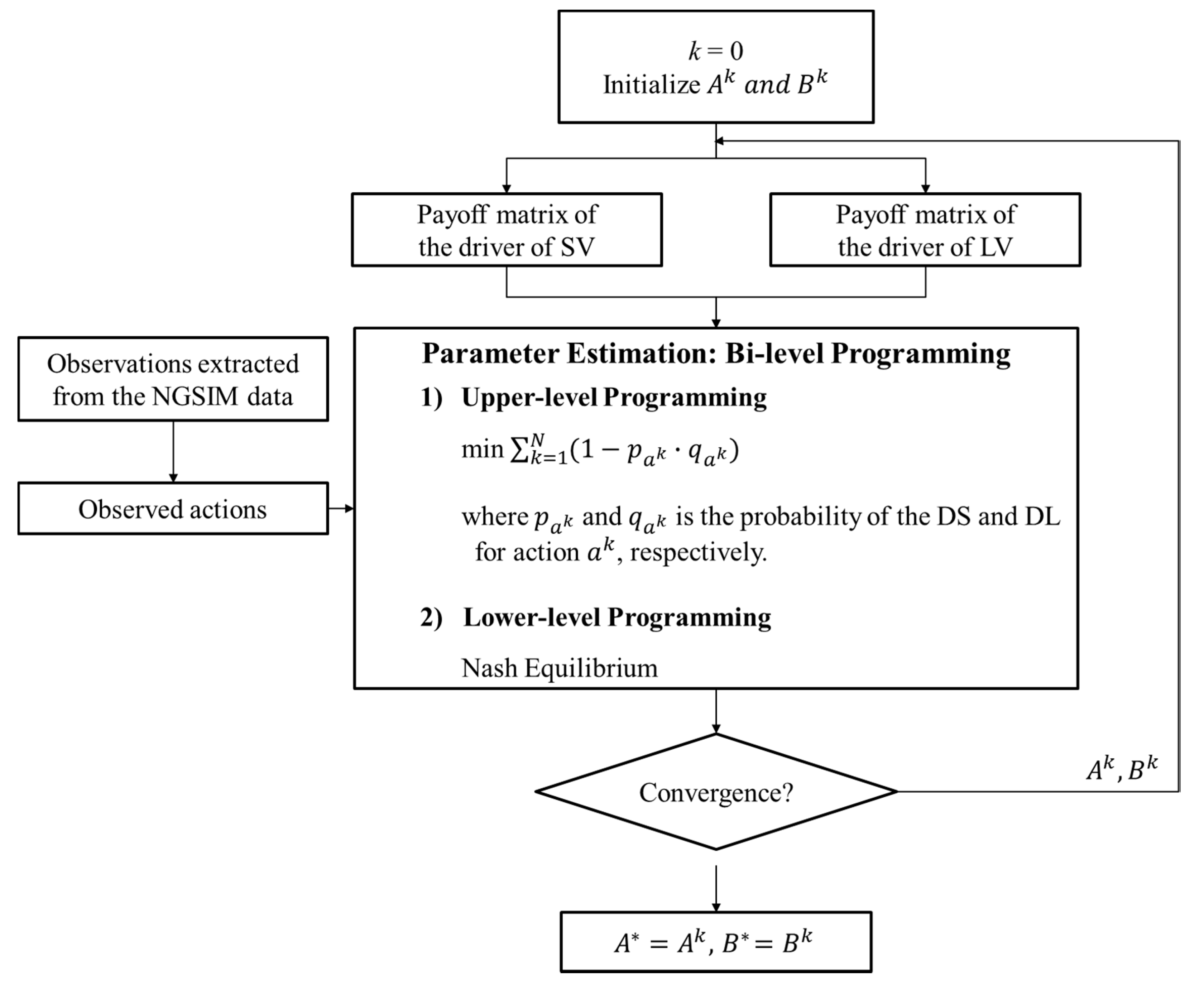

4.2.1. Calibration Approach

4.2.2. Calibration Results

- One-shot game model based on the stage game using the payoff functions developed in [13];

- One-shot game model based on the stage game using the reformulated payoff functions in Section 3.3.

4.3. Model Validation

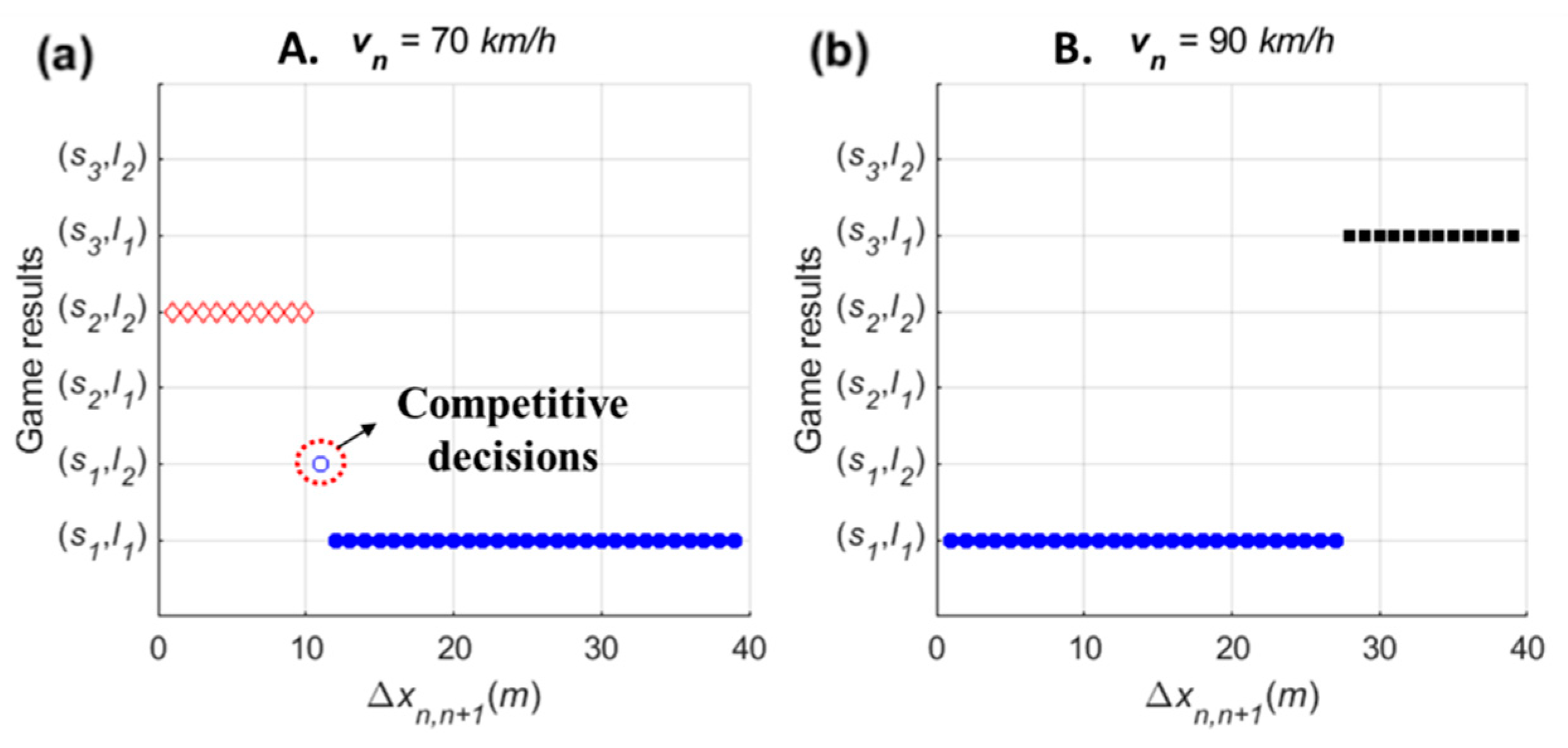

5. Sensitivity Analysis of the Calibrated Stage Game

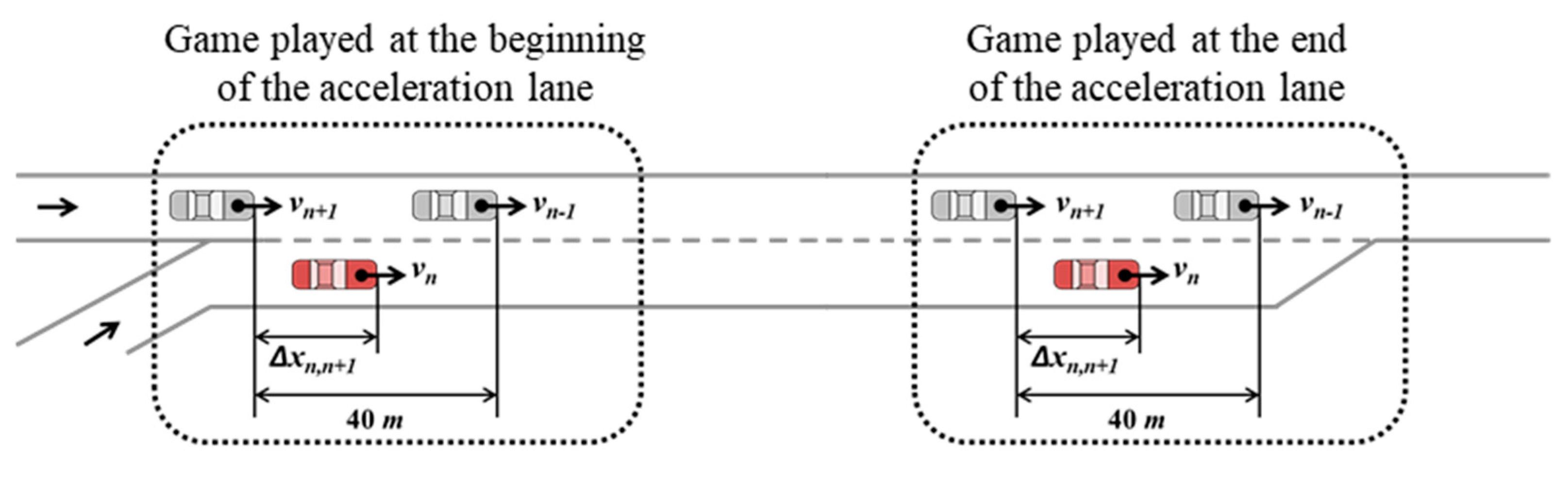

5.1. Sensitivity Analysis Setting

- The length of the acceleration lane was 250 m;

- Based on initial longitudinal coordination, , , and denote the PV, SV, and LV, respectively;

- The spacing between the PV and LV, , was assumed to be constant at 40 m. In the game played at the beginning of the acceleration lane, the PV and LV were located 70 m and 30 m from the beginning of the acceleration lane, respectively. In the game played at the end of the acceleration lane, the PV and LV were located 230 m and 190 m from the beginning of the acceleration lane, respectively;

- All vehicles were assumed to have a constant length of 4.8 m;

- The freeway link properties were as follows: the saturation flow rate was 2400 veh/h/lane, the jam density was 160 veh/km/lane, and the free-flow speed and speed-at-capacity were 100 km/h and 80 km/h, respectively;

- Calibrated parameters of payoff functions for the repeated game model with were used.
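
The geometric settings listed above can be collected in a small configuration object, which makes the two game locations easy to compare. This is only an illustrative sketch: the class name `GameScenario` and its field names are hypothetical and do not come from the paper, and only the numeric values (250 m lane, 4.8 m vehicle length, PV and LV positions) are taken from the list.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GameScenario:
    """One sensitivity-analysis setting; all distances are in metres.

    Hypothetical container: the names are illustrative, the values come
    from the settings listed in Section 5.1.
    """

    pv_position_m: float  # preceding vehicle, measured from the lane start
    lv_position_m: float  # lag vehicle, measured from the lane start
    lane_length_m: float = 250.0
    vehicle_length_m: float = 4.8

    @property
    def pv_lv_spacing_m(self) -> float:
        """Longitudinal spacing between the PV and LV."""
        return self.pv_position_m - self.lv_position_m

    @property
    def pv_distance_to_lane_end_m(self) -> float:
        """Remaining acceleration-lane length ahead of the PV position."""
        return self.lane_length_m - self.pv_position_m


# Game played at the beginning of the acceleration lane.
START_OF_LANE = GameScenario(pv_position_m=70.0, lv_position_m=30.0)
# Game played at the end of the acceleration lane.
END_OF_LANE = GameScenario(pv_position_m=230.0, lv_position_m=190.0)

if __name__ == "__main__":
    for name, scenario in (("start", START_OF_LANE), ("end", END_OF_LANE)):
        print(name, scenario.pv_lv_spacing_m, scenario.pv_distance_to_lane_end_m)
```

Both scenarios reproduce the constant 40 m spacing between the PV and LV stated in the settings; only the remaining lane length differs.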

5.2. Sensitivity Analysis Results

6. Simulation Case Study

6.1. Simulation Model Development

- For the ‘change ()’ action, the driver of the SV determines the acceleration level by considering both speed synchronization and gap acceptance. If , an acceleration level for speed harmonization is additionally calculated. Under the gap acceptance rule, another acceleration level is calculated to ensure sufficient lead and lag spacing;

- For the ‘wait ()’ action, the acceleration level required to wait in the acceleration lane until the lag vehicle passes the SV is computed. Generally, waiting cases are observed when and is not sufficient. If and the remaining distance to the end of the acceleration lane at time , , is sufficient to not require deceleration, the SV accelerates slightly to harmonize its speed with the freeway vehicles while waiting;

- Lastly, for the ‘overtake ()’ action, the acceleration level required to use the forward gap is calculated. This case is observed when and is not sufficient. For this strategy, therefore, speed harmonization is excluded as an acceleration component.

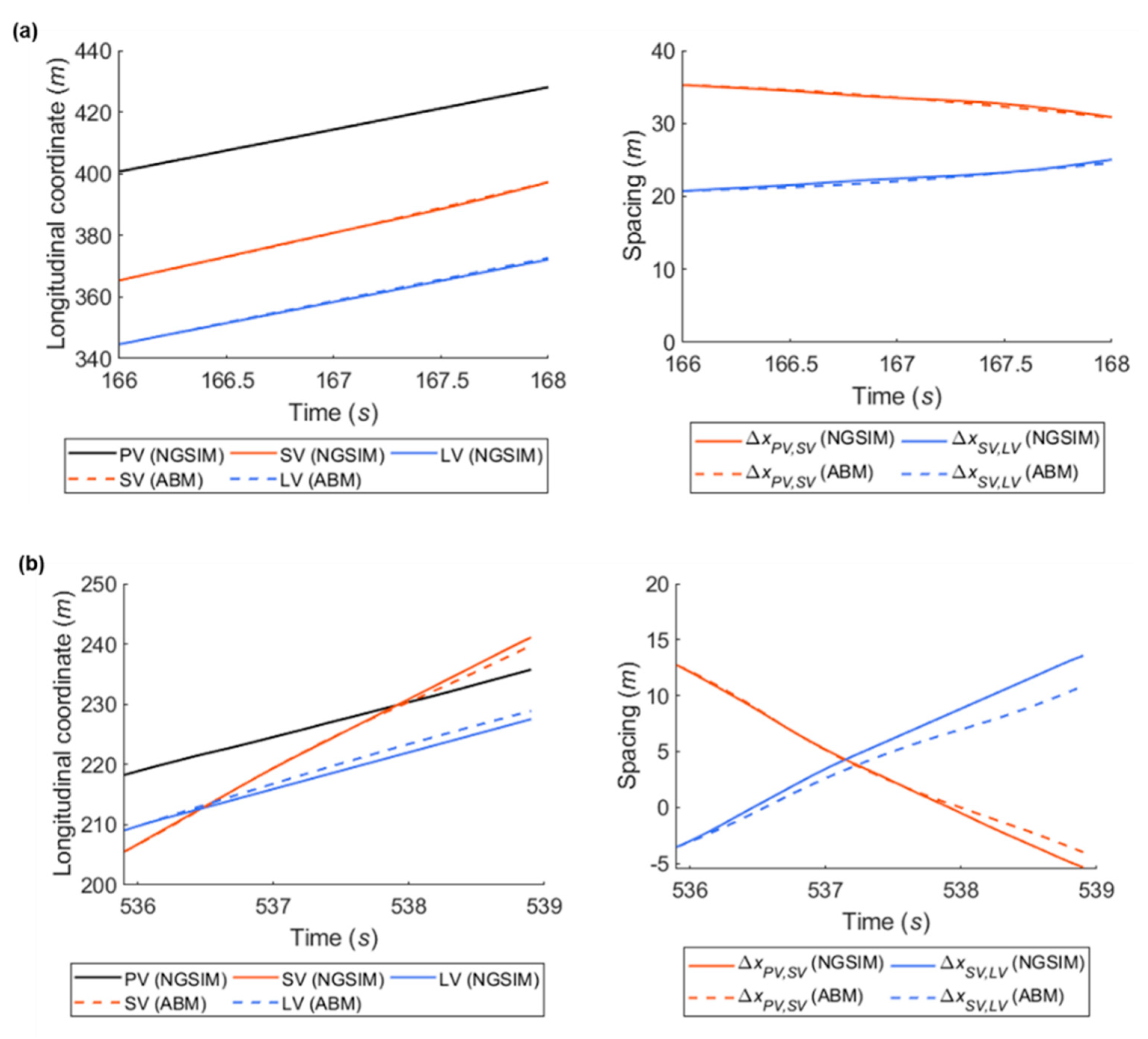

6.2. Simulation Model Validation

6.3. Simulation Setting and Cases

- The freeway link properties were as follows: the saturation flow rate was 2400 veh/h/lane, the jam density was 160 veh/km/lane, and the free-flow speed and speed-at-capacity were 100 km/h and 80 km/h, respectively;

- Based on initial longitudinal coordination, vehicles on the network were designated as ,, , , and , respectively. Here, the vehicle denotes the SV;

- It was assumed that the average initial speed of freeway vehicles was . The initial speeds of the four vehicles on the freeway mainline (i.e., ) were drawn at the simulation start time from a normal distribution with a mean of and a standard deviation of 0.2;

- The initial spacing between freeway vehicles, i.e., , was determined using Van Aerde’s steady-state model according to the instantaneous speed of the corresponding following vehicle at time-step 0;

- The game was played at a time interval of 0.5 s. A new stage game was formed whenever the LV or PV changed;

- The rate factor () of 1.4 and corresponding calibrated parameters of payoff functions, as shown in Table 2, were used for the repeated game model;

- The maximum and minimum accelerations were 3.4 and −3.4 m/s², respectively, as determined with reference to the NGSIM data. All vehicles were assumed to have a constant length of 4.8 m;

- The simulation model excluded the freeway mainline vehicles’ behaviors to avoid a potential collision with the merging vehicle, i.e., changing lanes to the left or decelerating before arriving at the merging section. These behaviors could not be modeled as individual driving maneuvers in the traffic simulator because they result from the vehicles’ independent decisions rather than from any interaction with the merging vehicle after recognizing it.
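
The initial-spacing setting in the list above relies on Van Aerde’s steady-state model, which this section names but does not write out. The sketch below assumes the commonly published single-regime form, in which the spacing is h = c1 + c2/(uf − u) + c3·u, with the three coefficients derived from the free-flow speed, speed-at-capacity, capacity, and jam density. The class and method names are hypothetical, and the saturation flow rate in the list is treated as the capacity; only the four link properties are taken from the paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VanAerdeModel:
    """Steady-state spacing model (assumed standard Van Aerde form)."""

    free_flow_speed_kmh: float
    speed_at_capacity_kmh: float
    capacity_vph: float        # veh/h/lane
    jam_density_vpkm: float    # veh/km/lane

    def coefficients(self) -> tuple[float, float, float]:
        """Return (c1 [km], c2 [km^2/h], c3 [h]) from the four link properties."""
        uf, uc = self.free_flow_speed_kmh, self.speed_at_capacity_kmh
        qc, kj = self.capacity_vph, self.jam_density_vpkm
        m = (2.0 * uc - uf) / (uf - uc) ** 2       # ensures flow peaks at uc
        c2 = 1.0 / (kj * (m + 1.0 / uf))           # ensures h(0) = 1 / kj
        c1 = m * c2
        c3 = (-c1 + uc / qc - c2 / (uf - uc)) / uc  # ensures h(uc) = uc / qc
        return c1, c2, c3

    def spacing_m(self, speed_kmh: float) -> float:
        """Steady-state spacing in metres for a follower speed in km/h."""
        if not 0.0 <= speed_kmh < self.free_flow_speed_kmh:
            raise ValueError("speed must lie in [0, free-flow speed)")
        c1, c2, c3 = self.coefficients()
        spacing_km = c1 + c2 / (self.free_flow_speed_kmh - speed_kmh) + c3 * speed_kmh
        return spacing_km * 1000.0


# Link properties from the simulation settings.
FREEWAY = VanAerdeModel(
    free_flow_speed_kmh=100.0,
    speed_at_capacity_kmh=80.0,
    capacity_vph=2400.0,
    jam_density_vpkm=160.0,
)

if __name__ == "__main__":
    for speed in (0.0, 40.0, 80.0):
        print(f"{speed:5.1f} km/h -> {FREEWAY.spacing_m(speed):6.2f} m")
```

Under these assumptions the spacing at standstill equals the inverse of the jam density (6.25 m), and the spacing at the speed-at-capacity equals that speed divided by the capacity (about 33.3 m), which gives two quick sanity checks on the coefficients.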

6.4. Case Study Results

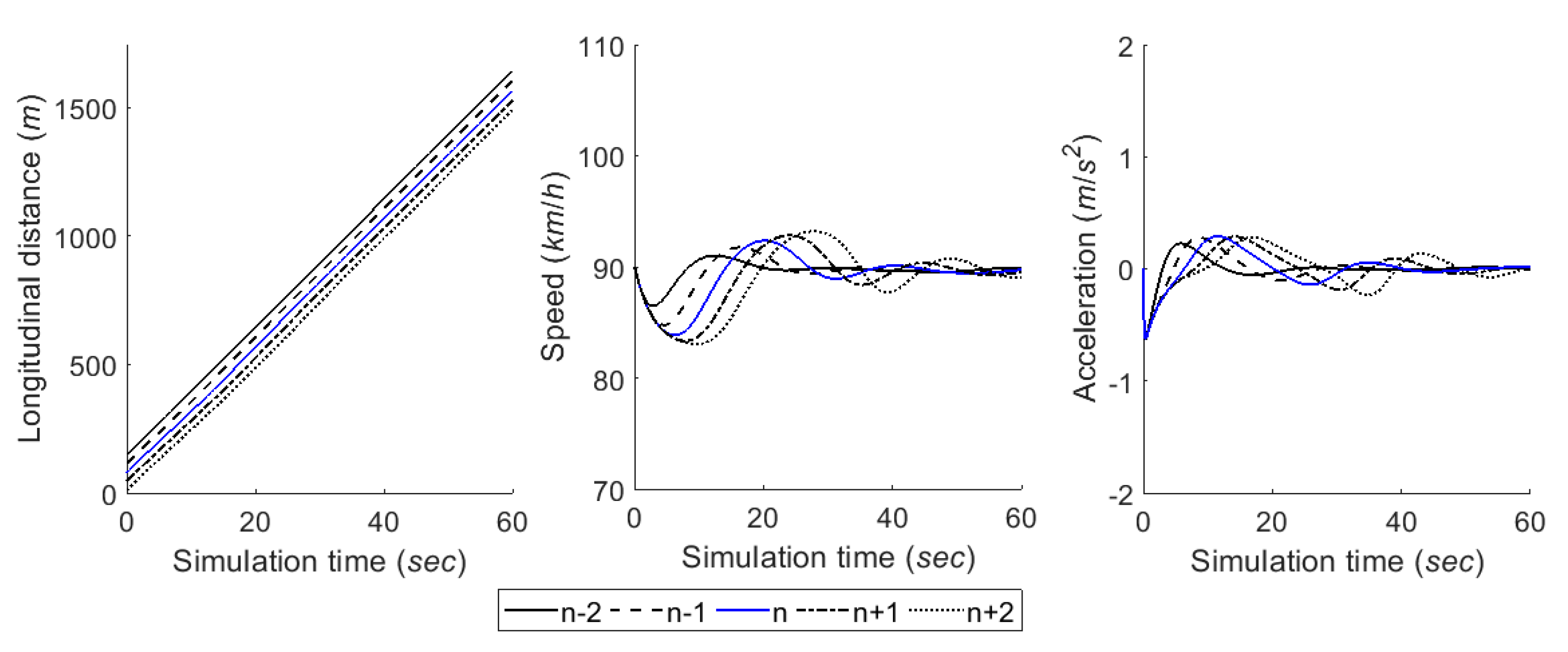

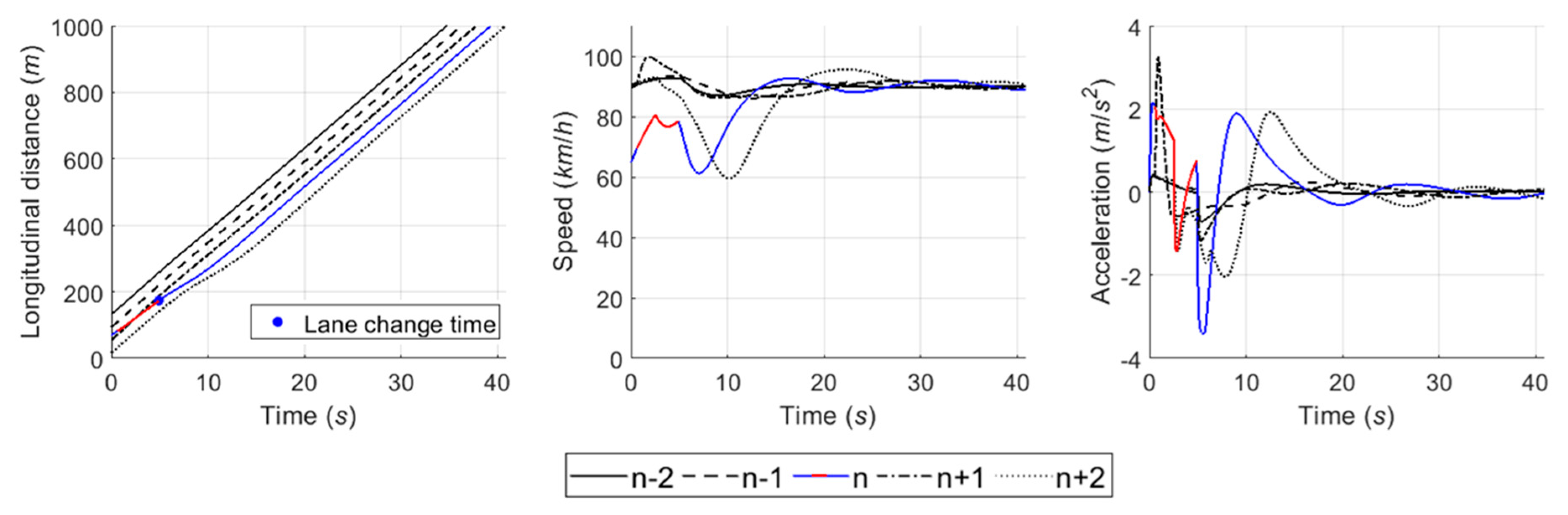

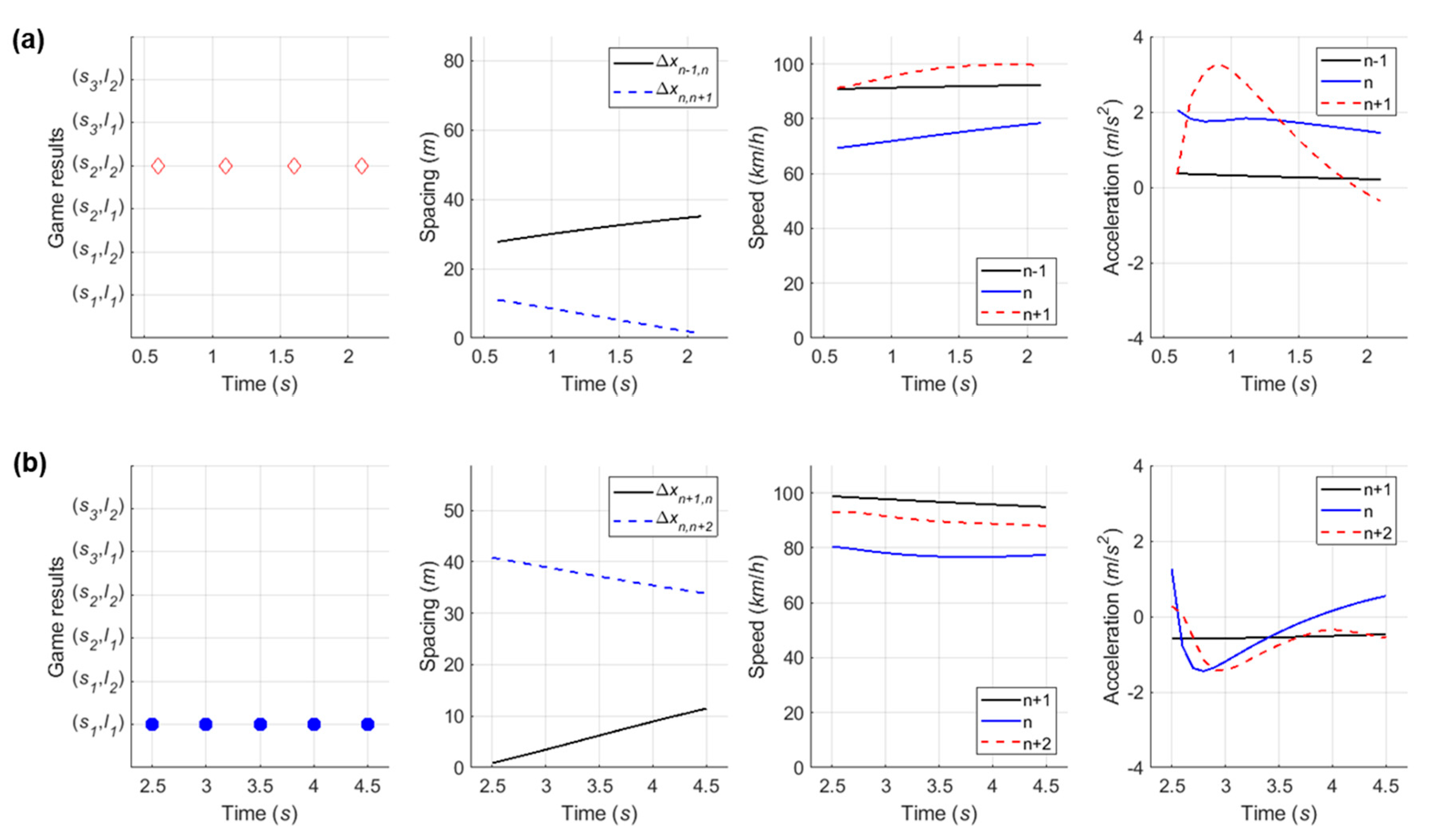

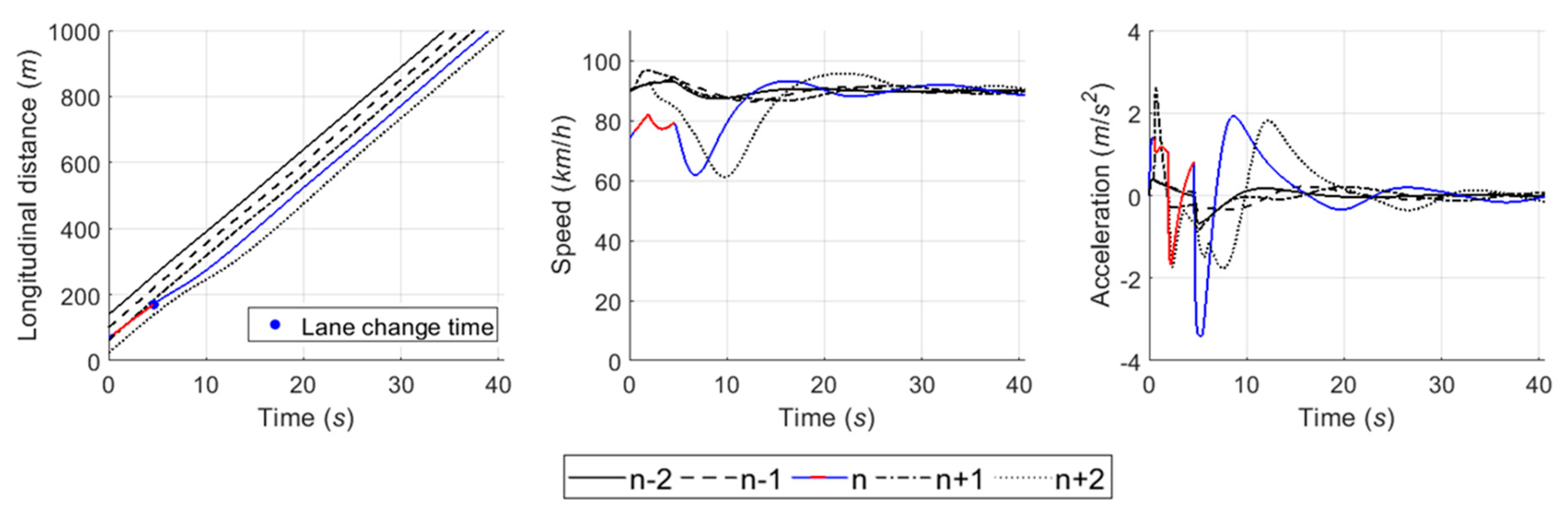

6.4.1. Case 1: Cooperative Merging Scenario Using an Adjacent Gap

6.4.2. Case 2: Cooperative Merging Scenario Using a Backward Gap

6.4.3. Case 3: Cooperative Merging Scenario Using a Forward Gap

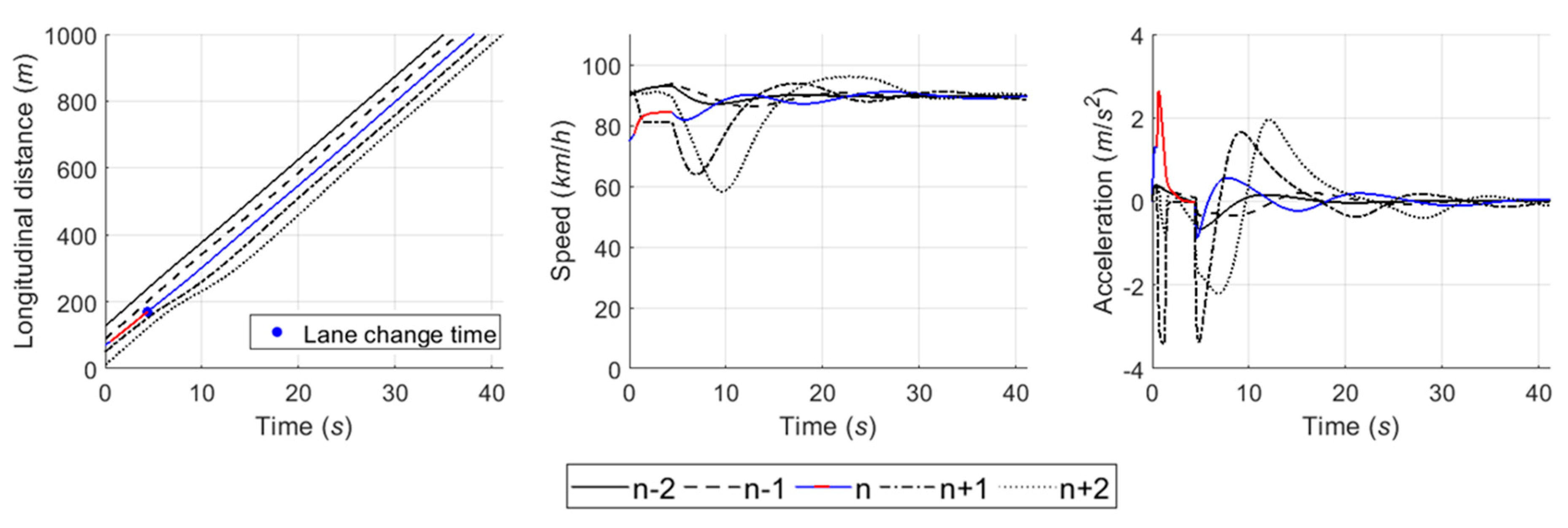

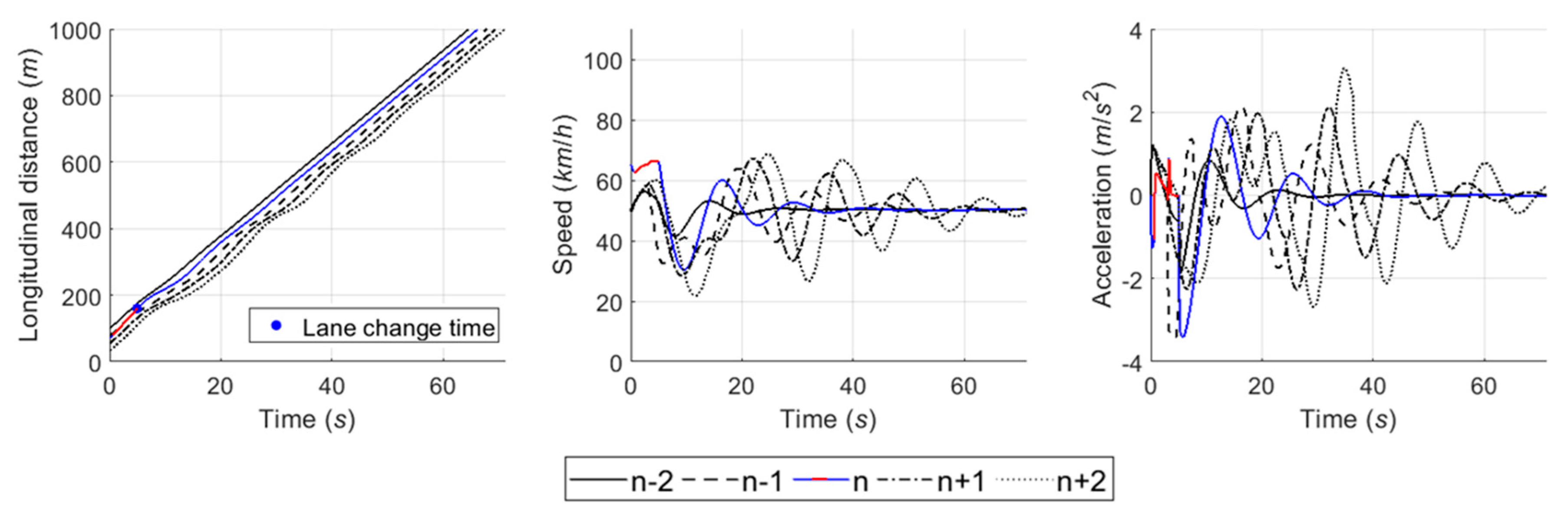

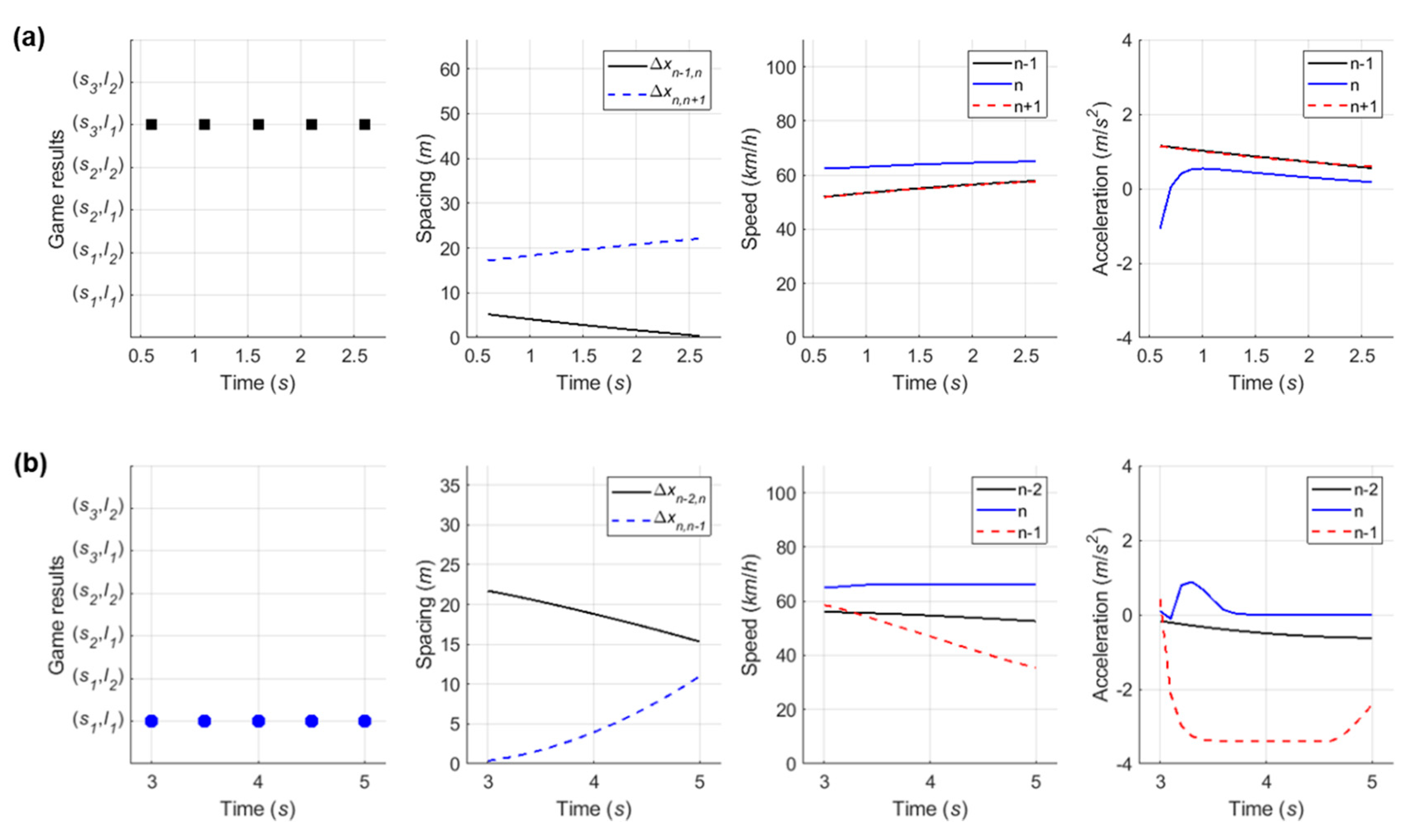

6.4.4. Case 4: Competitive Merging Scenario Choosing an Adjacent Gap or a Backward Gap (1)

6.4.5. Case 5: Competitive Merging Scenario Choosing an Adjacent Gap or a Backward Gap (2)

7. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Rahman, M.; Chowdhury, M.; Xie, Y.; He, Y. Review of Microscopic Lane-Changing Models and Future Research Opportunities. IEEE Trans. Intell. Transp. Syst. 2013, 14, 1942–1956.

2. Cassidy, M.J.; Bertini, R. Some traffic features at freeway bottlenecks. Transp. Res. Part B Methodol. 1999, 33, 25–42.

3. Bertini, R.; Leal, M.T. Empirical Study of Traffic Features at a Freeway Lane Drop. J. Transp. Eng. 2005, 131, 397–407.

4. Laval, J.A.; Daganzo, C.F. Lane-changing in traffic streams. Transp. Res. Part B Methodol. 2006, 40, 251–264.

5. Coifman, B.; Mishalani, R.; Wang, C.; Krishnamurthy, S. Impact of Lane-Change Maneuvers on Congested Freeway Segment Delays: Pilot Study. Transp. Res. Rec. J. Transp. Res. Board 2006, 1965, 152–159.

6. Ahn, S.; Cassidy, M.J. Freeway Traffic Oscillations and Vehicle Lane-Change Maneuvers. In Transportation and Traffic Theory 2007; Allsop, R.E., Bell, M.G.H., Heydecker, B., Eds.; Elsevier: Amsterdam, The Netherlands, 2007; pp. 691–710.

7. Pan, T.; Lam, W.; Sumalee, A.; Zhong, R. Modeling the impacts of mandatory and discretionary lane-changing maneuvers. Transp. Res. Part C Emerg. Technol. 2016, 68, 403–424.

8. Li, X.; Sun, J.-Q. Studies of Vehicle Lane-Changing Dynamics and Its Effect on Traffic Efficiency, Safety and Environmental Impact. Phys. A Stat. Mech. Its Appl. 2017, 467, 41–58.

9. Liu, H.X.; Xin, W.; Adam, Z.M.; Ban, J.X. A game theoretical approach for modeling merging and yielding behavior at freeway on-ramp section. In Transportation and Traffic Theory 2007; Allsop, R.E., Bell, M.G.H., Heydecker, B., Eds.; Elsevier: Amsterdam, The Netherlands, 2007; pp. 196–211.

10. Moridpour, S.; Sarvi, M.; Rose, G. Lane changing models: A critical review. Transp. Lett. 2010, 2, 157–173.

11. Kesting, A.; Treiber, M.; Helbing, D. General Lane-Changing Model MOBIL for Car-Following Models. Transp. Res. Rec. J. Transp. Res. Board 2007, 1999, 86–94.

12. Kang, K.; Rakha, H.A. Game Theoretical Approach to Model Decision Making for Merging Maneuvers at Freeway On-Ramps. Transp. Res. Rec. J. Transp. Res. Board 2017, 2623, 19–28.

13. Kang, K.; Rakha, H.A. Modeling Driver Merging Behavior: A Repeated Game Theoretical Approach. Transp. Res. Rec. J. Transp. Res. Board 2018, 2672, 144–153.

14. FHWA. Fact Sheet: Next Generation Simulation US101 Dataset, FHWA-HRT-07-030. Available online: http://www.fhwa.dot.gov/publications/research/operations/07030/ (accessed on 25 January 2016).

15. FHWA. Next Generation Simulation: US101 Freeway Dataset. Available online: http://ops.fhwa.dot.gov/trafficanalysistools/ngsim.htm (accessed on 25 January 2016).

16. Gipps, P. A model for the structure of lane-changing decisions. Transp. Res. Part B Methodol. 1986, 20, 403–414.

17. Toledo, T.; Koutsopoulos, H.N.; Ben-Akiva, M.E. Modeling Integrated Lane-Changing Behavior. Transp. Res. Rec. J. Transp. Res. Board 2003, 1857, 30–38.

18. Halati, A.; Lieu, H.; Walker, S. CORSIM—Corridor traffic simulation model. In Proceedings of the Traffic Congestion and Traffic Safety in the 21st Century: Challenges, Innovations, and Opportunities, Chicago, IL, USA, 8–10 June 1997; American Society of Civil Engineers: New York, NY, USA, 1997; pp. 570–576.

19. FHWA. CORSIM User Manual, Version 1.04; U.S. Department of Transportation: McLean, VA, USA, 1998.

20. Myerson, R.B. Game Theory: Analysis of Conflict; Harvard University Press: Cambridge, MA, USA, 1997.

21. Ahmed, K.I. Modeling Drivers’ Acceleration and Lane-Changing Behavior. Ph.D. Thesis, Dept. Civil and Environmental Eng., Massachusetts Institute of Technology, Cambridge, MA, USA, 1999.

22. Toledo, T.; Koutsopoulos, H.N.; Ben-Akiva, M. Integrated driving behavior modeling. Transp. Res. Part C Emerg. Technol. 2007, 15, 96–112.

23. Ma, X. Toward an integrated car-following and lane-changing model based on neural-fuzzy approach. In Proceedings of the Helsinki Summer Workshop, Espoo, Finland, 6–13 November 2004.

24. Hunt, J.; Lyons, G. Modelling dual carriageway lane changing using neural networks. Transp. Res. Part C Emerg. Technol. 1994, 2, 231–245.

25. Kita, H. A merging–giveway interaction model of cars in a merging section: A game theoretic analysis. Transp. Res. Part A Policy Pr. 1999, 33, 305–312.

26. Kondyli, A.; Elefteriadou, L. Driver Behavior at Freeway-Ramp Merging Areas. Transp. Res. Rec. J. Transp. Res. Board 2009, 2124, 157–166.

27. Wan, X.; Jin, P.J.; Zheng, L.; Cheng, Y.; Ran, B. Speed Synchronization Process of Merging Vehicles from the Entrance Ramp. Transp. Res. Rec. J. Transp. Res. Board 2013, 2391, 11–21.

28. Kim, C.; Langari, R. Game theory based autonomous vehicles operation. Int. J. Veh. Des. 2014, 65, 360.

29. Talebpour, A.; Mahmassani, H.S.; Hamdar, S.H. Modeling Lane-Changing Behavior in a Connected Environment: A Game Theory Approach. Transp. Res. Procedia 2015, 7, 420–440.

30. Harsanyi, J.C. Games with Incomplete Information Played by “Bayesian” Players, I–III Part I. The Basic Model. Manag. Sci. 1967, 14, 159–182.

31. Yu, H.; Tseng, H.E.; Langari, R. A human-like game theory-based controller for automatic lane changing. Transp. Res. Part C Emerg. Technol. 2018, 88, 140–158.

32. Nash, J. Non-Cooperative Games. Ann. Math. 1951, 54, 286.

33. Kondyli, A.; Elefteriadou, L. Driver behavior at freeway-ramp merging areas based on instrumented vehicle observations. Transp. Lett. 2012, 4, 129–142.

34. Wang, Z.; Wu, G.; Barth, M. Distributed Consensus-Based Cooperative Highway On-Ramp Merging Using V2X Communications. In Proceedings of the WCX: SAE World Congress Experience, Detroit, MI, USA, 3 April 2018.

35. Lee, S.E.; Olsen, E.C.; Wierwille, W.W. A Comprehensive Examination of Naturalistic Lane-Changes; American Psychological Association (APA): Washington, DC, USA, 2013.

36. Brackstone, M.; McDonald, M.; Sultan, B. Dynamic Behavioral Data Collection Using an Instrumented Vehicle. Transp. Res. Rec. J. Transp. Res. Board 1999, 1689, 9–16.

37. Kusano, K.D.; Gabler, H. Method for Estimating Time to Collision at Braking in Real-World, Lead Vehicle Stopped Rear-End Crashes for Use in Pre-Crash System Design. SAE Int. J. Passeng. Cars-Mech. Syst. 2011, 4, 435–443.

38. Vogel, K. A comparison of headway and time to collision as safety indicators. Accid. Anal. Prev. 2003, 35, 427–433.

39. National Safety Council: Maintaining a Safe Following Distance While Driving. Available online: https://www.nsc.org/Portals/0/Documents/TeenDrivingDocuments/DriveItHome/Lesson48-English.pdf (accessed on 11 December 2018).

40. Marczak, F.; Daamen, W.; Buisson, C. Key Variables of Merging Behaviour: Empirical Comparison between Two Sites and Assessment of Gap Acceptance Theory. Procedia-Soc. Behav. Sci. 2013, 80, 678–697.

41. Hwang, S.Y.; Park, C.H. Modeling of the Gap Acceptance Behavior at a Merging Section of Urban Freeway. In Proceedings of the 2005 Eastern Asia Society for Transportation Studies, Bangkok, Thailand, 21–24 September 2005; pp. 1641–1656.

42. Rakha, H.A.; Pasumarthy, P.; Adjerid, S. A simplified behavioral vehicle longitudinal motion model. Transp. Lett. 2009, 1, 95–110.

43. Sangster, J.D.; Rakha, H.A. Enhancing and Calibrating the Rakha-Pasumarthy-Adjerid Car-Following Model using Naturalistic Driving Data. Int. J. Transp. Sci. Technol. 2014, 3, 229–247.

44. Van Aerde, M. Single Regime Speed-Flow-Density Relationship for Congested and Uncongested Highways. In Proceedings of the 74th Annual Meeting of the Transportation Research Board, Washington, DC, USA, 27 January 1995.

45. Van Aerde, M.; Rakha, H. Multivariate calibration of single regime speed-flow-density relationships [road traffic management]. In Proceedings of the Pacific Rim TransTech Conference. 1995 Vehicle Navigation and Information Systems Conference Proceedings. 6th International VNIS. A Ride into the Future, Seattle, WA, USA, 30 July–2 August 1995.

46. Chatterjee, B. An optimization formulation to compute Nash equilibrium in finite games. In Proceedings of the 2009 International Conference on Methods and Models in Computer Science (ICM2CS), Delhi, India, 14–15 December 2009.

47. Bonabeau, E. Agent-based modeling: Methods and techniques for simulating human systems. Proc. Natl. Acad. Sci. USA 2002, 99, 7280–7287.

48. Macal, C.; North, M.J. Tutorial on Agent-Based Modeling and Simulation PART 2: How to Model with Agents. In Proceedings of the 2006 Winter Simulation Conference, Monterey, CA, USA, 3–6 December 2006.

49. Zheng, H.; Son, Y.; Chiu, Y.; Head, L.; Feng, Y.; Xi, H.; Kim, S.; Hickman, M. A Primer for Agent-Based Simulation and Modeling in Transportation Applications (FHWA-HRT-13-054); Federal Highway Administration: McLean, VA, USA, 2013.

50. Elliott, E.; Kiel, D.P. Exploring cooperation and competition using agent-based modeling. Proc. Natl. Acad. Sci. USA 2002, 99, 7193–7194.

51. Ljubovic, V. Traffic simulation using agent-based models. In Proceedings of the 2009 XXII International Symposium on Information, Communication and Automation Technologies, Bosnia, Serbia, 29–31 October 2009.

52. Law, A.M.; Kelton, W.D. Simulation Modelling and Analysis, 2nd ed.; McGraw-Hill: New York, NY, USA, 1991.

53. Xiang, X.; Kennedy, R.; Madey, G.; Cabaniss, S. Verification and Validation of Agent-Based Scientific Simulation Models. In Proceedings of the Agent-directed Simulation Conference, San Diego, CA, USA, April 2005; pp. 47–55.

54. Balci, O. Verification, Validation, and Testing. In Handbook of Simulation; Wiley: New York, NY, USA, 2007; pp. 335–393.

| Player & Actions | | Driver of LV | |
|---|---|---|---|
| Driver of SV | Change [] 1 | | |
| | Wait [] | | |
| | Overtake [] | | |
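
The stage game in the table above is a two-player game in normal form: the driver of the SV chooses among change, wait, and overtake, and the driver of the LV chooses between two actions. A minimal sketch of how pure-strategy Nash equilibria of such a game can be enumerated is given below. The LV action names (`yield`, `block`) and every payoff value are hypothetical placeholders chosen for illustration; they are not the calibrated payoffs of the paper, and the paper's own solution method may differ.

```python
from itertools import product

SV_ACTIONS = ("change", "wait", "overtake")
LV_ACTIONS = ("yield", "block")  # assumed names for the two LV actions

# Hypothetical payoffs: (SV payoff, LV payoff) for each action pair.
PAYOFFS = {
    ("change", "yield"): (4.0, 2.0),
    ("change", "block"): (-3.0, -2.0),
    ("wait", "yield"): (1.0, 0.0),
    ("wait", "block"): (2.0, 3.0),
    ("overtake", "yield"): (0.0, 1.0),
    ("overtake", "block"): (1.0, 1.5),
}


def pure_nash_equilibria(payoffs, sv_actions, lv_actions):
    """Return every action pair from which neither driver gains by deviating alone."""
    equilibria = []
    for sv, lv in product(sv_actions, lv_actions):
        sv_payoff, lv_payoff = payoffs[(sv, lv)]
        # SV best response: no alternative SV action does better against lv.
        sv_best = all(payoffs[(alt, lv)][0] <= sv_payoff for alt in sv_actions)
        # LV best response: no alternative LV action does better against sv.
        lv_best = all(payoffs[(sv, alt)][1] <= lv_payoff for alt in lv_actions)
        if sv_best and lv_best:
            equilibria.append((sv, lv))
    return equilibria


if __name__ == "__main__":
    print(pure_nash_equilibria(PAYOFFS, SV_ACTIONS, LV_ACTIONS))
```

With these placeholder payoffs the game has two pure-strategy equilibria, (change, yield) and (wait, block), which illustrates why a single one-shot game may not determine the outcome of a merging interaction on its own.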

| Payoff Function | Parameters | One-Shot Game Model | Repeated Game Model 1 | Repeated Game Model 2 | Repeated Game Model 3 | Repeated Game Model 4 | Repeated Game Model 5 | Repeated Game Model 6 |
|---|---|---|---|---|---|---|---|---|
| | | 9.64 | 5.10 | 2.88 | 6.69 | −1.77 | 7.08 | 7.11 |
| | | 23.51 | 74.83 | 48.38 | 96.45 | 9.20 | 27.34 | 8.38 |
| | | 32.69 | 59.51 | 69.45 | 1.00 | 5.16 | 97.08 | 2.75 |
| | | 9.43 | 8.83 | 3.58 | 7.87 | 8.64 | 7.27 | −6.26 |
| | | 87.57 | 77.60 | 44.40 | 86.30 | 3.11 | 50.13 | 4.25 |
| | | 10.98 | 43.84 | 1.80 | 71.19 | 5.73 | 84.75 | 7.34 |
| | | 0.63 | −9.78 | −7.49 | −6.91 | −8.88 | −6.65 | −8.13 |
| | | 3.35 | 26.60 | 10.68 | 62.49 | 3.18 | 31.94 | 1.75 |
| | | −7.88 | −8.50 | −3.42 | −6.19 | 9.73 | −8.98 | 5.56 |
| | | 42.64 | 20.75 | 5.21 | 65.72 | 6.22 | 19.43 | 7.16 |
| | | −0.66 | 6.07 | −9.38 | −6.21 | −2.84 | −5.18 | 6.41 |
| | | 67.24 | 48.05 | 78.92 | 94.59 | 11.19 | 25.08 | 7.53 |
| | | −0.53 | −3.10 | −5.39 | −0.44 | 2.75 | −3.69 | 8.35 |
| | | 16.91 | 52.79 | 95.22 | 59.86 | 2.21 | 30.06 | 4.79 |
| | | 9.93 | 3.78 | 6.96 | 9.80 | −1.99 | 7.97 | −3.75 |
| | | 13.30 | 17.29 | 6.64 | 25.06 | 6.88 | 5.86 | 10.22 |
| | | −1.26 | −8.39 | −6.24 | −5.83 | −7.03 | −8.90 | −8.36 |
| | | 3.70 | 0.29 | 19.40 | 23.84 | 10.20 | 18.49 | 1.89 |
| | | 5.78 | 7.64 | 8.05 | 8.74 | 5.52 | 8.25 | 0.27 |
| | | 89.18 | 57.76 | 58.65 | 78.06 | 2.76 | 82.45 | 4.12 |
| | | 7.73 | −4.36 | −4.36 | 0.63 | 0.34 | −8.66 | −5.95 |
| | | 57.97 | 6.64 | 55.26 | 14.12 | 7.43 | 38.74 | 7.61 |
| | | 3.88 | −4.02 | −6.99 | 6.38 | 9.39 | −0.82 | 3.68 |
| | | 55.87 | 96.95 | 98.01 | 1.12 | 4.35 | 46.49 | 9.22 |
| | | 4.26 | −9.75 | 1.08 | −8.01 | 6.78 | 1.53 | −4.85 |
| | | 27.87 | 26.74 | 22.93 | 74.89 | 2.20 | 86.19 | 7.83 |

| Models | Previous One-Shot Game Model (2018) | One-Shot Game Model | Repeated Game Model 1 | Repeated Game Model 2 | Repeated Game Model 3 | Repeated Game Model 4 | Repeated Game Model 5 | Repeated Game Model 6 |
|---|---|---|---|---|---|---|---|---|
| Rate factor | na | na | 0.6 | 0.8 | 1.0 | 1.2 | 1.4 | 1.6 |
| MAE | 0.2555 (74.45%) | 0.1241 (87.59%) | 0.1708 (82.92%) | 0.1606 (83.94%) | 0.1606 (83.94%) | 0.1372 (86.28%) | 0.1358 (86.42%) | 0.1460 (85.40%) |

| Models | Previous One-Shot Game Model (2018) | One-Shot Game Model | Repeated Game Model 1 | Repeated Game Model 2 | Repeated Game Model 3 | Repeated Game Model 4 | Repeated Game Model 5 | Repeated Game Model 6 |
|---|---|---|---|---|---|---|---|---|
| Rate factor | na | na | 0.6 | 0.8 | 1.0 | 1.2 | 1.4 | 1.6 |
| MAE | 0.2418 (75.82%) | 0.1197 (88.03%) | 0.1954 (80.46%) | 0.1758 (82.42%) | 0.1465 (85.35%) | 0.1368 (86.32%) | 0.1307 (86.94%) | 0.1355 (86.45%) |
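
In the two tables above, each percentage in parentheses equals one minus the reported MAE (for example, 1 − 0.2418 = 0.7582, i.e., 75.82%). The sketch below reproduces that relationship. It assumes, as an illustration only, that the per-observation error is 0 when the predicted action matches the observed action and 1 otherwise; the section does not state how the error term is defined, and the function names are hypothetical.

```python
def mean_absolute_error(predicted, observed):
    """Mean of 0/1 errors between predicted and observed actions (assumed definition)."""
    if len(predicted) != len(observed) or not predicted:
        raise ValueError("inputs must be non-empty and of equal length")
    errors = [0.0 if p == o else 1.0 for p, o in zip(predicted, observed)]
    return sum(errors) / len(errors)


def accuracy_percent(mae):
    """Convert an MAE in [0, 1] to the percentage reported in parentheses."""
    return (1.0 - mae) * 100.0


if __name__ == "__main__":
    # Hypothetical toy data: four observed decisions and four model predictions.
    observed = ["change", "wait", "wait", "overtake"]
    predicted = ["change", "wait", "change", "overtake"]
    mae = mean_absolute_error(predicted, observed)
    print(f"MAE = {mae:.4f}, accuracy = {accuracy_percent(mae):.2f}%")

    # Values taken from the table: previous one-shot model and Model 5.
    for table_mae in (0.2418, 0.1307):
        print(f"MAE = {table_mae:.4f} -> {accuracy_percent(table_mae):.2f}%")
```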

| Index | Scenarios | Gap Type Used for Merging | | | |
|---|---|---|---|---|---|
| 1 | Cooperative | Adjacent gap | 90 | 75 | 20.0 |
| 2 | Cooperative | Backward (lag) gap | 90 | 65 | 15.0 |
| 3 | Cooperative | Forward (lead) gap | 50 | 65 | 15.0 |
| 4 | Competitive | Adjacent gap or backward gap (Initial decision: non-cooperative) | 85 | 72 | 14.0 |
| 5 | Competitive | Adjacent gap or backward gap (Initial decision: cooperative) | 90 | 75 | 7.5 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Kang, K.; Rakha, H.A. A Repeated Game Freeway Lane Changing Model. Sensors 2020, 20, 1554. https://doi.org/10.3390/s20061554
