Energy-Efficient Depth-Based Opportunistic Routing with Q-Learning for Underwater Wireless Sensor Networks

Abstract

1. Introduction

- We introduce Q-learning into underwater opportunistic routing (OR) to combine the strengths of both. On the one hand, OR exploits the broadcast nature of the wireless medium for reliable data packet delivery. On the other hand, Q-learning achieves global optimization through single-step Q-value iteration, which overcomes the greedy strategy and local optimization of traditional underwater OR algorithms.

- In our proposal, the reward function of Q-learning jointly considers the void detection factor, residual energy, and depth information of nodes. This helps to detect and bypass void nodes proactively and avoids excessive energy consumption at nodes located in hot regions.

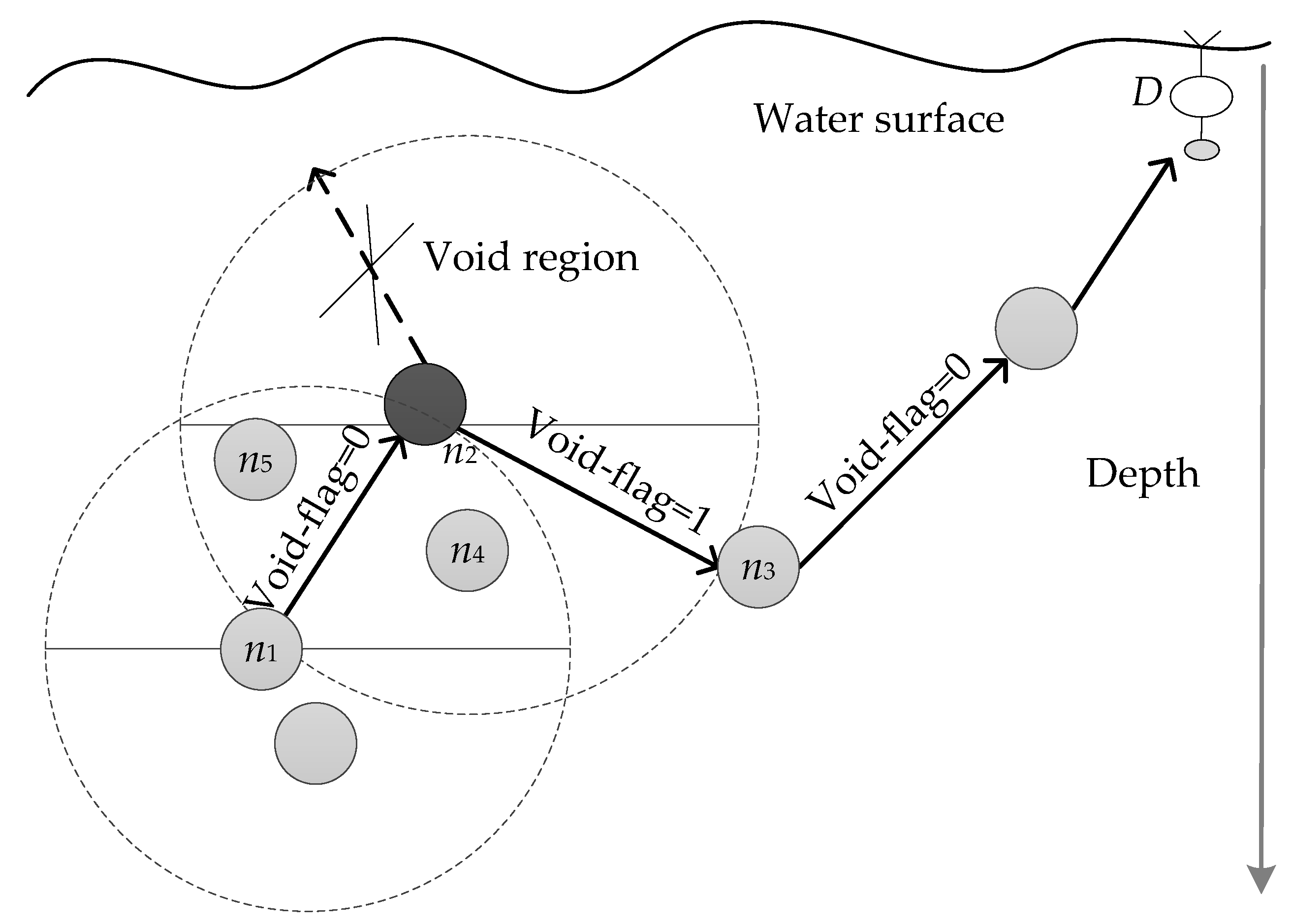

- We design a simple and scalable void recovery mode in the candidate set selection phase, which retransmits packets backward to recover those that encounter void nodes. The void recovery mode needs only local depth information, rather than network topology information, to route around void nodes, which further improves the packet delivery ratio, especially in sparse networks.

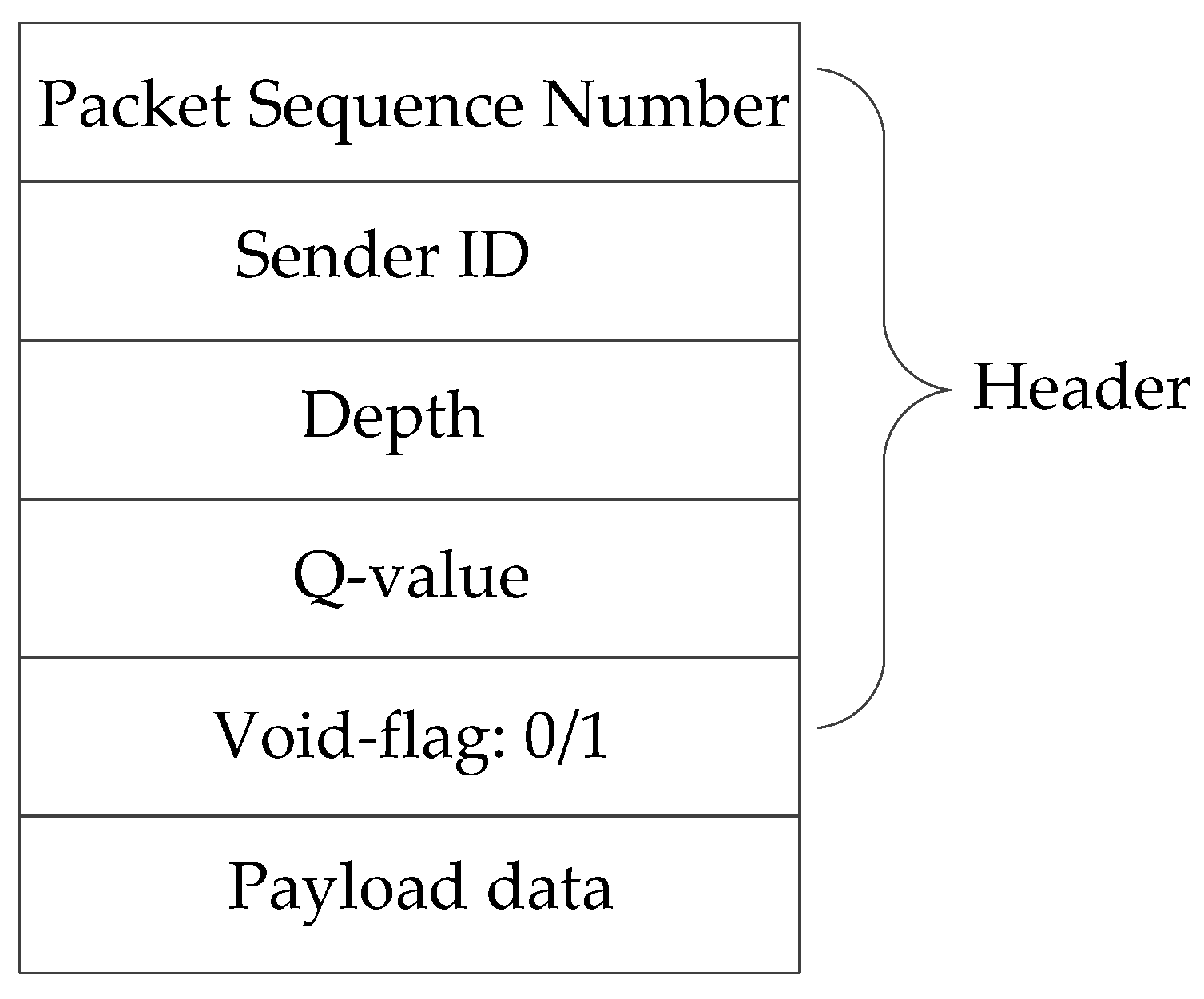

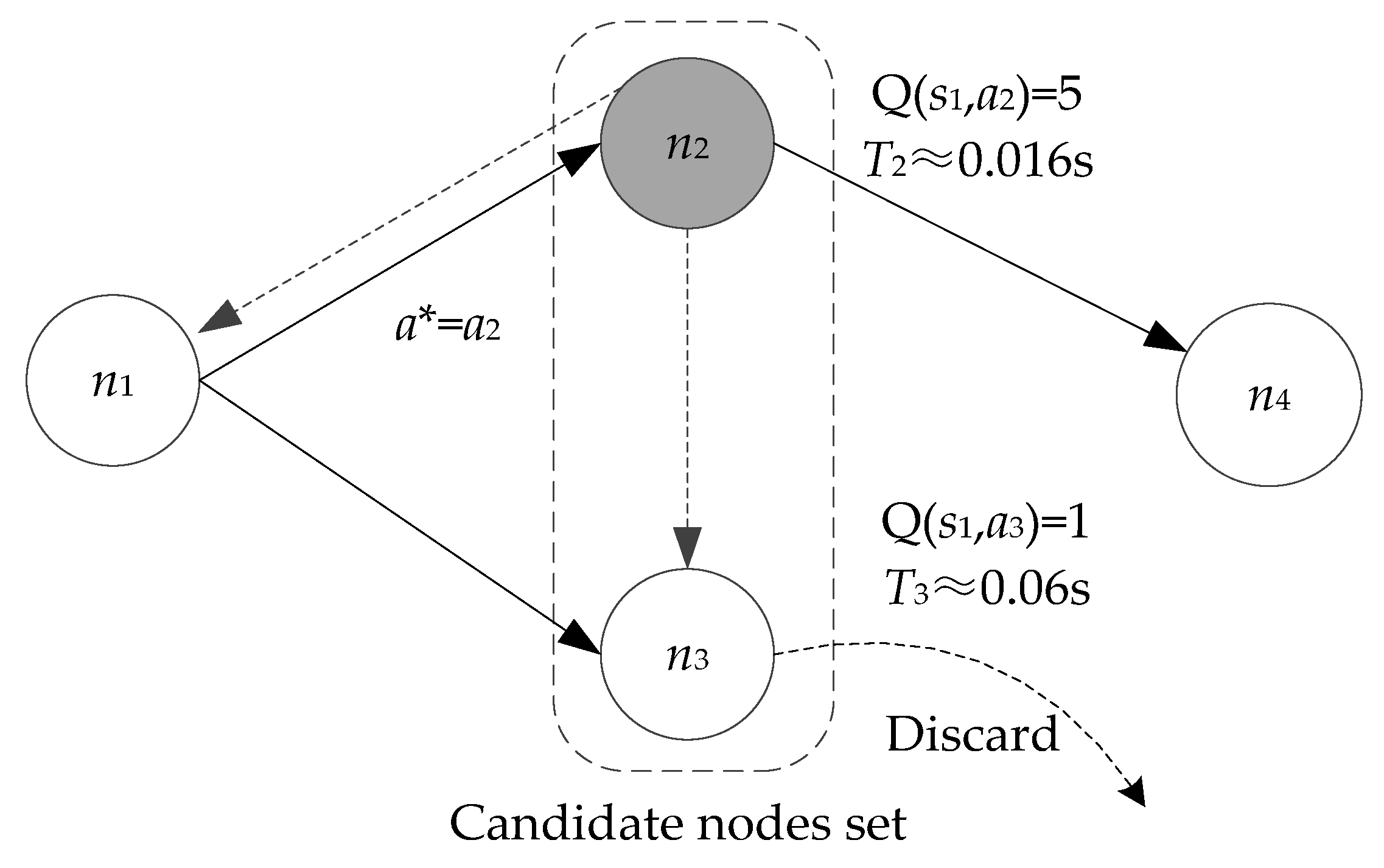

- We adopt a timer-based candidate set coordination approach to schedule packet forwarding, with a novel method that sets the holding time on the basis of the Q-value; this helps to reduce packet collisions and redundant transmissions. In addition, Q-values are shared only among one-hop neighbors, which further decreases overhead and energy cost.
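
As a rough illustration of the reward and holding-time ideas in the list above, the following Python sketch combines a reward built from the void detection, energy, and depth factors with a holding time derived from the Q-value. The function names (`reward`, `holding_time`, `coordinate`), the linear reward form, the weights, the `t_max` bound, and the linear mapping from Q-value to holding time are all assumptions made for illustration; they are not the paper's equations.

```python
def reward(void_factor, energy_factor, depth_factor,
           w_energy=0.5, w_depth=0.5, base_cost=-1.0):
    """Hypothetical reward: a fixed per-hop cost, minus a void penalty,
    plus weighted energy and depth terms (all factors assumed in [0, 1])."""
    return base_cost - void_factor + w_energy * energy_factor + w_depth * depth_factor


def holding_time(q_value, q_min, q_max, t_max=1.0):
    """Assumed linear mapping: the candidate with the largest Q-value waits
    0 s and the one with the smallest Q-value waits t_max seconds."""
    if q_max == q_min:  # a single candidate, or all candidates tie
        return 0.0
    return t_max * (q_max - q_value) / (q_max - q_min)


def coordinate(candidate_q, t_max=1.0):
    """Return the candidate whose timer expires first and the full schedule.
    Assumes every candidate overhears the first forwarder and then drops
    its own copy of the packet."""
    q_min, q_max = min(candidate_q.values()), max(candidate_q.values())
    schedule = {node: holding_time(q, q_min, q_max, t_max)
                for node, q in candidate_q.items()}
    forwarder = min(schedule, key=schedule.get)
    return forwarder, schedule


# Hypothetical Q-values that a sender holds for three one-hop neighbours.
forwarder, schedule = coordinate({"n2": -1.2, "n3": -0.4, "n4": -2.0})
print(forwarder, schedule)
```

Giving the best candidate the shortest timer means that, in the common case, only one node forwards and the others suppress their copies, which is how a timer-based scheme can cut collisions and redundant transmissions.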

2. Related Work

3. System Model

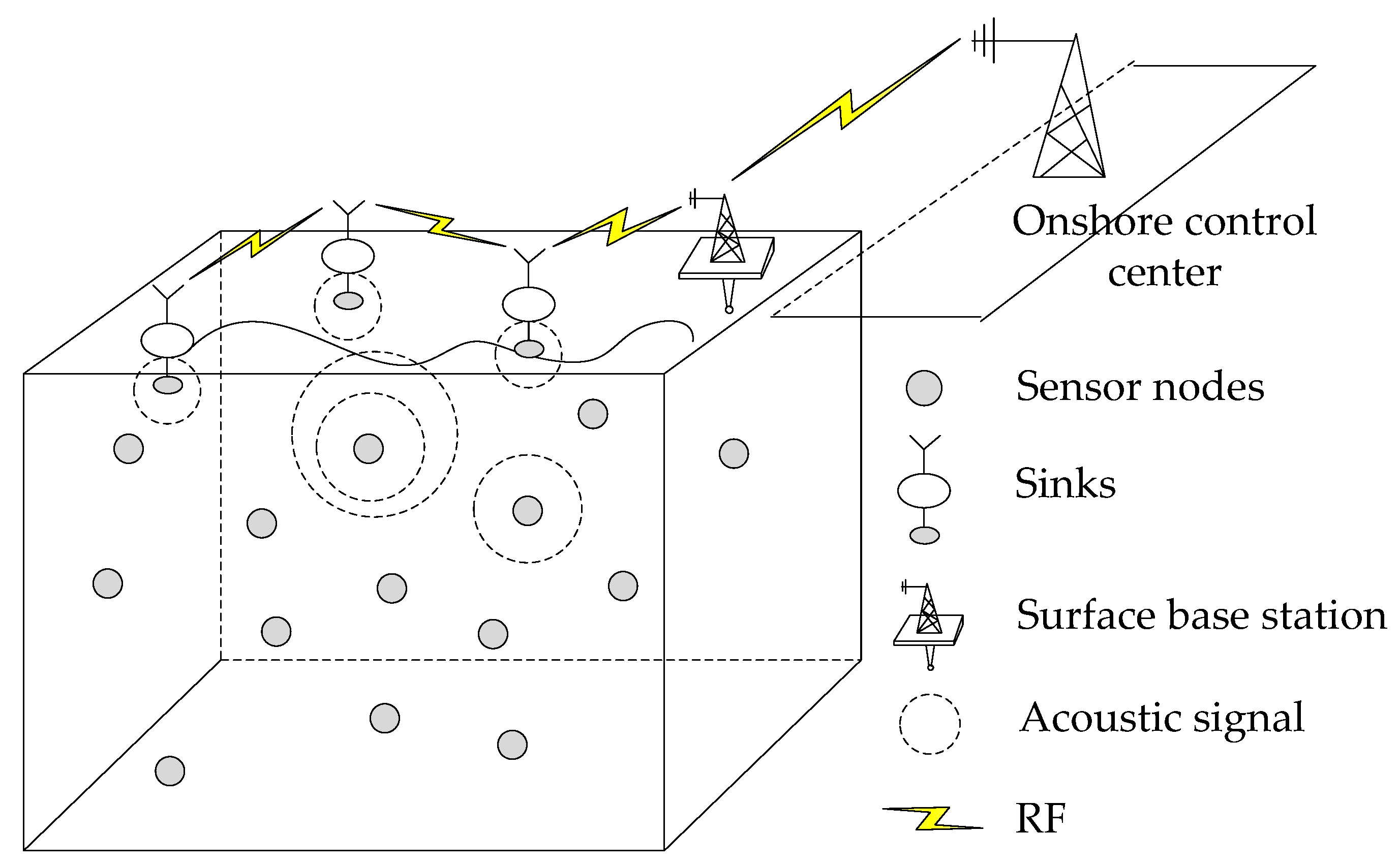

3.1. Network Architecture

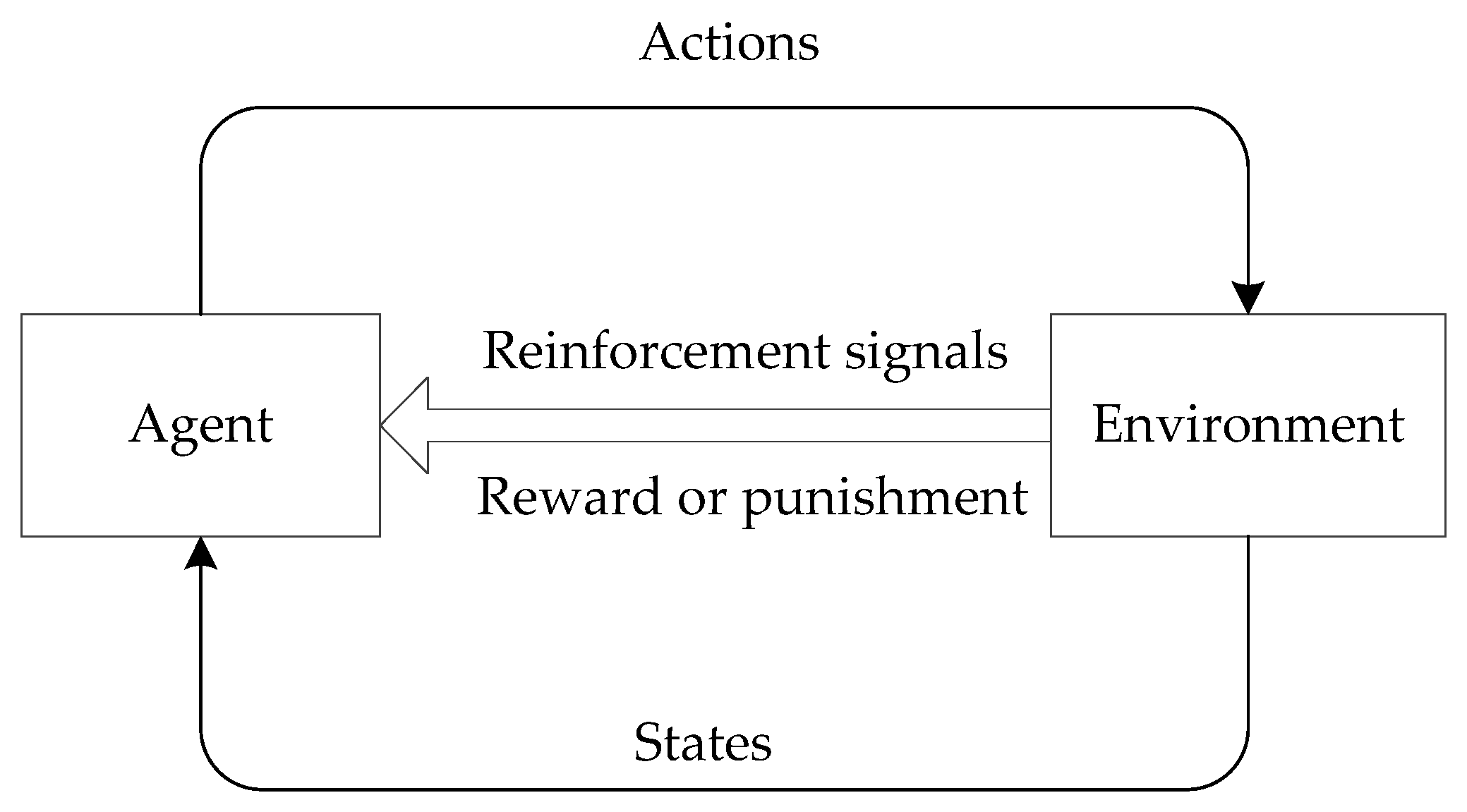

3.2. Q-Learning Model

3.2.1. Markov Decision Process (MDP) Model

3.2.2. The Basic Q-Learning Technique
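
For orientation, the standard one-step tabular Q-learning update can be sketched as follows. This is a generic illustration, not the paper's formulation: the function name `q_update`, the learning rate `alpha`, and the two-hop toy example are assumptions, while the discount factor of 0.9 matches the value listed in the simulation parameters.

```python
from collections import defaultdict


def q_update(q, state, action, reward, next_state, next_actions,
             alpha=0.5, gamma=0.9):
    """Apply Q(s,a) += alpha * (r + gamma * max_a' Q(s',a') - Q(s,a)).

    `q` maps (state, action) pairs to values; a terminal next state is
    represented by an empty `next_actions` list (future value 0)."""
    best_next = max((q[(next_state, a)] for a in next_actions), default=0.0)
    td_target = reward + gamma * best_next
    q[(state, action)] += alpha * (td_target - q[(state, action)])
    return q[(state, action)]


q = defaultdict(float)
# Hypothetical example: node n2 delivers to the sink, then n1 learns
# from n2's updated value when it forwards to n2.
q_update(q, "n2", "sink", reward=1.0, next_state="sink", next_actions=[])
q_update(q, "n1", "n2", reward=0.0, next_state="n2", next_actions=["sink"])
print(dict(q))
```

In a routing setting the state is typically the node currently holding the packet and the action is the choice of next hop, so value learned near the sink propagates backward one hop per update.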

4. EDORQ Algorithm

4.1. Overview of EDORQ

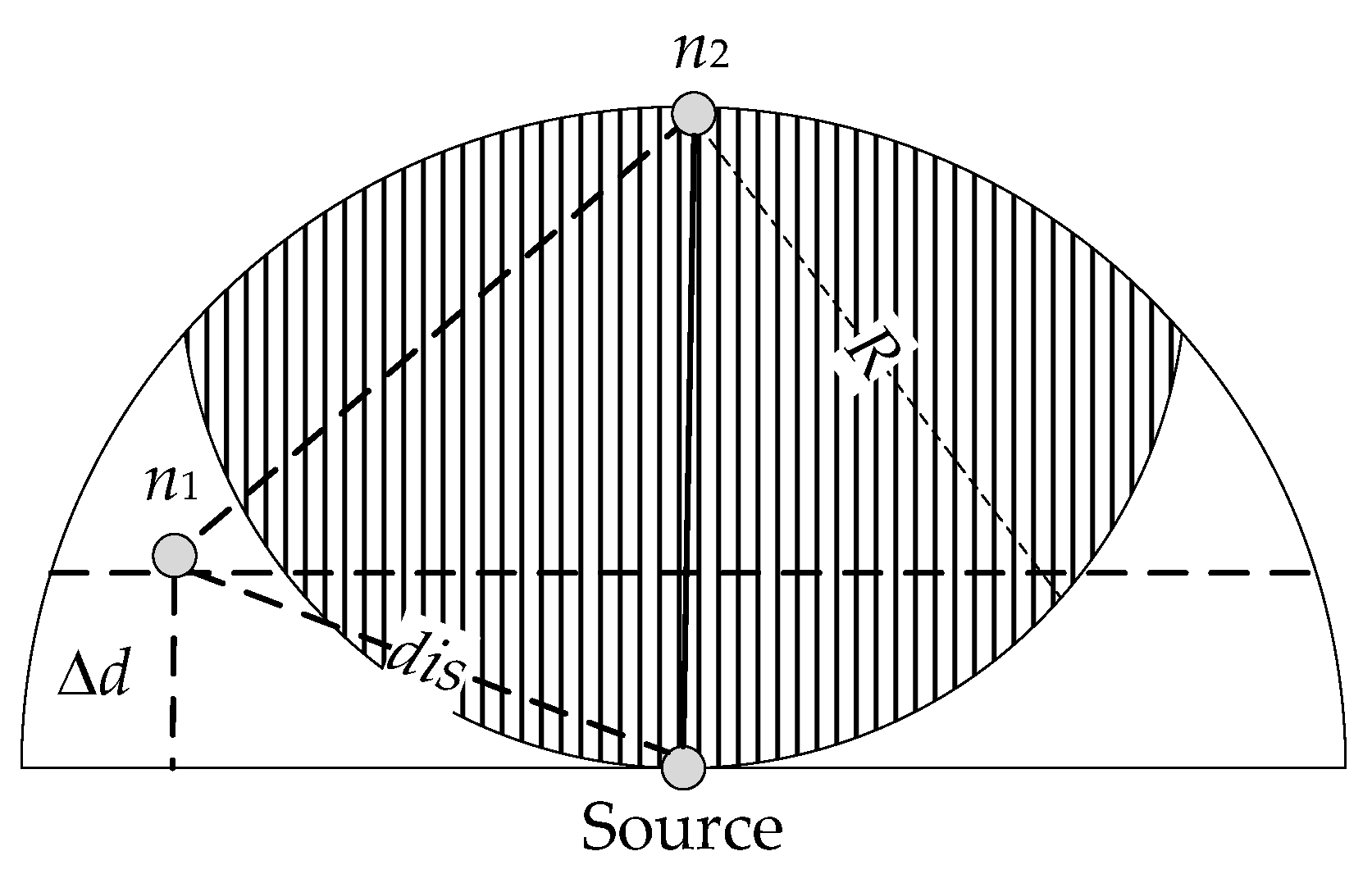

4.2. Void-Detection Based Candidate Set Selection

| Algorithm 1 Candidate Set Selection |
|---|
| Input: the packet p broadcast by ni |
| Output: CS(ni), the candidate set of ni for packet forwarding |

| Algorithm 2 Forwarding Mode Switch |
|---|
| Input: the packet p received by node ni |
| Output: greedy mode or void recovery mode |
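
To make the inputs and outputs of Algorithms 1 and 2 concrete, here is a minimal sketch under stated assumptions: sinks sit at the surface, so a neighbour is a forwarding candidate when it is shallower than the sender; a node with no shallower, non-void neighbour is treated as a void node and switches to void recovery, where the packet is passed backward to deeper neighbours. The `Neighbor` record, its `is_void` flag, and both function names are hypothetical, and the actual algorithm bodies may differ.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Neighbor:
    node_id: str
    depth: float           # metres below the surface
    is_void: bool = False  # hypothetical flag learned from void detection


def forwarding_mode(own_depth, neighbors):
    """Greedy mode if some non-void neighbour is shallower, else void recovery."""
    if any(n.depth < own_depth and not n.is_void for n in neighbors):
        return "greedy"
    return "void_recovery"


def candidate_set(own_depth, neighbors):
    """Greedy mode: shallower, non-void neighbours.
    Void recovery: deeper neighbours, so the packet is sent backward."""
    if forwarding_mode(own_depth, neighbors) == "greedy":
        return [n for n in neighbors if n.depth < own_depth and not n.is_void]
    return [n for n in neighbors if n.depth > own_depth]


neighbors = [Neighbor("n2", 180.0), Neighbor("n3", 150.0, is_void=True),
             Neighbor("n4", 260.0)]
print([n.node_id for n in candidate_set(200.0, neighbors)])
print(forwarding_mode(200.0, [Neighbor("n4", 260.0)]))
```

Both functions use only the sender's depth and the depths of its one-hop neighbours, which reflects the point that void recovery needs local depth rather than topology information.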

4.3. Q-Learning Based Candidate Set Coordination

| Algorithm 3 Candidate Set Coordination |
|---|
| Input: CS(ni), the candidate set of node ni |
| Output: broadcast or drop the packet |

4.4. Summary

5. Simulation Results and Analysis

5.1. Simulation Setup

5.2. Simulation Metrics

5.3. Simulation Results

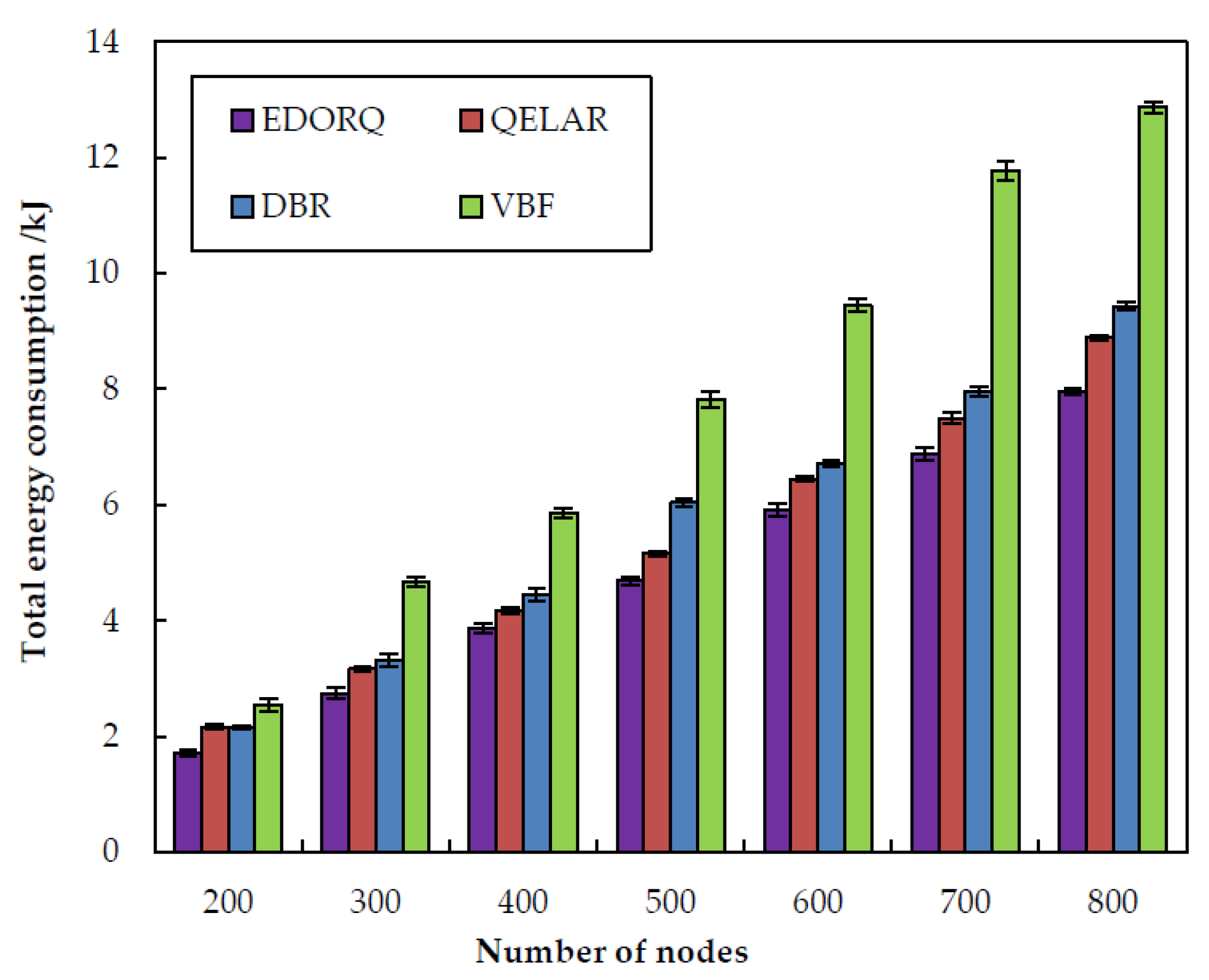

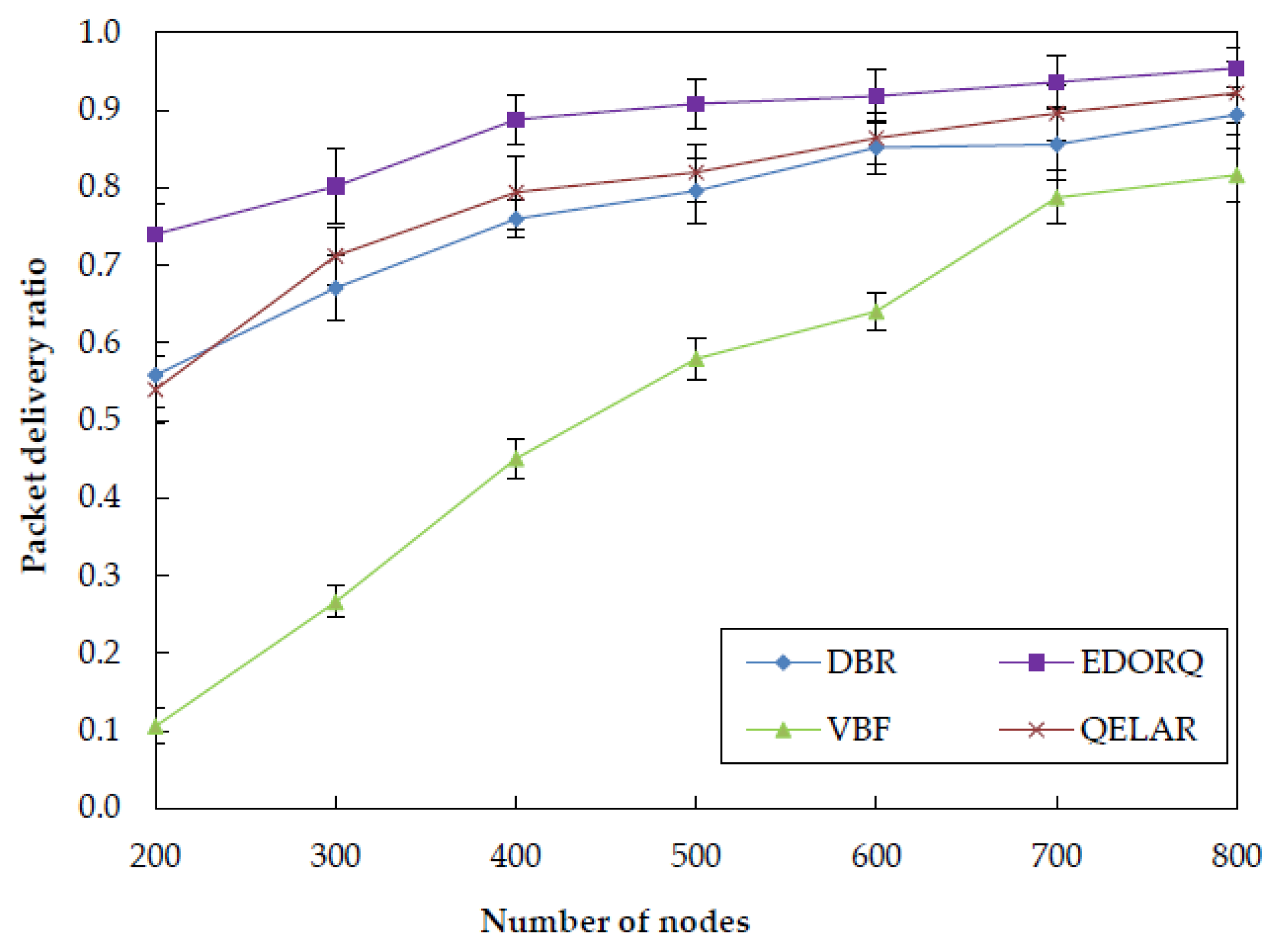

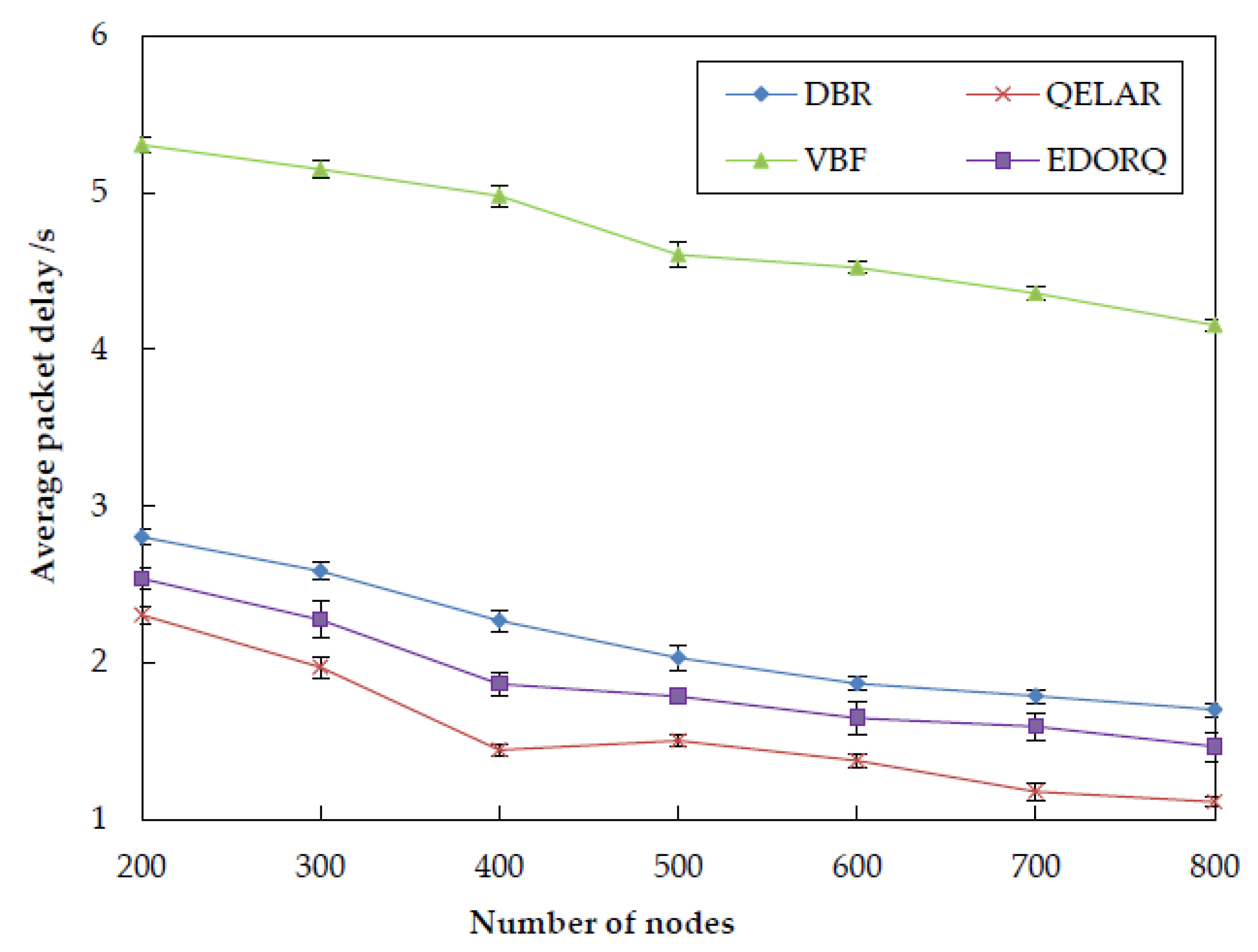

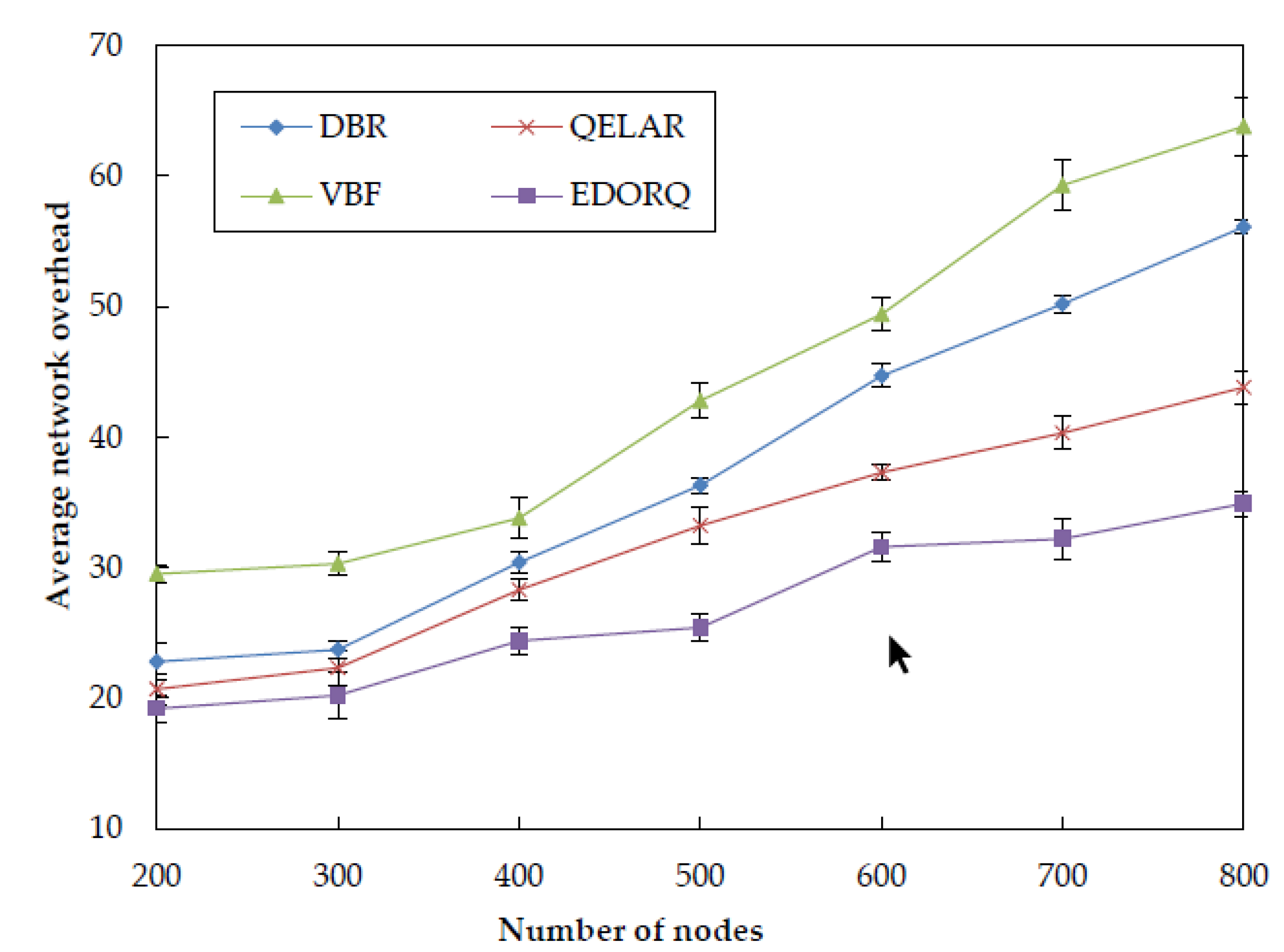

5.3.1. Performance Comparison

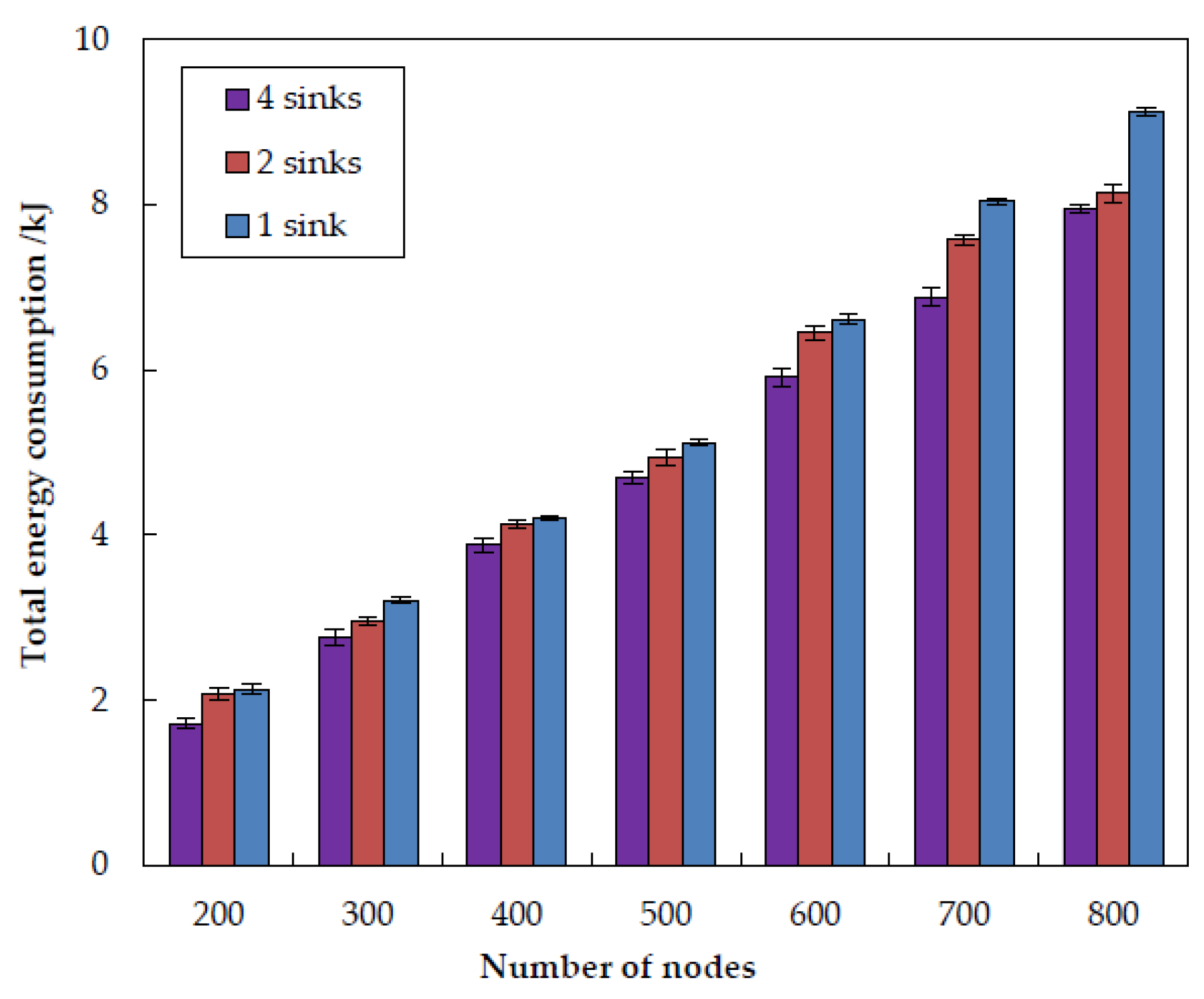

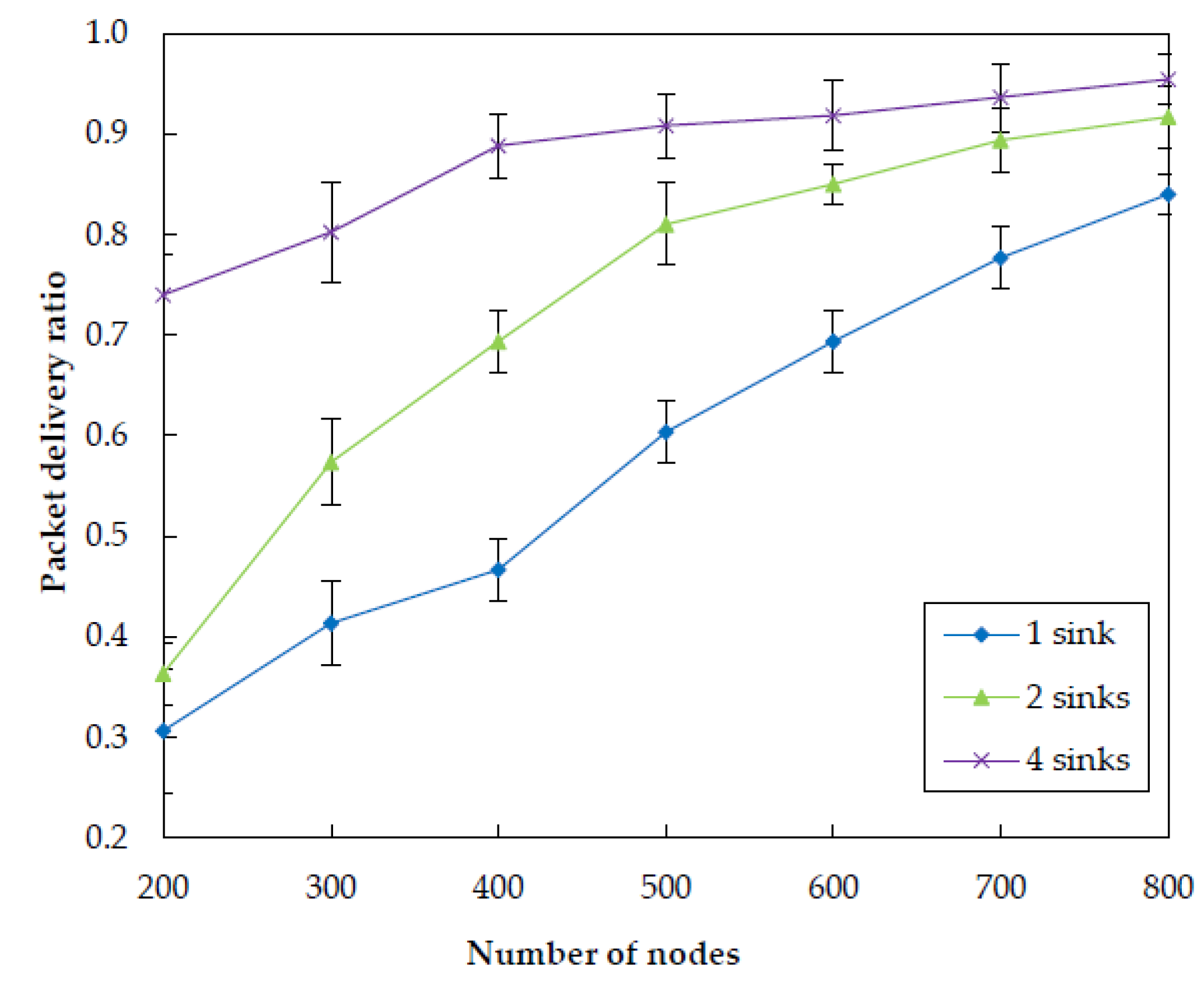

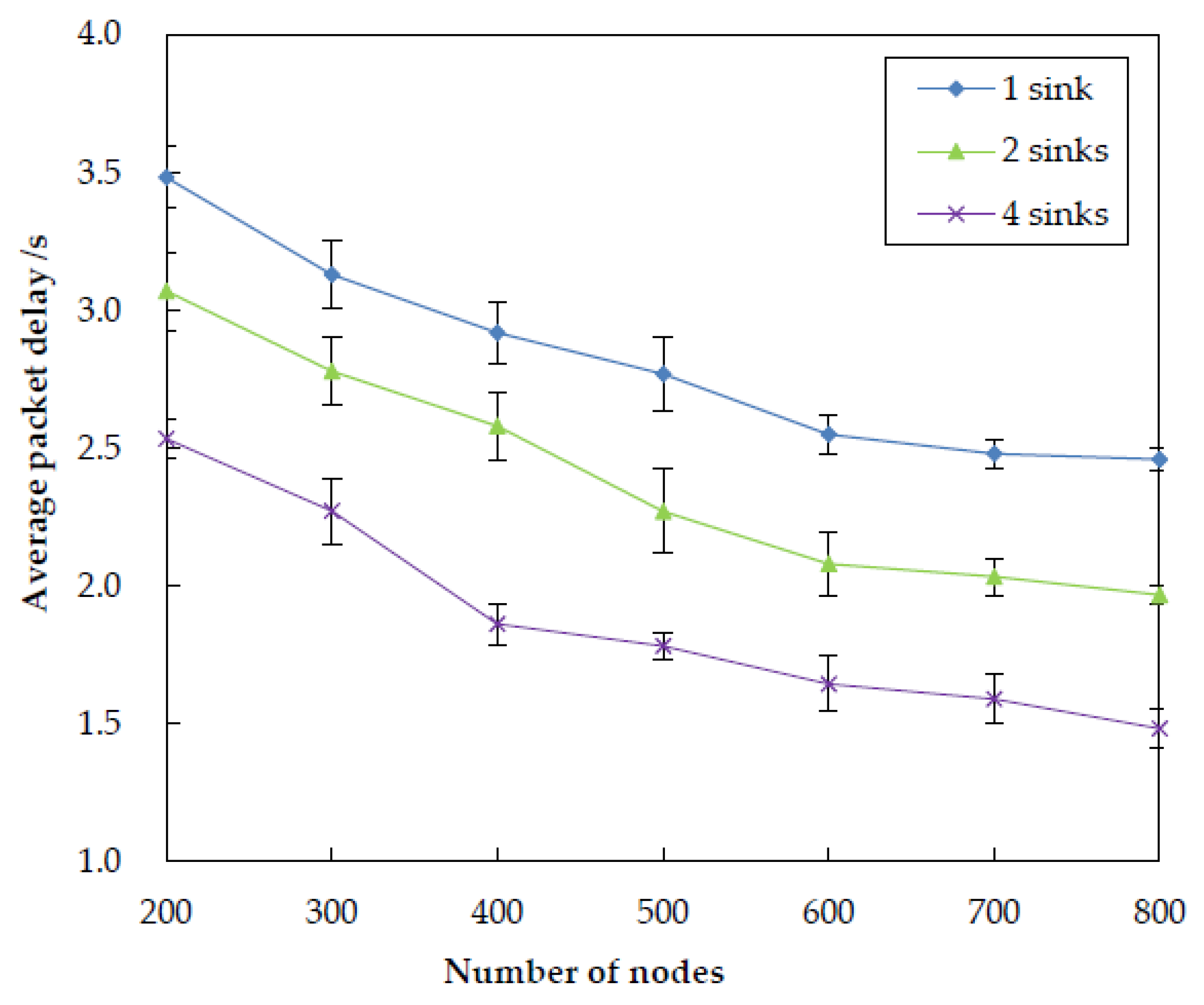

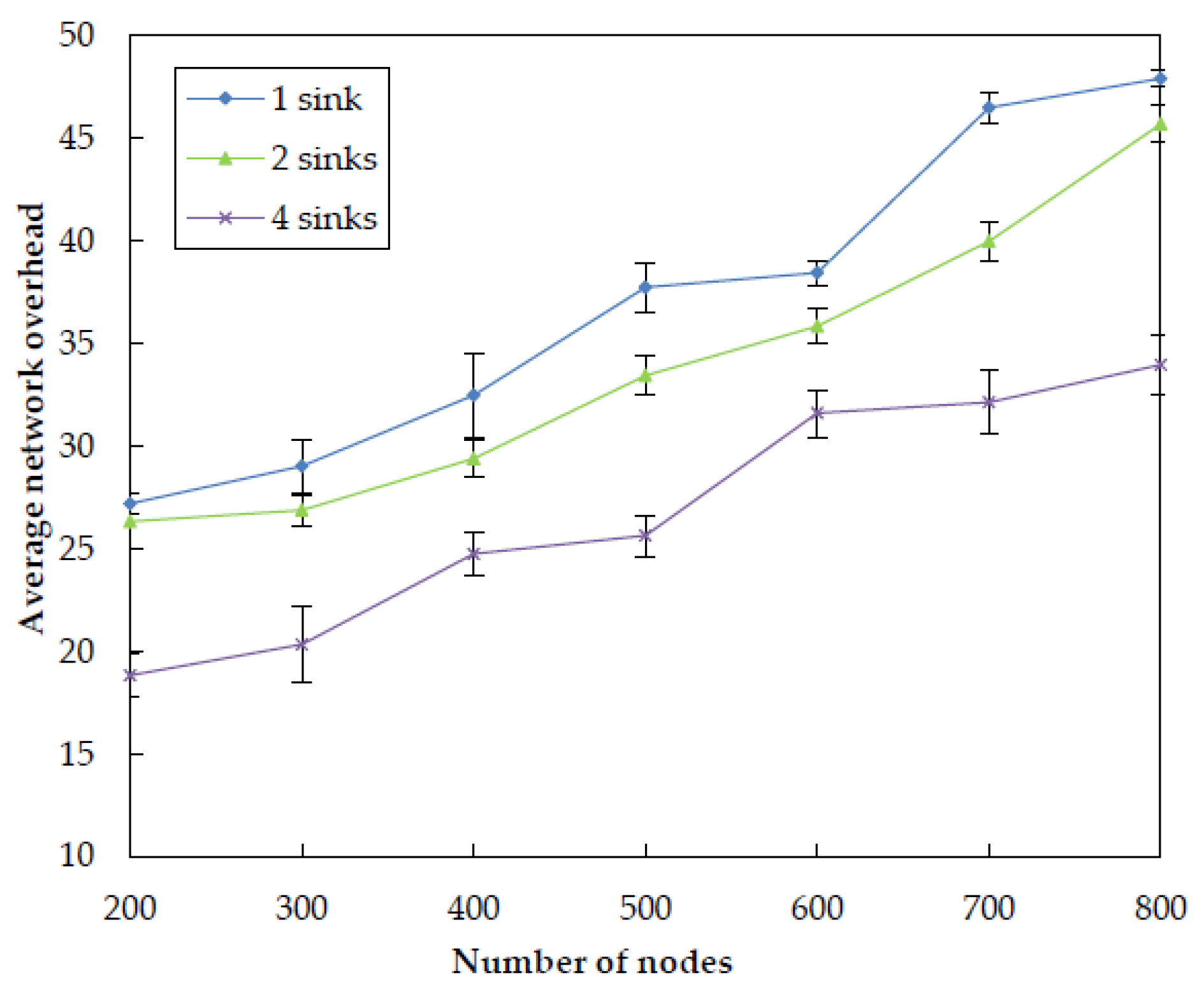

5.3.2. Impact of Sink Number

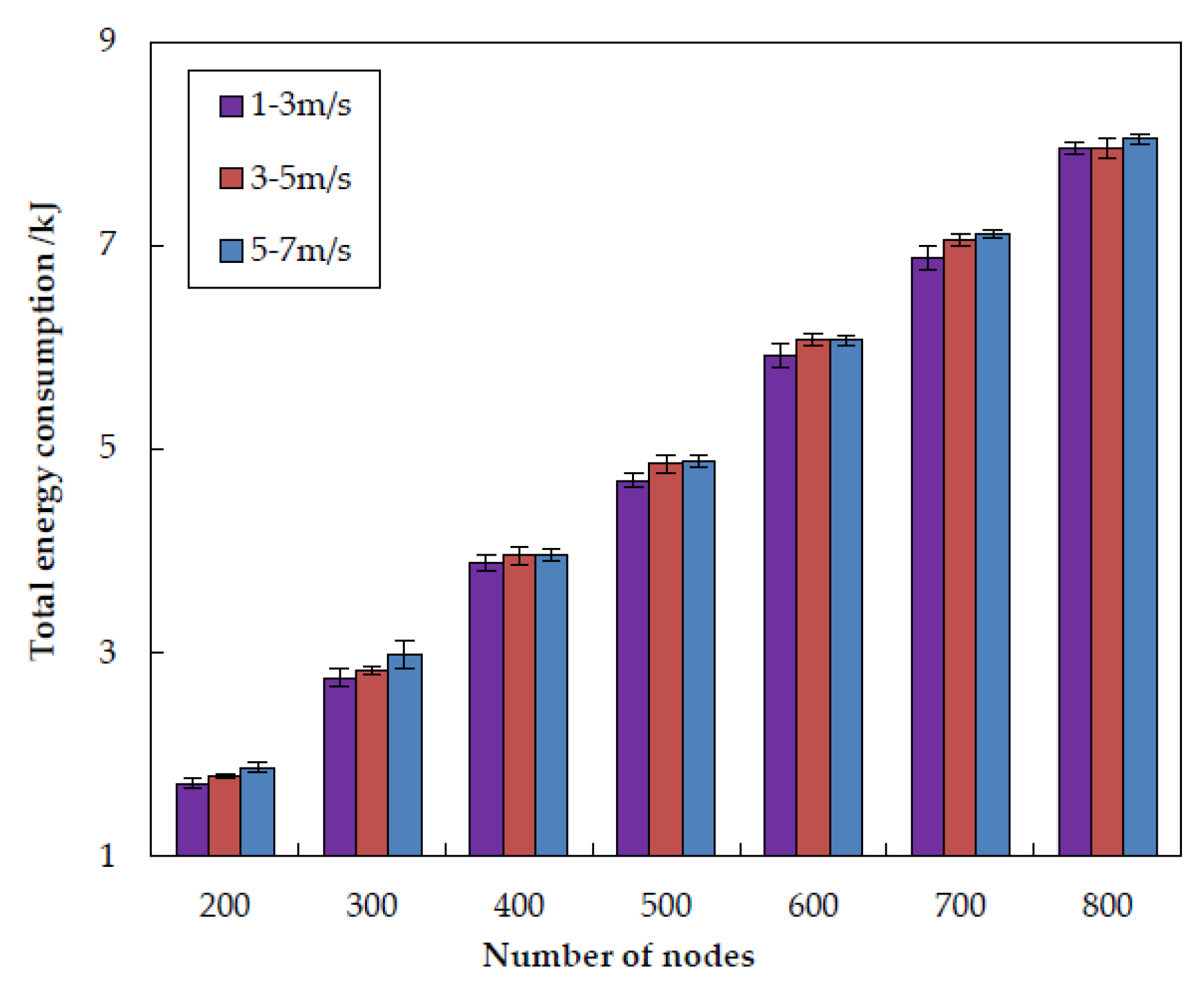

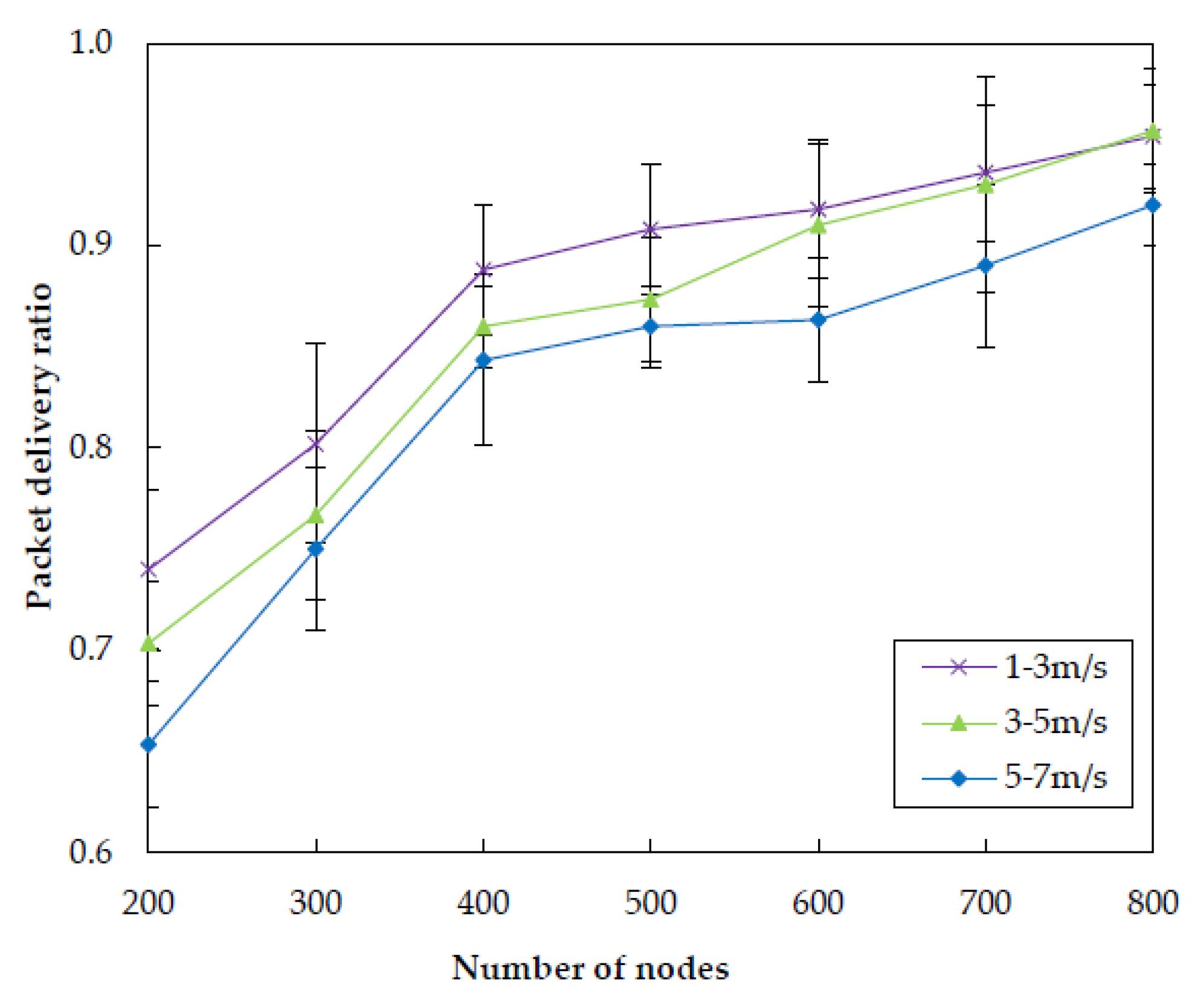

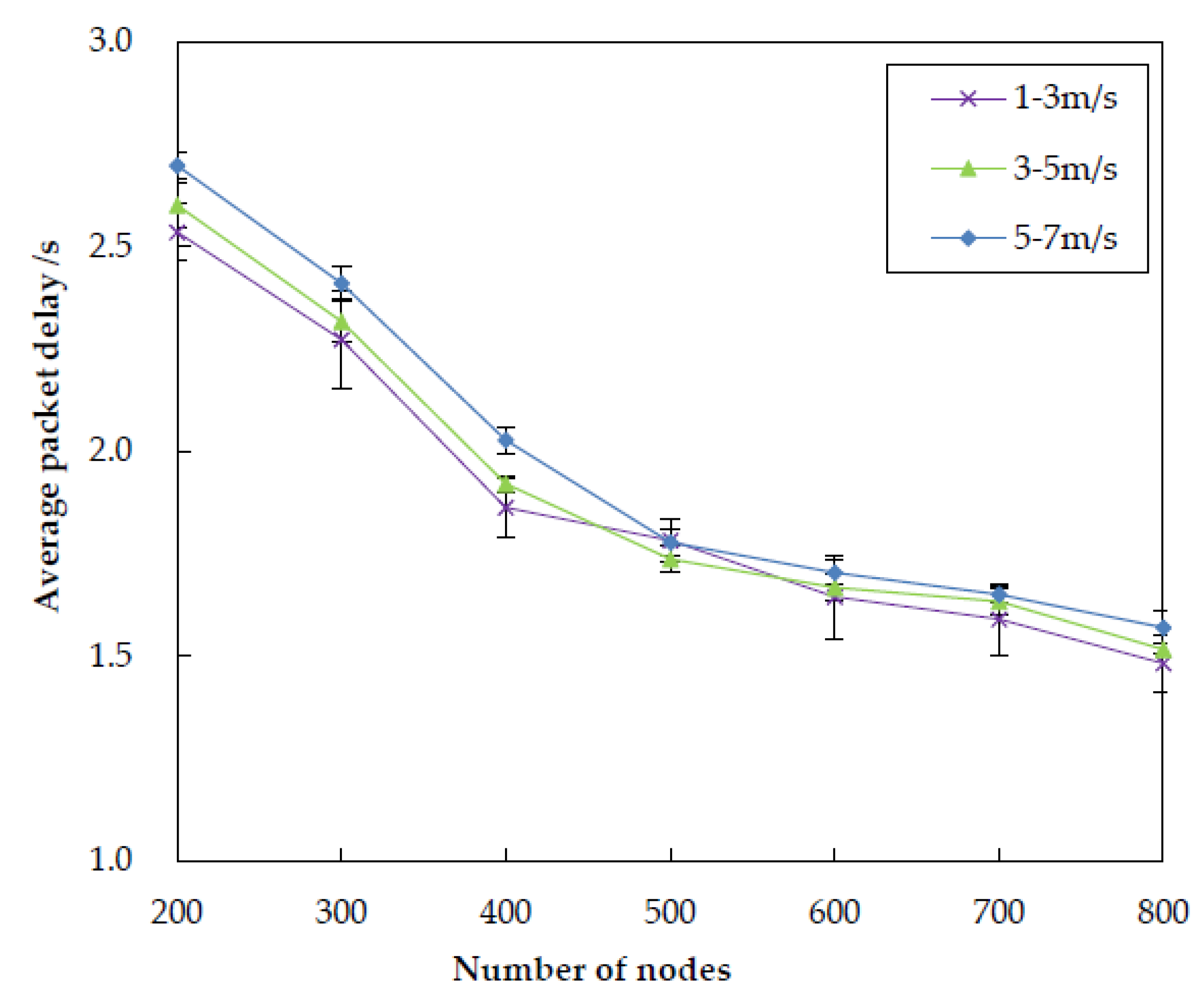

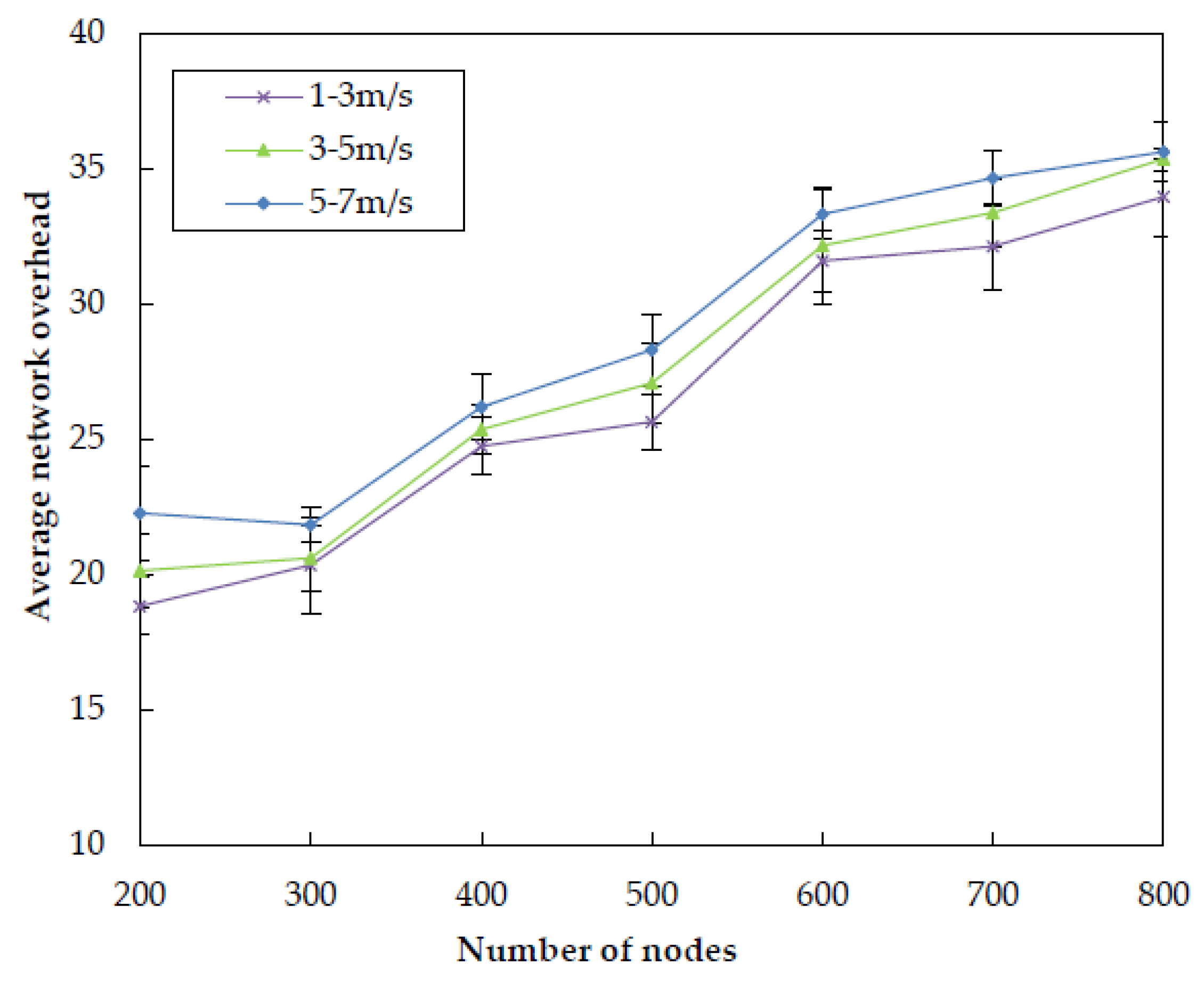

5.3.3. Impact of Node Mobility

6. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Name | Description |
|---|---|
| | The void detection factor of node nj |
| Ej | The energy-related factor of node nj |
| Dij | The depth-related factor between nodes ni and nj |
| | Binary-valued variable |
| | Adjustment coefficients of |
| | Weight factor of rewards Ej and Dij |
| | The holding time of node nj |

| Parameter | Value |
|---|---|
| Simulation scene range | 500 m × 500 m × 500 m |
| Rmax | 100 m |
| Send power | 2 W |
| Receive power | 0.1 W |
| Idle power | 0.01 W |
| Discount factor | 0.9 |
| | 0.5 |
| | 0.5 |
| Simulation time for each run | 800 s |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Lu, Y.; He, R.; Chen, X.; Lin, B.; Yu, C. Energy-Efficient Depth-Based Opportunistic Routing with Q-Learning for Underwater Wireless Sensor Networks. Sensors 2020, 20, 1025. https://doi.org/10.3390/s20041025
