Real-Time Facial Affective Computing on Mobile Devices

Abstract

1. Introduction

2. Related Work

3. Facial Affective Computing

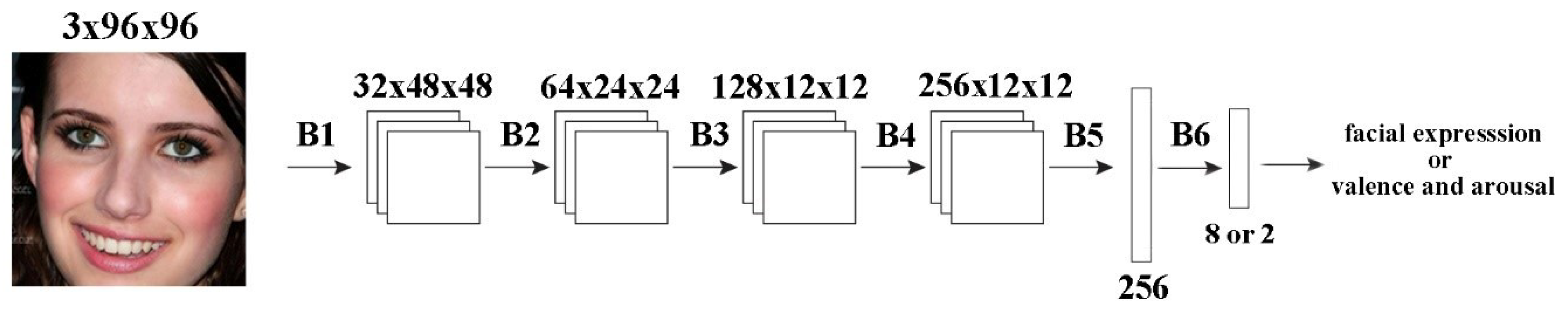

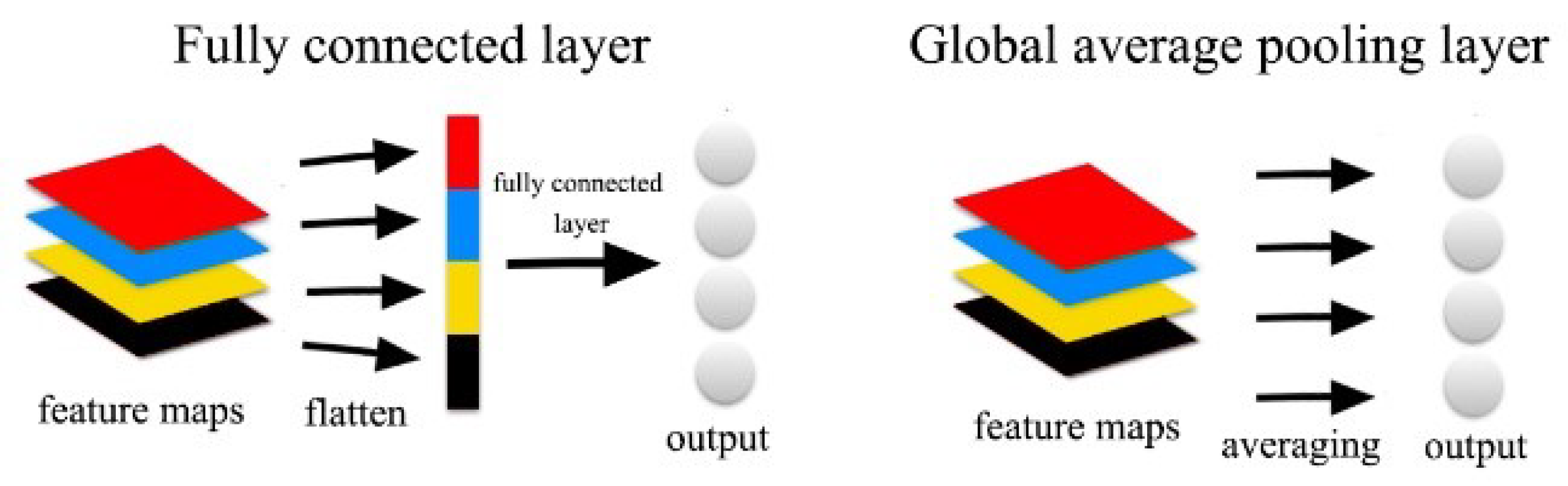

3.1. Network Architecture

3.2. Loss Function

4. Experiment

4.1. Experimental Setup

4.2. Evaluation Metrics

4.3. Experimental Results

5. Design for Mobile Platforms

5.1. Design Principles and Purpose

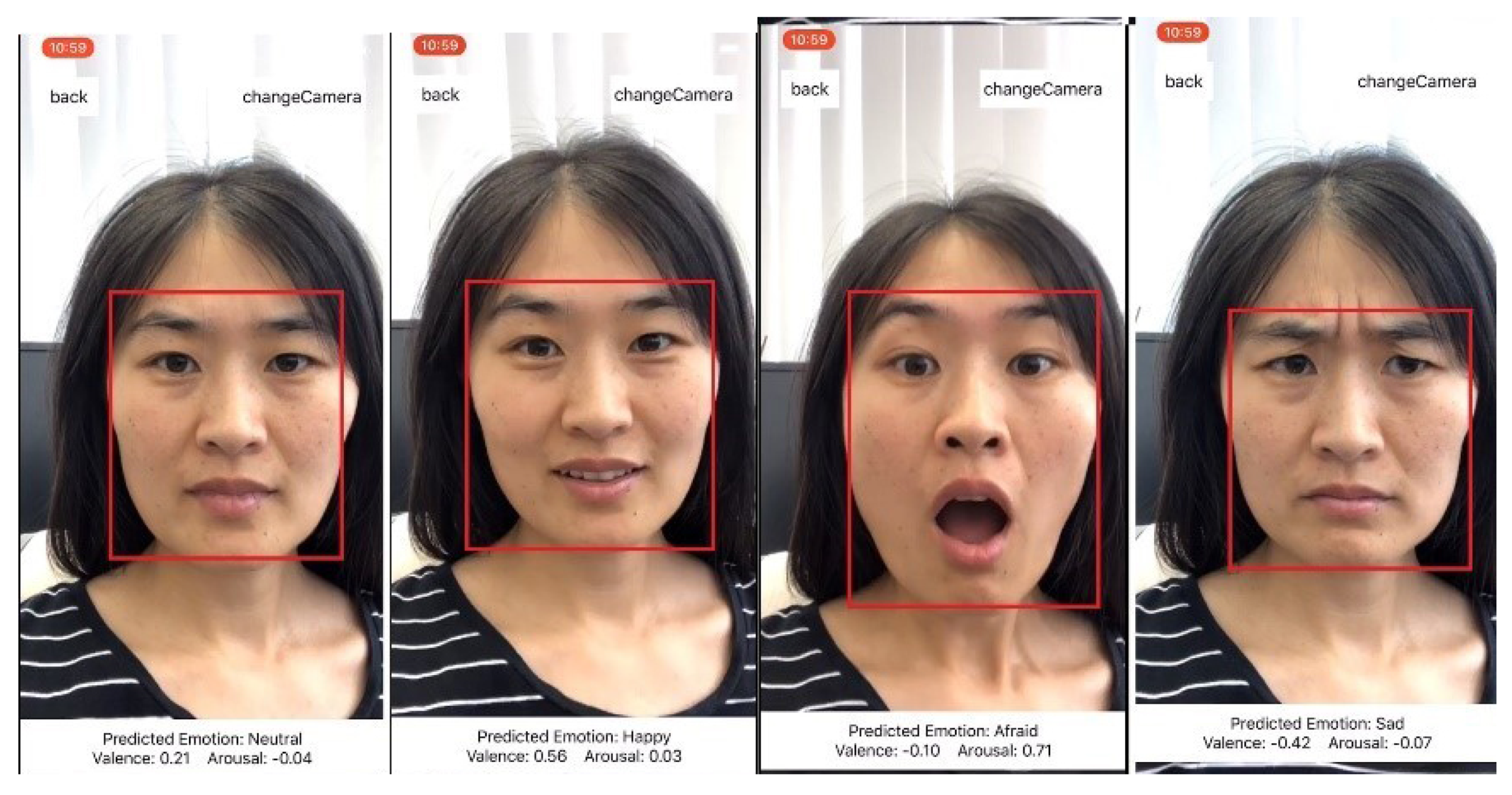

5.2. Mobile Implementation

5.3. Evaluation of Storage Consumption and Processing Time

6. Discussion

7. Conclusions and Future Work

Author Contributions

Funding

Conflicts of Interest

References

1. Tao, J.; Tan, T. Affective computing: A review. In Proceedings of the International Conference on Affective Computing and Intelligent Interaction, Beijing, China, 22–24 October 2005; pp. 981–995.

2. Ekman, P.; Friesen, W.V. Constants across cultures in the face and emotion. J. Pers. Soc. Psychol. 1971, 17, 124.

3. Russell, J.A. A circumplex model of affect. J. Pers. Soc. Psychol. 1980, 39, 1161.

4. Wang, Y.; Yu, H.; Dong, J.; Jian, M.; Liu, H. Cascade support vector regression-based facial expression-aware face frontalization. In Proceedings of the IEEE International Conference on Image Processing (ICIP), Beijing, China, 17–20 September 2017; pp. 2831–2835.

5. Wang, Y.; Yu, H.; Dong, J.; Stevens, B.; Liu, H. Facial expression-aware face frontalization. In Proceedings of the Asian Conference on Computer Vision, Taipei, Taiwan, 20–24 November 2016; pp. 375–388.

6. Yu, H.; Liu, H. Regression-based facial expression optimization. IEEE Trans. Hum.-Mach. Syst. 2014, 44, 386–394.

7. Leo, M.; Carcagnì, P.; Distante, C.; Spagnolo, P.; Mazzeo, P.; Rosato, A.; Petrocchi, S.; Pellegrino, C.; Levante, A.; De Lumè, F.; et al. Computational Assessment of Facial Expression Production in ASD Children. Sensors 2018, 18, 3993.

8. Goulart, C.; Valadão, C.; Delisle-Rodriguez, D.; Funayama, D.; Favarato, A.; Baldo, G.; Binotte, V.; Caldeira, E.; Bastos-Filho, T. Visual and Thermal Image Processing for Facial Specific Landmark Detection to Infer Emotions in a Child-Robot Interaction. Sensors 2019, 19, 2844.

9. Cid, F.; Moreno, J.; Bustos, P.; Núnez, P. Muecas: A multi-sensor robotic head for affective human robot interaction and imitation. Sensors 2014, 14, 7711–7737.

10. Varghese, E.B.; Thampi, S.M. A Deep Learning Approach to Predict Crowd Behavior Based on Emotion. In Proceedings of the International Conference on Smart Multimedia, Toulon, France, 24–26 August 2018; pp. 296–307.

11. Zhou, X.; Yu, H.; Liu, H.; Li, Y. Tracking multiple video targets with an improved GM-PHD tracker. Sensors 2015, 15, 30240–30260.

12. Shan, C.; Gong, S.; McOwan, P.W. Facial expression recognition based on local binary patterns: A comprehensive study. Image Vis. Comput. 2009, 27, 803–816.

13. Yu, H.; Liu, H. Combining appearance and geometric features for facial expression recognition. In Proceedings of the Sixth International Conference on Graphic and Image Processing (ICGIP 2014), International Society for Optics and Photonics, Beijing, China, 24–26 October 2015; Volume 9443, p. 944308.

14. Wang, Y.; Ai, H.; Wu, B.; Huang, C. Real time facial expression recognition with adaboost. In Proceedings of the 17th International Conference on Pattern Recognition (ICPR 2004), Cambridge, UK, 23–26 August 2004; Volume 3, pp. 926–929.

15. Uddin, M.Z.; Lee, J.; Kim, T.S. An enhanced independent component-based human facial expression recognition from video. IEEE Trans. Consum. Electron. 2009, 55, 2216–2224.

16. Edwards, G.J.; Taylor, C.J.; Cootes, T.F. Interpreting face images using active appearance models. In Proceedings of the Third IEEE International Conference on Automatic Face and Gesture Recognition, Nara, Japan, 14–16 April 1998; pp. 300–305.

17. Cootes, T.F.; Taylor, C.J.; Cooper, D.H.; Graham, J. Active shape models-their training and application. Comput. Vis. Image Underst. 1995, 61, 38–59.

18. Choi, H.C.; Oh, S.Y. Realtime facial expression recognition using active appearance model and multilayer perceptron. In Proceedings of the 2006 SICE-ICASE International Joint Conference, Busan, Korea, 18–21 October 2006; pp. 5924–5927.

19. Chen, J.; Chen, Z.; Chi, Z.; Fu, H. Facial expression recognition based on facial components detection and hog features. In Proceedings of the International Workshops on Electrical and Computer Engineering Subfields, Istanbul, Turkey, 22–23 August 2014; pp. 884–888.

20. Orrite, C.; Gañán, A.; Rogez, G. Hog-based decision tree for facial expression classification. In Proceedings of the Iberian Conference on Pattern Recognition and Image Analysis, Povoa de Varzim, Portugal, 10–12 June 2009; pp. 176–183.

21. Luo, Y.; Wu, C.m.; Zhang, Y. Facial expression recognition based on fusion feature of PCA and LBP with SVM. Opt. Int. J. Light Electron. Opt. 2013, 124, 2767–2770.

22. Barroso, E.; Santos, G.; Proença, H. Facial expressions: Discriminability of facial regions and relationship to biometrics recognition. In Proceedings of the 2013 IEEE Symposium on Computational Intelligence in Biometrics and Identity Management (CIBIM), Singapore, 16–19 April 2013; pp. 77–80.

23. Carcagnì, P.; Del Coco, M.; Leo, M.; Distante, C. Facial expression recognition and histograms of oriented gradients: a comprehensive study. SpringerPlus 2015, 4, 645.

24. Gu, W.; Xiang, C.; Venkatesh, Y.; Huang, D.; Lin, H. Facial expression recognition using radial encoding of local Gabor features and classifier synthesis. Pattern Recognit. 2012, 45, 80–91.

25. Yang, H.; Ciftci, U.; Yin, L. Facial expression recognition by de-expression residue learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 2168–2177.

26. Xia, Y.; Yu, H.; Wang, F.Y. Accurate and robust eye center localization via fully convolutional networks. IEEE/CAA J. Autom. Sin. 2019, 6, 1127–1138.

27. Zheng, N. The new era of artificial intelligence. Chin. J. Intell. Sci. Technol. 2019, 1, 1–3.

28. Xing, Y.; Lv, C.; Zhang, Z.; Wang, H.; Na, X.; Cao, D.; Velenis, E.; Wang, F.Y. Identification and analysis of driver postures for in-vehicle driving activities and secondary tasks recognition. IEEE Trans. Comput. Soc. Syst. 2017, 5, 95–108.

29. Hu, B.; Wang, J. Deep Learning Based Hand Gesture Recognition and UAV Flight Controls. Int. J. Autom. Comput. 2020, 27.

30. Sharma, A.; Balouchian, P.; Foroosh, H. A Novel Multi-purpose Deep Architecture for Facial Attribute and Emotion Understanding. In Iberoamerican Congress on Pattern Recognition; Springer: Cham, Switzerland, 2018; pp. 620–627.

31. Zeng, J.; Shan, S.; Chen, X. Facial expression recognition with inconsistently annotated datasets. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 222–237.

32. Lian, Z.; Li, Y.; Tao, J.H.; Huang, J.; Niu, M.Y. Expression Analysis Based on Face Regions in Real-world Conditions. Int. J. Autom. Comput. 2020, 17, 96–107.

33. Mollahosseini, A.; Hasani, B.; Mahoor, M.H. Affectnet: A database for facial expression, valence, and arousal computing in the wild. IEEE Trans. Affect. Comput. 2017, 10, 18–31.

34. Siqueira, H. An Adaptive Neural Approach Based on Ensemble and Multitask Learning for Affect Recognition. In Proceedings of the International PhD Conference on Safe and Social Robotics, Madrid, Spain, 29–30 September 2018; pp. 49–52.

35. Suk, M.; Prabhakaran, B. Real-time mobile facial expression recognition system-a case study. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Columbus, OH, USA, 23–28 June 2014; pp. 132–137.

36. FaceReader. Available online: https://www.noldus.com/facereader (accessed on 6 February 2020).

37. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

38. Ioffe, S.; Szegedy, C. Batch normalization: Accelerating deep network training by reducing internal covariate shift. arXiv 2015, arXiv:1502.03167.

39. Lin, M.; Chen, Q.; Yan, S. Network in network. arXiv 2013, arXiv:1312.4400.

40. Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the inception architecture for computer vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 2818–2826.

41. Nicolaou, M.A.; Gunes, H.; Pantic, M. Continuous prediction of spontaneous affect from multiple cues and modalities in valence-arousal space. IEEE Trans. Affect. Comput. 2011, 2, 92–105.

42. Valstar, M.; Gratch, J.; Schuller, B.; Ringeval, F.; Lalanne, D.; Torres Torres, M.; Scherer, S.; Stratou, G.; Cowie, R.; Pantic, M. Avec 2016: Depression, mood, and emotion recognition workshop and challenge. In Proceedings of the 6th International Workshop on Audio/Visual Emotion Challenge, Amsterdam, The Netherlands, 16 October 2016; pp. 3–10.

43. Viola, P.; Jones, M.J. Robust real-time face detection. Int. J. Comput. Vis. 2004, 57, 137–154.

44. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. Advances in Neural Information Processing Systems. 2012, pp. 1097–1105. Available online: http://papers.nips.cc/paper/4824-imagenet-classification-with-deep-convolutional-neural-networks.pdf (accessed on 6 February 2020).

| Block | Layers |
|---|---|
| B1 | 2 × conv layer (32, 3 × 3, 1 × 1); 1 × max pooling (2 × 2, 2 × 2) |
| B2 | 3 × conv layer (64, 3 × 3, 1 × 1); 1 × max pooling (2 × 2, 2 × 2) |
| B3 | 3 × conv layer (128, 3 × 3, 1 × 1); 1 × max pooling (2 × 2, 2 × 2) |
| B4 | 3 × conv layer (256, 3 × 3, 1 × 1) |
| B5 | 1 × global average pooling |
| B6 | Softmax (classification) or linear (regression) |
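As a rough sanity check on the architecture table above, the following sketch counts the trainable parameters of the convolutional blocks B1 to B4 plus one output layer. It rests on several assumptions that the table does not state: each tuple is read as (filters, kernel size, stride), the input image has 3 channels, every layer has a bias, B6 is a single dense layer on the 256 pooled features, and classification uses 8 classes. Batch-normalization parameters, if present, are ignored. The names `BLOCKS`, `conv_params`, and `count_parameters` are illustrative only.

```python
# Sketch only: parameter count for the conv stack in the table above.
# Assumptions (not stated in the table): tuples are (filters, kernel, stride),
# 3 input channels, biases on every layer, one dense output layer, 8 classes.

BLOCKS = [  # (number of conv layers, filters per layer)
    (2, 32),   # B1
    (3, 64),   # B2
    (3, 128),  # B3
    (3, 256),  # B4
]
KERNEL = 3  # 3 x 3 kernels throughout


def conv_params(in_ch, out_ch, kernel=KERNEL):
    """Weights plus biases of one 2-D convolution layer."""
    return kernel * kernel * in_ch * out_ch + out_ch


def count_parameters(in_channels=3, n_outputs=8):
    """Total trainable parameters of B1-B4 plus the assumed dense output layer."""
    total = 0
    channels = in_channels
    for n_layers, filters in BLOCKS:
        for _ in range(n_layers):
            total += conv_params(channels, filters)
            channels = filters
    # Max pooling and global average pooling add no trainable parameters.
    total += channels * n_outputs + n_outputs  # assumed dense layer (B6)
    return total


if __name__ == "__main__":
    print(count_parameters())             # classification head, 8 outputs
    print(count_parameters(n_outputs=2))  # regression head: valence, arousal
```

Under these assumptions the count comes to roughly 1.95 million parameters, which is in line with the 2M figure reported for the proposed model in the regression results table.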

| Facial Expression | Number |
|---|---|
| Neutral | 74,874 |
| Happy | 134,415 |
| Sad | 25,459 |
| Surprise | 14,090 |
| Fear | 6,378 |
| Disgust | 3,803 |
| Anger | 24,882 |
| Contempt | 3,750 |
| Total | 287,651 |

| Methods | Accuracy |
|---|---|
| Support Vector Machine (Mollahosseini et al. [33]) | 30.00% |
| Microsoft Cognitive Services (Mollahosseini et al. [33]) | 37.00% |
| AlexNet (Mollahosseini et al. [33]) | 58.00% |
| Siqueira [34] | 50.32% |
| Sharma et al. [30] | 56.38% |
| Zeng et al. [31] | 57.31% |
| Ours | 58.50% |

| Methods | RMSE (Valence) | RMSE (Arousal) | CC (Valence) | CC (Arousal) | SAGR (Valence) | SAGR (Arousal) | CCC (Valence) | CCC (Arousal) | Params |
|---|---|---|---|---|---|---|---|---|---|
| SVR [33] | 0.55 | 0.42 | 0.35 | 0.31 | 0.57 | 0.68 | 0.30 | 0.18 | - |
| MTL [34] | 0.46 | 0.37 | - | - | - | - | - | - | 50M |
| AlexNet [33] | 0.37 | 0.41 | 0.66 | 0.54 | 0.74 | 0.65 | 0.60 | 0.34 | 60M |
| Ours | 0.39 | 0.37 | 0.61 | 0.55 | 0.76 | 0.76 | 0.59 | 0.48 | 2M |
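The table above reports RMSE, CC, SAGR, and CCC for valence and arousal. The sketch below shows how these four metrics are commonly defined in the valence-arousal literature, assuming that CC is the Pearson correlation coefficient, SAGR is the sign agreement rate, and CCC is the concordance correlation coefficient computed with population (divide-by-n) variances. The exact formulation behind the reported numbers is not given here, and the function names are illustrative.

```python
import math


def _mean(xs):
    return sum(xs) / len(xs)


def _cov(xs, ys):
    """Population covariance (divide by n); _cov(xs, xs) is the variance."""
    mx, my = _mean(xs), _mean(ys)
    return _mean([(x - mx) * (y - my) for x, y in zip(xs, ys)])


def _sign(x):
    return (x > 0) - (x < 0)


def rmse(pred, true):
    """Root mean squared error."""
    return math.sqrt(_mean([(p - t) ** 2 for p, t in zip(pred, true)]))


def cc(pred, true):
    """Pearson correlation coefficient (undefined for constant inputs)."""
    return _cov(pred, true) / math.sqrt(_cov(pred, pred) * _cov(true, true))


def sagr(pred, true):
    """Sign agreement rate: share of samples whose signs match."""
    return _mean([1.0 if _sign(p) == _sign(t) else 0.0
                  for p, t in zip(pred, true)])


def ccc(pred, true):
    """Concordance correlation coefficient."""
    mp, mt = _mean(pred), _mean(true)
    return 2 * _cov(pred, true) / (
        _cov(pred, pred) + _cov(true, true) + (mp - mt) ** 2)


if __name__ == "__main__":
    # Toy values, not data from the experiments.
    pred = [0.4, -0.2, 0.1, -0.6]
    true = [0.5, -0.1, -0.2, -0.7]
    print(rmse(pred, true), cc(pred, true), sagr(pred, true), ccc(pred, true))
```

Unlike CC, CCC penalizes a constant offset between predictions and labels: predictions that are perfectly correlated with the labels but shifted by a fixed amount keep CC at 1 while CCC drops below 1.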

| Device | Total Memory | Memory Consumption | Running Speed |
|---|---|---|---|
| iPhone 5s | 1 GB | 100 MB | 31 fps |
| iPhone X | 3 GB | 150 MB | 60 fps |
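To put the device figures above in perspective, the short sketch below converts each frame rate into an average time per frame and each memory consumption into a share of total device memory. It assumes that 1 GB is 1024 MB and that the reported running speed is an average throughput; the dictionary layout and function names are illustrative.

```python
# Values copied from the device table; 1 GB = 1024 MB is an assumption.
DEVICES = {
    "iPhone 5s": {"total_mb": 1 * 1024, "used_mb": 100, "fps": 31},
    "iPhone X": {"total_mb": 3 * 1024, "used_mb": 150, "fps": 60},
}


def frame_time_ms(fps):
    """Average time available per frame at the given frame rate."""
    return 1000.0 / fps


def memory_share_percent(used_mb, total_mb):
    """Memory consumption as a percentage of total device memory."""
    return 100.0 * used_mb / total_mb


def summarize(devices=DEVICES):
    """Map device name to (ms per frame, % of memory), rounded to 1 decimal."""
    return {
        name: (
            round(frame_time_ms(d["fps"]), 1),
            round(memory_share_percent(d["used_mb"], d["total_mb"]), 1),
        )
        for name, d in devices.items()
    }


if __name__ == "__main__":
    for name, (ms, share) in summarize().items():
        print(f"{name}: {ms} ms per frame, {share}% of memory")
```

With these inputs the iPhone 5s works out to about 32.3 ms per frame and about 9.8% of memory, and the iPhone X to about 16.7 ms per frame and about 4.9% of memory.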

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Guo, Y.; Xia, Y.; Wang, J.; Yu, H.; Chen, R.-C. Real-Time Facial Affective Computing on Mobile Devices. Sensors 2020, 20, 870. https://doi.org/10.3390/s20030870
