Automatic Museum Audio Guide

Abstract

1. Introduction

2. Related Work

3. Proposed Hands-Free Museum Audio Guide Hardware

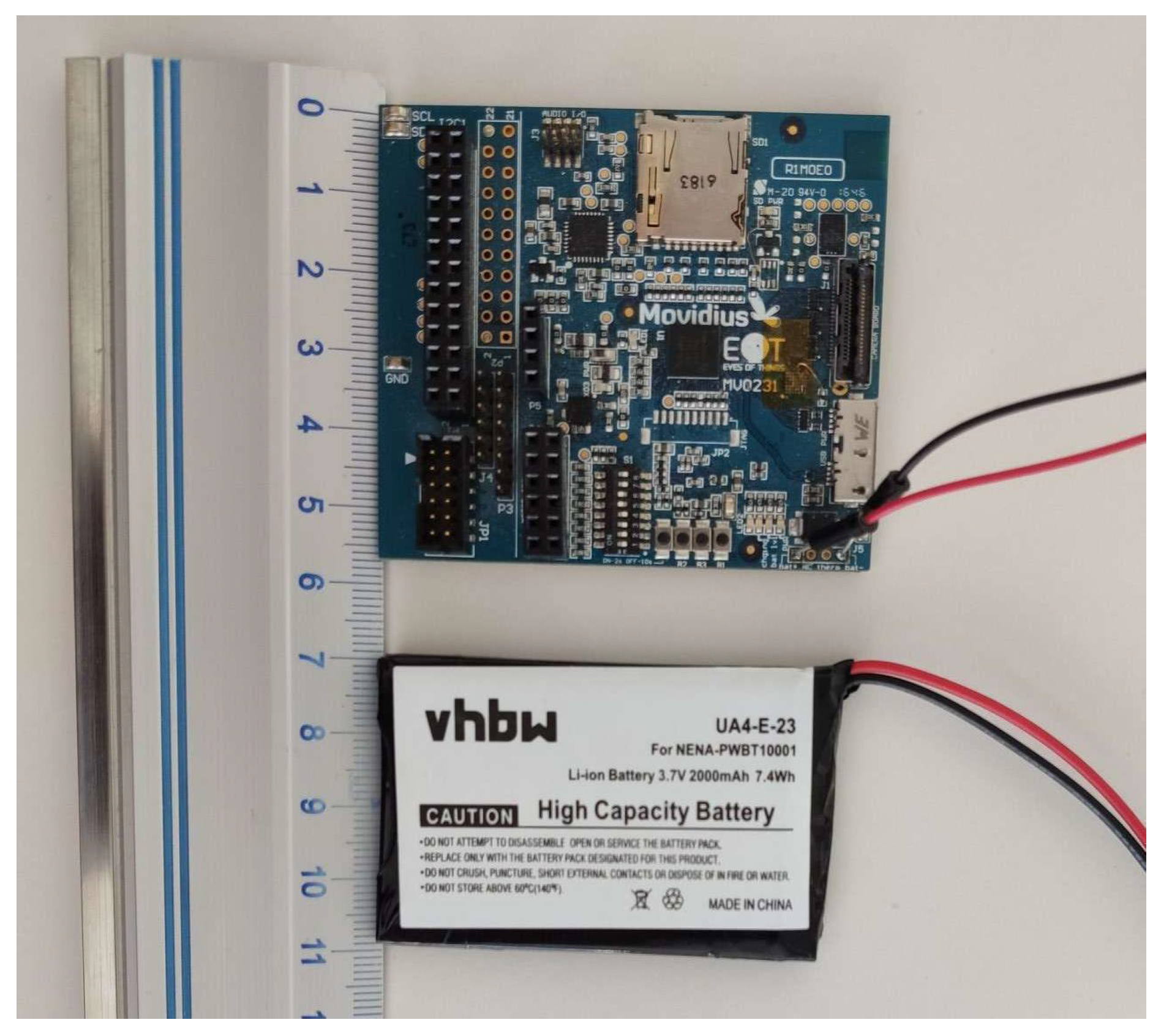

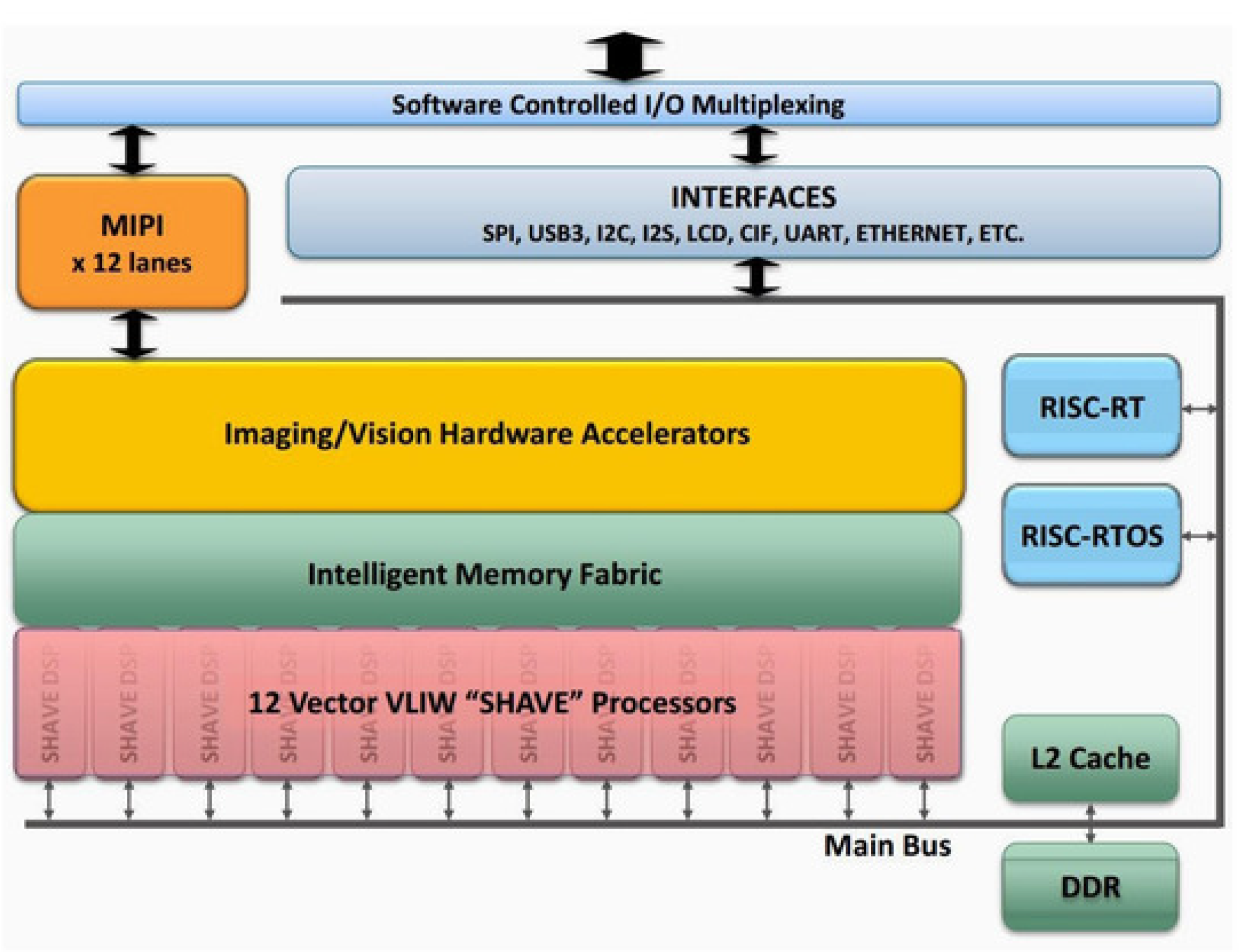

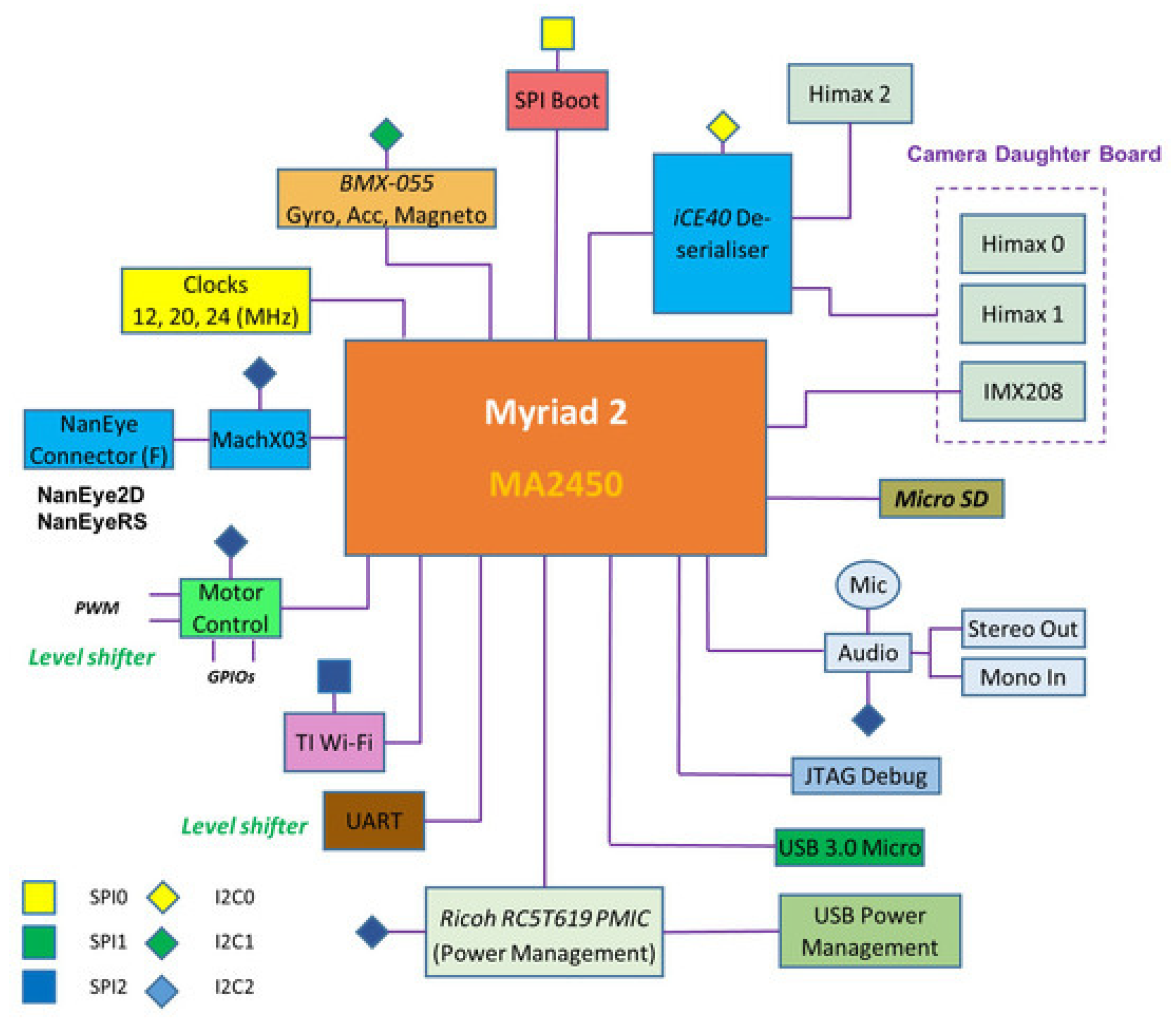

3.1. EoT Device

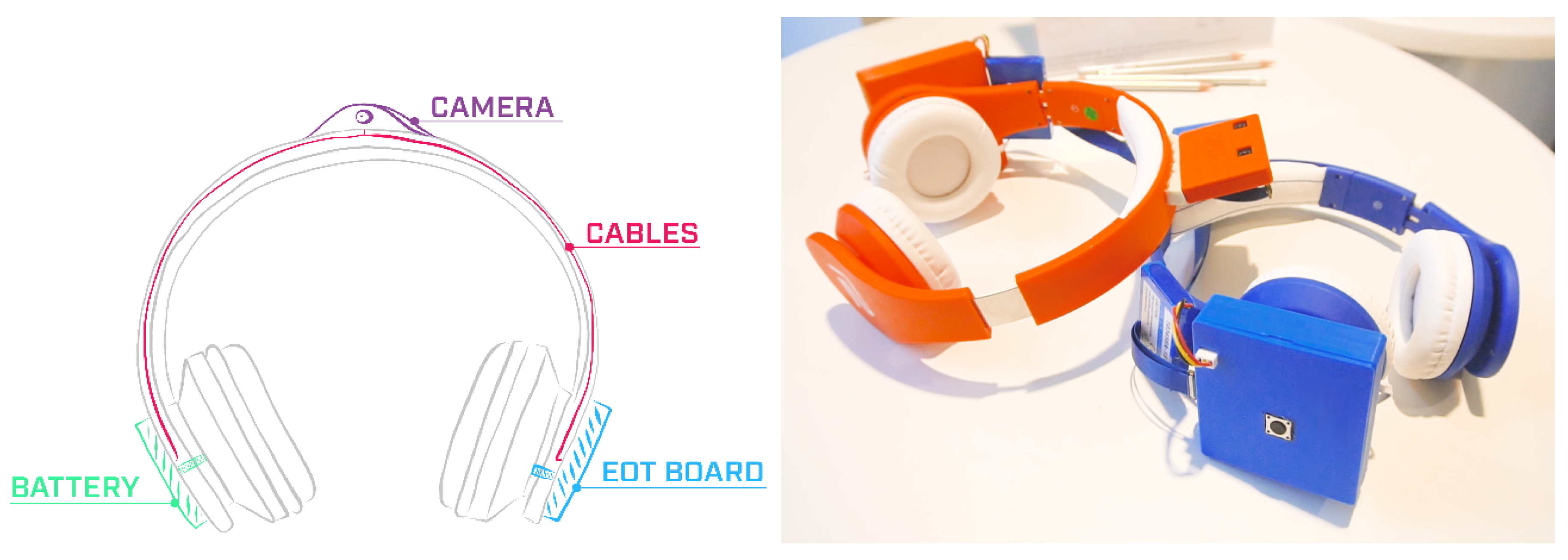

3.2. Headset Design

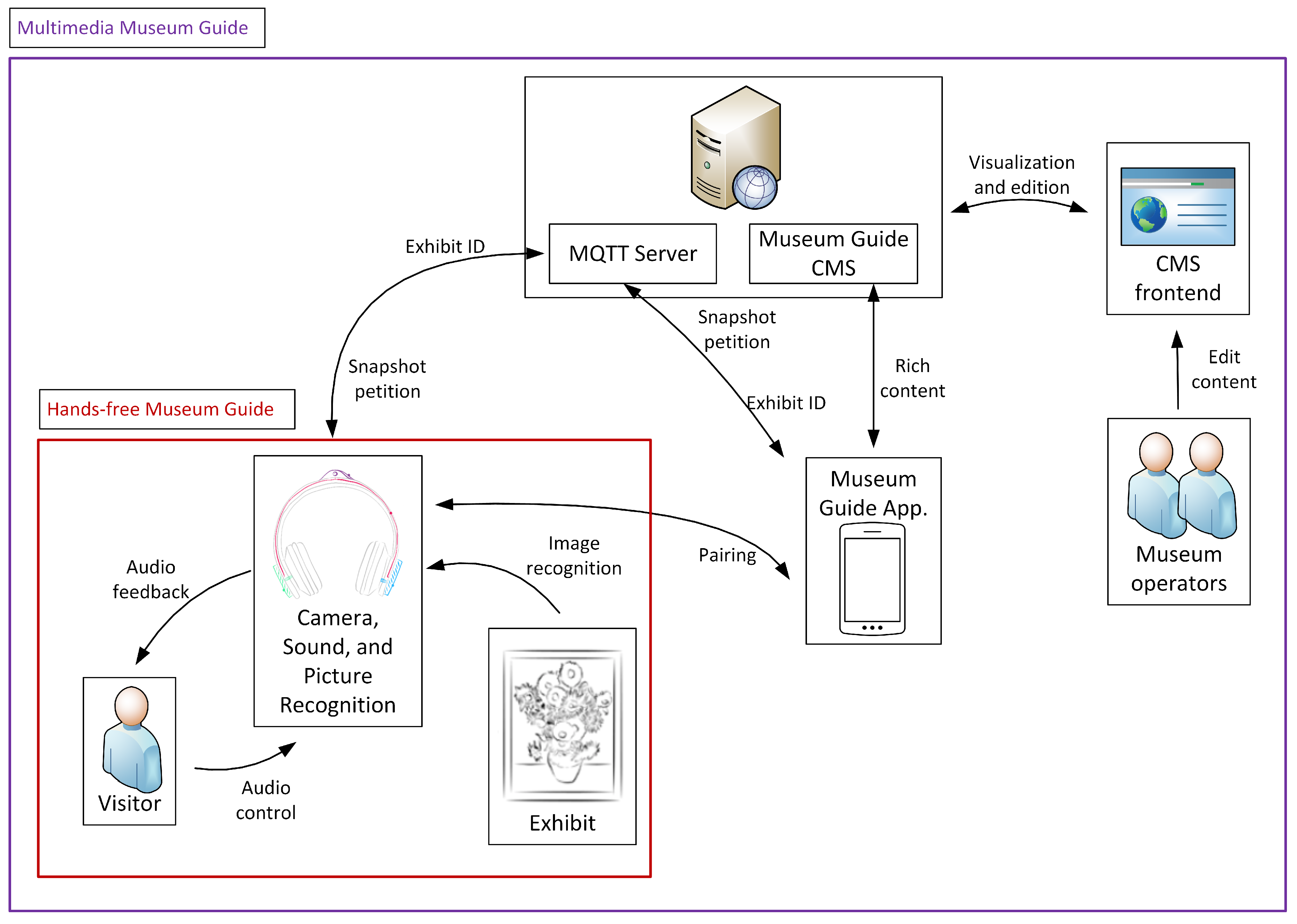

4. System Design

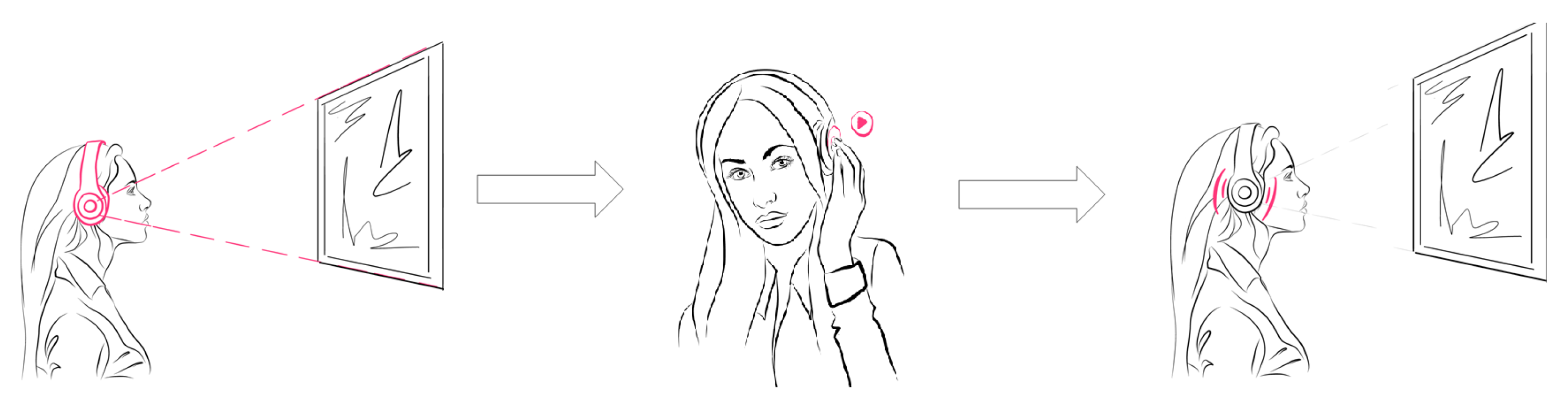

- Visitor enters the exhibition and receives the EoT-enhanced headset;

- Visitor explores the exhibition;

- Visitor is notified about available audio interpretation from the recognized exhibits;

- Visitor listens to audio interpretation and continues exploring the exhibition.

- Visitor enters the exhibition and receives the enhanced headset which connects to an application on the visitor’s smartphone;

- Visitor explores the exhibition;

- System detects available exhibits;

- Visitor receives rich content and/or performs interaction.

5. Applications

- EoT-based headset application;

- Museum Guide CMS;

- Museum Guide mobile application;

- CMS frontend for museum operators.

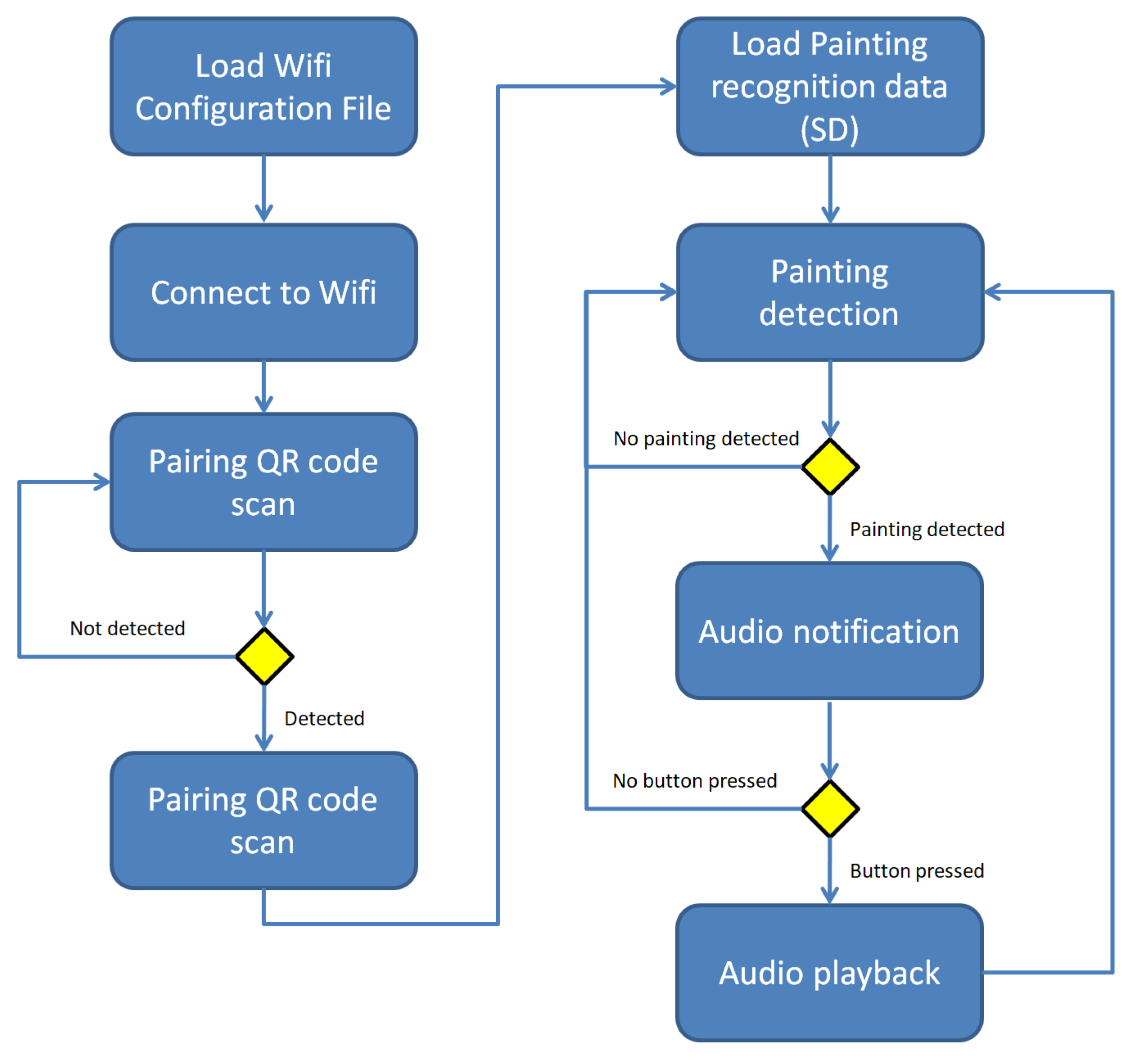

- The camera module controls the camera: frame rate, auto-exposure (AE) and automatic gain control (AGC). Raw frames from the camera are pre-processed by denoising, eliminating dead pixels, applying lens shading correction and gamma correction, and gathering statistics for AE and AGC.

- The painting recognition module recognizes paintings based on their visual appearance (see Section 6).

- The network communication module handles communication over MQTT. It sends out notifications for recognized paintings and receives requests to take snapshots of what the camera sees.

- The control module ties the functionality of the other modules together and adds further functions: it handles pairing with a companion device, signals that a new painting was recognized, and processes input from the push button to control audio pause and playback. Two implementation variants of this module exist: one for the hands-free scenario and one for the multimedia museum guide scenario. Pairing and network communication are only performed in the multimedia version; otherwise, the functionality is the same.
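As an illustration of the pre-processing steps listed for the camera module, the following minimal sketch shows gamma correction with a lookup table and a mean-brightness statistic of the kind an AE/AGC loop could use. It is not the EoT firmware: the function names, the gamma value of 2.2 and the 8-bit grayscale frame layout are assumptions made for this example.

```python
from typing import List

Frame = List[List[int]]  # rows of 8-bit grayscale pixel values (assumed layout)


def build_gamma_lut(gamma: float = 2.2) -> List[int]:
    """Precompute the gamma-corrected output for every 8-bit input value."""
    return [round(255 * (value / 255) ** (1.0 / gamma)) for value in range(256)]


def apply_gamma(frame: Frame, lut: List[int]) -> Frame:
    """Map every pixel through the lookup table (one table read per pixel)."""
    return [[lut[pixel] for pixel in row] for row in frame]


def mean_intensity(frame: Frame) -> float:
    """Average brightness, a simple statistic an AE/AGC loop could react to."""
    pixel_count = sum(len(row) for row in frame)
    return sum(sum(row) for row in frame) / pixel_count


# Hypothetical 2x2 frame used only to exercise the functions.
frame = [[0, 64], [128, 255]]
corrected = apply_gamma(frame, build_gamma_lut())
```

Precomputing the table keeps the per-pixel cost to a single lookup, which is the usual reason for this design on low-power hardware.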

5.1. Scenario 1—Hands-Free Museum Guide

- Visitor enters the exhibition and receives a headset which is ready to use. No other devices will be handed out. The visitor only has to put on the headphones and start exploring the exhibition.

- Visitor explores the exhibition. The visit now proceeds like any ordinary visit: nothing is carried around the neck or in the hands, and the visitor is equipped only with headphones. During the visit, the EoT device continuously tracks the visitor’s view. The “always-on” camera and the image recognition algorithms continuously try to detect exhibits for which content is available, working in the background without disturbing the visitor. Even when an exhibit is positively detected, the system waits for a specific time before notifying the visitor. We want to provide information about an exhibit only when there is a very high probability that the visitor is actually focusing on it, i.e., is open to additional knowledge about that very exhibit; otherwise, the visitor would be notified too often. For this reason, a time threshold has been implemented.

- Visitor is notified about available audio interpretation. After a positive image recognition match, the device only notifies the visitor that content is available, using an unobtrusive and discreet sound signal. Playback does not start automatically; it starts only after user action. The main reason is that automatic playback may be too intrusive and disturbing: it would force the visitor to react (to stop the playback or take off the headphones) if he/she does not want playback at that time.

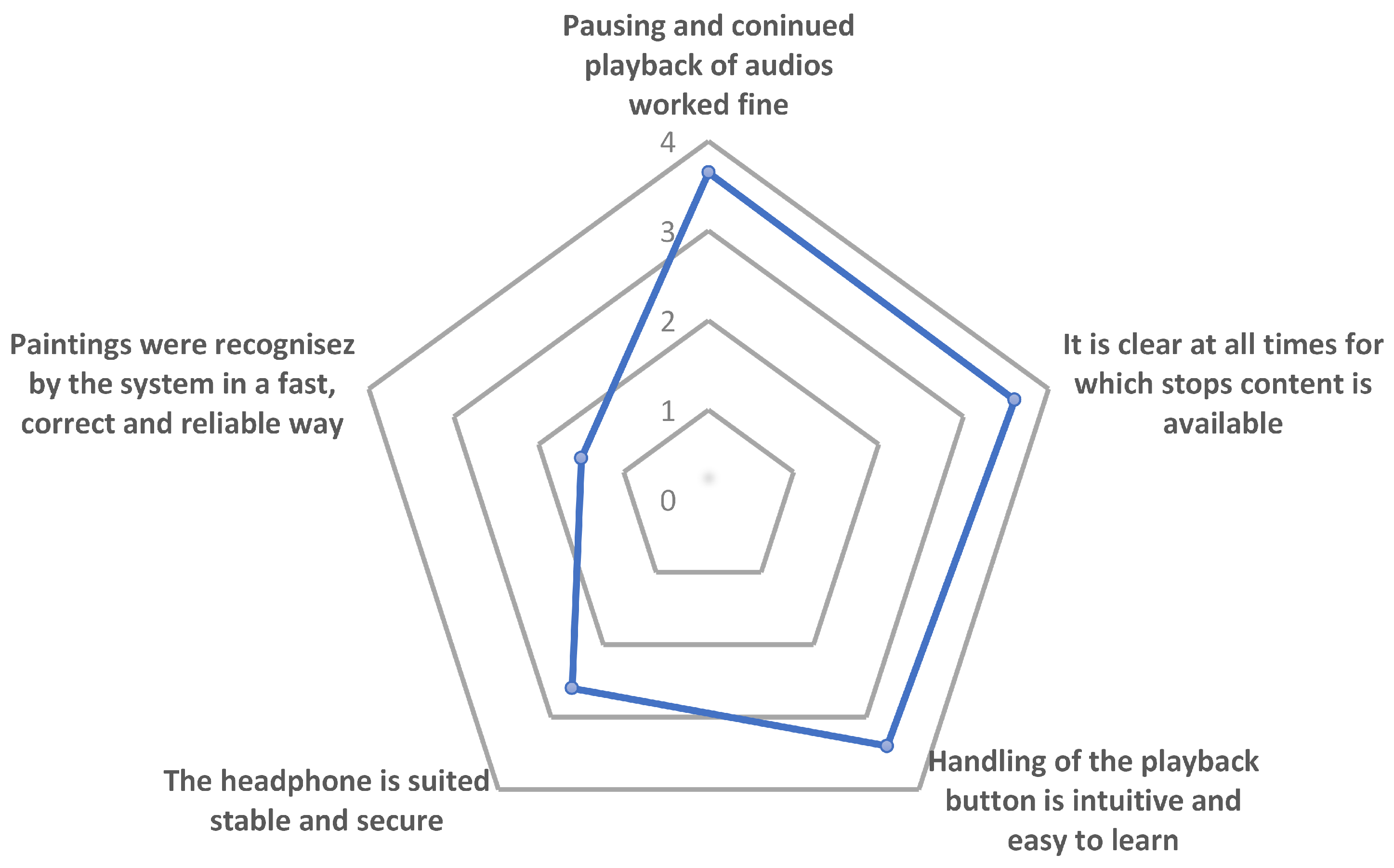

- Visitor listens to audio interpretation. This is the only point at which the user interacts with the device. As simplicity is key, playback is started by pressing the single user button on the enhanced headset. Pressing the button again pauses playback, and pressing it once more resumes it. This seems to be the most intuitive way to provide the minimum user control of play and pause. Image recognition continues during playback. According to our observations, many visitors keep listening to an exhibit’s audio while moving on to other exhibits. Therefore, recognition continues and triggers the detection signal even while the audio of a previously detected exhibit is playing. If the user then presses the button, the current audio is stopped and the new one is played.
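The interaction described in the steps above (time threshold before notification, then a single button for play, pause and resume) can be sketched as a small state machine. This is only an illustration under stated assumptions: the class name `GuideController`, the 2-second threshold and the returned action strings are hypothetical and are not taken from the actual control module.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class GuideController:
    dwell_seconds: float = 2.0        # assumed threshold; the source gives no value
    candidate: Optional[str] = None   # exhibit currently in view
    candidate_since: float = 0.0      # time at which the candidate first appeared
    pending: Optional[str] = None     # exhibit announced but not yet played
    current: Optional[str] = None     # exhibit whose audio is loaded
    playing: bool = False

    def on_recognition(self, exhibit_id: Optional[str], now: float) -> bool:
        """Feed one recognition result; return True if the notification sound should play."""
        if exhibit_id != self.candidate:
            # View changed: restart the dwell timer for the new candidate.
            self.candidate = exhibit_id
            self.candidate_since = now
            return False
        if exhibit_id is None or now - self.candidate_since < self.dwell_seconds:
            return False
        if exhibit_id in (self.pending, self.current):
            return False  # already announced or already loaded
        self.pending = exhibit_id
        return True

    def on_button(self) -> str:
        """Handle a press of the single user button and return the resulting action."""
        if self.pending is not None:
            # A newly announced exhibit replaces whatever is loaded.
            self.current, self.pending = self.pending, None
            self.playing = True
            return "play:" + self.current
        if self.current is not None:
            self.playing = not self.playing
            return "resume" if self.playing else "pause"
        return "ignore"
```

For example, feeding the same exhibit ID at times 0.0, 1.0 and 2.5 yields a notification only on the third call; a subsequent button press starts playback, and the next two presses pause and resume it.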

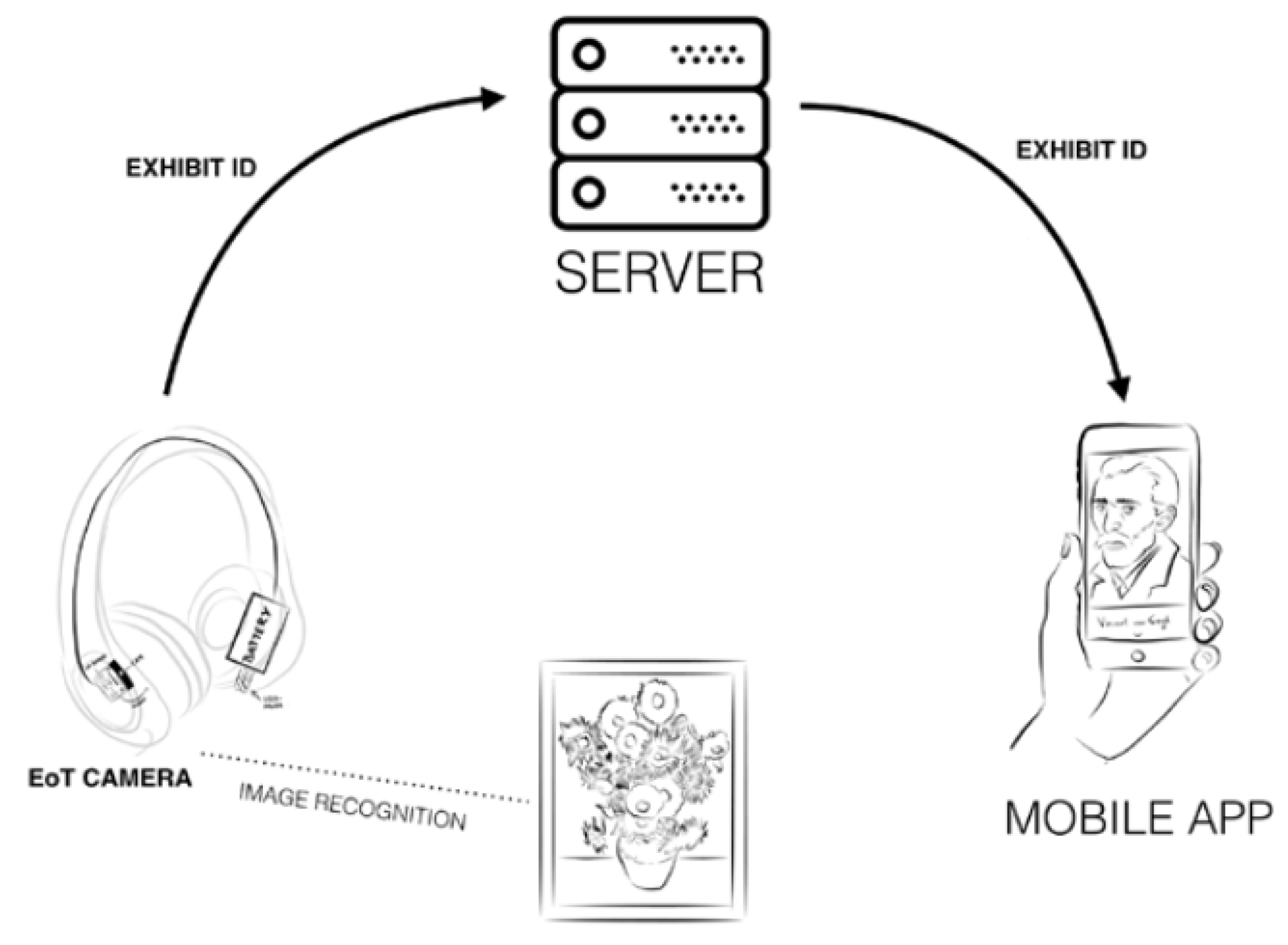

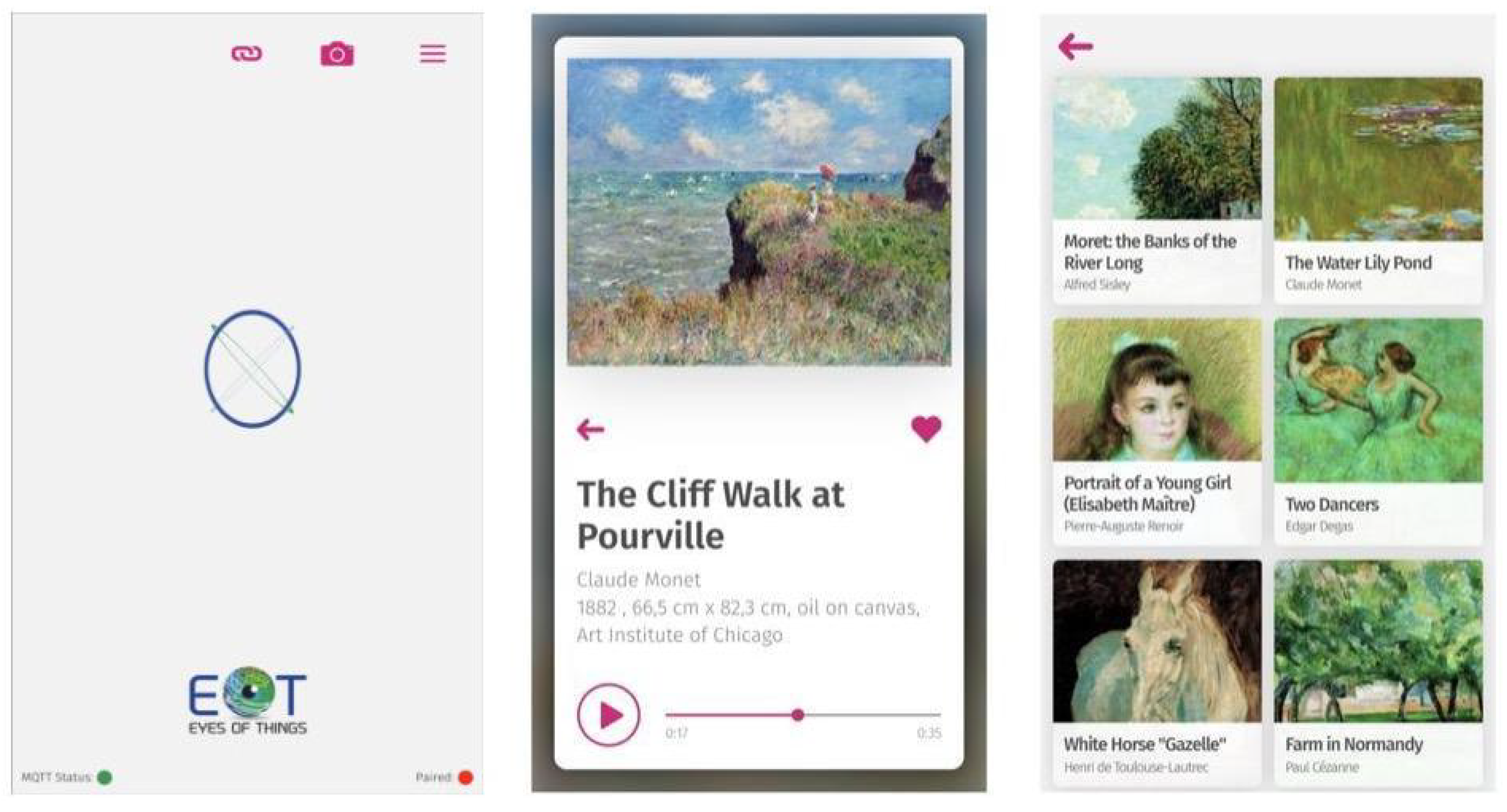

5.2. Scenario 2—Multimedia Museum Guide

- The visitor enters the exhibition and receives the enhanced headset, which connects to an application on the visitor’s smartphone. The “visitor application” can be downloaded to a smartphone or tablet; alternatively, visitors may receive a mobile touch device from the museum with the application pre-installed and already connected to the headset. An easy-to-use pairing mode quickly connects any smartphone to the museum guide device via the application, using the MQTT protocol combined with QR code recognition.

- The visitor explores the exhibition. The visitor puts on the headset and starts the museum visit. During the visit the device permanently tracks the view of the visitor. Image recognition algorithms automatically detect exhibits for which content is available.

- The system detects available exhibits. After an image recognition match (combined with a specific threshold time), the headset device notifies the mobile application via MQTT that a registered exhibit has been detected.

- The visitor receives rich content and/or performs interaction. The visitor is notified by an audio signal on the headphones and in the mobile application, and is then provided with rich content, for example, the biography of the artist, the “making of” of the image, other related artworks, etc. The user can interactively pinch and zoom through high-resolution images or watch videos. This makes the system a fully developed multimedia museum guiding system.
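The detection notification sent from the headset to the mobile application could look roughly as follows. The text only states that MQTT is used; the topic layout (`museum/headset/<device>/detected`), the JSON fields and both function names are assumptions made for this sketch, and the actual broker connection is deliberately left out.

```python
import json
from typing import Optional, Tuple

TOPIC_PREFIX = "museum/headset"  # hypothetical topic namespace


def detection_message(device_id: str, exhibit_id: str, timestamp: int) -> Tuple[str, bytes]:
    """Headset side: build the topic and payload announcing a detected exhibit."""
    topic = f"{TOPIC_PREFIX}/{device_id}/detected"
    payload = json.dumps({"exhibit": exhibit_id, "ts": timestamp}).encode("utf-8")
    return topic, payload


def handle_message(topic: str, payload: bytes, paired_device: str) -> Optional[str]:
    """App side: return the exhibit ID if the message comes from the paired headset."""
    parts = topic.split("/")
    if len(parts) != 4 or "/".join(parts[:2]) != TOPIC_PREFIX or parts[3] != "detected":
        return None  # not a detection topic
    if parts[2] != paired_device:
        return None  # message from some other visitor's headset
    return json.loads(payload.decode("utf-8"))["exhibit"]


# Hypothetical identifiers used only to exercise the two functions.
topic, payload = detection_message("eot-01", "painting-7", 1580000000)
exhibit = handle_message(topic, payload, paired_device="eot-01")
```

Putting the device ID into the topic lets each application subscribe only to its paired headset, so the application can then request the matching rich content from the CMS by exhibit ID.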

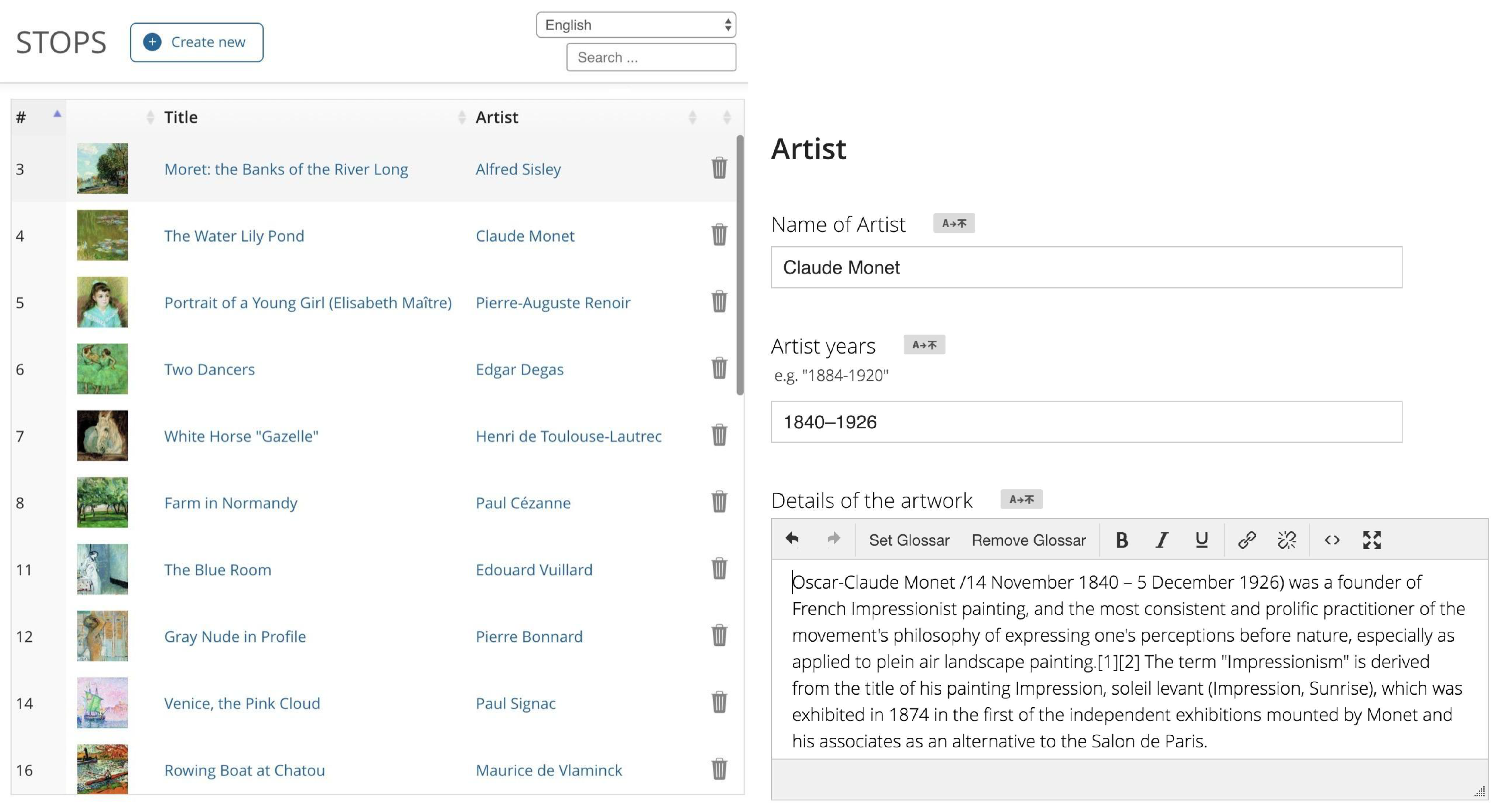

- Tech-Stack: Apache Webserver, PHP 7.0.13, MySQL Database, CodeIgniter PHP-Framework, NodeJS;

- Management and hosting of media files (images, audio);

- Management of all exhibits;

- HTTP-based API (JSON-format);

- Caching of files for increased performance.

- Via web browser (no additional software needed);

- Easy adding and editing of exhibits;

- Rich text editor for exhibit content;

- Photo/video/audio uploader with:

  - Automatic background conversion;

  - File size reduction mechanisms;

  - In-browser editing/cropping of images;

- File manager.

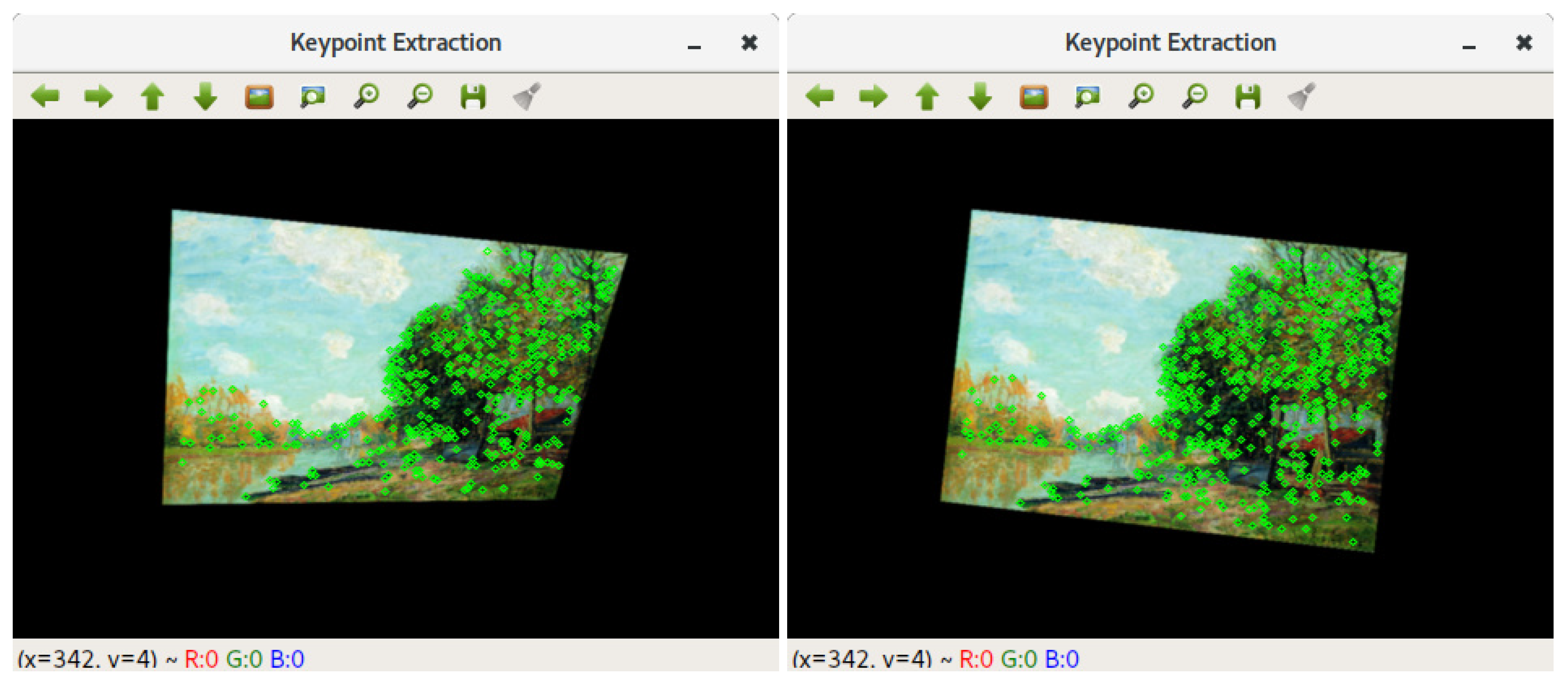

6. Painting Recognition

6.1. Keypoint Extraction

6.2. Keypoint Classification

6.3. Geometric Refinement

6.4. Model Training

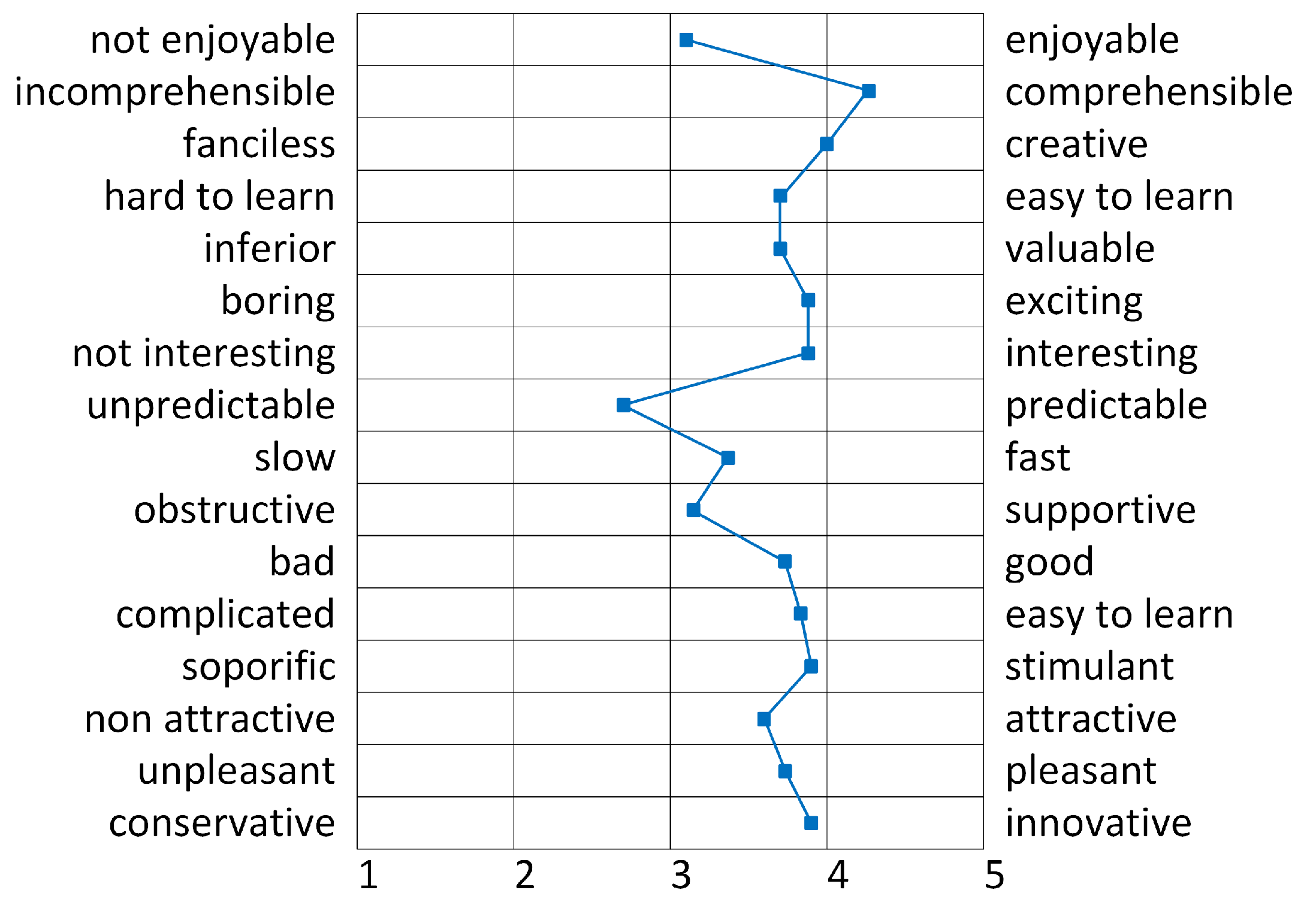

7. Results

7.1. Painting Recognition Results

- Pitch: degrees to degrees (looking from above or below);

- Yaw: degrees to degrees (looking from left or right);

- Roll: degrees to degrees (tilting the head/camera by up to 10 degrees).

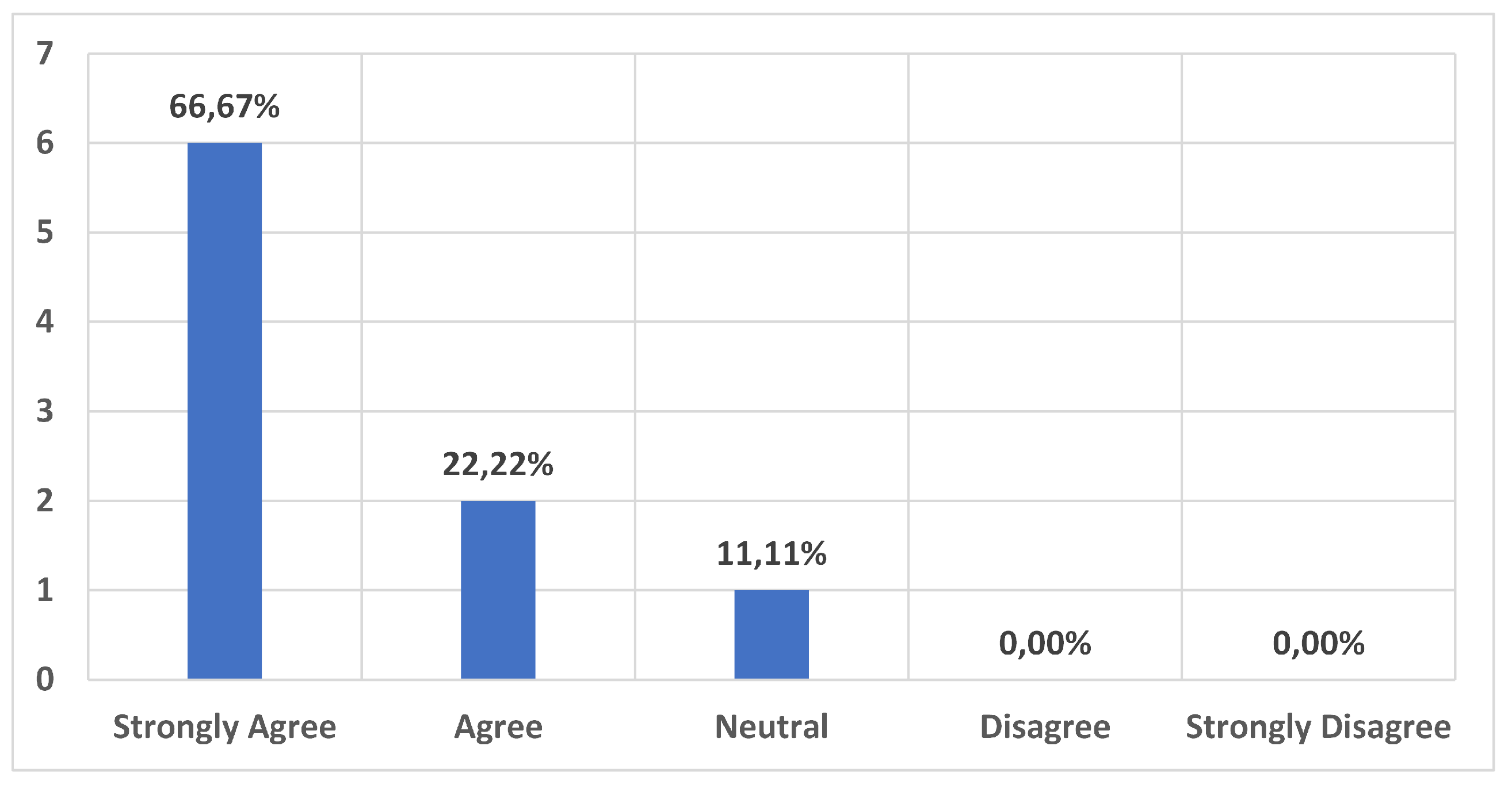

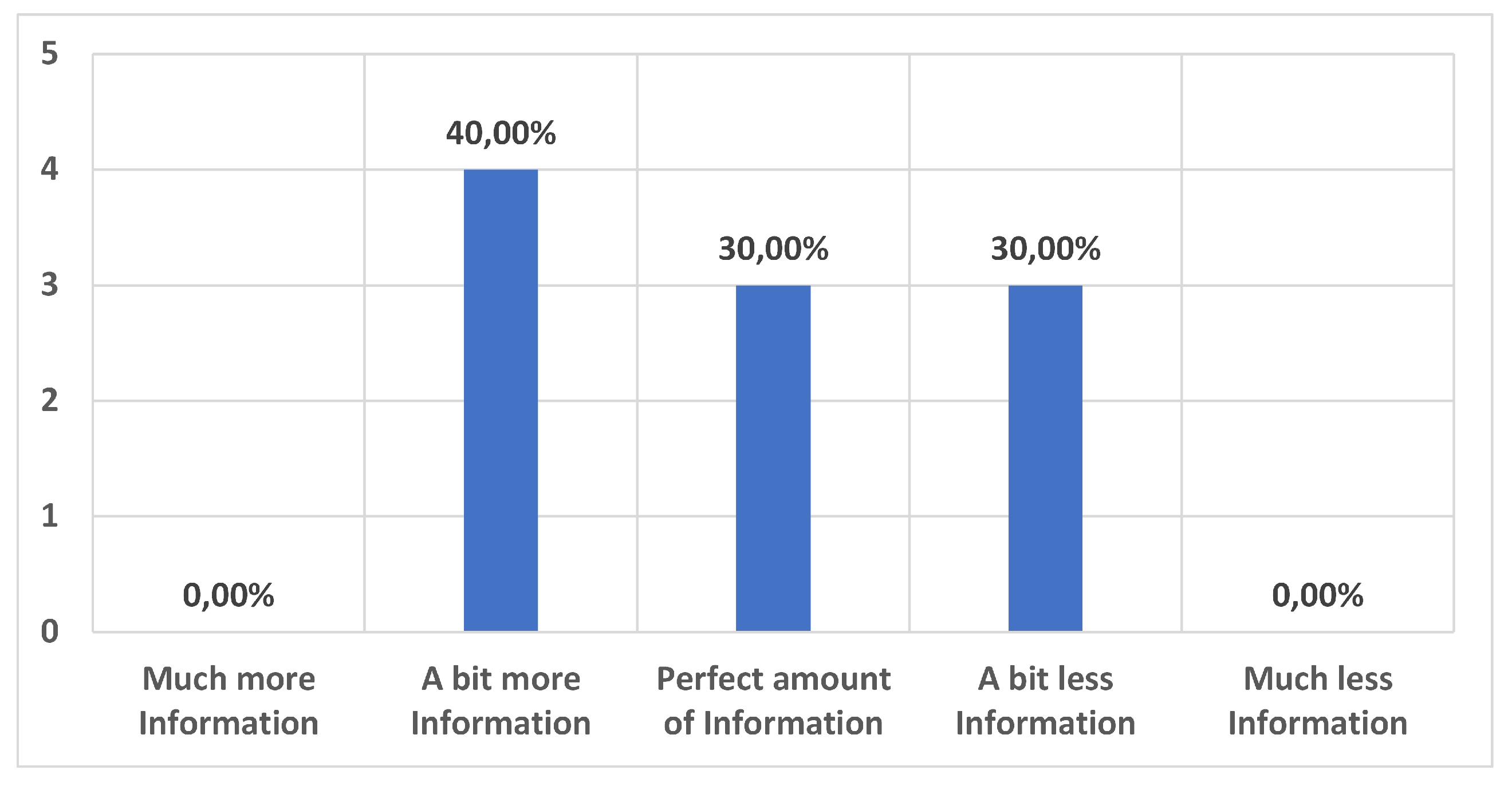

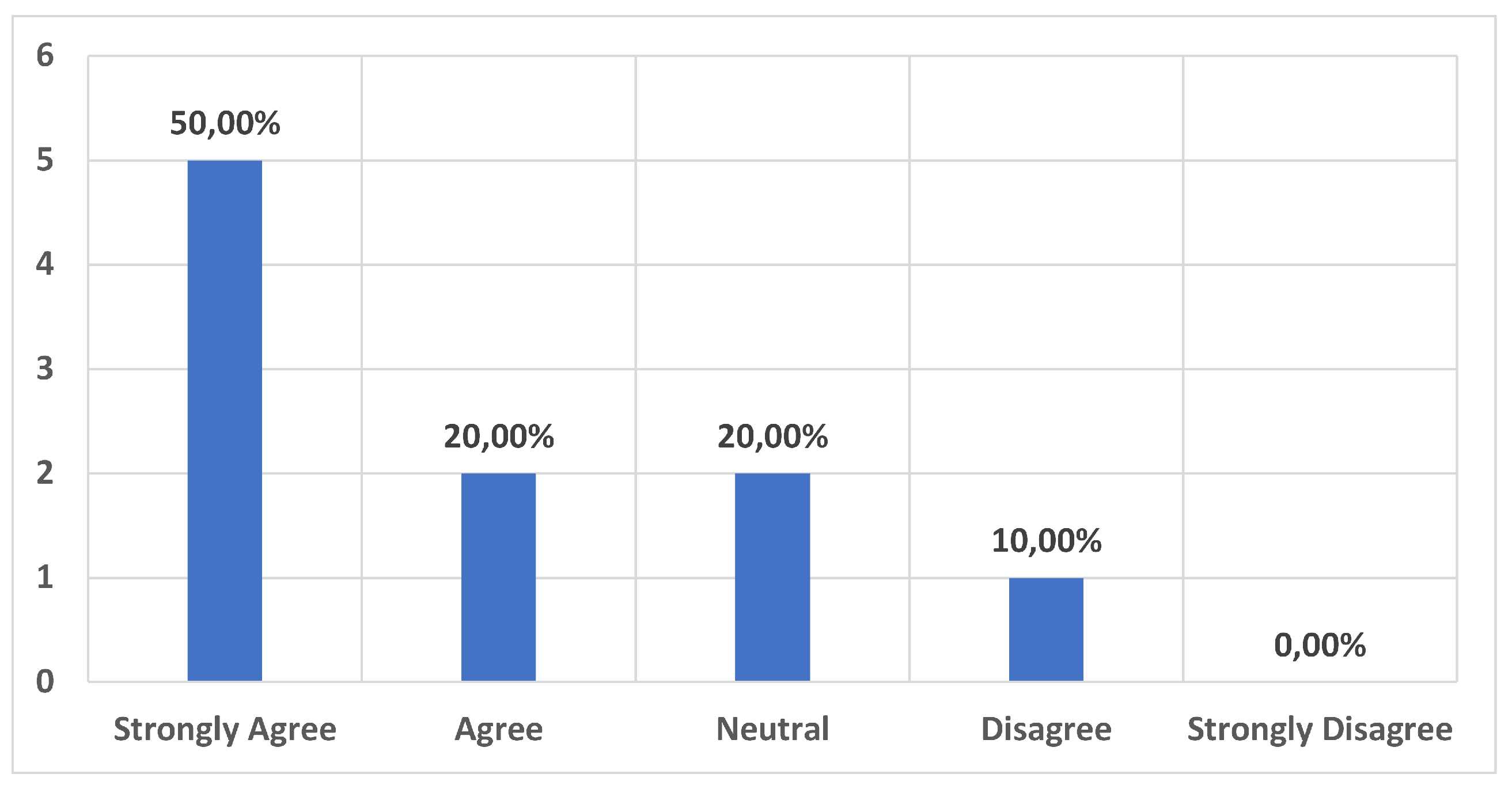

7.2. Test Pilot in Albertina Museum

7.3. Processing Time and Power Consumption

8. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Hu, F. Classification and Regression Trees, 1st ed.; CRC Press: Boca Raton, FL, USA, 2013.

2. European Commission. Report from the Workshop on Cyber-Physical Systems: Uplifting Europe’s Innovation Capacity. 2013. Available online: https://ec.europa.eu/digital-single-market/en/news/report-workshop-cyber-physical-systems-uplifting-europe’s-innovation-capacity (accessed on 31 January 2020).

3. Szeliski, R. Computer Vision: Algorithms and Applications; Springer Science & Business Media: London, UK, 2010.

4. Belbachir, A.N. Smart Cameras; Springer: London, UK, 2010.

5. BDTI. Implementing Vision Capabilities in Embedded Systems. Available online: https://www.bdti.com/MyBDTI/pubs/BDTI_ESC_Boston_Embedded_Vision.pdf (accessed on 31 January 2020).

6. Kisačanin, B.; Bhattacharyya, S.S.; Chai, S. Embedded Computer Vision; Springer International Publishing: Cham, Switzerland, 2009.

7. Bailey, D. Design for Embedded Image Processing on FPGAs; John Wiley & Sons Asia Pte Ltd.: Singapore, 2011.

8. Akyildiz, I.F.; Melodia, T.; Chowdhury, K.R. A survey on wireless multimedia sensor networks. Comput. Netw. 2007, 51, 921–960.

9. Farooq, M.O.; Kunz, T. Wireless multimedia sensor networks testbeds and state-of-the-art hardware: A survey. In Communication and Networking, Proceedings of the International Conference on Future Generation Communication and Networking, Jeju Island, Korea, 8–10 December 2011; Springer: Berlin/Heidelberg, Germany, 2011; pp. 1–14.

10. Almalkawi, I.T.; Guerrero Zapata, M.; Al-Karaki, J.N.; Morillo-Pozo, J. Wireless multimedia sensor networks: Current trends and future directions. Sensors 2010, 10, 6662–6717.

11. Soro, S.; Heinzelman, W. A Survey of Visual Sensor Networks. Available online: https://www.hindawi.com/journals/am/2009/640386/ (accessed on 30 January 2020).

12. Fernández-Berni, J.; Carmona-Galán, R.; Rodríguez-Vázquez, Á. Vision-enabled WSN nodes: State of the art. In Low-Power Smart Imagers for Vision-Enabled Sensor Networks; Springer: Berlin/Heidelberg, Germany, 2012; pp. 5–20.

13. Tavli, B.; Bicakci, K.; Zilan, R.; Barcelo-Ordinas, J.M. A survey of visual sensor network platforms. Multimedia Tools Appl. 2012, 60, 689–726.

14. Chen, P.; Ahammad, P.; Boyer, C.; Huang, S.I.; Lin, L.; Lobaton, E.; Meingast, M.; Oh, S.; Wang, S.; Yan, P.; et al. CITRIC: A low-bandwidth wireless camera network platform. In Proceedings of the 2008 Second ACM/IEEE International Conference on Distributed Smart Cameras, Stanford, CA, USA, 7–11 September 2008; pp. 1–10.

15. Hengstler, S.; Prashanth, D.; Fong, S.; Aghajan, H. MeshEye: A hybrid-resolution smart camera mote for applications in distributed intelligent surveillance. In Proceedings of the 6th International Conference on Information Processing in Sensor Networks, Cambridge, MA, USA, 25–27 April 2007; pp. 360–369.

16. Kerhet, A.; Magno, M.; Leonardi, F.; Boni, A.; Benini, L. A low-power wireless video sensor node for distributed object detection. J. Real-Time Image Process. 2007, 2, 331–342.

17. Kleihorst, R.; Abbo, A.; Schueler, B.; Danilin, A. Camera mote with a high-performance parallel processor for real-time frame-based video processing. In Proceedings of the 2007 IEEE Conference on Advanced Video and Signal Based Surveillance, London, UK, 5–7 September 2007; pp. 69–74.

18. Feng, W.C.; Code, B.; Kaiser, E.; Shea, M.; Feng, W.; Bavoil, L. Panoptes: A scalable architecture for video sensor networking applications. ACM Multimedia 2003, 1, 151–167.

19. Boice, J.; Lu, X.; Margi, C.; Stanek, G.; Zhang, G.; Manduchi, R.; Obraczka, K. Meerkats: A Power-Aware, Self-Managing Wireless Camera Network For Wide Area Monitoring. Available online: http://users.soe.ucsc.edu/~manduchi/papers/meerkats-dsc06-final.pdf (accessed on 30 January 2020).

20. Murovec, B.; Perš, J.; Kenk, V.S.; Kovačič, S. Towards commoditized smart-camera design. J. Syst. Archit. 2013, 59, 847–858.

21. Qualcomm, Snapdragon. Available online: http://www.qualcomm.com/snapdragon (accessed on 30 January 2020).

22. Deniz, O. EoT Project. Available online: http://eyesofthings.eu (accessed on 30 January 2020).

23. Deniz, O.; Vallez, N.; Espinosa-Aranda, J.L.; Rico-Saavedra, J.M.; Parra-Patino, J.; Bueno, G.; Moloney, D.; Dehghani, A.; Dunne, A.; Pagani, A.; et al. Eyes of Things. Sensors 2017, 17, 1173.

24. Wacker, P.; Kreutz, K.; Heller, F.; Borchers, J.O. Maps and Location: Acceptance of Modern Interaction Techniques for Audio Guides. In Proceedings of the 2016 CHI Conference on Human Factors in Computing Systems, San Jose, CA, USA, 7–12 May 2016; pp. 1067–1071.

25. Kenteris, M.; Gavalas, D.; Economou, D. Electronic mobile guides: A survey. Pers. Ubiquitous Comput. 2011, 15, 97–111.

26. Abowd, G.D.; Atkeson, C.G.; Hong, J.; Long, S.; Kooper, R.; Pinkerton, M. Cyberguide: A mobile context-aware tour guide. Wireless Netw. 1997, 3, 421–433.

27. Kim, D.; Seo, D.; Yoo, B.; Ko, H. Development and Evaluation of Mobile Tour Guide Using Wearable and Hand-Held Devices. In Proceedings of the International Conference on Human-Computer Interaction, Toronto, ON, Canada, 17–22 July 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 285–296.

28. Sikora, M.; Russo, M.; Đerek, J.; Jurčević, A. Soundscape of an Archaeological Site Recreated with Audio Augmented Reality. ACM Trans. Multimedia Comput. Commun. Appl. 2018, 14, 74.

29. Lee, G.A.; Dünser, A.; Kim, S.; Billinghurst, M. CityViewAR: A mobile outdoor AR application for city visualization. In Proceedings of the 2012 IEEE International Symposium on Mixed and Augmented Reality-Arts, Media, and Humanities (ISMAR-AMH), Atlanta, GA, USA, 5–8 November 2012; pp. 57–64.

30. Dünser, A.; Billinghurst, M.; Wen, J.; Lehtinen, V.; Nurminen, A. Exploring the use of handheld AR for outdoor navigation. Comput. Graphics 2012, 36, 1084–1095.

31. Baldauf, M.; Fröhlich, P.; Hutter, S. KIBITZER: A wearable system for eye-gaze-based mobile urban exploration. In Proceedings of the 1st Augmented Human International Conference, Megève, France, 2–3 April 2010; pp. 1–5.

32. Szymczak, D.; Rassmus-Gröhn, K.; Magnusson, C.; Hedvall, P.O. A real-world study of an audio-tactile tourist guide. In Proceedings of the 14th International Conference on Human-Computer Interaction with Mobile Devices and Services, San Francisco, CA, USA, 21–24 September 2012; pp. 335–344.

33. Lim, J.H.; Li, Y.; You, Y.; Chevallet, J.P. Scene Recognition with Camera Phones for Tourist Information Access. In Proceedings of the 2007 IEEE International Conference on Multimedia and Expo, Beijing, China, 2–5 July 2007; pp. 100–103.

34. Skoryukina, N.; Nikolaev, D.P.; Arlazarov, V.V. 2D art recognition in uncontrolled conditions using one-shot learning. In Proceedings of the International Conference on Machine Vision, Amsterdam, The Netherlands, 1 October 2019; p. 110412E.

35. Fasel, B.; Gool, L.V. Interactive Museum Guide: Accurate Retrieval of Object Descriptions. In Adaptive Multimedia Retrieval; Springer: Berlin/Heidelberg, Germany, 2006; pp. 179–191.

36. Temmermans, F.; Jansen, B.; Deklerck, R.; Schelkens, P.; Cornelis, J. The mobile Museum guide: Artwork recognition with eigenpaintings and SURF. In Proceedings of the 12th International Workshop on Image Analysis for Multimedia Interactive Services, Delft, The Netherlands, 13–15 April 2011.

37. Greci, L. An Augmented Reality Guide for Religious Museum. In Proceedings of the International Conference on Augmented Reality, Virtual Reality and Computer Graphics, Lecce, Italy, 15–18 June 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 280–289.

38. Raptis, G.E.; Katsini, C.P.; Chrysikos, T. CHISTA: Cultural Heritage Information Storage and reTrieval Application. In Proceedings of the 6th EuroMed Conference, Nicosia, Cyprus, 29 October–3 November 2018; pp. 163–170.

39. Ali, S.; Koleva, B.; Bedwell, B.; Benford, S. Deepening Visitor Engagement with Museum Exhibits through Hand-crafted Visual Markers. In Proceedings of the 2018 Designing Interactive Systems Conference (DIS ’18), Hong Kong, China, 9–13 June 2018; pp. 523–534.

40. Ng, K.H.; Huang, H.; O’Malley, C. Treasure codes: Augmenting learning from physical museum exhibits through treasure hunting. Pers. Ubiquitous Comput. 2018, 22, 739–750.

41. Wein, L. Visual recognition in museum guide apps: Do visitors want it? In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Toronto, ON, Canada, 26 April 2014; pp. 635–638.

42. Ruf, B.; Kokiopoulou, E.; Detyniecki, M. Mobile Museum Guide Based on Fast SIFT Recognition. In Adaptive Multimedia Retrieval. Identifying, Summarizing, and Recommending Image and Music; Detyniecki, M., Leiner, U., Nürnberger, A., Eds.; Springer: Berlin/Heidelberg, Germany, 2010; pp. 170–183.

43. Serubugo, S.; Skantarova, D.; Nielsen, L.; Kraus, M. Comparison of Wearable Optical See-through and Handheld Devices as Platform for an Augmented Reality Museum Guide. In Proceedings of the 12th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications; SCITEPRESS Digital Library: Berlin, Germany, 2017; pp. 179–186.

44. Altwaijry, H.; Moghimi, M.; Belongie, S. Recognizing locations with google glass: A case study. In Proceedings of the IEEE winter conference on applications of computer vision, Steamboat Springs, CO, USA, 24–26 March 2014; pp. 167–174.

45. Yanai, K.; Tanno, R.; Okamoto, K. Efficient mobile implementation of a cnn-based object recognition system. In Proceedings of the 24th ACM International Conference on Multimedia, Amsterdam, The Netherlands, October 2016; pp. 362–366.

46. Seidenari, L.; Baecchi, C.; Uricchio, T.; Ferracani, A.; Bertini, M.; Bimbo, A.D. Deep artwork detection and retrieval for automatic context-aware audio guides. ACM Trans. Multimedia Comput. Commun. Appl. 2017, 13, 35.

47. Seidenari, L.; Baecchi, C.; Uricchio, T.; Ferracani, A.; Bertini, M.; Del Bimbo, A. Wearable systems for improving tourist experience. In Multimodal Behavior Analysis in the Wild; Elsevier: Amsterdam, The Netherlands, 2019; pp. 171–197.

48. Crystalsound Audio Guide. Available online: https://crystal-sound.com/en/audio-guide (accessed on 30 January 2020).

49. Locatify. Available online: https://locatify.com/ (accessed on 30 January 2020).

50. Copernicus Guide. Available online: http://www.copernicus-guide.com/en/index-museum.html (accessed on 30 January 2020).

51. xamoom Museum Guide. Available online: https://xamoom.com/museum/ (accessed on 30 January 2020).

52. Orpheo Touch. Available online: https://orpheogroup.com/us/products/visioguide/orpheo-touch (accessed on 30 January 2020).

53. Headphone Weight. Available online: https://www.headphonezone.in/pages/headphone-weight (accessed on 30 January 2020).

54. OASIS Standards: MQTT v3.1.1. Available online: https://www.oasis-open.org/standards (accessed on 30 January 2020).

55. Espinosa-Aranda, J.L.; Vállez, N.; Sanchez-Bueno, C.; Aguado-Araujo, D.; García, G.B.; Déniz-Suárez, O. Pulga, a tiny open-source MQTT broker for flexible and secure IoT deployments. In Proceedings of the 2015 IEEE Conference on Communications and Network Security (CNS), Florence, Italy, 28–30 September 2015; pp. 690–694.

56. Monteiro, D.M.; Rodrigues, J.J.P.C.; Lloret, J. A secure NFC application for credit transfer among mobile phones. In Proceedings of the 2012 International Conference on Computer, Information and Telecommunication Systems (CITS), Amman, Jordan, 13 May 2012; pp. 1–5.

57. Lepetit, V.; Pilet, J.; Fua, P. Point matching as a classification problem for fast and robust object pose estimation. In Proceedings of the 2004 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Washington, DC, USA, 27 June–2 July 2004; Volume 2, pp. II-244–II-250.

58. Espinosa-Aranda, J.; Vallez, N.; Rico-Saavedra, J.; Parra-Patino, J.; Bueno, G.; Sorci, M.; Moloney, D.; Pena, D.; Deniz, O. Smart Doll: Emotion Recognition Using Embedded Deep Learning. Symmetry 2018, 10, 387.

59. Sanderson, C.; Paliwal, K.K. Fast features for face authentication under illumination direction changes. Pattern Recognit. Lett. 2003, 24, 2409–2419.

60. Rosten, E.; Porter, R.; Drummond, T. Faster and better: A machine learning approach to corner detection. IEEE Trans. Pattern Anal. Mach. Intell. 2008, 32, 105–119.

61. Tareen, S.A.K.; Saleem, Z. A comparative analysis of sift, surf, kaze, akaze, orb, and brisk. In Proceedings of the 2018 International Conference on Computing, Mathematics and Engineering Technologies (iCoMET), Sukkur, Pakistan, 30 November 2018; pp. 1–10.

62. Svetnik, V.; Liaw, A.; Tong, C.; Culberson, J.C.; Sheridan, R.P.; Feuston, B.P. Random Forest: A Classification and Regression Tool for Compound Classification and QSAR Modeling. J. Chem. Inf. Comput. Sci. 2003, 43, 1947–1958.

63. Breiman, L.; Friedman, J.H.; Olshen, R.A.; Stone, C.J. Classification and Regression Trees; Wadsworth and Brooks: Monterey, CA, USA, 1984.

64. Bosch, A.; Zisserman, A.; Munoz, X. Image classification using random forests and ferns. In Proceedings of the 2007 IEEE 11th international conference on computer vision, Rio de Janeiro, Brazil, 14–20 October 2007; pp. 1–8.

65. Fischler, M.A.; Bolles, R.C. Random Sample Consensus: A Paradigm for Model Fitting with Applications to Image Analysis and Automated Cartography. Commun. ACM 1981, 24, 381–395.

66. Nvidia Developer Blogs: NVIDIA® Jetson™ TX1 Supercomputer-on-Module Drives Next Wave of Autonomous Machines. Available online: https://devblogs.nvidia.com/ (accessed on 30 January 2020).

| Application | Method | Advantages | Disadvantages |
|---|---|---|---|
| Audio Guide Crystalsound [48] | GPS positioning | Low consumption and size. Hands-free. | Indoor positioning not included. |
| Locatify Museum Guide [49] | Mobile phone app. Positioning | Outdoor and indoor positioning. | Power consumption. The user has to select the information from a list because several art pieces are close. Not hands-free. |
| Copernicus Guide [50] | Mobile phone app. Positioning | Tours are initially downloaded and are available offline. Outdoor and indoor positioning. | Power consumption. Not hands-free. |
| xamoom Museum Guide [51] | Mobile phone app. QR/NFC scanner | Easy to use. | Power consumption. Not fully automatic since the user has to scan the QR code. |
| Orpheo Touch Multimedia Guide [52] | GPS + Camera | Augmented Reality. | Power consumption. Not hands-free. |

| Camera | Resolution | FPS | Consumption |
|---|---|---|---|
| AMS International AG/Awaiba NanEye2D | pixels | 60 FPS | 5 mW |
| AMS International AG/Awaiba NanEyeRS | pixels | 50 FPS | 13 mW |
| Himax HM01B0 | pixels | 30 FPS | <2 mW |
| Himax HM01B0 | pixels | 30 FPS | 1.1 mW |
| Sony MIPI IMX208 | pixels | 30 FPS | 66 mW |

| ID | Depth | Trees | Inliers | Classification |
|---|---|---|---|---|
| 1 | 8 | 16 | 85.8 | 89.08% |
| 2 | 8 | 16 | 49.3 | 95.26% |
| 3 | 8 | 16 | 54.2 | 93.71% |
| 4 | 8 | 16 | 84.7 | 96.68% |
| 5 | 8 | 16 | 92.9 | 92.72% |
| 6 | 8 | 16 | 94.9 | 77.12% |
| 7 | 8 | 16 | 92.1 | 90.18% |
| 8 | 8 | 16 | 62.3 | 75.18% |
| 9 | 8 | 16 | 81.5 | 85.18% |
| 10 | 8 | 20 | 102 | 76.86% |
| 11 | 8 | 20 | 112 | 80.35% |
| 12 | 8 | 20 | 93.3 | 76.83% |
| 13 | 8 | 16 | 103 | 86.12% |
| 14 | 8 | 16 | 112.3 | 86.011% |
| 15 | 8 | 16 | 49.5 | 83.4% |
| 16 | 8 | 16 | 106 | 81.99% |
| 17 | 8 | 16 | 79.1 | 85.22% |
| 18 | 8 | 16 | 98.2 | 81.39% |
| 19 | 8 | 16 | 125 | 83.74% |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Vallez, N.; Krauss, S.; Espinosa-Aranda, J.L.; Pagani, A.; Seirafi, K.; Deniz, O. Automatic Museum Audio Guide. Sensors 2020, 20, 779. https://doi.org/10.3390/s20030779
