Abstract

As core tasks of scene understanding, semantic segmentation and depth completion play a vital role in many applications such as robot navigation, AR/VR and autonomous driving. They parse scenes from the perspectives of semantics and geometry, respectively. While great progress has been made in both tasks through deep learning, few works have built a joint model that deeply explores the inner relationship between them. In this paper, semantic segmentation and depth completion are jointly considered under a multi-task learning framework. By sharing a common encoder and introducing boundary features as inner constraints in the decoders, the two tasks can share the information they need from each other. An extra boundary detection sub-task provides the boundary features and is used to construct cross-task joint loss functions for network training. The entire network is implemented end-to-end and evaluated with both RGB and sparse depth input. Experiments conducted on synthetic and real scene datasets show that the proposed multi-task CNN model effectively improves the performance of each single task.

1. Introduction

Scene understanding [1,2,3] is an essential task in many intelligent applications such as robot navigation, AR/VR and autonomous driving. As the core of scene understanding, semantic segmentation [4,5,6,7,8] and depth estimation [9,10,11,12] parse the scene from the perspectives of semantics and geometry, respectively. In recent years, much research has focused on either depth estimation or semantic segmentation, and deep learning has brought great success to both.

Semantic segmentation refers to classifying and labeling each pixel in an image, thereby dividing the image into semantically meaningful regions and converting the original color image into a pixel-level class-labeled image. Following the success of deep neural networks in image classification [13], the fully convolutional network (FCN) [5] made pixel-level semantic labeling possible. Building on FCN, later studies developed deconvolution-based architectures to improve segmentation accuracy [6,7]. DeepLab [8] proposes atrous convolution to tackle the low-resolution features caused by the traditional cascaded pooling structure. Their later work [14] introduces bottleneck and skip-connection structures within the ResNet [15] framework. ERFNet [16] further proposes a non-bottleneck structure to reduce computational complexity. Extra data sources can also improve performance through feature fusion: dense or sparse depth images, acquired with range sensors such as Kinect or LiDAR, are used to strengthen semantic segmentation results [17,18]. A depth-aware CNN [19] is proposed to optimize the convolution operation with sparse depth input. To further improve segmentation of small objects and of regions near the borders between classes, boundary-aware convolution was proposed in our previous work [20].

Depth completion refers to predicting dense depth for every pixel given sparse depth input. A closely related area is depth estimation from a single RGB image. Many deep learning based algorithms have been proposed for single-image depth estimation [21,22]; they commonly suffer from over-fitting, since the problem itself is ill-posed. In contrast, depth completion [12,23] can achieve better performance than depth estimation from RGB, since sparse depth points are available as references. IP-Basic [23] uses carefully designed morphological operations, e.g., inversion, dilation and hole closure, to perform depth completion. Following FCN's network structure, some methods treat depth completion as depth upsampling with pixel-level prediction [11]. To handle missing data, Uhrig et al. [12] propose sparsity-invariant convolution, which accounts for the irregular distribution of sparse inputs through a mask-based convolution that operates on valid data only.
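The mask-normalized operation behind sparsity-invariant convolution [12] can be sketched in plain Python for a single channel. This is our reading of the formulation in Uhrig et al.: a weighted sum over observed pixels only, divided by the number of observed pixels in the window, plus a bias, with the validity mask propagated by a max over the window. The function name, the treatment of out-of-image positions as unobserved and the small epsilon are our own choices.

```python
def sparse_conv2d(x, mask, weight, bias=0.0, eps=1e-8):
    """Sparsity-invariant convolution on one channel (illustrative sketch).

    x, mask: H x W lists of floats; mask is 1.0 where x holds a measurement.
    weight: k x k kernel with odd k. Positions outside the image count as
    unobserved, so the output keeps the input size.
    Returns (output, propagated_mask).
    """
    height, width = len(x), len(x[0])
    k = len(weight)
    r = k // 2
    out = [[0.0] * width for _ in range(height)]
    new_mask = [[0.0] * width for _ in range(height)]
    for i in range(height):
        for j in range(width):
            acc, count, seen = 0.0, 0.0, 0.0
            for u in range(k):
                for v in range(k):
                    ii, jj = i + u - r, j + v - r
                    if 0 <= ii < height and 0 <= jj < width:
                        m = mask[ii][jj]
                        acc += weight[u][v] * x[ii][jj] * m
                        count += m
                        seen = max(seen, m)
            # Normalize by the number of observed inputs so the response does
            # not depend on how many pixels in the window happen to be valid.
            out[i][j] = acc / (count + eps) + bias
            # A position becomes valid if any input in its window was valid.
            new_mask[i][j] = seen
    return out, new_mask


# Two measurements of 5.0 on a 3 x 3 grid, filtered with a kernel of ones.
demo_x = [[5.0, 0.0, 0.0], [0.0, 0.0, 0.0], [0.0, 0.0, 5.0]]
demo_mask = [[1.0, 0.0, 0.0], [0.0, 0.0, 0.0], [0.0, 0.0, 1.0]]
demo_kernel = [[1.0] * 3 for _ in range(3)]
demo_out, demo_new_mask = sparse_conv2d(demo_x, demo_mask, demo_kernel)
```

With this kernel, an ordinary convolution would return 10 at the centre (two measurements in the window) but 5 at the top-left corner (one measurement); the normalized version returns about 5 at both, while windows without any measurement stay at the bias and remain masked out.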

Data fusion is an effective way to improve task performance. In recent years, studies utilizing heterogeneous data [17,24,25,26,27,28,29] for semantic or depth tasks have been carried out. Couprie [17] concatenates the RGB and depth images and builds a multi-scale semantic segmentation network with early fusion. Hazirbas proposes a middle-fusion structure, FuseNet [24], for better RGB-D semantic segmentation. For depth completion, image-guided methods have attracted considerable attention. Ma [25] first introduces RGB and sparse depth simultaneously to achieve better depth estimation. Jaritz [26] improves on this work by combining RGB and depth features with late fusion in a multi-task NASNet-based [27] network. Besides RGB data, researchers have also attempted to integrate other features, such as obstacle boundaries and surface normals [28,29].

Jointly modeling multiple closely related tasks [26] is another feasible way to improve overall performance. Conditional random fields (CRFs) are commonly used to solve scene-understanding problems through multi-task joint modeling. Murphy [30] first proposed a multi-task joint model for scene classification and object detection in 2003, which effectively combines global and local feature information in image space. Semantic segmentation, which is closely related to tasks such as object detection and classification, can be improved by multi-task learning [31,32,33,34]. A cross-stitch structure between feature layers is proposed to better learn shared features [33]. Uhrig [34] further studies semantic and instance segmentation together based on a fully convolutional network. In [35], Kendall proposes learning the weights between the loss functions of different tasks, i.e., semantic segmentation, instance segmentation and depth estimation, and achieves better performance.

In this paper, we focus on performing semantic segmentation and depth completion simultaneously. We believe the two tasks share hidden similarities and relationships, although these are not obvious. A heuristic observation is that depth values within the same semantic region, such as a road or a wall, tend to follow similar distribution patterns. Furthermore, although the two tasks depend on heterogeneous data inputs, i.e., color images and sparse range data respectively, there is evidence that using both inputs benefits each task [20].

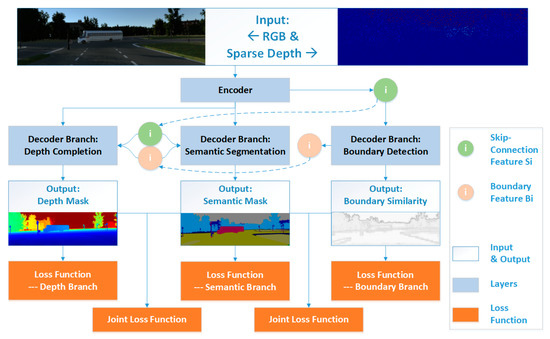

Building upon our previous work on boundary-aware CNN [20] for semantic segmentation, we propose a multi-task CNN that simultaneously predicts the semantic labels and dense depth of a scene. Besides the networks for the two major tasks, an extra boundary detection sub-net is also designed in the framework. Using the boundary as a regularizing constraint between the two major tasks, both the semantic and the dense depth predictions can be substantially improved. The entire network adopts a single-encoder-multi-decoder architecture, which extracts features suitable for all three sub-tasks during encoding. Skip connections are also used to pass features to each of the separate sub-task decoders and strengthen feature sharing. To the best of our knowledge, this is the first time that semantic segmentation and depth completion have been jointly modeled in such a closely coupled manner. The experimental results show that our end-to-end multi-task CNN boosts the performance of each task. The framework of the proposed simultaneous semantic segmentation and depth completion multi-task network (SSDNet) is shown in Figure 1.

Figure 1.

Proposed simultaneous semantic segmentation and depth completion multi-task network (SSDNet). Boundary constraint is embedded into the two major tasks via multi-scale feature sharing and cross-task joint loss function.

The main contributions of our work are threefold. First, a triple-task network with a single-encoder-multi-decoder architecture is designed to predict the semantic labels and dense depth of an image simultaneously. It takes RGB and sparse depth data as input and can exploit the complementary information in the heterogeneous data. Second, a boundary constraint is embedded into the two major tasks via multi-scale feature sharing and cross-task joint loss functions. The boundary acts as a bridge connecting the semantic and depth prediction tasks and strengthens the relationship between them. Third, the entire network is implemented end-to-end and evaluated on different datasets. Experiments on both synthetic (Virtual KITTI [36]) and real (Cityscapes [37]) datasets demonstrate that our method effectively improves the performance of both depth completion and semantic segmentation.

2. Proposed Methods

In this section, the proposed network architecture is introduced first, followed by the loss functions used for training the network.

2.1. Network Architecture

The overall network is based on the FCN structure with a single shared encoder and multiple branch decoders. As shown in Figure 1, the proposed SSDNet is mainly composed of a feature-sharing encoder and three branch decoders corresponding to the boundary-detection, semantic-segmentation and depth-completion tasks, respectively.

2.1.1. Feature-Sharing Encoder

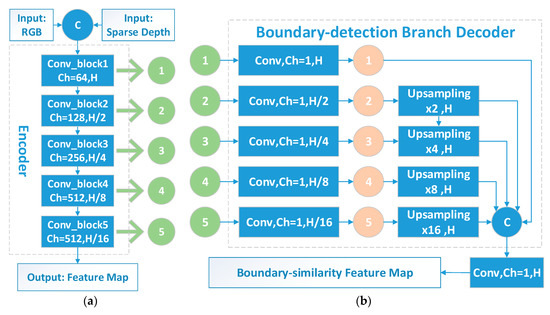

The main structure of the proposed feature-sharing encoder is based on VGG's [13] multi-scale cascaded convolutions, as shown in Figure 2a. It consists of 5 convolution blocks, denoted as Conv_block. The scaled output features of each Conv_block, marked as green nodes, are transferred to all three subsequent decoder branches via skip connections, as shown in Figure 1.

Figure 2.

Proposed feature-sharing encoder (a) and boundary-detection decoder (b). Blue node C refers to the concatenation layer. The numbered green and pink nodes represent the skipped features and the boundary-similarity features from the corresponding scale, respectively. H represents the raw resolution of the input image; the resolution after each pooling stage and the output channel number of each Conv_Block are marked in the figure. The bilinear interpolation upsampling layer in (b) is denoted as Upsampling together with its upsampling ratio.
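The per-scale resolutions indicated in Figure 2 can be traced with simple arithmetic. This sketch assumes, as in VGG, that each of the five Conv_blocks ends with 2 × 2 max pooling of stride 2, so every spatial size is floor-divided by 2; the helper names are hypothetical. It also flags scales where a fixed ×2 upsampling of the coarser map would not match the skip feature of the finer scale, which happens for odd sizes such as the 125 × 414 input used for the FLOP counts in Section 3.2.1.

```python
def encoder_resolutions(height, width, num_blocks=5):
    """Input size followed by the size after each pooling stage.

    Assumes each Conv_block ends with 2 x 2 max pooling, stride 2, no padding,
    so each spatial size is floor-divided by 2 (VGG-style).
    """
    sizes = [(height, width)]
    for _ in range(num_blocks):
        height, width = height // 2, width // 2
        sizes.append((height, width))
    return sizes


def needs_resize(sizes):
    """Scales whose x2-upsampled map does not match the next finer skip feature."""
    mismatched = []
    for level in range(1, len(sizes)):
        coarse_h, coarse_w = sizes[level]
        fine_h, fine_w = sizes[level - 1]
        if (2 * coarse_h, 2 * coarse_w) != (fine_h, fine_w):
            mismatched.append(level)
    return mismatched


demo_vkitti = encoder_resolutions(125, 414)
demo_cityscapes = encoder_resolutions(512, 1024)
print(demo_vkitti, needs_resize(demo_vkitti))
print(demo_cityscapes, needs_resize(demo_cityscapes))
```

For 512 × 1024 inputs every scale halves exactly, whereas for 125 × 414 all five scales mismatch, so an implementation would need to resize (or crop/pad) the upsampled map to the skip feature's size before concatenation.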

2.1.2. Decoder for Boundary Detection

The boundary is valuable information that hints at discontinuities in the semantic labels and the depth image. Therefore, a boundary detection decoder is designed to provide the required boundary similarity to the other two major tasks. As shown in Figure 2b, different scales of skip-connection features are fed into the boundary-detection branch together with the output of the encoder. The boundary computed from the semantic ground-truth image serves as the supervision signal for training the boundary detection branch. The boundary feature maps from each scale, marked as pink nodes in Figure 2b, are then passed to the subsequent semantic segmentation and depth completion branches.
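A boundary label map can be derived from the semantic ground truth by marking label discontinuities. In this sketch, a pixel is a boundary pixel if any of its 4-connected neighbours carries a different class; the exact rule and boundary width used for SSDNet are not specified in the text, so this definition is an assumption.

```python
def boundary_from_labels(labels):
    """Binary boundary map (0/1) from an H x W grid of integer class labels.

    Assumed rule: a pixel is marked 1 if any 4-connected neighbour has a
    different label; image borders by themselves are not boundaries.
    """
    height, width = len(labels), len(labels[0])
    boundary = [[0] * width for _ in range(height)]
    for i in range(height):
        for j in range(width):
            for di, dj in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                ni, nj = i + di, j + dj
                if 0 <= ni < height and 0 <= nj < width and labels[ni][nj] != labels[i][j]:
                    boundary[i][j] = 1
                    break
    return boundary


demo_labels = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [2, 2, 2, 2],
]
demo_boundary = boundary_from_labels(demo_labels)
for row in demo_boundary:
    print(row)
```

Both sides of a class transition are marked, which gives a two-pixel-wide boundary; a one-sided rule (only the right or lower neighbour) would give a thinner map.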

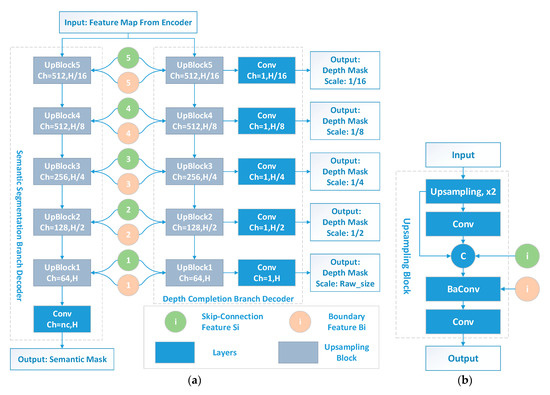

2.1.3. Decoder for Semantic Segmentation and Depth Completion

Besides the feature maps from the encoder, the semantic and depth decoder branches also share the multi-scale skipped features and the boundary feature maps, as shown in Figure 3a. The core modules of both branches are a series of UpBlocks, in which the boundary feature is absorbed to construct a special boundary-aware convolution layer, BaConv [20], as illustrated in Figure 3b. Guided by the boundary, boundary-aware convolution can focus on regions with similar semantic features and gather their contributions more adaptively to produce the output. The operation of boundary-aware convolution is shown in Equation (1):

where the output at a target position is produced by combining three parts, i.e., the kernel weights, the input feature map and the boundary-similarity feature. The summation runs over each position in the local window around the target position. The window size is defined by the convolution kernel size, and the kernel weights are determined through training.

Figure 3.

Semantic segmentation and depth completion decoder (a) and UpBlock structure (b). The number of semantic classes is denoted in the figure. BaConv refers to the boundary-aware convolution [20]. Bilinear interpolation upsampling with a ratio of 2 (Upsampling, ×2) is used in (b).

Compared with standard convolution, BaConv introduces the boundary-similarity term, which adaptively sets the contribution of each position in the window. In BaConv, pixels with higher similarity to object boundaries have a higher boundary-similarity value and therefore less impact on the convolution result.
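A single-position sketch of the boundary-aware convolution described above, for one input channel. Because Equation (1) is not reproduced in this text, the modulation factor (1 - b) is our assumption, chosen to match the description that pixels with higher boundary similarity contribute less; the exact form and any normalization in BaConv [20] may differ, and the function name is hypothetical.

```python
def boundary_aware_conv_at(x, b, weight, i, j):
    """Boundary-aware convolution output at position (i, j) (sketch).

    x: H x W input feature map (one channel).
    b: H x W boundary-similarity map with values in [0, 1].
    weight: k x k kernel with odd k; positions outside the map are skipped.
    Assumed form: y(p) = sum_n w(p_n) * x(p + p_n) * (1 - b(p + p_n)).
    """
    height, width = len(x), len(x[0])
    r = len(weight) // 2
    y = 0.0
    for u, row in enumerate(weight):
        for v, w_uv in enumerate(row):
            ii, jj = i + u - r, j + v - r
            if 0 <= ii < height and 0 <= jj < width:
                # A pixel that looks like a boundary (b close to 1) is suppressed.
                y += w_uv * x[ii][jj] * (1.0 - b[ii][jj])
    return y


# Left two columns belong to one region, the right column lies across a boundary.
demo_x = [[1.0, 1.0, 9.0] for _ in range(3)]
demo_b = [[0.0, 0.0, 1.0] for _ in range(3)]
demo_zero_b = [[0.0] * 3 for _ in range(3)]
demo_k = [[1.0 / 9] * 3 for _ in range(3)]
plain = boundary_aware_conv_at(demo_x, demo_zero_b, demo_k, 1, 1)
aware = boundary_aware_conv_at(demo_x, demo_b, demo_k, 1, 1)
print(plain, aware)
```

With zero boundary similarity everywhere the function reduces to an ordinary convolution at that position (33/9 here); marking the right-hand column as boundary removes its contribution (6/9), so the output reflects only the region containing the target pixel.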

After the five UpBlocks, a 1 × 1 convolution layer with as many output channels as there are classes produces the final semantic results. In the depth completion branch, a 1 × 1 convolution layer with one output channel follows each UpBlock and produces a normalized depth prediction at each scale. All five scaled outputs are used to compute the depth loss during training, while only the depth output at the final scale is used in the testing stage.
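One way to use all five scaled depth outputs during training is to compare each with a ground truth down-sampled to the same size and sum the per-scale losses. This is a sketch under our own assumptions, none of which the text states: strided (nearest-neighbour) down-sampling of the ground truth, an L1 term only, equal per-scale weights, and a validity mask that ignores pixels without ground truth.

```python
def downsample_nearest(depth, factor):
    """Keep every `factor`-th row and column (nearest-neighbour down-sampling)."""
    return [row[::factor] for row in depth[::factor]]


def masked_l1(pred, gt):
    """Mean absolute error over pixels whose ground truth is valid (> 0)."""
    total, count = 0.0, 0
    for pred_row, gt_row in zip(pred, gt):
        for p, g in zip(pred_row, gt_row):
            if g > 0:
                total += abs(p - g)
                count += 1
    return total / count if count else 0.0


def multiscale_depth_loss(preds, gt):
    """Sum of per-scale L1 losses with equal weights (assumed).

    preds[0] is the full-resolution output of the last UpBlock (the only one
    used at test time); each following entry is half the size of the previous.
    """
    loss = 0.0
    for scale, pred in enumerate(preds):
        loss += masked_l1(pred, downsample_nearest(gt, 2 ** scale))
    return loss


# 4 x 4 ground truth with one missing pixel in the top-left corner.
demo_gt = [[0.0, 2.0, 2.0, 2.0]] + [[2.0] * 4 for _ in range(3)]
demo_preds = [
    [[2.0] * 4 for _ in range(4)],  # full resolution: exact
    [[3.0] * 2 for _ in range(2)],  # half resolution: off by 1 everywhere
    [[2.0]],                        # quarter resolution: only the missing pixel
]
demo_loss = multiscale_depth_loss(demo_preds, demo_gt)
print(demo_loss)
```

At the coarsest scale the only sampled ground-truth pixel is missing, so that scale contributes nothing; the total comes entirely from the half-resolution output.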

2.2. Loss Function

Given the network model and the training samples, each consisting of an RGB image and sparse depth data together with the ground truth for dense depth, semantic labels and boundary, loss functions have to be designed for each single task and for the joint tasks. The boundary ground truth can be calculated from the discontinuities of the semantic ground truth. The three task outputs are the semantic, depth and boundary predictions, respectively.

2.2.1. Loss Function for Depth Completion

For the depth completion branch, the model is optimized with L1 and L2 losses between the prediction and the ground truth. An SSIM loss [38] is also introduced to compare the brightness, contrast and structure of the completed depth image with those of the ground truth. The SSIM loss is defined as:

In Equation (2), local image quality is calculated over 11 × 11 sliding windows, and the SSIM loss for the whole image is obtained by averaging over all sliding windows. In Equation (3), two parameters weight the metric functions, the expectation function calculates the mean value of a feature map, and the remaining statistics denote the mean, variance and covariance, respectively. Three constants avoid instability when a denominator is close to zero; their values are set empirically.
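A plain-Python sketch of an SSIM loss computed over sliding windows and averaged, as described above. Since Equations (2) and (3) are not reproduced in this text, we use the standard SSIM of Wang et al. with unit exponents, the common constants C1 = (0.01 L)^2, C2 = (0.03 L)^2 and C3 = C2/2 (L is the dynamic range), uniform rather than Gaussian window weighting, and loss = 1 - mean SSIM. These choices, and the stride, are assumptions rather than the paper's settings.

```python
def _window_stats(a, b, top, left, size):
    """Means, variances and covariance of two images over one square window."""
    xs = [a[i][j] for i in range(top, top + size) for j in range(left, left + size)]
    ys = [b[i][j] for i in range(top, top + size) for j in range(left, left + size)]
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    vx = sum((x - mx) ** 2 for x in xs) / n
    vy = sum((y - my) ** 2 for y in ys) / n
    cxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / n
    return mx, my, vx, vy, cxy


def ssim_loss(pred, gt, window=11, stride=1, dynamic_range=1.0):
    """1 - mean SSIM over all window x window patches (sketch).

    With unit exponents and C3 = C2 / 2, the product of the luminance,
    contrast and structure terms reduces to the two-factor form below.
    """
    height, width = len(pred), len(pred[0])
    if height < window or width < window:
        raise ValueError("image is smaller than the SSIM window")
    c1 = (0.01 * dynamic_range) ** 2
    c2 = (0.03 * dynamic_range) ** 2
    scores = []
    for top in range(0, height - window + 1, stride):
        for left in range(0, width - window + 1, stride):
            mx, my, vx, vy, cxy = _window_stats(pred, gt, top, left, window)
            num = (2 * mx * my + c1) * (2 * cxy + c2)
            den = (mx ** 2 + my ** 2 + c1) * (vx + vy + c2)
            scores.append(num / den)
    return 1.0 - sum(scores) / len(scores)


demo_img = [[(i + j) / 22 for j in range(12)] for i in range(12)]
demo_flip = [[1.0 - v for v in row] for row in demo_img]
loss_same = ssim_loss(demo_img, demo_img)
loss_flip = ssim_loss(demo_flip, demo_img)
print(loss_same, loss_flip)
```

Identical images give a loss of zero, while an intensity-inverted image has negative structural correlation and therefore a large loss, even though its per-pixel L1 error is moderate.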

In summary, the final loss function for depth completion is composed of three terms:

where the three coefficients weight the respective losses; their values are set empirically in our implementation.
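The three-term depth loss can be sketched as a weighted sum of an L1 term, an L2 (mean squared) term and a precomputed SSIM term, following Equation (2). The default weights below are placeholders, not the paper's empirical values, and ignoring pixels whose ground truth is 0 is our assumption for sparse or holey supervision.

```python
def depth_loss(pred, gt, ssim_term, w_l1=1.0, w_l2=1.0, w_ssim=1.0):
    """Weighted sum of L1, L2 and SSIM losses (placeholder weights).

    pred, gt: H x W depth maps; pixels with gt <= 0 have no ground truth
    and are ignored (assumption).
    ssim_term: the SSIM loss for this image, computed separately.
    """
    abs_sum, sq_sum, count = 0.0, 0.0, 0
    for pred_row, gt_row in zip(pred, gt):
        for p, g in zip(pred_row, gt_row):
            if g > 0:
                abs_sum += abs(p - g)
                sq_sum += (p - g) ** 2
                count += 1
    if count == 0:
        return w_ssim * ssim_term
    return w_l1 * abs_sum / count + w_l2 * sq_sum / count + w_ssim * ssim_term


# The third pixel has no ground truth and is skipped.
demo_total = depth_loss([[1.0, 3.0, 7.0]], [[2.0, 1.0, 0.0]], ssim_term=0.2)
print(demo_total)
```

Here L1 = 1.5 and L2 = 2.5 over the two valid pixels, so with unit weights the total is 4.2; in practice the weights balance the L2 term, which grows quickly with large errors, against the bounded SSIM term.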

2.2.2. Loss Function for Semantic Segmentation and Boundary Detection

For the segmentation-related tasks, class-weighted cross-entropy is applied in the loss function. Given the probabilities that a pixel is labeled as a given class in the ground truth and in the prediction, respectively, the class-weighted cross-entropy (WCE) is given as:

where the proportion of pixels belonging to each semantic category in the whole sample dataset is used for class weighting, and the sums run over the total number of pixels and of semantic categories. WCE is used to evaluate the output of the semantic segmentation decoder branch.
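A sketch of class-weighted cross-entropy over per-pixel probability vectors. The text states only that the class weight depends on each class's pixel proportion in the dataset; here we assume plain inverse frequency, w_j = 1 / (C · p_j), so that uniformly distributed classes get weight 1. Other common choices, such as median-frequency balancing, would slot in the same way. With a one-hot ground truth, the inner sum over classes keeps only the true class.

```python
import math


def class_weights(proportions):
    """Inverse-frequency weights (assumed form): w_j = 1 / (C * p_j)."""
    num_classes = len(proportions)
    return [1.0 / (num_classes * p) for p in proportions]


def weighted_cross_entropy(probs, targets, weights, eps=1e-12):
    """Mean over pixels of -w_t * log(q_t) for one-hot ground truth.

    probs: per-pixel predicted probability vectors (each sums to 1).
    targets: ground-truth class index of each pixel.
    eps guards against log(0) for confidently wrong predictions.
    """
    total = 0.0
    for q, t in zip(probs, targets):
        total -= weights[t] * math.log(q[t] + eps)
    return total / len(targets)


demo_w = class_weights([0.5, 0.25, 0.25])
demo_perfect = weighted_cross_entropy([[1.0, 0.0, 0.0]], [0], demo_w)
demo_unsure = weighted_cross_entropy([[0.5, 0.5, 0.0]], [1], demo_w)
print(demo_w, demo_perfect, demo_unsure)
```

The rarer classes (25% of pixels each) receive twice the weight of the frequent one, so a hesitant prediction on a rare-class pixel costs more than the same hesitation on a common-class pixel.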

Similarly, boundary detection can be defined as a binary segmentation problem, and a two-class weighted cross-entropy is employed in Equation (6):

where the two terms denote, for each pixel, its boundary similarity in the boundary-prediction map and whether it is labeled as a boundary.
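The two-class version for boundary detection can be sketched the same way. As a hypothetical choice, the boundary term is weighted by the share of non-boundary pixels and the non-boundary term by the share of boundary pixels, a common balancing scheme for sparse edge maps; the weights actually used in Equation (6) may differ.

```python
import math


def balanced_boundary_bce(pred, label, eps=1e-12):
    """Class-balanced binary cross-entropy for a boundary map (sketch).

    pred: flat list of predicted boundary similarities in [0, 1].
    label: flat list of 0/1 ground-truth boundary labels.
    Assumed weighting: each class is weighted by the other class's share
    of pixels, so the rare boundary pixels are not swamped.
    """
    n = len(label)
    pos_share = sum(label) / n
    w_pos, w_neg = 1.0 - pos_share, pos_share
    total = 0.0
    for b, y in zip(pred, label):
        if y:
            total -= w_pos * math.log(b + eps)
        else:
            total -= w_neg * math.log(1.0 - b + eps)
    return total / n


demo_label = [1, 0, 0, 0]
demo_good = balanced_boundary_bce([1.0, 0.0, 0.0, 0.0], demo_label)
demo_half = balanced_boundary_bce([0.5, 0.0, 0.0, 0.0], demo_label)
print(demo_good, demo_half)
```

With one boundary pixel out of four, a missed boundary is weighted three times as heavily as a false alarm on a background pixel.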

2.2.3. Loss Function for Joint Tasks

To enhance the correlation among different tasks and further improve the overall generalization performance, cross-task joint loss functions are proposed. The boundary constraint is embedded into the two major tasks via multi-scale feature sharing and these cross-task joint loss functions, with the boundary acting as a bridge that connects the semantic and depth prediction tasks and strengthens the relationship between them. In brief, the positions of the semantic boundary, the depth boundary and the boundary predicted by the detection sub-task should all be consistent.

Specifically, by defining a local boundary function on the semantic prediction image, the semantic boundary confidence in the horizontal and vertical directions can be computed as shown in Equation (7):

where the two subscripts denote the pixel position in the horizontal and vertical directions, respectively. The semantic-boundary joint loss function is then defined in Equation (8): the boundary calculated from the semantic prediction should be structurally similar to the predicted boundary similarity. This loss is minimized when the pixels on the semantic boundary have a high boundary similarity, i.e., when the boundary similarity reaches its maximum value of 1.
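The idea behind Equations (7) and (8) can be illustrated with finite differences on the predicted class-probability maps. Both the local boundary function and the loss form below are hypothetical stand-ins, since the equations are not reproduced here: the semantic boundary confidence is taken as the largest absolute horizontal or vertical forward difference of any class probability (clipped to [0, 1]), and semantic-boundary pixels are penalized in proportion to how far their predicted boundary similarity is below 1.

```python
def semantic_boundary(probs):
    """Semantic boundary confidence from class-probability maps (hypothetical).

    probs: list of C maps, each H x W, with per-class probabilities.
    Forward differences to the right (x) and downward (y); the last
    column/row has no forward neighbour in that direction and contributes 0.
    """
    height, width = len(probs[0]), len(probs[0][0])
    edge = [[0.0] * width for _ in range(height)]
    for p in probs:
        for i in range(height):
            for j in range(width):
                dx = abs(p[i][j + 1] - p[i][j]) if j + 1 < width else 0.0
                dy = abs(p[i + 1][j] - p[i][j]) if i + 1 < height else 0.0
                edge[i][j] = min(1.0, max(edge[i][j], dx, dy))
    return edge


def semantic_boundary_loss(edge, boundary_sim):
    """Mean of edge * (1 - b): zero when every semantic-boundary pixel has b = 1."""
    height, width = len(edge), len(edge[0])
    total = sum(
        edge[i][j] * (1.0 - boundary_sim[i][j])
        for i in range(height)
        for j in range(width)
    )
    return total / (height * width)


# A 1 x 4 image, two classes, with the class change between columns 1 and 2.
demo_probs = [[[1.0, 1.0, 0.0, 0.0]], [[0.0, 0.0, 1.0, 1.0]]]
demo_edge = semantic_boundary(demo_probs)
print(demo_edge)
print(semantic_boundary_loss(demo_edge, [[0.0, 1.0, 0.0, 0.0]]))
print(semantic_boundary_loss(demo_edge, [[0.0, 0.0, 0.0, 0.0]]))
```

Because the loss is differentiable in both the class probabilities and the boundary similarity, gradients flow into the semantic and the boundary branches at the same time, which is what couples the two tasks.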

For depth prediction, the predicted depth is a continuous quantity, and its local gradients can be built as in Equation (9). The larger the gradient, the more likely the pixel lies on a boundary. Numerically, the partial derivatives can be calculated as in Equation (10):

The semantic-depth joint loss is then formulated in Equation (11). It reaches its minimum when the pixels on the semantic boundary have a high depth gradient.
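Equations (9) to (11) can be illustrated the same way: forward-difference partial derivatives of the predicted depth give a gradient magnitude, and semantic-boundary pixels are penalized when that magnitude is small. The loss form edge · exp(-|∇D|) is a hypothetical choice that satisfies the stated property (minimal when boundary pixels have a high depth gradient), and forward differences are an assumption; the paper's Equations (10) and (11) may differ. Here `edge` is any semantic-boundary confidence map in [0, 1], such as one computed by Equation (7).

```python
import math


def depth_gradient(depth):
    """Gradient magnitude from forward differences (zero at the last row/column)."""
    height, width = len(depth), len(depth[0])
    grad = [[0.0] * width for _ in range(height)]
    for i in range(height):
        for j in range(width):
            dx = depth[i][j + 1] - depth[i][j] if j + 1 < width else 0.0
            dy = depth[i + 1][j] - depth[i][j] if i + 1 < height else 0.0
            grad[i][j] = math.hypot(dx, dy)
    return grad


def semantic_depth_loss(edge, depth):
    """Mean of edge * exp(-|grad D|) (hypothetical form)."""
    grad = depth_gradient(depth)
    height, width = len(depth), len(depth[0])
    total = sum(
        edge[i][j] * math.exp(-grad[i][j])
        for i in range(height)
        for j in range(width)
    )
    return total / (height * width)


demo_edge = [[0.0, 1.0, 0.0, 0.0]]          # semantic boundary after column 1
demo_step = [[5.0, 5.0, 20.0, 20.0]]        # depth jumps at the same place
demo_flat = [[5.0, 5.0, 5.0, 5.0]]          # no depth discontinuity at all
print(depth_gradient(demo_step))
print(semantic_depth_loss(demo_edge, demo_step), semantic_depth_loss(demo_edge, demo_flat))
```

A depth map that is flat across the semantic boundary receives the maximum penalty, whereas a depth step aligned with the boundary drives the loss close to zero.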

Finally, the full loss function for the entire multi-task model is defined as:

3. Experimental Results

In this section, the experimental setup and the evaluation datasets are described first. Then the quantitative and qualitative results are presented and compared with state-of-the-art methods.

3.1. Experimental Setup and Dataset Introduction

To evaluate the performance of the proposed network, we introduce metrics for the semantic segmentation and depth completion tasks, respectively. The semantic segmentation metrics in Table 1 are built from the number of pixels labeled as one class and predicted as another, the number of classes, the number of pixels of each ground-truth class and the total number of pixels. The depth completion metrics compare the ground-truth and predicted depth of each pixel.

Table 1.

Evaluation metrics.

Among the semantic segmentation metrics, pixel accuracy measures the proportion of correctly predicted pixels over the whole image, and mean accuracy averages the accuracy over the different classes. Mean IoU averages the IoU (the ratio between the correctly predicted area and the union of the ground-truth and predicted areas) over the different semantic labels and all images, and frequency-weighted IoU is the class-weighted version of this metric.
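The four segmentation metrics can be computed from a confusion matrix using the standard definitions popularized by FCN [5]: pixel accuracy, mean (per-class) accuracy, mean IoU and frequency-weighted IoU. This is a minimal sketch; skipping classes absent from the ground truth when averaging is our assumption about how empty classes are handled.

```python
def segmentation_metrics(conf):
    """conf[i][j]: number of pixels with ground-truth class i predicted as class j.

    Returns (pixel_acc, mean_acc, mean_iou, fw_iou).
    Classes with no ground-truth pixels are skipped in the averages.
    """
    n_cl = len(conf)
    t = [sum(row) for row in conf]  # ground-truth pixels per class
    pred_totals = [sum(conf[i][j] for i in range(n_cl)) for j in range(n_cl)]
    total = sum(t)
    correct = sum(conf[i][i] for i in range(n_cl))
    present = [i for i in range(n_cl) if t[i] > 0]

    pixel_acc = correct / total
    mean_acc = sum(conf[i][i] / t[i] for i in present) / len(present)
    # IoU = true positives / (ground-truth area + predicted area - overlap)
    iou = {i: conf[i][i] / (t[i] + pred_totals[i] - conf[i][i]) for i in present}
    mean_iou = sum(iou.values()) / len(present)
    fw_iou = sum(t[i] * iou[i] for i in present) / total
    return pixel_acc, mean_acc, mean_iou, fw_iou


demo_conf = [[3, 1], [0, 4]]
pa, ma, miou, fw = segmentation_metrics(demo_conf)
print(pa, ma, miou, fw)
```

In this two-class example one class-0 pixel is predicted as class 1, giving IoUs of 0.75 and 0.8; because both classes have the same number of ground-truth pixels, mean IoU and frequency-weighted IoU coincide.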

The network model is implemented in the PyTorch framework and trained on an NVIDIA GeForce GTX 1080 Ti with 11 GB of GPU memory. The network weights are randomly initialized with Xavier initialization, and the biases are initialized to zero. The loss function is optimized with the SGD optimizer, with an initial learning rate of 1 × 10−4 and a batch size of 4. The experiments are conducted primarily on the Virtual KITTI and Cityscapes datasets.

- Virtual KITTI [36] is a synthetic outdoor dataset. Each sequence contains 10 different rendering variants: one is an outdoor environment cloned as closely as possible from the original KITTI benchmark, and the others are geometrically transformed or weather-adjusted versions of the cloned one. Each RGB image in the dataset has a corresponding depth image and semantic segmentation ground truth. The ground-truth depth maps are randomly down-sampled to only 5% of the original density to produce the sparse depth input. Of these images, 11,112 are randomly selected for training, 2320 for validation and 3576 for testing.

- CityScapes [37] is a real outdoor dataset containing high-quality semantic annotations of 5000 images collected in street scenes from 50 different cities. A total of 19 semantic labels are used for evaluation, belonging to 7 super-categories: ground, construction, object, nature, sky, human and vehicle. The depth (disparity) ground truth is provided by the SGM method [37]. In the experiments, the original disparity images are randomly down-sampled to 5% density and used as the sparse depth input. The training, validation and testing sets contain 2975, 500 and 1525 images, respectively.
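For both datasets, the sparse input is produced by randomly keeping 5% of the ground-truth depth (or disparity). A minimal sketch, assuming uniform sampling without replacement among pixels that have valid ground truth (value > 0) and a fixed seed for reproducibility; whether invalid pixels are excluded before sampling is not stated in the text, and the function name is hypothetical.

```python
import random


def sparsify_depth(depth, keep_ratio=0.05, seed=0):
    """Copy of `depth` in which only about keep_ratio of the valid pixels survive.

    Pixels with depth <= 0 are treated as missing and never selected (assumption).
    Dropped pixels are set to 0.0, the usual 'no measurement' value.
    """
    rng = random.Random(seed)
    valid = [(i, j) for i, row in enumerate(depth) for j, d in enumerate(row) if d > 0]
    keep = set(rng.sample(valid, round(keep_ratio * len(valid))))
    return [
        [d if (i, j) in keep else 0.0 for j, d in enumerate(row)]
        for i, row in enumerate(depth)
    ]


demo_depth = [[float(1 + i + j) for j in range(20)] for i in range(10)]
demo_sparse = sparsify_depth(demo_depth)
print(sum(1 for row in demo_sparse for d in row if d > 0), "of 200 pixels kept")
```

Sampling among valid pixels keeps the effective density at 5% even for semi-dense ground truth such as the SGM disparity maps; sampling over all pixels would instead yield fewer points wherever the ground truth has holes.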

3.2. Experiment Analysis: Virtual KITTI

Virtual KITTI [36] is a synthetic outdoor dataset, and here it is mostly used for ablation experiments comparing models with different settings. All models are trained from scratch and do not rely on any pre-trained model.

3.2.1. Experiments on Semantic Segmentation

The evaluation is first carried out on the Virtual KITTI dataset. The settings of the compared models are as follows:

- BaCNN: the baseline model proposed in [20]. BaCNN uses FCN8S [5] as its backbone, with the first layer of each Conv_Block replaced by a boundary-aware convolution. The boundary-similarity map is provided by a boundary-detection sub-network placed in front of it.

- SSDNet_Sem: our proposed multi-task learning framework with the depth completion branch removed. It can also be understood as two modifications to the BaCNN model:

- (a)

- BaCNN employs the boundary-detection sub-network as a cascaded task that produces a boundary-similarity map for the subsequent semantic segmentation sub-network, while SSDNet_Sem treats the boundary task as a parallel branch that shares the encoder features. Moreover, in addition to the independent loss functions for the boundary and semantic sub-tasks, SSDNet_Sem can also be optimized with the joint semantic-boundary loss function;

- (b)

- Compared with BaCNN, which performs early fusion by introducing the boundary similarity in the encoder stage, SSDNet_Sem performs late fusion in the decoder stage.

- SSDNet (Full model): The complete network model with multi-tasks optimized by the full joint loss function.

- SSDNet_ind: The complete network model without using joint loss functions.

The quantitative comparison results are shown in Table 2. In the following tables, our method's performance is highlighted in bold and the best performance is underlined. As expected, all three models perform better than the single-task semantic model FCN8S [5], and performance improves progressively as the boundary and depth tasks are added. BaCNN [20] introduces the boundary detection task and replaces the standard convolution with boundary-aware convolution. SSDNet_Sem improves further on the baseline BaCNN model, which we attribute to the change in the boundary fusion stage (from early fusion to late fusion) and to the change in task hierarchy from the original cascaded style to a parallel multi-task architecture. With the depth completion task added, the full SSDNet model achieves further significant improvements over SSDNet_Sem and performs best on all four metrics. Without the cross-task joint loss functions, SSDNet_ind performs slightly worse than our full model, which supports the effectiveness of these loss functions. The full model also has lower complexity and runs faster than FCN8S and BaCNN (all FLOPs are computed at an image resolution of 125 × 414).

Table 2.

Semantic segmentation ablation experiment on Virtual KITTI dataset.

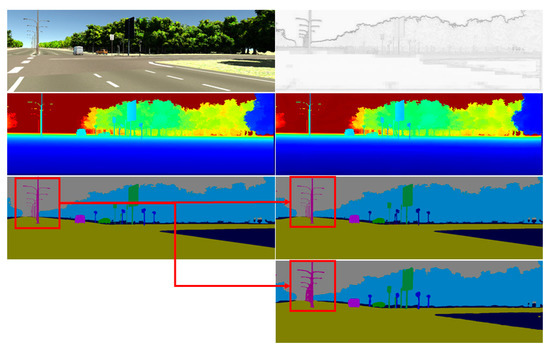

The qualitative results are shown in Figure 4, where the depth and semantic predictions benefit from the boundary prediction. Compared with the baseline BaCNN model, the full multi-task model produces much sharper segmentation of very close objects (marked by the red box). This further indicates that the proposed multi-task joint network can improve the performance of each single task.

Figure 4.

The SSDNet experimental results on Virtual KITTI. From top to bottom, the first column shows the RGB color image, the depth ground truth and the semantic segmentation ground truth; the second column shows the boundary-similarity result, the depth completion output, the semantic segmentation result and the corresponding BaCNN [20] result.

3.2.2. Experiments on Depth Completion

Several ablation experiments are conducted with the following model configurations:

- SSDNet (full model): The proposed semantic segmentation and depth completion multi-task network.

- SSDNet_Dep: Removing the semantic branch from the full model, but still using both sparse depth and RGB image as input.

- SSDNet_Dep_d: SSDNet_Dep using sparse depth as the only data source.

- SSDNet_Dep_rgb: SSDNet_Dep using the RGB image as the only data source to perform depth prediction.

- SSDNet_ind: The complete model without using joint loss functions.

In the depth completion ablation experiment, RMSE and MAE are analyzed for depth outputs within ranges of 20 m, 50 m and 100 m. Sparse depth points and image pixels become sparser as the range increases, so prediction accuracy decreases with distance; the three ranges represent system performance at near, middle and far range ahead of the vehicle. The quantitative comparison results are shown in Table 3, where n/a denotes unpublished data. The full SSDNet performs best among all models. Compared with the full model, SSDNet_Dep obtains slightly worse depth prediction accuracy, which shows the importance of the semantic task for depth completion. If only one type of data can be used, SSDNet_Dep with sparse depth achieves better results than with the RGB image. However, both are worse than SSDNet_Dep with the full heterogeneous input, which in turn demonstrates the advantage of data fusion. SSDNet_ind is slightly worse than the full model, which shows that the cross-task joint loss functions help improve depth accuracy. Compared with traditional methods such as MRF [39] and TGV [40] and state-of-the-art CNN methods such as Sparse-to-dense [25] and SparseConvNet [12], our full model also performs best.
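The range-limited RMSE and MAE can be sketched as follows: only pixels whose ground-truth depth is valid and no farther than the cap (20 m, 50 m or 100 m) are evaluated. Applying the cap to the ground truth rather than to the prediction, and making it inclusive, are our assumptions; depths are in metres.

```python
import math


def range_errors(pred, gt, max_range):
    """RMSE and MAE over pixels with 0 < gt <= max_range (metres)."""
    diffs = [
        p - g
        for pred_row, gt_row in zip(pred, gt)
        for p, g in zip(pred_row, gt_row)
        if 0 < g <= max_range
    ]
    if not diffs:
        return float("nan"), float("nan")
    rmse = math.sqrt(sum(d * d for d in diffs) / len(diffs))
    mae = sum(abs(d) for d in diffs) / len(diffs)
    return rmse, mae


demo_gt = [[10.0, 40.0, 90.0, 0.0]]    # last pixel has no ground truth
demo_pred = [[12.0, 37.0, 80.0, 5.0]]
for cap in (20, 50, 100):
    print(cap, range_errors(demo_pred, demo_gt, cap))
```

Because the far pixel has the largest error, RMSE grows faster than MAE as the range widens, which matches the expectation that accuracy degrades with distance.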

Table 3.

Depth Completion Ablation Experiment on Virtual KITTI Dataset.

3.3. Experimental Analysis on CityScapes

To further evaluate the proposed SSDNet model in a real environment, this section presents experiments on the CityScapes dataset and comparisons with state-of-the-art methods. Unlike the synthetic Virtual KITTI dataset, the CityScapes dataset contains more noise in the original RGB and disparity (depth) images. The proposed SSDNet model is trained from scratch and does not rely on any ImageNet pre-training. The quantitative results for the semantic segmentation task are shown in Table 4, where IoU_cat and IoU_cla denote the mIoU over the 7 categories and the 19 classes, respectively, and fwt denotes the running time per frame in seconds. With the help of the multi-task learning framework, the proposed SSDNet model performs better than the baseline BaCNN [20] and most of its counterparts. Compared with state-of-the-art real-time CNN methods such as the encoder-decoder ENet model [41] and its loss-edited version [42], ESPNet [43] and the two-branch fusion network Fast-SCNN [44], our full model achieves higher IoU without costing more time. With fewer layers in the network and without any ImageNet pre-training, our method still performs slightly worse than ERFNet [16]. However, our full three-task SSDNet model runs at 100 fps, twice as fast as ERFNet.

Table 4.

Semantic segmentation results on CityScapes dataset with a resolution of 512 × 1024 px.

Detailed per-category comparisons with the baseline are shown in Table 5. Our SSDNet outperforms the baseline BaCNN model in almost all categories, which verifies the effectiveness of the proposed multi-task learning framework.

Table 5.

Detailed IoU (in %) on CityScapes with an image resolution of 512 × 1024 px.

Compared with the dense depth maps in Virtual KITTI, the depth (disparity) ground truth in the Cityscapes dataset is much noisier and only semi-dense, with many holes. Our proposed method is robust to this incomplete and noisy supervisory signal and still produces full-density depth results. The quantitative depth results are shown in Table 6. Compared with the multi-task learning [35] and unsup-stereo-depthGAN [45] methods, our SSDNet multi-task model achieves the best performance, which we attribute to the effective sharing of semantic and boundary features.

Table 6.

Depth completion results on CityScapes dataset.

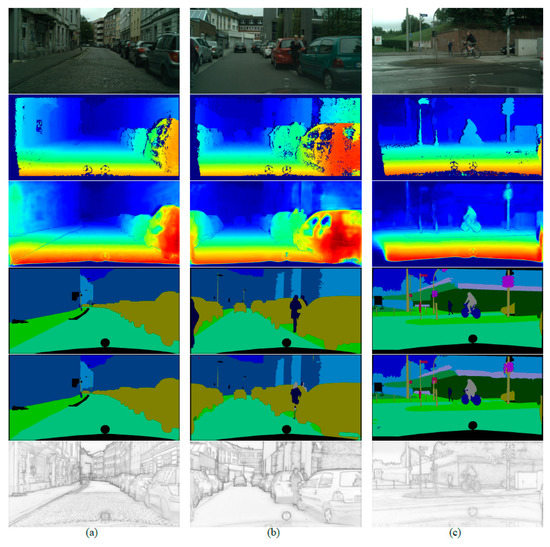

Some qualitative results of the proposed method on the Cityscapes dataset are shown in Figure 5. From top to bottom, each column displays the RGB image, depth (disparity) ground truth, depth completion output, semantic ground truth, semantic prediction and boundary detection result. Despite the noisy depth ground truth, the proposed model still benefits from triple-task learning and produces satisfactory results.

Figure 5.

Qualitative results of the SSDNet on the CityScapes dataset. (a) and (b) show scenes with large objects, such as vehicles and buildings. (c) shows a scene with small objects, such as riders and traffic signs. From top to bottom in each column: RGB color image, depth (disparity) ground truth, depth completion prediction, semantic ground truth, semantic prediction and boundary prediction.

4. Conclusions

In this paper, a multi-task network for simultaneous semantic segmentation and depth completion is proposed. With its single-encoder-multi-decoder structure, the model learns enhanced features suitable for all of the tasks. A boundary constraint is embedded into the two major tasks via multi-scale feature sharing and cross-task joint loss functions. The boundary features act as a bridge connecting the semantic and depth prediction tasks and strengthen the relationship between them, and the boundary-associated cross-task joint loss functions benefit each task. The entire network is implemented end-to-end and evaluated on both synthetic and real datasets. The ablation and comparative results show that our multi-task SSDNet model effectively improves the performance of both semantic segmentation and depth completion at a real-time frame rate.

Future work will focus on further improving task performance by designing a more robust feature fusion mechanism and a better network structure. We also plan to test our algorithm in more complex environments.

Author Contributions

Conceptualization, N.Z., Z.X., Y.C., S.C. and C.Q.; methodology, N.Z. and Z.X.; software, N.Z.; validation, N.Z. and Z.X.; formal analysis, N.Z. and S.C.; investigation, N.Z., Z.X. and C.Q.; resources, S.C., Y.C. and C.Q.; writing—original draft preparation, N.Z.; writing—review and editing, Z.X.; visualization, S.C.; supervision, Z.X.; project administration, Z.X.; funding acquisition, Z.X. All authors have read and agreed to the published version of the manuscript.

Funding

This work is supported by the NSFC-Zhejiang Joint Fund for the Integration of Industrialization and Informatization under grant No. U1709214 and by China NSFC grant No. 61571390.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788. [Google Scholar]

- Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

- Ladický, L.; Russell, C.; Kohli, P.; Torr, P.H. Associative hierarchical CRFs for object class image segmentation. In Proceedings of the 2009 IEEE 12th International Conference on Computer Vision, Kyoto, Japan, 29 September–2 October 2009; pp. 739–746.

- Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

- Noh, H.; Hong, S.; Han, B. Learning deconvolution network for semantic segmentation. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1520–1528.

- Badrinarayanan, V.; Kendall, A.; Cipolla, R. SegNet: A deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495.

- Chen, L.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. Semantic Image Segmentation with Deep Convolutional Nets and Fully Connected CRFs. arXiv 2014, arXiv:1412.7062.

- Torralba, A.; Oliva, A. Depth estimation from image structure. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 1226–1238.

- Liu, B.; Gould, S.; Koller, D. Single image depth estimation from predicted semantic labels. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010; pp. 1253–1260.

- Hua, J.; Gong, X. A Normalized Convolutional Neural Network for Guided Sparse Depth Upsampling. In Proceedings of the Twenty-Seventh International Joint Conference on Artificial Intelligence, Stockholm, Sweden, 13–19 July 2018; pp. 2283–2290.

- Uhrig, J.; Schneider, N.; Schneider, L.; Franke, U.; Brox, T.; Geiger, A. Sparsity invariant CNNs. In Proceedings of the 2017 International Conference on 3D Vision (3DV), Qingdao, China, 10–12 October 2017; pp. 11–20.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 834–848.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Romera, E.; Alvarez, J.M.; Bergasa, L.M.; Arroyo, R. ERFNet: Efficient residual factorized ConvNet for real-time semantic segmentation. IEEE Trans. Intell. Transp. Syst. 2017, 19, 263–272.

- Couprie, C.; Farabet, C.; Najman, L.; LeCun, Y. Indoor semantic segmentation using depth information. arXiv 2013, arXiv:1301.3572.

- Gupta, S.; Girshick, R.; Arbeláez, P.; Malik, J. Learning Rich Features from RGB-D Images for Object Detection and Segmentation. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2014; pp. 345–360.

- Wang, W.; Neumann, U. Depth-aware CNN for RGB-D segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 135–150.

- Zou, N.; Xiang, Z.; Chen, Y.; Chen, S.; Qiao, C. Boundary-Aware CNN for Semantic Segmentation. IEEE Access 2019, 7, 114520–114528.

- Eigen, D.; Puhrsch, C.; Fergus, R. Depth map prediction from a single image using a multi-scale deep network. In Proceedings of the 27th International Conference on Neural Information Processing Systems—Volume 2 (NIPS’14), Montreal, QC, Canada, 8–13 December 2014; MIT Press: Cambridge, MA, USA, 2014; pp. 2366–2374.

- Eigen, D.; Fergus, R. Predicting depth, surface normals and semantic labels with a common multi-scale convolutional architecture. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 2650–2658.

- Ku, J.; Harakeh, A.; Waslander, S.L. In defense of classical image processing: Fast depth completion on the CPU. In Proceedings of the 2018 15th Conference on Computer and Robot Vision (CRV), Toronto, ON, Canada, 9–11 May 2018; pp. 16–22.

- Hazirbas, C.; Ma, L.; Domokos, C.; Cremers, D. FuseNet: Incorporating Depth into Semantic Segmentation via Fusion-Based CNN Architecture. In Asian Conference on Computer Vision; Springer: Cham, Switzerland, 2016; pp. 213–228.

- Ma, F.; Karaman, S. Sparse-to-dense: Depth prediction from sparse depth samples and a single image. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, QLD, Australia, 21–25 May 2018; pp. 1–8.

- Jaritz, M.; De Charette, R.; Wirbel, E.; Perrotton, X.; Nashashibi, F. Sparse and dense data with CNNs: Depth completion and semantic segmentation. In Proceedings of the 2018 International Conference on 3D Vision (3DV), Verona, Italy, 5–8 September 2018; pp. 52–60.

- Zoph, B.; Vasudevan, V.; Shlens, J.; Le, Q.V. Learning transferable architectures for scalable image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8697–8710.

- Zhang, Y.; Funkhouser, T. Deep depth completion of a single RGB-D image. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 175–185.

- Qiu, J.; Cui, Z.; Zhang, Y.; Zhang, X.; Liu, S.; Zeng, B.; Pollefeys, M. DeepLiDAR: Deep surface normal guided depth prediction for outdoor scene from sparse LiDAR data and single color image. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 3313–3322.

- Murphy, K.P.; Torralba, A.; Freeman, W.T. Using the forest to see the trees: A graphical model relating features, objects, and scenes. In Advances in Neural Information Processing Systems; MIT Press: Cambridge, MA, USA, 2004; pp. 1499–1506.

- Teichmann, M.; Weber, M.; Zoellner, M.; Cipolla, R.; Urtasun, R. MultiNet: Real-time joint semantic reasoning for autonomous driving. In Proceedings of the 2018 IEEE Intelligent Vehicles Symposium (IV), Changshu, China, 26–30 June 2018; pp. 1013–1020.

- Sermanet, P.; Eigen, D.; Zhang, X.; Mathieu, M.; Fergus, R.; LeCun, Y. OverFeat: Integrated recognition, localization and detection using convolutional networks. arXiv 2013, arXiv:1312.6229.

- Misra, I.; Shrivastava, A.; Gupta, A.; Hebert, M. Cross-stitch networks for multi-task learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 3994–4003.

- Uhrig, J.; Cordts, M.; Franke, U.; Brox, T. Pixel-level Encoding and Depth Layering for Instance-Level Semantic Labeling. In German Conference on Pattern Recognition; Springer: Cham, Switzerland, 2016; pp. 14–25.

- Kendall, A.; Gal, Y.; Cipolla, R. Multi-task learning using uncertainty to weigh losses for scene geometry and semantics. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7482–7491.

- Gaidon, A.; Wang, Q.; Cabon, Y.; Vig, E. Virtual Worlds as proxy for multi-object tracking analysis. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 4340–4349.

- Cordts, M.; Omran, M.; Ramos, S.; Rehfeld, T.; Enzweiler, M.; Benenson, R.; Franke, U.; Roth, S.; Schiele, B. The Cityscapes dataset for semantic urban scene understanding. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 3213–3223.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Harrison, A.; Newman, P. Image and Sparse Laser Fusion for Dense Scene Reconstruction. In Field and Service Robotics; Springer: Berlin/Heidelberg, Germany, 2010; pp. 219–228.

- Ferstl, D.; Reinbacher, C.; Ranftl, R.; Rüther, M.; Bischof, H. Image guided depth upsampling using anisotropic total generalized variation. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, NSW, Australia, 1–8 December 2013; pp. 993–1000.

- Paszke, A.; Chaurasia, A.; Kim, S.; Culurciello, E. ENet: A deep neural network architecture for real-time semantic segmentation. arXiv 2016, arXiv:1606.02147.

- Berman, M.; Rannen Triki, A.; Blaschko, M.B. The Lovász-Softmax loss: A tractable surrogate for the optimization of the intersection-over-union measure in neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4413–4421.

- Mehta, S.; Rastegari, M.; Caspi, A.; Shapiro, L.; Hajishirzi, H. ESPNet: Efficient spatial pyramid of dilated convolutions for semantic segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 552–568.

- Poudel, R.P.; Liwicki, S.; Cipolla, R. Fast-SCNN: Fast semantic segmentation network. arXiv 2019, arXiv:1902.04502.

- Pilzer, A.; Xu, D.; Puscas, M.; Ricci, E.; Sebe, N. Unsupervised adversarial depth estimation using cycled generative networks. In Proceedings of the 2018 International Conference on 3D Vision (3DV), Verona, Italy, 5–8 September 2018; pp. 587–595.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).