Abstract

Electroencephalogram (EEG) is an effective indicator for detecting driver fatigue. However, because EEG signals differ significantly across subjects and it is difficult to collect sufficient EEG samples during driving, detecting fatigue across subjects from EEG signals remains a challenge. EasyTL is a transfer-learning model that has performed well in image recognition but has not yet been applied to cross-subject EEG-based applications. In this paper, we propose an improved EasyTL-based classifier, InstanceEasyTL, for EEG-based cross-subject detection of the fatigue mental state. Experimental results show that InstanceEasyTL not only requires less EEG data but also achieves better accuracy and robustness than EasyTL and existing machine-learning models such as Support Vector Machine (SVM), Transfer Component Analysis (TCA), Geodesic Flow Kernel (GFK), and Domain-adversarial Neural Networks (DANN).

1. Introduction

In recent years, the number of traffic accidents has increased rapidly, causing huge losses of life and property. Considerable evidence shows that driving in a fatigued state (fatigue driving) is one of the main causes of traffic accidents. Statistics indicate that fatigue driving accounts for 35–45% of road traffic accidents [1,2,3] and, according to reports of the US National Highway Traffic Safety Administration (NHTSA), directly causes 1550 deaths, 71,000 injuries, and $12.5 billion in economic losses each year [4]. Therefore, it is vitally important to design an efficient and accurate model for detecting fatigue during driving.

Generally, there are three ways to detect fatigue. The first is video-based detection, in which computer vision techniques detect fatigue by analyzing facial cues such as blinking, eye-closure duration, and yawning [5]. Blink frequency is one of the key factors in this approach. However, changes in illumination or wearing sunglasses degrade detection, because such methods use the vertical and horizontal projection intensities of the image to determine whether the subject is blinking. The second is psychology-based surveys, in which subjects evaluate their own mental states by filling in psychological questionnaires [6,7]. Such questionnaires rely heavily on the subjects' subjective judgment and may lack objective verification [8,9]. The third is measurement based on physiological signals. Usually, EEG, electrooculogram (EOG), electrocardiogram (ECG), or combinations of them are used to measure brain activity, eye movements, and so on [10,11,12], from which fatigue states can be identified. EEG-based methods are considered among the most efficient, since EEG directly reflects the activity of the brain's nerve cells and can therefore reveal changes in drivers' mental states during driving [13,14].

Recent studies have shown that EEG-based methods can achieve better reliability and accuracy than other methods [15]. Many classical machine-learning algorithms, such as SVM [16,17], linear discriminant analysis (LDA) [18], and K-Nearest Neighbor (KNN) [19], as well as deep-learning models such as Long Short-Term Memory networks (LSTM) [20] and Convolutional Neural Networks (CNN) [21], have been adopted to uncover important patterns in EEG signals. Despite their success, because EEG signals differ significantly across subjects, these methods still require a large amount of manually labeled EEG data to predict and classify mental states, especially across subjects, and extensive training is needed to identify the EEG patterns of different subjects. In other words, existing analyses are to some extent both time-consuming and subject-dependent, which makes them ill-suited to cross-subject EEG analysis and lowers their cross-subject classification performance.

Transfer-learning models aim to transfer features learned from a labeled domain to a similar but different domain with few or no labels, in order to perform a specific decision task in that domain [22,23,24]. Transfer-learning methods have performed well in image recognition and classification. Easy Transfer Learning (EasyTL) [24], proposed by Wang et al. in 2019 for image recognition, works in two phases: the first aligns the source and target domains to reduce the differences between them, and the second uses a probability annotation matrix for classification [24]. Because EasyTL requires neither model selection nor hyperparameter tuning, it is easy to apply while still achieving competitive performance.

Although EasyTL is easy, accurate, efficient, and extensible, the significant differences in EEG signals across subjects lead to different distributions of EEG feature patterns for different subjects, so EasyTL still has difficulty with EEG-based cross-subject fatigue detection. Here, cross-subject fatigue detection means training a model on EEG features extracted from some subjects and using it to analyze the mental states of other subjects. To overcome this shortcoming of EasyTL in dealing with cross-subject EEG signals, we propose an improved EasyTL-based method, InstanceEasyTL, for cross-subject EEG-based fatigue detection. To verify the proposed model, we compare InstanceEasyTL with SVM and with existing transfer-learning methods, namely Transfer Component Analysis (TCA) [25], Geodesic Flow Kernel (GFK) [26], and Domain-adversarial Neural Networks (DANN) [27].

The paper is organized as follows: Section 2 introduces the setup of the fatigue-driving experiment, including the subjects, EEG recording and preprocessing, and EEG feature extraction. Section 3 presents the existing EasyTL method and describes the proposed InstanceEasyTL model in detail. Section 4 presents the experimental results. Section 5 compares and discusses these results with other existing models and demonstrates the efficiency of the proposed method. Finally, Section 6 concludes the paper.

2. Materials

2.1. Subjects

This experiment is performed with the approval of the local ethical committee of the University of Rome “La Sapienza” (Rome, Italy). Fifteen subjects (age range 24–30 years, mean ± std = 26.8 ± 3.2 years) are selected to participate in the driving experiment. All subjects hold valid driving licenses. Before the experiment, all subjects are informed of its purpose and sign written consent forms. In addition, they are not allowed to drink alcohol on the day before the experiment, and they take no caffeine within 5 h before it. Finally, the experiment is conducted in accordance with the principles outlined in the Declaration of Helsinki of 1975, as revised in 2013.

2.2. Experimental Protocol

To eliminate possible effects of circadian rhythms and meals, the experiment is performed between 2 and 5 pm. The driving track is the Spa-Francorchamps track (Belgium), and the vehicle is an Alfa Romeo Giulietta QV (1750 TBi, 4 cylinders, 235 HP) on our driving simulation platform.

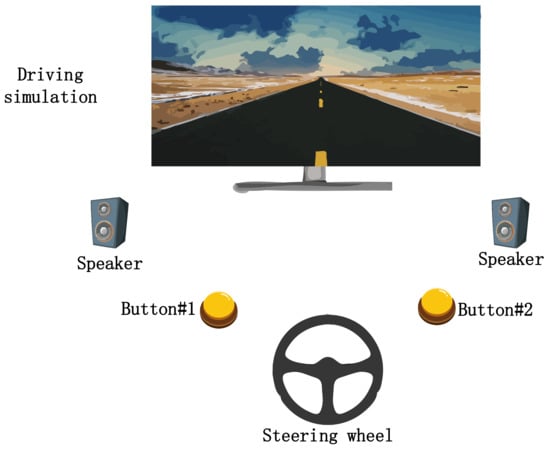

Figure 1 shows the experimental setting. The experiment takes place in a quiet environment without noise; the subjects sit on a comfortable sofa and drive the car with a steering wheel, following the simulated track displayed 1 m in front of them.

Figure 1.

Experimental settings.

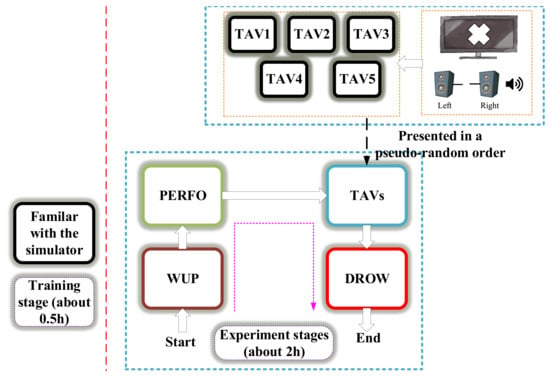

There are 8 tasks in the experiment, as shown in Table 1. To obtain a reference, we define the warm-up (WUP) stage at the beginning of the experiment as the baseline. In WUP, the subject is asked to drive 2 laps without any stimuli and without errors. The next stage, performance (PERFO), is similar to WUP, but the total driving time must be 2% less than in WUP. Then come the “alert” tasks with video and the “vigilance” tasks with audio (TAV), which appear randomly during the experiment and are designed to make the subjects tire more easily; they follow PERFO in the pseudo-random order TAV3, TAV1, TAV5, TAV2, TAV4. During the TAV stages, subjects receive visual or auditory stimuli at different frequencies. The stimulus frequencies of “alert” and “vigilance” represent different degrees of stimulation and define TAV1, TAV2, TAV3, TAV4, and TAV5 through their stimulus intervals [28,29,30,31]. The duration of each TAV depends on the total time spent in WUP. From TAV1 to TAV5, the stimulus intervals are 9800–10,200, 7700–8100, 5900–6300, 4100–4500, and 2300–2700 ms, respectively. The last stage is drowsiness (DROW), in which the subject drives 2 laps at low speed, as in a crowded city center, without any video or audio stimuli along the track. The subject presses “Button#1” with a left-hand finger when an ‘X’ appears (the “alert” task) and presses “Button#2” with a right-hand finger on hearing two consecutive “beep”s (the “vigilance” task) [32,33,34]. “Alert” stimuli mimic actual road conditions such as traffic lights, pedestrians crossing the road, and other vehicles (as shown in Figure 2) [35], while “vigilance” stimuli simulate the car radio, engine noise, or a phone call (as shown in Figure 3). The whole experiment usually lasts 2 h: WUP, PERFO, the 5 TAVs, and DROW take about 10 min, 2% less than WUP, 1 h, and 20 min, respectively, with a break of about 5 min between stages.
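The TAV stimulus timing above can be illustrated with a small schedule generator. This is only a sketch: the text gives the interval ranges but not how intervals are drawn, so we assume each inter-stimulus interval is sampled uniformly from its range, and `TAV_INTERVALS_MS` and `stimulus_onsets` are hypothetical names.

```python
import numpy as np

# Inter-stimulus interval ranges (ms) for TAV1..TAV5, as listed in the text.
TAV_INTERVALS_MS = {
    "TAV1": (9800, 10200),
    "TAV2": (7700, 8100),
    "TAV3": (5900, 6300),
    "TAV4": (4100, 4500),
    "TAV5": (2300, 2700),
}


def stimulus_onsets(stage, duration_s, seed=0):
    """Return stimulus onset times (s) for one TAV stage (hypothetical helper).

    Assumption: each inter-stimulus interval is drawn uniformly from the
    stage's range; the text gives only the ranges, not the distribution.
    """
    lo, hi = TAV_INTERVALS_MS[stage]
    rng = np.random.default_rng(seed)
    onsets, t = [], 0.0
    while True:
        t += rng.uniform(lo, hi) / 1000.0  # next interval, converted to seconds
        if t > duration_s:
            break
        onsets.append(t)
    return np.array(onsets)
```

With these ranges, TAV5 (mean interval 2.5 s) delivers roughly four times as many stimuli per minute as TAV1 (mean interval 10 s).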

Table 1.

Description of different tasks.

Figure 2.

Alert stimuli response process in TAV.

Figure 3.

Vigilance stimuli response process in TAV.

All subjects take a half-hour training session to familiarize themselves with the simulator’s commands and interfaces before the experiment starts. The experimental flow is shown in Figure 4.

Figure 4.

The experimental flow.

After each stage, the subjects fill in the NASA-TLX questionnaire to report their subjective perception of workload during the task. Moreover, a run sheet is used to record the subjects’ driving performance (“off-road” events) during the experiment, where “off-road” means that the subject has driven off the track with at least one wheel.

2.3. EEG Recording and Preprocessing

EEG is recorded with a digital monitoring system (Brain Products GmbH, Germany); all 61 EEG channels are referenced to the earlobe and grounded to FCz, their impedances are kept below 10 kΩ, and the sampling frequency F_s is 200 Hz. We use the EEGLAB toolbox in MATLAB to preprocess and process the EEG data. A band-pass filter retains the 1–30 Hz frequency range of the EEG for fatigue-driving analysis [36], and independent component analysis (ICA) [28,37] is used to remove EOG artifacts.
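A minimal Python sketch of the 1–30 Hz band-pass step, using SciPy. The paper uses EEGLAB in MATLAB; the zero-phase 4th-order Butterworth design here is a swapped-in assumption, not the authors' filter, and `bandpass_1_30` is a hypothetical name. ICA-based EOG removal is not shown.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt

FS = 200  # sampling frequency F_s in Hz, as stated in the text


def bandpass_1_30(eeg, fs=FS, order=4):
    """Zero-phase 1-30 Hz band-pass filter along the time axis.

    eeg: array of shape (n_channels, n_samples).
    Assumption: a Butterworth design in second-order sections, applied
    forwards and backwards; this is not EEGLAB's default filter.
    """
    sos = butter(order, [1.0, 30.0], btype="bandpass", fs=fs, output="sos")
    return sosfiltfilt(sos, eeg, axis=-1)
```

Filtering forwards and backwards avoids phase distortion, which keeps spectral features comparable across channels; the trade-off is that this form of the filter cannot run causally in real time.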

2.4. EEG Feature Extraction

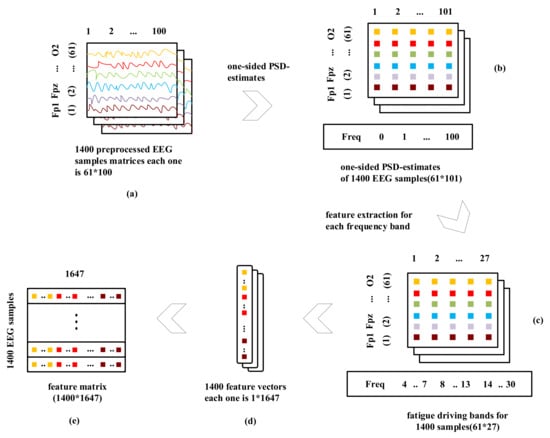

Before feeding each subject’s EEG data into the classifier for training, we extract power spectral density (PSD) features from it [38,39], which are commonly used in EEG analysis. The PSD feature extraction procedure is as follows:

- For the recorded EEG of each channel, a 0.5 s Hamming window, with no overlap between successive windows, is used to divide the EEG into multiple samples. We extract 1400 windows, each containing 0.5 s × F_s = 0.5 × 200 = 100 sample points (as shown in Figure 5a). Thus, the number of Hamming windows (HW) is 1400, and each window contains 100 × 61 channels = 6100 sample points.

Figure 5. EEG feature vectors generation process.

- For each channel in each window, we compute the one-sided PSD estimate of the EEG signal sampled at 200 Hz, which expresses the strength of the signal as the logarithm of its power at each integer frequency between 0 and 100 Hz. This produces a 101-element feature vector, so the 100 sample points of each channel become 101 features (as shown in Figure 5b).

- From the 101 features acquired in step 2, we then extract the 27 features in the θ band (4–7 Hz), α band (8–13 Hz), and β band (14–30 Hz) [40], that is, 4 + 6 + 17 = 27 integer frequencies (as shown in Figure 5c).

- The extracted features of all 61 channels are then concatenated into a feature vector of dimension D = 61 × 27 = 1647 (as shown in Figure 5d).

- Consequently, for the HW = 1400 windows (samples), we obtain a feature space FS of size HW × D = 1400 × 1647, which is fed into the proposed model for training (as shown in Figure 5e).
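The steps above can be sketched in NumPy as follows. This is an illustration under stated assumptions, not the authors' code: we read "integral frequencies between 0 and 100 Hz" as a Hamming-windowed periodogram zero-padded to 200 points (giving 1 Hz bins, 0–100 Hz), use log10 for the logarithm, and order features channel by channel; `psd_features` is a hypothetical name.

```python
import numpy as np

FS = 200    # sampling rate (Hz)
WIN = 100   # 0.5 s window -> 100 samples
NFFT = 200  # zero-padding to 200 points gives 1 Hz bins: 0..100 Hz (101 values)


def psd_features(eeg):
    """eeg: (n_channels, n_samples) -> features of shape (n_windows, n_channels * 27)."""
    n_ch, n_samp = eeg.shape
    n_win = n_samp // WIN
    # Non-overlapping windows, rearranged to (n_windows, n_channels, WIN).
    segs = eeg[:, :n_win * WIN].reshape(n_ch, n_win, WIN).transpose(1, 0, 2)
    w = np.hamming(WIN)
    spec = np.fft.rfft(segs * w, n=NFFT, axis=-1)      # 101 bins per channel
    psd = np.abs(spec) ** 2 / (FS * np.sum(w ** 2))    # periodogram scaling
    psd[..., 1:-1] *= 2                                # one-sided: double non-DC, non-Nyquist bins
    log_psd = np.log10(psd + 1e-20)                    # log power (base 10 assumed)
    freqs = np.fft.rfftfreq(NFFT, d=1.0 / FS)          # 0, 1, ..., 100 Hz
    keep = (freqs >= 4) & (freqs <= 30)                # theta + alpha + beta: 27 frequencies
    return log_psd[..., keep].reshape(n_win, -1)
```

For a 61-channel recording of 1400 × 100 samples, this returns a 1400 × 1647 matrix, matching the feature space FS described above.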

3. Methods

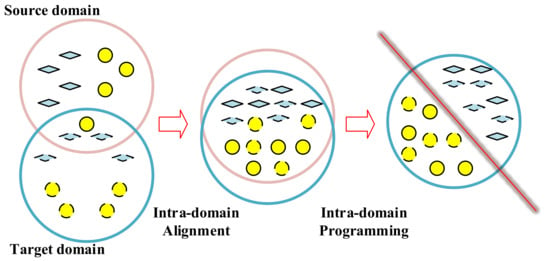

3.1. The Existing EasyTL Method

EasyTL [24] is a transfer-learning method that has been applied to image applications with good performance. It consists of two parts, intra-domain alignment and intra-domain programming, as shown in Figure 6. Intra-domain alignment [41] aligns the $n_s$ samples of the source domain, $\{\mathbf{x}_i^s\}_{i=1}^{n_s}$, with the $n_t$ samples of the target domain, $\{\mathbf{x}_j^t\}_{j=1}^{n_t}$, to make the difference between the two domains as small as possible. Note that the source domain is labeled, $\mathcal{D}_s = \{(\mathbf{x}_i^s, y_i^s)\}_{i=1}^{n_s}$, whereas the target domain is unlabeled, $\mathcal{D}_t = \{\mathbf{x}_j^t\}_{j=1}^{n_t}$.

Figure 6.

The procedure of EasyTL. The colored diamonds and circles denote samples from the source and target domain, respectively. The red line denotes the classifier.
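To our understanding, EasyTL's intra-domain alignment is based on CORAL, which re-colors the source features with the target covariance. The sketch below assumes that formulation (with an identity matrix added to both covariances for stability); `coral_align` and `_sym_power` are hypothetical names, not EasyTL's code.

```python
import numpy as np


def _sym_power(C, p):
    """Matrix power of a symmetric positive-definite matrix via eigendecomposition."""
    vals, vecs = np.linalg.eigh(C)
    return (vecs * vals ** p) @ vecs.T


def coral_align(Xs, Xt):
    """Align source features to the target's second-order statistics (CORAL-style).

    Xs: (n_s, d) source features; Xt: (n_t, d) target features.
    """
    d = Xs.shape[1]
    Cs = np.cov(Xs, rowvar=False) + np.eye(d)  # regularized source covariance
    Ct = np.cov(Xt, rowvar=False) + np.eye(d)  # regularized target covariance
    # Whiten with the source covariance, then re-color with the target covariance.
    return Xs @ _sym_power(Cs, -0.5) @ _sym_power(Ct, 0.5)
```

If the two domains already share a covariance, the transform reduces to the identity; the cross-subject EEG case is exactly where the covariances differ and this second-order matching may not be enough.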

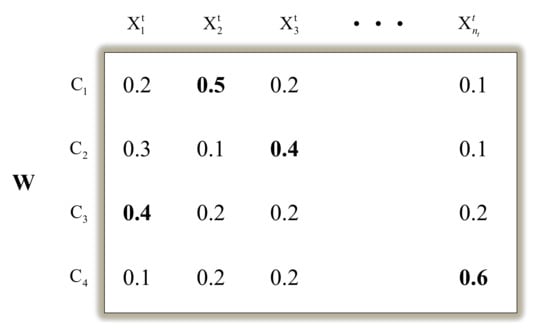

Intra-domain programming builds the classifier by introducing a Probability Annotation Matrix W, whose rows correspond to the class labels $c \in \{1, \dots, C\}$ and whose columns correspond to the target samples. The element $W_{cj}$ is the annotation probability that target sample $\mathbf{x}_j^t$ belongs to class c. Based on W, EasyTL predicts the labels of the target samples: each target sample is assigned the class with the maximum probability in its column, $\hat{y}_j^t = \arg\max_c W_{cj}$. For instance, in Figure 7, a target sample is assigned to the class whose entry, 0.4, is the largest among the probabilities in its column.

Figure 7.

An example of the probability annotation matrix. The rows denote the class labels, and the columns denote the target samples. Each entry indicates the annotation probability that the corresponding target sample belongs to the corresponding class. The entries that determine the predicted class labels are marked in bold.
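Intra-domain programming can be sketched as a small linear program. Following our reading of the EasyTL paper (an assumption, not code from it), W minimizes the total squared distance between target samples and source class centroids, subject to each column summing to 1 and each class receiving at least one unit of annotation mass; labels are then read off with the column-wise argmax described above. `intra_domain_programming` is a hypothetical name.

```python
import numpy as np
from scipy.optimize import linprog


def intra_domain_programming(Xs, ys, Xt):
    """Sketch of EasyTL-style intra-domain programming (assumed formulation).

    Xs, ys: aligned source features and labels; Xt: aligned target features.
    Returns the probability annotation matrix W (classes x target samples)
    and the predicted label of each target sample.
    """
    classes = np.unique(ys)
    C, nt = len(classes), Xt.shape[0]
    # Class centroids of the source domain.
    centroids = np.stack([Xs[ys == c].mean(axis=0) for c in classes])
    # D[c, j]: squared Euclidean distance from target sample j to centroid c.
    D = ((centroids[:, None, :] - Xt[None, :, :]) ** 2).sum(axis=2)

    # LP variables: W flattened row-major, so W[c, j] -> index c * nt + j.
    A_eq = np.zeros((nt, C * nt))
    for j in range(nt):
        A_eq[j, j::nt] = 1.0                 # sum_c W[c, j] = 1 for every target sample
    A_ub = np.zeros((C, C * nt))
    for c in range(C):
        A_ub[c, c * nt:(c + 1) * nt] = -1.0  # sum_j W[c, j] >= 1 for every class
    res = linprog(D.ravel(), A_ub=A_ub, b_ub=-np.ones(C),
                  A_eq=A_eq, b_eq=np.ones(nt), bounds=(0.0, 1.0), method="highs")
    if not res.success:
        raise RuntimeError(res.message)
    W = res.x.reshape(C, nt)
    # Predicted label: the class with the maximum annotation probability.
    return W, classes[W.argmax(axis=0)]
```

The "at least one sample per class" constraint keeps the solution from assigning every target sample to a single class, which is what distinguishes this step from plain nearest-centroid classification.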

3.2. InstanceEasyTL

The main reason EasyTL performs well in image recognition is that the pixel features of images in the target domain differ little from those in the source domain, which makes it relatively easy to align the two domains with intra-domain alignment. For cross-subject EEG analysis, however, the significant differences in EEG among subjects lead to large differences in the distributions of EEG features. The intra-domain alignment of EasyTL then has difficulty aligning the features of the source and target domains, so it is hard to obtain satisfactory cross-subject performance.

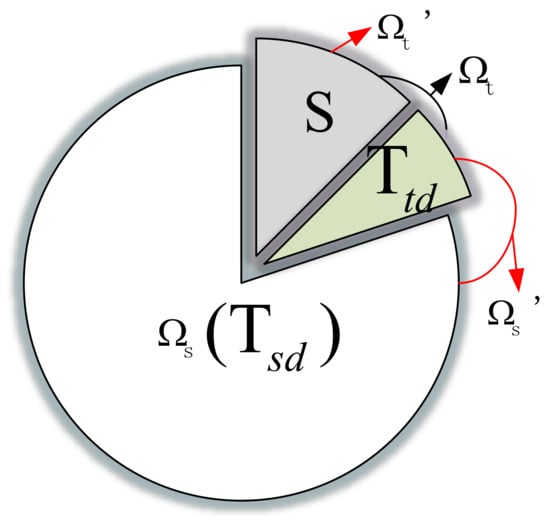

Therefore, in this paper, we propose an improved EasyTL-based method, InstanceEasyTL, to overcome this shortcoming of EasyTL in cross-subject EEG analysis. The main idea is to align EEG samples from the source and target domains with instance weights, so as to match the different distributions of EEG signals from different subjects. To this end, InstanceEasyTL “borrows” some labeled EEG samples from the target domain and combines them with the original source domain to form a new source domain for training; this provides more EEG training data and reduces the cost of EEG collection. As shown in Figure 8, the initial target domain is divided into two parts, S and a borrowed part. The borrowed part is added to the new source domain, which therefore consists of the initial source domain plus the borrowed part of the target domain, while the new target domain contains only S.

Figure 8.

New training sample set in InstanceEasyTL.

Mathematically, InstanceEasyTL can be described as follows.

First, the training source domain and the training target domain are determined according to a ratio coefficient, as given by Equations (1) and (2), respectively:

and

where the label sets correspond to the sample sets of the source and target domains, the pairs of sample and label denote the i-th sample and its class label in the source domain and in the target domain, respectively, and n and m are the numbers of samples in the training source domain and the training target domain.

Accordingly, the new source domain is formed by Equation (3) and the new target domain by Equation (4):

and

Here, T and S denote the sample sets of the new source domain and the new target domain, respectively, and l is the number of samples in the new target domain.
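The split of the target domain into a borrowed part and S can be sketched as follows. The helpers `split_target` and `build_new_domains` are hypothetical; we assume the ratio coefficient (0.3 in Section 4.2) is the fraction of target samples borrowed and that these samples are chosen at random, whereas Equations (1)–(4) may define the split differently (for example, chronologically).

```python
import numpy as np


def split_target(Xt, yt, ratio=0.3, seed=0):
    """Hypothetical helper: borrow a fraction `ratio` of labeled target samples.

    Assumption: the borrowed samples are chosen at random.
    Returns (X_borrowed, y_borrowed, S, y_S).
    """
    idx = np.random.default_rng(seed).permutation(len(Xt))
    m = int(round(ratio * len(Xt)))  # number of borrowed samples
    b, s = idx[:m], idx[m:]
    return Xt[b], yt[b], Xt[s], yt[s]


def build_new_domains(Xs, ys, Xt, yt, ratio=0.3, seed=0):
    """New source domain = original source + borrowed target samples; new target = S."""
    Xb, yb, S, yS = split_target(Xt, yt, ratio, seed)
    T = np.vstack([Xs, Xb])          # sample set of the new source domain
    yT = np.concatenate([ys, yb])
    return T, yT, S, yS
```

Under these assumptions, a target subject with 1400 samples and a ratio of 0.3 contributes 420 borrowed samples, leaving 980 samples in S.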

Algorithm 1 illustrates the proposed InstanceEasyTL method in detail.

First, initial weights are assigned to the samples of both the training source domain and the training target domain using Equation (5). Note that these sets relate to the source and target domains of EasyTL as illustrated in Figure 8.

Second, the weights of both training domains are divided by the sum of all weights to obtain normalized weights (Equation (6)). Using the intra-domain programming method of EasyTL as the base learner in Algorithm 1, we take the training sample set T of the new source domain (Equation (3)), the normalized weights, and the new test set S of the new target domain (Equation (4)) as inputs and compute the learner’s output. S is not used to update the weights; it is used only for testing after the iterations end.

Third, we calculate the error between the learner’s output and the real class labels, and the weights of both training domains are updated by a function of this error (see steps 6 and 7 in Algorithm 1).

Finally, when the number of iterations reaches N, the predicted labels of S are computed by Equation (10).

Algorithm 1. InstanceEasyTL.
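The iterative procedure described above can be sketched in Python, assuming InstanceEasyTL follows the TrAdaBoost update rules (Dai et al., 2007), which the description resembles but does not confirm. Equations (5)–(10) are not reproduced here, so the uniform initial weights, the fixed source factor `beta`, the error clipping, and the final vote over the second half of the iterations are all assumptions. The base learner `weighted_centroid_learner` is a hypothetical weighted nearest-centroid stand-in for EasyTL's intra-domain programming, and labels are assumed binary (0 = TAV3, 1 = DROW).

```python
import numpy as np


def weighted_centroid_learner(X, y, p, Xq):
    """Hypothetical stand-in base learner: nearest weighted class centroid.

    Labels are assumed binary (0 = TAV3, 1 = DROW); p are sample weights.
    """
    cents = np.stack([np.average(X[y == c], axis=0, weights=p[y == c]) for c in (0, 1)])
    d = ((Xq[:, None, :] - cents[None, :, :]) ** 2).sum(axis=2)
    return d.argmin(axis=1)


def instance_transfer(Xs, ys, Xb, yb, S, N=30, learner=weighted_centroid_learner):
    """TrAdaBoost-style instance weighting (assumed update rules).

    Xs, ys: training source domain; Xb, yb: borrowed (labeled) target samples;
    S: new target samples, used only for the final prediction.
    """
    n, m = len(Xs), len(Xb)
    T, yT = np.vstack([Xs, Xb]), np.concatenate([ys, yb])
    w = np.ones(n + m)                                   # initial weights
    beta = 1.0 / (1.0 + np.sqrt(2.0 * np.log(n) / N))    # fixed factor for source samples
    betas, preds_S = [], []
    for _ in range(N):
        p = w / w.sum()                                  # normalized weights
        err = np.abs(learner(T, yT, p, T) - yT)          # 0/1 error on the training set
        preds_S.append(learner(T, yT, p, S))             # S is never used to update weights
        eps = np.sum(w[n:] * err[n:]) / np.sum(w[n:])    # weighted error on borrowed samples
        eps = np.clip(eps, 1e-10, 0.499)                 # keep beta_t in (0, 1)
        beta_t = eps / (1.0 - eps)
        betas.append(beta_t)
        w[:n] *= beta ** err[:n]                         # shrink misclassified source samples
        w[n:] *= beta_t ** (-err[n:])                    # boost misclassified borrowed samples
    # Weighted vote of the hypotheses from iterations ceil(N/2)..N.
    start = (N - 1) // 2
    log_b = np.log(np.array(betas[start:]))
    H = np.array(preds_S[start:])
    score = -(log_b[:, None] * H).sum(axis=0)            # log of prod beta_t^(-h_t(x))
    return (score >= -0.5 * log_b.sum()).astype(int)     # compare with log of prod beta_t^(-1/2)
```

The two update rules pull in opposite directions: source samples that the learner gets wrong lose weight, while misclassified borrowed target samples gain weight, so later iterations focus on source samples that resemble the target subject. Replacing the stand-in with EasyTL's intra-domain programming would require a way to feed the weights into that step, which this sketch does not attempt.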

4. Results

The experiments are run on a GPU server with 64 GB of memory and a Titan Xp graphics card with 12 GB of graphics memory. In addition, a machine with an Intel i5-4570 CPU (3.2 GHz), 1 TB of storage, and 8 GB of memory is also used to run these algorithms. InstanceEasyTL and the comparison models, except DANN, are run in MATLAB R2016b, and DANN is run in PyCharm 2018.3.3. Code is available at https://github.com/13etterrnan/InstanceEasyTL.

We compare InstanceEasyTL with traditional machine-learning and transfer-learning methods: SVM with a linear kernel and intra-domain alignment [41], Transfer Component Analysis (TCA), Geodesic Flow Kernel (GFK), Domain-adversarial Neural Networks (DANN), and the existing EasyTL.

4.1. Selection of Experimental Conditions

To select the experimental conditions used to train the models, we use the subjective workload (NASA-TLX) and the subjects’ driving performance (“off-road” events). Across the experimental conditions, TAV3 and DROW show the highest and lowest workload, respectively. In addition, because TAV3 is the first stage with audio and video stimuli in each experiment, the subjects are most alert during it, whereas in DROW they are very likely to feel tired. Therefore, TAV3 and DROW are used as the typical mental states for analysis.

Each subject has 1400 samples in total: 700 for TAV3 and 700 for DROW.

4.2. Parameter Configurations of Models

For SVM, the kernel type is linear. For TCA, the kernel type is primal. In InstanceEasyTL, the ratio coefficient in Equation (2) is set to 0.3.

In this paper, we set the number of iterations N of InstanceEasyTL based on the observation that its performance converges after 30 iterations. We perform 15 experiments to test InstanceEasyTL: in each, 14 of the 15 subjects provide the training (source-domain) samples and the remaining subject provides the testing (target-domain) samples. Thus, over the 15 experiments, each subject serves as the target domain exactly once, and the borrowed target-domain samples in each experiment come from a different subject, which gives a more objective assessment of InstanceEasyTL.
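The leave-one-subject-out protocol above can be sketched as a loop. `loso_splits`, `run_loso`, and the `evaluate` callable are hypothetical names; borrowing from the target subject is assumed to happen inside `evaluate`. With 1400 samples per subject, each experiment's source domain stacks 14 × 1400 = 19,600 samples.

```python
import numpy as np


def loso_splits(n_subjects=15):
    """Yield (target_subject, source_subjects) for leave-one-subject-out runs."""
    for target in range(n_subjects):
        yield target, [s for s in range(n_subjects) if s != target]


def run_loso(features, labels, evaluate):
    """features[s]: (n_samples, D) array and labels[s]: (n_samples,) for subject s.

    `evaluate(Xs, ys, Xt, yt)` is a hypothetical callable that trains on the
    source domain (borrowing from the target internally, if needed) and
    returns the accuracy on the target subject.
    """
    accs = []
    for target, sources in loso_splits(len(features)):
        Xs = np.vstack([features[s] for s in sources])
        ys = np.concatenate([labels[s] for s in sources])
        accs.append(evaluate(Xs, ys, features[target], labels[target]))
    return np.array(accs)
```

The mean of the returned array corresponds to the average accuracy reported over the 15 Sub_i-others experiments.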

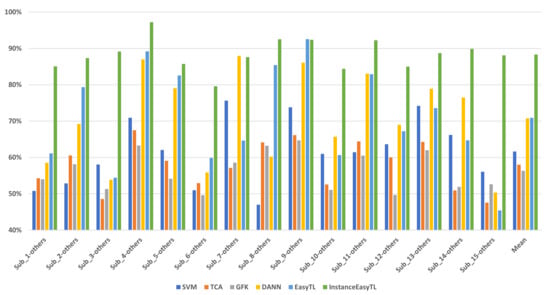

4.3. Classification Accuracy of InstanceEasyTL

Figure 9 shows the classification performance of the different models. In almost all of the 15 experiments, InstanceEasyTL achieves the highest cross-subject classification accuracy among these classifiers, most clearly in Sub_1-others, Sub_3-others, and Sub_15-others. On average, InstanceEasyTL reaches an accuracy of 88.33%, substantially higher than SVM (61.65%), TCA (58.01%), GFK (56.32%), DANN (70.75%), and EasyTL (70.91%); this is 17.42 percentage points above the second-ranked classifier, EasyTL.

Figure 9.

Classification accuracy comparison. The x-axis shows Sub_i-others (i = 1, 2, …, 15), where Sub_i provides the samples of the target domain and “others” provide the samples of the source domain. For example, in Sub_1-others, the samples of Sub_1 form the target domain, and those of the other 14 subjects form the source domain. The y-axis shows the classification accuracy of InstanceEasyTL and the comparison methods.

4.4. Statistical Analysis Results

To further analyze the performance of InstanceEasyTL in fatigue-driving detection, we calculate the Recall, Precision, and F1score of each classifier; the results are shown in Table 2. These three indicators are calculated as follows:

$$\mathrm{Recall} = \frac{TP}{TP + FN}, \qquad \mathrm{Precision} = \frac{TP}{TP + FP}, \qquad \mathrm{F1score} = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}} \tag{11}$$

where TP is the number of samples correctly predicted as DROW, FP is the number of samples incorrectly predicted as DROW, and FN is the number of samples incorrectly predicted as TAV3.

Table 2.

Recall, Precision, and F1score (%) performance.

Thus, Recall is the ratio of samples correctly predicted as DROW to all samples in the DROW state, Precision is the ratio of samples correctly predicted as DROW to all samples predicted as DROW, and F1score is the harmonic mean of Precision and Recall.
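The three indicators can be checked with a few lines of Python. We assume DROW is encoded as label 1 and TAV3 as 0; `binary_metrics` is a hypothetical helper, not part of the released code.

```python
def binary_metrics(y_true, y_pred, positive=1):
    """Recall, Precision and F1score with DROW (label 1) as the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if p == positive and t == positive)  # correctly predicted DROW
    fp = sum(1 for t, p in pairs if p == positive and t != positive)  # TAV3 predicted as DROW
    fn = sum(1 for t, p in pairs if p != positive and t == positive)  # DROW predicted as TAV3
    recall = tp / (tp + fn) if tp + fn else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return recall, precision, f1
```

For example, with four DROW and four TAV3 samples where one DROW sample is missed and one TAV3 sample is flagged as DROW, all three indicators equal 0.75.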

Across the 15 experiments, InstanceEasyTL outperforms all other methods. The average Recall, Precision, and F1score of InstanceEasyTL on our dataset are 89.08%, 88.02%, and 88.46%, respectively, exceeding those of DANN by 18%, 16%, and 17%, respectively.

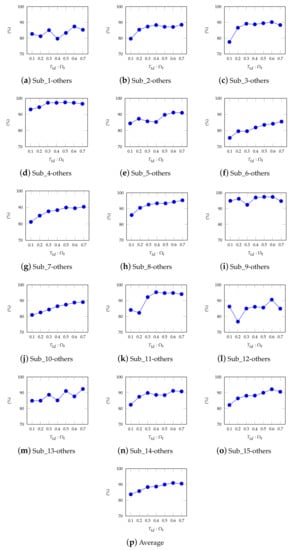

4.5. The Impact of Different Ratios on InstanceEasyTL

In this section, we evaluate the impact of the ratio of borrowed target-domain samples on InstanceEasyTL, setting the ratio to 0.1, 0.2, 0.3, 0.4, and 0.5. As shown in Figure 10, different ratios lead to different classification accuracies. The cross-subject recognition accuracy of InstanceEasyTL is above 80% and, with very few exceptions, increases with the ratio. The average accuracy of InstanceEasyTL in Figure 10p shows the same trend. We therefore conclude that, within the tested range, the higher the ratio, the higher the accuracy of InstanceEasyTL.

Figure 10.

The accuracy of InstanceEasyTL with different ratios, where Sub_i-others (i = 1, 2, …, 15) has the same meaning as in Figure 9. The average accuracy of InstanceEasyTL is also shown.

5. Discussion

Compared with the other methods, InstanceEasyTL achieves better classification accuracy in cross-subject EEG analysis, for two main reasons. First, from the perspective of data, InstanceEasyTL “borrows” some samples and their labels from the target domain and treats them as part of the newly generated source domain, so it acquires more information about the target domain, which raises classification accuracy. Other methods can also use the target-domain samples for classification, but usually without the corresponding labels. Second, from the perspective of model training, InstanceEasyTL adjusts the sample weights over multiple iterations and can thus adaptively select samples similar to those in the target domain, which benefits subsequent training. Because InstanceEasyTL trains on samples and labels drawn from different subjects, including the target subject, it achieves much better cross-subject performance. Other methods, such as TCA and EasyTL, have proven effective in image recognition because they make the feature distributions of the source and target domains more similar; however, the significant differences of EEG signals over time and across subjects make it very difficult to train these models to obtain similar source- and target-domain features for cross-subject EEG analysis.

We then use Recall, Precision, and F1score for statistical comparison. From Equation (11), the greater the Recall, Precision, and F1score, the better a classifier distinguishes DROW from TAV3. As Table 2 shows, InstanceEasyTL has the highest Recall, Precision, and F1score, which indicates its better classification performance in cross-subject analysis.

Moreover, we evaluate the impact of different ratios on InstanceEasyTL, as shown in Figure 10. Here, S forms the new target domain, and the borrowed target-domain samples are added to the new source domain; InstanceEasyTL trains on the new source domain and tests on the new target domain. In general, the proportions of samples in these two domains affect the performance of InstanceEasyTL. As the ratio increases, on the one hand, more samples are available for training in the new source domain, and the performance of InstanceEasyTL improves accordingly. On the other hand, InstanceEasyTL can extract more characteristics of the original target domain, which suits cross-subject EEG analysis. Additionally, more information is obtained when the proportion equals 0.7, since the number of borrowed samples becomes larger, which helps InstanceEasyTL extract the target-domain characteristics and improves its performance. However, a few anomalous results exist. For instance, in Figure 10a, the accuracy of InstanceEasyTL decreases when the ratio increases from 0.3 to 0.4. A possible reason is that the artifact components in the EEG signals were not completely removed during preprocessing, so some artifacts remain in the new source domain or the new target domain, reducing the classification accuracy of InstanceEasyTL.

6. Conclusions

In this paper, we propose an EasyTL-based model, InstanceEasyTL, for EEG-based fatigue detection across subjects. InstanceEasyTL extracts important information from some subjects and transfers it to new subjects by weighting the samples and “borrowing” part of the new subject’s data as training samples. To verify the performance of InstanceEasyTL, we compare it with SVM, TCA, GFK, DANN, and EasyTL. The results show that InstanceEasyTL obtains better cross-subject classification performance. Moreover, the statistical analysis shows that InstanceEasyTL better distinguishes the DROW mental state from TAV3, indicating more comprehensive classification performance. In addition, by adjusting the ratio of borrowed target-domain samples, we find that the performance of InstanceEasyTL improves as the ratio increases. In future work, we will focus on partial transfer learning, in which the label space of the target domain is a subspace of that of the source domain.

Author Contributions

Conceptualization, H.Z.; methodology, H.Z., J.Z. and W.Z.; software, J.Z. and X.L.; validation, J.Z. and X.L.; formal analysis, H.Z.; investigation, H.Z.; resources, H.Z.; data curation, F.B. and B.G.; writing—original draft preparation, H.Z.; writing—review and editing, W.Z. and W.K.; visualization, J.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Key R&D Program of China with grant No. 2017YFE0118200, NSFC with grant No. 62076083, Excellent Dissertation Cultivation Fund of Zhejiang Province with grant No. GK208802299013-101, the Graduate Scientific Research Foundation of Hangzhou Dianzi University with grant No. CXJJ2020086 and the Fundamental Research Funds for the Provincial Universities of Zhejiang with grant No. GK209907299001-008.

Acknowledgments

The authors would like to thank Yixian Yuan from Dongfang College, Zhejiang University of Finance & Economics for his help with writing–review and editing. The authors also thank the International Joint Research Center for Brain-Machine Collaborative Intelligence of Hangzhou Dianzi University with grant No. 2017B01020, Key Laboratory of Brain-Machine Collaborative Intelligence of Zhejiang Province with grant No. 2020E10010, and Industrial Neuroscience Laboratory of University of Rome “La Sapienza”.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Connor, J.L. The role of driver sleepiness in car crashes: A review of the epidemiological evidence. In Drugs, Driving and Traffic Safety; Springer: Basel, Switzerland, 2009; pp. 187–205.

- Khushaba, R.N.; Kodagoda, S.; Lal, S.; Dissanayake, G. Driver drowsiness classification using fuzzy wavelet-packet-based feature-extraction algorithm. IEEE Trans. Biomed. Eng. 2010, 58, 121–131.

- Hartley, L.; Horberry, T.; Mabbott, N.; Krueger, G.P. Review of Fatigue Detection and Prediction Technologies; National Road Transport Commission: Melbourne, Australia, 2000.

- Rau, P.S. Drowsy driver detection and warning system for commercial vehicle drivers: Field operational test design, data analyses, and progress. In Proceedings of the 19th International Conference on Enhanced Safety of Vehicles, Washington, DC, USA, 6–9 June 2005; pp. 6–9.

- Zhang, Y.; Hua, C. Driver fatigue recognition based on facial expression analysis using local binary patterns. Optik 2015, 126, 4501–4505.

- Michielsen, H.J.; De Vries, J.; Van Heck, G.L.; Van de Vijver, F.J.; Sijtsma, K. Examination of the dimensionality of fatigue. Eur. J. Psychol. Assess. 2004, 20, 39–48.

- Lai, J.S.; Cella, D.; Choi, S.; Junghaenel, D.U.; Christodoulou, C.; Gershon, R.; Stone, A. How item banks and their application can influence measurement practice in rehabilitation medicine: A PROMIS fatigue item bank example. Arch. Phys. Med. Rehabil. 2011, 92, S20–S27.

- Meng, F.; Li, S.; Cao, L.; Li, M.; Peng, Q.; Wang, C.; Zhang, W. Driving fatigue in professional drivers: A survey of truck and taxi drivers. Traffic Inj. Prev. 2015, 16, 474–483.

- Bener, A.; Yildirim, E.; Özkan, T.; Lajunen, T. Driver sleepiness, fatigue, careless behavior and risk of motor vehicle crash and injury: Population based case and control study. J. Traffic Transp. Eng. (Engl. Ed.) 2017, 4, 496–502.

- Chai, R.; Ling, S.H.; San, P.P.; Naik, G.R.; Nguyen, T.N.; Tran, Y.; Craig, A.; Nguyen, H.T. Improving EEG-based driver fatigue classification using sparse-deep belief networks. Front. Neurosci. 2017, 11, 103.

- Huo, X.Q.; Zheng, W.L.; Lu, B.L. Driving fatigue detection with fusion of EEG and forehead EOG. In Proceedings of the 2016 International Joint Conference on Neural Networks (IJCNN), Vancouver, BC, Canada, 24–29 July 2016; pp. 897–904.

- Wang, F.; Wang, H.; Fu, R. Real-Time ECG-based detection of fatigue driving using sample entropy. Entropy 2018, 20, 196.

- Aricò, P.; Borghini, G.; Di Flumeri, G.; Sciaraffa, N.; Colosimo, A.; Babiloni, F. Passive BCI in operational environments: Insights, recent advances, and future trends. IEEE Trans. Biomed. Eng. 2017, 64, 1431–1436.

- Aricò, P.; Borghini, G.; Di Flumeri, G.; Sciaraffa, N.; Babiloni, F. Passive BCI beyond the lab: Current trends and future directions. Physiol. Meas. 2018, 39, 08TR02.

- Akin, M.; Kurt, M.B.; Sezgin, N.; Bayram, M. Estimating vigilance level by using EEG and EMG signals. Neural Comput. Appl. 2008, 17, 227–236.

- Zhang, T.; Chen, W. LMD based features for the automatic seizure detection of EEG signals using SVM. IEEE Trans. Neural Syst. Rehabil. Eng. 2016, 25, 1100–1108.

- Hearst, M.A.; Dumais, S.T.; Osuna, E.; Platt, J.; Scholkopf, B. Support vector machines. IEEE Intell. Syst. Their Appl. 1998, 13, 18–28.

- Yuan, S.; Liu, J.; Shang, J.; Kong, X.; Yuan, Q.; Ma, Z. The earth mover’s distance and Bayesian linear discriminant analysis for epileptic seizure detection in scalp EEG. Biomed. Eng. Lett. 2018, 8, 373–382.

- Mehmood, R.M.; Lee, H.J. Emotion classification of EEG brain signal using SVM and KNN. In Proceedings of the 2015 IEEE International Conference on Multimedia & Expo Workshops (ICMEW), Turin, Italy, 29 June–3 July 2015; pp. 1–5.

- Alhagry, S.; Fahmy, A.A.; El-Khoribi, R.A. Emotion recognition based on EEG using LSTM recurrent neural network. Int. J. Adv. Comput. Sci. Appl. 2017, 8, 355–358.

- Acharya, U.R.; Oh, S.L.; Hagiwara, Y.; Tan, J.H.; Adeli, H. Deep convolutional neural network for the automated detection and diagnosis of seizure using EEG signals. Comput. Biol. Med. 2018, 100, 270–278.

- Zeng, H.; Yang, C.; Zhang, H.; Wu, Z.; Zhang, J.; Dai, G.; Babiloni, F.; Kong, W. A lightGBM-based EEG analysis method for driver mental states classification. Comput. Intell. Neurosci. 2019, 2019.

- Deng, Z.; Xu, P.; Xie, L.; Choi, K.S.; Wang, S. Transductive joint-knowledge-transfer TSK FS for recognition of epileptic EEG signals. IEEE Trans. Neural Syst. Rehabil. Eng. 2018, 26, 1481–1494.

- Wang, J.; Chen, Y.; Yu, H.; Huang, M.; Yang, Q. Easy Transfer Learning By Exploiting Intra-domain Structures. In Proceedings of the IEEE International Conference on Multimedia & Expo (ICME), Shanghai, China, 8–12 July 2019.

- Pan, S.J.; Tsang, I.W.; Kwok, J.T.; Yang, Q. Domain adaptation via transfer component analysis. IEEE Trans. Neural Netw. 2010, 22, 199–210.

- Gong, B.; Shi, Y.; Sha, F.; Grauman, K. Geodesic flow kernel for unsupervised domain adaptation. In Proceedings of the IEEE Conference on Computer Vision & Pattern Recognition, Providence, RI, USA, 16–21 June 2012.

- Ganin, Y.; Ustinova, E.; Ajakan, H.; Germain, P.; Larochelle, H.; Laviolette, F.; Marchand, M.; Lempitsky, V. Domain-adversarial training of neural networks. J. Mach. Learn. Res. 2016, 17, 2030–2096.

- Zeng, H.; Yang, C.; Dai, G.; Qin, F.; Zhang, J.; Kong, W. EEG classification of driver mental states by deep learning. Cogn. Neurodynamics 2018, 12, 597–606.

- Kong, W.; Zhou, Z.; Jiang, B.; Babiloni, F.; Borghini, G. Assessment of driving fatigue based on intra/inter-region phase synchronization. Neurocomputing 2017, 219, 474–482.

- Vecchiato, G.; Borghini, G.; Aricò, P.; Graziani, I.; Maglione, A.G.; Cherubino, P.; Babiloni, F. Investigation of the effect of EEG-BCI on the simultaneous execution of flight simulation and attentional tasks. Med. Biol. Eng. Comput. 2016, 54, 1503–1513.

- Jahankhani, P.; Kodogiannis, V.; Revett, K. EEG signal classification using wavelet feature extraction and neural networks. In Proceedings of the IEEE John Vincent Atanasoff 2006 International Symposium on Modern Computing (JVA’06), Sofia, Bulgaria, 3–6 October 2006; pp. 120–124.

- Borghini, G.; Astolfi, L.; Vecchiato, G.; Mattia, D.; Babiloni, F. Measuring neurophysiological signals in aircraft pilots and car drivers for the assessment of mental workload, fatigue and drowsiness. Neurosci. Biobehav. Rev. 2014, 44, 58–75.

- Maglione, A.; Borghini, G.; Aricò, P.; Borgia, F.; Graziani, I.; Colosimo, A.; Kong, W.; Vecchiato, G.; Babiloni, F. Evaluation of the workload and drowsiness during car driving by using high resolution EEG activity and neurophysiologic indices. In Proceedings of the 2014 36th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Chicago, IL, USA, 26–30 August 2014; pp. 6238–6241.

- Lal, S.K.; Craig, A. Driver fatigue: Electroencephalography and psychological assessment. Psychophysiology 2002, 39, 313–321.

- Bach, K.M.; Jæger, M.G.; Skov, M.B.; Thomassen, N.G. Interacting with in-vehicle systems: Understanding, measuring, and evaluating attention. In Proceedings of the People and Computers XXIII Celebrating People and Technology (HCI), Cambridge, UK, 1–5 September 2009; pp. 453–462.

- Lay-Ekuakille, A.; Vergallo, P.; Caratelli, D.; Conversano, F.; Casciaro, S.; Trabacca, A. Multispectrum approach in quantitative EEG: Accuracy and physical effort. IEEE Sens. J. 2013, 13, 3331–3340.

- Zeng, H.; Wu, Z.; Zhang, J.; Yang, C.; Zhang, H.; Dai, G.; Kong, W. EEG Emotion Classification Using an Improved SincNet-Based Deep Learning Model. Brain Sci. 2019, 9, 326.

- Bhattacharyya, S.; Sengupta, A.; Chakraborti, T.; Konar, A.; Tibarewala, D.N. Automatic feature selection of motor imagery EEG signals using differential evolution and learning automata. Med. Biol. Eng. Comput. 2014, 52, 131–139.

- Zeng, H.; Dai, G.; Kong, W.; Chen, F.; Wang, L. A novel nonlinear dynamic method for stroke rehabilitation effect evaluation using EEG. IEEE Trans. Neural Syst. Rehabil. Eng. 2017, 25, 2488–2497.

- Li, L. The differences among eyes-closed, eyes-open and attention states: An EEG study. In Proceedings of the 2010 6th International Conference on Wireless Communications Networking and Mobile Computing (WiCOM), Chengdu, China, 23–25 September 2010; pp. 1–4.

- Sun, B.; Feng, J.; Saenko, K. Return of frustratingly easy domain adaptation. In Proceedings of the Thirtieth AAAI Conference on Artificial Intelligence, Phoenix, AZ, USA, 12–17 February 2016.

- Dai, W.; Yang, Q.; Xue, G.R.; Yu, Y. Boosting for transfer learning. In Proceedings of the 24th International Conference on Machine Learning, Corvallis, OR, USA, 20–24 June 2007; ACM: New York, NY, USA, 2007; pp. 193–200.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).