Cascaded Cross-Modality Fusion Network for 3D Object Detection

Abstract

1. Introduction

2. Related Work

2.1. RGB-Based 3D Object Detection

2.2. LIDAR-Based 3D Object Detection

2.3. LIDAR-RGB Fusion-Based 3D Object Detection

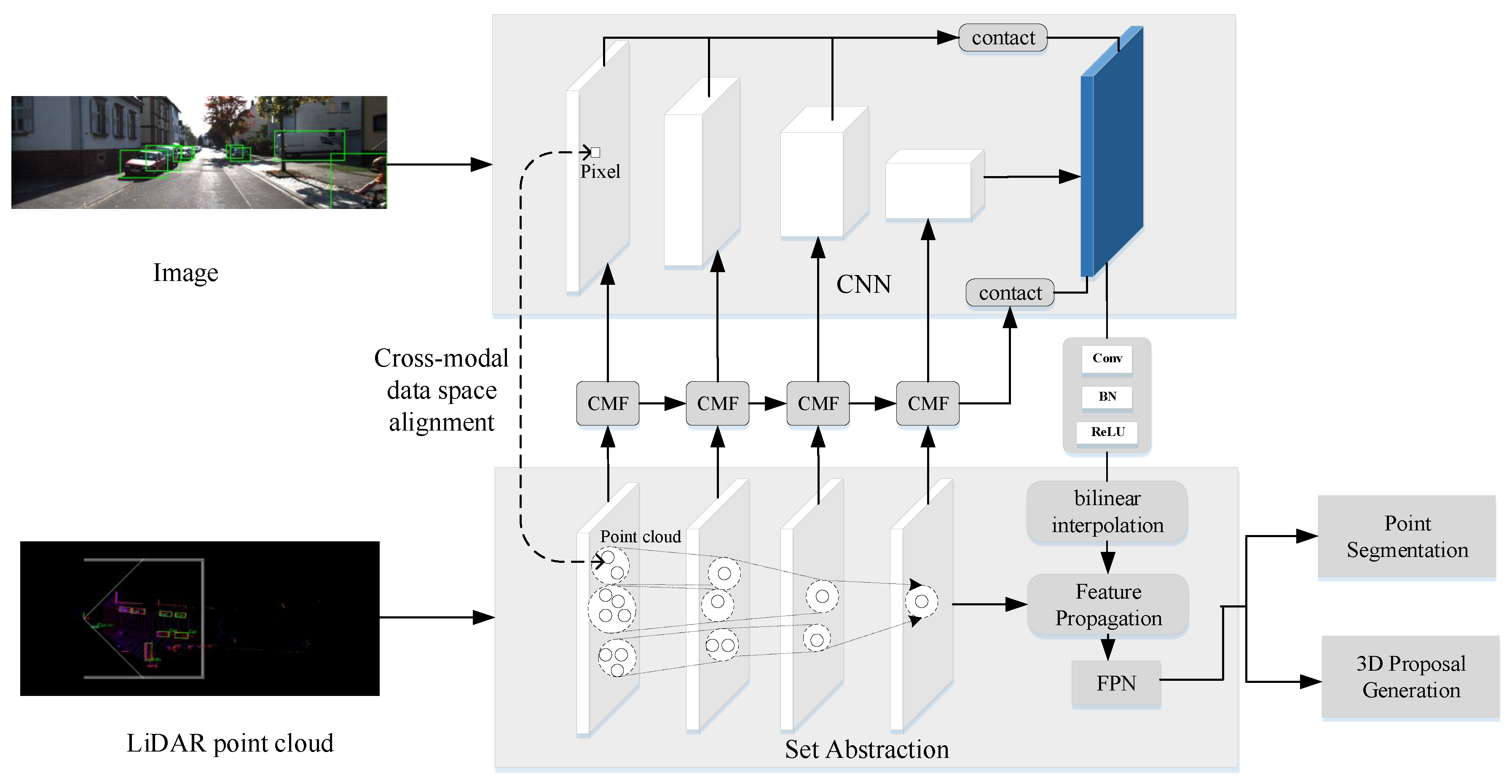

3. Our Approach

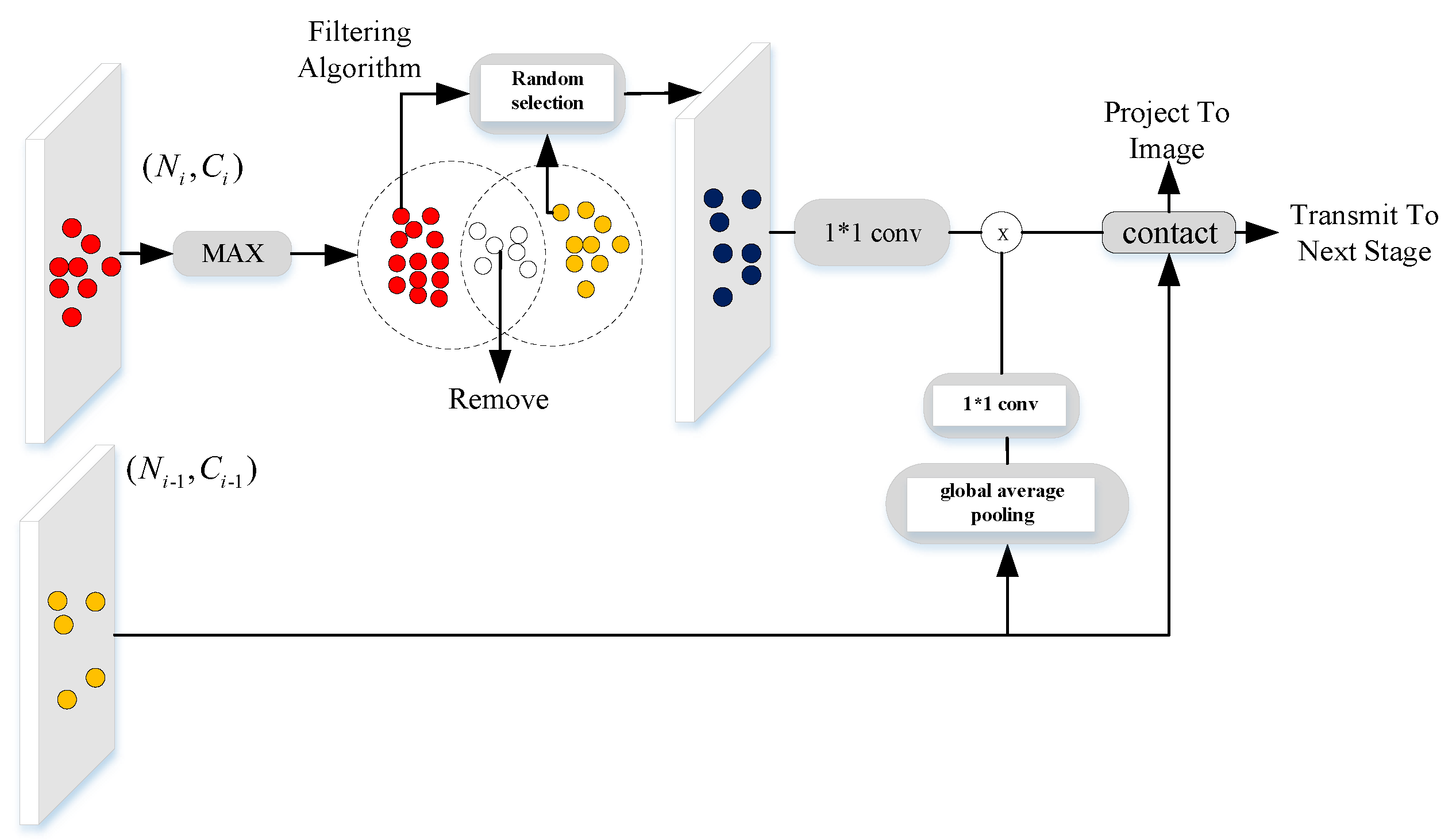

3.1. Cascaded Multi-Scale Fusion Module

Algorithm 1. A regulation algorithm for the CMF module that avoids over-saturation of repeated points in the point candidates.

Input: point cloud features from the previous stage and the point set of . Output: regulated point cloud features .
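Only the caption and the input/output of Algorithm 1 are given here, so the following is not the authors' regulation procedure. It is a hypothetical sketch of one generic way to keep repeated points from over-weighting a candidate group. When a neighbor query returns the same point index several times (for example, because the group was padded to a fixed size K), each distinct point is counted once before the group's features are average-pooled. The function name `regulate_grouped_features`, the per-group index layout, and the choice of mean pooling are all assumptions.

```python
from typing import List, Sequence


def regulate_grouped_features(
    features: Sequence[Sequence[float]],
    group_idx: Sequence[Sequence[int]],
) -> List[List[float]]:
    """Hypothetical sketch: mean-pool each candidate group, counting repeated points once.

    features:  per-point feature vectors from the previous stage (N x C).
    group_idx: for each group, the indices of its K candidate points; an index
               may repeat, e.g. when a group is padded to a fixed size.
    Returns one pooled feature vector (length C) per group.
    """
    pooled: List[List[float]] = []
    for group in group_idx:
        # dict.fromkeys keeps the first occurrence of each index, in order,
        # so a point repeated k times contributes once instead of k times.
        unique_idx = list(dict.fromkeys(group))
        if not unique_idx:
            raise ValueError("each candidate group must contain at least one point")
        dim = len(features[unique_idx[0]])
        total = [0.0] * dim
        for i in unique_idx:
            for c in range(dim):
                total[c] += features[i][c]
        pooled.append([v / len(unique_idx) for v in total])
    return pooled


if __name__ == "__main__":
    feats = [[1.0], [3.0], [5.0]]
    groups = [[0, 0, 0, 1], [2, 1, 2, 2]]  # point 0 and point 2 are repeated
    print(regulate_grouped_features(feats, groups))  # [[2.0], [4.0]]
```

For comparison, a naive mean over the padded groups would give 1.5 and 4.5, with the repeated point pulling each result toward its own value; this is the kind of over-saturation the caption describes.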

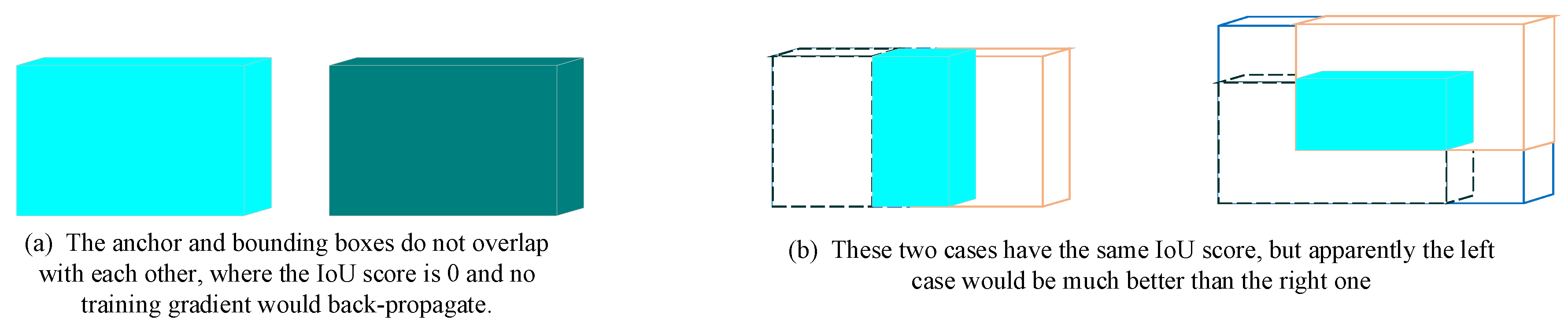

3.2. Center 3D IoU Loss

3.3. 3D Region Proposal Network

4. Experiments

4.1. Dataset and Evaluation Metrics

4.2. Implementation Detail

4.3. Anchors and Targets

4.4. Ablation Study

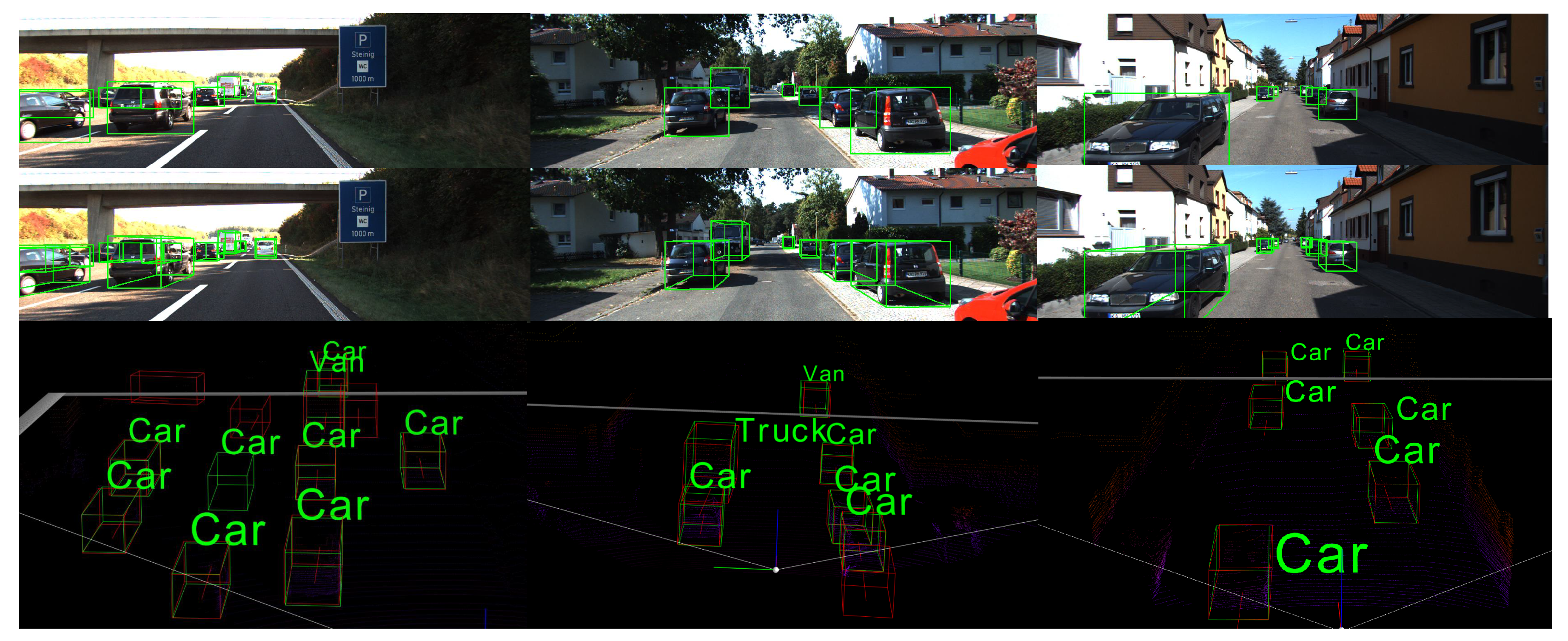

4.5. Evaluation on the KITTI Validation Set

4.6. Evaluation on the KITTI Test Set

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Siam, M.; Mahgoub, H.; Zahran, M.; Yogamani, S.; Jagersand, M.; El-Sallab, A. MODNet: Motion and Appearance based Moving Object Detection Network for Autonomous Driving. In Proceedings of the 2018 21st International Conference on Intelligent Transportation Systems (ITSC), Maui, HI, USA, 4–7 November 2018; pp. 122–134.

2. Cai, Z.; Fan, Q.; Feris, R.S.; Vasconcelos, N. A unified multi-scale deep convolutional neural network for fast object detection. In Proceedings of the 14th European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; pp. 354–370.

3. Ku, J.; Mozifian, M.; Lee, J.; Harakeh, A.; Waslander, S. Joint 3D proposal generation and object detection from view aggregation. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems, Madrid, Spain, 1–5 October 2018; pp. 1–8.

4. Wu, F.; Chen, F.; Jing, X.; Hu, C.; Ge, Q.; Ji, Y. Dynamic attention network for semantic segmentation. Neurocomputing 2020, 384, 182–191.

5. Vora, S.; Lang, A.; Helou, B.; Beijbom, O. PointPainting: Sequential fusion for 3D object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–18 June 2020; pp. 4604–4612.

6. Xie, L.; Xiang, C.; Yu, G.; Yang, Z.; Cai, D.; He, X. PI-RCNN: An efficient multi-sensor 3D object detector with point-based attentive cont-conv fusion module. arXiv 2020, arXiv:1911.06084.

7. Chen, X.; Ma, H.; Wan, J.; Li, B.; Xia, T. Multi-view 3D object detection network for autonomous driving. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1907–1915.

8. Pang, S.; Morris, D.; Radha, H. CLOCs: Camera-LiDAR object candidates fusion for 3D object detection. arXiv 2020, arXiv:2009.00784.

9. Yoo, J.; Kim, Y.; Kim, J.; Choi, J. 3D-CVF: Generating joint camera and LiDAR features using cross-view spatial feature fusion for 3D object detection. arXiv 2020, arXiv:2004.12636.

10. Liang, M.; Yang, B.; Chen, Y.; Hu, R.; Urtasun, R. Multi-Task multi-sensor fusion for 3D object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2019, Long Beach, CA, USA, 15–21 June 2019; pp. 7345–7353.

11. Rezatofighi, H.; Tsoi, N.; Gwak, J.Y.; Sadeghian, A.; Reid, I.; Savarese, S. Generalized intersection over union: A metric and a loss for bounding box regression. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2019, Long Beach, CA, USA, 15–21 June 2019; pp. 658–666.

12. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

13. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.; Berg, A.C. SSD: Single shot multibox detector. In Proceedings of the 14th European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; pp. 21–37.

14. Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271.

15. Song, S.; Xiao, J. Deep sliding shapes for amodal 3D object detection in RGB-D images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 808–816.

16. Gupta, S.; Girshick, R.; Arbeláez, P.; Malik, J. Learning rich features from RGB-D images for object detection and segmentation. In Proceedings of the 13th European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 345–360.

17. Tekin, B.; Sinha, S.N.; Fua, P. Real-time seamless single shot 6D object pose prediction. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 292–301.

18. Dhiman, V.; Tran, Q.H.; Corso, J.; Chandraker, M. A continuous occlusion model for road scene understanding. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 4331–4339.

19. Laidlow, T.; Czarnowski, J.; Leutenegger, S. DeepFusion: Real-time dense 3D reconstruction for monocular SLAM using single-view depth and gradient predictions. In Proceedings of the 2019 International Conference on Robotics and Automation, Montreal, QC, Canada, 20–24 May 2019; pp. 4068–4074.

20. Li, B.; Zhang, T.; Xia, T. Vehicle detection from 3D lidar using fully convolutional network. arXiv 2016, arXiv:1608.07916.

21. Yang, B.; Luo, W.; Urtasun, R. PIXOR: Real-time 3D object detection from point clouds. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7652–7660.

22. Shi, S.; Wang, X.; Li, H. PointRCNN: 3D object proposal generation and detection from point cloud. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–21 June 2019; pp. 770–779.

23. Yang, Y.; Chen, F.; Wu, F.; Zeng, D.; Ji, Y.; Jing, X. Multi-view semantic learning network for point cloud based 3D object detection. Neurocomputing 2020, 397, 477–485.

24. Qi, C.R.; Su, H.; Mo, K.; Guibas, L. PointNet: Deep learning on point sets for 3D classification and segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 652–660.

25. Qi, C.R.; Yi, L.; Su, H.; Guibas, L. PointNet++: Deep hierarchical feature learning on point sets in a metric space. In Proceedings of the Advances in Neural Information Processing Systems 2017, Long Beach, CA, USA, 4–9 December 2017; pp. 5099–5108.

26. Shi, S.; Guo, C.; Jiang, L.; Wang, Z.; Shi, J.; Wang, X. PV-RCNN: Point-Voxel Feature Set Abstraction for 3D Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2020, Seattle, WA, USA, 16–18 June 2020; pp. 10529–10538.

27. He, C.; Zeng, H.; Huang, J.; Hua, X.; Zhang, L. Structure Aware Single-stage 3D Object Detection from Point Cloud. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2020, Seattle, WA, USA, 16–18 June 2020; pp. 11873–11882.

28. Yang, Z.; Sun, Y.; Liu, S.; Shen, X.; Jia, J. STD: Sparse-to-dense 3D object detector for point cloud. In Proceedings of the IEEE International Conference on Computer Vision 2019, Seoul, Korea, 27 October–2 November 2019; pp. 1951–1960.

29. Zhou, Y.; Tuzel, O. VoxelNet: End-to-end learning for point cloud based 3D object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2018, Salt Lake City, UT, USA, 18–22 June 2018; pp. 4490–4499.

30. Kuang, H.; Wang, B.; An, J.; Zhang, M.; Zhang, Z. Voxel-FPN: Multi-scale voxel feature aggregation in 3D object detection from point clouds. Sensors 2020, 20, 704.

31. Yan, Y.; Mao, Y.; Li, B. SECOND: Sparsely embedded convolutional detection. Sensors 2018, 18, 3337.

32. Lang, A.H.; Vora, S.; Caesar, H. PointPillars: Fast encoders for object detection from point clouds. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2019, Long Beach, CA, USA, 15–21 June 2019; pp. 12697–12705.

33. Shi, S.; Wang, Z.; Shi, J.; Wang, X.; Li, H. From points to parts: 3D object detection from point cloud with part-aware and part-aggregation network. arXiv 2019, arXiv:1907.03670.

34. Geiger, A.; Lenz, P.; Urtasun, R. Are we ready for autonomous driving? The KITTI vision benchmark suite. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 3354–3361.

35. Qi, C.R.; Liu, W.; Wu, C.; Su, H.; Guibas, L. Frustum PointNets for 3D object detection from RGB-D data. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2018, Salt Lake City, UT, USA, 18–22 June 2018; pp. 918–927.

36. Liu, Z.; Tang, H.; Lin, Y.; Han, S. Point-Voxel CNN for efficient 3D deep learning. In Proceedings of the Advances in Neural Information Processing Systems 2019, Vancouver, BC, Canada, 10–12 December 2019; pp. 965–975.

| Group | Stage | 3D Detection | |||||

|---|---|---|---|---|---|---|---|

| 1 | 2 | 3 | 4 | Easy | Mod | Hard | |

| (1) | 87.82 | 77.41 | 75.94 | ||||

| (2) | √ | 88.47 | 78.68 | 76.10 | |||

| (3) | √ | 89.72 | 78.60 | 76.51 | |||

| (4) | √ | 89.25 | 78.92 | 76.91 | |||

| (5) | √ | 89.35 | 79.10 | 77.03 | |||

| (6) | √ | √ | 90.02 | 79.90 | 78.63 | ||

| (7) | √ | √ | √ | 90.80 | 80.19 | 78.73 | |

| (8) | √ | √ | √ | √ | 90.93 | 81.79 | 79.60 |

| | | 3D Detection (Easy) | 3D Detection (Mod) | 3D Detection (Hard) |
|---|---|---|---|---|
| 0.5 | 0.75 | 90.23 | 80.21 | 79.22 |
| 0.5 | 0.85 | 90.01 | 80.13 | 79.09 |
| 0.6 | 0.75 | 90.41 | 79.58 | 78.82 |
| 0.6 | 0.85 | 89.23 | 79.25 | 78.53 |
| 0.75 | 0.85 | 89.14 | 78.91 | 78.22 |

| Method | 3D Detection (Easy) | 3D Detection (Mod) | 3D Detection (Hard) |
|---|---|---|---|
| Traditional IoU | 88.41 | 80.21 | 78.21 |
| CCF IoU | 91.12 | 82.42 | 79.63 |

| Method | Easy | Mod | Hard |
|---|---|---|---|
| MV3D [7] | 71.29 | 62.28 | 56.56 |
| AVOD-FPN [3] | 84.41 | 74.44 | 68.65 |
| FPointNet [35] | 83.76 | 70.92 | 63.65 |
| SECOND [31] | 87.43 | 76.48 | 69.10 |
| CCFNet | 91.93 | 81.79 | 79.60 |

| Method | Easy | Mod | Hard |
|---|---|---|---|
| MV3D [7] | 86.55 | 78.10 | 76.67 |
| AVOD-FPN [3] | 84.41 | 74.44 | 68.65 |
| FPointNet [35] | 88.16 | 84.02 | 76.44 |
| SECOND [31] | 89.96 | 87.07 | 79.66 |
| CCFNet | 94.01 | 88.92 | 82.93 |

| Method | Car (Easy) | Car (Mod) | Car (Hard) | Pedestrian (Easy) | Pedestrian (Mod) | Pedestrian (Hard) | Cyclist (Easy) | Cyclist (Mod) | Cyclist (Hard) | Input Data |
|---|---|---|---|---|---|---|---|---|---|---|
| VoxelNet [29] | 81.97 | 65.46 | 62.85 | 57.86 | 53.42 | 48.87 | 67.17 | 47.65 | 45.11 | LIDAR |
| PointRCNN [22] | 85.94 | 75.76 | 68.32 | 49.43 | 41.78 | 38.63 | 73.93 | 59.60 | 53.59 | LIDAR |
| SECOND [31] | 83.13 | 73.66 | 66.20 | 51.07 | 42.56 | 37.29 | 70.51 | 53.85 | 46.90 | LIDAR |
| MV3D [7] | 71.09 | 62.35 | 55.12 | N/A | N/A | N/A | N/A | N/A | N/A | LIDAR + Image |
| AVOD [3] | 73.59 | 65.78 | 58.38 | 38.28 | 31.51 | 26.98 | 60.11 | 44.90 | 38.80 | LIDAR + Image |
| AVOD-FPN [3] | 81.94 | 71.88 | 66.38 | 46.35 | 39.00 | 36.58 | 59.97 | 46.12 | 42.36 | LIDAR + Image |
| FPointNet [35] | 81.20 | 70.39 | 62.19 | 51.21 | 44.89 | 40.23 | 71.96 | 56.77 | 50.39 | LIDAR + Image |
| MMF [10] | 88.40 | 77.43 | 70.22 | N/A | N/A | N/A | N/A | N/A | N/A | LIDAR + Image |
| CLOCs_SecCas [8] | 86.38 | 78.45 | 72.45 | N/A | N/A | N/A | N/A | N/A | N/A | LIDAR + Image |
| Ours | 89.07 | 78.62 | 73.33 | 53.92 | 46.58 | 42.14 | 73.65 | 57.26 | 52.42 | LIDAR + Image |

| Method | Car (Easy) | Car (Mod) | Car (Hard) | Pedestrian (Easy) | Pedestrian (Mod) | Pedestrian (Hard) | Cyclist (Easy) | Cyclist (Mod) | Cyclist (Hard) | Input Data |
|---|---|---|---|---|---|---|---|---|---|---|
| VoxelNet [29] | 89.35 | 79.26 | 77.39 | 46.13 | 40.74 | 38.11 | 66.70 | 54.76 | 50.55 | LIDAR |
| PointRCNN [22] | 84.32 | 75.42 | 67.86 | 85.94 | 75.76 | 68.32 | 89.47 | 85.68 | 79.10 | LIDAR |
| SECOND [31] | 83.13 | 73.66 | 66.20 | 51.07 | 42.56 | 37.29 | 70.51 | 53.85 | 46.90 | LIDAR |
| MV3D [7] | 86.02 | 76.90 | 68.49 | N/A | N/A | N/A | N/A | N/A | N/A | LIDAR + Image |
| AVOD [3] | 86.80 | 85.44 | 77.73 | 42.51 | 35.24 | 33.97 | 63.66 | 47.74 | 46.55 | LIDAR + Image |
| AVOD-FPN [3] | 88.53 | 83.79 | 77.90 | 50.66 | 44.75 | 40.83 | 62.39 | 52.02 | 47.87 | LIDAR + Image |
| F-PointNet [35] | 88.70 | 84.00 | 75.33 | 58.09 | 50.22 | 47.20 | 75.38 | 61.96 | 54.68 | LIDAR + Image |
| MMF [10] | 93.67 | 88.21 | 81.99 | N/A | N/A | N/A | N/A | N/A | N/A | LIDAR + Image |
| CLOCs_SecCas [8] | 91.16 | 88.23 | 82.63 | N/A | N/A | N/A | N/A | N/A | N/A | LIDAR + Image |
| Ours | 94.10 | 88.62 | 82.33 | 60.92 | 52.58 | 49.14 | 77.65 | 63.26 | 56.42 | LIDAR + Image |


© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Chen, Z.; Lin, Q.; Sun, J.; Feng, Y.; Liu, S.; Liu, Q.; Ji, Y.; Xu, H. Cascaded Cross-Modality Fusion Network for 3D Object Detection. Sensors 2020, 20, 7243. https://doi.org/10.3390/s20247243
