A Gesture Elicitation Study of Nose-Based Gestures

Abstract

1. Introduction

- Dual task interaction: a primary task is ongoing (e.g., a conversation during a meeting) when a secondary task (e.g., a phone call) occurs, potentially interrupting the primary one and requiring some discreet interaction to minimize interference with it. For example, a Rubbing gesture discreetly ignores a phone call without disturbing the conversation too much. This is inspired by dual task performance, a test for assessing cognitive workload in psychology [13].

- Eyes-free and/or touch-free interaction [14]: a task should be carried out by interacting with a system without requiring any visual attention or physical touch. Gestures are discreetly performed on the face, an always-accessible area in principle.

- A gesture elicitation study conducted with two groups of participants, one composed of 12 females and another one with 12 males, to determine their user-defined, preferred nose-based gestures, as detected by a sensor [7], for executing Internet-of-Things (IoT) actions.

- A taxonomy of gestures, built from criteria for classifying the elicited gestures, and a consensus set of final gestures, formed from agreement scores and rates computed for all actions.

- A set of design guidelines which provide researchers and practitioners with some guidance on how to design a user interface exploiting nose-based gestures.

- An inferential statistical analysis testing the gender effect on preferred gestures.

2. Related Work

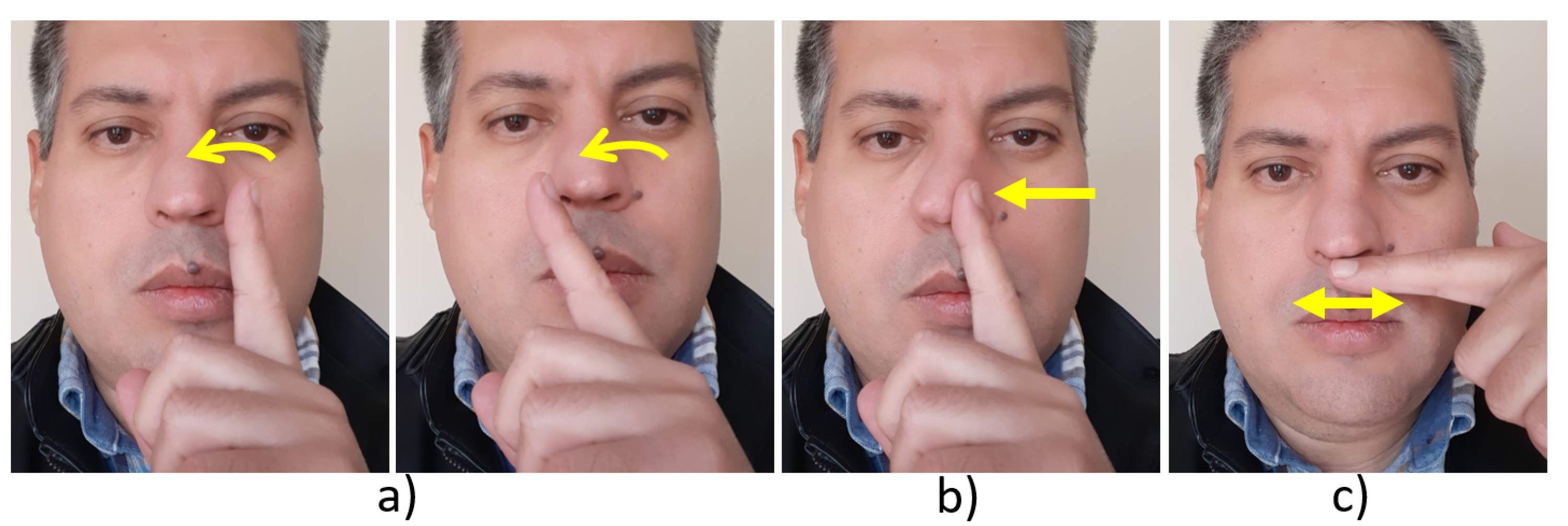

3. Experiment

3.1. Participants

3.2. Apparatus

3.3. Procedure

3.3.1. Pre-Test Phase

3.3.2. Test Phase

3.3.3. Post-Test Phase

3.4. Design

3.5. Quantitative and Qualitative Measures

- Participants’ Creativity was evaluated using an online creativity instrument. The test returns a score between 0 and 100, where higher scores denote more creativity. The score is calculated from a set of responses grouped into eight categories: (1) abstraction of concepts from the presentation of ideas; (2) connection between things/elements or objects without an apparent link; (3) perspective shift in terms of space, time, and other people; (4) curiosity to change and improve things/elements and situations accepted as the norm; (5) boldness to push boundaries beyond the normally accepted conventions; (6) paradox, the ability to accept and work with concepts that are contradictory; (7) complexity, the ability to operate with a large amount of information; and (8) persistence to derive stronger solutions even when good ones exist.

- Participants’ fine motor skills were measured with a standard motor test of the NEPSY (a developmental NEuroPSYchological assessment) test batteries [56]. The test consists of touching each fingertip with the thumb of the same hand eight times in a row. Higher motor skills are reflected in less time to perform this task.

- Thinking-Time measures the time, in seconds, elapsed to elicit any gesture for a referent.

- Goodness-of-Fit represents participants’ subjective assessment, as a rating between 1 and 10, of their confidence about how well the proposed gestures fit the referents. Participants could elicit their two gestures in any order, each with its own Goodness-of-Fit rating.
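The per-gesture measures above (Thinking-Time and Goodness-of-Fit) could be recorded roughly as follows. This is a minimal sketch, not the study's actual tooling: the names `Trial` and `mean_thinking_time`, the record layout, and the sample values are all hypothetical. Only the units and ranges (seconds, ratings from 1 to 10) come from the text.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class Trial:
    """One elicited gesture for one referent (hypothetical record layout)."""
    participant: str
    referent: str
    gesture: str
    thinking_time_s: float  # seconds elapsed before the gesture was proposed
    goodness_of_fit: int    # subjective rating between 1 and 10

    def __post_init__(self):
        # Reject values outside the ranges given in the measure definitions.
        if self.thinking_time_s < 0:
            raise ValueError("thinking time cannot be negative")
        if not 1 <= self.goodness_of_fit <= 10:
            raise ValueError("Goodness-of-Fit must lie between 1 and 10")


def mean_thinking_time(trials, referent):
    """Average Thinking-Time over all trials recorded for one referent."""
    times = [t.thinking_time_s for t in trials if t.referent == referent]
    return mean(times)


# Illustrative values only; they are not data from the study.
trials = [
    Trial("P1", "Turn TV on", "center tap", 6.0, 8),
    Trial("P2", "Turn TV on", "double tap", 8.0, 7),
]
print(mean_thinking_time(trials, "Turn TV on"))
```

Validating the rating range at construction time keeps out-of-range entries from silently skewing later averages.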

4. Results and Discussion

- Dimension: the cardinality of the gesture space: 0D (point), 1D (line), 2D (plane), 3D (space).

- Laterality: which side(s) have been used to issue the gesture, unilateral (when a gesture is elicited only on one side of the dorsum nasi) or central (if the gesture is issued on the edge).

- Gesture motion: the intensity of the movement: stroke (as a snap or a hit), static (if performed on a single location), or dynamic (if the speed or movement changes over time).

- Nature: describes the meaning of a gesture with four values adapted from [8]: symbolic gestures depict commonly accepted symbols conveying information, such as emblems and cultural gestures, e.g., the Call me gesture performed with the thumb and little finger stretched out, or swiping the index finger from left to right; metaphorical gestures give shape to an idea or concept, such as using the thumb to press a button on an imaginary remote control to turn on/off the TV set; abstract gestures have no symbolic or metaphorical connections to their referents; physical gestures refer to the real world physics.

- Number of fingers: how many fingers were involved.

- Finger type: type of finger involved in the elicited gesture.

- Path type: direct, flexible, without any particular path.

- Movement axis: stationary, horizontal, vertical, or composed.

- Area: above the nose, under the nose, left part of the dorsum nasi, right part, center, multiple areas.
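The classification criteria above can be encoded as a small typed record, which makes it easy to tabulate elicited gestures per criterion. The sketch below is one possible encoding under stated assumptions: all class and field names are hypothetical, and the example classification of a center tap is illustrative rather than a result of the study.

```python
from dataclasses import dataclass
from enum import Enum


class Dimension(Enum):
    POINT = 0  # 0D
    LINE = 1   # 1D
    PLANE = 2  # 2D
    SPACE = 3  # 3D


class Laterality(Enum):
    UNILATERAL = "unilateral"
    CENTRAL = "central"


class Motion(Enum):
    STROKE = "stroke"
    STATIC = "static"
    DYNAMIC = "dynamic"


class Nature(Enum):
    SYMBOLIC = "symbolic"
    METAPHORICAL = "metaphorical"
    ABSTRACT = "abstract"
    PHYSICAL = "physical"


class Axis(Enum):
    STATIONARY = "stationary"
    HORIZONTAL = "horizontal"
    VERTICAL = "vertical"
    COMPOSED = "composed"


@dataclass(frozen=True)
class GestureClassification:
    """One elicited gesture described along the nine criteria."""
    dimension: Dimension
    laterality: Laterality
    motion: Motion
    nature: Nature
    number_of_fingers: int
    finger_type: str  # e.g. "index"
    path_type: str    # "direct", "flexible", or "none"
    axis: Axis
    area: str         # e.g. "center", "above the nose"


# Illustrative classification of a center tap (an assumption, not study data).
center_tap = GestureClassification(
    Dimension.POINT, Laterality.CENTRAL, Motion.STROKE, Nature.ABSTRACT,
    1, "index", "direct", Axis.STATIONARY, "center",
)
print(center_tap.dimension.name, center_tap.area)
```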

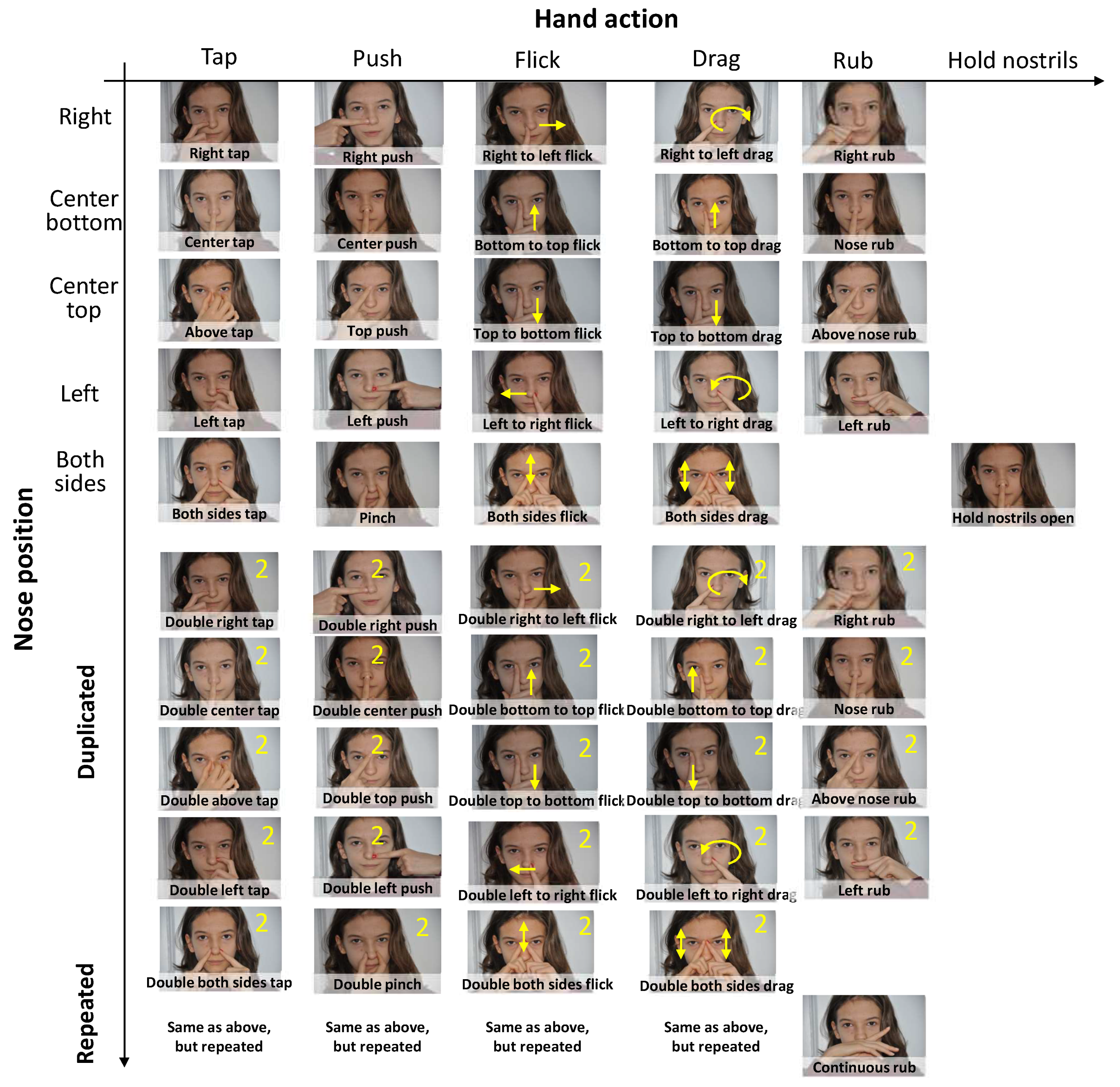

4.1. Gesture Classification

- Tap: tap any side of the dorsum nasi with the back or the top of one or several fingers with one hand (1.0), on the center (1.1), with two hands on both sides of the nose (1.2), repeated center tap (1.3), right tap (1.5), left tap (1.6).

- Double tap: tap two times on the center (2.1), both sides of the nose (2.2), right (2.5), left (2.6), above the nose (2.7).

- Triple tap: tap three times in a row on the center (3.1), right (3.5), left (3.6), above (3.7).

- Flicking: from right to left (4.5), from left to right (4.6), from the top of the dorsum nasi to the bottom (4.7).

- Pushing: center push (5.1), right push (5.5), left push (5.6).

- Rubbing: rub once on right/left side (6.5/6.6), above the nose (6.7), continuous rub on the right/left (8.8/8.9).

- Double rubbing: repeat rubbing two times in a row.

- Triple rubbing: repeat rubbing three times in a row.

- Drag: stays pressed from the initial point to the final one with the right (9.1) or left hand (9.2), from bottom to top (9.3) or vice versa (9.4), from right to left (9.5) or inverse (9.6).

- Double drag: on the right (10.5), on the left (10.6), from bottom to top (10.7), from top to bottom (10.8).

- Triple drag: drag repeated three times in a row.

- Quadruple drag: drag repeated four times in a row.

- Pinch: when two fingers come far from each other.

- Double pinch: when two fingers come far, close to each other.

- Circle: draw a circle on a facet.

- Double flicking: rapid unilinear movement repeated twice.

- Hold nostrils open: as defined.

- Push nose up: push the nose upward with a finger.

- Wrinkle: pulling up the nose without hands.

- Double wrinkle: repeat the wrinkle two times.

- Pull on nose: pull the nose with a finger.

- Finger in nose: in the right/left nostril (22.5/22.6).

- Sniffing: right part (23.5), left part (23.6).
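The numeric codes in the list above appear to follow a `category.variant` pattern in which the integer part is the position of the category in the list (Tap is 1, Drag is 9, Sniffing is 23). Under that assumption, which is inferred from the codes shown and not stated explicitly, a code can be split as sketched below. The function name `parse_gesture_code` is hypothetical, and the variant number is left uninterpreted because its meaning differs between categories (for example, .5 denotes the right side for taps but a right-to-left movement for flicking).

```python
# Category names in the order in which they are listed above.
CATEGORIES = [
    "Tap", "Double tap", "Triple tap", "Flicking", "Pushing", "Rubbing",
    "Double rubbing", "Triple rubbing", "Drag", "Double drag", "Triple drag",
    "Quadruple drag", "Pinch", "Double pinch", "Circle", "Double flicking",
    "Hold nostrils open", "Push nose up", "Wrinkle", "Double wrinkle",
    "Pull on nose", "Finger in nose", "Sniffing",
]


def parse_gesture_code(code: str):
    """Split a code such as "22.5" into (category name, variant number)."""
    # Split on the dot as text so that "10.5" is not misread as a float.
    major, _, minor = code.partition(".")
    index = int(major)
    if not 1 <= index <= len(CATEGORIES):
        raise ValueError(f"unknown gesture category: {major}")
    variant = int(minor) if minor else 0
    return CATEGORIES[index - 1], variant


print(parse_gesture_code("22.5"))
print(parse_gesture_code("10.6"))
```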

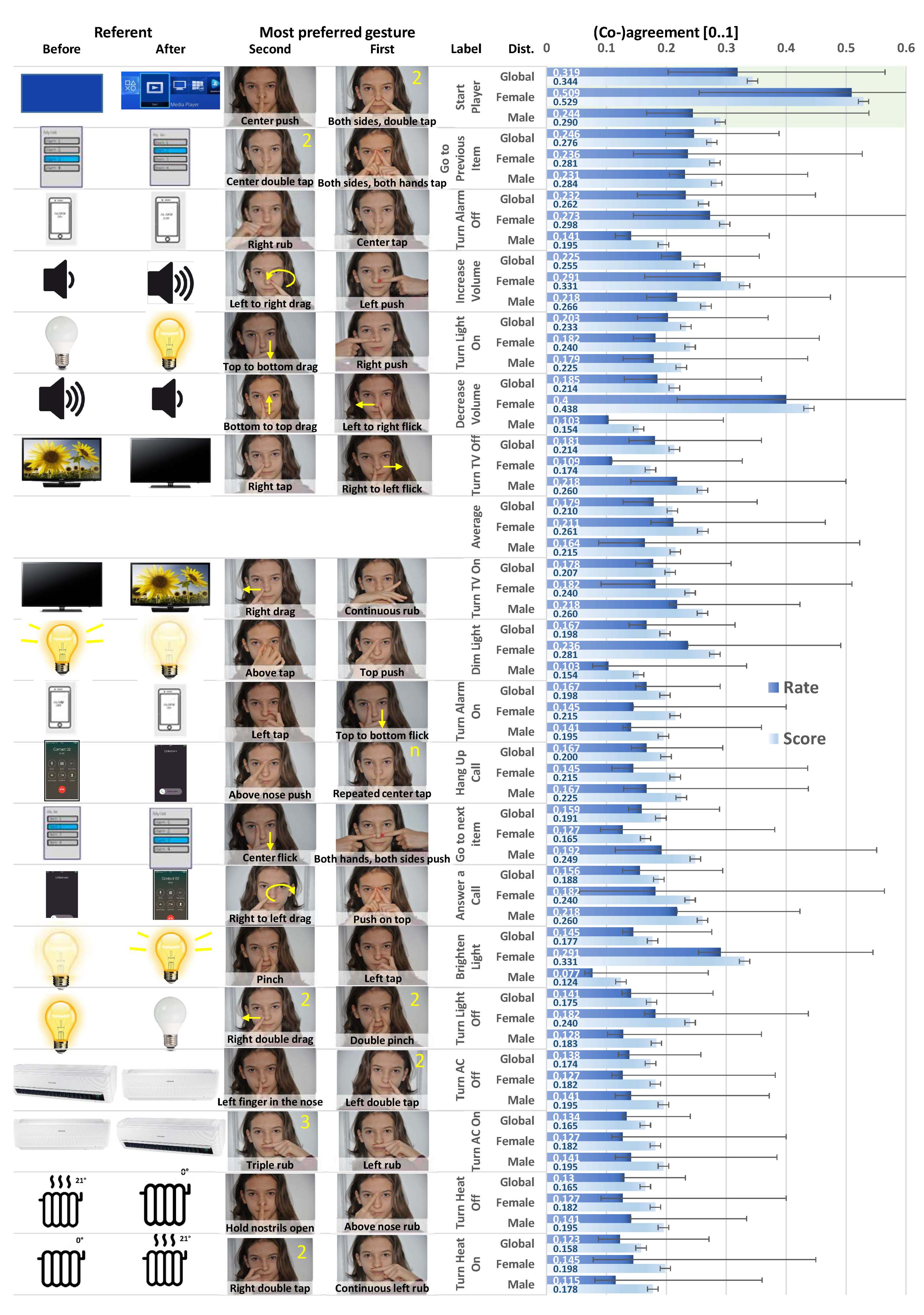

4.2. Agreement Scores and Co-Agreement Rates

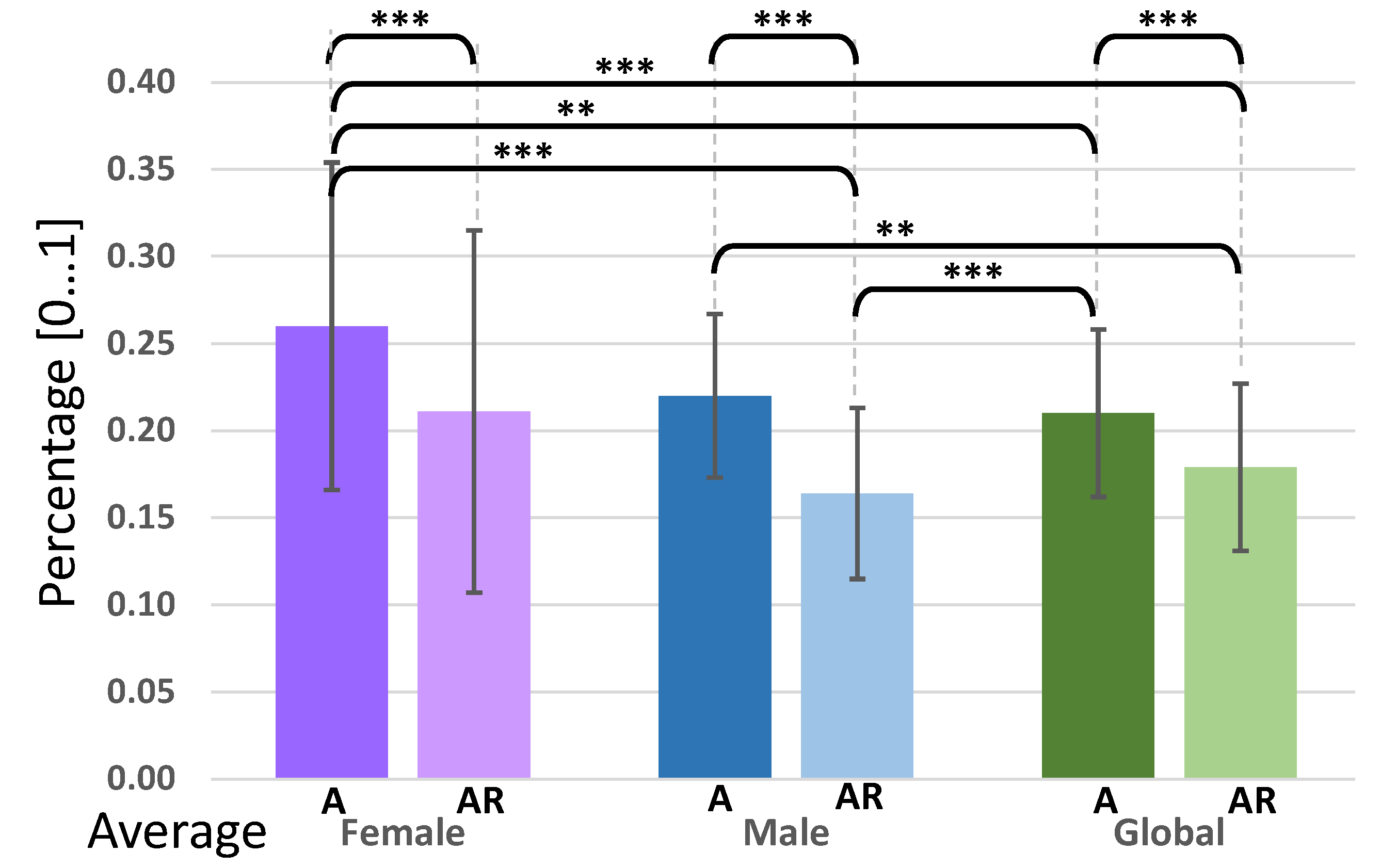
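For reference, the agreement score A(r) as used in [8] and the agreement rate AR(r) formalized in [51] can be computed from the list of gestures proposed for one referent, as sketched below. The function names and the sample proposals are hypothetical; the exact computation pipeline used for this study is not shown in this section. For a referent r with proposals P partitioned into groups P_i of identical gestures, A(r) is the sum of (|P_i|/|P|)² and AR(r) = |P|/(|P|−1) · A(r) − 1/(|P|−1).

```python
from collections import Counter


def agreement_score(proposals):
    """A(r): sum over groups of identical proposals of (|Pi| / |P|) ** 2."""
    total = len(proposals)
    if total == 0:
        raise ValueError("at least one proposal is required")
    return sum((size / total) ** 2 for size in Counter(proposals).values())


def agreement_rate(proposals):
    """AR(r): A(r) rescaled so that total disagreement yields 0."""
    total = len(proposals)
    if total < 2:
        raise ValueError("at least two proposals are required")
    return total / (total - 1) * agreement_score(proposals) - 1 / (total - 1)


# Illustrative proposals for a single referent; not data from the study.
proposals = ["center tap"] * 3 + ["double tap"] * 2 + ["wrinkle"]
print(round(agreement_score(proposals), 3), round(agreement_rate(proposals), 3))
```

Unlike A(r), which never drops below 1/|P|, AR(r) is 0 when all proposals differ and 1 when all are identical, which makes values comparable across referents with different numbers of proposals.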

4.3. Further Analysis and Gender Effect

4.3.1. Gender

4.3.2. Type and Dimension

4.3.3. Area

4.3.4. Pairs of Commands

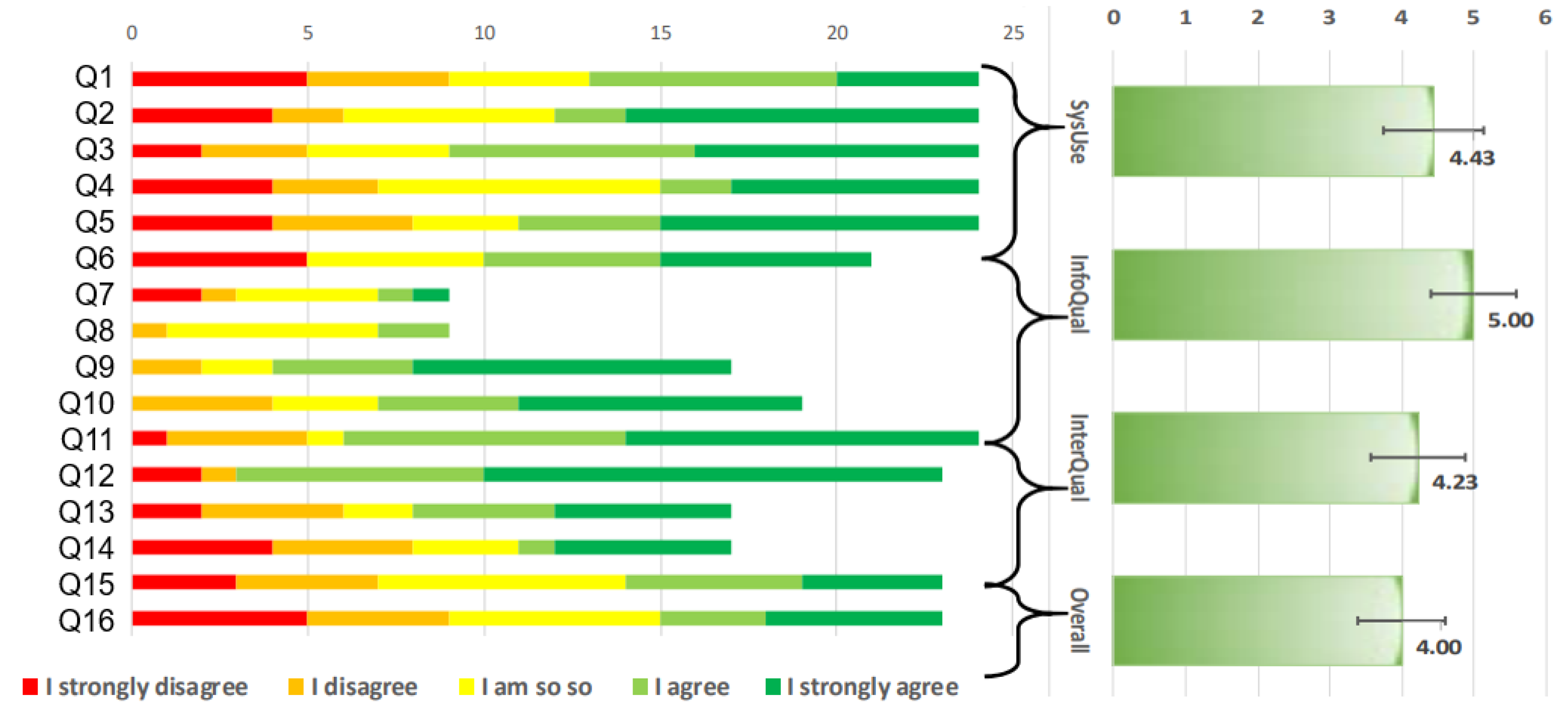

4.3.5. User Satisfaction with Nose Interaction

4.3.6. Nose-Based Gesture Recognition

5. Design Guidelines

- Match the gesture dimension to task dimension. Used referents cover 0D and 1D tasks. Participants prefer gestures whose dimension is consistent with the task dimension, such as tap for activate/deactivate, tap to select, swipe to scroll, and pinch and reverse pinch to shrink or enlarge an object. There is no need to add any extra dimension to the task dimension.

- Prefer gestures with low dimension. From all elicited gestures, the number of preferred gestures dramatically decreases with their dimension, to the point that probably only 0D and 1D gestures are required as the minimum. Higher dimension gestures always came afterwards.

- Prefer larger areas over small ones. Larger areas (e.g., the dorsum nasi) are adequate for 1D gestures such as scrolling or swiping, while small areas (e.g., the ala, the apex or the philtrum) are available for 0D gestures.

- Favor repetition as a pattern over location. When a gesture is repeated, the repetition factor replaces the fine-grained distinction between individual gestures belonging to the same category. Participants tend to rely less frequently on the physical areas, such as changing the face of the dorsum nasi or preferring the apex.

- Favor centrality instead of laterality. Gestures that are independent of any laterality are easier to produce and remember than asymmetric ones. For instance, swiping on the dorsum nasi is easier than on any face.

- Use location only as a last factor. Location could distinguish between gestures, but only as the last refining factor.

6. Other Measures for Elicited Gestures

7. Conclusions and Future Work

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| A(r) | Agreement score |
| AR(r) | Agreement rate |
| GES | Gesture Elicitation Study |
| EOG | Electrooculography |
| IoT | Internet-of-Things |

References

- Aliofkhazraei, M.; Ali, N. Recent Developments in Miniaturization of Sensor Technologies and Their Applications. In Comprehensive Materials Processing; Hashmi, S., Batalha, G.F., Van Tyne, C.J., Yilbas, B., Eds.; Elsevier: Oxford, UK, 2014; pp. 245–306. [Google Scholar] [CrossRef]

- Benitez-Garcia, G.; Haris, M.; Tsuda, Y.; Ukita, N. Finger Gesture Spotting from Long Sequences Based on Multi-Stream Recurrent Neural Networks. Sensors 2020, 20, 528. [Google Scholar] [CrossRef] [PubMed]

- Abraham, L.; Urru, A.; Norman, N.; Wilk, M.P.; Walsh, M.J.; O’Flynn, B. Hand Tracking and Gesture Recognition Using Lensless Smart Sensors. Sensors 2018, 18, 2834. [Google Scholar] [CrossRef] [PubMed]

- Zengeler, N.; Kopinski, T.; Handmann, U. Hand Gesture Recognition in Automotive Human-Machine Interaction Using Depth Cameras. Sensors 2019, 19, 59. [Google Scholar] [CrossRef] [PubMed]

- Luo, X.; Wu, X.; Chen, L.; Zhao, Y.; Zhang, L.; Li, G.; Hou, W. Synergistic Myoelectrical Activities of Forearm Muscles Improving Robust Recognition of Multi-Fingered Gestures. Sensors 2019, 19, 610. [Google Scholar] [CrossRef]

- Lee, D.; Oakley, I.R.; Lee, Y. Bodily Input for Wearables: An Elicitation Study. In Proceedings of the International Conference on HCI Korea 2016 (HCI Korea ’16), Jeongseon, Korea, 27–29 January 2016; pp. 283–285. [Google Scholar]

- Lee, J.; Yeo, H.S.; Starner, T.; Quigley, A.; Kunze, K.; Woo, W. Automated Data Gathering and Training Tool for Personalized Itchy Nose. In Proceedings of the 9th Augmented Human International Conference (AH ’18), Seoul, Korea, 7–9 February 2018; pp. 43:1–43:3. [Google Scholar]

- Wobbrock, J.O.; Morris, M.R.; Wilson, A.D. User-defined gestures for surface computing. In Proceedings of the Conference on Human Factors in Computing Systems (CHI ’09), Boston, MA, USA, 4–9 April 2009; pp. 1083–1092. [Google Scholar]

- Polacek, O.; Grill, T.; Tscheligi, M. NoseTapping: What else can you do with your nose? In Proceedings of the 12th International Conference on Mobile and Ubiquitous Multimedia (MUM’13), Lulea, Sweden, 2–5 December 2013; pp. 32:1–32:9. [Google Scholar]

- Magrofuoco, N.; Pérez-Medina, J.L.; Roselli, P.; Vanderdonckt, J.; Villarreal, S. Eliciting Contact-Based and Contactless Gestures With Radar-Based Sensors. IEEE Access 2019, 7, 176982–176997. [Google Scholar] [CrossRef]

- Horcher, A.M. Hitting Authentication on the Nose: Using the Nose for Input to Smartphone Security. In Proceedings of the Usenix Symposium on Usable Privacy and Security (SOUPS ’14), Menlo Park, CA, USA, 9–11 July 2014. [Google Scholar]

- Cooperrider, K.; Núñez, R. Nose-pointing: Notes on a facial gesture of Papua New Guinea. Gesture 2012, 12, 103–129. [Google Scholar] [CrossRef]

- Sanders, A. Dual Task Performance. In International Encyclopedia of the Social & Behavioral Sciences; Smelser, N.J., Baltes, P.B., Eds.; Pergamon: Oxford, UK, 2001; pp. 3888–3892. [Google Scholar] [CrossRef]

- Oakley, I.; Park, J.S. Designing Eyes-Free Interaction. In Haptic and Audio Interaction Design; Oakley, I., Brewster, S., Eds.; Springer: Berlin/Heidelberg, Germany, 2007; pp. 121–132. [Google Scholar]

- Pivato, M. Condorcet meets Bentham. J. Math. Econ. 2015, 59, 58–65. [Google Scholar] [CrossRef]

- Emerson, P. The original Borda count and partial voting. Soc. Choice Welfare 2013, 40, 353–358. [Google Scholar] [CrossRef]

- Eston, P. Anatomy and Physiology. Chapter 22.1 Organs and Structures of the Respiratory System. 2010. Available online: https://open.oregonstate.education/aandp/chapter/22-1-organs-and-structures-of-the-respiratory-system/ (accessed on 1 October 2020).

- Harshith, C.; Shastry, K.R.; Ravindran, M.; Srikanth, M.V.V.N.S.; Lakshmikhanth, N. Survey on Various Gesture Recognition Techniques for Interfacing Machines Based on Ambient Intelligence. Int. J. Comput. Sci. Eng. Surv. 2010, 1, 31–42. [Google Scholar] [CrossRef]

- Henry, T.R.; Hudson, S.E.; Yeatts, A.K.; Myers, B.A.; Feiner, S. A Nose Gesture Interface Device: Extending Virtual Realities. In Proceedings of the 4th Annual ACM Symposium on User Interface Software and Technology, UIST ’91, Hilton Head Island, SC, USA, 11–13 November 1991; pp. 65–68. [Google Scholar] [CrossRef]

- Rico, J.; Brewster, S. Usable gestures for mobile interfaces: Evaluating social acceptability. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (CHI ’10), Atlanta, GA, USA, 10–15 April 2010; pp. 887–896. [Google Scholar]

- Freeman, E.; Griffiths, G.; Brewster, S.A. Rhythmic micro-gestures: Discreet interaction on-the-go. In Proceedings of the 19th ACM International Conference on Multimodal Interaction (ICMI’17), Glasgow, UK, 13–17 November 2017; pp. 115–119. [Google Scholar]

- Zarek, A.; Wigdor, D.; Singh, K. SNOUT: One-handed use of capacitive touch devices. In Proceedings of the International Working Conference on Advanced Visual Interfaces (AVI’12), Capri Island, Italy, 22–25 May 2012; pp. 140–147. [Google Scholar]

- Ogata, M.; Imai, M. SkinWatch: Skin Gesture Interaction for Smart Watch. In Proceedings of the 6th Augmented Human International Conference, AH ’15, Marina Bay Sands, Singapore, 9–11 March 2015; pp. 21–24. [Google Scholar] [CrossRef]

- Wen, H.; Ramos Rojas, J.; Dey, A.K. Serendipity: Finger Gesture Recognition Using an Off-the-Shelf Smartwatch. In Proceedings of the 2016 CHI Conference on Human Factors in Computing Systems, CHI ’16, San Jose, CA, USA, 7–12 May 2016; pp. 3847–3851. [Google Scholar] [CrossRef]

- McIntosh, J.; Marzo, A.; Fraser, M. SensIR: Detecting Hand Gestures with a Wearable Bracelet Using Infrared Transmission and Reflection. In Proceedings of the 30th Annual ACM Symposium on User Interface Software and Technology, UIST ’17, Quebec, QC, Canada, 22–25 October 2017; pp. 593–597. [Google Scholar] [CrossRef]

- Wobbrock, J.O.; Aung, H.H.; Rothrock, B.; Myers, B.A. Maximizing the guessability of symbolic input. In Proceedings of the CHI ’05 Extended Abstracts on Human Factors in Computing Systems (CHI EA ’05), Portland, OR, USA, 2–7 April 2005; pp. 1869–1872. [Google Scholar]

- Calvary, G.; Coutaz, J.; Thevenin, D.; Limbourg, Q.; Bouillon, L.; Vanderdonckt, J. A unifying reference framework for multi-target user interfaces. Interact. Comput. 2003, 15, 289–308. [Google Scholar] [CrossRef]

- Vatavu, R.D. User-defined gestures for free-hand TV control. In Proceedings of the 10th European Conference on Interactive tv and Video EuroITV ’12, Berlin, Germany, 4–6 July 2012; pp. 45–48. [Google Scholar]

- Ruiz, J.; Li, Y.; Lank, E. User-defined motion gestures for mobile interaction. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (CHI ’11), Vancouver, BC, Canada, 7–12 May 2011; pp. 197–206. [Google Scholar]

- Mauney, D.; Howarth, J.; Wirtanen, A.; Capra, M. Cultural similarities and differences in user-defined gestures for touchscreen user interfaces. In Proceedings of the 28th International Conference on Human Factors in Computing Systems (CHI ’10), Atlanta, GA, USA, 10–15 April 2010; pp. 4015–4020. [Google Scholar]

- Morris, M.R.; Wobbrock, J.O.; Wilson, A.D. Understanding Users’ Preferences for Surface Gestures. In Proceedings of the Graphics Interface 2010, Canadian Information Processing Society (GI ’10), Toronto, ON, Canada, 31 May–2 June 2010; pp. 261–268. [Google Scholar]

- Akpan, I.; Marshall, P.; Bird, J.; Harrison, D. Exploring the effects of space and place on engagement with an interactive installation. In Proceedings of the 28th International Conference on Human Factors in Computing Systems (CHI ’13), Paris, France, 27 May–2 June 2013; pp. 2213–2222. [Google Scholar]

- Dong, H.; Danesh, A.; Figueroa, N.; El Saddik, A. An elicitation study on gesture preferences and memorability toward a practical hand-gesture vocabulary for smart televisions. IEEE Access 2015, 3, 543–555. [Google Scholar] [CrossRef]

- Yim, D.; Loison, G.N.; Fard, F.H.; Chan, E.; McAllister, A.; Maurer, F. Gesture-Driven Interactions on a Virtual Hologram in Mixed Reality. In Proceedings of the 2016 ACM Companion on Interactive Surfaces and Spaces; ISS’16 Companion. Association for Computing Machinery: New York, NY, USA, 2016; pp. 55–61. [Google Scholar] [CrossRef]

- Berthellemy, M.; Cayez, E.; Ajem, M.; Bailly, G.; Malacria, S.; Lecolinet, E. SpotPad, LociPad, ChordPad and InOutPad: Investigating gesture-based input on touchpad. In Proceedings of the 27th Conference on L’Interaction Homme-Machine IHM ’15, Toulouse, France, 27–30 October 2015; ACM: New York, NY, USA, 2015; pp. 4:1–4:8. [Google Scholar]

- Serrano, M.; Lecolinet, E.; Guiard, Y. Bezel-Tap gestures: Quick activation of commands from sleep mode on tablets. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, CHI ’13, Paris, France, 27 April–2 May 2013; ACM: New York, NY, USA, 2013; pp. 3027–3036. [Google Scholar]

- Seyed, T.; Burns, C.; Costa Sousa, M.; Maurer, F.; Tang, A. Eliciting usable gestures for multi-display environments. In Proceedings of the 2012 ACM International Conference on Interactive Tabletops and Surfaces; ACM: New York, NY, USA, 2012; pp. 41–50. [Google Scholar]

- Kray, C.; Nesbitt, D.; Dawson, J.; Rohs, M. User-defined gestures for connecting mobile phones, public displays, and tabletops. In Proceedings of the 12th International Conference on Human computer Interaction with Mobile Devices and Services, MobileHCI ’10, Lisbon, Portugal, 7–10 September 2010; ACM: New York, NY, USA, 2010; pp. 239–248. [Google Scholar]

- Villarreal-Narvaez, S.; Vanderdonckt, J.; Vatavu, R.D.; Wobbrock, J.O. A Systematic Review of Gesture Elicitation Studies: What Can We Learn from 216 Studies? In Proceedings of the 2020 ACM Designing Interactive Systems Conference; DIS ’20. Association for Computing Machinery: New York, NY, USA, 2020; pp. 855–872. [Google Scholar] [CrossRef]

- Bostan, I.; Buruk, O.T.; Canat, M.; Tezcan, M.O.; Yurdakul, C.; Göksun, T.; Özcan, O. Hands as a controller: User preferences for hand specific on-skin gestures. In Proceedings of the 2017 Conference on Designing Interactive Systems, Edinburgh, UK, 10–14 June 2017; pp. 1123–1134. [Google Scholar]

- Chan, E.; Seyed, T.; Stuerzlinger, W.; Yang, X.D.; Maurer, F. User elicitation on single-hand microgestures. In Proceedings of the 2016 Conference on Human Factors in Computing Systems, San Jose, CA, USA, 7–12 May 2016; pp. 3403–3414. [Google Scholar]

- Havlucu, H.; Ergin, M.Y.; Bostan, İ.; Buruk, O.T.; Göksun, T.; Özcan, O. It made more sense: Comparison of user-elicited on-skin touch and freehand gesture sets. In International Conference on Distributed, Ambient, and Pervasive Interactions; Springer International Publishing: Cham, Switzerland, 2017; pp. 159–171. [Google Scholar]

- Liu, M.; Nancel, M.; Vogel, D. Gunslinger: Subtle arms-down mid-air interaction. In Proceedings of the 28th Annual ACM Symposium on User Interface Software & Technology, Charlotte, NC, USA, 8–11 November 2015; pp. 63–71. [Google Scholar]

- Mardanbegi, D.; Hansen, D.W.; Pederson, T. Eye-based head gestures. In Proceedings of the Symposium on Eye Tracking Research and Applications, ETRA ’12, Santa Barbara, CA, USA, 28–30 March 2012; ACM: New York, NY, USA, 2012; pp. 139–146. [Google Scholar]

- Rodriguez, I.B.; Marquardt, N. Gesture Elicitation Study on How to Opt-in & Opt-out from Interactions with Public Displays. In Proceedings of the 2017 ACM International Conference on Interactive Surfaces and Spaces, ISS ’17, Brighton, UK, 17–20 October 2017; ACM: New York, NY, USA, 2017; pp. 32–41. [Google Scholar]

- Vanderdonckt, J.; Magrofuoco, N.; Kieffer, S.; Pérez, J.; Rase, Y.; Roselli, P.; Villarreal, S. Head and Shoulders Gestures: Exploring User-Defined Gestures with Upper Body. In Design, User Experience, and Usability. User Experience in Advanced Technological Environments; Marcus, A., Wang, W., Eds.; Springer International Publishing: Cham, Switzerland, 2019; pp. 192–213. [Google Scholar]

- Silpasuwanchai, C.; Ren, X. Designing concurrent full-body gestures for intense gameplay. Int. J. Hum. Comput. Stud. 2015, 80, 1–13. [Google Scholar] [CrossRef]

- Vo, D.B.; Lecolinet, E.; Guiard, Y. Belly gestures: Body centric gestures on the abdomen. In Proceedings of the 8th Nordic Conference on Human-Computer Interaction: Fun, Fast, Foundational, Helsinki, Finland, 26–30 October 2014; pp. 687–696. [Google Scholar]

- Seipp, K.; Verbert, K. From Inaction to Interaction: Concept and Application of the Null Gesture. In Proceedings of the 2016 CHI Conference Extended Abstracts on Human Factors in Computing Systems; CHI EA ’16. Association for Computing Machinery: New York, NY, USA, 2016; pp. 525–540. [Google Scholar] [CrossRef]

- Morris, D.; Collett, P.; Marsh, P.; O’Shaughnessay, M. Gestures: Their Origins and Distribution; Cape London: London, UK, 1979. [Google Scholar]

- Vatavu, R.D.; Wobbrock, J.O. Formalizing agreement analysis for elicitation studies: New measures, significance test, and toolkit. In Proceedings of the 33rd ACM Conference on Human Factors in Computing Systems, CHI ’15, Seoul, Korea, 18–23 April 2015; ACM: New York, NY, USA, 2015; pp. 1325–1334. [Google Scholar]

- Gheran, B.F.; Vanderdonckt, J.; Vatavu, R.D. Gestures for Smart Rings: Empirical Results, Insights, and Design Implications. In Proceedings of the 2018 Designing Interactive Systems Conference; DIS ’18. Association for Computing Machinery: New York, NY, USA, 2018; pp. 623–635. [Google Scholar] [CrossRef]

- Kendon, A. Gesture: Visible Action as Utterance; Cambridge University Press: Cambridge, UK, 2004. [Google Scholar]

- Dornyei, Z. Research Methods in Applied Linguistics; Oxford University Press: Oxford, UK, 2007. [Google Scholar]

- Likert, R. A technique for the measurement of attitudes. Arch. Psychol. 1932, 22, 5–55. [Google Scholar]

- Korkman, M. NEPSY: A Developmental Neuropsychological Assessment. Test Mater. Man. 1998, 2, 375–392. [Google Scholar] [CrossRef]

- Lewis, J.R. IBM computer usability satisfaction questionnaires: Psychometric evaluation and instructions for use. Int. J. Hum. Comput. Interact. 1995, 7, 57–78. [Google Scholar] [CrossRef]

- Lewis, J.R. Sample sizes for usability tests: Mostly math, not magic. Interactions 2006, 13, 29–33. [Google Scholar]

- Liang, H.N.; Williams, C.; Semegen, M.; Stuerzlinger, W.; Irani, P. User-defined surface+motion gestures for 3D manipulation of objects at a distance through a mobile device. In Proceedings of the 10th Asia Pacific Conference on Computer Human Interaction, APCHI ’12, Matsue-City, Shimane, Japan, 28–31 August 2012; ACM: New York, NY, USA, 2012; pp. 299–308. [Google Scholar]

- Lee, J.; Yeo, H.S.; Dhuliawala, M.; Akano, J.; Shimizu, J.; Starner, T.; Quigley, A.; Woo, W.; Kunze, K. Itchy nose: Discreet gesture interaction using EOG sensors in smart eyewear. In Proceedings of the 2017 ACM International Symposium on Wearable Computers, Maui, HI, USA, 13–15 September 2017; pp. 94–97. [Google Scholar]

- Ho, T.K. Random decision forests. In Proceedings of the 3rd International Conference on Document Analysis and Recognition, Montreal, QC, Canada, 14–16 August 1995; Volume 1, pp. 278–282. [Google Scholar] [CrossRef]

- Martinez, B.; Valstar, M.F.; Jiang, B.; Pantic, M. Automatic Analysis of Facial Actions: A Survey. IEEE Trans. Affect. Comput. 2019, 10, 325–347. [Google Scholar] [CrossRef]

- Ahmad, F.; Najam, A.; Ahmed, Z. Image-based face detection and recognition: “State of the art”. arXiv 2013, arXiv:1302.6379. [Google Scholar]

- Shah, M.N.; Rathod, M.R.; Agravat, M.J. A survey on Human Computer Interaction Mechanism Using Finger Tracking. Int. J. Comput. Trends Technol. 2014, 7, 174–177. [Google Scholar] [CrossRef]

- Guo, G.; Wang, H.; Yan, Y.; Zheng, J.; Li, B. A fast face detection method via convolutional neural network. Neurocomputing 2020, 395, 128–137. [Google Scholar] [CrossRef]

- Zhang, S.; Chi, C.; Lei, Z.; Li, S.Z. Refineface: Refinement neural network for high performance face detection. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 1. [Google Scholar] [CrossRef] [PubMed]

- Bandini, A.; Zariffa, J. Analysis of the hands in egocentric vision: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2020. [Google Scholar] [CrossRef] [PubMed]

- Schölkopf, B.; Smola, A.J.; Williamson, R.C.; Bartlett, P.L. New support vector algorithms. Neural Comput. 2000, 12, 1207–1245. [Google Scholar] [CrossRef] [PubMed]

- Silverman, B.W.; Jones, M.C. E. Fix and J.L. Hodges (1951): An important contribution to nonparametric discriminant analysis and density estimation: Commentary on Fix and Hodges (1951). Int. Stat. Rev. 1989, 57, 233–238. [Google Scholar] [CrossRef]

- Cover, T.; Hart, P. Nearest neighbor pattern classification. IEEE Trans. Inf. Theory 1967, 13, 21–27. [Google Scholar] [CrossRef]

| Variable | Gender | Mean | Standard Deviation | Standard Error of the Mean |
|---|---|---|---|---|
| Creativity | Female | 64.503 | 7.780 | 2.345 |
| Creativity | Male | 57.825 | 10.274 | 2.849 |
| Unlogical items | Female | 3.36 | 2.157 | 0.650 |
| Unlogical items | Male | 2.08 | 1.977 | 0.548 |
| Device familiarity | Female | 4.000 | 0.721 | 0.217 |
| Device familiarity | Male | 3.754 | 1.042 | 0.289 |
| Thinking time | Female | 7.406 | 4.825 | 1.455 |
| Thinking time | Male | 6.891 | 3.118 | 0.864 |
| Age | Female | 30.82 | 9.888 | 2.981 |
| Age | Male | 29.69 | 14.620 | 4.055 |

| Variable | Statistic | Creativity | Age | Unlogic Items | Familiarity | Thinking Time |
|---|---|---|---|---|---|---|
| Creativity | Pearson c. | 1 | 0.080 | 0.117 | 0.410 * | 0.071 |
| Creativity | Sig. (2-tailed) | | 0.712 | 0.587 | 0.047 | 0.742 |
| Age | Pearson c. | 0.080 | 1 | 0.065 | −0.307 | −0.259 |
| Age | Sig. (2-tailed) | 0.712 | | 0.761 | 0.144 | 0.222 |
| Unlogic items | Pearson c. | 0.117 | 0.065 | 1 | −0.321 | −0.215 |
| Unlogic items | Sig. (2-tailed) | 0.587 | 0.761 | | 0.126 | 0.313 |
| Familiarity | Pearson c. | 0.410 * | −0.307 | −0.321 | 1 | −0.302 |
| Familiarity | Sig. (2-tailed) | 0.047 | 0.144 | 0.126 | | 0.151 |
| Thinking time | Pearson c. | 0.071 | 0.259 | 0.215 | −0.302 | 1 |
| Thinking time | Sig. (2-tailed) | 0.742 | 0.222 | 0.313 | 0.151 | |
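The starred coefficient can be checked by hand with the usual t transformation of a Pearson coefficient. As a worked example, and assuming n = 24 participants (the two groups of 12 described in the Introduction), the Creativity/Familiarity coefficient r = 0.410 gives:

$$
t = \frac{r\sqrt{n-2}}{\sqrt{1-r^{2}}} = \frac{0.410\,\sqrt{22}}{\sqrt{1-0.410^{2}}} \approx 2.11
$$

This lies just above the two-tailed critical value $t_{0.025,\,22} \approx 2.074$, which is in line with the reported significance of 0.047.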

| Variable | Variances | Levene’s Test F | Levene’s Test Sig. | t | df | Sig. (2-Tailed) | Mean Difference | Std. Error Difference | 95% CI Lower | 95% CI Upper |
|---|---|---|---|---|---|---|---|---|---|---|
| Creativity | Equal variances assumed | 0.577 | 0.456 | 1.767 | 22 | 0.091 | 6.67825 | 3.77911 | −1.15914 | 14.51564 |
| Creativity | Equal variances not assumed | | | 1.809 | 21.775 | 0.084 | 6.67825 | 3.69096 | −0.98092 | 14.33742 |
| Unlogic items | Equal variances assumed | 0.534 | 0.473 | 1.524 | 22 | 0.142 | 1.287 | 0.844 | −0.465 | 3.038 |
| Unlogic items | Equal variances not assumed | | | 1.512 | 20.597 | 0.146 | 1.287 | 0.851 | −0.485 | 3.058 |
| Familiarity device | Equal variances assumed | 0.207 | 0.654 | 0.660 | 22 | 0.516 | 0.2462 | 0.3732 | −0.5277 | 1.0200 |
| Familiarity device | Equal variances not assumed | | | 0.680 | 21.250 | 0.504 | 0.2462 | 0.3619 | −0.5058 | 0.9981 |
| Thinking time | Equal variances assumed | 3.577 | 0.072 | 0.315 | 22 | 0.756 | 0.51483 | 1.63311 | −2.87205 | 3.90170 |
| Thinking time | Equal variances not assumed | | | 0.304 | 16.590 | 0.765 | 0.51483 | 1.69274 | −3.06329 | 4.09294 |
| Age | Equal variances assumed | 2.099 | 0.161 | 0.217 | 22 | 0.831 | 1.126 | 5.198 | −9.655 | 11.907 |
| Age | Equal variances not assumed | | | 0.224 | 21.086 | 0.825 | 1.126 | 5.033 | −9.338 | 11.589 |

The F and Sig. columns under Levene’s Test concern the equality of variances; the remaining columns report the t-test for equality of means, with the 95% confidence interval (CI) of the difference.
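The t and df values in the Creativity rows can be recomputed from the descriptive statistics alone, as sketched below. This is a minimal illustration, not the authors' analysis script: the function names are hypothetical, and the group sizes are inferred from the ratio of standard deviation to standard error of the mean, because the tables do not report them directly. The first result pair corresponds to the "equal variances assumed" row (Student's t-test with pooled variance), the second to the "equal variances not assumed" row (Welch's t-test).

```python
from math import sqrt


def infer_n(sd, sem):
    """Recover the group size from SD and standard error of the mean."""
    # SEM = SD / sqrt(n), hence n = (SD / SEM) ** 2, rounded to an integer.
    return round((sd / sem) ** 2)


def t_tests(mean1, sd1, n1, mean2, sd2, n2):
    """Return (pooled t, pooled df, Welch t, Welch df) for two groups."""
    diff = mean1 - mean2
    # Student's t-test: pool the two sample variances.
    df_pooled = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df_pooled
    t_pooled = diff / sqrt(pooled_var * (1 / n1 + 1 / n2))
    # Welch's t-test: keep the variances separate and adjust the df.
    v1, v2 = sd1 ** 2 / n1, sd2 ** 2 / n2
    t_welch = diff / sqrt(v1 + v2)
    df_welch = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return t_pooled, df_pooled, t_welch, df_welch


# Creativity: mean, SD, and SEM per group, taken from the descriptive table.
n_female = infer_n(7.780, 2.345)
n_male = infer_n(10.274, 2.849)
result = t_tests(64.503, 7.780, n_female, 57.825, 10.274, n_male)
print(n_female, n_male, [round(x, 3) for x in result])
```

The p-values are omitted because they require the cumulative distribution of Student's t, which the Python standard library does not provide.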

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Pérez-Medina, J.-L.; Villarreal, S.; Vanderdonckt, J. A Gesture Elicitation Study of Nose-Based Gestures. Sensors 2020, 20, 7118. https://doi.org/10.3390/s20247118
