Non-Contact Heart Rate Detection When Face Information Is Missing during Online Learning

Abstract

1. Introduction

- (1) We propose a symmetry substitution method. When the head is rotated by 30 to 45 degrees and the facial region of interest (ROI) is partially missing, the data detected in the left and right cheeks are symmetrically copied;

- (2) We designed a method that determines the effective facial ROI from the face–eye location and then calculates the physiological parameters;

- (3) We designed a video dataset in minutes.
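The symmetry substitution idea in contribution (1) can be illustrated with a minimal sketch. Everything below is an assumption made for illustration, not the authors' implementation: each cheek is represented by a per-frame mean intensity trace, a missing ROI is marked with `None`, and a per-frame yaw angle is assumed to be available. The function name `substitute_symmetric` and the constants are hypothetical; only the 30 to 45 degree range comes from the text above.

```python
from typing import List, Optional, Tuple

# Hypothetical representation: one mean intensity value per frame for a cheek ROI,
# with None marking frames in which that ROI could not be extracted.
Trace = List[Optional[float]]

# Rotation range quoted in the contribution list (degrees).
YAW_MIN_DEG = 30.0
YAW_MAX_DEG = 45.0


def in_substitution_range(yaw_deg: float) -> bool:
    """Return True when the head rotation falls inside the 30-45 degree range."""
    return YAW_MIN_DEG <= abs(yaw_deg) <= YAW_MAX_DEG


def substitute_symmetric(
    left: Trace, right: Trace, yaw_deg: List[float]
) -> Tuple[Trace, Trace]:
    """Copy the visible cheek's sample into the missing cheek, frame by frame.

    Substitution is applied only to frames whose yaw lies in the quoted range.
    Frames where both cheeks are missing stay None.
    """
    if not (len(left) == len(right) == len(yaw_deg)):
        raise ValueError("all inputs must have the same number of frames")

    out_left: Trace = []
    out_right: Trace = []
    for l, r, yaw in zip(left, right, yaw_deg):
        if in_substitution_range(yaw):
            if l is None:
                l = r  # left cheek hidden: mirror the right cheek sample
            if r is None:
                r = l  # right cheek hidden: mirror the left cheek sample
        out_left.append(l)
        out_right.append(r)
    return out_left, out_right


# Illustrative values only (not measured data).
left_trace = [0.52, None, None, None]
right_trace = [0.50, 0.51, 0.53, 0.54]
yaw = [0.0, 35.0, 40.0, 60.0]
filled_left, filled_right = substitute_symmetric(left_trace, right_trace, yaw)
```

In this toy input the left cheek is filled for the frames at 35 and 40 degrees, while the frame at 60 degrees is left missing because it lies outside the quoted range.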

2. Related Work

3. Methods

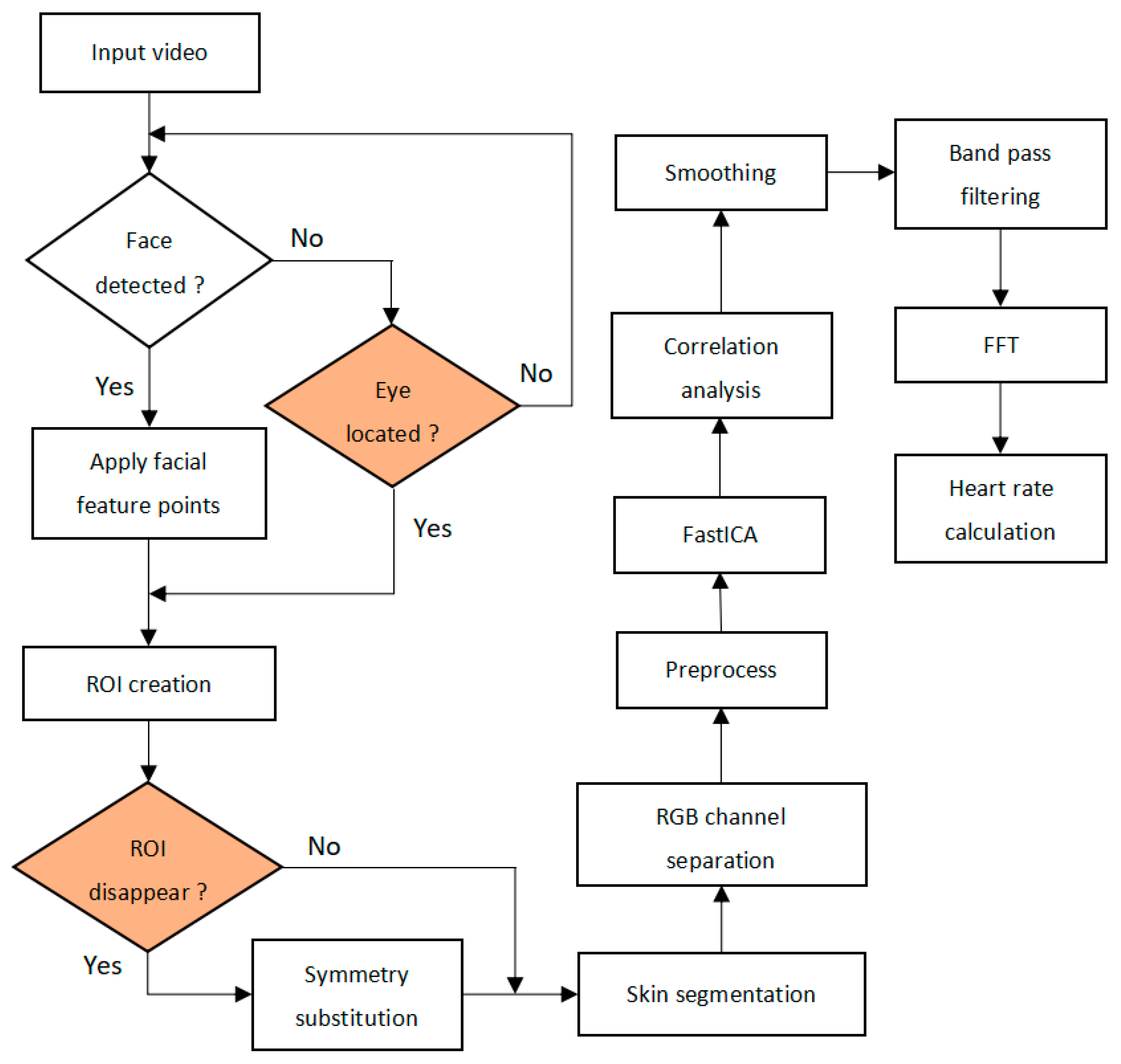

3.1. Process

3.1.1. Face Detection and Facial Feature Points Determination

3.1.2. ROI Creation

3.1.3. Skin Segmentation

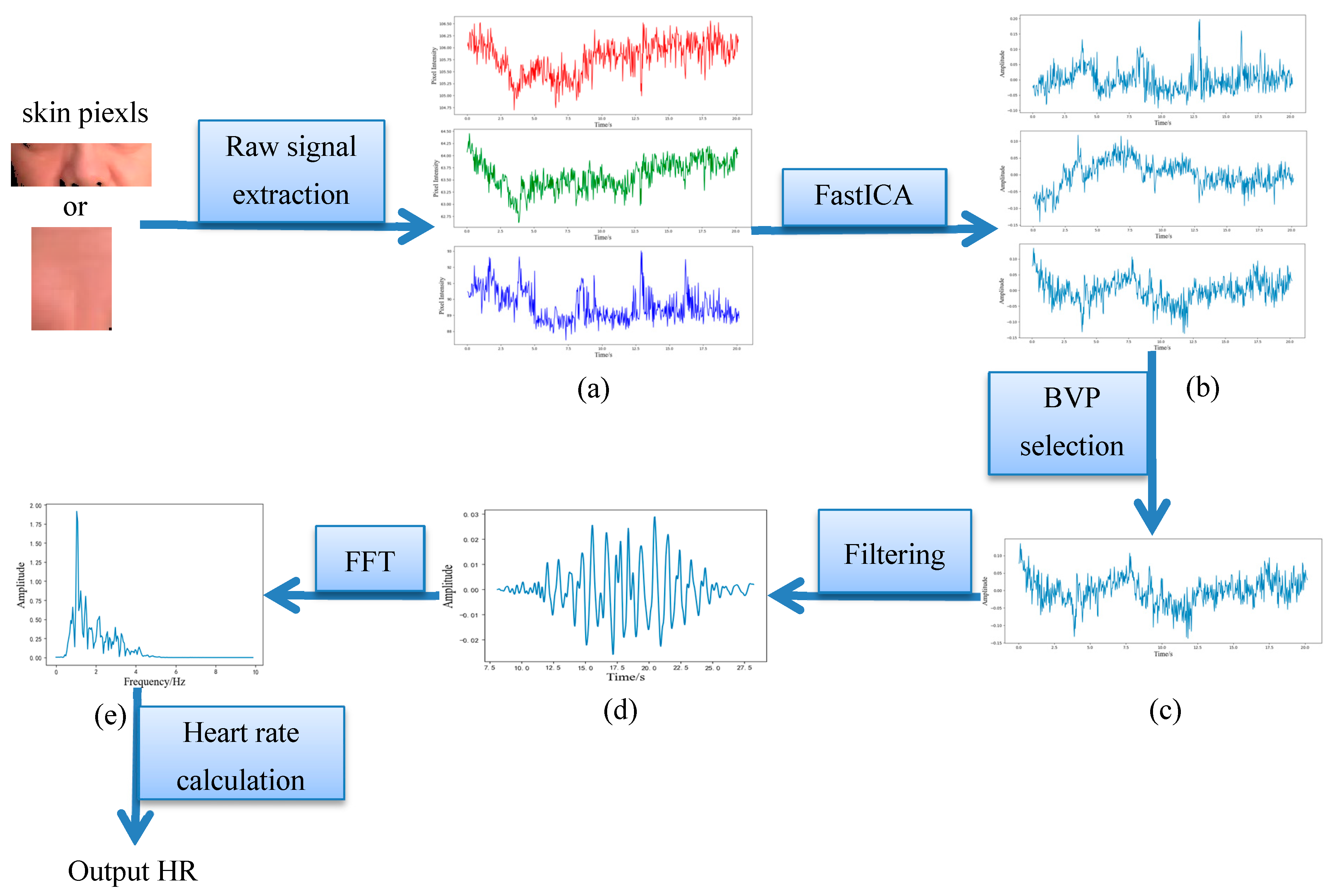

3.1.4. Raw Traces Extraction and Signal Processing

3.1.5. Independent Component Analysis

3.1.6. Heart Rate Calculation

3.2. Symmetry Substitution Method

3.3. Heart Rate Estimation by Face–Eye Location

3.4. Participants

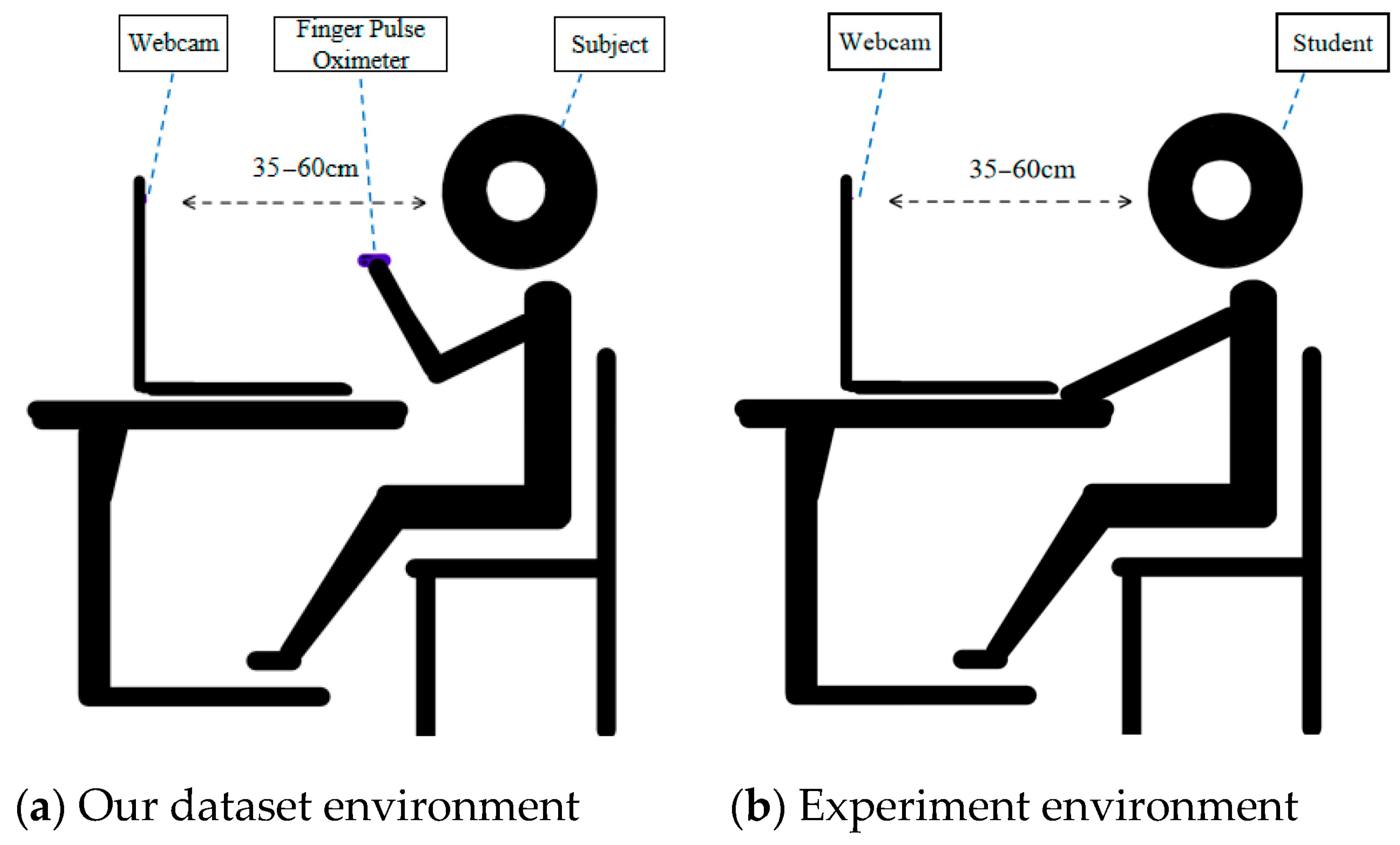

3.5. Experimental Environment and Benchmark Dataset

3.6. Emotion Experiments Design

4. Results

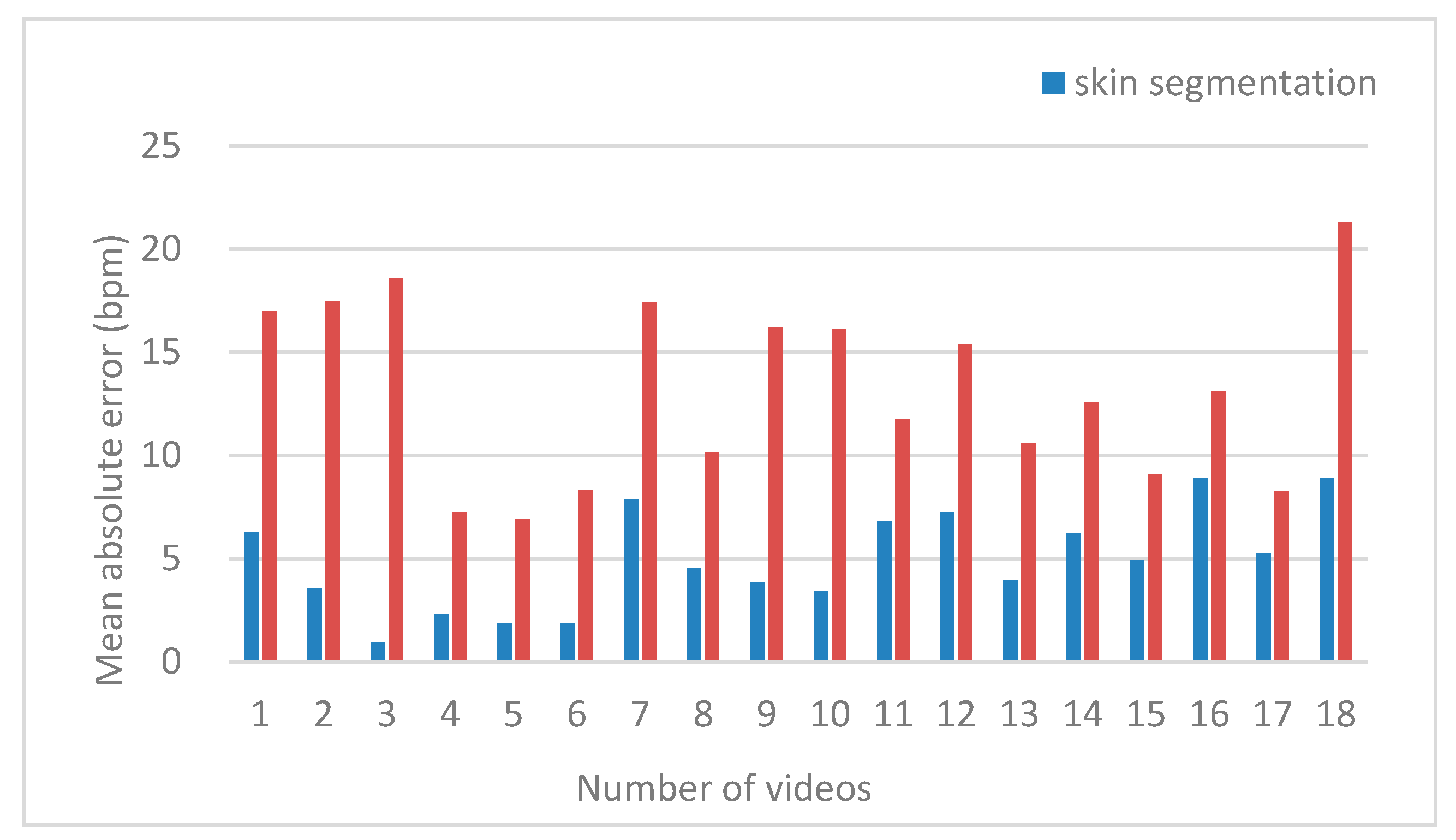

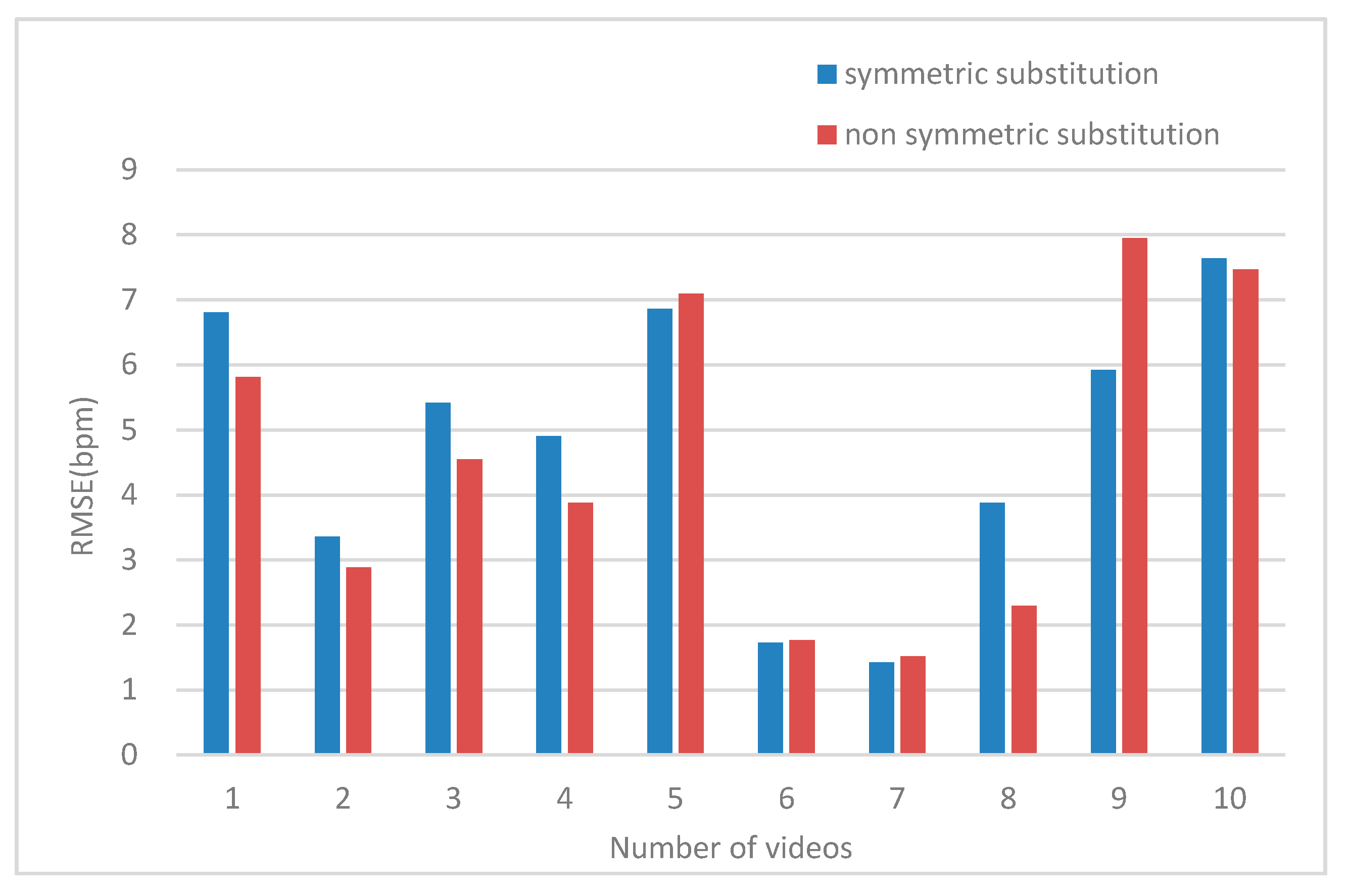

4.1. Results of the Symmetrical Substitution Method

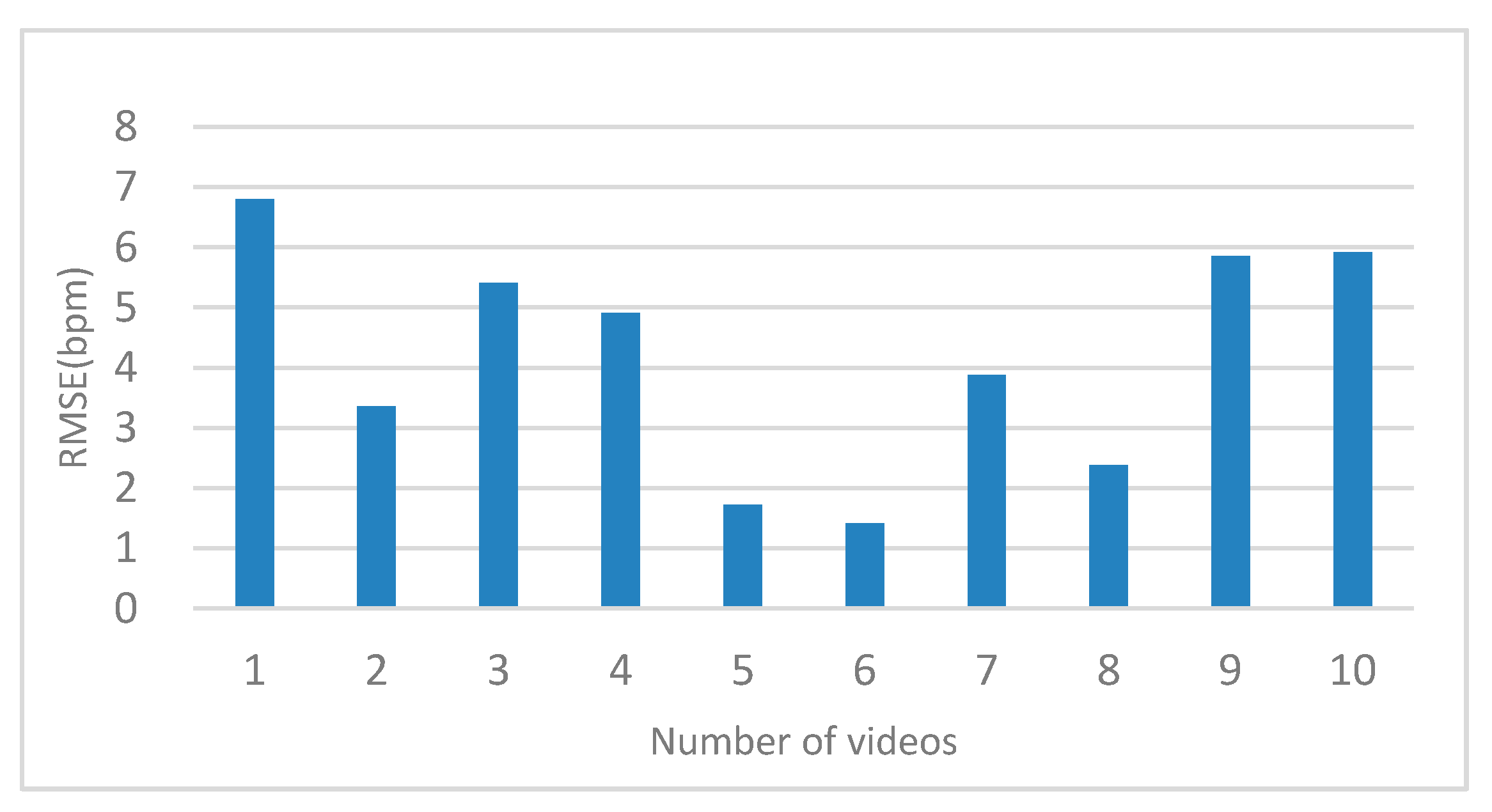

4.2. Results of Heart Rate Estimated by Face–Eye Location

4.3. Comparison with Other Methods

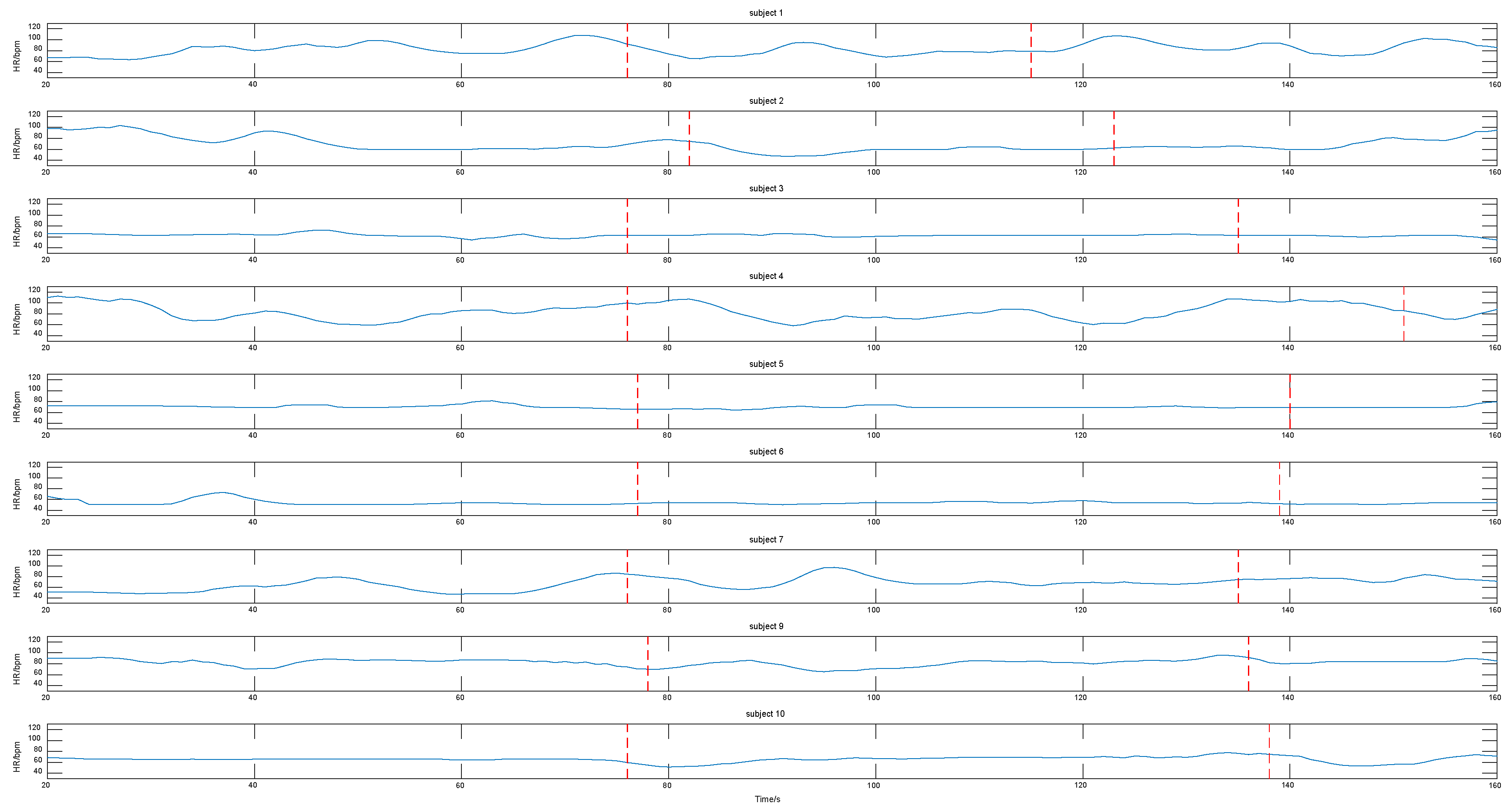

4.4. Results of Emotion Experiments

5. Discussion

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Pekrun, R.; Goetz, T.; Titz, W.; Perry, R.P. Academic Emotions in Students’ Self-Regulated Learning and Achievement: A Program of Qualitative and Quantitative Research. Educ. Psychol. 2002, 37, 91–105.

2. Chettupuzhakkaran, P.; Sindhu, N. Emotion Recognition from Physiological Signals Using Time-Frequency Analysis Methods. In Proceedings of the 2018 International Conference on Emerging Trends and Innovations in Engineering and Technological Research (ICETIETR), Ernakulam, India, 11–13 July 2018; pp. 1–5.

3. Joesph, C.; Rajeswari, A.; Premalatha, B.; Balapriya, C. Implementation of physiological signal based emotion recognition algorithm. In Proceedings of the 2020 IEEE 36th International Conference on Data Engineering (ICDE), Dallas, TX, USA, 20–24 April 2020; pp. 2075–2079.

4. Bland, M.W.; Morrison, E. The Experimental Detection of an Emotional Response to the Idea of Evolution. Am. Biol. Teach. 2015, 77, 413–420.

5. Stavroulia, K.E.; Christofi, M.; Baka, E.; Michael-Grigoriou, D.; Magnenat-Thalmann, N.; Lanitis, A. Assessing the emotional impact of virtual reality-based teacher training. Int. J. Inf. Learn. Technol. 2019, 36, 192–217.

6. Thompson, N.; McGill, T.J. Genetics with Jean: The design, development and evaluation of an affective tutoring system. Educ. Technol. Res. Dev. 2016, 65, 279–299.

7. Verkruysse, W.; Svaasand, L.O.; Nelson, J.S. Remote plethysmographic imaging using ambient light. Opt. Express 2008, 16, 21434–21445.

8. Chen, D.-Y.; Wang, J.-J.; Lin, K.-Y.; Chang, H.-H.; Wu, H.-K.; Chen, Y.-S.; Lee, S.-Y. Image Sensor-Based Heart Rate Evaluation from Face Reflectance Using Hilbert–Huang Transform. IEEE Sens. J. 2015, 15, 618–627.

9. Lin, K.-Y.; Chen, D.-Y.; Tsai, W.-J. Face-Based Heart Rate Signal Decomposition and Evaluation Using Multiple Linear Regression. IEEE Sens. J. 2016, 16, 1351–1360.

10. Chen, X.; Chen, Q.; Zhang, Y.; Wang, Z.J. A Novel EEMD-CCA Approach to Removing Muscle Artifacts for Pervasive EEG. IEEE Sens. J. 2018, 19, 8420–8431.

11. Cheng, J.; Chen, X.; Xu, L.; Wang, J. Illumination Variation-Resistant Video-Based Heart Rate Measurement Using Joint Blind Source Separation and Ensemble Empirical Mode Decomposition. IEEE J. Biomed. Health Inform. 2017, 21, 1422–1433.

12. Xu, L.; Cheng, J.; Chen, X. Illumination variation interference suppression in remote PPG using PLS and MEMD. Electron. Lett. 2017, 53, 216–218.

13. Poh, M.-Z.; McDuff, D.; Picard, R.W. Non-contact, automated cardiac pulse measurements using video imaging and blind source separation. Opt. Express 2010, 18, 10762–10774.

14. De Haan, G.G.; Jeanne, V. Robust Pulse Rate from Chrominance-Based rPPG. IEEE Trans. Biomed. Eng. 2013, 60, 2878–2886.

15. Bousefsaf, F.; Maaoui, C.; Pruski, A. Continuous wavelet filtering on webcam photoplethysmographic signals to remotely assess the instantaneous heart rate. Biomed. Signal Process. Control. 2013, 8, 568–574.

16. Prakash, S.K.A.; Tucker, C.S. Bounded Kalman filter method for motion-robust, non-contact heart rate estimation. Biomed. Opt. Express 2018, 9, 873–897.

17. McDuff, D.J.; Blackford, E.B.; Estepp, J.R. The Impact of Video Compression on Remote Cardiac Pulse Measurement Using Imaging Photoplethysmography. In Proceedings of the 2017 12th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2017), Washington, DC, USA, 30 May–3 June 2017; pp. 63–70.

18. Zhao, C.; Lin, C.-L.; Chen, W.; Li, Z. A Novel Framework for Remote Photoplethysmography Pulse Extraction on Compressed Videos. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Salt Lake City, UT, USA, 18–22 June 2018; pp. 1380–1389.

19. Zhao, C.; Chen, W.; Lin, C.-L.; Wu, X. Physiological Signal Preserving Video Compression for Remote Photoplethysmography. IEEE Sens. J. 2019, 19, 4537–4548.

20. Nooralishahi, P.; Loo, C.K.; Shiung, L.W. Robust remote heart rate estimation from multiple asynchronous noisy channels using autoregressive model with Kalman filter. Biomed. Signal Process. Control. 2019, 47, 366–379.

21. Yang, Z.; Yang, X.; Jin, J.; Wu, X. Motion-resistant heart rate measurement from face videos using patch-based fusion. Signal Image Video Process. 2019, 13, 423–430.

22. Yang, Z.; Yang, X.; Wu, X. Motion-tolerant heart rate estimation from face videos using derivative filter. Multimed. Tools Appl. 2019, 78, 26747–26757.

23. Bobbia, S.; Luguern, D.; Benezeth, Y.; Nakamura, K.; Gomez, R.; Dubois, J. Real-Time Temporal Superpixels for Unsupervised Remote Photoplethysmography. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Salt Lake City, UT, USA, 18–22 June 2018; pp. 1422–14227.

24. Van Der Kooij, K.M.; Naber, M. An open-source remote heart rate imaging method with practical apparatus and algorithms. Behav. Res. Methods 2019, 51, 2106–2119.

25. Qi, L.; Yu, H.; Xu, L.; Mpanda, R.S.; Greenwald, S.E. Robust heart-rate estimation from facial videos using Project_ICA. Physiol. Meas. 2019, 40, 085007.

26. Fouad, R.M.; Omer, O.A.; Aly, M.H. Optimizing Remote Photoplethysmography Using Adaptive Skin Segmentation for Real-Time Heart Rate Monitoring. IEEE Access 2019, 7, 76513–76528.

27. Qiu, Y.; Liu, Y.; Arteaga-Falconi, J.S.; Dong, H.; El Saddik, A. EVM-CNN: Real-Time Contactless Heart Rate Estimation From Facial Video. IEEE Trans. Multimed. 2018, 21, 1778–1787.

28. Rong, M.; Fan, Q.; Li, K. Research on non-contact physiological parameter measurement algorithm based on IPPG. Biomed. Eng. Res. 2018, 37, 27–31.

29. Dalal, N.; Triggs, B. Histograms of Oriented Gradients for Human Detection. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–25 June 2005; pp. 886–893.

30. Viola, P.; Jones, M.J. Robust Real-Time Face Detection. Int. J. Comput. Vis. 2004, 57, 137–154.

31. Zhang, K.; Zhang, Z.; Li, Z.; Qiao, Y. Joint Face Detection and Alignment Using Multitask Cascaded Convolutional Networks. IEEE Signal Process. Lett. 2016, 23, 1499–1503.

32. Kowalski, M.; Naruniec, J.; Trzcinski, T. Deep Alignment Network: A Convolutional Neural Network for Robust Face Alignment. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Honolulu, HI, USA, 21–26 July 2017; pp. 2034–2043.

33. Soleymani, M.; Lichtenauer, J.; Pun, T.; Pantic, M. A Multimodal Database for Affect Recognition and Implicit Tagging. IEEE Trans. Affect. Comput. 2012, 3, 42–55.

34. Koelstra, S.; Muhl, C.; Soleymani, M.; Lee, J.-S.; Yazdani, A.; Ebrahimi, T.; Pun, T.; Nijholt, A.; Patras, I. DEAP: A Database for Emotion Analysis Using Physiological Signals. IEEE Trans. Affect. Comput. 2011, 3, 18–31.

35. Tulyakov, S.; Alameda-Pineda, X.; Ricci, E.; Yin, L.; Cohn, J.F.; Sebe, N. Self-Adaptive Matrix Completion for Heart Rate Estimation from Face Videos under Realistic Conditions. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 2396–2404.

36. Stricker, R.; Muller, S.; Gross, H.-M. Non-contact video-based pulse rate measurement on a mobile service robot. In Proceedings of the 23rd IEEE International Symposium on Robot and Human Interactive Communication, Edinburgh, UK, 25–29 August 2014; pp. 1056–1062.

37. Li, X.; Alikhani, I.; Shi, J.; Seppanen, T.; Junttila, J.; Majamaa-Voltti, K.; Tulppo, M.; Zhao, G. The OBF Database: A Large Face Video Database for Remote Physiological Signal Measurement and Atrial Fibrillation Detection. In Proceedings of the 2018 13th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2018), Xi’an, China, 15–19 May 2018; pp. 242–249.

38. Niu, X.; Shan, S.; Han, H.; Chen, X. RhythmNet: End-to-End Heart Rate Estimation from Face via Spatial-Temporal Representation. IEEE Trans. Image Process. 2020, 29, 2409–2423.

39. Poh, M.-Z.; McDuff, D.; Picard, R.W. Advancements in Noncontact, Multiparameter Physiological Measurements Using a Webcam. IEEE Trans. Biomed. Eng. 2010, 58, 7–11.

40. Lam, A.; Kuno, Y. Robust Heart Rate Measurement from Video Using Select Random Patches. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 3640–3648.

41. Wang, W.; Brinker, A.C.D.; Stuijk, S.S.; De Haan, G.G. Algorithmic Principles of Remote PPG. IEEE Trans. Biomed. Eng. 2017, 64, 1479–1491.

42. Henriques, J.F.; Caseiro, R.; Martins, P.; Batista, J. Exploiting the Circulant Structure of Tracking-by-Detection with Kernels. Lect. Notes Comput. Sci. 2012, 7575, 702–715.

| Article | ROI Definition | Method | Result |
|---|---|---|---|
| [13] | full face | ICA | The root mean square error was 2.29 bpm on the static dataset and 4.63 bpm on the moving dataset. |
| [25] | full face | Project_ICA | In static, human–computer interaction, and exercise recovery scenarios, the mean absolute deviations were 3.30, 3.93, and 9.80 bpm, respectively. |
| [16] | three rectangular regions | bounded Kalman filter | The average measurement error was 3 bpm when the subjects walked to the camera from 4 feet away. |
| [20] | three rectangular regions | RADICAL | The average error was 1.42 bpm in a well-controlled dataset. |
| [26] | three rectangular regions | PCA | The accuracy rate of heart rate measurement of five subjects was above 98%. |
| [27] | band region | EVM + CNN | 74.13% of the test data were well estimated. |
| [28] | band region | ICA | The root mean square error was 2.258 bpm under static conditions. |

| Method | Face Detection Effect | Performance Description | Advantage | Disadvantage |
|---|---|---|---|---|
| HOG [29] |  | greatly outperforms the wavelet, PCA-SIFT, and Shape Context methods | fast running speed; 68 facial feature points | greatly influenced by light intensity and direction; inaccurate location of feature points on profile |
| Viola–Jones [30] |  | extremely rapid image processing, while achieving high detection rates | fast running speed | no facial feature point; greatly influenced by light intensity and direction |
| MTCNN [31] |  | can achieve very fast speed in joint face detection and alignment | accurate face detection; less affected by light intensity and direction | complicated models; complex calculation; slow running speed; only five feature points can be marked |
| DAN [32] |  | reduces the state-of-the-art failure rate by up to 70% | accurate location of feature points on profile; less affected by light intensity and direction; can mark 68 facial feature points | complicated models; complex calculation; slow running speed |

| Datasets | Number of Subjects | Number of Videos | Camera Parameters | Video Parameters |
|---|---|---|---|---|
| MAHNOB-HCI [33] | 27 | 527 | Allied Vision Stingray F-046C; F-046B | RGB videos 780 × 580 @ 60 fps |
| DEAP [34] | 32 | 120 | Sony DCR-HC27E | RGB videos 800 × 600 @ 50 fps |
| MMSE-HR [35] | 40 | 102 | RGB 2D color camera | RGB image sequences 1040 × 1392 @ 25 fps |
| PURE [36] | 10 | 60 | eco274CVGE | RGB videos 640 × 480 @ 30 fps |
| OBF [37] | 106 | 2120 | Blackmagic URSA mini | RGB: 1920 × 1080 @ 60 fps |
|  |  |  | Camera box | NIR: 640 × 480 @ 30 fps |
| VIPL-HR [38] | 107 | 3130 | Logitech C310 | 960 × 720 @ 25 fps |
|  |  |  | Realsense F200 | NIR: 640 × 480 @ 30 fps; Color: 1920 × 1080 @ 30 fps |
|  |  |  | Huawei P9 smartphone | Color: 1920 × 1080 @ 30 fps |

| No. | L | R | F | L-F | R-F | L-R | L-R-F | L-R-N |
|---|---|---|---|---|---|---|---|---|
| 1 | 12.73 | 12.38 | 10.28 | 16.35 | 14.13 | 12.05 | 16.68 | 8.15 |
| 2 | 8.75 | 6.15 | 8.50 | 7.85 | 7.08 | 10.75 | 6.38 | 5.50 |
| 3 | 8.13 | 9.95 | 7.95 | 7.25 | 8.33 | 8.85 | 6.18 | 5.68 |
| 4 | 8.46 | 6.10 | 8.05 | 9.41 | 7.21 | 11.41 | 8.36 | 6.97 |
| 5 | 2.93 | 1.33 | 3.69 | 3.36 | 3.55 | 2.36 | 1.29 | 1.48 |
| 6 | 1.57 | 1.95 | 1.50 | 3.19 | 2.88 | 1.90 | 2.57 | 3.12 |
| 7 | 4.36 | 0.69 | 4.38 | 3.81 | 2.36 | 1.86 | 3.74 | 0.38 |
| 8 | 5.93 | 1.34 | 18.10 | 12.49 | 15.71 | 1.27 | 15.54 | 1.39 |
| 9 | 23.40 | 11.10 | 18.52 | 21.36 | 16.17 | 4.57 | 16.33 | 2.86 |
| 10 | 9.81 | 4.79 | 7.95 | 3.67 | 3.14 | 3.29 | 3.88 | 4.81 |

| Parameter | Value |
|---|---|
| Pulse Rate Display | 25–250 bpm |
| Resolution | 1 bpm |
| Measurement accuracy | 2 bpm |

| Classification (Pose) | Classification (Condition) | Distance (cm) | Number |
|---|---|---|---|
| Frontal face | with sunglasses | 35–60 | 10 |
|  | wearing a mask | 35–60 | 10 |
|  | too small a face–camera distance | <20 | 10 |
|  | no occlusion of the face | 35–60 | 30 |
| Profile | no occlusion of the face | 35–60 | 10 |

| Methods | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| L-R | 5.53 | 5.42 | 2.57 | 2.15 | 3.98 | 2.44 | 5.43 | 5.99 | 3.69 | 0.99 |
| R-L | 1.32 | 5.43 | 4.69 | 1.37 | 3.62 | 3.78 | 1.76 | 2.36 | 1.25 | 1.47 |
| N-U-S | 2.25 | 6.86 | 3.77 | 1.41 | 5.20 | 1.75 | 3.81 | 2.77 | 2.36 | 1.88 |

| Video | MAE (bpm) | RMSE (bpm) |
|---|---|---|
| Too small a face–camera distance | 4.00 | 5.87 |
| Wearing a mask | 4.19 | 6.15 |
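The MAE and RMSE columns above are standard error metrics between estimated and reference heart rates. The following is a minimal sketch of how such values are typically computed; the function names and the sample numbers are illustrative assumptions, not the authors' code or data.

```python
import math
from typing import Sequence


def mae(estimated: Sequence[float], reference: Sequence[float]) -> float:
    """Mean absolute error between estimated and reference heart rates (bpm)."""
    if len(estimated) != len(reference) or not estimated:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(e - r) for e, r in zip(estimated, reference)) / len(estimated)


def rmse(estimated: Sequence[float], reference: Sequence[float]) -> float:
    """Root mean square error between estimated and reference heart rates (bpm)."""
    if len(estimated) != len(reference) or not estimated:
        raise ValueError("inputs must be non-empty and of equal length")
    mean_sq = sum((e - r) ** 2 for e, r in zip(estimated, reference)) / len(estimated)
    return math.sqrt(mean_sq)


# Made-up values for illustration only (not taken from the paper).
estimated_hr = [72.0, 75.0, 80.0, 68.0]  # video-based estimates, bpm
reference_hr = [70.0, 76.0, 77.0, 68.0]  # contact-sensor readings, bpm

mae_value = mae(estimated_hr, reference_hr)    # (2 + 1 + 3 + 0) / 4 = 1.5
rmse_value = rmse(estimated_hr, reference_hr)  # sqrt((4 + 1 + 9 + 0) / 4) = sqrt(3.5)
```

Because squaring weights large errors more heavily, RMSE is never smaller than MAE on the same data, which is consistent with both rows of the table.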

| Methods (Algorithm) | Methods (Face/Skin Detection) | Frontal Face RMSE (bpm) | Profile RMSE (bpm) | Too Small a Face–Camera Distance RMSE (bpm) | Wearing a Mask RMSE (bpm) |
|---|---|---|---|---|---|
| CHROM_De Haan [14] | Viola–Jones [30] | 1.1 | Undetected | Undetected | Undetected |
|  | Skin segmentation | 1.44 | 10.51 | 9.45 | 21.34 |
| ICA_Poh [39] | Viola–Jones [30] | 1.24 | Undetected | Undetected | Undetected |
|  | Skin segmentation | 6.53 | 11.18 | 30.25 | 26.20 |
| POS_Wang [41] | OC-SVM [42] | 8.04 | Undetected | Undetected | Undetected |
|  | Skin segmentation | 8.37 | 20.74 | 13.61 | 24.69 |
| Bounded Kalman filter [16] | Viola–Jones [30] | 5.56 | Undetected | Undetected | Undetected |
|  | Back Projection | 6.62 | Undetected | Undetected | Undetected |
| Proposed algorithm |  | 3.49 | 4.79 | 5.87 | 6.15 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zheng, K.; Ci, K.; Cui, J.; Kong, J.; Zhou, J. Non-Contact Heart Rate Detection When Face Information Is Missing during Online Learning. Sensors 2020, 20, 7021. https://doi.org/10.3390/s20247021