1. Introduction

There is a growing need for environments that not only facilitate 24/7 care but also fulfil the desire for one’s privacy at home. An environment that can provide such necessities is known as a smart environment and has been defined as being able to not only acquire but also apply the knowledge gained from the environment and those in it to improve the experience within the environment [1]. Effectively providing automated care within an environment while providing sufficient privacy can be a challenging and potentially contradictory objective. The need for this type of care environment arises from the frequently highlighted fact that the world’s population will increase from 7.7 to 8.5 billion by 2030 and that by 2050 the number of people aged over 65 will outnumber those aged from 15–24, with projections showing that 16% of the world’s population will be aged 65 or older [2]. It can, therefore, be anticipated that the number of people requiring care (either in their own home or a secure and safe environment) will increase and so the need to provide “aging in place” will become more significant. Findings have shown that to elderly people, “aging in place” means that they would be able to continue to live with a degree of independence and autonomy [3]. Facilitating feelings of familiarity and security has been shown to be an important aspect of providing “aging in place” and so allowing someone to remain living at home rather than in a care home is vital. This has been reinforced by the fact that those who have moved into institutionalised life have often lost motivation for their own self-autonomy and experienced feelings of isolation and depression [4].

Delivering the capability to provide 24/7 monitoring of those in need of care would significantly benefit the healthcare professionals who have responsibility for such care. Expecting healthcare professionals to be able to provide 24/7 care is neither realistic nor cost effective. Installing sensors within the home is one way to allow for automated monitoring. Video cameras could potentially be used; however, this would contradict the previously stated need for privacy, as concerns about the lack of privacy provided by video cameras have previously been expressed [5]. Thermal sensors could provide a balance between descriptive data and privacy, as images of the environment and its inhabitants can be captured while omitting any of their discernible features.

For suitable monitoring, it would be necessary to use sensors integrated throughout the home to recognise and understand inhabitants’ actions in the context of daily life within the home. Such an action is called an Activity of Daily Living (ADL) and covers all activities performed in full autonomy in day-to-day life. Recognising the ADLs of a home’s inhabitants can, e.g., allow for accurate detection of abnormal behaviour during the completion of activities, resulting in the detection of cognitive decline [6]. Identifying such decline and providing warnings can be highly beneficial to caregivers. Prior to detecting ADLs, it is important to understand how they are constructed, as each ADL consists of subactivities. An example of this can be highlighted by the ADL “making a tea/coffee,” where the ADL itself can be made up of tasks such as “using the fridge,” “opening the coffee cupboard” and “using the kettle.” There are significant differences between each of these subactivities in terms of the inhabitant’s body shape or “pose” when performing the subactivities. In this case, thermal-based sensors could be used to classify the poses as they can effectively detect sources of heat, without identifying discernible characteristics of the inhabitant, and thus provide a detailed and unobtrusive description of the inhabitant’s pose. Accurately recognising the poses being performed can, therefore, be extremely helpful in understanding the ADL being performed and, potentially, the quality of its execution in relation to assessments such as the Katz index [7].

There is potential that the accuracy and efficiency of providing “aging in place” can suffer due to the common need for privacy measures; however, the unobtrusive and low-resolution nature of the thermal sensors used in this work offers a viable solution. Attempting automated and constant care using sensors can, however, involve practical limitations when integrating sensors within the environment. The challenge, therefore, is to find the correct balance between accuracy, privacy and practicality in the approach to monitoring a smart environment.

The primary contributions of the research described in this paper are:

Investigation of the capabilities of utilising five thermal sensors to provide multiple perspectives of a smart environment in conjunction with a Convolutional Neural Network to provide an unobtrusive and noninvasive approach to recognising full body poses/actions.

Practical limitations of using a ceiling sensor are considered and the use of only the sensors providing lateral views is tested. The various permutations and combinations of the sensors are investigated to determine the trade-off between performance, cost and the number of required sensors.

The remainder of the paper is structured as follows:

Section 2 presents background and related work;

Section 3 discusses the proposed methodology for pose recognition using deep learning and describes the experiments conducted to find the best performing sensor permutation;

Section 4 presents an analysis of the results obtained from the experiments; finally,

Section 5 presents discussion and conclusions along with details of limitations and future work.

2. Background

To understand the behaviour of an environment’s inhabitant, it is important to detect and recognise the ADLs that are performed within the environment. Sensors can be used to collect data from known performances of ADLs to train machine learning algorithms to recognise the same ADLs from future unseen data. Some sensors that can be used to collect this descriptive data are wearable and image capture devices. Wearable devices can be placed on a person’s body to collect data as they perform actions or ADLs. The data captured for each action acts almost as a fingerprint in its uniqueness for classification tasks in which an inhabitant’s actions must be described to a machine learning algorithm.

Body worn accelerometers can be used to collect data that is descriptive of the wearer’s actions. The pose or activity being performed can be represented numerically in the form of features extracted from the sensor data. The study in [8] used body worn accelerometers to capture physiological data from the performances of activities such as walking, running and jumping. The signal retrieved by the sensors was first segmented to determine the beginning and ending of the activity and from this segment, various features were extracted. The extracted features most useful for the classification of the activity were then selected and any remaining features were deemed redundant and subsequently discarded. The selected features were provided as the input for the random forest and C4.5 decision tree machine learning algorithms where the output was a prediction of the activity class label. Once their respective results were compared, it was found that the random forest produced the marginally better performance with a 99.90% recognition rate, whereas the C4.5 decision tree achieved 99.87%.

Wearable devices were also successfully integrated for gesture recognition in [9]. In this study, a flexible bracelet for electromyography (EMG) gesture recognition that boasted flexible solar panels to charge the battery was proposed. The device consisted of four EMG sensors, and a selection of features for EMG preprocessing were extracted for the classification problem. Of the tested features, the Discrete Wavelet Transform (DWT) achieved the highest accuracy score and so was used in the final application. A Support Vector Machine (SVM) was chosen as the classification algorithm, and the data used for training and testing was broken down into three datasets made up of different volunteers. To determine the overall performance, fivefold cross-validation was applied. The five classes of gesture that were targeted in this study were rest position, hand open, power grasp, and pronation and supination of the wrist. The experiment consisted of three people performing gestures while wearing the device. An average accuracy of 94% was achieved while avoiding being intrusive in nature. Issues, however, may arise from the utilisation of wearable sensors for gesture recognition solutions if the inhabitant forgets to wear the device or decides not to wear it at all due to discomfort [10]. In the case of elderly people, the residents may not accept such a system due to a lack of user friendliness and the need for specific training for using the device [10,11]. The sensor’s battery life and constant need for recharging can also limit the implementation of such a solution where there is a necessity for 24/7 monitoring within the home [12].

Image capture devices such as visible spectrum video cameras can detect a person’s full range of motion and so can be utilised to recognise the actions performed within the home. The importance of this recognition capability is indicated by the study completed in [13] as detecting abnormal behaviour can subsequently highlight potential health problems. An RGB-D video camera was positioned in the ceiling to provide a top-down view of the environment where the camera was used to collect image and depth data to locate the person as well as the 3D positions of their head and hands in each frame. Sequences of the 3D positions were analysed during the completion of various activities so that the Hidden Markov Model (HMM) could be trained to recognise activities from such head and hand position sequences. An F1-score of 0.8000 was achieved with the HMM that utilised only the sequence of head positions for its input.

An analysis of 3D posture data was conducted in [14], which included the recognition of various gestures using an RGB-D camera. A Microsoft Kinect was used to capture images of the person from which numerous joints were detected that would act as the features to aid in the classification of the posture. A K-means clustering method detected the postures and for the classification of each posture, an SVM was used. Each activity to be classified was defined as a unique sequence of known postures. In one of the tests performed on the novel dataset, Kinect Activity Recognition Dataset, the 18 classes of activities were split into two categories: gestures and actions. The gestures involved examples such as Bend, High Arm Wave and Horizontal Arm Wave while the actions included Drink, Phone Call and Walk. The test was conducted to find how accurately the image-based system could classify the gestures and actions that were based on similar postures. After the data samples of each subject were split into two-thirds training and one-third testing, the gestures were recognised with an accuracy of 93.00%.

For classification tasks, there are various machine learning algorithms to select from. Machine learning algorithms, such as SVMs and random forests, utilise a training dataset from which descriptive and unique features are extracted. It is important that the extracted features are effective at training the algorithm to accurately predict the classes within unseen data samples [15]. Nevertheless, [16] states that once the task of extracting features becomes almost as challenging as the problem for which the machine learning model is used, this method of learning may not be suitable. Alternatively, a more advanced manner of learning called deep learning can be used. Deep learning algorithms obtain knowledge from experience where simple concepts are used to build complex concepts. For example, corners and contours are simple concepts that can be combined to be defined as edges, subsequently representing the concept of a person. It is also detailed in [16] that concepts are built on top of one another, establishing multiple layers and a “deep” architecture.

A Convolutional Neural Network (CNN) is a type of deep learning neural network designed to process series and image data. With the capability of automatically selecting features from the input data, it can be useful to employ a CNN for recognition-based tasks involving images of 3D shapes. Such tasks have previously been fulfilled using CNNs to recognise 3D shapes [17]. This work was motivated by the theory that rather than using 3D shape descriptors, a 3D shape could be better recognised using a series of views of the 3D shape rendered as 2D images. The Multiview CNN (MVCNN) was designed by capturing 12 unique 2D views of the 3D shape that was to be recognised and inputting the 2D images separately into the CNN. View-based features were then extracted and inputted into a view-pooling layer. The shape descriptor was finally obtained by passing the extracted features through the final part of the network. The network primarily consisted of five convolutional layers, three fully connected layers and a softmax classification layer. After pretraining the network with ImageNet [18], all of the 2D views for each 3D shape were used to finalise the training. The work was evaluated on the Princeton ModelNet dataset [19] and compared against various other works. The MVCNN outperformed each of the state-of-the-art descriptors. The MVCNN was fine-tuned on the 2D views of the 3D shapes from the ModelNet training set. This fine-tuning bolstered the performance, obtaining 89.9% classification accuracy. This accuracy was a 12.8% increase from a state-of-the-art 3D shape descriptor, demonstrating how 3D objects within an environment can be successfully recognised using a CNN without the need for 3D shape descriptors. Using multiple 2D images representing various views of the object was instead shown to be a superior solution.

As there is potential for video cameras to have an intrusive nature [5], a different method of image capture can be facilitated to avoid this. Various approaches for the preservation of privacy using thermal imagery have been proposed, such as preserving privacy during the digitisation of the thermal image, altering the sensor noise to remove facial features and the use of exposure bracketing to preserve privacy in thermal high-dynamic range (HDR) images [20]. In the first approach, sensor values that fell within the human temperature range were detected as the image was digitised and so their pixels were zeroed. This prevented any successful recognition of a person’s face. The second approach was also conducted during the creation of the image where microbolometer voltages and gain amplification of the thermal device were altered to permit only image noise that would hide a person’s facial features. The same gesture dataset consisting of 20 examples of 10 hand gesture classes was used for both approaches to determine how well the gestures could be classified following the approach’s application. A multiclass bag-of-words SVM-based classifier was trained, and an accuracy of 97% was achieved with both approaches. In the third approach, areas within a thermal image equating to the temperature range of human skin were removed by either overexposing or underexposing the pixels, while HDR is maintained throughout the rest of the image.

There has been a concern highlighted that even if a system was not designed to report images, the images could still be accessed if the system was hacked. The study in [21] proposed an approach to detecting and identifying people while the captured data preserved their privacy. The system, called Lethe, used limited memory storage and data transmission so that full thermal images would not be compromised in the event of the system being hacked. Two thermal cameras were stacked on top of one another in a door frame to collect data as people walked through the door. Values that fell within the range of human skin temperature were used for person detection where the direction of movement was determined by comparing the current frame with the previous frame. Height was chosen as the feature to detect a person’s identity as only the pixel representing the top of a person’s head, within a single thermal camera’s Field of View (FOV), was required to determine their height. Both cameras calculated a height value, which was used alongside the person’s distance from the cameras to estimate their physical height. The experiment found that Lethe could predict the direction of a person’s movement with an overall accuracy of 99.70%. In a best case example, three participants were identified, as they passed through the doorway several times, with an accuracy of 99.10%. Pairs of participants with a difference of 5 cm or greater in their height when walking were identified with an accuracy of 96.00%, and an accuracy of 92.90% was achieved for differences of 2.5 cm. In total, 21 values were required in the thermal camera’s memory for this approach, resulting in a memory requirement of 33 bytes. Only 0.69% of an image could, therefore, ever be stored on the 60 × 80 pixel thermal camera that was used in this study. A low data rate hardware transmitter was also used in the attempt to preserve privacy. The transmissions from the device were expected to last a minimum of 0.366 s; therefore, the data rate was only required to be 30 bytes per second. The limited memory storage alongside the low data rate ensured that full thermal images could not be taken from the device.

Thermopiles use thermal energy as their input in order to output a voltage in the range of tens or hundreds of millivolts. This voltage is directly proportional to the local temperature difference on the thermopile. Thermopiles can be used to provide a level of spatial temperature averaging [22]; therefore, an imaging solution can be realised, and this has been achieved through the development of thermopile 2D arrays [23]. The thermopile infrared sensor (TIS) devices generate temperature values of the local space that are stored within arrays whose sizes range from 8 × 8 to 120 × 84. The temperature values generated at any given instant can be used to generate a thermal image by scaling the values to the range of 0–255. In such a case, each value would represent a pixel’s grey level. This would result in the hottest aspects of the image being represented by pixels closer to the upper limit of the range (white) with the colder portions being represented by pixels closer to the lower limit (black). The thermal images produced can clearly show the heat signature of the person within a monitored space; however, no discernible characteristics of the person’s body or their face can be seen [24].
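The scaling of temperature values to grey levels described above can be sketched as follows. This is a minimal illustration only: it assumes a per-frame min-max scaling, which is one common choice and is not stated in the text, and the function name `to_grey_levels` and the sample temperatures are hypothetical.

```python
def to_grey_levels(frame):
    """Scale a 2D list of temperatures to integer grey levels in 0-255.

    Assumption: min-max scaling is applied per frame, so the hottest
    value maps to 255 (white) and the coldest to 0 (black).
    """
    values = [t for row in frame for t in row]
    lo, hi = min(values), max(values)
    if hi == lo:
        # A uniform frame carries no contrast; map every pixel to black.
        return [[0 for _ in row] for row in frame]
    scale = 255.0 / (hi - lo)
    return [[int(round((t - lo) * scale)) for t in row] for row in frame]


# Hypothetical 2 x 2 frame of temperatures in degrees Celsius.
frame = [[20.0, 22.0], [26.0, 30.0]]
print(to_grey_levels(frame))
```

A fixed temperature range shared by all frames could be used instead of the per-frame minimum and maximum if grey levels need to be comparable between frames.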

In contrast to the previously highlighted privacy preserving approaches, our work utilises the low-resolution images captured by TISs. High-resolution thermal images have proven to be advantageous while preserving privacy [20,21] where the facial features of a person were successfully hidden while maintaining a high-quality image for the rest of the scene. A high resolution was particularly practical in [21] as identifying the pixel representing the top of a person’s head allowed for the eventual estimation of their height, therefore facilitating the identification of the person. A low-resolution image would not allow for an accurate determination of the top of the head, subsequently hindering the system’s capability to use height to accurately differentiate between people. For our work, however, only the differences in shape of the inhabitant’s heat signature between different pose classes and their position within the frame are needed from the data; therefore, the TIS provides sufficient information. The low resolution of the data benefits our approach as no image processing techniques are necessary to remove characteristics of the person’s face from the data; therefore, if a system incorporating TIS data was hacked, privacy would still be preserved. Unlike the previously detailed work that is able to maintain a high resolution for the environment within the images, the low-resolution TIS data is unable to include details of the environment. This feature is not, however, necessary for our work’s application area and, instead, our work benefits from a lack of environmental detail as images of one’s home cannot be recorded, subsequently improving the privacy preservation.

In our previous work with TISs, we proposed an approach for inferring basic activities performed within a smart kitchen, such as using the fridge and sitting at the kitchen table [25]. This was accomplished by combining knowledge of the inhabitant’s pose with the object that was “nearest” to the person at the time of the pose performance. The “nearest” object was determined using the ceiling TIS frame to calculate the Euclidean distance between the person and each kitchen object, whereby if the shortest distance was less than an empirically chosen threshold, the person was deemed “near” the object. The pose prediction and the “nearest object” calculation were used in conjunction with one another to infer the most likely activity being performed, e.g., when the person bent down while near the fridge, it was inferred that the fridge was being used.
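The “nearest object” rule can be illustrated with a short sketch. All names, coordinates and the threshold value below are hypothetical and chosen only for illustration; the original work states only that Euclidean distance in the ceiling TIS frame was compared against an empirically chosen threshold.

```python
import math


def nearest_object(person_xy, objects, threshold):
    """Return the closest object's name if it lies within the threshold.

    person_xy: (x, y) position of the person in the ceiling frame.
    objects:   mapping of object name to its (x, y) position.
    Returns None when even the closest object is not "near".
    """
    name, distance = min(
        (
            (n, math.hypot(person_xy[0] - x, person_xy[1] - y))
            for n, (x, y) in objects.items()
        ),
        key=lambda pair: pair[1],
    )
    return name if distance < threshold else None


# Hypothetical pixel coordinates of kitchen objects in the ceiling frame.
KITCHEN = {"fridge": (5, 10), "kettle": (20, 8), "table": (15, 25)}

print(nearest_object((7, 11), KITCHEN, threshold=4.0))   # person beside the fridge
print(nearest_object((12, 16), KITCHEN, threshold=4.0))  # person in open space
```

Pairing the returned object with a predicted pose (for instance, a bending pose near the fridge) would then give the activity inference described above.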

Seven poses were selected for classification: left arm extended forwards, right arm extended forwards, left arm extended sideward, right arm extended sideward, bending, sitting and both arms down. Random forest, SVM and complex decision tree machine learning models were each utilised to classify the poses and a comparison was conducted where the random forest was found to be the best performing model. Both the training data and the testing data were captured using two TISs integrated within the environment. One TIS was embedded in the ceiling to provide a top-down perspective of the pose performances and one was positioned in a corner of the room to provide a lateral perspective. For each pose performance, the person’s heat signature was detected within both the ceiling TIS frame and the corner TIS frame. From the person’s heat signature in each TIS frame, 14 unique features were extracted, after which both feature vectors were combined to produce a single feature vector consisting of 28 feature values. This feature vector was used as the random forest’s input where a prediction for the pose was the subsequent output. The poses were classified at a rate of 88.91% and the nearest objects were calculated with an accuracy of 81.05%. The activities were inferred with an accuracy of 91.47%, therefore demonstrating how low-resolution thermal imagery could be effectively utilised for the prediction of poses and, as a result, the inference of activities.

As shown by our previous work, using TIS data to recognise the poses being performed can provide an understanding of the activities an inhabitant conducts within the smart environment, while preserving privacy. It was believed, however, that the pose recognition rate could be significantly improved from what was achieved in our previous work. The work conducted in this paper, therefore, introduces two major changes to the previous pose recognition approach. The first change was that the number of TISs deployed in the smart kitchen was increased from two to five. It was hypothesised that introducing the means of capturing additional perspectives of each pose performance would reduce misclassifications that resulted from either the person not being detected by a TIS or part of their pose being occluded. The second change was the use of CNNs with the TIS data in place of the random forest machine learning algorithm. Where a single random forest was previously used and relied on feature level fusion for its input, in this work, one CNN was used for each of the five TISs, where the input to each CNN was the data recorded by its corresponding TIS. A minor change to the approach was with regards to the number of pose classes. The differentiation between the left and right arm whenever the inhabitant was extending an arm outward was determined to be a redundant feature of the previous approach as, ultimately, it was only the extension of the arm itself that was required to understand the actions of the inhabitant. For this reason, the work presented in this paper does not include predictions for the specific arm that was being extended outwards during the completion of a pose, only that an arm was being extended outwards.

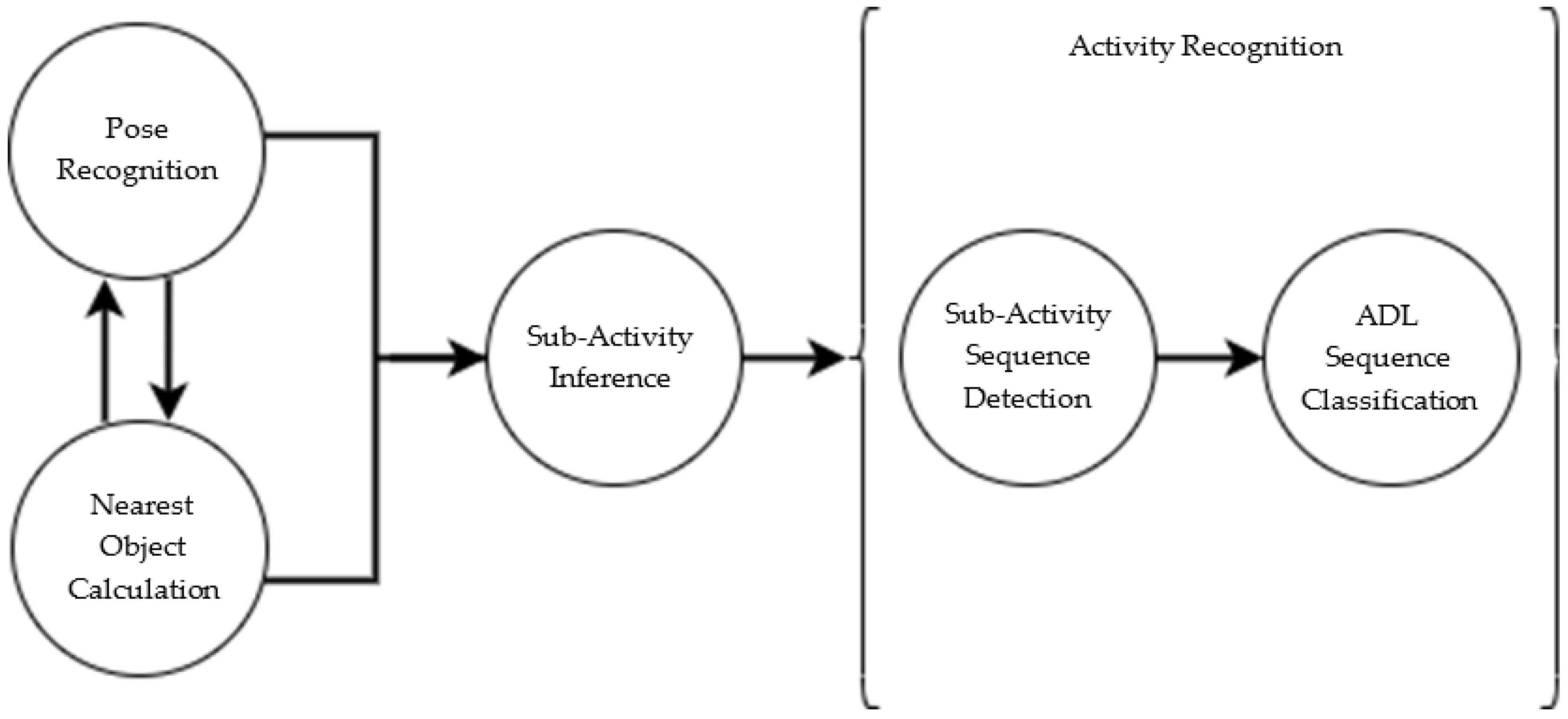

This work is a low-level component of our overall proposed framework for ADL recognition, which is depicted in Figure 1. This paper expands upon the pose recognition approach to improve classification accuracy so that, ultimately, we will be able to improve upon our subactivity inference rate in future work when we investigate the use of subactivity sequences to detect and predict ADLs.

3. Materials and Methods

This section details the materials required for the experiment with regards to the sensors, the data and the CNN architecture. It also discusses the methods employed to determine the most accurate permutation of TISs and CNNs that maintains a sufficient level of practicality.

3.1. Thermopile Infrared Sensor Details

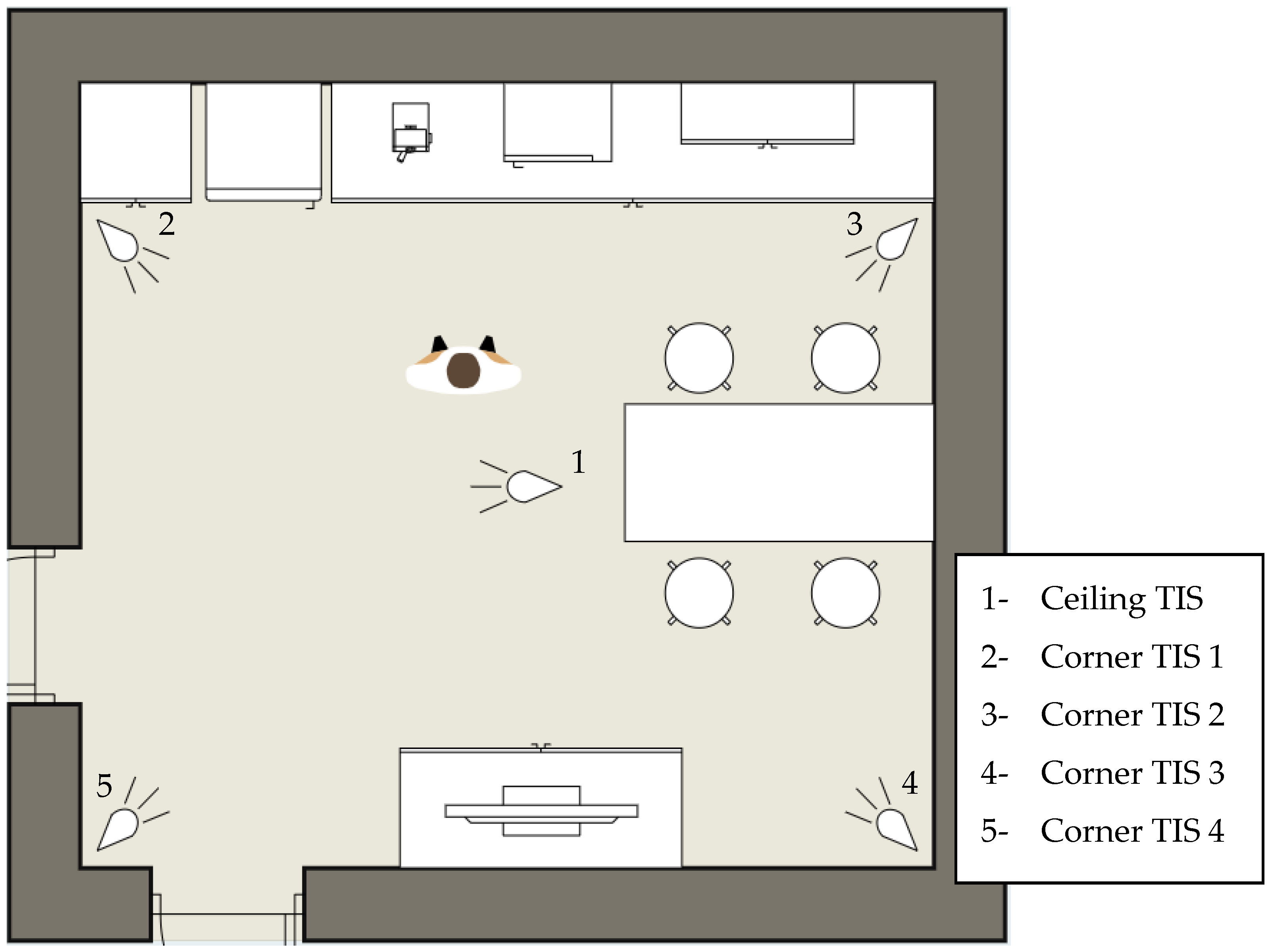

A smart kitchen environment, located within Ulster University [26], was used for the deployment of TISs. Within the smart environment, there are common kitchen objects: kettle, microwave, overhead cupboards, fridge and a kitchen table with four surrounding chairs. Images of the smart environment are presented in Figure 2.

TISs were installed in each of the room’s four corners and one TIS was embedded in the ceiling. The deployment of TISs offered four lateral views of pose performances and one top-down view. A top-down perspective of the environment is visualised in Figure 3 where the positions of each TIS are labelled. The laterally positioned sensors were stabilised using tripods and were each maintained at the same height. The TISs were integrated with the SensorCentral [27] middleware, allowing for the captured thermal data to be exported as a JSON object.

Each TIS operated at a frame rate of approximately 8 frames per second. To provide synchronisation for the data capture, whenever images were recorded by the TISs, their timestamps were also stored for comparison. If there was a difference of 500 ms or more between the capture of any of the frames, the frame capture was not considered to have been synchronised and the frames were discarded.
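The synchronisation rule can be expressed compactly. The sketch below is illustrative only: it assumes timestamps are available in milliseconds and treats a set of frames as synchronised when the earliest and latest captures are less than 500 ms apart; the function name and sample values are hypothetical.

```python
def is_synchronised(timestamps_ms, max_gap_ms=500):
    """Return True if all frames were captured within max_gap_ms of each other.

    A difference of max_gap_ms or more between any two timestamps means the
    set of frames is treated as unsynchronised and should be discarded.
    """
    return max(timestamps_ms) - min(timestamps_ms) < max_gap_ms


# Hypothetical capture times (ms) for the five TISs.
print(is_synchronised([1000, 1100, 1250, 1300, 1499]))  # spread of 499 ms
print(is_synchronised([1000, 1100, 1250, 1300, 1500]))  # spread of 500 ms
```

Comparing only the minimum and maximum is sufficient because the largest pairwise difference in a set of timestamps is always between its earliest and latest values.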

3.2. Thermal Imagery for Training and Testing the CNN

The TISs were first used to capture the training data, which consisted of five pose classes: Arm Forward, Arm Side, Arms Down, Bend and Sitting. The classes were selected as the poses are commonly performed during the completion of activities within the kitchen. Accurate classification of the poses, therefore, could eventually be used to provide further knowledge with respect to the ADLs being performed. Examples of how each pose appeared to the TISs are presented in Table 1. The inhabitant performed the poses in the centre of the environment while periodically changing the direction they faced, allowing for the TISs to record variations in each pose class. The five TISs were used to record 500 unique instances of each pose class, resulting in a total of 2500 individual pose performances within the training dataset.

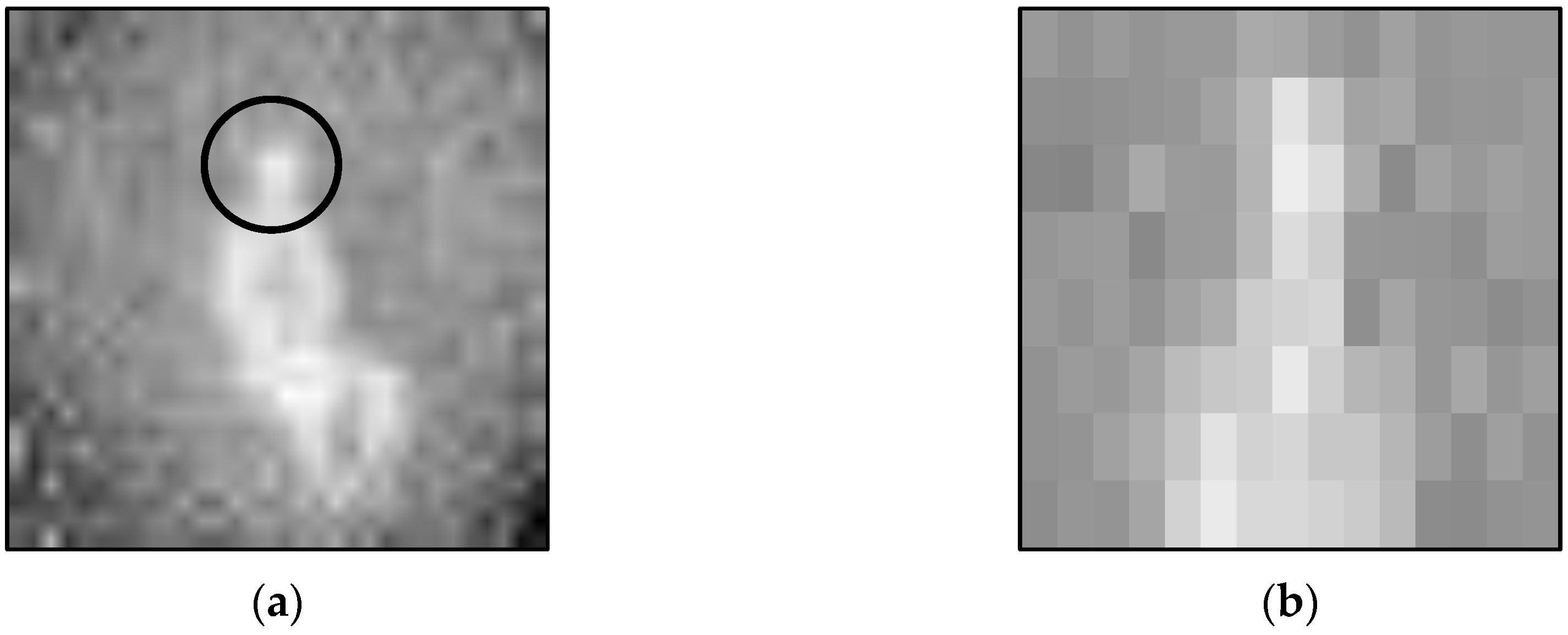

The training data was captured over several days to account for varying ambient temperatures as a significant rise in the ambient temperature would increase the amount of image noise, consequently raising the difficulty of detecting a person. A significant decrease in the ambient temperature, however, would allow for easier detection of the inhabitant as there would be a greater difference between human body temperature and the temperature of the environment, along with the objects within it. There were, however, no considerable changes between the data captured on each day. Each training TIS image was manually reviewed and annotated with the pose class that was performed within the image. Manual annotation was also conducted for the test dataset in order to provide the ground truth with which the pose predictions for the test data could be compared for calculation of the experiment results. The capture of Sitting by C2 in Table 1 is depicted in Figure 4 where the image has been enlarged and zoomed in on the inhabitant’s face. The image exemplifies how the data used in this work was successful in protecting the privacy of the inhabitant as no definable characteristics of the inhabitant’s face are visible.

3.3. Architectural Design of the Convolutional Neural Network

In our previous work with TIS data, more traditional classifiers such as the random forest, SVM and decision tree were used with thermal imagery for classification purposes. The results that were achieved showed the approach to be successful, however, it was intended to improve upon the attained classification accuracy. The approach proposed within this work involved the deployment of additional TISs within the environment, to provide an increased number of viewing angles for each pose performance. It was also decided to investigate how CNNs could potentially improve the pose recognition rate achieved by the previously tested classifiers. This design choice was made due to the ability of CNNs to automatically extract low to high level features from raw image data for classification. In this work, five separate CNNs were trained, where each CNN corresponded with one of the five TISs. Each CNN was trained using only the thermal data captured by its respective TIS. Nevertheless, the CNNs maintained the same architectural design.

The CNN structure implemented for this experiment was initially based on the widely used AlexNet [28]. For our approach to pose recognition, modifications were made to the AlexNet architecture to improve the effectiveness of the network for the low-resolution thermal data captured by TISs. For a detailed explanation of AlexNet, readers may refer to [28]. Our CNN architecture is depicted in Figure 5, and it consisted of 30 layers of which eight were learnable: five convolutional and three fully connected. Unlike AlexNet, the output from the third fully connected layer was input into a five-way softmax layer as there were only five class labels rather than 1000. The only additional layer type included in our architecture, which is not used in AlexNet, was the batch normalisation layer. This addition facilitated faster network training due to the resulting higher learning rates, subsequently allowing for more efficient experimentation with the network. A batch normalisation layer was added after each of the five convolutional layers and before the activation functions, as such functions can result in non-Gaussian distributions [29].

Irrespective of the additional batch normalisation layers and the potential risk for overfitting that their inclusion created, the dropout layers used in AlexNet were maintained within our network. Experimentation also found that the omission of the dropout layers resulted in a worse training performance. A range of the hyperparameters for each convolutional layer was altered. The stride of the first convolutional layer was changed from four to one because, irrespective of the detrimental impact that a smaller stride could have on training speed, it ultimately favoured accuracy in comparison to a larger stride [30]. Relative to AlexNet, the number of filters in the five convolutional layers was reduced from 96, 256, 384, 384 and 256 to 8, 16, 32, 64 and 128, respectively. Due to the low-resolution images used in our approach, it was estimated that the number of patterns that could be detected from the data would be much lower than for the RGB images used to train AlexNet. It was, therefore, hypothesised that it would be more appropriate to use a lower number of filters for the less complex low-resolution imagery. The number of filters increased with each new convolutional layer so that the deeper layers could effectively analyse the increasingly higher detail features, as performed with the architecture created in [30]. Like AlexNet, response-normalisation and max-pooling layers followed the first and second convolutional layers and a third max-pooling layer followed the fifth convolutional layer. The ReLU (Rectified Linear Unit) activation function was also retained from AlexNet; however, the placement of the ReLU layers within our architecture was different. Instead of following each convolutional and fully connected layer, the ReLU layers followed each batch normalisation layer.

The image input layer was provided images of size 30 × 32 × 1, and the first convolutional layer applied 8 sliding convolutional filters of size 11 × 11 × 1 with a one-pixel stride. Due to the single pixel stride, the ‘same’ padding hyperparameter used for the first convolutional layer in AlexNet was maintained so that the spatial output size remained the same as the input size for the layer. The second convolutional layer applied 16 sliding convolutional filters of size 5 × 5 × 8 to the output of the first convolutional layer once the output had passed through its respective pooling and normalisation layers. The third convolutional layer applied 32 sliding filters of size 3 × 3 × 16 to the pooled and response-normalised output of the second convolutional layer. The fourth convolutional layer used 64 sliding filters of size 3 × 3 × 32 and the fifth convolutional layer utilised 128 sliding filters of size 3 × 3 × 64.
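As a rough check on how small the convolutional part of this network is, the filter sizes and counts quoted above can be turned into a learnable-parameter count. The sketch below is illustrative only: it assumes ungrouped convolutions with one bias per filter, ignores the batch normalisation and fully connected layers, and the names `CONV_LAYERS`, `conv_params` and `depths_are_consistent` are hypothetical.

```python
# Convolutional layers as described in the text:
# (filter height, filter width, input channels, number of filters).
CONV_LAYERS = [
    (11, 11, 1, 8),
    (5, 5, 8, 16),
    (3, 3, 16, 32),
    (3, 3, 32, 64),
    (3, 3, 64, 128),
]


def conv_params(height, width, in_channels, n_filters):
    """Weights plus one bias per filter (assumption: biases are used)."""
    return height * width * in_channels * n_filters + n_filters


def depths_are_consistent(layers):
    """Each filter's depth should equal the previous layer's filter count."""
    return all(prev[3] == cur[2] for prev, cur in zip(layers, layers[1:]))


per_layer = [conv_params(*layer) for layer in CONV_LAYERS]
total = sum(per_layer)

print("parameters per convolutional layer:", per_layer)
print("total convolutional parameters:", total)
print("filter depths consistent:", depths_are_consistent(CONV_LAYERS))
```

Under these assumptions the five convolutional layers hold roughly one hundred thousand learnable parameters in total, which is consistent with the stated aim of using fewer filters for low-resolution imagery.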

3.4. Evaluation Methodology

The five TISs were used to capture a total of 2500 unique pose performances in which 500 frames of each of the five pose classes were recorded. As previously stated, each of the five TISs had a corresponding CNN where the CNNs were trained only with the frames captured by their respective TISs. The thermal data delegated to training each CNN was stratified and 10-fold cross-validation was implemented, leaving 90% of the data for training and 10% for validation. Each CNN was trained with its 90% training partition and validated with the 10% validation partition to give an indication of the capabilities to predict poses from the CNN’s respective TIS viewing angle. The process was repeated 10 times, allowing a different 10% partition of the data to act as the validation data each time, resulting in 10 models. The model that achieved the highest accuracy score with its respective validation partition was selected as the CNN for the particular TIS viewing angle to later apply to the test dataset for the final results. With respect to the checkpoint rule applied by the deep learning model throughout the training phase, the most recently updated model was saved, irrespective of the results it attained with the validation data. This training process was conducted for each of the TISs’ CNNs.
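The partitioning described above can be sketched in a few lines. This is a minimal illustration, not the authors' implementation: it assumes each TIS contributes 2500 labelled frames (500 per class), uses integer class labels, and the function name `stratified_folds` and the fixed seed are hypothetical.

```python
import random
from collections import Counter, defaultdict


def stratified_folds(labels, k=10, seed=0):
    """Split sample indices into k folds with equal class proportions."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)

    folds = [[] for _ in range(k)]
    for indices in by_class.values():
        rng.shuffle(indices)
        # Deal each class's samples round-robin across the folds.
        for position, index in enumerate(indices):
            folds[position % k].append(index)
    return folds


# 500 frames for each of five pose classes, labelled 0..4 for illustration.
labels = [pose for pose in range(5) for _ in range(500)]
folds = stratified_folds(labels)

for fold_number, validation in enumerate(folds):
    held_out = set(validation)
    training = [i for i in range(len(labels)) if i not in held_out]
    counts = Counter(labels[i] for i in validation)
    print(fold_number, len(training), len(validation), dict(counts))
```

Each iteration yields one 90%/10% split. A model would be trained on `training`, scored on `validation`, and the best-scoring of the ten models kept, as described in the text.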

The test dataset was used to evaluate the performances of the trained CNNs. A total of 250 unique pose performances were captured for the test dataset where 50 frames of each pose class were included. Just as for the training dataset, the test dataset was captured from the five TIS viewing angles so that each CNN could be evaluated using only the data captured by its corresponding TIS. This test dataset was manually annotated to provide the ground truth to be used to produce the final results that are later presented in this paper.

3.5. Experimental Design

In our experiments, we investigated the use of CNNs to recognise poses performed in a smart kitchen. The assumption was made that the poses were conducted in the centre of the environment so that the pose classification capabilities of the CNNs could be evaluated with minimal occlusions of the pose performances. With a separate CNN trained for each TIS, a total of five trained CNNs were used for the experiment. Introducing five viewing angles into a pose recognition solution increased its robustness. If, e.g., a TIS’s view of the person was occluded or the TIS malfunctioned, the other TISs were in place to fulfil the classification.

As each CNN was trained only with data captured by its respective TIS, it was important to ensure that the pose instances were clearly depicted in each thermal frame. For instances of Arm Forward and Arm Side, it was possible that if the pose was performed with the arm extended towards the TIS, the pose may have looked more similar to the Arms Down class. The example frames in Table 1 show evidence of this. Upon capturing the training data, each frame was, therefore, manually checked to ensure that the pose was clearly depicted; if it was not, the image was replaced with one in which the pose class was clearly depicted. This was only conducted for the training dataset to improve the training of each CNN and no replacement frames were used for the test dataset. Using the trained CNNs in conjunction with one another, it was investigated how accurately the test data could be classified with regard to recall, specificity, precision and F1-score. An examination was conducted to find a permutation of the TISs that would be more in favour of cost, scalability and practicality, while still maintaining a high recognition rate.
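For reference, the four measures named above can be computed per class from one-vs-rest counts. The sketch below is a generic illustration with made-up labels. How the per-class values were averaged into overall scores is not stated in this section, so no averaging is shown, and the function name `one_vs_rest_metrics` is hypothetical.

```python
def one_vs_rest_metrics(y_true, y_pred, positive):
    """Recall, specificity, precision and F1 with one class treated as positive."""
    tp = fp = fn = tn = 0
    for truth, guess in zip(y_true, y_pred):
        if truth == positive and guess == positive:
            tp += 1
        elif truth != positive and guess == positive:
            fp += 1
        elif truth == positive:
            fn += 1
        else:
            tn += 1

    def ratio(numerator, denominator):
        # Report 0.0 when a metric is undefined (empty denominator).
        return numerator / denominator if denominator else 0.0

    recall = ratio(tp, tp + fn)
    specificity = ratio(tn, tn + fp)
    precision = ratio(tp, tp + fp)
    f1 = ratio(2 * precision * recall, precision + recall)
    return {"recall": recall, "specificity": specificity,
            "precision": precision, "f1": f1}


# Made-up ground truth and predictions, for illustration only.
y_true = ["Bend", "Bend", "Arms Down", "Arms Down", "Arm Side"]
y_pred = ["Bend", "Arms Down", "Arms Down", "Arms Down", "Arm Side"]

metrics = one_vs_rest_metrics(y_true, y_pred, positive="Arms Down")
print(metrics)
```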

For the experiments with the test dataset, the five frames that were captured during an instance of a pose performance were used to produce a prediction for the class of pose. Each TIS frame was input into its respective CNN and each CNN produced its own pose prediction. To produce the prediction output, the softmax layer converted a vector of real values, received from the previous layer, into a vector of values where each value represented one of the pose classes. The values summed to 1 so that they could be interpreted as probabilities. As each pose class was accompanied by a probability value, the class with the highest probability was selected as the CNN’s own pose prediction output. As each of the CNNs outputted a prediction for a single pose instance, a method for selecting one of the five predictions was required. A Majority Vote scheme was applied, where the class, which was predicted most among the five CNNs, was selected as the prediction for the pose instance.
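The prediction step described above can be sketched as follows. The raw scores are invented, the class label "Sit" is an assumed name for the sitting pose, and the tie-breaking rule (the first class encountered wins) is an assumption, since the text does not say how ties between CNNs were resolved.

```python
import math
from collections import Counter

# "Sit" is an assumed label for the sitting pose class.
CLASSES = ["Arms Down", "Arm Forward", "Arm Side", "Bend", "Sit"]


def softmax(scores):
    """Convert real-valued scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(score - peak) for score in scores]
    total = sum(exps)
    return [value / total for value in exps]


def predict(probabilities, classes=CLASSES):
    """Return the class with the highest probability."""
    best = max(range(len(classes)), key=lambda i: probabilities[i])
    return classes[best]


def majority_vote(predictions):
    """Return the most frequent prediction (ties: first encountered wins)."""
    return Counter(predictions).most_common(1)[0][0]


# Invented final-layer scores from five CNNs for one pose instance.
raw_scores = [
    [0.2, 0.1, 0.3, 2.5, 1.9],
    [0.1, 0.4, 0.2, 1.1, 2.0],
    [0.3, 0.2, 0.1, 3.0, 0.5],
    [0.0, 0.1, 0.2, 2.2, 1.0],
    [2.4, 0.3, 0.1, 0.9, 0.2],
]
votes = [predict(softmax(scores)) for scores in raw_scores]
final = majority_vote(votes)
print(votes, "->", final)
```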

Due to practical limitations, installation of the sensor configuration used in this experiment may not be possible in all environments. With respect to the ceiling TIS, the material or layout above the ceiling may not be fit for installing the TIS. If an environment had a lower ceiling height than the environment in which the CNNs were trained, the ceiling TIS’s FOV may not be sufficient for providing complete coverage of the environment. Detection of the inhabitant and their actions would, consequently, be limited. It would also be possible for the ceiling height to be higher than that of the training environment. If the ceiling was over 5 m, detection of the inhabitant’s heat signature would become more difficult for the ceiling TIS. Oversensorising the environment would also not be in the best interest of cost and postinstallation maintenance, especially if similar accuracies could be obtained with fewer TISs.

It is important to highlight that it is possible for an environment to be large enough that the corner TISs and CNNs could be subject to the same distance-based issues as the ceiling TIS. Unlike the ceiling TIS, however, the laterally positioned TISs are not limited to a fixed distance from the inhabitant and so the exact positioning of the TISs can be altered, dependent on the environment in which they are deployed. The task of installing and positioning lateral TISs was more practical than with a ceiling TIS and so the dependency on the ceiling TIS for pose recognition was omitted in further tests.

The pose classification capabilities of permutations of the corner TISs’ CNNs were tested, while excluding the ceiling TIS’s CNN from providing predictions due to the stated practical limitations. The ceiling TIS’s individual results were used as the benchmark as these results were the highest from any of the individual TIS performances. The majority voting, Most Confident CNN and Soft Voting prediction selection methods were implemented for the experiments and the results attained by each method were compared. The Most Confident CNN scheme was implemented by analysing the prediction probabilities generated by each of the five CNNs. As previously detailed, to provide a pose prediction, each CNN generated a probability distribution for the five pose classes, upon which the class with the highest corresponding probability value was selected as the CNN’s prediction. For the Most Confident CNN prediction selection approach, the CNN whose pose prediction was accompanied by the highest probability value was chosen as the most confident CNN and so its prediction was selected as the final prediction output for the pose instance.
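A minimal sketch of the Most Confident CNN selection is given below. The probability distributions are invented, the class label "Sit" is an assumed name, and the function name `most_confident` is hypothetical. If two CNNs report the same top probability, this sketch keeps the first one, which is an assumption rather than a rule stated in the text.

```python
# "Sit" is an assumed label for the sitting pose class.
CLASSES = ["Arms Down", "Arm Forward", "Arm Side", "Bend", "Sit"]


def most_confident(distributions, classes=CLASSES):
    """Return the prediction of the CNN whose top probability is the highest."""
    best_probability, best_class = -1.0, None
    for probabilities in distributions:
        top = max(range(len(classes)), key=lambda i: probabilities[i])
        if probabilities[top] > best_probability:
            best_probability = probabilities[top]
            best_class = classes[top]
    return best_class, best_probability


# Invented softmax outputs from four corner CNNs for one pose instance.
distributions = [
    [0.10, 0.55, 0.20, 0.10, 0.05],
    [0.05, 0.30, 0.45, 0.10, 0.10],
    [0.02, 0.03, 0.90, 0.03, 0.02],
    [0.40, 0.35, 0.15, 0.05, 0.05],
]
label, confidence = most_confident(distributions)
print(label, confidence)
```

Unlike a vote, this scheme lets a single CNN override the others, so one overconfident wrong prediction decides the outcome.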

The third method implemented was the Soft Voting approach. Given that each classifier represented a different viewing angle of the sensed environment and the inhabitant, weight values were assigned to each CNN as one may have been more capable of classifying the poses than the others. After each TIS image was input into the appropriate CNN, each CNN outputted a probability distribution where each probability corresponded with one of the five possible pose classes. The weight value assigned to a CNN was multiplied by each of the five pose probability values from the probability distribution, resulting in five weighted probabilities where each one corresponded with one of the five pose classes. After the weighted probabilities were calculated for each CNN probability distribution, the weighted probability values for each pose class were summed. For example, a weighted probability value was calculated for the Arms Down class by each CNN. Each of the Arms Down weighted probability values were summed, resulting in a single weighted sum value for the Arms Down class. A weighted sum value was calculated for each pose class and the class with the highest weighted sum was selected as the final prediction for the pose performance. The Soft Voting calculation for finding the weighted sum is shown in Equation (1) [31]:

$$\hat{y} = \arg\max_{i} \sum_{j=1}^{m} w_{j} \, p_{ij} \qquad (1)$$

where $m$ is the number of CNNs, $w_{j}$ is the weight applied to the probability distribution that was generated by the $j$th CNN, $p_{ij}$ is the probability that class $i$ is the correct class prediction according to CNN $j$ and $\hat{y}$ is the class representing the largest weighted sum.
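The weighted sum of class probabilities described for Soft Voting can be sketched directly. The distributions and weights below are invented for illustration, the class label "Sit" is an assumed name, and `soft_vote` is a hypothetical function name.

```python
# "Sit" is an assumed label for the sitting pose class.
CLASSES = ["Arms Down", "Arm Forward", "Arm Side", "Bend", "Sit"]


def soft_vote(distributions, weights, classes=CLASSES):
    """Weight each CNN's distribution, sum per class, and pick the largest sum."""
    sums = [0.0] * len(classes)
    for weight, probabilities in zip(weights, distributions):
        for class_index, probability in enumerate(probabilities):
            sums[class_index] += weight * probability
    winner = max(range(len(classes)), key=lambda i: sums[i])
    return classes[winner], sums


# Invented softmax outputs from three CNNs and their assigned weights.
distributions = [
    [0.60, 0.10, 0.10, 0.10, 0.10],
    [0.20, 0.50, 0.10, 0.10, 0.10],
    [0.10, 0.70, 0.10, 0.05, 0.05],
]
weights = [1, 2, 3]

label, weighted_sums = soft_vote(distributions, weights)
print(label, weighted_sums)
```

In this example the first CNN favours Arms Down, but the two more heavily weighted CNNs favour Arm Forward, so the weighted sum for Arm Forward (3.2) exceeds that for Arms Down (1.3).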

To determine the weight values for the classifiers, a naïve brute-force approach was employed [32]. In this approach, the range of values that could be used for the weights was determined by the number of CNNs involved. For example, if the CNNs for the four corner TISs were being used, then the values used for each CNN’s weight could only range from one to four. As each of the four CNNs had a possible four values to use as their weight, there was a total of 24 permutations of weight values that could be utilised. Each permutation of weight values was tested to find the weights that yielded the most accurate pose predictions. This naïve approach to assigning weights was preferable to deriving them from the training results because, upon capturing the training dataset and prior to training the CNNs, pose instances were replaced if they did not clearly depict their class. The validation data used to produce the training results for each CNN, therefore, consisted only of favourable samples of each pose. The training results were not representative of how the CNNs would perform with the synchronised pose performances from the test dataset and so the weights could not be determined from the training results.
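The brute-force search can be sketched as below. This is a hedged illustration rather than the authors' procedure: it assumes the 24 candidates are the orderings of the distinct values 1 to 4 (4! = 24), it scores each candidate by plain accuracy on whatever labelled samples the caller supplies, and the names `soft_vote_index`, `accuracy` and `best_weights` are hypothetical.

```python
from itertools import permutations


def soft_vote_index(distributions, weights):
    """Index of the class with the largest weighted probability sum."""
    n_classes = len(distributions[0])
    sums = [sum(w * probs[i] for w, probs in zip(weights, distributions))
            for i in range(n_classes)]
    return max(range(n_classes), key=lambda i: sums[i])


def accuracy(weights, samples):
    """Fraction of samples whose soft-vote prediction matches the true class."""
    hits = sum(soft_vote_index(dists, weights) == truth for dists, truth in samples)
    return hits / len(samples)


def best_weights(samples, n_cnns):
    """Try every ordering of the weights 1..n_cnns and keep the most accurate."""
    best = None
    for weights in permutations(range(1, n_cnns + 1)):
        score = accuracy(weights, samples)
        if best is None or score > best[0]:
            best = (score, weights)
    return best


# Toy data: two CNNs, two classes; each sample is (distributions, true class index).
samples = [
    ([[0.9, 0.1], [0.2, 0.8]], 0),
    ([[0.2, 0.8], [0.6, 0.4]], 1),
]
score, weights = best_weights(samples, n_cnns=2)
print(score, weights)
print(len(list(permutations(range(1, 5)))), "candidate weightings for four CNNs")
```

In the toy data the first CNN is correct on both samples, so the search assigns it the larger weight.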

5. Discussion

The aim of this study was to investigate how effectively unobtrusive thermal imagery could be used to train a deep learning network to recognise poses in previously unseen data, while preserving the privacy of the inhabitant. The deep learning network was inspired by AlexNet, with several modifications made to suit the low-resolution imagery captured by the TISs and improve the classification results. The Long Short-Term Memory (LSTM) architecture was considered for this work; however, as the problem involved the classification of single poses, the advantage of capturing temporal dependencies between different input images that the LSTM would provide was less relevant. A TIS was installed in the ceiling and in each of the room’s four corners. The five TISs were used to capture an equal number of frames for each of the five pose classes. Each TIS’s captured data was used to train a CNN, resulting in five CNNs trained to recognise poses from the viewing angle of their respective TIS. Upon finishing the training phase, unseen thermal data were used to test the CNNs. Much like in the training dataset, the test dataset was made up of five viewing angles for each pose performance. The TIS frame from each viewing angle was, therefore, input into its respective CNN. The output from this process was five pose predictions which were narrowed down to one final prediction using a Majority Vote scheme. This use of five TISs returned a high F1-score of 0.9920, demonstrating the successful implementation of the deep learning network for pose recognition.

The use of five TISs allowed for multiple perspectives of each pose performance and added robustness to the approach. The lateral perspectives provided by the corner TISs aided the ceiling TIS whenever it struggled to either detect the person or differentiate between poses with similar appearances. For example, from the perspective of the ceiling TIS, bending and sitting looked very similar and so the lateral perspectives helped differentiate between the classes. The ceiling TIS provided an important contribution with its strong capability to recognise whenever the person was extending an arm outwards and whether this was sideward or forward.

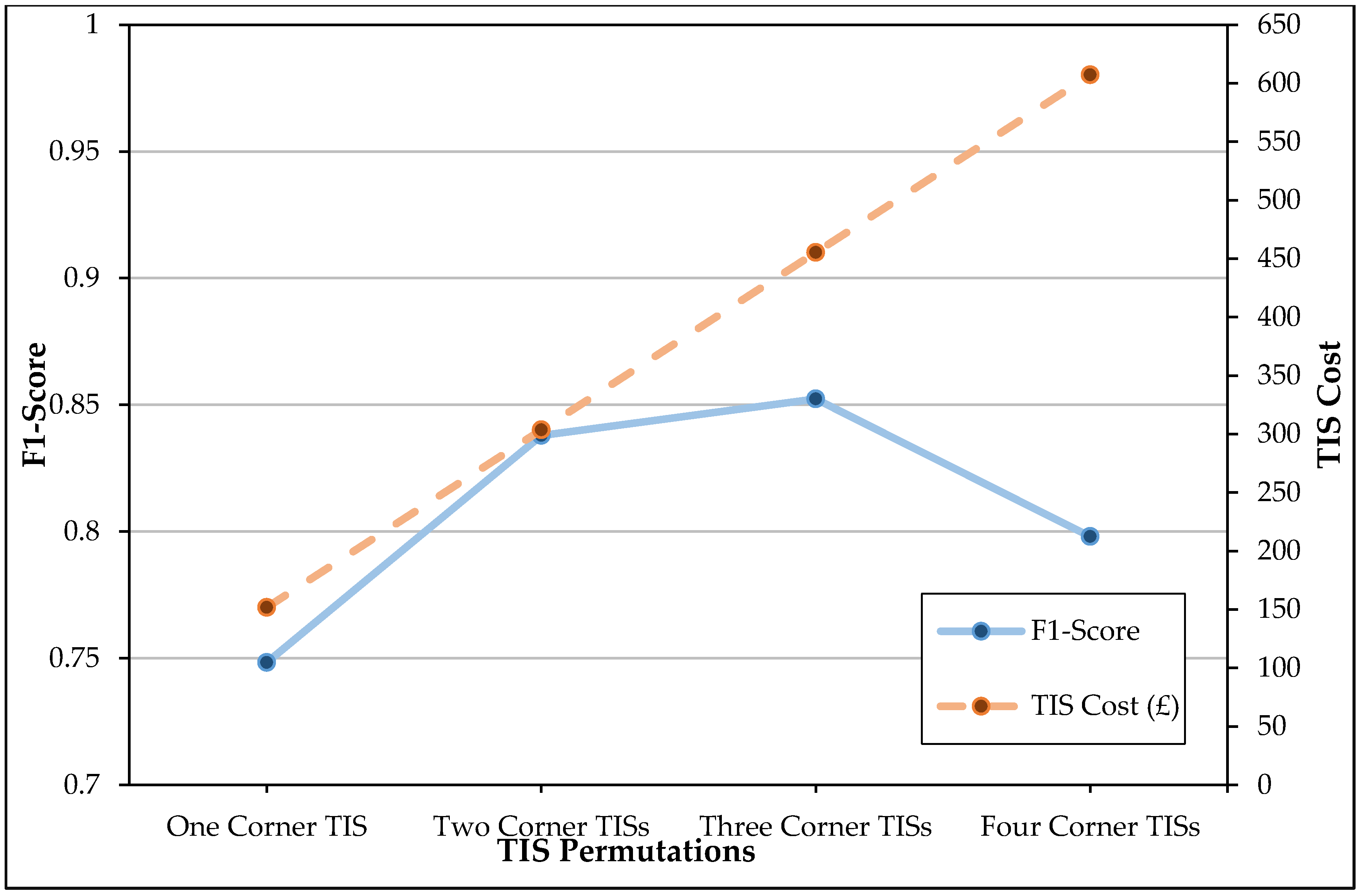

Beyond the successful pose classification that was achieved with five TISs, the most practical and cost-effective permutation of TISs was determined. The ceiling TIS did not fit the model of practicality due to the difficulty involved in deploying the TIS in an unobtrusive manner, e.g., ensuring no cables were visible. The F1-score from the ceiling TIS’s individual performance was the highest among all five TISs and so it was used as the benchmark with which to compare the performances of corner TIS permutations. There can be concern for environments varying in width and length, potentially causing the same distance-related issues suffered by the ceiling TIS for ceilings of varying height. For the laterally positioned TISs, however, the sensors are not limited to a fixed distance from the inhabitant. If necessary, it would be possible to position the TISs closer to where it is expected that the inhabitant will be most active.

Using four, three and two corner TISs, the CNNs achieved F1-scores of 0.9266, 0.9149 and 0.8468, respectively. These are promising results as the permutations of four and three corner TISs reached F1-scores that were very similar to that of the benchmark, suggesting that both accuracy and practicality can be achieved. A financial drawback, however, is that more than one corner TIS was required to achieve these scores. While the two-TIS permutation’s recognition rate was not as close to the benchmark, it uses only one additional TIS and so is a more cost-effective sensor deployment. The 0.0681 decrease from the three-TIS permutation and 0.1010 decrease from the benchmark could be considered a justifiable sacrifice to ensure a more practical and financially sound approach. Selecting between the permutations will, however, ultimately depend on the context and resources at hand.

The experiments were conducted a second time with the CNN architecture replaced by a random forest machine learning algorithm used in our previous work. The results were compared with the CNN results, and it was found that the best F1-score for a single corner TIS was achieved with a random forest model. The score was, however, still too low to justify the use of only one corner-based TIS, irrespective of the low cost. The CNNs significantly outperformed the random forests with three and four corner TIS permutations, whereas a similar result was achieved with the permutation of two corner TISs. With respect to the individual pose class scores, however, the F1-scores attained by CNNs were more favourable. As a more promising balance between performance, practicality and cost can be achieved with the use of CNNs and several permutations of corner TISs, our previous classification approach has been successfully improved upon.

In each experiment, TISs were used as the only means for monitoring the environment and capturing pose performances. The sole use of TISs allowed for the preservation of privacy in our approach. While multiple thermal images were recorded of the inhabitant during each pose performance, the low-resolution and greyscale nature of the images prevented any discernible characteristics of the inhabitant from being visible. As an improvement upon other privacy-preserving approaches, image processing techniques are not required to hide an inhabitant’s face and there is no manner of accessing identifiable features of the inhabitant should the system be hacked. There is, however, a significant loss in environmental detail through the utilisation of low-resolution images. Nevertheless, only changes in the shape of the inhabitant’s heat signature are required for our approach and these are sufficiently captured with the use of the TISs. Privacy has, therefore, been successfully preserved with our approach to collecting data within a smart environment for pose recognition.

6. Conclusions

High classification scores have been produced while preserving privacy with the various approaches proposed in this work; however, limitations are present with respect to the data capture process and the sizes of both the training and test datasets. The thermal data captured for the experiments sufficiently account for pose classes that are commonly performed throughout the completion of ADLs, as well as the variable appearances of each pose that are dependent on where the inhabitant is facing relative to the TISs. The sizes of the datasets are, however, limited as the training data consist of only 500 instances of each class, while the test dataset consists of only 250 total pose performances. A concern is that a larger dataset may expose further limitations in the work by negatively impacting the recognition results. In further work, we will aim to increase the size of the datasets by capturing more data or by employing data augmentation. The datasets will also be expanded with respect to the number of environments in which poses are performed. Evaluating the proposed approach with the inclusion of other environments will facilitate the testing of additional poses and activities, as well as different positions of the laterally based TISs relative to the inhabitant.

The process of capturing the pose instances for the training and test datasets was limited due to the assumption that the inhabitant would only stand in the centre of the room to perform the selected poses while rotating in varying directions. This assumption was made so that the TISs would have an unobstructed view of the pose performances. The poses could have instead been performed under realistic conditions such as interacting with the environment during the completion of ADLs. Nevertheless, the aim for this work was to improve upon our previous classification approach, while also establishing an understanding of the capabilities for predicting poses, using multiple viewing angles in conjunction with the proposed CNN architecture. Further work is required to determine the predictive capabilities of CNNs when movement of the inhabitant, subtle pose performances, and occlusions of the inhabitant are each considered. In future work, pose instances for both training and testing will, therefore, involve interactions between the inhabitant and the environment, such as Arm Forward at the microwave or Bend at the fridge.

The results achieved with the thermal imagery can be improved further with the integration of image segmentation techniques. In future work, active contour-based segmentation techniques will be investigated for application to the TIS data to allow for simpler detection of the inhabitant. We will also investigate the inclusion of abnormal behaviour within the dataset to test whether such behaviour could be differentiated from poses and activities. Successful implementation of abnormal behaviour detection will help indicate whether TISs are suitable devices for deployment within care homes as such behaviour can aid in the diagnosis of age-related diseases.

As deep learning has been successfully implemented with the TISs to recognise poses, this will be expanded to establish a deep learning-based methodology for recognising ADLs. As shown by our previous work, the ability to recognise poses has proven to be extremely useful for inferring subactivities such as using the fridge and sitting at the kitchen table. It is intended to implement an HMM to work in conjunction with the CNNs detailed in this research to classify sequences of subactivities as ADLs. Both a deep learning and a more conventional machine learning approach will be implemented for performance comparisons. This work will continue to use TISs as the only sensory data sources so that privacy can continue to be preserved.