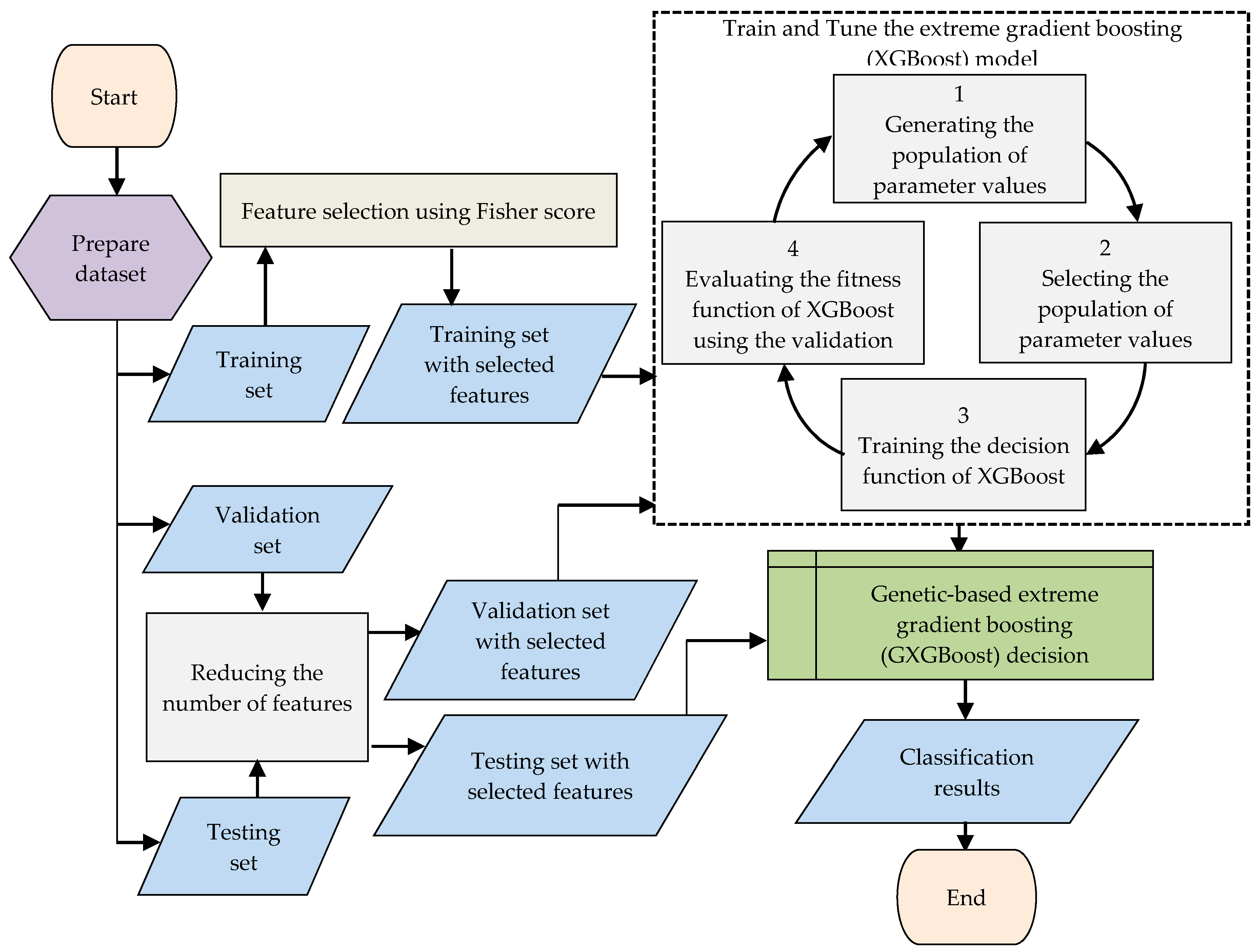

4.1. N-BaIoT Dataset

N-BaIoT is a public dataset for detecting botnet attacks on IoT devices, created by Meidan et al. [31] to address the lack of real, publicly available IoT botnet data. It contains more than 5 million instances of real traffic, collected in an isolated network from nine IoT devices attacked by two botnet families, Bashlite and Mirai, which are among the most common and harmful IoT botnets. The number of instances differs for each attack on each device. The dataset consists of a set of files; each file has 115 features in addition to the class label, which is either “benign” or “transmission control protocol (TCP) attack” for binary classification. For multiclass classification, the TCP attacks are further divided into Mirai and Bashlite attacks. The 115 features are aggregated statistics computed for each data point from the raw network streams over five time windows (1 min, 10 s, 1.5 s, 500 ms, and 100 ms), coded as L0.01, L0.1, L1, L3, and L5, respectively. These features fall into five major categories, as shown in Table 1.

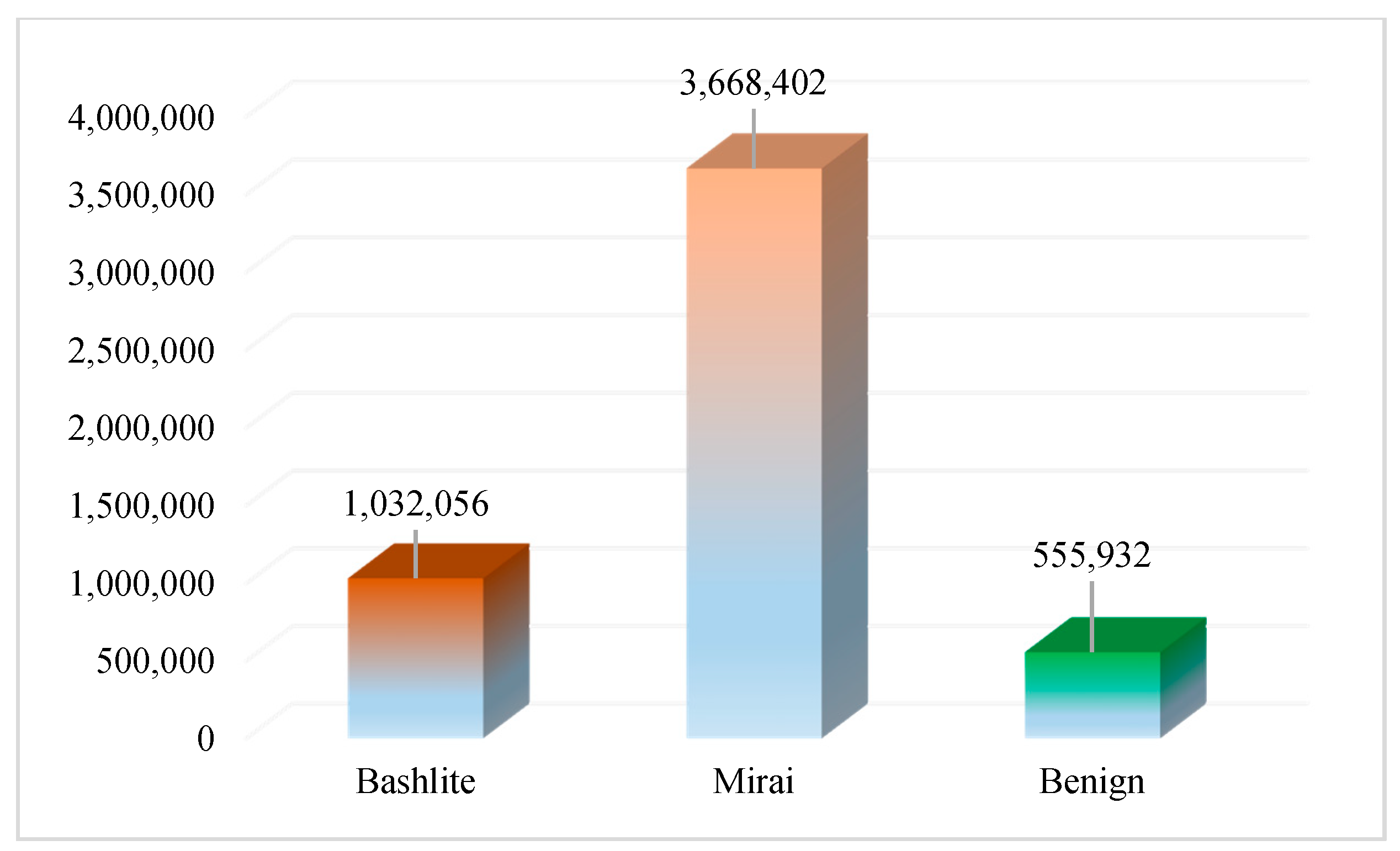

For each category, the mean, packet size, packet count, and variance are calculated. For the socket and channel categories, supplementary statistics are provided, such as the packet size correlation coefficient, magnitude, radius, and covariance. The distribution of class labels in the dataset is visualized in Figure 3.

Because the dataset is dominated by the Mirai class, and because we wanted to compare the “benign” class with the other classes, the Bashlite and Mirai instances were sampled down to the size of the “benign” class to make the classes more balanced.
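The balancing step can be sketched as random undersampling: every class is downsampled, without replacement, to the size of the benign class. This is a minimal sketch under assumptions; the source does not say how the instances were sampled, and the function name `undersample_to_class`, the fixed seed, and the toy class counts are hypothetical.

```python
import random
from collections import defaultdict


def undersample_to_class(rows, labels, reference="benign", seed=0):
    """Randomly downsample every class to the size of the reference class.

    Classes that are already smaller than the reference class are kept whole.
    """
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for row, label in zip(rows, labels):
        by_class[label].append(row)

    target = len(by_class[reference])
    out_rows, out_labels = [], []
    for label, members in by_class.items():
        # Sample without replacement; min() guards against smaller classes.
        chosen = rng.sample(members, min(target, len(members)))
        out_rows.extend(chosen)
        out_labels.extend([label] * len(chosen))
    return out_rows, out_labels


# Toy example with made-up counts: Mirai dominates, benign is the smallest class.
labels = ["mirai"] * 50 + ["bashlite"] * 30 + ["benign"] * 10
rows = list(range(len(labels)))
_, balanced = undersample_to_class(rows, labels)
print({c: balanced.count(c) for c in sorted(set(balanced))})
```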

Figure 4a shows the distribution of instances according to the Mirai, Bashlite, and benign classes in the dataset.

Figure 4b illustrates the distribution of instances according to attack and benign classes in the dataset.

4.2. Experimental Results

The experimental results were obtained by implementing the proposed approach in the Python programming language on a laptop with an Intel Core i7 2.2 GHz CPU, 32 GB RAM, and a 64-bit Windows 10 operating system.

These results were assessed using several performance measures: the confusion matrix; the numbers of true positive (TP), true negative (TN), false positive (FP), and false negative (FN) instances; and the accuracy, precision, recall, and F1-score. The confusion matrix is a table that gives, for each class, the number of instances that are correctly classified and the number that are misclassified. The TP, TN, FP, and FN counts are obtained from the confusion matrix, and the other performance measures are defined by the following equations:

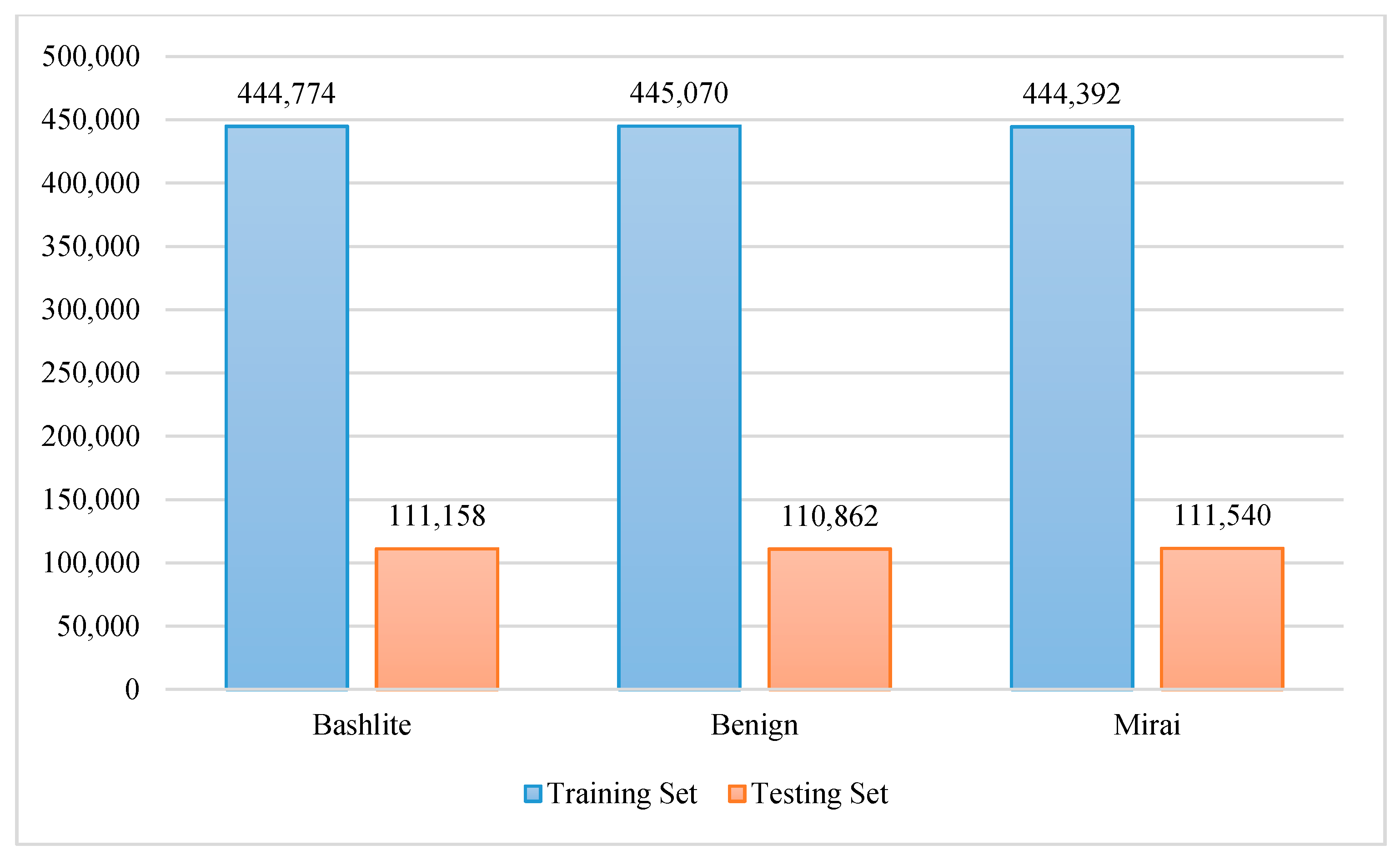
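The equations above are only available as an image here. Assuming they are the standard definitions (accuracy = (TP + TN)/(TP + TN + FP + FN), precision = TP/(TP + FP), recall = TP/(TP + FN), and F1 = 2 · precision · recall/(precision + recall)), they can be sketched as follows, together with the weighted average F1-score used for the multiclass results. The function names and the counts in the demo are illustrative only.

```python
def f1_from_counts(tp, fp, fn):
    """F1 = 2PR / (P + R), written equivalently as 2TP / (2TP + FP + FN)."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def binary_metrics(tp, tn, fp, fn):
    """Accuracy, precision, recall, and F1 with "attack" as the positive class."""
    return {
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,  # also the TP rate
        "f1": f1_from_counts(tp, fp, fn),
    }


def weighted_f1(per_class):
    """Weighted average F1 for multiclass results.

    per_class maps each label to its one-vs-rest (tp, fp, fn) counts; each
    class F1 is weighted by its support, i.e. its number of true instances (tp + fn).
    """
    total = sum(tp + fn for tp, _, fn in per_class.values())
    return sum(f1_from_counts(tp, fp, fn) * (tp + fn) / total
               for tp, fp, fn in per_class.values())


# Made-up counts, for illustration only.
m = binary_metrics(tp=90, tn=80, fp=10, fn=20)
print(m)
```

Writing F1 as 2TP/(2TP + FP + FN) avoids a division by zero when precision and recall are both zero.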

The testing sets for the experiments were obtained from the N-BaIoT dataset using the holdout and 10-fold cross-validation techniques. In the holdout technique, 80% of the dataset is used for training and 20% for testing. Figure 5 and Figure 6 show the number of instances in the training and testing sets for binary and multiclass detection.

In the 10-fold cross-validation technique, the dataset is divided into 10 parts; each part is used once for testing while the remaining nine are used for training. For the holdout technique, 20% of the training set is further held out as a validation set to tune the parameters of the GXGBoost model.
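The data partitioning described above can be sketched with plain index shuffling: an 80/20 holdout split, a further 20% of the training portion reserved for validation, and 10 folds for cross-validation. This is an illustrative sketch only; the source does not say whether the splits were shuffled or stratified, and the helper names and seed are hypothetical. scikit-learn's `train_test_split` and `KFold` provide the same functionality.

```python
import random


def holdout_split(n, test_frac=0.2, val_frac=0.2, seed=0):
    """Shuffle indices 0..n-1 into train/validation/test lists.

    test_frac of all data is held out for testing; val_frac of the remaining
    training portion is carved out as a validation set (used for tuning).
    """
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    n_test = round(n * test_frac)
    test, train = idx[:n_test], idx[n_test:]
    n_val = round(len(train) * val_frac)
    val, train = train[:n_val], train[n_val:]
    return train, val, test


def kfold_indices(n, k=10, seed=0):
    """Yield (train, test) index lists; each of the k folds is tested once."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]
    for i in range(k):
        train = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train, folds[i]


train, val, test = holdout_split(1000)
print(len(train), len(val), len(test))
```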

All instances in the training, validation, and testing sets are normalized, and the Fisher score method is then applied to the training set to rank the features in descending order of their scores.

Table 2 lists the features and their Fisher scores, sorted in decreasing order of their Fisher scores with respect to the target labels. For each feature, the score measures the distance between the class means of that feature relative to its variance within the classes; hence, the Fisher score method ranks each feature independently according to the Fisher criterion. From Table 2, we can see that the features “MI_dir_L0.01_weight”, “H_L0.01_weight”, “MI_dir_L0.01_mean”, “H_L0.01_mean”, and “MI_dir_L0.1_mean”, numbered 13, 28, 14, 29, and 11, respectively, are the top five features. Consequently, we expect these features to achieve the best results.
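One common formulation of the Fisher score, assumed here because the text only describes it informally, is F_j = Σ_k n_k (μ_kj − μ_j)² / Σ_k n_k σ_kj², where n_k is the number of training instances in class k, μ_kj and σ_kj² are the mean and variance of feature j within class k, and μ_j is the overall mean of feature j. The sketch below computes it on an (already normalized) training set and sorts the features in descending order of score; the function names and toy data are hypothetical.

```python
from statistics import fmean, pvariance


def fisher_scores(X, y):
    """Fisher score per feature (assumed formulation):
    sum_k n_k (mu_kj - mu_j)^2 / sum_k n_k var_kj. Higher = more discriminative."""
    classes = sorted(set(y))
    scores = []
    for j in range(len(X[0])):
        col = [row[j] for row in X]
        overall_mean = fmean(col)
        between = within = 0.0
        for c in classes:
            vals = [v for v, label in zip(col, y) if label == c]
            between += len(vals) * (fmean(vals) - overall_mean) ** 2
            within += len(vals) * pvariance(vals)
        if within > 0:
            scores.append(between / within)
        else:  # zero within-class spread: perfectly separated or constant feature
            scores.append(float("inf") if between > 0 else 0.0)
    return scores


def rank_features(X, y, names):
    """Return (name, score) pairs sorted by descending Fisher score."""
    scores = fisher_scores(X, y)
    order = sorted(range(len(names)), key=lambda j: scores[j], reverse=True)
    return [(names[j], scores[j]) for j in order]


# Toy training data: feature f0 separates the two classes, f1 barely does.
X = [[1.0, 5.0], [1.2, 3.0], [3.0, 4.0], [3.2, 6.0]]
y = [0, 0, 1, 1]
print(rank_features(X, y, ["f0", "f1"]))
```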

The next step is to train the GXGBoost model on the training set while the GA tunes (optimizes) its parameters on the validation set, as shown in Algorithm 2. The three most important parameters of the GXGBoost model are the learning rate, the maximum depth, and the number of estimators. These parameters are initialized with random values between predefined minimum and maximum values. The GA tuning process sets them to 0.2, 5, and 20, respectively; the other parameters keep their default values.
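Algorithm 2 is not reproduced in this section, so the following is only a generic genetic-algorithm sketch of such a tuning loop over the three parameters, using tournament selection, uniform crossover, random-reset mutation, and elitism. The search bounds, GA settings, and `toy_fitness` are hypothetical; in a real run the fitness would be, for example, the validation-set F1-score of an `xgboost.XGBClassifier(learning_rate=..., max_depth=..., n_estimators=...)` trained on the training set.

```python
import random

# Hypothetical search bounds; the source does not state the actual ranges.
BOUNDS = {"learning_rate": (0.01, 0.5), "max_depth": (2, 10), "n_estimators": (10, 200)}
INT_PARAMS = {"max_depth", "n_estimators"}


def sample_gene(name, rng):
    """Draw a random value for one parameter within its bounds."""
    lo, hi = BOUNDS[name]
    return rng.randint(lo, hi) if name in INT_PARAMS else rng.uniform(lo, hi)


def genetic_search(fitness, pop_size=20, generations=30, mutation_rate=0.2, seed=0):
    """Maximize fitness(params) over BOUNDS with a simple elitist GA."""
    rng = random.Random(seed)
    pop = [{n: sample_gene(n, rng) for n in BOUNDS} for _ in range(pop_size)]
    best = max(pop, key=fitness)
    for _ in range(generations):
        scored = sorted(pop, key=fitness, reverse=True)
        next_pop = scored[:2]  # elitism: keep the two fittest unchanged
        while len(next_pop) < pop_size:
            # Tournament selection: the fitter of 3 random individuals.
            p1 = max(rng.sample(scored, 3), key=fitness)
            p2 = max(rng.sample(scored, 3), key=fitness)
            # Uniform crossover, then random-reset mutation per gene.
            child = {n: (p1[n] if rng.random() < 0.5 else p2[n]) for n in BOUNDS}
            for n in BOUNDS:
                if rng.random() < mutation_rate:
                    child[n] = sample_gene(n, rng)
            next_pop.append(child)
        pop = next_pop
        best = max(pop + [best], key=fitness)
    return best


def toy_fitness(p):
    # Stand-in for validation F1 so the sketch runs without data or xgboost.
    return -((p["learning_rate"] - 0.1) ** 2 * 100
             + (p["max_depth"] - 6) ** 2
             + ((p["n_estimators"] - 100) / 50) ** 2)


best = genetic_search(toy_fitness)
print(best)
```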

Figure 7 and Figure 8 show the F1-score results for different numbers of features when detecting the attack and benign classes, and when detecting the Mirai, Bashlite, and benign classes, respectively.

Figure 7 and Figure 8 show that the three top-ranked features (numbers 13, 28, and 14) form the smallest feature subset that achieves the highest weighted average F1-score, both when detecting the attack and benign classes and when detecting the Mirai, Bashlite, and benign classes.
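Choosing the smallest number of top-ranked features that reaches the best F1-score can be sketched as an incremental search over k = 1, ..., N. In this minimal sketch, `evaluate` is a hypothetical callback that would train the model on the top-k features and return the weighted average F1-score; the F1 values in the demo are made up and are not the values plotted in the figures.

```python
def smallest_best_k(ranked_features, evaluate, tol=0.0):
    """Evaluate the top-k ranked features for k = 1..N and return the smallest
    k whose score is within `tol` of the best score, plus all scores."""
    scores = [evaluate(ranked_features[:k])
              for k in range(1, len(ranked_features) + 1)]
    best = max(scores)
    k = next(i + 1 for i, s in enumerate(scores) if s >= best - tol)
    return k, scores


# Feature numbers in Fisher-score order; the F1 table below is invented.
ranked = [13, 28, 14, 29, 11]
fake_f1 = {1: 0.90, 2: 0.97, 3: 0.999, 4: 0.999, 5: 0.998}
k, scores = smallest_best_k(ranked, lambda feats: fake_f1[len(feats)])
print(k, ranked[:k])
```

A small `tol` can be used to accept a slightly lower score in exchange for fewer features.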

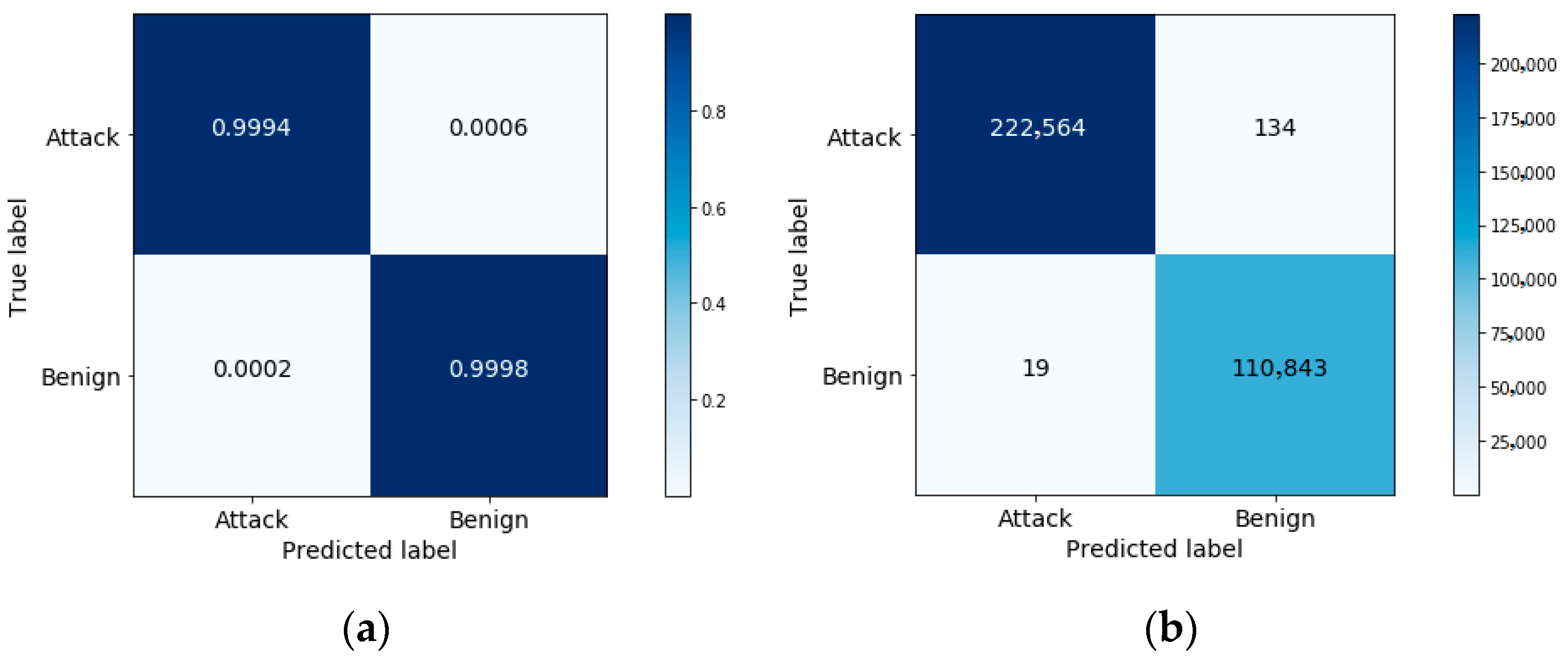

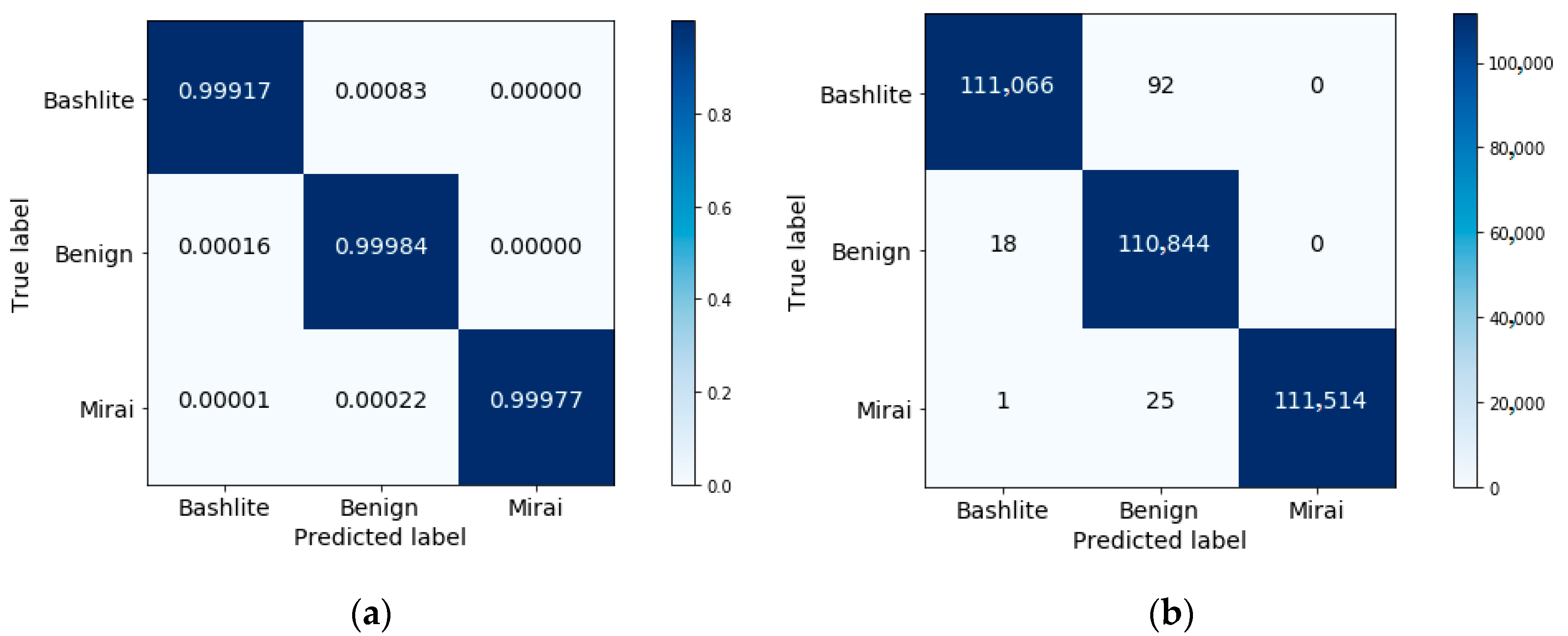

To explore this further, the confusion matrices obtained using the three selected features are shown in Figure 9 and Figure 10. Table 3 and Table 4 list the results for the other performance measures.

In Figure 9b, of the 222,583 instances that the model classifies as the “attack” class, 222,564 are actual attacks, which represents the TP measure, and 19 are actually benign, which represents the FP measure. Likewise, of the 110,977 instances classified as the “benign” class, 110,843 are actually benign, which represents the TN measure, and 134 are actual attacks, which represents the FN measure. To compute the TP rate, we divide TP by the total number of actual attack instances, 222,564/(222,564 + 134), which gives 0.99940. To compute the FP rate, we divide FP by the total number of actual benign instances, 19/(19 + 110,843), which gives 0.00017. These results show that the approach achieves a low FP rate and a high TP rate, effectively differentiating attack patterns from benign traffic.
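The TP-rate and FP-rate arithmetic for Figure 9b can be checked directly. This sketch treats “attack” as the positive class and reads 222,583 and 110,977 as the totals of instances predicted as attack and as benign, respectively, which is the reading under which the reported FP and FN labels and the two rate formulas are consistent.

```python
# Counts from Figure 9b as described in the text (attack = positive class).
TP, FP = 222_564, 19      # predicted "attack": true attacks, benign misclassified
TN, FN = 110_843, 134     # predicted "benign": true benign, attacks misclassified

assert TP + FP == 222_583 and TN + FN == 110_977  # predicted-class totals
assert TP + FP + TN + FN == 333_560                # matches the 333,560 test instances

tpr = TP / (TP + FN)   # denominator: 222,698 actual attacks
fpr = FP / (FP + TN)   # denominator: 110,862 actual benign instances
print(f"TP rate = {tpr:.5f}, FP rate = {fpr:.5f}")  # TP rate = 0.99940, FP rate = 0.00017
```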

From Figure 9 and Figure 10, as well as the results reported in Table 3 and Table 4, one can see that the performance attained using the selected features exceeds that achieved using all features.

To validate the holdout results of the proposed approach, we report the results of the 10-fold cross-validation for detecting the attack and benign classes using the selected features in Table 5, Table 6 and Table 7. Similarly, Table 8, Table 9 and Table 10 show the results of the 10-fold cross-validation for detecting the Mirai, Bashlite, and benign classes based on the selected features.

Moreover, to evaluate the efficiency of the proposed approach, the average execution times for training the GXGBoost model on 1,334,236 instances and testing it on 333,560 instances were computed using the three selected features and using all features, as shown in Table 11.
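Average execution times of this kind can be measured with a simple repeated wall-clock timer. The source does not state how many runs were averaged or which timer was used; `repeats=5`, `time.perf_counter`, and `stand_in_workload` (a hypothetical placeholder for training and testing GXGBoost on a given number of features) are assumptions of this sketch.

```python
import time
from statistics import fmean


def average_runtime(fn, *args, repeats=5):
    """Run fn(*args) `repeats` times and return the mean wall-clock seconds."""
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn(*args)
        timings.append(time.perf_counter() - start)
    return fmean(timings)


def stand_in_workload(n_features, n_rows=2_000):
    # Hypothetical placeholder for "train and test the model on n_features columns".
    return sum(i * j for i in range(n_rows) for j in range(n_features))


for n_features in (3, 115):
    print(n_features, "features:", average_runtime(stand_in_workload, n_features), "s")
```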

Table 11 clearly demonstrates that the average execution time taken to train and test the GXGBoost model using the selected features is significantly lower than that using all features. This indicates the efficiency and feasibility of the proposed approach for real-time systems, in which the detection response must be described quantitatively in terms of time. The approach is suitable for systems with hard real-time constraints, which must detect incoming attacks within a given time frame before they can do any damage. To analyze the time complexity of constructing the GXGBoost model, we use big-O notation, a mathematical notation used in computer science to describe how the running time of an algorithm scales with the size of its input. We assume n populations and n iterations in the worst case. In addition, the time complexity for building the gradient boosting model is

, where

represents the number of features and

is the number of data samples [51]. Therefore, the time complexity for constructing the GXGBoost model is

, a cubic polynomial time. Because the proposed approach reduced the number of features,

, to three, the running time is reduced, which makes the approach more efficient for detecting botnet attacks on IoT devices with limited computing resources.

Table 12 and Table 13 summarize the comparison between the proposed approach and recent related works on the N-BaIoT dataset. Table 12 compares the results with related works on detecting the attack and benign classes, and Table 13 compares them with related works on detecting the Mirai, Bashlite, and benign classes.

From Table 12 and Table 13, one can see that the proposed approach outperforms the related works in detecting botnet attacks on IoT devices using three features. Although the deep autoencoder-based approach in [55] achieved a competitive accuracy for detecting the attack and benign classes using three features, our proposed approach attained the highest accuracy. It also has the advantage that feature selection is executed only once to select the best features, whereas the autoencoder-based feature reduction is executed in each run, adding extra overhead to the detection task. Another disadvantage of the autoencoder-based approach is the large number of parameters that must be initialized and optimized during training. Accordingly, the proposed approach offers several advantages that improve the effectiveness and efficiency of botnet attack detection for resource-constrained IoT devices.

4.3. Statistical Tests

In this subsection, we report the results of the statistical test conducted to validate the obtained accuracy results. The test assesses the significance of the obtained results and supports a fair comparison with the relevant methods. A one-sample t-test was used to compare the accuracy results of the 10-fold cross-validation with the hypothesized accuracy value used in the comparison with related methods. The one-sample t-test is a parametric test that compares the mean of a random sample to a hypothesized mean value and tests for a deviation from that value. Using the one-sample t-test, we formulated the hypotheses for the statistical analysis as follows:

- Null hypothesis: The mean of the accuracy results for the 10-fold cross-validation of the proposed approach is equal to the hypothesized accuracy value, which is 99.96%.
- Alternative hypothesis: The mean of the accuracy results for the 10-fold cross-validation of the proposed approach is not equal to the hypothesized accuracy value, which is 99.96%.

The analysis was conducted using SPSS software, and the test results are documented in Table 14 and Table 15. Table 14 shows the one-sample t-test results for the accuracy of attack and benign classification using the 10-fold cross-validation technique, and Table 15 shows the one-sample t-test results for the accuracy of classifying the Mirai, Bashlite, and benign classes.

In Table 14 and Table 15, the degrees of freedom (DF) represent the amount of independent information in a data sample of size n that is available to estimate the variability of the parameter estimates for an unknown population. Thus, the one-sample t-test applies a t-distribution with n-1 DF. As we used the 10-fold cross-validation technique in our statistical analysis, the sample size was 10; one degree of freedom is used in estimating the mean of the accuracy results, and the remaining nine DF are used to estimate the variability.
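For reference, the standard one-sample t statistic is given below, with x_1, ..., x_n the per-fold accuracies, μ_0 = 99.96% the hypothesized value, and s the sample standard deviation; with n = 10 folds this gives DF = 9.

```latex
t = \frac{\bar{x} - \mu_0}{s / \sqrt{n}}, \qquad
\bar{x} = \frac{1}{n}\sum_{i=1}^{n} x_i, \qquad
s = \sqrt{\frac{1}{n-1}\sum_{i=1}^{n} (x_i - \bar{x})^2}, \qquad
\mathrm{DF} = n - 1 = 9
```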

From the results of the one-sample t-test shown in Table 14, we can see that the p-value is 1 for the accuracy of the attack and benign classification. Moreover, as can be seen in Table 15, the p-value is 0.343 for the accuracy of the Mirai, Bashlite, and benign classification. In both cases, the p-value is greater than 0.05; therefore, we fail to reject the null hypothesis for the proposed approach when classifying benign and IoT botnet attack classes. Consequently, the mean of the accuracy results over all test sets of the 10-fold technique is not significantly different from the hypothesized accuracy value of 99.96%, which is the value used to compare the approach with recent related works. This means that the accuracies of all test sets follow almost the same distribution, with no statistically significant differences between them, which supports the stability of the proposed approach and its robustness against overfitting.
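The decision rule can be reproduced without SPSS. This sketch computes the one-sample t statistic and compares |t| with the two-tailed critical value for 9 DF at α = 0.05 (about 2.262), which is equivalent to checking p < 0.05; a two-tailed p-value of exactly 1, as in Table 14, corresponds to t = 0. The fold accuracies below are hypothetical and are not the data behind Tables 14 and 15. If SciPy is available, `scipy.stats.ttest_1samp(folds, 99.96)` returns the p-value directly.

```python
from math import sqrt
from statistics import fmean, stdev

# Two-tailed critical value of the t-distribution with 9 DF at alpha = 0.05.
T_CRIT_DF9 = 2.262


def one_sample_t(samples, mu0):
    """One-sample t statistic (sample mean vs. hypothesized mean mu0) and its DF."""
    n = len(samples)
    t = (fmean(samples) - mu0) / (stdev(samples) / sqrt(n))
    return t, n - 1


# Hypothetical fold accuracies in percent; NOT the values behind Tables 14 and 15.
folds = [99.95, 99.97, 99.96, 99.94, 99.98, 99.96, 99.95, 99.97, 99.96, 99.96]
t, df = one_sample_t(folds, mu0=99.96)
reject = abs(t) > T_CRIT_DF9  # same decision as p < 0.05 (two-tailed)
print(f"t = {t:.4f}, DF = {df}, reject H0: {reject}")
```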